7679ae9a5ff8754958efa52d34c9830c.ppt

- Number of slides: 24

Controlled Experiments on Pair Programming (PP): Making Sense of Heterogeneous Results

NIK'2010, Gjøvik, 22-24 Nov. 2010

Reidar Conradi, NTNU, Trondheim; Muhammad Ali Babar, ITU, Copenhagen

www.idi.ntnu.no/grupper/su/oss/nik2010-pairprog-slides.ppt

RConradi: NIK, 22-24 Nov. 2010, Gjøvik

Table of Contents

1. Motivation
2. Two PP experiments: Simula vs. Utah
3. Meta-analysis of 18 PP experiments
4. Cost/benefits of PP with extended Utah
5. Reflections
6. Conclusion

1. Motivation (1)

- The Agile manifesto and agile methods are “hot”: XP, Scrum, pair programming (PP), …
- The same holds for Evidence-Based Software Engineering (EBSE), which aims to learn systematically from primary, empirical studies. Over 100 secondary, thematic summary papers exist as Systematic Literature Reviews (SLRs) on agile methods, PP, controlled experiments, effort estimation, unit testing, … Are there guidelines to plan, execute, and report such studies?
- There is always a struggle between rigor and relevance:
  - “In vitro” controlled experiments with students on toy problems of 100-200 LOC.
  - “In vivo” case studies over N years with professionals on MLOC systems.
- And how should EBSE experience and knowledge be disseminated in the ICT industry: by PP, job rotation, training, knowledge management, …? Avoid information graveyards!

1. Motivation on Pair Programming, intro (2)

- PP is one of the 12 practices in XP, with roots in generous teamwork elsewhere; cf. Gerald Weinberg's book “The Psychology of Computer Programming”, 1971.
- So what do we know about PP: more effort, shorter duration, better “quality”, or just happier people with more shared knowledge and experience, learning from one another?
- Moderate effects on programming-related factors: ±15%.
- What about longitudinal studies of the social and cognitive factors that promote learning?

1. Motivation, a bit formal (3)

PP: two persons work together as a pair, a “secretary” and a “coach”. It seems like a waste of time and PP “yelling”, but …

Research goals and challenges:

- RQ1. How to compare the different primary studies of PP? Both PP-specific and lifecycle results.
- RQ2. What common metrics could be applied in the primary studies on PP?
- RChallenge. How to study and promote the social-cognitive aspects of PP, beyond the finding that PP is “fun”?

2. Comparing two PP experiments (1)

- Simula experiment (Arisholm 2007): 295 (!!) professionals with different expertise, one session each in randomized groups, carried out in a distributed fashion at 20 companies in 5 countries. Task: update, in 4 steps, a given Java program of 200-300 LOC for a coffee vending machine, in an easy or a complex variant. Many special rules and hypotheses.
- Utah experiment (Williams 2000): 41 fourth-year students with different expertise in randomized groups, carried out in a normal university lab in 4 sessions over a semester. Task: write a C program of ca. 150 LOC from scratch for a specific (undocumented) problem in each session.
- Both: unknown number of defects, using pre-made tests to check results. The results of soloist/pair groups with low/high expertise may be compared.
- Otherwise almost nothing in common, so how to generalize?

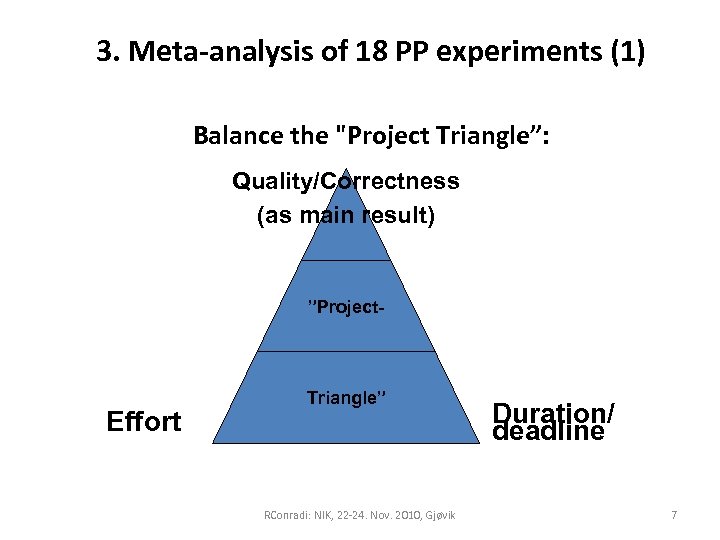

3. Meta-analysis of 18 PP experiments (1)

Balance the “Project Triangle”, whose three corners are:

- Quality/Correctness (as main result)
- Effort
- Duration/deadline

3. Meta-analysis of PP: massive heterogeneity (2)

- No common experiment organization or treatment with respect to:
  - Goals and objectives.
  - Team composition: software expertise (student vs. practitioner, programming skills), soloists vs. pairs, application task, use of external reviewers, …
  - Software technology: Eclipse; UML; Java, C++, C, …
  - Randomized vs. self-chosen team composition, …
- Three major dependent variables:
  - Quality (hmm?): see next slides
  - Duration (hours): elapsed time
  - Effort (person-hours): often just named “hours”
- No volume/productivity measures (LOC, FP, …)

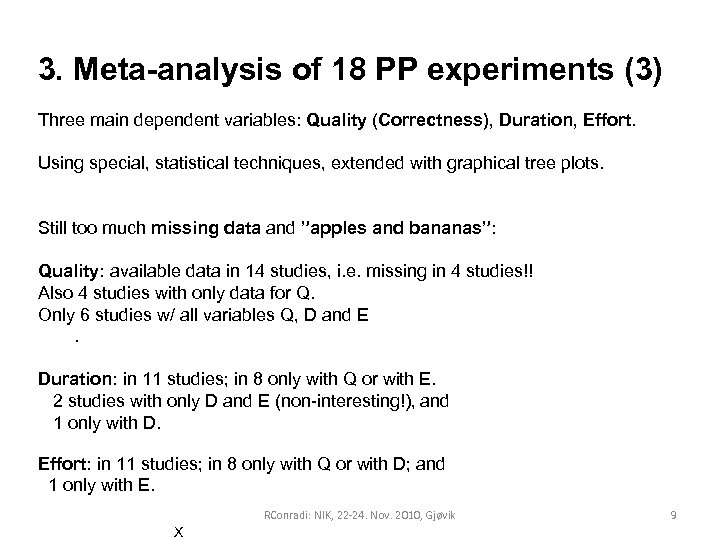

3. Meta-analysis of 18 PP experiments (3)

Three main dependent variables: Quality (Correctness), Duration, Effort. Using special statistical techniques, extended with graphical tree plots.

Still too much missing data and “apples and bananas”:

- Quality: available data in 14 studies, i.e. missing in 4 studies!! Also 4 studies with only data for Q. Only 6 studies with all variables Q, D, and E.
- Duration: in 11 studies; in 8 only with Q or with E. 2 studies with only D and E (non-interesting!), and 1 only with D.
- Effort: in 11 studies; in 8 only with Q or with D; and 1 only with E.

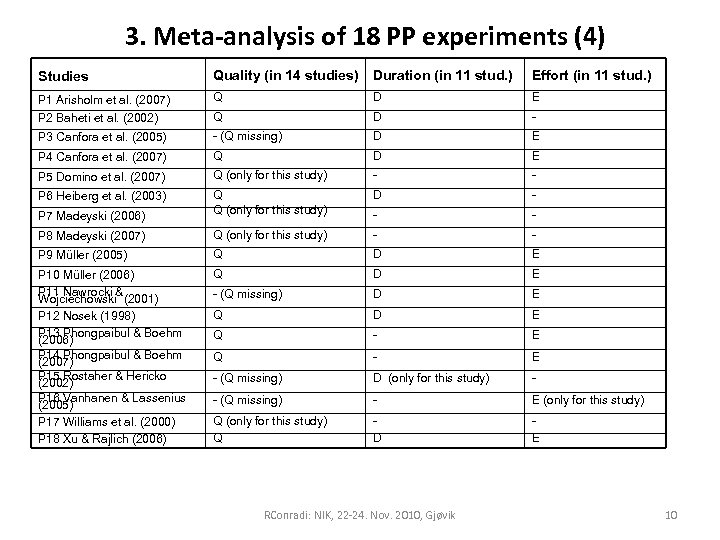

3. Meta-analysis of 18 PP experiments (4)

| Studies | Quality (in 14 studies) | Duration (in 11 studies) | Effort (in 11 studies) |
|---|---|---|---|
| P1 Arisholm et al. (2007) | Q | D | E |
| P2 Baheti et al. (2002) | Q | D | - |
| P3 Canfora et al. (2005) | - (Q missing) | D | E |
| P4 Canfora et al. (2007) | Q | D | E |
| P5 Domino et al. (2007) | Q (only for this study) | - | - |
| P6 Heiberg et al. (2003) | Q | D | - |
| P7 Madeyski (2006) | Q (only for this study) | - | - |
| P8 Madeyski (2007) | Q (only for this study) | - | - |
| P9 Müller (2005) | Q | D | E |

P10 Müller (2006), P11 Nawrocki & Wojciechowski (2001), P12 Nosek (1998), P13 Phongpaibul & Boehm (2006), P14 Phongpaibul & Boehm (2007), P15 Rostaher & Hericko (2002), P16 Vanhanen & Lassenius (2005), P17 Williams et al. (2000), P18 Xu & Rajlich (2006): Q D E; - (Q missing) D E; Q - E; - (Q missing) D (only for this study) -; - (Q missing) - E (only for this study); Q D E
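As a rough illustration of the missing-data pattern, the sketch below tallies which of Q, D, and E are reported, using only the rows for P1 to P9 of the table above. The names `reported` and `tally` are hypothetical helpers introduced for this example; they are not part of the original meta-analysis.

```python
from collections import Counter

# Variables reported per study, transcribed from the rows for P1-P9 only.
reported = {
    "P1": {"Q", "D", "E"},
    "P2": {"Q", "D"},
    "P3": {"D", "E"},
    "P4": {"Q", "D", "E"},
    "P5": {"Q"},
    "P6": {"Q", "D"},
    "P7": {"Q"},
    "P8": {"Q"},
    "P9": {"Q", "D", "E"},
}


def tally(studies):
    """Count studies per reported variable and per combination of variables."""
    per_variable = Counter(v for vs in studies.values() for v in vs)
    per_combination = Counter(
        "".join(sorted(vs, key="QDE".index)) for vs in studies.values()
    )
    return per_variable, per_combination


per_variable, per_combination = tally(reported)
print(dict(per_variable))
print(dict(per_combination))
```

Even in this subset, only three of nine studies report all three variables, which is the kind of sparsity that makes aggregation hard.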

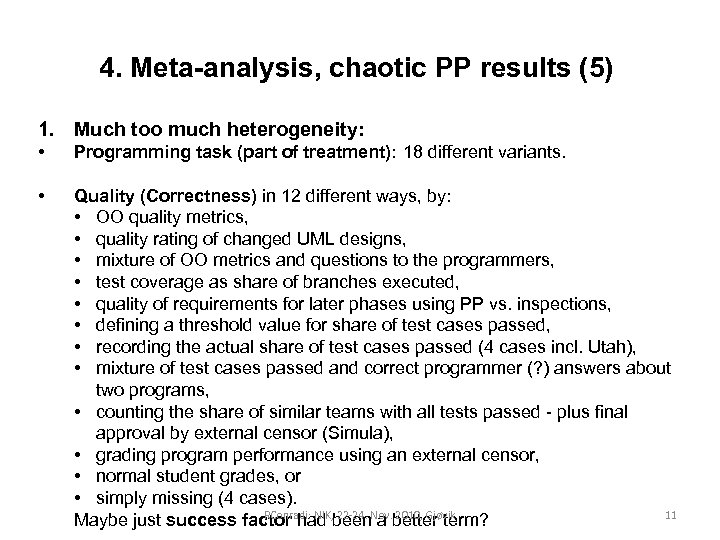

3. Meta-analysis, chaotic PP results (5)

1. Much too much heterogeneity:

- Programming task (part of treatment): 18 different variants.
- Quality (Correctness) measured in 12 different ways, by:
  - OO quality metrics,
  - quality rating of changed UML designs,
  - mixture of OO metrics and questions to the programmers,
  - test coverage as share of branches executed,
  - quality of requirements for later phases using PP vs. inspections,
  - defining a threshold value for share of test cases passed,
  - recording the actual share of test cases passed (4 cases incl. Utah),
  - mixture of test cases passed and correct programmer (?) answers about two programs,
  - counting the share of similar teams with all tests passed, plus final approval by external censor (Simula),
  - grading program performance using an external censor,
  - normal student grades, or
  - simply missing (4 cases).

Maybe just “success factor” had been a better term?

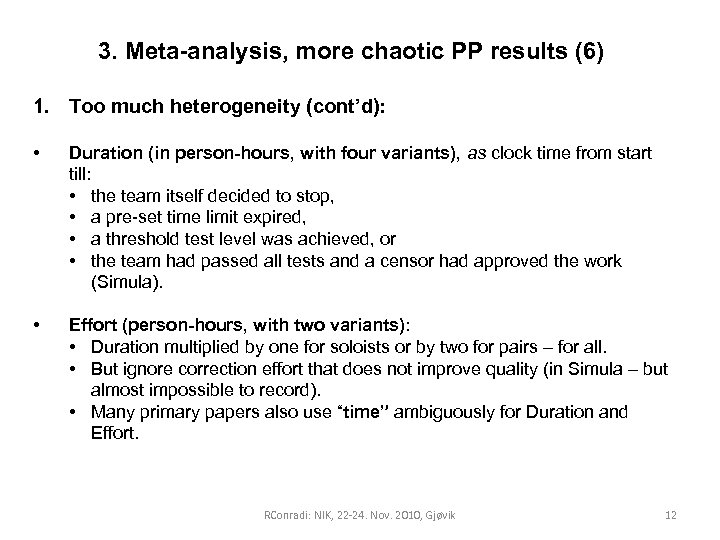

3. Meta-analysis, more chaotic PP results (6)

1. Too much heterogeneity (cont'd):

- Duration (in hours, with four variants), measured as clock time from start until:
  - the team itself decided to stop,
  - a pre-set time limit expired,
  - a threshold test level was achieved, or
  - the team had passed all tests and a censor had approved the work (Simula).
- Effort (person-hours, with two variants):
  - Duration multiplied by one for soloists or by two for pairs, for all studies.
  - But ignoring correction effort that does not improve quality (in Simula, but almost impossible to record).
- Many primary papers also use “time” ambiguously for Duration and Effort.
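The distinction between Duration and Effort can be made concrete with a minimal sketch. The function name `effort_person_hours` and the sample durations are hypothetical and chosen only for illustration; they are not data from any of the 18 studies.

```python
def effort_person_hours(duration_hours: float, team_size: int) -> float:
    """Effort is wall-clock duration multiplied by the number of people."""
    if team_size < 1:
        raise ValueError("team_size must be at least 1")
    return duration_hours * team_size


# Hypothetical example: a pair finishes sooner but spends more person-hours.
solo_effort = effort_person_hours(duration_hours=3.0, team_size=1)
pair_effort = effort_person_hours(duration_hours=2.0, team_size=2)
print(solo_effort, pair_effort)
```

A paper that reports only “time” leaves open which of the two quantities is meant, and for pairs the two differ by a factor of two.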

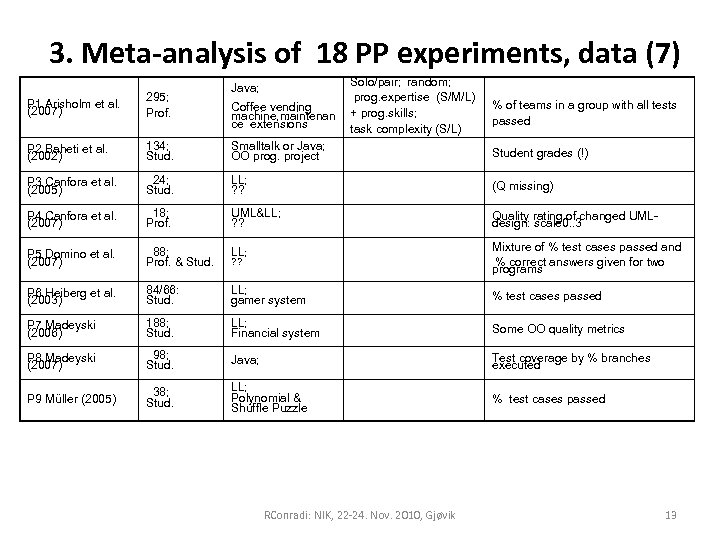

3. Meta-analysis of 18 PP experiments, data (7)

| Study | Ntot; Stud./Prof. | Lang.; Domain; Indep. varbls | Quality = ? |
|---|---|---|---|
| P1 Arisholm et al. (2007) | 295; Prof. | Java; coffee vending machine, maintenance extensions. Solo/pair; random; prog. expertise (S/M/L) + prog. skills; task complexity (S/L) | % of teams in a group with all tests passed |
| P2 Baheti et al. (2002) | 134; Stud. | Smalltalk or Java; OO prog. project | Student grades (!) |
| P3 Canfora et al. (2005) | 24; Stud. | LL; ?? | (Q missing) |
| P4 Canfora et al. (2007) | 18; Prof. | UML; ?? | Quality rating of changed UML design: scale 0..3 |
| P5 Domino et al. (2007) | 88; Prof. & Stud. | LL; ?? | Mixture of % test cases passed and % correct answers given for two programs |
| P6 Heiberg et al. (2003) | 84/66; Stud. | LL; gamer system | % test cases passed |
| P7 Madeyski (2006) | 188; Stud. | LL; financial system | Some OO quality metrics |
| P8 Madeyski (2007) | 98; Stud. | Java | Test coverage by % branches executed |
| P9 Müller (2005) | 38; Stud. | LL; Polynomial & Shuffle Puzzle | % test cases passed |

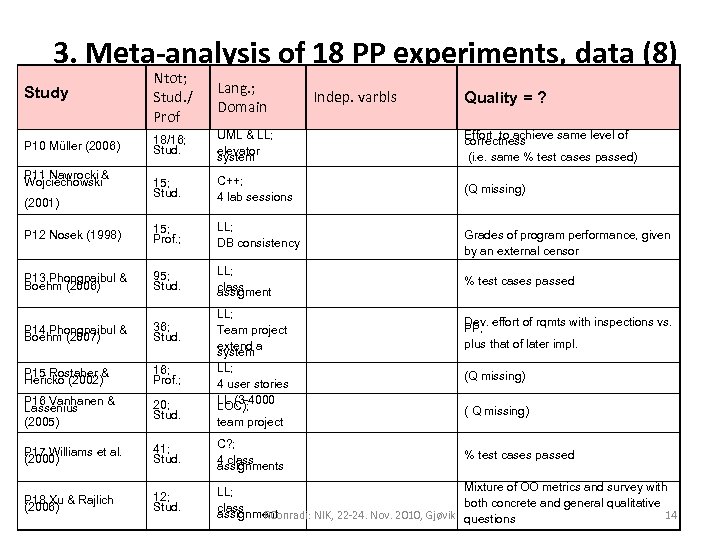

3. Meta-analysis of 18 PP experiments, data (8)

| Study | Ntot; Stud./Prof. | Lang.; Domain; Indep. varbls | Quality = ? |
|---|---|---|---|
| P10 Müller (2006) | 18/16; Stud. | UML & LL; elevator system | Effort to achieve same level of correctness (i.e. same % test cases passed) |
| P11 Nawrocki & Wojciechowski (2001) | 15; Stud. | C++; 4 lab sessions | (Q missing) |
| P12 Nosek (1998) | 15; Prof. | LL; DB consistency | Grades of program performance, given by an external censor |
| P13 Phongpaibul & Boehm (2006) | 95; Stud. | LL; class assignment | % test cases passed |
| P14 Phongpaibul & Boehm (2007) | 36; Stud. | LL; team project, extend a system | Dev. effort of rqmts with inspections vs. PP, plus that of later impl. |
| P15 Rostaher & Hericko (2002) | 16; Prof. | LL; 4 user stories | (Q missing) |
| P16 Vanhanen & Lassenius (2005) | 20; Stud. | LL (3-4000 LOC); team project | (Q missing) |
| P17 Williams et al. (2000) | 41; Stud. | C?; 4 class assignments | % test cases passed |
| P18 Xu & Rajlich (2006) | 12; Stud. | LL; class assignment | Mixture of OO metrics and survey with both concrete and general qualitative questions |

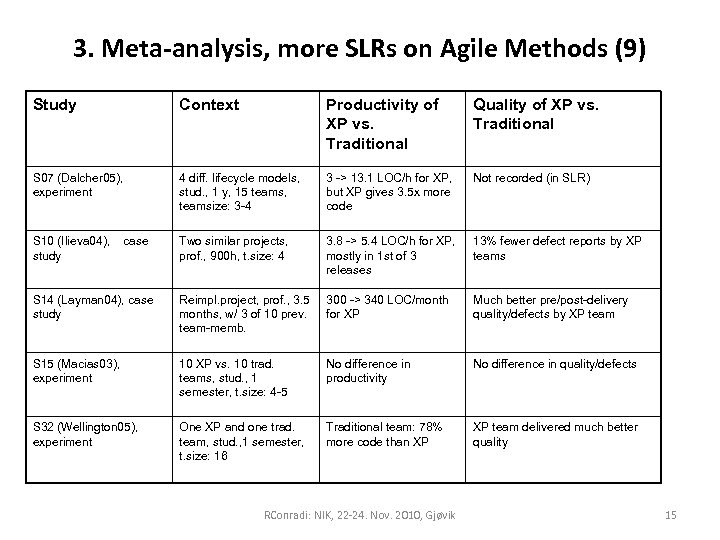

3. Meta-analysis, more SLRs on Agile Methods (9)

| Study | Context | Productivity of XP vs. Traditional | Quality of XP vs. Traditional |
|---|---|---|---|
| S07 (Dalcher 05), experiment | 4 diff. lifecycle models, stud., 1 year, 15 teams, team size: 3-4 | 3 -> 13.1 LOC/h for XP, but XP gives 3.5x more code | Not recorded (in SLR) |
| S10 (Ilieva 04), case study | Two similar projects, prof., 900 h, team size: 4 | 3.8 -> 5.4 LOC/h for XP, mostly in 1st of 3 releases | 13% fewer defect reports by XP teams |
| S14 (Layman 04), case study | Reimpl. project, prof., 3.5 months, with 3 of 10 prev. team members | 300 -> 340 LOC/month for XP | Much better pre/post-delivery quality/defects by XP team |
| S15 (Macias 03), experiment | 10 XP vs. 10 trad. teams, stud., 1 semester, team size: 4-5 | No difference in productivity | No difference in quality/defects |
| S32 (Wellington 05), experiment | One XP and one trad. team, stud., 1 semester, team size: 16 | Traditional team: 78% more code than XP | XP team delivered much better quality |

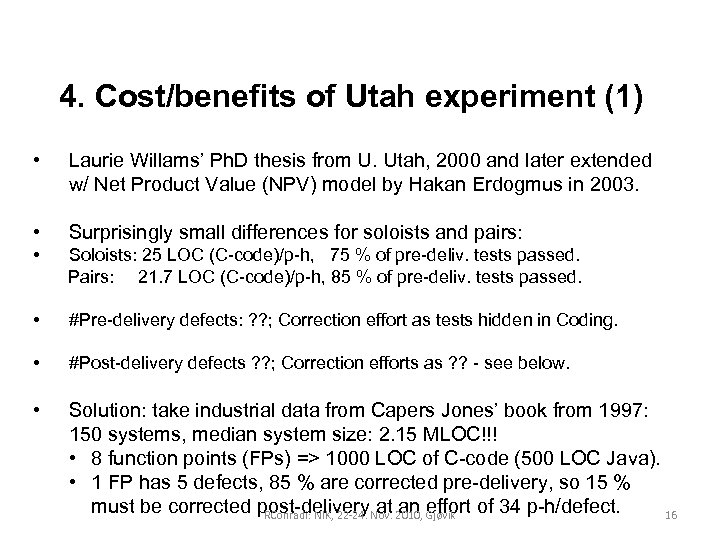

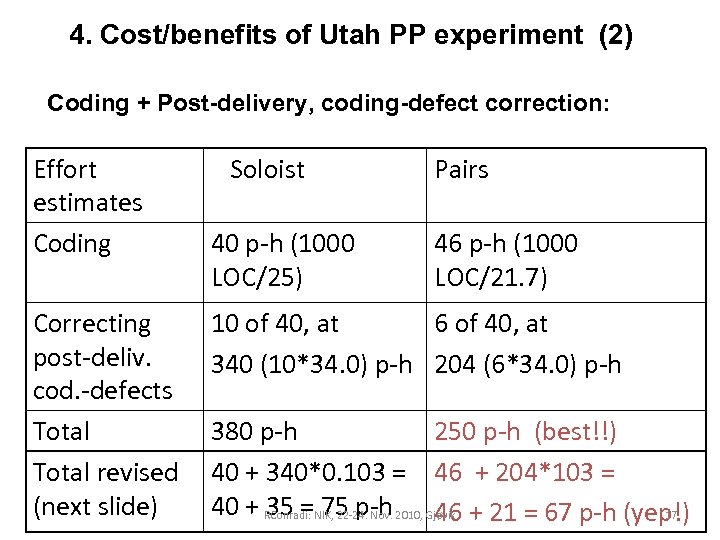

4. Cost/benefits of Utah experiment (1)

- Laurie Williams' PhD thesis from U. Utah, 2000, later extended with a Net Present Value (NPV) model by Hakan Erdogmus in 2003.
- Surprisingly small differences between soloists and pairs:
  - Soloists: 25 LOC (C code)/p-h, 75% of pre-delivery tests passed.
  - Pairs: 21.7 LOC (C code)/p-h, 85% of pre-delivery tests passed.
  - Number of pre-delivery defects: ??; correction effort hidden in coding, as tests.
  - Number of post-delivery defects: ??; correction effort: ??, see below.
- Solution: take industrial data from Capers Jones' book from 1997: 150 systems, median system size 2.15 MLOC!!!
  - 8 function points (FPs) => 1000 LOC of C code (500 LOC Java).
  - 1 FP has 5 defects; 85% are corrected pre-delivery, so 15% must be corrected post-delivery, at an effort of 34 p-h/defect.

4. Cost/benefits of Utah PP experiment (2)

Coding + post-delivery coding-defect correction:

| Effort estimates | Soloist | Pairs |
|---|---|---|
| Coding | 40 p-h (1000 LOC/25) | 46 p-h (1000 LOC/21.7) |
| Correcting post-delivery coding defects | 10 of 40, at 340 (10*34.0) p-h | 6 of 40, at 204 (6*34.0) p-h |
| Total | 380 p-h | 250 p-h (best!!) |
| Total revised (next slide) | 40 + 340*0.103 = 40 + 35 = 75 p-h | 46 + 204*0.103 = 46 + 21 = 67 p-h (yep!) |
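The unrevised totals in the table can be reproduced with a short sketch. It assumes, as one reading of the “10 of 40” and “6 of 40” entries, that the share of failed pre-delivery tests equals the share of the 40 defects per 1000 LOC that escape to post-delivery. The names `Estimate` and `estimate_effort` are hypothetical and introduced only for this example.

```python
from typing import NamedTuple

FP_PER_KLOC_C = 8.0            # 8 function points per 1000 LOC of C code
DEFECTS_PER_FP = 5.0           # Capers Jones figure used on the slides
PH_PER_POST_DEFECT = 34.0      # person-hours per post-delivery defect


class Estimate(NamedTuple):
    coding_ph: float
    post_defects: float
    correction_ph: float
    total_ph: float


def estimate_effort(loc: float, loc_per_ph: float, tests_passed: float) -> Estimate:
    """Coding effort plus post-delivery correction effort, in person-hours."""
    coding_ph = loc / loc_per_ph
    total_defects = loc / 1000.0 * FP_PER_KLOC_C * DEFECTS_PER_FP
    # Assumption: defects not caught by the pre-delivery tests surface later.
    post_defects = total_defects * (1.0 - tests_passed)
    correction_ph = post_defects * PH_PER_POST_DEFECT
    return Estimate(coding_ph, post_defects, correction_ph, coding_ph + correction_ph)


solo = estimate_effort(loc=1000, loc_per_ph=25.0, tests_passed=0.75)
pair = estimate_effort(loc=1000, loc_per_ph=21.7, tests_passed=0.85)
print(round(solo.total_ph), round(pair.total_ph))
```

The slide rounds the pair coding effort to 46 p-h, so the sketch agrees with the table up to rounding.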

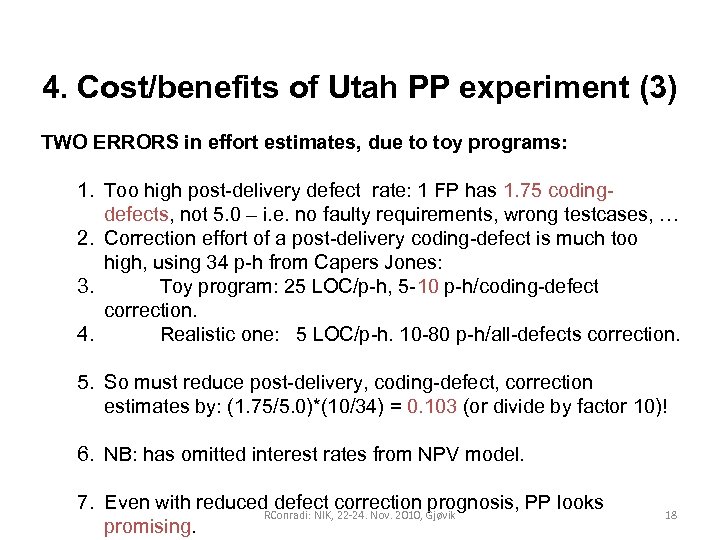

4. Cost/benefits of Utah PP experiment (3)

TWO ERRORS in effort estimates, due to toy programs:

1. Too high post-delivery defect rate: 1 FP has 1.75 coding defects, not 5.0, i.e. no faulty requirements, wrong test cases, …
2. Correction effort of a post-delivery coding defect is much too high, using 34 p-h from Capers Jones:
   - Toy program: 25 LOC/p-h, 5-10 p-h/coding-defect correction.
   - Realistic one: 5 LOC/p-h, 10-80 p-h/all-defects correction.

- So the post-delivery coding-defect correction estimates must be reduced by a factor of (1.75/5.0)*(10/34) = 0.103 (or divided by a factor of 10)!
- NB: interest rates from the NPV model have been omitted.
- Even with the reduced defect correction prognosis, PP looks promising.
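The reduction factor and the revised totals can be checked with a few lines of arithmetic. This is a sketch that takes the rounded figures from the slides as inputs (40 and 46 p-h coding, 340 and 204 p-h unrevised correction); `revised_total` is a hypothetical helper name.

```python
# Reduction factor: fewer coding defects per FP, and cheaper fixes per defect.
defect_ratio = 1.75 / 5.0      # coding defects only vs. all defects per FP
effort_ratio = 10.0 / 34.0     # p-h per toy-program fix vs. Capers Jones figure
factor = defect_ratio * effort_ratio


def revised_total(coding_ph: float, correction_ph: float, reduction: float) -> float:
    """Coding effort plus the scaled-down post-delivery correction effort."""
    return coding_ph + correction_ph * reduction


solo_revised = revised_total(40.0, 340.0, factor)
pair_revised = revised_total(46.0, 204.0, factor)
print(round(factor, 3), round(solo_revised), round(pair_revised))
```

With the revised figures the pairs keep an advantage of about 8 p-h per 1000 LOC, far smaller than the 130 p-h gap in the unrevised totals.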

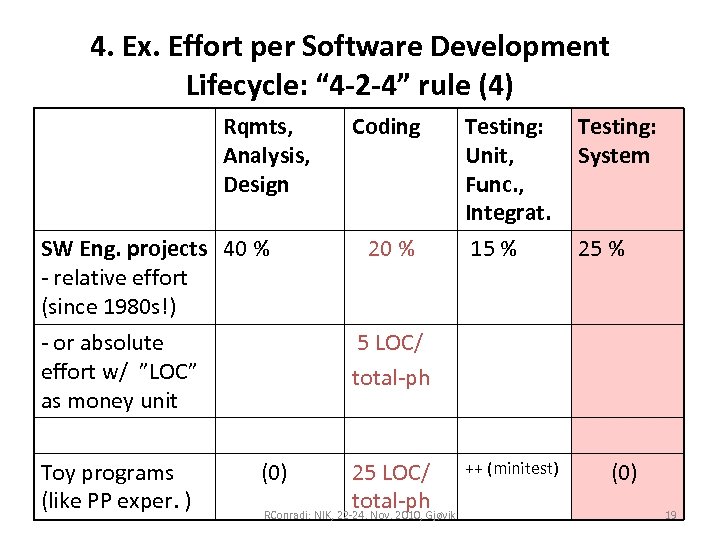

4. Ex. Effort per Software Development Lifecycle: “4-2-4” rule (4)

| | Rqmts, Analysis, Design | Coding | Testing: Unit, Func., Integrat. | Testing: System | Productivity |
|---|---|---|---|---|---|
| SW Eng. projects: relative effort (since 1980s!), or absolute effort with “LOC” as money unit | 40% | 20% | 15% | 25% | 5 LOC/total-ph |
| Toy programs (like PP exper.) | (0) | ++ | (minitest) | (0) | 25 LOC/total-ph |
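One way to read the two productivity figures is that a coding rate of 25 LOC per coding hour shrinks to 5 LOC per total hour once coding is only 20% of the lifecycle effort. The sketch below works through that reading; the dictionary keys and function names are hypothetical and introduced only for illustration.

```python
# Relative effort per lifecycle phase under the "4-2-4" rule.
LIFECYCLE_SHARES = {
    "rqmts_analysis_design": 0.40,
    "coding": 0.20,
    "testing_unit_func_integration": 0.15,
    "testing_system": 0.25,
}


def total_effort_ph(coding_ph: float) -> float:
    """Scale coding effort up to full-lifecycle effort."""
    return coding_ph / LIFECYCLE_SHARES["coding"]


def loc_per_total_ph(loc_per_coding_ph: float) -> float:
    """Productivity over the whole lifecycle, given the coding-only rate."""
    return loc_per_coding_ph * LIFECYCLE_SHARES["coding"]


project_ph = total_effort_ph(coding_ph=40.0)      # 1000 LOC at 25 LOC/p-h
lifecycle_rate = loc_per_total_ph(25.0)
print(project_ph, lifecycle_rate)
```

Under this reading, 1000 LOC that take 40 p-h to code correspond to roughly 200 p-h of total project effort.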

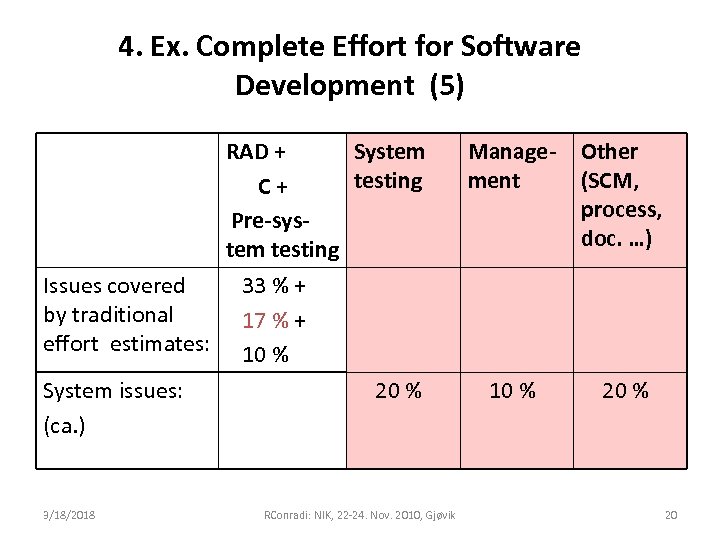

4. Ex. Complete Effort for Software Development (5)

RAD + System testing; C + Pre-system testing; Issues covered by traditional effort estimates; System issues (ca.); Management; Other (SCM, process, doc. …): 10%, 20%, 33% + 17% + 10%, 20%

5. Reflections (1)

RQ1: How to promote more high-quality (rigorous, “comparable”) primary studies?

- Better teaching?
- Refusing to publish poor empirical papers?
- But how can a PhD student get sufficient credit for being, e.g., the 73rd replicator of experiment XX and the 27th replicator of case study YY?
- Lastly, can ISERN (International Software Engineering Research Network, http://isern.iese.de) play a role as an advisor, coordinator, or shared archive here? Treatments cannot be made public, either!
- Above all: cultural changes needed! Meta-study cooperation in Cochrane style? Must demonstrate practical use.

5. Reflections (2)

RQ2: How to define a partially standard set of metrics for software?

- Code size: a volume measure for code (in LOC or function points), plus neutral, automatic, and free measurement tools to perform reliable size calculations. NB: “much” code is no goal in itself, only the minimum amount needed to satisfy the specifications. Also distinguish between new development, as for Utah, and maintenance, as for Simula.
- Quality: e.g. defined as defect rate (measured pre- or post-delivery).
- Effort (p-h): must account for software reuse.
- Duration (wall-clock hours).
- Personal background and similar context.
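A shared record format is one possible way to make such metrics comparable across primary studies. The sketch below is only an assumption about what a minimal record could look like; the class `StudyMetrics` and all its field names are hypothetical and do not come from the slides or from any existing standard.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class StudyMetrics:
    """Hypothetical minimal record of the metrics listed above for one study."""
    study_id: str
    participants: int
    professionals: bool                  # personal background
    new_development: bool                # False means maintenance
    code_size_loc: Optional[int] = None
    defects_pre_delivery: Optional[int] = None
    defects_post_delivery: Optional[int] = None
    effort_person_hours: Optional[float] = None
    duration_hours: Optional[float] = None

    def missing(self) -> List[str]:
        """Names of the metric fields that this study did not report."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def post_delivery_defects_per_kloc(self) -> Optional[float]:
        """Defect rate per 1000 LOC, or None if size or defects are unknown."""
        if not self.code_size_loc or self.defects_post_delivery is None:
            return None
        return self.defects_post_delivery / (self.code_size_loc / 1000.0)


# Context fields for the Utah study as given on the slides; metrics left unreported.
utah = StudyMetrics("P17", participants=41, professionals=False, new_development=True)
print(utah.missing())
```

Making missing values explicit, rather than silently omitting them, is what would let a later meta-analysis see at a glance which studies can be combined on which variable.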

5. Reflections (3)

Research challenge: how to study and promote the social and cognitive aspects of PP?

- Researchers should rather study the qualitative, socio-technical benefits of PP, since the quantitative effects on defects and effort seem to be slight anyway (±15%).
- Such aspects should then be studied in longitudinal case studies in industrial settings, and go deeper than finding PP to be “fun” to do.
- So, more PP studies on learning, human factors, teamwork (e.g. job rotation), and knowledge management.
- And it is not clear whether PP is well suited for novices to learn from experts, or whether other team structures are more suitable.

6. Conclusion

- Serious problems in aggregating findings from PP experiments, due to heterogeneous contextual variables and missing data.
- Hence, using SLRs for EBSE becomes difficult.
- Since the overall cost/benefits of adopting PP seem slight (±10-15%), we may rather apply and investigate PP to promote teamwork and learning.
- Need experience-based cost/benefit models and estimates to compose teams optimally.
