0b4681915ea1bb5ead1250d17fc7cc68.ppt

- Number of slides: 45

Contextual Vocabulary Acquisition: From Algorithm to Curriculum
William J. Rapaport* and Michael W. Kibby**
(*) Department of Computer Science & Engineering, Department of Philosophy, Department of Linguistics, and Center for Cognitive Science
(**) Department of Learning & Instruction and Center for Literacy & Reading Instruction
http://www.cse.buffalo.edu/~rapaport/CVA/

Research Foci • Learning and the Lexicon

Research Focus
• Learning the Lexicon
  – Not primarily “child language acquisition”
  – Adolescent & adult language expansion
    • & 2nd-language acquisition

Contextual Vocabulary Acquisition
• Active, conscious acquisition of a meaning for a word, as it occurs in a text, by reasoning from “context”
• CVA = what you do when:
  – You’re reading
  – You come to an unfamiliar word
  – It’s important for understanding the passage
  – No one’s around to ask
  – Dictionary doesn’t help
• So, you “figure out” a meaning for the word “from context”
  – “figure out” = infer (compute) a hypothesis about what the word might mean in that text
  – “context” = ??

![What Does ‘Brachet’ Mean? (From Malory’s Morte D’Arthur)](https://present5.com/presentation/0b4681915ea1bb5ead1250d17fc7cc68/image-6.jpg)

What Does ‘Brachet’ Mean? (From Malory’s Morte D’Arthur [page # in brackets])
1. There came a white hart running into the hall with a white brachet next to him, and thirty couples of black hounds came running after them. [66]
2. As the hart went by the sideboard, the white brachet bit him. [66]
3. The knight arose, took up the brachet and rode away with the brachet. [66]
4. A lady came in and cried aloud to King Arthur, “Sire, the brachet is mine”. [66]
5. There was the white brachet which bayed at him fast. [72]
18. The hart lay dead; a brachet was biting on his throat, and other hounds came behind. [86]

Why Dictionaries Don’t Help
• Most words are learned via incidental CVA, not via dictionaries
  – Incidental (unconscious) CVA is the best explanation of how we learn vocabulary:
    • Given the # of words a high-school grad knows (~45K) & the # of years to learn them (~18), that is ~2.5K words/year
    • But only ~10% are taught in 12 school years
• Most importantly:
  – Dictionary definitions are just more contexts!
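The arithmetic behind this estimate is easy to check. The sketch below uses only the slide's own approximate figures; the split into "taught" and "learned otherwise" is derived from them, not a separate measurement.

```python
# Rough vocabulary-growth arithmetic using the slide's approximate figures.
WORDS_KNOWN = 45_000   # ~45K words known by a high-school graduate
YEARS = 18             # ~18 years to learn them
PERCENT_TAUGHT = 10    # ~10% explicitly taught in 12 school years

words_per_year = WORDS_KNOWN / YEARS
taught = WORDS_KNOWN * PERCENT_TAUGHT // 100
learned_otherwise = WORDS_KNOWN - taught

print(f"{words_per_year:.0f} words/year")  # 2500 words/year
print(f"{taught} taught, {learned_otherwise} learned some other way")
```

On these numbers, roughly 40K words must come from somewhere other than direct instruction, which is the gap incidental CVA is meant to explain.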

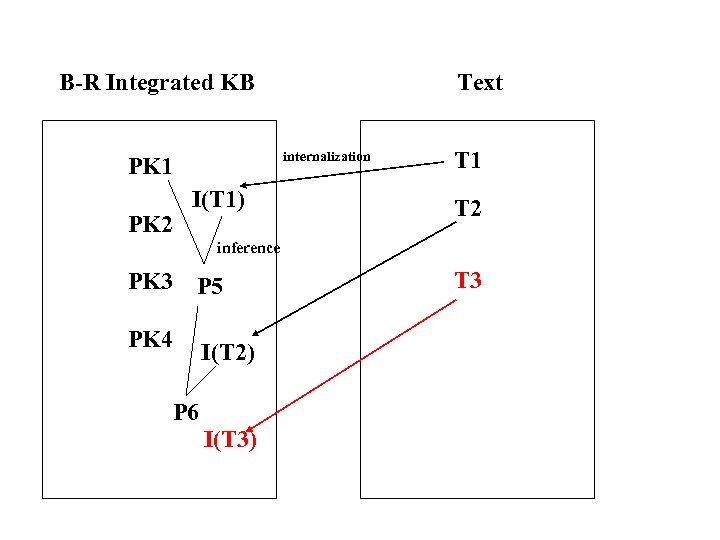

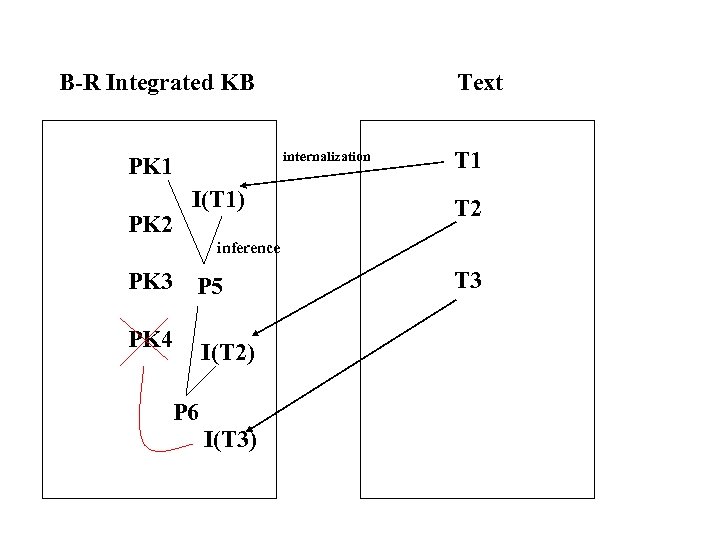

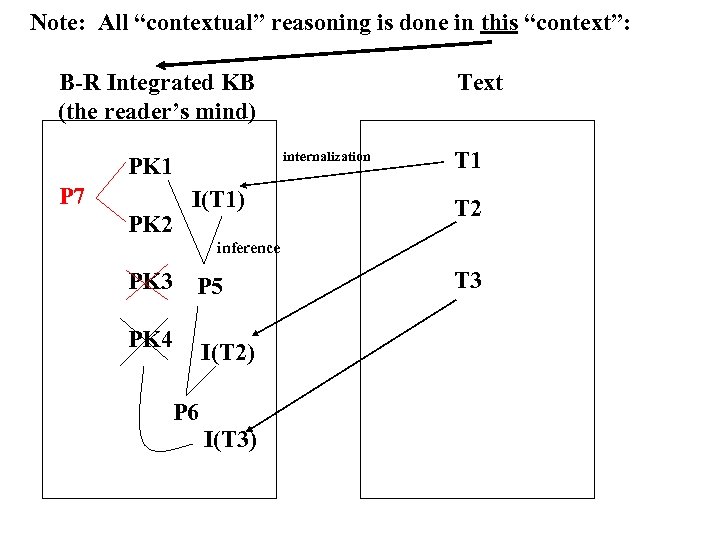

What Is the “Context” for CVA?
• “context” ≠ textual context
  – surrounding words; “co-text” of word
• “context” = wide context =
  – “internalized” co-text …
    • ≈ reader’s interpretive mental model of textual “co-text”
  – … “integrated” with reader’s prior knowledge …
    • “world” knowledge
    • language knowledge
    • previous hypotheses about word’s meaning
    • but not including external sources (dictionary, humans)
  – … via belief revision
    • infer new beliefs from internalized co-text + prior knowledge
    • remove inconsistent beliefs
“Context” for CVA is in reader’s mind, not in the text

Prior Knowledge PK 1 PK 2 PK 3 PK 4 Text

Prior Knowledge PK 1 PK 2 PK 3 PK 4 Text T 1

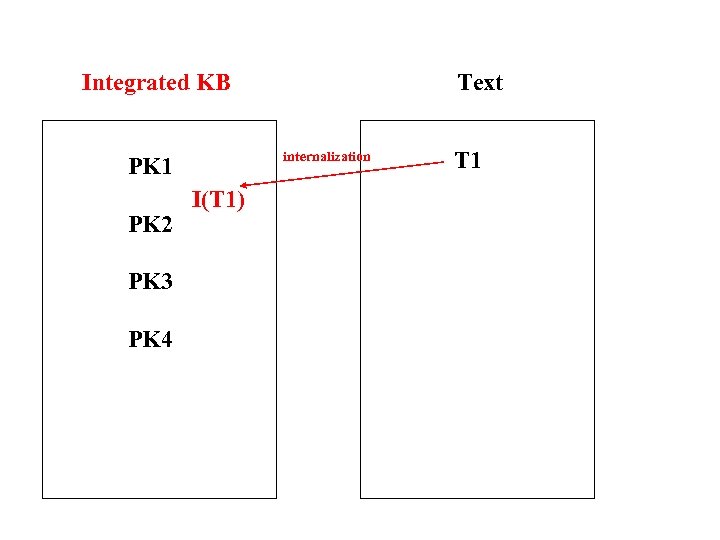

Integrated KB internalization PK 1 PK 2 PK 3 PK 4 Text I(T 1) T 1

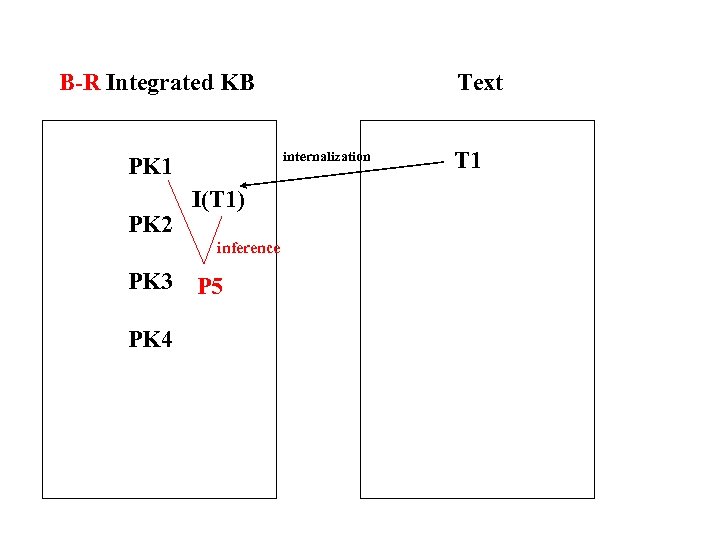

B-R Integrated KB internalization PK 1 PK 2 I(T 1) inference PK 3 P 5 PK 4 Text T 1

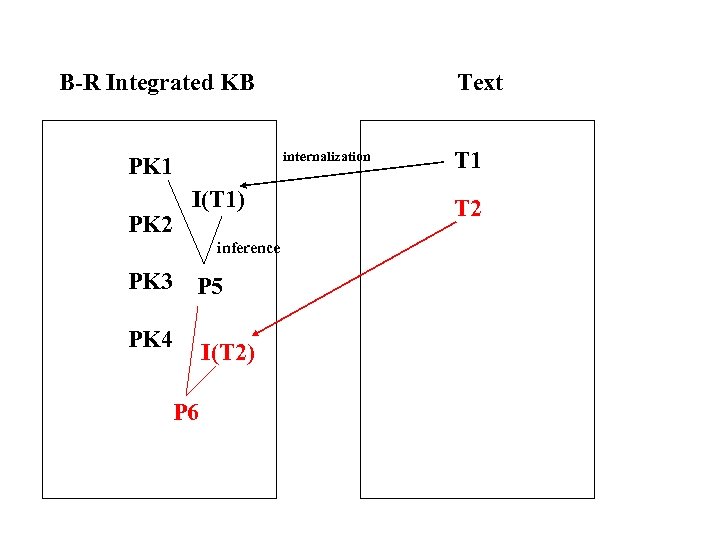

B-R Integrated KB internalization PK 1 PK 2 Text I(T 1) inference PK 3 P 5 PK 4 P 6 I(T 2) T 1 T 2

B-R Integrated KB internalization PK 1 PK 2 Text I(T 1) T 1 T 2 inference PK 3 P 5 PK 4 I(T 2) P 6 I(T 3) T 3

B-R Integrated KB internalization PK 1 PK 2 Text I(T 1) T 1 T 2 inference PK 3 P 5 PK 4 I(T 2) P 6 I(T 3) T 3

Note: All “contextual” reasoning is done in this “context”: B-R Integrated KB (the reader’s mind) internalization PK 1 P 7 PK 2 Text I(T 1) T 1 T 2 inference PK 3 P 5 PK 4 I(T 2) P 6 I(T 3) T 3
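The internalize, infer, revise cycle in the diagram can be pictured as a toy belief store. This is a hypothetical Python illustration, not SNePS: beliefs are plain strings, a prior-knowledge rule is a (premises, conclusion) pair, and belief revision is reduced to giving up any belief whose explicit negation ("not …") is also believed.

```python
from dataclasses import dataclass, field

Rule = tuple[frozenset[str], str]  # (premises, conclusion); hypothetical encoding


@dataclass
class IntegratedKB:
    """Toy 'wide context': prior knowledge plus internalized co-text."""
    beliefs: set[str] = field(default_factory=set)
    rules: list[Rule] = field(default_factory=list)
    rejected: set[str] = field(default_factory=set)

    def internalize(self, sentence: str) -> None:
        """Add I(T), the reader's interpretation of sentence T, then infer and revise."""
        self.beliefs.add(sentence)
        self._infer()
        self._revise()

    def _infer(self) -> None:
        # Forward-chain prior-knowledge rules until nothing new is derived.
        changed = True
        while changed:
            changed = False
            for premises, conclusion in self.rules:
                if (premises <= self.beliefs and conclusion not in self.beliefs
                        and conclusion not in self.rejected):
                    self.beliefs.add(conclusion)
                    changed = True

    def _revise(self) -> None:
        # Crude belief revision: if both B and "not B" are believed, give up B.
        for belief in list(self.beliefs):
            if belief.startswith("not ") and belief[4:] in self.beliefs:
                self.beliefs.discard(belief[4:])
                self.rejected.add(belief[4:])


kb = IntegratedKB(
    beliefs={"brachet is a deer"},  # a previous (wrong) hypothesis, part of PK
    rules=[
        (frozenset({"brachet bays"}), "brachet is a dog"),
        (frozenset({"brachet is a dog"}), "not brachet is a deer"),
    ],
)
kb.internalize("brachet bays")
print(sorted(kb.beliefs))
# ['brachet bays', 'brachet is a dog', 'not brachet is a deer']
```

The earlier hypothesis is dropped once the new co-text, combined with prior knowledge, contradicts it; real belief revision in the implemented system is considerably more careful about which belief to retract.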

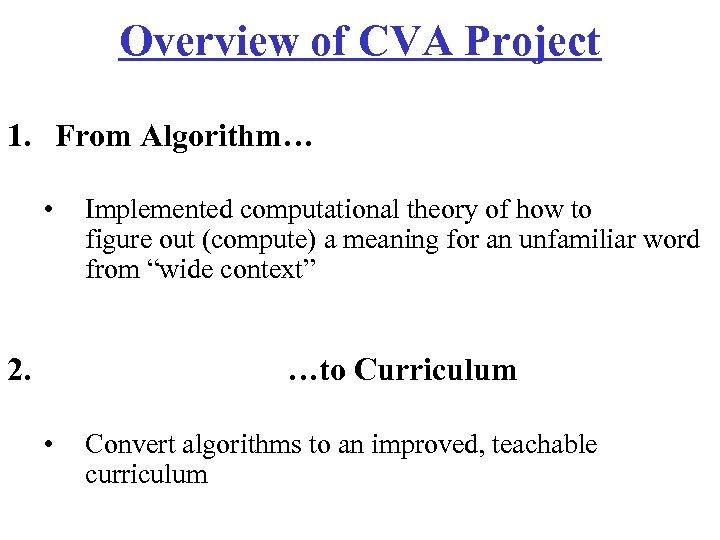

Overview of CVA Project
1. From Algorithm…
  • Implemented computational theory of how to figure out (compute) a meaning for an unfamiliar word from “wide context”
2. …to Curriculum
  • Convert algorithms to an improved, teachable curriculum

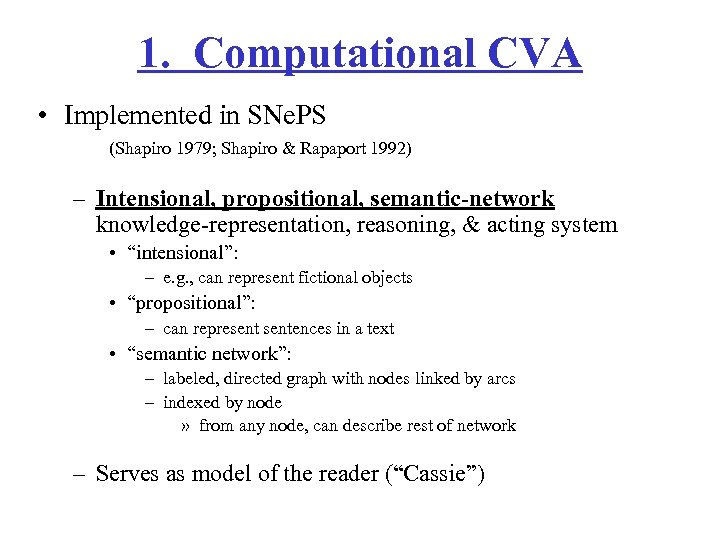

1. Computational CVA
• Implemented in SNePS (Shapiro 1979; Shapiro & Rapaport 1992)
  – Intensional, propositional, semantic-network knowledge-representation, reasoning, & acting system
    • “intensional”:
      – e.g., can represent fictional objects
    • “propositional”:
      – can represent sentences in a text
    • “semantic network”:
      – labeled, directed graph with nodes linked by arcs
      – indexed by node
        » from any node, can describe rest of network
  – Serves as model of the reader (“Cassie”)

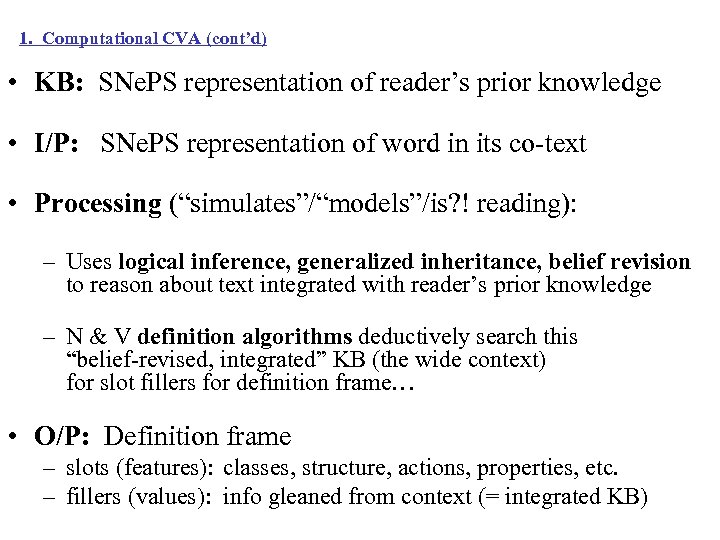

1. Computational CVA (cont’d)
• KB: SNePS representation of reader’s prior knowledge
• I/P: SNePS representation of word in its co-text
• Processing (“simulates”/“models”/is?! reading):
  – Uses logical inference, generalized inheritance, belief revision to reason about text integrated with reader’s prior knowledge
  – N & V definition algorithms deductively search this “belief-revised, integrated” KB (the wide context) for slot fillers for definition frame…
• O/P: Definition frame
  – slots (features): classes, structure, actions, properties, etc.
  – fillers (values): info gleaned from context (= integrated KB)
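A definition frame is essentially a record of named slots whose fillers accumulate as the wide context is searched. The sketch below is a hypothetical Python rendering: the slot names mirror the brachet output on the following slides, but the class and method names are assumptions, not SNePS's.

```python
from dataclasses import dataclass, field


@dataclass
class DefinitionFrame:
    """Hypothetical definition frame: slots (features) mapped to fillers (values)."""
    word: str
    slots: dict[str, list[str]] = field(default_factory=dict)

    def fill(self, slot: str, value: str) -> None:
        # Record a filler once, keeping the order in which it was found.
        fillers = self.slots.setdefault(slot, [])
        if value not in fillers:
            fillers.append(value)

    def report(self) -> str:
        lines = [f"Definition of {self.word}:"]
        lines += [f"  {slot}: {', '.join(vals)}" for slot, vals in self.slots.items()]
        return "\n".join(lines)


frame = DefinitionFrame("brachet")
frame.fill("Class Inclusions", "phys obj")
frame.fill("Possible Properties", "white")
frame.fill("Possible Properties", "white")  # duplicate filler is ignored
print(frame.report())
```

Keeping fillers as ordered lists rather than sets makes the printed frame stable and easy to compare against a reader's own notes.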

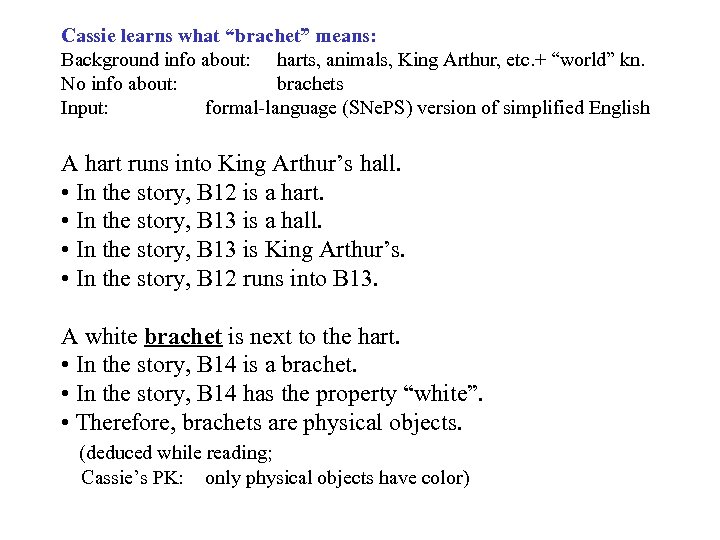

Cassie learns what “brachet” means:
Background info about: harts, animals, King Arthur, etc. + “world” knowledge
No info about: brachets
Input: formal-language (SNePS) version of simplified English
A hart runs into King Arthur’s hall.
• In the story, B12 is a hart.
• In the story, B13 is a hall.
• In the story, B13 is King Arthur’s.
• In the story, B12 runs into B13.
A white brachet is next to the hart.
• In the story, B14 is a brachet.
• In the story, B14 has the property “white”.
• Therefore, brachets are physical objects. (deduced while reading; Cassie’s PK: only physical objects have color)
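As a rough, hypothetical stand-in for the SNePS network, the story propositions can be stored as (node, relation, value) triples, with the one prior-knowledge rule on this slide ("only physical objects have color") applied to them. The relation names and the color list are assumptions; like the slide, the sketch generalizes from the one colored brachet (B14) to the class.

```python
# Story propositions as (node, relation, value) triples; B12-B14 follow the slide.
story = {
    ("B12", "isa", "hart"),
    ("B13", "isa", "hall"),
    ("B13", "owner", "King Arthur"),
    ("B12", "runs-into", "B13"),
    ("B14", "isa", "brachet"),
    ("B14", "property", "white"),
}

COLORS = {"white", "black"}  # assumed fragment of Cassie's prior knowledge


def deduce_physical_objects(facts):
    """PK rule: only physical objects have color, so a colored thing's class is physical."""
    derived = set()
    for node, rel, value in facts:
        if rel == "property" and value in COLORS:
            for other, rel2, cls in facts:
                if other == node and rel2 == "isa":
                    derived.add((cls, "subclass-of", "phys obj"))
    return derived


print(deduce_physical_objects(story))  # {('brachet', 'subclass-of', 'phys obj')}
```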

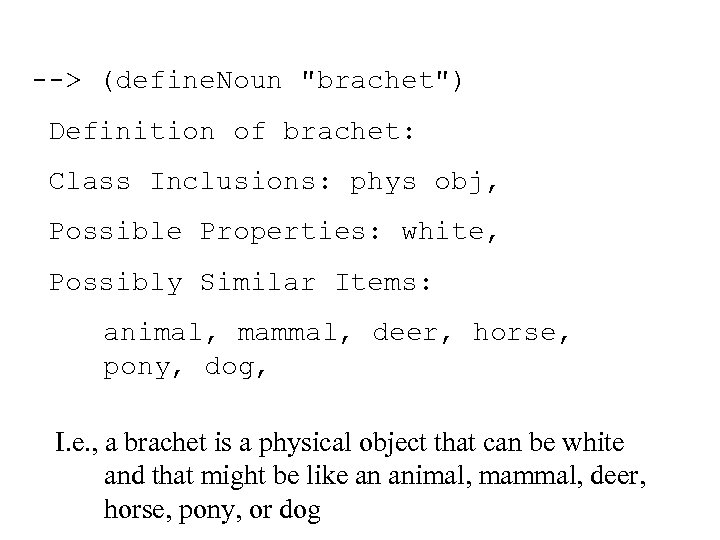

--> (defineNoun "brachet")
Definition of brachet:
  Class Inclusions: phys obj,
  Possible Properties: white,
  Possibly Similar Items: animal, mammal, deer, horse, pony, dog,
I.e., a brachet is a physical object that can be white and that might be like an animal, mammal, deer, horse, pony, or dog

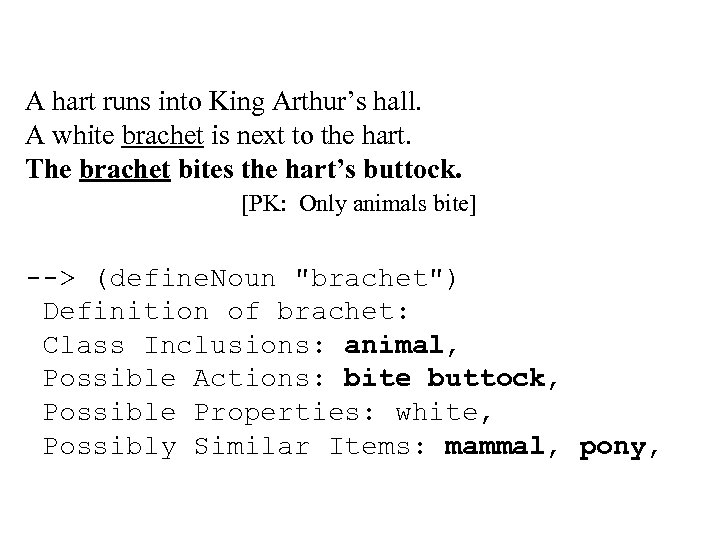

A hart runs into King Arthur’s hall. A white brachet is next to the hart. The brachet bites the hart’s buttock. [PK: Only animals bite]
--> (defineNoun "brachet")
Definition of brachet:
  Class Inclusions: animal,
  Possible Actions: bite buttock,
  Possible Properties: white,
  Possibly Similar Items: mammal, pony,

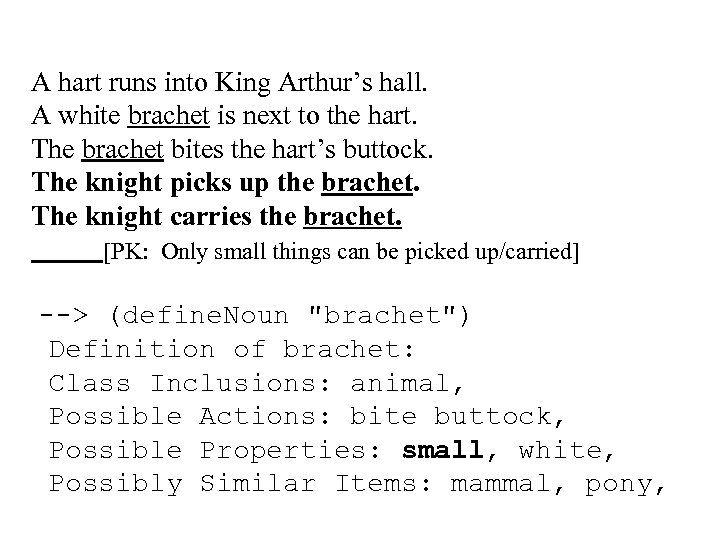

A hart runs into King Arthur’s hall. A white brachet is next to the hart. The brachet bites the hart’s buttock. The knight picks up the brachet. The knight carries the brachet. [PK: Only small things can be picked up/carried]
--> (defineNoun "brachet")
Definition of brachet:
  Class Inclusions: animal,
  Possible Actions: bite buttock,
  Possible Properties: small, white,
  Possibly Similar Items: mammal, pony,

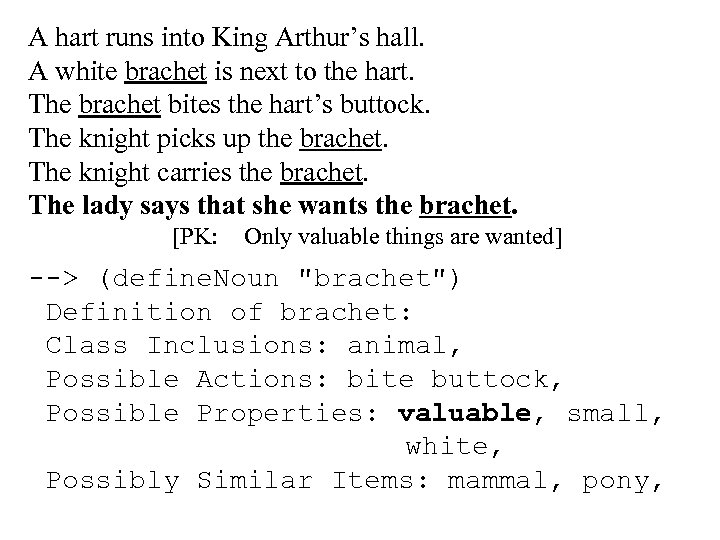

A hart runs into King Arthur’s hall. A white brachet is next to the hart. The brachet bites the hart’s buttock. The knight picks up the brachet. The knight carries the brachet. The lady says that she wants the brachet. [PK: Only valuable things are wanted]
--> (defineNoun "brachet")
Definition of brachet:
  Class Inclusions: animal,
  Possible Actions: bite buttock,
  Possible Properties: valuable, small, white,
  Possibly Similar Items: mammal, pony,

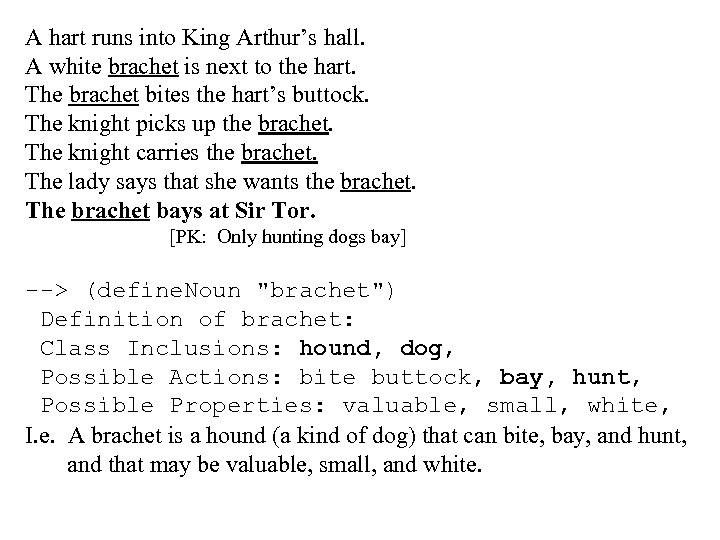

A hart runs into King Arthur’s hall. A white brachet is next to the hart. The brachet bites the hart’s buttock. The knight picks up the brachet. The knight carries the brachet. The lady says that she wants the brachet. The brachet bays at Sir Tor. [PK: Only hunting dogs bay]
--> (defineNoun "brachet")
Definition of brachet:
  Class Inclusions: hound, dog,
  Possible Actions: bite buttock, bay, hunt,
  Possible Properties: valuable, small, white,
I.e., a brachet is a hound (a kind of dog) that can bite, bay, and hunt, and that may be valuable, small, and white.
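The progression across these slides, where each new sentence plus one prior-knowledge rule narrows the hypothesis, can be mimicked with a small rule table. The clue strings, the table, and the "a more specific class replaces the earlier one" policy are illustrative assumptions; Cassie's actual reasoning runs over the SNePS network, not over string lookups.

```python
# Hypothetical PK rules: clue in the co-text -> (slot, filler) it licenses.
PK_RULES = {
    "is white": ("Possible Properties", "white"),
    "bites": ("Class Inclusions", "animal"),           # only animals bite
    "is carried": ("Possible Properties", "small"),    # only small things are carried
    "is wanted": ("Possible Properties", "valuable"),  # only valuable things are wanted
    "bays": ("Class Inclusions", "hound"),             # only hunting dogs bay
}


def update(hypothesis: dict[str, list[str]], clue: str) -> None:
    """Revise the hypothesis in place using the PK rule for this clue."""
    slot, filler = PK_RULES[clue]
    if slot == "Class Inclusions":
        # A more specific class replaces the earlier, more general one.
        hypothesis[slot] = [filler]
    elif filler not in hypothesis.setdefault(slot, []):
        hypothesis[slot].insert(0, filler)  # newest property first, as on the slides


hypothesis = {"Class Inclusions": ["phys obj"]}
for clue in ["is white", "bites", "is carried", "is wanted", "bays"]:
    update(hypothesis, clue)
    print(clue, "->", hypothesis)
```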

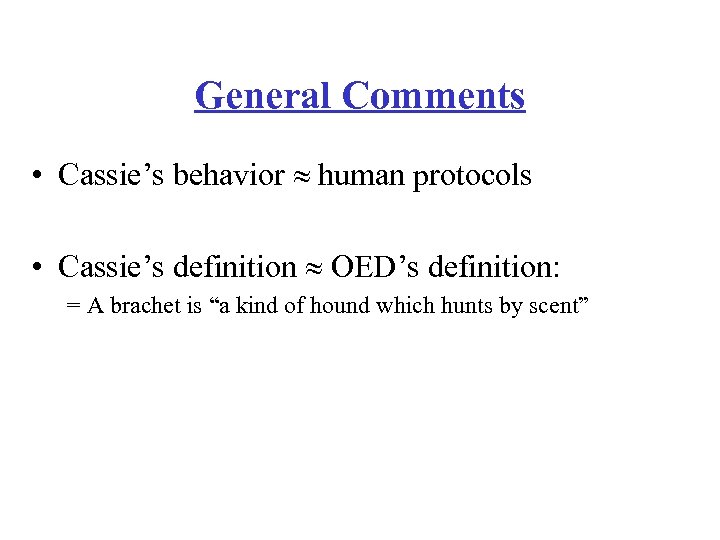

General Comments
• Cassie’s behavior ≈ human protocols
• Cassie’s definition ≈ OED’s definition:
  = A brachet is “a kind of hound which hunts by scent”

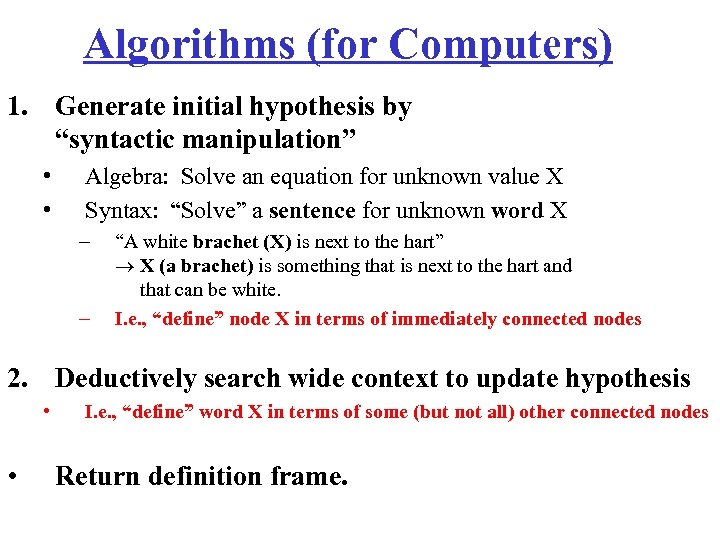

Algorithms (for Computers)
1. Generate initial hypothesis by “syntactic manipulation”
  • Algebra: Solve an equation for unknown value X
  • Syntax: “Solve” a sentence for unknown word X
    – “A white brachet (X) is next to the hart”
    – X (a brachet) is something that is next to the hart and that can be white.
  • I.e., “define” node X in terms of immediately connected nodes
2. Deductively search wide context to update hypothesis
  • I.e., “define” word X in terms of some (but not all) other connected nodes
  • Return definition frame.
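Step 1 ("define node X in terms of immediately connected nodes") can be pictured on a tiny labeled, directed graph: the initial hypothesis collects only the arcs that touch X. The arc list and labels below are assumptions for illustration, not SNePS case frames; step 2's deeper search of the wide context is not shown.

```python
# Labeled, directed arcs: (source, label, target). "X" is the unknown word's node.
arcs = [
    ("X", "next-to", "hart"),
    ("X", "property", "white"),
    ("hart", "isa", "deer"),  # not adjacent to X, so ignored by the initial hypothesis
]


def initial_hypothesis(node: str, graph: list[tuple[str, str, str]]) -> list[str]:
    """Describe `node` using only the arcs directly connected to it."""
    clues = []
    for src, label, dst in graph:
        if src == node:
            clues.append(f"{node} {label} {dst}")
        elif dst == node:
            clues.append(f"{src} {label} {node}")
    return clues


print(initial_hypothesis("X", arcs))  # ['X next-to hart', 'X property white']
```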

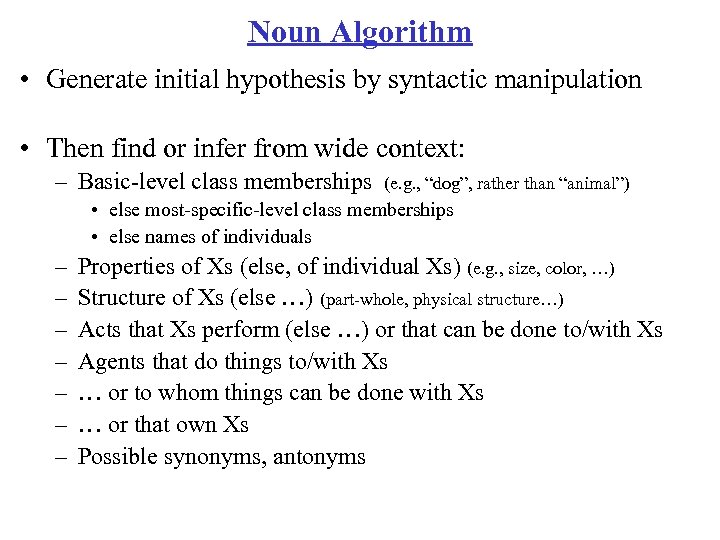

Noun Algorithm
• Generate initial hypothesis by syntactic manipulation
• Then find or infer from wide context:
  – Basic-level class memberships (e.g., “dog”, rather than “animal”)
    • else most-specific-level class memberships
    • else names of individuals
  – Properties of Xs (else, of individual Xs) (e.g., size, color, …)
  – Structure of Xs (else …) (part-whole, physical structure…)
  – Acts that Xs perform (else …) or that can be done to/with Xs
  – Agents that do things to/with Xs … or to whom things can be done with Xs … or that own Xs
  – Possible synonyms, antonyms
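The "else" chain for class membership is a simple fallback: prefer basic-level classes, then the most specific class found, then names of individuals. The sketch assumes a hypothetical set of basic-level categories and a class list already ordered from most to least specific.

```python
BASIC_LEVEL = {"dog", "bird", "chair"}  # assumed basic-level categories


def report_class(classes: list[str], individuals: list[str]) -> list[str]:
    """`classes` is ordered from most specific to most general."""
    basic = [c for c in classes if c in BASIC_LEVEL]
    if basic:
        return basic            # basic-level class memberships
    if classes:
        return classes[:1]      # else most-specific-level class membership
    return individuals          # else names of individuals


print(report_class(["hound", "dog", "mammal", "animal"], []))  # ['dog']
print(report_class(["mammal", "animal"], []))                  # ['mammal']
print(report_class([], ["Rex"]))                               # ['Rex']
```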

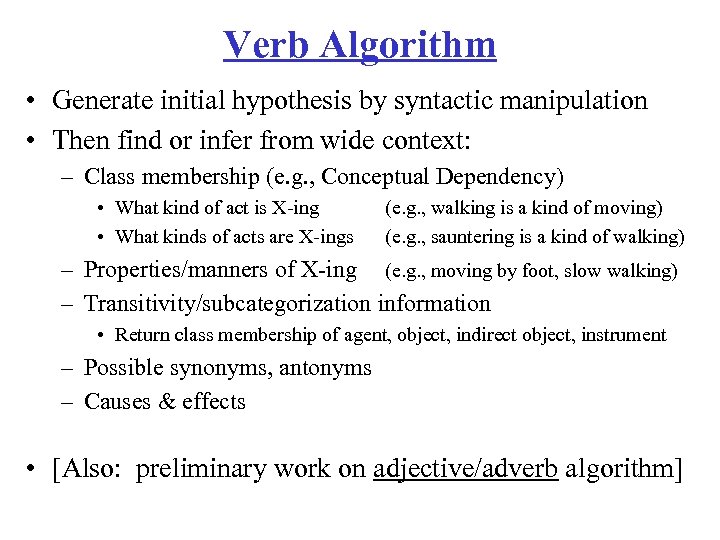

Verb Algorithm
• Generate initial hypothesis by syntactic manipulation
• Then find or infer from wide context:
  – Class membership (e.g., Conceptual Dependency)
    • What kind of act is X-ing (e.g., walking is a kind of moving)
    • What kinds of acts are X-ings (e.g., sauntering is a kind of walking)
  – Properties/manners of X-ing (e.g., moving by foot, slow walking)
  – Transitivity/subcategorization information
    • Return class membership of agent, object, indirect object, instrument
  – Possible synonyms, antonyms
  – Causes & effects
• [Also: preliminary work on adjective/adverb algorithm]
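The two class-membership questions for verbs are lookups up and down a "kind of" hierarchy. A minimal sketch, assuming a hypothetical parent map built from the slide's own examples and assuming the hierarchy has no cycles:

```python
# Hypothetical "is a kind of" map for acts (must be acyclic).
KIND_OF = {"walking": "moving", "sauntering": "walking"}


def superclasses(act: str) -> list[str]:
    """What kind of act is X-ing? Follow kind-of links upward."""
    chain = []
    while act in KIND_OF:
        act = KIND_OF[act]
        chain.append(act)
    return chain


def subclasses(act: str) -> list[str]:
    """What kinds of acts are X-ings? Collect the direct sub-kinds."""
    return [child for child, parent in KIND_OF.items() if parent == act]


print(superclasses("sauntering"))  # ['walking', 'moving']
print(subclasses("walking"))       # ['sauntering']
```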

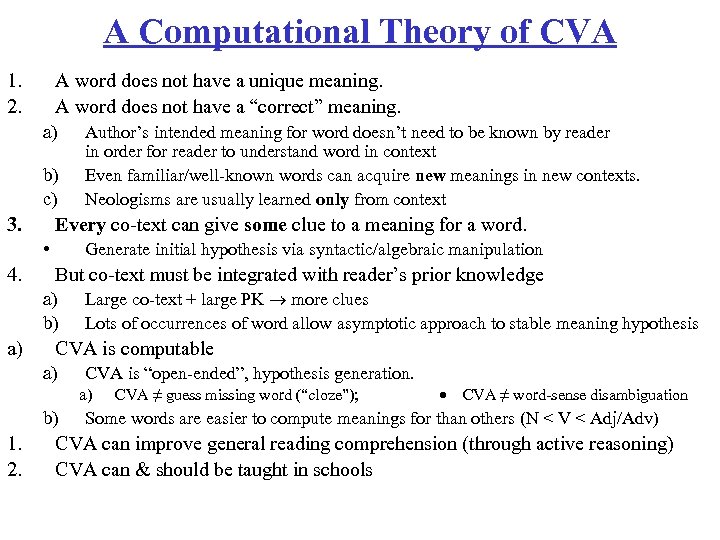

A Computational Theory of CVA
1. A word does not have a unique meaning.
2. A word does not have a “correct” meaning.
  a) Author’s intended meaning for word doesn’t need to be known by reader in order for reader to understand word in context
  b) Even familiar/well-known words can acquire new meanings in new contexts.
  c) Neologisms are usually learned only from context
3. Every co-text can give some clue to a meaning for a word.
  • Generate initial hypothesis via syntactic/algebraic manipulation
4. But co-text must be integrated with reader’s prior knowledge
  a) Large co-text + large PK → more clues
  b) Lots of occurrences of word allow asymptotic approach to stable meaning hypothesis
5. CVA is computable
  a) CVA is “open-ended”, hypothesis generation.
    • CVA ≠ guess missing word (“cloze”)
    • CVA ≠ word-sense disambiguation
  b) Some words are easier to compute meanings for than others (N < V < Adj/Adv)
6. CVA can improve general reading comprehension (through active reasoning)
7. CVA can & should be taught in schools

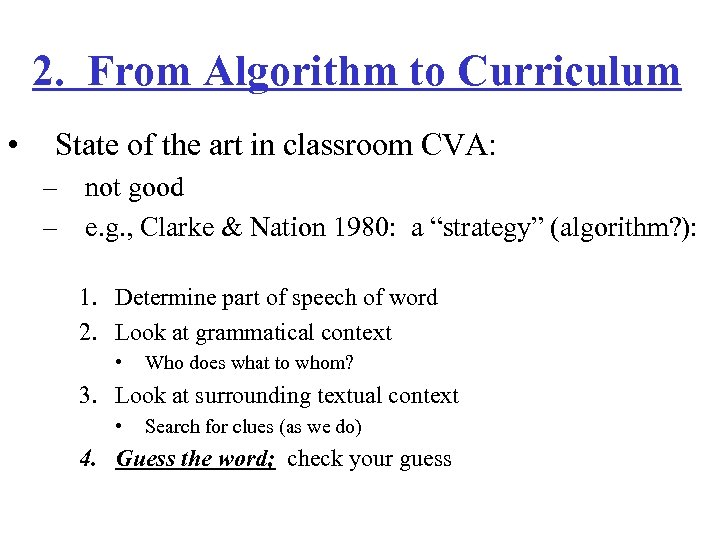

2. From Algorithm to Curriculum
• State of the art in classroom CVA:
  – not good
  – e.g., Clarke & Nation 1980: a “strategy” (algorithm?):
    1. Determine part of speech of word
    2. Look at grammatical context
       • Who does what to whom?
    3. Look at surrounding textual context
       • Search for clues (as we do)
    4. Guess the word; check your guess

CVA: From Algorithm to Curriculum • “guess the word” = “then a miracle occurs” • Surely, computer scientists can “be more explicit”! • And so should teachers!

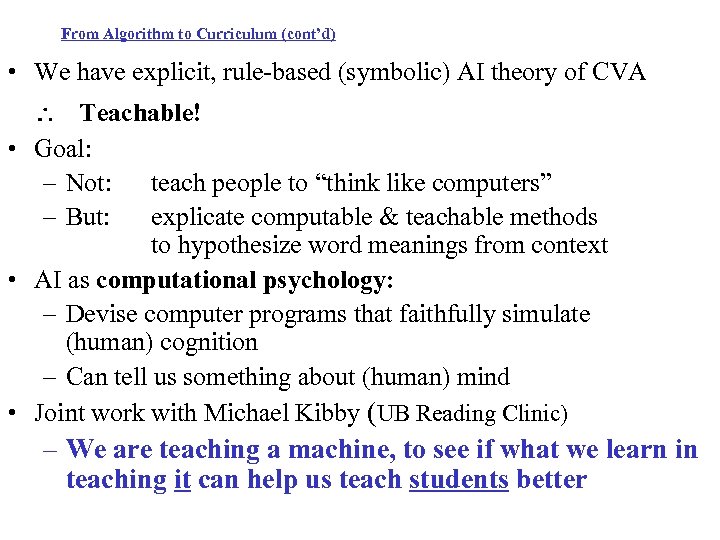

From Algorithm to Curriculum (cont’d)
• We have an explicit, rule-based (symbolic) AI theory of CVA ⇒ teachable!
• Goal:
  – Not: teach people to “think like computers”
  – But: explicate computable & teachable methods to hypothesize word meanings from context
• AI as computational psychology:
  – Devise computer programs that faithfully simulate (human) cognition
  – Can tell us something about (human) mind
• Joint work with Michael Kibby (UB Reading Clinic)
  – We are teaching a machine to see if what we learn in teaching it can help us teach students better

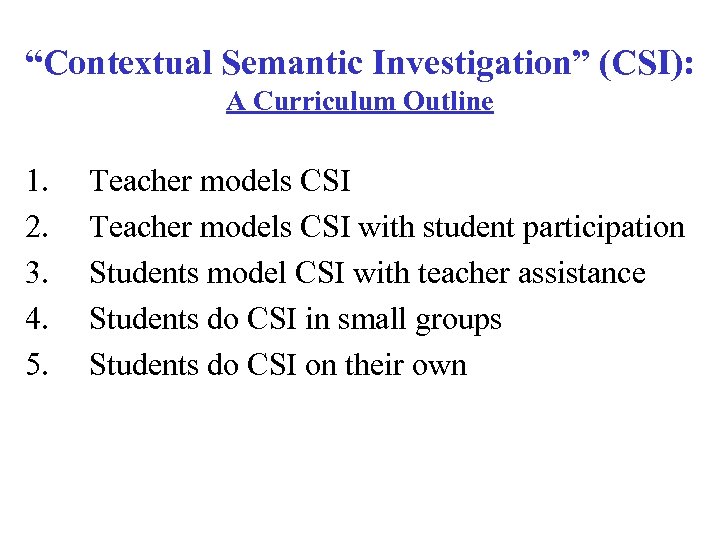

“Contextual Semantic Investigation” (CSI): A Curriculum Outline
1. Teacher models CSI
2. Teacher models CSI with student participation
3. Students model CSI with teacher assistance
4. Students do CSI in small groups
5. Students do CSI on their own

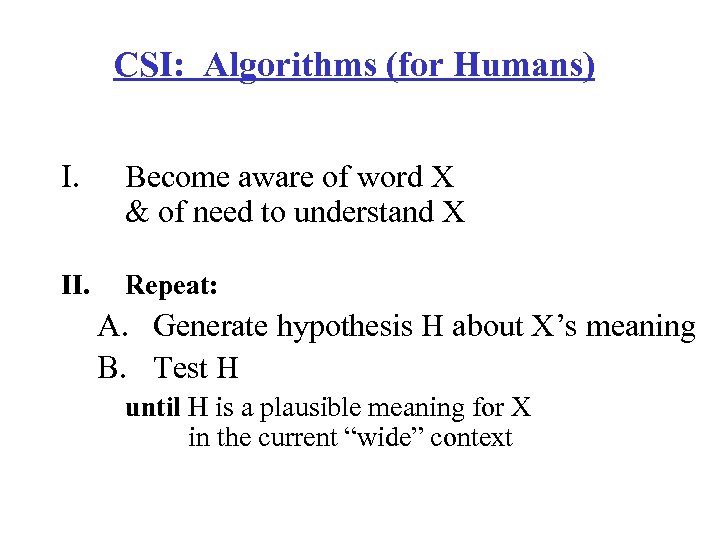

CSI: Algorithms (for Humans)
I. Become aware of word X & of need to understand X
II. Repeat:
  A. Generate hypothesis H about X’s meaning
  B. Test H
  until H is a plausible meaning for X in the current “wide” context
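Step II is a generate-and-test loop. In the sketch below, the hypothesis generator and the plausibility test are hypothetical callables (for a human reader they are judgments, not functions), and the `max_tries` cap is an added assumption so the loop always terminates.

```python
from typing import Callable, Iterable, Optional


def csi(word: str,
        generate: Callable[[str], Iterable[str]],
        plausible: Callable[[str], bool],
        max_tries: int = 10) -> Optional[str]:
    """II. Repeat: A. generate H; B. test H, until H is plausible (or give up)."""
    for tries, h in enumerate(generate(word), start=1):
        if plausible(h):
            return h      # H fits the wide context: proceed with reading
        if tries >= max_tries:
            break         # safety cap, not part of the slide's algorithm
    return None           # no plausible H found yet


def guesses(word: str) -> Iterable[str]:
    # Hypothetical stream of hypotheses for "brachet".
    yield from ["table", "horse", "dog"]


def fits_context(h: str) -> bool:
    # Hypothetical stand-in for the reader's "does this make sense?" judgment.
    return h == "dog"


print(csi("brachet", guesses, fits_context))  # dog
```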

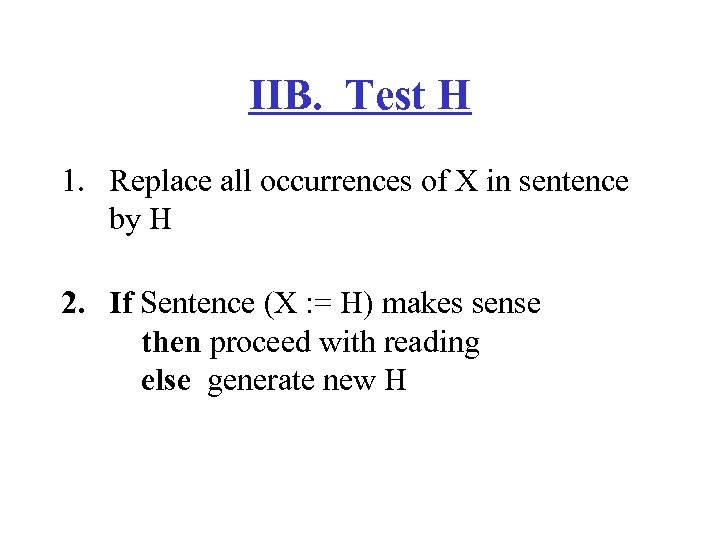

IIB. Test H
1. Replace all occurrences of X in sentence by H
2. If Sentence(X := H) makes sense,
   then proceed with reading
   else generate new H
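Step 1 is a literal substitution, which a whole-word regular expression captures. This is a minimal sketch under one stated simplification: inflected forms such as "brachets" are not handled. The "makes sense" judgment in step 2 stays with the reader.

```python
import re


def substitute(sentence: str, x: str, h: str) -> str:
    """Return Sentence(X := H): every whole-word occurrence of X replaced by H."""
    # A function replacement avoids backslash escapes in H being interpreted.
    return re.sub(rf"\b{re.escape(x)}\b", lambda _m: h, sentence)


s = "The knight arose, took up the brachet and rode away with the brachet."
print(substitute(s, "brachet", "small dog"))
# The knight arose, took up the small dog and rode away with the small dog.
```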

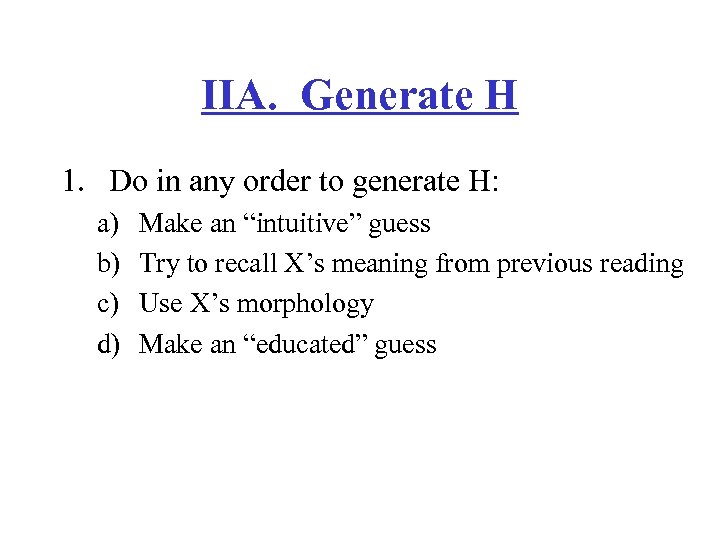

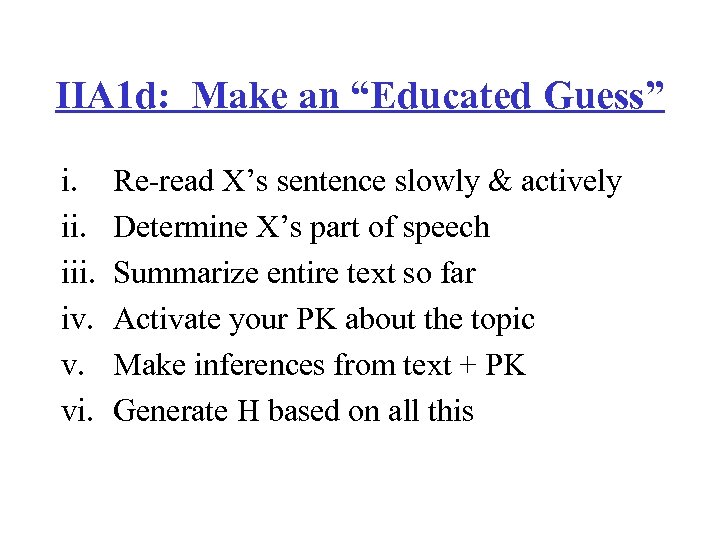

IIA. Generate H
1. Do in any order to generate H:
  a) Make an “intuitive” guess
  b) Try to recall X’s meaning from previous reading
  c) Use X’s morphology
  d) Make an “educated” guess

IIA 1 d: Make an “Educated Guess”
i. Re-read X’s sentence slowly & actively
ii. Determine X’s part of speech
iii. Summarize entire text so far
iv. Activate your PK about the topic
v. Make inferences from text + PK
vi. Generate H based on all this

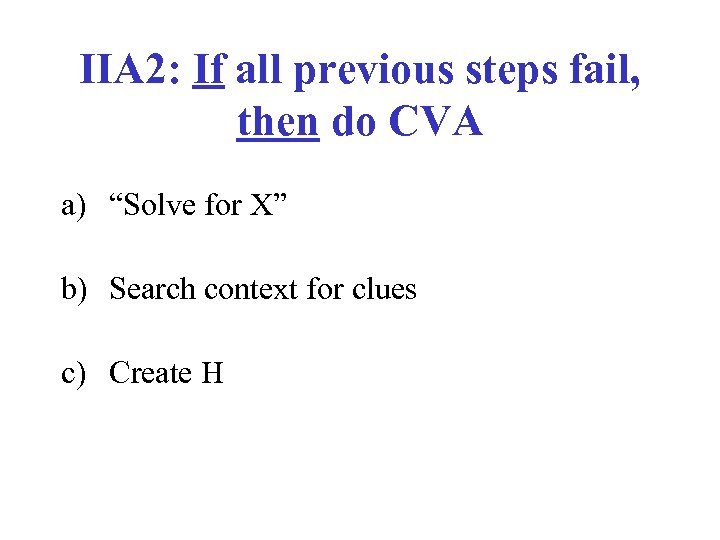

IIA 2: If all previous steps fail, then do CVA
a) “Solve for X”
b) Search context for clues
c) Create H
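Read together, IIA 1 and IIA 2 describe a fallback: try the quick strategies in any order, and only if none yields a hypothesis, do full CVA. The strategy functions below are hypothetical placeholders that return a hypothesis string or `None`.

```python
from typing import Callable, Optional

Strategy = Callable[[str], Optional[str]]


def generate_h(word: str, quick: list[Strategy], cva: Strategy) -> Optional[str]:
    """IIA: try the quick strategies (1 a-d) first; fall back to CVA (2 a-c)."""
    for strategy in quick:
        h = strategy(word)
        if h is not None:
            return h
    return cva(word)


def recall(word: str) -> Optional[str]:
    return None  # hypothetical: no memory of the word from previous reading


def morphology(word: str) -> Optional[str]:
    return None  # hypothetical: no helpful word parts


def full_cva(word: str) -> Optional[str]:
    return "a kind of hound"  # hypothetical result of solve, search, create


print(generate_h("brachet", [recall, morphology], full_cva))  # a kind of hound
```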

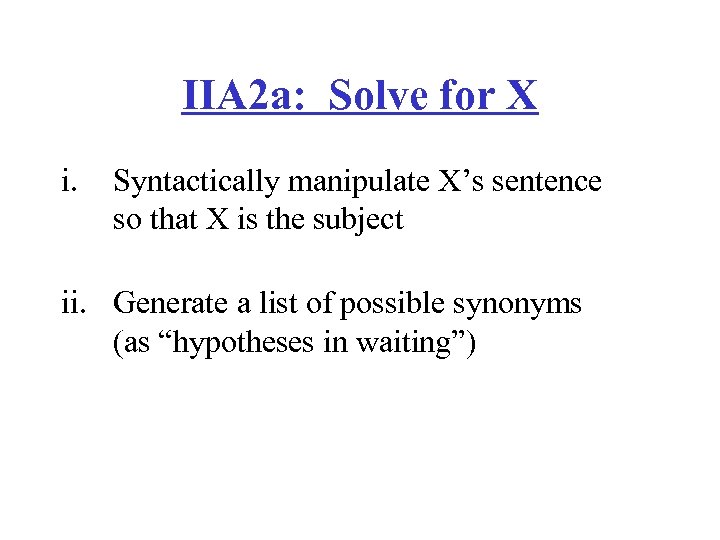

IIA 2 a: Solve for X
i. Syntactically manipulate X’s sentence so that X is the subject
ii. Generate a list of possible synonyms (as “hypotheses in waiting”)

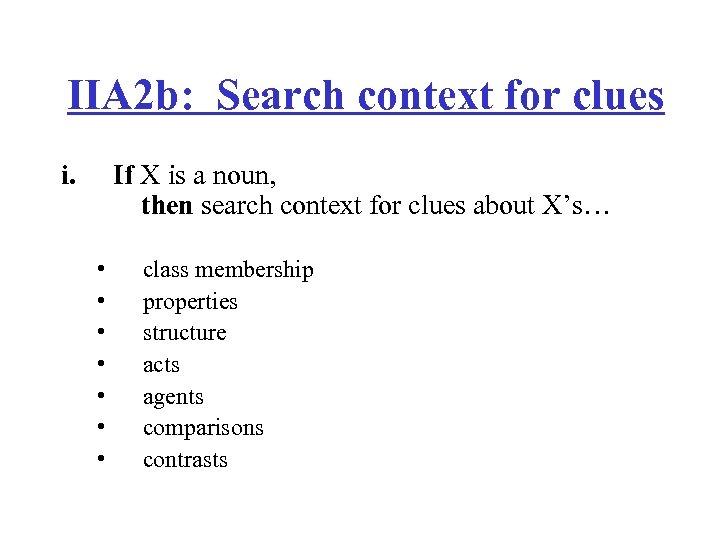

IIA 2 b: Search context for clues
i. If X is a noun, then search context for clues about X’s…
  • class membership
  • properties
  • structure
  • acts
  • agents
  • comparisons
  • contrasts

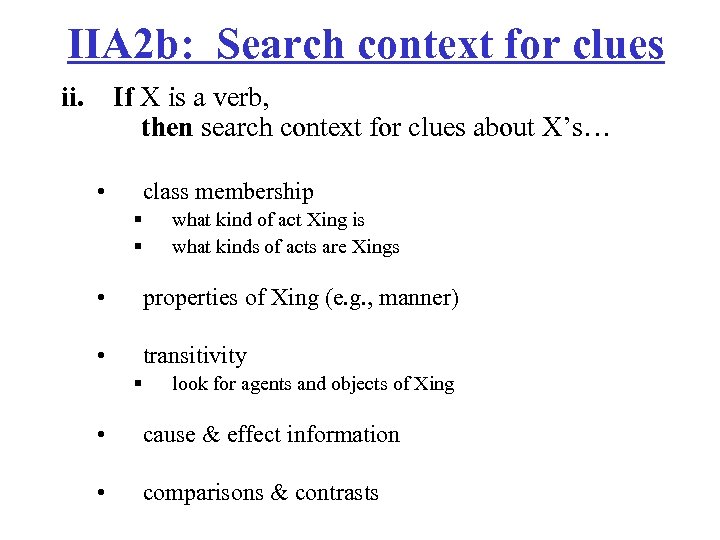

IIA 2 b: Search context for clues
ii. If X is a verb, then search context for clues about X’s…
  • class membership
    § what kind of act Xing is
    § what kinds of acts are Xings
  • properties of Xing (e.g., manner)
  • transitivity
    § look for agents and objects of Xing
  • cause & effect information
  • comparisons & contrasts

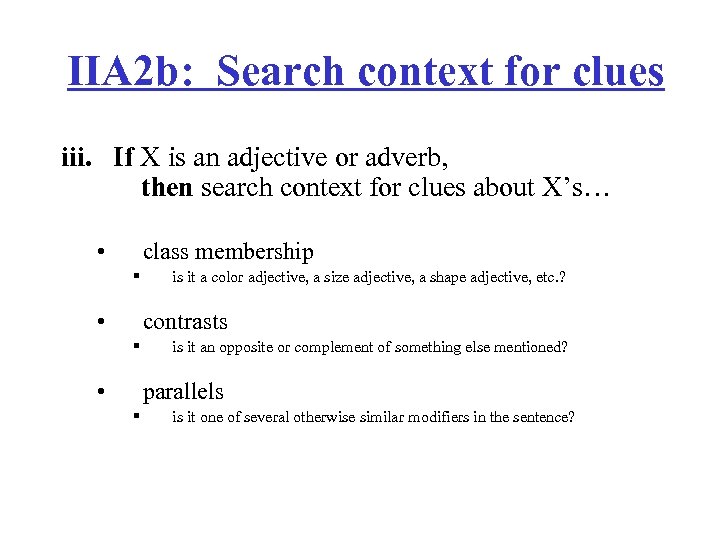

IIA 2 b: Search context for clues
iii. If X is an adjective or adverb, then search context for clues about X’s…
  • class membership
    § is it a color adjective, a size adjective, a shape adjective, etc.?
  • contrasts
    § is it an opposite or complement of something else mentioned?
  • parallels
    § is it one of several otherwise similar modifiers in the sentence?
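Because the clue checklist depends only on X's part of speech, it can be kept as a lookup table. The category names are copied from the noun, verb, and adjective/adverb slides; the dictionary and function are a hypothetical study aid, not part of the curriculum itself.

```python
CLUES = {
    "noun": ["class membership", "properties", "structure", "acts",
             "agents", "comparisons", "contrasts"],
    "verb": ["class membership", "properties (manner)", "transitivity",
             "cause & effect", "comparisons & contrasts"],
    "adjective": ["class membership", "contrasts", "parallels"],
}
CLUES["adverb"] = CLUES["adjective"]  # same checklist for adjectives and adverbs


def checklist(part_of_speech: str) -> list[str]:
    """Return the clue types to search for, given X's part of speech."""
    return CLUES.get(part_of_speech.lower(), [])


for item in checklist("Verb"):
    print("-", item)
```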

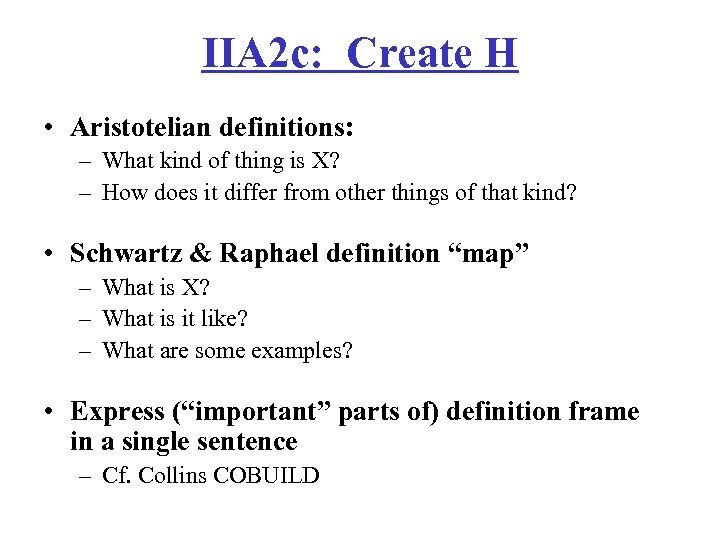

IIA 2 c: Create H
• Aristotelian definitions:
  – What kind of thing is X?
  – How does it differ from other things of that kind?
• Schwartz & Raphael definition “map”
  – What is X?
  – What is it like?
  – What are some examples?
• Express (“important” parts of) definition frame in a single sentence
  – Cf. Collins COBUILD
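Expressing the important parts of a definition frame in one sentence can be done with a small template in the Aristotelian pattern: the kind of thing (first class inclusion) plus differentiating actions and properties. The template wording and slot names are assumptions modeled on the brachet paraphrase earlier in the talk.

```python
def article(noun: str) -> str:
    # Simplistic a/an choice by first letter (an assumption; ignores sound rules).
    return "an" if noun[:1].lower() in ("a", "e", "i", "o", "u") else "a"


def one_sentence(word: str, frame: dict[str, list[str]]) -> str:
    """Render genus + differentiae from a definition frame as a single sentence."""
    genus = frame.get("Class Inclusions", ["thing"])[0]
    parts = []
    if frame.get("Possible Actions"):
        parts.append("that can " + ", ".join(frame["Possible Actions"]))
    if frame.get("Possible Properties"):
        parts.append("that may be " + ", ".join(frame["Possible Properties"]))
    sentence = f"{article(word).capitalize()} {word} is {article(genus)} {genus}"
    if parts:
        sentence += " " + " and ".join(parts)
    return sentence + "."


frame = {
    "Class Inclusions": ["hound", "dog"],
    "Possible Actions": ["bite", "bay", "hunt"],
    "Possible Properties": ["valuable", "small", "white"],
}
print(one_sentence("brachet", frame))
# A brachet is a hound that can bite, bay, hunt and that may be valuable, small, white.
```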

To Do…
• More examples (over 50 so far)
• More experiments with prior knowledge
• Eye-tracking investigation???
• Nature of abductive(?) general PK rules
• Better search algorithms
  – Improve belief-revision component
• Class-test the curriculum
  – Karen Wieland @ U/Pitt
