d8cb96fa800c5614499ebffd5180960d.ppt

- Number of slides: 39

Contents
- Introduction
- Requirements Engineering
- Project Management
- Software Design
- Detailed Design and Coding
- Quality Assurance
- Maintenance

Phases: Requirements Analysis, Specification, Planning, Design, Coding, Testing, Installation, Operation and Maintenance.

PVK-HT 03, bella@cs.umu.se


Quality Assurance
- Introduction
- Testing
  - Testing Phases and Approaches
  - Black-box Testing
  - White-box Testing
  - System Testing


What is Quality Assurance?

QA is the combination of planned and unplanned activities to ensure the fulfillment of predefined quality standards.
- Constructive vs. analytic approaches to QA
- Qualitative vs. quantitative quality standards
- Measurement
  - Derive qualitative factors from measurable quantitative factors
- Software metrics


Approaches to QA
- Constructive approaches: usage of methods, languages, and tools that ensure the fulfillment of some quality factors.
  - Syntax-directed editors
  - Type systems
  - Transformational programming
  - Coding guidelines
  - ...
- Analytic approaches: usage of methods, languages, and tools to observe the current quality level.
  - Inspections
  - Static analysis tools (e.g. lint)
  - Testing
  - ...


Fault vs Failure
- A human error can lead to a fault, and a fault can lead to a failure.
- Different types of faults call for different identification techniques and different testing techniques.
- Fault prevention and detection strategies should be based on the expected fault profile.
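
To make the chain from fault to failure concrete, here is a minimal Python sketch with a hypothetical function `buggy_max` (not from the slides). The fault, a wrong initial value, is executed on every call, but it only shows up as a failure for inputs whose elements are all negative.

```python
def buggy_max(values):
    """Return the largest element of a non-empty list (intentionally faulty)."""
    # Fault: the running maximum should start at values[0], not at 0.
    best = 0
    for v in values:
        if v > best:
            best = v
    return best


# The faulty line is executed, but the output is still correct: no failure.
print(buggy_max([3, 7, 2]))   # 7
# Same fault, different input: the wrong result is an observable failure.
print(buggy_max([-5, -2]))    # 0, although the correct maximum is -2
```

A test suite drawn only from positive numbers would never reveal this fault, which illustrates why detection strategies benefit from knowing the expected fault profile.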


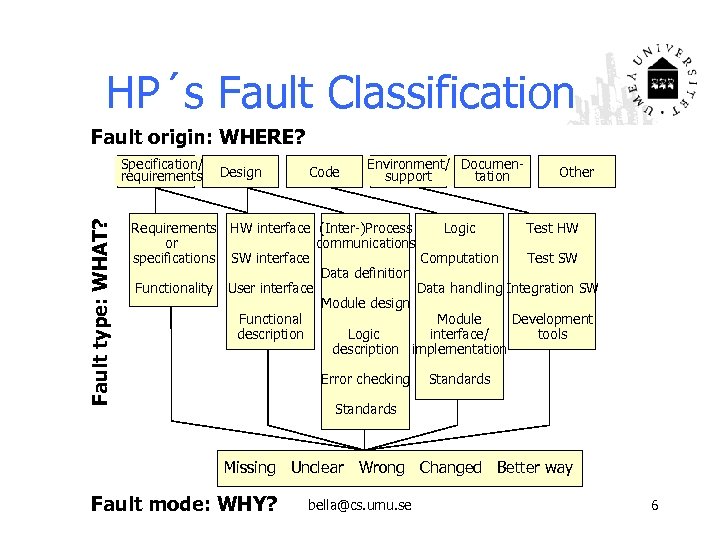

HP's Fault Classification
- Fault origin (WHERE?) and fault type (WHAT?):
  - Specification/requirements: requirements or specifications; functionality; HW interface; SW interface; user interface; functional description
  - Design: (inter-)process communications; data definition; module design; logic description; error checking; standards
  - Code: logic; computation; data handling; module interface/implementation; standards
  - Environment/support: test HW; test SW; integration SW; development tools
  - Documentation
  - Other
- Fault mode (WHY?): missing; unclear; wrong; changed; better way


![Fault Profile of an HP Division](https://present5.com/presentation/d8cb96fa800c5614499ebffd5180960d/image-7.jpg)

Fault Profile of an HP Division. See [Pfleeger 98].


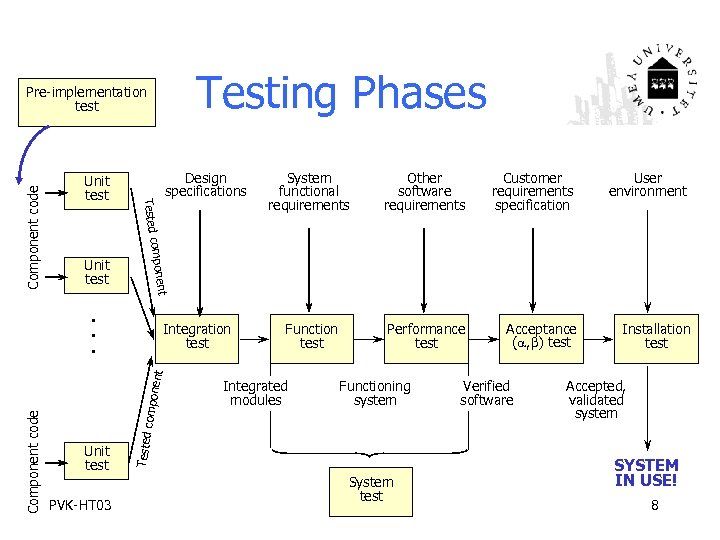

Testing Phases
- Pre-implementation test
- Unit test (one per component): component code → tested component
- Integration test (against the design specifications): tested components → integrated modules
- System test:
  - Function test (against the system functional requirements): integrated modules → functioning system
  - Performance test (against other software requirements): functioning system → verified software
  - Acceptance test (against the customer requirements specification): verified software → accepted, validated system
  - Installation test (in the user environment): accepted, validated system → SYSTEM IN USE!


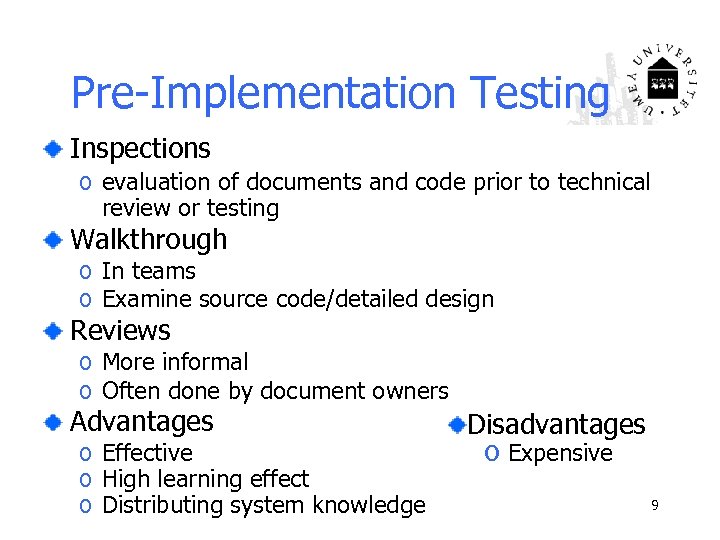

Pre-Implementation Testing
- Inspections
  - Evaluation of documents and code prior to technical review or testing
- Walkthroughs
  - In teams
  - Examine source code/detailed design
- Reviews
  - More informal
  - Often done by document owners
- Advantages
  - Effective
  - High learning effect
  - Distributing system knowledge
- Disadvantages
  - Expensive


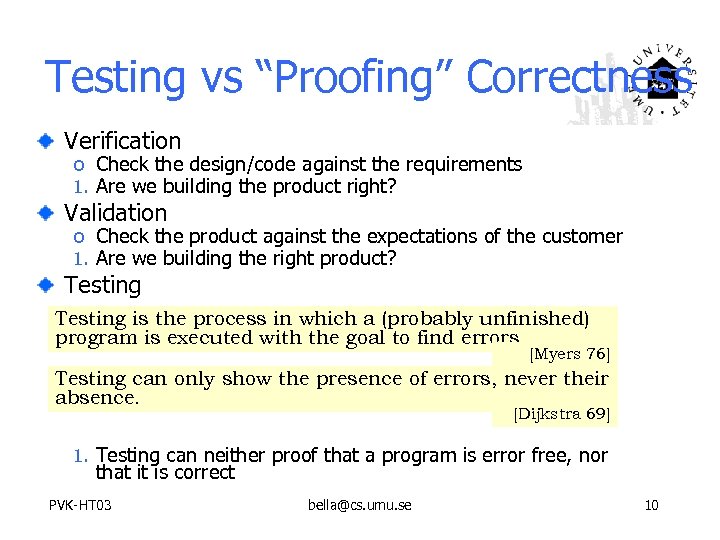

Testing vs “Proving” Correctness
- Verification: check the design/code against the requirements.
  - Are we building the product right?
- Validation: check the product against the expectations of the customer.
  - Are we building the right product?
- Testing is the process in which a (possibly unfinished) program is executed with the goal of finding errors. [Myers 76]
- Testing can only show the presence of errors, never their absence. [Dijkstra 69]
  - Testing can prove neither that a program is error-free nor that it is correct.


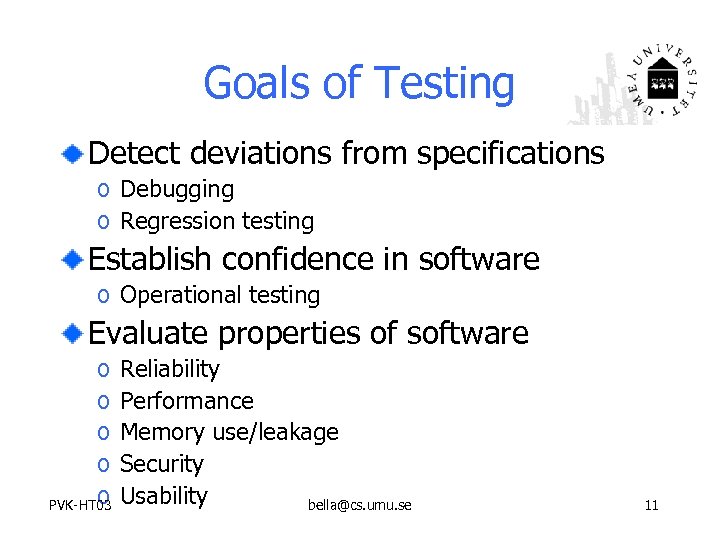

Goals of Testing
- Detect deviations from specifications
  - Debugging
  - Regression testing
- Establish confidence in software
  - Operational testing
- Evaluate properties of software
  - Reliability
  - Performance
  - Memory use/leakage
  - Security
  - Usability


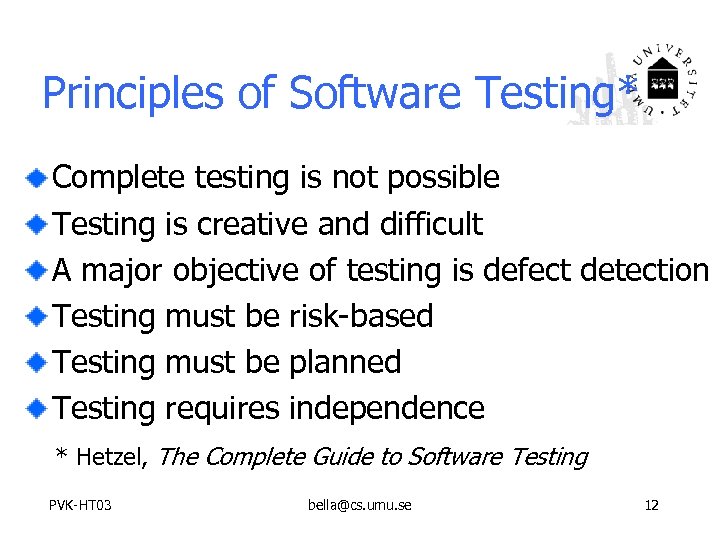

Principles of Software Testing\*
- Complete testing is not possible.
- Testing is creative and difficult.
- A major objective of testing is defect detection.
- Testing must be risk-based.
- Testing must be planned.
- Testing requires independence.

\* Hetzel, The Complete Guide to Software Testing


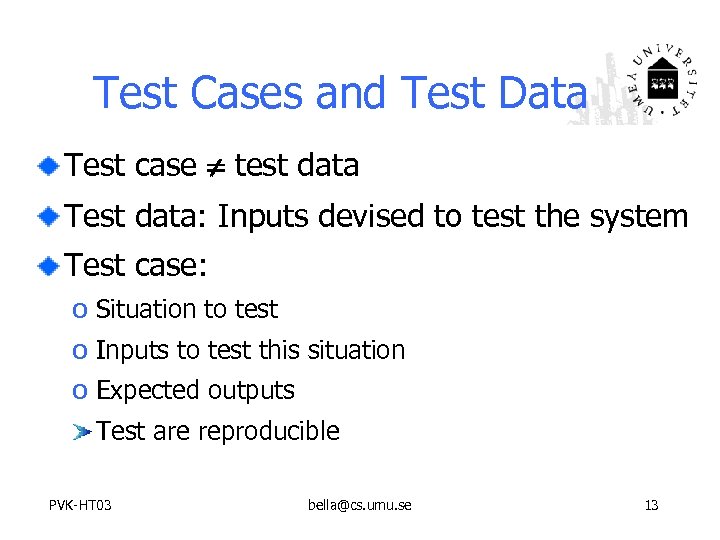

Test Cases and Test Data
- Test case ≠ test data
- Test data: inputs devised to test the system
- Test case:
  - Situation to test
  - Inputs to test this situation
  - Expected outputs
- Tests are reproducible.
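
A minimal sketch, assuming a hypothetical unit `days_in_february`, of test cases recorded as data: each `Case` bundles the situation, the inputs (the test data), and the expected output, so running the same list again reproduces the same verdicts. The names `Case` and `run_cases` are illustrative, not from the slides.

```python
from dataclasses import dataclass


def days_in_february(year: int) -> int:
    """Hypothetical unit under test: Gregorian leap-year rule."""
    leap = year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
    return 29 if leap else 28


@dataclass(frozen=True)
class Case:
    situation: str   # the situation the test is meant to exercise
    inputs: tuple    # test data: the arguments passed to the unit
    expected: int    # expected output for these inputs


CASES = [
    Case("ordinary year", (2003,), 28),
    Case("year divisible by 4", (2004,), 29),
    Case("century year not divisible by 400", (1900,), 28),
    Case("year divisible by 400", (2000,), 29),
]


def run_cases(cases):
    """Run every case and return the situations whose actual output differs."""
    return [c.situation for c in cases if days_in_february(*c.inputs) != c.expected]


print(run_cases(CASES))  # [] on this and every rerun
```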


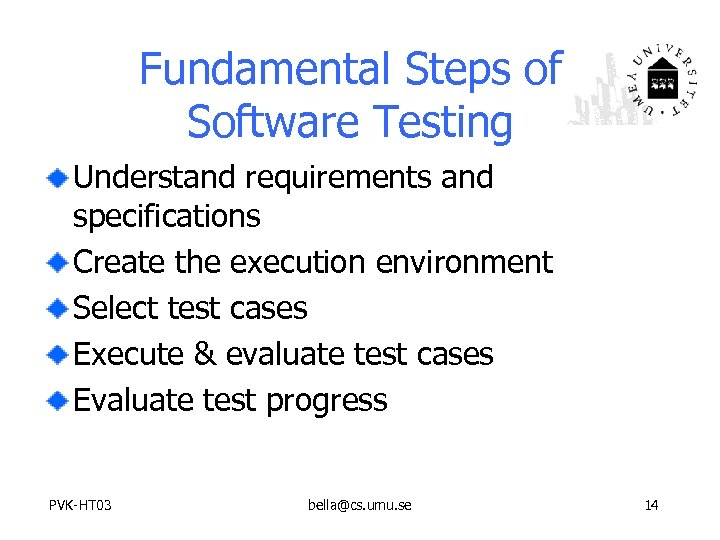

Fundamental Steps of Software Testing
- Understand the requirements and specifications
- Create the execution environment
- Select test cases
- Execute and evaluate test cases
- Evaluate test progress


Unit Testing
- Definition: tests the smallest individually executable code units.
- Objectives: find faults in the units; assure correct functional behavior of the units.
- By: usually programmers.
- Tools:
  - Test driver/harness
  - Coverage evaluator
  - Automatic test generator
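
One common way to get a test driver/harness in Python is the standard `unittest` module. The sketch below assumes a hypothetical unit `clamp`; `run_unit_tests` plays the role of a minimal driver that loads and runs the tests.

```python
import unittest


def clamp(x: int, low: int, high: int) -> int:
    """Hypothetical unit under test: limit x to the range [low, high]."""
    return max(low, min(x, high))


class ClampTest(unittest.TestCase):
    def test_inside_range(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_below_range(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_above_range(self):
        self.assertEqual(clamp(42, 0, 10), 10)


def run_unit_tests() -> unittest.TestResult:
    """A minimal driver: load the test class and run it."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    result = run_unit_tests()
    print(result.testsRun, result.wasSuccessful())
```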


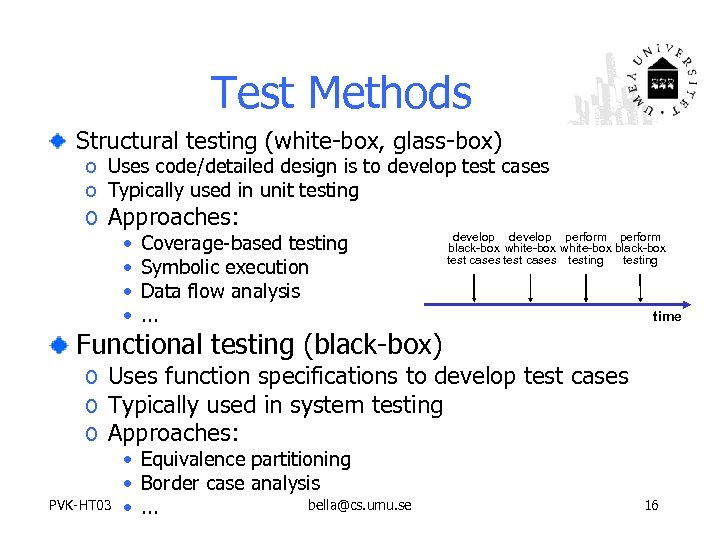

Test Methods
- Structural testing (white-box, glass-box)
  - Uses code/detailed design to develop test cases
  - Typically used in unit testing
  - Approaches:
    - Coverage-based testing
    - Symbolic execution
    - Data flow analysis
    - ...
- Functional testing (black-box)
  - Uses function specifications to develop test cases
  - Typically used in system testing
  - Approaches:
    - Equivalence partitioning
    - Border case analysis
    - ...

(Diagram: developing and performing black-box and white-box test cases over time.)


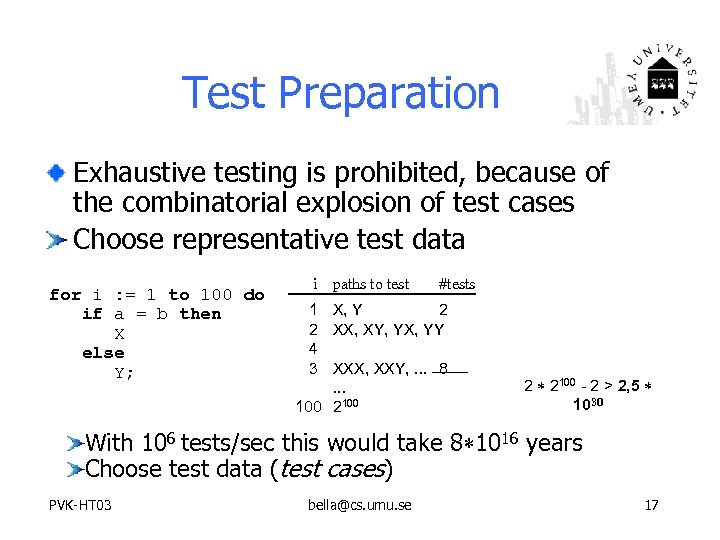

Test Preparation
- Exhaustive testing is prohibitive because of the combinatorial explosion of test cases.
- Choose representative test data.

Example: `for i := 1 to 100 do if a = b then X else Y;`

| i | paths to test | #tests |
|---|---|---|
| 1 | X, Y | 2 |
| 2 | XX, XY, YX, YY | 4 |
| 3 | XXX, XXY, ... | 8 |
| ... | ... | ... |
| 100 | ... | $2^{100}$ |
| Total | | $\sum_{i=1}^{100} 2^i = 2^{101} - 2 > 2.5 \times 10^{30}$ |

With $10^6$ tests/sec this would take about $8 \times 10^{16}$ years.
- Choose test data (test cases).
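
The arithmetic on this slide can be checked directly. This Python sketch assumes a Julian year of 365.25 days and the slide's rate of $10^6$ tests per second; it sums the $2^i$ paths for $i = 1, \dots, 100$ and converts the total into years, which lands at roughly $8 \times 10^{16}$.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year (an assumption)
TESTS_PER_SECOND = 10**6               # rate taken from the slide

# One two-way decision per iteration gives 2**i distinct paths after i iterations.
total_paths = sum(2**i for i in range(1, 101))
assert total_paths == 2**101 - 2       # closed form of the geometric series

years = total_paths / TESTS_PER_SECOND / SECONDS_PER_YEAR
print(f"{total_paths:.3e} paths, about {years:.1e} years")
```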


Black-box Testing
- Test generation without knowledge of the software structure
- Also called specification-based or functional testing
- Techniques:
  - Equivalence partitioning
  - Boundary-value analysis
  - Error guessing
  - ...


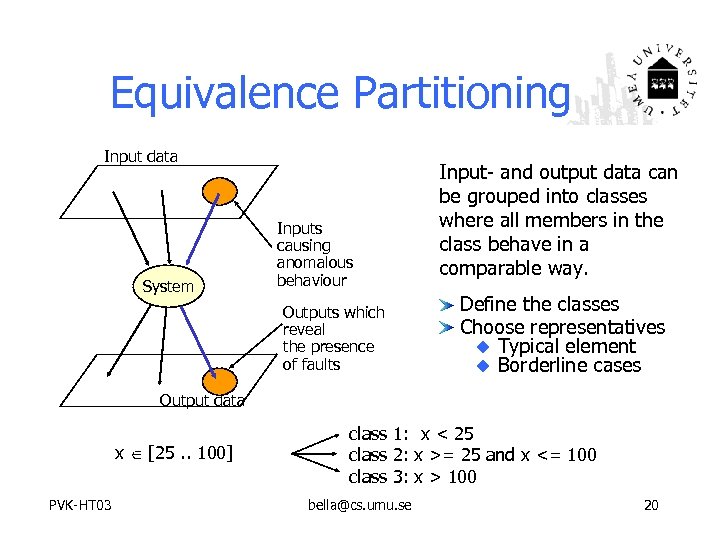

Equivalence Partitioning

(Diagram: input data → system → output data; the inputs causing anomalous behaviour produce the outputs which reveal the presence of faults.)
- Input and output data can be grouped into classes where all members of a class behave in a comparable way.
- Define the classes.
- Choose representatives:
  - Typical element
  - Borderline cases
- Example: x ∈ [25..100]
  - Class 1: x < 25
  - Class 2: x >= 25 and x <= 100
  - Class 3: x > 100
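
A sketch of equivalence partitioning plus borderline cases for the specification x ∈ [25..100], in Python. The function names and the typical elements (10 below the range, the integer midpoint, 10 above the range) are illustrative assumptions; any member of a class would serve as its typical element.

```python
def partition(x: int) -> int:
    """Map an input to its equivalence class for the specification x in [25..100]."""
    if x < 25:
        return 1  # class 1: below the valid range
    if x <= 100:
        return 2  # class 2: inside the valid range
    return 3      # class 3: above the valid range


def representatives(low: int = 25, high: int = 100) -> dict[int, list[int]]:
    """One typical element per class plus the borderline values on both sides of each boundary."""
    candidates = [
        low - 10,             # typical element of class 1 (arbitrary choice)
        low - 1, low,         # borderline cases at the lower boundary
        (low + high) // 2,    # typical element of class 2
        high, high + 1,       # borderline cases at the upper boundary
        high + 10,            # typical element of class 3 (arbitrary choice)
    ]
    by_class = {1: [], 2: [], 3: []}
    for x in candidates:
        by_class[partition(x)].append(x)
    return by_class


print(representatives())  # {1: [15, 24], 2: [25, 62, 100], 3: [101, 110]}
```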


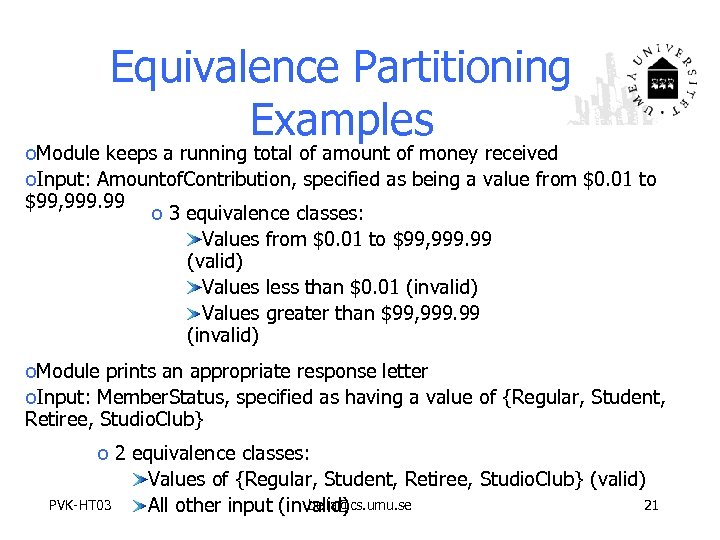

Equivalence Partitioning Examples
- A module keeps a running total of the amount of money received.
  - Input: AmountOfContribution, specified as a value from \$0.01 to \$99,999.99
  - 3 equivalence classes:
    - Values from \$0.01 to \$99,999.99 (valid)
    - Values less than \$0.01 (invalid)
    - Values greater than \$99,999.99 (invalid)
- A module prints an appropriate response letter.
  - Input: MemberStatus, specified as having a value of {Regular, Student, Retiree, StudioClub}
  - 2 equivalence classes:
    - Values of {Regular, Student, Retiree, StudioClub} (valid)
    - All other input (invalid)
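
The same idea applied to the two examples, as a hedged Python sketch. `Decimal` is an implementation choice (not from the slides) so that money amounts compare exactly at the boundaries; the class labels and function names are hypothetical, and the identifier spellings are reconstructed from the slide text.

```python
from decimal import Decimal

MIN_AMOUNT = Decimal("0.01")
MAX_AMOUNT = Decimal("99999.99")
MEMBER_STATUSES = {"Regular", "Student", "Retiree", "StudioClub"}


def contribution_class(amount: Decimal) -> str:
    """Equivalence class of AmountOfContribution."""
    if amount < MIN_AMOUNT:
        return "invalid: less than $0.01"
    if amount > MAX_AMOUNT:
        return "invalid: greater than $99,999.99"
    return "valid"


def member_status_class(status: str) -> str:
    """Equivalence class of MemberStatus."""
    return "valid" if status in MEMBER_STATUSES else "invalid"


# One representative per class: three for the amount, two for the status.
for amount in (Decimal("-5.00"), Decimal("250.00"), Decimal("150000.00")):
    print(amount, contribution_class(amount))
for status in ("Student", "Guest"):
    print(status, member_status_class(status))
```

Boundary-value analysis would add the values right at and next to each limit, such as 0.00, 0.01, 99999.99 and 100000.00.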


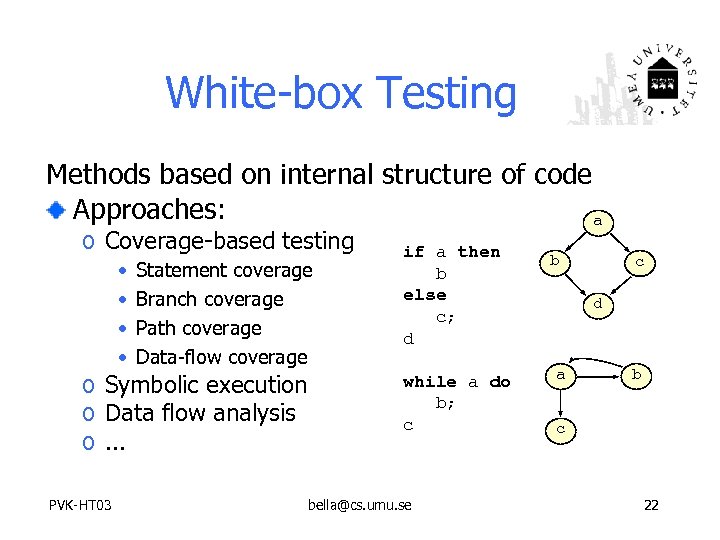

White-box Testing
- Methods based on the internal structure of the code
- Approaches:
  - Coverage-based testing
    - Statement coverage
    - Branch coverage
    - Path coverage
    - Data-flow coverage
  - Symbolic execution
  - Data flow analysis
  - ...
- Flow-graph examples (diagram): `if a then b else c; d` and `while a do b; c`


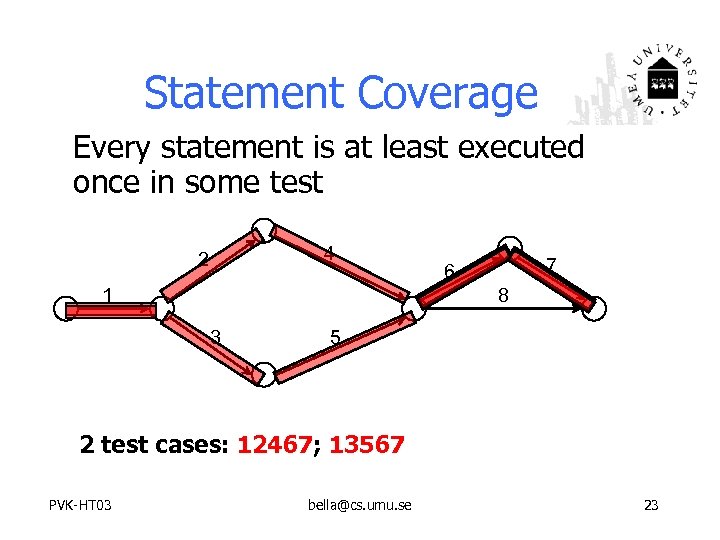

Statement Coverage
- Every statement is executed at least once in some test.
- (Flow graph with nodes 1 to 8.) 2 test cases: 12467; 13567


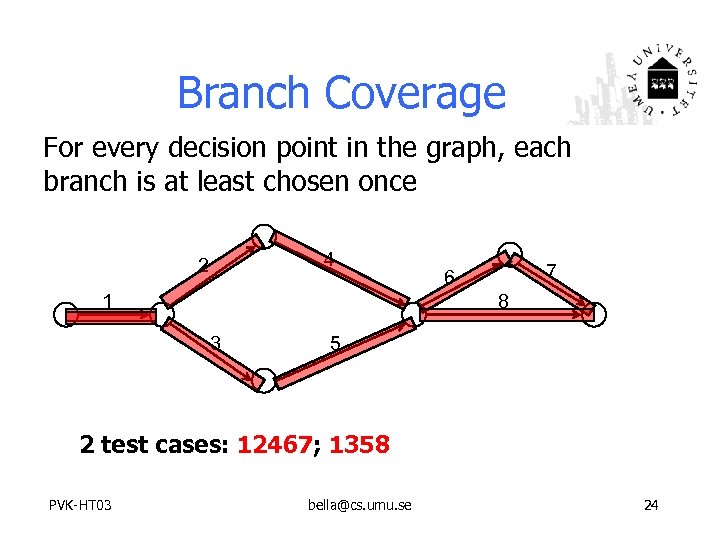

Branch Coverage
- For every decision point in the graph, each branch is chosen at least once.
- (Flow graph with nodes 1 to 8.) 2 test cases: 12467; 1358
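
The difference between statement and branch coverage can be shown on a hypothetical two-decision program in Python; this is not a reconstruction of the slide's flow graph. It assumes, as the slide's test paths seem to suggest, that the second decision has a body but no else part: two tests that both enter that body execute every statement yet never take its False branch.

```python
from itertools import product


def program(a: bool, b: bool) -> tuple[set[str], set[tuple[str, bool]]]:
    """Hypothetical program with two decisions; returns (statements run, branches taken)."""
    statements, branches = set(), set()
    branches.add(("a", a))
    if a:
        statements.add("then_a")
    else:
        statements.add("else_a")
    branches.add(("b", b))
    if b:  # no else part: the False branch executes no statement
        statements.add("then_b")
    return statements, branches


ALL_STATEMENTS = {"then_a", "else_a", "then_b"}
ALL_BRANCHES = set(product("ab", (True, False)))


def coverage(tests):
    """Return (statement coverage reached, branch coverage reached) for (a, b) inputs."""
    stmts, brs = set(), set()
    for a, b in tests:
        s, br = program(a, b)
        stmts |= s
        brs |= br
    return stmts == ALL_STATEMENTS, brs == ALL_BRANCHES


print(coverage([(True, True), (False, True)]))   # (True, False)
print(coverage([(True, True), (False, False)]))  # (True, True)
```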


Condition Coverage
- Test all combinations of conditions in boolean expressions at least once.
- Example: `if (X or not (Y and Z) and ... then b;` versus `c := (d + e * f - g) div op(h, i, j);`
- Why in boolean expressions only?
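
A minimal sketch of this criterion in Python, using only the visible part of the slide's condition, `X or not (Y and Z)`; the elided remainder is deliberately left out. `itertools.product` enumerates every combination of the three atomic conditions, one test each.

```python
from itertools import product


def decision(x: bool, y: bool, z: bool) -> bool:
    # Truncated form of the slide's condition (the "..." part is omitted).
    return x or not (y and z)


# Every combination of the atomic conditions X, Y, Z appears in one test.
combinations = list(product((False, True), repeat=3))
for x, y, z in combinations:
    print(x, y, z, "->", decision(x, y, z))

print(len(combinations))  # 8 tests for 3 conditions
```

With n atomic conditions the criterion requires 2^n tests, so it quickly becomes expensive.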


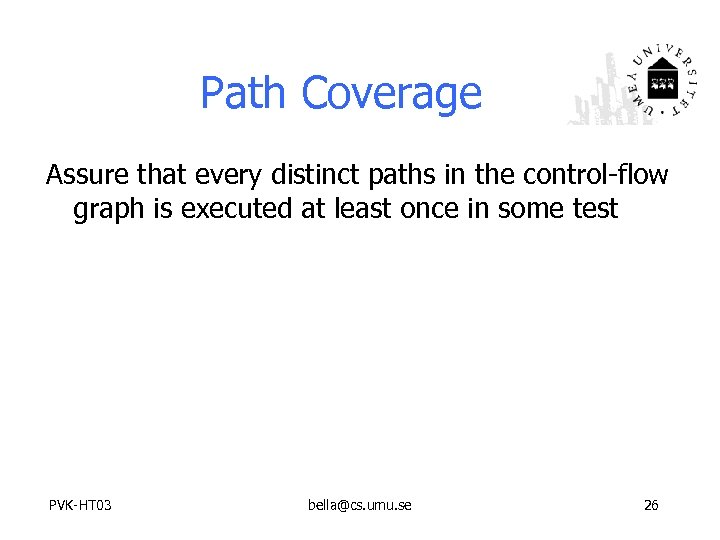

Path Coverage
- Assure that every distinct path in the control-flow graph is executed at least once in some test.


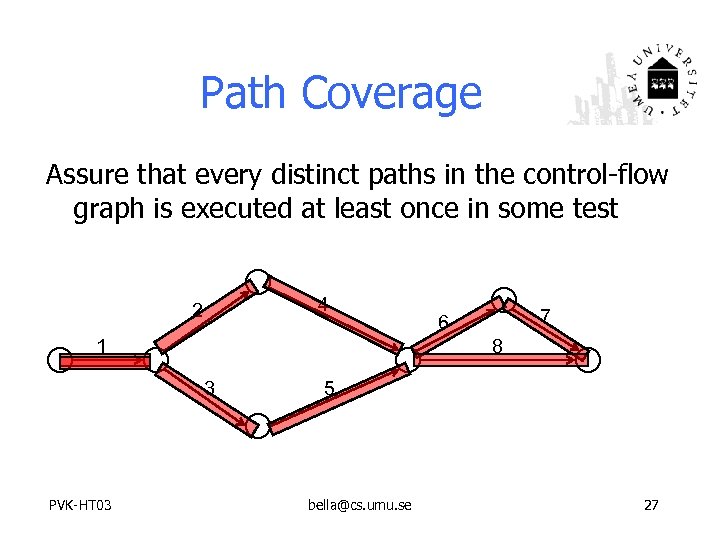

Path Coverage
- Assure that every distinct path in the control-flow graph is executed at least once in some test.
- (Flow graph with nodes 1 to 8.)


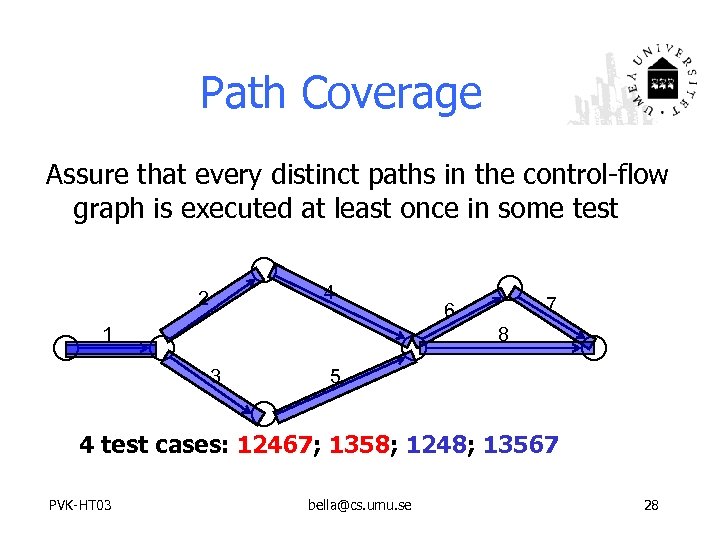

Path Coverage
- Assure that every distinct path in the control-flow graph is executed at least once in some test.
- (Flow graph with nodes 1 to 8.) 4 test cases: 12467; 1358; 1248; 13567
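
To see why path coverage needs more tests than branch coverage, this Python sketch uses a hypothetical program with two decisions in sequence: every combination of branch outcomes is a distinct path. `path_count` assumes independent decisions with no infeasible paths; in real code some combinations may be unreachable.

```python
from itertools import product


def path(a: bool, b: bool) -> str:
    """Hypothetical two-decision program; the path is named by its branch outcomes."""
    first = "then_a" if a else "else_a"
    second = "then_b" if b else "skip_b"
    return f"{first}/{second}"


# Path coverage needs one test per distinct path through both decisions.
tests = list(product((True, False), repeat=2))
paths = {path(a, b) for a, b in tests}
print(len(tests), sorted(paths))


def path_count(decisions: int) -> int:
    """Distinct paths through a sequence of independent two-way decisions."""
    return len(set(product((True, False), repeat=decisions)))


print([path_count(k) for k in (1, 2, 3, 10)])  # [2, 4, 8, 1024]
```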


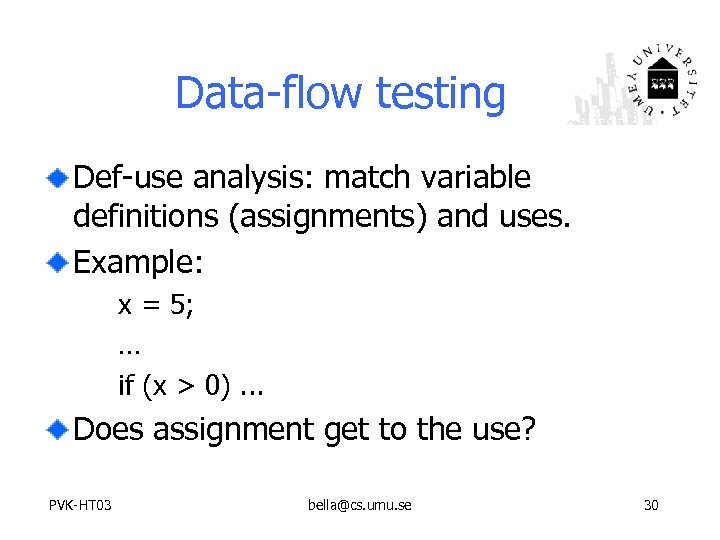

Data-flow Testing
- Def-use analysis: match variable definitions (assignments) and uses.
- Example: `x = 5; … if (x > 0) ...`
- Does the assignment reach the use?


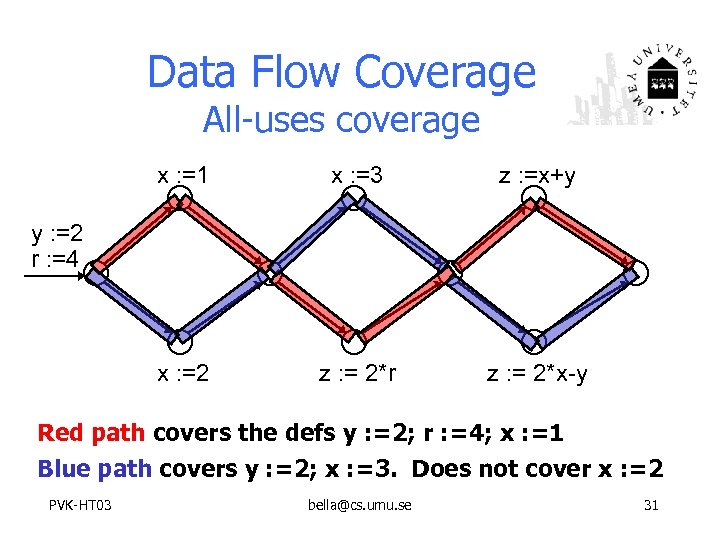

Data Flow Coverage
- All-uses coverage
- (Flow graph containing the statements x := 1, x := 3, x := 2, y := 2, r := 4, z := x+y, z := 2*r, z := 2*x-y.)
- The red path covers the defs y := 2; r := 4; x := 1.
- The blue path covers y := 2; x := 3. It does not cover x := 2.
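
A minimal def-use analysis along individual paths, in Python. The orderings of the slide's statements into a "red" and a "blue" path are hypothetical, since the flow graph itself is not recoverable from the text; the sketch only shows how def-use pairs are collected and why x := 2 stays uncovered when no test path lets that definition reach a use.

```python
def stmt(label: str, defined: str, *used: str):
    """A statement: its label, the variable it defines, and the variables it uses."""
    return label, defined, frozenset(used)


def def_use_pairs(path):
    """Return the (definition, use, variable) pairs exercised along one path."""
    last_def = {}  # variable -> label of its most recent definition
    pairs = set()
    for label, defined, used in path:
        for var in used:  # uses are read before this statement's own definition
            if var in last_def:
                pairs.add((last_def[var], label, var))
        last_def[defined] = label  # a new definition kills earlier ones
    return pairs


# Hypothetical orderings of the slide's statements.
red = [stmt("y:=2", "y"), stmt("r:=4", "r"), stmt("x:=1", "x"),
       stmt("z:=x+y", "z", "x", "y"), stmt("z:=2*r", "z", "r")]
blue = [stmt("y:=2", "y"), stmt("x:=3", "x"), stmt("z:=2*x-y", "z", "x", "y")]

covered_defs = {d for d, _, _ in def_use_pairs(red) | def_use_pairs(blue)}
print(sorted(covered_defs))    # ['r:=4', 'x:=1', 'x:=3', 'y:=2']
print("x:=2" in covered_defs)  # False
```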


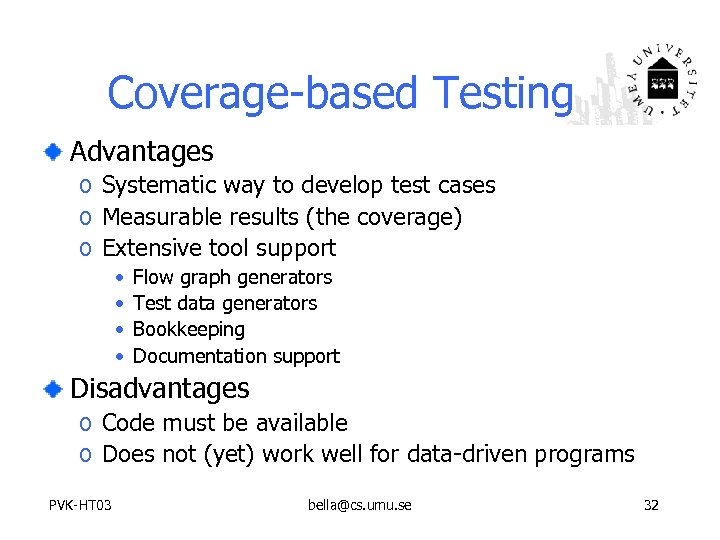

Coverage-based Testing
- Advantages
  - Systematic way to develop test cases
  - Measurable results (the coverage)
  - Extensive tool support
    - Flow graph generators
    - Test data generators
    - Bookkeeping
    - Documentation support
- Disadvantages
  - Code must be available
  - Does not (yet) work well for data-driven programs


Integration Testing
- Definition: testing two or more units or components
- Objectives:
  - Interface errors
  - Functionality of combined units; look for problems with functional threads
- By: developers or testing group
- Tools: interface analysis; call pairs
- Issues: strategy for combining units


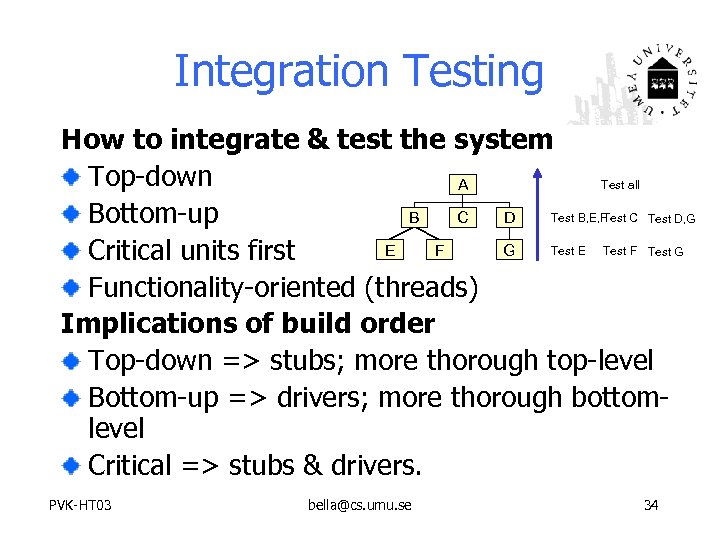

Integration Testing

How to integrate and test the system:
- Top-down
- Bottom-up: test E; test F; test G; test C; test B, E, F; test D, G; test all
- Critical units first
- Functionality-oriented (threads)

(Diagram: component hierarchy with A at the top; B, C, D below it; E and F below B; G below D.)

Implications of the build order:
- Top-down => stubs; more thorough testing of the top level
- Bottom-up => drivers; more thorough testing of the bottom level
- Critical units first => stubs and drivers
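
A small Python sketch of stubs and drivers, with hypothetical units `a` (top level) and `b_real` (lower level). Passing B in as a parameter is one assumed way of making it replaceable: the stub stands in for B during top-down integration, and the driver exercises B on its own during bottom-up integration.

```python
def b_real(x: int) -> int:
    """Hypothetical lower-level unit B."""
    return x * 2


def a(x: int, b=b_real) -> int:
    """Hypothetical top-level unit A; B is a parameter so tests can replace it."""
    return b(x) + 1


# Top-down: test A before B is integrated, using a stub for B.
def b_stub(x: int) -> int:
    """Stub: stands in for B and returns a fixed, predictable answer."""
    return 10


def check_a_with_stub() -> bool:
    return a(3, b=b_stub) == 11


# Bottom-up: test B before A exists, using a driver that plays A's role.
def driver_for_b() -> bool:
    """Driver: supplies inputs to B and checks its outputs."""
    cases = [(0, 0), (3, 6), (-4, -8)]
    return all(b_real(x) == expected for x, expected in cases)


print(check_a_with_stub(), driver_for_b(), a(3))  # True True 7
```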


System Testing
- Definition: test the functionality, performance, reliability, and security of the entire system.
- By: a separate test group.
- Objectives:
  - Find errors in the overall system behavior.
  - Establish confidence in the system functionality.
  - Validate that the system achieves its desired non-functional attributes.
- Tools: user simulator; load simulator


Acceptance Testing
- Definition: operate the system in the user environment, with standard user input scenarios.
- By: end users.
- Objectives:
  - Evaluate whether the system meets the customer's criteria.
  - Determine whether the customer will accept the system.
- Tools: user simulator; customer test scripts/logs from operation of the previous system.


Regression Testing
- Definition: testing of modified versions of a previously validated system.
- By: system or regression test group.
- Objective: assure that changes to the system have not introduced new errors.
- Tools: regression test base; capture/replay
- Issues: minimal regression suite; test prioritization
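
A hedged sketch of capture/replay in Python: the outputs of a previously validated version are captured as a regression test base and replayed against a modified version. Both `price_v1` and `price_v2` are hypothetical; v2 contains an unintended change at `qty == 10` that the replay detects.

```python
def price_v1(qty: int) -> int:
    """Hypothetical previously validated version: 10% discount from 10 items."""
    return qty * 100 if qty < 10 else qty * 90


def price_v2(qty: int) -> int:
    """Hypothetical modified version: the refactored rule starts the discount at 11 items."""
    return qty * 90 if qty >= 11 else qty * 100


# Capture: record the validated version's outputs as the regression test base.
regression_base = {qty: price_v1(qty) for qty in (1, 9, 10, 11, 50)}


def replay(version, base):
    """Replay: rerun every recorded input and report those whose output changed."""
    return [qty for qty, expected in base.items() if version(qty) != expected]


print(replay(price_v1, regression_base))  # []
print(replay(price_v2, regression_base))  # [10]
```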


Test Automation

Test automation has several meanings:
- Test generation: produce test cases by processing specifications, code, or models.
- Test execution: run large numbers of test cases/suites without human intervention.
- Test management: log test cases and results; map tests to requirements and functionality; track test progress and completeness.


Issues of Test Automation

Automating test execution:
- Does the payoff from test automation justify the expense and effort of automation?
- Learning to use the automation tool is non-trivial.
- Testers become programmers.
- All tests, including automated tests, have a finite lifetime.
- Complete automated execution implies putting the system into the proper state, supplying the inputs, running the test case, collecting the result, and verifying the result.
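
The five steps of complete automated execution, sketched in Python against a hypothetical stateful `Counter`. The class and field names are illustrative assumptions; in a system this trivial, supplying the inputs and running the test case happen in the same loop.

```python
from dataclasses import dataclass


@dataclass
class Counter:
    """Hypothetical system under test with internal state."""
    value: int = 0

    def add(self, n: int) -> None:
        self.value += n


@dataclass
class AutomatedTest:
    name: str
    initial_state: int  # state the system must be put into before the test
    inputs: list        # inputs supplied to the system
    expected: int       # expected result used for verification


def execute(test: AutomatedTest) -> bool:
    system = Counter(value=test.initial_state)  # put the system into the proper state
    for n in test.inputs:                       # supply the inputs and run the test case
        system.add(n)
    actual = system.value                       # collect the result
    return actual == test.expected              # verify the result


suite = [
    AutomatedTest("add to empty counter", 0, [1, 2, 3], 6),
    AutomatedTest("add to preloaded counter", 10, [5], 15),
]
print({t.name: execute(t) for t in suite})
```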


Test Documentation
- Test plan: describes the system and the plan for exercising all functions and characteristics.
- Test specification and evaluation: details each test and defines the criteria for evaluating each feature.
- Test description: test data and procedures for each test.
- Test analysis report: results of each test.


The Key Problems of Software Testing
- Selecting or generating the right test cases.
- Knowing when a system has been tested enough.
- Knowing what has been discovered/demonstrated by the execution of a test suite.
