b5f558d2a7981a57600954176d3da833.ppt

- Number of slides: 28

Constructing a Performance Database for Large-Scale Quantum Chemistry Packages

Meng-Shiou Wu (1), Hirotoshi Mori (2), Jonathan Bentz (3), Theresa Windus (2), Heather Netzloff (2), Masha Sosonkina (1), Mark S. Gordon (1, 2)

- (1) Scalable Computing Laboratory, Ames Laboratory, US DOE
- (2) Department of Chemistry, Iowa State University
- (3) Cray Inc.

Introduction
- Why Quantum Chemistry (QC)?
  - Applications to:
    - Surface science
    - Environmental science
    - Biological science
    - Nanotechnology
    - Much more…
  - Provides a way to treat problems with greater accuracy
  - With the advent of more powerful computational tools, QC can be applied to more and more problems

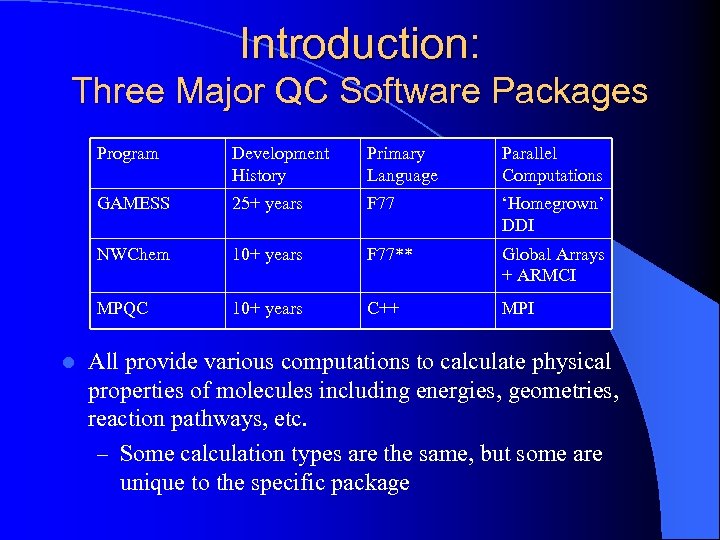

Introduction: Three Major QC Software Packages

| Program | Development History | Primary Language | Parallel Computations |
| --- | --- | --- | --- |
| GAMESS | 25+ years | F77 | ‘Homegrown’ DDI |
| NWChem | 10+ years | F77** | Global Arrays + ARMCI |
| MPQC | 10+ years | C++ | MPI |

- All provide various computations to calculate physical properties of molecules, including energies, geometries, reaction pathways, etc.
  - Some calculation types are the same, but some are unique to a specific package

Introduction
- We have used the Common Component Architecture (CCA) framework to enable interoperability between GAMESS, NWChem and MPQC
  - Obtain a palette of components that makes the functionality of each package available to the others

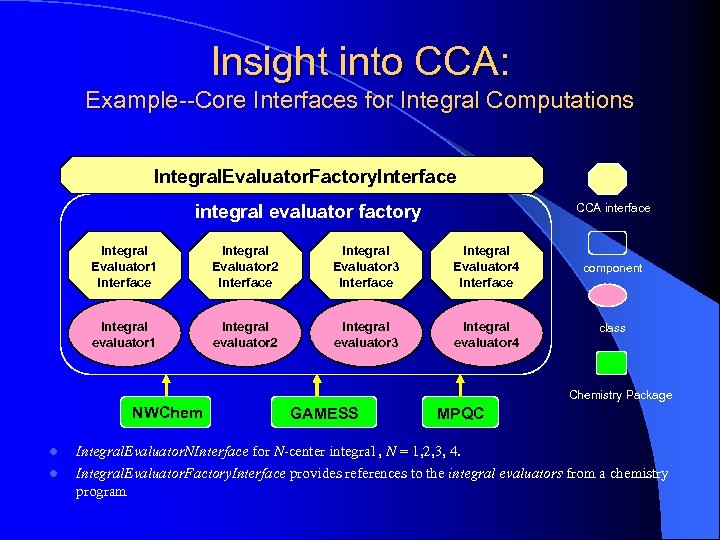

Insight into CCA: Example of Core Interfaces for Integral Computations

[Diagram: the CCA interface IntegralEvaluatorFactoryInterface sits above IntegralEvaluator1Interface through IntegralEvaluator4Interface. The corresponding component classes are the integral evaluator factory and integral evaluators 1 to 4, backed by a chemistry package: NWChem, GAMESS, or MPQC.]

- IntegralEvaluatorNInterface is the interface for N-center integrals, N = 1, 2, 3, 4.
- IntegralEvaluatorFactoryInterface provides references to the integral evaluators from a chemistry program.
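The factory arrangement described above can be sketched in Python. This is only an illustration of the pattern: the class and method names (`IntegralEvaluator`, `IntegralEvaluatorFactory`, `get_evaluator`, `compute`, the `Dummy*` classes) are hypothetical and are not the actual CCA interface definitions, and the dummy backend returns zeros rather than real integrals.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple


class IntegralEvaluator(ABC):
    """Hypothetical stand-in for an N-center integral evaluator interface."""

    centers: int  # N = 1, 2, 3, or 4

    @abstractmethod
    def compute(self, shells: Tuple[int, ...]) -> List[float]:
        """Return integrals for one shell tuple (one shell index per center)."""


class IntegralEvaluatorFactory(ABC):
    """Hypothetical stand-in for the factory interface."""

    @abstractmethod
    def get_evaluator(self, centers: int) -> IntegralEvaluator:
        """Return a reference to the evaluator for N-center integrals."""


class DummyEvaluator(IntegralEvaluator):
    """Placeholder backend: returns zeros instead of real integrals."""

    def __init__(self, centers: int) -> None:
        self.centers = centers

    def compute(self, shells: Tuple[int, ...]) -> List[float]:
        if len(shells) != self.centers:
            raise ValueError(f"expected {self.centers} shell indices")
        return [0.0]


class DummyPackageFactory(IntegralEvaluatorFactory):
    """Plays the role a chemistry package would play behind the interface."""

    def get_evaluator(self, centers: int) -> IntegralEvaluator:
        if centers not in (1, 2, 3, 4):
            raise ValueError("N must be 1, 2, 3, or 4")
        return DummyEvaluator(centers)
```

A caller written against `IntegralEvaluatorFactory` does not need to know which package supplies the evaluators, which is the point of placing the factory behind a common interface.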

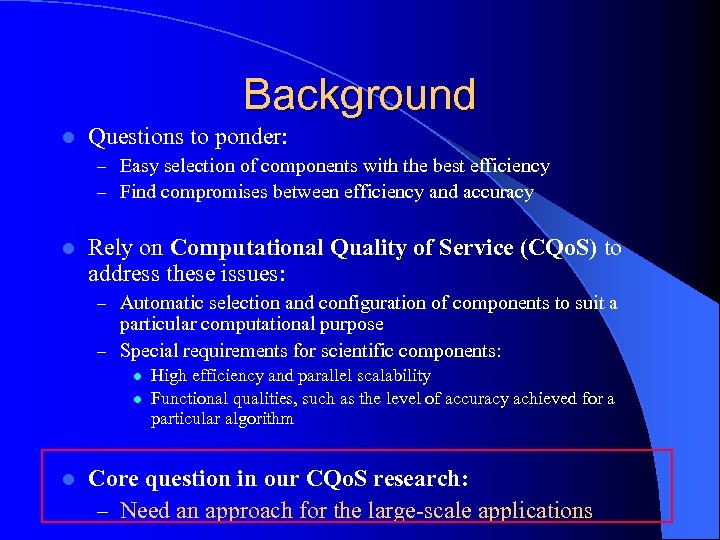

Background
- Questions to ponder:
  - Easy selection of components with the best efficiency
  - Find compromises between efficiency and accuracy
- Rely on Computational Quality of Service (CQoS) to address these issues:
  - Automatic selection and configuration of components to suit a particular computational purpose
  - Special requirements for scientific components:
    - High efficiency and parallel scalability
    - Functional qualities, such as the level of accuracy achieved for a particular algorithm
- Core question in our CQoS research:
  - Need an approach for large-scale applications

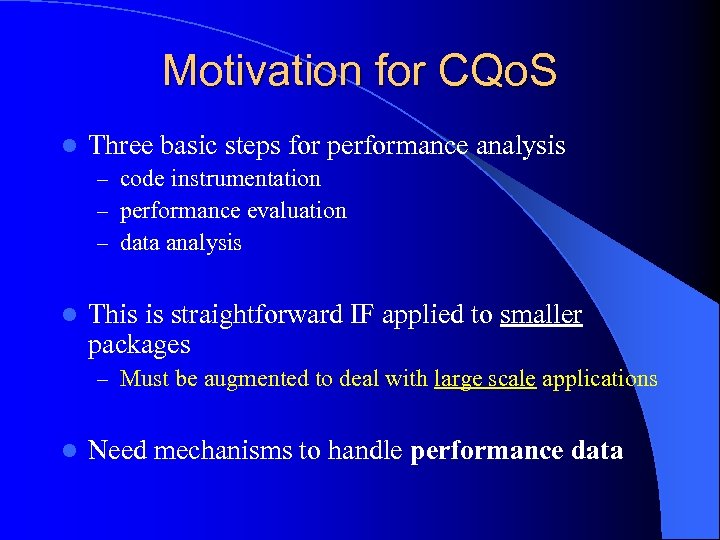

Motivation for CQoS
- Three basic steps for performance analysis:
  - code instrumentation
  - performance evaluation
  - data analysis
- This is straightforward IF applied to smaller packages
  - Must be augmented to deal with large-scale applications
- Need mechanisms to handle performance data

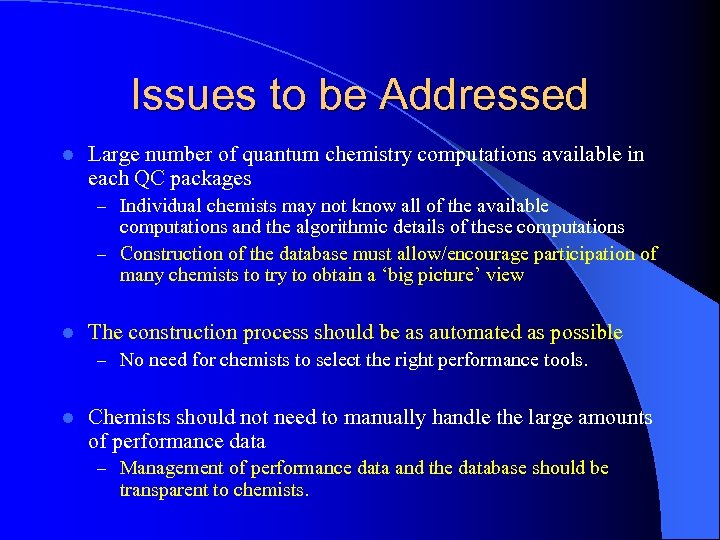

Issues to be Addressed
- Large number of quantum chemistry computations available in each QC package
  - Individual chemists may not know all of the available computations and the algorithmic details of these computations
  - Construction of the database must allow and encourage participation of many chemists to obtain a ‘big picture’ view
- The construction process should be as automated as possible
  - No need for chemists to select the right performance tools
- Chemists should not need to manually handle the large amounts of performance data
  - Management of performance data and the database should be transparent to chemists

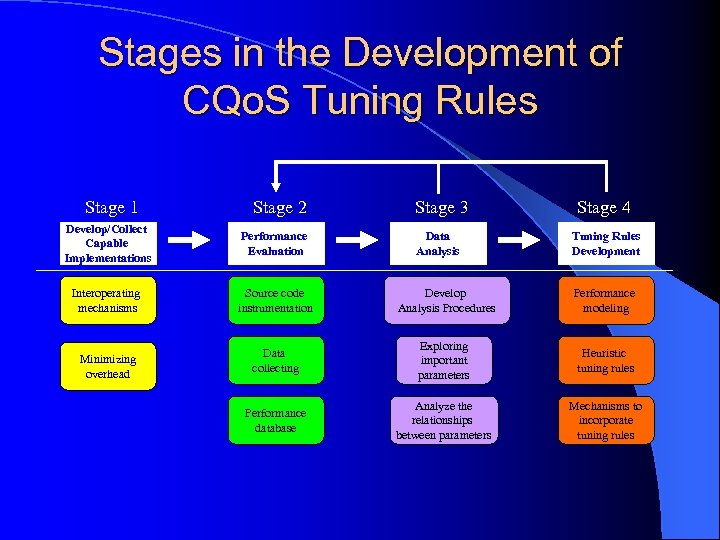

Stages in the Development of CQoS Tuning Rules
- Stage 1: Develop/Collect Capable Implementations
  - Interoperating mechanisms
  - Minimizing overhead
- Stage 2: Performance Evaluation
  - Source code instrumentation
  - Data collecting
  - Performance database
- Stage 3: Data Analysis
  - Develop analysis procedures
  - Exploring important parameters
  - Analyze the relationships between parameters
- Stage 4: Tuning Rules Development
  - Performance modeling
  - Heuristic tuning rules
  - Mechanisms to incorporate tuning rules

Stage 1: Collect/Develop/Incorporate Implementations
- Goal:
  - Develop, collect and integrate capable methods/implementations
- For packages integrated with CCA, the goal is to develop interoperating mechanisms.
  - Overhead introduced by the interoperating mechanisms must be minimized

[Stage boxes: Interoperating Mechanisms, Minimizing Overhead]

Stage 2: Performance Evaluation
- Goal:
  - Generate useful performance data and explore methods to easily manipulate large amounts of data
- This is our current area of focus

[Stage boxes: Source Code Instrumentation, Data Collection, Performance Database]

Stage 3: Performance Analysis
- Goal:
  - Use the collected performance data and the constructed database to conduct analysis.
- Very complex process for quantum chemistry computations
  - Example to follow…

[Stage boxes: Develop Analysis Procedures, Exploring Important Parameters, Analyze the Relationships Between Parameters]

Stage 4: Tuning Rules Development
- Goal:
  - Develop mechanisms to select one method among a pool of methods.
- Depends on the results of performance analysis.
- Two common approaches:
  - develop performance models
  - use heuristic tuning rules
- Tuning rules must be integrated into the original interoperating mechanisms to be useful.

[Stage boxes: Performance Modeling, Heuristic Tuning Rules, Mechanisms to Incorporate Tuning Rules]
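The two approaches to selecting one method from a pool can be contrasted in a short Python sketch. Everything here is an assumption made for illustration: the function names, the shape of the context dictionary, and the example rule are hypothetical and do not come from the CQoS work itself.

```python
from typing import Callable, Mapping, Sequence, Tuple

# Hypothetical description of a run, e.g. problem size or platform properties.
Context = Mapping[str, float]
# A heuristic rule: (predicate on the context, method chosen when it holds).
Rule = Tuple[Callable[[Context], bool], str]


def select_by_model(
    methods: Sequence[str],
    predict_cost: Callable[[str, Context], float],
    ctx: Context,
) -> str:
    """Performance-model approach: pick the method with the lowest predicted cost."""
    return min(methods, key=lambda method: predict_cost(method, ctx))


def select_by_rules(rules: Sequence[Rule], default: str, ctx: Context) -> str:
    """Heuristic approach: the first rule whose predicate holds decides."""
    for predicate, method in rules:
        if predicate(ctx):
            return method
    return default


# Illustrative rule with made-up keys: fall back to a direct algorithm
# when the stored-integral file would not fit on the available disk.
EXAMPLE_RULES = [
    (lambda ctx: ctx["integral_file_gb"] > ctx["free_disk_gb"], "direct"),
]
```

With `EXAMPLE_RULES` and a default of `"conventional"`, `select_by_rules` returns `"direct"` only when the estimated file size exceeds the free disk space. A model-based selector needs a cost predictor for every method, whereas the rule list can be written directly from observations in the performance database.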

Performance Evaluation: Source Code Instrumentation
- Insert performance evaluation functions into application source code.
  - Straightforward with the automatic instrumentation capability provided by existing performance tools.
  - Limitations exist when you need only part of the overall performance data
    - Expected performance data may not be generated

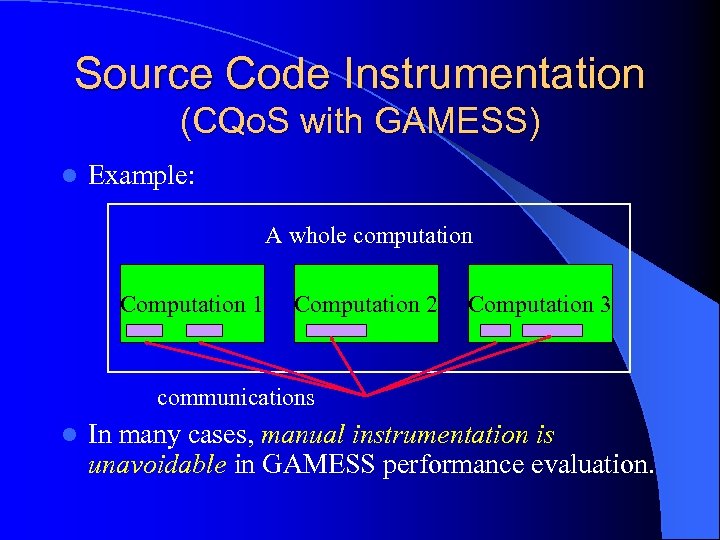

Source Code Instrumentation (CQoS with GAMESS)
- Example: [Diagram: a whole computation made up of Computation 1, Computation 2 and Computation 3, with communications]
- In many cases, manual instrumentation is unavoidable in GAMESS performance evaluation.

GAMESS Performance Tools Integrator (GPERFI)
- Built on top of existing performance tools such as TAU (Tuning and Analysis Utilities) or PAPI
- Allows more flexible instrumentation mechanisms specific to GAMESS
- Provides mechanisms to extract I/O, communication, or partial profiling data from the overall wall clock time
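The idea of separating I/O and communication time from the overall wall clock time can be illustrated with a small Python timer. This is a minimal sketch, not GPERFI and not the TAU or PAPI API: the `PhaseTimer` class and its methods are hypothetical, and it assumes instrumented phases do not nest.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class PhaseTimer:
    """Accumulates time per category and reports the remainder of the wall time."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock            # injectable so the timer can be tested
        self._start = clock()          # wall clock starts at construction
        self._totals = defaultdict(float)

    @contextmanager
    def phase(self, category):
        """Time one manually instrumented region, e.g. 'io' or 'comm'."""
        begin = self._clock()
        try:
            yield
        finally:
            self._totals[category] += self._clock() - begin

    def breakdown(self):
        """Return per-category totals, the overall wall time, and what is left."""
        wall = self._clock() - self._start
        result = dict(self._totals)
        result["other"] = wall - sum(self._totals.values())
        result["wall"] = wall
        return result
```

Wrapping only selected regions, for example `with timer.phase("io"): ...` around a write, mirrors the manual instrumentation case: the profile contains just the parts of interest, and everything else is reported as `other`.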

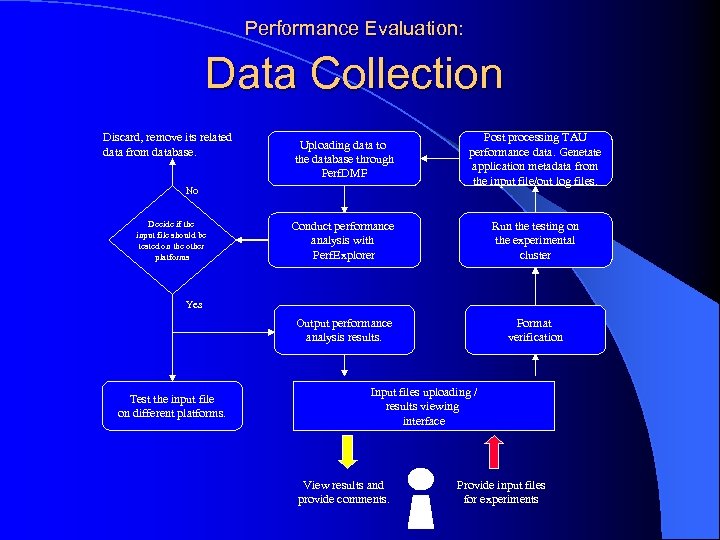

Performance Evaluation: Data Collection

[Flowchart; box labels as extracted, arrows not recoverable from the text]
- Input file uploading / results viewing interface
  - Provide input files for experiments
  - View results and provide comments
- Format verification
- Run the test on the experimental cluster
- Post-process TAU performance data
- Generate application metadata from the input files and output log files
- Upload data to the database through PerfDMF
- Conduct performance analysis with PerfExplorer
- Output performance analysis results
- Decide whether the input file should be tested on the other platforms
  - Yes: test the input file on different platforms
  - No: discard it and remove its related data from the database

Performance Evaluation: Performance Database
- Simply conducting many performance evaluations and putting the performance data into the database is not going to work!
  - Need to properly collect the metadata related to the performance data.
  - Need to build relationships between metadata.
  - Need participation of chemists!

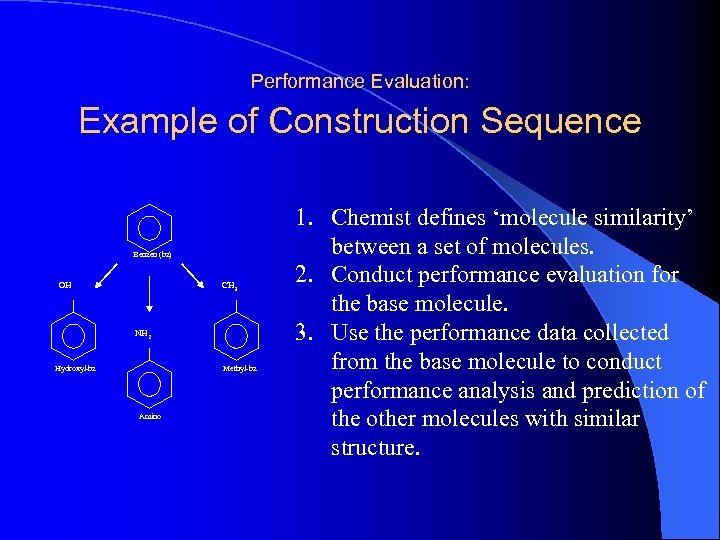

Performance Evaluation: Example of Construction Sequence

[Diagram: Benzene (bz) as the base molecule; substituents OH, CH3 and NH2 give Hydroxyl-bz, Methyl-bz and Amino]

1. Chemist defines ‘molecule similarity’ between a set of molecules.
2. Conduct performance evaluation for the base molecule.
3. Use the performance data collected from the base molecule to conduct performance analysis and prediction for the other molecules with similar structure.
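The construction sequence above can be sketched as a lookup from a molecule to the base molecule whose measurements serve as its reference. This is a hedged illustration only: `SimilarityGroup`, `base_for` and `reference_data` are hypothetical names, and the sketch stops at finding the reference data because the slides do not specify how the prediction itself is made.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Set


@dataclass
class SimilarityGroup:
    """A chemist-defined group: one base molecule plus molecules similar to it."""

    base: str
    similar: Set[str] = field(default_factory=set)


def base_for(molecule: str, groups: Iterable[SimilarityGroup]) -> Optional[str]:
    """Step 1: find the base molecule of the group this molecule belongs to."""
    for group in groups:
        if molecule == group.base or molecule in group.similar:
            return group.base
    return None


def reference_data(
    molecule: str,
    groups: Iterable[SimilarityGroup],
    measured: Dict[str, dict],
) -> Optional[dict]:
    """Steps 2 and 3: return the base molecule's measurements, if any exist."""
    base = base_for(molecule, groups)
    if base is None:
        return None
    return measured.get(base)
```

Keeping the similarity definition as plain data lets a chemist contribute it without touching the performance tooling, which matches the goal of keeping database management transparent to chemists.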

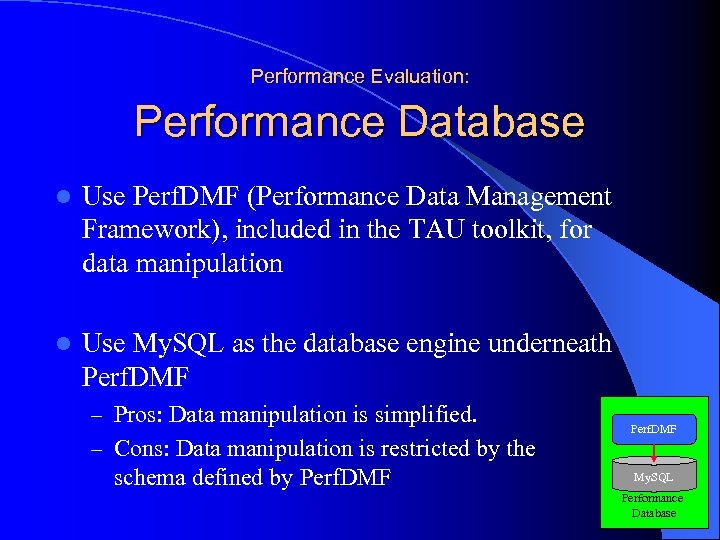

Performance Evaluation: Performance Database
- Use PerfDMF (Performance Data Management Framework), included in the TAU toolkit, for data manipulation
- Use MySQL as the database engine underneath PerfDMF
  - Pros: Data manipulation is simplified.
  - Cons: Data manipulation is restricted by the schema defined by PerfDMF

[Diagram: PerfDMF on top of the MySQL performance database]

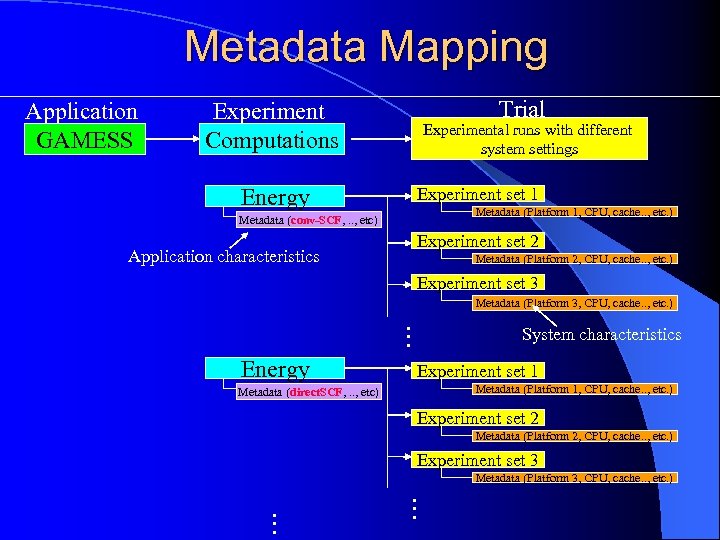

Metadata Mapping

[Diagram: mapping of GAMESS runs onto the Application / Experiment / Trial levels]
- Application: GAMESS
- Experiment: computations, with metadata describing application characteristics
  - Energy, metadata (conv-SCF, …, etc.)
    - Experiment set 1, metadata (Platform 1, CPU, cache, …, etc.)
    - Experiment set 2, metadata (Platform 2, CPU, cache, …, etc.)
    - Experiment set 3, metadata (Platform 3, CPU, cache, …, etc.)
    - …
  - Energy, metadata (directSCF, …, etc.)
    - Experiment set 1, metadata (Platform 1, CPU, cache, …, etc.)
    - Experiment set 2, metadata (Platform 2, CPU, cache, …, etc.)
    - Experiment set 3, metadata (Platform 3, CPU, cache, …, etc.)
    - …
  - …
- Trial: experimental runs with different system settings, with metadata describing system characteristics
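The three-level mapping can be mirrored with plain Python data classes. This is a sketch of the structure shown on the slide, not the PerfDMF schema or API: the class names follow the Application / Experiment / Trial labels, while the field names and the `trials_on` helper are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Trial:
    """One experimental run; metadata holds system characteristics."""

    name: str
    system_metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class Experiment:
    """One computation; metadata holds application characteristics."""

    name: str
    application_metadata: Dict[str, str] = field(default_factory=dict)
    trials: List[Trial] = field(default_factory=list)


@dataclass
class Application:
    """Top level, e.g. GAMESS."""

    name: str
    experiments: List[Experiment] = field(default_factory=list)


def trials_on(app: Application, platform: str) -> List[Tuple[str, str, str]]:
    """List (experiment, SCF setting, trial) for every trial run on a platform."""
    found = []
    for exp in app.experiments:
        for trial in exp.trials:
            if trial.system_metadata.get("platform") == platform:
                scf = exp.application_metadata.get("scf", "")
                found.append((exp.name, scf, trial.name))
    return found
```

Because application characteristics live on the experiment and system characteristics live on the trial, a query such as `trials_on(app, "Platform 1")` can compare conv-SCF and directSCF energy runs on the same platform.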

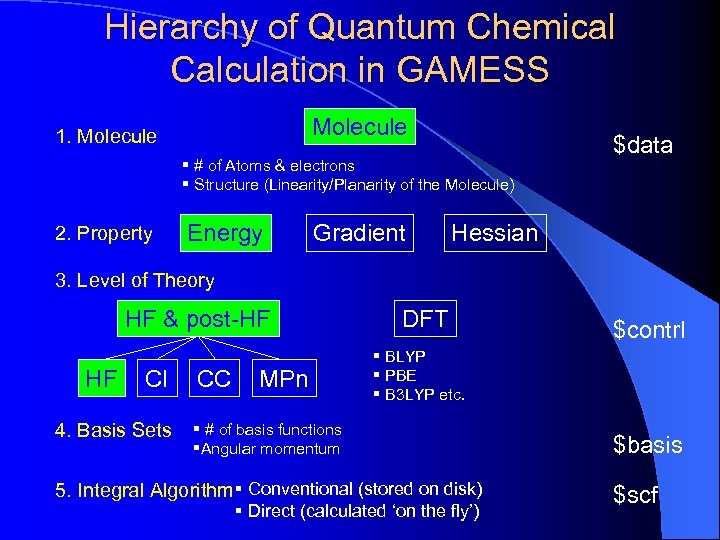

Hierarchy of Quantum Chemical Calculation in GAMESS

1. Molecule
   - Number of atoms and electrons
   - Structure (linearity/planarity of the molecule)
2. Property
   - Energy
   - Gradient
   - Hessian
3. Level of Theory
   - HF and post-HF: HF, CI, CC, MPn
   - DFT: BLYP, PBE, B3LYP, etc.
4. Basis Sets
   - Number of basis functions
   - Angular momentum
5. Integral Algorithm
   - Conventional (stored on disk)
   - Direct (calculated ‘on the fly’)

[GAMESS input groups shown alongside the hierarchy: $data, $contrl, $basis, $scf]
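Application metadata of this kind can be pulled from the keyword groups of a GAMESS input file. The Python sketch below is deliberately simplified and rests on stated assumptions: it only handles `KEY=VALUE` pairs inside `$NAME ... $END` groups, it ignores comments and the free-form `$DATA` contents, and it treats a missing `DIRSCF` keyword as a conventional (disk-based) calculation. The function names are hypothetical.

```python
import re
from typing import Dict, Optional

# A group runs from "$NAME" to the next "$END" (case-insensitive).
GROUP_RE = re.compile(r"\$(\w+)(.*?)\$END", re.IGNORECASE | re.DOTALL)
# Keyword assignments of the form KEY=VALUE inside a group body.
PAIR_RE = re.compile(r"(\w+)\s*=\s*(\S+)")


def parse_groups(text: str) -> Dict[str, Dict[str, str]]:
    """Return {GROUP: {KEY: VALUE}} for every keyword group in the input text."""
    groups: Dict[str, Dict[str, str]] = {}
    for name, body in GROUP_RE.findall(text):
        pairs = {key.upper(): value.upper() for key, value in PAIR_RE.findall(body)}
        groups.setdefault(name.upper(), {}).update(pairs)
    return groups


def application_metadata(text: str) -> Dict[str, Optional[str]]:
    """Extract a few hierarchy levels: property, theory, basis, integral algorithm."""
    groups = parse_groups(text)
    contrl = groups.get("CONTRL", {})
    basis = groups.get("BASIS", {})
    scf = groups.get("SCF", {})
    # Assumption: anything other than an explicit true value means conventional.
    direct = scf.get("DIRSCF") in (".TRUE.", ".T.")
    return {
        "property": contrl.get("RUNTYP"),
        "scf_type": contrl.get("SCFTYP"),
        "basis": basis.get("GBASIS"),
        "integral_algorithm": "direct" if direct else "conventional",
    }
```

For an input containing `$CONTRL SCFTYP=RHF RUNTYP=ENERGY $END`, `$BASIS GBASIS=N31 NGAUSS=6 $END` and `$SCF DIRSCF=.TRUE. $END`, the function reports an energy run with an RHF wavefunction, the N31 basis family and the direct integral algorithm. Keywords that are absent are returned as `None` rather than filled with defaults.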

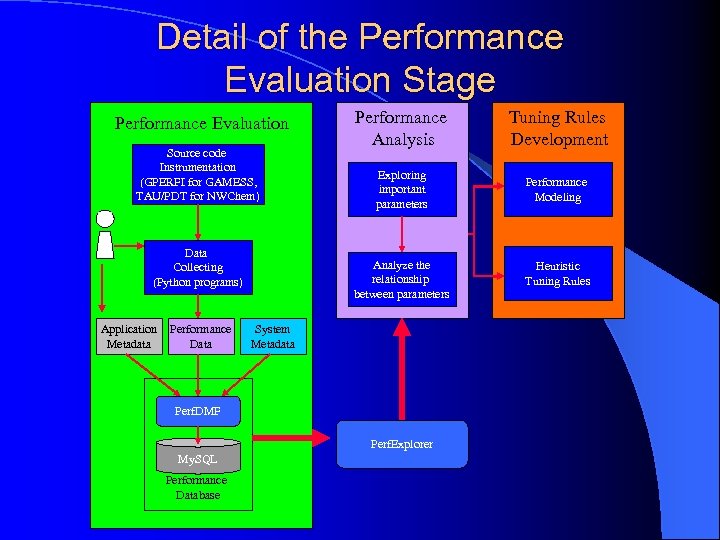

Detail of the Performance Evaluation Stage

[Diagram: components of the workflow and the tools behind them]
- Performance Evaluation
  - Source code instrumentation (GPERFI for GAMESS, TAU/PDT for NWChem)
  - Data collecting (Python programs)
    - Application metadata
    - Performance data
    - System metadata
  - Performance database (PerfDMF, MySQL)
- Performance Analysis (PerfExplorer)
  - Exploring important parameters
  - Analyze the relationship between parameters
- Tuning Rules Development
  - Performance modeling
  - Heuristic tuning rules

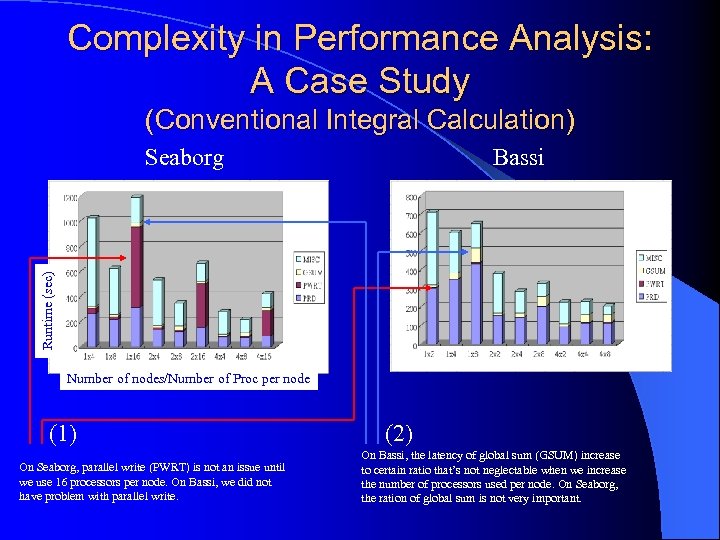

Complexity in Performance Analysis: A Case Study (Conventional Integral Calculation)

[Plots: runtime (sec) versus number of nodes / number of processors per node, on Bassi and on Seaborg]

1. On Seaborg, parallel write (PWRT) is not an issue until 16 processors per node are used. On Bassi, there was no problem with parallel write.
2. On Bassi, the latency of global sum (GSUM) grows to a non-negligible share of the runtime as the number of processors used per node increases. On Seaborg, the share of global sum is not very important.

What Needs to be Analyzed?
- Why does the cost of parallel write (PWRT) jump dramatically when using 16 processors per node?
- When does global sum (GSUM) become the performance bottleneck?
- Investigation of many subroutines to study the relationships among:
  - CPU speed
  - I/O bandwidth/latency
  - data size
  - communication bandwidth/latency
  - number of processors used per node

Conclusions
- Initial work to construct a performance database that eases the burden of manipulating performance data for quantum chemists
  - Data manipulation is mostly transparent to chemists. They need only provide their knowledge of chemistry.
- The infrastructure can be used for other applications
  - We are working on incorporating NWChem

Acknowledgements
- Funding:
  - US DOE SciDAC initiative
- Resources:
  - Lawrence Berkeley National Lab, NERSC

Questions/Comments?
