- Number of slides: 21

Conditional Random Fields for ASR Jeremy Morris July 25, 2006

Overview ► Problem Statement (Motivation) ► Conditional Random Fields ► Experiments § Attribute Selection § Experimental Setup ► Results ► Future Work

Problem Statement ► Developed as part of the ASAT Project § (Automatic Speech Attribute Transcription) ► Goal: Develop a system for bottom-up speech recognition using 'speech attributes'

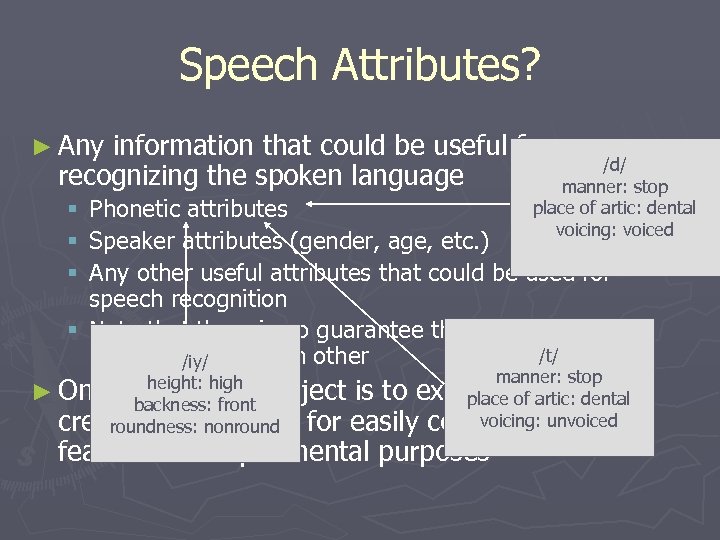

Speech Attributes? ► Any information that could be useful for recognizing the spoken language § Phonetic attributes § Speaker attributes (gender, age, etc.) § Any other useful attributes that could be used for speech recognition § Note that there is no guarantee that attributes will be independent of each other ► One part of this project is to explore ways to create a framework for easily combining new features for experimental purposes
(Figure: example phonetic attributes. /d/: manner: stop, place of articulation: dental, voicing: voiced. /t/: manner: stop, place of articulation: dental, voicing: unvoiced. /iy/: height: high, backness: front, roundness: nonround.)

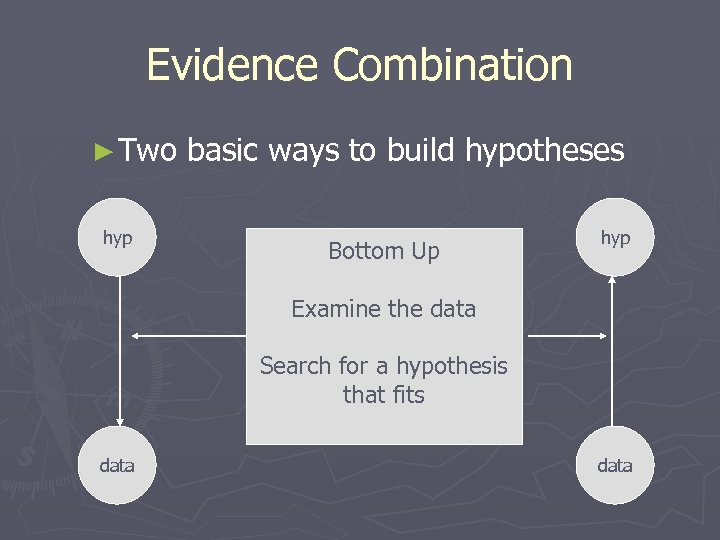

Evidence Combination ► Two basic ways to build hypotheses
(Figure: Top Down: generate a hypothesis, then see if the data fits the hypothesis. Bottom Up: examine the data, then search for a hypothesis that fits the data.)

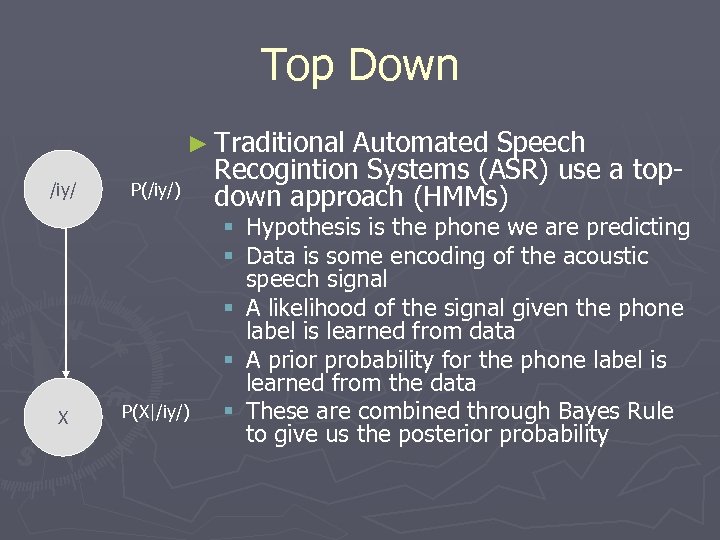

Top Down ► Traditional Automatic Speech Recognition (ASR) systems use a top-down approach (HMMs) § Hypothesis is the phone we are predicting (e.g., /iy/) § Data is some encoding of the acoustic speech signal (X) § A likelihood of the signal given the phone label, P(X | /iy/), is learned from the data § A prior probability for the phone label, P(/iy/), is learned from the data § These are combined through Bayes' Rule to give us the posterior probability, P(/iy/ | X) = P(X | /iy/) P(/iy/) / P(X)
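As a minimal sketch of the top-down decision rule, assuming made-up priors and likelihoods for three phones and a single observation X (the numbers are hypothetical, not taken from this work), Bayes' Rule turns P(X | phone) and P(phone) into P(phone | X):

```python
# Hypothetical priors P(phone) and acoustic likelihoods P(X | phone)
# for one observation X; the values are invented for illustration.
priors = {"/iy/": 0.5, "/k/": 0.3, "/t/": 0.2}
likelihoods = {"/iy/": 0.02, "/k/": 0.05, "/t/": 0.01}


def bayes_posterior(priors, likelihoods):
    """Return P(phone | X) = P(X | phone) P(phone) / P(X)."""
    joint = {p: likelihoods[p] * priors[p] for p in priors}
    evidence = sum(joint.values())  # P(X), summed over all phones
    return {p: j / evidence for p, j in joint.items()}


posterior = bayes_posterior(priors, likelihoods)
print(max(posterior, key=posterior.get))  # prints /k/
```

Here /k/ wins even though /iy/ has the larger prior, because its likelihood term is large enough to outweigh the prior.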

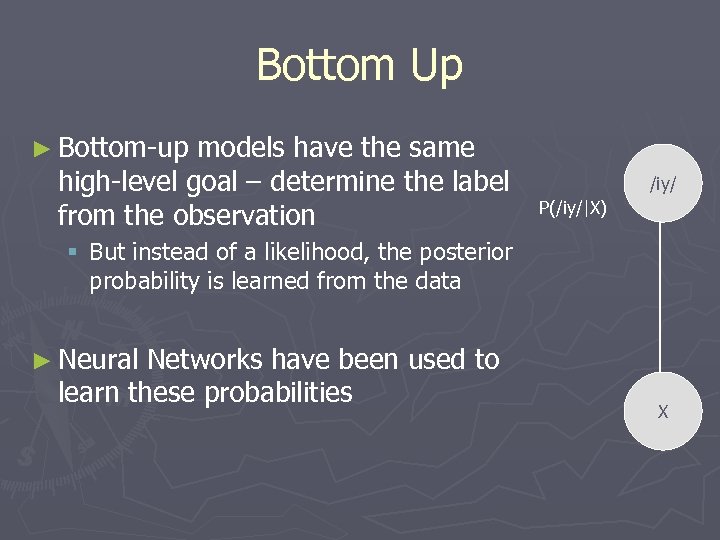

Bottom Up ► Bottom-up models have the same high-level goal: determine the label from the observation § But instead of a likelihood, the posterior probability P(/iy/ | X) is learned from the data ► Neural networks have been used to learn these probabilities
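A bottom-up model maps the observation straight to P(phone | X). A minimal sketch, assuming a single linear layer followed by a softmax as a stand-in for a trained neural network (the weights, biases, and two-dimensional feature vector are all hypothetical):

```python
import math

# Hypothetical per-phone weight vectors and biases for a 2-D observation X.
weights = {"/iy/": [1.5, -0.5], "/k/": [-1.0, 2.0], "/t/": [0.2, 0.1]}
biases = {"/iy/": 0.0, "/k/": -0.5, "/t/": 0.1}


def softmax_posterior(x):
    """Map observation x directly to P(phone | x) with a linear layer + softmax."""
    scores = {p: sum(w * xi for w, xi in zip(weights[p], x)) + biases[p] for p in weights}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {p: math.exp(s - top) for p, s in scores.items()}
    total = sum(exps.values())
    return {p: e / total for p, e in exps.items()}


post = softmax_posterior([2.0, 0.5])
print(max(post, key=post.get))  # prints /iy/
```

Nothing here models P(X | phone) or P(phone); the mapping from X to the posterior is learned directly.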

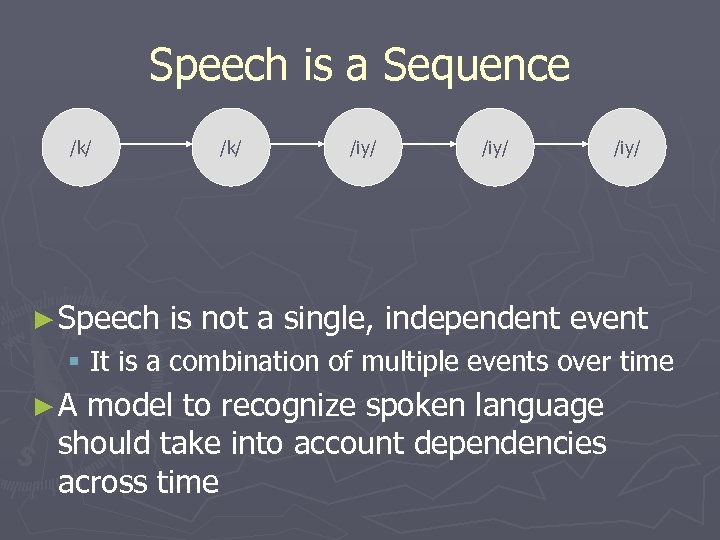

Speech is a Sequence ► Speech is not a single, independent event § It is a combination of multiple events over time (e.g., /k/ followed by /iy/) ► A model to recognize spoken language should take into account dependencies across time

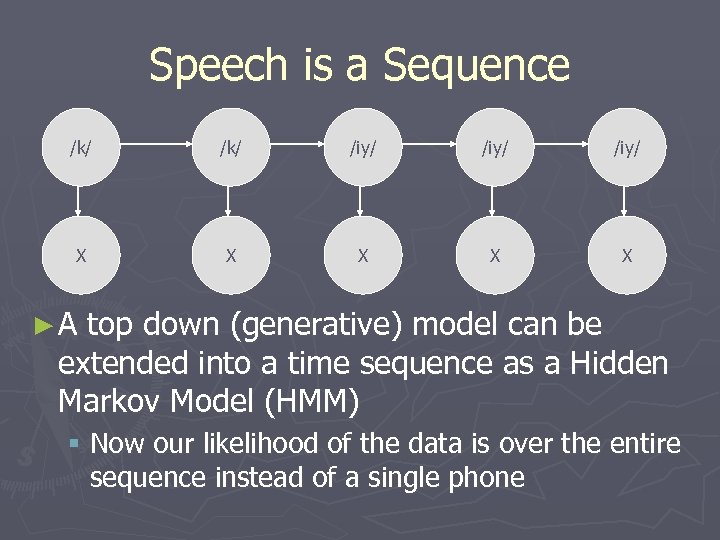

Speech is a Sequence ► A top-down (generative) model can be extended into a time sequence as a Hidden Markov Model (HMM) § Now our likelihood of the data is over the entire sequence instead of a single phone
(Figure: hidden labels /k/ and /iy/ over a sequence of observations X.)
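The sequence likelihood of an HMM is usually computed with the forward algorithm, which sums over every hidden label path. A minimal sketch, assuming a two-state /k/, /iy/ model with discrete observation symbols and invented probabilities (real ASR systems use continuous densities such as Gaussian mixtures):

```python
# Hypothetical two-state HMM; all probabilities are invented for illustration.
states = ["/k/", "/iy/"]
start = {"/k/": 0.9, "/iy/": 0.1}                     # P(first state)
trans = {"/k/": {"/k/": 0.6, "/iy/": 0.4},            # P(next state | state)
         "/iy/": {"/k/": 0.1, "/iy/": 0.9}}
emit = {"/k/": {"burst": 0.7, "voiced": 0.3},         # P(observation | state)
        "/iy/": {"burst": 0.1, "voiced": 0.9}}


def forward_likelihood(observations):
    """Return P(observations), summed over every hidden state path."""
    # alpha[s] = P(observations so far, current state = s)
    alpha = {s: start[s] * emit[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {s: sum(alpha[r] * trans[r][s] for r in states) * emit[s][obs]
                 for s in states}
    return sum(alpha.values())


print(forward_likelihood(["burst", "voiced", "voiced"]))
```

For this input the result is about 0.2587. On long utterances a real recognizer works in the log domain (or rescales alpha at each frame) to avoid numerical underflow.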

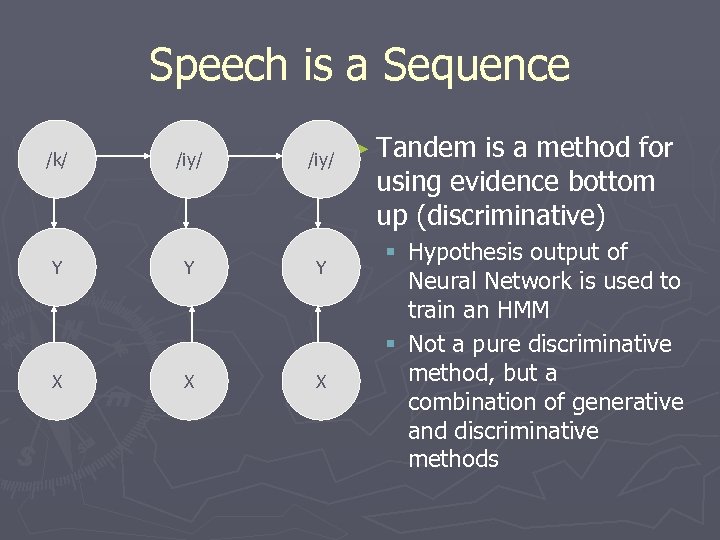

Speech is a Sequence ► Tandem is a method for using evidence bottom up (discriminative) § The neural network's outputs (hypotheses Y computed from the acoustic observations X) are used as the observations for training an HMM over the labels (/k/, /iy/) § Not a pure discriminative method, but a combination of generative and discriminative methods
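In the commonly used Tandem recipe, the network's posteriors are log-transformed and decorrelated before being used as the HMM observations Y. The sketch below shows only the log step plus per-dimension mean normalization, assuming hypothetical posterior values; the decorrelation (e.g. PCA) and the Gaussian-mixture HMM training are omitted:

```python
import math

# Hypothetical per-frame neural-network posteriors over three phones
# (each row sums to 1); in practice these come from the trained network.
posteriors = [
    [0.80, 0.15, 0.05],
    [0.30, 0.60, 0.10],
    [0.05, 0.90, 0.05],
]


def tandem_features(frames, floor=1e-8):
    """Turn NN posteriors into HMM observation vectors Y (log + mean normalization).

    A full Tandem front end would also decorrelate these vectors (e.g. with PCA)
    before training Gaussian-mixture HMMs; that step is omitted here.
    """
    logs = [[math.log(max(p, floor)) for p in frame] for frame in frames]
    dims = len(logs[0])
    means = [sum(frame[d] for frame in logs) / len(logs) for d in range(dims)]
    return [[frame[d] - means[d] for d in range(dims)] for frame in logs]


Y = tandem_features(posteriors)
```

The log step makes the sharply peaked posteriors closer to Gaussian, which suits the generative HMM that models Y.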

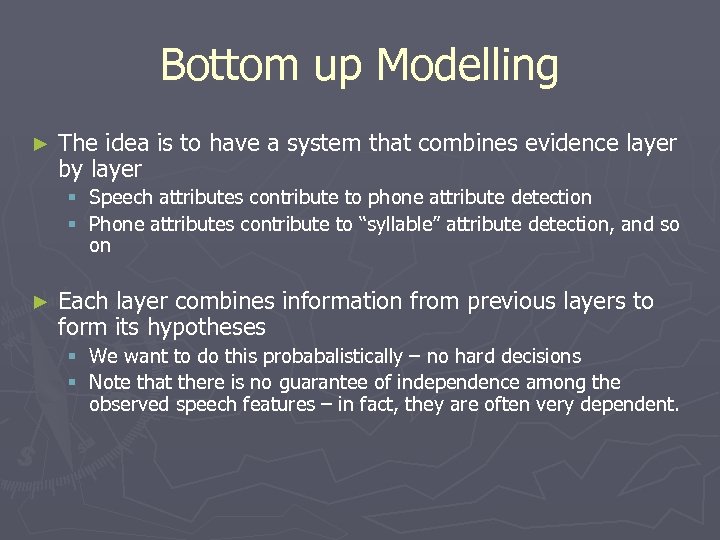

Bottom-up Modelling ► The idea is to have a system that combines evidence layer by layer § Speech attributes contribute to phone attribute detection § Phone attributes contribute to “syllable” attribute detection, and so on ► Each layer combines information from previous layers to form its hypotheses § We want to do this probabilistically: no hard decisions § Note that there is no guarantee of independence among the observed speech features; in fact, they are often very dependent.

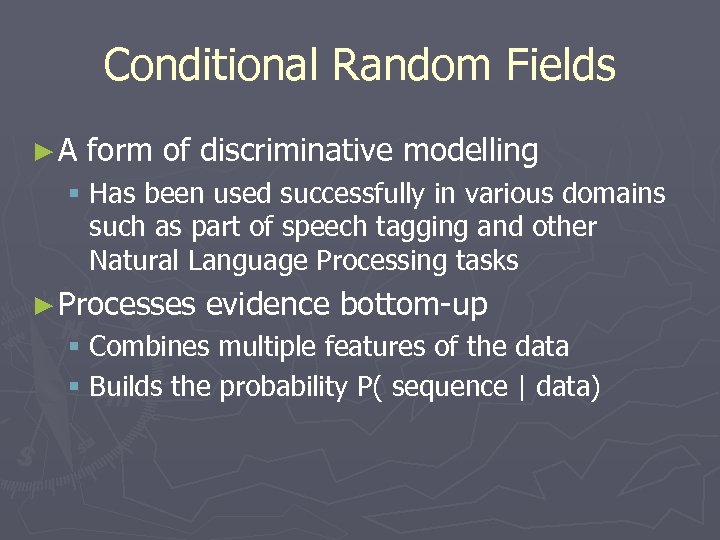

Conditional Random Fields ► A form of discriminative modelling § Has been used successfully in various domains such as part-of-speech tagging and other Natural Language Processing tasks ► Processes evidence bottom-up § Combines multiple features of the data § Builds the probability P(sequence | data)

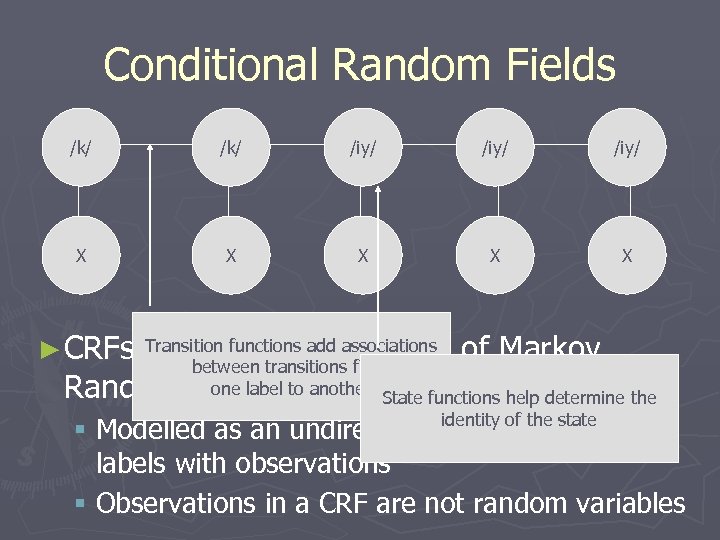

Conditional Random Fields ► CRFs are based on the idea of Markov Random Fields § Modelled as an undirected graph connecting labels with observations § Observations in a CRF are not random variables
(Figure: labels /k/ and /iy/ over observations X. Transition functions add associations between transitions from one label to another; state functions help determine the identity of the state.)
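To make the two kinds of functions concrete, here is a minimal sketch, assuming a toy three-frame utterance in which each observation is a set of detected attribute names (all names are hypothetical). State functions look at one label and the observation at its frame; transition functions look at a pair of adjacent labels:

```python
# Hypothetical observations: the set of attributes detected at each frame.
x = [{"stop", "unvoiced"}, {"vowel", "high", "front"}, {"vowel", "high", "front"}]
y = ["/k/", "/iy/", "/iy/"]  # one candidate label sequence


def state_features(x, y, i):
    """State functions: pair the label at frame i with each attribute observed there."""
    return {("state", attr, y[i]) for attr in x[i]}


def transition_features(y, i):
    """Transition functions: pair the previous label with the current one.

    A transition function may also inspect x; this sketch ignores it.
    """
    return {("trans", y[i - 1], y[i])} if i > 0 else set()


def active_features(x, y):
    """Count how often each state and transition feature fires along the sequence."""
    counts = {}
    for i in range(len(y)):
        for feat in state_features(x, y, i) | transition_features(y, i):
            counts[feat] = counts.get(feat, 0) + 1
    return counts


counts = active_features(x, y)
print(counts[("trans", "/k/", "/iy/")], counts[("state", "high", "/iy/")])  # prints 1 2
```

Because the observations are only conditioned on, never modelled, the features may overlap freely (here "vowel", "high" and "front" all fire together).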

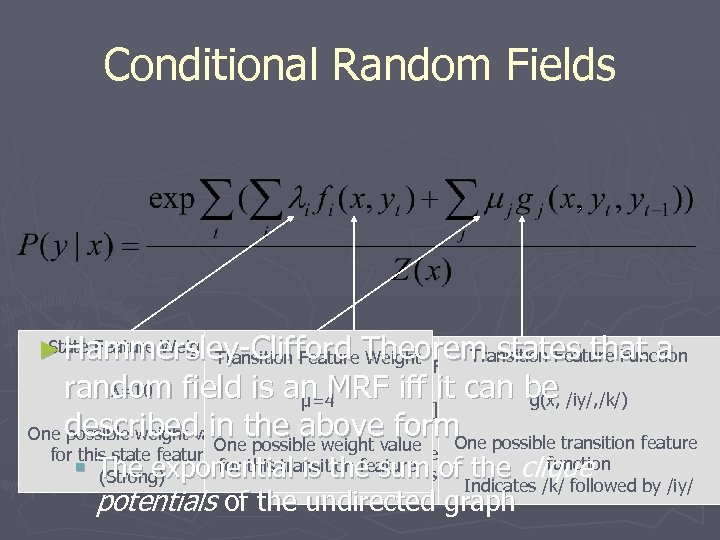

Conditional Random Fields ► Hammersley-Clifford Theorem states that a (strictly positive) random field is an MRF iff it can be described in the above form § The exponent is a sum of the clique potentials of the undirected graph
(Figure annotations on the formula: state feature function f([x is stop], /t/), one possible state feature function for our attributes and labels, with state feature weight λ=10, one possible (strong) weight value for this state feature; transition feature function g(x, /iy/, /k/), one possible transition feature, which indicates /k/ followed by /iy/, with transition feature weight μ=4, one possible weight value for this transition feature.)
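Written out in the notation of the annotated example functions, a linear-chain CRF has the following form. The indexing (frames i, state features f_j with weights λ_j, transition features g_k with weights μ_k) is our assumption, chosen to match f([x is stop], /t/) with λ=10 and g(x, /iy/, /k/) with μ=4:

$$
P(\mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \exp\Big( \sum_{i} \Big[ \sum_{j} \lambda_j f_j(\mathbf{x}, y_i) + \sum_{k} \mu_k g_k(\mathbf{x}, y_i, y_{i-1}) \Big] \Big),
\qquad
Z(\mathbf{x}) = \sum_{\mathbf{y}'} \exp\Big( \sum_{i} \Big[ \sum_{j} \lambda_j f_j(\mathbf{x}, y'_i) + \sum_{k} \mu_k g_k(\mathbf{x}, y'_i, y'_{i-1}) \Big] \Big)
$$

Z(x) sums over every possible label sequence, so the result is a proper distribution over sequences given the observations.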

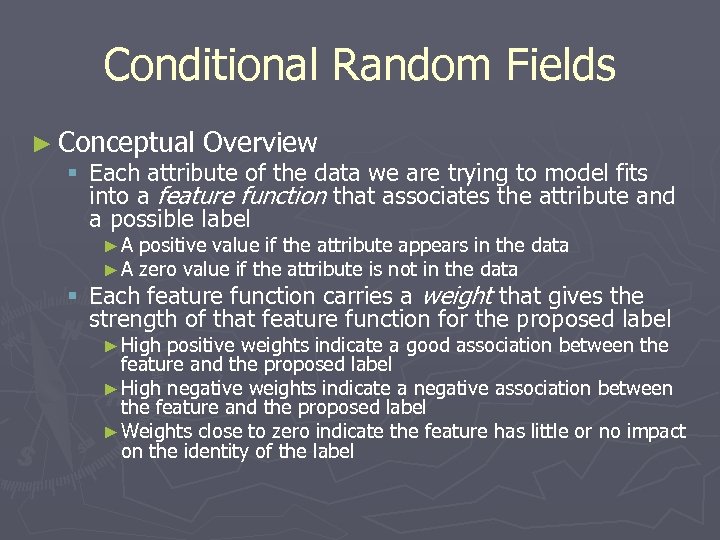

Conditional Random Fields ► Conceptual Overview § Each attribute of the data we are trying to model fits into a feature function that associates the attribute and a possible label ► A positive value if the attribute appears in the data ► A zero value if the attribute is not in the data § Each feature function carries a weight that gives the strength of that feature function for the proposed label ► High positive weights indicate a good association between the feature and the proposed label ► High negative weights indicate a negative association between the feature and the proposed label ► Weights close to zero indicate the feature has little or no impact on the identity of the label
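A minimal sketch of how a feature's weight shifts the probability of a label, assuming a one-frame CRF (so no transition features) over a hypothetical three-label set, with a single state feature f([x is stop], /t/) whose weight is varied:

```python
import math

LABELS = ["/t/", "/iy/", "/k/"]


def label_posterior(x_is_stop, lam):
    """P(label | x) for a one-frame CRF whose only feature is f([x is stop], /t/).

    The feature value is 1 when the attribute is present and the label is /t/,
    and 0 otherwise, so lam only matters when the attribute appears in the data.
    """
    scores = {lab: lam * (1.0 if (x_is_stop and lab == "/t/") else 0.0) for lab in LABELS}
    z = sum(math.exp(s) for s in scores.values())
    return {lab: math.exp(s) / z for lab, s in scores.items()}


for lam in (10.0, 0.0, -10.0):
    print(lam, label_posterior(True, lam)["/t/"])
```

With the attribute present, a weight of 10 pushes P(/t/ | x) close to 1, a weight of 0 leaves all three labels at 1/3, and a weight of -10 pushes P(/t/ | x) close to 0. With the attribute absent, the feature value is zero and the weight has no effect.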

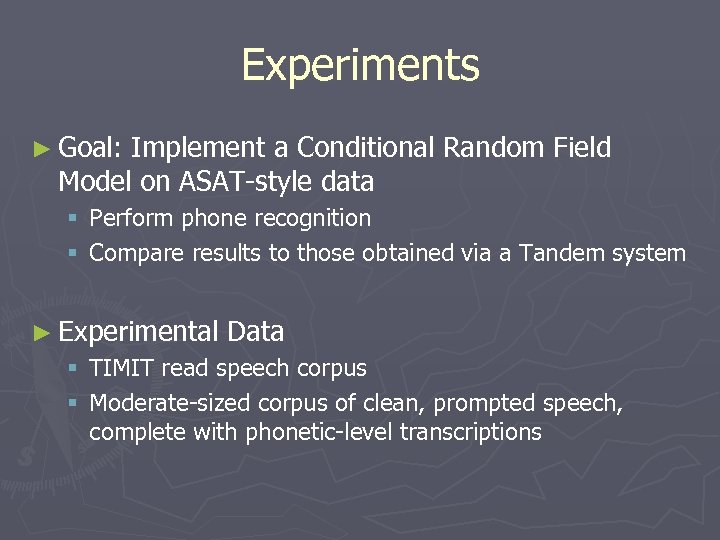

Experiments ► Goal: Implement a Conditional Random Field Model on ASAT-style data § Perform phone recognition § Compare results to those obtained via a Tandem system ► Experimental Data § TIMIT read speech corpus § Moderate-sized corpus of clean, prompted speech, complete with phonetic-level transcriptions

Attribute Selection ► Attribute Detectors § ICSI QuickNet Neural Networks ► Two different types of attributes § Phonological feature detectors ► Place, Manner, Voicing, Vowel Height, Backness, etc. ► Features are grouped into eight classes, with each class having a variable number of possible values based on the IPA phonetic chart § Phone detectors ► Neural network outputs based on the phone labels: one output per label § Classifiers were applied to 2960 utterances from the TIMIT training set

Experimental Setup ► Code built on the Java CRF toolkit on SourceForge § http://crf.sourceforge.net § Performs training to maximize the log-likelihood of the training set with respect to the model § Uses the limited-memory BFGS (L-BFGS) algorithm, which follows the gradient of the log-likelihood, to find this maximum ► For CRF models, maximizing the log-likelihood of the training data under the model is equivalent to maximizing the model's entropy subject to matching the feature expectations of the training data (Berger et al.)
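For CRFs, the gradient of the log-likelihood with respect to each weight is the observed (empirical) feature count minus the count expected under the model. A minimal sketch, assuming a tiny linear-chain CRF with hypothetical binary features, brute-force enumeration of label sequences in place of forward-backward, and plain gradient ascent in place of L-BFGS:

```python
import itertools
import math

LABELS = ["/k/", "/iy/"]


def features(x, y):
    """Hypothetical feature counts: (attribute, label) state pairs and (prev, cur) transitions."""
    counts = {}
    for i, label in enumerate(y):
        for attr in x[i]:
            key = ("state", attr, label)
            counts[key] = counts.get(key, 0) + 1
        if i > 0:
            key = ("trans", y[i - 1], label)
            counts[key] = counts.get(key, 0) + 1
    return counts


def score(w, x, y):
    """Weighted sum of the features that fire for label sequence y."""
    return sum(w.get(k, 0.0) * v for k, v in features(x, y).items())


def log_likelihood_and_gradient(w, x, y):
    """Return log P(y | x) and its gradient: empirical minus expected feature counts.

    Z(x) is computed by enumerating every label sequence, which is only feasible
    for toy inputs; a real implementation would use the forward-backward algorithm.
    """
    candidates = list(itertools.product(LABELS, repeat=len(x)))
    scores = [score(w, x, c) for c in candidates]
    top = max(scores)
    log_z = top + math.log(sum(math.exp(s - top) for s in scores))
    grad = {k: float(v) for k, v in features(x, y).items()}  # empirical counts
    for c, s in zip(candidates, scores):
        p = math.exp(s - log_z)  # model probability of candidate c
        for k, v in features(x, c).items():
            grad[k] = grad.get(k, 0.0) - p * v  # subtract the model expectation
    return score(w, x, y) - log_z, grad


# One hypothetical training utterance.
x = [{"stop"}, {"vowel"}, {"vowel"}]
y = ("/k/", "/iy/", "/iy/")

w = {}
for step in range(100):  # plain gradient ascent; L-BFGS would use the same ll and gradient
    ll, grad = log_likelihood_and_gradient(w, x, y)
    for k, g in grad.items():
        w[k] = w.get(k, 0.0) + 0.1 * g
```

At the optimum the empirical and expected feature counts match, which is exactly the constraint set of the maximum-entropy formulation.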

Experimental Setup ► Outputs from the neural nets are themselves treated as feature functions for the observed sequence: each attribute/label combination gives us a value for one feature function § Note that this makes the feature functions non-binary.
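A minimal sketch of that idea, assuming hypothetical detector names, outputs, and weights: each (attribute, label) pair is one state feature whose value at a frame is the detector's real-valued output rather than 0 or 1, so the state part of a label's score is a weighted sum of those outputs.

```python
# Hypothetical neural-network outputs for one frame (attribute -> posterior).
detector_outputs = {"manner=stop": 0.85, "voicing=voiced": 0.10, "height=high": 0.05}

# Hypothetical learned weights, one per (attribute, label) feature function.
weights = {
    ("manner=stop", "/t/"): 2.0,
    ("voicing=voiced", "/t/"): -1.5,
    ("height=high", "/iy/"): 3.0,
}


def state_score(outputs, label):
    """Sum of weight * feature value, where the value is the raw detector output."""
    return sum(w * outputs[attr] for (attr, lab), w in weights.items() if lab == label)


print(round(state_score(detector_outputs, "/t/"), 3), round(state_score(detector_outputs, "/iy/"), 3))  # prints 1.55 0.15
```

A confident "stop" detection contributes more to /t/ than a marginal one would, which a binary (present/absent) feature could not express.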

![Results](https://present5.com/presentation/043733307e44eb1d54689271d559a2a8/image-20.jpg)

Results

| Model | Phone Accuracy | Phone Correct |
|---|---|---|
| Tandem [1] (phones) | 60.48% | 63.30% |
| Tandem [3] (phones) | 67.32% | 73.81% |
| CRF [1] (phones) | 66.89% | 68.49% |
| Tandem [1] (features) | 61.48% | 63.50% |
| Tandem [3] (features) | 66.69% | 72.52% |
| CRF [1] (features) | 65.29% | 66.81% |
| Tandem [1] (phones/features) | 61.78% | 63.68% |
| Tandem [3] (phones/features) | 67.96% | 73.40% |
| CRF (phones/features) | 68.00% | 69.58% |
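Phone recognition results are conventionally scored two ways: Correct ignores insertions, while Accuracy also penalizes them, so Accuracy can never exceed Correct. A minimal sketch of the standard formulas, with hypothetical error counts (not the counts behind these results):

```python
def phone_scores(n_ref, subs, dels, ins):
    """Return (accuracy, correct) in percent from alignment error counts."""
    hits = n_ref - subs - dels
    correct = 100.0 * hits / n_ref
    accuracy = 100.0 * (hits - ins) / n_ref
    return accuracy, correct


# Hypothetical counts: 1000 reference phones, 200 substitutions,
# 100 deletions and 50 insertions.
print(phone_scores(1000, 200, 100, 50))  # prints (65.0, 70.0)
```

Read this way, the gap between the Correct and Accuracy figures for a system is its insertion rate.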

Future Work ► More features § What kinds of features can we add to improve our transitions? ► Tuning § The HMM model has parameters that can be tuned for better performance: can we tweak the CRF to do something similar? ► Word recognition § How does this model do at the full word recognition level, instead of just phones? ► Other corpora § Can we extend this method beyond TIMIT to different types of corpora (e.g., WSJ)?