0abe4a8655032519914030e09c156a00.ppt

- Number of slides: 14

Concept-based P2P Search: How to find more relevant documents

Ingmar Weber, Max-Planck-Institute for Computer Science (joint work with Holger Bast)

Torino, December 8th, 2004

What's wrong with ?

First... it's centralized.

- Global authority: can we trust it?
  - "presidential election Ukraine"
  - "buy luxury car"
- Dependency: I need it.
  - single point of failure
- Size: even Google is only human.
  - unlikely to index everything that's of interest (deep web)
  - infeasible to run expensive algorithms on 8 billion documents
  - difficult to input human knowledge

Peer-to-peer search?

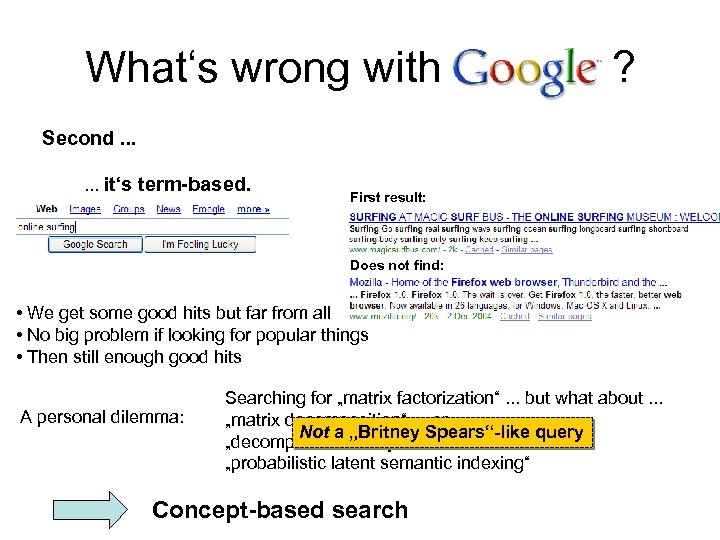

What's wrong with ?

Second... it's term-based.

A personal dilemma: searching for "matrix factorization" (not a "Britney Spears"-like query)... but what about "matrix decomposition", or "decompose linear system", or even "probabilistic latent semantic indexing"?

- We get some good hits but far from all
- No big problem if looking for popular things
  - Then still enough good hits

Concept-based search

Figure labels: "First result:", "Does not find:"

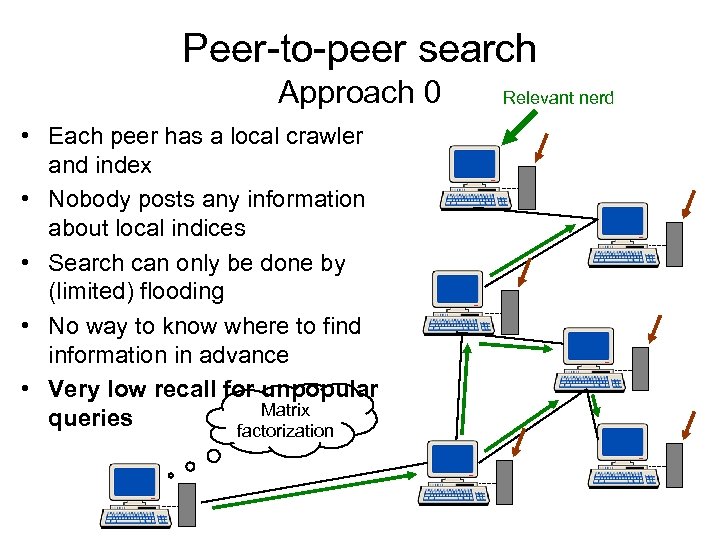

Peer-to-peer search: Approach 0

- Each peer has a local crawler and index
- Nobody posts any information about local indices
- Search can only be done by (limited) flooding
- No way to know where to find information in advance
- Very low recall for unpopular queries

Figure labels: "Matrix factorization", "Relevant nerd"
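Limited flooding as described above can be sketched as a breadth-first search with a hop limit. This is only an illustration under stated assumptions: the overlay topology, the function name `flood_search`, and the `ttl` parameter are hypothetical and do not come from the talk.

```python
from collections import deque

def flood_search(neighbors, local_docs, start, query_terms, ttl):
    """Breadth-first flooding from `start`, visiting peers at most `ttl` hops away.

    neighbors:  dict peer -> list of neighbouring peers (assumed overlay topology)
    local_docs: dict peer -> dict doc_id -> set of terms (each peer's local index)
    Returns the set of (peer, doc_id) pairs whose documents contain all query terms.
    """
    query = set(query_terms)
    seen = {start}
    queue = deque([(start, 0)])
    hits = set()
    while queue:
        peer, hops = queue.popleft()
        for doc_id, terms in local_docs.get(peer, {}).items():
            if query <= terms:
                hits.add((peer, doc_id))
        if hops == ttl:
            continue  # hop limit reached: do not forward the query further
        for nxt in neighbors.get(peer, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return hits

# Toy line topology p1 - p2 - p3 - p4; only p4 holds the relevant document.
neighbors = {"p1": ["p2"], "p2": ["p1", "p3"], "p3": ["p2", "p4"], "p4": ["p3"]}
local_docs = {
    "p1": {"d1": {"britney", "spears"}},
    "p2": {"d2": {"britney", "spears"}},
    "p3": {"d3": {"linear", "system"}},
    "p4": {"d4": {"matrix", "factorization"}},
}
near = flood_search(neighbors, local_docs, "p1", ["matrix", "factorization"], ttl=2)
far = flood_search(neighbors, local_docs, "p1", ["matrix", "factorization"], ttl=3)
```

With a hop limit of 2 the query never reaches `p4`, so the only relevant document is missed; this is the low-recall effect for unpopular queries noted on the slide.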

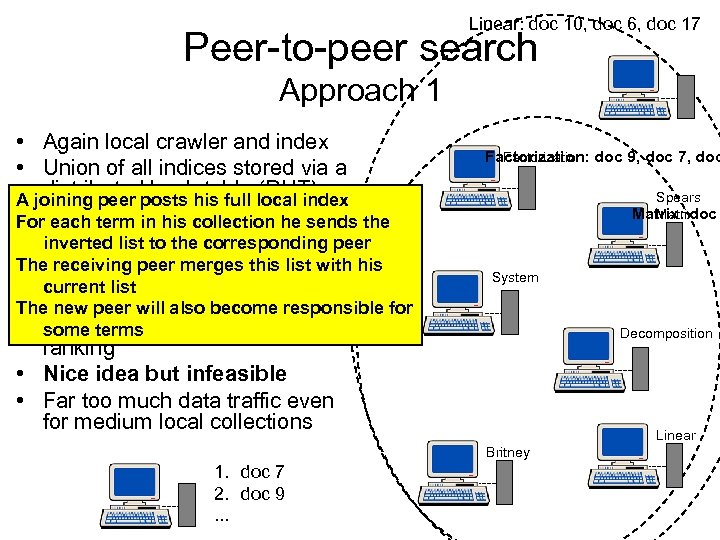

Peer-to-peer search: Approach 1

- Again local crawler and index
- Union of all indices stored via a distributed hash table (DHT)
- Each peer responsible for a few terms (i.e. keys in the DHT)
- To search for "matrix factorization", retrieve the corresponding document lists and merge them to give the result ranking
- Nice idea but infeasible
- Far too much data traffic even for medium local collections

When a peer joins:

- A joining peer posts his full local index
- For each term in his collection he sends the inverted list to the corresponding peer
- The receiving peer merges this list with his current list
- The new peer will also become responsible for some terms

Figure labels: terms Factorization, Spears, Matrix, System, Decomposition, Linear, Britney; inverted lists "Linear: doc 10, doc 6, doc 17", "Factorization: doc 9, doc 7, doc ...", "Matrix: doc ..."; result "1. doc 7, 2. doc 9, ..."
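A minimal sketch of this scheme, assuming a plain hash-modulo mapping from terms to peers in place of real DHT routing. The function names and the ranking rule (number of matching query terms) are illustrative assumptions, not the method from the talk.

```python
import hashlib

def responsible_peer(term, peers):
    """Map a term (DHT key) to a peer by hashing; a stand-in for real DHT routing."""
    digest = hashlib.sha1(term.encode("utf-8")).hexdigest()
    return peers[int(digest, 16) % len(peers)]

def post_full_index(dht, peers, local_index):
    """A joining peer sends every inverted list to the peer responsible for the term.

    Returns the number of postings sent, as a rough measure of traffic.
    """
    sent = 0
    for term, doc_ids in local_index.items():
        owner = responsible_peer(term, peers)
        merged = dht.setdefault(owner, {}).setdefault(term, set())
        merged.update(doc_ids)  # the receiving peer merges with its current list
        sent += len(doc_ids)
    return sent

def search(dht, peers, query_terms):
    """Fetch the document list of each query term; rank by number of matching terms."""
    scores = {}
    for term in query_terms:
        owner = responsible_peer(term, peers)
        for doc in dht.get(owner, {}).get(term, set()):
            scores[doc] = scores.get(doc, 0) + 1
    return sorted(scores, key=lambda d: (-scores[d], d))

peers = ["peer1", "peer2", "peer3"]
dht = {}
traffic = post_full_index(dht, peers, {"matrix": {"doc 7", "doc 9"}, "factorization": {"doc 7"}})
traffic += post_full_index(dht, peers, {"matrix": {"doc 2"}, "linear": {"doc 10"}})
ranking = search(dht, peers, ["matrix", "factorization"])
```

The value of `traffic` grows with the total number of postings in every local index, which is why the slide calls this approach infeasible even for medium-sized collections.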

![Peer-to-peer search, Approach 2: "Minerva"](https://present5.com/presentation/0abe4a8655032519914030e09c156a00/image-6.jpg)

Peer-to-peer search: Approach 2, "Minerva" [Weikum et al.]

- Again local crawler and index
- Peers share a distributed hash table (DHT)
- Each peer responsible for a few terms (i.e. keys in the DHT)
- For each term we maintain a peer list with statistics
- To search for "matrix factorization", retrieve the peer lists and select the most promising peers
- Send the query to these peers
- The peers perform a local search and return their best results
- Merge the results
- This works but...
  - Performance heavily depends on term-based peer selection
  - Still low recall

When a peer joins:

- A joining peer posts only statistics about his local index
- For each term in his collection he sends the short statistic to the corresponding peer
- The receiving peer merges this statistic with his current peer statistics for this term
- The new peer will also become responsible for some terms

Figure labels: terms Factorization, Spears, Matrix, System, Decomposition, Linear, Britney; statistic "Linear: 20 docs, max tf 10"; peer lists "Factorization: peer 9, peer 13", "Matrix: p..."; query "matrix factorization" sent to "peer 9, peer 7"; local results "doc 2, doc 5, doc 7" and "doc 8, doc 7, doc 11"; merged result "1. doc 7, 2. doc 2, ..."
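The peer selection step can be sketched as follows. The per-term statistic (document count, maximum term frequency) follows the "20 docs, max tf 10" label in the figure, but the scoring rule (summed document counts) and all function names are assumptions made for illustration; this is not Minerva's actual selection method. A single dictionary stands in for the distributed directory.

```python
def post_statistics(directory, peer, local_stats):
    """A joining peer posts one short statistic per term instead of its inverted lists.

    local_stats: dict term -> (number of local docs with the term, max term frequency)
    """
    for term, (num_docs, max_tf) in local_stats.items():
        directory.setdefault(term, {})[peer] = (num_docs, max_tf)

def select_peers(directory, query_terms, k):
    """Rank peers by summed document counts over the query terms (illustrative score)."""
    scores = {}
    for term in query_terms:
        for peer, (num_docs, _max_tf) in directory.get(term, {}).items():
            scores[peer] = scores.get(peer, 0) + num_docs
    return sorted(scores, key=lambda p: (-scores[p], p))[:k]

directory = {}
post_statistics(directory, "peer 9", {"matrix": (12, 5), "factorization": (8, 3)})
post_statistics(directory, "peer 7", {"matrix": (4, 2), "factorization": (6, 4)})
post_statistics(directory, "peer 13", {"factorization": (1, 1), "linear": (20, 10)})
# A peer whose documents only say "decomposition" is invisible to this query.
post_statistics(directory, "peer 2", {"decomposition": (30, 9)})

chosen = select_peers(directory, ["matrix", "factorization"], k=2)
all_candidates = select_peers(directory, ["matrix", "factorization"], k=10)
```

Because selection looks only at the query terms, `peer 2` is never contacted even though its documents may be relevant, which illustrates the "still low recall" point.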

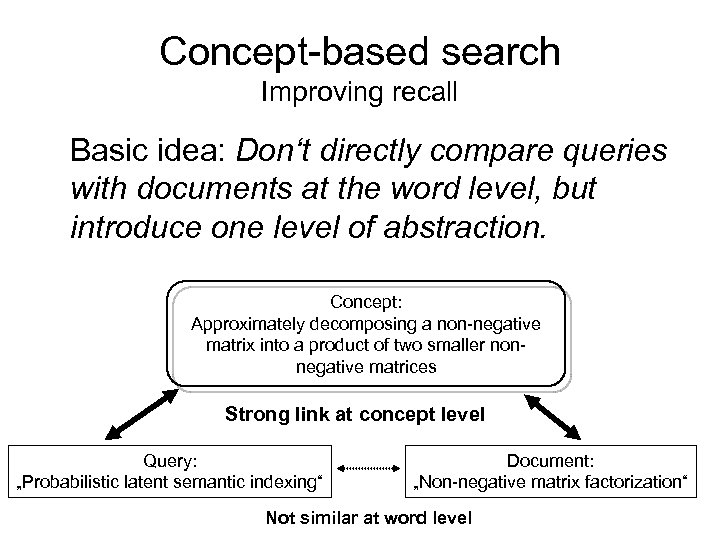

Concept-based search: Improving recall

Basic idea: Don't directly compare queries with documents at the word level, but introduce one level of abstraction.

- Query: "Probabilistic latent semantic indexing"
- Document: "Non-negative matrix factorization"
- Not similar at word level
- Concept: approximately decomposing a non-negative matrix into a product of two smaller non-negative matrices
- Strong link at concept level

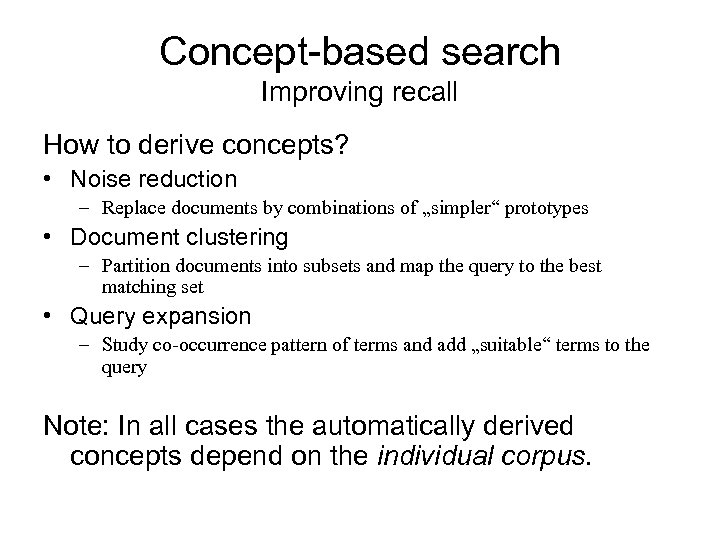

Concept-based search: Improving recall

How to derive concepts?

- Noise reduction: replace documents by combinations of "simpler" prototypes
- Document clustering: partition documents into subsets and map the query to the best matching set
- Query expansion: study co-occurrence patterns of terms and add "suitable" terms to the query

Note: In all cases the automatically derived concepts depend on the individual corpus.
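As one concrete instance of the third option, query expansion from co-occurrence counts might look like the sketch below. The scoring rule (number of documents in which a candidate term co-occurs with a query term) and the function names are assumptions for illustration only.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_counts(docs):
    """Count, for each unordered term pair, the number of documents containing both."""
    counts = Counter()
    for terms in docs:
        for a, b in combinations(sorted(set(terms)), 2):
            counts[(a, b)] += 1
    return counts

def expand_query(query, docs, extra=1):
    """Append the `extra` terms that co-occur most often with the query terms."""
    counts = cooccurrence_counts(docs)
    scores = Counter()
    for (a, b), n in counts.items():
        if a in query and b not in query:
            scores[b] += n
        elif b in query and a not in query:
            scores[a] += n
    ranked = sorted(scores, key=lambda t: (-scores[t], t))
    return list(query) + ranked[:extra]

docs = [
    ["matrix", "factorization", "decomposition"],
    ["matrix", "decomposition", "linear"],
    ["factorization", "decomposition"],
    ["britney", "spears"],
]
expanded = expand_query(["matrix", "factorization"], docs)
```

The added term depends entirely on the toy corpus in `docs`, in line with the note that derived concepts depend on the individual corpus.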

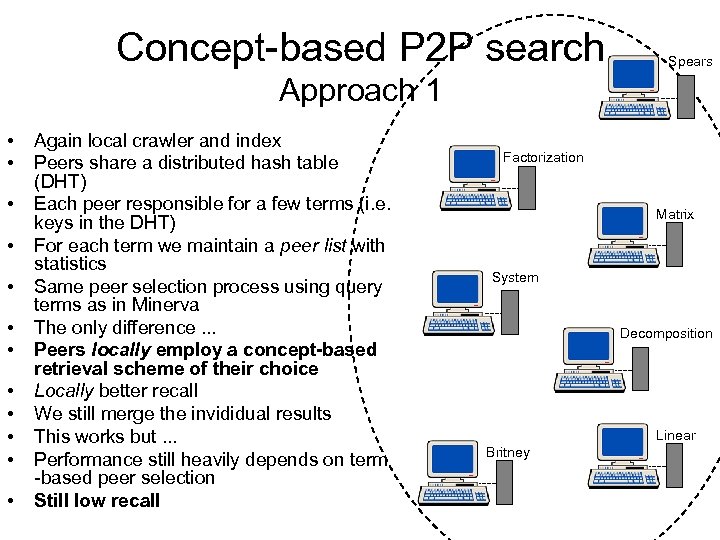

Concept-based P2P search: Approach 1

- Again local crawler and index
- Peers share a distributed hash table (DHT)
- Each peer responsible for a few terms (i.e. keys in the DHT)
- For each term we maintain a peer list with statistics
- Same peer selection process using query terms as in Minerva
- The only difference...
  - Peers locally employ a concept-based retrieval scheme of their choice
  - Locally better recall
- We still merge the individual results
- This works but...
  - Performance still heavily depends on term-based peer selection
  - Still low recall

Figure labels: terms Factorization, Spears, Matrix, System, Decomposition, Linear, Britney

Concept-based P2P search: A blueprint

What we would like to do:

1. Map a query to the most relevant concepts, either individually or with help from peers
2. Find out which peers have documents related to these concepts
3. Send the query to these peers and retrieve a ranking for the concept
4. Merge the results

Difficult, as we have to post summaries of concepts which can then be found by others. Not clear how to universally and uniquely represent concepts.

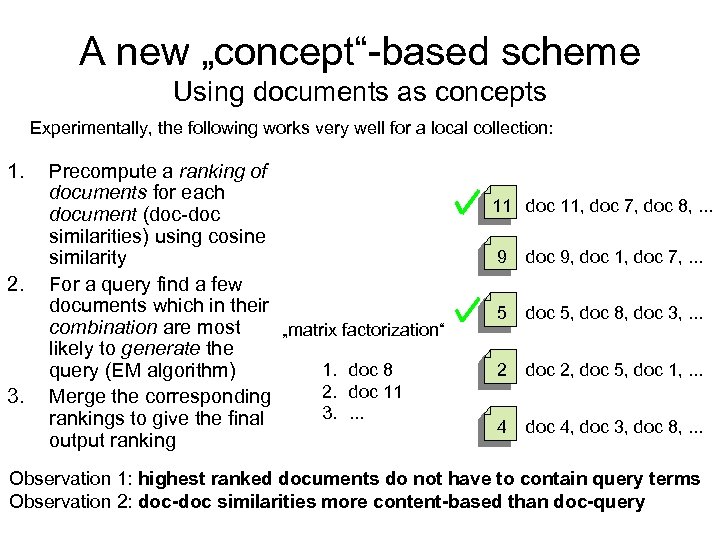

A new "concept"-based scheme: Using documents as concepts

Experimentally, the following works very well for a local collection:

1. Precompute a ranking of documents for each document (doc-doc similarities) using cosine similarity
2. For a query, find a few documents which in their combination are most likely to generate the query (EM algorithm)
3. Merge the corresponding rankings to give the final output ranking

Observation 1: highest ranked documents do not have to contain query terms

Observation 2: doc-doc similarities are more content-based than doc-query similarities

Figure labels: query "matrix factorization"; output ranking "1. doc 8, 2. doc 11, 3. ..."; precomputed rankings "11: doc 11, doc 7, doc 8, ...", "9: doc 9, doc 1, doc 7, ...", "5: doc 5, doc 8, doc 3, ...", "2: doc 2, doc 5, doc 1, ...", "4: doc 4, doc 3, doc 8, ..."
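The three steps can be sketched as below. The slide does not spell out the probabilistic model, so this is one plausible reading under stated assumptions: the query is treated as drawn from a mixture of smoothed per-document term distributions, EM estimates the mixture weights, and rankings are merged by weighting each selected document's cosine similarities. The smoothing constant `eps`, the merge rule, and all names are hypothetical. For brevity the doc-doc similarities are computed on demand rather than precomputed.

```python
import math

def cosine(u, v):
    """Cosine similarity of two sparse term-frequency dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def term_distribution(doc, vocab, eps=0.01):
    """Smoothed P(term | doc), so that no query term has probability zero."""
    total = sum(doc.values()) + eps * len(vocab)
    return {t: (doc.get(t, 0.0) + eps) / total for t in vocab}

def em_mixture_weights(query, docs, iterations=50):
    """EM for weights lam[d] in the mixture P(term) = sum_d lam[d] * P(term | d).

    Assumes a non-empty query and a non-empty collection.
    """
    vocab = {t for d in docs.values() for t in d} | set(query)
    dists = {d: term_distribution(doc, vocab) for d, doc in docs.items()}
    lam = {d: 1.0 / len(docs) for d in docs}
    for _ in range(iterations):
        new = {d: 0.0 for d in docs}
        for term in query:
            # E-step: posterior probability that `term` was generated by document d.
            denom = sum(lam[d] * dists[d][term] for d in docs)
            for d in docs:
                new[d] += lam[d] * dists[d][term] / denom
        # M-step: renormalise the expected counts.
        lam = {d: new[d] / len(query) for d in docs}
    return lam

def concept_search(query, docs, num_concepts=2):
    """Pick the documents most likely to generate the query, then merge their rankings."""
    lam = em_mixture_weights(query, docs)
    concepts = sorted(docs, key=lambda d: (-lam[d], d))[:num_concepts]
    scores = {j: sum(lam[c] * cosine(docs[c], docs[j]) for c in concepts) for j in docs}
    return sorted(scores, key=lambda j: (-scores[j], j))

docs = {
    "doc 8": {"matrix": 2, "factorization": 2, "nonnegative": 1},
    "doc 11": {"nonnegative": 2, "latent": 1, "semantic": 1, "indexing": 1},
    "doc 5": {"britney": 3, "spears": 3},
}
ranking = concept_search(["matrix", "factorization"], docs)
```

In this toy collection "doc 11" contains neither query term, yet it ranks above "doc 5" because it is similar to "doc 8"; this mirrors Observation 1.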

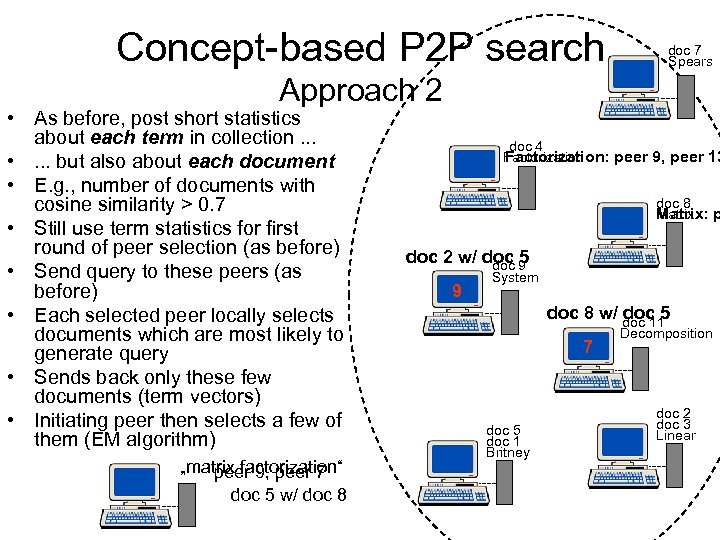

Concept-based P2P search: Approach 2

- As before, post short statistics about each term in collection...
- ... but also about each document
  - E.g., number of documents with cosine similarity > 0.7
- Still use term statistics for first round of peer selection (as before)
- Send query to these peers (as before)
- Each selected peer locally selects documents which are most likely to generate the query
- Sends back only these few documents (term vectors)
- Initiating peer then selects a few of them (EM algorithm)

Figure labels: terms Factorization, Spears, Matrix, System, Decomposition, Linear, Britney; peer lists "Factorization: peer 9, peer 13", "Matrix: p..."; query "matrix factorization" sent to "peer 9, peer 7"; returned candidates "doc 5 w/ doc 8", "doc 2 w/ doc 9", "doc 8 w/ doc 11"

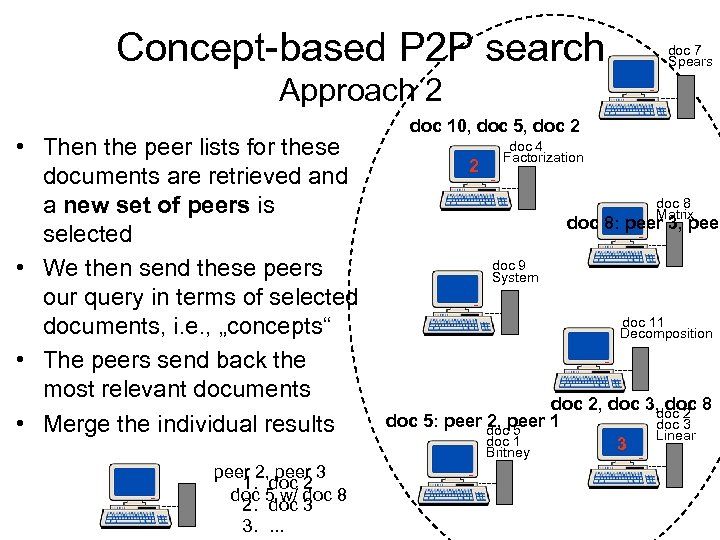

Concept-based P2P search: Approach 2

- Then the peer lists for these documents are retrieved and a new set of peers is selected
- We then send these peers our query in terms of selected documents, i.e., "concepts"
- The peers send back the most relevant documents
- Merge the individual results

Figure labels: terms Factorization, Spears, Matrix, System, Decomposition, Linear, Britney; selected concept "doc 5 w/ doc 8"; peer lists "doc 8: peer 3, peer ...", "doc 5: peer ..."; selected peers "peer 2, peer 3"; local results "doc 10, doc 5, doc 2" and "doc 2, doc 3, doc 8"; merged result "1. doc 2, 2. doc 3, 3. ..."
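The second round of peer selection can be sketched with a document-keyed directory. The slides do not say how peers learn about each other's documents or how the statistics are combined, so everything here is an assumption: the per-document statistic is the number of local documents with cosine similarity above 0.7 (the example given on the slide), peers are scored by summing these counts, and a single dictionary stands in for the DHT.

```python
def post_document_statistics(doc_directory, peer, similar_counts):
    """Post, per document key, how many local docs have cosine similarity > 0.7 to it.

    similar_counts: dict doc_id -> count of sufficiently similar local documents
    """
    for doc_id, count in similar_counts.items():
        if count > 0:
            doc_directory.setdefault(doc_id, {})[peer] = count

def select_peers_by_concepts(doc_directory, concept_docs, k):
    """Rank peers by the summed counts over the selected concept documents."""
    scores = {}
    for doc_id in concept_docs:
        for peer, count in doc_directory.get(doc_id, {}).items():
            scores[peer] = scores.get(peer, 0) + count
    return sorted(scores, key=lambda p: (-scores[p], p))[:k]

doc_directory = {}
post_document_statistics(doc_directory, "peer 2", {"doc 5": 4, "doc 8": 1})
post_document_statistics(doc_directory, "peer 3", {"doc 8": 3})
post_document_statistics(doc_directory, "peer 1", {"doc 5": 1, "doc 9": 0})

# Concepts chosen by the initiating peer after the first round (hypothetical values).
second_round = select_peers_by_concepts(doc_directory, ["doc 5", "doc 8"], k=2)
```

Unlike the first round, this selection does not depend on the query terms at all, so peers whose documents use different vocabulary can still be reached.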

Concept-based P2P search: Advantages of our approach

- Users only have to "agree" on documents
  - No need for common taxonomy
- If we can find some relevant documents we can find more => increases recall
  - Allows content-based "More documents like this" button
- Uses non-trivial doc-doc similarities
  - Infeasible to compute for 8 billion documents, easy to do for a few thousand
