
- Number of slides: 27

Computing tools and analysis architectures: the CMS computing strategy
M. Paganoni
HCP 2007, La Biodola, 23/5/2007


Outline
- CMS Computing and Analysis Model
- CMS workflow components
- 25% capacity test (CSA06 challenge)
- CMSSW validation
- LoadTest07, Site Availability Monitor and Grid gLite 3.1
- The goals for 2007
  - Physics validation with high statistics
  - Full detector readout during commissioning
  - 50% capacity test (CSA07 challenge)
- Analysis workflow


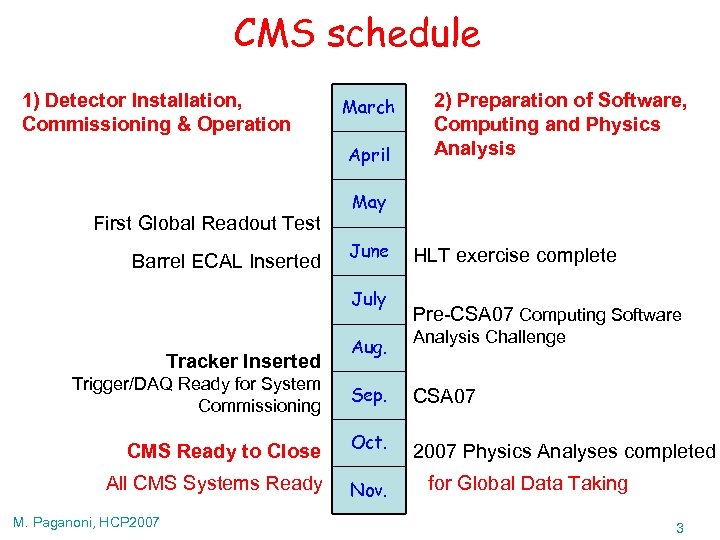

CMS schedule
1) Detector Installation, Commissioning & Operation
2) Preparation of Software, Computing and Physics Analysis
[Timeline figure, March to November 2007. Milestones in extracted order: First Global Readout Test; Barrel ECAL Inserted; Tracker Inserted; Trigger/DAQ Ready for System Commissioning; HLT exercise complete; Pre-CSA07; Computing Software Analysis Challenge (CSA07); CMS Ready to Close; All CMS Systems Ready; 2007 Physics Analyses completed; Global Data Taking]


The present status of CMS computing
- From development
  - service/data challenges (both WLCG-wide and experiment-specific) of increasing scale and complexity
- to operations
  - data distribution
  - MC production
  - physics analysis
- Primary needs:
  - Smoothly running Tier-1s and Tier-2s, concurrent with other experiments
  - Streamlined and automatic operations to ease the operational load
  - Full monitoring for early detection of Grid and site problems, and to reach stability
  - Sustainable operations in terms of DM, WM, user support, site configuration and availability, continuous significant load


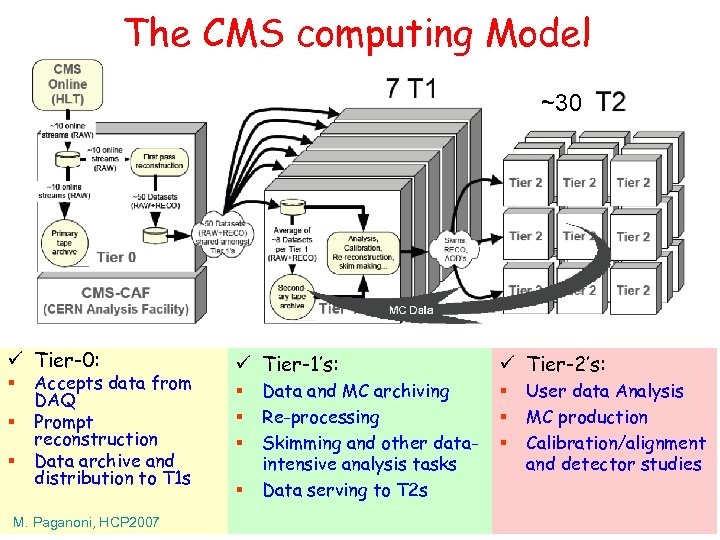

The CMS computing model
[Figure label: ~30]
- Tier-0:
  - Accepts data from DAQ
  - Prompt reconstruction
  - Data archive and distribution to T1s
- Tier-1s:
  - Data and MC archiving
  - Re-processing
  - Skimming and other data-intensive analysis tasks
  - Data serving to T2s
- Tier-2s:
  - User data analysis
  - MC production
  - Calibration/alignment and detector studies


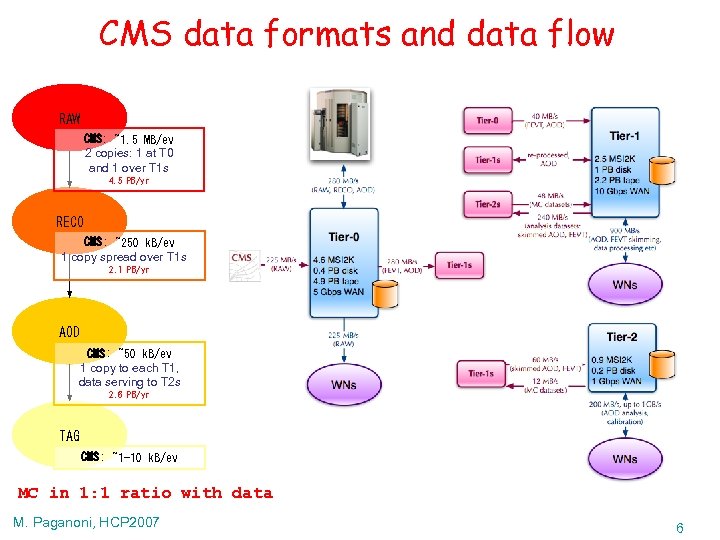

CMS data formats and data flow
- RAW (CMS): ~1.5 MB/ev; 2 copies: 1 at T0 and 1 over T1s; 4.5 PB/yr
- RECO (CMS): ~250 kB/ev; 1 copy spread over T1s; 2.1 PB/yr
- AOD (CMS): ~50 kB/ev; 1 copy to each T1, data serving to T2s; 2.6 PB/yr
- TAG (CMS): ~1-10 kB/ev
- MC in 1:1 ratio with data
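As a rough cross-check of the RAW figures above, the yearly event count they imply can be worked out directly. This is only a sketch: it assumes decimal units (1 MB = 10^6 bytes, 1 PB = 10^15 bytes) and that the 4.5 PB/yr total counts both RAW copies. Neither assumption is stated on the slide, and the function name is illustrative. The RECO and AOD totals are not derived here, because the slide does not give the inputs needed to reproduce them.

```python
MB = 1e6   # assumed decimal megabyte, in bytes
PB = 1e15  # assumed decimal petabyte, in bytes


def implied_events_per_year(volume_bytes, event_size_bytes, copies):
    """Events per year implied by a yearly volume, an event size and a copy count."""
    return volume_bytes / (event_size_bytes * copies)


# RAW: ~1.5 MB/ev, 2 copies, 4.5 PB/yr (numbers from the slide)
raw_events = implied_events_per_year(4.5 * PB, 1.5 * MB, copies=2)
print(f"Implied RAW events per year: {raw_events:.2e}")
```

Under these assumptions the RAW numbers correspond to about 1.5 billion events per year.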


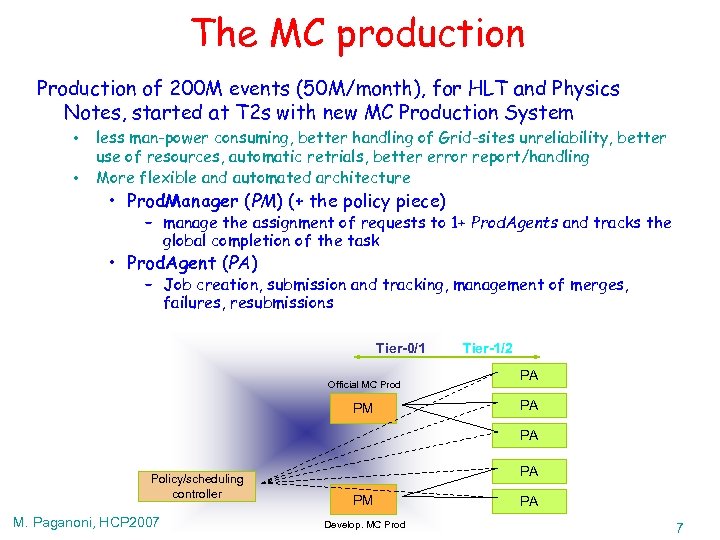

The MC production
- Production of 200 M events (50 M/month), for HLT and Physics Notes, started at T2s with the new MC Production System
  - less manpower-consuming, better handling of Grid-site unreliability, better use of resources, automatic retries, better error reporting/handling
- More flexible and automated architecture
  - ProdManager (PM) (+ the policy piece): manages the assignment of requests to one or more ProdAgents and tracks the global completion of the task
  - ProdAgent (PA): job creation, submission and tracking; management of merges, failures, resubmissions
[Diagram labels: Policy/scheduling controller; Official MC Prod (PM); Develop. MC Prod (PM); PAs at Tier-0/1 and Tier-1/2]
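The division of labour between the manager and the agents can be illustrated with a toy model. This is not the CMS production system code: the class names only mirror the roles named on the slide, and the round-robin assignment, the per-attempt failure probability and the retry limit are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ProdAgent:
    """Toy agent: runs jobs and resubmits failures up to max_attempts times."""
    name: str
    failure_rate: float  # assumed probability that a single attempt fails
    max_attempts: int = 3
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def run_job(self) -> bool:
        # Resubmit until the job succeeds or the attempts are exhausted.
        for _ in range(self.max_attempts):
            if self.rng.random() >= self.failure_rate:
                return True
        return False


class ProdManager:
    """Toy manager: assigns jobs to agents and tracks global completion."""

    def __init__(self, agents):
        self.agents = agents
        self.done = 0
        self.failed = 0

    def process(self, n_jobs):
        for i in range(n_jobs):
            agent = self.agents[i % len(self.agents)]  # round-robin (assumed policy)
            if agent.run_job():
                self.done += 1
            else:
                self.failed += 1
        return self.done, self.failed


manager = ProdManager([ProdAgent("pa1", 0.2), ProdAgent("pa2", 0.0)])
done, failed = manager.process(100)
print(f"completed={done} failed={failed}")
```

Keeping retries inside the agent means the manager only sees final outcomes, which is one way to read "tracks the global completion of the task".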


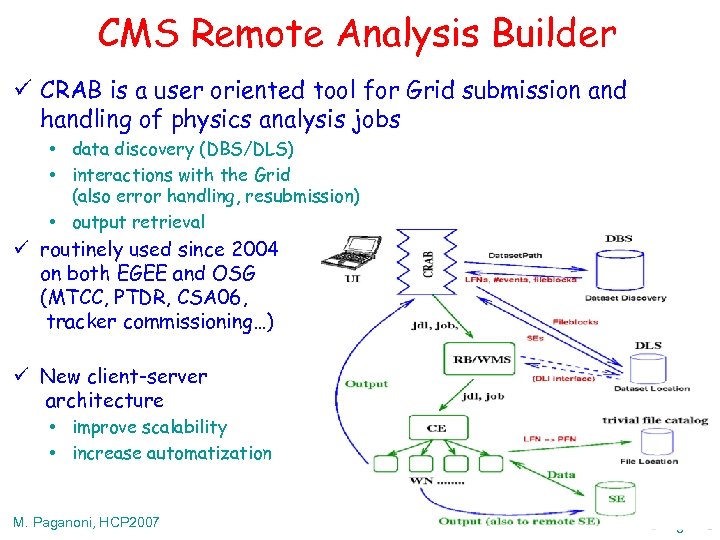

CMS Remote Analysis Builder
- CRAB is a user-oriented tool for Grid submission and handling of physics analysis jobs
  - data discovery (DBS/DLS)
  - interactions with the Grid (including error handling and resubmission)
  - output retrieval
- Routinely used since 2004 on both EGEE and OSG (MTCC, PTDR, CSA06, tracker commissioning…)
- New client-server architecture
  - improves scalability
  - increases automation


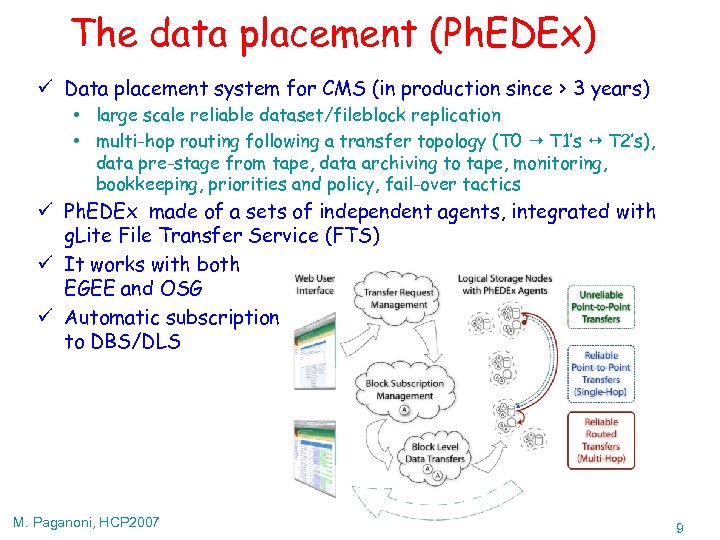

The data placement (PhEDEx)
- Data placement system for CMS (in production for more than 3 years)
  - large-scale reliable dataset/fileblock replication
  - multi-hop routing following a transfer topology (T0 → T1s → T2s), data pre-staging from tape, data archiving to tape, monitoring, bookkeeping, priorities and policy, fail-over tactics
- PhEDEx is made of a set of independent agents, integrated with the gLite File Transfer Service (FTS)
- It works with both EGEE and OSG
- Automatic subscription to DBS/DLS
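Multi-hop routing with fail-over can be sketched as a shortest-path search over the transfer topology. The sketch below uses a plain breadth-first search and is not a description of how PhEDEx itself chooses routes. The site names, the link table and the `down` parameter are hypothetical.

```python
from collections import deque

# Hypothetical topology: each site lists the sites it can send data to.
TOPOLOGY = {
    "T0": ["T1_A", "T1_B"],
    "T1_A": ["T1_B", "T2_X", "T2_Y"],
    "T1_B": ["T1_A", "T2_Y"],
    "T2_X": [],
    "T2_Y": [],
}


def route(topology, source, destination, down=frozenset()):
    """Return the path with the fewest hops, skipping sites listed in `down`."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == destination:
            return path
        for nxt in topology.get(node, []):
            if nxt not in seen and nxt not in down:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no usable route


print(route(TOPOLOGY, "T0", "T2_Y"))
print(route(TOPOLOGY, "T0", "T2_Y", down={"T1_A"}))
```

With all sites up, the search reaches `T2_Y` through `T1_A`; with `T1_A` marked as down it falls back to `T1_B`, which is the fail-over behaviour in miniature.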


Data processing workflow


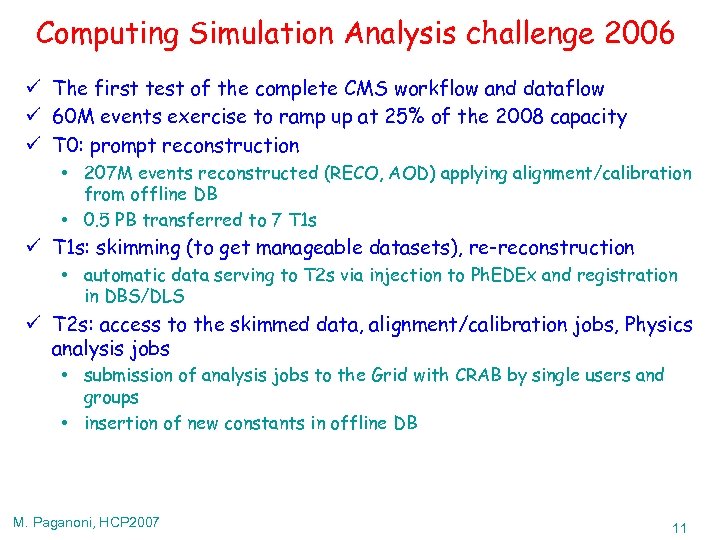

Computing, Software and Analysis challenge 2006 (CSA06)
- The first test of the complete CMS workflow and dataflow
- 60 M events exercise to ramp up to 25% of the 2008 capacity
- T0: prompt reconstruction
  - 207 M events reconstructed (RECO, AOD), applying alignment/calibration from the offline DB
  - 0.5 PB transferred to 7 T1s
- T1s: skimming (to get manageable datasets), re-reconstruction
  - automatic data serving to T2s via injection into PhEDEx and registration in DBS/DLS
- T2s: access to the skimmed data, alignment/calibration jobs, physics analysis jobs
  - submission of analysis jobs to the Grid with CRAB by single users and groups
  - insertion of new constants into the offline DB


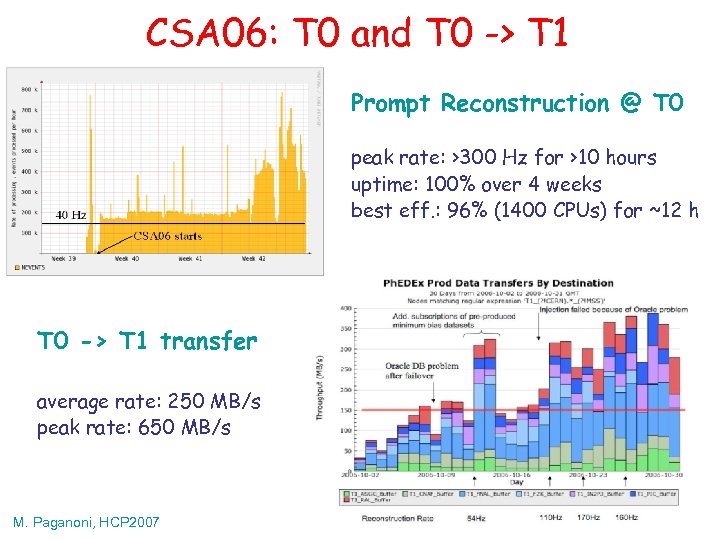

CSA06: T0 and T0 -> T1
- Prompt reconstruction @ T0
  - peak rate: >300 Hz for >10 hours
  - uptime: 100% over 4 weeks
  - best efficiency: 96% (1400 CPUs) for ~12 h
- T0 -> T1 transfer
  - average rate: 250 MB/s
  - peak rate: 650 MB/s


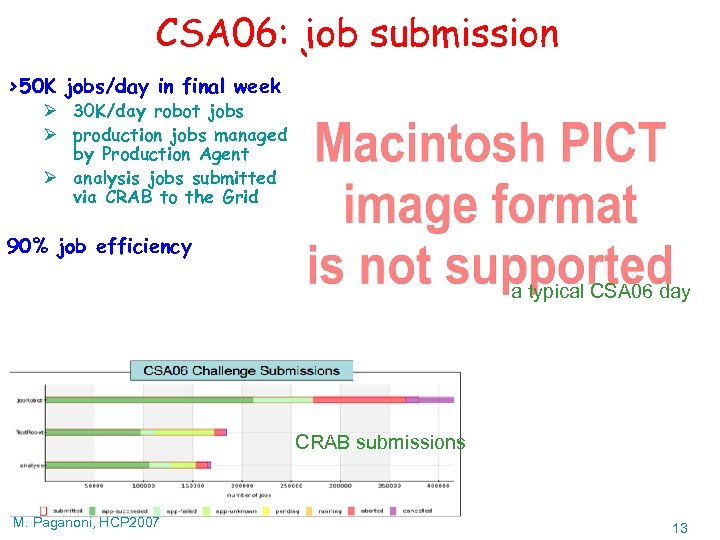

CSA06: job submission
- >50 K jobs/day in the final week
  - 30 K/day robot jobs
  - production jobs managed by the Production Agent
  - analysis jobs submitted via CRAB to the Grid
- 90% job efficiency
[Plot captions: a typical CSA06 day; CRAB submissions]


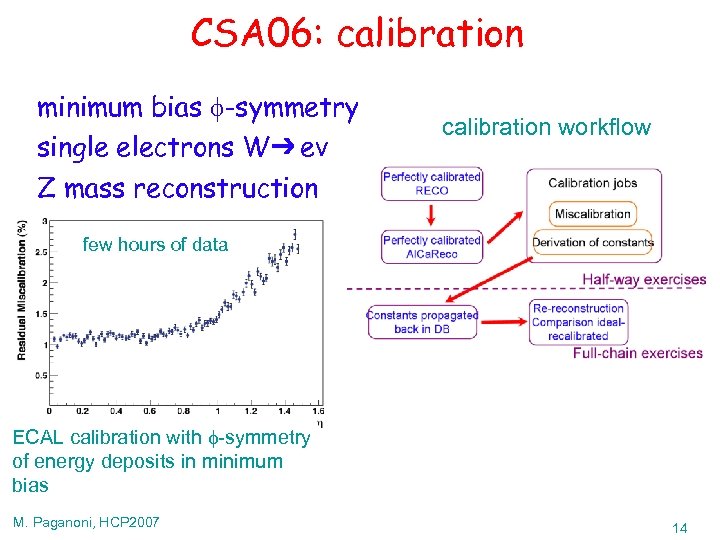

CSA06: calibration
- Calibration workflow
  - minimum bias: φ-symmetry
  - single electrons: W → eν
  - Z mass reconstruction
- ECAL calibration with φ-symmetry of energy deposits in minimum bias events (few hours of data)


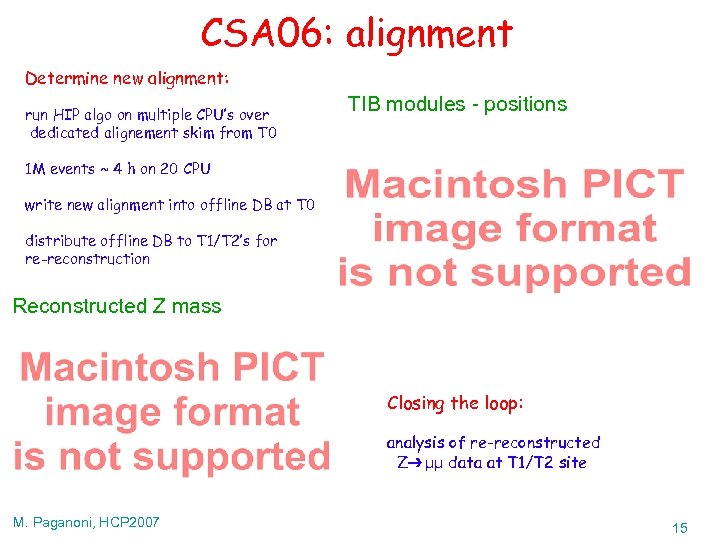

CSA06: alignment
- Determine the new alignment: run the HIP algorithm on multiple CPUs over a dedicated alignment skim from T0
  - 1 M events, ~4 h on 20 CPUs
- Write the new alignment into the offline DB at T0
- Distribute the offline DB to T1/T2s for re-reconstruction
- Closing the loop: analysis of re-reconstructed Z → μμ data at a T1/T2 site
[Plot captions: TIB modules - positions; Reconstructed Z mass]


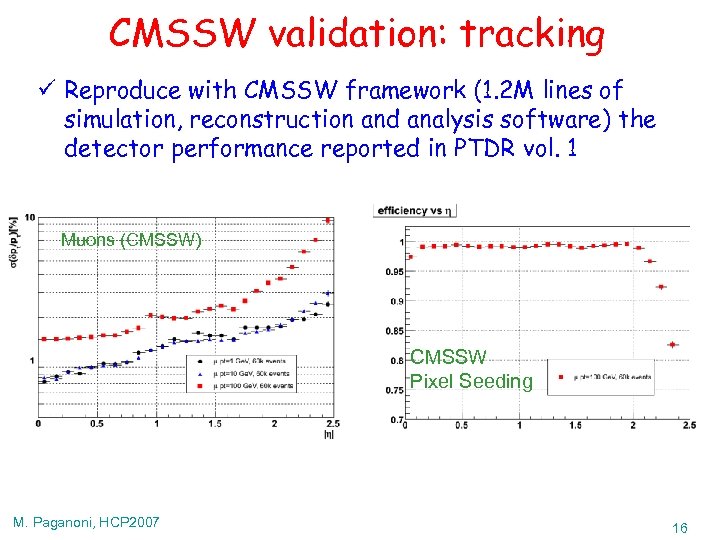

CMSSW validation: tracking
- Reproduce with the CMSSW framework (1.2 M lines of simulation, reconstruction and analysis software) the detector performance reported in PTDR vol. 1
[Plot captions: Muons (CMSSW); CMSSW Pixel Seeding]


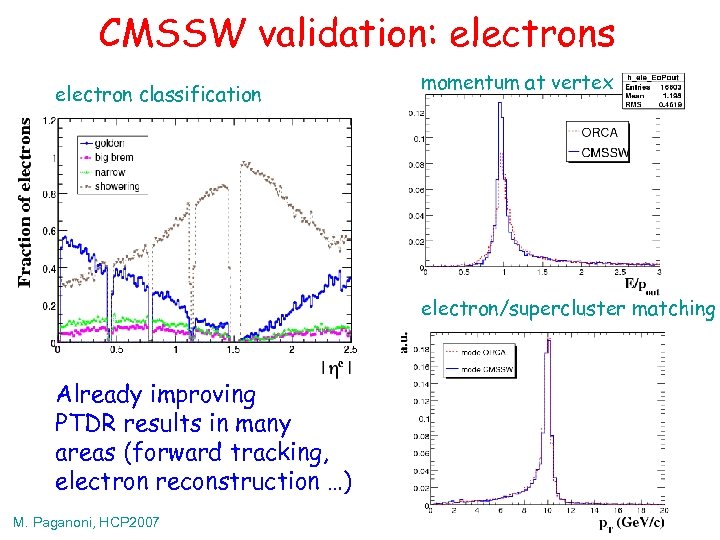

CMSSW validation: electrons
[Plot captions: electron classification; momentum at vertex; electron/supercluster matching]
- Already improving on PTDR results in many areas (forward tracking, electron reconstruction…)


Site Availability Monitor
- Measure the site availability by testing:
  - Analysis submission
  - Production
  - Database caching
  - Data transfer
- With the Site Availability Monitor (SAM) infrastructure, developed in collaboration with LCG and CERN/IT
- The goal is 90% for T1s and 80% for T2s
- Tests now run at each EGEE site every 2 hours
- 5 CMS-specific tests, more under development
- Feedback to site administrators and targeting of individual components
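How test results could be turned into an availability figure and compared with the goals can be sketched as follows. The slide does not define availability, so the definition used here (the fraction of test rounds in which every test passed) is an assumption. The test names and helper functions are also illustrative and are not the SAM interface.

```python
GOALS = {"T1": 0.90, "T2": 0.80}  # availability goals quoted on the slide

# Illustrative test names, loosely following the four areas listed above.
TESTS = ("analysis_submission", "production", "db_caching", "data_transfer")


def make_round(failed=()):
    """One test round: maps each test name to True (passed) or False (failed)."""
    return {test: test not in failed for test in TESTS}


def availability(rounds):
    """Assumed definition: fraction of rounds in which every test passed."""
    if not rounds:
        return 0.0
    good = sum(1 for result in rounds if all(result.values()))
    return good / len(rounds)


def meets_goal(tier, rounds):
    return availability(rounds) >= GOALS[tier]


# One day at a 2-hour cadence gives 12 rounds; here one round has a failed transfer test.
day = [make_round() for _ in range(11)] + [make_round(failed=("data_transfer",))]
print(f"availability={availability(day):.3f} T1 goal met: {meets_goal('T1', day)}")
```

Recording results per test, rather than only per round, is what would allow feedback to target an individual component such as data transfer.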


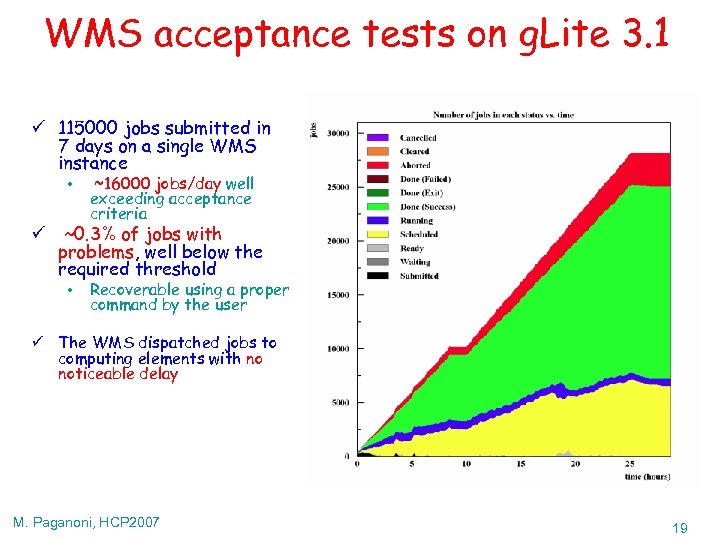

WMS acceptance tests on gLite 3.1
- 115000 jobs submitted in 7 days on a single WMS instance
  - ~16000 jobs/day, well exceeding the acceptance criteria
- ~0.3% of jobs with problems, well below the required threshold
  - Recoverable by the user with the appropriate command
- The WMS dispatched jobs to computing elements with no noticeable delay
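The quoted daily rate and the number of problematic jobs follow from the totals by simple arithmetic. The variable names are illustrative, and 0.3% is taken as exact although the slide gives it as approximate.

```python
jobs_total = 115_000      # jobs submitted (from the slide)
days = 7                  # duration of the test (from the slide)
problem_fraction = 0.003  # ~0.3% of jobs with problems, treated as exact here

jobs_per_day = jobs_total / days
problem_jobs = jobs_total * problem_fraction

print(f"{jobs_per_day:.0f} jobs/day, about {problem_jobs:.0f} jobs with problems")
```

The average is about 16400 jobs/day, in line with the "~16000 jobs/day" on the slide, and 0.3% corresponds to roughly 345 jobs over the week.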


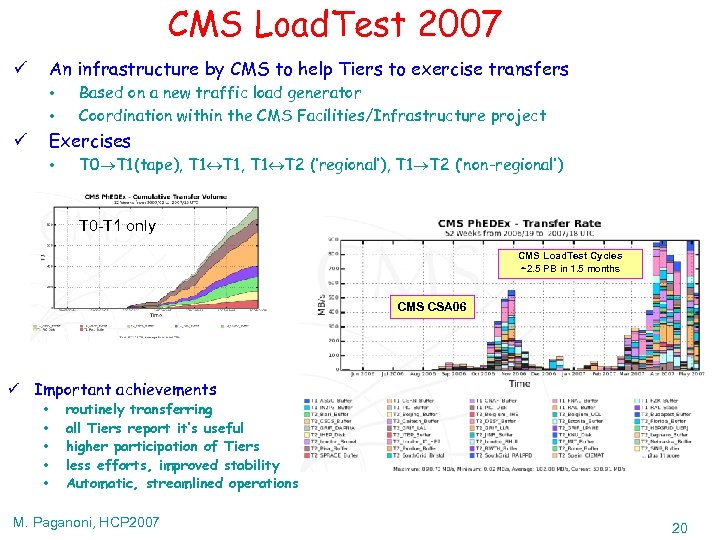

CMS LoadTest 2007
- An infrastructure by CMS to help Tiers exercise transfers
  - Based on a new traffic load generator
  - Coordination within the CMS Facilities/Infrastructure project
- Exercises
  - T0-T1 (tape), T1-T1, T1-T2 ('regional'), T1-T2 ('non-regional')
- Important achievements
  - routinely transferring
  - all Tiers report it's useful
  - higher participation of Tiers
  - less effort, improved stability
- Automatic, streamlined operations
[Plot labels: T0-T1 only; CMS LoadTest cycles; CMS CSA06; ~2.5 PB in 1.5 months]
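The "~2.5 PB in 1.5 months" label can be converted into an average aggregate transfer rate. The sketch assumes decimal units and takes 1.5 months as 45 days, neither of which is stated on the slide. The function name is illustrative.

```python
PB = 1e15               # assumed decimal petabyte, in bytes
MB = 1e6                # assumed decimal megabyte, in bytes
SECONDS_PER_DAY = 86_400


def average_rate_mb_s(volume_bytes, days):
    """Average transfer rate in MB/s for a volume moved over a number of days."""
    return volume_bytes / (days * SECONDS_PER_DAY) / MB


# ~2.5 PB in 1.5 months, with 1.5 months approximated as 45 days (assumption)
loadtest_rate = average_rate_mb_s(2.5 * PB, days=45)
print(f"Average LoadTest rate: {loadtest_rate:.0f} MB/s")
```

Under these assumptions the average comes out at roughly 640 MB/s, summed over all participating links.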


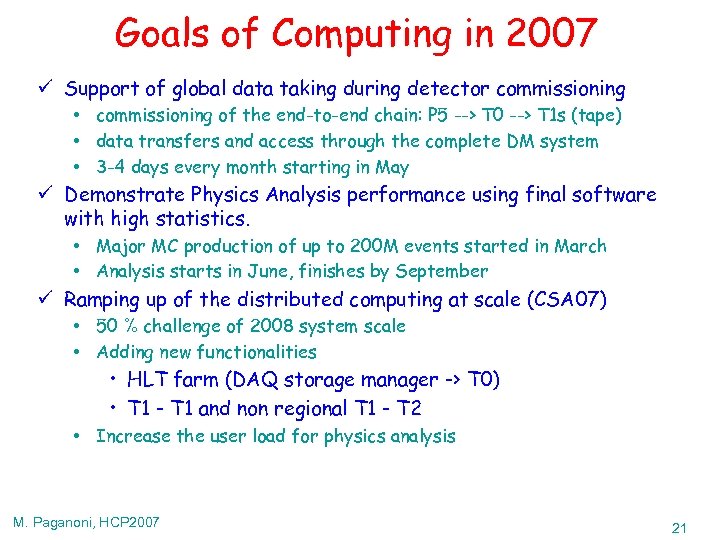

Goals of Computing in 2007
- Support of global data taking during detector commissioning
  - commissioning of the end-to-end chain: P5 --> T0 --> T1s (tape)
  - data transfers and access through the complete DM system
  - 3-4 days every month starting in May
- Demonstrate physics analysis performance using final software with high statistics
  - Major MC production of up to 200 M events started in March
  - Analysis starts in June, finishes by September
- Ramping up of the distributed computing at scale (CSA07)
  - 50% challenge of 2008 system scale
  - Adding new functionalities
    - HLT farm (DAQ storage manager -> T0)
    - T1-T1 and non-regional T1-T2
  - Increase the user load for physics analysis


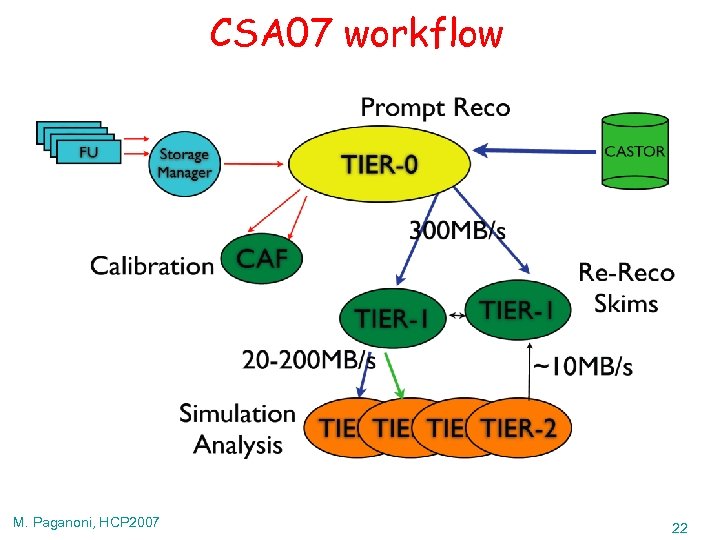

CSA07 workflow


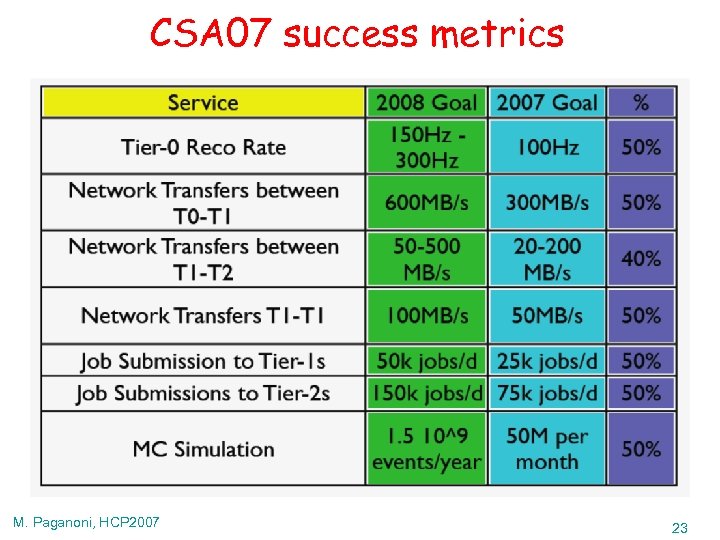

CSA07 success metrics


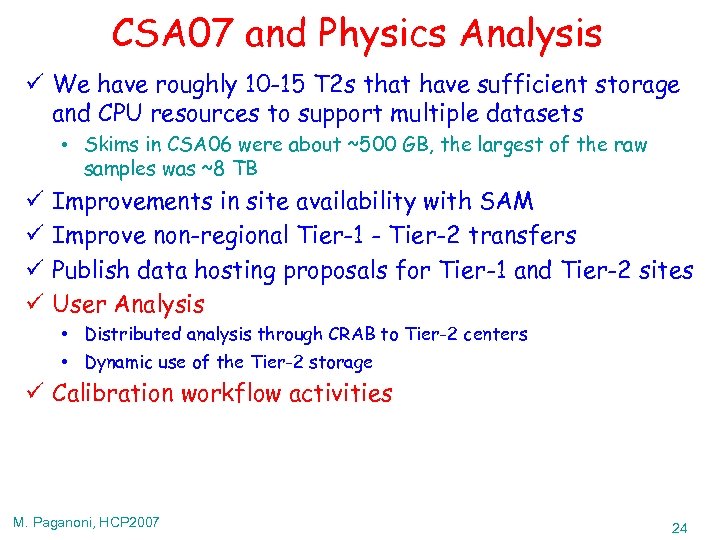

CSA07 and Physics Analysis
- We have roughly 10-15 T2s that have sufficient storage and CPU resources to support multiple datasets
  - Skims in CSA06 were about 500 GB; the largest of the raw samples was ~8 TB
- Improvements in site availability with SAM
- Improve non-regional Tier-1 - Tier-2 transfers
- Publish data hosting proposals for Tier-1 and Tier-2 sites
- User analysis
  - Distributed analysis through CRAB to Tier-2 centers
  - Dynamic use of the Tier-2 storage
- Calibration workflow activities


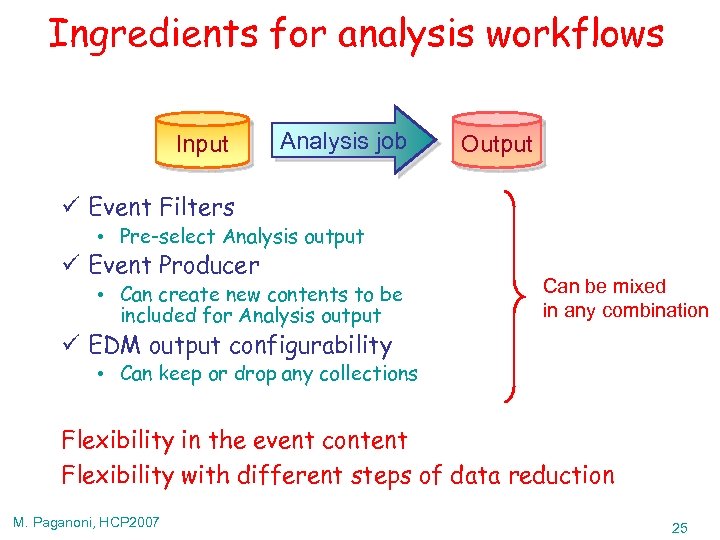

Ingredients for analysis workflows
[Diagram: Input -> Analysis job -> Output]
- Event Filters
  - Pre-select analysis output
- Event Producers
  - Can create new content to be included in the analysis output
- Can be mixed in any combination
- EDM output configurability
  - Can keep or drop any collections
- Flexibility in the event content
- Flexibility with different steps of data reduction
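The three ingredients above (filters, producers, and a keep/drop choice on the output) can be illustrated with a toy job in plain Python. This is not the CMSSW configuration language or its API. Events are modelled as dictionaries, and all function names, collection names and cut values are hypothetical.

```python
def pt_filter(min_pt):
    """Build a filter that accepts events with at least one muon above min_pt."""
    def accept(event):
        return any(pt >= min_pt for pt in event.get("muon_pt", []))
    return accept


def leading_pt_producer(event):
    """Producer: adds a new collection derived from existing event content."""
    event["leading_muon_pt"] = max(event["muon_pt"], default=0.0)


def run_job(events, filters, producers, keep):
    """Apply the filters, run the producers, then keep only the listed collections."""
    output = []
    for event in events:
        event = dict(event)  # work on a copy so the input is left untouched
        if not all(accept(event) for accept in filters):
            continue
        for produce in producers:
            produce(event)
        output.append({name: value for name, value in event.items() if name in keep})
    return output


events = [
    {"muon_pt": [25.0, 8.0], "tracks": 120},
    {"muon_pt": [5.0], "tracks": 80},
    {"muon_pt": [], "tracks": 15},
]
selected = run_job(
    events,
    filters=[pt_filter(20.0)],
    producers=[leading_pt_producer],
    keep={"muon_pt", "leading_muon_pt"},
)
print(selected)
```

Because filters, producers and the keep list are independent arguments, they can be combined freely, and the output of one job can serve as the input of a later, tighter data-reduction step.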


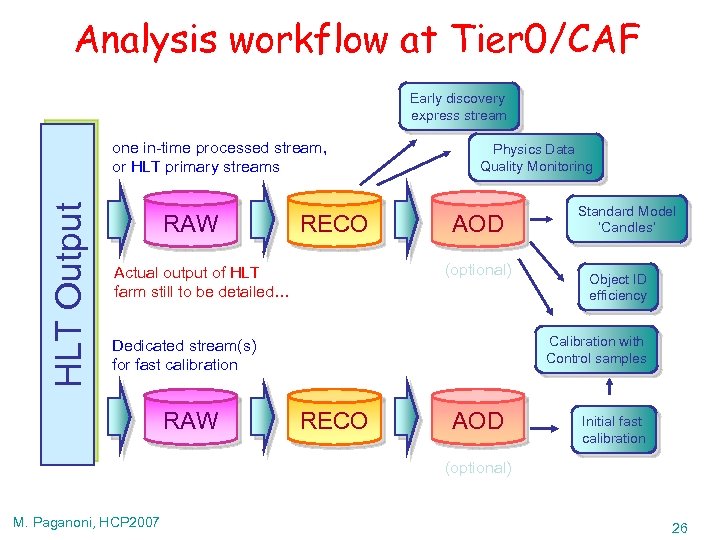

Analysis workflow at Tier-0/CAF
[Diagram labels: HLT output (one in-time processed stream, or HLT primary streams; actual output of the HLT farm still to be detailed…); early discovery express stream; RAW, RECO, AOD (optional); Physics Data Quality Monitoring; object ID efficiency; calibration with control samples; dedicated stream(s) for fast calibration; Standard Model 'candles'; initial fast calibration (optional)]


Conclusions
- Commissioning and integration remain major tasks in 2007
  - Balancing the needs of physics, computing and the detector will be a logistics challenge
- Transition to operations has started. Scaling to production level while keeping high efficiency is the critical point
  - Continuous effort, to be monitored in detail
- Keep the analysis model as flexible as possible
- An increasing number of CMS people will be involved in the facilities, commissioning and operations to prepare for CMS physics analysis
