2ec7635a19db4f8cff1f5e8b2fe06dd1.ppt

- Number of slides: 30

Computing Basics within CMS
J-Term at LPC, 01/12/06
Oliver Gutsche, USCMS / Fermilab

CMS: Introduction

- Physics challenge
- Experimental challenge (detector, machine)
- But also: computational challenge

CMS computing

- Goal: enable physicists to analyze CMS data
- Task: provide analysis access to a very large data volume
- “Analysis of CMS data will be different from what we know from previous HEP experiments”

This talk is meant to give a brief overview of CMS computing from the user perspective.

01/12/06 Oliver Gutsche - Computing Basics within CMS

Outline

- CMS, the computational challenge
  - Data sets
  - Data types and volumes
  - Resource requirements
- The GRID and the Tiers
  - The CMS Tier structure
  - USCMS contribution
- How is the user going to analyze CMS data?
  - The user analysis tool CRAB

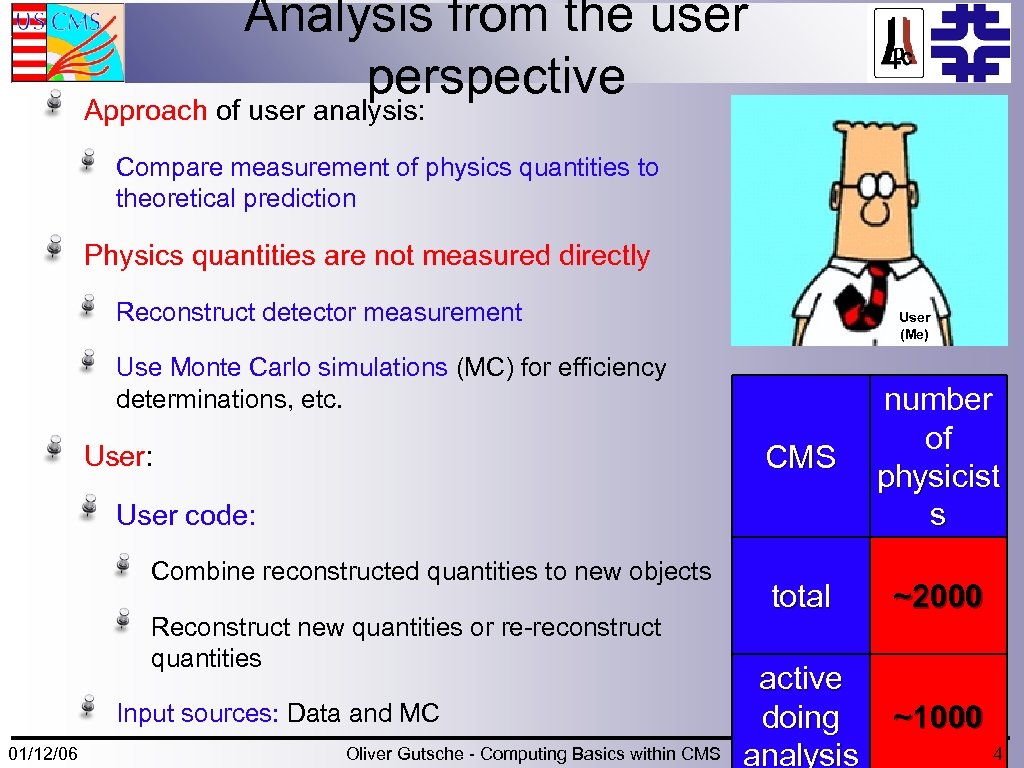

Analysis from the user perspective

- Approach of user analysis: compare measurements of physics quantities to theoretical predictions
  - Physics quantities are not measured directly
  - Reconstruct the detector measurement
  - Use Monte Carlo simulations (MC) for efficiency determinations, etc.
- CMS users: ~2000 physicists in total, ~1000 actively doing analysis
- User code:
  - Combine reconstructed quantities into new objects
  - Reconstruct new quantities or re-reconstruct quantities
  - Input sources: data and MC

CMS computing

Provide all CMS users with

- Access to reconstructed data
- Access to simulated and reconstructed MC
- Access to sufficient computing power to execute user code

The computational infrastructure should be available

- Independent of the location of the user (international collaboration)
- On a fair-share basis

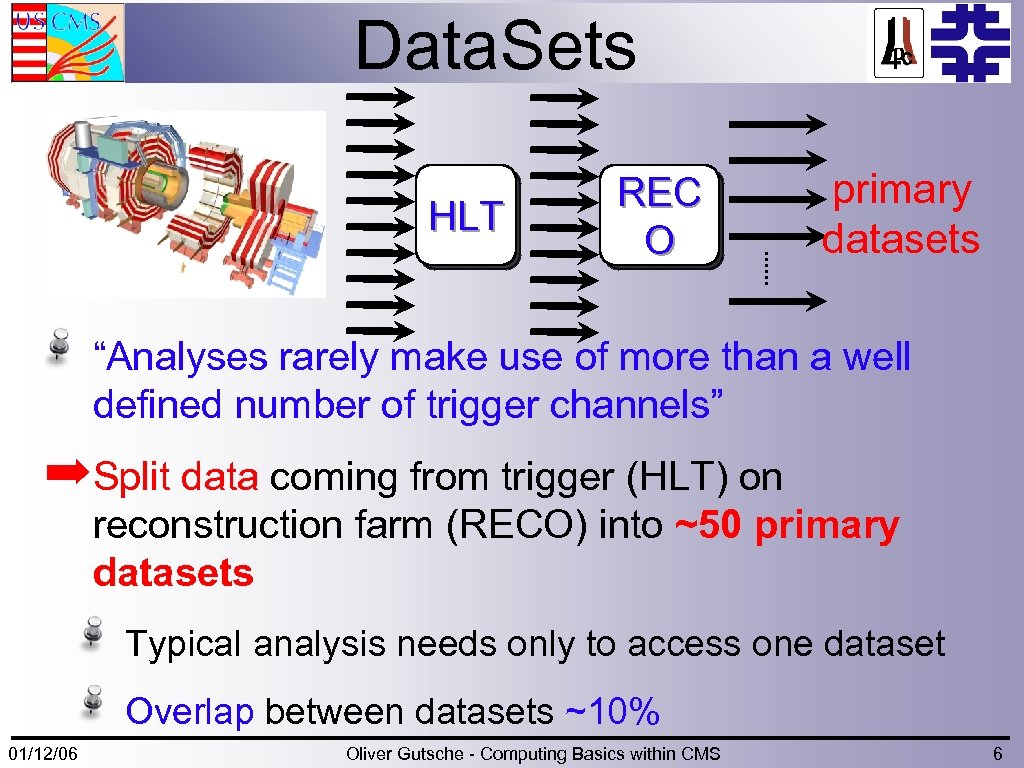

Data Sets

- “Analyses rarely make use of more than a well defined number of trigger channels”
- ➡ Split the data coming from the trigger (HLT) on the reconstruction farm (RECO) into ~50 primary datasets
- A typical analysis needs to access only one dataset
- Overlap between datasets: ~10%

Diagram labels: HLT, RECO, primary datasets

First approach

- Concentrate computing at the origin of the data (CERN)
- Provide network access to the computing infrastructure

In the following: an order-of-magnitude overview of the required computing resources in terms of

- Data volume (mass storage)
- Reconstruction resources (CPU)
- Simulation resources (CPU)
- Analysis resources (CPU and mass storage)

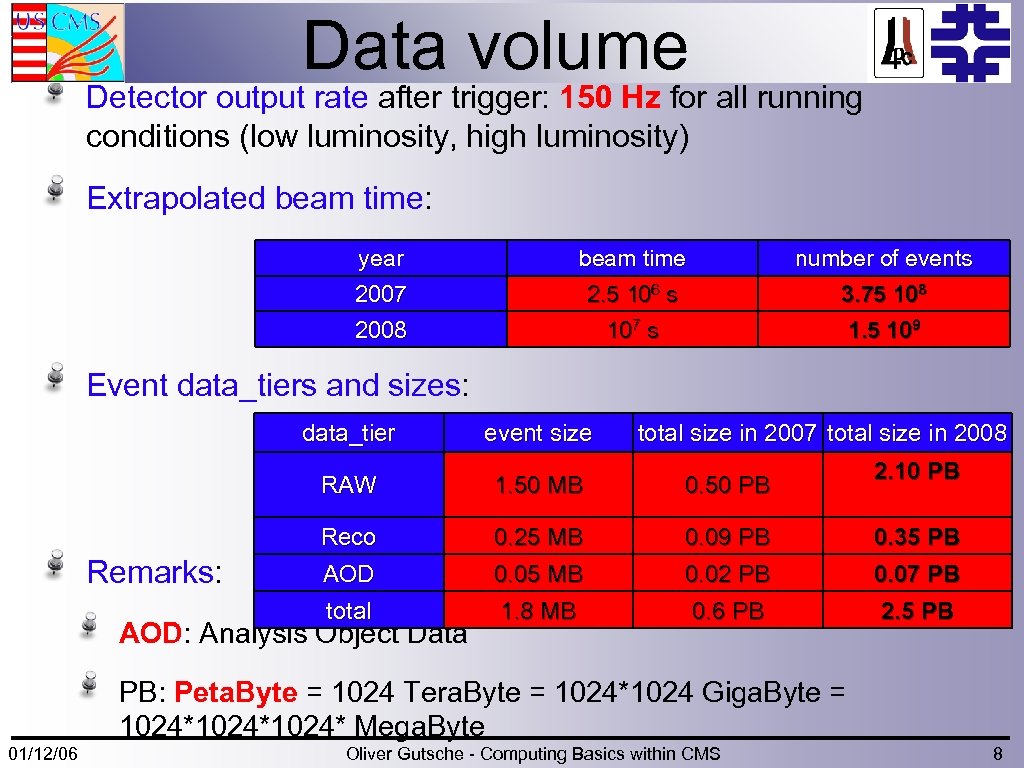

Data volume

- Detector output rate after trigger: 150 Hz for all running conditions (low luminosity, high luminosity)
- Extrapolated beam time:

| year | beam time | number of events |
|------|-----------|------------------|
| 2007 | 2.5 × 10^6 s | 3.75 × 10^8 |
| 2008 | 10^7 s | 1.5 × 10^9 |

- Event data tiers and sizes:

| data tier | event size | total size in 2007 | total size in 2008 |
|-----------|------------|--------------------|--------------------|
| RAW | 1.50 MB | 0.50 PB | 2.10 PB |
| Reco | 0.25 MB | 0.09 PB | 0.35 PB |
| AOD | 0.05 MB | 0.02 PB | 0.07 PB |
| total | 1.8 MB | 0.6 PB | 2.5 PB |

Remarks:

- AOD: Analysis Object Data
- PB: PetaByte = 1024 TeraByte = 1024*1024 GigaByte = 1024*1024*1024 MegaByte
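The event counts and the 2008 sizes follow from the trigger rate, the beam time and the per-event sizes. A minimal Python sketch of that arithmetic, assuming the binary convention from the remarks (1 PB = 1024³ MB); the function and constant names are illustrative and not part of any CMS tool:

```python
# Sketch of the data-volume arithmetic; all names are illustrative.
TRIGGER_RATE_HZ = 150      # detector output rate after trigger
MB_PER_PB = 1024 ** 3      # binary convention from the remarks

BEAM_TIME_S = {2007: 2.5e6, 2008: 1.0e7}                  # extrapolated beam time
EVENT_SIZE_MB = {"RAW": 1.5, "RECO": 0.25, "AOD": 0.05}   # size per event


def n_events(year):
    """Number of recorded events: trigger rate times beam time."""
    return TRIGGER_RATE_HZ * BEAM_TIME_S[year]


def volume_pb(year, tier):
    """Total size of one data tier in PB for the given year."""
    return n_events(year) * EVENT_SIZE_MB[tier] / MB_PER_PB


for tier in EVENT_SIZE_MB:
    print(f"2008 {tier}: {volume_pb(2008, tier):.2f} PB")
```

Keeping the rate, beam time and event sizes as separate inputs makes it easy to see how the totals scale if any one assumption changes.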

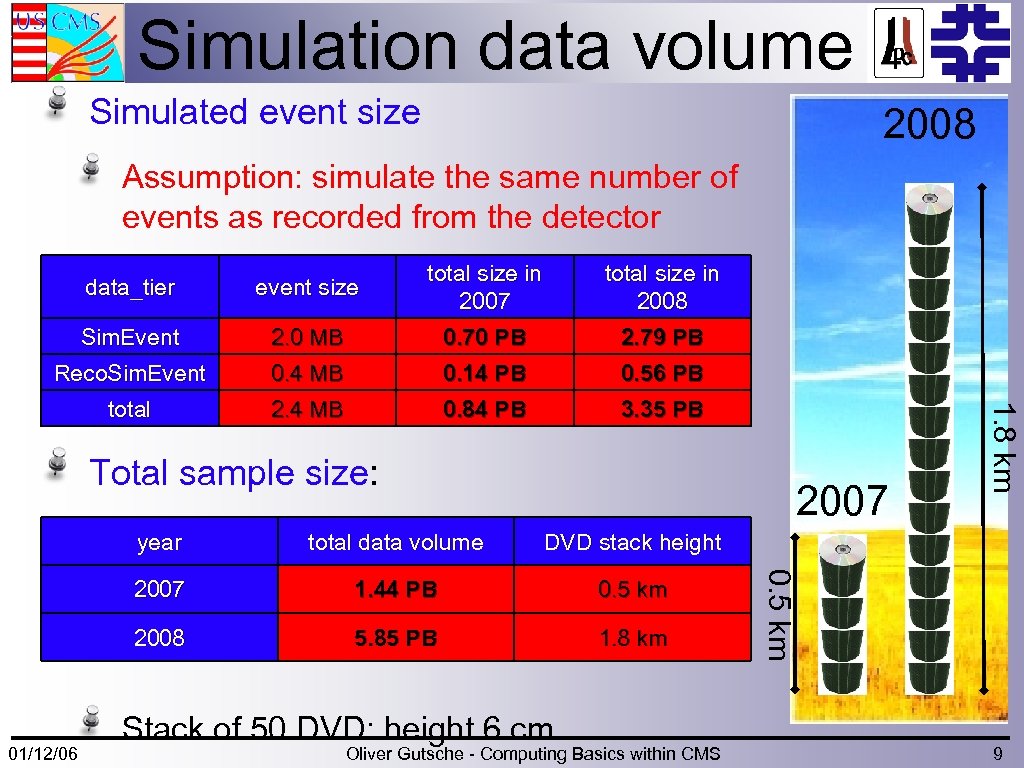

Simulation data volume

Assumption: simulate the same number of events as recorded from the detector

Simulated event size:

| data tier | event size | total size in 2007 | total size in 2008 |
|-----------|------------|--------------------|--------------------|
| SimEvent | 2.0 MB | 0.70 PB | 2.79 PB |
| RecoSimEvent | 0.4 MB | 0.14 PB | 0.56 PB |
| total | 2.4 MB | 0.84 PB | 3.35 PB |

Total sample size:

| year | total data volume | DVD stack height |
|------|-------------------|------------------|
| 2007 | 1.44 PB | 0.5 km |
| 2008 | 5.85 PB | 1.8 km |

Stack of 50 DVDs: height 6 cm

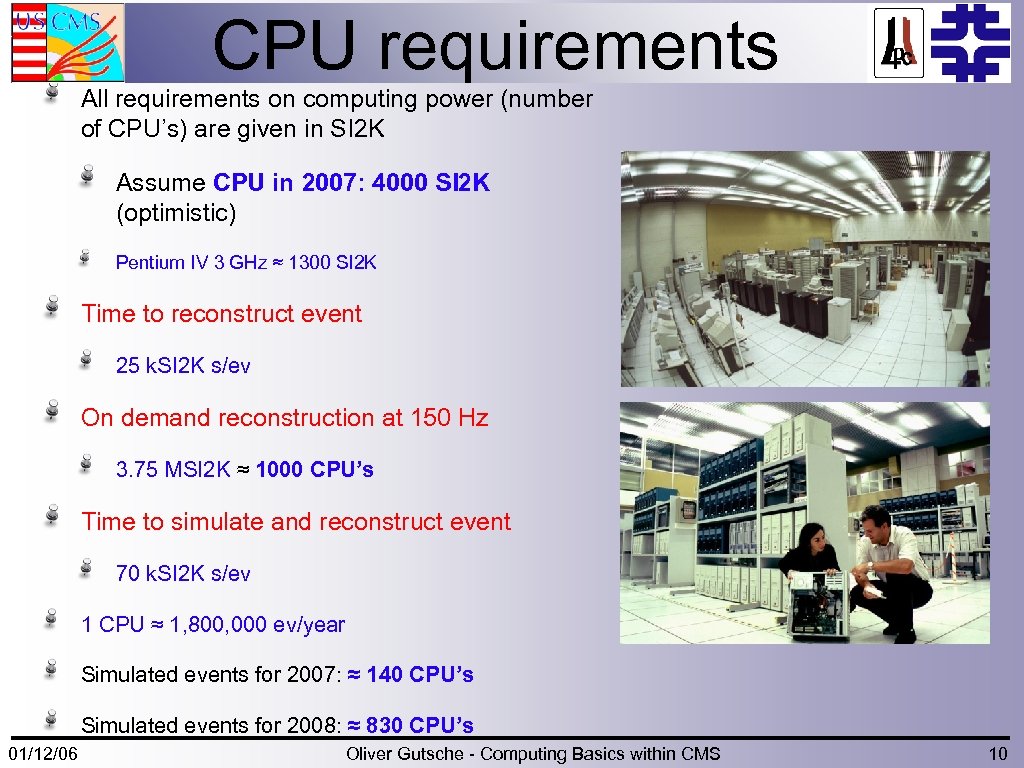

CPU requirements

- All requirements on computing power (number of CPUs) are given in SI2K (SPECint2000)
  - Assume a CPU in 2007: 4000 SI2K (optimistic)
  - Pentium IV 3 GHz ≈ 1300 SI2K
- Time to reconstruct an event: 25 kSI2K s/ev
  - On-demand reconstruction at 150 Hz: 3.75 MSI2K ≈ 1000 CPUs
- Time to simulate and reconstruct an event: 70 kSI2K s/ev
  - 1 CPU ≈ 1,800,000 ev/year
  - Simulated events for 2007: ≈ 140 CPUs
  - Simulated events for 2008: ≈ 830 CPUs
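The reconstruction and simulation estimates can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: it assumes a CPU that is busy for a full 365-day year, which the slide does not state, and it evaluates the simulation estimate for the 2008 event count of 1.5 × 10^9 events.

```python
# Sketch of the CPU estimates, using the optimistic 2007 CPU from the slide.
CPU_POWER_SI2K = 4000                 # assumed power of one CPU in 2007
TRIGGER_RATE_HZ = 150                 # events per second after trigger
RECO_COST_SI2K_S = 25_000             # 25 kSI2K s per reconstructed event
SIM_RECO_COST_SI2K_S = 70_000         # 70 kSI2K s per simulated + reconstructed event
SECONDS_PER_YEAR = 365 * 24 * 3600    # assumption: CPU is busy all year


def reco_cpus():
    """CPUs needed to reconstruct events as fast as they are recorded."""
    return TRIGGER_RATE_HZ * RECO_COST_SI2K_S / CPU_POWER_SI2K


def sim_events_per_cpu_year():
    """Events one CPU can simulate and reconstruct in a year."""
    return CPU_POWER_SI2K * SECONDS_PER_YEAR / SIM_RECO_COST_SI2K_S


def sim_cpus(n_events):
    """CPUs needed to simulate and reconstruct n_events within one year."""
    return n_events / sim_events_per_cpu_year()


print(f"reconstruction: {reco_cpus():.0f} CPUs")
print(f"one CPU: {sim_events_per_cpu_year():.0f} ev/year")
print(f"simulation 2008: {sim_cpus(1.5e9):.0f} CPUs")
```

The reconstruction figure comes out slightly below the rounded value of about 1000 CPUs quoted on the slide, which corresponds to 3.75 MSI2K divided by 4000 SI2K per CPU.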

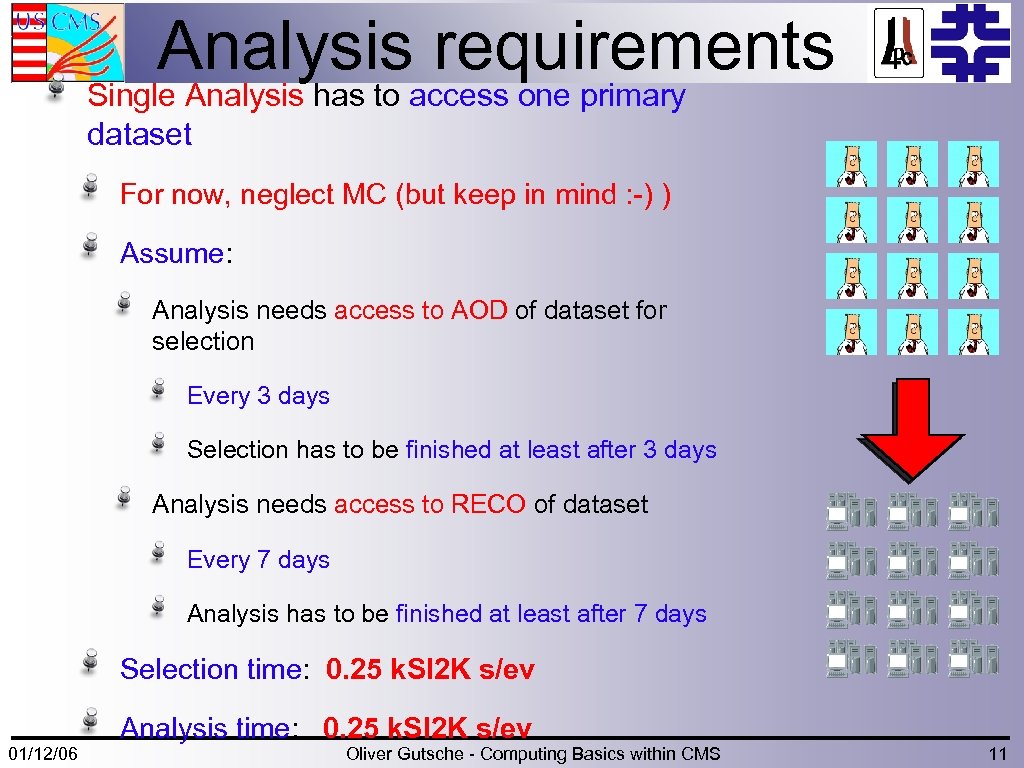

Analysis requirements

- A single analysis has to access one primary dataset
- For now, neglect MC (but keep it in mind :-) )
- Assume:
  - The analysis needs access to the AOD of the dataset for selection every 3 days; the selection has to be finished after 3 days at the latest
  - The analysis needs access to the RECO of the dataset every 7 days; the analysis has to be finished after 7 days at the latest
  - Selection time: 0.25 kSI2K s/ev
  - Analysis time: 0.25 kSI2K s/ev

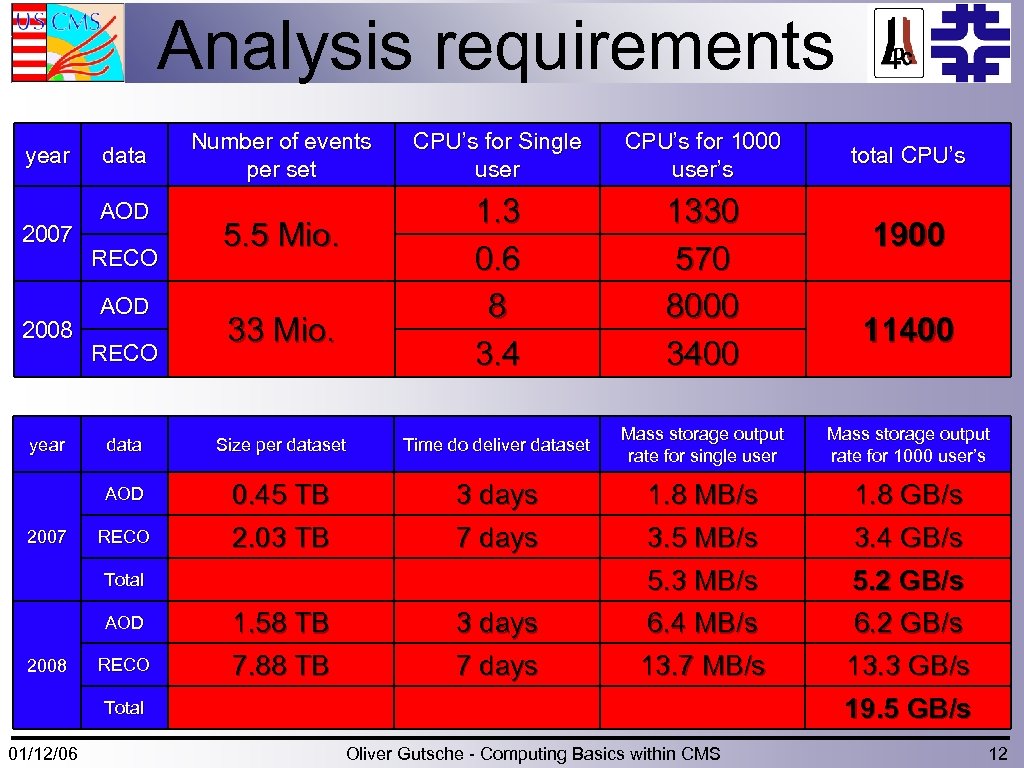

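Analysis requirements

| year | data | number of events per set | CPUs for single user | CPUs for 1000 users | total CPUs |
|------|------|--------------------------|----------------------|---------------------|------------|
| 2007 | AOD | 5.5 million | 1.3 | 1330 | 1900 |
| 2007 | RECO | 5.5 million | 0.6 | 570 | |
| 2008 | AOD | 33 million | 8 | 8000 | 11400 |
| 2008 | RECO | 33 million | 3.4 | 3400 | |

| year | data | size per dataset | time to deliver dataset | mass storage output rate for single user | mass storage output rate for 1000 users |
|------|------|------------------|-------------------------|------------------------------------------|-----------------------------------------|
| 2007 | AOD | 0.45 TB | 3 days | 1.8 MB/s | 1.8 GB/s |
| 2007 | RECO | 2.03 TB | 7 days | 3.5 MB/s | 3.4 GB/s |
| 2007 | Total | | | 5.3 MB/s | 5.2 GB/s |
| 2008 | AOD | 1.58 TB | 3 days | 6.4 MB/s | 6.2 GB/s |
| 2008 | RECO | 7.88 TB | 7 days | 13.7 MB/s | 13.3 GB/s |
| 2008 | Total | | | | 19.5 GB/s |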
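The per-user numbers follow from the assumptions on the previous slide: each dataset is processed once per delivery window at 0.25 kSI2K s/ev on a 4000 SI2K CPU. A hedged Python sketch of that calculation, taking the event counts and dataset sizes as quoted here and assuming 1 TB = 1024² MB; the function names are illustrative:

```python
# Sketch of the per-user analysis requirements; names are illustrative.
CPU_POWER_SI2K = 4000        # assumed CPU from the CPU requirements slide
COST_SI2K_S = 250            # 0.25 kSI2K s/ev for both selection and analysis
SECONDS_PER_DAY = 24 * 3600
MB_PER_TB = 1024 ** 2        # assumption: binary convention


def cpus_per_user(n_events, days):
    """CPUs needed to process n_events once within the given number of days."""
    return n_events * COST_SI2K_S / (days * SECONDS_PER_DAY) / CPU_POWER_SI2K


def rate_mb_per_s(size_tb, days):
    """Mass storage output rate needed to deliver a dataset within `days`."""
    return size_tb * MB_PER_TB / (days * SECONDS_PER_DAY)


# 2008: 33 million events per dataset, AOD every 3 days, RECO every 7 days
print(f"AOD:  {cpus_per_user(33e6, 3):.1f} CPUs, {rate_mb_per_s(1.58, 3):.1f} MB/s")
print(f"RECO: {cpus_per_user(33e6, 7):.1f} CPUs, {rate_mb_per_s(7.88, 7):.1f} MB/s")
```

Multiplying the single-user results by 1000 gives the order of magnitude of the collaboration-wide requirement.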

Distributed Computing

- The computing requirements are nearly impossible to fulfill in one place (CERN) ➡ distributed computing
- Distribute world-wide into a tier structure:
  - Data storage
  - Re-reconstruction
  - Bulk user analysis
  - MC production
- The 50 primary datasets can be distributed separately
- Users will have access to all tiers using GRID tools

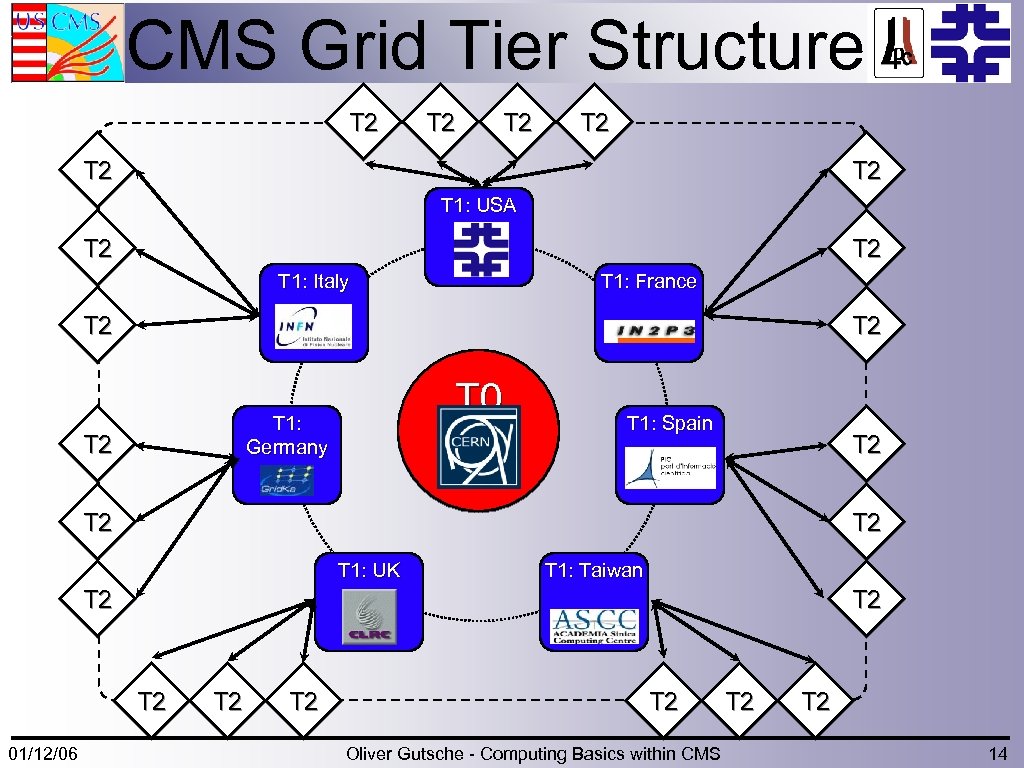

CMS Grid Tier Structure

Diagram labels: T0; T1 centers in the USA, Italy, France, Germany, Spain, the UK and Taiwan; multiple T2 centers

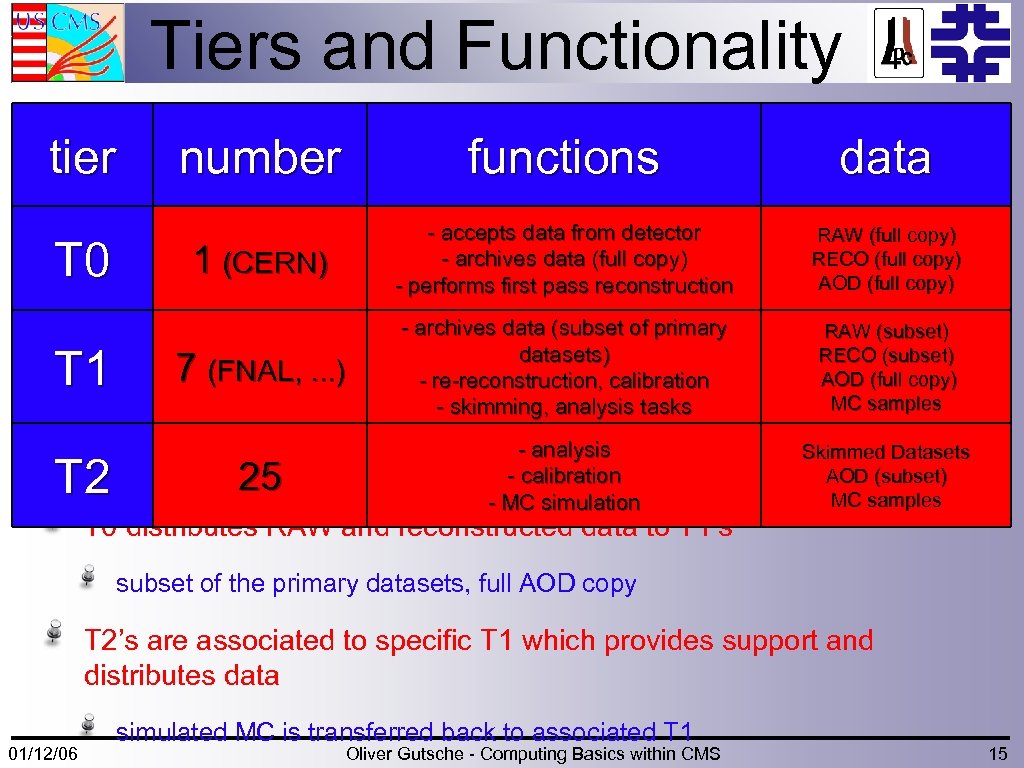

Tiers and Functionality

| tier | number | functions | data |
|------|--------|-----------|------|
| T0 | 1 (CERN) | accepts data from detector; archives data (full copy); performs first-pass reconstruction | RAW (full copy), RECO (full copy), AOD (full copy) |
| T1 | 7 (FNAL, ...) | archives data (subset of primary datasets); re-reconstruction, calibration; skimming, analysis tasks | RAW (subset), RECO (subset), AOD (full copy), MC samples |
| T2 | 25 | analysis; calibration; MC simulation | skimmed datasets, AOD (subset), MC samples |

- The T0 distributes RAW and reconstructed data to the T1s: a subset of the primary datasets and a full AOD copy
- T2s are associated with a specific T1, which provides support and distributes data
- Simulated MC is transferred back to the associated T1

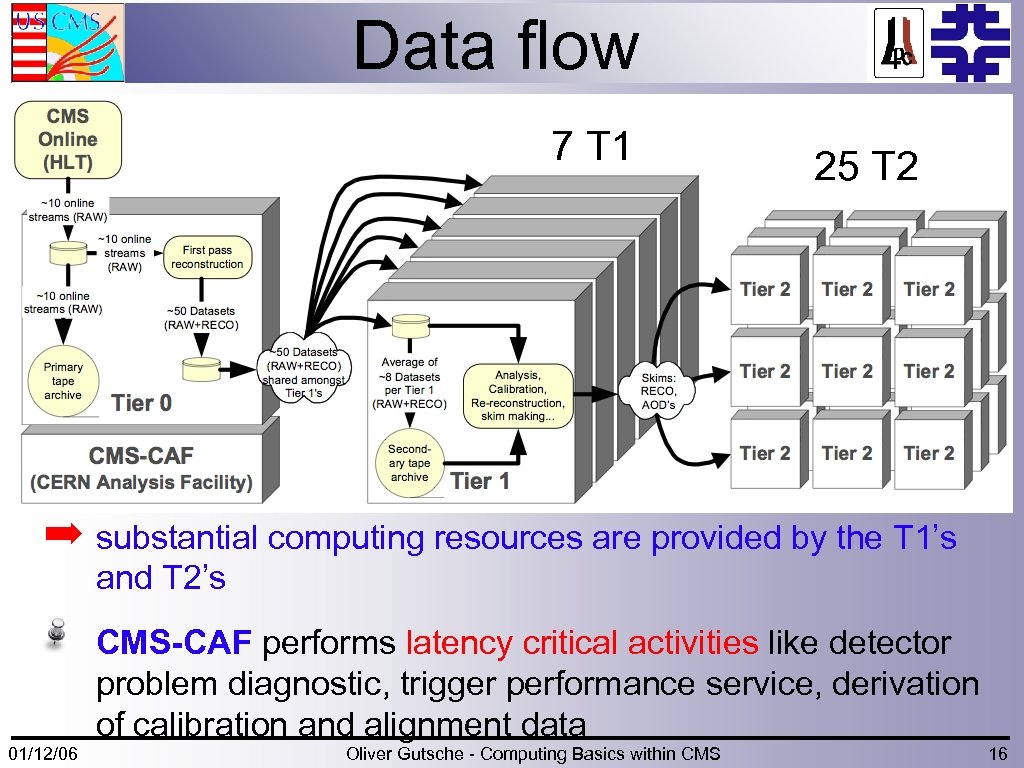

Data flow

- 7 T1, 25 T2 ➡ substantial computing resources are provided by the T1s and T2s
- The CMS-CAF performs latency-critical activities such as detector problem diagnostics, trigger performance service, and derivation of calibration and alignment data

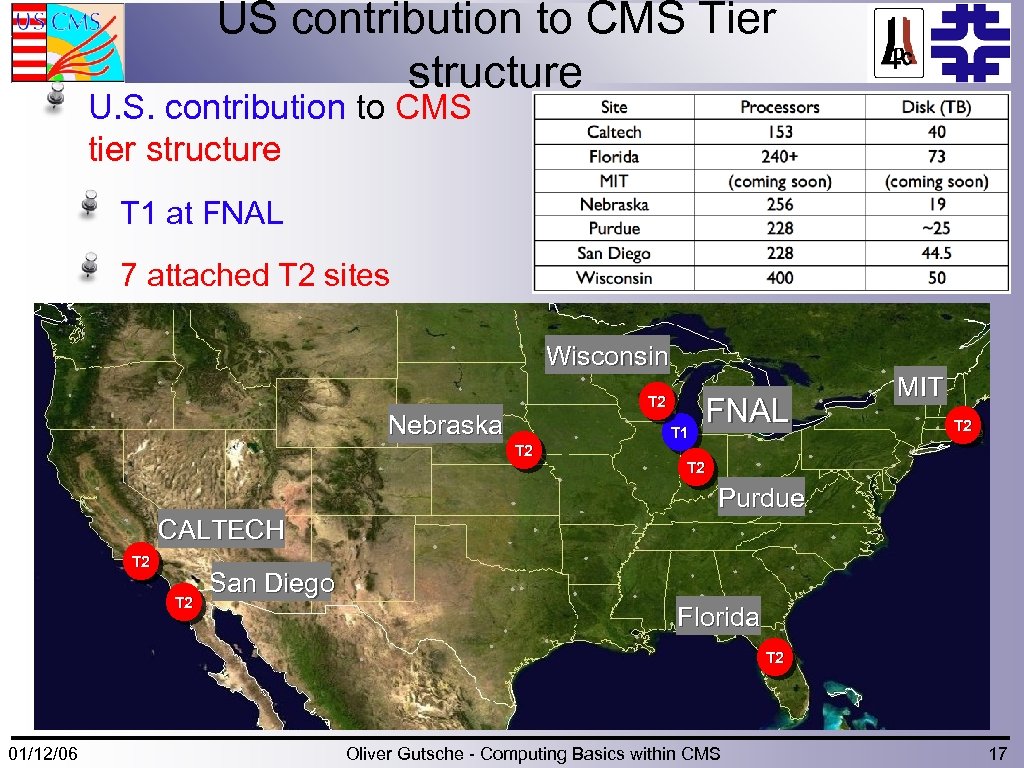

U.S. contribution to CMS tier structure

- T1 at FNAL
- 7 attached T2 sites: Wisconsin, Nebraska, MIT, Purdue, Caltech, San Diego, Florida

Back to the user...

After the discussion of the complex computing structure: how does the user in the end do analysis?

Basic requirements:

- Local account on a workgroup cluster or private machine
- CERN account (including CERN CMS registration)
- GRID certificate

Access to a workgroup cluster or private machine with:

- Installed CMS software environment for user code development
- Access to local datasets (on hard disk or on a local mass storage system)
- Installed GRID tools for submission of user code to T1 and T2

Some remarks: User code

- Importance of the local working environment: “Prevent errors at the source before finding out the hard way”
- Develop user code locally and compile it
- Test user code locally on small test samples
  - Bug discovery and fixing
- Run a short test job on the sample (data or MC) you plan to use in your analysis (locally or via the GRID)
  - Test compatibility between your user code and the sample
  - Batch systems are prioritized to provide fair access for all users; a test job avoids unnecessarily worsening your priority
- In the end, you want to produce “plots” to get your physics results. Your local environment will be the place where everything comes together.

Remarks

- “Due to the current lack of data, the following is described using an MC analysis as an example.”
- “In the next talks, you will hear “everything” about the new framework CMSSW. As the framework is new and not yet complete, and MC samples exist only in tests, the computing tools have not been fully adapted either. In the following, the example MC analysis is described for the old framework; it shows the basic steps, which will not change dramatically for the new framework.”

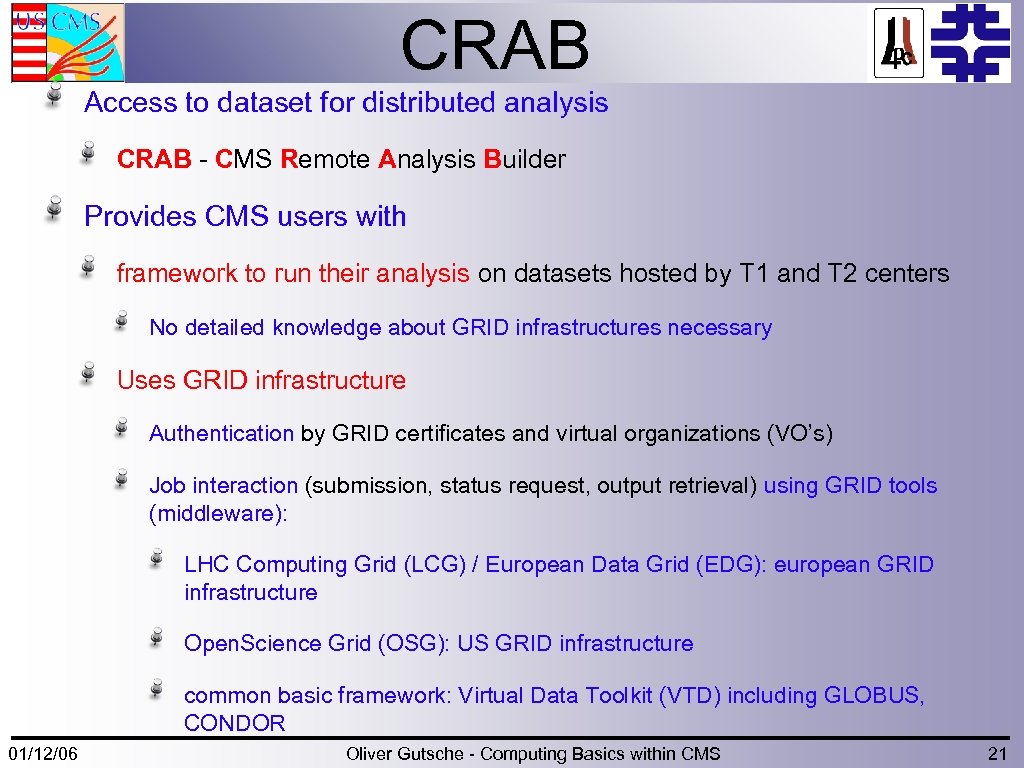

CRAB: access to datasets for distributed analysis

- CRAB: CMS Remote Analysis Builder
  - Provides CMS users with a framework to run their analysis on datasets hosted by T1 and T2 centers
  - No detailed knowledge of GRID infrastructures necessary
- Uses GRID infrastructure
  - Authentication by GRID certificates and virtual organizations (VOs)
  - Job interaction (submission, status request, output retrieval) using GRID tools (middleware):
    - LHC Computing Grid (LCG) / European Data Grid (EDG): European GRID infrastructure
    - Open Science Grid (OSG): US GRID infrastructure
    - Common basic framework: Virtual Data Toolkit (VDT), including GLOBUS and CONDOR

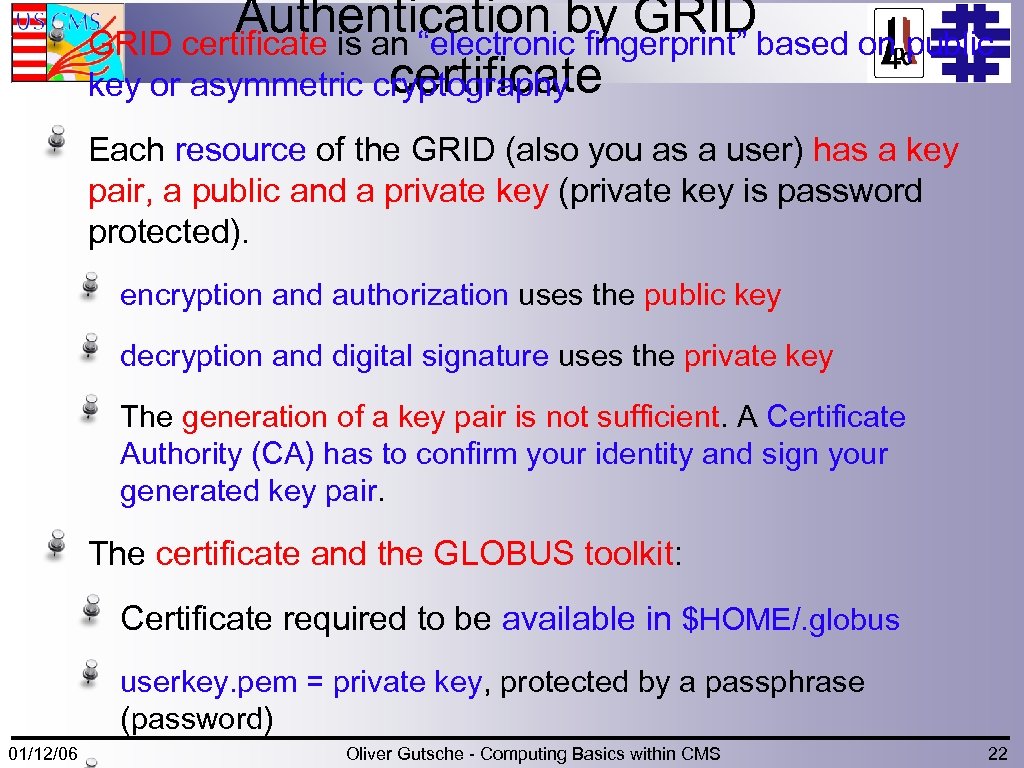

Authentication by GRID certificate

- A GRID certificate is an “electronic fingerprint” based on public key or asymmetric cryptography
- Each resource of the GRID (including you as a user) has a key pair: a public and a private key (the private key is password protected)
  - Encryption and authorization use the public key
  - Decryption and digital signature use the private key
- Generating a key pair is not sufficient: a Certificate Authority (CA) has to confirm your identity and sign your generated key pair
- The certificate and the GLOBUS toolkit:
  - The certificate is required to be available in $HOME/.globus
  - userkey.pem = private key, protected by a passphrase (password)

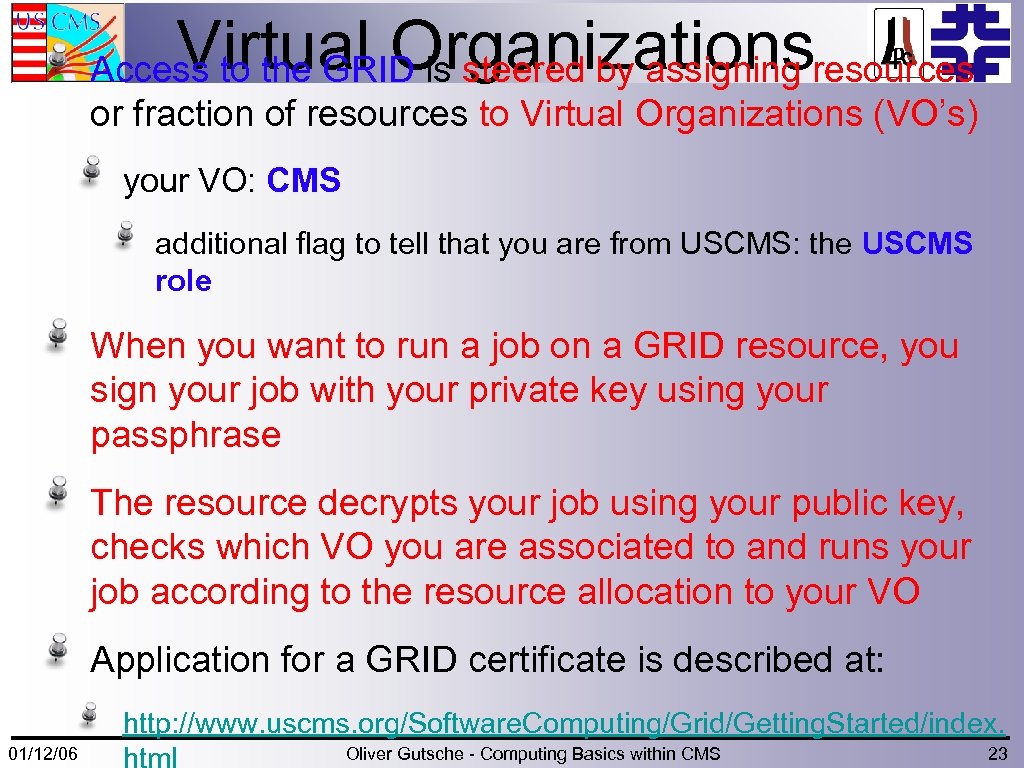

Virtual Organizations

- Access to the GRID is steered by assigning resources, or fractions of resources, to Virtual Organizations (VOs)
  - Your VO: CMS
  - Additional flag to indicate that you are from USCMS: the USCMS role
- When you want to run a job on a GRID resource, you sign your job with your private key using your passphrase
- The resource decrypts your job using your public key, checks which VO you are associated with, and runs your job according to the resource allocation of your VO
- Applying for a GRID certificate is described at: http://www.uscms.org/SoftwareComputing/Grid/GettingStarted/index.html
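The sign-then-check sequence can be illustrated with a toy example. The sketch below is not how GLOBUS or any GRID middleware is implemented: it uses textbook RSA with tiny, insecure numbers, a hypothetical `VO_SHARE` table, and made-up function names, purely to show that the private key produces a signature and the public key is enough to check it.

```python
import hashlib

# Toy RSA key pair with textbook-sized numbers (NOT secure, illustration only).
N = 61 * 53         # modulus 3233, shared by both keys
PUBLIC_E = 17       # public exponent
PRIVATE_D = 2753    # private exponent: (17 * 2753) % 3120 == 1

# Hypothetical fraction of a resource allocated to each VO.
VO_SHARE = {"cms": 0.5}


def digest(job: bytes) -> int:
    """Reduce the job description to a number smaller than the modulus."""
    return int.from_bytes(hashlib.sha256(job).digest(), "big") % N


def sign(job: bytes) -> int:
    """User side: sign the job digest with the private key."""
    return pow(digest(job), PRIVATE_D, N)


def verify(job: bytes, signature: int) -> bool:
    """Resource side: check the signature using only the public key."""
    return pow(signature, PUBLIC_E, N) == digest(job)


def accept(job: bytes, signature: int, vo: str) -> bool:
    """Run the job only if the signature is valid and the VO has a share."""
    return verify(job, signature) and VO_SHARE.get(vo, 0) > 0


job = b"run user analysis on dataset"
signature = sign(job)
print(accept(job, signature, "cms"))
```

In a real setup the passphrase only unlocks the private key file; the certificate signed by the CA is what lets the resource trust that the public key belongs to you.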

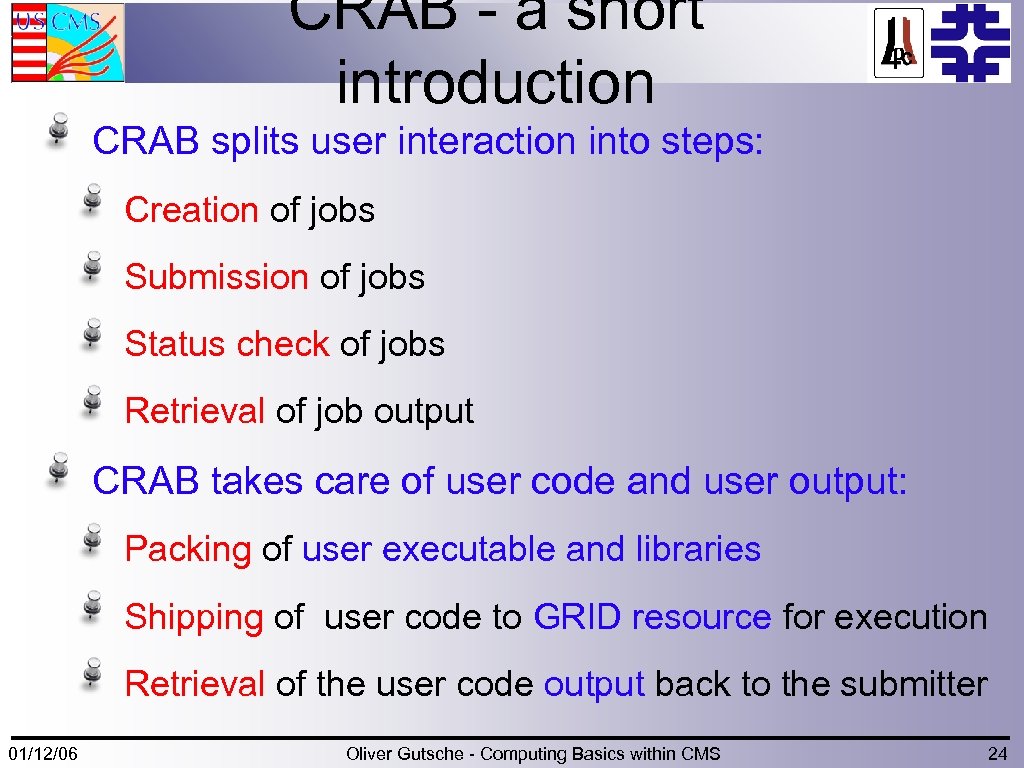

CRAB - a short introduction

CRAB splits user interaction into steps:

- Creation of jobs
- Submission of jobs
- Status check of jobs
- Retrieval of job output

CRAB takes care of user code and user output:

- Packing of user executable and libraries
- Shipping of user code to the GRID resource for execution
- Retrieval of the user code output back to the submitter

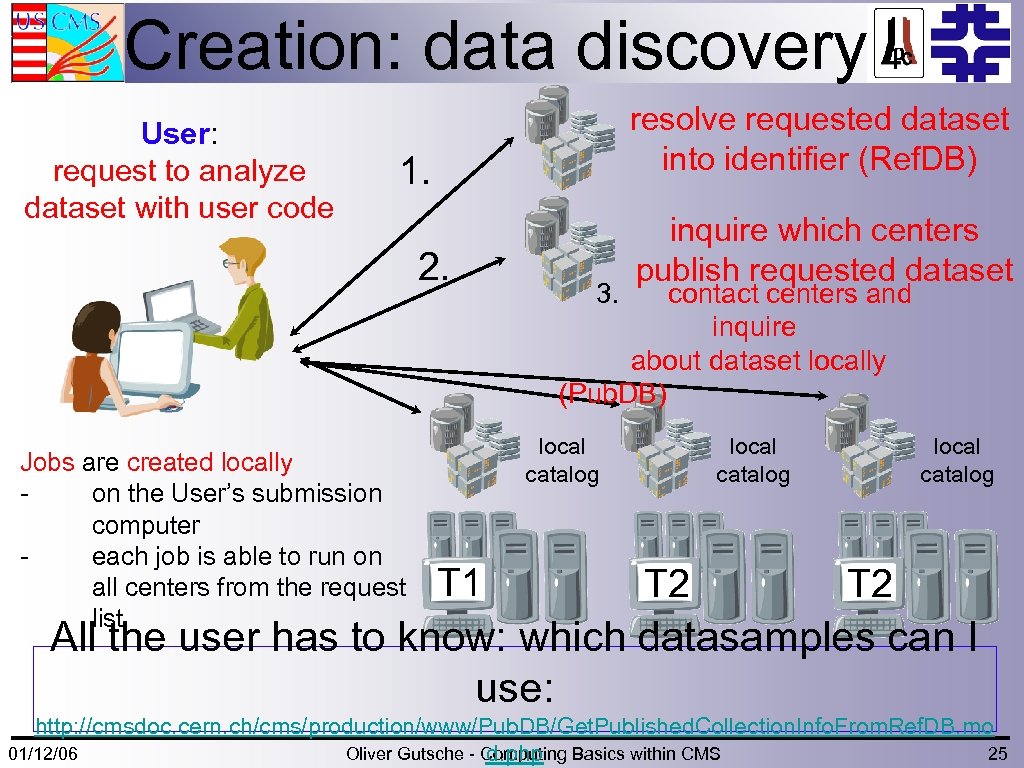

Creation: data discovery

- User: request to analyze a dataset with user code
- Resolve the requested dataset into an identifier (RefDB)
- Inquire which centers publish the requested dataset; contact the centers and inquire about the dataset locally (PubDB, local catalogs at T1 and T2)
- Jobs are created locally on the user's submission computer; each job is able to run on all centers from the request list
- All the user has to know: which data samples can I use: http://cmsdoc.cern.ch/cms/production/www/PubDB/GetPublishedCollectionInfoFromRefDB.mod.php
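The discovery step can be pictured as two lookups followed by local job creation. The Python sketch below is not CRAB code: the dictionaries stand in for RefDB and the PubDB catalogs of the centers, the dataset name, identifiers and center names are invented, and splitting by event ranges is an assumption made only to have something to create.

```python
# Hypothetical stand-ins for RefDB and the PubDB catalogs of the centers.
REF_DB = {"example_mc_sample": 1234}    # dataset name -> identifier
PUB_DB = {                              # center -> identifiers it publishes
    "T1_A": {1234, 5678},
    "T2_B": {1234},
    "T2_C": {5678},
}


def discover(dataset):
    """Resolve the dataset name, then list the centers that publish it."""
    dataset_id = REF_DB[dataset]
    return sorted(c for c, ids in PUB_DB.items() if dataset_id in ids)


def create_jobs(dataset, total_events, events_per_job):
    """Create jobs locally; every job may run at any center with the dataset."""
    sites = discover(dataset)
    jobs = []
    for first in range(0, total_events, events_per_job):
        jobs.append({
            "dataset": dataset,
            "first_event": first,
            "n_events": min(events_per_job, total_events - first),
            "sites": sites,
        })
    return jobs


for job in create_jobs("example_mc_sample", 2500, 1000):
    print(job)
```

Because every job carries the full list of centers, the choice of where it actually runs can be left to a later brokering step.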

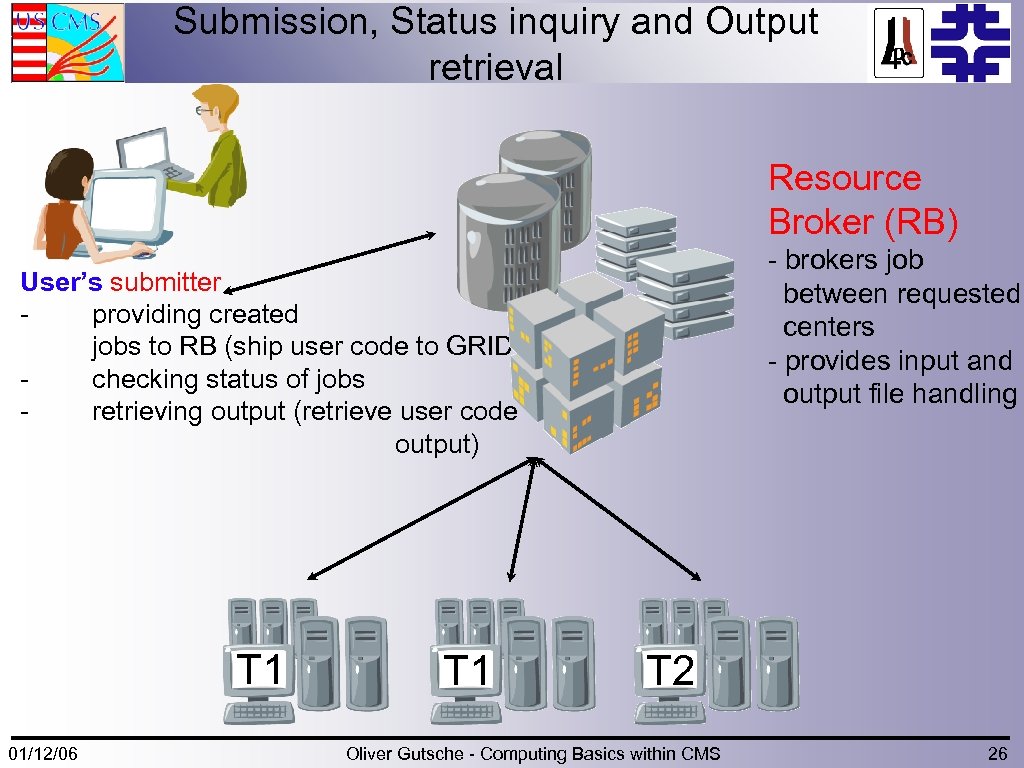

Submission, Status inquiry and Output retrieval

- User's submitter:
  - provides the created jobs to the RB (ships user code to the GRID)
  - checks the status of jobs
  - retrieves output (retrieves user code output)
- Resource Broker (RB):
  - brokers jobs between the requested centers
  - provides input and output file handling

Diagram labels: T1, T1, T2

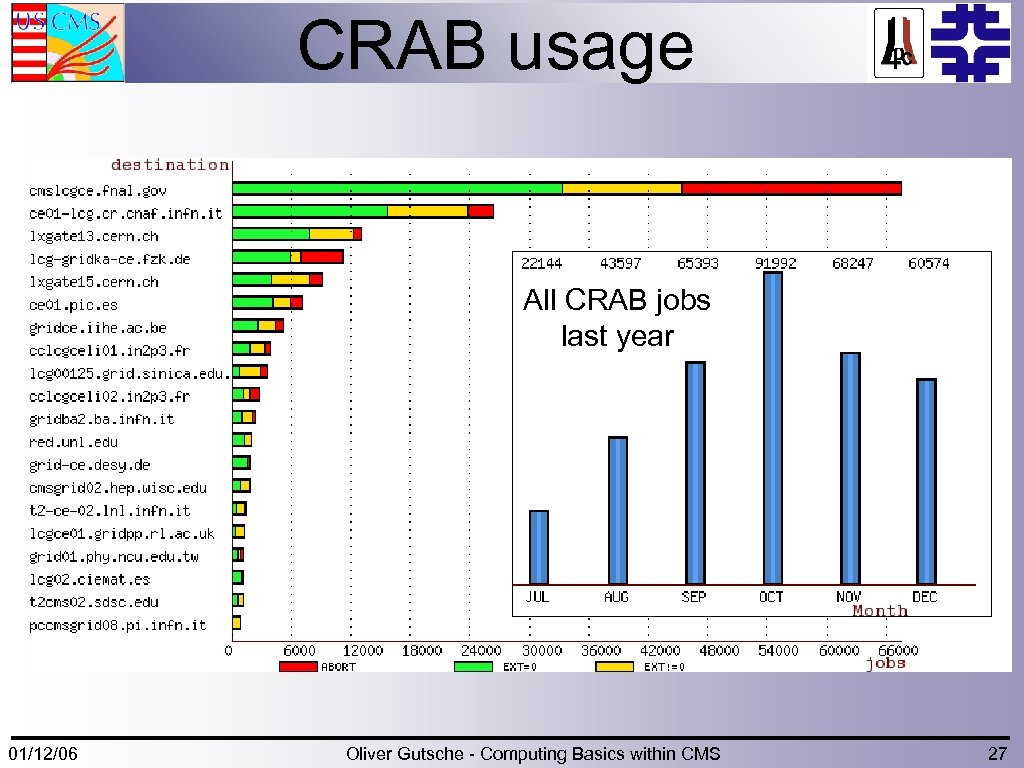

CRAB usage

Plot: all CRAB jobs last year

Summary & Outlook

- Computing for CMS in the LHC era is a challenge on its own
- The tier structure will provide CMS users with access to data and MC samples independent of their location
- The user will have to know very little about the GRID and its tools
- Most important: apply for your GRID certificate!

One more thing...

Mailing lists for support:

- helpdesk@fnal.gov
- LPC-HOWTO@listserv.fnal.gov
- cms-wm-crab-feedback@cern.ch

Webpages:

- User Computing at FNAL: http://www.uscms.org/SoftwareComputing/UserComputing.html
- CRAB tutorial: http://www.uscms.org/SoftwareComputing/UserComputing/Tutorials/Crab.html

The end
