8fbf7334df7e4e58e11cfe889dd15063.ppt

- Number of slides: 33

Computer Science Overview

Laxmikant Kale, October 29, 2002

© 2002 Board of Trustees of the University of Illinois

CS Faculty and Staff Investigators

- M. Bhandarkar, M. Brandyberry, M. Campbell, E. de Sturler, R. Fiedler, D. Guoy, M. Heath, J. Jiao, L. Kale
- O. Lawlor, J. Liesen, J. Norris, D. Padua, E. Ramos, D. Reed, P. Saylor, M. Winslett
- plus numerous students

Computer Science Research Overview

- Parallel programming environment
  - Software integration framework
  - Parallel component frameworks
  - Component integration
  - Clusters
- Parallel I/O and data migration
- Performance tools and techniques
- Computational steering
- Visualization
- Computational mathematics and geometry
  - Interface propagation and interpolation
  - Linear solvers and preconditioners
  - Eigensolvers
  - Mesh generation and adaptation

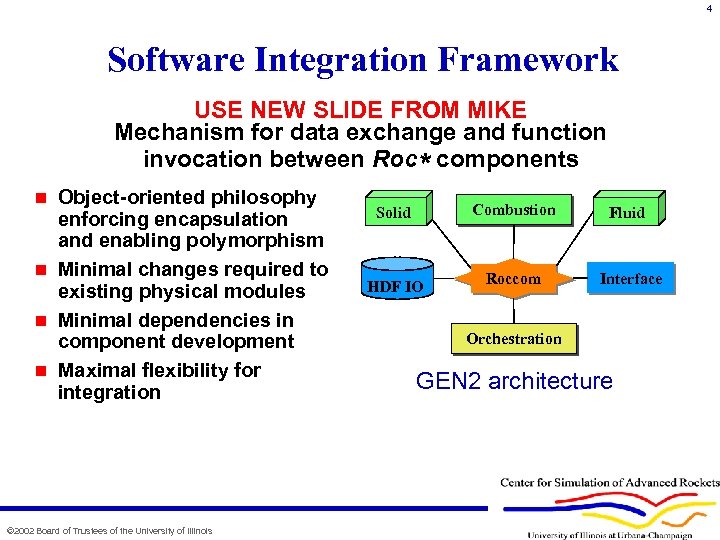

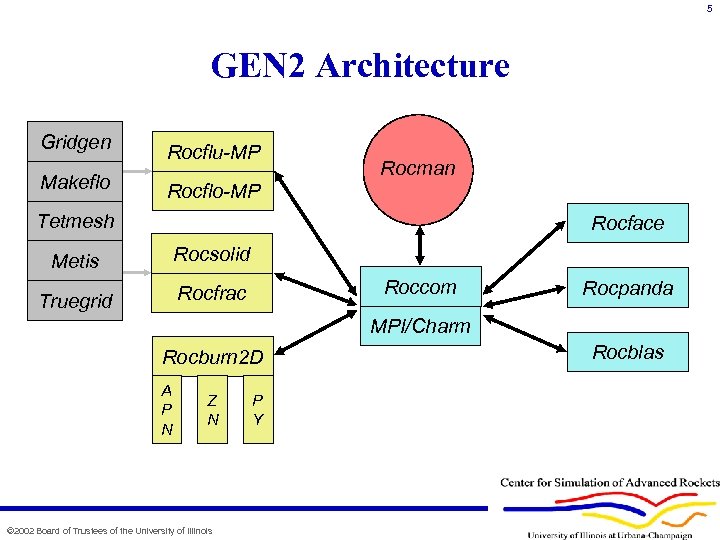

Software Integration Framework

- Mechanism for data exchange and function invocation between Roc* components
- Object-oriented philosophy enforcing encapsulation and enabling polymorphism
- Minimal changes required to existing physical modules
- Minimal dependencies in component development
- Maximal flexibility for integration

Figure: GEN 2 architecture, with Solid, Combustion, Fluid, HDF I/O, Interface, and Orchestration modules connected through Roccom.
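The data-exchange and function-invocation idea can be illustrated with a toy registry. This is a hypothetical sketch, not Roccom's actual interface: `ComponentRegistry`, `register_dataitem`, `register_function`, `get_dataitem`, and `call_function` are names invented here. Components publish named data and functions under a window name, and other components reach them only through the registry, never by importing each other.

```python
from typing import Any, Callable, Dict


class ComponentRegistry:
    """Hypothetical registry: components publish data and functions by name."""

    def __init__(self) -> None:
        # window name -> {item name -> published value or function}
        self._data: Dict[str, Dict[str, Any]] = {}
        self._funcs: Dict[str, Dict[str, Callable[..., Any]]] = {}

    def register_dataitem(self, window: str, name: str, value: Any) -> None:
        self._data.setdefault(window, {})[name] = value

    def register_function(self, window: str, name: str, fn: Callable[..., Any]) -> None:
        self._funcs.setdefault(window, {})[name] = fn

    def get_dataitem(self, window: str, name: str) -> Any:
        # Raises KeyError if no component published this item.
        return self._data[window][name]

    def call_function(self, window: str, name: str, *args: Any) -> Any:
        return self._funcs[window][name](*args)


registry = ComponentRegistry()

# A "fluid" component publishes its interface pressure and a routine that uses it.
registry.register_dataitem("fluid", "pressure", [1.0, 2.0, 3.0])
registry.register_function(
    "fluid", "scale_pressure",
    lambda f: [f * p for p in registry.get_dataitem("fluid", "pressure")])

# A "solid" component invokes the routine knowing only the window and item names.
loads = registry.call_function("fluid", "scale_pressure", 2.0)
print(loads)  # [2.0, 4.0, 6.0]
```

Because the caller depends only on names, either component can be replaced by another implementation that publishes the same items, which is the encapsulation and polymorphism the slide emphasizes.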

GEN 2 Architecture

Figure: GEN 2 architecture diagram. Components shown: Gridgen, Makeflo, Truegrid, Tetmesh, Metis, Rocflo-MP, Rocflu-MP, Rocsolid, Rocfrac, Rocburn 2D (APN, ZN, and PY variants), Rocface, Rocman, Roccom, Rocpanda, Rocblas, and MPI/Charm.

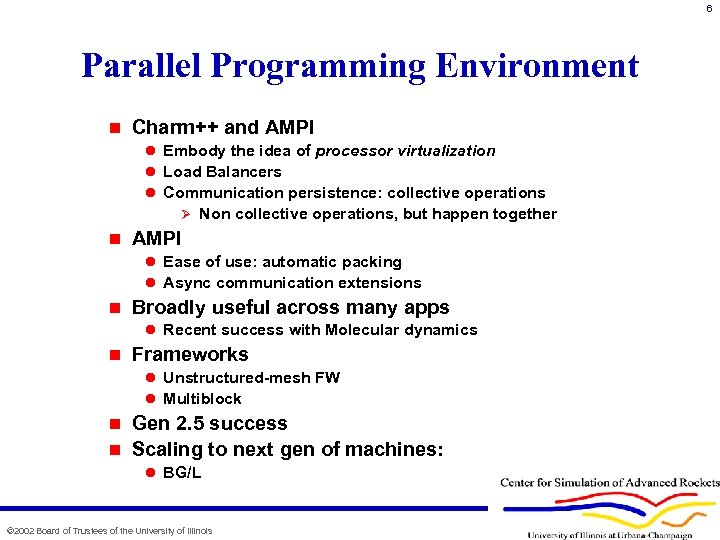

Parallel Programming Environment

- Charm++ and AMPI
  - Embody the idea of processor virtualization
  - Load balancers
  - Communication persistence:
    - Collective operations
    - Non-collective operations that happen together
- AMPI
  - Ease of use: automatic packing
  - Asynchronous communication extensions
- Broadly useful across many applications
  - Recent success with molecular dynamics
- Frameworks
  - Unstructured-mesh framework
  - Multiblock framework (Gen 2.5 success)
- Scaling to the next generation of machines:
  - BG/L

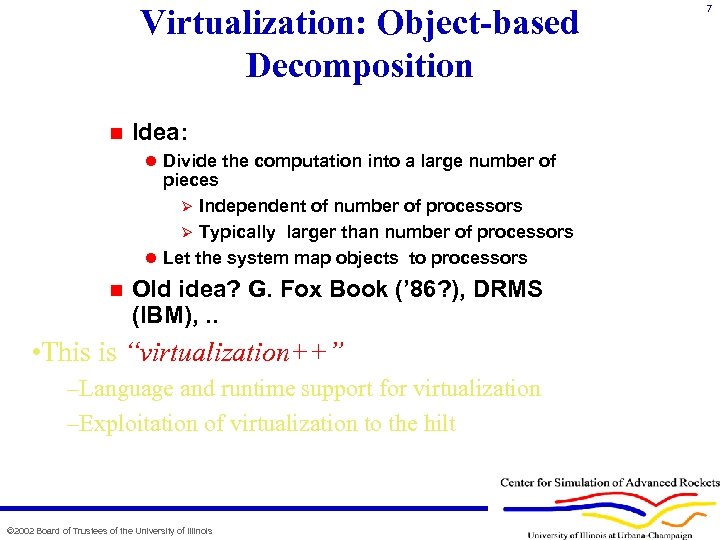

Virtualization: Object-based Decomposition

- Idea:
  - Divide the computation into a large number of pieces
    - Independent of the number of processors
    - Typically larger than the number of processors
  - Let the system map objects to processors
- Old idea? G. Fox book ('86?), DRMS (IBM), ...
- This is "virtualization++":
  - Language and runtime support for virtualization
  - Exploitation of virtualization to the hilt
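A minimal sketch of why dividing work into many more pieces than processors helps the system balance load. It assumes a greedy "heaviest piece to least-loaded processor" mapping (the classic LPT heuristic, chosen only for illustration; it is not necessarily what the Charm++ runtime does). `greedy_map` and `proc_loads` are hypothetical helper names.

```python
import heapq
from typing import Dict, List


def greedy_map(object_loads: List[float], num_procs: int) -> Dict[int, int]:
    """Map object index -> processor index, heaviest objects first."""
    heap = [(0.0, p) for p in range(num_procs)]   # (current load, processor id)
    heapq.heapify(heap)
    mapping: Dict[int, int] = {}
    order = sorted(range(len(object_loads)), key=lambda i: object_loads[i], reverse=True)
    for obj in order:
        load, proc = heapq.heappop(heap)          # least-loaded processor so far
        mapping[obj] = proc
        heapq.heappush(heap, (load + object_loads[obj], proc))
    return mapping


def proc_loads(object_loads: List[float], mapping: Dict[int, int], num_procs: int) -> List[float]:
    """Total load assigned to each processor under a mapping."""
    loads = [0.0] * num_procs
    for obj, proc in mapping.items():
        loads[proc] += object_loads[obj]
    return loads


# The same 20 units of work, cut into 4 coarse or 16 fine pieces, on 3 processors.
coarse = [5.0] * 4
fine = [1.25] * 16
for pieces in (coarse, fine):
    m = greedy_map(pieces, 3)
    print(len(pieces), "pieces -> max processor load", max(proc_loads(pieces, m, 3)))
```

With 4 pieces the busiest processor gets 10 units; with 16 pieces it gets 7.5, much closer to the ideal 20/3. Finer pieces give the mapper more freedom, independent of how many processors there are.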

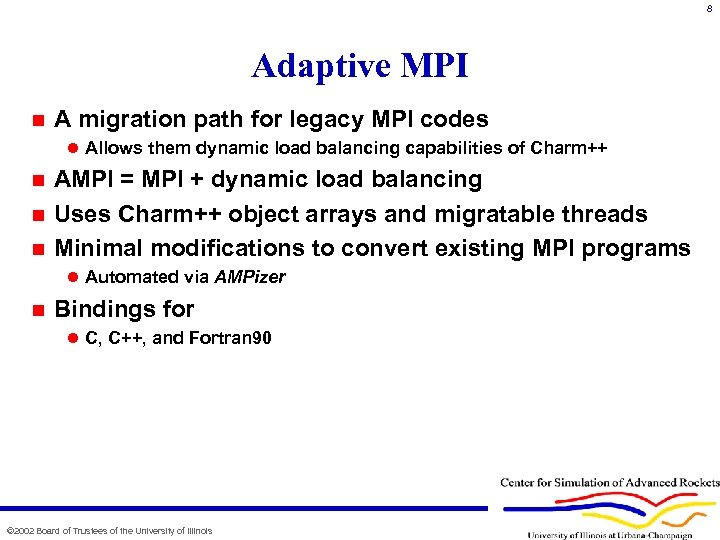

Adaptive MPI

- A migration path for legacy MPI codes
  - Gives them the dynamic load balancing capabilities of Charm++
- AMPI = MPI + dynamic load balancing
- Uses Charm++ object arrays and migratable threads
- Minimal modifications to convert existing MPI programs
  - Automated via AMPizer
- Bindings for
  - C, C++, and Fortran 90

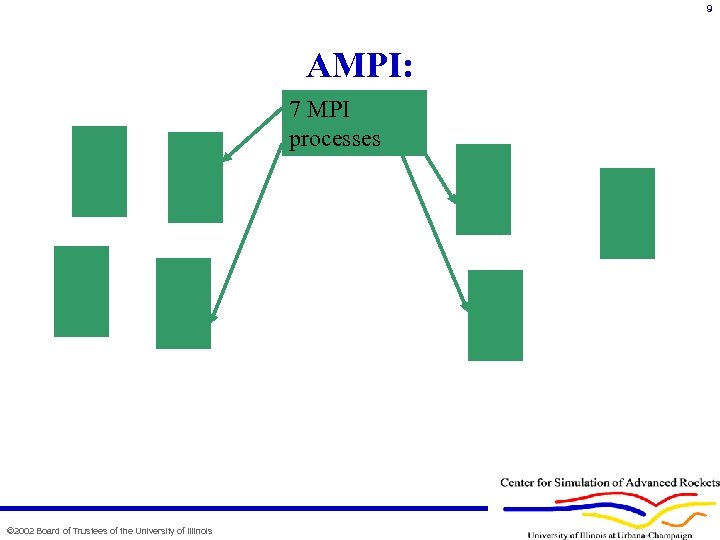

AMPI: 7 MPI Processes

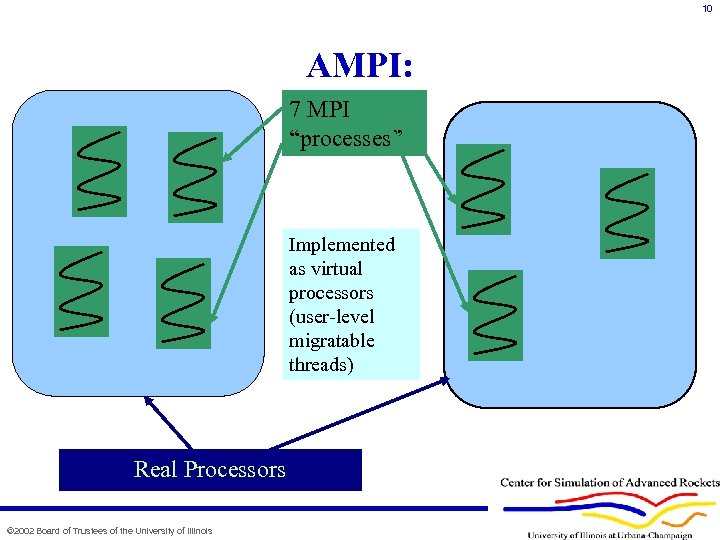

AMPI: 7 MPI “Processes”

- Implemented as virtual processors (user-level migratable threads)

Figure: the 7 virtual processors mapped onto real processors.
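The idea that MPI "processes" are really user-level threads multiplexed on fewer real processors can be sketched with Python generators standing in for threads. This is an illustration under assumptions, not AMPI's implementation: each `yield` marks a point where a rank would block on communication, `mpi_rank` and `run_processor` are hypothetical names, and thread migration is not modeled.

```python
from typing import Dict, Generator, List

Rank = Generator[str, None, None]


def mpi_rank(rank: int, steps: int) -> Rank:
    """A toy 'MPI process': each yield is a point where it would wait for a message."""
    for step in range(steps):
        yield f"rank {rank} step {step}"


def run_processor(ranks: List[Rank]) -> List[str]:
    """Round-robin the user-level threads that live on one real processor."""
    trace: List[str] = []
    ready = list(ranks)
    while ready:
        thread = ready.pop(0)
        try:
            trace.append(next(thread))   # resume until its next blocking point
            ready.append(thread)         # not finished yet: requeue it
        except StopIteration:
            pass                         # this virtual rank has finished
    return trace


# 7 virtual MPI ranks, block-mapped onto 3 real processors.
num_ranks, num_procs = 7, 3
placement: Dict[int, List[int]] = {p: [] for p in range(num_procs)}
for r in range(num_ranks):
    placement[r * num_procs // num_ranks].append(r)

for proc, ranks in placement.items():
    print(f"processor {proc}: ranks {ranks}", run_processor([mpi_rank(r, 2) for r in ranks]))
```

While one rank is suspended, the others on the same processor keep running, which is what lets the runtime overlap communication with computation and later move ranks between processors.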

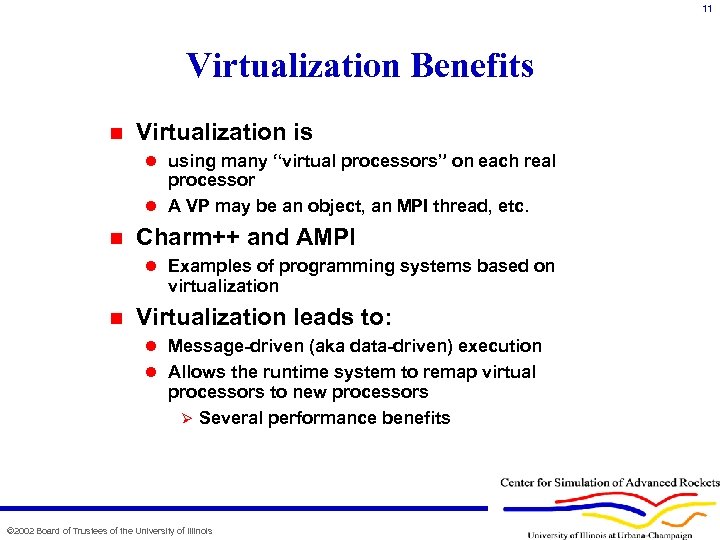

Virtualization Benefits

- Virtualization is:
  - Using many "virtual processors" on each real processor
  - A VP may be an object, an MPI thread, etc.
- Charm++ and AMPI:
  - Examples of programming systems based on virtualization
- Virtualization leads to:
  - Message-driven (aka data-driven) execution
  - The ability of the runtime system to remap virtual processors to new processors
    - Several performance benefits
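Message-driven execution can be sketched as a scheduler loop that runs whichever virtual processor has a pending message, so no VP ever blocks waiting. This is a single-processor toy with a FIFO queue; `RingVP`, `on_message`, and `run_scheduler` are hypothetical names, not Charm++ APIs.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Send = Callable[[int, int], None]


class RingVP:
    """A toy virtual processor: its method runs only when a message for it is delivered."""

    def __init__(self, vp_id: int, ring_size: int) -> None:
        self.vp_id = vp_id
        self.ring_size = ring_size
        self.received: List[int] = []

    def on_message(self, value: int, send: Send) -> None:
        self.received.append(value)
        if value > 0:
            # Forward a decremented token to the next VP in the ring.
            send((self.vp_id + 1) % self.ring_size, value - 1)


def run_scheduler(vps: List[RingVP], first: Tuple[int, int]) -> List[Tuple[int, int]]:
    """Deliver messages one at a time; the VP a message is addressed to gets to run."""
    pending: Deque[Tuple[int, int]] = deque([first])
    delivered: List[Tuple[int, int]] = []

    def send(dest: int, value: int) -> None:
        pending.append((dest, value))   # asynchronous: the sender never waits

    while pending:
        dest, value = pending.popleft()
        delivered.append((dest, value))
        vps[dest].on_message(value, send)
    return delivered


vps = [RingVP(i, ring_size=4) for i in range(4)]
delivered = run_scheduler(vps, first=(0, 5))
print(delivered)
```

Because work is triggered by message arrival rather than by a fixed program order, a VP whose input is late simply does not run yet, and the processor executes other VPs in the meantime.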

![Molecular Dynamics in NAMD](https://present5.com/presentation/8fbf7334df7e4e58e11cfe889dd15063/image-12.jpg)

Molecular Dynamics in NAMD

- Collection of [charged] atoms, with bonds
  - Newtonian mechanics
  - Thousands of atoms (1,000 to 500,000)
  - 1-femtosecond time step; millions of steps needed!
- At each time step:
  - Calculate forces on each atom
    - Bonds
    - Non-bonded: electrostatic and van der Waals
      - Short-distance: every time step
      - Long-distance: every 4 time steps, using PME (3-D FFT)
      - Multiple time stepping
  - Calculate velocities and advance positions
- Collaboration with K. Schulten, R. Skeel, and coworkers
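Evaluating short-range forces every step and long-range forces every 4 steps can be illustrated with impulse multiple time stepping on a 1-D toy particle. This is a sketch under stated assumptions: the stiff spring stands in for the cheap, fast-varying forces, the weak spring for the expensive long-range (PME) part, and `mts_integrate` is a hypothetical name, not NAMD code.

```python
from typing import Callable, Tuple


def mts_integrate(x: float, v: float, dt: float, k: int, n_outer: int,
                  fast: Callable[[float], float],
                  slow: Callable[[float], float]) -> Tuple[float, float, int]:
    """Impulse multiple time stepping for a unit-mass particle.

    `fast` is applied every dt; `slow` is evaluated once per outer step of k*dt.
    Returns final position, final velocity, and the number of slow-force evaluations.
    """
    f_slow = slow(x)
    slow_evals = 1
    for _ in range(n_outer):
        v += 0.5 * k * dt * f_slow          # half kick from the slow force over the long step
        for _ in range(k):                  # k inner velocity-Verlet steps, fast force only
            v += 0.5 * dt * fast(x)
            x += dt * v
            v += 0.5 * dt * fast(x)
        f_slow = slow(x)                    # one slow evaluation per outer step...
        slow_evals += 1
        v += 0.5 * k * dt * f_slow          # ...reused for the closing half kick
    return x, v, slow_evals


def energy(x: float, v: float) -> float:
    # Kinetic energy plus both spring potentials (stiffness 100 and 1).
    return 0.5 * v * v + 0.5 * 100.0 * x * x + 0.5 * 1.0 * x * x


x0, v0 = 1.0, 0.0
x1, v1, n_slow = mts_integrate(x0, v0, dt=0.01, k=4, n_outer=250,
                               fast=lambda x: -100.0 * x, slow=lambda x: -1.0 * x)
print("slow-force evaluations:", n_slow, "for", 4 * 250, "inner steps")
print("relative energy change:", abs(energy(x1, v1) - energy(x0, v0)) / energy(x0, v0))
```

The expensive force is computed roughly a quarter as often as the cheap one while the energy stays close to its initial value, which is the trade-off multiple time stepping is meant to exploit.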

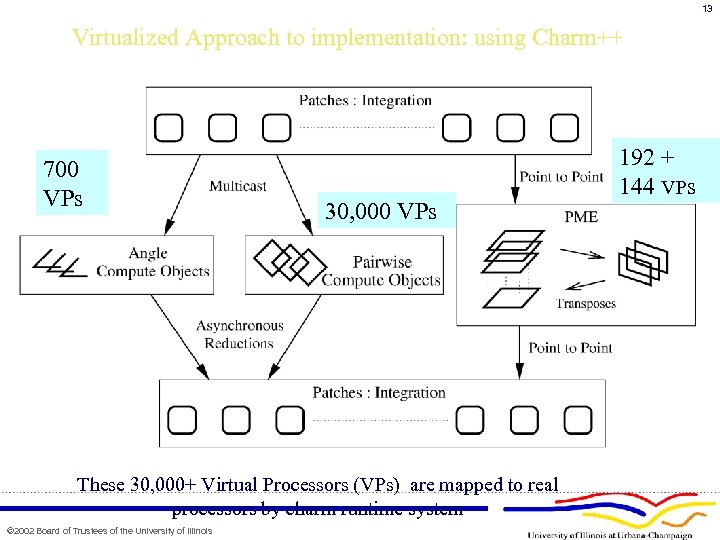

Virtualized Approach to Implementation: Using Charm++

Figure labels: 700 VPs; 30,000 VPs; 192 + 144 VPs.

These 30,000+ virtual processors (VPs) are mapped to real processors by the Charm++ runtime system.

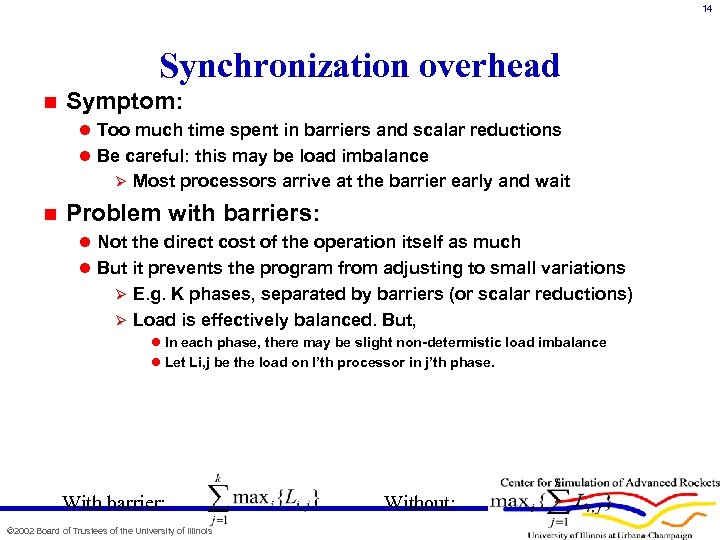

Synchronization Overhead

- Symptom:
  - Too much time spent in barriers and scalar reductions
  - Be careful: this may be load imbalance
    - Most processors arrive at the barrier early and wait
- Problem with barriers:
  - Not so much the direct cost of the operation itself
  - But it prevents the program from adjusting to small variations
    - E.g., K phases, separated by barriers (or scalar reductions)
    - Load is effectively balanced. But:
      - In each phase, there may be slight non-deterministic load imbalance
      - Let $L_{i,j}$ be the load on the $i$-th processor in the $j$-th phase.

With barrier: $T = \sum_{j} \max_{i} L_{i,j}$

Without: $T = \max_{i} \sum_{j} L_{i,j}$
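A small numeric check of the barrier argument. With a barrier after every phase, completion time is the sum over phases of that phase's slowest processor; without barriers, it is the largest per-processor total. The load matrix is made up for illustration, and the "without" figure is idealized: it ignores any data dependencies between processors.

```python
from typing import List


def time_with_barriers(load: List[List[float]]) -> float:
    """load[i][j] is L_{i,j}; each phase waits for its slowest processor."""
    num_phases = len(load[0])
    return sum(max(row[j] for row in load) for j in range(num_phases))


def time_without_barriers(load: List[List[float]]) -> float:
    """Each processor runs its phases back to back; the largest total wins."""
    return max(sum(row) for row in load)


# 3 processors x 3 phases: balanced overall, but each phase has a different straggler.
L = [
    [1.1, 1.0, 0.9],
    [0.9, 1.1, 1.0],
    [1.0, 0.9, 1.1],
]
print("with barriers:   ", time_with_barriers(L))
print("without barriers:", time_without_barriers(L))
```

Every processor does 3.0 units of work in total, but with barriers each phase costs 1.1, so the run takes about 3.3. Small random imbalances that would average out are instead summed phase by phase.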

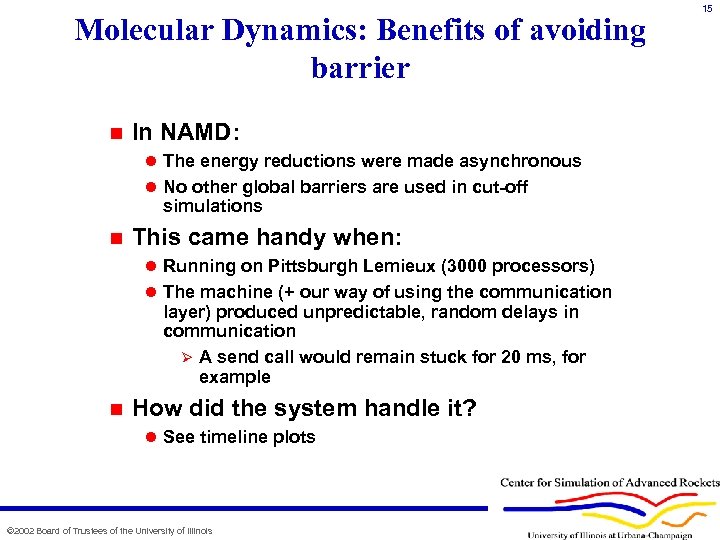

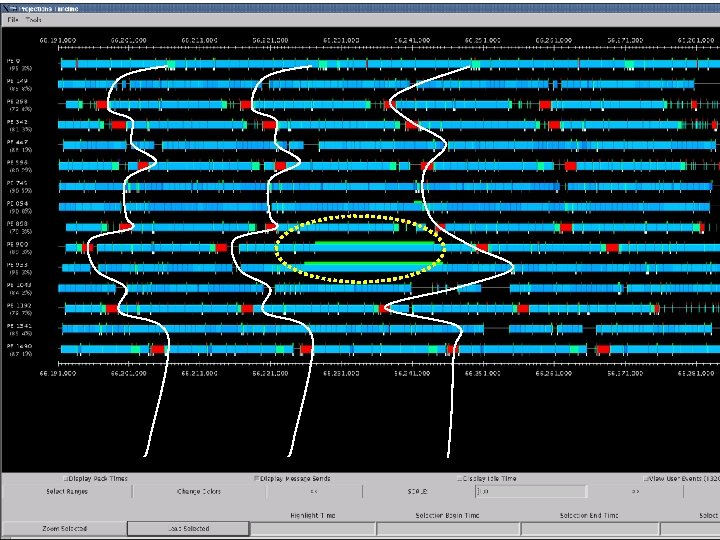

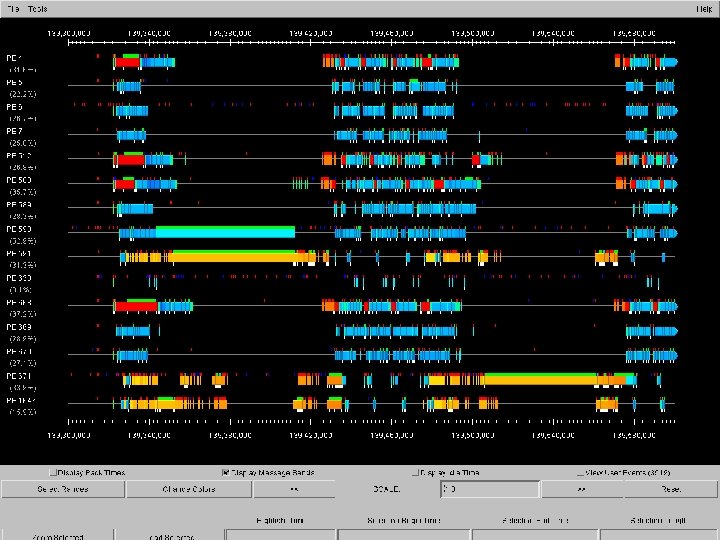

Molecular Dynamics: Benefits of Avoiding Barriers

- In NAMD:
  - The energy reductions were made asynchronous
  - No other global barriers are used in cut-off simulations
- This came in handy when:
  - Running on Pittsburgh Lemieux (3,000 processors)
  - The machine (plus our way of using the communication layer) produced unpredictable, random delays in communication
    - A send call would remain stuck for 20 ms, for example
- How did the system handle it?
  - See timeline plots


PME Parallelization

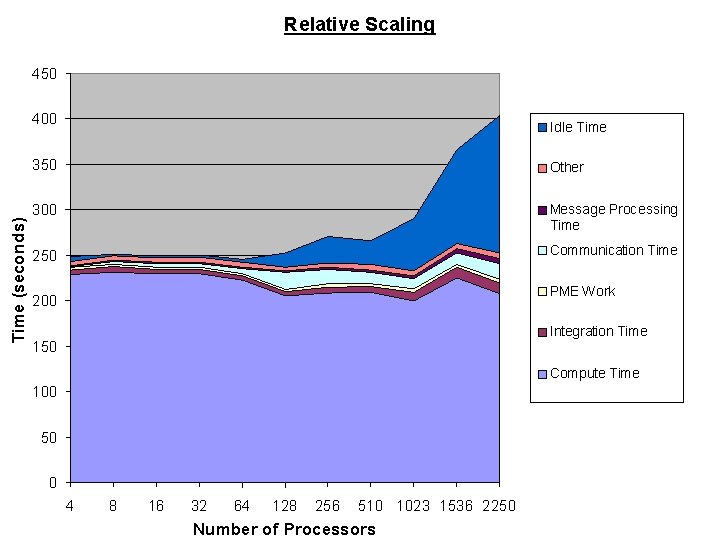

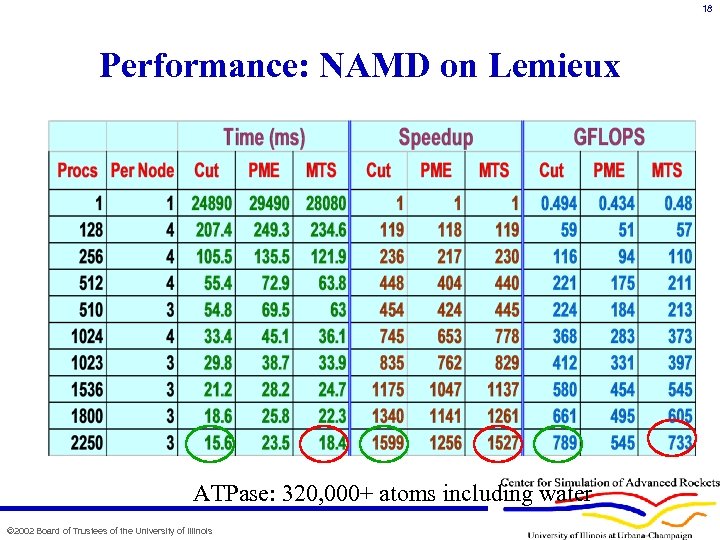

Performance: NAMD on Lemieux

ATPase: 320,000+ atoms, including water


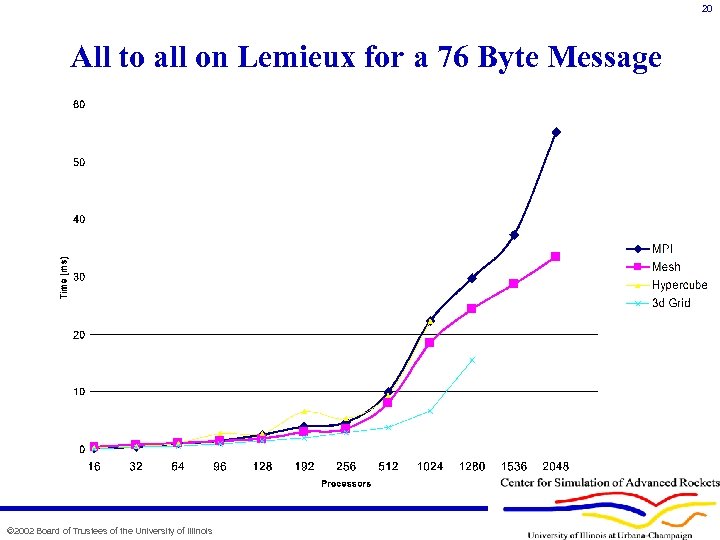

All-to-All on Lemieux for a 76-Byte Message
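For short messages such as 76 bytes, per-message overhead tends to dominate, so all-to-all schemes that combine data into fewer, larger messages can pay off. The counting model below is a simplified illustration; the three schemes (direct, 2-D virtual mesh, hypercube) are common choices assumed here, since the text does not list which algorithms were measured. `alltoall_costs` is a hypothetical helper.

```python
import math
from typing import Dict


def alltoall_costs(p: int, msg_bytes: int) -> Dict[str, Dict[str, int]]:
    """Per-processor messages sent and bytes sent for three all-to-all schemes.

    Idealized model: p must be a perfect square (mesh) and a power of two (hypercube).
    """
    side = math.isqrt(p)
    dims = p.bit_length() - 1
    assert side * side == p and 2 ** dims == p, "p must be a square power of two here"
    return {
        # Send each of the p - 1 messages straight to its destination.
        "direct": {"messages": p - 1, "bytes": (p - 1) * msg_bytes},
        # Row phase then column phase on a sqrt(p) x sqrt(p) virtual mesh;
        # each message carries sqrt(p) combined pieces, and each piece travels twice.
        "2d_mesh": {"messages": 2 * (side - 1), "bytes": 2 * (side - 1) * side * msg_bytes},
        # log2(p) pairwise exchanges, each forwarding half of the data.
        "hypercube": {"messages": dims, "bytes": dims * (p // 2) * msg_bytes},
    }


for name, cost in alltoall_costs(1024, 76).items():
    print(f"{name:9s} messages={cost['messages']:5d} bytes={cost['bytes']}")
```

On 1,024 processors the mesh scheme sends 62 messages instead of 1,023 at the price of roughly twice the bytes; the hypercube sends only 10 messages but forwards far more data. Which wins depends on the machine's per-message and per-byte costs.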

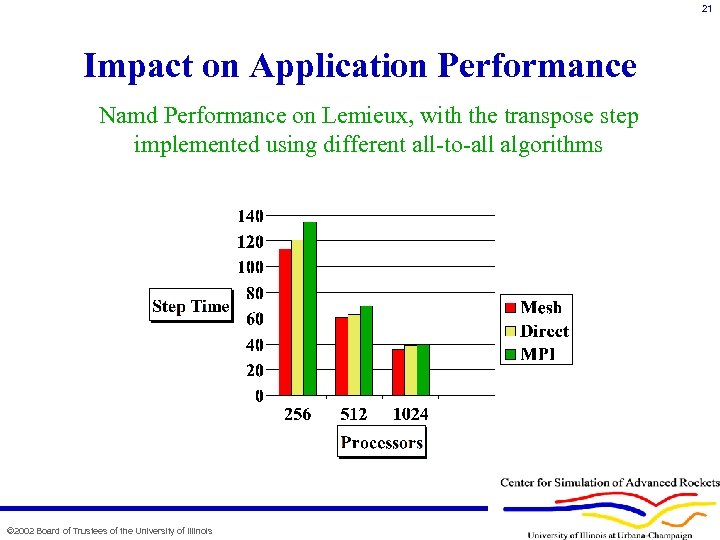

Impact on Application Performance

NAMD performance on Lemieux, with the transpose step implemented using different all-to-all algorithms

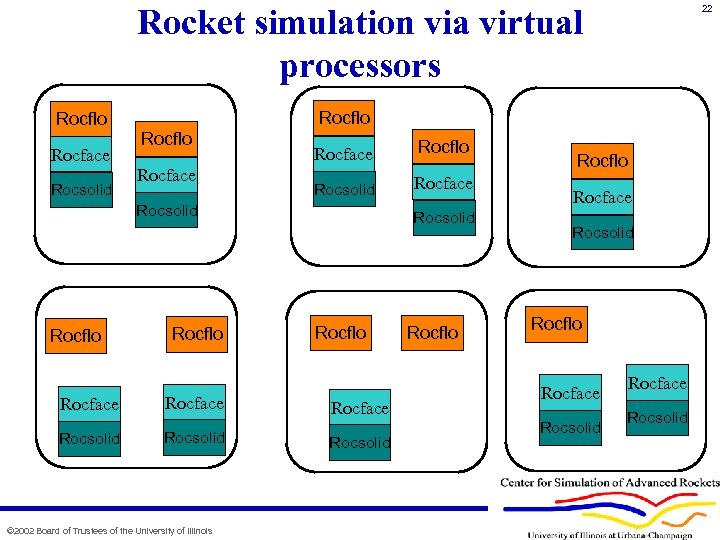

Rocket Simulation via Virtual Processors

Figure: many Rocflo, Rocface, and Rocsolid virtual processors.

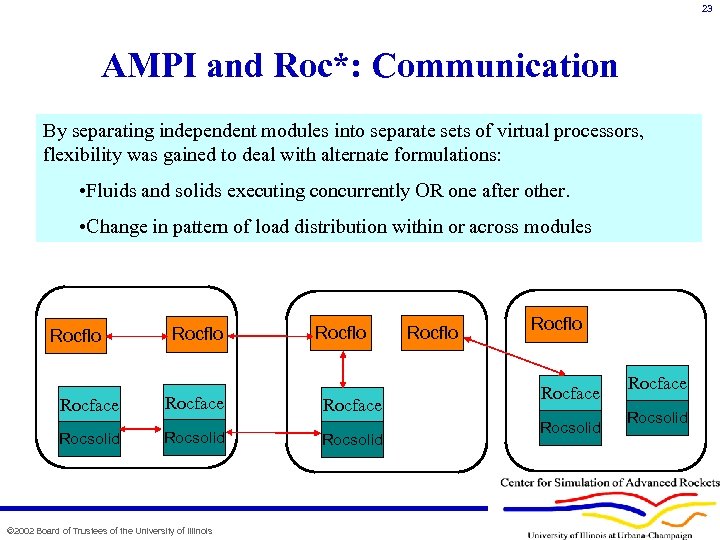

AMPI and Roc*: Communication

By separating independent modules into separate sets of virtual processors, we gained the flexibility to handle alternate formulations:

- Fluids and solids executing concurrently, or one after the other
- Changes in the pattern of load distribution within or across modules

Figure: Rocflo, Rocface, and Rocsolid virtual processors.

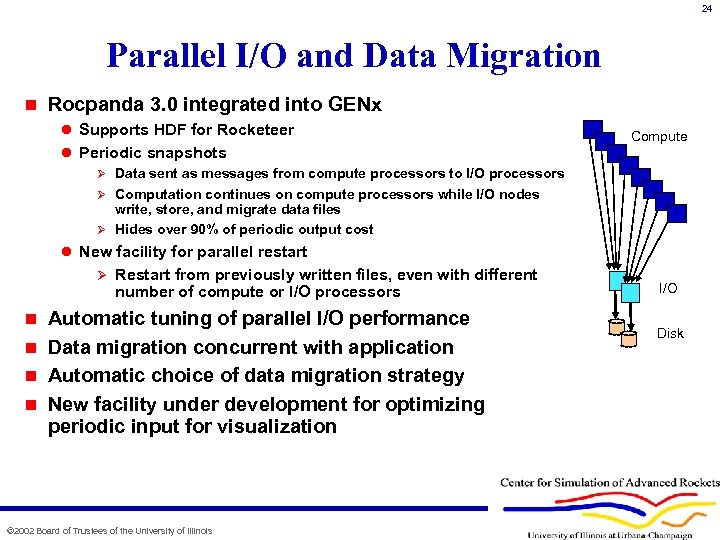

Parallel I/O and Data Migration

- Rocpanda 3.0 integrated into GENx
  - Supports HDF for Rocketeer
  - Periodic snapshots
    - Data sent as messages from compute processors to I/O processors
    - Computation continues on compute processors while I/O nodes write, store, and migrate data files
    - Hides over 90% of periodic output cost
  - New facility for parallel restart
    - Restart from previously written files, even with a different number of compute or I/O processors
- Automatic tuning of parallel I/O performance
- Data migration concurrent with application
- Automatic choice of data migration strategy
- New facility under development for optimizing periodic input for visualization

Figure: data flows from compute processors to I/O processors to disk.
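The snapshot path (compute processors hand data to I/O processors as messages and keep computing) can be sketched with a thread and a queue. This is an analogy, not Rocpanda's interface: the background thread plays the I/O processor, a dictionary stands in for HDF files, and `io_server` and `compute` are hypothetical names.

```python
import queue
import threading
from typing import Dict, List, Optional, Tuple

Snapshot = Tuple[int, List[float]]


def io_server(inbox: "queue.Queue[Optional[Snapshot]]", disk: Dict[int, List[float]]) -> None:
    """Toy I/O processor: drains snapshots and 'writes' them while compute continues."""
    while True:
        item = inbox.get()
        if item is None:            # shutdown signal from the compute side
            break
        step, data = item
        disk[step] = data           # stand-in for writing an HDF file


def compute(num_steps: int, output_every: int) -> Dict[int, List[float]]:
    inbox: "queue.Queue[Optional[Snapshot]]" = queue.Queue()
    disk: Dict[int, List[float]] = {}
    writer = threading.Thread(target=io_server, args=(inbox, disk))
    writer.start()

    state = [0.0, 0.0, 0.0]
    for step in range(1, num_steps + 1):
        state = [s + 1.0 for s in state]       # the "physics" update
        if step % output_every == 0:
            inbox.put((step, list(state)))     # send a copy and keep computing
    inbox.put(None)
    writer.join()                              # wait for outstanding I/O only at the end
    return disk


files = compute(num_steps=10, output_every=5)
print(sorted(files))
```

Sending a copy of the state is what makes overlap safe: the compute side may overwrite its arrays immediately while the writer still holds the snapshot.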

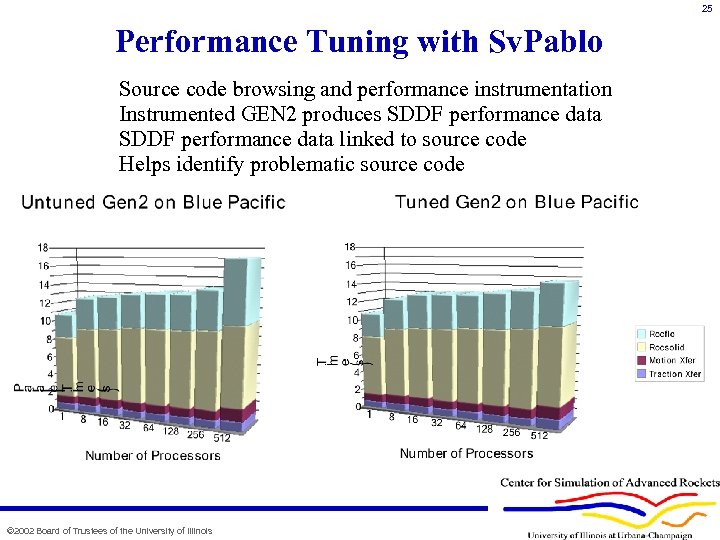

Performance Tuning with SvPablo

- Source code browsing and performance instrumentation
- Instrumented GEN 2 produces SDDF performance data linked to source code
- Helps identify problematic source code

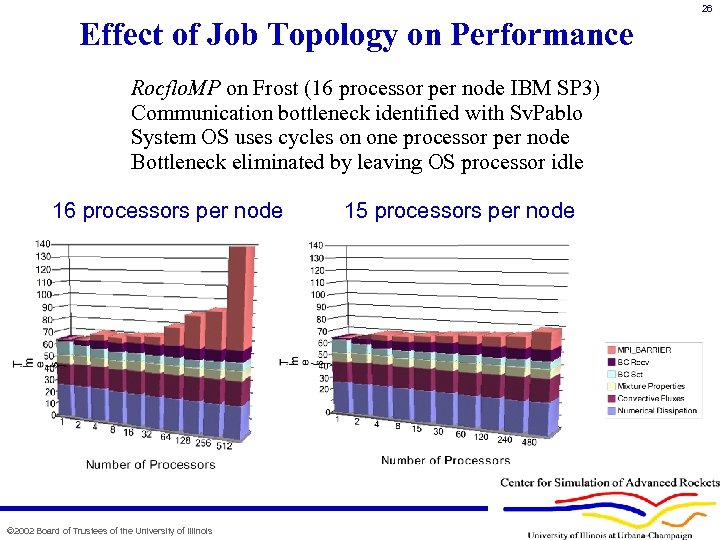

Effect of Job Topology on Performance

- Rocflo-MP on Frost (IBM SP3 with 16 processors per node)
- Communication bottleneck identified with SvPablo
- System OS uses cycles on one processor per node
- Bottleneck eliminated by leaving the OS processor idle

Figure panels: 16 processors per node; 15 processors per node.

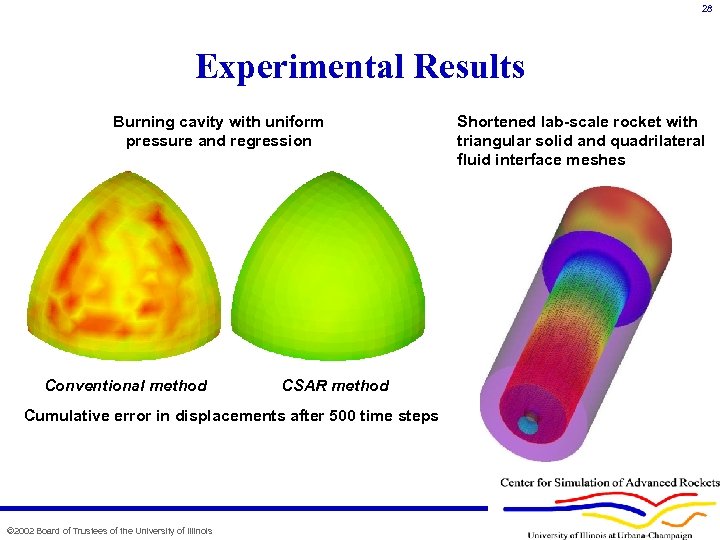

Experimental Results

- Burning cavity with uniform pressure and regression
- Shortened lab-scale rocket with triangular solid and quadrilateral fluid interface meshes

Figure: cumulative error in displacements after 500 time steps, conventional method vs. CSAR method.

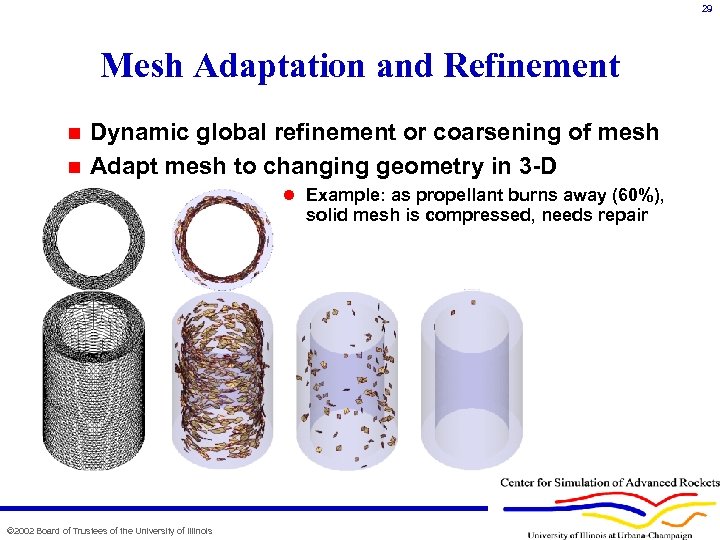

Mesh Adaptation and Refinement

- Dynamic global refinement or coarsening of mesh
- Adapt mesh to changing geometry in 3-D
  - Example: as the propellant burns away (60%), the solid mesh is compressed and needs repair

Linear Solvers and Preconditioners

- Optimally truncated GMRES method adapted for solving sequences of linear systems in time-dependent problems
- Preconditioners developed for indefinite linear systems arising in constrained problems
- Further work planned on domain decomposition and approximate inverse preconditioners
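For readers unfamiliar with GMRES, here is plain restarted GMRES(m) in pure Python, the textbook baseline that truncated variants build on. It is deliberately not the optimally truncated method named on the slide, whose truncation strategy is not described here; there is no preconditioner, and `gmres_restarted` is a hypothetical name.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]


def matvec(a: Mat, x: Vec) -> Vec:
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def norm(x: Vec) -> float:
    return math.sqrt(sum(xi * xi for xi in x))


def gmres_restarted(a: Mat, b: Vec, m: int = 2, tol: float = 1e-10,
                    max_restarts: int = 100) -> Vec:
    """Textbook restarted GMRES(m), unpreconditioned, dense, pure Python."""
    x = [0.0] * len(b)
    for _ in range(max_restarts):
        r = [bi - axi for bi, axi in zip(b, matvec(a, x))]
        beta = norm(r)
        if beta < tol:
            break
        v = [[ri / beta for ri in r]]              # orthonormal Krylov basis
        h = [[0.0] * m for _ in range(m + 1)]      # (m+1) x m Hessenberg matrix
        cs, sn = [0.0] * m, [0.0] * m              # Givens rotation coefficients
        g = [beta] + [0.0] * m                     # rotated residual vector
        k_used = 0
        for k in range(m):
            w = matvec(a, v[k])
            for j in range(k + 1):                 # Arnoldi with modified Gram-Schmidt
                h[j][k] = sum(wi * vi for wi, vi in zip(w, v[j]))
                w = [wi - h[j][k] * vi for wi, vi in zip(w, v[j])]
            h_sub = norm(w)
            h[k + 1][k] = h_sub
            for j in range(k):                     # apply earlier rotations to column k
                t = cs[j] * h[j][k] + sn[j] * h[j + 1][k]
                h[j + 1][k] = -sn[j] * h[j][k] + cs[j] * h[j + 1][k]
                h[j][k] = t
            d = math.hypot(h[k][k], h[k + 1][k])   # new rotation zeroes h[k+1][k]
            cs[k], sn[k] = h[k][k] / d, h[k + 1][k] / d
            h[k][k], h[k + 1][k] = d, 0.0
            g[k + 1] = -sn[k] * g[k]
            g[k] = cs[k] * g[k]
            k_used = k + 1
            if abs(g[k + 1]) < tol or h_sub == 0.0:  # converged or lucky breakdown
                break
            v.append([wi / h_sub for wi in w])
        y = [0.0] * k_used                         # back-substitution on R y = g
        for i in range(k_used - 1, -1, -1):
            y[i] = (g[i] - sum(h[i][j] * y[j] for j in range(i + 1, k_used))) / h[i][i]
        for j in range(k_used):
            x = [xi + y[j] * vj for xi, vj in zip(x, v[j])]
    return x


A = [[4.0, 1.0, 0.0, 0.0],
     [1.0, 4.0, 1.0, 0.0],
     [0.0, 1.0, 4.0, 1.0],
     [0.0, 0.0, 1.0, 4.0]]
b = [1.0, 2.0, 3.0, 4.0]
x = gmres_restarted(A, b, m=2)
print("residual norm:", norm([bi - axi for bi, axi in zip(b, matvec(A, x))]))
```

Restarting with a small m bounds memory and orthogonalization cost but discards the Krylov basis each cycle; deciding what to keep when truncating is exactly where more refined methods differ from this baseline.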

http://www.csar.uiuc.edu

Michael T. Heath, Director
Center for Simulation of Advanced Rockets
University of Illinois at Urbana-Champaign
2262 Digital Computer Laboratory
1304 West Springfield Avenue
Urbana, IL 61801 USA

m-heath@uiuc.edu
http://www.csar.uiuc.edu
Telephone: 217-333-6268
Fax: 217-333-1910

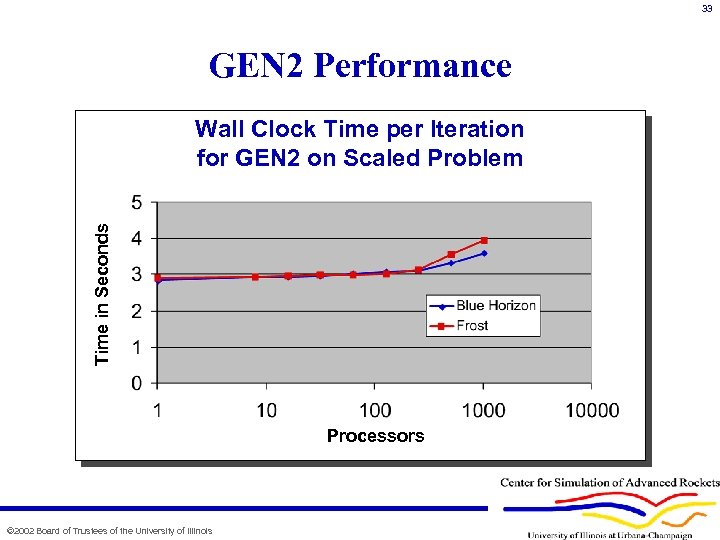

GEN 2 Performance

Figure: wall-clock time per iteration for GEN 2 on a scaled problem (x-axis: processors; y-axis: time in seconds).

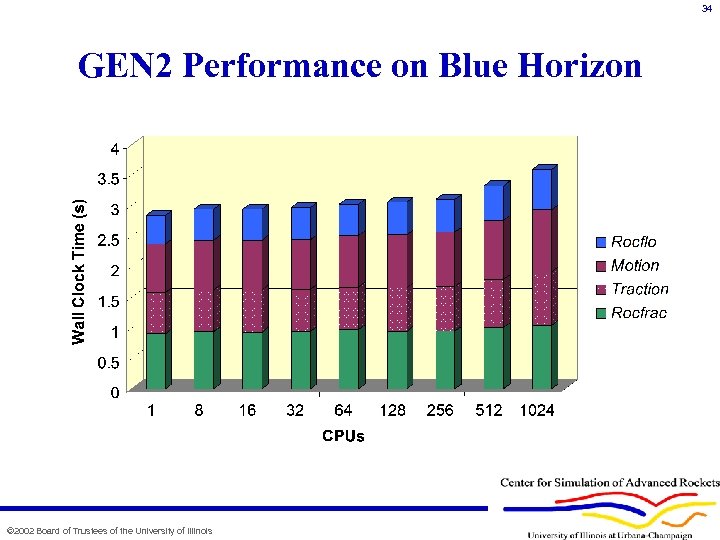

GEN 2 Performance on Blue Horizon
