
- Number of slides: 82

Computer Organization and Design: The Hardware/Software Interface
Chapter 1: Computer Abstractions and Technology
Xin LI (李新), Shandong University


Contents of Chapter 1
- 1.1 Introduction
- 1.2 Below your program
- 1.3 Under the covers
- 1.4 Performance
- 1.5 Power wall
- 1.6 The Sea Change
- 1.7 Real Stuff: Manufacturing Chips


1.1 Introduction
- Computers have led to a third revolution for civilization.
- The following applications used to be "computer science fiction":
  - Automatic teller machines
  - Computers in automobiles
  - Laptop computers
  - Human genome project
  - World Wide Web


- Tomorrow's science-fiction computer applications:
  - Cashless society
    - Digital cash, since 2004: failed
  - Automated intelligent highways
    - ITS (intelligent transportation systems), since 2003: failed
  - Genuinely ubiquitous computing
    - Embedded systems, since 1999?
    - Will mobile phones have a thousand cores? GPUs already have 1600 cores.
  - Cloud computing


- The influence of hardware on software
  - In the past:
    - Memory size was very small.
    - Programmers had to minimize memory space to make programs fast.
  - Nowadays:
    - Memories are hierarchical.
    - Processors are parallel.
    - Programmers must understand computer organization more deeply.


Computer major
- Theory/Software: algorithms, language principles, …
- Hardware/System: organization, architecture, …
- Application: database, Web, embedded systems, graphics, …
- SCI categories:
  - HARDWARE & ARCHITECTURE
  - ARTIFICIAL INTELLIGENCE
  - CYBERNETICS
  - INFORMATION SYSTEMS
  - INTERDISCIPLINARY APPLICATIONS
  - SOFTWARE ENGINEERING
  - THEORY & METHODS


Hardware vs. Software
- Which develops faster?
  - Round 1: hardware wins (machine code/ASM)
  - Round 2: software wins (C/C++/Java)
  - Round 3: hardware wins (multicore/manycore)
  - Round 4: ?
- Why do we need to learn hardware?
  - CS vs. EE
  - What is the difference between a professionally trained person and someone from another major?
    - Programming skill?
    - Tools?
  - What is the barrier to entry when non-computer-major students work in IT?


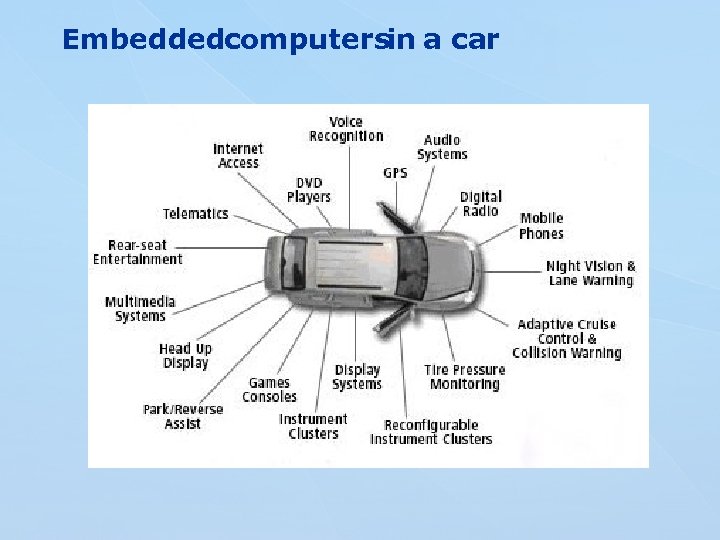

Classes of Computer Applications
- Personal computer
  - E.g., desktop, laptop
- Server
  - High performance
  - E.g., mainframes, minicomputers, supercomputers, data centers
  - Applications: WWW, search engines, weather forecasting
- Embedded computers
  - A computer system with a dedicated function within a larger mechanical or electrical system
  - E.g., cell phones, microprocessors in cars and televisions


Embedded computers in a car


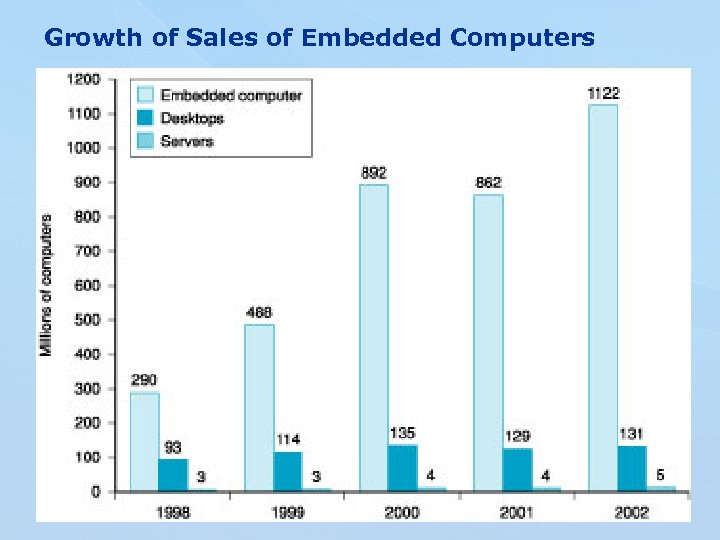

Growth of Sales of Embedded Computers


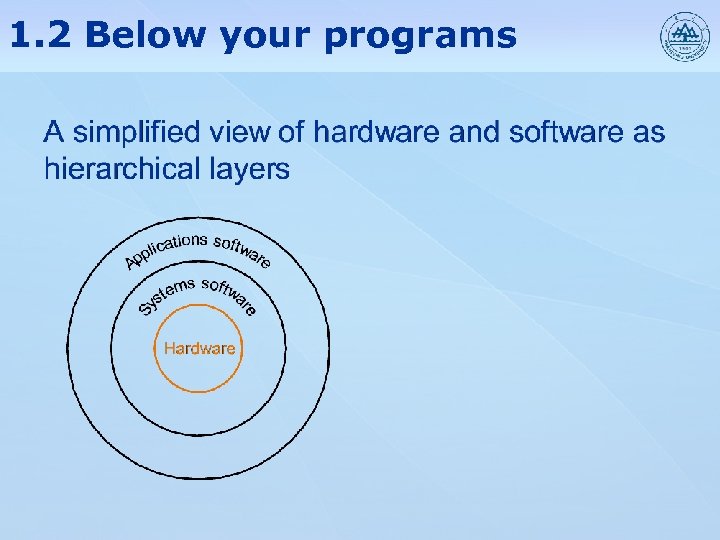

1.2 Below your program


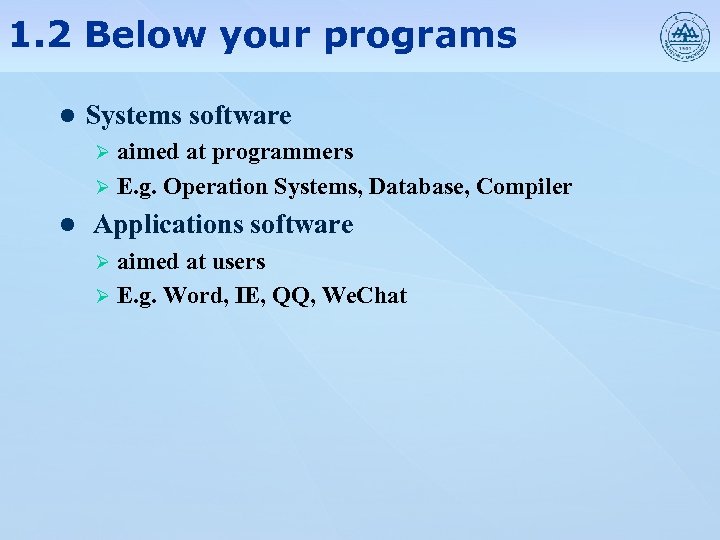

1.2 Below your program
- Systems software: aimed at programmers
  - E.g., operating systems, databases, compilers
- Applications software: aimed at users
  - E.g., Word, IE, QQ, WeChat


Computer Language and Software System
- Computer language
  - Computers understand only electrical signals.
  - The easiest signals: on and off.
  - Binary numbers express machine instructions, e.g., 1000110010100000 means to add two numbers.
  - Very tedious to write.
- Assembly language
  - Symbolic notation, e.g., add a, b, c  # a = b + c
  - The assembler translates it into machine instructions.
  - Programmers have to think like the machine.


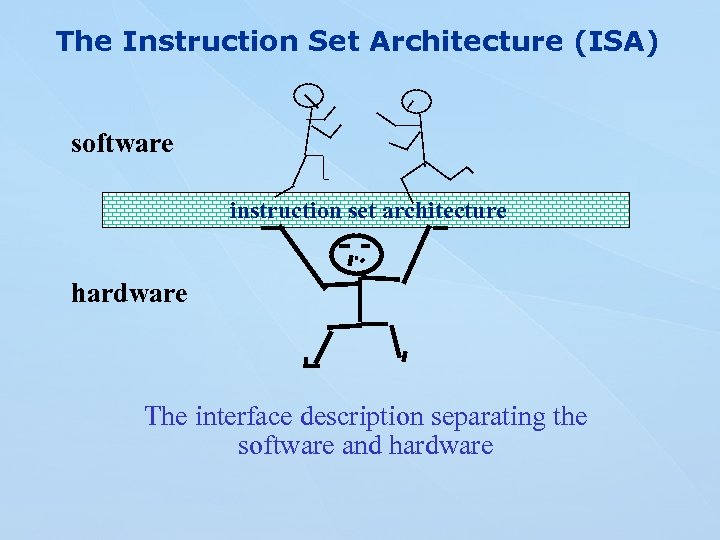

The Instruction Set Architecture (ISA)
- The interface description separating the software and the hardware.
(Figure: software on top, the instruction set architecture in the middle, hardware below.)


- High-level programming language
  - Notation closer to natural language.
  - The compiler translates it into assembly language statements.
  - Advantages over assembly language:
    - Programmers can think in a more natural language.
    - Improved programming productivity.
    - Programs can be independent of the hardware.
  - Subroutine libraries: reusing programs.
- Which one is faster: ASM, C, C++, or Java?
  - Generally, the lower the level, the faster.


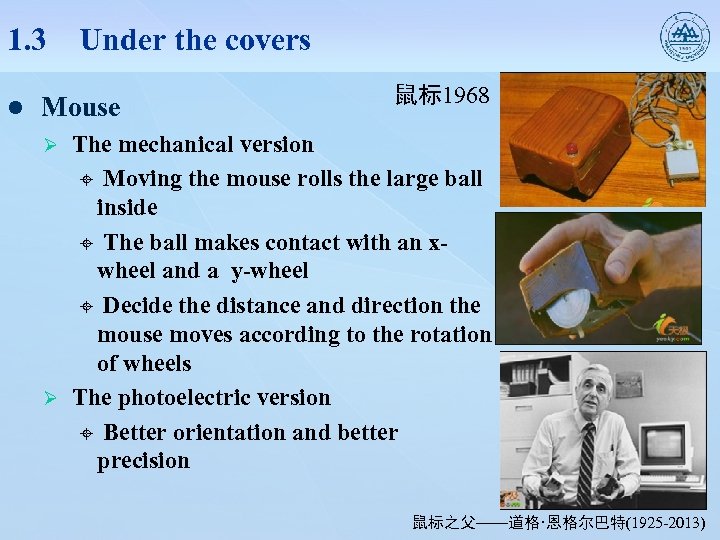

1.3 Under the covers
- Mouse (1968)
  - The mechanical version
    - Moving the mouse rolls a large ball inside it.
    - The ball makes contact with an x-wheel and a y-wheel.
    - The distance and direction the mouse moves are determined from the rotation of the wheels.
  - The photoelectric (optical) version
    - Better orientation and better precision.
- Father of the mouse: Doug Engelbart (1925-2013)


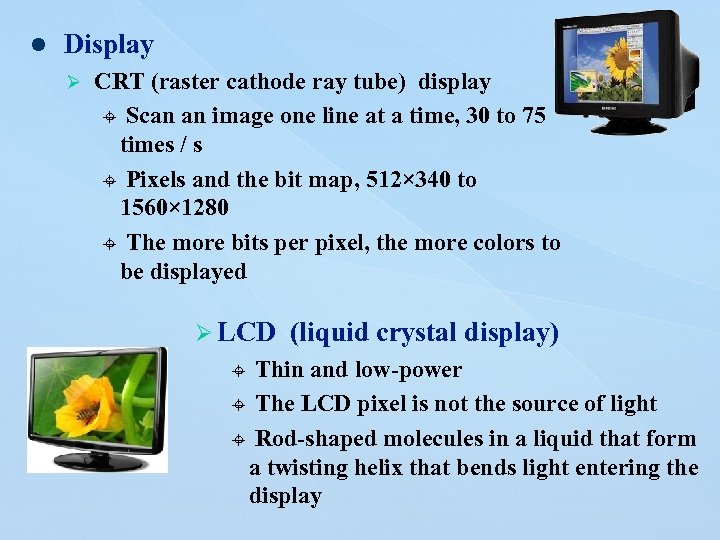

- Display
  - CRT (raster cathode ray tube) display
    - Scans an image one line at a time, 30 to 75 times per second.
    - Pixels and the bit map: from 512 × 340 to 1560 × 1280 pixels.
    - The more bits per pixel, the more colors can be displayed.
  - LCD (liquid crystal display)
    - Thin and low-power.
    - The LCD pixel is not the source of light.
    - Rod-shaped molecules in a liquid form a twisting helix that bends light entering the display.


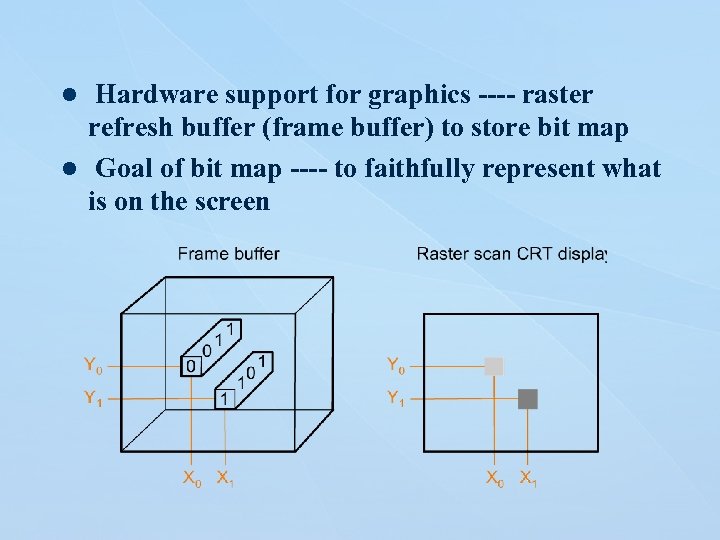

- Hardware support for graphics: a raster refresh buffer (frame buffer) stores the bit map.
- Goal of the bit map: to faithfully represent what is on the screen.


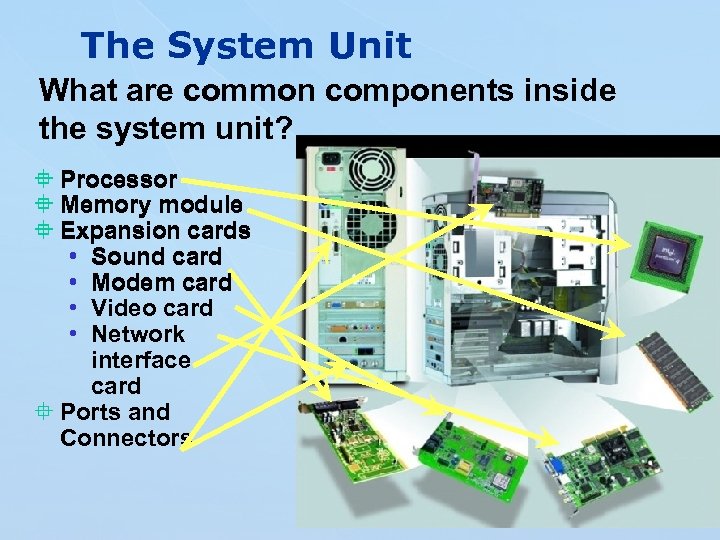

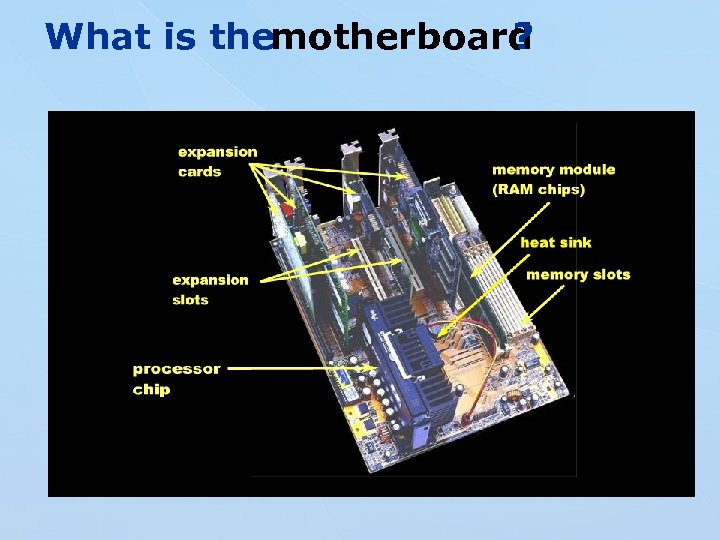

The System Unit
What are the common components inside the system unit?
- Processor
- Memory module
- Expansion cards
  - Sound card
  - Modem card
  - Video card
  - Network interface card
- Ports and connectors


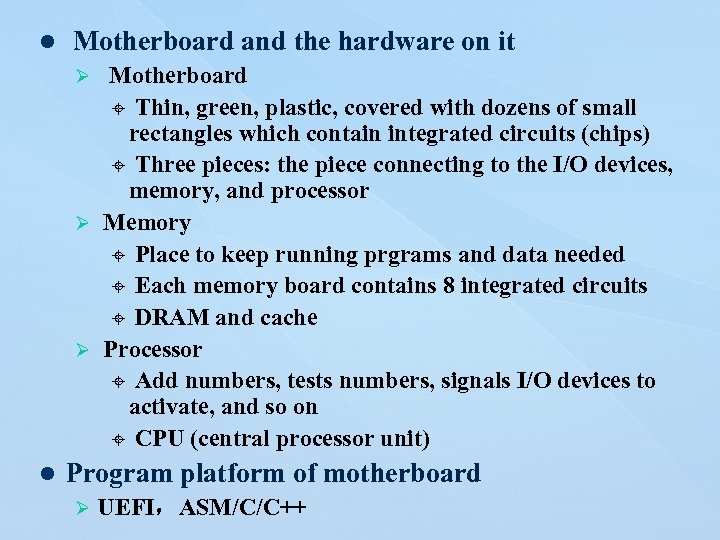

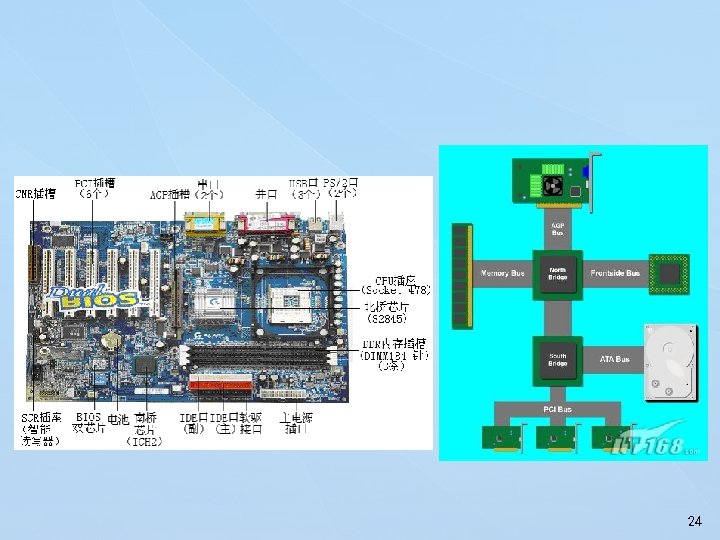

- Motherboard and the hardware on it
  - Motherboard
    - Thin, green, and plastic, covered with dozens of small rectangles that contain integrated circuits (chips).
    - Three pieces: the piece connecting to the I/O devices, the memory, and the processor.
  - Memory
    - The place where running programs and the data they need are kept.
    - Each memory board contains 8 integrated circuits.
    - DRAM and cache.
  - Processor
    - Adds numbers, tests numbers, signals I/O devices to activate, and so on.
    - CPU (central processor unit).
- Programming platform of the motherboard: UEFI, ASM/C/C++


What is the motherboard?


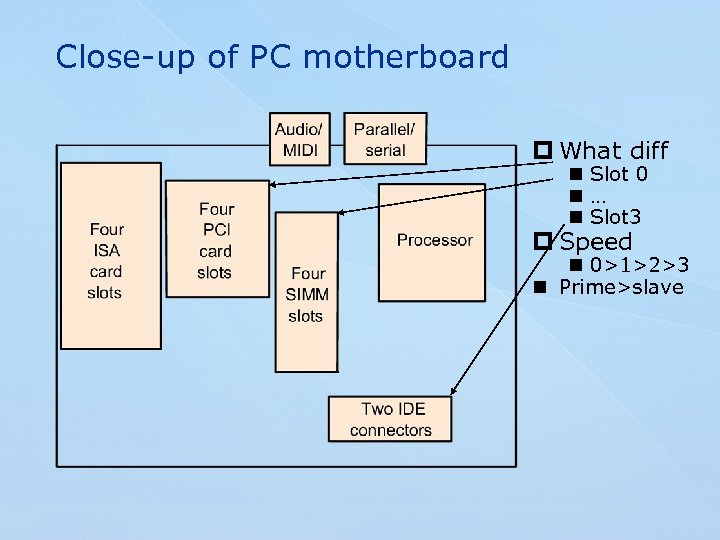

Close-up of a PC motherboard
- What is the difference between the slots?
  - Slot 0
  - …
  - Slot 3
- Speed
  - 0 > 1 > 2 > 3
  - Primary > slave



Labels: CPU, memory module, CPU cooling fan, power supply, computer case


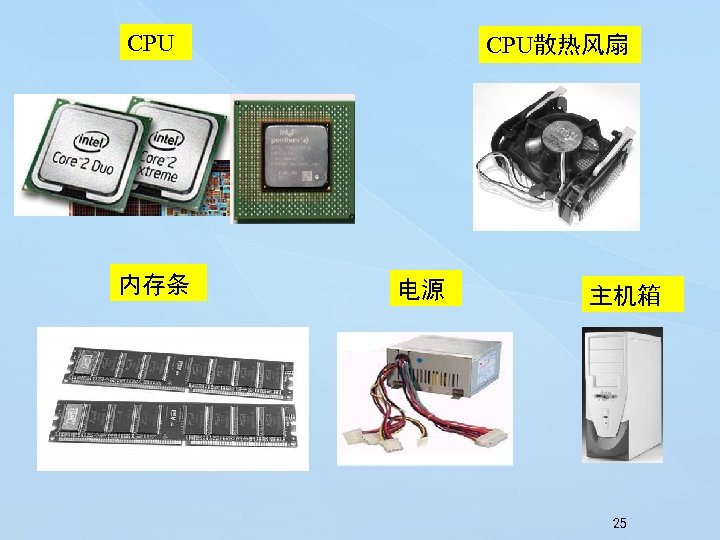

Labels: floppy drive, graphics card, hard disk, optical drive, sound card


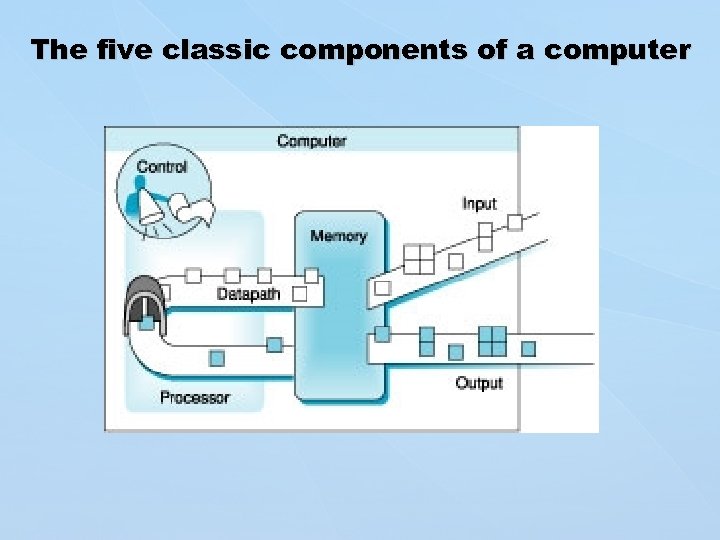

The five classic components of a computer


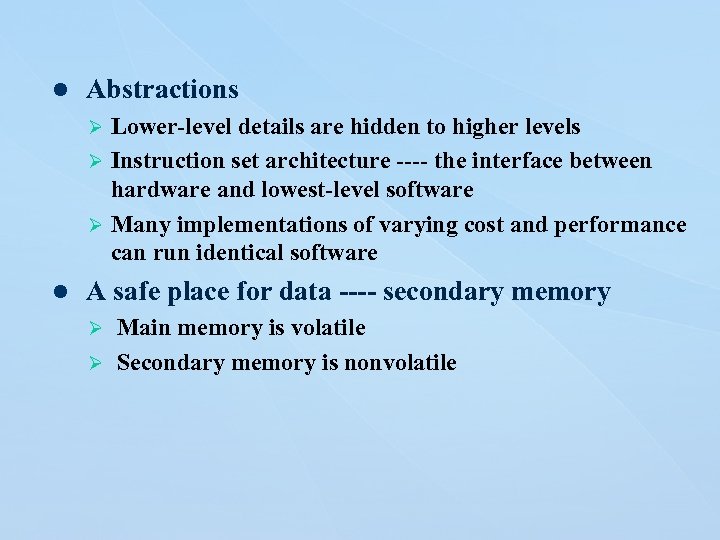

- Abstractions
  - Lower-level details are hidden from higher levels.
  - Instruction set architecture: the interface between the hardware and the lowest-level software.
  - Many implementations of varying cost and performance can run identical software.
- A safe place for data: secondary memory
  - Main memory is volatile.
  - Secondary memory is nonvolatile.


Below the Program
- High-level language program (in C): `swap(int v[], int k)` . . .
- The C compiler translates it into an assembly language program (for MIPS).
- The assembler translates that into machine (object) code (for MIPS):
  - `swap: sll $2, $5, 2` → `000000 00000 00101 0001000010000000`
  - `add $2, $4, $2` → `000000 00100 00010 0001000000100000`
  - `lw $15, 0($2)` → `100011 00010 01111 0000000000000000`
  - `lw $16, 4($2)` → `100011 00010 10000 0000000000000100`
  - `sw $16, 0($2)` → `101011 00010 10000 0000000000000000`
  - `sw $15, 4($2)` → `101011 00010 01111 0000000000000100`
  - `jr $31` → `000000 11111 00000 0000000000001000`


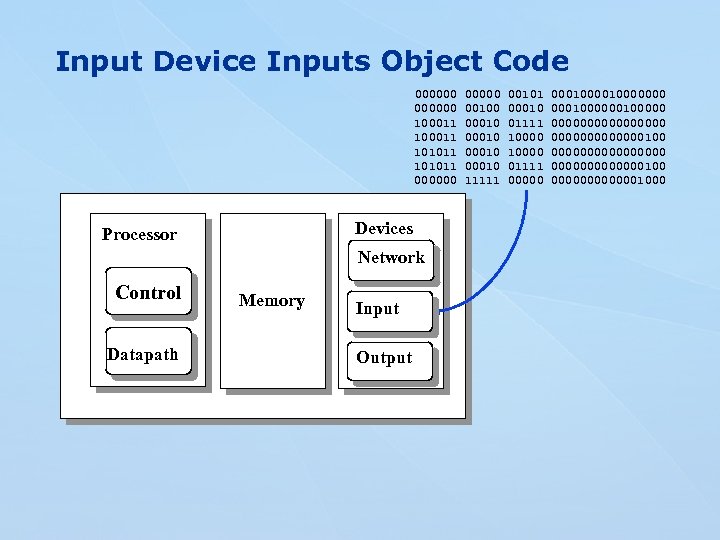

Input Device Inputs Object Code
(Figure: the object code enters the computer through an input device. Diagram blocks: Processor (Control, Datapath), Memory, Devices (Input, Output), Network.)


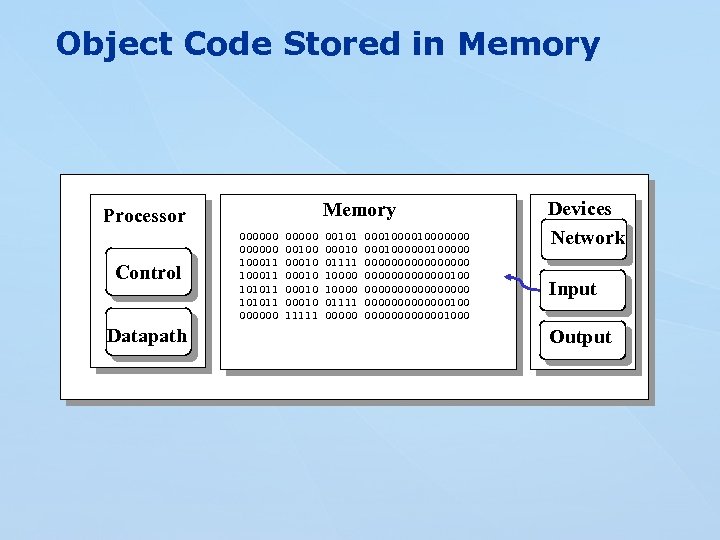

Object Code Stored in Memory
(Figure: the same diagram, with the object code now held in Memory.)


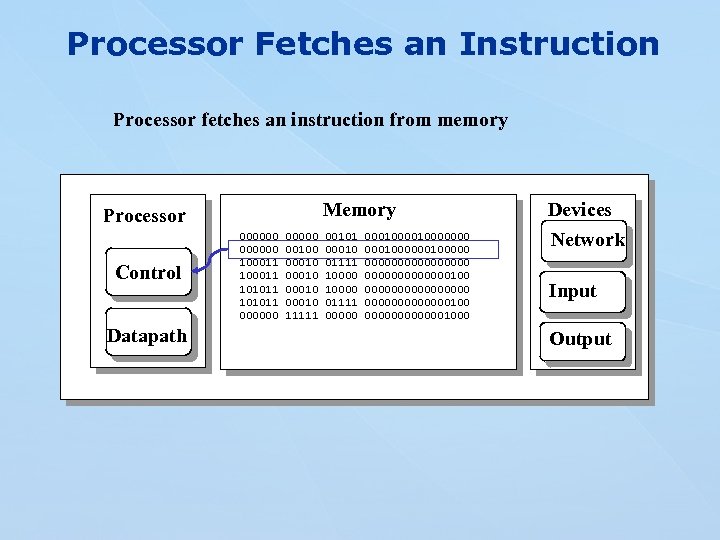

Processor Fetches an Instruction
- The processor fetches an instruction from memory.


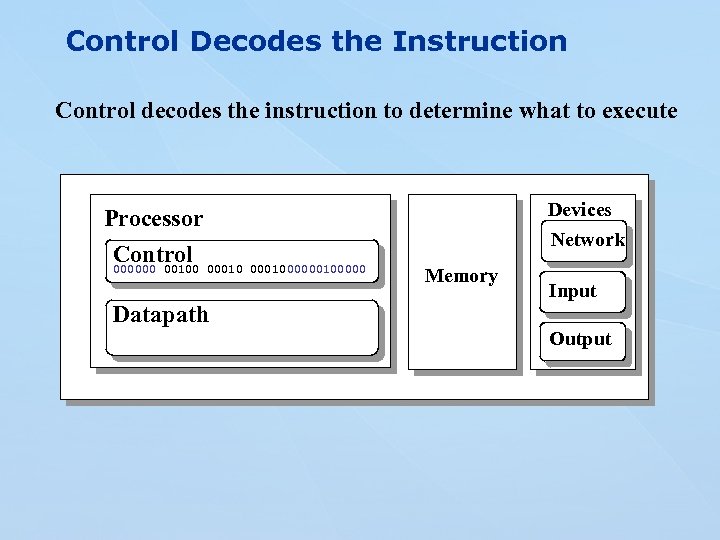

Control Decodes the Instruction
- Control decodes the instruction to determine what to execute.
(Figure: the fetched instruction, 000000 00100 00010 0001000000100000, inside Control.)


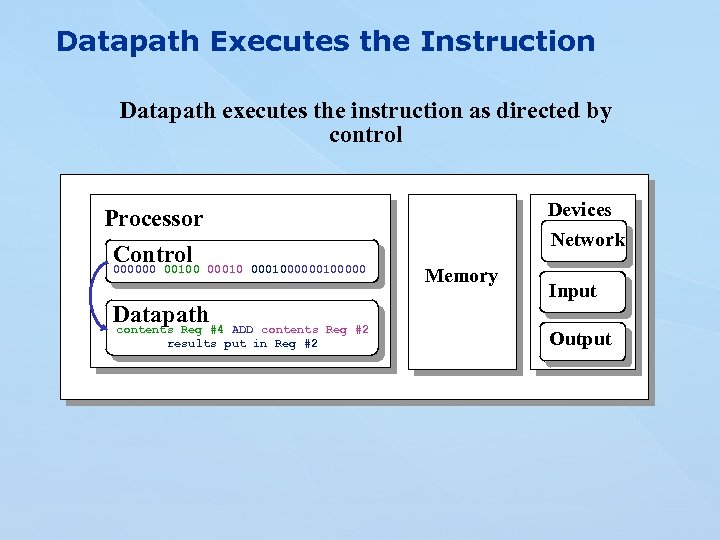

Datapath Executes the Instruction
- The datapath executes the instruction as directed by control: it adds the contents of Reg #4 and Reg #2 and puts the result in Reg #2.


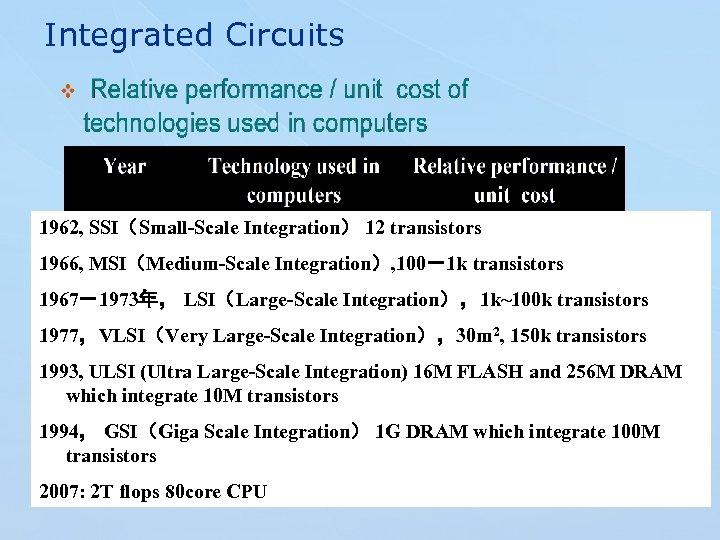

Integrated Circuits
- 1962: SSI (Small-Scale Integration), 12 transistors
- 1966: MSI (Medium-Scale Integration), 100 to 1 k transistors
- 1967-1973: LSI (Large-Scale Integration), 1 k to 100 k transistors
- 1977: VLSI (Very Large-Scale Integration), 150 k transistors on a 30 mm² chip
- 1993: ULSI (Ultra Large-Scale Integration), 16 M flash and 256 M DRAM chips integrating 10 M transistors
- 1994: GSI (Giga-Scale Integration), 1 G DRAM integrating 100 M transistors
- 2007: an 80-core CPU delivering 2 TFLOPS


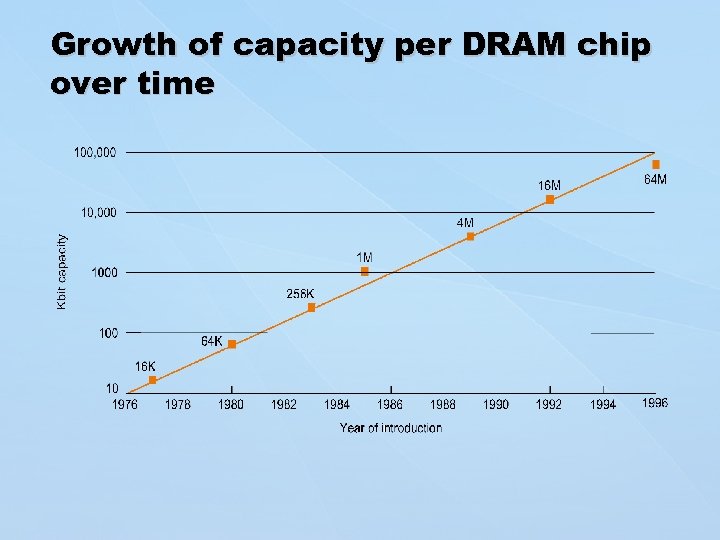

Growth of capacity per DRAM chip over time


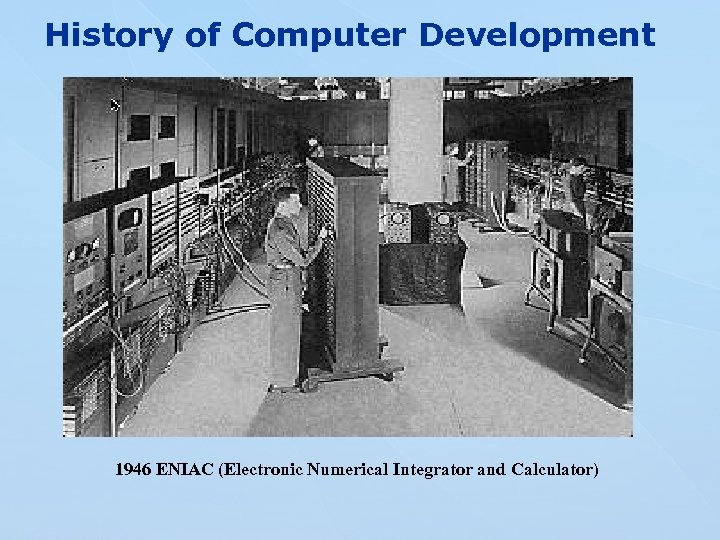

History of Computer Development: 1946, ENIAC (Electronic Numerical Integrator and Computer)


History of Computer Development
- The first electronic computers
  - ENIAC (Electronic Numerical Integrator and Computer)
    - Built by J. Presper Eckert and John Mauchly
    - Publicly known in 1946
    - 30 tons, 80 feet long, 8.5 feet high, several feet wide
    - 18,000 vacuum tubes
  - EDVAC (Electronic Discrete Variable Automatic Computer)
    - John von Neumann's memo about the stored-program computer
    - The von Neumann computer


- EDSAC (Electronic Delay Storage Automatic Calculator)
  - Operational in 1949
  - The world's first full-scale, operational, stored-program computer
- John Atanasoff's small-scale electronic computer in the early 1940s
- A special-purpose machine by Konrad Zuse in Germany
- Colossus, built in 1943
- Harvard architecture
- Whirlwind project


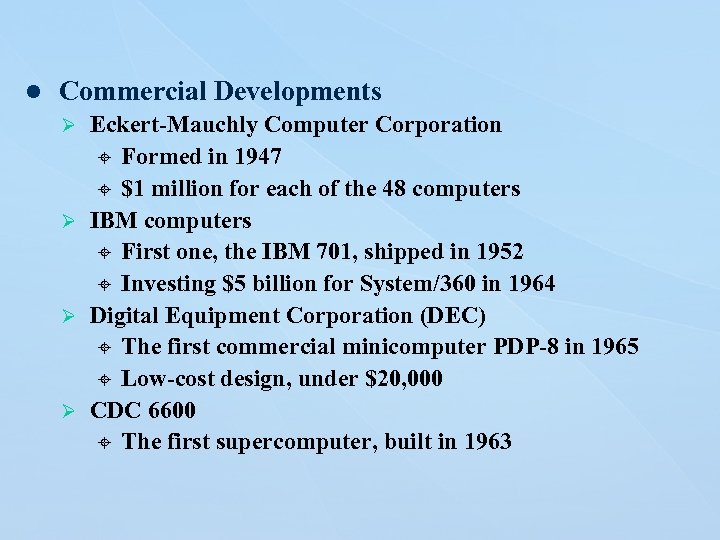

- Commercial developments
  - Eckert-Mauchly Computer Corporation
    - Formed in 1947
    - $1 million for each of its 48 computers
  - IBM computers
    - The first one, the IBM 701, shipped in 1952
    - Invested $5 billion in System/360 in 1964
  - Digital Equipment Corporation (DEC)
    - The first commercial minicomputer, the PDP-8, in 1965
    - Low-cost design, under $20,000
  - CDC 6600
    - The first supercomputer, built in 1963


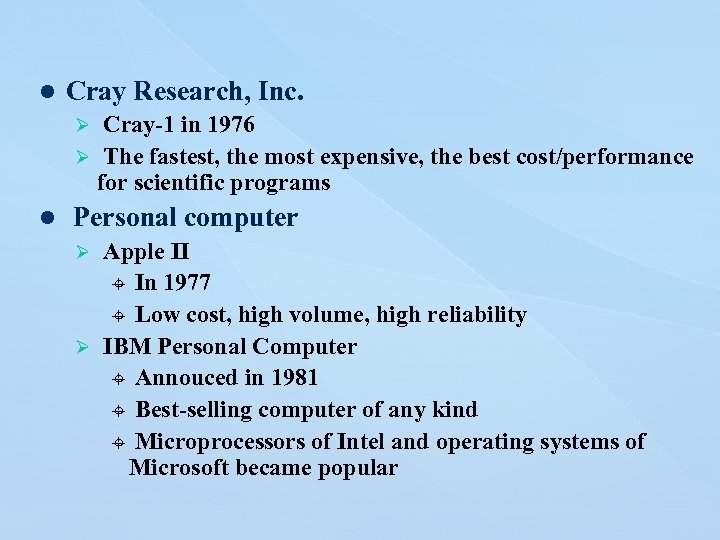

- Cray Research, Inc.
  - Cray-1 in 1976
  - The fastest, the most expensive, and the best cost/performance for scientific programs
- Personal computers
  - Apple II
    - In 1977
    - Low cost, high volume, high reliability
  - IBM Personal Computer
    - Announced in 1981
    - Best-selling computer of any kind
    - Made Intel microprocessors and Microsoft operating systems popular


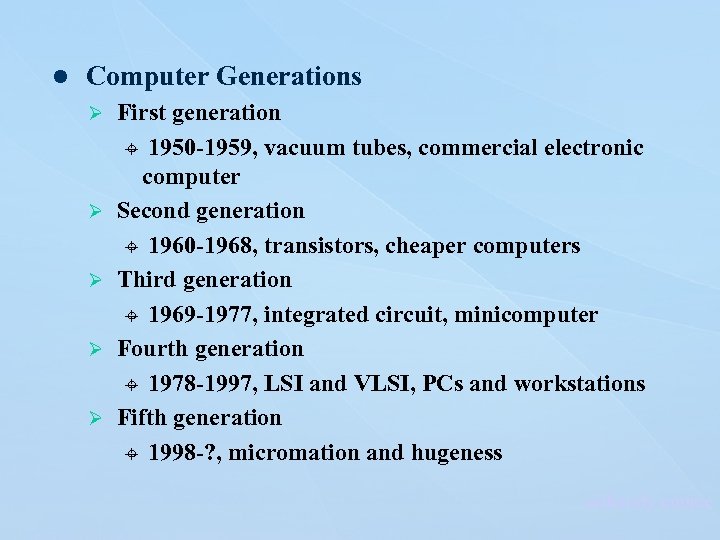

- Computer generations
  - First generation: 1950-1959, vacuum tubes, commercial electronic computers
  - Second generation: 1960-1968, transistors, cheaper computers
  - Third generation: 1969-1977, integrated circuits, minicomputers
  - Fourth generation: 1978-1997, LSI and VLSI, PCs and workstations
  - Fifth generation: 1998-?, miniaturization and massive scale
- (Self-study.)


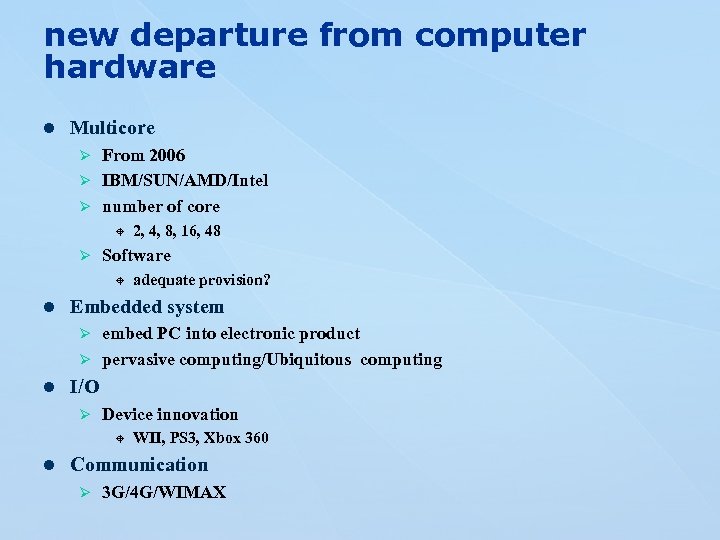

New Directions in Computer Hardware
- Multicore
  - Since 2006
  - IBM/Sun/AMD/Intel
  - Number of cores: 2, 4, 8, 16, 48
  - Software: is there adequate support?
- Embedded systems
  - Embedding a PC into electronic products
  - Pervasive computing/ubiquitous computing
- I/O
  - Device innovation: Wii, PS3, Xbox 360
  - Communication: 3G/4G/WiMAX


Computer Performance Tools
- CPU, memory, disk
- Task Manager, Services, System Information


1.4 Performance metrics
- Response time (also wall-clock time or elapsed time): the time between the start and the completion of an event.
- Execution time: the time the CPU spends computing, not including time spent waiting.
- Throughput: the total amount of work done in a given time.


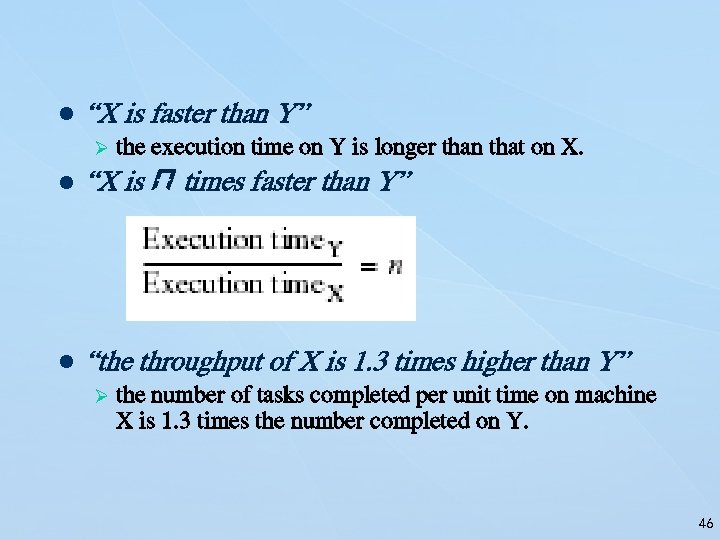

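- "X is faster than Y": the execution time on Y is longer than that on X.
- "X is n times faster than Y": $\text{Execution time}_Y / \text{Execution time}_X = n$, or equivalently $\text{Performance}_X / \text{Performance}_Y = n$, where $\text{Performance} = 1 / \text{Execution time}$.
- "The throughput of X is 1.3 times higher than Y": the number of tasks completed per unit time on machine X is 1.3 times the number completed on Y.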


Example
- If computer A runs a program in 10 seconds and computer B runs the same program in 15 seconds, how much faster is A than B?
- The performance ratio is $15/10 = 1.5$, so A is 1.5 times faster than B.


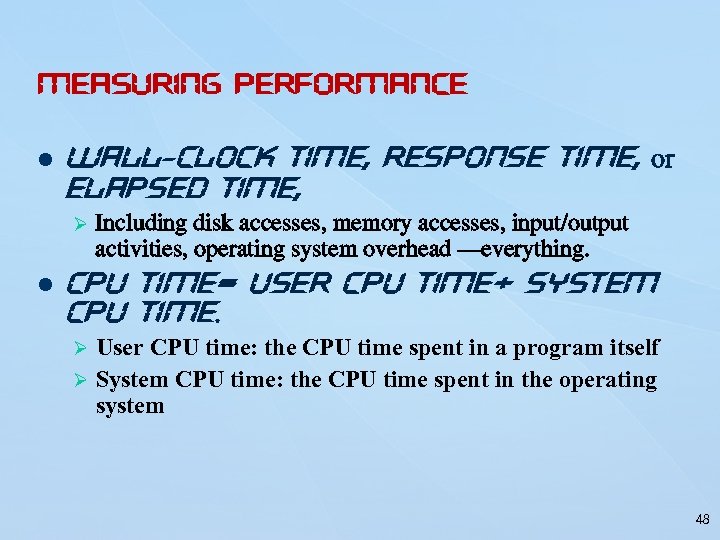

Measuring Performance
- Wall-clock time (response time, elapsed time): includes disk accesses, memory accesses, input/output activities, and operating system overhead: everything.
- CPU time = user CPU time + system CPU time
  - User CPU time: the CPU time spent in the program itself.
  - System CPU time: the CPU time spent in the operating system performing tasks on behalf of the program.


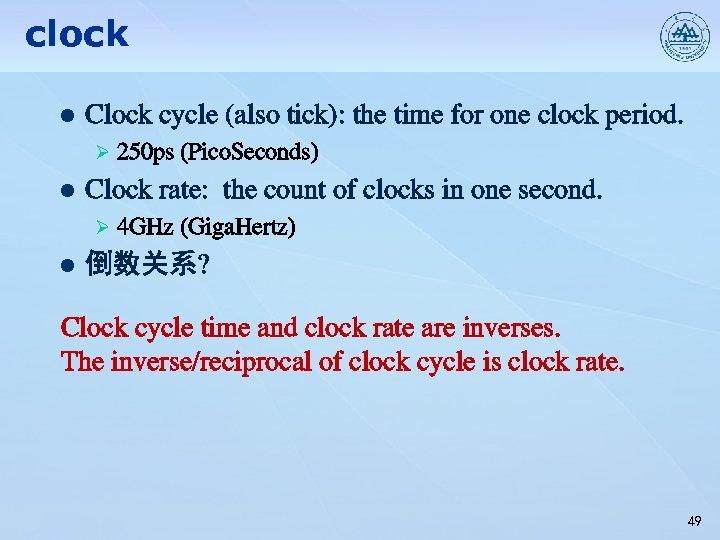

Clock
- Clock cycle (also tick or clock period): the time for one clock period, e.g., 250 ps (picoseconds).
- Clock rate: the number of clock cycles per second, e.g., 4 GHz (gigahertz).
- Clock cycle time and clock rate are inverses: the reciprocal of the clock cycle time is the clock rate.
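Worked with the two values on this slide, the reciprocal relationship reads:

$$
\text{Clock rate} = \frac{1}{\text{Clock cycle time}} = \frac{1}{250 \times 10^{-12}\ \text{s}} = 4 \times 10^{9}\ \text{Hz} = 4\ \text{GHz}
$$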


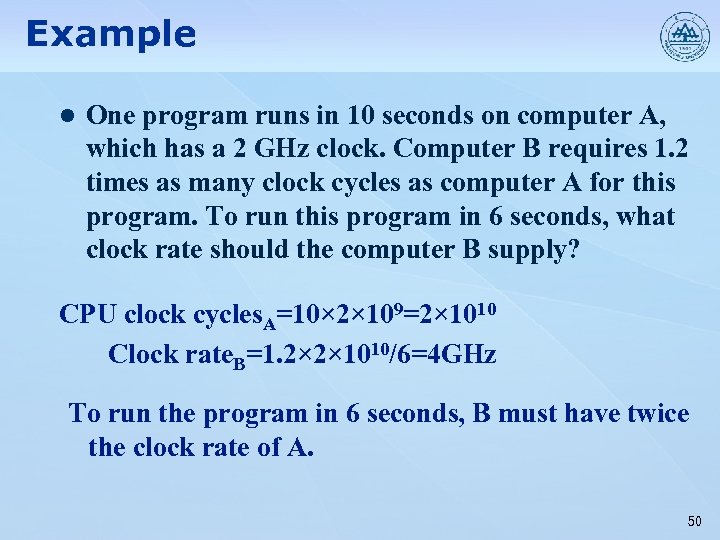

Example
- One program runs in 10 seconds on computer A, which has a 2 GHz clock. Computer B requires 1.2 times as many clock cycles as computer A for this program. To run this program in 6 seconds, what clock rate must computer B supply?
- $\text{CPU clock cycles}_A = 10\ \text{s} \times 2 \times 10^{9}\ \text{Hz} = 2 \times 10^{10}$
- $\text{Clock rate}_B = \dfrac{1.2 \times 2 \times 10^{10}}{6\ \text{s}} = 4 \times 10^{9}\ \text{Hz} = 4\ \text{GHz}$
- To run the program in 6 seconds, B must have twice the clock rate of A.


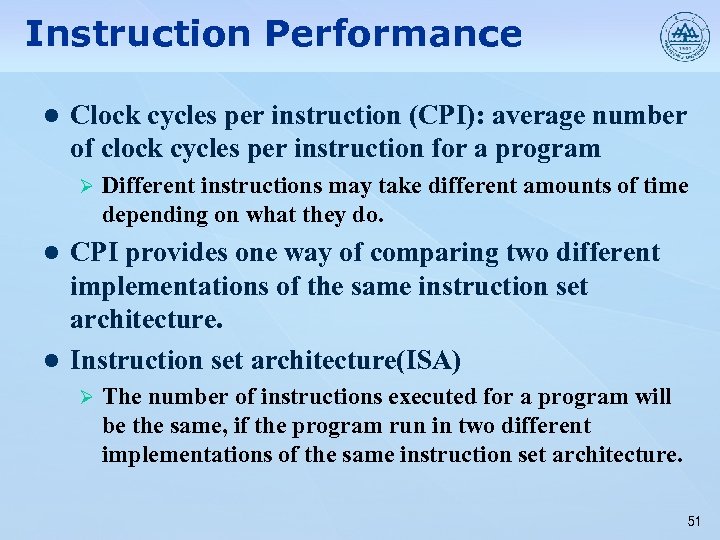

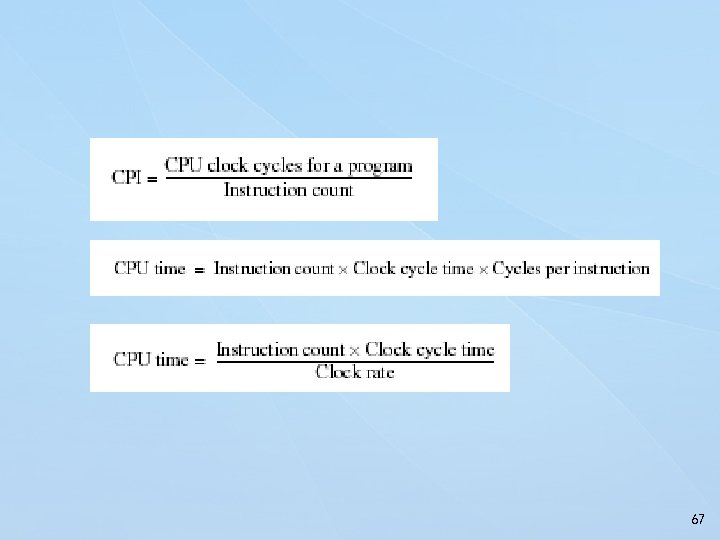

Instruction Performance
- Clock cycles per instruction (CPI): the average number of clock cycles per instruction for a program.
  - Different instructions may take different amounts of time depending on what they do.
  - CPI provides one way of comparing two different implementations of the same instruction set architecture.
- Instruction set architecture (ISA)
  - If a program runs on two different implementations of the same instruction set architecture, the number of instructions executed is the same.


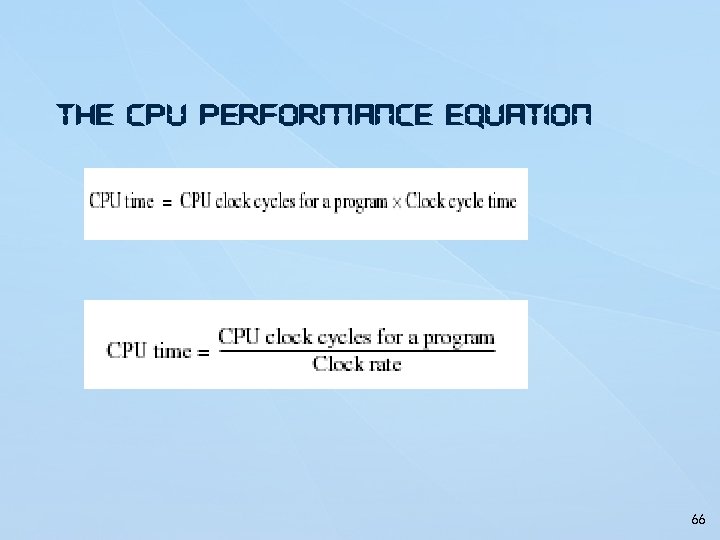

CPU Performance Equation
- $\text{CPU time} = \text{Instruction count} \times \text{CPI} \times \text{Clock cycle time}$
- $\text{CPU time} = \text{Instruction count} \times \text{CPI} / \text{Clock rate}$


Example
- Suppose we have two implementations of the same instruction set architecture. Computer A has a clock cycle time of 250 ps and a CPI of 2.0 for some program, and computer B has a clock cycle time of 500 ps and a CPI of 1.2 for the same program. Which computer is faster for this program, and by how much?
- Let $I$ be the number of instructions for the program.
- $\text{CPU time}_A = I \times 2.0 \times 250\ \text{ps} = 500 \times I\ \text{ps}$
- $\text{CPU time}_B = I \times 1.2 \times 500\ \text{ps} = 600 \times I\ \text{ps}$
- Since $600 / 500 = 1.2$, computer A is 1.2 times as fast as computer B for this program.


Choosing Programs to Evaluate Performance
- Five levels of programs:
  - Real applications
  - Modified (or scripted) applications
  - Kernels
  - Toy benchmarks
  - Synthetic benchmarks


- Desktop benchmarks
  - CPU-intensive benchmarks: SPEC89, SPEC92, SPEC95, SPEC2000, SPEC2006
  - Graphics-intensive benchmarks (SPEC2000):
    - SPECviewperf: used for benchmarking systems supporting the OpenGL graphics library.
    - SPECapc: consists of applications that make extensive use of graphics.


- Server benchmarks
  - SPECrate: processing rate of a multiprocessor
  - SPECSFS: file server benchmark
  - SPECWeb: Web server benchmark
  - Transaction-processing (TP) benchmarks
    - TPC benchmarks, from the Transaction Processing Performance Council (TPC)
      - TPC-A, 1985
      - TPC-C, 1992
      - TPC-H, TPC-R, TPC-W


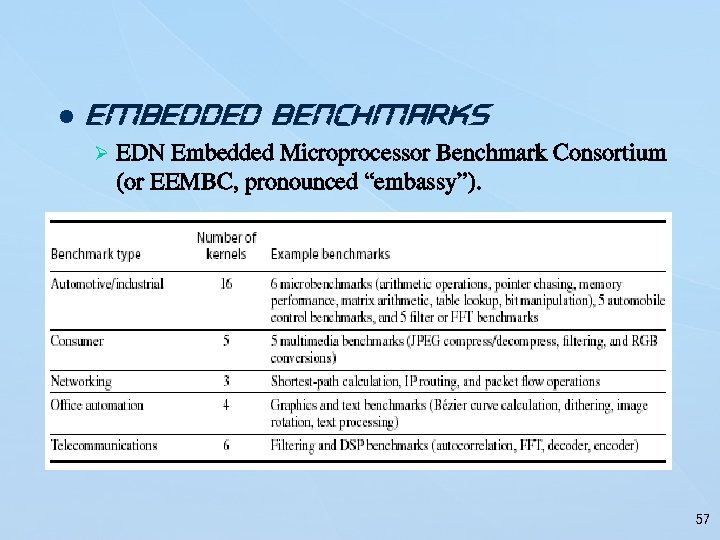

- Embedded benchmarks
  - EDN Embedded Microprocessor Benchmark Consortium (EEMBC, pronounced "embassy")


Quantitative Principles: Make the Common Case Fast
- Perhaps the most important and pervasive principle of computer design.
- A fundamental law, called Amdahl's Law, can be used to quantify this principle.


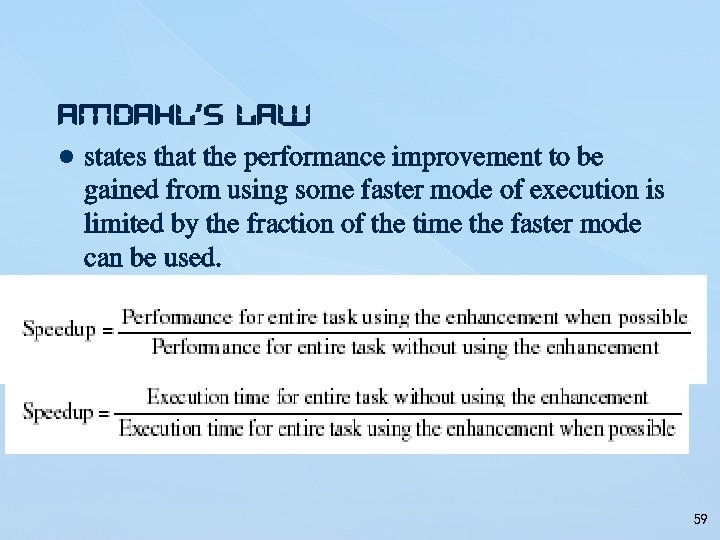

Amdahl's Law
- States that the performance improvement to be gained from using some faster mode of execution is limited by the fraction of the time the faster mode can be used.


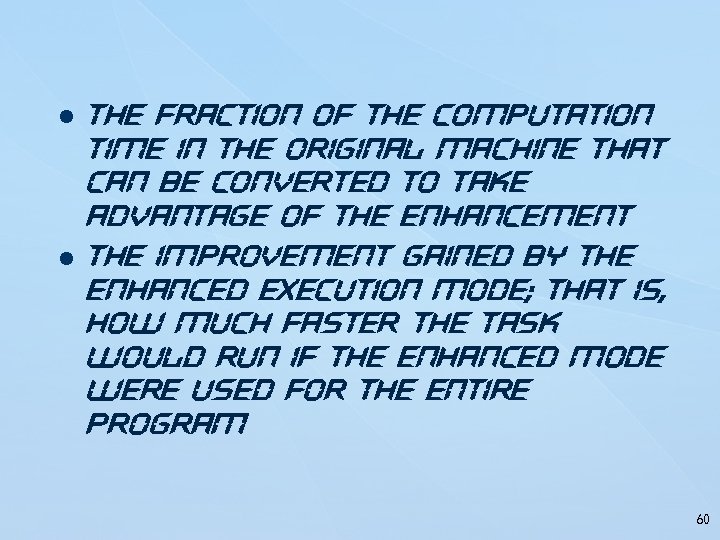

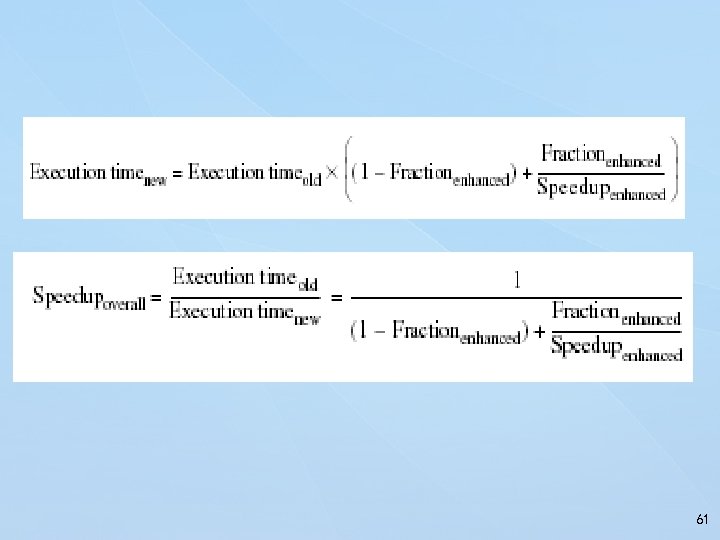

- Fraction_enhanced: the fraction of the computation time in the original machine that can be converted to take advantage of the enhancement.
- Speedup_enhanced: the improvement gained by the enhanced execution mode; that is, how much faster the task would run if the enhanced mode were used for the entire program.
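Writing Fraction_enhanced for the first quantity above and Speedup_enhanced for the second, Amdahl's Law is commonly expressed in the following standard form:

$$
\text{Execution time}_{\text{new}} = \text{Execution time}_{\text{old}} \times \left( (1 - \text{Fraction}_{\text{enhanced}}) + \frac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}} \right)
$$

$$
\text{Speedup}_{\text{overall}} = \frac{\text{Execution time}_{\text{old}}}{\text{Execution time}_{\text{new}}} = \frac{1}{(1 - \text{Fraction}_{\text{enhanced}}) + \dfrac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}}}
$$

Even with an infinitely fast enhancement, the overall speedup cannot exceed $1 / (1 - \text{Fraction}_{\text{enhanced}})$.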



Example 1.2
- Suppose that we are considering an enhancement to the processor of a server system used for Web serving. The new CPU is 10 times faster on computation in the Web serving application than the original processor. Assuming that the original CPU is busy with computation 40% of the time and is waiting for I/O 60% of the time, what is the overall speedup gained by incorporating the enhancement?


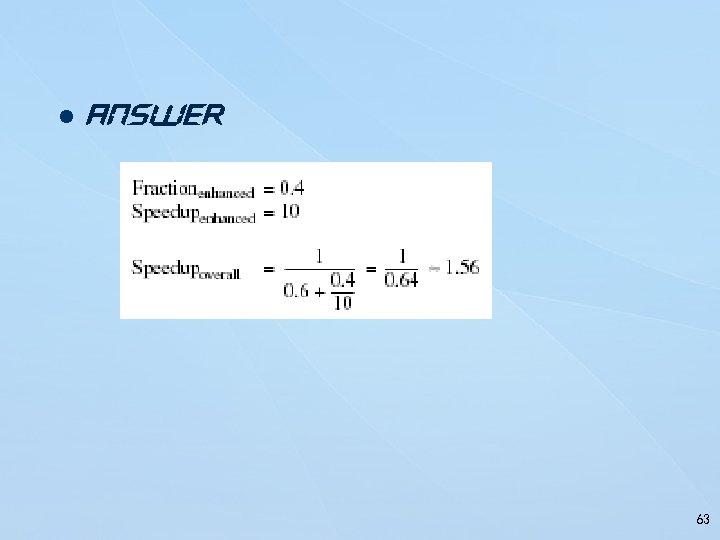

Answer
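Applying Amdahl's Law, with Fraction_enhanced = 0.4 (the share of time spent computing) and Speedup_enhanced = 10:

$$
\text{Speedup}_{\text{overall}} = \frac{1}{(1 - 0.4) + \dfrac{0.4}{10}} = \frac{1}{0.64} \approx 1.56
$$

A processor that is 10 times faster thus makes the whole Web-serving workload only about 1.56 times faster, because the 60% of time spent waiting for I/O is unchanged.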


Example 1.3
- A common transformation required in graphics engines is square root. Implementations of floating-point (FP) square root vary significantly in performance, especially among processors designed for graphics. Suppose FP square root (FPSQR) is responsible for 20% of the execution time of a critical graphics benchmark. One proposal is to enhance the FPSQR hardware and speed up this operation by a factor of 10. The other alternative is just to try to make all FP instructions in the graphics processor run faster by a factor of 1.6; FP instructions are responsible for a total of 50% of the execution time for the application. The design team believes that they can make all FP instructions run 1.6 times faster with the same effort as required for the fast square root. Compare these two design alternatives.


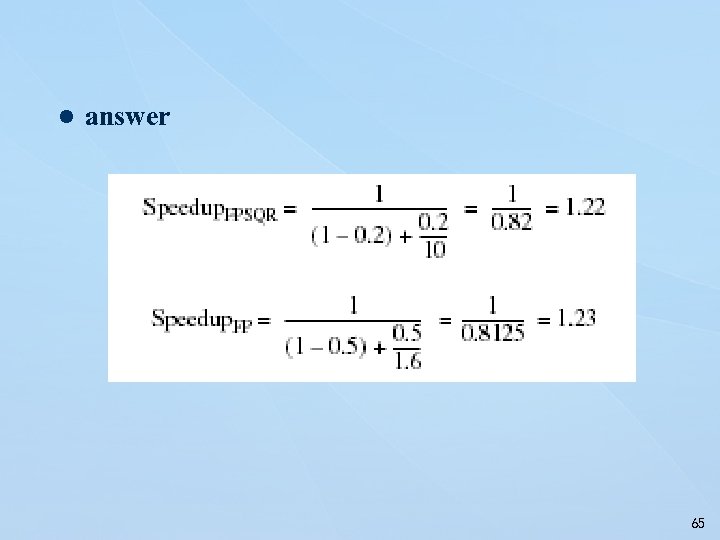

Answer
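Both alternatives can be compared by applying Amdahl's Law to each one. The helper name `overall_speedup` below is illustrative.

```python
def overall_speedup(fraction: float, speedup: float) -> float:
    """Amdahl's Law: `fraction` of the time is made `speedup` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / speedup)

# Alternative 1: FPSQR is 20% of execution time and becomes 10x faster
speedup_fpsqr = overall_speedup(0.20, 10.0)
# Alternative 2: all FP instructions are 50% of execution time and become 1.6x faster
speedup_fp = overall_speedup(0.50, 1.6)

print(f"FPSQR enhancement:  {speedup_fpsqr:.2f}")  # FPSQR enhancement:  1.22
print(f"All-FP enhancement: {speedup_fp:.2f}")     # All-FP enhancement: 1.23
```

The broader but smaller improvement wins slightly, because it applies to a larger fraction of the execution time.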


The CPU Performance Equation


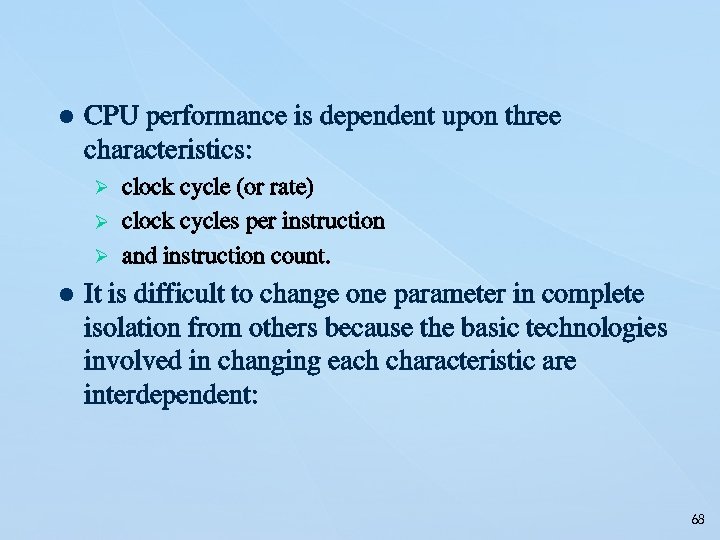

• CPU performance depends on three characteristics:
  - clock cycle time (or clock rate)
  - clock cycles per instruction (CPI)
  - instruction count (IC)
• It is difficult to change one parameter in complete isolation from the others, because the basic technologies involved in changing each characteristic are interdependent:


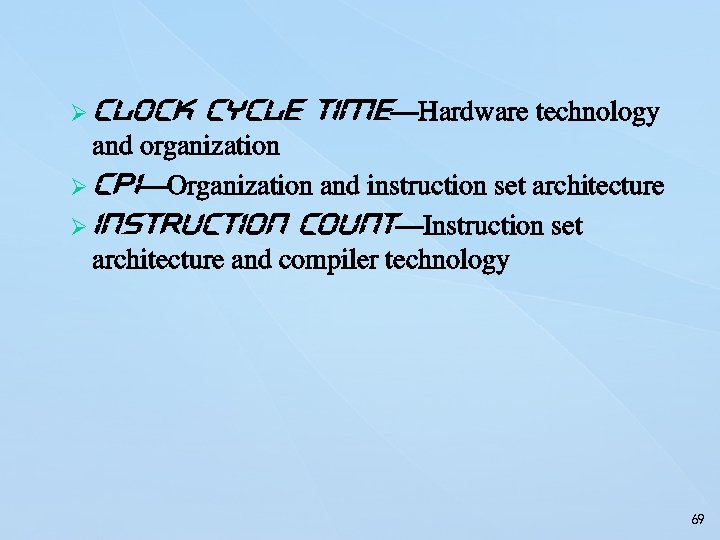

  - Clock cycle time: hardware technology and organization
  - CPI: organization and instruction set architecture
  - Instruction count: instruction set architecture and compiler technology
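The three characteristics combine multiplicatively: CPU time = instruction count × CPI × clock cycle time, which equals instruction count × CPI / clock rate. A minimal sketch follows. The program sizes and rates are hypothetical, chosen only to show that a change to one factor can be partly offset by another.

```python
def cpu_time_seconds(instruction_count: float, cpi: float, clock_rate_hz: float) -> float:
    """CPU time = IC x CPI x clock cycle time, with cycle time = 1 / clock rate."""
    return instruction_count * cpi / clock_rate_hz

# Hypothetical baseline: 1e9 instructions, CPI 2.0, 2 GHz clock
baseline = cpu_time_seconds(1.0e9, 2.0, 2.0e9)
# Hypothetical compiler change: 10% fewer instructions, but CPI rises to 2.1
tuned = cpu_time_seconds(0.9e9, 2.1, 2.0e9)

print(f"baseline = {baseline:.3f} s, tuned = {tuned:.3f} s")
```

The 10% cut in instruction count yields only about a 5.5% time reduction here, because CPI moved in the opposite direction.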


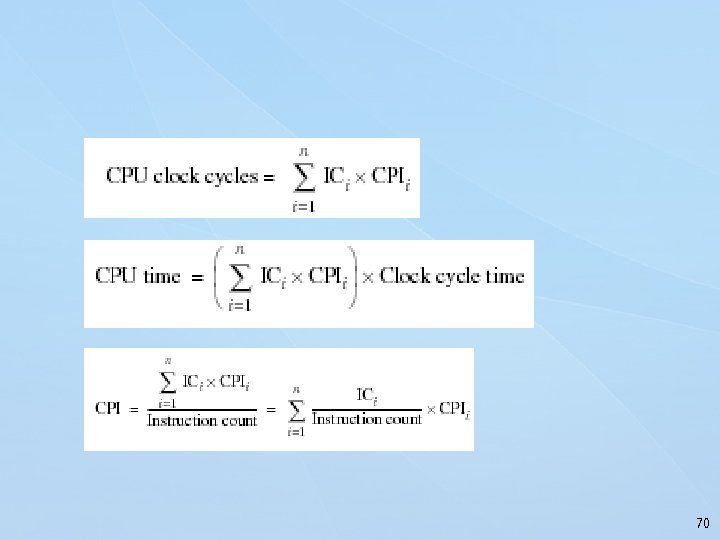


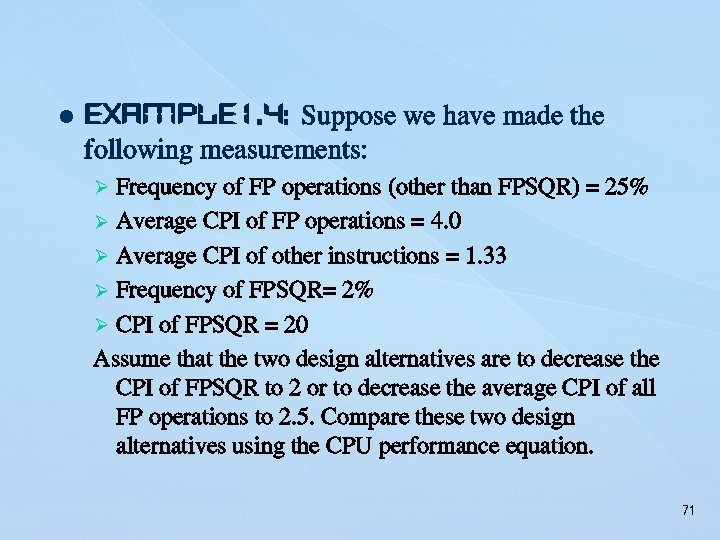

Example 1.4: Suppose we have made the following measurements:
• Frequency of FP operations (other than FPSQR) = 25%
• Average CPI of FP operations = 4.0
• Average CPI of other instructions = 1.33
• Frequency of FPSQR = 2%
• CPI of FPSQR = 20
Assume that the two design alternatives are to decrease the CPI of FPSQR to 2 or to decrease the average CPI of all FP operations to 2.5. Compare these two design alternatives using the CPU performance equation.


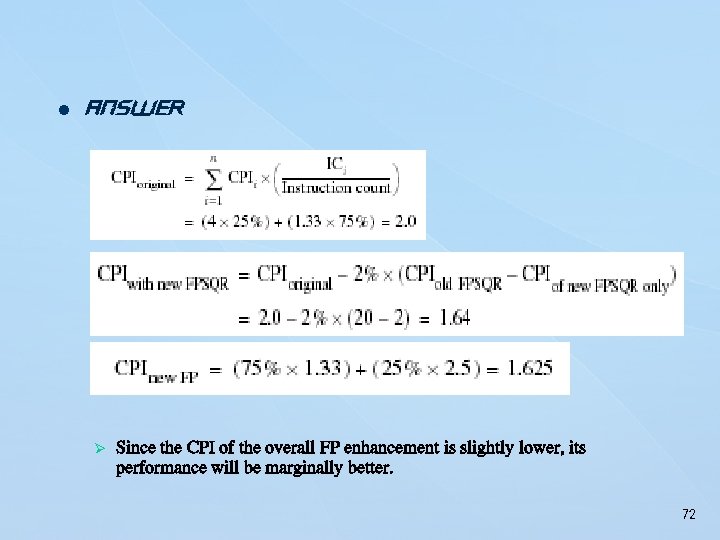

Answer: Since the CPI of the overall FP enhancement is slightly lower, its performance will be marginally better.
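The CPI comparison can be reproduced with a weighted-average CPI, where CPI = Σ (fraction of instructions in class i) × CPI_i. The sketch assumes that the 2% FPSQR instructions are counted inside the 25% FP class. The measurements imply this, since 25% FP plus 75% other already covers every instruction. Instruction count and clock rate are unchanged, so speedup reduces to the ratio of CPIs.

```python
FREQ_FP, CPI_FP = 0.25, 4.0          # FP operations
FREQ_OTHER, CPI_OTHER = 0.75, 1.33   # all other instructions
FREQ_FPSQR, CPI_FPSQR = 0.02, 20.0   # FPSQR (assumed to be part of the 25% FP class)

def weighted_cpi(mix):
    """Average CPI = sum of (instruction-class frequency x class CPI)."""
    return sum(freq * cpi for freq, cpi in mix)

cpi_original = weighted_cpi([(FREQ_FP, CPI_FP), (FREQ_OTHER, CPI_OTHER)])
# Alternative 1: only the 2% FPSQR share changes, from CPI 20 to CPI 2
cpi_new_fpsqr = cpi_original - FREQ_FPSQR * (CPI_FPSQR - 2.0)
# Alternative 2: the average CPI of all FP operations drops to 2.5
cpi_new_fp = weighted_cpi([(FREQ_FP, 2.5), (FREQ_OTHER, CPI_OTHER)])

# Same instruction count and clock rate, so speedup = CPI_old / CPI_new
speedup_fpsqr = cpi_original / cpi_new_fpsqr
speedup_fp = cpi_original / cpi_new_fp
print(f"FPSQR: {speedup_fpsqr:.2f}, all FP: {speedup_fp:.2f}")  # FPSQR: 1.22, all FP: 1.23
```

The speedups agree with the Amdahl's Law results from Example 1.3.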


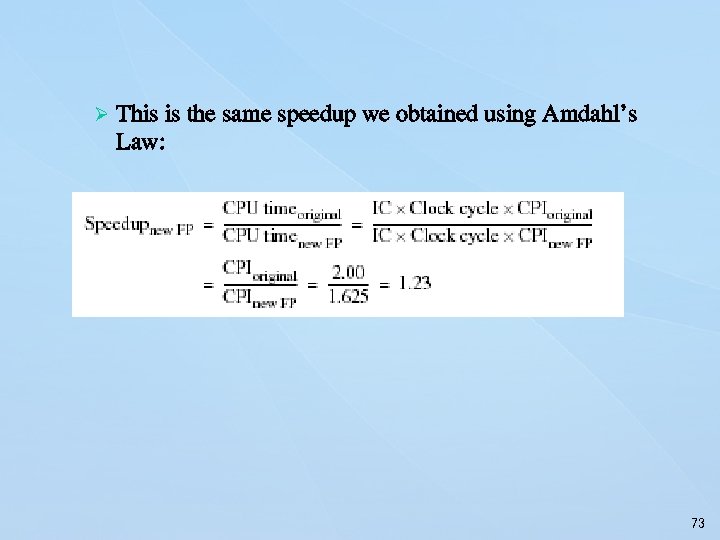

This is the same speedup we obtained using Amdahl's Law:


Principle of Locality
• Programs tend to reuse data and instructions they have used recently.
• A widely quoted rule of thumb: a program spends 90% of its execution time in only 10% of the code.
• Temporal locality: recently accessed items are likely to be accessed again in the near future.
• Spatial locality: items whose addresses are near one another tend to be referenced close together in time.
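Spatial locality can be illustrated by comparing two traversal orders of the same 2-D array. The sketch assumes a row-major layout, 8-byte elements, and 64-byte cache lines. It counts how often consecutive accesses land on a different cache line. This is a crude proxy for cache behavior, not a cache simulator, and all the names and sizes are illustrative.

```python
LINE_BYTES = 64  # assumed cache-line size
ELEM_BYTES = 8   # assumed element size (e.g. a double)

def line_switches(addresses):
    """Count accesses that touch a different cache line than the previous access."""
    switches, prev_line = 0, None
    for addr in addresses:
        line = addr // LINE_BYTES
        if line != prev_line:
            switches += 1
            prev_line = line
    return switches

def row_major_order(n):
    """Byte addresses of an n x n row-major array visited row by row."""
    return [(i * n + j) * ELEM_BYTES for i in range(n) for j in range(n)]

def column_major_order(n):
    """Same array visited column by column (stride of n elements)."""
    return [(i * n + j) * ELEM_BYTES for j in range(n) for i in range(n)]

n = 64
print(line_switches(row_major_order(n)))     # 512
print(line_switches(column_major_order(n)))  # 4096
```

Walking along rows reuses each 64-byte line for 8 consecutive elements. Walking down columns jumps to a new line on every access.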


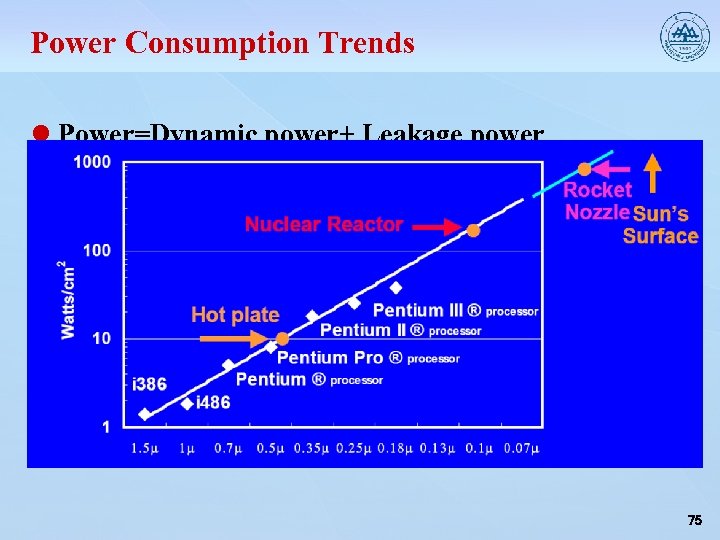

Power Consumption Trends
• Power = dynamic power + leakage power
• Dynamic power ∝ switching activity × capacitance × voltage² × frequency, i.e. $P_{\text{dynamic}} \propto \alpha \cdot C \cdot V^2 \cdot f$
• Capacitance per transistor and supply voltage are decreasing, but the number of transistors and the clock frequency are increasing at a faster rate
• Leakage power is also rising and will soon match dynamic power
• Power consumption is already around 100 W in some high-performance processors today


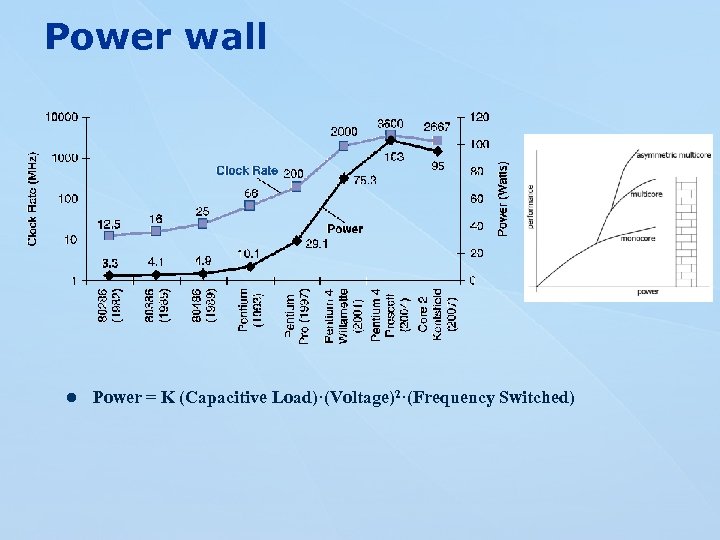

Power wall
• $\text{Power} = K \times \text{Capacitive load} \times \text{Voltage}^2 \times \text{Frequency switched}$
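Design changes are usually compared as power ratios. The constant K cancels, so only the relative changes in capacitive load, voltage, and switched frequency matter. A minimal sketch follows. The 0.85 and 0.5 scaling factors are hypothetical.

```python
def relative_dynamic_power(load_ratio: float, voltage_ratio: float, freq_ratio: float) -> float:
    """P_new / P_old under Power = K x C x V^2 x f; K cancels in the ratio."""
    return load_ratio * voltage_ratio ** 2 * freq_ratio

# Hypothetical: load, voltage, and frequency each scaled to 85% of the old design
print(f"{relative_dynamic_power(0.85, 0.85, 0.85):.2f}")
# Halving only the voltage cuts dynamic power to a quarter
print(relative_dynamic_power(1.0, 0.5, 1.0))
```

Voltage enters squared, which is why lowering the supply voltage was the main lever for holding power down. The "wall" appears once voltage cannot drop much further.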


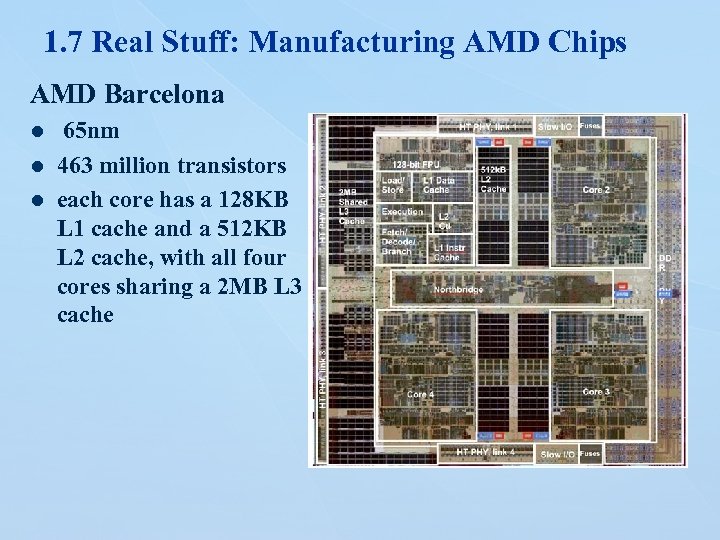

1.7 Real Stuff: Manufacturing AMD Chips
• AMD Barcelona, 65 nm process
• 463 million transistors
• Each core has a 128 KB L1 cache and a 512 KB L2 cache; all four cores share a 2 MB L3 cache


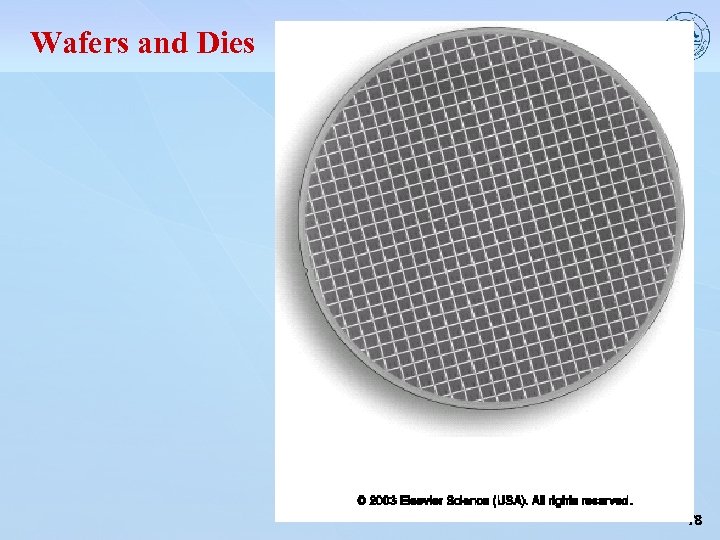

Wafers and Dies


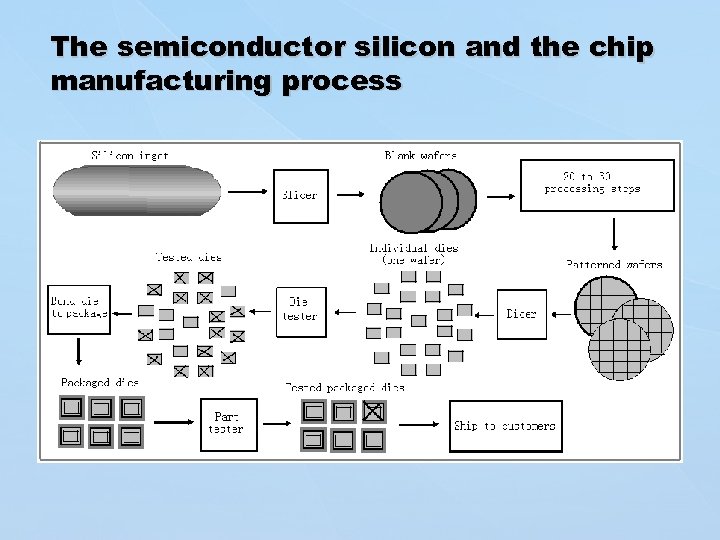

The semiconductor silicon and the chip manufacturing process


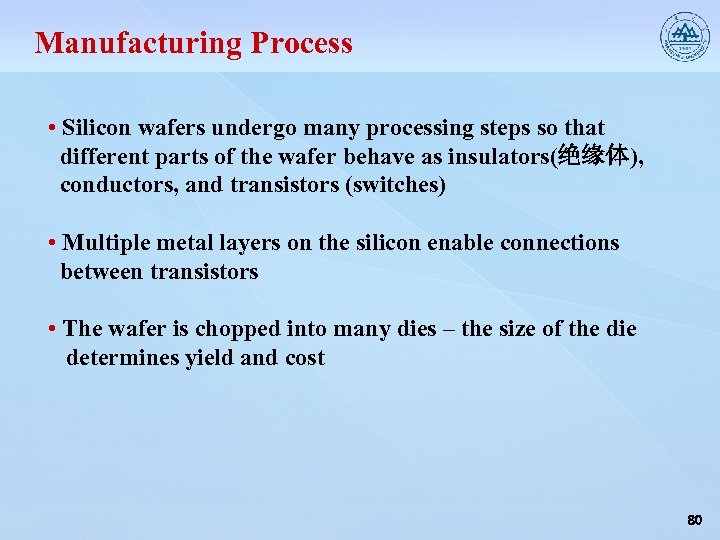

Manufacturing Process
• Silicon wafers undergo many processing steps so that different parts of the wafer behave as insulators, conductors, and transistors (switches)
• Multiple metal layers on the silicon enable connections between transistors
• The wafer is chopped into many dies; the size of the die determines yield and cost
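One commonly used simplified model makes the link between die size, yield, and cost concrete. The model has three parts:
- cost per die = wafer cost / (dies per wafer × yield)
- dies per wafer ≈ wafer area / die area (ignoring partial dies at the edge)
- yield = 1 / (1 + defects per area × die area / 2)²

The model and every number below are assumptions for illustration, not measurements for any real process.

```python
import math

def dies_per_wafer(wafer_diameter_cm: float, die_area_cm2: float) -> float:
    """Approximate dies per wafer; ignores partial dies lost at the wafer edge."""
    wafer_area = math.pi * (wafer_diameter_cm / 2.0) ** 2
    return wafer_area / die_area_cm2

def die_yield(defects_per_cm2: float, die_area_cm2: float) -> float:
    """Simplified empirical yield model: 1 / (1 + D x A / 2)^2."""
    return 1.0 / (1.0 + defects_per_cm2 * die_area_cm2 / 2.0) ** 2

def cost_per_die(wafer_cost: float, wafer_diameter_cm: float,
                 die_area_cm2: float, defects_per_cm2: float) -> float:
    good_dies = dies_per_wafer(wafer_diameter_cm, die_area_cm2) * die_yield(defects_per_cm2, die_area_cm2)
    return wafer_cost / good_dies

# Hypothetical 30 cm wafer costing 5000, with 0.3 defects per cm^2
small = cost_per_die(5000.0, 30.0, 1.0, 0.3)
large = cost_per_die(5000.0, 30.0, 2.0, 0.3)
print(f"1 cm^2 die: {small:.2f}, 2 cm^2 die: {large:.2f}")
```

Doubling the die area more than doubles the cost per die. There are fewer dies per wafer, and each one is less likely to be defect-free.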


Processor Technology Trends
• Shrinking transistor sizes: 250 nm (1997), 130 nm (2002), 70 nm (2008), 35 nm (2014)
• Transistor density increases by about 35% per year and die size increases by 10-20% per year, which enables functionality improvements
• Transistor speed improves roughly linearly as feature size shrinks (the exact relationship is a complex equation involving voltages, resistances, and capacitances)
• Wire delays do not scale down at the same rate as transistor delays
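The 35% annual density growth compounds over time. The sketch below turns that rate into a multi-year growth factor and a doubling time. It assumes the rate stays constant, which real technology scaling does not guarantee.

```python
import math

ANNUAL_DENSITY_GROWTH = 0.35  # rate quoted on the slide; assumed constant

def density_factor(years: float, growth: float = ANNUAL_DENSITY_GROWTH) -> float:
    """Transistor density relative to today after `years` of compound growth."""
    return (1.0 + growth) ** years

def doubling_time_years(growth: float = ANNUAL_DENSITY_GROWTH) -> float:
    """Years needed for density to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + growth)

print(f"Density after 5 years: {density_factor(5):.2f}x")
print(f"Doubling time: {doubling_time_years():.2f} years")
```

At 35% per year, density roughly doubles every 2.3 years.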


Assignments (p. 56): 1.1; 1.3.1-1.3.3; 1.3.1-1.4.3


END
