df03eab78893f3d32eb77c4f7e9cfbcf.ppt

- Number of slides: 25

Computer-based Experiments: Obstacles
Stephanie Bryant, University of South Florida
Note: See Bryant, Hunton and Stone, BRIA 2004 for complete references

Obstacles: Overview
- Technology skills needed
- Threats to internal validity
- Getting participants

Obstacles (Cont’d)
- Technology skills needed
- “Proficiency” in software or programming

Tools for Computer-based Experiments
- Develop using a scripting language or applications software
- Applications software for Web experiments
  - Example software packages: Raosoft, Inquisite, PsychExps
  - More expensive software, cheaper development and maintenance costs?
  - Easier to use; features are limited to those built into the software
- Scripting languages
  - Examples: ColdFusion, PHP, JSP (JavaServer Pages), CGI (Common Gateway Interface)
  - Software is cheap or free, higher development and maintenance costs?
  - Difficult for non-programmers
  - More features, more customizable
- Combine scripting languages and applications software

Applications Software: Raosoft Products (EZSurvey, SurveyWin, InterForm)
- Difficulty index (1 = hard, 10 = easy): 8
- Does not provide all the functionality
  - No randomization or response-dependent questions (i.e., only straight surveys)
  - Limited formatting capabilities
- Expensive, with no educational prices ($1,500 - $10,000)
- SurveyMonkey.com: $19.95/month

SurveyMonkey.com

Applications Software: Inquisite
- Difficulty index (1 = hard, 10 = easy): 8
- Expensive ($10,000)
- Supports most functionality
  - Supporting all desired functionality in complex applications requires the Software Development Kit (SDK) ($2,000, but may be available soon for free)

Applications Software: PsychExps
- PsychExperiments Web site created and maintained by University of Mississippi psychology professor Ken McGraw
- “Collaboratory”
- http://psychexps.olemiss.edu/
- Free!
- Requires that the user download and install applications software
- Many existing scripts (e.g., randomization)

PsychExps Home Page

PsychExps (Cont’d)

Current Experiments on PsychExps

Obstacles (Cont’d)
- Big learning curves involved
- On-campus support sometimes available
- Can hire programmers or graduate students to help with programming

Obstacles (Cont’d)
- Internal Validity Considerations:
  - Statistical Conclusion Validity
  - Internal Validity
  - Construct Validity
  - External Validity

Statistical Conclusion Validity (the extent to which two variables can be said to co-vary)
- Increased sample size and statistical power (e.g., Ayers, Cloyd et al.)
  - Use the Web to recruit participants!
- Decreased or eliminated data entry errors
  - Capture data directly into a database
- Increased variability in experimental settings
  - Difficult to control in Web experiments
  - People complete experiments in their own (“natural”) settings with various computer configurations (browsers, hardware)
  - McGraw et al. (2000) note that Web experiment (WE) noise is compensated for by large sample sizes
- System downtime
- Software coding errors (e.g., Barrick 01, Hodge 01)
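
As a rough illustration of the sample size and power point above, the following sketch estimates how many participants per group a two-group comparison of means would need. It uses the standard normal-approximation formula, not anything taken from the cited studies; the function name `n_per_group` and the default alpha and power values are assumptions made for this example.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per group (two-sided test, two equal groups).

    Uses the normal approximation n = 2 * ((z_(1-alpha/2) + z_power) / d)^2,
    where d is the standardized effect size (Cohen's d). Hypothetical helper.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    # Smaller effects need far larger samples, which is where Web recruiting helps.
    for d in (0.8, 0.5, 0.2):
        print(d, n_per_group(d))
```

An exact calculation based on the t distribution gives slightly larger numbers, so treat the result as a lower bound when planning.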

Internal Validity (correlation or causation?)
- Decreased potential diffusion of treatment
  - Unlikely that participants will learn information intended for one treatment group and not another
- Increased participant drop-out rates across treatments
  - A higher drop-out rate in Web than in laboratory experiments could create a participant self-selection effect that makes causal inferences problematic
  - Mitigate by placing requests for personal information and monetary rewards at the beginning of the experiment (Frick et al. 1999; McGraw et al. 2000)
  - Completion rate approached 86% when some type of monetary reward was offered (Musch and Reips 2000)
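
One way to check whether drop-out differs across treatments is a two-proportion z-test on the drop-out rates. The sketch below is an illustration only: the function name `dropout_z_test` and the participant counts in the usage lines are hypothetical, and the test itself is a generic textbook technique rather than one prescribed by the cited authors.

```python
from math import sqrt
from statistics import NormalDist


def dropout_z_test(started_a: int, completed_a: int,
                   started_b: int, completed_b: int):
    """Compare drop-out rates of two treatment groups.

    Returns (dropout_rate_a, dropout_rate_b, z, two_sided_p) using the
    pooled two-proportion z-test. Hypothetical helper for illustration.
    """
    drop_a = 1 - completed_a / started_a
    drop_b = 1 - completed_b / started_b
    dropped = (started_a - completed_a) + (started_b - completed_b)
    pooled = dropped / (started_a + started_b)
    se = sqrt(pooled * (1 - pooled) * (1 / started_a + 1 / started_b))
    if se == 0:  # nobody (or everybody) dropped out in both groups
        return drop_a, drop_b, 0.0, 1.0
    z = (drop_a - drop_b) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return drop_a, drop_b, z, p


if __name__ == "__main__":
    # Hypothetical counts: 120 started each treatment; 100 vs. 70 finished.
    print(dropout_z_test(120, 100, 120, 70))
```

A small p-value suggests that attrition depends on the treatment, which is the self-selection problem described above.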

Internal Validity (correlation or causation?)
- Controlling “cheating”
  - Multiple submissions by a single participant
  - Identification by email address, logon ID, password, or IP address
- Randomization (a control)
  - Computer scripts are available for randomly assigning participants to conditions
  - Complete scripts published in Baron and Siepmann (2000, 247) and Birnbaum (2001, 210-212)
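
The two controls above can be sketched in a few lines. This is not one of the published scripts cited on the slide; it is a minimal illustration that assumes participant IDs are known in advance and that each submission is a dictionary with an `email` field. The names `assign_conditions` and `drop_repeat_submissions` are hypothetical.

```python
import random
from typing import Dict, Iterable, List, Optional, Sequence


def assign_conditions(participant_ids: Iterable[str],
                      conditions: Sequence[str],
                      seed: Optional[int] = None) -> Dict[str, str]:
    """Randomly assign participants to conditions with balanced group sizes.

    Participants are shuffled, then conditions are dealt out round-robin,
    so group sizes differ by at most one.
    """
    rng = random.Random(seed)  # a seed makes the assignment reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(ids)}


def drop_repeat_submissions(submissions: Iterable[dict],
                            key: str = "email") -> List[dict]:
    """Keep only the first submission for each identifier (e.g., email or IP)."""
    seen = set()
    kept = []
    for row in submissions:
        ident = str(row[key]).strip().lower()  # normalize case and whitespace
        if ident in seen:
            continue
        seen.add(ident)
        kept.append(row)
    return kept


if __name__ == "__main__":
    groups = assign_conditions(["p1", "p2", "p3", "p4"], ["control", "treatment"], seed=1)
    print(groups)
    rows = [{"email": "a@example.org"}, {"email": "A@example.org "}, {"email": "b@example.org"}]
    print(drop_repeat_submissions(rows))
```

Filtering on a single identifier is imperfect: shared computers can share an IP address, and one person can use several email addresses, so combining identifiers may be worth considering.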

Construct Validity (generalizability from observations to higher-order constructs)
- Decreased demand effects and other experimenter influences
  - Rosenthal (66 & 76), Pany (87)
- Decreased participant evaluation apprehension
  - Rosenberg (69)
  - “Naturalism” of setting decreases?

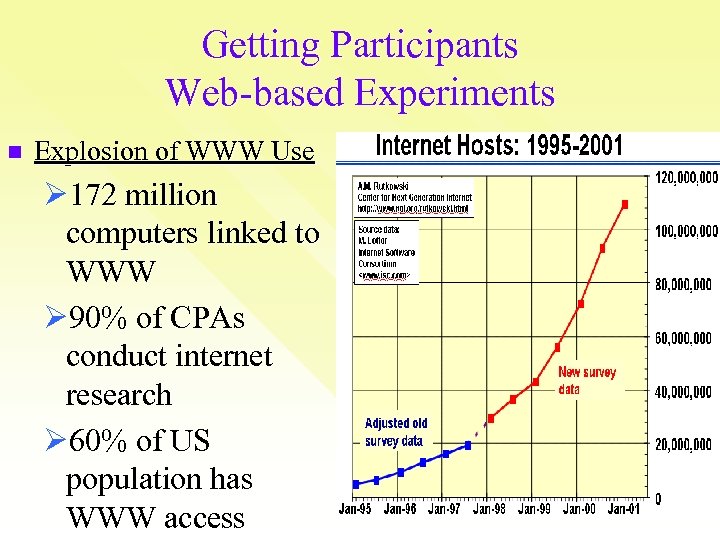

Getting Participants: Web-based Experiments
- Explosion of WWW use
  - 172 million computers linked to WWW
  - 90% of CPAs conduct Internet research
  - 60% of US population has WWW access

Getting Participants
- Internet participant solicitation
  - Benefits
    - Large sample sizes (power) possible
    - Availability of diverse, world-wide populations
    - Interactive, multi-participant responses
    - Real-time randomization of question order
    - Response-dependent questions (branch and bound)
    - Authentication and authorization
    - Multimedia (e.g., graphics, sound)
    - On-screen clock
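
Two of the benefits listed above, real-time randomization of question order and response-dependent questions, can be sketched as follows. The question IDs, the branching table, and the function names are all hypothetical; a real instrument would wire these into whatever survey or scripting tool is used.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple


def randomized_order(question_ids: Sequence[str],
                     seed: Optional[int] = None) -> List[str]:
    """Return the questions in a fresh random order for one participant."""
    rng = random.Random(seed)
    order = list(question_ids)  # copy so the master list is left untouched
    rng.shuffle(order)
    return order


def next_question(current_id: str,
                  answer: str,
                  branches: Dict[Tuple[str, str], str],
                  default_next: Dict[str, str]) -> Optional[str]:
    """Pick the next question based on the answer just given.

    `branches` maps (question_id, answer) to a follow-up question;
    `default_next` gives the normal successor when no branch applies.
    Returns None when the questionnaire is finished.
    """
    return branches.get((current_id, answer), default_next.get(current_id))


if __name__ == "__main__":
    print(randomized_order(["q1", "q2", "q3", "q4"]))
    branches = {("q1", "yes"): "q1a"}       # ask a follow-up only after "yes"
    default_next = {"q1": "q2", "q1a": "q2"}
    print(next_question("q1", "yes", branches, default_next))
    print(next_question("q1", "no", branches, default_next))
```

Recording the order shown to each participant alongside the responses keeps order effects analyzable later.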

Getting Participants
- Web-based
  - Post notices in places your target population is likely to visit
  - Access ListServs
- PC-based
  - Student involvement requirement?
  - USF process

USF Process
- Mandatory participation in one experiment per semester
- Experimetrix site used to manage participation
- https://experimetrix2.com/soa/

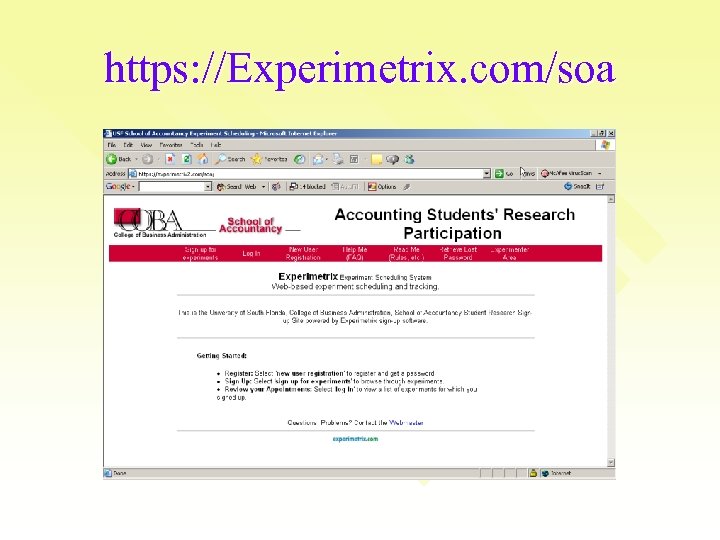

https://Experimetrix.com/soa

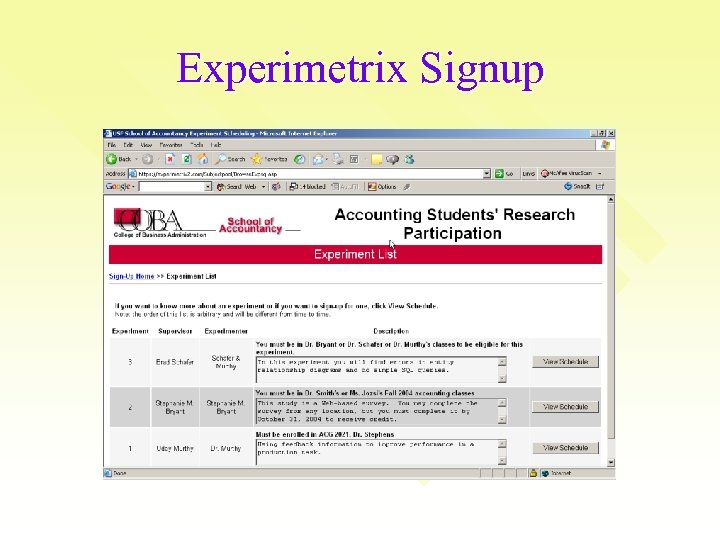

Experimetrix Signup

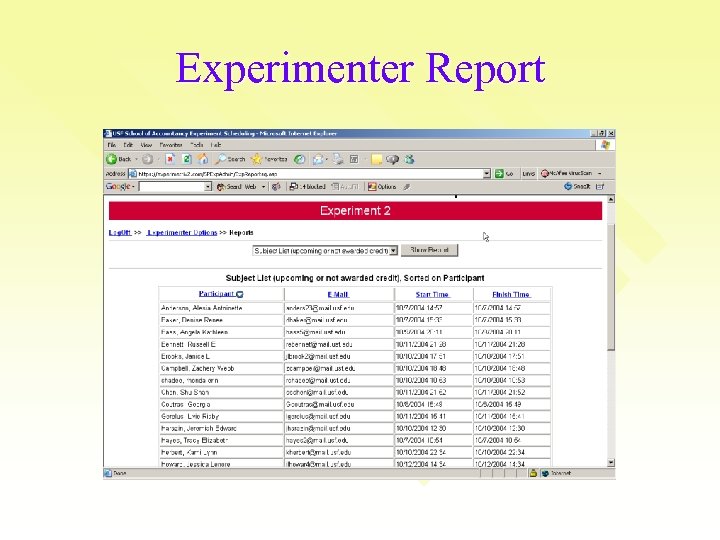

Experimenter Report

A Final Caveat: What Can Go Wrong… Will
- Cynical, but realistic
- Plan carefully
- Develop contingency plans
- Consider cost-benefit
- Greatest potential for BAR Web experiments is as yet unrealized
- Biggest hurdle is required knowledge, but this can be overcome
