- Number of slides: 106

Computational Models of Text Quality
Ani Nenkova, University of Pennsylvania
ESSLLI 2010, Copenhagen

The ultimate text quality application
- Imagine your favorite text editor
  - With a spell-checker and grammar checker
  - But also with functions that tell you:
    - "Word W is repeated too many times"
    - "Fill the gap is a cliché"
    - "You might consider using this more figurative expression"
    - "This sentence is unclear and hard to read"
    - "What is the connection between these two sentences?"
    - …

Currently
- It is our friends who give such feedback
  - Often conflicting
- We might agree that a text is good, but find it hard to explain exactly why
- Computational linguistics should have some answers
  - Though it is far from offering a complete solution yet

In this course
- We will survey research on various aspects of text quality
- A unified approach does not yet exist, but many proposals have been
  - tested on corpus data
  - integrated in applications

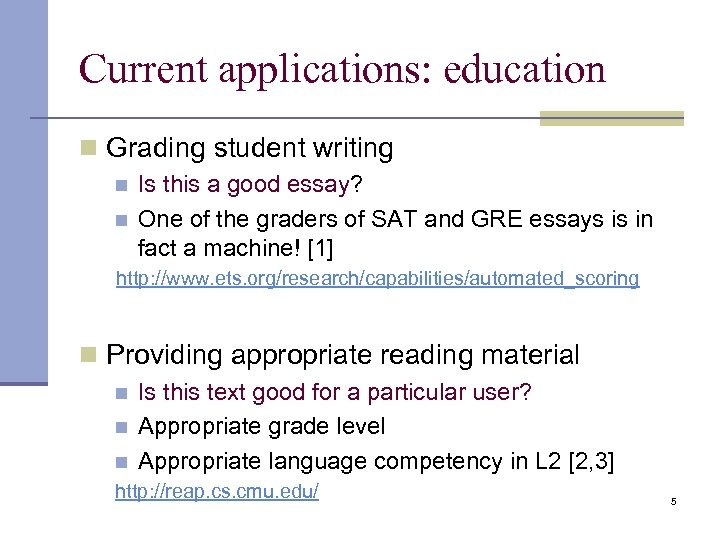

Current applications: education
- Grading student writing
  - Is this a good essay?
  - One of the graders of SAT and GRE essays is in fact a machine! [1]
  - http://www.ets.org/research/capabilities/automated_scoring
- Providing appropriate reading material
  - Is this text good for a particular user?
    - Appropriate grade level
    - Appropriate language competency in L2 [2, 3]
  - http://reap.cs.cmu.edu/

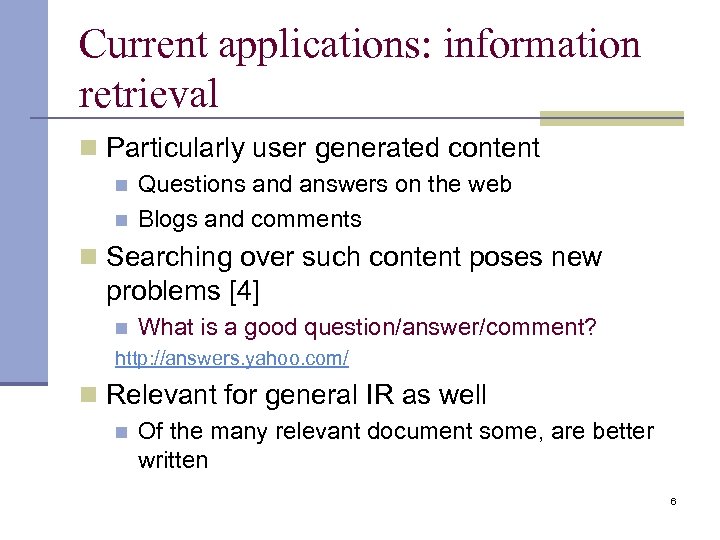

Current applications: information retrieval
- Particularly user-generated content
  - Questions and answers on the web
  - Blogs and comments
- Searching over such content poses new problems [4]
  - What is a good question/answer/comment?
  - http://answers.yahoo.com/
- Relevant for general IR as well
  - Of the many relevant documents, some are better written

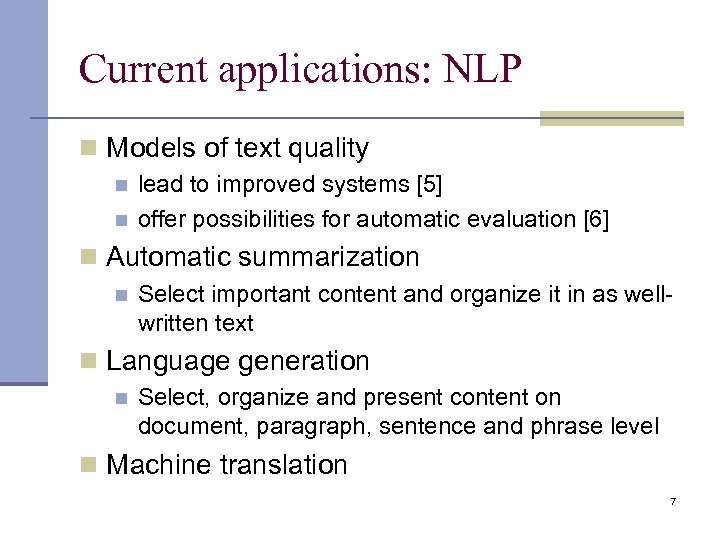

Current applications: NLP
- Models of text quality
  - lead to improved systems [5]
  - offer possibilities for automatic evaluation [6]
- Automatic summarization
  - Select important content and organize it into well-written text
- Language generation
  - Select, organize and present content at the document, paragraph, sentence and phrase level
- Machine translation

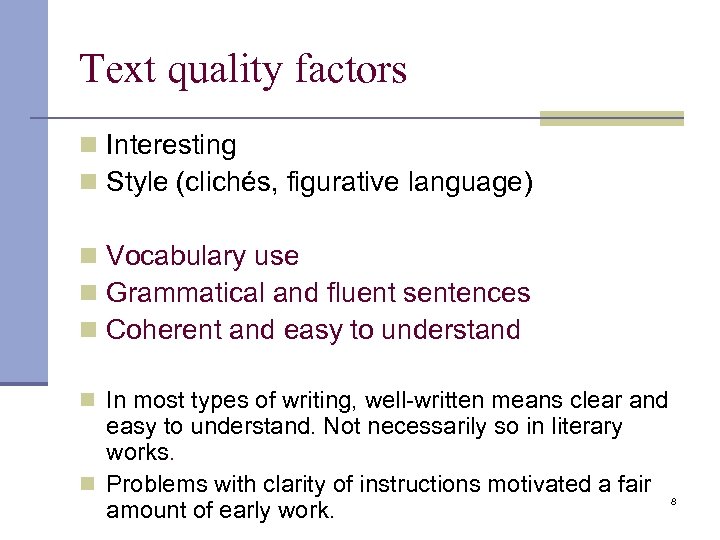

Text quality factors
- Interesting
- Style (clichés, figurative language)
- Vocabulary use
- Grammatical and fluent sentences
- Coherent and easy to understand
- In most types of writing, well-written means clear and easy to understand. Not necessarily so in literary works.
- Problems with clarity of instructions motivated a fair amount of early work.

Early work: keep in mind these predate modern computers!
- Common words are easier to understand
  - stentorian vs. loud
  - myocardial infarction vs. heart attack
- Common words are short
- Syntactically simple sentences are easier to understand
- Standard readability metrics [Flesch-Kincaid, Automated Readability Index, Gunning-Fog, SMOG, Coleman-Liau]
  - percentage of words not among the N most frequent
  - average number of syllables per word
  - average number of words per sentence
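
The metrics named above combine exactly these counts. As one illustration, the Flesch-Kincaid Grade Level is 0.39 × (words / sentences) + 11.8 × (syllables / words) - 15.59. Below is a minimal Python sketch under stated assumptions: syllables are approximated by counting vowel groups and sentences are split on final punctuation, both far cruder than a pronunciation dictionary or a real tokenizer; `count_syllables` and `flesch_kincaid_grade` are hypothetical helper names.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (a crude heuristic, not a
    dictionary lookup); every word counts as at least one syllable."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))

def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59."""
    # Naive sentence split on ., ! and ? (an assumption; real tools do better).
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

print(round(flesch_kincaid_grade("The cat sat on the mat."), 2))  # -1.45
```

On very simple text the formula can return a grade below zero, as here. The vowel-group heuristic also undercounts some words (it finds 3 syllables in "stentorian" rather than 4), so the output should be treated as approximate.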

Modern equivalents
- Language models
  - Word probabilities from a large collection
  - http://www.speech.cs.cmu.edu/SLM_info.html
- Features derived from syntactic parses [2, 7, 8, 9]
  - Parse tree height
  - Number of subordinating conjunctions
  - Number of passive voice constructions
  - Number of noun and verb phrases
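
Given a constituency parse in Penn Treebank bracket notation, features such as tree height and phrase counts are simple tree traversals. The sketch below assumes a parse is already available as a bracketed string with a labelled root; the example parse is written by hand, not produced by a parser, and the function names are hypothetical. Counting SBAR nodes is used as a rough proxy for subordinate clauses, since the Treebank tags subordinating conjunctions with the same IN tag as prepositions.

```python
def parse_bracketed(s):
    """Parse a Penn-Treebank-style bracketed string into nested lists
    [label, child, ...]; leaves are plain strings.
    Assumes a labelled root and well-formed brackets."""
    tokens = s.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def parse():
        nonlocal pos
        pos += 1                      # skip "("
        node = [tokens[pos]]          # constituent or POS label
        pos += 1
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                node.append(parse())
            else:
                node.append(tokens[pos])  # a word
                pos += 1
        pos += 1                      # skip ")"
        return node

    return parse()

def height(tree):
    """Number of labelled nodes on the longest root-to-word path."""
    if isinstance(tree, str):
        return 0
    return 1 + max(height(child) for child in tree[1:])

def count_label(tree, label):
    """How many constituents carry `label` (e.g. "SBAR", "NP", "VP")."""
    if isinstance(tree, str):
        return 0
    return int(tree[0] == label) + sum(count_label(c, label) for c in tree[1:])

tree = parse_bracketed(
    "(S (NP (PRP I)) (VP (VBD closed) (NP (DT the) (NN window))"
    " (SBAR (IN because) (S (NP (PRP it)) (VP (VBD started)"
    " (S (VP (VBG raining))))))))")
print(height(tree))               # 8
print(count_label(tree, "SBAR"))  # 1
```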

Language models
- Unigram and bigram language models
  - Really, just huge tables
- Smoothing is necessary to account for unseen words
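
A bigram model really is just a pair of count tables. The sketch below uses add-one (Laplace) smoothing only because it is the simplest scheme to show; practical systems generally use better smoothing methods. The `<s>`/`</s>` sentence-boundary markers and the `BigramLM` class name are assumptions of this sketch.

```python
from collections import Counter

class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.unigrams = Counter()   # history counts, plus the vocabulary
        self.bigrams = Counter()    # counts of (previous word, word) pairs
        for sent in sentences:
            tokens = ["<s>"] + sent + ["</s>"]
            self.unigrams.update(tokens)
            self.bigrams.update(zip(tokens, tokens[1:]))
        self.vocab_size = len(self.unigrams)

    def prob(self, prev, word):
        """P(word | prev); unseen bigrams get a small non-zero probability."""
        return ((self.bigrams[(prev, word)] + 1)
                / (self.unigrams[prev] + self.vocab_size))

lm = BigramLM([["the", "cat", "sat"], ["the", "dog", "sat"]])
print(lm.prob("the", "cat"))  # 0.25
```

Smoothing shifts probability mass from seen to unseen bigrams, so "the sat", never observed above, still gets probability 1/8 instead of zero.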

Features from language models
- Assessing the readability of a text t consisting of m words, for an intended audience class c
  - Number of out-of-vocabulary words in the text with respect to the language model for c
  - Text likelihood and perplexity
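
Both features can be computed from any smoothed language model for class c. This sketch assumes a unigram model with add-one smoothing and a single extra vocabulary slot shared by all unseen words (chosen so that probabilities sum to one); perplexity is exp(-(1/m) Σ log P(w_i)) over the m words of t. Function names are hypothetical.

```python
import math
from collections import Counter

def train_unigram(tokens):
    """Unigram counts and corpus size for one audience class c."""
    counts = Counter(tokens)
    return counts, sum(counts.values())

def lm_features(text_tokens, counts, total):
    """Return (OOV count, log-likelihood, perplexity) of a text of m words."""
    vocab = set(counts)
    oov = sum(1 for w in text_tokens if w not in vocab)
    V = len(vocab) + 1  # +1 slot reserved for all unseen words (assumption)
    log_likelihood = sum(math.log((counts[w] + 1) / (total + V))
                         for w in text_tokens)
    m = len(text_tokens)
    perplexity = math.exp(-log_likelihood / m)
    return oov, log_likelihood, perplexity

counts, total = train_unigram(["a", "a", "b"])
oov, ll, pp = lm_features(["a", "c"], counts, total)
print(oov, round(pp, 4))  # 1 3.4641
```

A text written for a different audience than c would typically show more out-of-vocabulary words and a higher perplexity under c's model.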

![Application to grade level prediction](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-13.jpg)
Application to grade level prediction: Collins-Thompson and Callan, NAACL 2004 [10]

![Application to grade level prediction](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-14.jpg)
Application to grade level prediction: Collins-Thompson and Callan, NAACL 2004 [10]

![Results on predicting grade level](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-15.jpg)
Results on predicting grade level: Schwarm and Ostendorf, ACL 2005 [11]
- Flesch-Kincaid Grade Level index
  - number of syllables per word
  - sentence length
- Lexile
  - word frequency
  - sentence length
- SVM features
  - language models and syntax

Models of text coherence
- Global coherence
  - Overall document organization
- Local coherence
  - Adjacent sentences

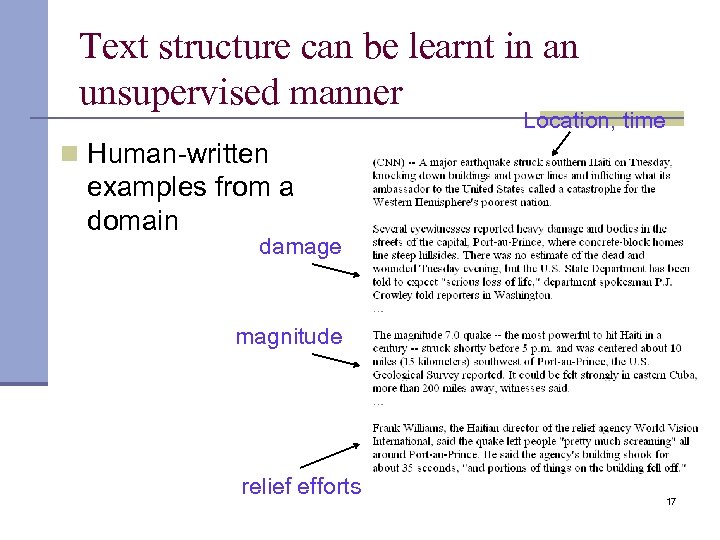

Text structure can be learnt in an unsupervised manner
- Human-written examples from a domain
- [Figure: recurring topics such as location and time, magnitude, damage, relief efforts]

![Content model, Barzilay & Lee '04](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-18.jpg)
Content model: Barzilay & Lee '04 [12]
- Hidden Markov Model (HMM)-based
  - States: clusters of related sentences ("topics")
  - Transition probabilities: sentence precedence in the corpus
  - Emission probabilities: bigram language model
- [Figure: earthquake reports; a sentence in the current topic is generated after a transition from the previous topic; topics include location/magnitude, casualties, relief efforts]
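
Given such a model, the most likely topic sequence for a document can be recovered with the Viterbi algorithm. The sketch below assumes the emission probability of each sentence under each topic has already been computed (in the content model this would come from each topic's bigram language model) and uses made-up toy numbers; `viterbi` and the toy tables are hypothetical, not Barzilay and Lee's code.

```python
import math

def viterbi(init, trans, emit):
    """Most likely topic sequence for a document under an HMM content model.
    init[k]     : P(first sentence has topic k)
    trans[j][k] : P(topic k | previous topic j)
    emit[i][k]  : P(sentence i | topic k), e.g. from topic k's bigram LM
    All probabilities must be > 0 (smoothing guarantees this in practice)."""
    n, K = len(emit), len(init)
    score = [[0.0] * K for _ in range(n)]   # best log-prob ending in topic k
    back = [[0] * K for _ in range(n)]      # best predecessor topic
    for k in range(K):
        score[0][k] = math.log(init[k]) + math.log(emit[0][k])
    for i in range(1, n):
        for k in range(K):
            best_j = max(range(K),
                         key=lambda j: score[i - 1][j] + math.log(trans[j][k]))
            score[i][k] = (score[i - 1][best_j] + math.log(trans[best_j][k])
                           + math.log(emit[i][k]))
            back[i][k] = best_j
    last = max(range(K), key=lambda k: score[n - 1][k])
    path = [last]
    for i in range(n - 1, 0, -1):           # follow back-pointers
        path.append(back[i][path[-1]])
    return path[::-1], score[n - 1][last]

# Toy, made-up numbers: topics 0 = location, 1 = casualties, 2 = relief efforts.
init = [0.8, 0.1, 0.1]
trans = [[0.1, 0.8, 0.1],
         [0.1, 0.1, 0.8],
         [0.1, 0.1, 0.8]]
emit = [[0.6, 0.2, 0.2],
        [0.2, 0.6, 0.2],
        [0.2, 0.2, 0.6]]
path, logp = viterbi(init, trans, emit)
print(path)  # [0, 1, 2]
```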

![Generating Wikipedia articles](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-19.jpg)
Generating Wikipedia articles: Sauper and Barzilay, 2009 [12]
- Articles on diseases and American film actors
- Create templates of subtopics
- Focus only on subtopic-level structure
- Use paragraphs from documents on the web

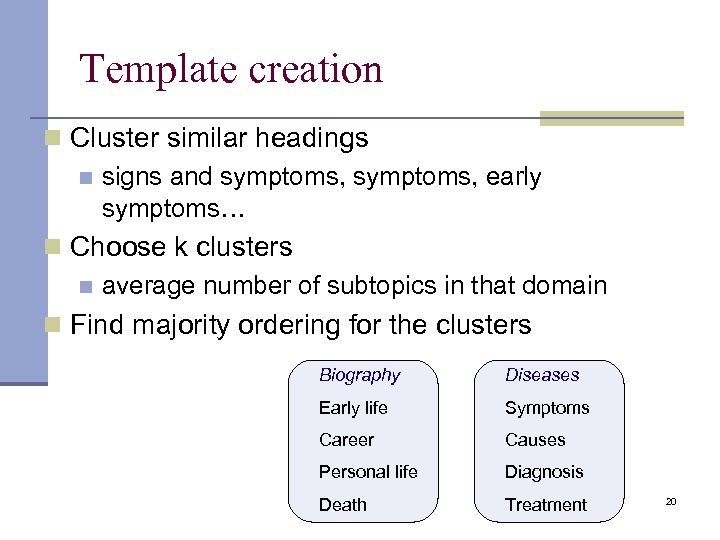

Template creation
- Cluster similar headings
  - e.g. signs and symptoms, early symptoms, …
- Choose k clusters
  - k is the average number of subtopics in that domain
- Find the majority ordering for the clusters
- Resulting templates
  - Biography: Early life, Career, Personal life, Death
  - Diseases: Symptoms, Causes, Diagnosis, Treatment
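
The slide does not say how the majority ordering is computed. One simple reading, sketched here purely as an assumption: count, for every pair of clusters, how many training articles place one before the other, then rank clusters by how many pairwise majorities they win (a Copeland-style rule). The cluster labels and the `majority_order` name are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

def majority_order(articles):
    """articles: list of cluster-label sequences, one per training article.
    Ranks clusters by the number of other clusters they precede in a
    majority of the articles where both appear (ties broken alphabetically)."""
    before = defaultdict(int)   # before[(a, b)]: articles where a precedes b
    clusters = set()
    for seq in articles:
        clusters.update(seq)
        pos = {c: i for i, c in enumerate(seq)}
        for a, b in combinations(pos, 2):
            if pos[a] < pos[b]:
                before[(a, b)] += 1
            else:
                before[(b, a)] += 1
    wins = {c: 0 for c in clusters}
    for a, b in combinations(sorted(clusters), 2):
        if before[(a, b)] > before[(b, a)]:
            wins[a] += 1
        elif before[(b, a)] > before[(a, b)]:
            wins[b] += 1
    return sorted(clusters, key=lambda c: (-wins[c], c))

articles = [["Symptoms", "Causes", "Diagnosis", "Treatment"],
            ["Causes", "Symptoms", "Diagnosis", "Treatment"],
            ["Symptoms", "Causes", "Treatment"]]
print(majority_order(articles))  # ['Symptoms', 'Causes', 'Diagnosis', 'Treatment']
```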

Extraction of excerpts and ranking
- Candidates for a subtopic
  - Paragraphs from the top 10 pages of search results
- Measure the relevance of candidates for that subtopic
  - Features: unigrams, bigrams, number of sentences, …

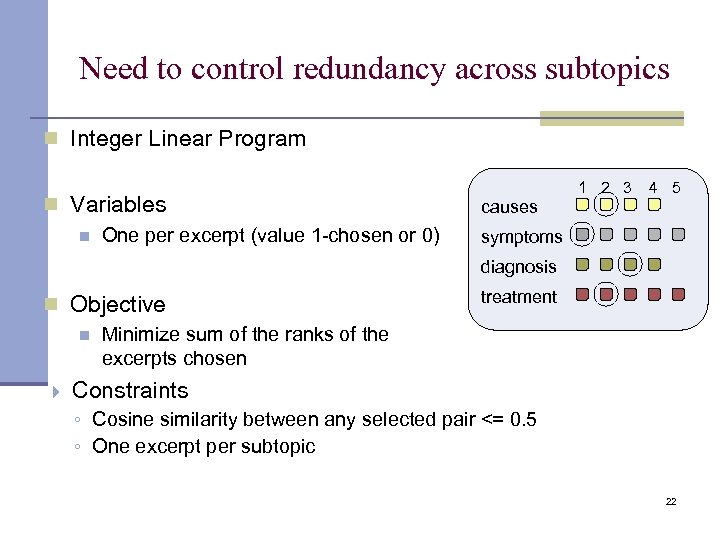

Need to control redundancy across subtopics
- Integer Linear Program
  - Variables: one per excerpt (value 1 if chosen, 0 otherwise)
  - Objective: minimize the sum of the ranks of the excerpts chosen
  - Constraints:
    - Cosine similarity between any selected pair <= 0.5
    - One excerpt per subtopic
- [Figure: candidate excerpts ranked 1 to 5 for the subtopics causes, symptoms, diagnosis, treatment]
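
For a handful of candidates, the same optimization can be checked by exhaustive search. The sketch below is a stand-in for an ILP solver, not the solver Sauper and Barzilay used: it enumerates one excerpt per subtopic, discards any selection containing a pair with cosine similarity above 0.5, and keeps the selection with the smallest sum of ranks. Excerpts are hypothetical bag-of-words dicts; the search is exponential in the number of subtopics, which is why an ILP solver is used in practice.

```python
import math
from itertools import product

def cosine(u, v):
    """Cosine similarity of two bag-of-words dicts {word: count}."""
    dot = sum(c * v.get(w, 0) for w, c in u.items())
    norm = (math.sqrt(sum(c * c for c in u.values()))
            * math.sqrt(sum(c * c for c in v.values())))
    return dot / norm if norm else 0.0

def select_excerpts(candidates, max_sim=0.5):
    """candidates[t]: list of (rank, bag_of_words) for subtopic t (rank 1 = best).
    Chooses one excerpt per subtopic, minimizing the sum of ranks, subject to
    pairwise cosine similarity <= max_sim. Exhaustive search stands in for an
    ILP solver, so this is only feasible for tiny inputs."""
    best, best_cost = None, math.inf
    for choice in product(*(range(len(c)) for c in candidates)):
        picked = [candidates[t][i] for t, i in enumerate(choice)]
        if any(cosine(picked[a][1], picked[b][1]) > max_sim
               for a in range(len(picked)) for b in range(a + 1, len(picked))):
            continue                      # redundancy constraint violated
        cost = sum(rank for rank, _ in picked)
        if cost < best_cost:
            best, best_cost = choice, cost
    return best, best_cost

# Hypothetical candidates for two subtopics (causes, symptoms).
causes = [(1, {"virus": 2, "fever": 1}), (2, {"virus": 1, "infection": 1})]
symptoms = [(1, {"virus": 2, "fever": 1}), (2, {"fever": 1, "rash": 1})]
print(select_excerpts([causes, symptoms]))  # ((0, 1), 3)
```

The top-ranked excerpt is identical for both subtopics, so the redundancy constraint forces the second-ranked symptoms excerpt instead.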

![Linguistic models of coherence](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-23.jpg)
Linguistic models of coherence [Halliday and Hasan, 1976] [13]
- Coherent text is characterized by the presence of various types of cohesive links that facilitate text comprehension
  - Reference and lexical reiteration
    - Pronouns, definite descriptions, semantically related words
  - Discourse relations (conjunction)
    - I closed the window because it started raining.
  - Substitution (one) or ellipsis (do)

Referential coherence
- Centering theory
  - Tracking focus of attention across adjacent sentences [14, 15, 16, 17]
- Syntactic form of references
  - Particularly first and subsequent mention [18, 19], pronominalization
- Lexical chains
  - Identifying and tracking topics within a text [20, 21, 22, 23]

Discourse relations
- Explicit vs. implicit
  - Explicit: signaled by a discourse connective
    - I stayed home because I had a headache.
  - Implicit: inferred without the presence of a connective
    - I took my umbrella. [Because] The forecast was for rain in the afternoon.

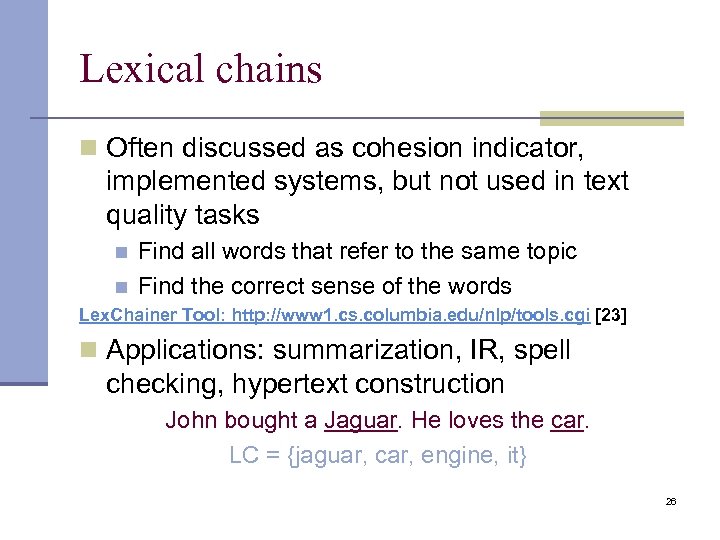

Lexical chains
- Often discussed as a cohesion indicator and implemented in systems, but not used in text quality tasks
- Find all words that refer to the same topic
- Find the correct sense of the words
- LexChainer tool: http://www1.cs.columbia.edu/nlp/tools.cgi [23]
- Applications: summarization, IR, spell checking, hypertext construction
- Example: John bought a Jaguar. He loves the car.
  - LC = {jaguar, car, engine, it}

Centering theory ingredients (Grosz et al., 1995)
- Deals with local coherence
  - What happens to the flow from sentence to sentence
  - Does not deal with global structuring of the text (paragraphs/segments)
- Defines coherence as an estimate of the processing load required to "understand" the text

Processing load
- Upon hearing a sentence, a person
  - makes a cognitive effort to interpret the expressions in the utterance
  - integrates the meaning of the utterance with that of the previous sentence
  - creates some expectations about what might come next

Example
(1) John met his friend Mary today.
(2) He was surprised to see her.
(3) He thought she was still in Italy.
- Form of referring expressions
  - Anaphora needs to be resolved
  - "Create" a discourse entity at first mention with a full noun phrase
  - Creating expectations

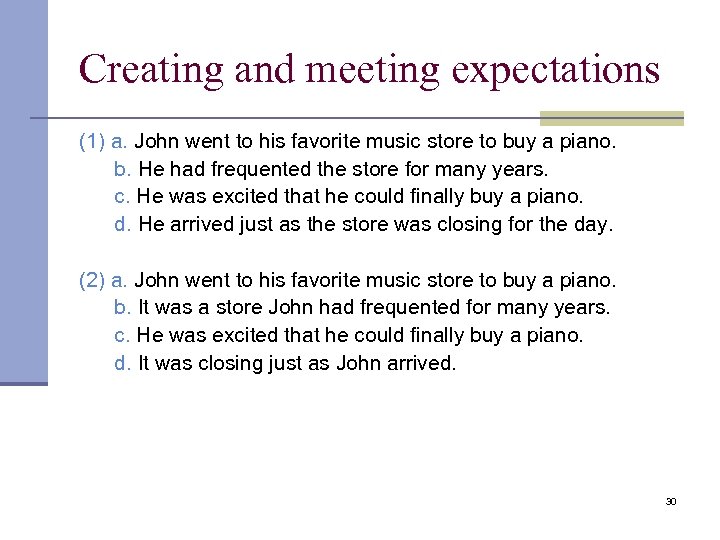

Creating and meeting expectations
(1)
a. John went to his favorite music store to buy a piano.
b. He had frequented the store for many years.
c. He was excited that he could finally buy a piano.
d. He arrived just as the store was closing for the day.
(2)
a. John went to his favorite music store to buy a piano.
b. It was a store John had frequented for many years.
c. He was excited that he could finally buy a piano.
d. It was closing just as John arrived.

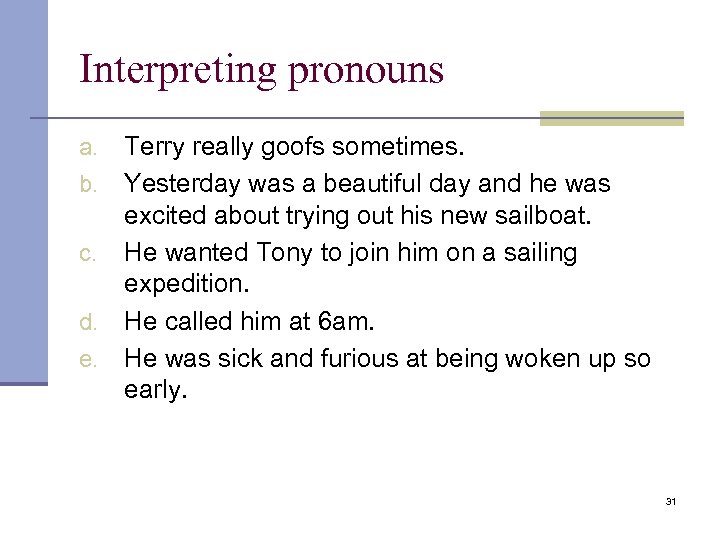

Interpreting pronouns
a. Terry really goofs sometimes.
b. Yesterday was a beautiful day and he was excited about trying out his new sailboat.
c. He wanted Tony to join him on a sailing expedition.
d. He called him at 6 am.
e. He was sick and furious at being woken up so early.

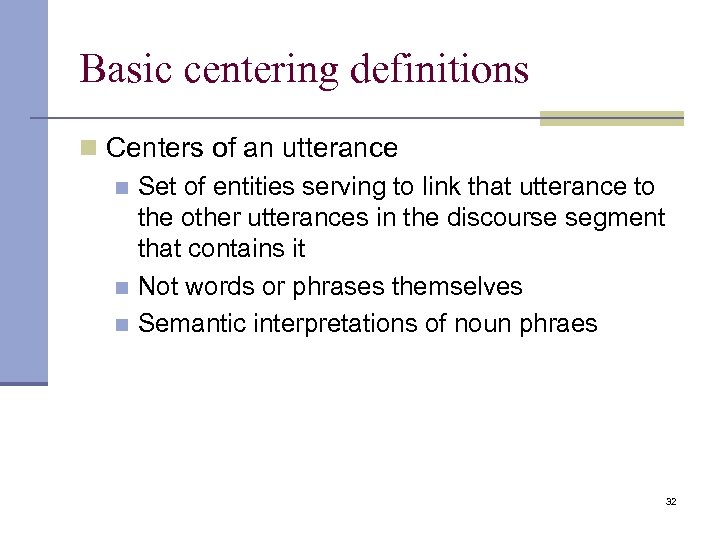

Basic centering definitions
- Centers of an utterance
  - Set of entities serving to link that utterance to the other utterances in the discourse segment that contains it
  - Not words or phrases themselves
  - Semantic interpretations of noun phrases

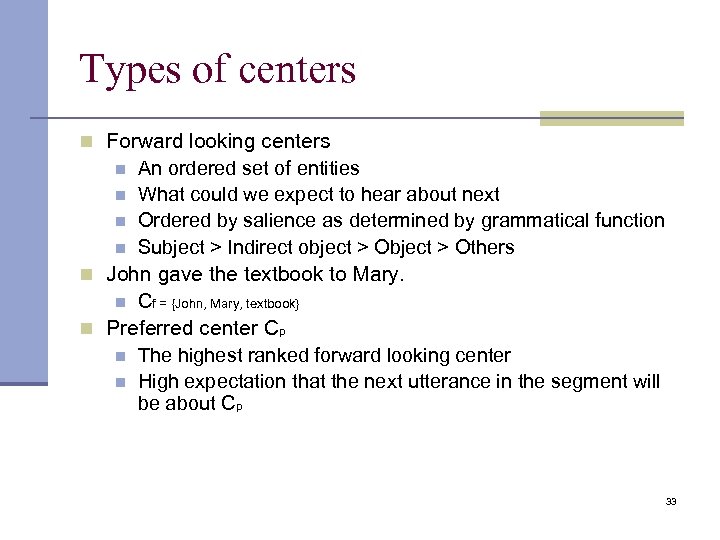

Types of centers
- Forward-looking centers
  - An ordered set of entities
  - What could we expect to hear about next
  - Ordered by salience as determined by grammatical function
    - Subject > Indirect object > Others
    - John gave the textbook to Mary.
    - Cf = {John, Mary, textbook}
- Preferred center Cp
  - The highest-ranked forward-looking center
  - High expectation that the next utterance in the segment will be about Cp
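
Ordering Cf by grammatical function is a stable sort on a role ranking. A minimal sketch, assuming each utterance's mentions already come with a resolved entity and a grammatical role; the role labels ("subj", "iobj", "obj") and function names are hypothetical. Following the slide, "to Mary" is treated as the indirect object.

```python
# Salience ranking from the slide: subject > indirect object > others.
# The role labels "subj", "iobj", "obj" are hypothetical names for this sketch.
RANK = {"subj": 0, "iobj": 1}

def forward_centers(mentions):
    """mentions: (entity, role) pairs in textual order.
    Returns Cf ordered by salience; ties keep textual order (sorted is stable)."""
    ordered = sorted(mentions, key=lambda m: RANK.get(m[1], 2))
    cf = []
    for entity, _ in ordered:
        if entity not in cf:        # keep each entity once, at its best rank
            cf.append(entity)
    return cf

def preferred_center(cf):
    """Cp: the highest-ranked forward-looking center (None if Cf is empty)."""
    return cf[0] if cf else None

cf = forward_centers([("John", "subj"), ("textbook", "obj"), ("Mary", "iobj")])
print(cf, preferred_center(cf))  # ['John', 'Mary', 'textbook'] John
```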

Backward-looking center
- Single backward-looking center, Cb(U)
  - For each utterance other than the segment-initial one
- The backward-looking center of utterance Un+1 connects with one of the forward-looking centers of Un
- Cb(Un+1) is the most highly ranked element from Cf(Un) that is also realized in Un+1
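
The definition of Cb translates directly into a scan of Cf(Un) in salience order. A minimal sketch, assuming Cf(Un) is already ordered and the entities realized in Un+1 are known; `backward_center` is a hypothetical name.

```python
def backward_center(cf_prev, realized_next):
    """Cb(Un+1): the highest-ranked element of Cf(Un) that is realized in Un+1.
    cf_prev lists Cf(Un) in salience order; realized_next is any collection of
    the entities mentioned in Un+1. Returns None when no entity carries over."""
    for entity in cf_prev:
        if entity in realized_next:
            return entity
    return None

# "He called him at 6 am." followed by "Tony was sick and furious ..."
print(backward_center(["Terry", "Tony"], {"Tony"}))  # Tony
```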

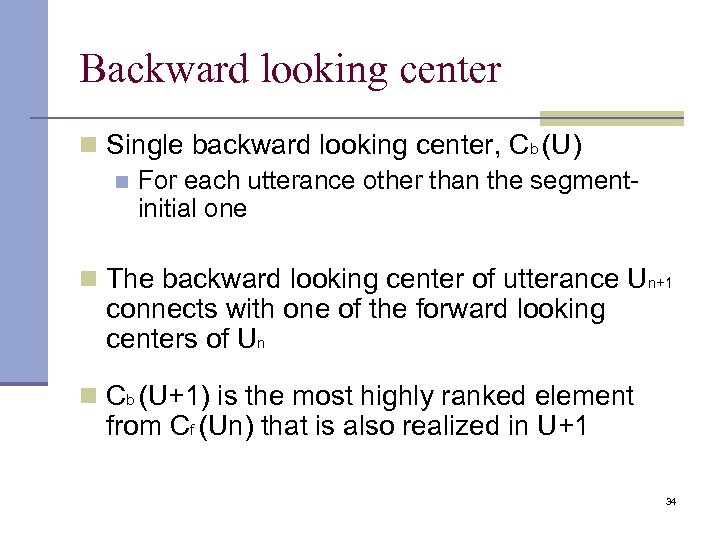

![Centering transitions ordering](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-35.jpg)
Centering transitions ordering

| | Cb(Un+1) = Cb(Un) OR Cb(Un) = [?] | Cb(Un+1) != Cb(Un) |
|---|---|---|
| Cb(Un+1) = Cp(Un+1) | continue | smooth-shift |
| Cb(Un+1) != Cp(Un+1) | retain | rough-shift |
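
The table amounts to two yes/no questions. A minimal sketch, assuming Cb(Un+1) has already been computed and exists (utterances with no Cb at all fall outside the table); `transition` and `rough_shift_share` are hypothetical names. The second helper computes the share of rough-shifts in a text, the kind of percentage used in essay scoring.

```python
def transition(cb_prev, cb_next, cp_next):
    """Classify the centering transition from Un to Un+1.
    cb_prev is None when Cb(Un) is undefined (e.g. segment-initial Un)."""
    same_cb = cb_prev is None or cb_next == cb_prev
    if same_cb:
        return "continue" if cb_next == cp_next else "retain"
    return "smooth-shift" if cb_next == cp_next else "rough-shift"

def rough_shift_share(transitions):
    """Fraction of transitions in a text that are rough-shifts."""
    return transitions.count("rough-shift") / len(transitions)

# (Cb(Un), Cb(Un+1), Cp(Un+1)) for the Terry/Tony discourse analysed in these slides.
pairs = [(None, "Terry", "Terry"), ("Terry", "Terry", "Terry"),
         ("Terry", "Terry", "Terry"), ("Terry", "Tony", "Tony"),
         ("Tony", "Tony", "Tony"), ("Tony", "Tony", "Terry")]
print([transition(*p) for p in pairs])
# ['continue', 'continue', 'continue', 'smooth-shift', 'continue', 'retain']
```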

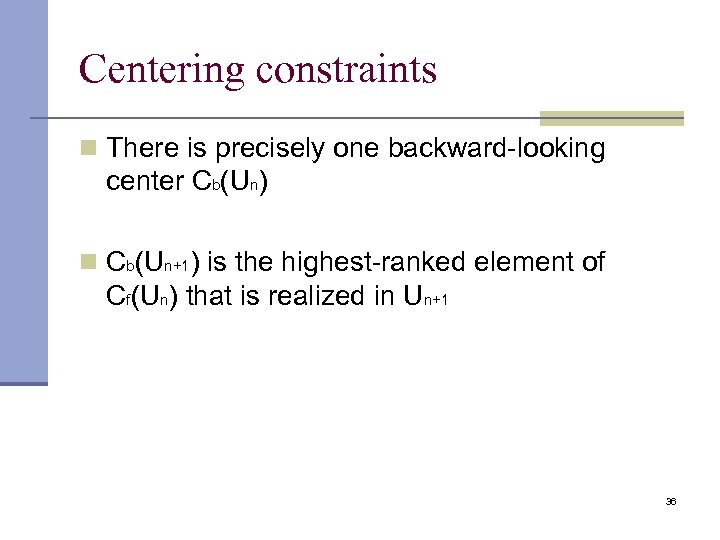

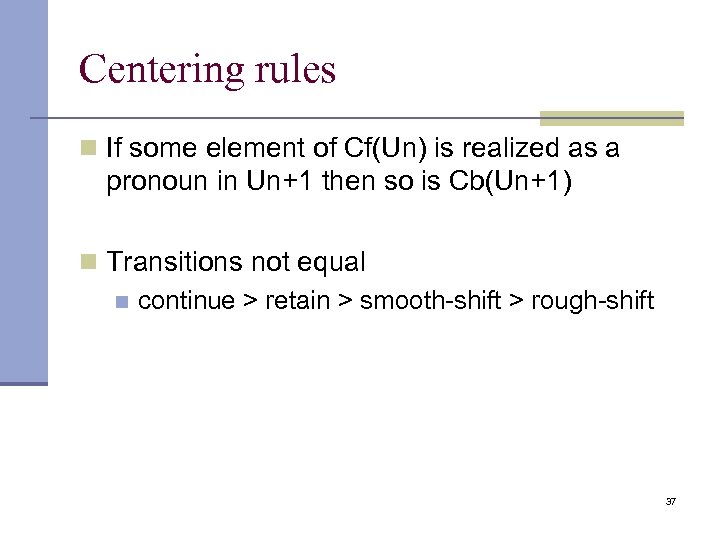

Centering constraints
- There is precisely one backward-looking center Cb(Un)
- Cb(Un+1) is the highest-ranked element of Cf(Un) that is realized in Un+1

Centering rules
- Rule 1: if some element of Cf(Un) is realized as a pronoun in Un+1, then so is Cb(Un+1)
- Rule 2: transitions are not equal
  - continue > retain > smooth-shift > rough-shift

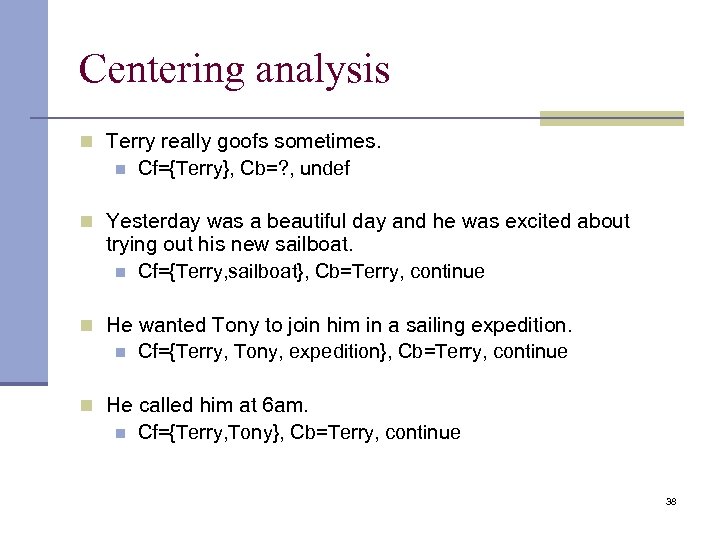

Centering analysis
- Terry really goofs sometimes.
  - Cf = {Terry}, Cb = ? (undefined)
- Yesterday was a beautiful day and he was excited about trying out his new sailboat.
  - Cf = {Terry, sailboat}, Cb = Terry, continue
- He wanted Tony to join him on a sailing expedition.
  - Cf = {Terry, Tony, expedition}, Cb = Terry, continue
- He called him at 6 am.
  - Cf = {Terry, Tony}, Cb = Terry, continue

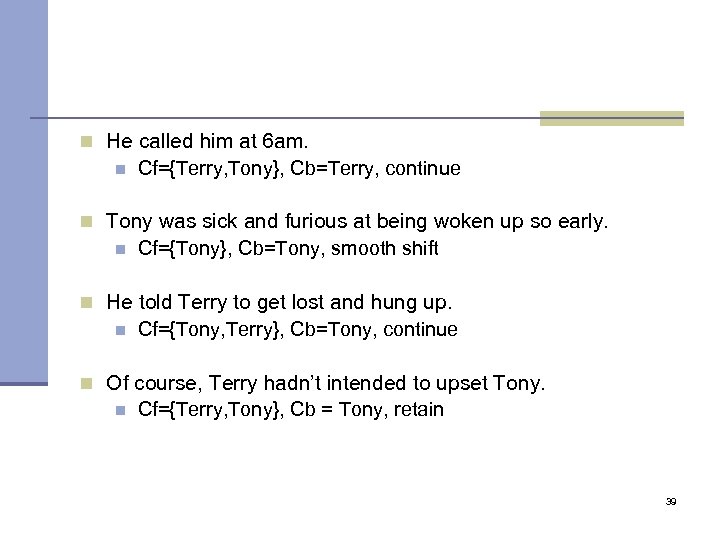

- He called him at 6 am.
  - Cf = {Terry, Tony}, Cb = Terry, continue
- Tony was sick and furious at being woken up so early.
  - Cf = {Tony}, Cb = Tony, smooth-shift
- He told Terry to get lost and hung up.
  - Cf = {Tony, Terry}, Cb = Tony, continue
- Of course, Terry hadn't intended to upset Tony.
  - Cf = {Terry, Tony}, Cb = Tony, retain

Rough shifts in evaluation of writing skills (Miltsakaki and Kukich, 2002)
- Automatic grading of essays by E-rater
  - Syntactic variety
    - Represented by features that quantify the occurrence of clause types
  - Clear transitions
    - Cue phrases in certain syntactic constructions
  - Existence of main and supporting points
  - Appropriateness of the vocabulary content of the essay
- What about local coherence?

Essay score model
- Human score available
- E-rater prediction available
- Percentage of rough-shifts in each essay: analysis done manually
- Negative correlation between the human score and the percentage of rough-shifts

- Linear multi-factor regression
  - Approximate the human score as a linear function of the E-rater prediction and the percentage of rough-shifts
- Adding rough-shifts significantly improves the model of the score
  - 0.5 improvement on a 1 to 6 scale
- How easy or difficult would it be to fully automate the rough-shift variable?

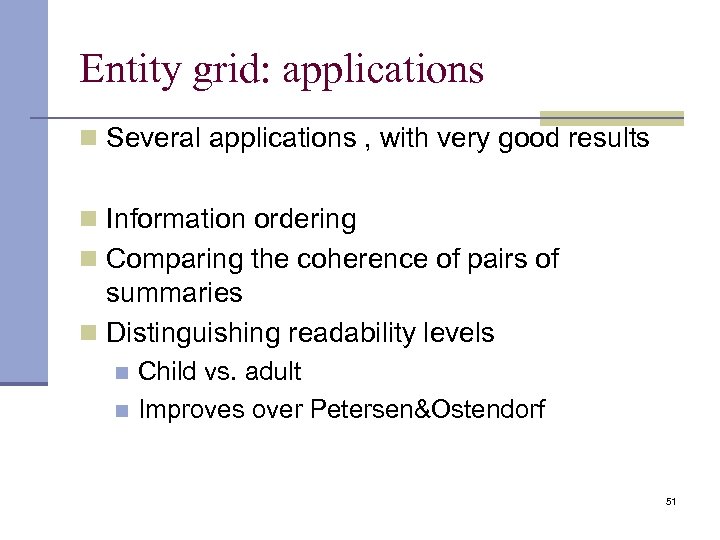

Variants of centering and application to information ordering
- Karamanis et al. (2009) is the most comprehensive overview of variants of centering theory and an evaluation of centering in a specific task related to text quality

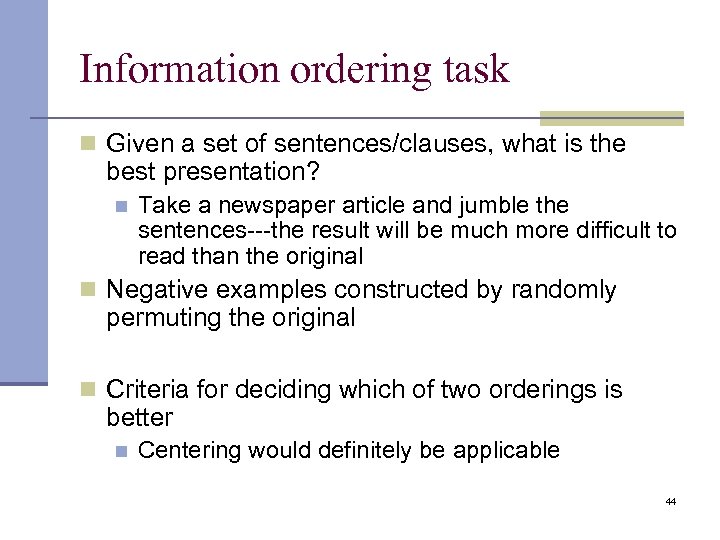

Information ordering task
- Given a set of sentences/clauses, what is the best presentation?
- Take a newspaper article and jumble the sentences: the result will be much more difficult to read than the original
- Negative examples are constructed by randomly permuting the original
- Criteria for deciding which of two orderings is better
  - Centering would definitely be applicable
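
Negative examples of this kind are easy to generate. A minimal sketch, assuming sentences are plain strings; the function name, the seed parameter and the cap on attempts are choices of this sketch, not part of any published setup.

```python
import random

def permuted_orderings(sentences, k, seed=0):
    """Return up to k distinct random permutations of `sentences` that differ
    from the original order, for use as negative examples."""
    rng = random.Random(seed)
    original = tuple(sentences)
    seen = set()
    # Cap the number of attempts: a short text may have fewer than k permutations.
    for _ in range(100 * k):
        if len(seen) == k:
            break
        perm = list(original)
        rng.shuffle(perm)
        if tuple(perm) != original:
            seen.add(tuple(perm))
    return [list(p) for p in sorted(seen)]

negatives = permuted_orderings(["s1", "s2", "s3", "s4"], k=3)
print(len(negatives))  # 3
```

A three-sentence text has only five non-original orderings, so asking for more returns at most five.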

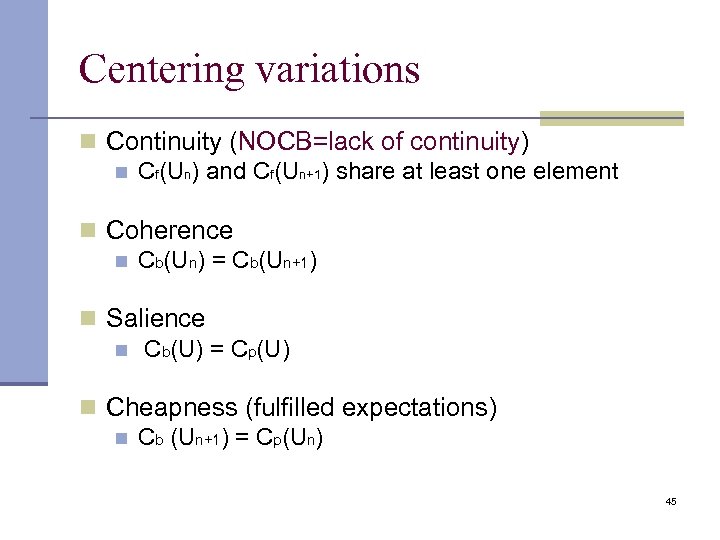

Centering variations
- Continuity (NOCB = lack of continuity)
  - Cf(Un) and Cf(Un+1) share at least one element
- Coherence
  - Cb(Un) = Cb(Un+1)
- Salience
  - Cb(U) = Cp(U)
- Cheapness (fulfilled expectations)
  - Cb(Un+1) = Cp(Un)

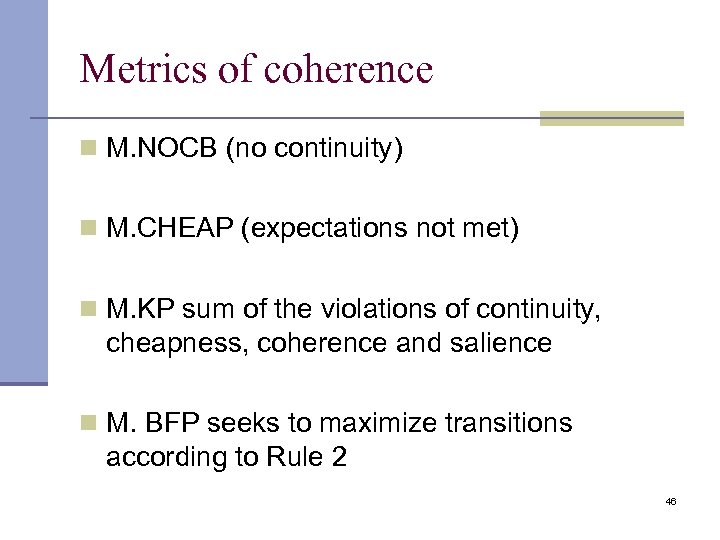

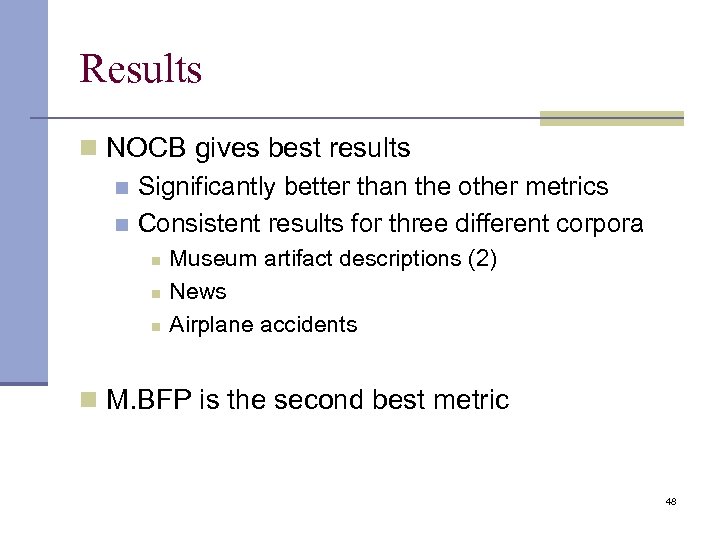

Metrics of coherence
- M.NOCB (no continuity)
- M.CHEAP (expectations not met)
- M.KP: sum of the violations of continuity, cheapness, coherence and salience
- M.BFP: seeks to maximize transitions according to Rule 2
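
M.NOCB and M.CHEAP only need the ordered Cf list of each utterance. The sketch below counts their violations for one ordering, so a lower count suggests a more coherent ordering. It is an illustration under assumptions, not Karamanis et al.'s implementation: Cf lists are given as input, a pair without any Cb is counted as a cheapness violation, and the function names are hypothetical. M.KP would additionally count coherence and salience violations in the same loop.

```python
def backward_center(cf_prev, cf_next):
    """Cb(Un+1): highest-ranked element of Cf(Un) also realized in Un+1."""
    for entity in cf_prev:
        if entity in cf_next:
            return entity
    return None

def m_nocb(cfs):
    """M.NOCB: adjacent pairs whose Cf lists share no entity."""
    return sum(1 for a, b in zip(cfs, cfs[1:]) if not set(a) & set(b))

def m_cheap(cfs):
    """M.CHEAP: adjacent pairs where Cb(Un+1) != Cp(Un).
    A pair without any Cb (a NOCB pair) also counts as a violation here."""
    return sum(1 for a, b in zip(cfs, cfs[1:])
               if not a or backward_center(a, b) != a[0])

# Cf lists of the Terry/Tony discourse, in the original order.
cfs = [["Terry"], ["Terry", "sailboat"], ["Terry", "Tony", "expedition"],
       ["Terry", "Tony"], ["Tony"], ["Tony", "Terry"], ["Terry", "Tony"]]
print(m_nocb(cfs), m_cheap(cfs))  # 0 1
```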

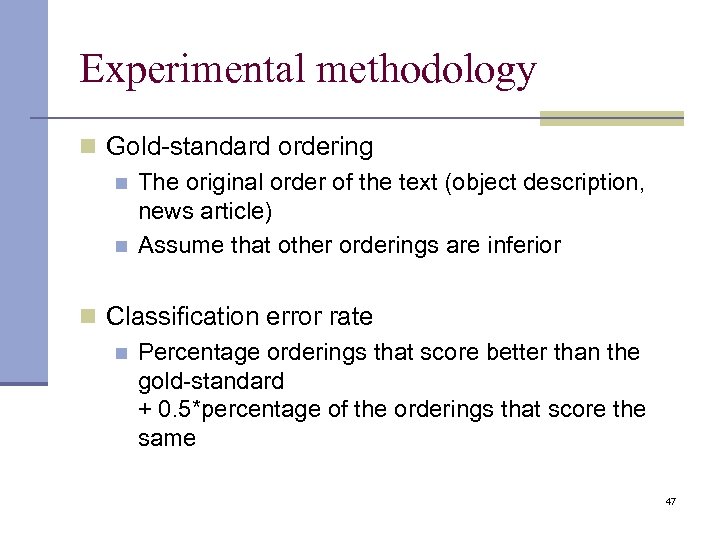

Experimental methodology
- Gold-standard ordering
  - The original order of the text (object description, news article)
  - Assume that other orderings are inferior
- Classification error rate
  - Percentage of orderings that score better than the gold standard + 0.5 × percentage of orderings that score the same
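
The error rate can be computed directly from the metric scores. A minimal sketch, assuming one score for the gold-standard ordering and one per alternative ordering; it returns a fraction in [0, 1] rather than a percentage, and the name and `higher_is_better` flag are hypothetical.

```python
def classification_error(gold_score, other_scores, higher_is_better=True):
    """Fraction of alternative orderings that score better than the gold
    standard, plus half the fraction that tie with it (0 = perfect)."""
    if not higher_is_better:
        # Metrics such as M.NOCB count violations, so lower is better.
        gold_score = -gold_score
        other_scores = [-s for s in other_scores]
    better = sum(1 for s in other_scores if s > gold_score)
    ties = sum(1 for s in other_scores if s == gold_score)
    return (better + 0.5 * ties) / len(other_scores)

print(classification_error(3, [1, 5, 3, 2]))  # 0.375
```

Counting ties as half an error keeps a metric that cannot distinguish orderings from looking better than chance.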

Results
- NOCB gives the best results
  - Significantly better than the other metrics
  - Consistent results for three different corpora
    - Museum artifact descriptions (2)
    - News
    - Airplane accidents
- M.BFP is the second-best metric

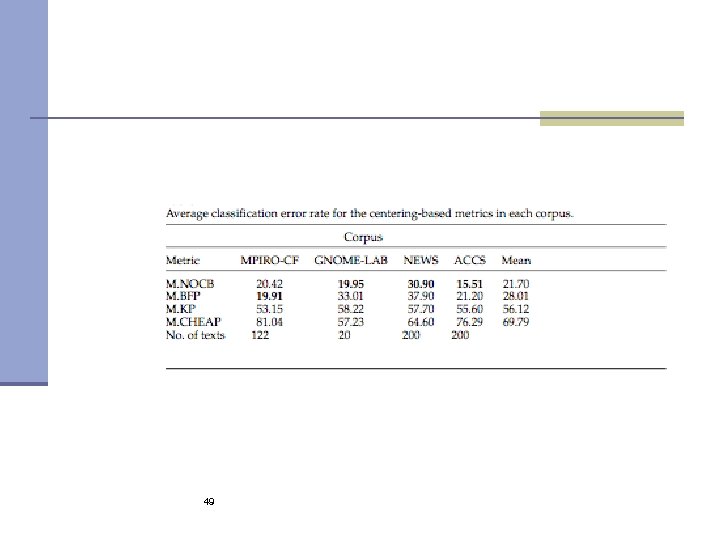

Entity grid (Barzilay and Lapata, 2005, 2008)
- Inspired by centering
- Tracks entities across adjacent sentences, as well as their syntactic positions
- Much easier to compute from raw text
- Brown Coherence Toolkit: http://www.cs.brown.edu/~melsner/manual.html

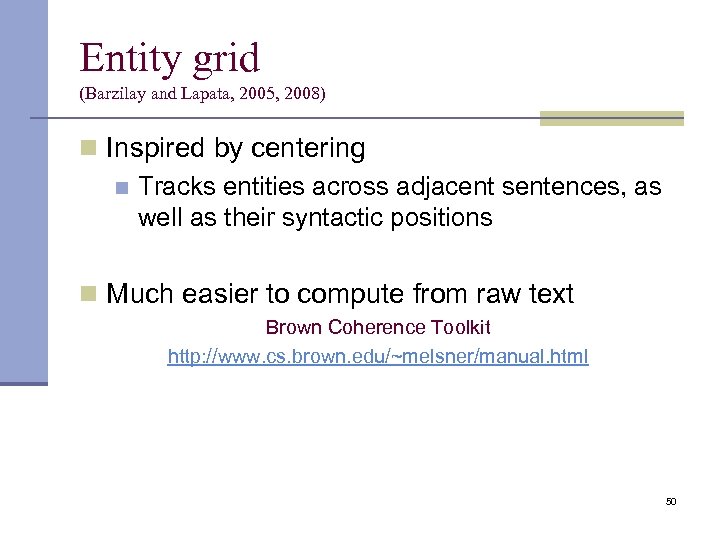

Entity grid: applications
- Several applications, with very good results
  - Information ordering
  - Comparing the coherence of pairs of summaries
  - Distinguishing readability levels
    - Child vs. adult
    - Improves over Petersen & Ostendorf

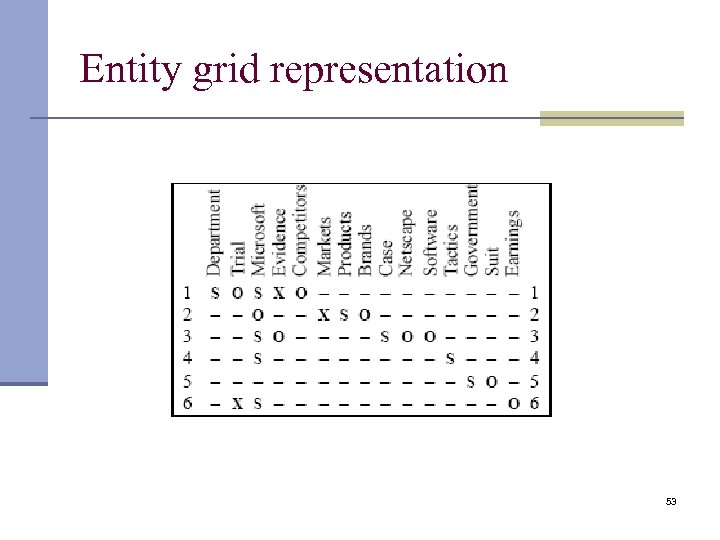

![Entity grid example](https://present5.com/presentation/9ed7f32e9d89618830e411705207f015/image-52.jpg)
Entity grid example
1. [The Justice Department]S is conducting an [anti-trust trial]O against [Microsoft Corp.]X with [evidence]X that [the company]S is increasingly attempting to crush [competitors]O.
2. [Microsoft]O is accused of trying to forcefully buy into [markets]X where [its own products]S are not competitive enough to unseat [established brands]O.
3. [The case]S revolves around [evidence]O of [Microsoft]S aggressively pressuring [Netscape]O into merging [browser software]O.
4. [Microsoft]S claims [its tactics]S are commonplace and good economically.
5. [The government]S may file [a civil suit]O ruling that [conspiracy]S to curb [competition]O through [collusion]X is [a violation of the Sherman Act]O.
6. [Microsoft]S continues to show [increased earnings]O despite [the trial]X.

Entity grid representation


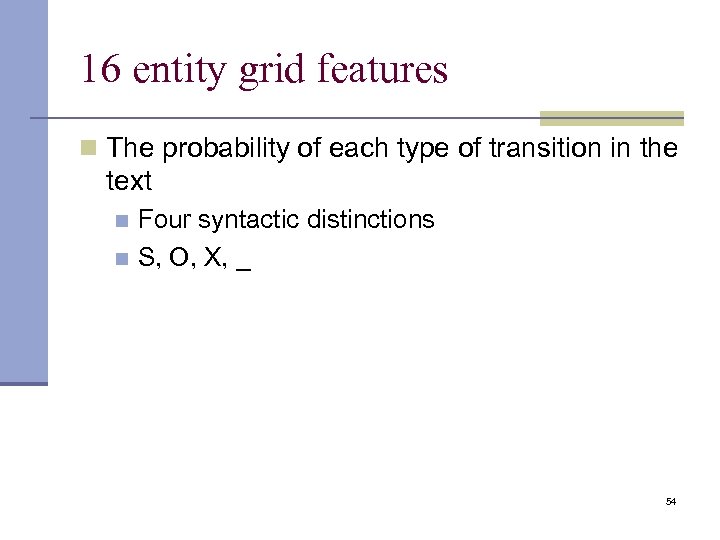

16 entity grid features
- The probability of each type of transition in the text
- Four syntactic distinctions: S, O, X, _ (subject, object, other, absent), giving 4 × 4 = 16 transitions between adjacent sentences


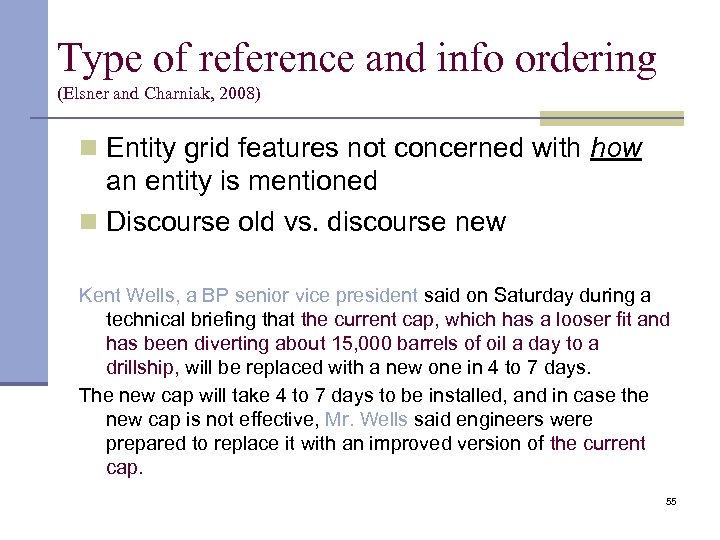
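The grid and its 16 transition features can be sketched in a few lines of Python. This is a minimal illustration, not Barzilay and Lapata's implementation: it assumes mentions already carry a grammatical role and are already grouped by coreference (the hypothetical helper `build_grid` takes one list of `(entity, role)` pairs per sentence), and it keeps the highest-ranked role (S > O > X) when an entity is mentioned twice in one sentence.

```python
from collections import Counter
from itertools import product

ROLES = ("S", "O", "X", "_")   # subject, object, other, absent
RANK = {"S": 3, "O": 2, "X": 1}


def build_grid(sentences):
    """sentences: one list of (entity, role) mentions per sentence, with
    coreference already resolved. Returns {entity: [role per sentence]}."""
    rows = []
    for mentions in sentences:
        best = {}
        for entity, role in mentions:
            # Keep the highest-ranked role when an entity is mentioned twice.
            if RANK[role] > RANK.get(best.get(entity), 0):
                best[entity] = role
        rows.append(best)
    entities = sorted({e for row in rows for e in row})
    return {e: [row.get(e, "_") for row in rows] for e in entities}


def transition_features(grid):
    """Probability of each of the 4 x 4 = 16 role transitions between
    adjacent sentences, pooled over all entity columns."""
    counts = Counter()
    for column in grid.values():
        counts.update(zip(column, column[1:]))
    total = sum(counts.values())
    return {t: (counts[t] / total if total else 0.0)
            for t in product(ROLES, repeat=2)}
```

Applied to the first three sentences of the example above, with "the company" resolved to Microsoft, the Microsoft column comes out as S, O, S.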

Type of reference and info ordering (Elsner and Charniak, 2008)
- Entity grid features are not concerned with how an entity is mentioned
- Discourse old vs. discourse new

Kent Wells, a BP senior vice president said on Saturday during a technical briefing that the current cap, which has a looser fit and has been diverting about 15,000 barrels of oil a day to a drillship, will be replaced with a new one in 4 to 7 days. The new cap will take 4 to 7 days to be installed, and in case the new cap is not effective, Mr. Wells said engineers were prepared to replace it with an improved version of the current cap.


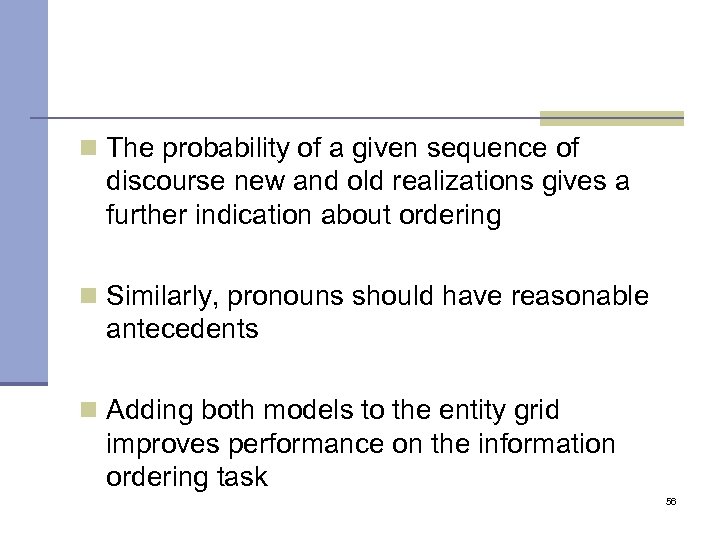

- The probability of a given sequence of discourse-new and discourse-old realizations gives a further indication about ordering
- Similarly, pronouns should have reasonable antecedents
- Adding both models to the entity grid improves performance on the information ordering task


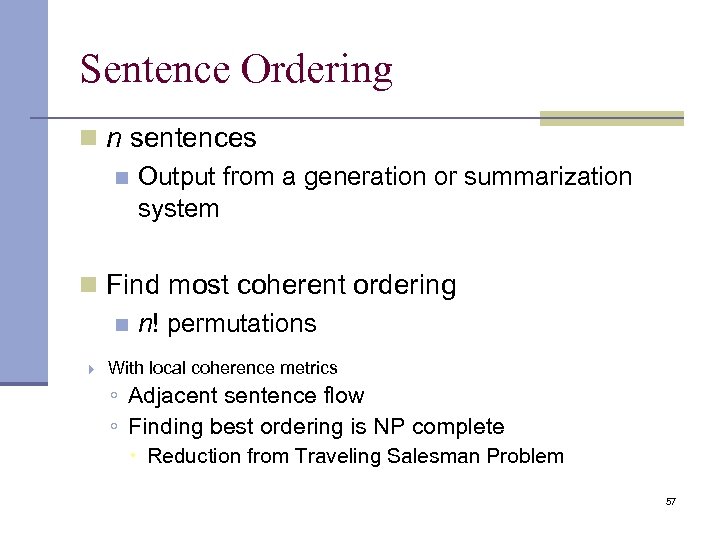
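One way to make the discourse-new/old idea concrete is to score how well mention forms fit their status under a given ordering. The sketch below is a deliberate simplification, not Elsner and Charniak's model: it assumes each mention is pre-labeled with a hypothetical coarse form (`"full"` for a description such as "Kent Wells, a BP senior vice president", `"short"` for "Mr. Wells" or a pronoun) and learns an add-one smoothed P(form | status) from texts in their original order.

```python
import math
from collections import Counter

FORMS = ("full", "short")  # hypothetical coarse mention forms


def statuses(mentions):
    """mentions: (entity, form) pairs in text order. An entity's first
    mention is discourse-new; later mentions are discourse-old."""
    seen, out = set(), []
    for entity, form in mentions:
        out.append(("old" if entity in seen else "new", form))
        seen.add(entity)
    return out


def train_form_model(texts):
    """Add-one smoothed P(form | status) from texts in their original order."""
    counts = {"new": Counter(), "old": Counter()}
    for mentions in texts:
        for status, form in statuses(mentions):
            counts[status][form] += 1
    return {s: {f: (c[f] + 1) / (sum(c.values()) + len(FORMS)) for f in FORMS}
            for s, c in counts.items()}


def score_ordering(mentions, model):
    """Log-probability of the mention forms given this order's new/old statuses."""
    return sum(math.log(model[s][f]) for s, f in statuses(mentions))
```

Putting "Mr. Wells" before the full introduction makes the first mention discourse-new but short, which this model penalizes.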

Sentence ordering
- Input: n sentences, e.g. output from a generation or summarization system
- Goal: find the most coherent ordering among the n! permutations
- With local coherence metrics (adjacent sentence flow), finding the best ordering is NP-complete, by reduction from the Traveling Salesman Problem

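To make the search problem concrete, here is a sketch that treats the local coherence metric as an arbitrary scoring function `coherence(a, b)` for adjacent sentences (a hypothetical placeholder for any of the models in this section). Exhaustive search enumerates all n! permutations and is only feasible for a handful of sentences; the greedy variant is one common approximation, with no optimality guarantee.

```python
from itertools import permutations


def ordering_score(order, coherence):
    """Sum of local coherence scores over adjacent sentence pairs."""
    return sum(coherence(a, b) for a, b in zip(order, order[1:]))


def best_ordering_exhaustive(sentences, coherence):
    """Exact search over all n! permutations: only feasible for small n."""
    return list(max(permutations(sentences),
                    key=lambda order: ordering_score(order, coherence)))


def best_ordering_greedy(sentences, coherence, start):
    """Greedy approximation: repeatedly append the sentence that follows the
    current last sentence most coherently. No optimality guarantee."""
    order = [start]
    remaining = [s for s in sentences if s != start]
    while remaining:
        nxt = max(remaining, key=lambda s: coherence(order[-1], s))
        order.append(nxt)
        remaining.remove(nxt)
    return order
```

The exhaustive version is the exact objective that the NP-completeness result refers to; in practice it has to be replaced by search heuristics once n grows beyond about ten.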

Word co-occurrence model (Lapata, ACL 2003; Soricut and Marcu, 2006) [23, 24]
- Idea from statistical machine translation: alignment models
- In translation, words in a sentence are aligned with words in its translation:
  - John went to a restaurant. / John est allé à un restaurant.
  - He ordered fish. / Il ordonna de poisson.
  - The waiter was very attentive. / Le garçon était très attentif.
- For coherence, each sentence is "aligned" with the sentence that follows it:
  - We … at a restaurant yesterday. → We also ordered some take away.
  - John went to a restaurant. → He ordered fish.
  - He ordered fish. → The waiter was very attentive.
  - The waiter was very attentive. → John gave him a huge tip.
- Translation probabilities such as P(fish | poisson) are replaced by probabilities of words co-occurring in adjacent sentences: P(ordered | restaurant), P(waiter | ordered), P(tip | waiter), …

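The sketch below follows the general shape of the Lapata (2003) model as we understand it: the probability of a sentence given the previous one is a product, over all word pairs drawn from the two sentences, of P(next-sentence word | previous-sentence word), estimated from counts over adjacent sentences in a training corpus. The whitespace tokenization and add-one smoothing are our assumptions; the original work used richer features.

```python
import math
from collections import Counter
from itertools import product


def _tokens(sentence):
    return sentence.lower().rstrip(".").split()


def train_cooccurrence(texts):
    """Count word pairs (a in sentence i-1, b in sentence i) over adjacent sentences."""
    pair_counts, prev_counts, vocab = Counter(), Counter(), set()
    for sentences in texts:
        for prev, nxt in zip(sentences, sentences[1:]):
            for a, b in product(_tokens(prev), _tokens(nxt)):
                pair_counts[(a, b)] += 1
                prev_counts[a] += 1
        for sentence in sentences:
            vocab.update(_tokens(sentence))
    return pair_counts, prev_counts, vocab


def log_prob_text(sentences, model):
    """Sum over adjacent sentences of log P(S_i | S_{i-1}), where
    P(S_i | S_{i-1}) = product over word pairs (a, b) of P(b | a)."""
    pair_counts, prev_counts, vocab = model
    v = len(vocab)
    total = 0.0
    for prev, nxt in zip(sentences, sentences[1:]):
        for a, b in product(_tokens(prev), _tokens(nxt)):
            # Add-one smoothed P(b | a).
            total += math.log((pair_counts[(a, b)] + 1) / (prev_counts[a] + v))
    return total
```

Because the model is directional, a text in its original order scores higher than the same sentences reversed, which is what makes it usable for ranking candidate orderings.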

Discourse (coherence) relations
- Only recently have empirical results shown that discourse relations are predictive of text quality (Pitler and Nenkova, 2008)


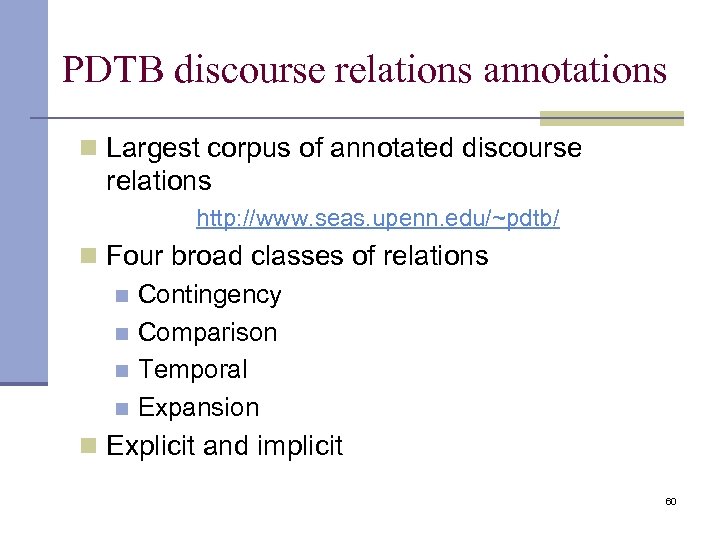

PDTB discourse relation annotations
- Largest corpus of annotated discourse relations: http://www.seas.upenn.edu/~pdtb/
- Four broad classes of relations:
  - Contingency
  - Comparison
  - Temporal
  - Expansion
- Explicit and implicit relations


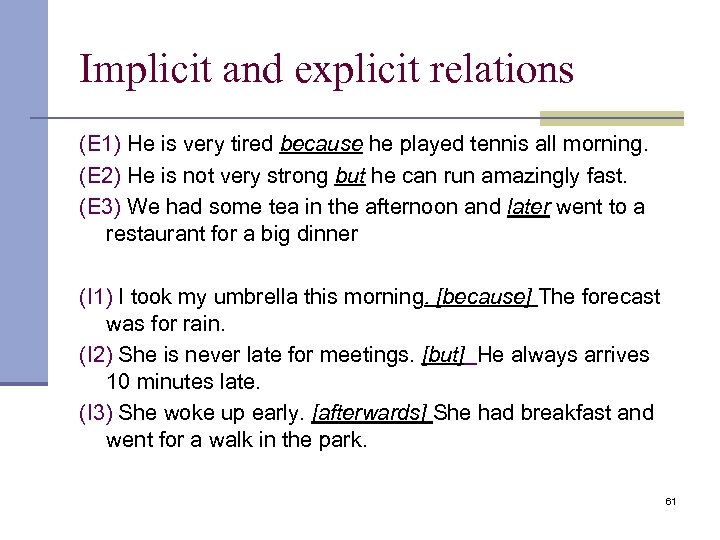

Implicit and explicit relations
- (E1) He is very tired because he played tennis all morning.
- (E2) He is not very strong but he can run amazingly fast.
- (E3) We had some tea in the afternoon and later went to a restaurant for a big dinner.
- (I1) I took my umbrella this morning. [because] The forecast was for rain.
- (I2) She is never late for meetings. [but] He always arrives 10 minutes late.
- (I3) She woke up early. [afterwards] She had breakfast and went for a walk in the park.


What is the relative importance of factors in determining text quality?
- Competent readers (native English speakers): graduate students at Penn
- Wall Street Journal texts
- 30 texts rated on a scale from 1 to 5:
  - How well-written is this article?
  - How well does the text fit together?
  - How easy was it to understand?
  - How interesting is the article?


- Several judgments for each text
- Final quality score was the average
- Scores range from 1.5 to 4.33
- Mean: 3.2


- Which of the many indicators will work best?
  - Research studies usually focus on only one or two
- How do indicators combine?
- Metrics:
  - Correlation coefficient
  - Accuracy of pairwise ranking prediction

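Both evaluation metrics are simple to compute. A sketch in plain Python; it returns only the coefficient, not the p-values reported on the following slides, which a statistics library would normally supply. In the pairwise metric, pairs with equal gold scores are skipped and ties in the predicted scores count as errors, both our assumptions.

```python
import math
from itertools import combinations


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def pairwise_accuracy(predicted, gold):
    """Share of text pairs with different gold scores that the predicted
    scores order the same way (predicted ties count as errors)."""
    agree = total = 0
    for i, j in combinations(range(len(gold)), 2):
        if gold[i] == gold[j]:
            continue
        total += 1
        if (predicted[i] - predicted[j]) * (gold[i] - gold[j]) > 0:
            agree += 1
    return agree / total
```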

Correlation coefficients between assessor ratings and different features


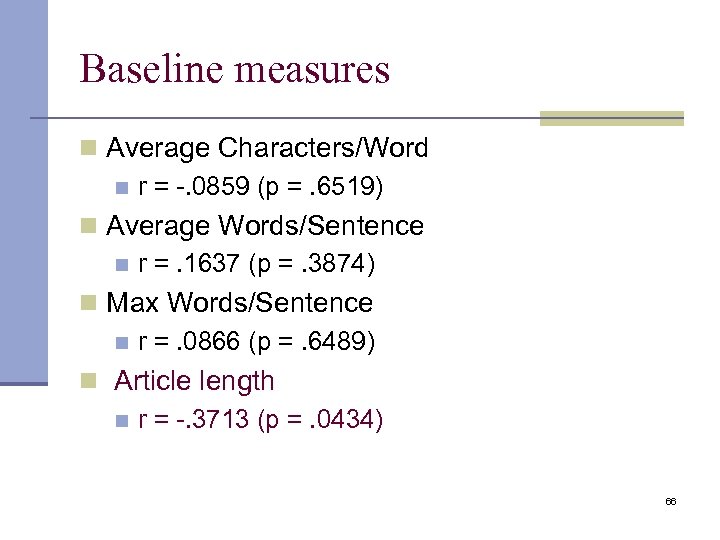

Baseline measures
- Average characters/word: r = -.0859 (p = .6519)
- Average words/sentence: r = .1637 (p = .3874)
- Max words/sentence: r = .0866 (p = .6489)
- Article length: r = -.3713 (p = .0434)


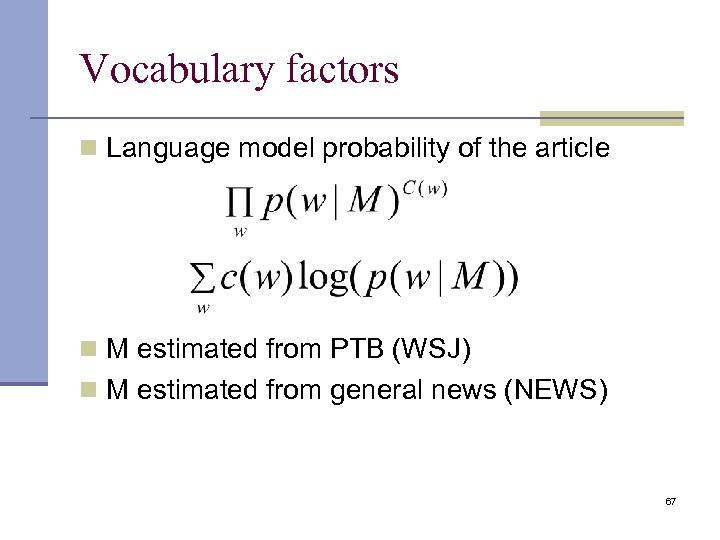
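The baseline measures need only sentence and word counts. This sketch uses a naive regular-expression sentence splitter and whitespace tokenization with surrounding punctuation stripped; both are assumptions, since the slides do not describe the study's preprocessing.

```python
import re


def baseline_features(text):
    """Shallow length features; naive sentence splitting and tokenization."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words_per_sentence = [len(s.split()) for s in sentences]
    words = [w.strip(".,;:!?\"'()") for w in text.split()]
    words = [w for w in words if w]
    return {
        "avg_chars_per_word": sum(len(w) for w in words) / len(words),
        "avg_words_per_sentence": sum(words_per_sentence) / len(sentences),
        "max_words_per_sentence": max(words_per_sentence),
        "article_length": len(words),
    }
```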

Vocabulary factors
- Language model probability of the article
  - Model estimated from the Penn Treebank (WSJ)
  - Model estimated from general news (NEWS)


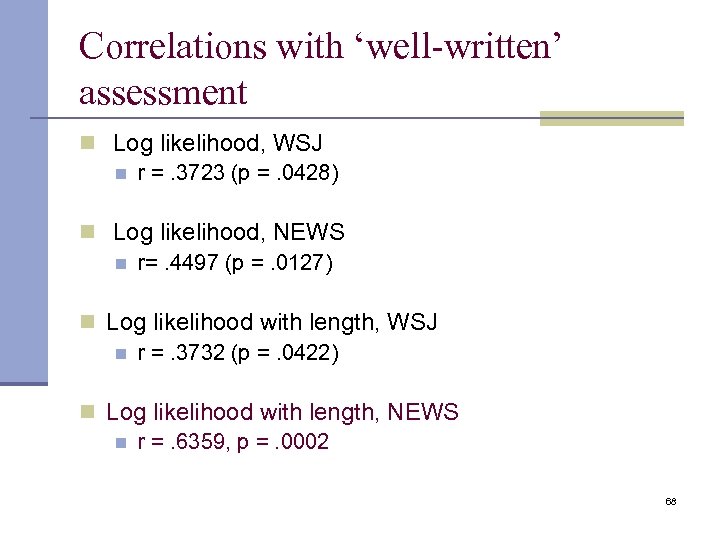
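A sketch of the vocabulary feature, assuming a unigram language model with add-one smoothing; the slides do not say which model order or smoothing was used. Because the log likelihood is a sum of negative terms, one per token, it falls as the article gets longer, which is one reason to also look at it jointly with length.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Add-one smoothed unigram model; one extra vocabulary slot covers unseen words."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1

    def prob(word):
        return (counts[word] + 1) / (total + vocab_size)

    return prob


def log_likelihood(article_tokens, prob):
    """Log probability of the article's tokens under the unigram model."""
    return sum(math.log(prob(w)) for w in article_tokens)
```

In the study's setting, the training tokens would come from the WSJ portion of the Penn Treebank or from a general news corpus, giving the two variants listed above.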

Correlations with ‘well-written’ assessment
- Log likelihood, WSJ: r = .3723 (p = .0428)
- Log likelihood, NEWS: r = .4497 (p = .0127)
- Log likelihood with length, WSJ: r = .3732 (p = .0422)
- Log likelihood with length, NEWS: r = .6359 (p = .0002)


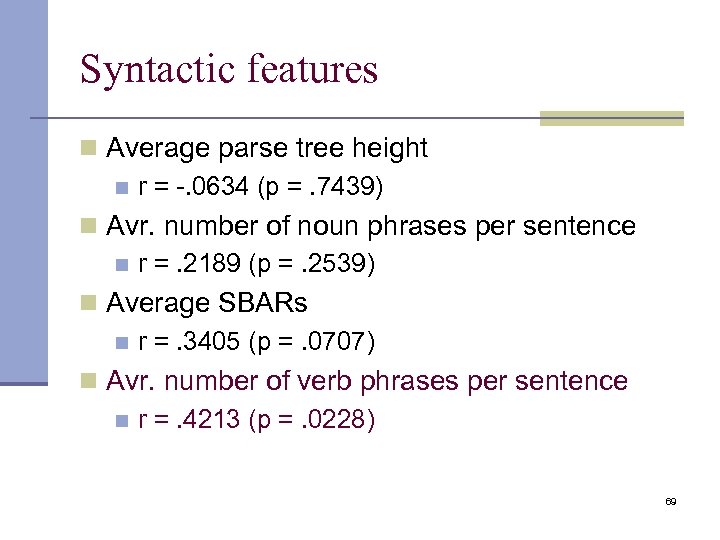

Syntactic features
- Average parse tree height: r = -.0634 (p = .7439)
- Average number of noun phrases per sentence: r = .2189 (p = .2539)
- Average number of SBARs per sentence: r = .3405 (p = .0707)
- Average number of verb phrases per sentence: r = .4213 (p = .0228)


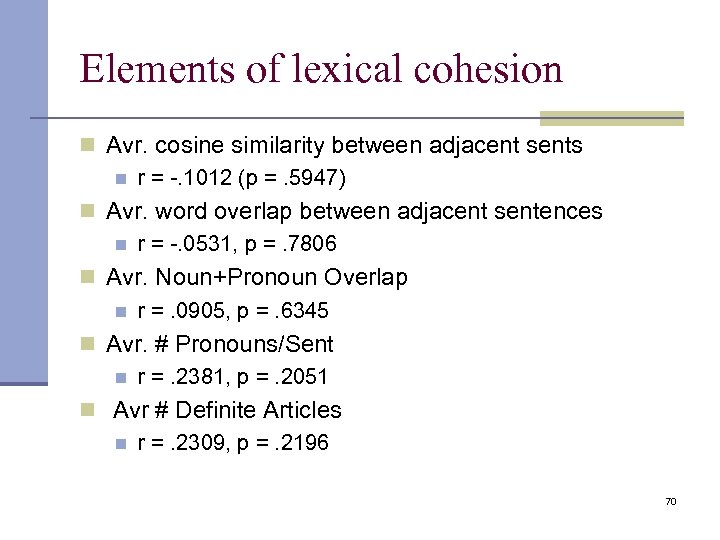
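The syntactic features can be read off bracketed parser output (the references include the Charniak and Johnson parser [9]). The sketch below parses Penn Treebank-style brackets by hand so it has no dependencies; counting words at depth 0 when measuring height, and stripping function tags such as `-SBJ`, are conventions we chose for illustration.

```python
import re


def parse_tree(s):
    """Parse a bracketed parse such as '(S (NP (PRP He)) ...)' into
    (label, children) tuples; words are plain strings."""
    tokens = re.findall(r"\(|\)|[^\s()]+", s)
    pos = 0

    def node():
        nonlocal pos
        pos += 1                                   # consume "("
        label = ""
        if tokens[pos] not in ("(", ")"):
            label, pos = tokens[pos], pos + 1
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())
            else:
                children.append(tokens[pos])       # a word
                pos += 1
        pos += 1                                   # consume ")"
        return label, children

    return node()


def height(tree):
    if isinstance(tree, str):
        return 0
    return 1 + max((height(c) for c in tree[1]), default=0)


def count_label(tree, label):
    if isinstance(tree, str):
        return 0
    own = tree[0].split("-")[0] == label           # ignore tags like NP-SBJ
    return own + sum(count_label(c, label) for c in tree[1])


def syntactic_features(parses):
    """Per-article averages over the parse trees of its sentences."""
    trees = [parse_tree(p) for p in parses]
    n = len(trees)
    feats = {"avg_tree_height": sum(height(t) for t in trees) / n}
    for label in ("NP", "VP", "SBAR"):
        feats[f"avg_{label.lower()}_per_sentence"] = (
            sum(count_label(t, label) for t in trees) / n)
    return feats
```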

Elements of lexical cohesion
- Average cosine similarity between adjacent sentences: r = -.1012 (p = .5947)
- Average word overlap between adjacent sentences: r = -.0531 (p = .7806)
- Average noun+pronoun overlap: r = .0905 (p = .6345)
- Average number of pronouns per sentence: r = .2381 (p = .2051)
- Average number of definite articles: r = .2309 (p = .2196)


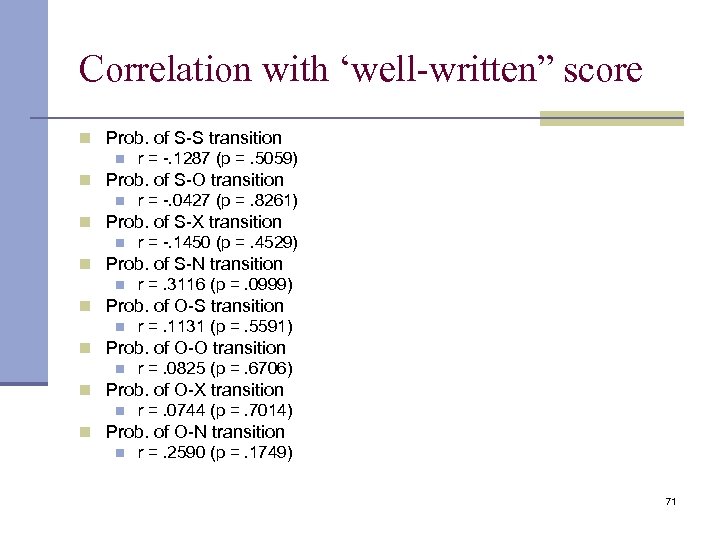
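A sketch of most of the cohesion measures between adjacent sentences. Assumptions: lowercase regular-expression tokenization, overlap measured as the number of shared word types, and a small illustrative pronoun list. The noun+pronoun overlap feature is omitted because it needs a part-of-speech tagger, and at least two sentences are required.

```python
import math
import re
from collections import Counter

PRONOUNS = {"he", "she", "it", "they", "him", "her", "them", "his", "its", "their"}


def _words(sentence):
    return re.findall(r"[a-z']+", sentence.lower())


def cosine(a, b):
    """Cosine similarity of two token lists as bag-of-words count vectors."""
    ca, cb = Counter(a), Counter(b)
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = (math.sqrt(sum(v * v for v in ca.values()))
            * math.sqrt(sum(v * v for v in cb.values())))
    return dot / norm if norm else 0.0


def cohesion_features(sentences):
    toks = [_words(s) for s in sentences]
    pairs = list(zip(toks, toks[1:]))
    n = len(toks)
    return {
        "avg_adjacent_cosine": sum(cosine(a, b) for a, b in pairs) / len(pairs),
        "avg_adjacent_overlap": sum(len(set(a) & set(b)) for a, b in pairs) / len(pairs),
        "avg_pronouns_per_sentence": sum(w in PRONOUNS for t in toks for w in t) / n,
        "avg_definite_articles": sum(t.count("the") for t in toks) / n,
    }
```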

Correlation with ‘well-written’ score (N marks an entity absent from a sentence)
- Prob. of S-S transition: r = -.1287 (p = .5059)
- Prob. of S-O transition: r = -.0427 (p = .8261)
- Prob. of S-X transition: r = -.1450 (p = .4529)
- Prob. of S-N transition: r = .3116 (p = .0999)
- Prob. of O-S transition: r = .1131 (p = .5591)
- Prob. of O-O transition: r = .0825 (p = .6706)
- Prob. of O-X transition: r = .0744 (p = .7014)
- Prob. of O-N transition: r = .2590 (p = .1749)


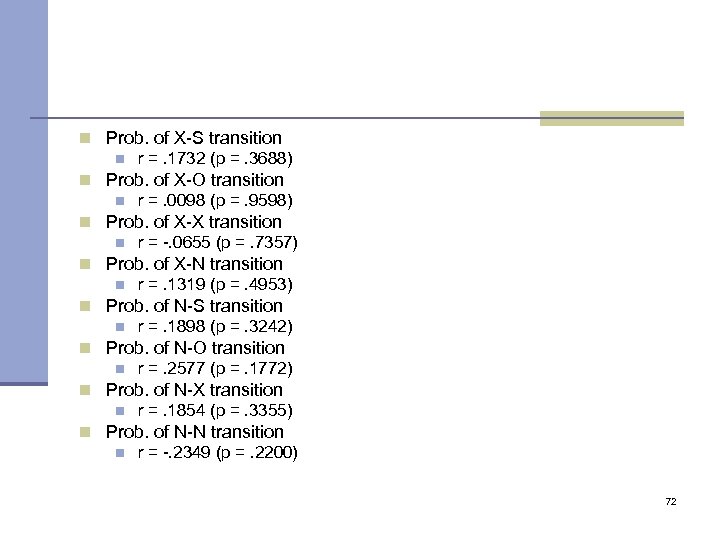

- Prob. of X-S transition: r = .1732 (p = .3688)
- Prob. of X-O transition: r = .0098 (p = .9598)
- Prob. of X-X transition: r = -.0655 (p = .7357)
- Prob. of X-N transition: r = .1319 (p = .4953)
- Prob. of N-S transition: r = .1898 (p = .3242)
- Prob. of N-O transition: r = .2577 (p = .1772)
- Prob. of N-X transition: r = .1854 (p = .3355)
- Prob. of N-N transition: r = -.2349 (p = .2200)


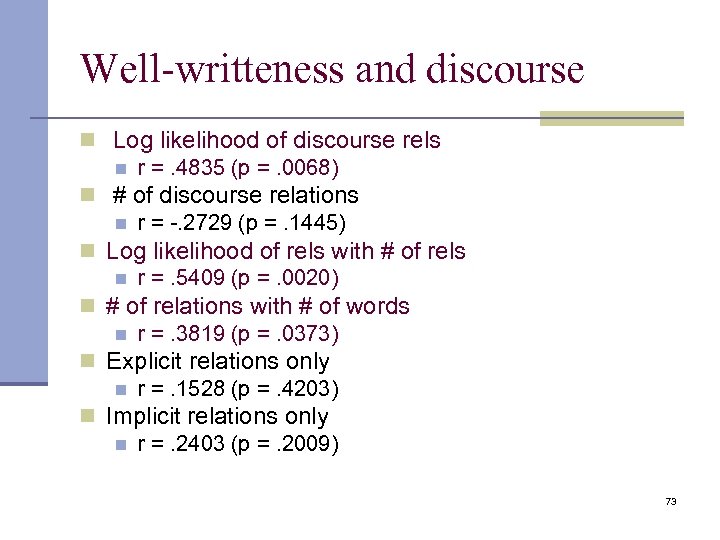

Well-writtenness and discourse
- Log likelihood of discourse relations: r = .4835 (p = .0068)
- Number of discourse relations: r = -.2729 (p = .1445)
- Log likelihood of relations, with number of relations: r = .5409 (p = .0020)
- Number of relations, with number of words: r = .3819 (p = .0373)
- Explicit relations only: r = .1528 (p = .4203)
- Implicit relations only: r = .2403 (p = .2009)


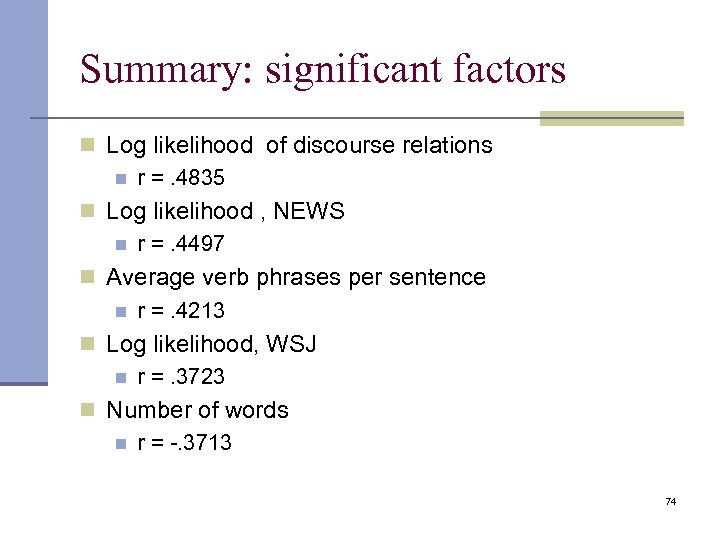
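If the relations in an article are treated as draws from a multinomial distribution whose category probabilities are estimated from the PDTB, the log likelihood can be computed as below. This is a sketch under that assumption; the relation labels and probabilities in the test are placeholders, not PDTB estimates.

```python
import math
from collections import Counter


def relation_log_likelihood(relations, probs):
    """Multinomial log-likelihood of an article's relation counts:
    log n! - sum_r log k_r! + sum_r k_r log p_r, where k_r counts relation r."""
    counts = Counter(relations)
    n = sum(counts.values())
    ll = math.lgamma(n + 1)                       # log n!
    for rel, k in counts.items():
        ll += k * math.log(probs[rel]) - math.lgamma(k + 1)
    return ll
```

Like the vocabulary log likelihood, this value depends on how many relations the article has, which matches the slide's separate "with # of rels" variant.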

Summary: significant factors
- Log likelihood of discourse relations: r = .4835
- Log likelihood, NEWS: r = .4497
- Average verb phrases per sentence: r = .4213
- Log likelihood, WSJ: r = .3723
- Number of words: r = -.3713


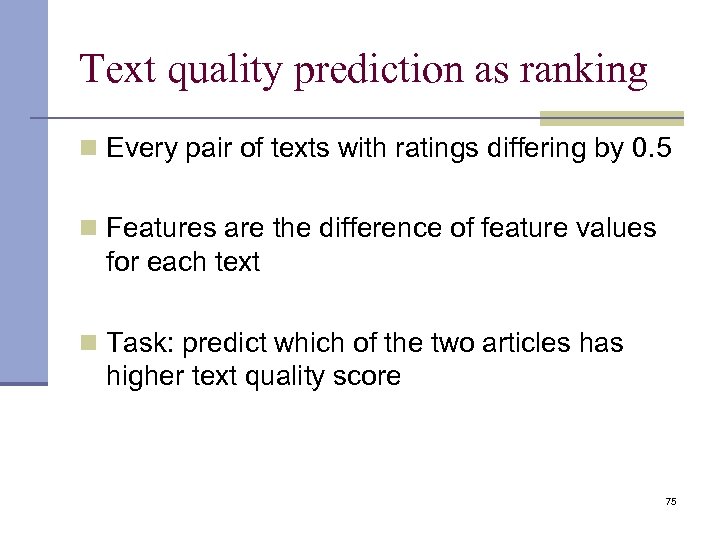

Text quality prediction as ranking
- Every pair of texts whose ratings differ by 0.5
- Features are the differences between the feature values of the two texts
- Task: predict which of the two articles has the higher text quality score


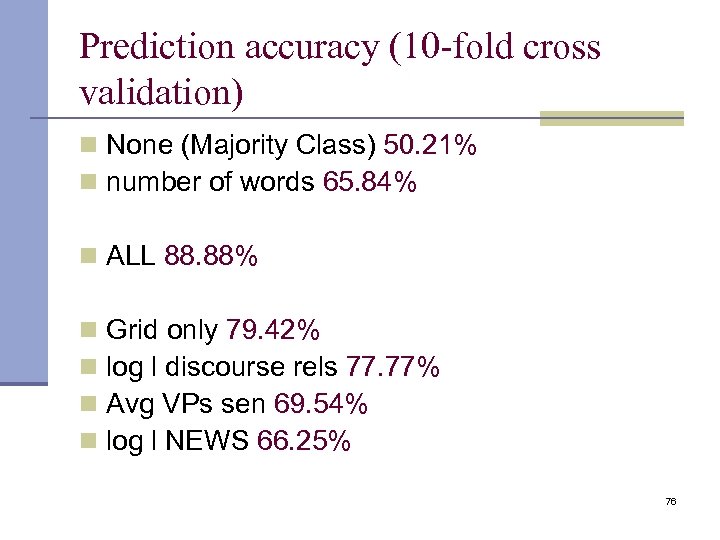
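The ranking setup can be sketched as follows. Assumptions: "ratings differing by 0.5" is read as "at least 0.5 apart", each pair is added in both orientations so the two classes are balanced, and a plain perceptron stands in for whatever learner was actually used.

```python
from itertools import combinations


def make_pairs(features, scores, min_diff=0.5):
    """One instance per pair of texts whose scores differ by at least
    min_diff; the instance is the difference of the two feature vectors."""
    X, y = [], []
    for i, j in combinations(range(len(scores)), 2):
        if abs(scores[i] - scores[j]) < min_diff:
            continue
        diff = [a - b for a, b in zip(features[i], features[j])]
        label = 1 if scores[i] > scores[j] else -1
        # Add both orientations so the two classes are balanced.
        X += [diff, [-d for d in diff]]
        y += [label, -label]
    return X, y


def train_perceptron(X, y, epochs=20):
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            if yi * sum(wj * xj for wj, xj in zip(w, xi)) <= 0:   # mistake
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
    return w


def prefers_first(w, diff):
    """+1 if the model ranks the first text of the pair higher, else -1."""
    return 1 if sum(wj * xj for wj, xj in zip(w, diff)) > 0 else -1
```

Working on feature differences means a single linear weight vector scores every text, and comparing two texts reduces to the sign of the weighted difference.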

Prediction accuracy (10-fold cross-validation)

| Features | Accuracy |
|---|---|
| None (majority class) | 50.21% |
| Number of words | 65.84% |
| ALL | 88.88% |
| Grid only | 79.42% |
| Log likelihood of discourse relations | 77.77% |
| Avg. VPs per sentence | 69.54% |
| Log likelihood, NEWS | 66.25% |


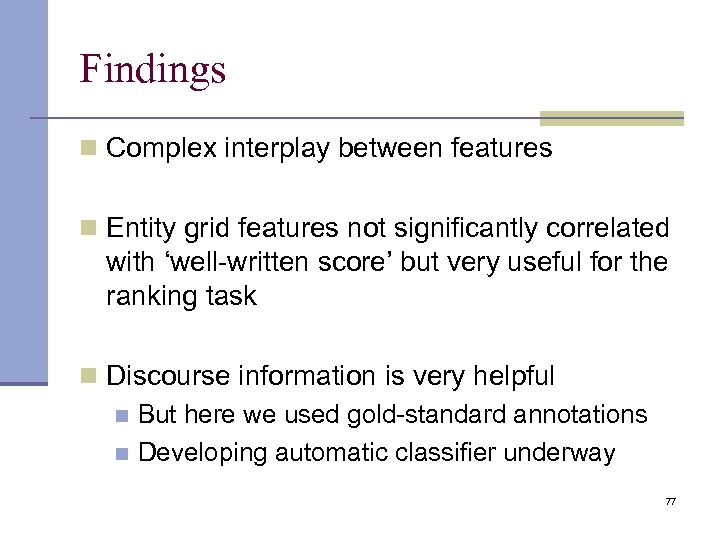

Findings
- Complex interplay between features
- Entity grid features are not significantly correlated with the ‘well-written’ score but are very useful for the ranking task
- Discourse information is very helpful
  - But here we used gold-standard annotations
  - Work on an automatic classifier is underway


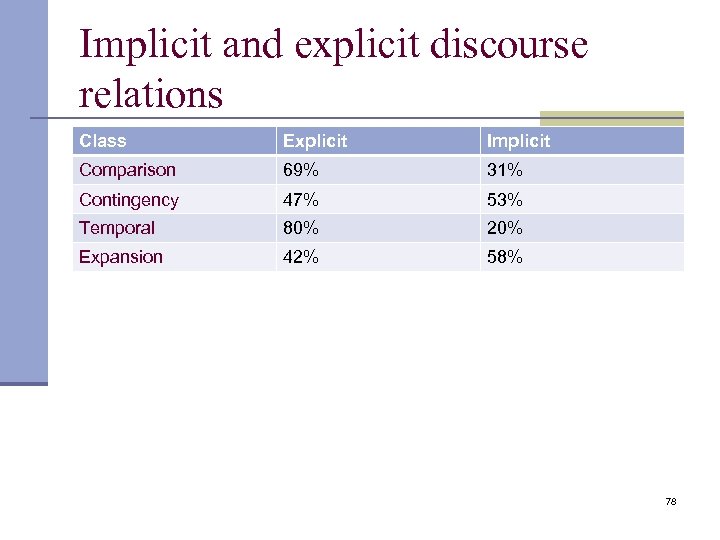

Implicit and explicit discourse relations

| Class | Explicit | Implicit |
|---|---|---|
| Comparison | 69% | 31% |
| Contingency | 47% | 53% |
| Temporal | 80% | 20% |
| Expansion | 42% | 58% |


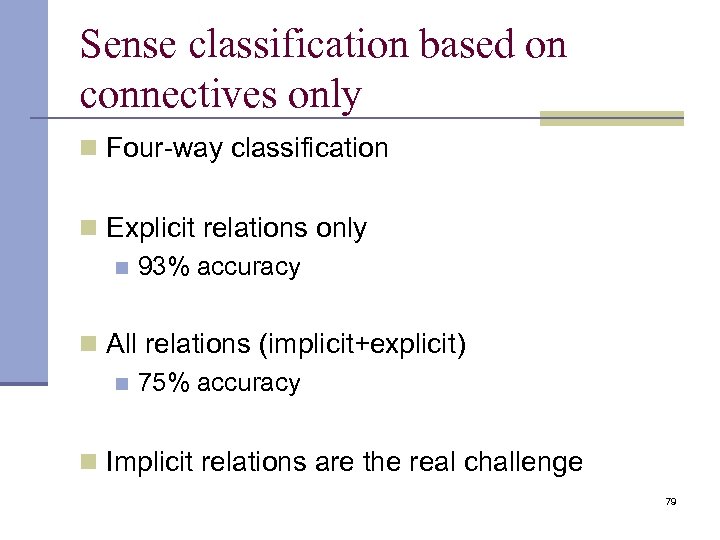

Sense classification based on connectives only
- Four-way classification
- Explicit relations only: 93% accuracy
- All relations (implicit + explicit): 75% accuracy
- Implicit relations are the real challenge


Explicit discourse relations, tasks (Pitler and Nenkova, 2009) [25]
- Discourse vs. non-discourse use
  - I will be happier once the semester is over. [discourse]
  - I have been to Ohio once. [non-discourse]
- Relation sense: Contingency, Comparison, Temporal, Expansion
  - I haven’t been to Paris since I went there on a school trip in 1998. [Temporal]
  - I haven’t been to Antarctica since it is very far away. [Contingency]


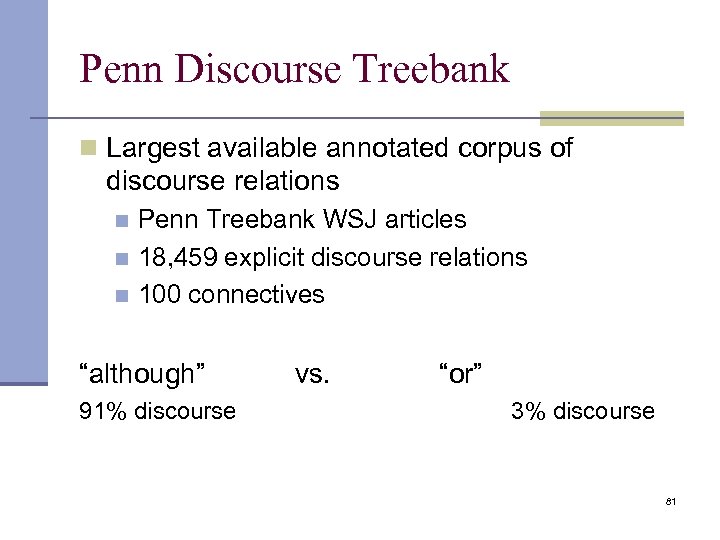

Penn Discourse Treebank
- Largest available annotated corpus of discourse relations, over Penn Treebank WSJ articles
- 18,459 explicit discourse relations
- 100 connectives
- “although”: 91% discourse use vs. “or”: 3% discourse use


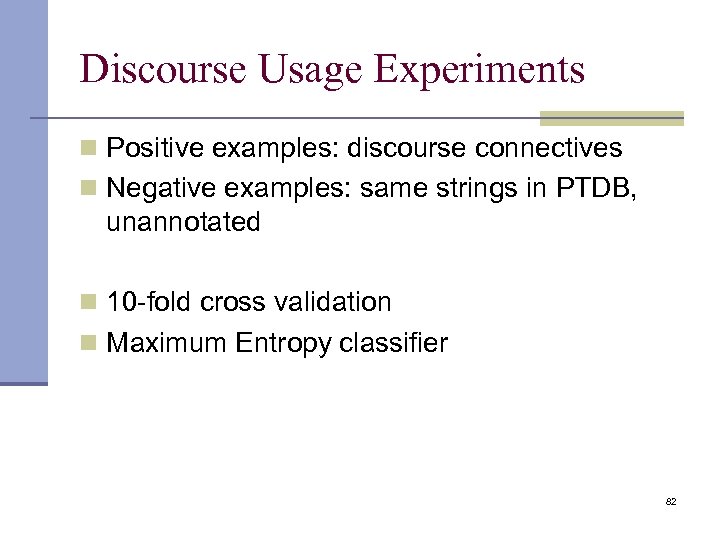

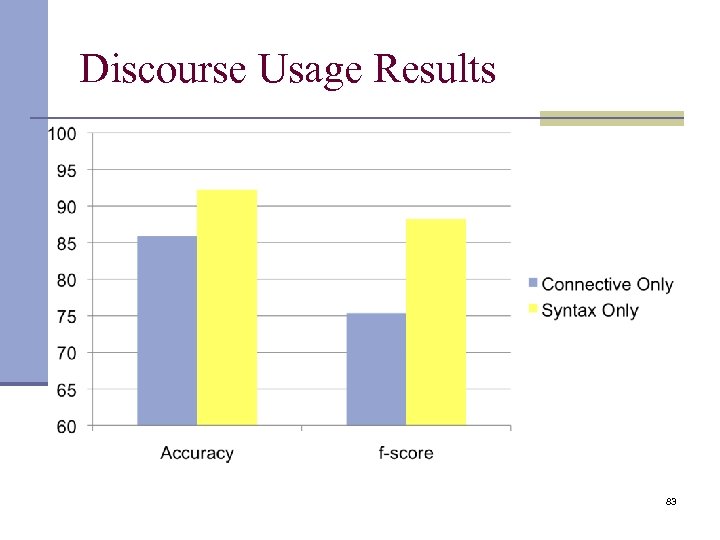

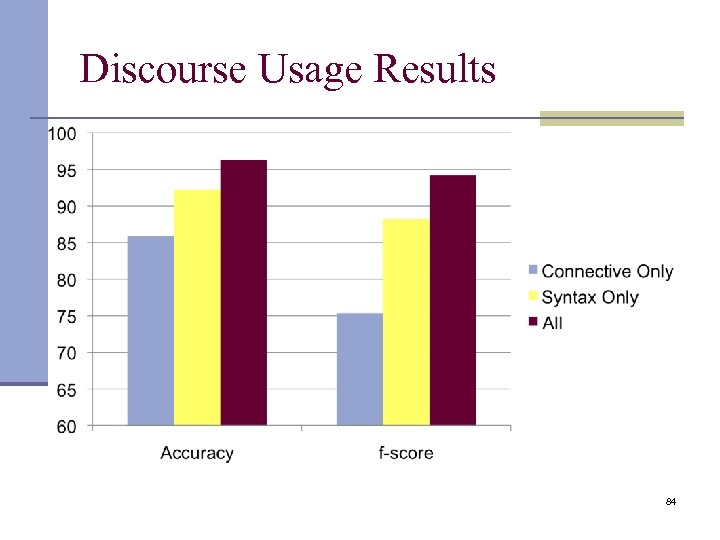

Discourse usage experiments
- Positive examples: discourse connectives
- Negative examples: the same strings in the PDTB, not annotated as connectives
- 10-fold cross-validation
- Maximum Entropy classifier

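A binary maximum entropy classifier is equivalent to logistic regression. The sketch below trains one with stochastic gradient ascent over sparse string features. The lexical context features (`conn=`, `prev=`, `next=`) are hypothetical stand-ins; the features actually used in the experiments are not reproduced on these slides.

```python
import math
from collections import defaultdict


def context_features(tokens, i):
    """Hypothetical lexical features for the candidate connective tokens[i]."""
    prev_word = tokens[i - 1].lower() if i > 0 else "<s>"
    next_word = tokens[i + 1].lower() if i + 1 < len(tokens) else "</s>"
    return ["conn=" + tokens[i].lower(), "prev=" + prev_word,
            "next=" + next_word, "bias"]


def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_maxent(examples, epochs=50, lr=0.5):
    """examples: (features, label) with label 1 = discourse use, 0 = not.
    Stochastic gradient ascent on the binary logistic regression log-likelihood."""
    w = defaultdict(float)
    for _ in range(epochs):
        for feats, label in examples:
            p = _sigmoid(sum(w[f] for f in feats))
            for f in feats:
                w[f] += lr * (label - p)
    return w


def prob_discourse(w, feats):
    return _sigmoid(sum(w.get(f, 0.0) for f in feats))
```

On the two "once" sentences from the earlier slide, the connective feature is shared, so the classifier has to rely on the context features to tell the uses apart.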

Discourse usage results


Discourse usage results


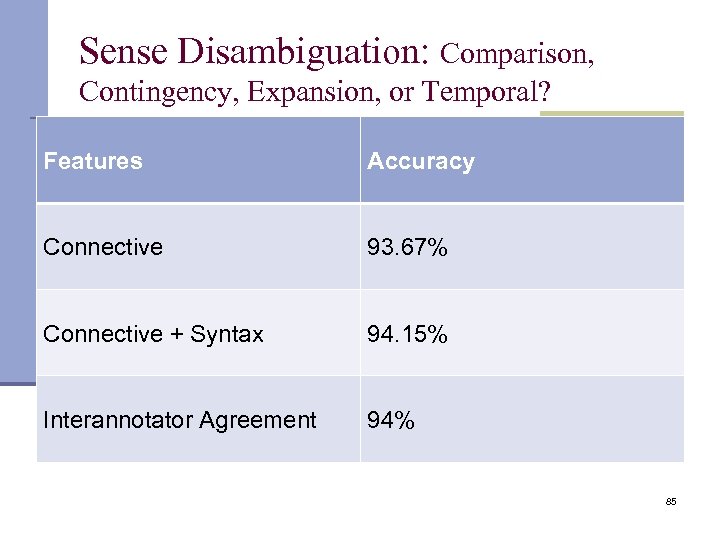

Sense disambiguation: Comparison, Contingency, Expansion, or Temporal?

| Features | Accuracy |
|---|---|
| Connective | 93.67% |
| Connective + Syntax | 94.15% |
| Interannotator agreement | 94% |

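The "Connective" row corresponds to a classifier whose only feature is the connective string. The simplest such classifier predicts each connective's most frequent sense in the training data; a sketch, where the fallback sense for connectives never seen in training is an arbitrary assumption:

```python
from collections import Counter, defaultdict


def train_connective_baseline(examples):
    """examples: (connective, sense) pairs. Returns {connective: most frequent sense}."""
    by_conn = defaultdict(Counter)
    for conn, sense in examples:
        by_conn[conn.lower()][sense] += 1
    return {conn: senses.most_common(1)[0][0] for conn, senses in by_conn.items()}


def baseline_accuracy(model, examples, fallback="Expansion"):
    """fallback: arbitrary guess for connectives never seen in training."""
    correct = sum(model.get(conn.lower(), fallback) == sense
                  for conn, sense in examples)
    return correct / len(examples)
```

Ambiguous connectives such as "since" are where this baseline loses accuracy: it always predicts a single sense, so one of the Paris and Antarctica examples above is necessarily misclassified.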

Tool
- Automatic annotation of the discourse use and sense of discourse connectives: Discourse Connectives Tagger, http://www.cis.upenn.edu/~epitler/discourse.html


What about implicit relations?
- Is there hope to have a usable tool soon?
- Early studies on unannotated data gave reason for optimism
- But when recently tested on the PDTB, their performance is poor
  - Accuracy for contingency, comparison and temporal is below 50%


Classify implicits and explicits together
- It is not easy to infer from combined results how early systems performed on implicits
  - As we saw, one can get reasonable overall performance by relying on the connective alone for explicits
- Same sentence [26]
- GraphBank corpus: doesn’t distinguish implicit and explicit [27]


Classify on large unannotated corpus
- Create artificial implicits by deleting the connective [28, 29, 30]
  - I am in Europe, but I live in the United States.
- First proposed by Marcu and Echihabi, 2002
- Very good initial results
  - Accuracy of distinguishing between two relations: >75%
  - But these were on balanced classes, which is not the case in real text
  - Not tested on real implicits (but see [30, 29])

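Extracting synthetic implicits can be sketched with a regular expression that matches "Arg1, <connective> Arg2" and drops the connective. The single pattern and the two-entry connective map are simplifications for illustration; Marcu and Echihabi used a larger, hand-built set of patterns.

```python
import re

# Hypothetical connective -> relation map, for illustration only.
CONNECTIVES = {"but": "Contrast", "because": "Cause"}


def synthetic_implicit(sentence):
    """Split 'Arg1, <connective> Arg2' at the first matching connective and
    drop the connective. Returns (arg1, arg2, relation) or None."""
    for conn, relation in CONNECTIVES.items():
        pattern = rf"^(?P<arg1>.+?),?\s+{re.escape(conn)}\s+(?P<arg2>.+)$"
        m = re.match(pattern, sentence, flags=re.IGNORECASE)
        if m:
            return m.group("arg1"), m.group("arg2"), relation
    return None
```

Any other connective left inside Arg1 or Arg2 stays in the training text, which is exactly the kind of leakage that can mislead a word-pair classifier.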

Experiments with PDTB
- Pitler et al., ACL 2009 [31]
  - Wide variety of features to capture semantic opposition and parallelism
- Lin et al., EMNLP 2009 [32]
  - (Lexicalized) syntactic features
- Results improve over baselines and give a better understanding of the features, but the classifiers are not suitable for application in real tasks


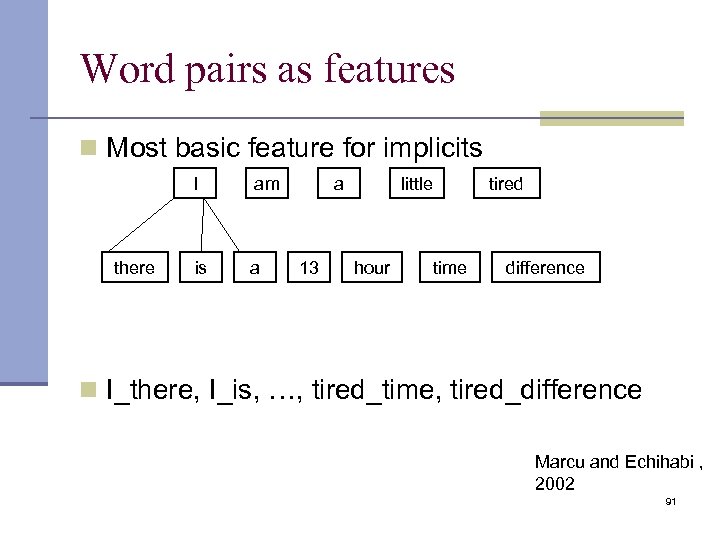

Word pairs as features (Marcu and Echihabi, 2002)
- Most basic feature for implicits
  - Arg1: I am a little tired
  - Arg2: there is a 13 hour time difference
  - Features: I_there, I_is, …, tired_time, tired_difference

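Generating the word-pair features is a Cartesian product of the tokens of the two spans. A sketch with whitespace tokenization, which is our assumption:

```python
from itertools import product


def word_pair_features(arg1, arg2):
    """All (w1, w2) pairs with w1 from Arg1 and w2 from Arg2, as 'w1_w2' strings."""
    return [f"{a}_{b}" for a, b in product(arg1.split(), arg2.split())]
```

For the example above this yields 5 × 7 = 35 features, from I_there to tired_difference, so the feature space grows with the product of the span lengths; this is why very large training corpora are needed.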

Intuition: with large amounts of data, we will find semantically related pairs
- The recent explosion of country funds mirrors the “closed-end fund mania” of the 1920s, Mr. Foot says, when narrowly focused funds grew wildly popular.
- They fell into oblivion after the 1929 crash.


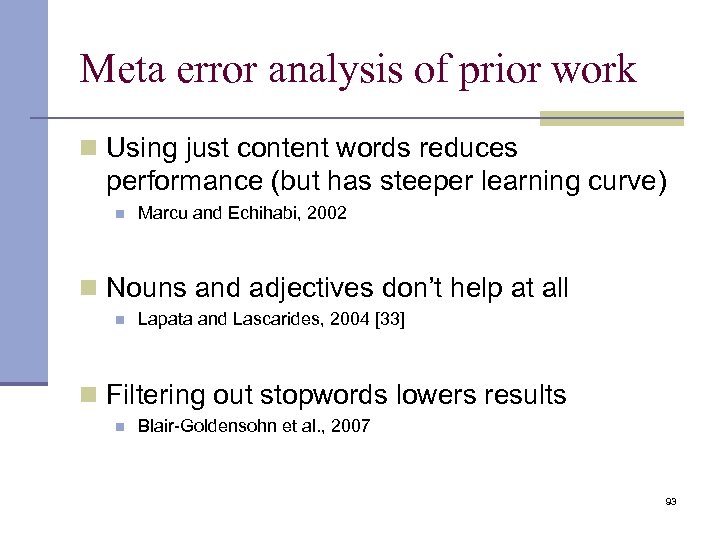

Meta error analysis of prior work
- Using just content words reduces performance (but has a steeper learning curve)
  - Marcu and Echihabi, 2002
- Nouns and adjectives don’t help at all
  - Lapata and Lascarides, 2004 [33]
- Filtering out stopwords lowers results
  - Blair-Goldensohn et al., 2007


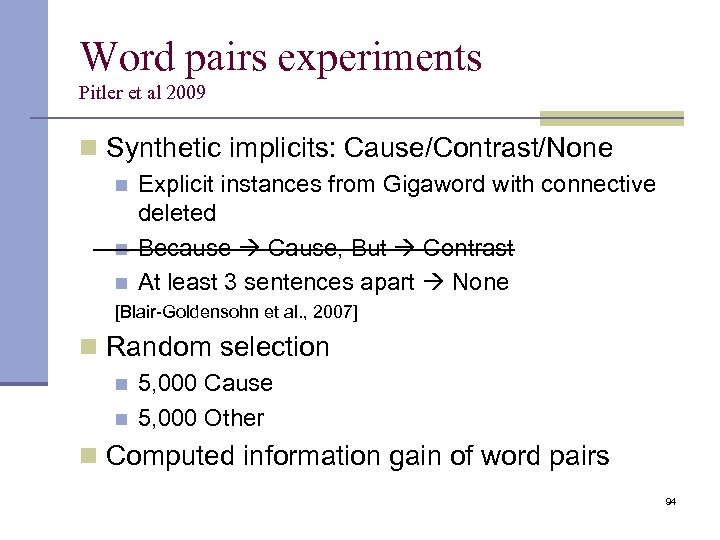

Word pairs experiments (Pitler et al., 2009)
- Synthetic implicits: Cause/Contrast/None
  - Explicit instances from Gigaword with the connective deleted: because → Cause, but → Contrast
  - Text spans at least 3 sentences apart → None [Blair-Goldensohn et al., 2007]
- Random selection: 5,000 Cause, 5,000 Other
- Computed information gain of word pairs


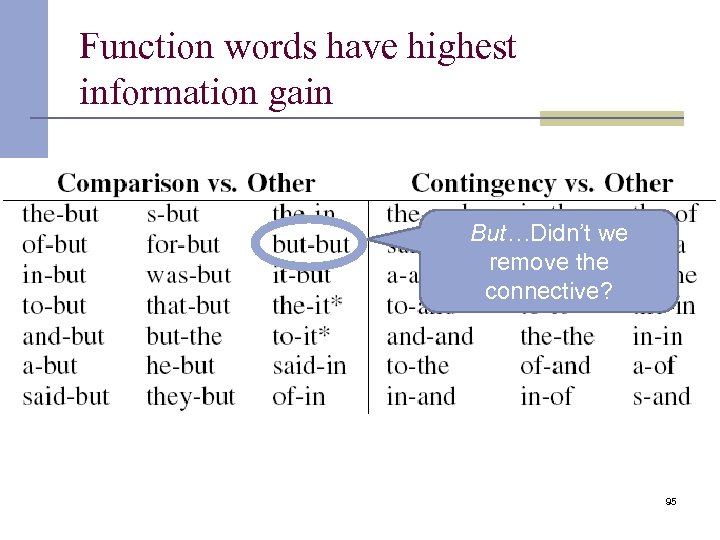
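Information gain of a binary feature (here, the presence of a word pair) is the drop in label entropy after splitting the examples on that feature, IG(Y; f) = H(Y) - H(Y | f). A sketch:

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples, feature):
    """examples: (set of features, label) pairs.
    IG = H(Y) - H(Y | whether the feature is present)."""
    labels = [y for _, y in examples]
    present = [y for feats, y in examples if feature in feats]
    absent = [y for feats, y in examples if feature not in feats]
    n = len(examples)
    conditional = ((len(present) / n) * entropy(present)
                   + (len(absent) / n) * entropy(absent))
    return entropy(labels) - conditional
```

Ranking all word pairs by this score is what surfaces the function-word pairs discussed next.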

Function words have the highest information gain
- But… didn’t we remove the connective?


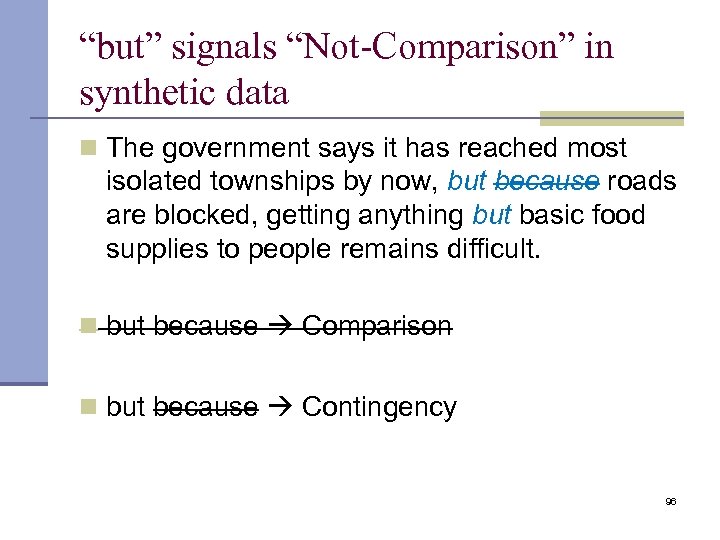

“but” signals “Not-Comparison” in synthetic data
- The government says it has reached most isolated townships by now, but because roads are blocked, getting anything but basic food supplies to people remains difficult.
  - Deleting “but” gives a Comparison instance
  - Deleting “because” gives a Contingency instance whose text still contains “but”


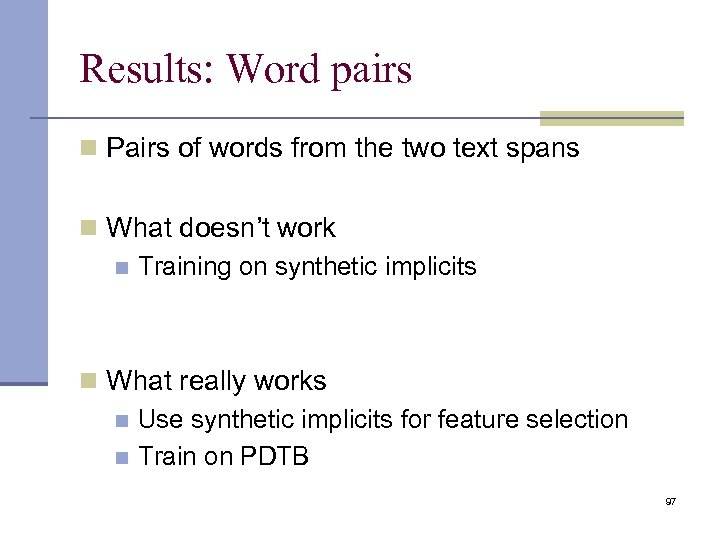

Results: word pairs
- Pairs of words from the two text spans
- What doesn’t work:
  - Training on synthetic implicits
- What really works:
  - Use synthetic implicits for feature selection
  - Train on the PDTB


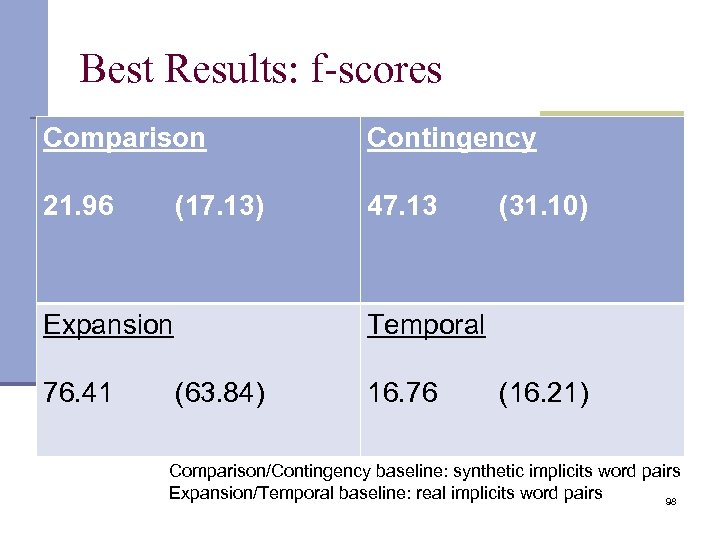

Best results: f-scores (baselines in parentheses)

| Comparison | Contingency | Expansion | Temporal |
|---|---|---|---|
| 21.96 (17.13) | 47.13 (31.10) | 76.41 (63.84) | 16.76 (16.21) |

Comparison/Contingency baseline: word pairs from synthetic implicits. Expansion/Temporal baseline: word pairs from real implicits.


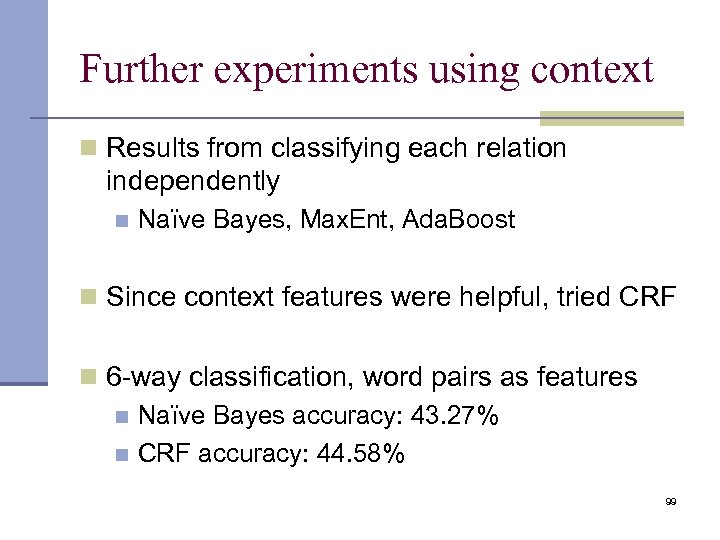

Further experiments using context
- Results so far come from classifying each relation independently
  - Naïve Bayes, MaxEnt, AdaBoost
- Since context features were helpful, we tried a CRF
  - 6-way classification, word pairs as features
  - Naïve Bayes accuracy: 43.27%
  - CRF accuracy: 44.58%


Do we need more coherence factors? (Louis and Nenkova, 2010) [34]
- If we had perfect coreference and discourse relation information, would we be able to explain local discourse coherence?
- Our recent corpus study indicates the answer is NO
  - 30% of adjacent sentences in the same paragraph in the PDTB neither share an entity nor have an implicit Comparison, Contingency or Temporal relation
- Lexical chains?


References
[1] Burstein, J. and Chodorow, M. (in press). Progress and new directions in technology for automated essay evaluation. In R. Kaplan (Ed.), The Oxford Handbook of Applied Linguistics (2nd ed.). New York: Oxford University Press.
[2] Heilman, M., Collins-Thompson, K., Callan, J., and Eskenazi, M. (2007). Combining lexical and grammatical features to improve readability measures for first and second language texts. Proceedings of the Human Language Technology Conference, Rochester, NY.
[3] Petersen, S. and Ostendorf, M. (2009). A machine learning approach to reading level assessment. Computer Speech and Language, 23(1): 89–106.
[4] Agichtein, E., Castillo, C., Donato, D., Gionis, A., and Mishne, G. (2008). Finding high quality content in social media. ACM Web Search and Data Mining Conference (WSDM).
[5] Barzilay, R. and Lee, L. (2004). Catching the drift: Probabilistic content models, with applications to generation and summarization. Proceedings of HLT-NAACL 2004: Main Conference, pp. 113–120.


[6] Pitler, E., Louis, A., and Nenkova, A. (2010). Automatic evaluation of linguistic quality in multi-document summarization. Proceedings of ACL 2010.
[7] Schwarm, S. E. and Ostendorf, M. (2005). Reading level assessment using support vector machines and statistical language models. Proceedings of ACL 2005.
[8] Chae, J. and Nenkova, A. (2009). Predicting the fluency of text with shallow structural features: Case studies of machine translation and human-written text. Proceedings of EACL 2009, pp. 139–147.
[9] Charniak, E. and Johnson, M. (2005). Coarse-to-fine n-best parsing and MaxEnt discriminative reranking. Proceedings of ACL 2005.
[10] Collins-Thompson, K. and Callan, J. (2004). A language modeling approach to predicting reading difficulty. Proceedings of HLT-NAACL 2004.
[11] Schwarm, S. E. and Ostendorf, M. (2005). Reading level assessment using support vector machines and statistical language models. Proceedings of ACL 2005.


[12] Sauper, C. and Barzilay, R. (2009). Automatically generating Wikipedia articles: A structure-aware approach. Proceedings of ACL-IJCNLP 2009.
[13] Halliday, M. A. K. and Hasan, R. (1976). Cohesion in English. London: Longman.
[14] Grosz, B., Joshi, A., and Weinstein, S. (1995). Centering: A framework for modelling the local coherence of discourse. Computational Linguistics, 21(2): 203–226.
[15] Miltsakaki, E. and Kukich, K. (2000). The role of centering theory’s rough-shift in the teaching and evaluation of writing skills. Proceedings of ACL 2000, pp. 408–415.
[16] Karamanis, N., Mellish, C., Poesio, M., and Oberlander, J. (2009). Evaluating centering for information ordering using corpora. Computational Linguistics, 35(1): 29–46.
[17] Barzilay, R. and Lapata, M. (2008). Modeling local coherence: An entity-based approach. Computational Linguistics.
[18] Nenkova, A. and McKeown, K. (2003). References to named entities: A corpus study. Proceedings of HLT-NAACL 2003.

[19] Micha Elsner and Eugene Charniak. 2008. Coreference-inspired Coherence Modeling. Proceedings of ACL 2008 (Short Papers), 41–44.
[20] J. Morris and G. Hirst. 1991. Lexical cohesion computed by thesaural relations as an indicator of the structure of text. Computational Linguistics, 17(1): 21–48.
[21] Regina Barzilay and Michael Elhadad. 1999. Text summarizations with lexical chains. In Inderjeet Mani and Mark Maybury, editors, Advances in Automatic Text Summarization. MIT Press.
[22] H. G. Silber and K. F. McCoy. 2002. Efficiently computed lexical chains as an intermediate representation for automatic text summarization. Computational Linguistics, 28(4): 487–496.
[23] Mirella Lapata. 2003. Probabilistic Text Structuring: Experiments with Sentence Ordering. Proceedings of ACL 2003.
[24] R. Soricut and D. Marcu. 2006. Discourse generation using utility-trained coherence models. Proceedings of COLING-ACL 2006.

[25] Emily Pitler and Ani Nenkova. 2009. Using Syntax to Disambiguate Explicit Discourse Connectives in Text. Proceedings of ACL 2009 (short paper).
[26] Radu Soricut and Daniel Marcu. 2003. Sentence Level Discourse Parsing using Syntactic and Lexical Information. Proceedings of the Human Language Technology and North American Association for Computational Linguistics Conference (HLT/NAACL 2003).
[27] Ben Wellner, James Pustejovsky, Catherine Havasi, Roser Sauri, and Anna Rumshisky. Classification of Discourse Coherence Relations: An Exploratory Study using Multiple Knowledge Sources. Proceedings of the 7th SIGDIAL Workshop on Discourse and Dialogue.
[28] Daniel Marcu and Abdessamad Echihabi. 2002. An Unsupervised Approach to Recognizing Discourse Relations. Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL 2002).
[29] Sasha Blair-Goldensohn, Kathleen McKeown, and Owen Rambow. 2007. Building and Refining Rhetorical-Semantic Relation Models. Proceedings of HLT-NAACL 2007, 428–435.

[30] C. Sporleder and A. Lascarides. 2008. Using automatically labelled examples to classify rhetorical relations: An assessment. Natural Language Engineering, 14(3): 369–416.
[31] Emily Pitler, Annie Louis, and Ani Nenkova. 2009. Automatic Sense Prediction for Implicit Discourse Relations in Text. Proceedings of ACL 2009.
[32] Ziheng Lin, Min-Yen Kan, and Hwee Tou Ng. 2009. Recognizing Implicit Discourse Relations in the Penn Discourse Treebank. Proceedings of EMNLP 2009.
[33] Mirella Lapata and Alex Lascarides. 2004. Inferring Sentence-internal Temporal Relations. Proceedings of the North American Chapter of the Association for Computational Linguistics, 153–160.
[34] Annie Louis and Ani Nenkova. 2010. Creating Local Coherence: An Empirical Assessment. Proceedings of NAACL-HLT 2010.