3e4d0cb9461c4a4b241fc2a1ac7cc991.ppt

- Number of slides: 172

Compiler Design

Course Information
• Instructor: Dr. Farhad Soleimanian Gharehchopogh
  – Email: farhad.soleimanian@gmail.com
• Course Web Page: http://www.soleimanian.net/compiler

Preliminaries Required
• Basic knowledge of programming languages.
• Basic knowledge of finite state automata (FSA) and context-free grammars (CFG).
• Knowledge of a high-level programming language for the programming assignments.
Textbook: Alfred V. Aho, Monica S. Lam, Ravi Sethi, and Jeffrey D. Ullman, “Compilers: Principles, Techniques, and Tools”, Second Edition, Addison-Wesley, 2007.

Grading
• Midterm: 30%
• Project: 30%
• Final: 40%

Course Outline
• Introduction to Compiling
• Lexical Analysis
• Syntax Analysis
  – Context Free Grammars
  – Top-Down Parsing, LL Parsing
  – Bottom-Up Parsing, LR Parsing
• Syntax-Directed Translation
  – Attribute Definitions
  – Evaluation of Attribute Definitions
• Semantic Analysis, Type Checking
• Run-Time Organization
• Intermediate Code Generation

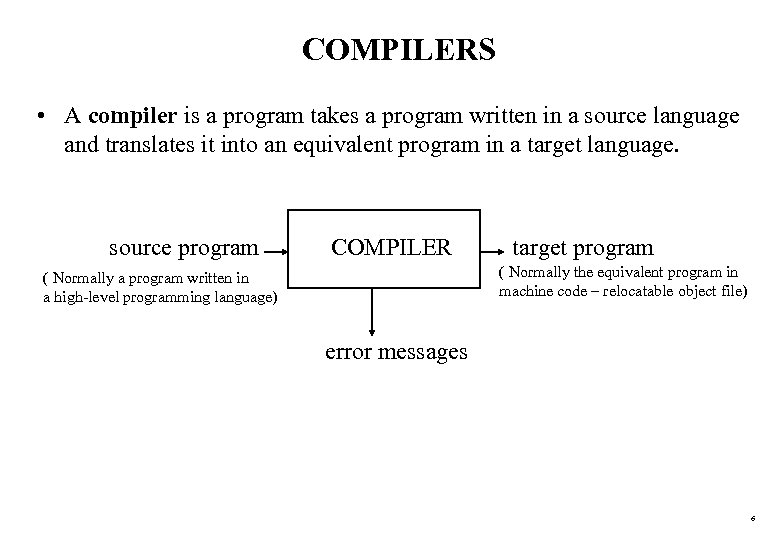

COMPILERS
• A compiler is a program that takes a program written in a source language and translates it into an equivalent program in a target language.
  source program → COMPILER → target program (the compiler also reports error messages)
• The source program is normally written in a high-level programming language.
• The target program is normally the equivalent program in machine code (a relocatable object file).

Other Applications
• Beyond building compilers, the techniques used in compiler design are applicable to many problems in computer science.
  – Techniques used in a lexical analyzer can be used in text editors, information retrieval systems, and pattern recognition programs.
  – Techniques used in a parser can be used in a query processing system such as SQL.
  – Much software with a complex front-end may need techniques used in compiler design.
    • Example: a symbolic equation solver takes an equation as input, so it must parse the given input equation.
  – Most of the techniques used in compiler design can be used in Natural Language Processing (NLP) systems.

Major Parts of Compilers
• There are two major parts of a compiler: Analysis and Synthesis.
• In the analysis phase, an intermediate representation is created from the given source program.
  – The Lexical Analyzer, Syntax Analyzer and Semantic Analyzer are the parts of this phase.
• In the synthesis phase, the equivalent target program is created from this intermediate representation.
  – The Intermediate Code Generator, Code Optimizer and Code Generator are the parts of this phase.

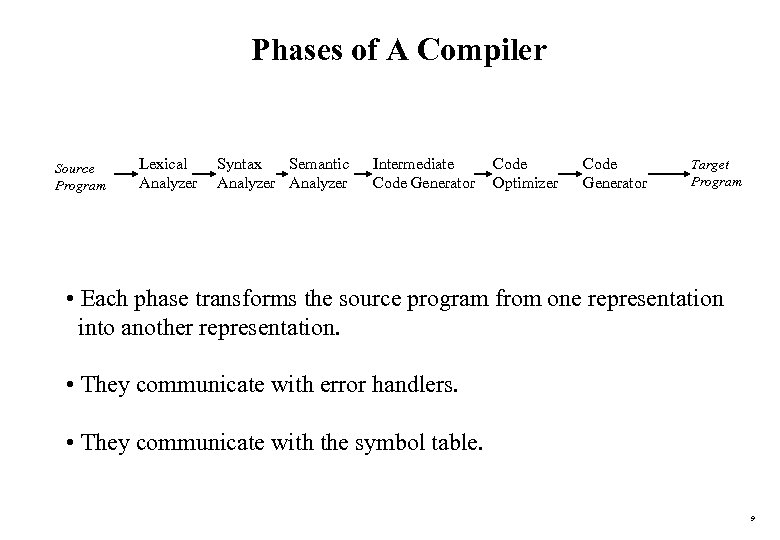

Phases of A Compiler
Source Program → Lexical Analyzer → Syntax Analyzer → Semantic Analyzer → Intermediate Code Generator → Code Optimizer → Code Generator → Target Program
• Each phase transforms the source program from one representation into another representation.
• They communicate with error handlers.
• They communicate with the symbol table.

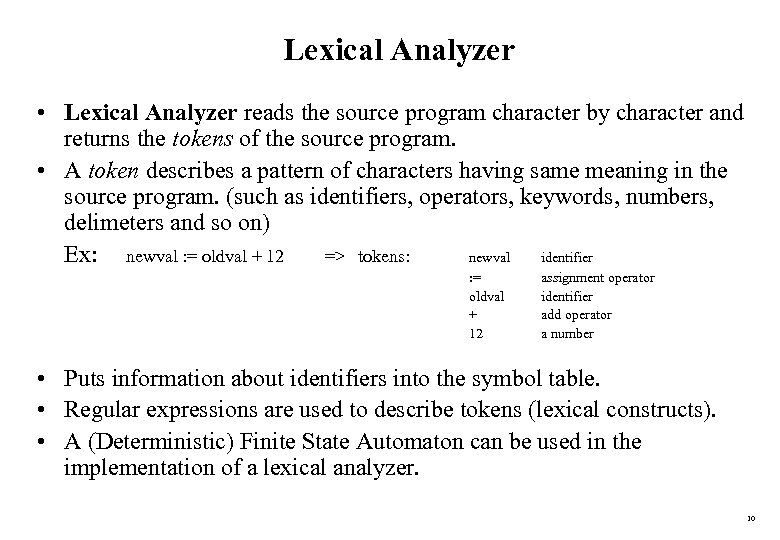

Lexical Analyzer
• The lexical analyzer reads the source program character by character and returns the tokens of the source program.
• A token describes a pattern of characters having the same meaning in the source program (such as identifiers, operators, keywords, numbers, delimiters and so on).
  Ex: newval := oldval + 12 => tokens:
      newval   identifier
      :=       assignment operator
      oldval   identifier
      +        add operator
      12       a number
• Puts information about identifiers into the symbol table.
• Regular expressions are used to describe tokens (lexical constructs).
• A (Deterministic) Finite State Automaton can be used in the implementation of a lexical analyzer.
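
The token list for `newval := oldval + 12` can be reproduced with a small regular-expression-driven scanner. This is only a minimal sketch: the token names (`assignment_operator`, `add_operator`, `whitespace`) and the function `tokenize` are illustrative choices rather than part of the course material, and a production lexer would be driven by a DFA generated from the patterns.

```python
import re

# Hypothetical token specification: (token name, pattern) pairs.
TOKEN_SPEC = [
    ("number", r"\d+"),
    ("identifier", r"[A-Za-z][A-Za-z0-9]*"),
    ("assignment_operator", r":="),
    ("add_operator", r"\+"),
    ("whitespace", r"\s+"),
]
# One alternation with a named group per token.
MASTER_PATTERN = re.compile("|".join(f"(?P<{n}>{p})" for n, p in TOKEN_SPEC))


def tokenize(source):
    """Return a list of (token, lexeme) pairs for the whole input string."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER_PATTERN.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at position {pos}")
        if match.lastgroup != "whitespace":  # whitespace only separates tokens
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens
```

Calling `tokenize("newval := oldval + 12")` yields the five (token, lexeme) pairs listed in the example; an input such as `"a ? b"` raises `SyntaxError` because no pattern matches `?`.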

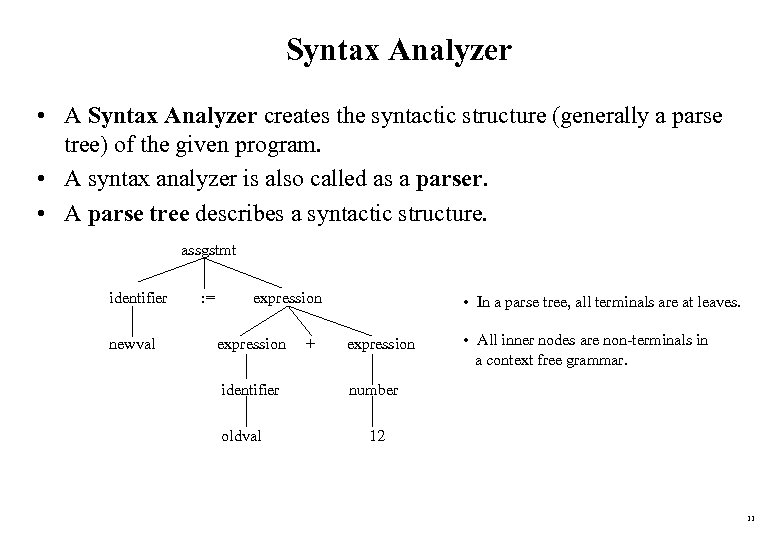

Syntax Analyzer
• A syntax analyzer creates the syntactic structure (generally a parse tree) of the given program.
• A syntax analyzer is also called a parser.
• A parse tree describes a syntactic structure.
    assgstmt
      identifier (newval)
      :=
      expression
        expression
          identifier (oldval)
        +
        expression
          number (12)
• In a parse tree, all terminals are at the leaves.
• All inner nodes are non-terminals of a context free grammar.

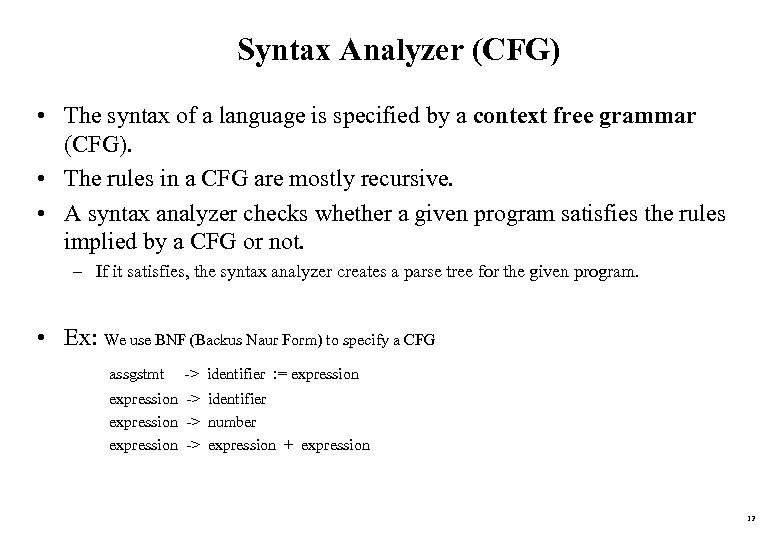

Syntax Analyzer (CFG)
• The syntax of a language is specified by a context free grammar (CFG).
• The rules in a CFG are mostly recursive.
• A syntax analyzer checks whether a given program satisfies the rules implied by a CFG or not.
  – If it does, the syntax analyzer creates a parse tree for the given program.
• Ex: We use BNF (Backus Naur Form) to specify a CFG
    assgstmt   -> identifier := expression
    expression -> identifier
    expression -> number
    expression -> expression + expression
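
To make the grammar above concrete, here is a minimal hand-written parser sketch that builds a parse tree as nested tuples. Several things are assumptions made for illustration: tokens are `(kind, lexeme)` pairs with the hypothetical kinds `"identifier"`, `"number"`, `":="` and `"+"`; and because the rule `expression -> expression + expression` is left-recursive and ambiguous, the sketch resolves it by treating `+` as left-associative and using a loop.

```python
def parse_assgstmt(tokens):
    """Parse `identifier := expression` and return a nested-tuple parse tree."""
    pos = 0

    def expect(kind):
        # Consume the next token if it has the expected kind, else report an error.
        nonlocal pos
        if pos >= len(tokens) or tokens[pos][0] != kind:
            found = tokens[pos] if pos < len(tokens) else "end of input"
            raise SyntaxError(f"expected {kind}, found {found}")
        lexeme = tokens[pos][1]
        pos += 1
        return lexeme

    def operand():
        # expression -> identifier | number
        if pos < len(tokens) and tokens[pos][0] == "identifier":
            return ("expression", ("identifier", expect("identifier")))
        return ("expression", ("number", expect("number")))

    def expression():
        # expression -> expression + expression, taken as left-associative
        tree = operand()
        while pos < len(tokens) and tokens[pos][0] == "+":
            expect("+")
            tree = ("expression", tree, "+", operand())
        return tree

    target = expect("identifier")
    expect(":=")
    tree = ("assgstmt", ("identifier", target), ":=", expression())
    if pos != len(tokens):
        raise SyntaxError(f"unexpected token {tokens[pos]}")
    return tree
```

For the tokens of `newval := oldval + 12`, the returned tuple has `assgstmt` at the root, terminals at the leaves, and `expression` nodes in between.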

Syntax Analyzer versus Lexical Analyzer
• Which constructs of a program should be recognized by the lexical analyzer, and which ones by the syntax analyzer?
  – Both of them do similar things, but the lexical analyzer deals with simple non-recursive constructs of the language.
  – The syntax analyzer deals with recursive constructs of the language.
  – The lexical analyzer simplifies the job of the syntax analyzer.
  – The lexical analyzer recognizes the smallest meaningful units (tokens) in a source program.
  – The syntax analyzer works on those tokens to recognize meaningful structures in the programming language.

Parsing Techniques
• Depending on how the parse tree is created, there are different parsing techniques.
• These parsing techniques are categorized into two groups:
  – Top-Down Parsing
  – Bottom-Up Parsing
• Top-Down Parsing:
  – Construction of the parse tree starts at the root and proceeds towards the leaves.
  – Efficient top-down parsers can be easily constructed by hand.
  – Recursive Predictive Parsing, Non-Recursive Predictive Parsing (LL Parsing).
• Bottom-Up Parsing:
  – Construction of the parse tree starts at the leaves and proceeds towards the root.
  – Normally, efficient bottom-up parsers are created with the help of software tools.
  – Bottom-up parsing is also known as shift-reduce parsing.
  – Operator-Precedence Parsing: simple, restrictive, easy to implement.
  – LR Parsing: a much more general form of shift-reduce parsing (LR, SLR, LALR).

Semantic Analyzer
• A semantic analyzer checks the source program for semantic errors and collects the type information for code generation.
• Type checking is an important part of semantic analysis.
• Normally, semantic information cannot be represented by the context-free language used in syntax analyzers.
• The context-free grammars used in syntax analysis are integrated with attributes (semantic rules):
  – the result is a syntax-directed translation,
  – attribute grammars.
• Ex: newval := oldval + 12
  – The type of the identifier newval must match the type of the expression (oldval + 12).
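
The type-matching rule in the example can be sketched as a tiny checker. Everything concrete here is an assumption made for illustration: the tree shape `("+", left, right)`, the type names `"integer"` and `"real"`, the rule that both operands of `+` must have the same type, and the `SemanticError` class. A real language defines its own typing and conversion rules.

```python
class SemanticError(Exception):
    """Raised when the program is syntactically valid but ill-typed."""


def expression_type(tree, symbol_table):
    """Compute the type of an expression tree using types stored in the symbol table."""
    kind = tree[0]
    if kind == "number":
        return "integer"  # assumption: numeric literals are integers
    if kind == "identifier":
        name = tree[1]
        if name not in symbol_table:
            raise SemanticError(f"undeclared identifier {name}")
        return symbol_table[name]
    if kind == "+":
        left = expression_type(tree[1], symbol_table)
        right = expression_type(tree[2], symbol_table)
        if left != right:
            raise SemanticError(f"operand types differ: {left} + {right}")
        return left
    raise ValueError(f"unknown node kind {kind}")


def check_assignment(target, expression, symbol_table):
    """Check `target := expression`; return the common type or raise SemanticError."""
    target_type = expression_type(("identifier", target), symbol_table)
    value_type = expression_type(expression, symbol_table)
    if target_type != value_type:
        raise SemanticError(f"cannot assign {value_type} to {target} of type {target_type}")
    return target_type
```

With both `newval` and `oldval` declared as `"integer"`, the statement `newval := oldval + 12` checks successfully; declaring `oldval` as `"real"` makes the same statement fail.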

Intermediate Code Generation
• A compiler may produce explicit intermediate code representing the source program.
• This intermediate code is generally machine (architecture) independent, but its level is close to the level of machine code.
• Ex: newval := oldval * fact + 1
      id1 := id2 * id3 + 1
      MULT id2, id3, temp1
      ADD  temp1, #1, temp2
      MOV  temp2, , id1
  Intermediate Code (Quadruples)
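
The quadruples above can be produced by a post-order walk over an expression tree. The following is a sketch under stated assumptions: the tree is given as nested `(op, left, right)` tuples with strings at the leaves, and `generate_quadruples` and the `tempN` naming scheme are illustrative names chosen here.

```python
def generate_quadruples(target, expression):
    """Translate `target := expression` into a list of (op, arg1, arg2, result) quadruples."""
    quads = []
    temp_count = 0

    def emit(node):
        # Leaves are operand names such as "id2" or literals such as "#1".
        nonlocal temp_count
        if isinstance(node, str):
            return node
        op, left, right = node
        left_place = emit(left)    # operands are translated first (post-order)
        right_place = emit(right)
        temp_count += 1
        result = f"temp{temp_count}"
        quads.append((op, left_place, right_place, result))
        return result

    value_place = emit(expression)
    quads.append(("MOV", value_place, "", target))
    return quads
```

For `id1 := id2 * id3 + 1`, written as `generate_quadruples("id1", ("ADD", ("MULT", "id2", "id3"), "#1"))`, the result is the MULT, ADD, MOV sequence shown in the example.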

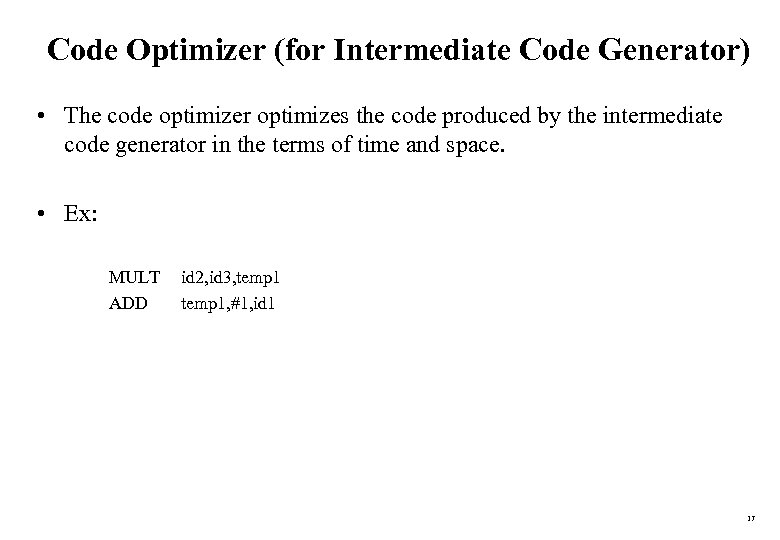

Code Optimizer (for Intermediate Code Generator)
• The code optimizer optimizes the code produced by the intermediate code generator in terms of time and space.
• Ex:
      MULT id2, id3, temp1
      ADD  temp1, #1, id1
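
One way to obtain the shorter sequence is a peephole pass that removes a copy out of a temporary. This sketch assumes quadruples are `(op, arg1, arg2, result)` tuples, that temporaries are named `temp…`, and that each temporary is read only once (so redirecting its producer is safe); the function name `fold_copies` is hypothetical.

```python
def fold_copies(quads):
    """Replace `op a, b, t` followed by `MOV t, , x` with `op a, b, x`.

    Assumes the temporary t is not used again after the MOV.
    """
    optimized = []
    for quad in quads:
        op, arg1, _, result = quad
        previous = optimized[-1] if optimized else None
        if (op == "MOV" and previous is not None
                and previous[3] == arg1 and arg1.startswith("temp")):
            # Let the previous instruction write straight into the MOV target.
            optimized[-1] = (previous[0], previous[1], previous[2], result)
        else:
            optimized.append(quad)
    return optimized
```

Applied to the three quadruples MULT, ADD, MOV for `id1 := id2 * id3 + 1`, the pass returns the two-instruction sequence shown in the example.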

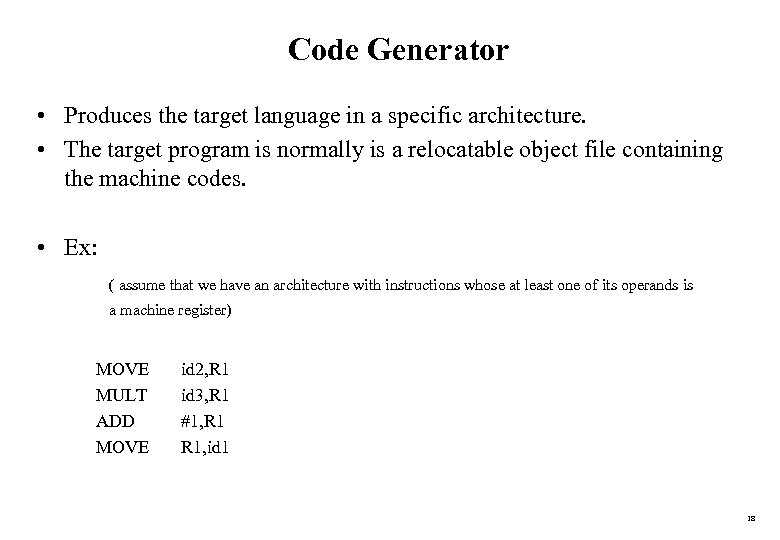

Code Generator
• Produces the target language for a specific architecture.
• The target program is normally a relocatable object file containing the machine code.
• Ex: (assume an architecture in which at least one operand of every instruction is a machine register)
      MOVE id2, R1
      MULT id3, R1
      ADD  #1, R1
      MOVE R1, id1

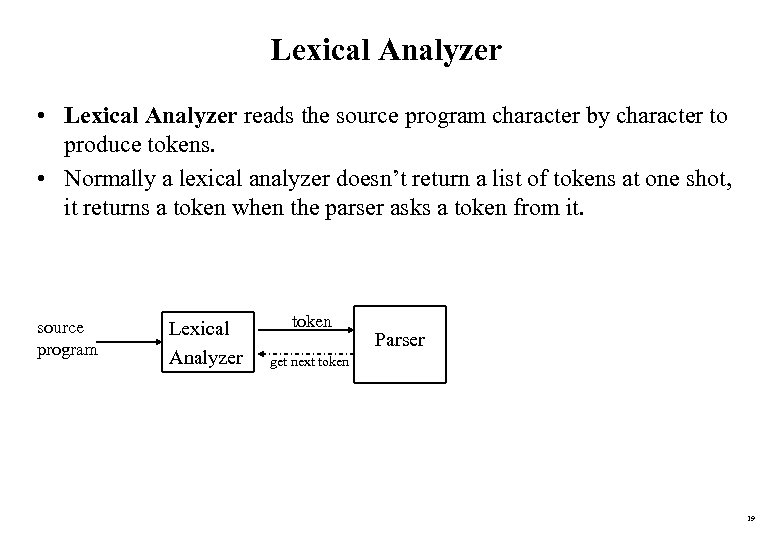

Lexical Analyzer
• The lexical analyzer reads the source program character by character to produce tokens.
• Normally a lexical analyzer does not return a list of tokens in one shot; it returns a token when the parser asks for one.
  source program → Lexical Analyzer ⇄ Parser (the parser sends “get next token”, the lexical analyzer answers with a token)

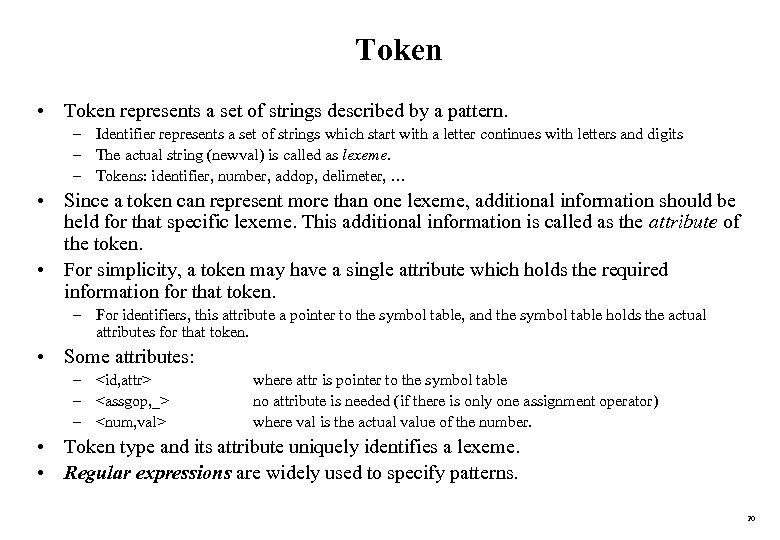

Token
• A token represents a set of strings described by a pattern.
  – Identifier represents the set of strings which start with a letter and continue with letters and digits.
  – The actual string (such as newval) is called a lexeme.
  – Tokens: identifier, number, addop, delimiter, …
• Since a token can represent more than one lexeme, additional information should be held for that specific lexeme. This additional information is called the attribute of the token.
• For simplicity, a token may have a single attribute which holds the required information for that token.
  – For identifiers, this attribute is a pointer to the symbol table, and the symbol table holds the actual attributes for that token.
• Some attributes: –

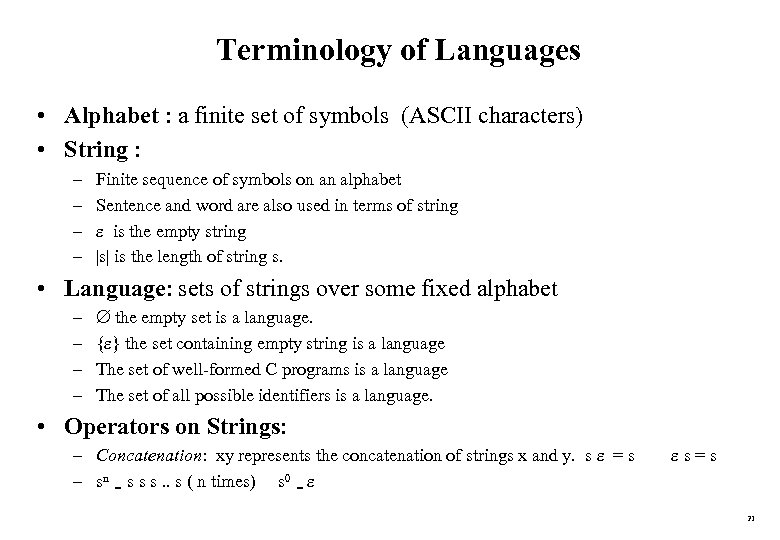

Terminology of Languages
• Alphabet: a finite set of symbols (for example, the ASCII characters)
• String:
  – A finite sequence of symbols over an alphabet
  – “Sentence” and “word” are also used to mean string
  – ε is the empty string
  – |s| is the length of string s
• Language: a set of strings over some fixed alphabet
  – ∅, the empty set, is a language
  – {ε}, the set containing the empty string, is a language
  – The set of well-formed C programs is a language
  – The set of all possible identifiers is a language
• Operators on Strings:
  – Concatenation: xy represents the concatenation of strings x and y; sε = s and εs = s
  – s^n = s s s .. s (n times), s^0 = ε

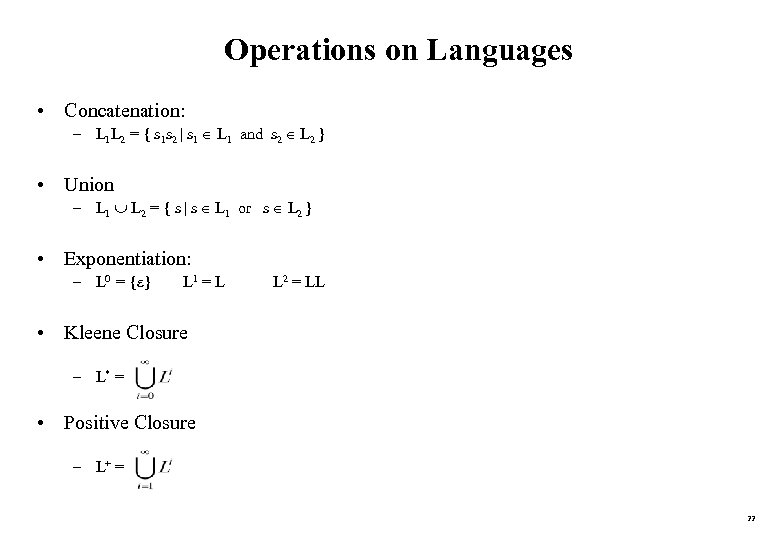

Operations on Languages
• Concatenation:
  – L1L2 = { s1s2 | s1 ∈ L1 and s2 ∈ L2 }
• Union:
  – L1 ∪ L2 = { s | s ∈ L1 or s ∈ L2 }
• Exponentiation:
  – L^0 = {ε}, L^1 = L, L^2 = LL
• Kleene Closure:
  – L* = L^0 ∪ L^1 ∪ L^2 ∪ ...
• Positive Closure:
  – L+ = L^1 ∪ L^2 ∪ L^3 ∪ ...

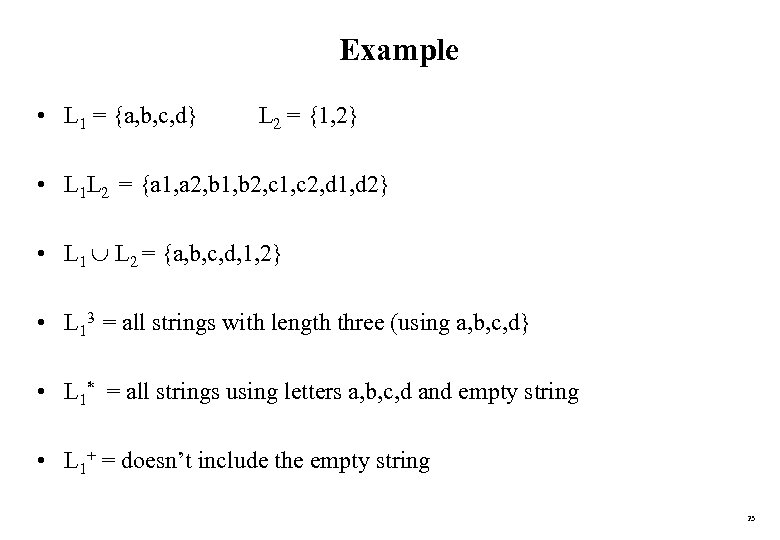

Example
• L1 = {a, b, c, d}, L2 = {1, 2}
• L1L2 = {a1, a2, b1, b2, c1, c2, d1, d2}
• L1 ∪ L2 = {a, b, c, d, 1, 2}
• L1^3 = all strings of length three (using a, b, c, d)
• L1* = all strings using the letters a, b, c, d, including the empty string
• L1+ = the same strings as L1*, but without the empty string
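
These operations map directly onto Python sets of strings, with `""` standing for ε. The sketch below is illustrative only: the function names are chosen here, and because L* and L+ are infinite, `closure_up_to` computes a finite approximation by stopping at a given power.

```python
def concatenate(l1, l2):
    """L1L2 = { s1s2 | s1 in L1 and s2 in L2 }."""
    return {s1 + s2 for s1 in l1 for s2 in l2}


def power(language, n):
    """L^n, with L^0 = {ε} represented as {""}."""
    result = {""}
    for _ in range(n):
        result = concatenate(result, language)
    return result


def closure_up_to(language, max_power, positive=False):
    """Union of L^i for i from 0 (or 1 if positive) up to max_power."""
    result = set()
    for i in range(1 if positive else 0, max_power + 1):
        result |= power(language, i)
    return result


L1 = {"a", "b", "c", "d"}
L2 = {"1", "2"}
```

Here `concatenate(L1, L2)` gives the eight strings a1 … d2, `L1 | L2` is the union, and `power(L1, 3)` contains 4 × 4 × 4 = 64 strings of length three.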

Regular Expressions
• We use regular expressions to describe the tokens of a programming language.
• A regular expression is built up from simpler regular expressions (using defining rules).
• Each regular expression denotes a language.
• A language denoted by a regular expression is called a regular set.

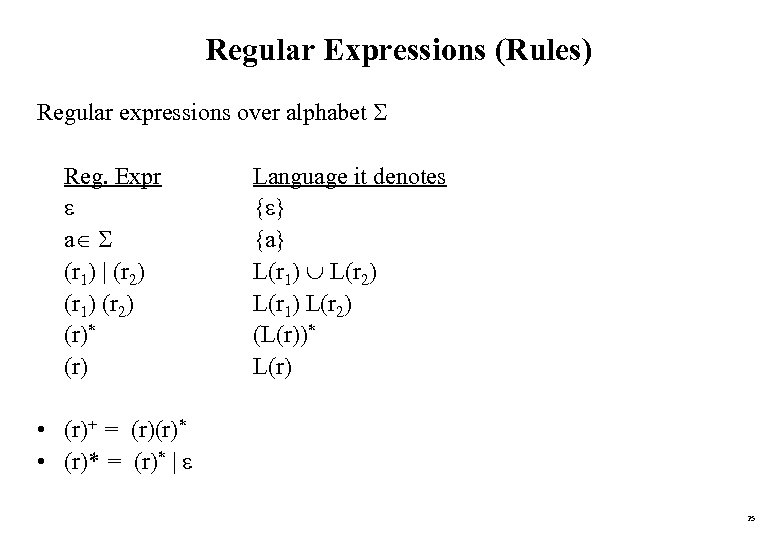

Regular Expressions (Rules)
Regular expressions over alphabet Σ
    Reg. Expr        Language it denotes
    ε                {ε}
    a ∈ Σ            {a}
    (r1) | (r2)      L(r1) ∪ L(r2)
    (r1) (r2)        L(r1) L(r2)
    (r)*             (L(r))*
    (r)              L(r)
• (r)+ = (r)(r)*
• (r)? = (r) | ε

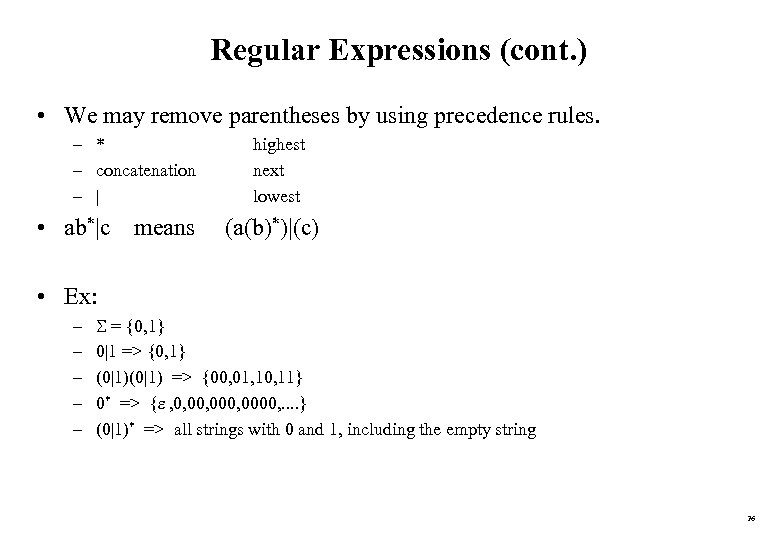

Regular Expressions (cont.)
• We may remove parentheses by using precedence rules:
  – *               highest
  – concatenation   next
  – |               lowest
• ab*|c means (a(b)*)|(c)
• Ex:
  – Σ = {0, 1}
  – 0|1 => {0, 1}
  – (0|1)(0|1) => {00, 01, 10, 11}
  – 0* => {ε, 0, 00, 000, 0000, ...}
  – (0|1)* => all strings of 0s and 1s, including the empty string
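
The examples above can be checked mechanically. Python's `re` module happens to use the same notation and precedence for `*`, concatenation and `|`, so the sketch below enumerates every string over the alphabet up to a chosen length and keeps those that match the whole pattern. The helper name `language_up_to` is an illustrative choice, and the length bound is needed only because languages such as 0* are infinite.

```python
import re
from itertools import product


def language_up_to(pattern, alphabet, max_length):
    """All strings over `alphabet` with length <= max_length that fully match `pattern`."""
    compiled = re.compile(pattern)
    matches = []
    for length in range(max_length + 1):
        for symbols in product(alphabet, repeat=length):
            candidate = "".join(symbols)
            if compiled.fullmatch(candidate):
                matches.append(candidate)
    return matches
```

For instance, `language_up_to("(0|1)(0|1)", "01", 3)` returns the four strings of length two, and `language_up_to("ab*|c", "abc", 2)` confirms that `ab*|c` is read as `(a(b)*)|(c)`: it accepts `a`, `c` and `ab` but not `ac`.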

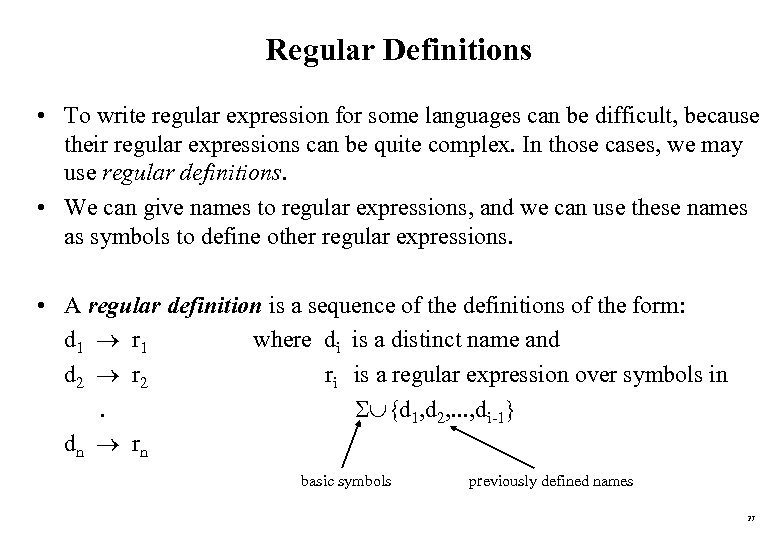

Regular Definitions
• Writing a regular expression for some languages can be difficult, because their regular expressions can be quite complex. In those cases, we may use regular definitions.
• We can give names to regular expressions, and we can use these names as symbols to define other regular expressions.
• A regular definition is a sequence of definitions of the form:
    d1 → r1
    d2 → r2
    ...
    dn → rn
  where each di is a distinct name and each ri is a regular expression over the symbols in Σ ∪ {d1, d2, ..., di-1}, that is, the basic symbols and the previously defined names.

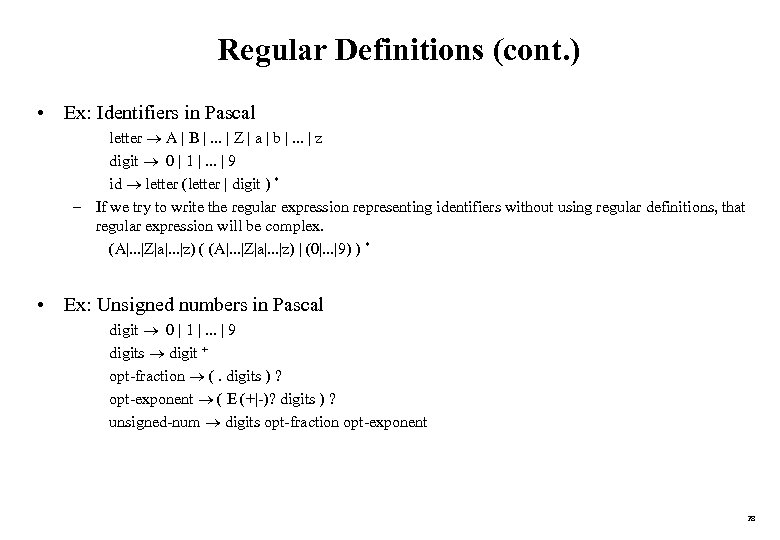

Regular Definitions (cont.)
• Ex: Identifiers in Pascal
    letter → A | B | ... | Z | a | b | ... | z
    digit  → 0 | 1 | ... | 9
    id     → letter (letter | digit)*
  – If we try to write the regular expression representing identifiers without using regular definitions, that regular expression will be complex:
    (A|...|Z|a|...|z) ( (A|...|Z|a|...|z) | (0|...|9) )*
• Ex: Unsigned numbers in Pascal
    digit        → 0 | 1 | ... | 9
    digits       → digit+
    opt-fraction → ( . digits )?
    opt-exponent → ( E (+|-)? digits )?
    unsigned-num → digits opt-fraction opt-exponent
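
The same naming idea carries over to code: each definition becomes a string that later definitions interpolate. This is a sketch using Python's `re` module, where character classes such as `[0-9]` stand in for the `0 | 1 | ... | 9` alternations; the variable names mirror the definitions above with hyphens replaced by underscores.

```python
import re

# Identifiers: letter (letter | digit)*
letter = r"[A-Za-z]"
digit = r"[0-9]"
identifier = re.compile(rf"{letter}({letter}|{digit})*")

# Unsigned numbers: digits opt-fraction opt-exponent
digits = rf"{digit}+"
opt_fraction = rf"(\.{digits})?"
opt_exponent = rf"(E[+-]?{digits})?"
unsigned_num = re.compile(rf"{digits}{opt_fraction}{opt_exponent}")
```

With these definitions, `unsigned_num.fullmatch("6.02E+23")` succeeds, while `unsigned_num.fullmatch("1.")` does not, because opt-fraction requires at least one digit after the dot.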

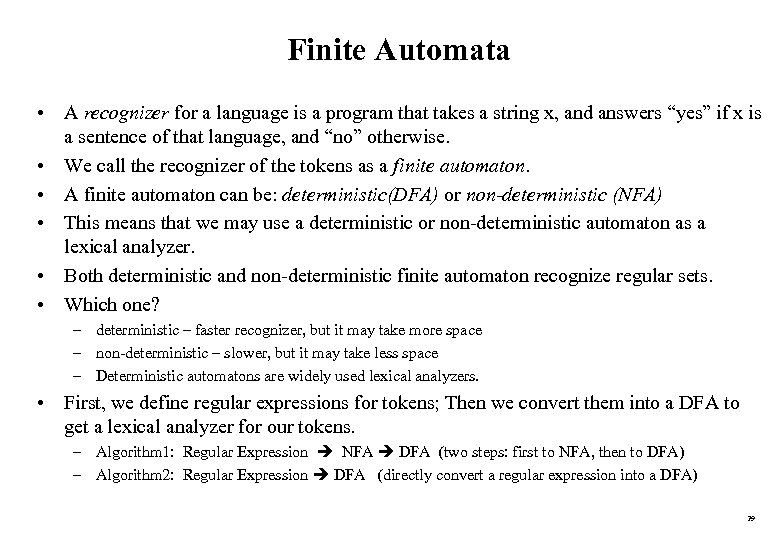

Finite Automata
• A recognizer for a language is a program that takes a string x, and answers “yes” if x is a sentence of that language, and “no” otherwise.
• We call the recognizer of the tokens a finite automaton.
• A finite automaton can be deterministic (DFA) or non-deterministic (NFA).
• This means that we may use a deterministic or non-deterministic automaton as a lexical analyzer.
• Both deterministic and non-deterministic finite automata recognize regular sets.
• Which one?
  – deterministic: faster recognizer, but it may take more space
  – non-deterministic: slower, but it may take less space
  – Deterministic automata are widely used in lexical analyzers.
• First, we define regular expressions for tokens; then we convert them into a DFA to get a lexical analyzer for our tokens.
  – Algorithm 1: Regular Expression → NFA → DFA (two steps: first to NFA, then to DFA)
  – Algorithm 2: Regular Expression → DFA (directly convert a regular expression into a DFA)

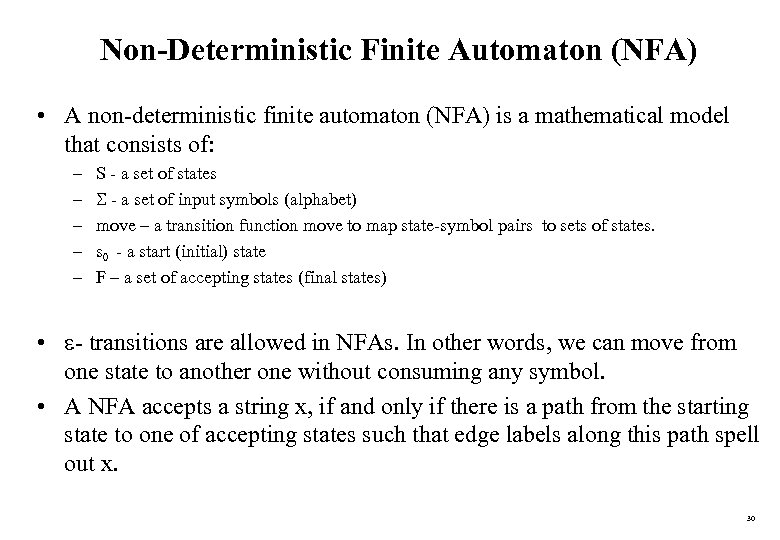

Non-Deterministic Finite Automaton (NFA)
• A non-deterministic finite automaton (NFA) is a mathematical model that consists of:
  – S: a set of states
  – Σ: a set of input symbols (alphabet)
  – move: a transition function that maps state-symbol pairs to sets of states
  – s0: a start (initial) state
  – F: a set of accepting states (final states)
• ε-transitions are allowed in NFAs. In other words, we can move from one state to another without consuming any symbol.
• An NFA accepts a string x if and only if there is a path from the start state to one of the accepting states such that the edge labels along this path spell out x.

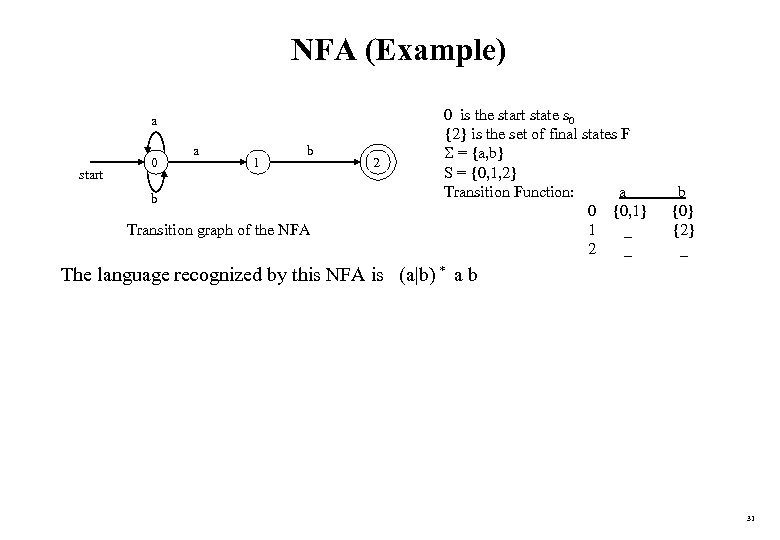

NFA (Example)
Transition graph of the NFA (states 0, 1, 2; edges as given by the transition function below)
• 0 is the start state s0
• {2} is the set of final states F
• Σ = {a, b}
• S = {0, 1, 2}
Transition function:
          a         b
    0   {0, 1}     {0}
    1     _        {2}
    2     _         _
The language recognized by this NFA is (a|b)*ab

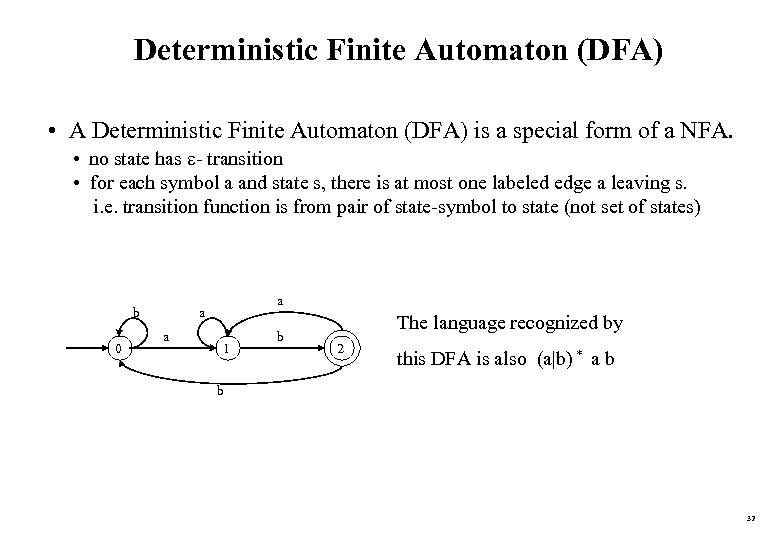

Deterministic Finite Automaton (DFA)
• A Deterministic Finite Automaton (DFA) is a special form of an NFA.
• No state has an ε-transition.
• For each symbol a and state s, there is at most one edge labeled a leaving s, i.e. the transition function maps a state-symbol pair to a state (not a set of states).
Transition graph of the DFA (states 0, 1, 2; edges labeled a and b)
The language recognized by this DFA is also (a|b)*ab

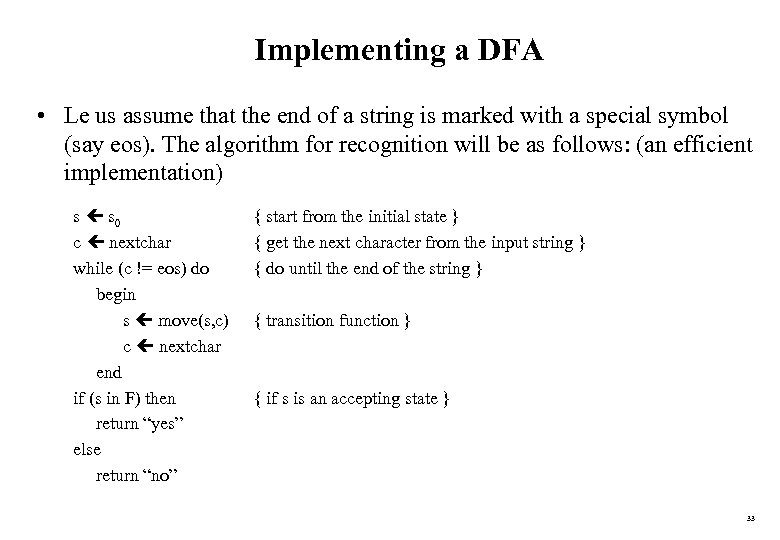

Implementing a DFA
• Let us assume that the end of a string is marked with a special symbol (say eos). The algorithm for recognition is as follows (an efficient implementation):
    s ← s0                      { start from the initial state }
    c ← nextchar                { get the next character from the input string }
    while (c != eos) do         { do until the end of the string }
    begin
        s ← move(s, c)          { transition function }
        c ← nextchar
    end
    if (s in F) then            { if s is an accepting state }
        return “yes”
    else
        return “no”
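
The recognition loop translates almost line for line into Python. In this sketch the end of the Python string plays the role of eos. The transition table is an assumption: it is one DFA for (a|b)*ab with start state 0 and accepting state 2, written out here for illustration rather than copied from the course's transition graph.

```python
# Assumed transition table for a DFA recognizing (a|b)*ab.
DFA_MOVE = {
    (0, "a"): 1, (0, "b"): 0,
    (1, "a"): 1, (1, "b"): 2,
    (2, "a"): 1, (2, "b"): 0,
}
DFA_START = 0
DFA_ACCEPTING = {2}


def dfa_accepts(text, move=DFA_MOVE, start=DFA_START, accepting=DFA_ACCEPTING):
    """Return True ("yes") if the DFA accepts `text`, False ("no") otherwise."""
    state = start                       # start from the initial state
    for symbol in text:                 # loop until the end of the string
        if (state, symbol) not in move:
            return False                # no edge for this symbol: reject
        state = move[(state, symbol)]   # transition function
    return state in accepting           # accept only in an accepting state
```

Each input character costs one dictionary lookup, which is why DFA simulation is the efficient option: `dfa_accepts("bbab")` is true, `dfa_accepts("aba")` is false.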

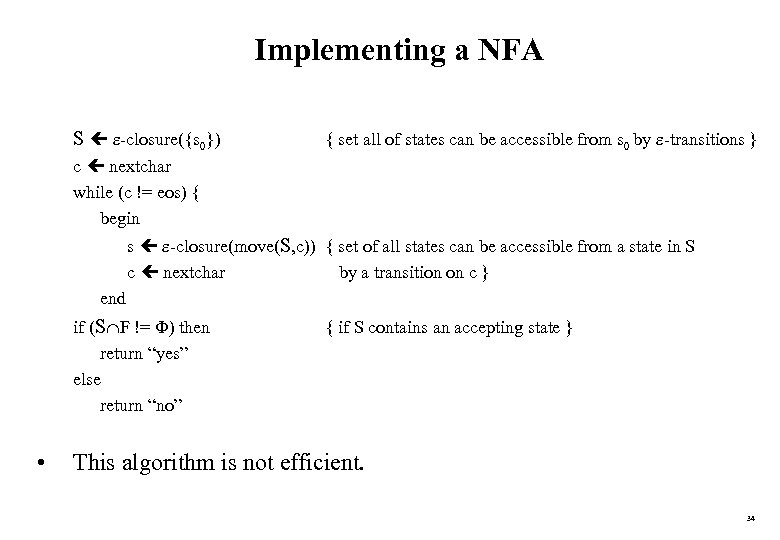

Implementing an NFA
    S ← ε-closure({s0})               { set of all states accessible from s0 by ε-transitions }
    c ← nextchar
    while (c != eos) do
    begin
        S ← ε-closure(move(S, c))     { set of all states accessible from a state in S by a transition on c }
        c ← nextchar
    end
    if (S ∩ F != ∅) then              { if S contains an accepting state }
        return “yes”
    else
        return “no”
• This algorithm is not efficient.
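
A direct Python rendering of this set-based simulation is sketched below. The representation is an assumption: transitions are stored in a dictionary keyed by `(state, symbol)`, and the empty string `""` is used as the label for ε-transitions. The sample table `NFA_MOVE` encodes the three-state NFA for (a|b)*ab whose transition function maps (0, a) to {0, 1}, (0, b) to {0} and (1, b) to {2}.

```python
EPSILON = ""  # assumed label for ε-transitions


def epsilon_closure(states, move):
    """All states reachable from `states` using only ε-transitions."""
    closure = set(states)
    stack = list(states)
    while stack:
        state = stack.pop()
        for target in move.get((state, EPSILON), ()):
            if target not in closure:
                closure.add(target)
                stack.append(target)
    return closure


def step(states, symbol, move):
    """move(S, c): states reachable from a state in S by one transition on `symbol`."""
    targets = set()
    for state in states:
        targets |= set(move.get((state, symbol), ()))
    return targets


def nfa_accepts(text, move, start, accepting):
    current = epsilon_closure({start}, move)
    for symbol in text:
        current = epsilon_closure(step(current, symbol, move), move)
    return bool(current & accepting)    # S ∩ F is not empty


NFA_MOVE = {(0, "a"): {0, 1}, (0, "b"): {0}, (1, "b"): {2}}
```

Unlike the DFA loop, every input character here triggers work proportional to the size of the current state set, which is the inefficiency the slide refers to.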

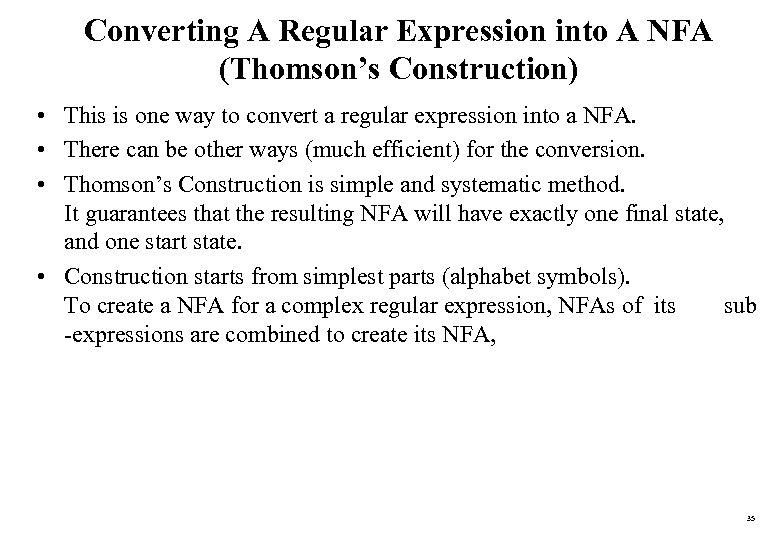

Converting A Regular Expression into An NFA (Thompson’s Construction)
• This is one way to convert a regular expression into an NFA.
• There can be other (more efficient) ways to do the conversion.
• Thompson’s Construction is a simple and systematic method. It guarantees that the resulting NFA will have exactly one final state and one start state.
• Construction starts from the simplest parts (alphabet symbols). To create an NFA for a complex regular expression, the NFAs of its sub-expressions are combined.

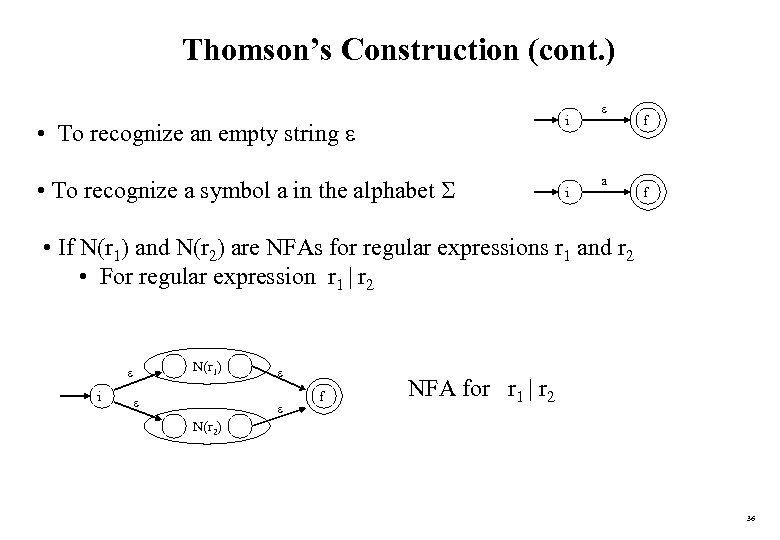

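Thompson’s Construction (cont.)
• To recognize the empty string ε:            i --ε--> f
• To recognize a symbol a in the alphabet Σ:  i --a--> f
• Let N(r1) and N(r2) be the NFAs for regular expressions r1 and r2.
• For regular expression r1 | r2 (NFA for r1 | r2): a new start state i has ε-transitions to the start states of N(r1) and N(r2), and the final states of N(r1) and N(r2) have ε-transitions to a new final state f.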

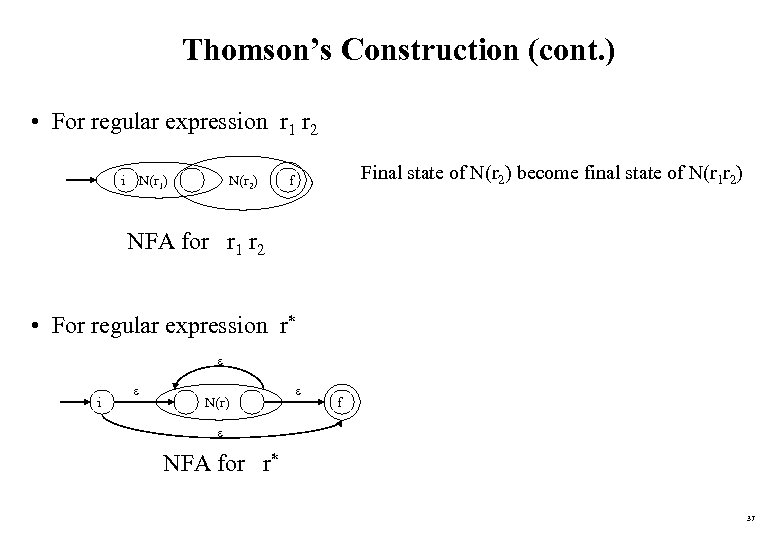

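Thompson’s Construction (cont.)
• For regular expression r1 r2 (NFA for r1 r2): i → N(r1) → N(r2) → f
  – The start state of N(r1) becomes the start state i, and N(r1) leads directly into N(r2).
  – The final state of N(r2) becomes the final state of N(r1 r2).
• For regular expression r* (NFA for r*): i → N(r) → f
  – ε-transitions let the automaton skip N(r) entirely (from i to f) or repeat it (from the final state of N(r) back to its start state).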

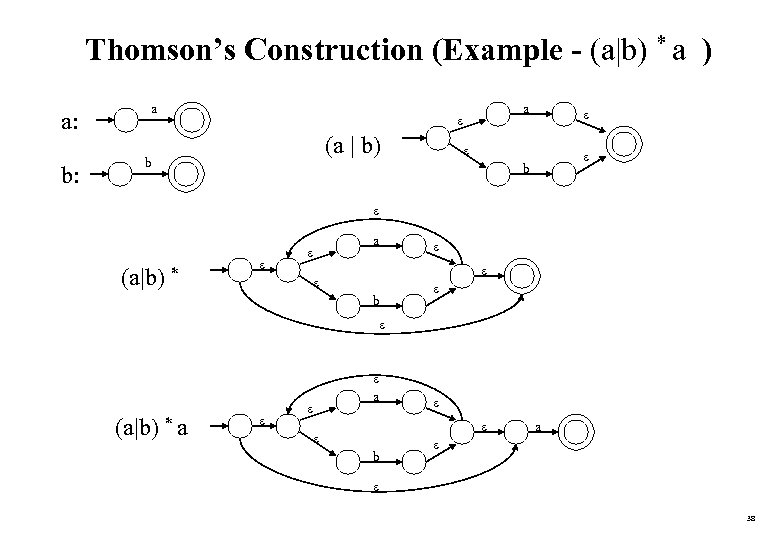

Thompson’s Construction (Example: (a|b)*a)
The NFA is built bottom-up, one sub-expression at a time (transition graphs):
• a
• b
• (a | b)
• (a|b)*
• (a|b)*a
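
The bottom-up construction can be sketched in a few lines of Python. Several choices here are assumptions made for illustration: the regular expression is supplied as an already-parsed tree built from `("sym", a)`, `("eps",)`, `("alt", r1, r2)`, `("cat", r1, r2)` and `("star", r)`; ε is represented by the empty string; and concatenation links the two sub-automata with an ε-transition instead of merging states, so the state numbering differs from a hand-drawn diagram. The small `accepts` helper exists only to exercise the result.

```python
from collections import defaultdict
from itertools import count

EPSILON = ""  # assumed label for ε-transitions


def thompson(regex):
    """Build an NFA (start, final, move) with one start and one final state."""
    move = defaultdict(set)
    fresh = count()  # generator of new state numbers

    def build(node):
        kind = node[0]
        if kind in ("sym", "eps"):
            i, f = next(fresh), next(fresh)
            move[(i, node[1] if kind == "sym" else EPSILON)].add(f)
            return i, f
        if kind == "alt":
            i = next(fresh)
            s1, f1 = build(node[1])
            s2, f2 = build(node[2])
            f = next(fresh)
            move[(i, EPSILON)] |= {s1, s2}
            move[(f1, EPSILON)].add(f)
            move[(f2, EPSILON)].add(f)
            return i, f
        if kind == "cat":
            s1, f1 = build(node[1])
            s2, f2 = build(node[2])
            move[(f1, EPSILON)].add(s2)  # simplification: ε-link instead of merging
            return s1, f2
        if kind == "star":
            i = next(fresh)
            s, inner_final = build(node[1])
            f = next(fresh)
            move[(i, EPSILON)] |= {s, f}            # enter or skip N(r)
            move[(inner_final, EPSILON)] |= {s, f}  # repeat or leave N(r)
            return i, f
        raise ValueError(f"unknown node kind {kind}")

    start, final = build(regex)
    return start, final, dict(move)


def accepts(nfa, text):
    """Simulate the NFA on `text` using ε-closures."""
    start, final, move = nfa

    def closure(states):
        seen, stack = set(states), list(states)
        while stack:
            for target in move.get((stack.pop(), EPSILON), ()):
                if target not in seen:
                    seen.add(target)
                    stack.append(target)
        return seen

    current = closure({start})
    for symbol in text:
        current = closure({t for s in current for t in move.get((s, symbol), ())})
    return final in current
```

For the example expression, `thompson(("cat", ("star", ("alt", ("sym", "a"), ("sym", "b"))), ("sym", "a")))` yields an NFA that accepts exactly the strings over {a, b} ending in a.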

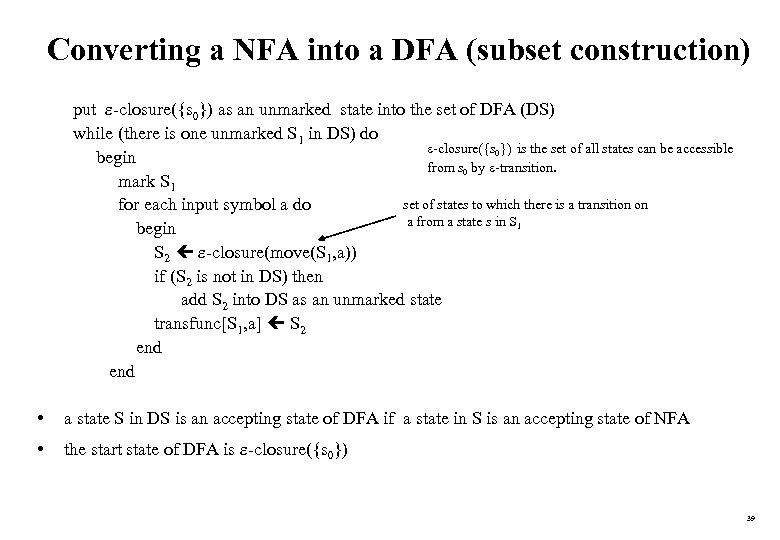

Converting an NFA into a DFA (Subset Construction)

    put ε-closure({s0}) as an unmarked state into the set of DFA states (DS)
    while (there is an unmarked state S1 in DS) do
    begin
        mark S1
        for each input symbol a do
        begin
            S2 ← ε-closure(move(S1, a))
            if (S2 is not in DS) then
                add S2 into DS as an unmarked state
            transfunc[S1, a] ← S2
        end
    end

• ε-closure({s0}) is the set of all states accessible from s0 by ε-transitions.
• move(S1, a) is the set of states to which there is a transition on a from a state s in S1.
• A state S in DS is an accepting state of the DFA if some state in S is an accepting state of the NFA.
• The start state of the DFA is ε-closure({s0}).
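
The subset construction can be sketched in Python as follows. The function names and the dictionary encoding of the NFA are assumptions of this sketch. The sample NFA (states 0 to 8 for (a|b)* a) is an assumed transition table in the usual Thompson layout, and, unlike the pseudocode, the sketch skips an empty S2 instead of adding a dead state.

```python
from collections import deque


def eps_closure(states, eps):
    """All NFA states reachable from `states` using only ε-transitions."""
    stack, closure = list(states), set(states)
    while stack:
        s = stack.pop()
        for t in eps.get(s, ()):
            if t not in closure:
                closure.add(t)
                stack.append(t)
    return frozenset(closure)


def move(states, a, delta):
    """States reachable on symbol a from any state in `states`."""
    return {t for s in states for t in delta.get((s, a), ())}


def subset_construction(start, accepting, delta, eps, alphabet):
    s0 = eps_closure({start}, eps)
    dstates, unmarked, transfunc = {s0}, deque([s0]), {}
    while unmarked:
        s1 = unmarked.popleft()                  # "mark" S1
        for a in alphabet:
            s2 = eps_closure(move(s1, a, delta), eps)
            if not s2:
                continue                         # deviation: no dead state
            if s2 not in dstates:
                dstates.add(s2)
                unmarked.append(s2)
            transfunc[(s1, a)] = s2
    dfa_accepting = {s for s in dstates if s & accepting}
    return s0, dstates, transfunc, dfa_accepting


# Assumed NFA for (a|b)* a: ε-edges and labeled edges given separately.
EPS = {0: {1, 7}, 1: {2, 4}, 3: {6}, 5: {6}, 6: {1, 7}}
DELTA = {(2, "a"): {3}, (4, "b"): {5}, (7, "a"): {8}}
start, dstates, transfunc, final = subset_construction(0, {8}, DELTA, EPS, "ab")
```

Using `frozenset` for DFA states makes each set of NFA states hashable, so it can serve directly as a dictionary key in `transfunc`.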

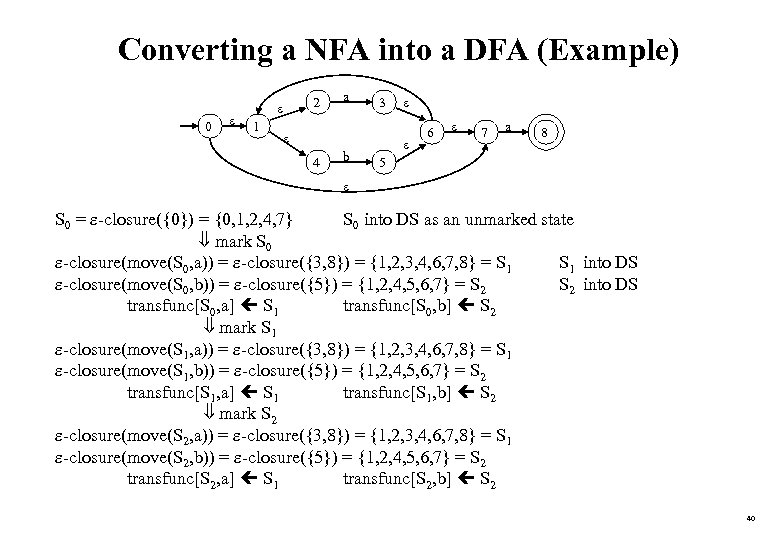

Converting an NFA into a DFA (Example)

NFA with states 0 to 8 (diagram omitted); 0 is the start state and 8 is the accepting state.

    S0 = ε-closure({0}) = {0, 1, 2, 4, 7}          S0 into DS as an unmarked state

    mark S0
        ε-closure(move(S0, a)) = ε-closure({3, 8}) = {1, 2, 3, 4, 6, 7, 8} = S1      S1 into DS
        ε-closure(move(S0, b)) = ε-closure({5}) = {1, 2, 4, 5, 6, 7} = S2            S2 into DS
        transfunc[S0, a] ← S1      transfunc[S0, b] ← S2

    mark S1
        ε-closure(move(S1, a)) = ε-closure({3, 8}) = {1, 2, 3, 4, 6, 7, 8} = S1
        ε-closure(move(S1, b)) = ε-closure({5}) = {1, 2, 4, 5, 6, 7} = S2
        transfunc[S1, a] ← S1      transfunc[S1, b] ← S2

    mark S2
        ε-closure(move(S2, a)) = ε-closure({3, 8}) = {1, 2, 3, 4, 6, 7, 8} = S1
        ε-closure(move(S2, b)) = ε-closure({5}) = {1, 2, 4, 5, 6, 7} = S2
        transfunc[S2, a] ← S1      transfunc[S2, b] ← S2

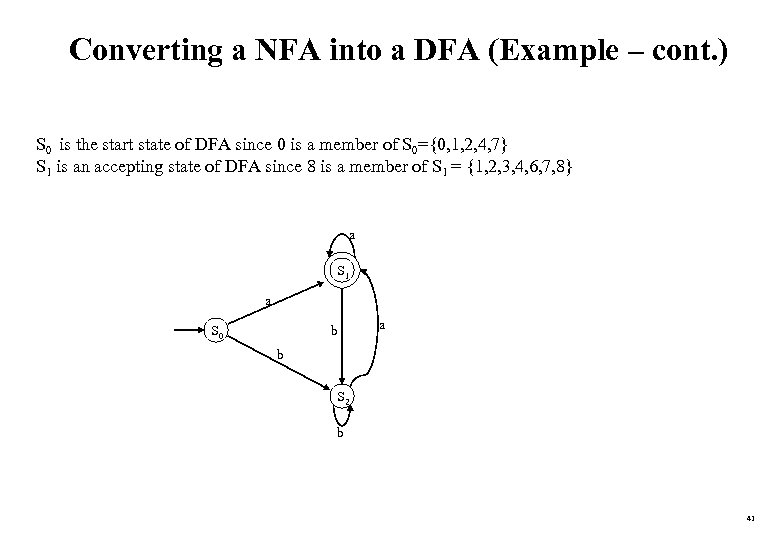

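Converting an NFA into a DFA (Example – cont.)

• S0 is the start state of the DFA since 0 is a member of S0 = {0, 1, 2, 4, 7}.
• S1 is an accepting state of the DFA since 8 is a member of S1 = {1, 2, 3, 4, 6, 7, 8}.

Transitions of the resulting DFA (diagram omitted):
    S0 on a → S1      S0 on b → S2
    S1 on a → S1      S1 on b → S2
    S2 on a → S1      S2 on b → S2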

Converting Regular Expressions Directly to DFAs

• We may convert a regular expression into a DFA without creating an NFA first.
• First we augment the given regular expression by concatenating it with a special symbol #:
    r → (r)#      (augmented regular expression)
• Then we create a syntax tree for this augmented regular expression.
• In this syntax tree, all alphabet symbols (plus # and the empty string ε) of the augmented regular expression are at the leaves, and all inner nodes are the operators of that augmented regular expression.
• Then each alphabet symbol (plus #) is numbered (position numbers).

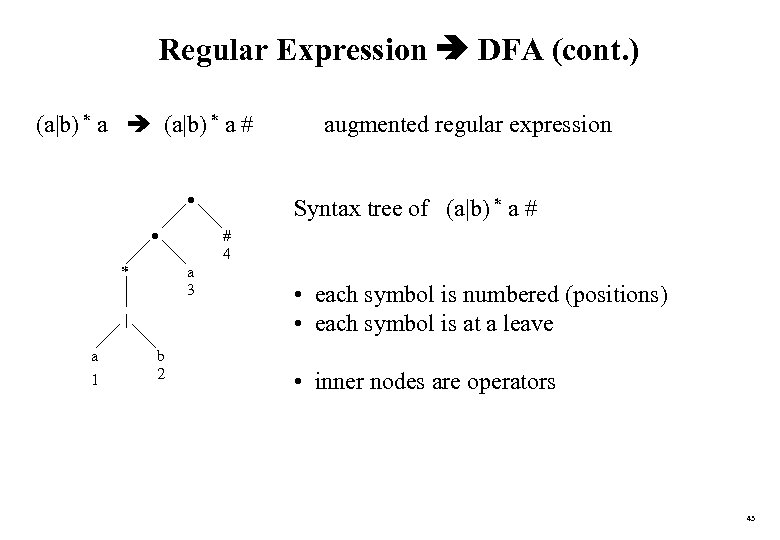

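Regular Expression → DFA (cont.)

    (a|b)* a  →  (a|b)* a #      (augmented regular expression)

Syntax tree of (a|b)* a # (diagram omitted): the root is a concatenation node whose right child is the leaf # (position 4). Its left child is a concatenation node whose right child is the leaf a (position 3) and whose left child is the * node. The * node sits over the | node with leaves a (position 1) and b (position 2).

• each symbol is numbered (positions)
• each symbol is at a leaf
• inner nodes are operators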

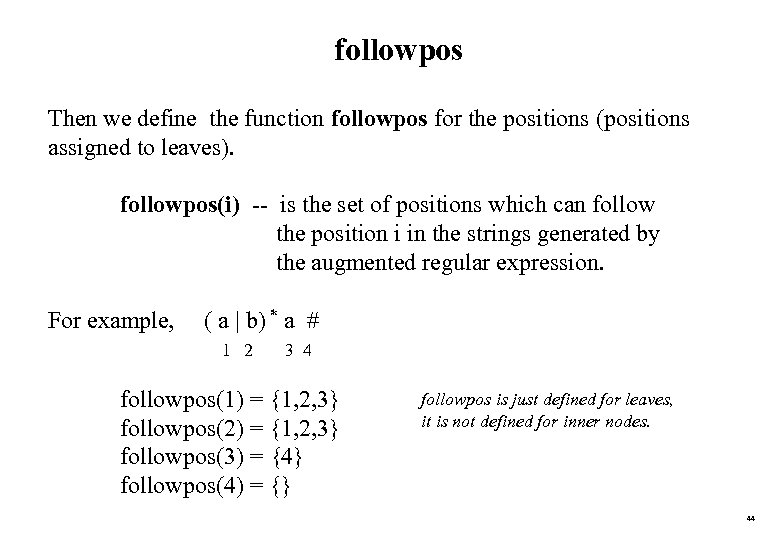

followpos

Then we define the function followpos for the positions (the numbers assigned to the leaves).

followpos(i) is the set of positions that can follow position i in the strings generated by the augmented regular expression.

For example, for ( a | b )* a # with positions a = 1, b = 2, a = 3, # = 4:
    followpos(1) = {1, 2, 3}
    followpos(2) = {1, 2, 3}
    followpos(3) = {4}
    followpos(4) = {}

followpos is defined only for leaves; it is not defined for inner nodes.

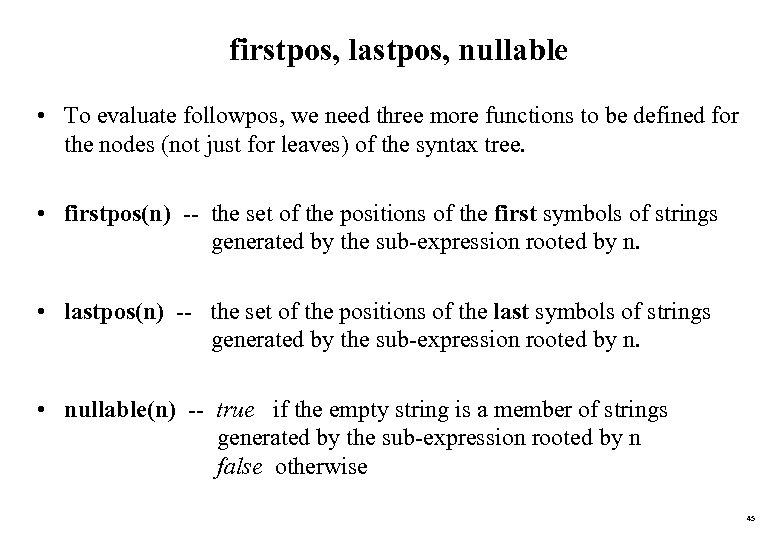

firstpos, lastpos, nullable

• To evaluate followpos, we need three more functions defined for all nodes (not just the leaves) of the syntax tree.
• firstpos(n): the set of positions of the first symbols of the strings generated by the sub-expression rooted at n.
• lastpos(n): the set of positions of the last symbols of the strings generated by the sub-expression rooted at n.
• nullable(n): true if the empty string is a member of the strings generated by the sub-expression rooted at n, false otherwise.

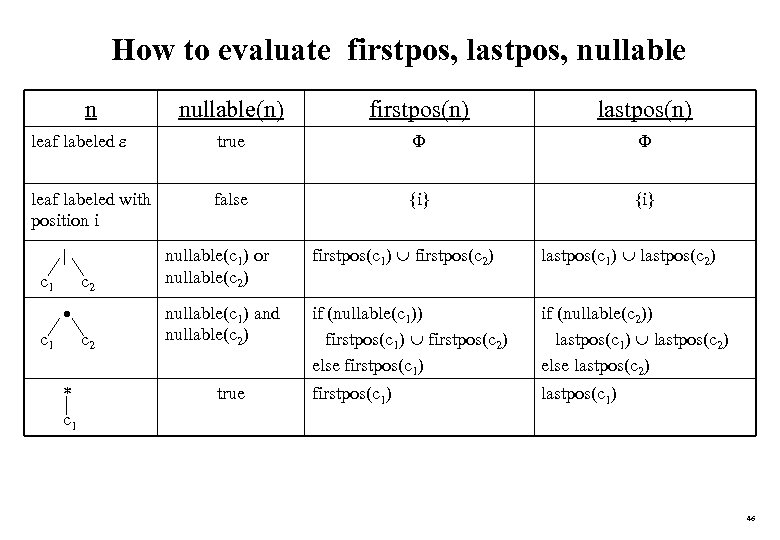

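How to Evaluate firstpos, lastpos, nullable

For each kind of node n (c1 and c2 are its children):

• n is a leaf labeled ε:
    nullable(n) = true
    firstpos(n) = ∅
    lastpos(n) = ∅
• n is a leaf labeled with position i:
    nullable(n) = false
    firstpos(n) = {i}
    lastpos(n) = {i}
• n is an or-node c1 | c2:
    nullable(n) = nullable(c1) or nullable(c2)
    firstpos(n) = firstpos(c1) ∪ firstpos(c2)
    lastpos(n) = lastpos(c1) ∪ lastpos(c2)
• n is a concatenation node c1 c2:
    nullable(n) = nullable(c1) and nullable(c2)
    firstpos(n) = if (nullable(c1)) firstpos(c1) ∪ firstpos(c2) else firstpos(c1)
    lastpos(n) = if (nullable(c2)) lastpos(c1) ∪ lastpos(c2) else lastpos(c2)
• n is a star-node c1*:
    nullable(n) = true
    firstpos(n) = firstpos(c1)
    lastpos(n) = lastpos(c1)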

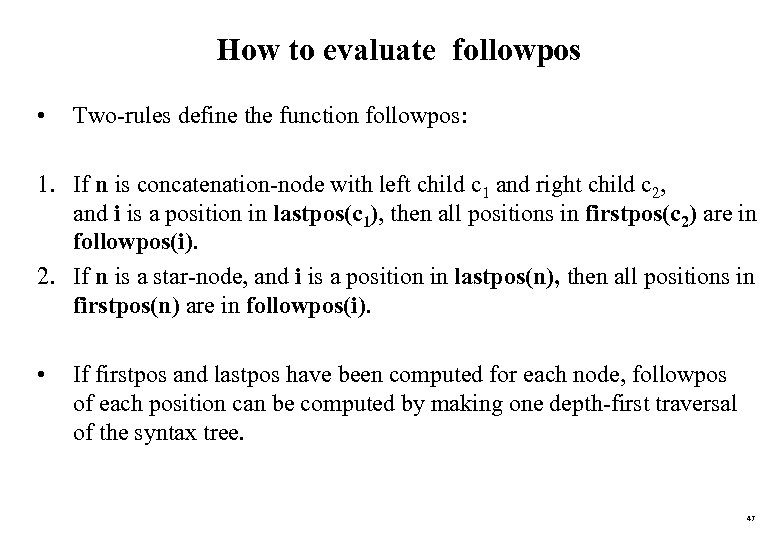

How to Evaluate followpos

• Two rules define the function followpos:
  1. If n is a concatenation node with left child c1 and right child c2, and i is a position in lastpos(c1), then all positions in firstpos(c2) are in followpos(i).
  2. If n is a star node, and i is a position in lastpos(n), then all positions in firstpos(n) are in followpos(i).
• Once firstpos and lastpos have been computed for each node, followpos of each position can be computed in one depth-first traversal of the syntax tree.
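
A single depth-first traversal that computes nullable, firstpos and lastpos and applies the two followpos rules might look like the sketch below. The tuple encoding of the syntax tree (`"leaf"`, `"eps"`, `"or"`, `"cat"`, `"star"`) and the function name `analyze` are assumptions made for illustration.

```python
from collections import defaultdict


def analyze(node, followpos):
    """Return (nullable, firstpos, lastpos) of node and fill in followpos."""
    kind = node[0]
    if kind == "eps":                         # leaf labeled ε
        return True, set(), set()
    if kind == "leaf":                        # ("leaf", symbol, position)
        return False, {node[2]}, {node[2]}
    if kind == "or":
        n1, f1, l1 = analyze(node[1], followpos)
        n2, f2, l2 = analyze(node[2], followpos)
        return n1 or n2, f1 | f2, l1 | l2
    if kind == "cat":
        n1, f1, l1 = analyze(node[1], followpos)
        n2, f2, l2 = analyze(node[2], followpos)
        for i in l1:                          # rule 1 (concatenation node)
            followpos[i] |= f2
        first = (f1 | f2) if n1 else f1
        last = (l1 | l2) if n2 else l2
        return n1 and n2, first, last
    if kind == "star":
        _, f1, l1 = analyze(node[1], followpos)
        for i in l1:                          # rule 2 (star node)
            followpos[i] |= f1
        return True, f1, l1
    raise ValueError(f"unknown node kind: {kind}")


# Syntax tree of the augmented expression (a|b)* a #
tree = ("cat",
        ("cat",
         ("star", ("or", ("leaf", "a", 1), ("leaf", "b", 2))),
         ("leaf", "a", 3)),
        ("leaf", "#", 4))
followpos = defaultdict(set)
nullable, firstpos, lastpos = analyze(tree, followpos)
```

The children are visited before the rules for the parent are applied, so each node is processed exactly once.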

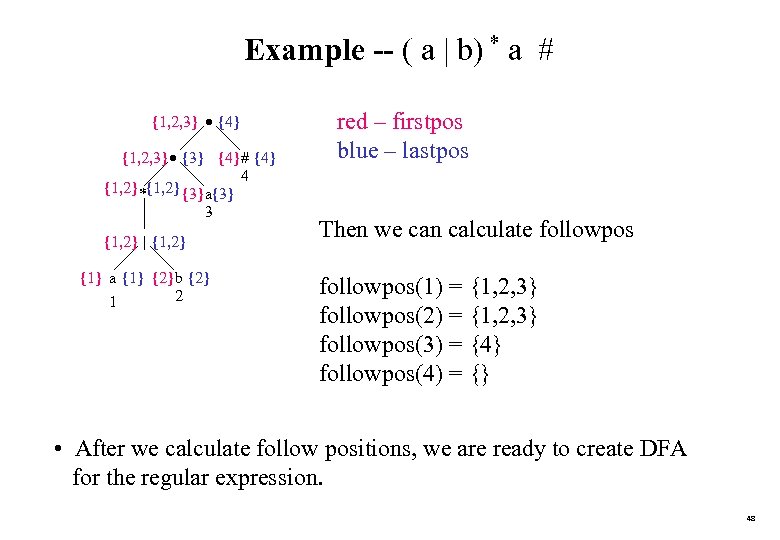

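Example -- ( a | b )* a #

firstpos and lastpos of each node of the syntax tree (red and blue in the original diagram):

    node                                   firstpos      lastpos
    root concatenation                     {1, 2, 3}     {4}
    inner concatenation                    {1, 2, 3}     {3}
    # (position 4)                         {4}           {4}
    *                                      {1, 2}        {1, 2}
    a (position 3)                         {3}           {3}
    |                                      {1, 2}        {1, 2}
    a (position 1)                         {1}           {1}
    b (position 2)                         {2}           {2}

Then we can calculate followpos:
    followpos(1) = {1, 2, 3}
    followpos(2) = {1, 2, 3}
    followpos(3) = {4}
    followpos(4) = {}

• After we calculate the follow positions, we are ready to create the DFA for the regular expression.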

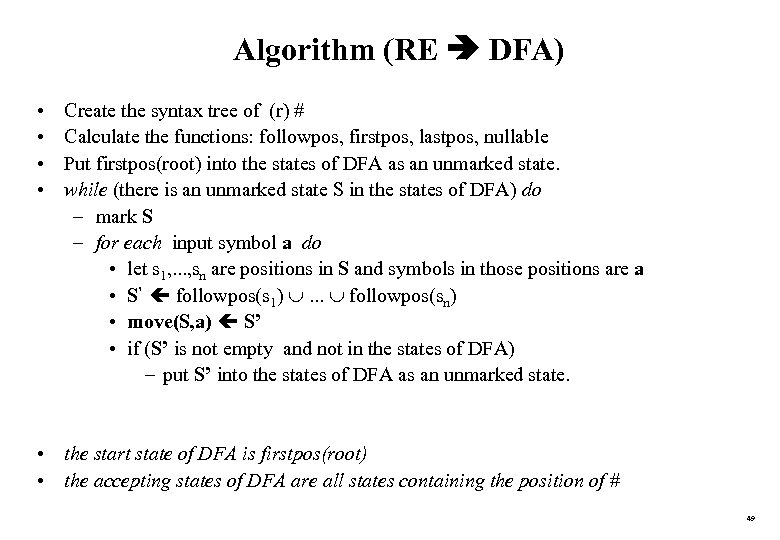

Algorithm (RE → DFA)

• Create the syntax tree of (r)#.
• Calculate the functions followpos, firstpos, lastpos, nullable.
• Put firstpos(root) into the states of the DFA as an unmarked state.
• while (there is an unmarked state S in the states of the DFA) do
  – mark S
  – for each input symbol a do
    • let s1, ..., sn be the positions in S whose symbol is a
    • S' ← followpos(s1) ∪ ... ∪ followpos(sn)
    • move(S, a) ← S'
    • if (S' is not empty and not in the states of the DFA)
      – put S' into the states of the DFA as an unmarked state
• The start state of the DFA is firstpos(root).
• The accepting states of the DFA are all states containing the position of #.
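
Assuming followpos and firstpos(root) are already known, the state-building loop can be sketched as below. The names `build_dfa` and `symbol_at` (a map from position to symbol) are hypothetical; the sample data are the followpos values of (a|b)* a # and ( a | ε ) b c* #.

```python
def build_dfa(first_root, followpos, symbol_at, alphabet):
    """Build DFA states (sets of positions) from followpos."""
    start = frozenset(first_root)
    states, unmarked, move = {start}, [start], {}
    while unmarked:
        s = unmarked.pop()                           # "mark" S
        for a in alphabet:
            # union of followpos over the positions in S labeled a
            target = frozenset(p for i in s if symbol_at[i] == a
                               for p in followpos[i])
            if not target:
                continue
            move[(s, a)] = target
            if target not in states:
                states.add(target)
                unmarked.append(target)
    end = next(i for i, sym in symbol_at.items() if sym == "#")
    accepting = {s for s in states if end in s}
    return start, states, move, accepting


# (a|b)* a #
followpos = {1: {1, 2, 3}, 2: {1, 2, 3}, 3: {4}, 4: set()}
symbol_at = {1: "a", 2: "b", 3: "a", 4: "#"}
start, states, move, accepting = build_dfa({1, 2, 3}, followpos, symbol_at, "ab")

# ( a | ε ) b c* #
followpos2 = {1: {2}, 2: {3, 4}, 3: {3, 4}, 4: set()}
symbol_at2 = {1: "a", 2: "b", 3: "c", 4: "#"}
start2, states2, move2, accepting2 = build_dfa({1, 2}, followpos2, symbol_at2, "abc")
```

Because # occurs only at the end of the augmented expression, a state is accepting exactly when it contains the position of #.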

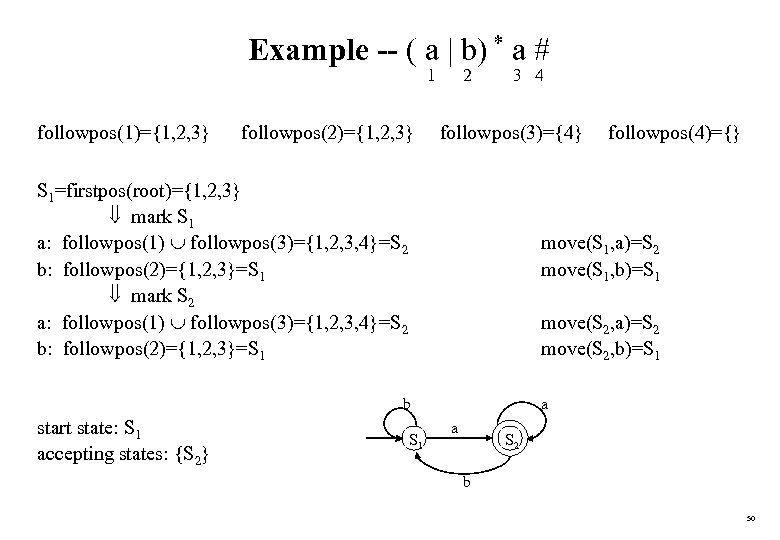

Example -- ( a | b )* a #      (positions: a = 1, b = 2, a = 3, # = 4)

    followpos(1) = {1, 2, 3}    followpos(2) = {1, 2, 3}    followpos(3) = {4}    followpos(4) = {}

    S1 = firstpos(root) = {1, 2, 3}

    mark S1
        a: followpos(1) ∪ followpos(3) = {1, 2, 3, 4} = S2      move(S1, a) = S2
        b: followpos(2) = {1, 2, 3} = S1                          move(S1, b) = S1
    mark S2
        a: followpos(1) ∪ followpos(3) = {1, 2, 3, 4} = S2      move(S2, a) = S2
        b: followpos(2) = {1, 2, 3} = S1                          move(S2, b) = S1

    start state: S1
    accepting states: {S2}

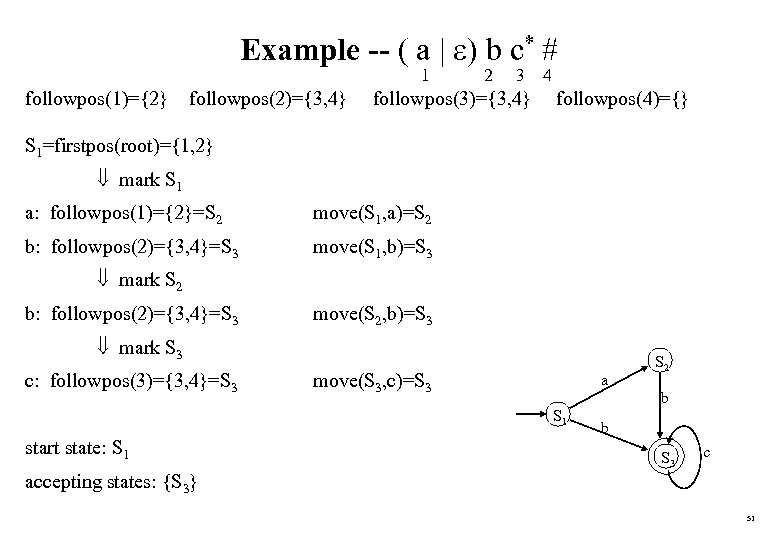

Example -- ( a | ε ) b c* #      (positions: a = 1, b = 2, c = 3, # = 4)

    followpos(1) = {2}    followpos(2) = {3, 4}    followpos(3) = {3, 4}    followpos(4) = {}

    S1 = firstpos(root) = {1, 2}

    mark S1
        a: followpos(1) = {2} = S2          move(S1, a) = S2
        b: followpos(2) = {3, 4} = S3       move(S1, b) = S3
    mark S2
        b: followpos(2) = {3, 4} = S3       move(S2, b) = S3
    mark S3
        c: followpos(3) = {3, 4} = S3       move(S3, c) = S3

    start state: S1
    accepting states: {S3}

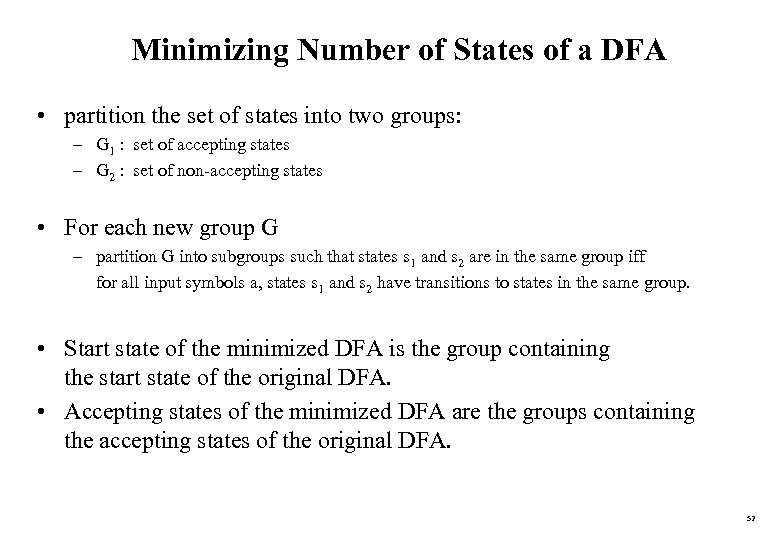

Minimizing Number of States of a DFA

• Partition the set of states into two groups:
  – G1: set of accepting states
  – G2: set of non-accepting states
• For each new group G, and repeatedly until no group can be partitioned further:
  – partition G into subgroups such that states s1 and s2 are in the same subgroup iff for all input symbols a, states s1 and s2 have transitions to states in the same group.
• The start state of the minimized DFA is the group containing the start state of the original DFA.
• The accepting states of the minimized DFA are the groups containing the accepting states of the original DFA.
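
The partitioning procedure can be sketched as a refinement loop. This is a minimal sketch that assumes a total transition function given as a dictionary; the name `minimize` is hypothetical, and the sample DFA is an assumed four-state machine (the transitions of state 4 in particular are chosen for illustration).

```python
def minimize(states, alphabet, delta, accepting):
    """Return the groups (frozensets of states) of the minimized DFA."""
    accepting = frozenset(accepting)
    groups = [g for g in (accepting, frozenset(states) - accepting) if g]
    while True:
        group_of = {s: idx for idx, g in enumerate(groups) for s in g}
        refined = []
        for g in groups:
            buckets = {}
            for s in g:
                # states stay together iff they reach the same groups
                signature = tuple(group_of[delta[(s, a)]] for a in alphabet)
                buckets.setdefault(signature, set()).add(s)
            refined.extend(frozenset(b) for b in buckets.values())
        if len(refined) == len(groups):       # nothing was split: done
            return set(refined)
        groups = refined


# Assumed DFA: state 4 is accepting.
delta = {
    (1, "a"): 2, (1, "b"): 3,
    (2, "a"): 2, (2, "b"): 3,
    (3, "a"): 4, (3, "b"): 3,
    (4, "a"): 2, (4, "b"): 3,
}
groups = minimize({1, 2, 3, 4}, "ab", delta, {4})
```

Refinement only ever splits groups, so an unchanged group count means the partition is stable.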

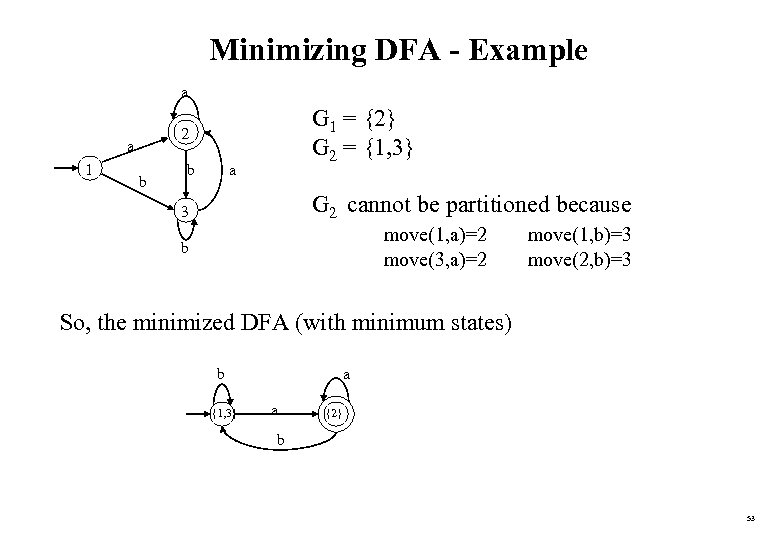

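Minimizing DFA - Example

DFA with states 1, 2, 3 over {a, b} (diagram omitted); state 2 is the accepting state.

    G1 = {2}
    G2 = {1, 3}

G2 cannot be partitioned because
    move(1, a) = 2      move(1, b) = 3
    move(3, a) = 2      move(3, b) = 3

So the minimized DFA (with the minimum number of states) has two states:
    {1, 3} on a → {2}      {1, 3} on b → {1, 3}
    {2} on a → {2}         {2} on b → {1, 3}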

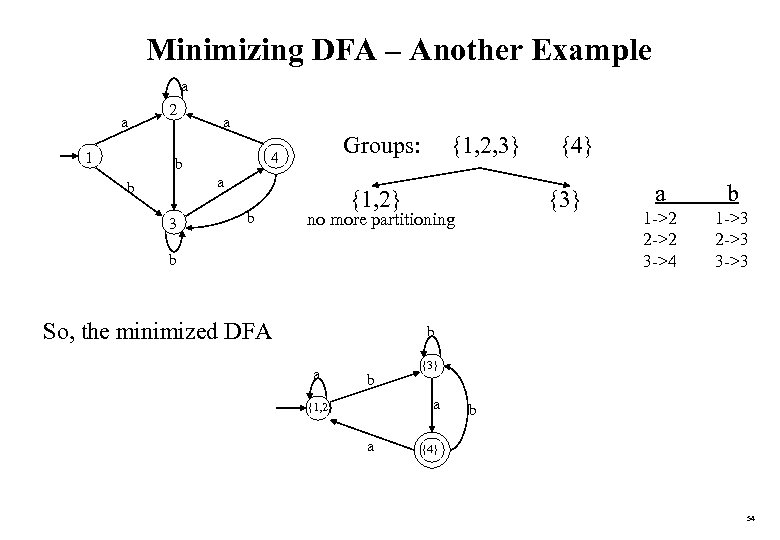

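Minimizing DFA – Another Example

DFA with states 1, 2, 3, 4 over {a, b} (diagram omitted); state 4 is the accepting state.

    Groups:   {1, 2, 3}  {4}
              {1, 2}  {3}  {4}      no more partitioning

    state     on a      on b
    1         1 → 2     1 → 3
    2         2 → 2     2 → 3
    3         3 → 4     3 → 3

So the minimized DFA has the states {1, 2}, {3} and {4} (diagram omitted).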

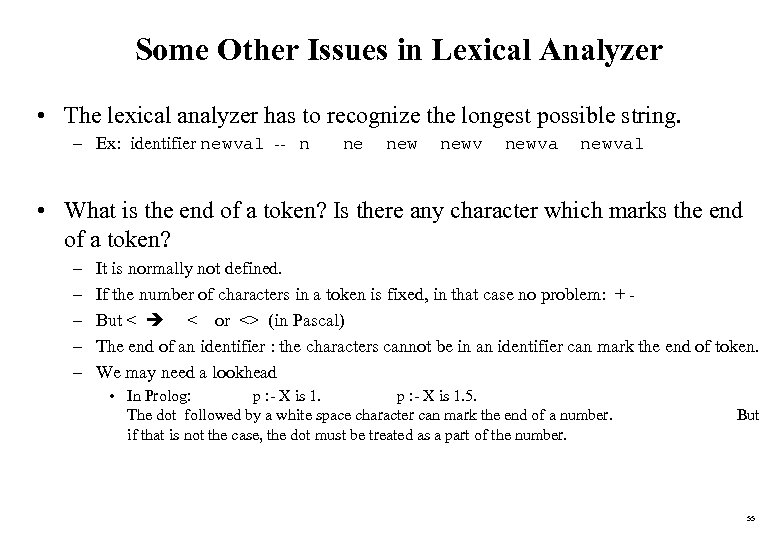

Some Other Issues in Lexical Analyzer

• The lexical analyzer has to recognize the longest possible string.
  – Ex: for the identifier newval, the token is not n or ne but the whole string newval.
• What is the end of a token? Is there any character which marks the end of a token?
  – It is normally not defined.
  – If the number of characters in a token is fixed, there is no problem: +
  – But after < the token may be < or <> (in Pascal).
  – The end of an identifier: a character that cannot appear in an identifier marks the end of the token.
  – We may need a lookahead.
    • In Prolog: p :- X is 1.5.
      The dot followed by a white space character can mark the end of a number. If that is not the case, the dot must be treated as a part of the number.
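
A longest-match scanner with lookahead can be sketched with Python's `re` module. The token names and patterns below are hypothetical and chosen only to mirror the cases on the slide (identifiers, `<` versus `<>`, and a dot after a number).

```python
import re

# Hypothetical token patterns for a tiny language (illustration only).
TOKEN_SPEC = [
    ("NUMBER", r"\d+(\.\d+)?"),          # a dot belongs to the number only
                                         # when a digit follows it
    ("ID",     r"[A-Za-z][A-Za-z0-9]*"),
    ("RELOP",  r"<=|<>|>=|<|>|="),       # longer operators listed first
    ("PLUS",   r"\+"),
    ("DOT",    r"\."),
    ("SKIP",   r"\s+"),
]


def tokenize(text):
    """Return (kind, lexeme) pairs, always taking the longest match."""
    pos, tokens = 0, []
    while pos < len(text):
        best = None
        for name, pattern in TOKEN_SPEC:
            m = re.compile(pattern).match(text, pos)
            if m and (best is None or m.end() > best[1].end()):
                best = (name, m)
        if best is None:
            raise SyntaxError(f"unexpected character {text[pos]!r} at {pos}")
        name, m = best
        if name != "SKIP":               # white space is not returned
            tokens.append((name, m.group()))
        pos = m.end()
    return tokens
```

For example, `tokenize("X is 1.5.")` treats the first dot as part of the number and the second as a separate token, while `tokenize("X is 1. ")` ends the number before the dot.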

Some Other Issues in Lexical Analyzer (cont.)

• Skipping comments
  – Normally we don't return a comment as a token.
  – We skip a comment and return the next token (which is not a comment) to the parser.
  – So comments are processed only by the lexical analyzer, and they don't complicate the syntax of the language.
• Symbol table interface
  – The symbol table holds information about tokens (at least the lexemes of identifiers).
  – How to implement the symbol table, and what kind of operations it needs:
    • hash table: open addressing, chaining
    • putting a token into the hash table, finding the position of a token from its lexeme
• Positions of the tokens in the file (for error handling).

Syntax Analyzer

• The syntax analyzer creates the syntactic structure of the given source program.
• This syntactic structure is mostly a parse tree.
• The syntax analyzer is also known as the parser.
• The syntax of a programming language is described by a context-free grammar (CFG). We will use BNF (Backus-Naur Form) notation in the description of CFGs.
• The syntax analyzer (parser) checks whether a given source program satisfies the rules implied by a context-free grammar.
  – If it does, the parser creates the parse tree of that program.
  – Otherwise the parser gives error messages.
• A context-free grammar
  – gives a precise syntactic specification of a programming language.
  – the design of the grammar is an initial phase of the design of a compiler.
  – a grammar can be directly converted into a parser by some tools.

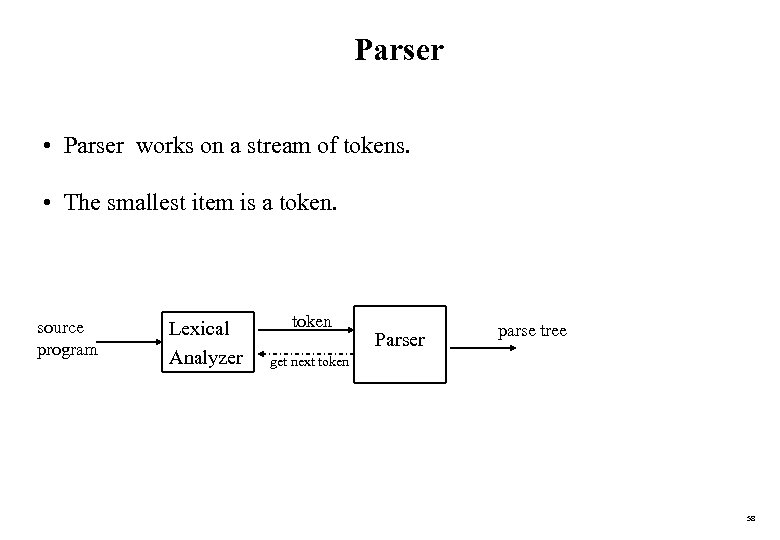

Parser

• The parser works on a stream of tokens.
• The smallest item is a token.

    source program → Lexical Analyzer → token → Parser → parse tree
    (the parser asks the lexical analyzer: get next token)

Parsers (cont.)

• We categorize the parsers into two groups:
  1. Top-Down Parser
     – The parse tree is created top to bottom, starting from the root.
  2. Bottom-Up Parser
     – The parse tree is created bottom to top, starting from the leaves.
• Both top-down and bottom-up parsers scan the input from left to right (one symbol at a time).
• Efficient top-down and bottom-up parsers can be implemented only for subclasses of context-free grammars.
  – LL for top-down parsing
  – LR for bottom-up parsing

Context-Free Grammars

• Inherently recursive structures of a programming language are defined by a context-free grammar.
• In a context-free grammar, we have:
  – A finite set of terminals (in our case, this will be the set of tokens)
  – A finite set of non-terminals (syntactic variables)
  – A finite set of production rules of the form
    • A → α      where A is a non-terminal and α is a string of terminals and non-terminals (including the empty string)
  – A start symbol (one of the non-terminal symbols)
• Example:
    E → E + E | E – E | E * E | E / E | - E
    E → ( E )
    E → id

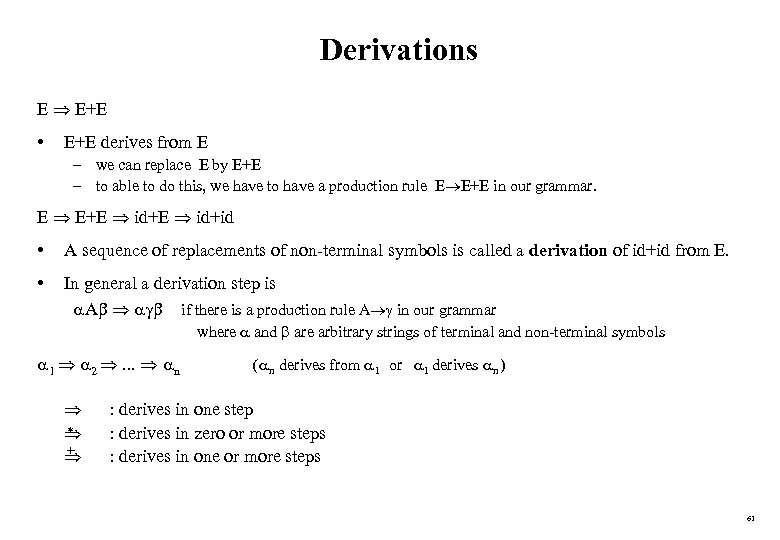

Derivations

    E ⇒ E+E

• E+E derives from E
  – we can replace E by E+E
  – to be able to do this, we have to have a production rule E → E+E in our grammar.

    E ⇒ E+E ⇒ id+E ⇒ id+id

• A sequence of replacements of non-terminal symbols is called a derivation of id+id from E.
• In general, a derivation step is
    αAβ ⇒ αγβ      if there is a production rule A → γ in our grammar, where α and β are arbitrary strings of terminal and non-terminal symbols

    α1 ⇒ α2 ⇒ ... ⇒ αn      (αn derives from α1, or α1 derives αn)

    ⇒  : derives in one step
    ⇒* : derives in zero or more steps
    ⇒+ : derives in one or more steps

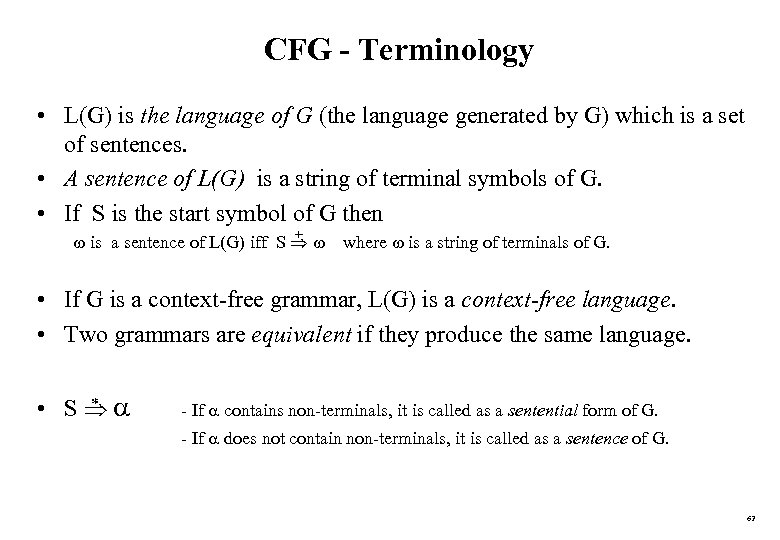

CFG - Terminology

• L(G) is the language of G (the language generated by G), which is a set of sentences.
• A sentence of L(G) is a string of terminal symbols of G.
• If S is the start symbol of G, then ω is a sentence of L(G) iff S ⇒+ ω, where ω is a string of terminals of G.
• If G is a context-free grammar, L(G) is a context-free language.
• Two grammars are equivalent if they produce the same language.
• S ⇒* α
  – If α contains non-terminals, it is called a sentential form of G.
  – If α does not contain non-terminals, it is called a sentence of G.

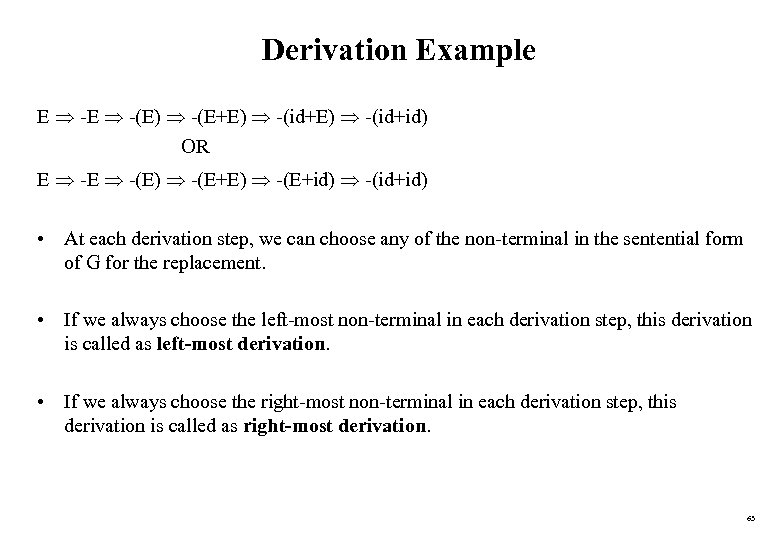

Derivation Example

    E ⇒ -E ⇒ -(E) ⇒ -(E+E) ⇒ -(id+E) ⇒ -(id+id)
    OR
    E ⇒ -E ⇒ -(E) ⇒ -(E+E) ⇒ -(E+id) ⇒ -(id+id)

• At each derivation step, we can choose any of the non-terminals in the sentential form of G for the replacement.
• If we always choose the left-most non-terminal in each derivation step, the derivation is called a left-most derivation.
• If we always choose the right-most non-terminal in each derivation step, the derivation is called a right-most derivation.

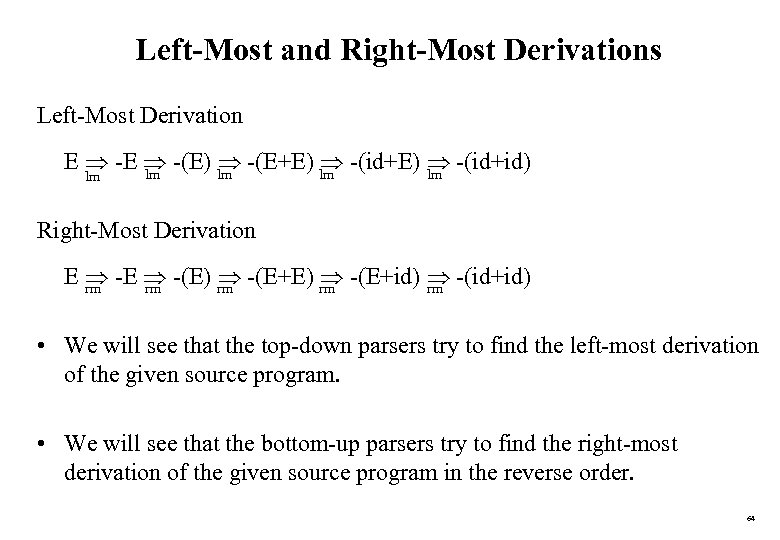

Left-Most and Right-Most Derivations

Left-Most Derivation
    E ⇒lm -E ⇒lm -(E) ⇒lm -(E+E) ⇒lm -(id+E) ⇒lm -(id+id)

Right-Most Derivation
    E ⇒rm -E ⇒rm -(E) ⇒rm -(E+E) ⇒rm -(E+id) ⇒rm -(id+id)

• We will see that top-down parsers try to find the left-most derivation of the given source program.
• We will see that bottom-up parsers try to find the right-most derivation of the given source program in reverse order.
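
A left-most derivation can be replayed mechanically: at each step, the first non-terminal of the sentential form is replaced by the right-hand side of a chosen production. The sketch below uses hypothetical helper names (`leftmost_step`, `derive_leftmost`) and represents sentential forms as lists of symbols.

```python
def leftmost_step(form, nonterminals, lhs, rhs):
    """Replace the left-most non-terminal of form (it must be lhs) by rhs."""
    for i, sym in enumerate(form):
        if sym in nonterminals:
            if sym != lhs:
                raise ValueError(f"left-most non-terminal is {sym}, not {lhs}")
            return form[:i] + rhs + form[i + 1:]
    raise ValueError("no non-terminal left to replace")


def derive_leftmost(start, nonterminals, steps):
    """Apply the productions in steps, always at the left-most non-terminal."""
    forms = [[start]]
    for lhs, rhs in steps:
        forms.append(leftmost_step(forms[-1], nonterminals, lhs, rhs))
    return [" ".join(f) for f in forms]


# Productions used, in order: E → -E, E → (E), E → E+E, E → id, E → id
steps = [("E", ["-", "E"]), ("E", ["(", "E", ")"]), ("E", ["E", "+", "E"]),
         ("E", ["id"]), ("E", ["id"])]
derivation = derive_leftmost("E", {"E"}, steps)
```

Scanning from the right end instead of the left would give the corresponding right-most derivation.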

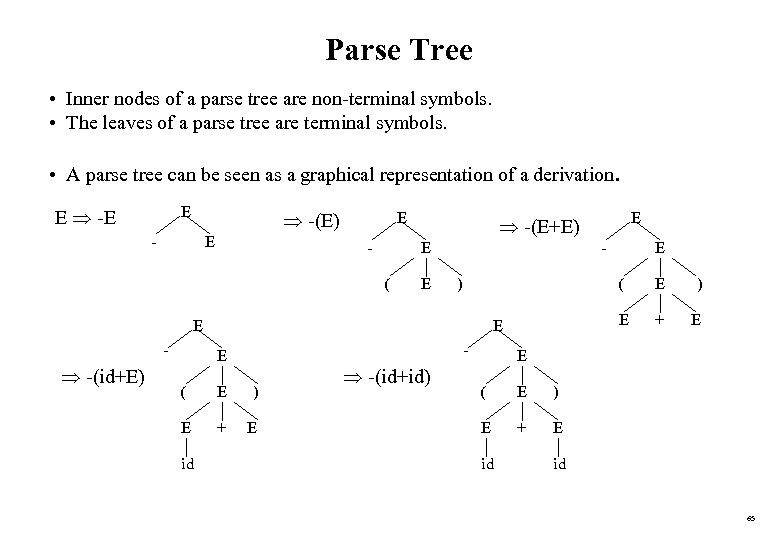

Parse Tree

• Inner nodes of a parse tree are non-terminal symbols.
• The leaves of a parse tree are terminal symbols.
• A parse tree can be seen as a graphical representation of a derivation.

The parse tree grows with each step of the derivation (tree diagrams omitted):
    E ⇒ -E ⇒ -(E) ⇒ -(E+E) ⇒ -(id+E) ⇒ -(id+id)

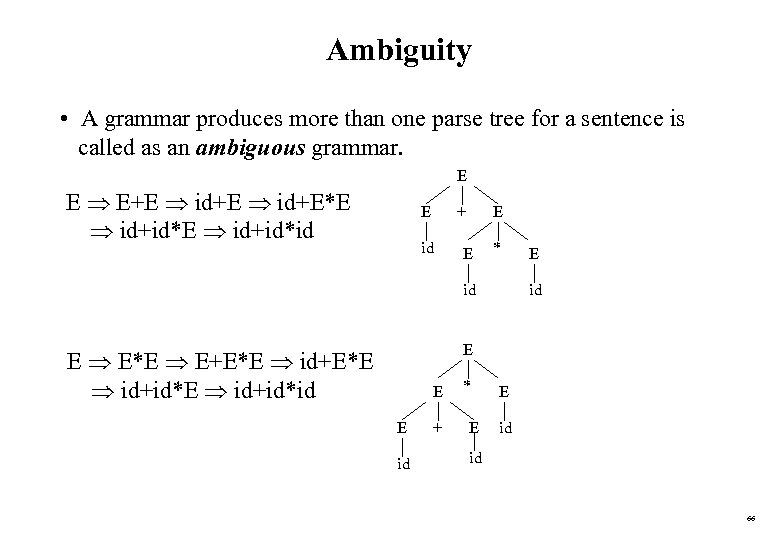

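Ambiguity

• A grammar that produces more than one parse tree for a sentence is called an ambiguous grammar.

    E ⇒ E+E ⇒ id+E ⇒ id+E*E ⇒ id+id*E ⇒ id+id*id
        (parse tree with + at the root; the right operand is the subtree for id*id)

    E ⇒ E*E ⇒ E+E*E ⇒ id+E*E ⇒ id+id*E ⇒ id+id*id
        (parse tree with * at the root; the left operand is the subtree for id+id)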
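
Ambiguity can be checked for a given sentence by counting its parse trees. The sketch below does this for the restricted grammar E → E+E | E*E | id (a subset of the example grammar, chosen to keep the code short); the function name `count_parse_trees` is hypothetical.

```python
from functools import lru_cache


def count_parse_trees(tokens):
    """Count the parse trees of tokens under E → E+E | E*E | id."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(lo, hi):                       # trees deriving tokens[lo:hi]
        if hi - lo == 1:
            return 1 if tokens[lo] == "id" else 0
        total = 0
        for k in range(lo + 1, hi - 1):      # k: operator at the tree root
            if tokens[k] in ("+", "*"):
                total += count(lo, k) * count(k + 1, hi)
        return total

    return count(0, len(tokens))


trees = count_parse_trees("id + id * id".split())
```

A count greater than 1 for any sentence shows that the grammar is ambiguous; here `trees` is 2, one tree per choice of root operator.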

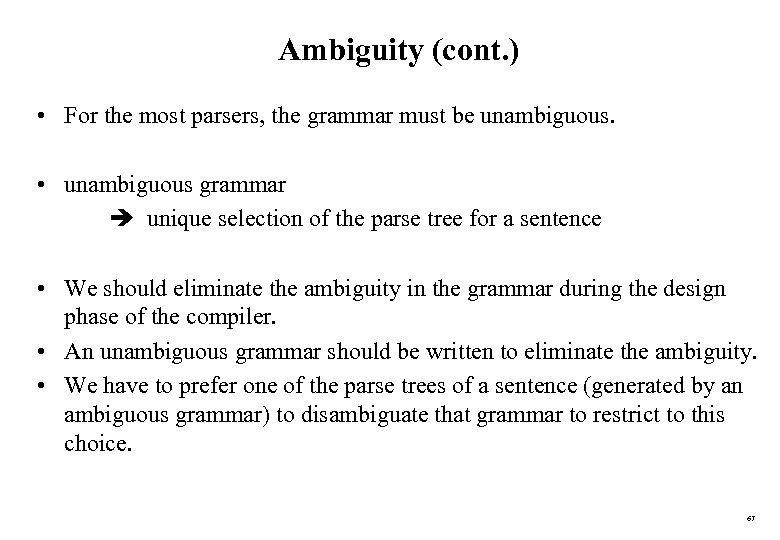

Ambiguity (cont.)

• For most parsers, the grammar must be unambiguous.
• unambiguous grammar ⇒ unique selection of the parse tree for a sentence
• We should eliminate the ambiguity in the grammar during the design phase of the compiler.
• An unambiguous grammar should be written to eliminate the ambiguity.
• We have to prefer one of the parse trees of a sentence (generated by an ambiguous grammar) and disambiguate the grammar so that it allows only this choice.

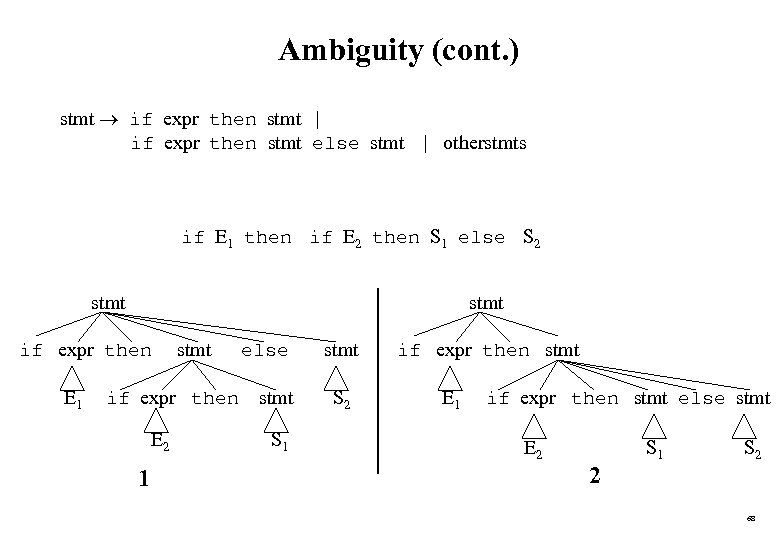

Ambiguity (cont.)

    stmt → if expr then stmt
         | if expr then stmt else stmt
         | otherstmts

The statement if E1 then if E2 then S1 else S2 has two parse trees (diagrams omitted):
  1. The else belongs to the outer if: if E1 then (if E2 then S1) else S2
  2. The else belongs to the inner if: if E1 then (if E2 then S1 else S2)

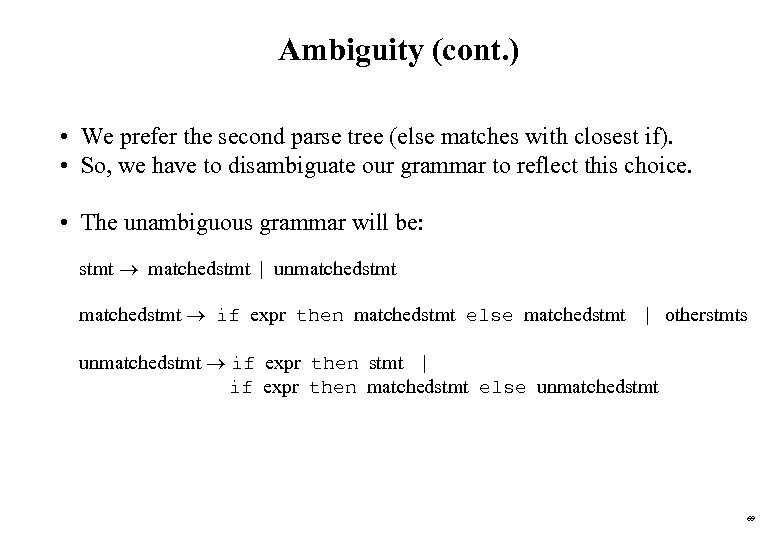

Ambiguity (cont.)

• We prefer the second parse tree (else matches the closest if).
• So we have to disambiguate our grammar to reflect this choice.
• The unambiguous grammar will be:

    stmt → matchedstmt | unmatchedstmt
    matchedstmt → if expr then matchedstmt else matchedstmt | otherstmts
    unmatchedstmt → if expr then stmt
                  | if expr then matchedstmt else unmatchedstmt

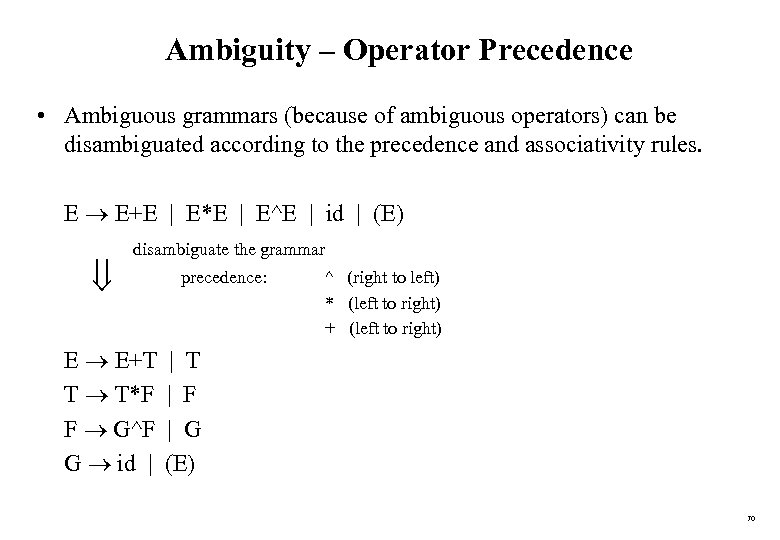

Ambiguity – Operator Precedence

• Ambiguous grammars (because of ambiguous operators) can be disambiguated according to precedence and associativity rules.

    E → E+E | E*E | E^E | id | (E)

  disambiguate the grammar using the precedence (highest first):
    ^ (right to left)
    * (left to right)
    + (left to right)

    E → E+T | T
    T → T*F | F
    F → G^F | G
    G → id | (E)
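
The effect of the stratified grammar can be seen in a small hand-written parser that returns nested tuples. This is only a sketch: the left-recursive rules for + and * are implemented as loops (an implementation choice, since a recursive function cannot call itself first), while the right-recursive rule for ^ is implemented by recursion. All function names are hypothetical, and any token that is not an operator or parenthesis is treated as an id.

```python
def parse(tokens):
    """Parse a token list into nested tuples following E, T, F, G."""
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        pos += 1
        return tokens[pos - 1]

    def parse_e():                     # E → E + T | T  (left-associative)
        node = parse_t()
        while peek() == "+":
            advance()
            node = ("+", node, parse_t())
        return node

    def parse_t():                     # T → T * F | F  (left-associative)
        node = parse_f()
        while peek() == "*":
            advance()
            node = ("*", node, parse_f())
        return node

    def parse_f():                     # F → G ^ F | G  (right-associative)
        node = parse_g()
        if peek() == "^":
            advance()
            return ("^", node, parse_f())
        return node

    def parse_g():                     # G → id | ( E )
        tok = advance() if peek() is not None else None
        if tok == "(":
            node = parse_e()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return node
        if tok is None or tok in {"+", "*", "^", ")"}:
            raise SyntaxError(f"unexpected token {tok!r}")
        return tok

    tree = parse_e()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree
```

For instance, `parse(list("a+b*c"))` groups `b*c` first because * is introduced at a lower level of the grammar than +.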

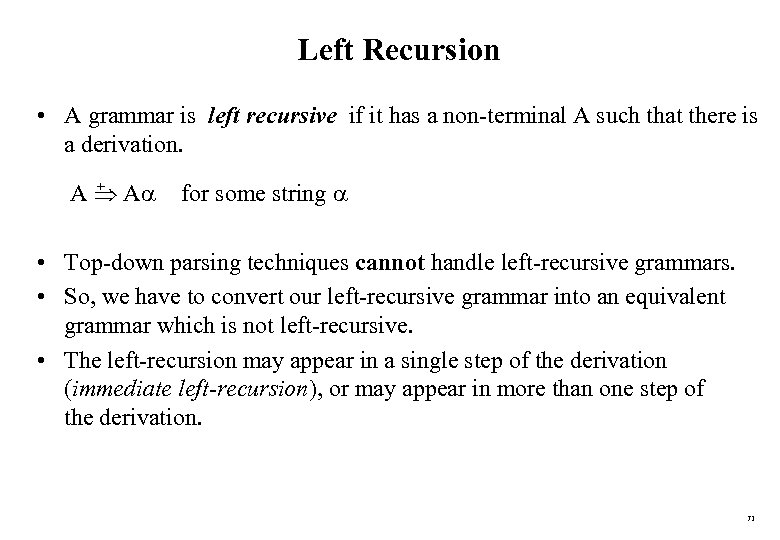

Left Recursion

• A grammar is left-recursive if it has a non-terminal A such that there is a derivation
    A ⇒+ Aα      for some string α
• Top-down parsing techniques cannot handle left-recursive grammars.
• So we have to convert our left-recursive grammar into an equivalent grammar which is not left-recursive.
• The left recursion may appear in a single step of the derivation (immediate left recursion), or may appear in more than one step of the derivation.

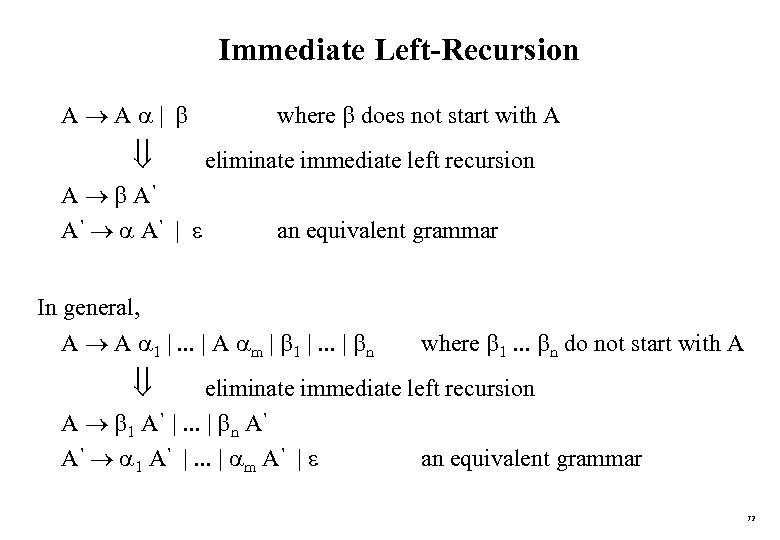

Immediate Left-Recursion

    A → Aα | β            where β does not start with A

      ⇓  eliminate immediate left recursion

    A → βA'
    A' → αA' | ε          an equivalent grammar

In general,

    A → Aα1 | ... | Aαm | β1 | ... | βn      where β1 ... βn do not start with A

      ⇓  eliminate immediate left recursion

    A → β1A' | ... | βnA'
    A' → α1A' | ... | αmA' | ε               an equivalent grammar
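
The general transformation can be sketched as a function over one non-terminal's productions. The function name `eliminate_immediate` and the encoding (right-hand sides as lists of symbols, the empty list standing for ε, the new non-terminal named by appending `'`) are assumptions of this sketch.

```python
def eliminate_immediate(a, productions):
    """Remove immediate left recursion from the productions of a.

    productions: list of right-hand sides (lists of symbols).
    Returns a dict with the productions of a and, if needed, of a'.
    """
    alphas = [rhs[1:] for rhs in productions if rhs and rhs[0] == a]
    betas = [rhs for rhs in productions if not rhs or rhs[0] != a]
    if not alphas:                      # no immediate left recursion
        return {a: productions}
    a2 = a + "'"
    return {
        a: [beta + [a2] for beta in betas],                  # A → βA'
        a2: [alpha + [a2] for alpha in alphas] + [[]],       # A' → αA' | ε
    }


new_e = eliminate_immediate("E", [["E", "+", "T"], ["T"]])
new_t = eliminate_immediate("T", [["T", "*", "F"], ["F"]])
new_f = eliminate_immediate("F", [["id"], ["(", "E", ")"]])
```

Applied to E → E+T | T, this yields E → T E' and E' → +T E' | ε; productions without left recursion, such as those of F, are returned unchanged.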

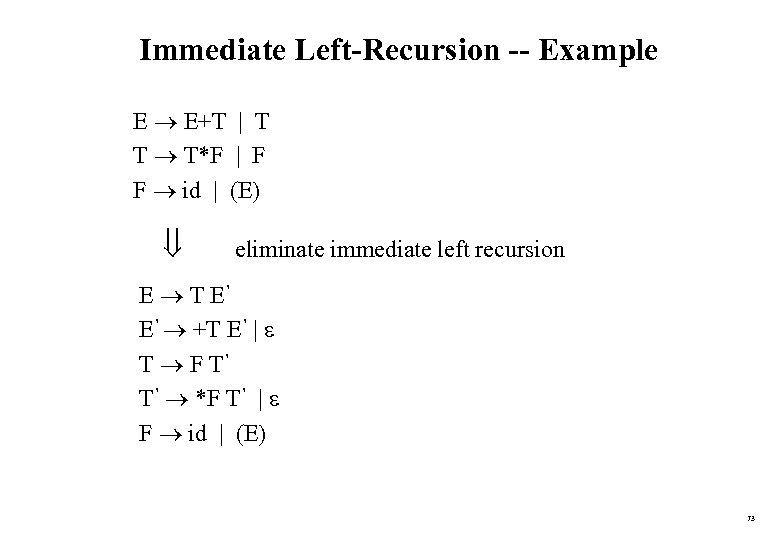

Immediate Left-Recursion -- Example

    E → E+T | T
    T → T*F | F
    F → id | (E)

      ⇓  eliminate immediate left recursion

    E → T E'
    E' → +T E' | ε
    T → F T'
    T' → *F T' | ε
    F → id | (E)

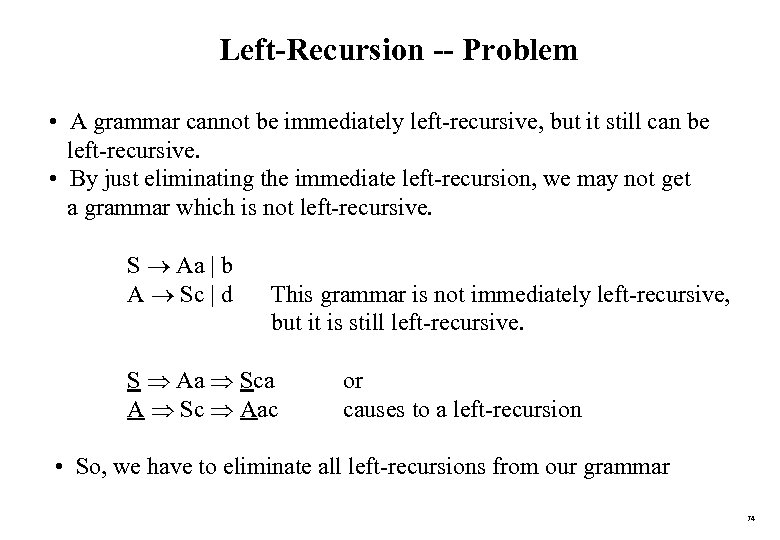

Left-Recursion -- Problem

• A grammar may not be immediately left-recursive and still be left-recursive.
• By just eliminating the immediate left recursion, we may not get a grammar which is not left-recursive.

    S → Aa | b
    A → Sc | d

  This grammar is not immediately left-recursive, but it is still left-recursive:

    S ⇒ Aa ⇒ Sca      or
    A ⇒ Sc ⇒ Aac      causes a left recursion

• So we have to eliminate all left recursion from our grammar.

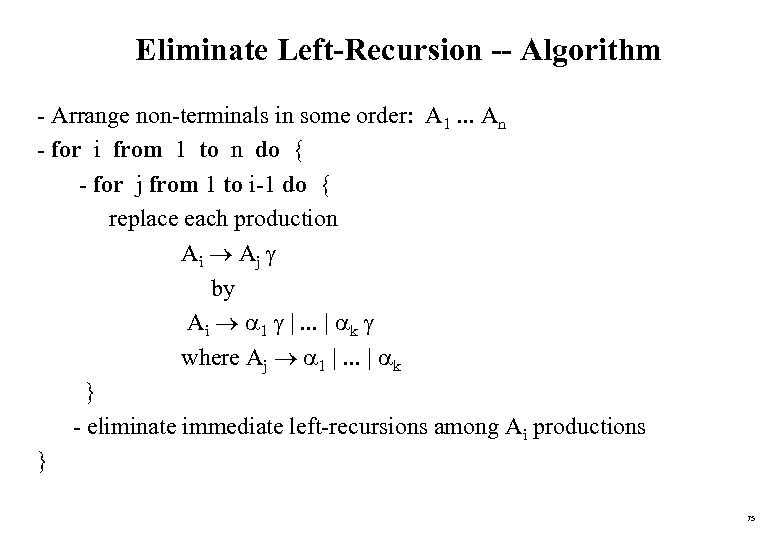

Eliminate Left-Recursion -- Algorithm

    - Arrange non-terminals in some order: A1 ... An
    - for i from 1 to n do {
        - for j from 1 to i-1 do {
            replace each production
                Ai → Aj γ
            by
                Ai → α1 γ | ... | αk γ
            where Aj → α1 | ... | αk
          }
        - eliminate immediate left-recursions among Ai productions
      }

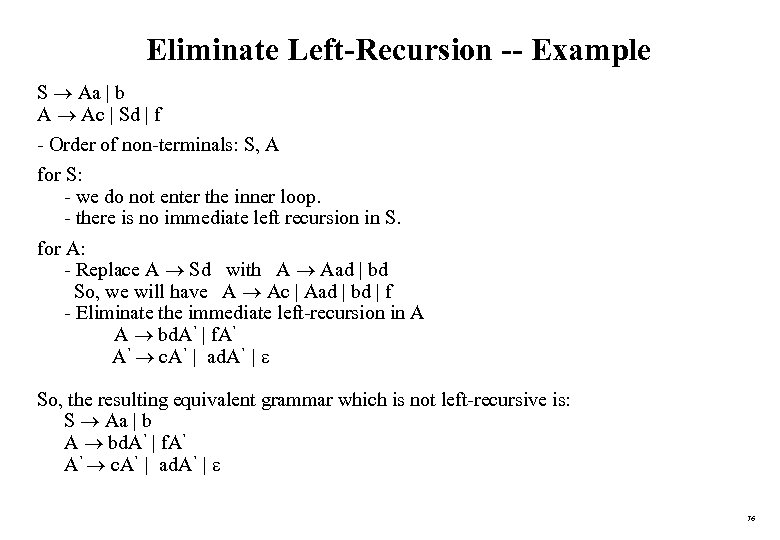

Eliminate Left-Recursion -- Example

    S → Aa | b
    A → Ac | Sd | f

- Order of non-terminals: S, A

for S:
- we do not enter the inner loop.
- there is no immediate left recursion in S.

for A:
- Replace A → Sd with A → Aad | bd
  So, we will have A → Ac | Aad | bd | f
- Eliminate the immediate left-recursion in A

    A → bdA' | fA'
    A' → cA' | adA' | ε

So, the resulting equivalent grammar which is not left-recursive is:

    S → Aa | b
    A → bdA' | fA'
    A' → cA' | adA' | ε

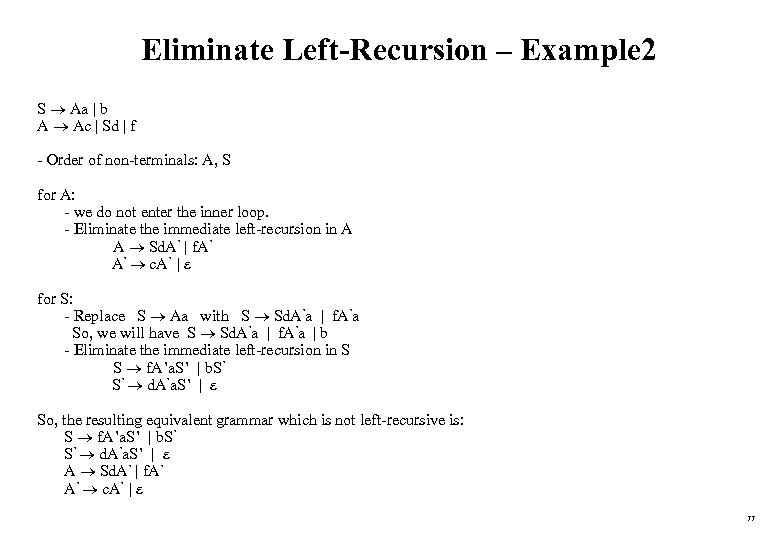

Eliminate Left-Recursion – Example 2

    S → Aa | b
    A → Ac | Sd | f

- Order of non-terminals: A, S

for A:
- we do not enter the inner loop.
- Eliminate the immediate left-recursion in A

    A → SdA' | fA'
    A' → cA' | ε

for S:
- Replace S → Aa with S → SdA'a | fA'a
  So, we will have S → SdA'a | fA'a | b
- Eliminate the immediate left-recursion in S

    S → fA'aS' | bS'
    S' → dA'aS' | ε

So, the resulting equivalent grammar which is not left-recursive is:

    S → fA'aS' | bS'
    S' → dA'aS' | ε
    A → SdA' | fA'
    A' → cA' | ε
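
The general algorithm can be sketched as follows, and run with both orderings of the grammar S → Aa | b, A → Ac | Sd | f. The representation (dict of lists, empty list for ε), the function name, and the primed names for new non-terminals are assumptions made for this sketch. As with the textbook algorithm, it is only expected to work when the input grammar has no cycles and no ε-productions.

```python
def eliminate_left_recursion(grammar, order):
    """Eliminate all left recursion, processing non-terminals in `order`."""
    g = {nt: [list(alt) for alt in alts] for nt, alts in grammar.items()}
    for i, ai in enumerate(order):
        # Substitute earlier non-terminals that appear leftmost in Ai's alternatives.
        for aj in order[:i]:
            expanded = []
            for alt in g[ai]:
                if alt[:1] == [aj]:
                    expanded += [delta + alt[1:] for delta in g[aj]]
                else:
                    expanded.append(alt)
            g[ai] = expanded
        # Eliminate immediate left recursion among the Ai productions.
        alphas = [alt[1:] for alt in g[ai] if alt[:1] == [ai]]
        if alphas:
            betas = [alt for alt in g[ai] if alt[:1] != [ai]]
            new = ai + "'"               # assumed to be an unused name
            g[ai] = [beta + [new] for beta in betas]
            g[new] = [alpha + [new] for alpha in alphas] + [[]]
    return g


grammar = {
    "S": [["A", "a"], ["b"]],
    "A": [["A", "c"], ["S", "d"], ["f"]],
}
for order in (["S", "A"], ["A", "S"]):
    print("order:", order)
    for head, alts in eliminate_left_recursion(grammar, order).items():
        print("  ", head, "->", " | ".join(" ".join(alt) or "epsilon" for alt in alts))
```

The two orderings give different but equivalent grammars, which is why the choice of order in the first step of the algorithm matters for the shape of the result but not for its correctness.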

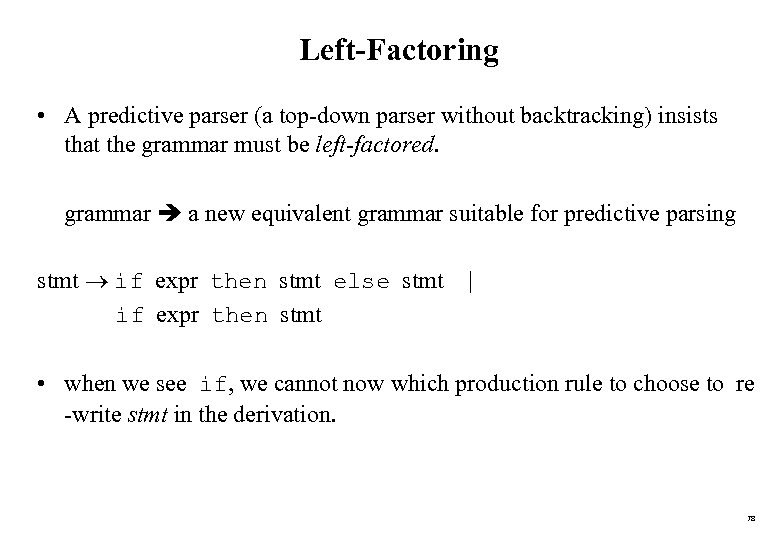

Left-Factoring

• A predictive parser (a top-down parser without backtracking) insists that the grammar must be left-factored.

    grammar → a new equivalent grammar suitable for predictive parsing

    stmt → if expr then stmt else stmt
         | if expr then stmt

• When we see if, we cannot know which production rule to choose to rewrite stmt in the derivation.

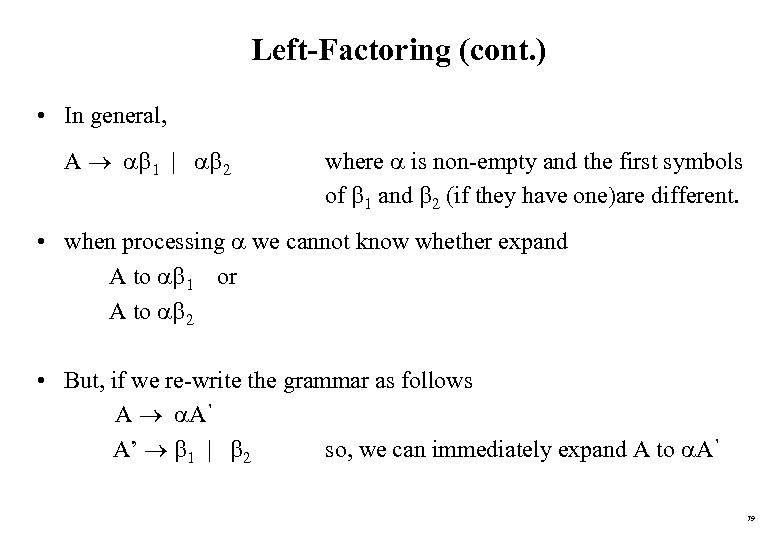

Left-Factoring (cont.)

• In general,

    A → αβ1 | αβ2

  where α is non-empty and the first symbols of β1 and β2 (if they have one) are different.
• When processing α we cannot know whether to expand A to αβ1 or A to αβ2.
• But, if we re-write the grammar as follows

    A → αA'
    A' → β1 | β2

  we can immediately expand A to αA'.

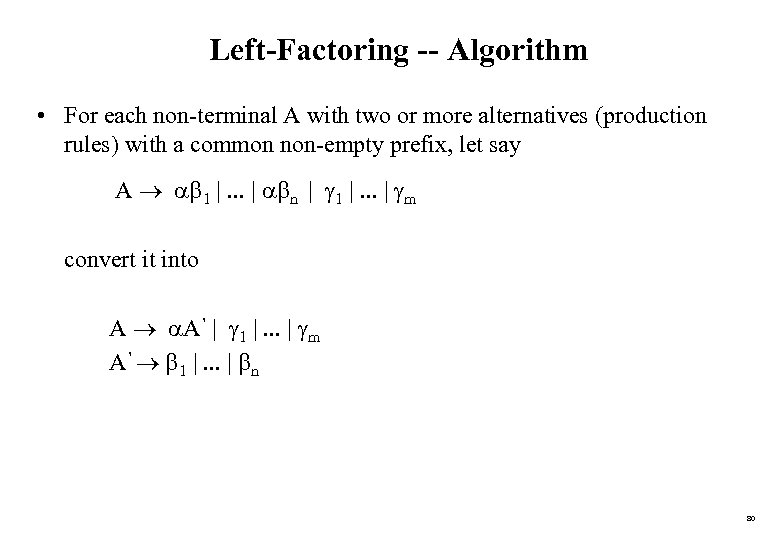

Left-Factoring -- Algorithm

• For each non-terminal A with two or more alternatives (production rules) with a common non-empty prefix, let us say

    A → αβ1 | ... | αβn | γ1 | ... | γm

  convert it into

    A → αA' | γ1 | ... | γm
    A' → β1 | ... | βn

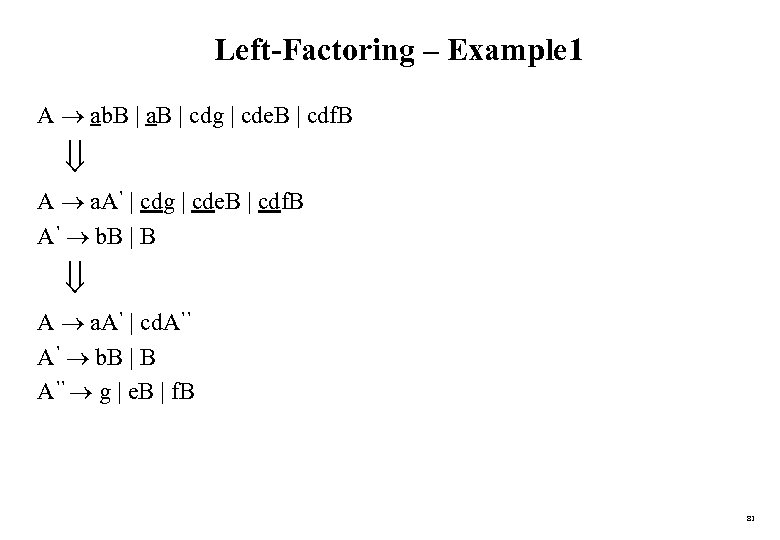

Left-Factoring – Example 1

    A → abB | aB | cdg | cdeB | cdfB

⇓

    A → aA' | cdg | cdeB | cdfB
    A' → bB | B

⇓

    A → aA' | cdA''
    A' → bB | B
    A'' → g | eB | fB
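
A possible sketch of the left-factoring algorithm is shown below, applied to A → abB | aB | cdg | cdeB | cdfB. The grammar representation, the function names, and the strategy of factoring the first alternative that shares a prefix (using the longest prefix it shares with a later alternative) are assumptions of this sketch; other orders of factoring give an equivalent grammar with differently named non-terminals.

```python
def common_prefix(x, y):
    """Longest common prefix of two symbol lists."""
    n = 0
    while n < len(x) and n < len(y) and x[n] == y[n]:
        n += 1
    return x[:n]


def left_factor(grammar):
    """Repeatedly factor out common prefixes until none remain."""
    g = {nt: [list(alt) for alt in alts] for nt, alts in grammar.items()}
    work = list(g)
    while work:
        a = work.pop(0)
        alts, best = g[a], []
        for i in range(len(alts)):
            for j in range(i + 1, len(alts)):
                p = common_prefix(alts[i], alts[j])
                if len(p) > len(best):
                    best = p
            if best:
                break                    # factor the first alternative that shares a prefix
        if not best:
            continue                     # `a` is already left-factored
        new = a + "'"
        while new in g:                  # pick an unused primed name
            new += "'"
        k = len(best)
        g[new] = [alt[k:] for alt in alts if alt[:k] == best]
        rest, placed = [], False
        for alt in alts:
            if alt[:k] != best:
                rest.append(alt)
            elif not placed:             # replace the whole group by one alternative
                rest.append(best + [new])
                placed = True
        g[a] = rest
        work += [a, new]                 # both may need further factoring
    return g


example = {"A": [["a", "b", "B"], ["a", "B"], ["c", "d", "g"],
                 ["c", "d", "e", "B"], ["c", "d", "f", "B"]]}
factored = left_factor(example)
for head, alts in factored.items():
    print(head, "->", " | ".join(" ".join(alt) or "epsilon" for alt in alts))
```

With this factoring order the output matches the example: A → aA' | cdA'', A' → bB | B, A'' → g | eB | fB.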

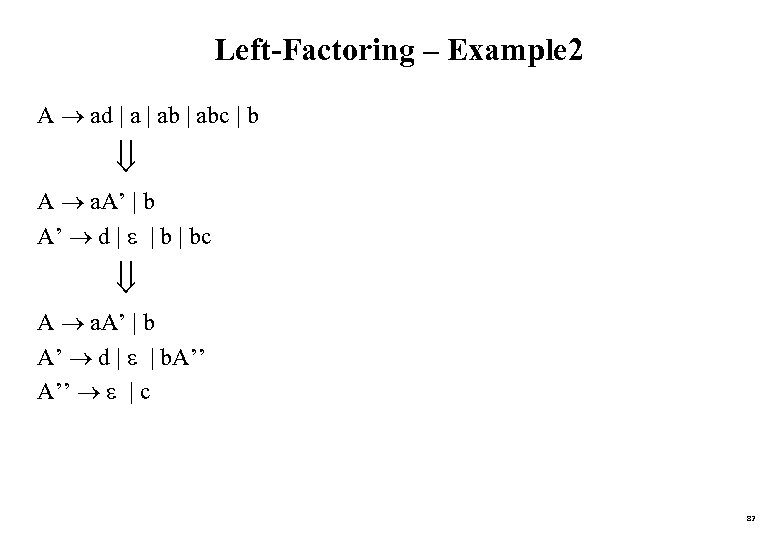

Left-Factoring – Example 2

    A → ad | a | ab | abc | b

⇓

    A → aA' | b
    A' → d | ε | b | bc

⇓

    A → aA' | b
    A' → d | ε | bA''
    A'' → ε | c

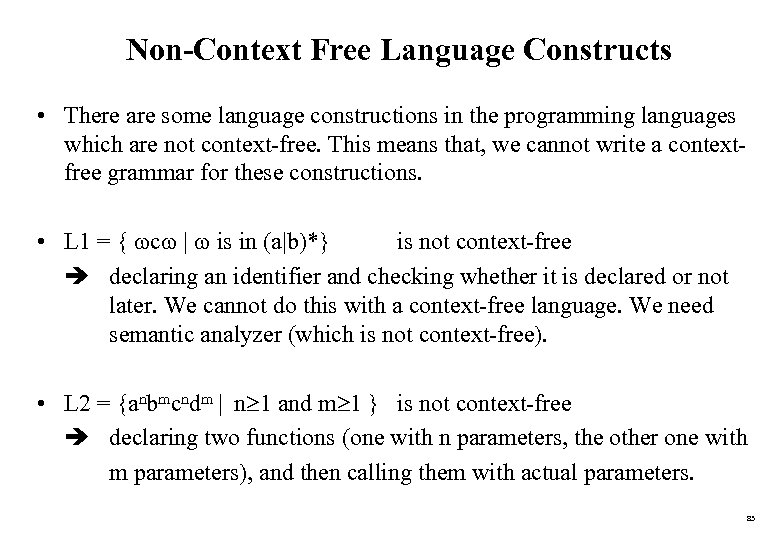

Non-Context Free Language Constructs

• There are some language constructions in programming languages which are not context-free. This means that we cannot write a context-free grammar for these constructions.
• L1 = { ωcω | ω is in (a|b)* } is not context-free
  – declaring an identifier and checking whether it is declared or not later. We cannot do this with a context-free language. We need a semantic analyzer (which is not context-free).
• L2 = { a^n b^m c^n d^m | n ≥ 1 and m ≥ 1 } is not context-free
  – declaring two functions (one with n parameters, the other one with m parameters), and then calling them with actual parameters.

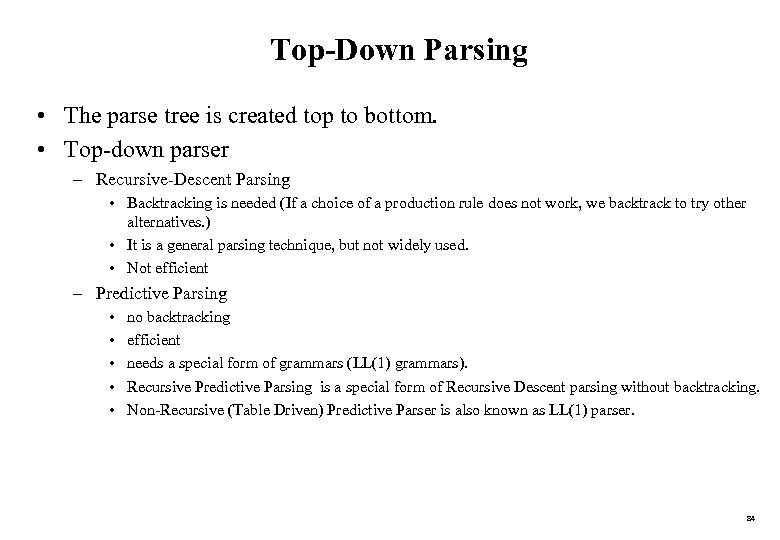

Top-Down Parsing

• The parse tree is created top to bottom.
• Top-down parser
  – Recursive-Descent Parsing
    • Backtracking is needed (if a choice of a production rule does not work, we backtrack to try other alternatives).
    • It is a general parsing technique, but not widely used.
    • Not efficient
  – Predictive Parsing
    • no backtracking
    • efficient
    • needs a special form of grammars (LL(1) grammars).
    • Recursive Predictive Parsing is a special form of Recursive Descent parsing without backtracking.
    • Non-Recursive (Table Driven) Predictive Parser is also known as LL(1) parser.

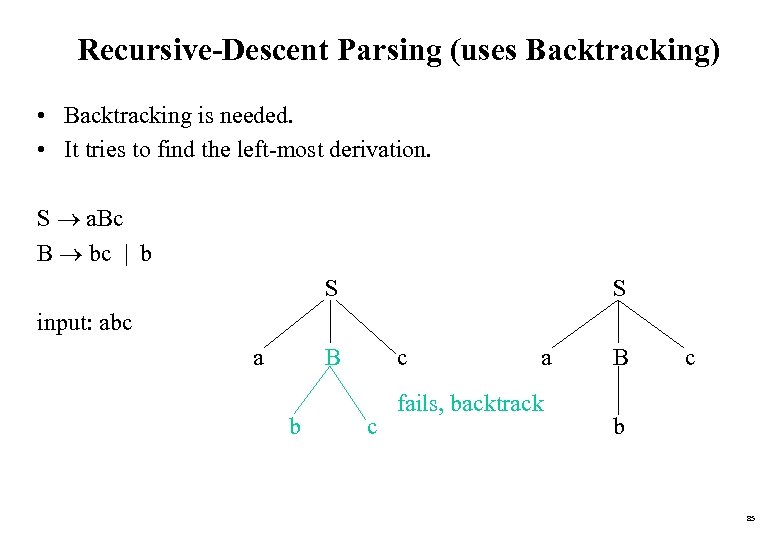

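Recursive-Descent Parsing (uses Backtracking)

• Backtracking is needed.
• It tries to find the left-most derivation.

    S → aBc
    B → bc | b

input: abc

• The parser first expands B with B → bc. This consumes b and c, leaving no input for the final c of S → aBc, so the attempt fails and the parser backtracks.
• It then tries B → b, after which the final c matches and the parse succeeds.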
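
The backtracking behaviour can be reproduced with a small generator-based recognizer for the grammar S → aBc, B → bc | b. This is a sketch under assumptions: terminals are single characters, the names `parse`, `accepts` and `trace` are invented here, and the grammar must not be left-recursive or the recursion would not terminate.

```python
GRAMMAR = {
    "S": [["a", "B", "c"]],
    "B": [["b", "c"], ["b"]],
}


def parse(symbols, text, pos, trace):
    """Yield every input position reachable after matching `symbols` from `pos`."""
    if not symbols:
        yield pos
        return
    first, rest = symbols[0], symbols[1:]
    if first in GRAMMAR:
        for alt in GRAMMAR[first]:       # try alternatives in order
            trace.append(f"try {first} -> {' '.join(alt)}")
            for mid in parse(alt, text, pos, trace):
                # If the rest cannot be matched from `mid`, the loop simply
                # moves on to the next possibility: this is the backtracking.
                yield from parse(rest, text, mid, trace)
    elif pos < len(text) and text[pos] == first:
        yield from parse(rest, text, pos + 1, trace)


def accepts(text):
    """Return (accepted?, list of productions tried)."""
    trace = []
    ok = any(end == len(text) for end in parse(["S"], text, 0, trace))
    return ok, trace


print(accepts("abc"))
```

For the input abc the trace lists B → b c before B → b, which mirrors the failed first attempt and the successful second one.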

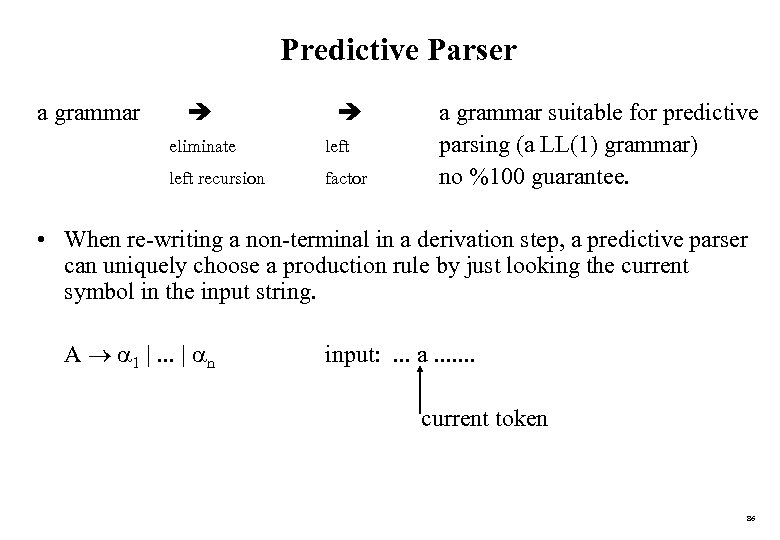

Predictive Parser

    a grammar → (eliminate left recursion, left factor) → a grammar suitable for predictive parsing (an LL(1) grammar); there is no 100% guarantee.

• When re-writing a non-terminal in a derivation step, a predictive parser can uniquely choose a production rule by just looking at the current symbol in the input string.

    A → α1 | ... | αn        input: ... a ...    (a is the current token)

Predictive Parser (example)

    stmt → if ...
         | while ...
         | begin ...
         | for ...

• When we are trying to rewrite the non-terminal stmt, if the current token is if we have to choose the first production rule.
• When we are trying to rewrite the non-terminal stmt, we can uniquely choose the production rule by just looking at the current token.
• Even after we eliminate the left recursion in a grammar and left factor it, the result may still not be suitable for predictive parsing (it may not be an LL(1) grammar).

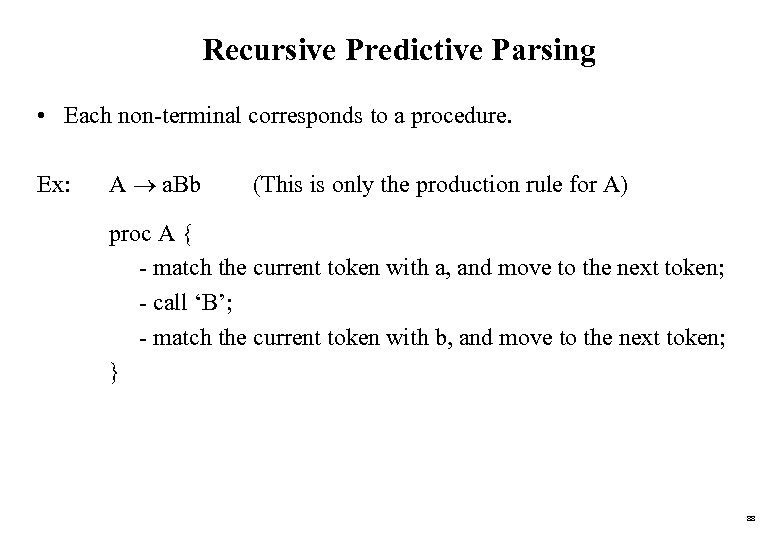

Recursive Predictive Parsing

• Each non-terminal corresponds to a procedure.

Ex: A → aBb (this is the only production rule for A)

    proc A {
      - match the current token with a, and move to the next token;
      - call 'B';
      - match the current token with b, and move to the next token;
    }

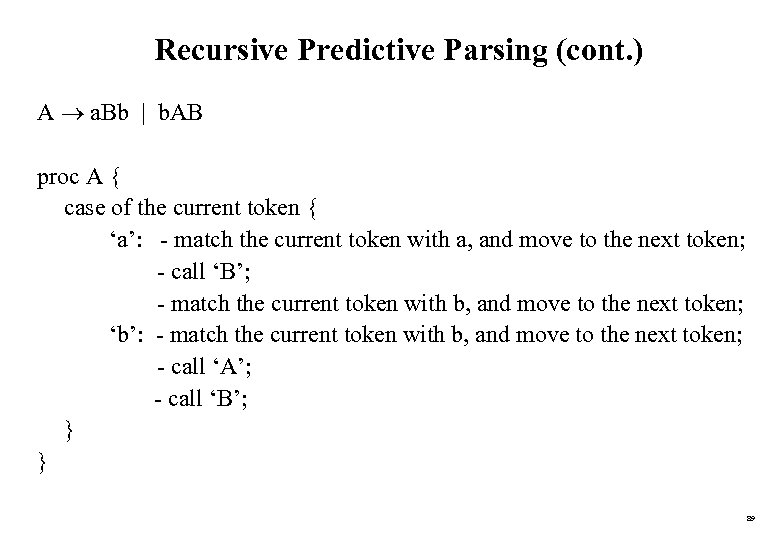

Recursive Predictive Parsing (cont.)

    A → aBb | bAB

    proc A {
      case of the current token {
        'a': - match the current token with a, and move to the next token;
             - call 'B';
             - match the current token with b, and move to the next token;
        'b': - match the current token with b, and move to the next token;
             - call 'A';
             - call 'B';
      }
    }

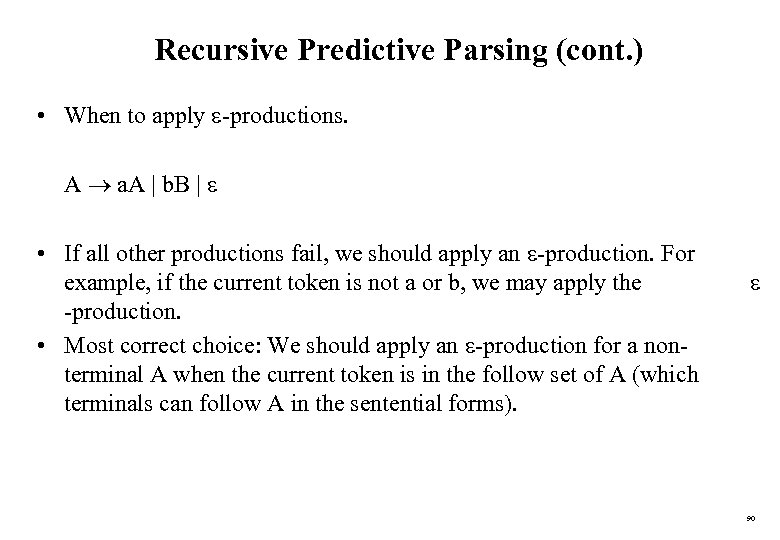

Recursive Predictive Parsing (cont.)

• When to apply ε-productions.

    A → aA | bB | ε

• If all other productions fail, we should apply an ε-production. For example, if the current token is not a or b, we may apply the ε-production.
• Most correct choice: We should apply an ε-production for a non-terminal A when the current token is in the follow set of A (the terminals that can follow A in the sentential forms).
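
The follow set can be computed by a fixed-point iteration together with the first sets. The sketch below is illustrative only: the grammar A → aBe | cBd | C, B → bB | ε, C → f is chosen here as a small test case, the empty string is used as an internal marker for ε, and the function names are hypothetical.

```python
EPS = ""   # marker for epsilon inside FIRST sets (an assumption of this sketch)


def first_of(seq, first, grammar):
    """FIRST of a symbol sequence, given the current FIRST sets of the non-terminals."""
    out = set()
    for sym in seq:
        f = first[sym] if sym in grammar else {sym}
        out |= f - {EPS}
        if EPS not in f:
            return out
    out.add(EPS)                         # every symbol can vanish, or seq is empty
    return out


def first_follow(grammar, start):
    """Compute FIRST and FOLLOW for every non-terminal by iterating to a fixed point."""
    first = {a: set() for a in grammar}
    follow = {a: set() for a in grammar}
    follow[start].add("$")
    changed = True
    while changed:
        changed = False
        for a, alts in grammar.items():
            for alt in alts:
                new = first_of(alt, first, grammar)
                if not new <= first[a]:
                    first[a] |= new
                    changed = True
                for i, sym in enumerate(alt):
                    if sym not in grammar:
                        continue         # FOLLOW is only defined for non-terminals
                    tail = first_of(alt[i + 1:], first, grammar)
                    add = tail - {EPS}
                    if EPS in tail:      # whatever follows `a` can follow `sym`
                        add |= follow[a]
                    if not add <= follow[sym]:
                        follow[sym] |= add
                        changed = True
    return first, follow


g = {
    "A": [["a", "B", "e"], ["c", "B", "d"], ["C"]],
    "B": [["b", "B"], []],
    "C": [["f"]],
}
first, follow = first_follow(g, "A")
print("FOLLOW(B) =", sorted(follow["B"]))
print("FIRST(C)  =", sorted(first["C"]))
```

In this grammar FOLLOW(B) is {d, e}, so a predictive parser would apply B → ε exactly when the current token is d or e.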

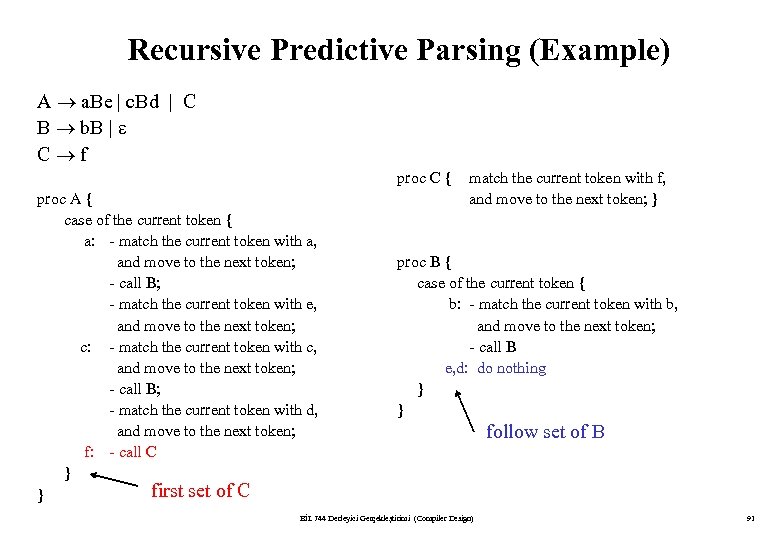

Recursive Predictive Parsing (Example)

    A → aBe | cBd | C
    B → bB | ε
    C → f

    proc A {
      case of the current token {
        a: - match the current token with a, and move to the next token;
           - call B;
           - match the current token with e, and move to the next token;
        c: - match the current token with c, and move to the next token;
           - call B;
           - match the current token with d, and move to the next token;
        f: - call C                  (f is in the first set of C)
      }
    }

    proc B {
      case of the current token {
        b: - match the current token with b, and move to the next token;
           - call B
        e, d: do nothing             (e and d are in the follow set of B)
      }
    }

    proc C {
      match the current token with f, and move to the next token;
    }
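
The three procedures translate almost line for line into a runnable recognizer for A → aBe | cBd | C, B → bB | ε, C → f. The class and helper names, the use of single-character tokens, and the `$` end marker appended to the input are assumptions of this sketch.

```python
class ParseError(Exception):
    pass


class Parser:
    def __init__(self, tokens):
        self.tokens = list(tokens) + ["$"]   # "$" marks the end of the input
        self.pos = 0

    @property
    def current(self):
        return self.tokens[self.pos]

    def match(self, expected):
        """Check the current token and move to the next one."""
        if self.current != expected:
            raise ParseError(f"expected {expected!r}, found {self.current!r}")
        self.pos += 1

    def A(self):
        if self.current == "a":
            self.match("a"); self.B(); self.match("e")
        elif self.current == "c":
            self.match("c"); self.B(); self.match("d")
        elif self.current == "f":            # f is in the first set of C
            self.C()
        else:
            raise ParseError(f"unexpected {self.current!r} in A")

    def B(self):
        if self.current == "b":
            self.match("b"); self.B()
        elif self.current in ("e", "d"):     # follow set of B: apply B -> epsilon
            pass
        else:
            raise ParseError(f"unexpected {self.current!r} in B")

    def C(self):
        self.match("f")


def parses(text):
    """Return True if `text` is derivable from A."""
    parser = Parser(text)
    try:
        parser.A()
        parser.match("$")                    # all input must be consumed
        return True
    except ParseError:
        return False


for sample in ["abbe", "cd", "f", "abd"]:
    print(sample, parses(sample))
```

No procedure ever revisits a token: each decision is made from the current token alone, which is what distinguishes this from the backtracking parser.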

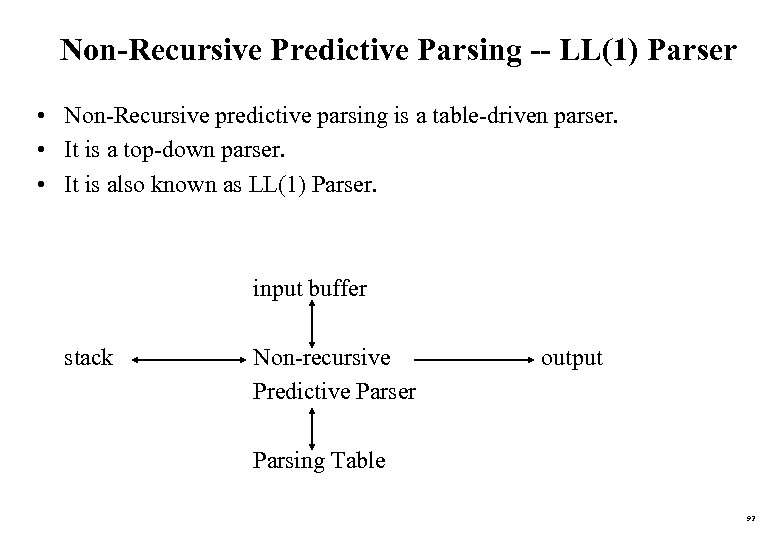

Non-Recursive Predictive Parsing -- LL(1) Parser

• Non-Recursive predictive parsing is a table-driven parser.
• It is a top-down parser.
• It is also known as LL(1) Parser.

Diagram components: input buffer, stack, parsing table, non-recursive predictive parser, output.

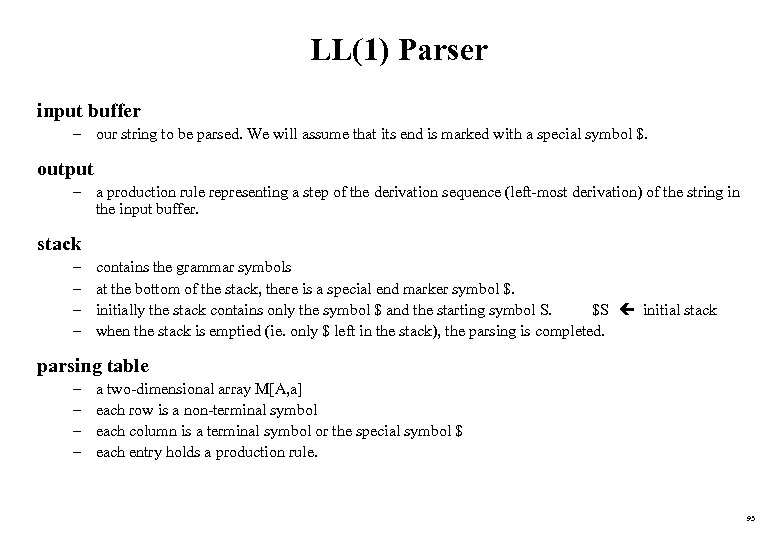

LL(1) Parser

input buffer
  – our string to be parsed. We will assume that its end is marked with a special symbol $.

output
  – a production rule representing a step of the derivation sequence (left-most derivation) of the string in the input buffer.

stack
  – contains the grammar symbols
  – at the bottom of the stack, there is a special end marker symbol $.
  – initially the stack contains only the symbol $ and the starting symbol S ($S is the initial stack).
  – when the stack is emptied (i.e. only $ is left in the stack), the parsing is completed.

parsing table
  – a two-dimensional array M[A, a]
  – each row is a non-terminal symbol
  – each column is a terminal symbol or the special symbol $
  – each entry holds a production rule.

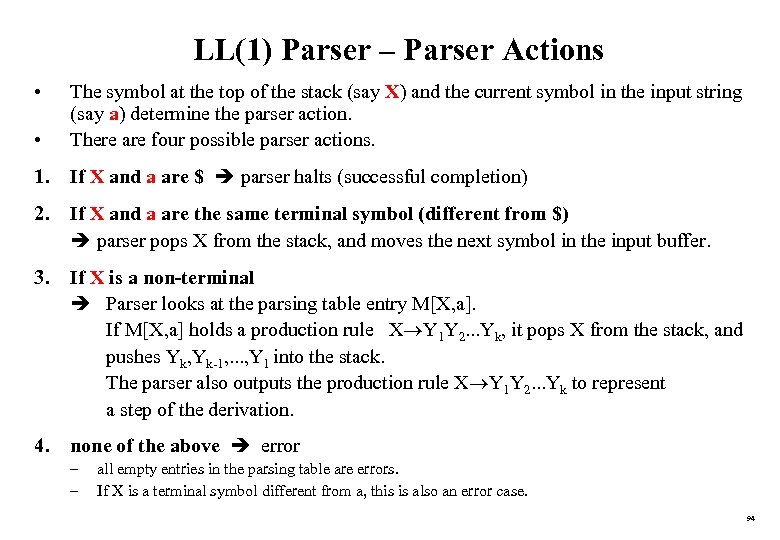

LL(1) Parser – Parser Actions

• The symbol at the top of the stack (say X) and the current symbol in the input string (say a) determine the parser action.
• There are four possible parser actions.

1. If X and a are both $ → the parser halts (successful completion).
2. If X and a are the same terminal symbol (different from $) → the parser pops X from the stack, and moves to the next symbol in the input buffer.
3. If X is a non-terminal → the parser looks at the parsing table entry M[X, a]. If M[X, a] holds a production rule X → Y1 Y2 ... Yk, it pops X from the stack, and pushes Yk, Yk-1, ..., Y1 onto the stack. The parser also outputs the production rule X → Y1 Y2 ... Yk to represent a step of the derivation.
4. None of the above → error
   – all empty entries in the parsing table are errors.
   – if X is a terminal symbol different from a, this is also an error case.
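
The four actions can be sketched as a short driver loop. The grammar S → aBa, B → bB | ε and its hand-written parsing table are assumptions chosen for illustration, as are the names `TABLE`, `NONTERMINALS` and `ll1_parse`; the loop itself follows the actions listed above.

```python
# Parsing table M[X, a] for the assumed grammar  S -> a B a,  B -> b B | epsilon.
# Missing entries are errors.
TABLE = {
    ("S", "a"): ["a", "B", "a"],
    ("B", "b"): ["b", "B"],
    ("B", "a"): [],                      # B -> epsilon, because a is in FOLLOW(B)
}
NONTERMINALS = {"S", "B"}


def ll1_parse(tokens, start="S"):
    """Return the productions of the left-most derivation, or raise ValueError."""
    buffer = list(tokens) + ["$"]        # input buffer with end marker
    stack = ["$", start]                 # initial stack: $S (top is the last element)
    pos = 0
    output = []
    while True:
        x, a = stack[-1], buffer[pos]
        if x == "$" and a == "$":        # action 1: successful completion
            return output
        if x in NONTERMINALS:            # action 3: consult the table
            body = TABLE.get((x, a))
            if body is None:
                raise ValueError(f"no table entry for M[{x}, {a}]")
            stack.pop()
            stack.extend(reversed(body)) # push Yk ... Y1 so that Y1 ends up on top
            rhs = " ".join(body) or "epsilon"
            output.append(f"{x} -> {rhs}")
        elif x == a:                     # action 2: matching terminals
            stack.pop()
            pos += 1
        else:                            # action 4: error
            raise ValueError(f"expected {x!r}, found {a!r}")


for step in ll1_parse("abba"):
    print(step)
```

For the input abba the printed productions are S → a B a, B → b B, B → b B, B → ε, which is the left-most derivation of the string.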

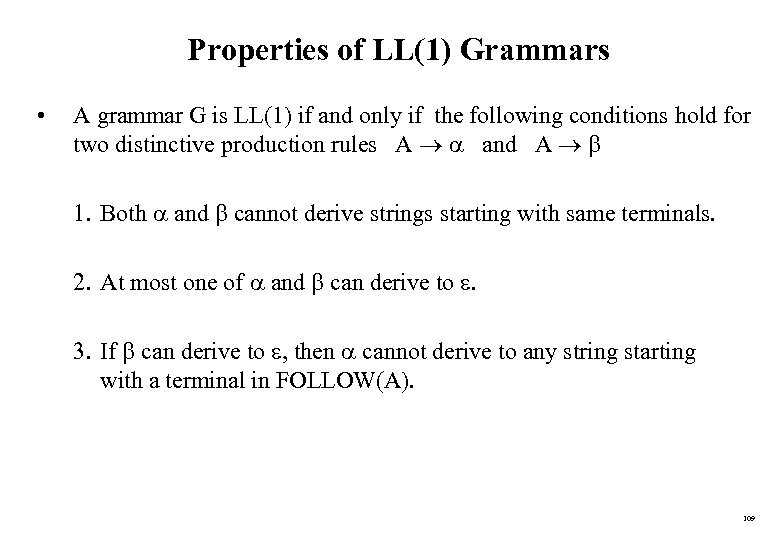

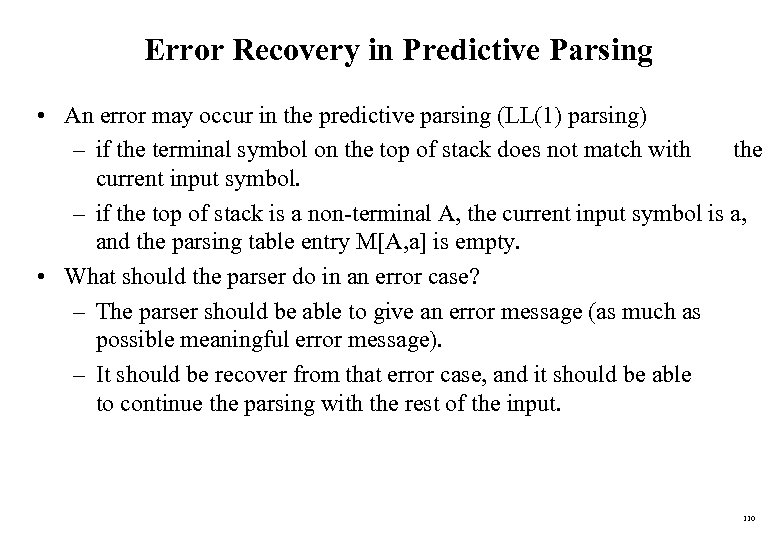

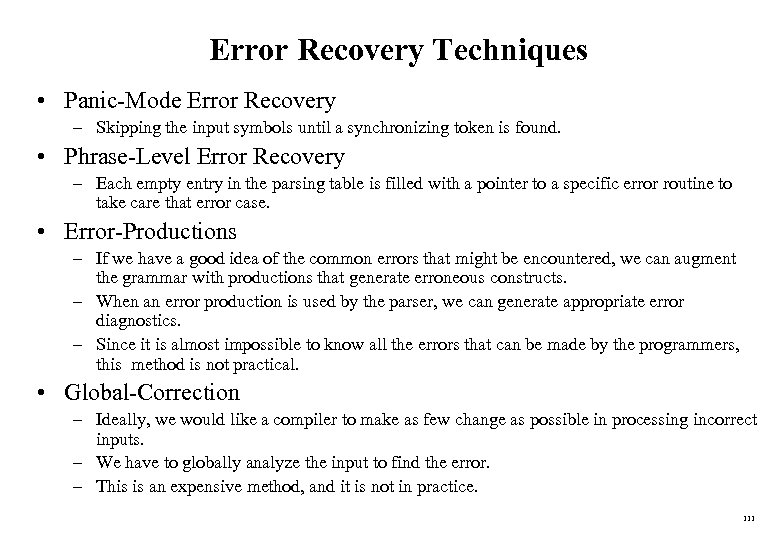

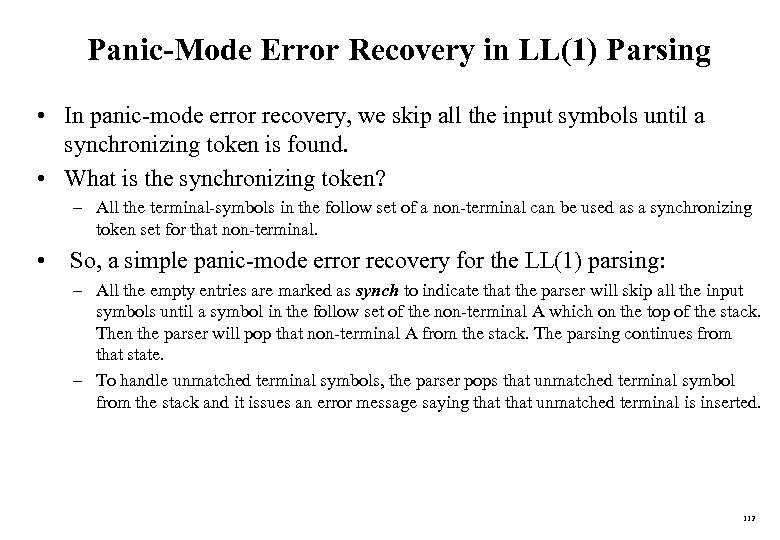

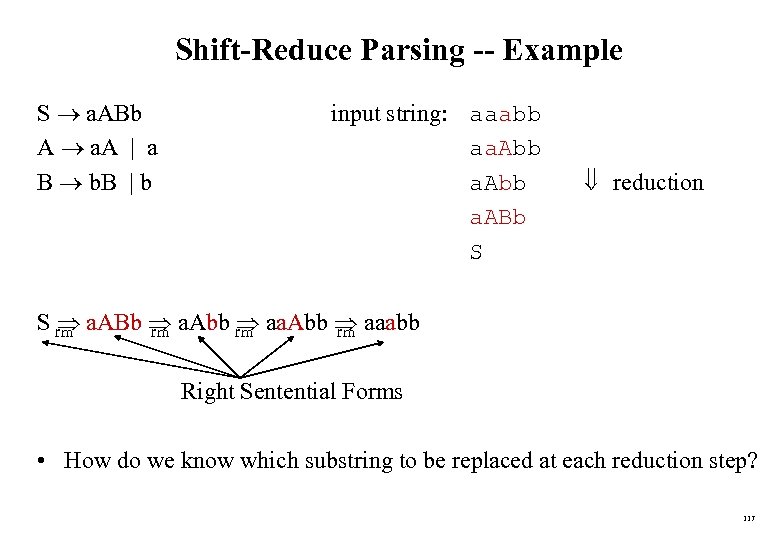

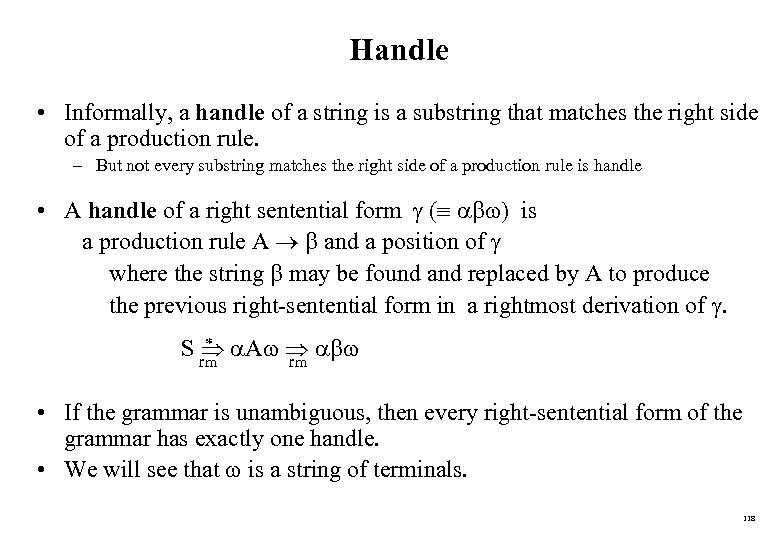

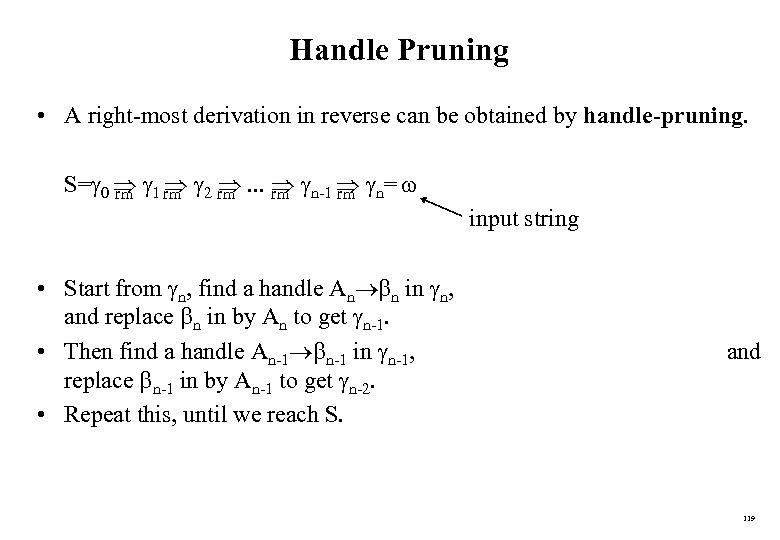

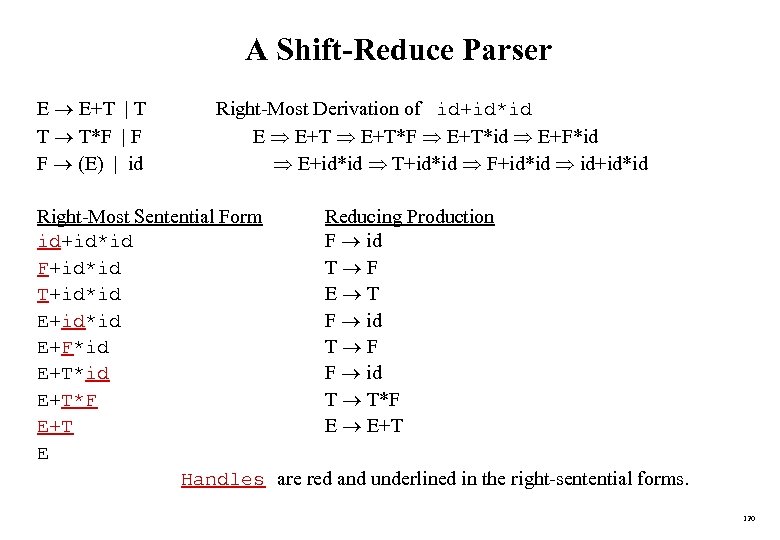

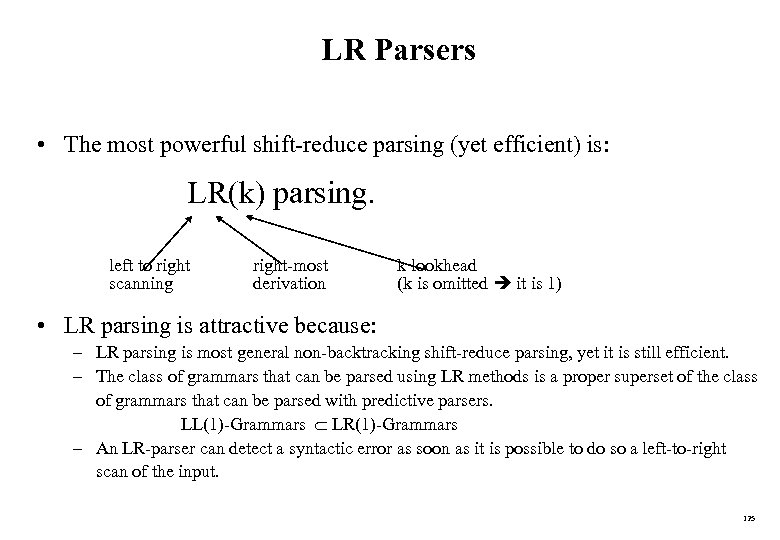

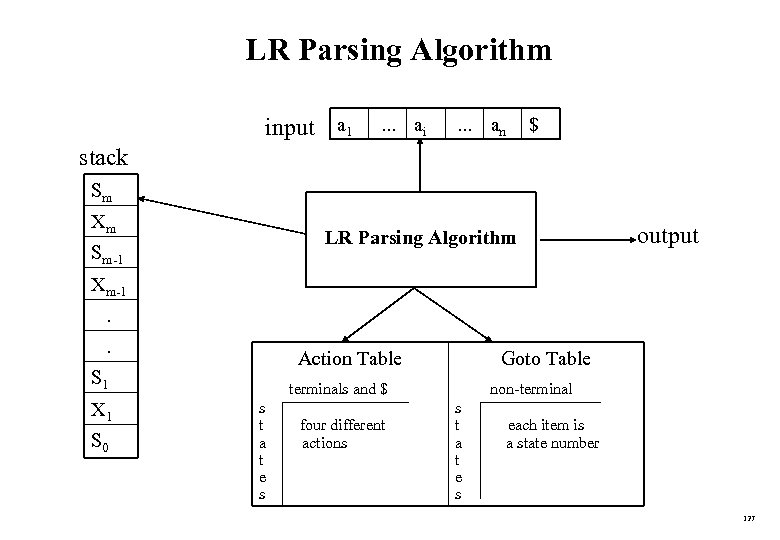

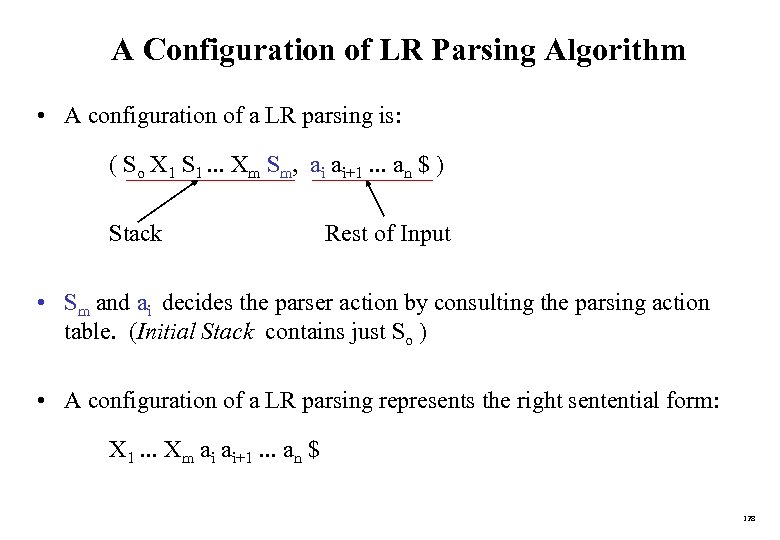
