e7cd31b4ca61aca840bccd13d7cce3e4.ppt

- Number of slides: 37

Comparison of Different Retrieval Models in Content-Based Music Retrieval

曾元顯、江陳威、白恒瑞、蘇珮君

Presented by Yuen-Hsien Tseng, Digital Media Center, National Taiwan Normal University

• Introduction
• Key Melody Extraction
• Retrieval Models
• Demos
• Experiments
• Conclusions

Introduction
• Desire for content-based music retrieval
  – for copyright search
    • industrial interests and economic issues
  – for casual users to locate musical works
    • entertainment, games, education, daily life
  – for researchers to analyze music collections
    • feedback to music composition theory
• Real-world applications
  – Automatic surveillance of TV or radio channels for possible copyright infringement
  – Searching for and buying music via cell phones (Philips)
  – User identification and games (張智星)
    • combining speech and music recognition

Matching Problems at Different Levels
• Audio signal
  – High-fidelity recording
    • search by a recording taken directly from a CD (duplicate signal retrieval)
  – Noisy or low-quality recording
    • search by recordings of radio or TV music captured with cell phones
  – Search for the same tune regardless of the audio rendition
    • different pianos, singers (e.g., 月亮代表我的心 "The Moon Represents My Heart" sung by 陶吉吉), instruments (Pachelbel's Canon on piano vs. violin), orchestras, conductors
• Symbolic level
  – See next slide (the focus of this work)
• Semantic level
  – e.g., search for songs that are happy but andante
  – May need more (manually or automatically derived) metadata

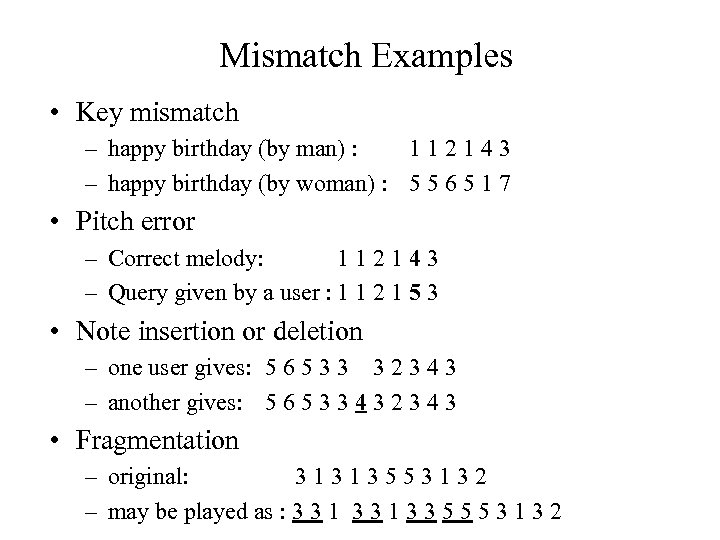

Major Problems
• Vocabulary mismatch: the major cause of failure in IR tasks
  – The terms used to search for documents do not occur in the desired documents (or they match undesired ones)
    • Key mismatch: melodies may be sung in any key
    • Pitch error: incorrect perception of the pitch level
    • Note insertion and deletion: imperfect recall
    • Fragmentation, consolidation, etc.: perception errors or different interpretations
• Query formulation: melody, rhythm, chord, etc.
• Result "browsing": music is for listening, not for reading

Mismatch Examples
• Key mismatch
  – Happy Birthday (sung by a man): 1 1 2 1 4 3
  – Happy Birthday (sung by a woman): 5 5 6 5 1 7
• Pitch error
  – Correct melody: 1 1 2 1 4 3
  – Query given by a user: 1 1 2 1 5 3
• Note insertion or deletion
  – One user gives: 5 6 5 3 3 3 2 3 4 3
  – Another gives: 5 6 5 3 3 4 3 2 3 4 3
• Fragmentation
  – Original: 3 1 3 1 3 5 5 3 1 3 2
  – May be played as: 3 3 1 3 3 5 5 5 3 1 3 2

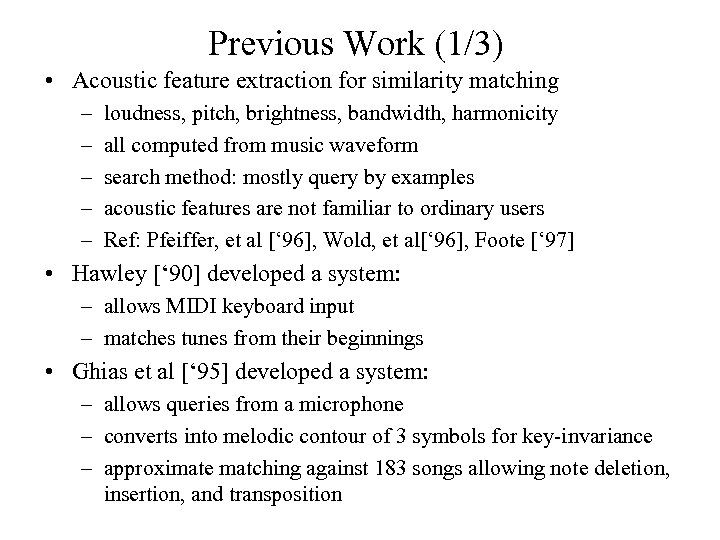

Previous Work (1/3)
• Acoustic feature extraction for similarity matching
  – loudness, pitch, brightness, bandwidth, and harmonicity, all computed from the music waveform
  – search method: mostly query by example
  – acoustic features are not familiar to ordinary users
  – Ref: Pfeiffer et al. ['96], Wold et al. ['96], Foote ['97]
• Hawley ['90] developed a system that:
  – allows MIDI keyboard input
  – matches tunes from their beginnings
• Ghias et al. ['95] developed a system that:
  – allows queries from a microphone
  – converts them into a melodic contour of 3 symbols for key invariance
  – performs approximate matching against 183 songs, allowing note deletion, insertion, and transposition


Previous Work (2/3)
• McNab et al. ['97]: New Zealand DL's MELDEX (MELody inDEX)
  – also allows acoustic input by singing, humming, or playing
  – melody transcription, pitch encoding, approximate matching
  – allows matches by "interval and rhythm", "contour and rhythm", …
  – matching against 9,400 tunes by dynamic programming takes 21 seconds
  – http://www.cs.waikato.ac.nz/~nzdl/meldex
• David Huron ['93]: "Humdrum Toolkit"
  – hundreds of music tools, including a DP matching tool, "simil"
  – http://dactyl.som.ohio-state.edu/Humdrum/
• Huron and Kornstaedt's "Themefinder"
  – allows searches by pitch, interval, contour, etc.
  – http://www.themefinder.org/
• Typke's "Tune Server"
  – Java applet tune recognizer: converts WAV to Parsons code (UDR)
  – http://wwwipd.ira.uka.de/tuneserver/ (over 10,000 tunes)
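Both the 3-symbol contour of Ghias et al. and the Parsons code used by the Tune Server reduce a melody to up/down/repeat steps. The following is a minimal Python sketch. It assumes MIDI note numbers as input and follows the common convention of writing `*` for the first note. The function name `parsons_code` is illustrative.

```python
def parsons_code(pitches):
    """Contour string: '*' for the first note, then U (up), D (down), R (repeat)."""
    symbols = ["*"]
    for prev, cur in zip(pitches, pitches[1:]):
        if cur > prev:
            symbols.append("U")
        elif cur < prev:
            symbols.append("D")
        else:
            symbols.append("R")
    return "".join(symbols)


# Happy Birthday opening in C (C4 C4 D4 C4 F4 E4) and in G (G4 G4 A4 G4 C5 B4).
print(parsons_code([60, 60, 62, 60, 65, 64]))  # *RUDUD
print(parsons_code([67, 67, 69, 67, 72, 71]))  # *RUDUD
# Same tune with F4 sung as G4: the contour does not change.
print(parsons_code([60, 60, 62, 60, 67, 64]))  # *RUDUD
```

The calls illustrate the trade-off. The contour is key-invariant, which is the point of the encoding. However, it is also too coarse to notice a pitch error that keeps the direction of each step.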

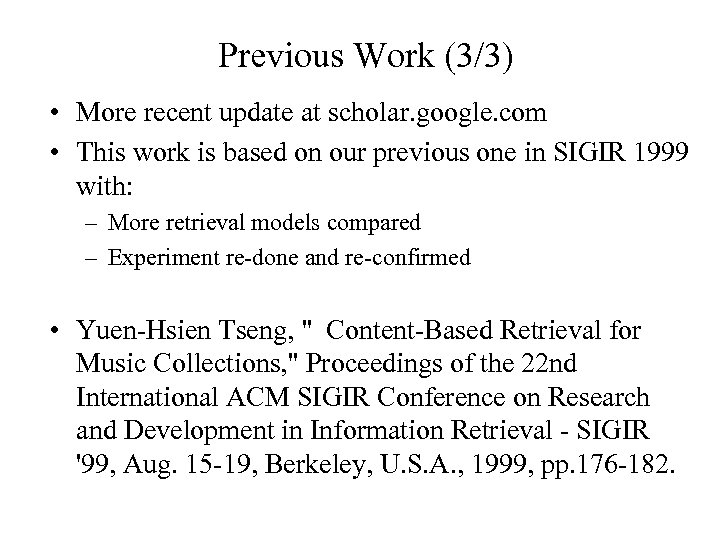

Previous Work (3/3)
• More recent updates at scholar.google.com
• This work is based on our previous work in SIGIR 1999, with:
  – more retrieval models compared
  – the experiment re-done and re-confirmed
• Yuen-Hsien Tseng, "Content-Based Retrieval for Music Collections," Proceedings of the 22nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '99), Aug. 15-19, 1999, Berkeley, U.S.A., pp. 176-182.

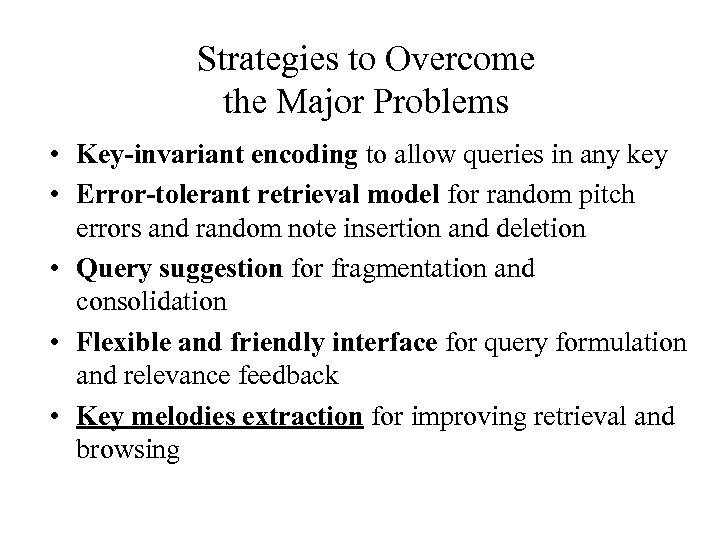

Strategies to Overcome the Major Problems
• Key-invariant encoding to allow queries in any key
• Error-tolerant retrieval model for random pitch errors and random note insertion and deletion
• Query suggestion for fragmentation and consolidation
• Flexible and friendly interface for query formulation and relevance feedback
• Key melody extraction for improving retrieval and browsing

Key Melody Extraction
• Key melodies:
  – are representative fragments of music
  – play the same role as keywords, giving a quick overview of documents
  – are memorable parts that people easily recall
  – meet most user queries
  – reduce response time because there is less data
  – speed up browsing and selection
• Assumption: key melodies are repeated patterns
  – repetition is one of the basic composition rules [Jones, 1974]
• We developed an algorithm that identifies overlapping repetitions
  – so far, no linear-time algorithm is known [Crawford & Iliopoulos, 1998]

Key Melody Extraction: The Algorithm
• Step 1: Convert the input string into a list of 2-note sequences.
• Step 2: Merge adjacent m-notes into (m+1)-notes according to the merge and accept rules until no sequences remain to be merged.
  – Merge rule: two adjacent sequences K1 and K2 can be merged if both of their occurrence frequencies are greater than a threshold.
  – Accept rule: if the occurrence frequency of K1 is greater than the threshold and K1 did not merge with either neighbor, accept K1 as a key melody.
• Step 3: Filter and sort the results according to some criteria.
• Complexity: O(n·m) if looking up an occurrence frequency takes O(1)
  – n: the length of the melody
  – m: the length of the longest repeated pattern

Key Melody Extraction: An Example
Assume the input string "CCCDCCCECC", threshold = 1, separator = X.
• Step 1: create a list of 2-notes
  – L = (CC:5, CD:1, DC:1, CC:5, CE:1, EC:1, CC:5)
• Step 2: merging
  – After the 1st iteration: merge L into L1 = (CCC:2, X:0, CCC:2, X:0); drop: (CC:5, CD:1, DC:1, CC:5, CE:1, EC:1); FinalList: (CC:5)
  – After the 2nd iteration: merge L1 into L2 = (X:0); drop: (X:0, X:0); FinalList: (CC:5, CCC:2)
  – After the 3rd iteration: merge L2 into L3 = ( ); drop: (X:0); FinalList: (CC:5, CCC:2)
• Step 3: filtering
  – the item CC may be removed since it is a substring of CCC
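The three steps can be sketched in Python roughly as follows. This is an illustration under stated assumptions, not the authors' implementation.

- Notes are single characters.
- Step 1 builds all overlapping 2-notes in positional order. For the example this gives nine items.
- Two adjacent m-notes merge into an (m+1)-note by appending the second one's last note.
- Any run of failed merges becomes a single separator, represented here as a hypothetical `SEP` marker.
- Frequencies are counted by scanning the melody and caching the result. This lookup is not O(1), so the sketch does not achieve the complexity quoted above.
- The Step 3 sort order (longest pattern first, then most frequent) is a hypothetical choice.

```python
SEP = None  # hypothetical stand-in for the separator "X"


def occurrences(melody, pattern):
    """Count overlapping occurrences of pattern in melody."""
    return sum(1 for i in range(len(melody) - len(pattern) + 1)
               if melody.startswith(pattern, i))


def extract_key_melodies(melody, threshold=1):
    cache = {}

    def freq(seq):
        if seq not in cache:
            cache[seq] = occurrences(melody, seq)
        return cache[seq]

    # Step 1: overlapping 2-note sequences in positional order.
    current = [melody[i:i + 2] for i in range(len(melody) - 1)]
    accepted = {}
    # Step 2: merge adjacent m-notes into (m+1)-notes until the list is empty.
    while current:
        merged = [False] * len(current)
        nxt = []
        for i in range(len(current) - 1):
            a, b = current[i], current[i + 1]
            if (a is not SEP and b is not SEP
                    and freq(a) > threshold and freq(b) > threshold):
                nxt.append(a + b[-1])            # merge rule
                merged[i] = merged[i + 1] = True
            elif not nxt or nxt[-1] is not SEP:
                nxt.append(SEP)                  # one separator per gap
        for i, seq in enumerate(current):        # accept rule
            if seq is not SEP and not merged[i] and freq(seq) > threshold:
                accepted[seq] = freq(seq)
        current = nxt
    return accepted


def filter_and_sort(accepted):
    """Step 3: drop patterns contained in a longer accepted pattern, then sort."""
    kept = {s: f for s, f in accepted.items()
            if not any(s != t and s in t for t in accepted)}
    return sorted(kept.items(), key=lambda kv: (-len(kv[0]), -kv[1], kv[0]))


accepted = extract_key_melodies("CCCDCCCECC", threshold=1)
print(accepted)                   # {'CC': 5, 'CCC': 2}
print(filter_and_sort(accepted))  # [('CCC', 2)]
```

The list shrinks by at least one item per iteration, so the loop always terminates. Adjacent non-separator items always come from consecutive positions, which is why appending `b[-1]` yields a genuine (m+1)-note substring.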

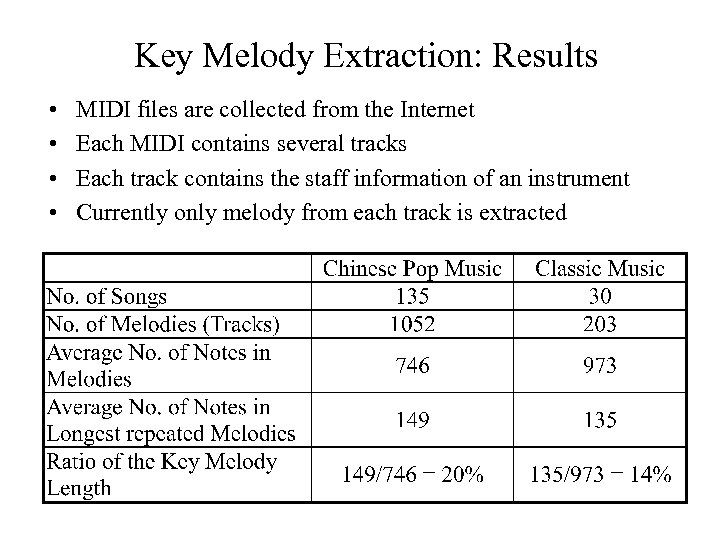

Key Melody Extraction: Results
• MIDI files are collected from the Internet
• Each MIDI file contains several tracks
• Each track contains the staff information of one instrument
• Currently, only the melody of each track is extracted

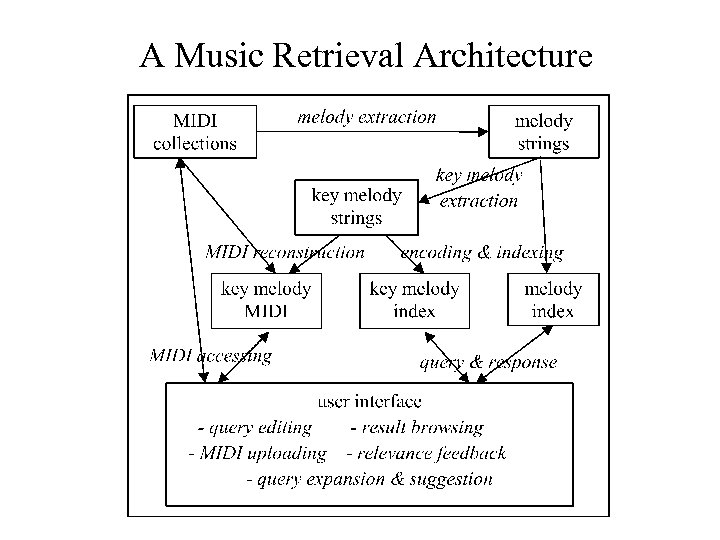

A Music Retrieval Architecture

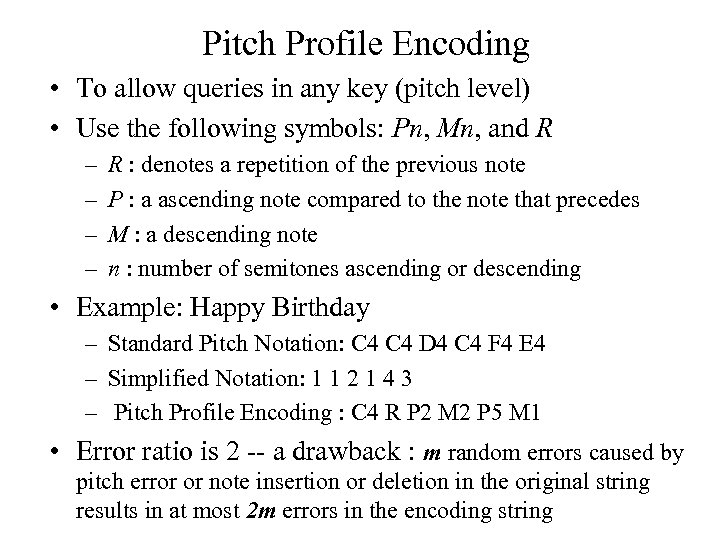

Pitch Profile Encoding
• Allows queries in any key (pitch level)
• Uses the following symbols: Pn, Mn, and R
  – R: a repetition of the previous note
  – P: an ascending note compared to the preceding note
  – M: a descending note
  – n: the number of semitones ascended or descended
• Example: Happy Birthday
  – Standard pitch notation: C4 C4 D4 C4 F4 E4
  – Simplified notation: 1 1 2 1 4 3
  – Pitch profile encoding: C4 R P2 M2 P5 M1
• Error ratio is 2 (a drawback): m random errors caused by pitch errors or note insertions/deletions in the original string result in at most 2m errors in the encoded string
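A minimal Python sketch of the encoding, assuming MIDI note numbers as input (C4 = 60). It leaves out the leading absolute pitch (the "C4" in the example), because that is the key-dependent part. The melodies come from the mismatch examples, reading the woman's "1" as the C above her G. The function name `pitch_profile` is illustrative.

```python
def pitch_profile(midi_pitches):
    """Key-invariant encoding: R = repeat, Pn = up n semitones, Mn = down n semitones."""
    symbols = []
    for prev, cur in zip(midi_pitches, midi_pitches[1:]):
        step = cur - prev
        if step == 0:
            symbols.append("R")
        elif step > 0:
            symbols.append(f"P{step}")
        else:
            symbols.append(f"M{-step}")
    return symbols


man = [60, 60, 62, 60, 65, 64]    # C4 C4 D4 C4 F4 E4   (1 1 2 1 4 3)
woman = [67, 67, 69, 67, 72, 71]  # G4 G4 A4 G4 C5 B4   (5 5 6 5 1 7)
wrong = [60, 60, 62, 60, 67, 64]  # pitch error: F4 sung as G4 (1 1 2 1 5 3)

print(pitch_profile(man))                          # ['R', 'P2', 'M2', 'P5', 'M1']
print(pitch_profile(man) == pitch_profile(woman))  # True
diffs = sum(a != b for a, b in zip(pitch_profile(man), pitch_profile(wrong)))
print(diffs)                                       # 2
```

The last line illustrates the error ratio of 2. A single wrong note changes both the interval into it and the interval out of it (P5 M1 becomes P7 M3).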

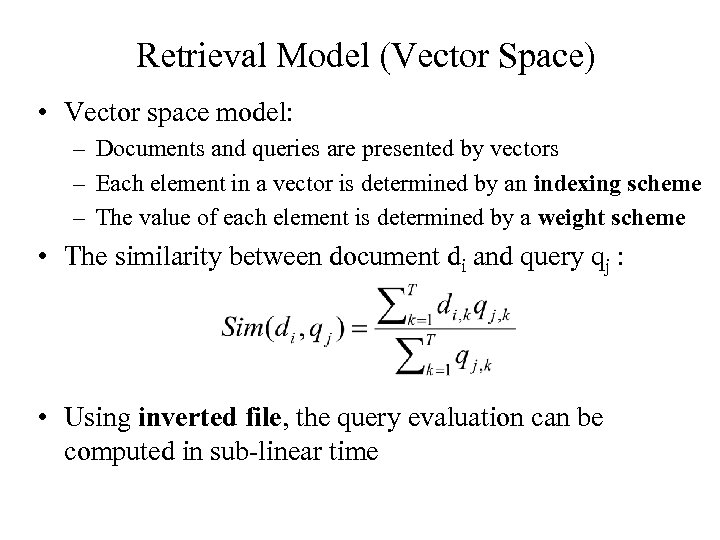

Retrieval Model (Vector Space)
• Vector space model:
  – Documents and queries are represented by vectors
  – Each element of a vector is determined by an indexing scheme
  – The value of each element is determined by a weighting scheme
• The similarity between document d_i and query q_j:
• Using an inverted file, query evaluation can be done in sub-linear time

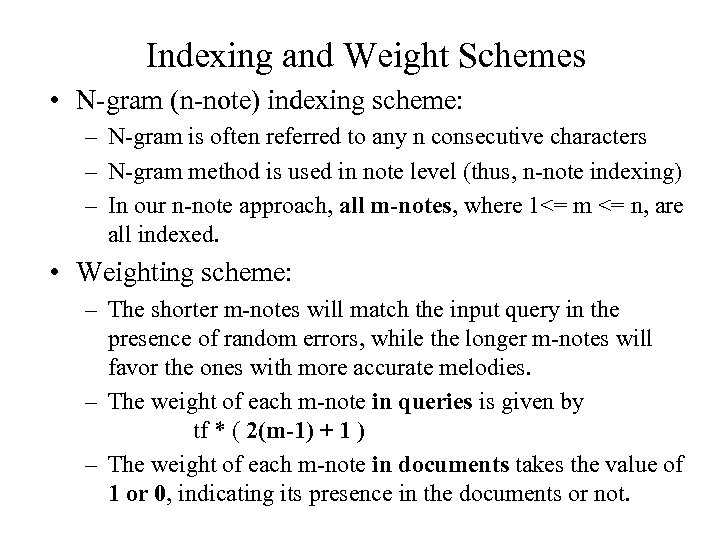

Indexing and Weighting Schemes
• N-gram (n-note) indexing scheme:
  – An n-gram usually refers to any n consecutive characters
  – Here the n-gram method is applied at the note level (hence, n-note indexing)
  – In our n-note approach, all m-notes with 1 ≤ m ≤ n are indexed
• Weighting scheme:
  – Shorter m-notes still match the query in the presence of random errors, while longer m-notes favor more accurate melody matches
  – The weight of each m-note in a query is tf * (2(m-1) + 1)
  – The weight of each m-note in a document is 1 or 0, indicating whether it is present in the document
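The vector space model, n-note indexing, and weighting described above can be combined in a small Python sketch. It rests on the following assumptions:

- An "m-note" is taken to be m consecutive symbols of an already encoded melody, such as a pitch profile.
- The query weight formula is applied literally as printed, tf * (2(m-1) + 1).
- The similarity is a plain inner product with the binary document weights. The slides do not state any normalization.

The collection and all function names are hypothetical.

```python
from collections import Counter, defaultdict


def m_notes(seq, n):
    """Yield every m-note (m consecutive symbols, as a tuple) for 1 <= m <= n."""
    for m in range(1, n + 1):
        for i in range(len(seq) - m + 1):
            yield tuple(seq[i:i + m])


def query_weights(query, n):
    """Query weight of each m-note: tf * (2(m-1) + 1), taken as printed."""
    tf = Counter(m_notes(query, n))
    return {term: count * (2 * (len(term) - 1) + 1) for term, count in tf.items()}


def build_index(docs, n):
    """Inverted file: m-note -> ids of documents containing it (binary weights)."""
    index = defaultdict(set)
    for doc_id, seq in docs.items():
        for term in set(m_notes(seq, n)):
            index[term].add(doc_id)
    return index


def search(index, query, n):
    """Inner product of query weights with binary document weights (assumed)."""
    scores = defaultdict(int)
    for term, weight in query_weights(query, n).items():
        for doc_id in index.get(term, ()):   # only postings of query terms are read
            scores[doc_id] += weight
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical collection of pitch-profile sequences.
docs = {
    "birthday": ["R", "P2", "M2", "P5", "M1"],
    "other": ["P2", "P2", "M4"],
}
index = build_index(docs, n=3)
# Query with one pitch error: P5 M1 became P7 M3.
print(search(index, ["R", "P2", "M2", "P7", "M3"], n=3))
# [('birthday', 14), ('other', 1)]
```

The target document still ranks first despite the pitch error. The unaffected 1-, 2- and 3-notes at the start of the query keep matching it, and the longer ones carry more weight. Only the postings lists of the query's terms are visited, which is what makes inverted-file evaluation sub-linear in the collection size.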

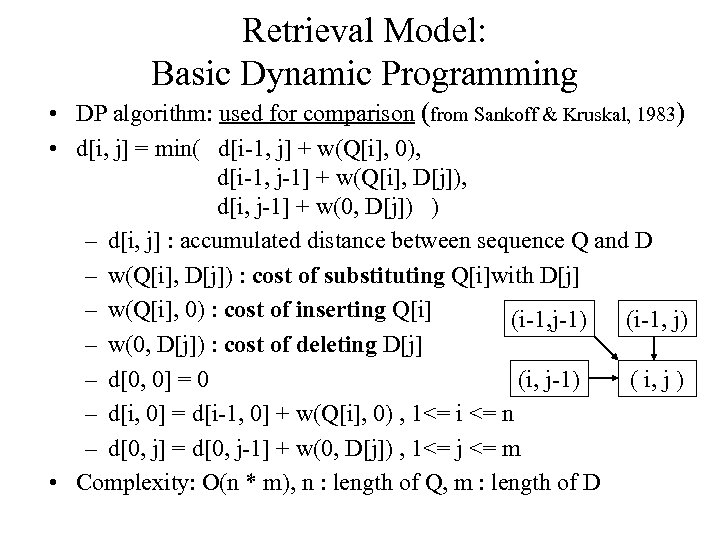

Retrieval Model: Basic Dynamic Programming
• DP algorithm: used for comparison (from Sankoff & Kruskal, 1983)
• Recurrence:

$$
d[i,j] = \min\bigl\{\, d[i-1,j] + w(Q[i],0),\; d[i-1,j-1] + w(Q[i],D[j]),\; d[i,j-1] + w(0,D[j]) \,\bigr\}
$$

  – d[i, j]: accumulated distance between sequences Q and D
  – w(Q[i], D[j]): cost of substituting Q[i] with D[j]
  – w(Q[i], 0): cost of inserting Q[i]
  – w(0, D[j]): cost of deleting D[j]
• Initial conditions:

$$
d[0,0] = 0, \qquad d[i,0] = d[i-1,0] + w(Q[i],0),\ 1 \le i \le n, \qquad d[0,j] = d[0,j-1] + w(0,D[j]),\ 1 \le j \le m
$$

• Complexity: O(n·m), where n is the length of Q and m is the length of D

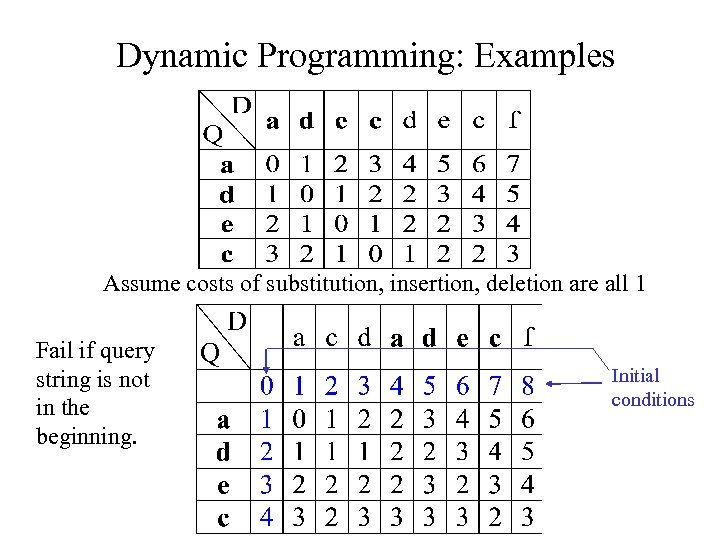

Dynamic Programming: Examples
• Assume the costs of substitution, insertion, and deletion are all 1
• Matching fails if the query string does not occur at the beginning of the document
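A minimal Python sketch of the basic DP recurrence with the unit costs assumed on this slide. It makes two simplifying assumptions:

- Notes are single characters, for readability.
- Substituting a note by an identical one costs 0. This is the usual convention, but the slides do not state it.

The function name `dp_distance` is illustrative.

```python
def dp_distance(q, d, sub_cost=1, indel_cost=1):
    """Basic DP distance between query q and document d (whole sequences)."""
    n, m = len(q), len(d)
    dist = [[0] * (m + 1) for _ in range(n + 1)]      # d[0, 0] = 0
    for i in range(1, n + 1):                         # d[i, 0] = d[i-1, 0] + w(Q[i], 0)
        dist[i][0] = dist[i - 1][0] + indel_cost
    for j in range(1, m + 1):                         # d[0, j] = d[0, j-1] + w(0, D[j])
        dist[0][j] = dist[0][j - 1] + indel_cost
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            w = 0 if q[i - 1] == d[j - 1] else sub_cost
            dist[i][j] = min(dist[i - 1][j] + indel_cost,   # w(Q[i], 0)
                             dist[i - 1][j - 1] + w,        # w(Q[i], D[j])
                             dist[i][j - 1] + indel_cost)   # w(0, D[j])
    return dist[n][m]


print(dp_distance("ABC", "ABD"))          # 1
print(dp_distance("ABC", "XXXXABCXXXX"))  # 8
```

The two calls reproduce the failure noted above. The query occurs verbatim inside the longer document, yet every surrounding note is charged as a deletion. As a result, that document scores far worse than a short near-miss.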

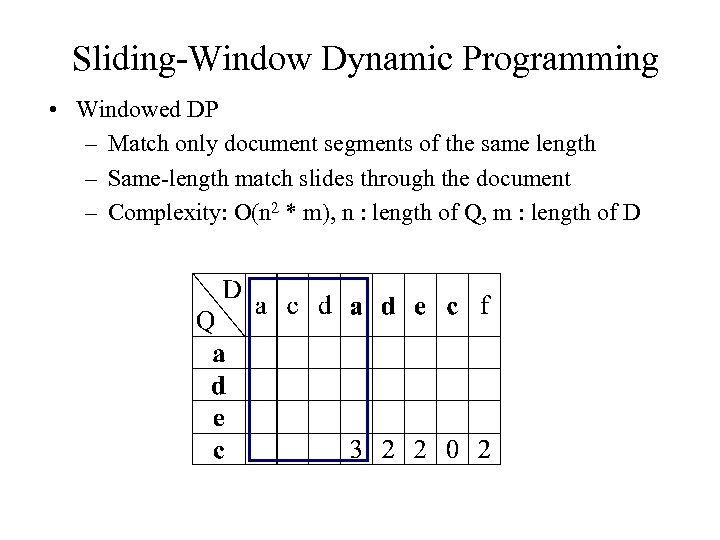

Sliding-Window Dynamic Programming
• Windowed DP
  – Matches only document segments of the same length as the query
  – The same-length match slides through the document
  – Complexity: O(n²·m), where n is the length of Q and m is the length of D
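One way to realize the windowed DP in Python is sketched below, under the assumption of unit costs (identical notes cost 0). The query is compared with every same-length segment of the document, and the best distance is kept. Running an O(n²) DP at each of roughly m window positions gives the O(n²·m) cost stated on the slide. The names `dp_distance` and `windowed_dp` are illustrative.

```python
def dp_distance(q, d):
    """Basic DP distance with unit costs, computed one row at a time."""
    prev = list(range(len(d) + 1))          # row 0: d[0, j] = j
    for i in range(1, len(q) + 1):
        cur = [i] + [0] * len(d)            # d[i, 0] = i
        for j in range(1, len(d) + 1):
            w = 0 if q[i - 1] == d[j - 1] else 1
            cur[j] = min(prev[j] + 1,       # w(Q[i], 0)
                         prev[j - 1] + w,   # w(Q[i], D[j])
                         cur[j - 1] + 1)    # w(0, D[j])
        prev = cur
    return prev[-1]


def windowed_dp(q, d):
    """Best distance between q and any segment of d with the same length as q."""
    n = len(q)
    if len(d) <= n:                         # document no longer than query: compare whole
        return dp_distance(q, d)
    return min(dp_distance(q, d[j:j + n]) for j in range(len(d) - n + 1))


print(windowed_dp("ABC", "XXXXABCXXXX"))  # 0
print(windowed_dp("ABC", "XXABDXX"))      # 1
print(windowed_dp("ABCD", "ABXCD"))       # 2
```

Windowing removes the "must start at the beginning" problem. However, because every window has exactly the query's length, a segment containing one extra note (the last call) still costs 2 rather than 1.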


Modified Dynamic Programming
• Change the initial conditions: keep

$$
d[0,0] = 0, \qquad d[i,0] = d[i-1,0] + w(Q[i],0),\ 1 \le i \le n
$$

  but replace d[0, j] = d[0, j-1] + w(0, D[j]), 1 ≤ j ≤ m, with

$$
d[0,j] = 0, \qquad 1 \le j \le m
$$
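A minimal Python sketch of the modified DP with unit costs (identical notes cost 0). Setting d[0, j] = 0 lets the query start at any position in the document. The sketch also takes the minimum over the last row, so that the match may end anywhere as well. That second step is an added assumption, the usual choice for approximate substring matching, and the slide does not state it. Returning d[n, m] instead would still charge every note after the match. The function name `modified_dp` is illustrative.

```python
def modified_dp(q, d, sub_cost=1, indel_cost=1):
    """Approximate substring distance: the query may start anywhere in d."""
    n, m = len(q), len(d)
    prev = [0] * (m + 1)                        # modified: d[0, j] = 0 for all j
    for i in range(1, n + 1):
        cur = [prev[0] + indel_cost] + [0] * m  # d[i, 0] = d[i-1, 0] + w(Q[i], 0)
        for j in range(1, m + 1):
            w = 0 if q[i - 1] == d[j - 1] else sub_cost
            cur[j] = min(prev[j] + indel_cost,      # w(Q[i], 0)
                         prev[j - 1] + w,           # w(Q[i], D[j])
                         cur[j - 1] + indel_cost)   # w(0, D[j])
        prev = cur
    # Assumption (not on the slide): the match may also end anywhere in d.
    return min(prev)


print(modified_dp("ABC", "XXXXABCXXXX"))  # 0
print(modified_dp("ABCD", "ABXCD"))       # 1
```

Unlike a fixed-length window, this version charges an extra note in the document as a single deletion. It keeps the O(n·m) cost of the basic algorithm.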

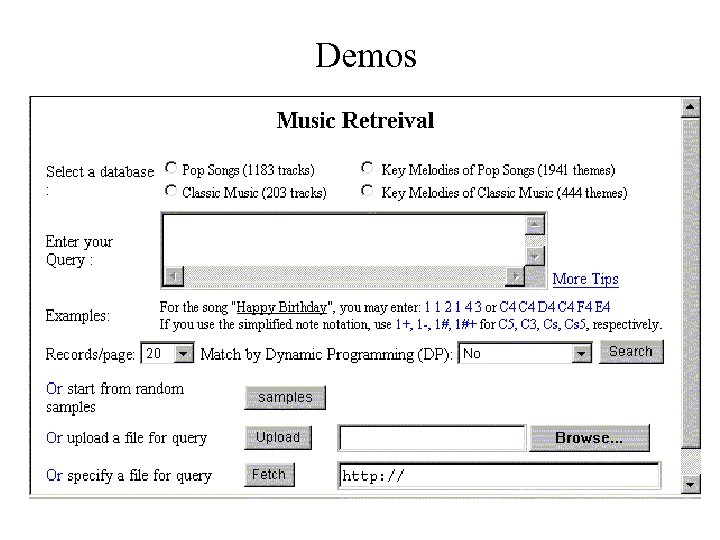

Demos

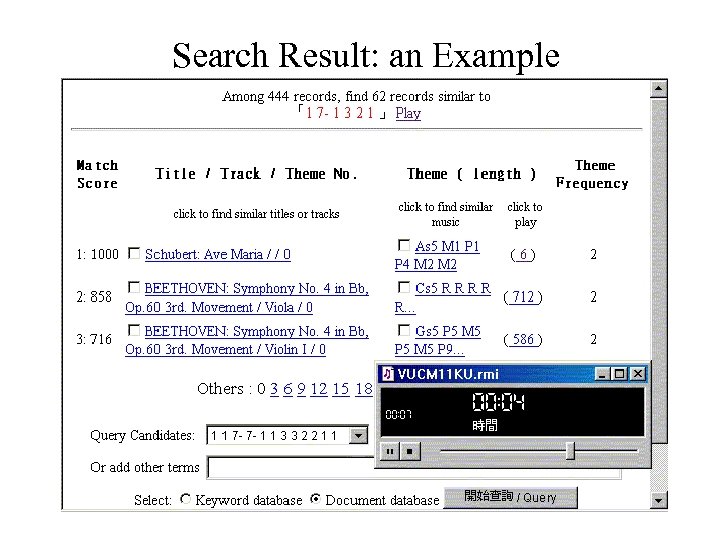

Search Result: an Example

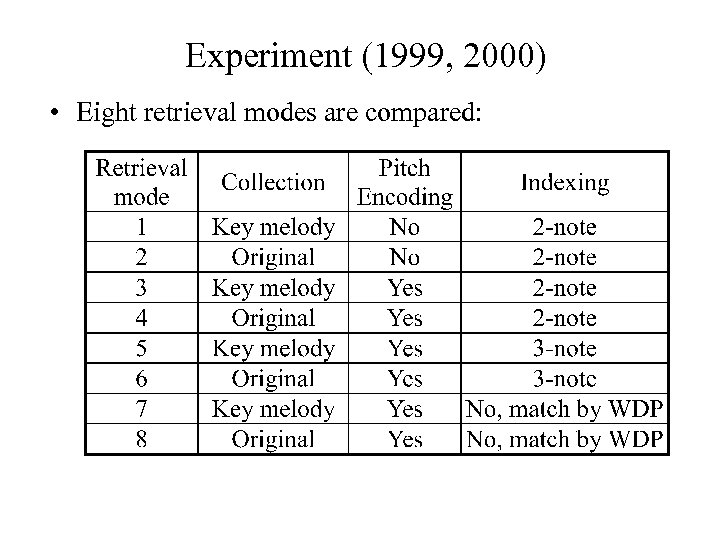

Experiment (1999, 2000)
• Eight retrieval modes were compared:

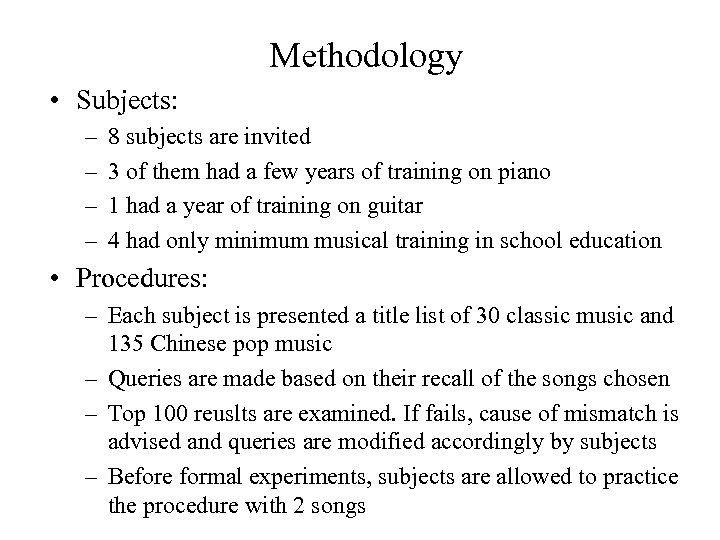

Methodology
• Subjects:
  – 8 subjects were invited
  – 3 of them had a few years of piano training
  – 1 had a year of guitar training
  – 4 had only minimal musical training in school
• Procedures:
  – Each subject was presented with a title list of 30 classical pieces and 135 Chinese pop songs
  – Queries were made based on the subjects' recall of the songs they chose
  – The top 100 results were examined; if the search failed, the cause of the mismatch was pointed out and the subjects modified their queries accordingly
  – Before the formal experiments, subjects were allowed to practice the procedure with 2 songs

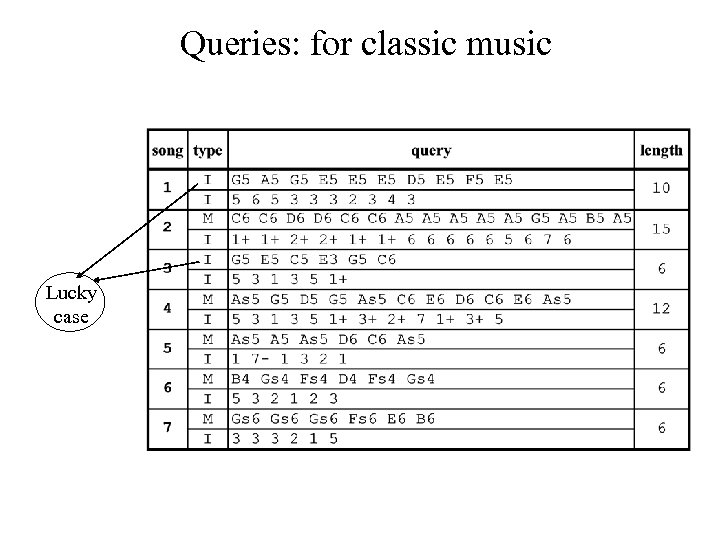

Queries for Classical Music
• Lucky case

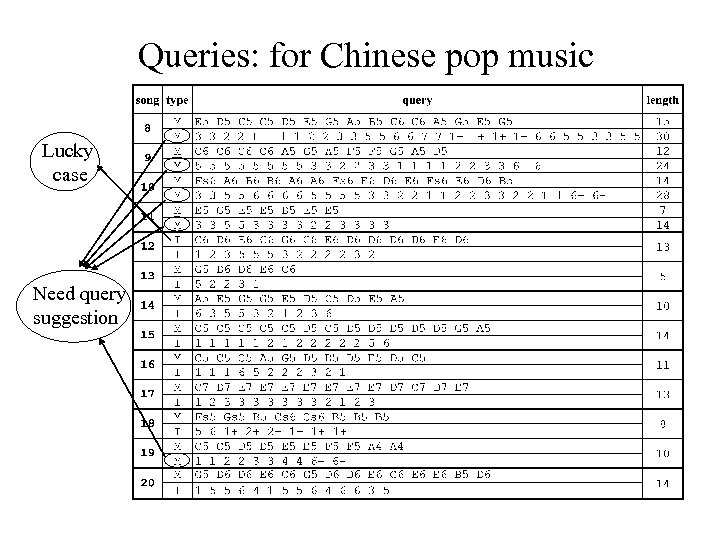

Queries for Chinese Pop Music
• Lucky case
• Needs query suggestion

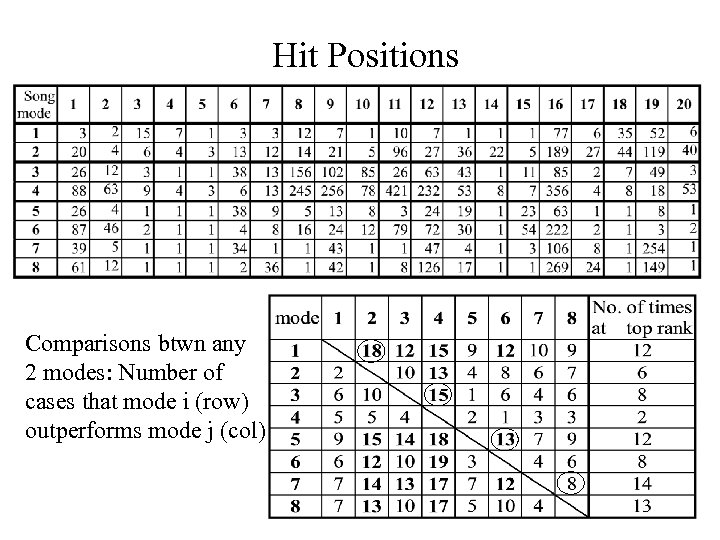

Hit Position Comparison
• Between any 2 modes: the number of cases in which mode i (row) outperforms mode j (column)

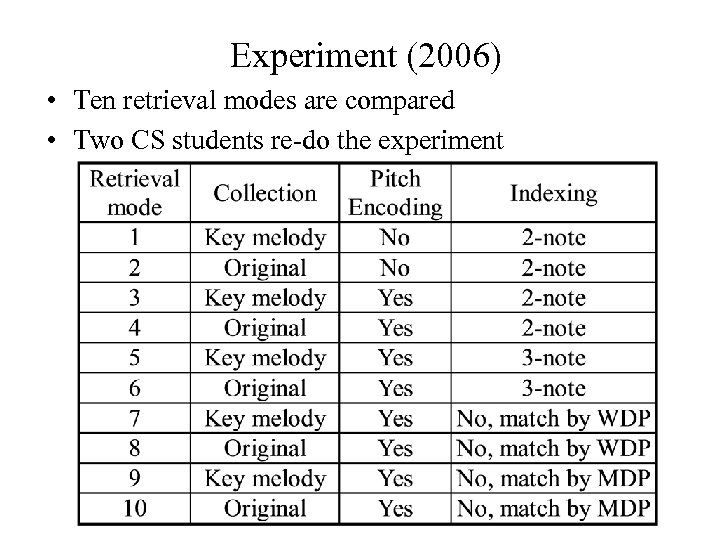

Experiment (2006)
• Ten retrieval modes were compared
• Two CS students re-did the experiment

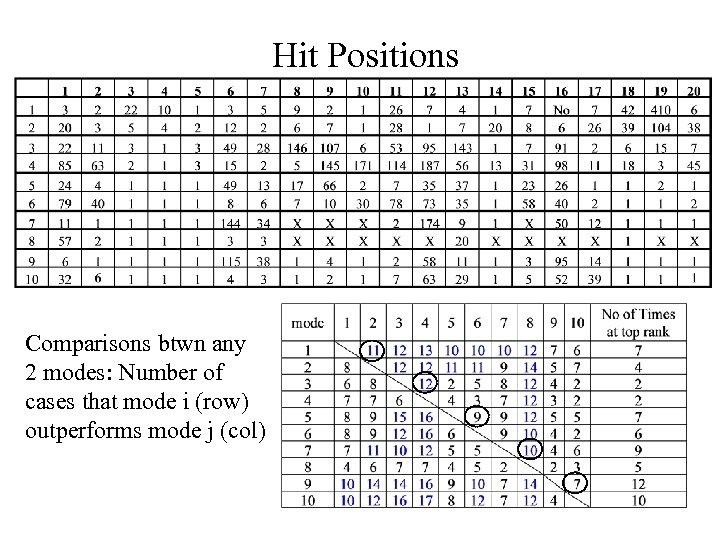

Hit Position Comparison
• Between any 2 modes: the number of cases in which mode i (row) outperforms mode j (column)

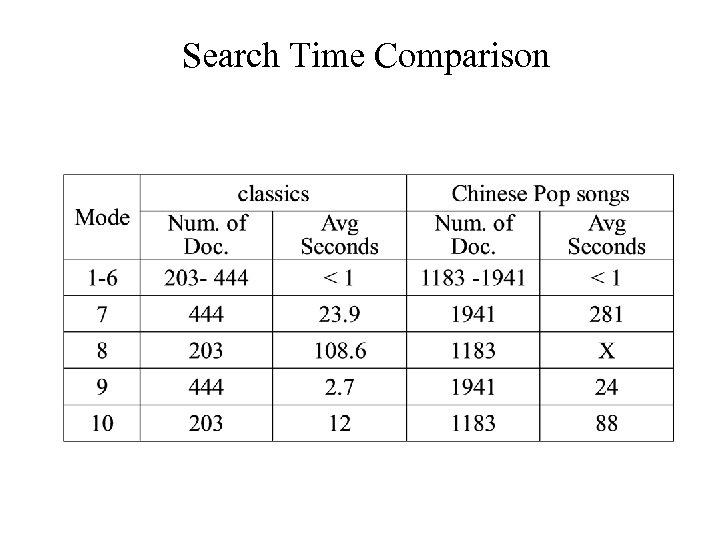

Search Time Comparison

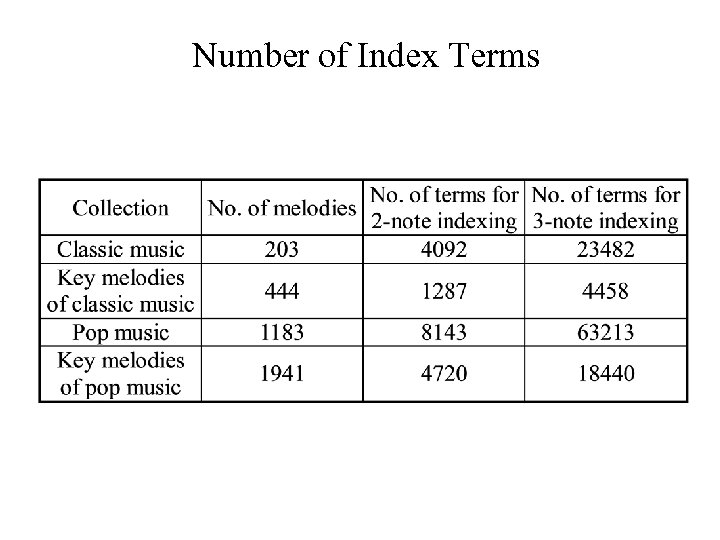

Results from the Experiments
• Key melody search is better than direct melody search
• Longer n is better for n-note indexing
  – longer n yields more index terms for the same collection
• Exact pitch encoding is better
  – only for the same n-note indexing
  – only suitable for skilled users: only 3 queries were given in the correct key on the first try
• Dynamic programming is slightly better, but requires a large amount of evaluation time
  – On average, 89 seconds for 444 classical key melodies, and 536 seconds for 203 classical melodies
  – For n-note indexing with inverted files, only 1 or 2 seconds

Number of Index Terms

Conclusions
• Key-invariant encoding allows queries in any key
• Random pitch errors, note insertions, and note deletions can be overcome by carefully designed indexing, weighting, and retrieval schemes, without resorting to much more time-consuming DP matching
• Burst errors due to fragmentation or other musical factors require query suggestion
  – neither DP nor our approach performs well in this case
  – 5 out of 13 cases in pop music required query reformulation due to fragmentation

Future Work
• More types of mismatches to analyze for automatic query candidate suggestion
• Effective melody extraction for polyphonic music
  – a monophonic melody is assumed in this work, i.e., no notes occur simultaneously
  – it is more difficult to form a query that has simultaneous notes, so there is more discrepancy between queries and polyphonic melodies
  – Uitdenbogerd et al. [1998] discussed effective algorithms for extracting monophonic melodies from polyphonic ones
• Automatic key melody extraction allowing variations
  – repeated melodies with small variations enrich the melodies
  – Bakhmutova et al. [1997] presented a semi-automatic procedure for revealing similar melodies

Related Research and Projects
• ISMIR since 2000
  – International Symposium on Music Information Retrieval
• WOCMAT since 2005
  – Workshop On Computer Music and Audio Technology
• Digital archive applications
  – Data mining in digital music archives
• Free music audio and sound processing tools, and music-related visualization and mining tools
  – http://www.music-ir.org/evaluation/tools.html
• Music IR evaluation since 2005
  – http://www.music-ir.org/mirexwiki/index.php/Main_Page
  – Test collection: music documents, query sets, and relevance judgments
  – Major hurdle: copyright issues

Research Opportunities
• Apply language models from text retrieval to MIR
  – May need music composition theory
• Music mining
  – Key feature extraction
  – Summarization
  – Clustering (detecting similar inter- or intra-patterns)
  – Classification (genre, emotion)
  – Annotation
  – Aggregated analysis
• Applications
  – Games, entertainment, education, cultural renovation, and research
  – Innovative, multimedia, multimodal, and interdisciplinary
