- Number of slides: 55

Comparison Methodology
✓ Meaning of a sample
✓ Confidence intervals
• Making decisions and comparing alternatives
• Special considerations in confidence intervals
• Sample sizes
© 1998, Geoff Kuenning

Estimating Confidence Intervals
• Two formulas for confidence intervals
  – Over 30 samples from any distribution: z-distribution
  – Small sample from normally distributed population: t-distribution
• Common error: using t-distribution for non-normal population
  – Central Limit Theorem often saves us

The z Distribution
• Interval on either side of mean: $\bar{x} \pm z_{1-\alpha/2} \frac{s}{\sqrt{n}}$
• Significance level $\alpha$ is small for large confidence levels
• Tables of z are tricky: be careful!

The t Distribution
• Formula is almost the same: $\bar{x} \pm t_{[1-\alpha/2;\,n-1]} \frac{s}{\sqrt{n}}$
• Usable only for normally distributed populations!
• But works with small samples
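The two interval formulas can be sketched in a few lines of Python. This is a minimal illustration, not the course's own code: the function names and the sample data are hypothetical, the z quantile comes from the standard library's `NormalDist`, and the t quantile is assumed to be looked up in a table and passed in by the caller.

```python
import math
from statistics import NormalDist, mean, stdev

def z_interval(sample, confidence=0.90):
    """Two-sided z-based interval; intended for samples of more than 30 values."""
    n = len(sample)
    alpha = 1.0 - confidence
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # z_{1-alpha/2}
    half_width = z * stdev(sample) / math.sqrt(n)
    centre = mean(sample)
    return centre - half_width, centre + half_width

def t_interval(sample, t_quantile):
    """Two-sided t-based interval; t_quantile is t_{[1-alpha/2; n-1]} from a table."""
    n = len(sample)
    half_width = t_quantile * stdev(sample) / math.sqrt(n)
    centre = mean(sample)
    return centre - half_width, centre + half_width

# Hypothetical data: 36 made-up response times in milliseconds.
times = [10.0, 12.0, 11.0, 13.0, 9.0, 11.0] * 6
low, high = z_interval(times, 0.90)
print(f"90% z-interval: ({low:.2f}, {high:.2f})")
```

Passing the t quantile in keeps the sketch free of third-party libraries; a statistics package could supply it instead.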

Making Decisions
• Why do we use confidence intervals?
  – Summarizes error in sample mean
  – Gives way to decide if measurement is meaningful
  – Allows comparisons in face of error
• But remember: at 90% confidence, 10% of the intervals computed from samples do not include the population mean

Testing for Zero Mean
• Is population mean significantly nonzero?
• If confidence interval includes 0, answer is no
• Can test for any value (mean of sums is sum of means)
• Example: our height samples are consistent with average height of 170 cm
  – Also consistent with 160 and 180!

Comparing Alternatives
• Often need to find better system
  – Choose fastest computer to buy
  – Prove our algorithm runs faster
• Different methods for paired/unpaired observations
  – Paired if $i$th test on each system was same
  – Unpaired otherwise

Comparing Paired Observations
• Treat problem as 1 sample of n pairs
• For each test calculate performance difference
• Calculate confidence interval for differences
• If interval includes zero, systems aren’t different
  – If not, sign indicates which is better

Example: Comparing Paired Observations
• Do home baseball teams outscore visitors?
• Sample from 9-4-96:

Example: Comparing Paired Observations
• H-V: 2, -2, -7, 5, 6, -1, -7, 6, 7, 3, 2, 1, -1, 6
• Mean 1.4, 90% interval (-0.75, 3.6)
  – Can’t reject the hypothesis that difference is 0.
  – 70% interval is (0.10, 2.76)
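The paired example can be checked with a short Python sketch. The differences are the H-V values from the slide. The function name is hypothetical, and the two t quantiles for 13 degrees of freedom are assumed table values rather than numbers given in the slides.

```python
import math
from statistics import mean, stdev

# Home-minus-visitor score differences from the slide.
diffs = [2, -2, -7, 5, 6, -1, -7, 6, 7, 3, 2, 1, -1, 6]

def paired_interval(differences, t_quantile):
    """Confidence interval for the mean of paired differences."""
    n = len(differences)
    d_bar = mean(differences)
    half_width = t_quantile * stdev(differences) / math.sqrt(n)
    return d_bar - half_width, d_bar + half_width

# Assumed t-table values for n - 1 = 13 degrees of freedom.
T_90 = 1.771  # t_{[0.95; 13]}, two-sided 90%
T_70 = 1.079  # t_{[0.85; 13]}, two-sided 70%

lo90, hi90 = paired_interval(diffs, T_90)
lo70, hi70 = paired_interval(diffs, T_70)
print(f"90%: ({lo90:.2f}, {hi90:.2f})  includes zero: {lo90 < 0 < hi90}")
print(f"70%: ({lo70:.2f}, {hi70:.2f})  includes zero: {lo70 < 0 < hi70}")
```

The 90% interval straddles zero while the 70% interval does not, which is the trade-off the slide points out.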

Comparing Unpaired Observations
• A sample of size $n_a$ and $n_b$ for each alternative A and B
• Start with confidence intervals
  – If no overlap:
    • Systems are different and higher mean is better (for HB metrics)
  – If overlap and each CI contains other mean:
    • Systems are not different at this level
    • If close call, could lower confidence level
  – If overlap and one mean isn’t in other CI
    • Must do t-test

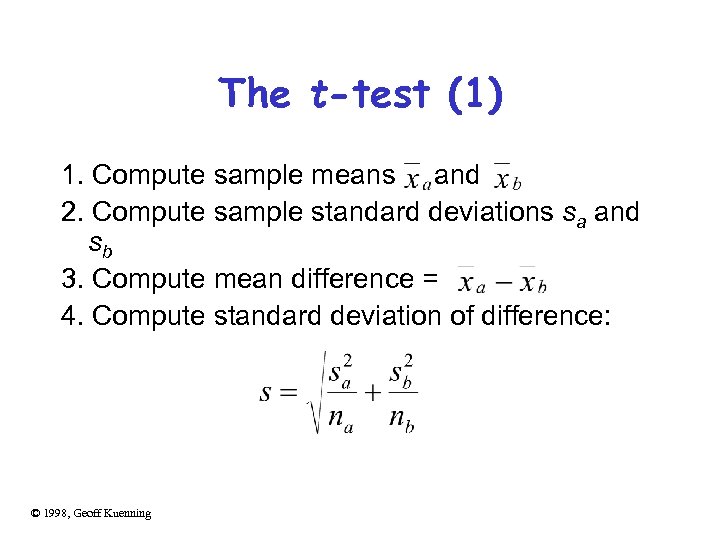

The t-test (1)
1. Compute sample means $\bar{x}_a$ and $\bar{x}_b$
2. Compute sample standard deviations $s_a$ and $s_b$
3. Compute mean difference $= \bar{x}_a - \bar{x}_b$
4. Compute standard deviation of difference: $s = \sqrt{\frac{s_a^2}{n_a} + \frac{s_b^2}{n_b}}$

The t-test (2)
5. Compute effective degrees of freedom $\nu$
6. Compute the confidence interval: $(\bar{x}_a - \bar{x}_b) \pm t_{[1-\alpha/2;\,\nu]}\, s$
7. If interval includes zero, no difference
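The seven steps can be sketched as follows. The run times are made up for illustration and the function names are hypothetical. The formula for the effective degrees of freedom did not survive in the slide text, so this sketch assumes the Welch-Satterthwaite approximation, which may differ slightly from the variant used in the course. The t quantile is an assumed table value.

```python
import math
from statistics import mean, variance

def unpaired_difference(a, b):
    """Return (mean difference, std. dev. of the difference, effective DOF)."""
    na, nb = len(a), len(b)
    va = variance(a) / na  # s_a^2 / n_a
    vb = variance(b) / nb  # s_b^2 / n_b
    diff = mean(a) - mean(b)
    s = math.sqrt(va + vb)
    # Welch-Satterthwaite approximation (an assumption, see the text above).
    nu = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return diff, s, nu

def difference_interval(diff, s, t_quantile):
    """Confidence interval for the difference of the two means."""
    return diff - t_quantile * s, diff + t_quantile * s

# Hypothetical run times (seconds) measured on two systems.
system_a = [5.4, 5.9, 6.1, 5.6, 5.8, 6.0]
system_b = [6.3, 6.9, 6.5, 7.1, 6.6, 6.8]

diff, s, nu = unpaired_difference(system_a, system_b)
# nu is between 9 and 10 here; rounding down to 9 DOF, the assumed
# table value is t_{[0.95; 9]} = 1.833 for a 90% interval.
low, high = difference_interval(diff, s, 1.833)
print(f"diff = {diff:.2f}, s = {s:.3f}, nu = {nu:.1f}")
print(f"90% interval: ({low:.2f}, {high:.2f})  includes zero: {low < 0 < high}")
```

Because the interval lies entirely below zero, system A's mean time is lower at this confidence level for the made-up data.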

Comparing Proportions
• If k of n trials give a certain result, then with $p = k/n$ the confidence interval is $p \pm z_{1-\alpha/2} \sqrt{\frac{p(1-p)}{n}}$
• If interval includes 0.5, can’t say which outcome is statistically meaningful
• Must have k > 10 to get valid results
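A minimal Python sketch of the proportion interval, using the normal approximation. The function name and the trial counts are hypothetical; the guard on `k` mirrors the slide's k > 10 rule.

```python
import math
from statistics import NormalDist

def proportion_interval(k, n, confidence=0.90):
    """Normal-approximation interval for a proportion p = k/n."""
    if k <= 10:
        raise ValueError("need k > 10 for the approximation to be valid")
    p = k / n
    z = NormalDist().inv_cdf(1.0 - (1.0 - confidence) / 2.0)  # z_{1-alpha/2}
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return p - half_width, p + half_width

# Hypothetical experiment: system A beat system B in 60 of 100 trials.
low, high = proportion_interval(60, 100, 0.90)
print(f"90% interval for p: ({low:.3f}, {high:.3f})")
print("includes 0.5:", low <= 0.5 <= high)
```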

Special Considerations
• Selecting a confidence level
• Hypothesis testing
• One-sided confidence intervals

Selecting a Confidence Level
• Depends on cost of being wrong
• 90%, 95% are common values for scientific papers
• Generally, use highest value that lets you make a firm statement
  – But it’s better to be consistent throughout a given paper

Hypothesis Testing
• The null hypothesis ($H_0$) is common in statistics
  – Confusing due to double negative
  – Gives less information than confidence interval
  – Often harder to compute
• Should understand that rejecting null hypothesis implies result is meaningful

One-Sided Confidence Intervals
• Two-sided intervals test for mean being outside a certain range (see “error bands” in previous graphs)
• One-sided tests useful if only interested in one limit
• Use $z_{1-\alpha}$ or $t_{[1-\alpha;\,n]}$ instead of $z_{1-\alpha/2}$ or $t_{[1-\alpha/2;\,n]}$ in formulas

Sample Sizes
• Bigger sample sizes give narrower intervals
  – Smaller values of $t_{\nu}$ as $n$ increases
  – $\sqrt{n}$ in formulas
• But sample collection is often expensive
  – What is the minimum we can get away with?
• Start with a small number of preliminary measurements to estimate variance.

Choosing a Sample Size
• To get a given percentage error ±r%: $n = \left(\frac{100\, z\, s}{r\, \bar{x}}\right)^2$
• Here, z represents either z or t as appropriate
• For a proportion p = k/n: $n = z^2 \frac{p(1-p)}{r^2}$, with $r$ expressed as a proportion rather than a percentage

Example of Choosing Sample Size
• Five runs of a compilation took 22.5, 19.8, 21.1, 26.7, 20.2 seconds
• How many runs to get ±5% confidence interval at 90% confidence level?
• $\bar{x}$ = 22.1, $s$ = 2.8, $t_{[0.95;4]}$ = 2.132
• $n = \left(\frac{100 (2.132)(2.8)}{5 (22.1)}\right)^2 = 29.2$, so about 30 runs
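The sample-size calculation can be reproduced with a short Python sketch. The five run times and the t quantile are the ones on the slide; the function name is hypothetical.

```python
import math
from statistics import mean, stdev

def runs_needed(sample, r_percent, t_quantile):
    """Sample size for a +/- r_percent interval, from preliminary measurements."""
    x_bar = mean(sample)
    s = stdev(sample)
    n = (100.0 * t_quantile * s / (r_percent * x_bar)) ** 2
    return n, math.ceil(n)  # round up: a fractional run is not possible

times = [22.5, 19.8, 21.1, 26.7, 20.2]  # seconds, from the slide
n_exact, n_runs = runs_needed(times, r_percent=5.0, t_quantile=2.132)
print(f"n = {n_exact:.1f}, so plan for {n_runs} runs")
```

The unrounded mean and standard deviation give a slightly different fraction than the rounded slide values, but the same answer after rounding up.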

Linear Regression Models
✓ What is a (good) model?
✓ Estimating model parameters
• Allocating variation
• Confidence intervals for regressions
• Verifying assumptions visually

What Is a (Good) Model?
• For correlated data, model predicts response given an input
• Model should be equation that fits data
• Standard definition of “fits” is least-squares
  – Minimize squared error
  – While keeping mean error zero
  – Minimizes variance of errors

Least-Squared Error
• If $\hat{y} = b_0 + b_1 x$ then error in estimate for $x_i$ is $e_i = y_i - \hat{y}_i$
• Minimize Sum of Squared Errors (SSE): $\sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i)^2$
• Subject to the constraint $\sum_{i=1}^{n} e_i = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i) = 0$

Estimating Model Parameters
• Best regression parameters are $b_1 = \frac{\sum xy - n \bar{x} \bar{y}}{\sum x^2 - n \bar{x}^2}$ and $b_0 = \bar{y} - b_1 \bar{x}$
• where $\bar{x} = \frac{1}{n} \sum x_i$, $\bar{y} = \frac{1}{n} \sum y_i$, $\sum xy = \sum x_i y_i$, $\sum x^2 = \sum x_i^2$
• Note error in book!

Parameter Estimation Example
• Execution time of a script for various loop counts:
• $\bar{x}$ = 6.8, $\bar{y}$ = 2.32, $\sum xy$ = 88.54, $\sum x^2$ = 264
• $b_1 = \frac{88.54 - 5(6.8)(2.32)}{264 - 5(6.8)^2} = 0.29$
• $b_0$ = 2.32 − (0.29)(6.8) = 0.35
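As a quick check, the parameters can be recomputed from the summary statistics on the slide. This sketch assumes n = 5 observations, as stated later in the deck; the function name is hypothetical.

```python
def fit_from_sums(n, x_bar, y_bar, sum_xy, sum_x2):
    """Least-squares slope and intercept from summary statistics."""
    b1 = (sum_xy - n * x_bar * y_bar) / (sum_x2 - n * x_bar ** 2)
    b0 = y_bar - b1 * x_bar
    return b0, b1

b0, b1 = fit_from_sums(n=5, x_bar=6.8, y_bar=2.32, sum_xy=88.54, sum_x2=264)
print(f"b1 = {b1:.4f}, b0 = {b0:.4f}")

# The slide rounds b1 to 0.29 before computing b0, which gives 0.35.
b0_rounded = 2.32 - round(b1, 2) * 6.8
print(f"b0 with rounded b1 = {b0_rounded:.2f}")
```

Carrying the unrounded slope through gives an intercept nearer 0.32, so the rounding order matters when comparing against the slide.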

Graph of Parameter Estimation Example

Variants of Linear Regression
• Some non-linear relationships can be handled by transformations
  – For $y = ae^{bx}$ take logarithm of y, do regression on $\log(y) = b_0 + b_1 x$, let $b = b_1$, $a = e^{b_0}$
  – For $y = a + b \log(x)$, take log of x before fitting parameters, let $b = b_1$, $a = b_0$
  – For $y = ax^b$, take log of both x and y, let $b = b_1$, $a = e^{b_0}$
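The exponential case can be sketched in Python as follows. The data are synthetic and noise-free, generated from a known curve purely for illustration, and the function names are hypothetical. Natural logarithms are assumed throughout.

```python
import math

def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = b0 + b1*x; returns (b0, b1)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    b1 = (sum_xy - n * x_bar * y_bar) / (sum_x2 - n * x_bar ** 2)
    return y_bar - b1 * x_bar, b1

def fit_exponential(xs, ys):
    """Fit y = a * exp(b*x) by regressing log(y) on x; returns (a, b)."""
    b0, b1 = linear_fit(xs, [math.log(y) for y in ys])
    return math.exp(b0), b1

# Synthetic data generated from y = 2 * exp(0.5 * x).
xs = [0, 1, 2, 3, 4]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a, b = fit_exponential(xs, ys)
print(f"a = {a:.3f}, b = {b:.3f}")
```

The power-law case works the same way, except that the logarithm is applied to x as well before calling `linear_fit`.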

Allocating Variation
• If no regression, best guess of y is $\bar{y}$
• Observed values of y differ from $\bar{y}$, giving rise to errors (variance)
• Regression gives better guess, but there are still errors
• We can evaluate quality of regression by allocating sources of errors

The Total Sum of Squares
• Without regression, squared error is $SST = \sum_{i=1}^{n} (y_i - \bar{y})^2 = \sum y^2 - n \bar{y}^2 = SSY - SS0$, where $SSY = \sum y^2$ and $SS0 = n \bar{y}^2$

The Sum of Squares from Regression
• Recall that regression error is $SSE = \sum e_i^2 = \sum (y_i - \hat{y}_i)^2$
• Error without regression is SST
• So regression explains SSR = SST − SSE
• Regression quality measured by coefficient of determination $R^2 = \frac{SSR}{SST} = \frac{SST - SSE}{SST}$

Evaluating Coefficient of Determination
• Compute $SST = \sum y^2 - n \bar{y}^2$
• Compute $SSE = \sum y^2 - b_0 \sum y - b_1 \sum xy$
• Compute $R^2 = \frac{SST - SSE}{SST}$

Example of Coefficient of Determination
• For previous regression example
  – $\sum y$ = 11.60, $\sum y^2$ = 29.79, $\sum xy$ = 88.54, $n \bar{y}^2 = 5(2.32)^2 = 26.9$
  – SSE = 29.79 − (0.35)(11.60) − (0.29)(88.54) = 0.05
  – SST = 29.79 − 26.9 = 2.89
  – SSR = 2.89 − 0.05 = 2.84
  – $R^2$ = (2.89 − 0.05)/2.89 = 0.98
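The same arithmetic in Python, as a sketch that uses the slide's rounded parameters $b_0 = 0.35$ and $b_1 = 0.29$. The function name is hypothetical, and n = 5 is taken from elsewhere in the deck.

```python
def allocate_variation(n, sum_y, sum_y2, sum_xy, b0, b1):
    """Return (SST, SSE, SSR, R^2) computed from summary statistics."""
    y_bar = sum_y / n
    sst = sum_y2 - n * y_bar ** 2              # error without regression
    sse = sum_y2 - b0 * sum_y - b1 * sum_xy    # error left after regression
    ssr = sst - sse                            # error explained by regression
    return sst, sse, ssr, ssr / sst

sst, sse, ssr, r2 = allocate_variation(
    n=5, sum_y=11.60, sum_y2=29.79, sum_xy=88.54, b0=0.35, b1=0.29
)
print(f"SST = {sst:.2f}, SSE = {sse:.2f}, SSR = {ssr:.2f}, R^2 = {r2:.2f}")
```

SST comes out a hair below the slide's 2.89 because the sketch does not round $n\bar{y}^2$ to 26.9 first. SSE is a small difference of large numbers, so it is sensitive to how far $b_0$ and $b_1$ are rounded.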

Standard Deviation of Errors
• Variance of errors is SSE divided by degrees of freedom
  – DOF is n − 2 because we’ve calculated 2 regression parameters from the data
  – So variance (mean squared error, MSE) is SSE/(n − 2)
• Standard deviation of errors is square root: $s_e = \sqrt{\frac{SSE}{n - 2}}$

Checking Degrees of Freedom
• Degrees of freedom always equate:
  – SS0 has 1 (computed from $\bar{y}$)
  – SST has n − 1 (computed from data and $\bar{y}$, which uses up 1)
  – SSE has n − 2 (needs 2 regression parameters)
  – So $SST = SSY - SS0 = SSR + SSE$ corresponds to $n - 1 = n - 1 = 1 + (n - 2)$

Example of Standard Deviation of Errors
• For our regression example, SSE was 0.05, so MSE is 0.05/3 = 0.017 and $s_e$ = 0.13
• Note high quality of our regression:
  – $R^2$ = 0.98
  – $s_e$ = 0.13
  – Why such a nice straight-line fit?

Confidence Intervals for Regressions
• Regression is done from a single population sample (size n)
  – Different sample might give different results
  – True model is $y = \beta_0 + \beta_1 x$
  – Parameters $b_0$ and $b_1$ are really means taken from a population sample

Calculating Intervals for Regression Parameters
• Standard deviations of parameters: $s_{b_0} = s_e \sqrt{\frac{1}{n} + \frac{\bar{x}^2}{\sum x^2 - n \bar{x}^2}}$ and $s_{b_1} = \frac{s_e}{\sqrt{\sum x^2 - n \bar{x}^2}}$
• Confidence intervals are $b_i \pm t\, s_{b_i}$
• where t has n − 2 degrees of freedom

Example of Regression Confidence Intervals
• Recall $s_e$ = 0.13, $n$ = 5, $\sum x^2$ = 264, $\bar{x}$ = 6.8
• So $s_{b_0} = 0.13 \sqrt{\frac{1}{5} + \frac{(6.8)^2}{264 - 5(6.8)^2}} = 0.16$ and $s_{b_1} = \frac{0.13}{\sqrt{264 - 5(6.8)^2}} = 0.023$
• Using a 90% confidence level, $t_{[0.95;3]}$ = 2.353

Regression Confidence Example, cont’d
• Thus, $b_0$ interval is $0.35 \pm 2.353(0.16) = (-0.03, 0.73)$
  – Not significant at 90%
• And $b_1$ is $0.29 \pm 2.353(0.023) = (0.24, 0.34)$
  – Significant at 90% (and would survive even a 99% test)
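The parameter intervals can be recomputed with a short Python sketch. It assumes the usual least-squares expressions for the standard deviations of $b_0$ and $b_1$; the inputs are the values recalled on the slide, and the function names are hypothetical.

```python
import math

def parameter_std_devs(se, n, x_bar, sum_x2):
    """Standard deviations of the intercept b0 and the slope b1."""
    sxx = sum_x2 - n * x_bar ** 2              # sum of squared deviations of x
    s_b0 = se * math.sqrt(1.0 / n + x_bar ** 2 / sxx)
    s_b1 = se / math.sqrt(sxx)
    return s_b0, s_b1

def interval(b, s_b, t_quantile):
    """Two-sided confidence interval b +/- t * s_b."""
    return b - t_quantile * s_b, b + t_quantile * s_b

s_b0, s_b1 = parameter_std_devs(se=0.13, n=5, x_bar=6.8, sum_x2=264)
T = 2.353  # t_{[0.95; 3]} from the slide, for a 90% interval

b0_lo, b0_hi = interval(0.35, s_b0, T)
b1_lo, b1_hi = interval(0.29, s_b1, T)
print(f"s_b0 = {s_b0:.3f}, b0 interval = ({b0_lo:.2f}, {b0_hi:.2f})")
print(f"s_b1 = {s_b1:.4f}, b1 interval = ({b1_lo:.2f}, {b1_hi:.2f})")
```

With these inputs the sketch gives a slope standard deviation of about 0.023. The intercept interval includes zero and the slope interval does not.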

Confidence Intervals for Nonlinear Regressions
• For nonlinear fits using exponential transformations:
  – Confidence intervals apply to transformed parameters
  – Not valid to perform inverse transformation on intervals

Confidence Intervals for Predictions
• Previous confidence intervals are for parameters
  – How certain can we be that the parameters are correct?
• Purpose of regression is prediction
  – How accurate are the predictions?
  – Regression gives mean of predicted response, based on sample we took

Predicting m Samples
• Standard deviation for mean of future sample of m observations at $x_p$ is $s_{\hat{y}_{mp}} = s_e \sqrt{\frac{1}{m} + \frac{1}{n} + \frac{(x_p - \bar{x})^2}{\sum x^2 - n \bar{x}^2}}$
• Note deviation drops as $m \to \infty$
• Variance minimal at $x = \bar{x}$
• Use t-quantiles with n − 2 DOF for interval

Example of Confidence of Predictions
• Using previous equation, what is predicted time for a single run of 8 loops?
• Time = 0.35 + 0.29(8) = 2.67
• Standard deviation of errors $s_e$ = 0.13
• $s_{\hat{y}_p} = 0.13 \sqrt{1 + \frac{1}{5} + \frac{(8 - 6.8)^2}{264 - 5(6.8)^2}} = 0.145$
• 90% interval is then $2.67 \pm 2.353(0.145) = (2.33, 3.01)$
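A minimal Python sketch of the prediction interval for a single future run (m = 1) at 8 loops. It assumes the standard expression for the standard deviation of a predicted mean of m observations. The numeric inputs are the values quoted in the regression example; the function name is hypothetical.

```python
import math

def prediction_std_dev(se, m, n, x_p, x_bar, sum_x2):
    """Std. dev. of the mean of m future observations at x_p."""
    sxx = sum_x2 - n * x_bar ** 2
    return se * math.sqrt(1.0 / m + 1.0 / n + (x_p - x_bar) ** 2 / sxx)

b0, b1 = 0.35, 0.29        # regression parameters from the example
x_p = 8                    # loop count to predict
predicted = b0 + b1 * x_p

s_yp = prediction_std_dev(se=0.13, m=1, n=5, x_p=x_p, x_bar=6.8, sum_x2=264)
t_quantile = 2.353         # t_{[0.95; 3]}, n - 2 = 3 degrees of freedom
low = predicted - t_quantile * s_yp
high = predicted + t_quantile * s_yp
print(f"predicted = {predicted:.2f}, s = {s_yp:.3f}")
print(f"90% interval: ({low:.2f}, {high:.2f})")
```

Raising `m` shrinks the first term under the square root, which is why the deviation drops when predicting the mean of many future runs.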

Verifying Assumptions Visually
• Regressions are based on assumptions:
  – Linear relationship between response y and predictor x
    • Or nonlinear relationship used in fitting
  – Predictor x nonstochastic and error-free
  – Model errors statistically independent
    • With distribution N(0, c) for constant c
• If assumptions violated, model misleading or invalid

Testing Linearity
• Scatter plot x vs. y to see basic curve type
[Figure panels: Linear, Piecewise Linear, Outlier, Nonlinear (Power)]

Testing Independence of Errors
• Scatter-plot residuals $e_i$ versus predicted responses $\hat{y}_i$
• Should be no visible trend
• Example from our curve fit:

More on Testing Independence
• May be useful to plot error residuals versus experiment number
  – In previous example, this gives same plot except for x scaling
• No foolproof tests

Testing for Normal Errors
• Prepare quantile-quantile plot
• Example for our regression:

Testing for Constant Standard Deviation
• Tongue-twister: homoscedasticity
• Return to independence plot
• Look for trend in spread
• Example:

Linear Regression Can Be Misleading
• Regression throws away some information about the data
  – To allow more compact summarization
• Sometimes vital characteristics are thrown away
  – Often, looking at data plots can tell you whether you will have a problem

Example of Misleading Regression

| x (I) | y (I) | x (II) | y (II) | x (III) | y (III) | x (IV) | y (IV) |
|---|---|---|---|---|---|---|---|
| 10 | 8.04 | 10 | 9.14 | 10 | 7.46 | 8 | 6.58 |
| 8 | 6.95 | 8 | 8.14 | 8 | 6.77 | 8 | 5.76 |
| 13 | 7.58 | 13 | 8.74 | 13 | 12.74 | 8 | 7.71 |
| 9 | 8.81 | 9 | 8.77 | 9 | 7.11 | 8 | 8.84 |
| 11 | 8.33 | 11 | 9.26 | 11 | 7.81 | 8 | 8.47 |
| 14 | 9.96 | 14 | 8.10 | 14 | 8.84 | 8 | 7.04 |
| 6 | 7.24 | 6 | 6.13 | 6 | 6.08 | 8 | 5.25 |
| 4 | 4.26 | 4 | 3.10 | 4 | 5.39 | 19 | 12.50 |
| 12 | 10.84 | 12 | 9.13 | 12 | 8.15 | 8 | 5.56 |
| 7 | 4.82 | 7 | 7.26 | 7 | 6.42 | 8 | 7.91 |
| 5 | 5.68 | 5 | 4.74 | 5 | 5.73 | 8 | 6.89 |

What Does Regression Tell Us About These Data Sets?
• Exactly the same thing for each!
• N = 11
• Mean of y = 7.5
• Y = 3 + .5X
• Standard error of the regression slope is 0.118
• All the sums of squares are the same
• Correlation coefficient = .82
• $R^2$ = .67
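The claim can be checked numerically with a short Python sketch. One assumption needs flagging: the table as extracted shows only ten rows and is missing one y value for set IV, so the eleventh row and that value are taken here from the standard published form of this data set (it appears to be Anscombe's quartet). The function and variable names are hypothetical.

```python
import math

X_SHARED = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]
DATA = {
    "I": (X_SHARED,
          [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]),
    "II": (X_SHARED,
           [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]),
    "III": (X_SHARED,
            [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]),
    "IV": ([8, 8, 8, 8, 8, 8, 8, 19, 8, 8, 8],
           [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]),
}

def summarize(xs, ys):
    """Least-squares fit plus R^2 and the standard error of the slope."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum(x * x for x in xs) - n * x_bar ** 2
    sxy = sum(x * y for x, y in zip(xs, ys)) - n * x_bar * y_bar
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sst = sum(y * y for y in ys) - n * y_bar ** 2
    sse = sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
    s_b1 = math.sqrt(sse / (n - 2)) / math.sqrt(sxx)
    return {"b0": b0, "b1": b1, "r2": 1.0 - sse / sst, "s_b1": s_b1}

results = {name: summarize(xs, ys) for name, (xs, ys) in DATA.items()}
for name, r in results.items():
    print(f"{name:>3}: y = {r['b0']:.2f} + {r['b1']:.2f}x, "
          f"R^2 = {r['r2']:.2f}, s_b1 = {r['s_b1']:.3f}")
```

All four sets print essentially the same line, even though their scatter plots look nothing alike.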

Now Look at the Data Plots
[Figure: scatter plots of the four data sets]

For Discussion Today
Project Proposal
1. Statement of hypothesis
2. Workload decisions
3. Metrics to be used
4. Method