
- Number of slides: 41

COMP 734 -- Distributed File Systems With Case Studies: NFS, Andrew and Google COMP 734 – Fall 2011 1

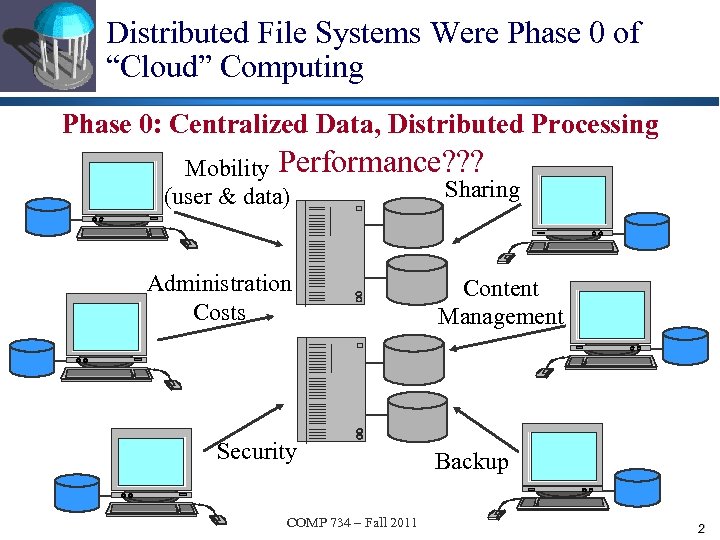

Distributed File Systems Were Phase 0 of “Cloud” Computing

Phase 0: Centralized Data, Distributed Processing

- Mobility
- Performance???
- Sharing (user & data)
- Administration Costs
- Security
- Content Management
- Backup

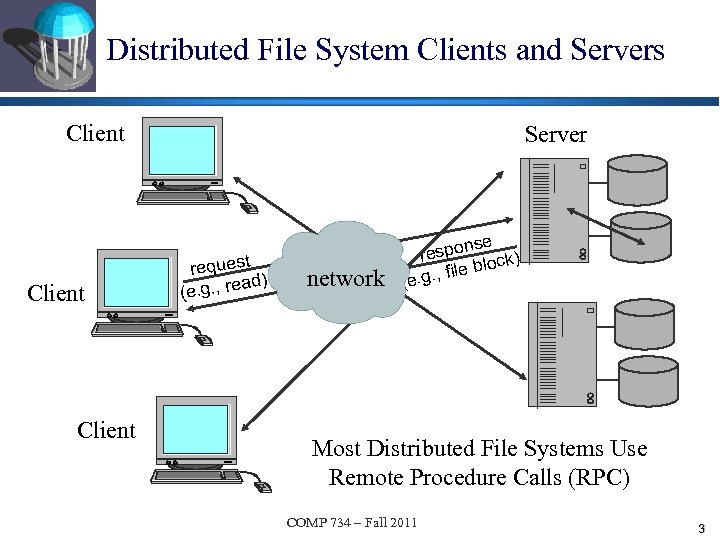

Distributed File System Clients and Servers

[Diagram: a client sends a request (e.g., read) over the network to a server, which returns a response (e.g., file block).]

Most Distributed File Systems Use Remote Procedure Calls (RPC)

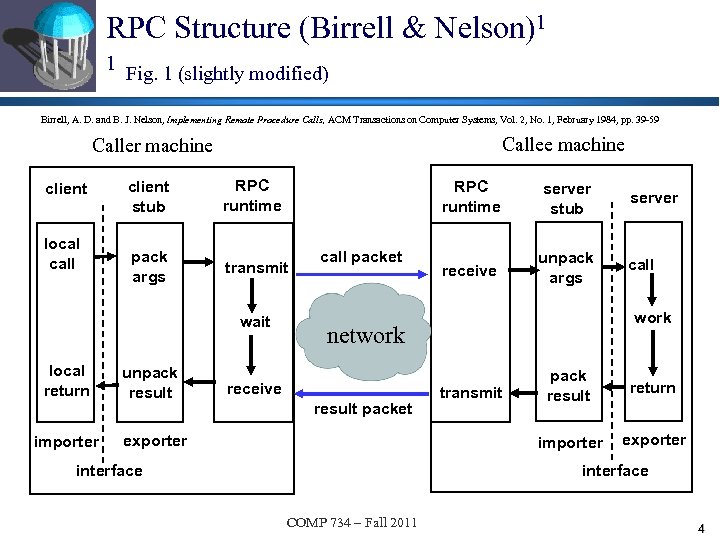

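RPC Structure (Birrell & Nelson)¹

[Diagram: on the caller machine, the client makes a local call into the client stub, which packs the arguments; the RPC runtime transmits the call packet over the network and waits. On the callee machine, the RPC runtime receives the packet, the server stub unpacks the arguments and calls the server, which does the work and returns. The server stub packs the result, the RPC runtime transmits the result packet, and on the caller machine the RPC runtime receives it, the client stub unpacks the result, and the local call returns. The caller side is the importer of the interface; the callee side is the exporter.]

¹ Fig. 1 (slightly modified), Birrell, A. D. and B. J. Nelson, Implementing Remote Procedure Calls, ACM Transactions on Computer Systems, Vol. 2, No. 1, February 1984, pp. 39-59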
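
The stub structure in the figure can be illustrated with a minimal in-process sketch. It is not Birrell and Nelson's implementation: the JSON packet format, the `EXPORTED` table, and the `transmit` function (which stands in for both RPC runtimes and the network) are assumptions made for illustration.

```python
import json

# Hypothetical server procedure exported through the interface.
def add(a, b):
    return a + b

EXPORTED = {"add": add}  # exporter side: procedure name -> implementation


def server_stub(call_packet: bytes) -> bytes:
    """Unpack args, call the server procedure, pack the result."""
    call = json.loads(call_packet.decode("utf-8"))
    result = EXPORTED[call["proc"]](*call["args"])
    return json.dumps({"result": result}).encode("utf-8")


def transmit(call_packet: bytes) -> bytes:
    """Stand-in for the RPC runtimes and the network (assumption)."""
    return server_stub(call_packet)


def client_stub(proc: str, *args):
    """Pack args, transmit, wait for the result packet, unpack the result."""
    call_packet = json.dumps({"proc": proc, "args": list(args)}).encode("utf-8")
    result_packet = transmit(call_packet)
    return json.loads(result_packet.decode("utf-8"))["result"]


# To the caller this looks like a local call.
total = client_stub("add", 2, 3)
```

The caller never sees packets; only the two stubs know the wire format, which is the point of the structure.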

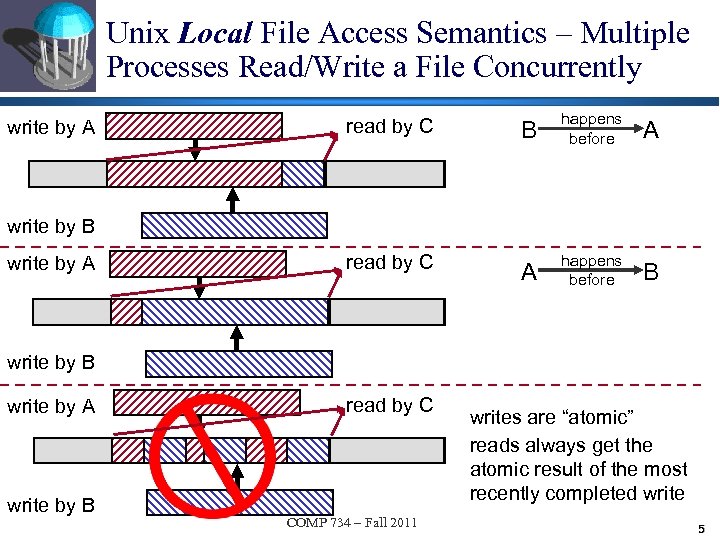

Unix Local File Access Semantics – Multiple Processes Read/Write a File Concurrently

[Diagram: timelines of a write by A, a write by B, and a read by C, shown for the two cases “A happens before B” and “B happens before A”.]

- Writes are “atomic”
- Reads always get the atomic result of the most recently completed write

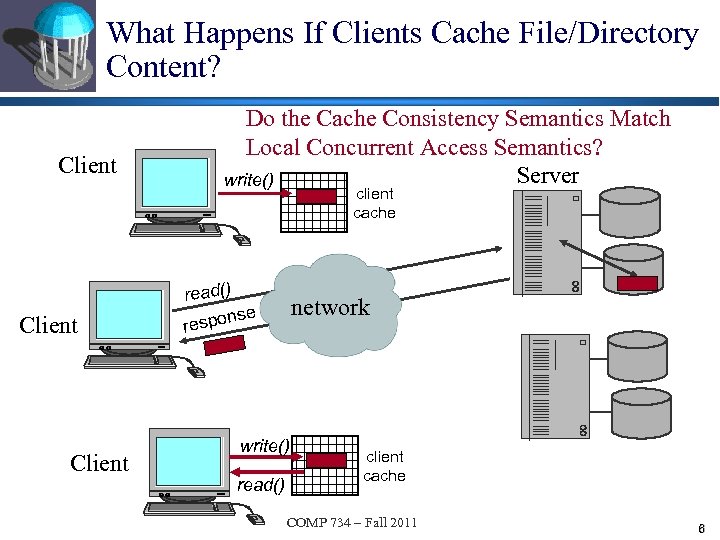

What Happens If Clients Cache File/Directory Content?

[Diagram: two clients, each with its own client cache, issue read() and write() calls that reach the server over the network; the server returns responses.]

Do the Cache Consistency Semantics Match Local Concurrent Access Semantics?

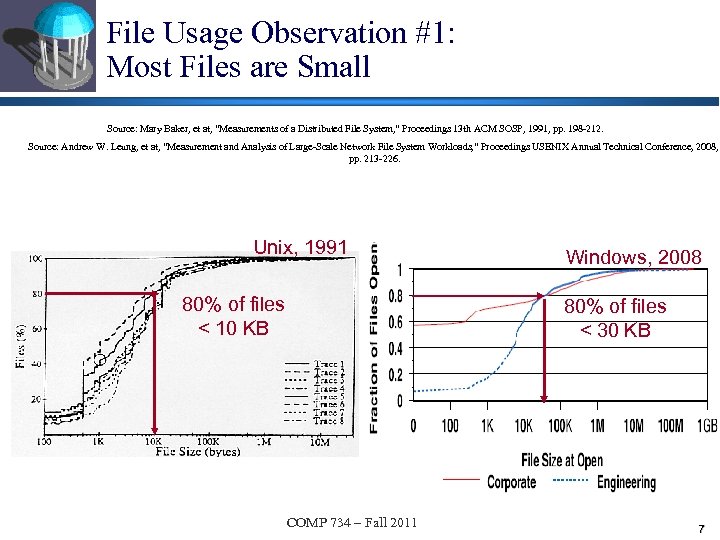

File Usage Observation #1: Most Files are Small

- Unix, 1991: 80% of files < 10 KB
- Windows, 2008: 80% of files < 30 KB

Source: Mary Baker, et al., “Measurements of a Distributed File System,” Proceedings 13th ACM SOSP, 1991, pp. 198-212.

Source: Andrew W. Leung, et al., “Measurement and Analysis of Large-Scale Network File System Workloads,” Proceedings USENIX Annual Technical Conference, 2008, pp. 213-226.

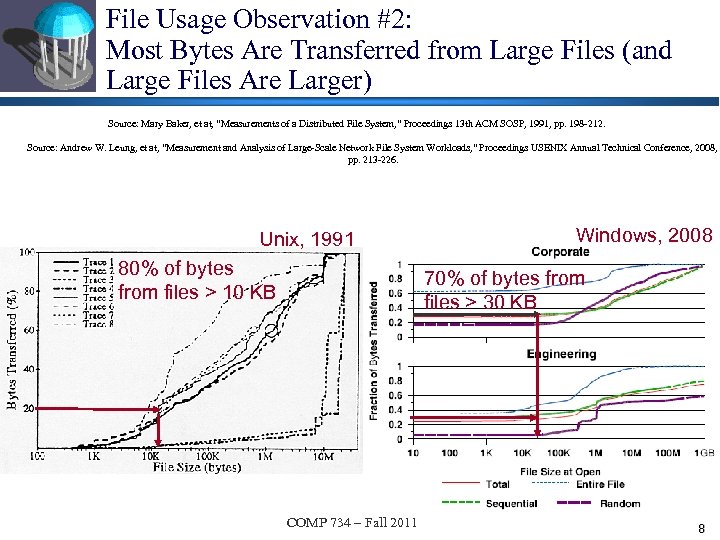

File Usage Observation #2: Most Bytes Are Transferred from Large Files (and Large Files Are Larger)

- Unix, 1991: 80% of bytes from files > 10 KB
- Windows, 2008: 70% of bytes from files > 30 KB

Source: Mary Baker, et al., “Measurements of a Distributed File System,” Proceedings 13th ACM SOSP, 1991, pp. 198-212.

Source: Andrew W. Leung, et al., “Measurement and Analysis of Large-Scale Network File System Workloads,” Proceedings USENIX Annual Technical Conference, 2008, pp. 213-226.

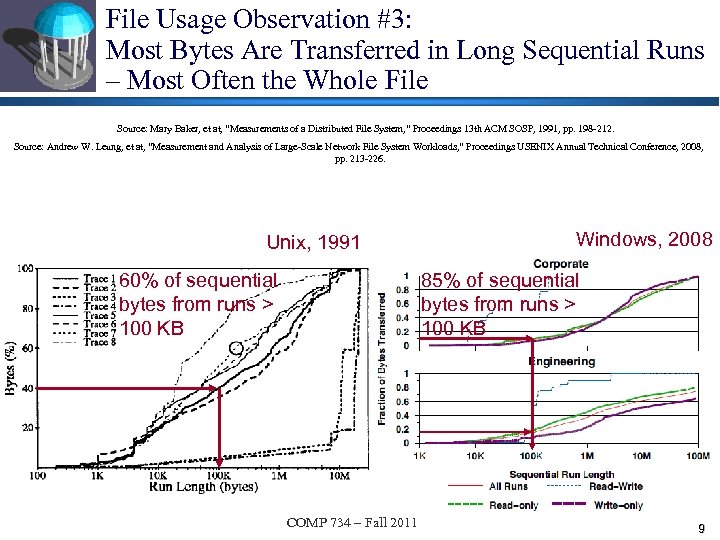

File Usage Observation #3: Most Bytes Are Transferred in Long Sequential Runs – Most Often the Whole File

- Unix, 1991: 85% of sequential bytes from runs > 100 KB
- Windows, 2008: 60% of sequential bytes from runs > 100 KB

Source: Mary Baker, et al., “Measurements of a Distributed File System,” Proceedings 13th ACM SOSP, 1991, pp. 198-212.

Source: Andrew W. Leung, et al., “Measurement and Analysis of Large-Scale Network File System Workloads,” Proceedings USENIX Annual Technical Conference, 2008, pp. 213-226.

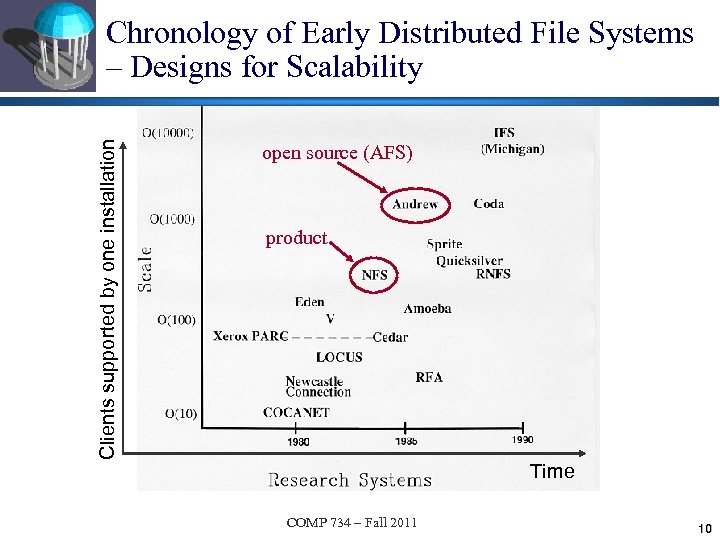

Chronology of Early Distributed File Systems – Designs for Scalability

[Chart: clients supported by one installation (vertical axis) versus time (horizontal axis); labels include “open source (AFS)” and “product”.]

NFS-2 Design Goals

- Transparent file naming
- Scalable -- O(100s)
- Performance approximating local files
- Fault tolerant (client, server, network faults)
- Unix file-sharing semantics (almost)
- No change to Unix C library/system calls

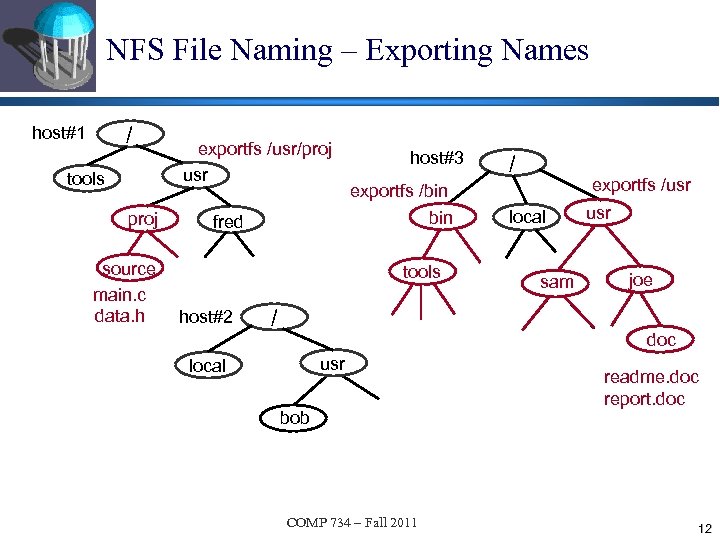

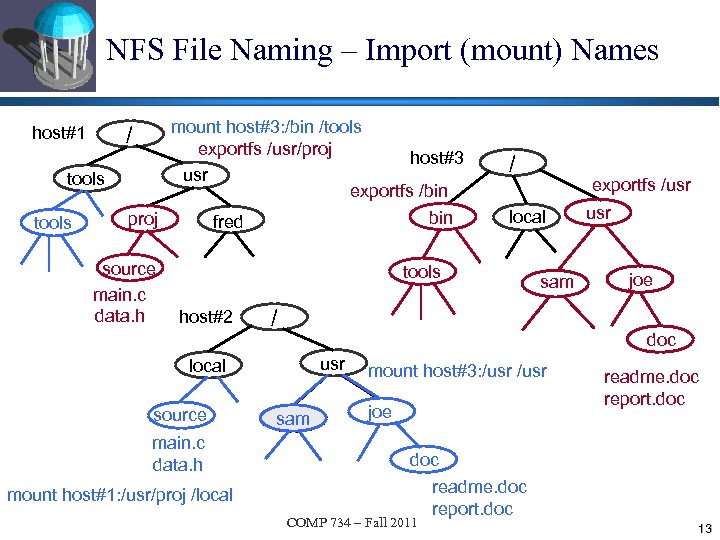

NFS File Naming – Exporting Names

[Diagram: the directory trees of host#1, host#2, and host#3. Names shown include usr, proj, source (main.c, data.h), local, bin, tools, doc (readme.doc, report.doc), and the user directories fred, sam, joe, and bob. The exports shown are: exportfs /usr/proj, exportfs /bin, and exportfs /usr.]

NFS File Naming – Import (mount) Names

[Diagram: the same three hosts after importing names, with the exports exportfs /usr/proj, exportfs /bin, and exportfs /usr. The mounts shown are: mount host#3:/bin /tools; mount host#1:/usr/proj /local (so source, main.c, and data.h appear under /local); and mount host#3:/usr (local mount point not recoverable from the extraction).]

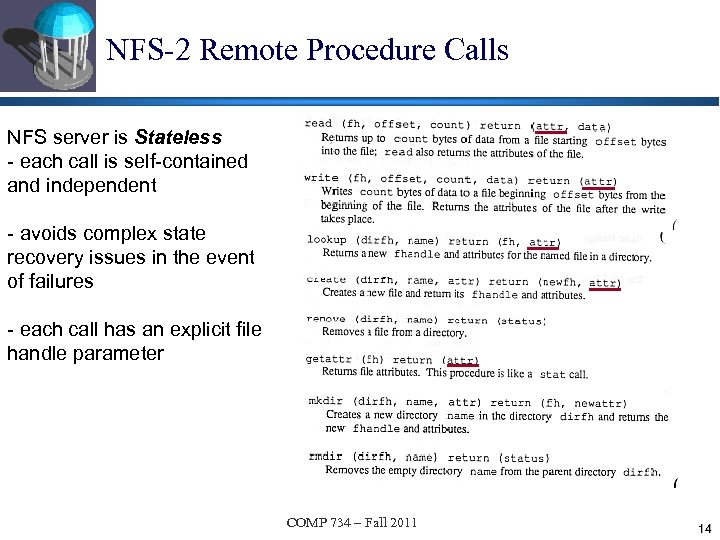

NFS-2 Remote Procedure Calls

NFS server is stateless:

- each call is self-contained and independent
- avoids complex state recovery issues in the event of failures
- each call has an explicit file handle parameter
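
A minimal sketch of what "stateless" means for a read call, assuming a hypothetical in-memory file table (`FILES`) and a simplified `nfs_read` signature; the real NFS-2 read RPC carries more arguments and attributes than shown here.

```python
# Hypothetical in-memory "disk": file handle -> file contents (assumption).
FILES = {b"fh-1": b"hello, distributed world"}


def nfs_read(file_handle: bytes, offset: int, count: int) -> bytes:
    """Serve a read using only the arguments of this call.

    The server keeps no open-file table and no per-client cursor, so the
    client must name the file (handle) and the position (offset) each time.
    """
    data = FILES[file_handle]
    return data[offset:offset + count]


first = nfs_read(b"fh-1", 7, 11)
# A retransmitted (duplicate) request after a timeout gives the same answer,
# because nothing on the server changed as a side effect of the first call.
retry = nfs_read(b"fh-1", 7, 11)
```

Because every call is self-contained, a server that crashes and restarts can resume serving requests without rebuilding any per-client state.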

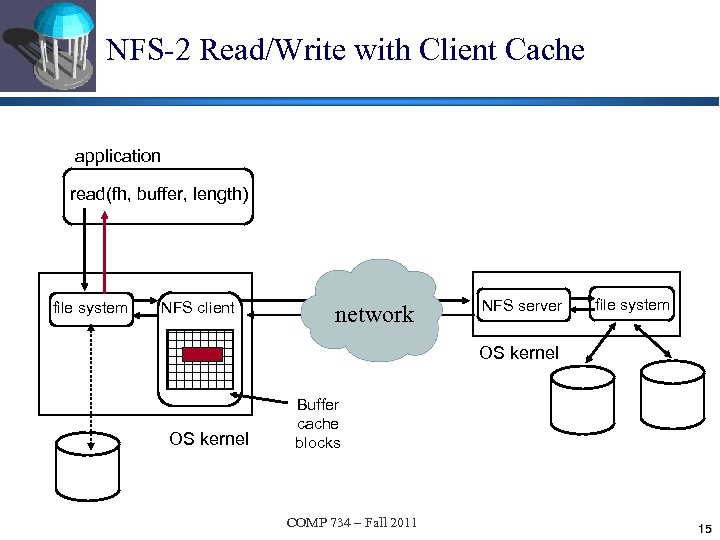

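NFS-2 Read/Write with Client Cache

[Diagram: the application calls read(fh, buffer, length); inside the client OS kernel the call passes through the file system to the NFS client, which keeps blocks in the buffer cache and talks over the network to the NFS server and its file system.]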

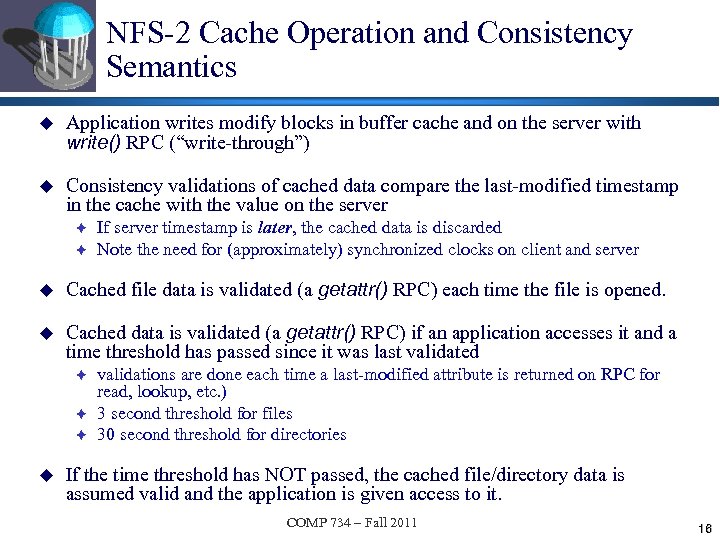

NFS-2 Cache Operation and Consistency Semantics

- Application writes modify blocks in buffer cache and on the server with write() RPC (“write-through”)
- Consistency validations of cached data compare the last-modified timestamp in the cache with the value on the server
  - If server timestamp is later, the cached data is discarded
  - Note the need for (approximately) synchronized clocks on client and server
- Cached file data is validated (a getattr() RPC) each time the file is opened.
- Cached data is validated (a getattr() RPC) if an application accesses it and a time threshold has passed since it was last validated
  - Validations are done each time a last-modified attribute is returned on RPC (for read, lookup, etc.)
  - 3 second threshold for files
  - 30 second threshold for directories
- If the time threshold has NOT passed, the cached file/directory data is assumed valid and the application is given access to it.
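
The threshold-based validation above can be sketched as follows. This is an illustration only: the `CacheEntry` and `Server` classes and the explicit `now` argument are assumptions, and only the 3 second and 30 second thresholds and the timestamp comparison come from the slide.

```python
from dataclasses import dataclass

FILE_THRESHOLD = 3.0   # seconds, from the slide
DIR_THRESHOLD = 30.0   # seconds, from the slide


@dataclass
class CacheEntry:
    data: bytes
    mtime: float          # last-modified timestamp last seen from the server
    validated_at: float   # client time of the last validation
    is_dir: bool = False


class Server:
    """Hypothetical server holding one object; counts getattr() calls."""

    def __init__(self, data: bytes, mtime: float):
        self.data = data
        self.mtime = mtime
        self.getattr_calls = 0

    def getattr(self) -> float:
        self.getattr_calls += 1
        return self.mtime

    def read(self) -> bytes:
        return self.data


def cached_read(entry: CacheEntry, server: Server, now: float) -> bytes:
    """Return cached data, validating it only if the threshold has passed."""
    threshold = DIR_THRESHOLD if entry.is_dir else FILE_THRESHOLD
    if now - entry.validated_at >= threshold:
        server_mtime = server.getattr()
        if server_mtime > entry.mtime:      # server copy is newer: discard
            entry.data = server.read()
            entry.mtime = server_mtime
        entry.validated_at = now
    return entry.data
```

Within the threshold window a client can return stale data without contacting the server, which is why these semantics only approximate local Unix sharing semantics.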

Andrew Design Goals

- Transparent file naming in single name space
- Scalable -- O(1000s)
- Performance approximating local files
- Easy administration and operation
- “Flexible” protections (directory scope)
- Clear (non-Unix) consistency semantics
- Security (authentication)

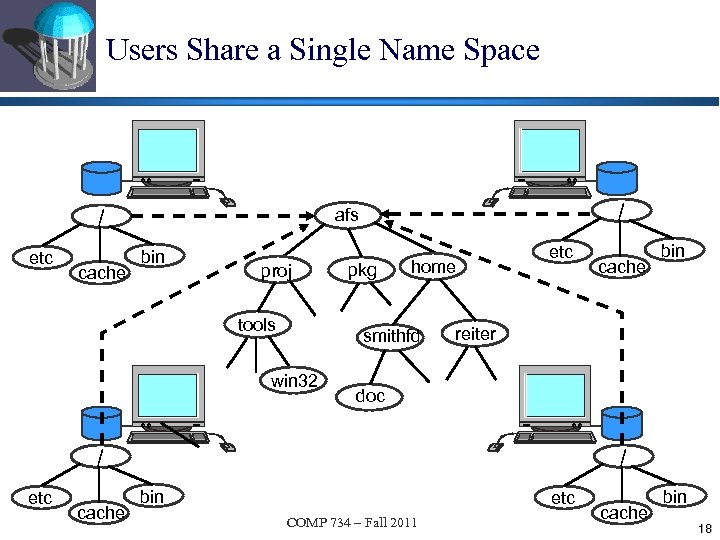

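Users Share a Single Name Space

[Diagram: several client machines, each with its own local root containing etc, bin, and cache, all attach the same shared /afs tree. The shared tree shows bin, proj, tools, pkg, win32, etc, and home, with the user directories smithfd and reiter and a doc directory.]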

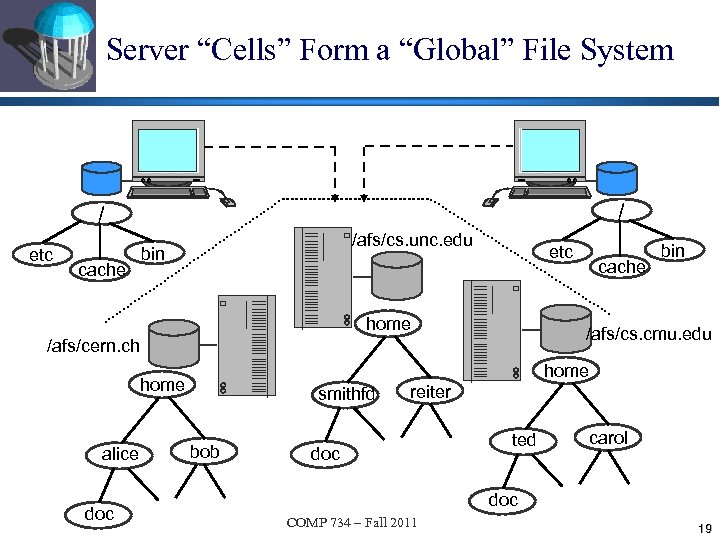

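Server “Cells” Form a “Global” File System

[Diagram: client machines with local roots (etc, bin, cache) see the cells /afs/cs.unc.edu, /afs/cs.cmu.edu, and /afs/cern.ch under one name space. Each cell has its own home directories; the users shown are smithfd, reiter, alice, bob, ted, and carol, with doc directories.]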

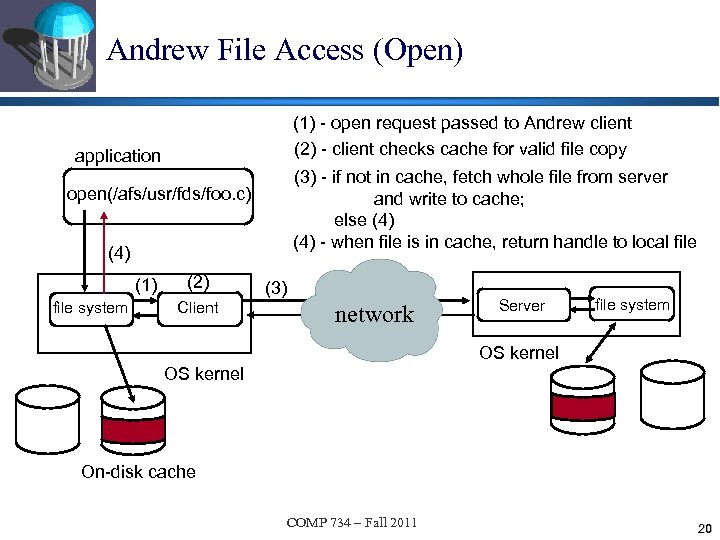

Andrew File Access (Open)

[Diagram: the application calls open(/afs/usr/fds/foo.c); the call passes through the file system in the OS kernel to the Andrew client, which uses an on-disk cache and reaches the server and its file system over the network. The numbers (1) to (4) mark the steps below.]

- (1) open request passed to Andrew client
- (2) client checks cache for valid file copy
- (3) if not in cache, fetch whole file from server and write to cache; else
- (4) when file is in cache, return handle to local file

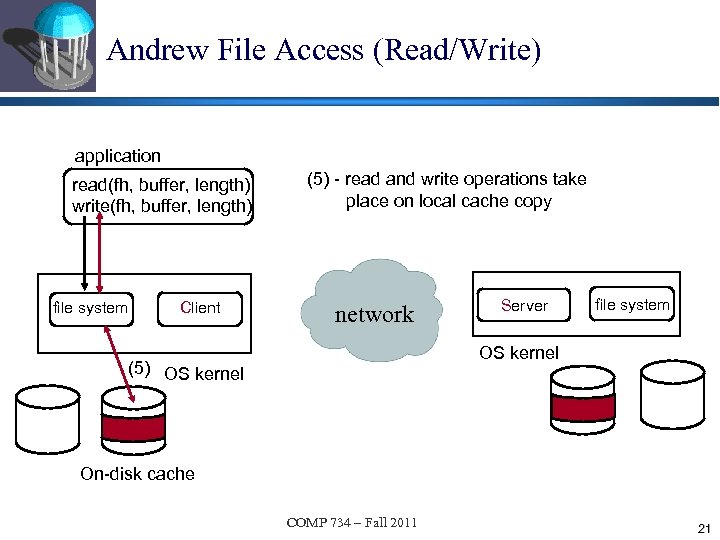

Andrew File Access (Read/Write)

[Diagram: the application calls read(fh, buffer, length) and write(fh, buffer, length); the file system in the client OS kernel serves them from the on-disk cache. The client, network, and server are not involved in step (5).]

- (5) read and write operations take place on local cache copy

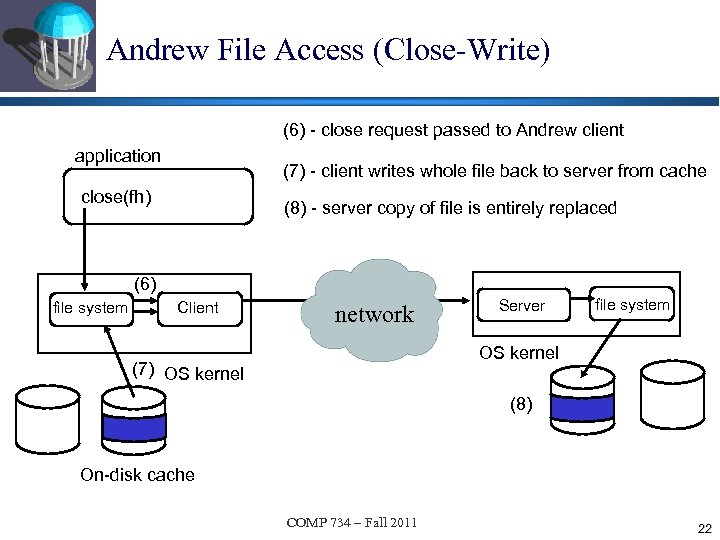

Andrew File Access (Close-Write)

[Diagram: the application calls close(fh); the call passes through the file system in the OS kernel to the Andrew client, which reads the on-disk cache and sends the file over the network to the server and its file system. The numbers (6) to (8) mark the steps below.]

- (6) close request passed to Andrew client
- (7) client writes whole file back to server from cache
- (8) server copy of file is entirely replaced
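
Steps (1) through (8) of the open, read/write, and close slides can be sketched as a whole-file cache. The class and method names (`AndrewServer`, `AndrewClient`, `fetch`, `store`) are hypothetical, the "on-disk" cache is an in-memory dictionary, and cache validity checking is left out of this sketch.

```python
class AndrewServer:
    """Hypothetical server: stores whole files and counts fetches."""

    def __init__(self):
        self.files = {}
        self.fetches = 0

    def fetch(self, path: str) -> bytes:
        self.fetches += 1
        return self.files[path]

    def store(self, path: str, data: bytes) -> None:
        self.files[path] = data          # (8) server copy entirely replaced


class AndrewClient:
    def __init__(self, server: AndrewServer):
        self.server = server
        self.cache = {}                  # stand-in for the on-disk cache
        self.dirty = set()

    def open(self, path: str) -> str:
        if path not in self.cache:       # (2) check cache, (3) fetch whole file
            self.cache[path] = bytearray(self.server.fetch(path))
        return path                      # (4) handle to the local copy

    def read(self, fh: str, offset: int, length: int) -> bytes:
        return bytes(self.cache[fh][offset:offset + length])   # (5) local

    def write(self, fh: str, offset: int, data: bytes) -> None:
        self.cache[fh][offset:offset + len(data)] = data       # (5) local
        self.dirty.add(fh)

    def close(self, fh: str) -> None:
        if fh in self.dirty:             # (7) write whole file back
            self.server.store(fh, bytes(self.cache[fh]))
            self.dirty.discard(fh)
```

Note that the server sees nothing between open and close: other clients observe a write only after the writer closes the file.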

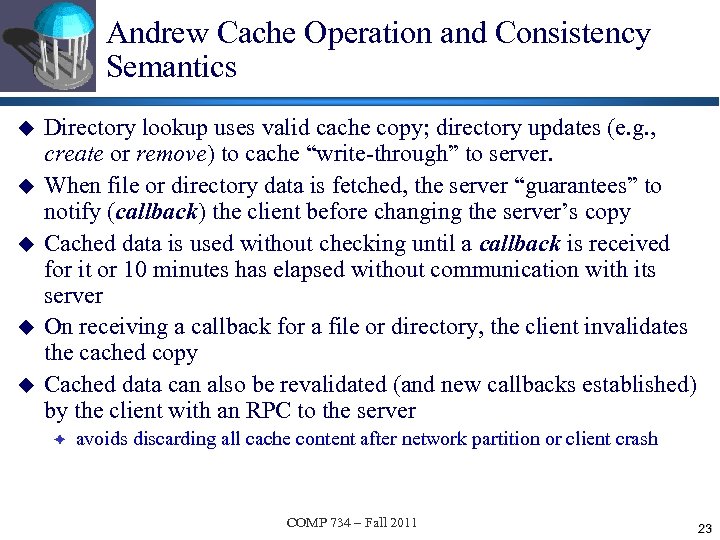

Andrew Cache Operation and Consistency Semantics

- Directory lookup uses valid cache copy; directory updates (e.g., create or remove) to cache “write-through” to server.
- When file or directory data is fetched, the server “guarantees” to notify (callback) the client before changing the server’s copy
- Cached data is used without checking until a callback is received for it or 10 minutes have elapsed without communication with its server
- On receiving a callback for a file or directory, the client invalidates the cached copy
- Cached data can also be revalidated (and new callbacks established) by the client with an RPC to the server
  - Avoids discarding all cache content after network partition or client crash
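
A minimal sketch of the callback mechanism described above, with hypothetical class names. The 10 minute timeout and revalidation RPC are omitted, and the choice to leave the writer holding the only callback after a store is an assumption of this sketch, not something the slide states.

```python
class CallbackServer:
    def __init__(self):
        self.files = {}
        self.callbacks = {}   # path -> set of clients promised a callback

    def fetch(self, client, path):
        # Fetching data registers a callback promise for this client.
        self.callbacks.setdefault(path, set()).add(client)
        return self.files[path]

    def store(self, writer, path, data):
        # Notify every other caching client BEFORE changing the server copy.
        for client in self.callbacks.get(path, set()) - {writer}:
            client.break_callback(path)
        self.callbacks[path] = {writer}   # assumption: writer keeps a callback
        self.files[path] = data


class CachingClient:
    def __init__(self, server):
        self.server = server
        self.cache = {}

    def read(self, path):
        # Cached data is used without checking until a callback arrives.
        if path not in self.cache:
            self.cache[path] = self.server.fetch(self, path)
        return self.cache[path]

    def write(self, path, data):
        self.cache[path] = data
        self.server.store(self, path, data)

    def break_callback(self, path):
        self.cache.pop(path, None)        # invalidate the cached copy
```

Compared with periodic getattr() validation, the server does work only when a cached object actually changes, which is consistent with the slower growth in server load reported for Andrew.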

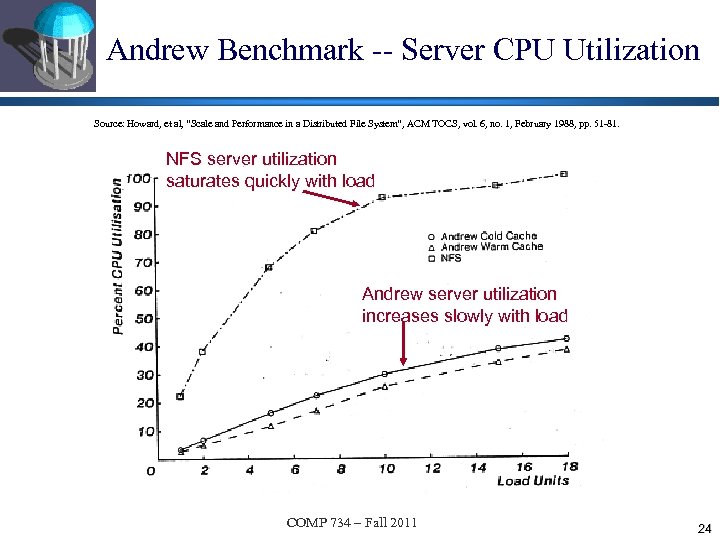

Andrew Benchmark -- Server CPU Utilization

- NFS server utilization saturates quickly with load
- Andrew server utilization increases slowly with load

Source: Howard, et al., “Scale and Performance in a Distributed File System,” ACM TOCS, vol. 6, no. 1, February 1988, pp. 51-81.

Google is Really Different…

- Huge datacenters in 30+ worldwide locations, each > football field
- Datacenters house multiple server clusters
- Even nearby in Lenoir, NC

[Photos: The Dalles, OR (2006), with 4-story cooling towers; further photos labeled 2007 and 2008.]

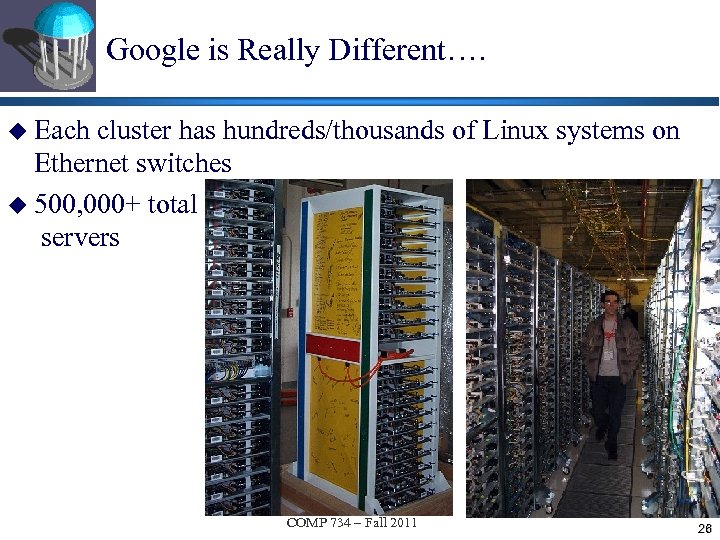

Google is Really Different…

- Each cluster has hundreds/thousands of Linux systems on Ethernet switches
- 500,000+ total servers

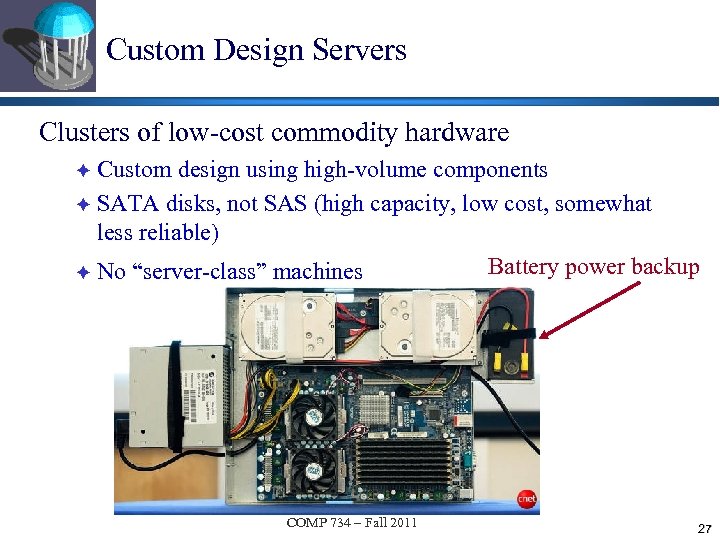

Custom Design Servers

- Clusters of low-cost commodity hardware
- Custom design using high-volume components
- SATA disks, not SAS (high capacity, low cost, somewhat less reliable)
- Battery power backup
- No “server-class” machines

Facebook Enters the Custom Server Race (April 7, 2011)

Announces the Open Compute Project (the Green Data Center)

Google File System Design Goals

- Familiar operations but NOT Unix/POSIX standard
  - Specialized operation for Google applications: record_append()
- Scalable -- O(1000s) of clients per cluster
- Performance optimized for throughput
  - No client caches (big files, little cache locality)
- Highly available and fault tolerant
- Relaxed file consistency semantics
  - Applications written to deal with consistency issues

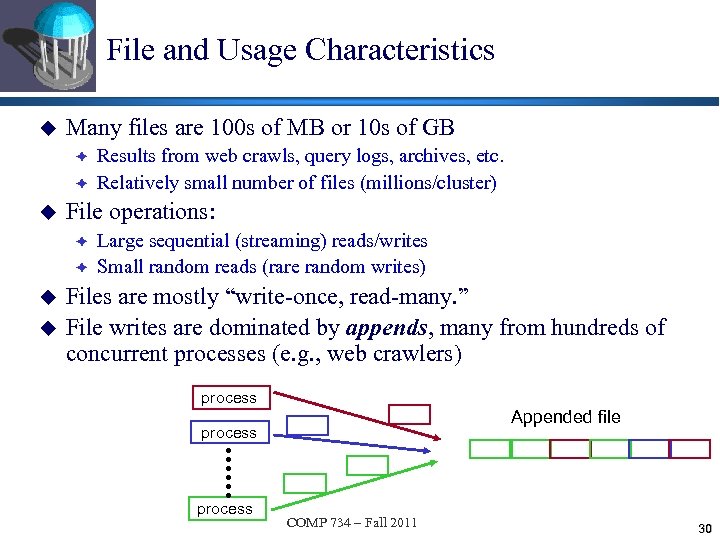

File and Usage Characteristics

- Many files are 100s of MB or 10s of GB
  - Results from web crawls, query logs, archives, etc.
  - Relatively small number of files (millions/cluster)
- File operations:
  - Large sequential (streaming) reads/writes
  - Small random reads (rare random writes)
- Files are mostly “write-once, read-many.”
- File writes are dominated by appends, many from hundreds of concurrent processes (e.g., web crawlers)

[Diagram: multiple processes appending to a single file.]

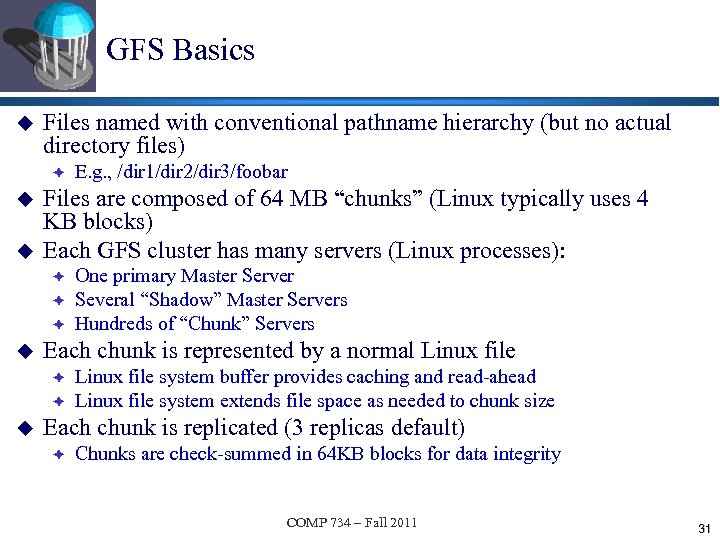

GFS Basics

- Files named with conventional pathname hierarchy (but no actual directory files)
  - E.g., /dir1/dir2/dir3/foobar
- Files are composed of 64 MB “chunks” (Linux typically uses 4 KB blocks)
- Each GFS cluster has many servers (Linux processes):
  - One primary Master Server
  - Several “Shadow” Master Servers
  - Hundreds of “Chunk” Servers
- Each chunk is represented by a normal Linux file
  - Linux file system buffer provides caching and read-ahead
  - Linux file system extends file space as needed to chunk size
- Each chunk is replicated (3 replicas default)
  - Chunks are check-summed in 64 KB blocks for data integrity
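
The chunk and checksum sizes above imply some simple arithmetic, sketched here. The function names are hypothetical, and the use of CRC-32 via `zlib.crc32` is an assumption; the slide says only that chunks are check-summed in 64 KB blocks.

```python
import zlib

CHUNK_SIZE = 64 * 1024 * 1024   # 64 MB chunks, from the slide
BLOCK_SIZE = 64 * 1024          # 64 KB checksum blocks, from the slide


def locate(file_offset: int) -> tuple[int, int]:
    """Map a byte offset in a file to (chunk index, offset within chunk)."""
    return divmod(file_offset, CHUNK_SIZE)


def block_checksums(chunk_data: bytes) -> list[int]:
    """One checksum per 64 KB block of a chunk (CRC-32 is an assumption)."""
    return [
        zlib.crc32(chunk_data[i:i + BLOCK_SIZE])
        for i in range(0, len(chunk_data), BLOCK_SIZE)
    ]


def verify(chunk_data: bytes, checksums: list[int]) -> bool:
    """Detect corruption by recomputing and comparing block checksums."""
    return block_checksums(chunk_data) == checksums
```

A full 64 MB chunk has 1024 checksum blocks, so a corrupted block can be detected without rereading or recomputing over the whole chunk.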

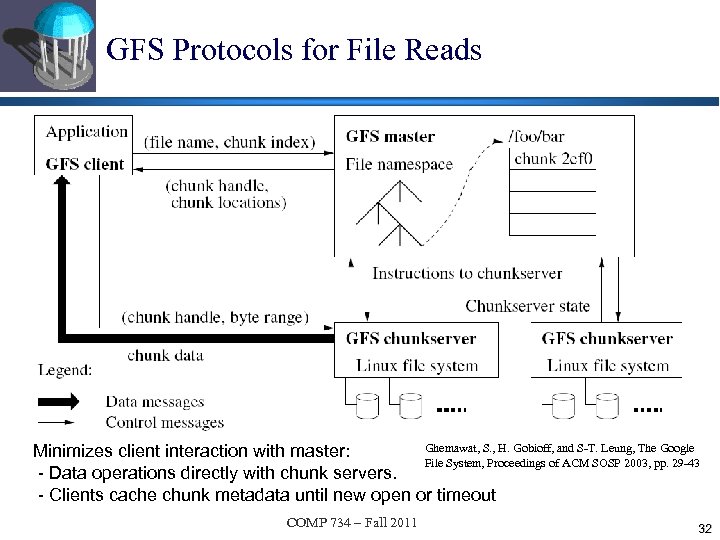

GFS Protocols for File Reads

Minimizes client interaction with master:

- Data operations directly with chunk servers.
- Clients cache chunk metadata until new open or timeout

Source: Ghemawat, S., H. Gobioff, and S-T. Leung, The Google File System, Proceedings of ACM SOSP 2003, pp. 29-43
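
A sketch of the read path under these two rules, using hypothetical `Master`, `ChunkServer`, and `Client` classes. It assumes a read stays within one chunk and omits the metadata timeout; the lookup table layout is invented for illustration.

```python
CHUNK_SIZE = 64 * 1024 * 1024


class Master:
    """Hypothetical master: maps (path, chunk index) to handle and replicas."""

    def __init__(self, table):
        self.table = table
        self.lookups = 0

    def lookup(self, path, chunk_index):
        self.lookups += 1
        return self.table[(path, chunk_index)]


class ChunkServer:
    def __init__(self, chunks):
        self.chunks = chunks          # chunk handle -> bytes

    def read(self, handle, offset, length):
        return self.chunks[handle][offset:offset + length]


class Client:
    def __init__(self, master, servers):
        self.master = master
        self.servers = servers        # server name -> ChunkServer
        self.metadata = {}            # cached chunk metadata

    def read(self, path, offset, length):
        key = (path, offset // CHUNK_SIZE)
        if key not in self.metadata:              # contact master only once
            self.metadata[key] = self.master.lookup(*key)
        handle, replicas = self.metadata[key]
        server = self.servers[replicas[0]]        # data comes from a chunk server
        return server.read(handle, offset % CHUNK_SIZE, length)
```

The master handles only small metadata requests, so file data never flows through it.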

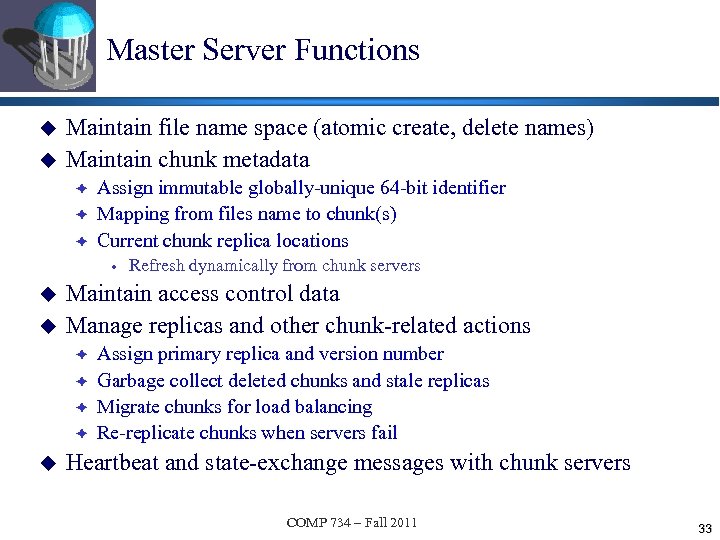

Master Server Functions

- Maintain file name space (atomic create, delete names)
- Maintain chunk metadata
  - Assign immutable globally-unique 64-bit identifier
  - Mapping from file names to chunk(s)
  - Current chunk replica locations
    - Refresh dynamically from chunk servers
- Maintain access control data
- Manage replicas and other chunk-related actions
  - Assign primary replica and version number
  - Garbage collect deleted chunks and stale replicas
  - Migrate chunks for load balancing
  - Re-replicate chunks when servers fail
- Heartbeat and state-exchange messages with chunk servers

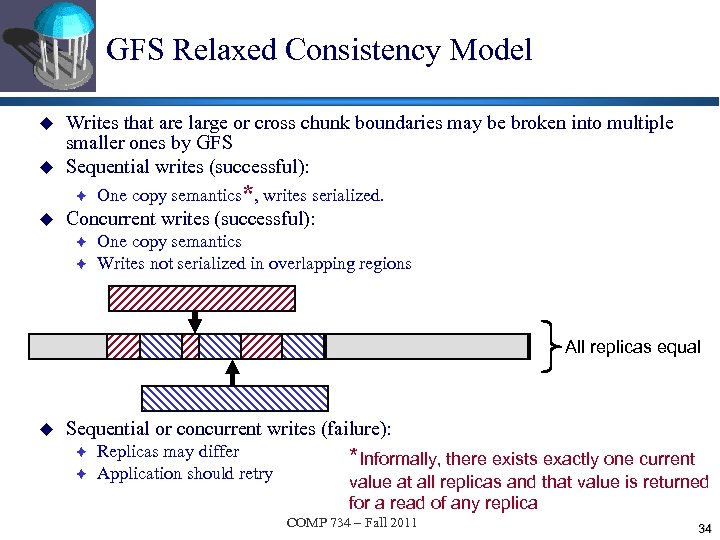

GFS Relaxed Consistency Model

- Writes that are large or cross chunk boundaries may be broken into multiple smaller ones by GFS
- Sequential writes (successful):
  - One copy semantics*, writes serialized.
- Concurrent writes (successful):
  - One copy semantics
  - Writes not serialized in overlapping regions
  - All replicas equal
- Sequential or concurrent writes (failure):
  - Replicas may differ
  - Application should retry

*Informally, there exists exactly one current value at all replicas and that value is returned for a read of any replica

GFS Applications Deal with Relaxed Consistency

- Writes
  - Retry in case of failure at any replica
  - Regular checkpoints after successful sequences
  - Include application-generated record identifiers and checksums
- Reads
  - Use checksum validation and record identifiers to discard padding and duplicates.
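
One way an application might frame records so that a reader can discard padding and duplicates is sketched below. The record layout (a magic marker, a 64-bit record identifier, a length, and a CRC-32) is an assumption made for this example and is not a format defined by GFS.

```python
import struct
import zlib

MAGIC = b"\xabREC"                    # hypothetical record marker
HEADER = struct.Struct(">4sQII")      # magic, record id, payload length, crc32


def encode_record(record_id: int, payload: bytes) -> bytes:
    """Writer side: attach an identifier and checksum to each record."""
    header = HEADER.pack(MAGIC, record_id, len(payload), zlib.crc32(payload))
    return header + payload


def read_records(data: bytes) -> list[bytes]:
    """Reader side: skip padding/garbage and drop duplicate record ids."""
    seen = set()
    records = []
    pos = 0
    while pos + HEADER.size <= len(data):
        magic, record_id, length, crc = HEADER.unpack_from(data, pos)
        start = pos + HEADER.size
        payload = data[start:start + length]
        valid = (magic == MAGIC and len(payload) == length
                 and zlib.crc32(payload) == crc)
        if not valid:
            pos += 1                  # padding or damage: resynchronize
            continue
        if record_id not in seen:     # duplicates come from writer retries
            seen.add(record_id)
            records.append(payload)
        pos = start + length
    return records
```

For example, a region holding a record, some zero padding, a retried copy of the same record, and a second record yields just the two distinct payloads.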

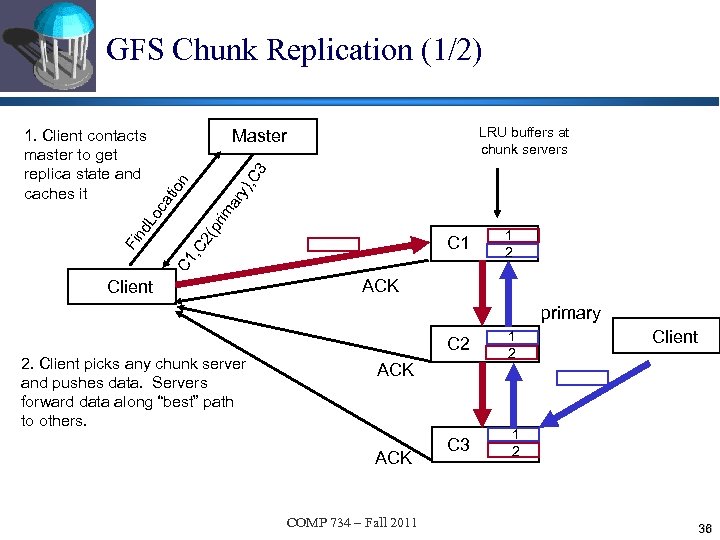

GFS Chunk Replication (1/2)

[Diagram: the client asks the master to find the chunk location and receives the replica list C1, C2 (primary), C3. The client then pushes data items 1 and 2 to the chunk servers, which hold them in LRU buffers and return ACKs.]

1. Client contacts master to get replica state and caches it
2. Client picks any chunk server and pushes data. Servers forward data along “best” path to others.

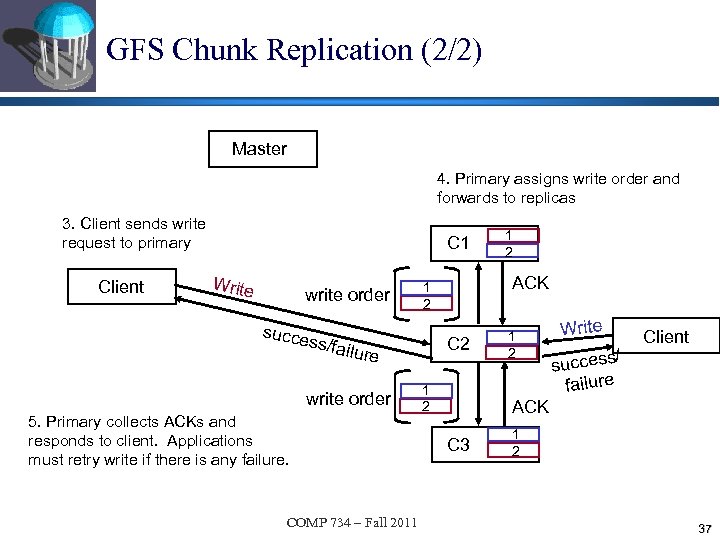

GFS Chunk Replication (2/2)

[Diagram: the client sends a write to the primary (C2); the primary sends the write order to C1 and C3, which apply data items 1 and 2 and return ACKs; the primary reports success/failure to the client.]

3. Client sends write request to primary
4. Primary assigns write order and forwards to replicas
5. Primary collects ACKs and responds to client. Applications must retry write if there is any failure.
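
Steps 2 through 5 of the two replication slides can be sketched as below. The classes, the serial-number scheme, and the injected failure (`fail_on`) are assumptions for illustration, and data forwarding between servers is simplified to the client pushing to every replica.

```python
class Replica:
    def __init__(self, fail_on=()):
        self.buffer = {}                 # pushed data awaiting a write order
        self.chunk = bytearray(16)       # tiny chunk for illustration
        self.fail_on = set(fail_on)      # serial numbers that fail (test hook)
        self.applied = []

    def push(self, data_id, data):       # step 2: data is buffered first
        self.buffer[data_id] = data

    def apply(self, serial, data_id, offset):
        if serial in self.fail_on:
            return False                 # no ACK for this write
        data = self.buffer.pop(data_id)
        self.chunk[offset:offset + len(data)] = data
        self.applied.append(serial)
        return True


class Primary(Replica):
    def __init__(self, secondaries):
        super().__init__()
        self.secondaries = secondaries
        self.next_serial = 0

    def write(self, data_id, offset):    # steps 3 to 5
        serial = self.next_serial        # step 4: primary assigns the order
        self.next_serial += 1
        ok = self.apply(serial, data_id, offset)
        acks = [s.apply(serial, data_id, offset) for s in self.secondaries]
        return ok and all(acks)          # step 5: success only if all ACK


def client_write(primary, replicas, data_id, data, offset, retries=3):
    for _ in range(retries):
        for replica in replicas:
            replica.push(data_id, data)
        if primary.write(data_id, offset):
            return True
    return False                         # caller must handle the failure
```

After a failed attempt the replicas may differ until the retry succeeds, which is the window the relaxed consistency model allows.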

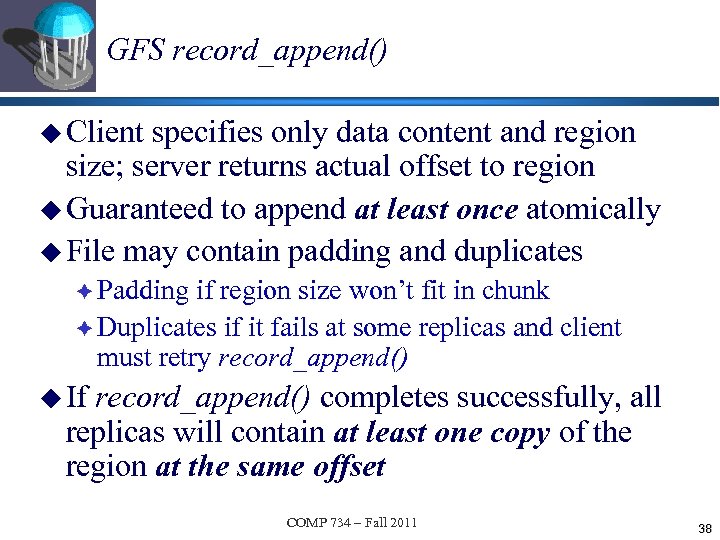

GFS record_append()

- Client specifies only data content and region size; server returns actual offset to region
- Guaranteed to append at least once atomically
- File may contain padding and duplicates
  - Padding if region size won’t fit in chunk
  - Duplicates if it fails at some replicas and client must retry record_append()
- If record_append() completes successfully, all replicas will contain at least one copy of the region at the same offset

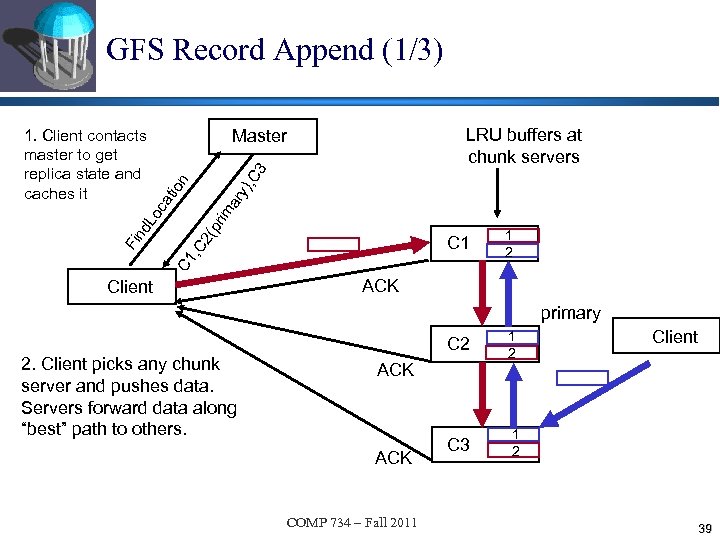

GFS Record Append (1/3)

[Diagram: the client asks the master to find the chunk location and receives the replica list C1, C2 (primary), C3. The client then pushes data items 1 and 2 to the chunk servers, which hold them in LRU buffers and return ACKs.]

1. Client contacts master to get replica state and caches it
2. Client picks any chunk server and pushes data. Servers forward data along “best” path to others.

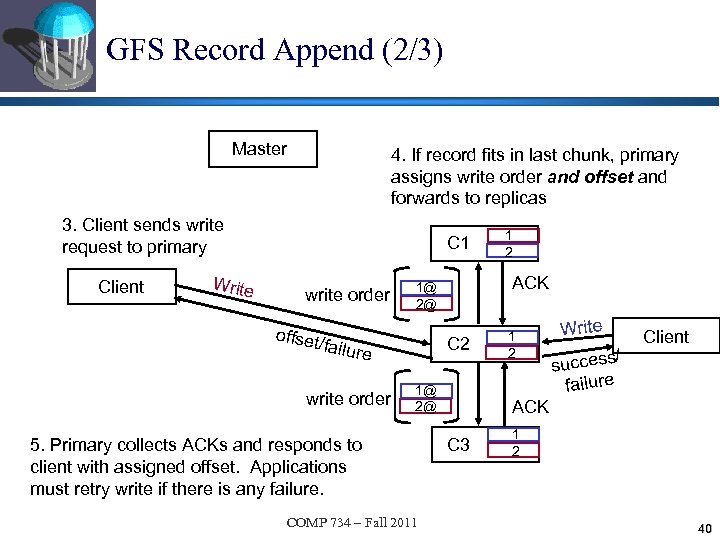

GFS Record Append (2/3)

[Diagram: the client sends a write to the primary (C2); the primary sends the write order and offset (1@, 2@) to C1 and C3, which apply data items 1 and 2 and return ACKs; the primary reports success/failure to the client.]

3. Client sends write request to primary
4. If record fits in last chunk, primary assigns write order and offset and forwards to replicas
5. Primary collects ACKs and responds to client with assigned offset. Applications must retry write if there is any failure.

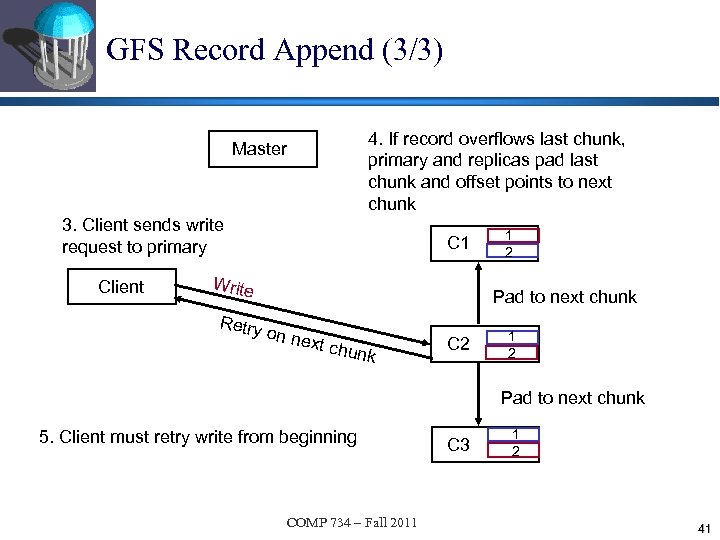

GFS Record Append (3/3)

[Diagram: the client sends a write to the primary (C2); C1, C2, and C3 each pad to the next chunk, and the client is told to retry on the next chunk.]

3. Client sends write request to primary
4. If record overflows last chunk, primary and replicas pad last chunk and offset points to next chunk
5. Client must retry write from beginning
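
The fit-or-pad decision in the record append slides can be sketched with a single replica. The `AppendFile` class, the tiny 16-byte chunk size, and the `None` return value used to signal "retry" are assumptions for illustration; replication and failure handling are omitted.

```python
CHUNK_SIZE = 16   # tiny for illustration; the slides give 64 MB


class AppendFile:
    def __init__(self):
        self.chunks = [bytearray()]

    def record_append(self, record: bytes):
        """Return (chunk index, offset) chosen by the server, or None if the
        last chunk was padded and the client must retry on the next chunk."""
        if len(record) > CHUNK_SIZE:
            raise ValueError("record larger than a chunk")
        last = self.chunks[-1]
        if len(last) + len(record) > CHUNK_SIZE:
            # Record overflows the last chunk: pad it and start a new one.
            last.extend(b"\0" * (CHUNK_SIZE - len(last)))
            self.chunks.append(bytearray())
            return None
        offset = len(last)            # the server, not the client, picks this
        last.extend(record)
        return (len(self.chunks) - 1, offset)


def append_with_retry(f: AppendFile, record: bytes):
    """Client side: retry from the beginning until the append lands."""
    while True:
        result = f.record_append(record)
        if result is not None:
            return result
```

Because the server chooses the offset, concurrent appenders never need to coordinate with each other; the cost is the padding that readers must later skip.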
