da162757b61b912360739a0ac76d0b2d.ppt

- Number of slides: 1

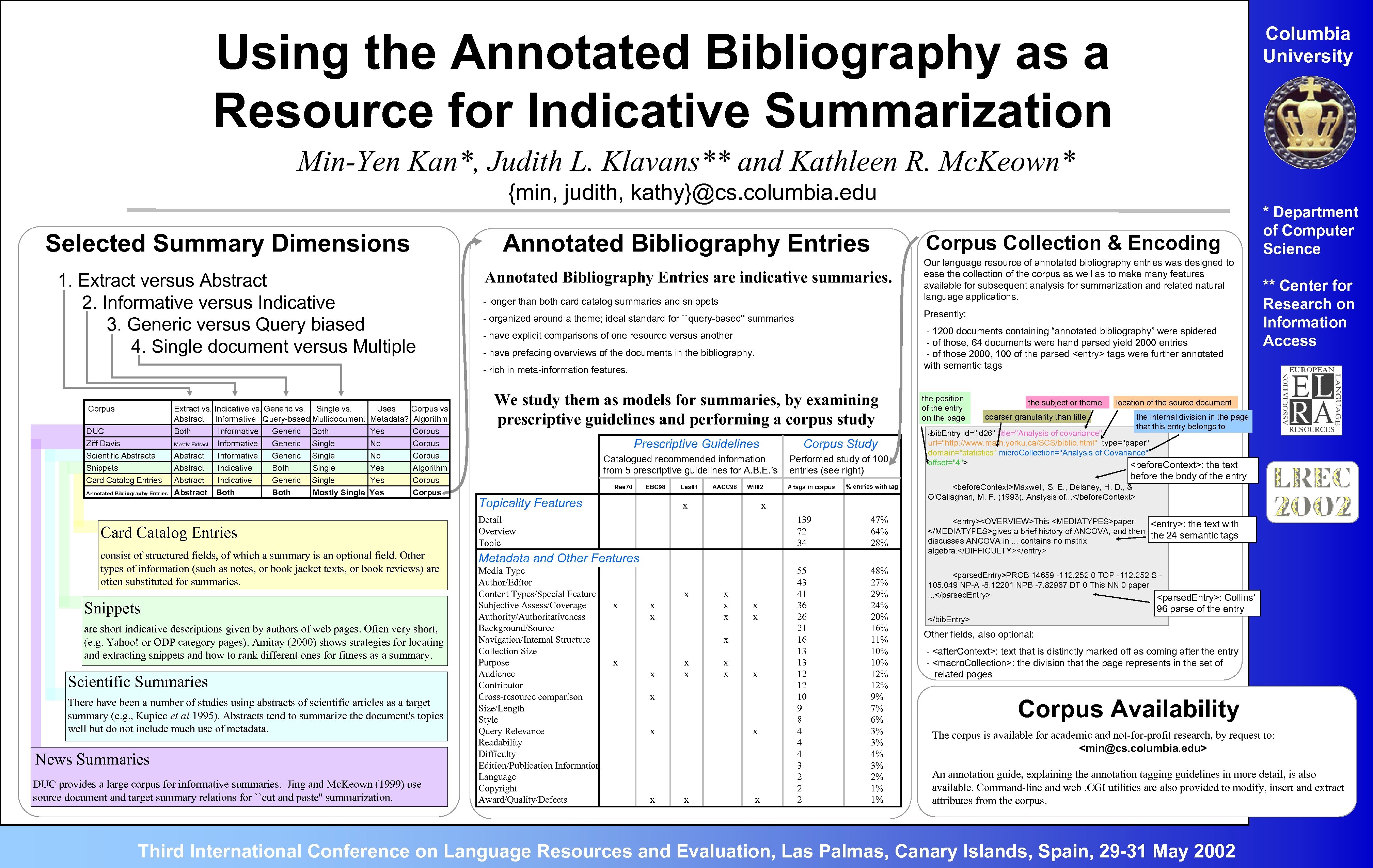

Columbia University

Using the Annotated Bibliography as a Resource for Indicative Summarization

Min-Yen Kan*, Judith L. Klavans** and Kathleen R. McKeown*
{min, judith, kathy}@cs.columbia.edu
* Department of Computer Science
** Center for Research on Information Access

Selected Summary Dimensions
1. Extract versus Abstract
2. Informative versus Indicative
3. Generic versus Query-biased
4. Single document versus Multiple documents

Annotated Bibliography Entries
Annotated bibliography entries are indicative summaries. They:
- are longer than both card catalog summaries and snippets;
- are organized around a theme, which makes them an ideal standard for "query-based" summaries;
- have explicit comparisons of one resource versus another;
- have prefacing overviews of the documents in the bibliography;
- are rich in meta-information features.

We study them as models for summaries by examining prescriptive guidelines and performing a corpus study.

Comparison of summary corpora
Corpora: DUC, Ziff Davis, Scientific Abstracts, Snippets, Card Catalog Entries, Annotated Bibliography Entries
Dimensions: Extract vs. Abstract; Indicative vs. Informative; Generic vs. Query-based; Single vs. Multidocument
Values: Both, Informative, Generic, Both, Mostly Extract, Informative, Generic, Single, Abstract, Indicative, Both, Single, Abstract, Indicative, Generic, Single, Abstract, Both, Mostly Single
Uses Metadata?: Yes, No, No, Yes, Yes
Corpus vs. Algorithm: Corpus, Algorithm, Corpus

Card Catalog Entries consist of structured fields, of which the summary is an optional one. Other types of information (such as notes, book jacket texts, or book reviews) are often substituted for summaries.

Snippets are short indicative descriptions given by the authors of web pages, and are often very short (e.g., on Yahoo! or ODP category pages). Amitay (2000) shows strategies for locating and extracting snippets and for ranking candidate snippets by their fitness as a summary.

Scientific Summaries: A number of studies have used abstracts of scientific articles as target summaries (e.g., Kupiec et al. 1995). Abstracts tend to summarize a document's topics well but make little use of metadata.

News Summaries: DUC provides a large corpus of informative summaries. Jing and McKeown (1999) use the relations between source documents and target summaries for "cut and paste" summarization.

Prescriptive Guidelines
We catalogued the information recommended by 5 prescriptive guidelines for A.B.E.'s: Ree 70, EBC 98, Les 01, AACC 98 and Wil 02.

Corpus Study
We performed a study of 100 entries, annotated with 24 semantic tags; tag frequencies are listed below.

Topicality features:

| Tag | # tags in corpus | % entries with tag |
|---|---|---|
| Detail | 139 | 47% |
| Overview | 72 | 64% |
| Topic | 34 | 28% |

Metadata and other features:

| Tag | # tags in corpus |
|---|---|
| Media Type | 55 |
| Author/Editor | 43 |
| Content Types/Special Feature | 41 |
| Subjective Assess/Coverage | 36 |
| Authority/Authoritativeness | 26 |
| Background/Source | 21 |
| Navigation/Internal Structure | 16 |
| Collection Size | 13 |
| Purpose | 13 |
| Audience | 12 |
| Contributor | 12 |
| Cross-resource comparison | 10 |
| Size/Length | 9 |
| Style | 8 |
| Query Relevance | 4 |
| Readability | 4 |
| Difficulty | 4 |
| Edition/Publication Information | 3 |
| Language | 2 |
| Copyright | 2 |
| Award/Quality/Defects | 2 |

% entries with tag for the metadata and other features: 48%, 27%, 29%, 24%, 20%, 16%, 11%, 10%, 12%, 9%, 7%, 6%, 3%, 3%, 4%, 3%, 2%, 1%, 1%

Corpus Collection & Encoding
Our language resource of annotated bibliography entries was designed to ease collection of the corpus and to make many features available for subsequent analysis in summarization and related natural language applications. Presently:
- 1200 documents containing "annotated bibliography" were spidered;
- of those, 64 documents were hand-parsed to yield 2000 entries;
- of those 2000 entries, 100 of the parsed `<entry>` elements were further annotated with semantic tags.

Example entry:
`<bibEntry id="id 26" title="Analysis of covariance" url="http://www.math.yorku.ca/SCS/biblio.html" type="paper" domain="statistics" microCollection="Analysis of Covariance" offset="4">`
`<beforeContext>Maxwell, S. E., Delaney, H. D., & O'Callaghan, M. F. (1993). Analysis of...</beforeContext>`
`<entry><OVERVIEW>This <MEDIATYPES>paper</MEDIATYPES> gives a brief history of ANCOVA, and then discusses ANCOVA in... contains no matrix algebra.</DIFFICULTY></entry>`
`<parsedEntry>PROB 14659 -112.252 0 TOP -112.252 S 105.049 NP-A -8.12201 NPB -7.82967 DT 0 This NN 0 paper...</parsedEntry>`
`</bibEntry>`

Fields:
- `title`: the subject or theme
- `url`: location of the source document
- `domain`: coarser granularity than `title`
- `microCollection`: the internal division in the page that this entry belongs to
- `offset`: the position of the entry on the page
- `<beforeContext>`: the text before the body of the entry
- `<entry>`: the text with the 24 semantic tags
- `<parsedEntry>`: Collins' 96 parse of the entry

Other fields, also optional:
- `<afterContext>`: text that is distinctly marked off as coming after the entry
- `<macroCollection>`: the division that the page represents in the set of related pages

Corpus Availability
The corpus is available for academic and not-for-profit research by request to <min@cs.columbia.edu>. An annotation guide explaining the tagging guidelines in more detail is also available. Command-line and web CGI utilities are provided to modify, insert and extract attributes from the corpus.

Third International Conference on Language Resources and Evaluation, Las Palmas, Canary Islands, Spain, 29-31 May 2002
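The entry encoding on this poster uses XML-style markup, so, assuming the records are well-formed XML, a standard parser can read them. The following is a minimal Python sketch, not the command-line or web CGI utilities distributed with the corpus (their interfaces are not described here). It assumes the records are wrapped in a hypothetical `<corpus>` root element and uses invented sample records. For each entry it lists the semantic tags and then computes the two statistics reported in the corpus study, "# tags in corpus" and "% entries with tag".

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Made-up sample records for illustration only. The element and attribute names
# (bibEntry, beforeContext, entry, id, title, url, type, domain, microCollection,
# offset, OVERVIEW, MEDIATYPES, DIFFICULTY) follow the poster's example; the
# <corpus> wrapper element and every value below are hypothetical.
SAMPLE = """<corpus>
  <bibEntry id="e1" title="Regression" url="http://example.org/biblio.html"
            type="book" domain="statistics" microCollection="Textbooks" offset="1">
    <beforeContext>Author, A. (2000). Regression.</beforeContext>
    <entry><OVERVIEW>This <MEDIATYPES>book</MEDIATYPES> surveys regression.</OVERVIEW>
      <DIFFICULTY>Assumes basic calculus.</DIFFICULTY></entry>
  </bibEntry>
  <bibEntry id="e2" title="Regression" url="http://example.org/biblio.html"
            type="paper" domain="statistics" microCollection="Articles" offset="2">
    <entry><OVERVIEW>A short <MEDIATYPES>paper</MEDIATYPES> on outliers.</OVERVIEW></entry>
  </bibEntry>
</corpus>"""


def entry_tags(bib_entry):
    """Return the semantic tag names inside one bibEntry's <entry>, in document order."""
    entry = bib_entry.find("entry")
    if entry is None:
        return []
    # Element.iter() yields the <entry> element itself first, so skip it.
    return [el.tag for el in entry.iter() if el is not entry]


def tag_statistics(root):
    """Map each semantic tag to (# tags in corpus, % of bibEntry records using it)."""
    bib_entries = root.findall("bibEntry")
    if not bib_entries:
        return {}
    occurrences = Counter()   # every occurrence of a tag counts
    entries_with = Counter()  # at most one count per record
    for bib in bib_entries:
        tags = entry_tags(bib)
        occurrences.update(tags)
        entries_with.update(set(tags))
    n = len(bib_entries)
    return {tag: (occurrences[tag], 100.0 * entries_with[tag] / n)
            for tag in occurrences}


if __name__ == "__main__":
    root = ET.fromstring(SAMPLE)
    for bib in root.findall("bibEntry"):
        # Attributes such as domain and offset are plain strings in bib.attrib.
        print(bib.get("id"), bib.get("domain"), bib.get("offset"), entry_tags(bib))
    for tag, (count, pct) in sorted(tag_statistics(root).items()):
        print(f"{tag}: {count} tags, {pct:.0f}% of entries")
```

Counting every occurrence for the total but only one per record for the percentage is what lets a tag appear more often than the number of entries containing it, as with Detail in the corpus study (139 tags, 47% of entries).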
