Collecting Longitudinal Evaluation Data in a College Setting: Strategies for Managing Mountains of Data
Jennifer Ann Morrow, Ph.D.¹, Erin Mehalic Burr, M.S.¹, Marcia Cianfrani, B.S.², Susanne Kaesbauer², Margot E. Ackermann, Ph.D.³
¹University of Tennessee, ²Old Dominion University, ³Homeward

Overview of Presentation
• Description of Project Writing
• Research Team
• Evaluation Methodology
• Data Management
• What worked
• What did not work
• Suggestions for Evaluators

Description of Project Writing
• Goal: to reduce high-risk drinking and stress in first-year college students.
• 231 students were randomly assigned to one of three online interventions.
• Online interventions:
  • Expressive Writing
  • Behavioral Monitoring
  • Expressive Writing and Behavioral Monitoring
• Participants received payment and other incentives (e.g., gift certificates).
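
The slides do not say how the random assignment was carried out. As one possibility, here is a minimal Python sketch of simple balanced assignment, assuming hypothetical participant IDs and an arbitrary seed: the IDs are shuffled with a seeded generator and dealt round-robin across the three conditions named on the slide, so 231 students split into three groups of 77 and the assignment can be reproduced later from the seed.

```python
import random
from collections import Counter

# Condition labels come from the slide; the IDs and seed below are hypothetical.
CONDITIONS = [
    "Expressive Writing",
    "Behavioral Monitoring",
    "Expressive Writing and Behavioral Monitoring",
]


def assign_conditions(participant_ids, seed=None):
    """Randomly assign participants to conditions in near-equal groups.

    The IDs are shuffled with a seeded generator and then dealt out
    round-robin, so group sizes differ by at most one and the same seed
    always reproduces the same assignment.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    ids = [f"P{n:03d}" for n in range(1, 232)]  # 231 hypothetical participant IDs
    assignment = assign_conditions(ids, seed=42)
    print(Counter(assignment.values()))  # 77 per condition
```

Dealing round-robin after the shuffle guarantees equal group sizes, which plain independent random draws would not.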

Research Team
• Jennifer Ann Morrow (project PI).
• Robin Lewis (project co-PI).
• Margot Ackermann (evaluation team leader).
• Erin Burr (project manager; assisted with evaluation).
• Undergraduate assistants: Marcia Cianfrani, Susanne Kaesbauer, Nicholas Paulson.

Evaluation Methodology
• Comprehensive formative and summative evaluation.
• Online data collection method:
  • Participants were emailed a link to the intervention/survey each week.
• Timing of data collection:
  • Pretest survey, midpoint surveys (weeks 3 and 6), posttest survey.
• Types of data collected:
  • Qualitative: online journals, open-ended questions.
  • Quantitative: numerous standardized measures.
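
Because the same students were surveyed at pretest, weeks 3 and 6, and posttest, each wave's download has to be linked back to the others by participant ID. Below is a minimal Python sketch of one way to do that; it assumes each wave is exported as a list of records with a `participant_id` field, and the wave labels and the `stress` measure are hypothetical. Suffixing each measure with its wave keeps waves from overwriting one another, and a missing wave simply leaves those columns absent, which makes attrition easy to spot.

```python
# Hypothetical labels for the four data collection points on the slide.
WAVES = ["pre", "week3", "week6", "post"]


def merge_waves(wave_records):
    """Combine per-wave records into one wide row per participant.

    wave_records maps a wave label to a list of dicts, each holding a
    'participant_id' key plus measure columns. Each measure is renamed with
    the wave as a suffix (e.g. 'stress' -> 'stress_week3').
    """
    merged = {}
    for wave in WAVES:
        for record in wave_records.get(wave, []):
            pid = record["participant_id"]
            row = merged.setdefault(pid, {"participant_id": pid})
            for key, value in record.items():
                if key != "participant_id":
                    row[f"{key}_{wave}"] = value
    return merged


def completed_all_waves(merged, measure):
    """Return the sorted IDs of participants who have `measure` at every wave."""
    return sorted(
        pid for pid, row in merged.items()
        if all(f"{measure}_{wave}" in row for wave in WAVES)
    )


if __name__ == "__main__":
    data = {
        "pre": [{"participant_id": "P001", "stress": 20},
                {"participant_id": "P002", "stress": 25}],
        "week3": [{"participant_id": "P001", "stress": 18}],
        "week6": [{"participant_id": "P001", "stress": 17}],
        "post": [{"participant_id": "P001", "stress": 15},
                 {"participant_id": "P002", "stress": 22}],
    }
    merged = merge_waves(data)
    print(completed_all_waves(merged, "stress"))  # ['P001']
```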

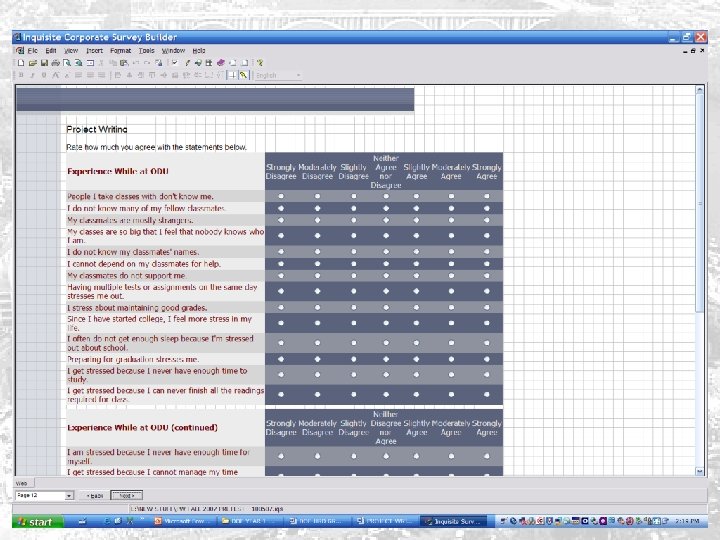

Online Data Collection
• We used Inquisite® software to collect all data.
• Features of the software:
  • A variety of question types is available.
  • Survey templates are available, or you can build custom surveys.
  • Allows you to upload email lists.
  • Can create invitations and reminders.
  • Enables you to track participation using an authorization key.
  • Allows you to download data in a variety of formats (e.g., SPSS, Excel, RTF files).
  • Performs frequency analyses and generates graphs.
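
Tracking participation by authorization key is what makes targeted reminders possible: anyone whose key is missing from the latest download has not yet responded. The sketch below is not Inquisite's interface, which is not described here; it is a minimal Python illustration, assuming a hypothetical roster that maps each authorization key to an email address and a list of submitted keys read from a downloaded data file. It also flags submitted keys that are not on the roster, which may signal a typo or a stray record.

```python
def reminder_list(roster, submitted_keys):
    """Return sorted (key, email) pairs for invitees who have not yet responded.

    roster: dict mapping each participant's authorization key to an email.
    submitted_keys: keys found in the latest data download.
    """
    submitted = set(submitted_keys)
    return sorted(
        (key, email) for key, email in roster.items() if key not in submitted
    )


def unknown_keys(roster, submitted_keys):
    """Return submitted keys that do not appear on the roster."""
    return sorted(set(submitted_keys) - set(roster))


if __name__ == "__main__":
    roster = {  # hypothetical keys and addresses
        "K1": "a@example.edu",
        "K2": "b@example.edu",
        "K3": "c@example.edu",
    }
    submitted = ["K2", "K9"]
    print(reminder_list(roster, submitted))  # [('K1', 'a@example.edu'), ('K3', 'c@example.edu')]
    print(unknown_keys(roster, submitted))  # ['K9']
```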

Evaluation Tools Used
• Project-specific email account:
  • Multiple researchers had access and were able to send, read, and reply to participants’ emails.
  • Allowed us to keep all correspondence with participants in one location.
• Project notebook:
  • Copies of all project materials (e.g., surveys, invitations).
  • Instructions on how to manage surveys and download data.
  • Descriptions of problems encountered and how they were addressed.

Evaluation Tools Used
• Weekly status reports:
  • The project manager created weekly reports that were sent to all team members each Sunday.
  • Contents: a summary of work completed the previous week, key tasks for each member to complete that week, and any issues that needed to be addressed.
  • Reports were discussed in weekly meetings.
• Project data codebook:
  • Contained a complete list of variable names/labels, value labels, and syntax for creating composites and reverse scoring items.
  • Listed the name and description of every dataset in the project.
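
The codebook's composite and reverse-scoring syntax was written in SPSS; the same logic is sketched here in Python purely for illustration. The scale name, item names, and 1-5 response range are hypothetical. A reversed item is rescored as (minimum + maximum) - response, and the composite is the mean of the available items, returned as missing (`None`) when fewer than `min_valid` items were answered (by default, all of them).

```python
# Hypothetical codebook entry: item names, response range, and reversed items.
CODEBOOK = {
    "stress": {
        "items": ["str1", "str2", "str3", "str4"],
        "reverse": ["str2", "str4"],
        "min": 1,
        "max": 5,
    },
}


def reverse_score(value, lo, hi):
    """Reverse a Likert response: on a 1-5 scale, 1 <-> 5, 2 <-> 4, 3 stays 3."""
    return lo + hi - value


def composite(row, scale, codebook=CODEBOOK, min_valid=None):
    """Mean of a scale's items after reverse scoring, or None if too few answered."""
    spec = codebook[scale]
    needed = len(spec["items"]) if min_valid is None else min_valid
    values = []
    for item in spec["items"]:
        value = row.get(item)
        if value is None:  # skipped or missing item
            continue
        if item in spec["reverse"]:
            value = reverse_score(value, spec["min"], spec["max"])
        values.append(value)
    if len(values) < needed:
        return None
    return sum(values) / len(values)


if __name__ == "__main__":
    row = {"str1": 4, "str2": 2, "str3": 5, "str4": 1}
    print(composite(row, "stress"))  # 4.5
```

Keeping the item lists and reversed items in one codebook structure mirrors the slide's point: the scoring rules live in a single documented place rather than in each analyst's copy of the syntax.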

Evaluation Tools Used
• Data analysis plans:
  • Created detailed analysis plans (using SPSS syntax) for each dataset.
  • Included data cleaning (e.g., composite creation, checking assumptions), qualitative coding, and descriptive and inferential statistics.
• Master participant list:
  • Contained a complete list of all participants.
  • Included contact information, participant ID, weeks participated, and payments and incentives received.
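
A master participant list ties contact details, weeks of participation, and payments to a single participant ID. The minimal Python sketch below uses hypothetical field names and whole-dollar amounts; it shows how keeping participation and payments in the same record makes it simple to list the weeks for which a participant is still owed payment.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """One entry in a master participant list (field names are hypothetical)."""
    participant_id: str
    email: str
    weeks_participated: set = field(default_factory=set)
    payments: list = field(default_factory=list)  # (week, amount in dollars) pairs


def record_week(p, week):
    """Mark that the participant completed the given week."""
    p.weeks_participated.add(week)


def record_payment(p, week, amount):
    """Log a payment made for a given week."""
    p.payments.append((week, amount))


def total_paid(p):
    """Sum of all payments recorded for the participant."""
    return sum(amount for _, amount in p.payments)


def unpaid_weeks(p):
    """Weeks the participant took part in but has not yet been paid for."""
    paid = {week for week, _ in p.payments}
    return sorted(p.weeks_participated - paid)


if __name__ == "__main__":
    p = Participant("P001", "p001@example.edu")
    for week in (1, 2, 3):
        record_week(p, week)
    record_payment(p, 1, 10)
    print(unpaid_weeks(p), total_paid(p))  # [2, 3] 10
```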

What Worked
• Online data collection:
  • Enabled us to collect lots of data in a short period of time with little effort.
  • Automated system for contacting and tracking participants.
  • We could download the data multiple times each week and in multiple formats.
• Project-specific email:
  • Enabled us to split the work of responding to participants among all researchers.
  • Each researcher had access to every email that was received or sent.

What Worked
• Project notebook:
  • All project materials were located in one large document.
  • Enabled us to train new research assistants easily.
  • Allowed us to keep track of problems that we encountered.
• Weekly status reports:
  • Could track the number of person-hours each week.
  • Each team member could see what they and others were responsible for completing.

What Worked
• Other tools/activities that were useful:
  • Project data codebook.
  • Data analysis plans.
  • Master participant list.
  • Analysis teams.
  • Cross-training of all researchers.
  • Weekly meetings and specific team meetings.
  • Student research assistants (inexpensive labor).

What Did Not Work
• Technology issues:
  • We had various technical glitches with the survey software.
  • Mac versus PC use among research assistants.
  • We had not involved the school’s technology staff in our project until we had issues.
• Inflexibility of data collection schedule:
  • We created a rigid schedule, and when problems occurred it was difficult and stressful to modify our plans.

What Did Not Work
• Too many “cooks”:
  • We had multiple people work on the same dataset.
  • They did not always keep accurate track of what modifications were made.
• Too much data, not enough person-hours:
  • We underestimated how much time it would take to manage all of the data we collected.
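
One lightweight safeguard against the “too many cooks” problem is to route every edit to a shared dataset through a change log that records who changed what, when, and with what effect on the row count. The presentation does not describe such a tool; the Python class below is a hypothetical sketch, and the dataset name, editor label, and example edit are illustrative.

```python
import datetime


class ChangeLog:
    """Append-only record of edits made to one shared dataset."""

    def __init__(self, dataset_name):
        self.dataset_name = dataset_name
        self.entries = []

    def apply(self, rows, editor, description, edit):
        """Run `edit` on the rows, log the change, and return the edited rows."""
        before = len(rows)  # counted first in case `edit` mutates rows in place
        new_rows = edit(rows)
        self.entries.append({
            "dataset": self.dataset_name,
            "editor": editor,
            "description": description,
            "timestamp": datetime.datetime.now().isoformat(timespec="seconds"),
            "rows_before": before,
            "rows_after": len(new_rows),
        })
        return new_rows


def drop_duplicate_ids(rows):
    """Keep only the first row for each participant ID."""
    seen, kept = set(), []
    for row in rows:
        if row["participant_id"] not in seen:
            seen.add(row["participant_id"])
            kept.append(row)
    return kept


if __name__ == "__main__":
    log = ChangeLog("week3_survey")
    rows = [
        {"participant_id": "P001", "stress": 20},
        {"participant_id": "P001", "stress": 20},
        {"participant_id": "P002", "stress": 25},
    ]
    rows = log.apply(rows, "analyst_a", "Dropped duplicate submissions", drop_duplicate_ids)
    for entry in log.entries:
        print(entry)
```

Because edits only happen through `apply`, the log cannot silently fall out of step with the data, which is the failure the slide describes.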

Suggestions for Evaluators
• Use standardized tools (e.g., project notebook, data analysis plans) to manage your data.
• Use online tools to recruit and manage college student participants:
  • Project email, project website, social networking sites.
• Involve students/interns:
  • Offer course credit or internship hours instead of a salary.

Contact Information
If you would like more information regarding this project, please contact:
Jennifer Ann Morrow, Ph.D.
Asst. Professor in Assessment and Evaluation
Dept. of Educational Psychology and Counseling
University of Tennessee
Knoxville, TN 37996-3452
Email: jamorrow@utk.edu
Office Phone: (865) 974-6117
