
- Number of slides: 30

CMS Tier 1 at JINR
V. V. Korenkov for the JINR CMS Tier-1 Team, JINR
XXIV International Symposium on Nuclear Electronics & Computing, NEC 2013, September 13


Outline
• CMS Grid structure
  – role of Tier-1s
  – CMS Tier-1s
• CMS Tier-1 in Dubna
  – History and Motivations (Why Dubna?)
  – Network infrastructure
  – Infrastructure and Resources
  – Services and Readiness
  – Staffing
  – Milestones
• Conclusions


CMS Grid Structure


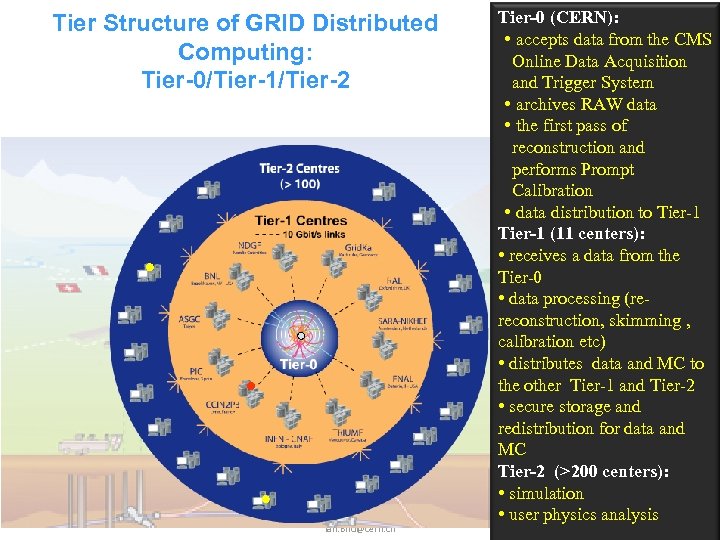

Tier Structure of GRID Distributed Computing: Tier-0/Tier-1/Tier-2
Ian.Bird@cern.ch

Tier-0 (CERN):
• accepts data from the CMS Online Data Acquisition and Trigger System
• archives RAW data
• performs the first pass of reconstruction and Prompt Calibration
• distributes data to the Tier-1 centers

Tier-1 (11 centers):
• receives data from the Tier-0
• data processing (re-reconstruction, skimming, calibration, etc.)
• distributes data and MC to the other Tier-1 and Tier-2 centers
• secure storage and redistribution of data and MC

Tier-2 (>200 centers):
• simulation
• user physics analysis


CMS Tier-1 in Dubna


Tier 1 center

March 2011: Proposal to create the LCG Tier 1 center in Russia (an official letter from the Minister of Science and Education of Russia, A. Fursenko, was sent to the CERN DG, R. Heuer):
• NRC KI for ALICE, ATLAS, and LHCb
• LIT JINR (Dubna) for the CMS experiment

The Federal Target Programme Project: «Creation of the automated system of data processing for experiments at the LHC of Tier-1 level and maintenance of Grid services for a distributed analysis of these data»
Duration: 2011–2013

September 2012: The proposal was reviewed by the WLCG OB, and the JINR and NRC KI Tier 1 sites were accepted as new “Associate Tier 1” sites.

Full resources: in 2014, to meet the start of the next LHC working session.


Why in Russia? Why Dubna?


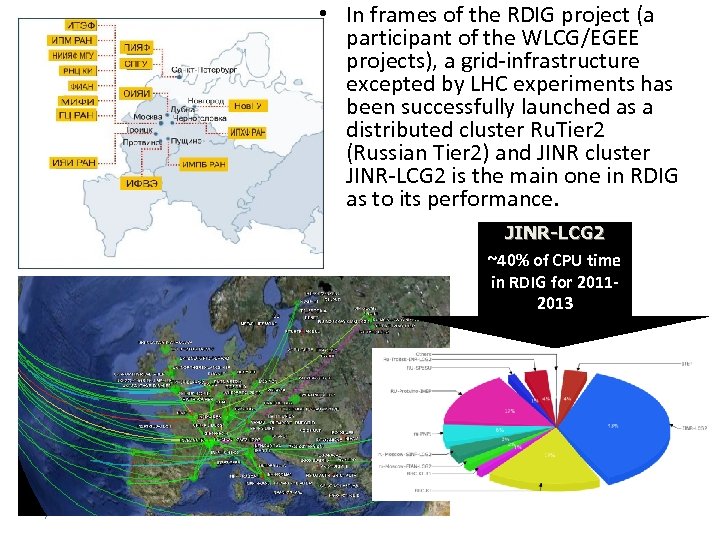

• Within the RDIG project (a participant in the WLCG/EGEE projects), a grid infrastructure accepted by the LHC experiments has been successfully launched as a distributed cluster, RuTier2 (Russian Tier 2). The JINR cluster JINR-LCG2 is the leading one in RDIG in terms of performance.
• JINR-LCG2: ~40% of CPU time in RDIG for 2011–2013


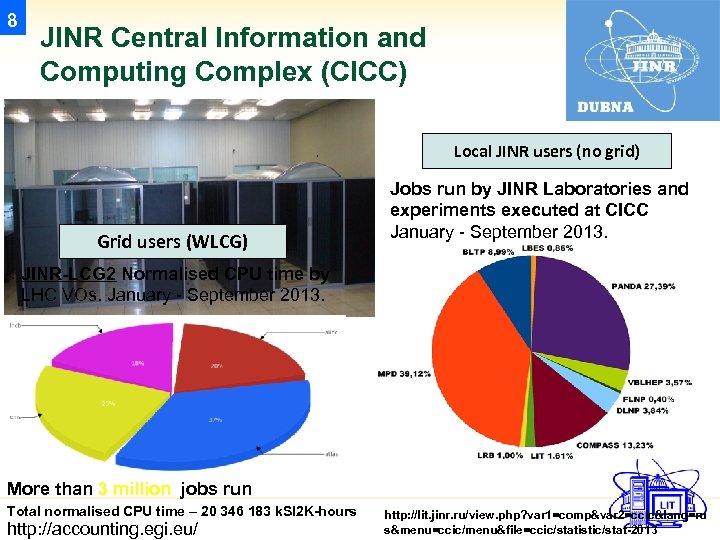

JINR Central Information and Computing Complex (CICC)
Local JINR users (no grid) / Grid users (WLCG)
Jobs run by JINR Laboratories and experiments executed at CICC, January–September 2013.
JINR-LCG2 normalised CPU time by LHC VOs, January–September 2013.
More than 3 million jobs run
Total normalised CPU time: 20 346 183 kSI2K-hours
http://accounting.egi.eu/
http://lit.jinr.ru/view.php?var1=comp&var2=ccic&lang=rus&menu=ccic/menu&file=ccic/statistic/stat-2013


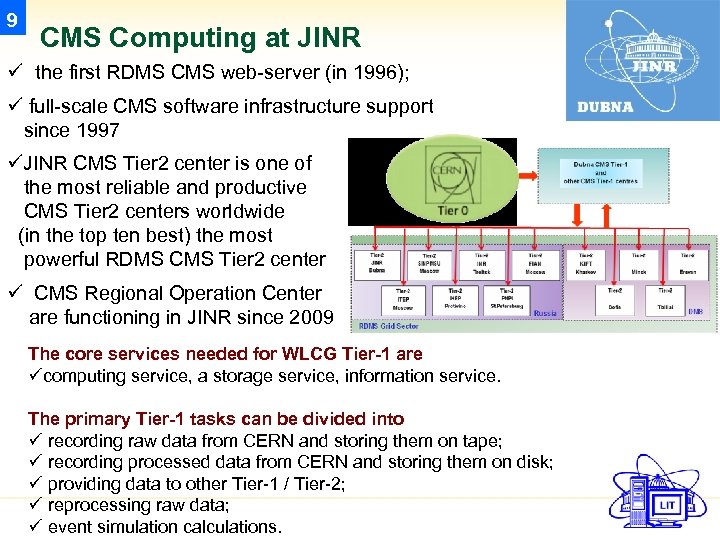

CMS Computing at JINR
✓ the first RDMS CMS web server (in 1996);
✓ full-scale CMS software infrastructure support since 1997;
✓ the JINR CMS Tier 2 center is one of the most reliable and productive CMS Tier 2 centers worldwide (in the top ten) and the most powerful RDMS CMS Tier 2 center;
✓ the CMS Regional Operation Center has been functioning at JINR since 2009.

The core services needed for a WLCG Tier-1 are
✓ a computing service, a storage service, and an information service.

The primary Tier-1 tasks can be divided into
✓ recording raw data from CERN and storing them on tape;
✓ recording processed data from CERN and storing them on disk;
✓ providing data to other Tier-1 / Tier-2 centers;
✓ reprocessing raw data;
✓ event simulation calculations.


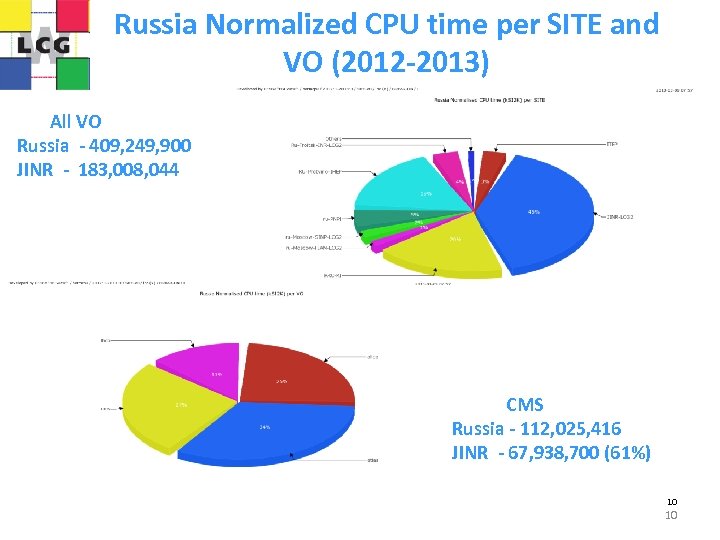

Russia: Normalized CPU time per site and VO (2012–2013)
All VOs: Russia – 409,249,900; JINR – 183,008,044
CMS: Russia – 112,025,416; JINR – 67,938,700 (61%)
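The 61% figure quoted for CMS can be checked directly from the CPU-time totals above. The short Python sketch below recomputes the JINR share for CMS and, for comparison, for all VOs; the variable and function names are illustrative and not taken from the slides.

```python
# Normalized CPU time totals for 2012-2013, copied from the slide.
RUSSIA_ALL_VO = 409_249_900
JINR_ALL_VO = 183_008_044
RUSSIA_CMS = 112_025_416
JINR_CMS = 67_938_700


def share_percent(part: int, total: int) -> float:
    """Return part as a percentage of total."""
    return 100.0 * part / total


cms_share = share_percent(JINR_CMS, RUSSIA_CMS)
all_vo_share = share_percent(JINR_ALL_VO, RUSSIA_ALL_VO)

print(f"JINR share of Russian CMS CPU time: {cms_share:.1f}%")
print(f"JINR share of Russian CPU time, all VOs: {all_vo_share:.1f}%")
```

Rounded to the nearest percent, the CMS share reproduces the 61% shown on the slide; the all-VO share comes out somewhat lower, between 44% and 45%.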


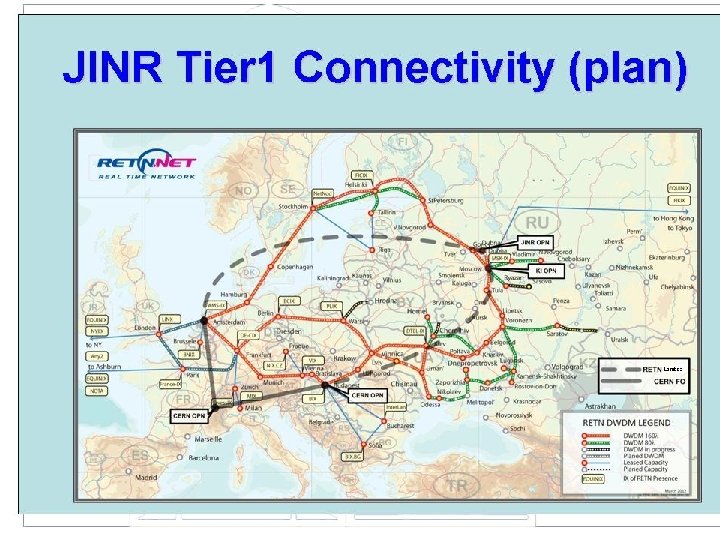

Network infrastructure


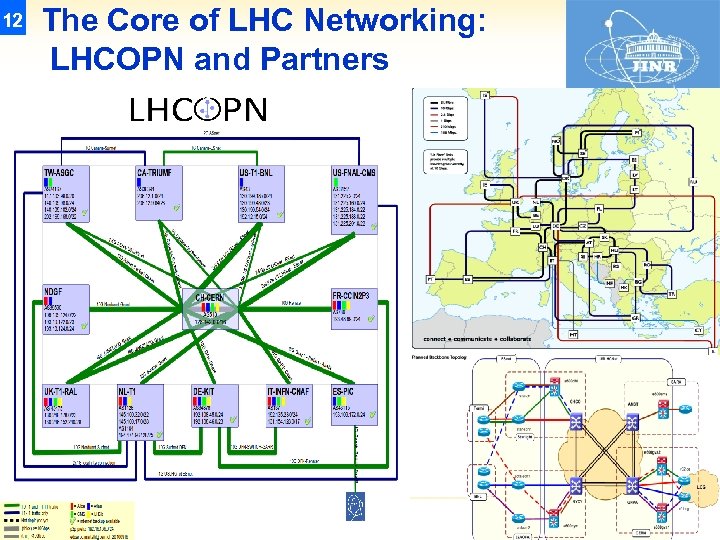

The Core of LHC Networking: LHCOPN and Partners


Infrastructure and Facilities


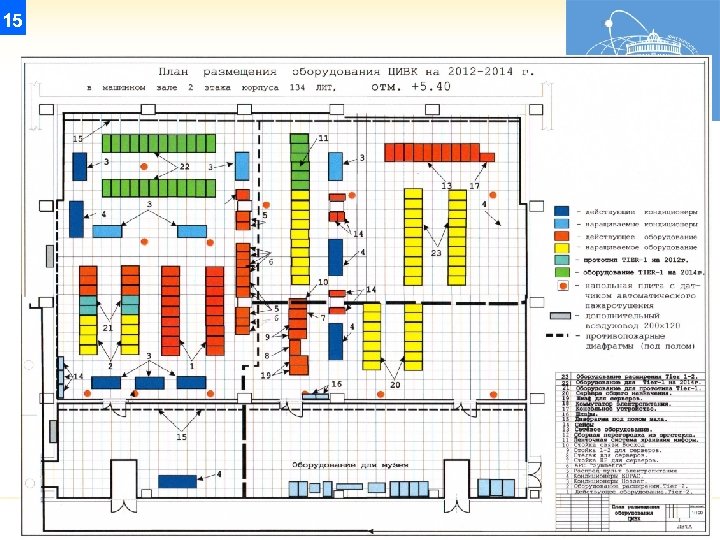


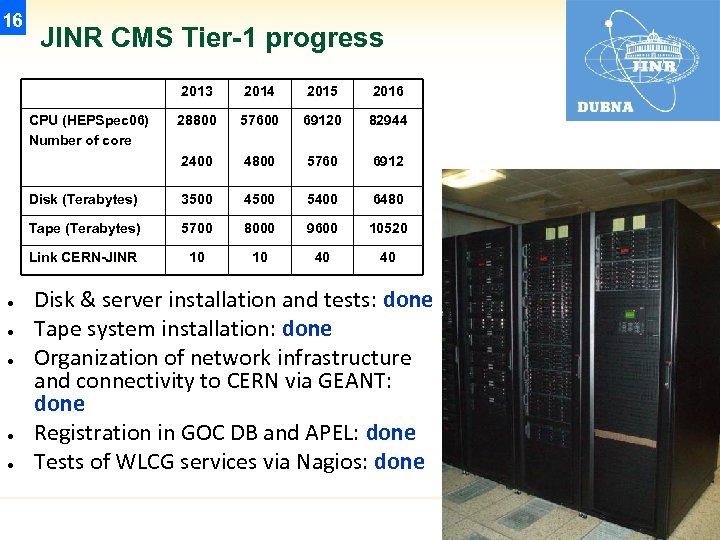

JINR CMS Tier-1 progress

| Resource | 2013 | 2014 | 2015 | 2016 |
|---|---|---|---|---|
| CPU (HEPSpec06) | 28800 | 57600 | 69120 | 82944 |
| Number of cores | 2400 | 4800 | 5760 | 6912 |
| Disk (Terabytes) | 3500 | 4500 | 5400 | 6480 |
| Tape (Terabytes) | 5700 | 8000 | 9600 | 10520 |
| Link CERN-JINR | 10 | 10 | 40 | 40 |

● Disk & server installation and tests: done
● Tape system installation: done
● Organization of network infrastructure and connectivity to CERN via GEANT: done
● Registration in GOC DB and APEL: done
● Tests of WLCG services via Nagios: done
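The resource figures above can be summarised with two derived quantities: HEPSpec06 per core, and the year-over-year growth factor of each resource. The Python sketch below computes both from the numbers on the slide; the names `RESOURCES` and `growth_factors` are illustrative and not part of the presentation.

```python
# Planned resources per year, copied from the slide.
YEARS = [2013, 2014, 2015, 2016]
RESOURCES = {
    "CPU (HEPSpec06)": [28800, 57600, 69120, 82944],
    "Number of cores": [2400, 4800, 5760, 6912],
    "Disk (Terabytes)": [3500, 4500, 5400, 6480],
    "Tape (Terabytes)": [5700, 8000, 9600, 10520],
}


def growth_factors(values):
    """Ratio of each year's value to the previous year's, rounded to 3 decimals."""
    return [round(later / earlier, 3) for earlier, later in zip(values, values[1:])]


# CPU power per core for each year.
hs06_per_core = [
    cpu / cores
    for cpu, cores in zip(RESOURCES["CPU (HEPSpec06)"], RESOURCES["Number of cores"])
]

growth = {name: growth_factors(values) for name, values in RESOURCES.items()}

print("HEPSpec06 per core:", dict(zip(YEARS, hs06_per_core)))
for name, factors in growth.items():
    print(f"{name}: year-over-year growth {factors}")
```

With these figures the plan assumes 12 HEPSpec06 per core in every year, a doubling of CPU capacity from 2013 to 2014, and 20% annual CPU and disk growth afterwards.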


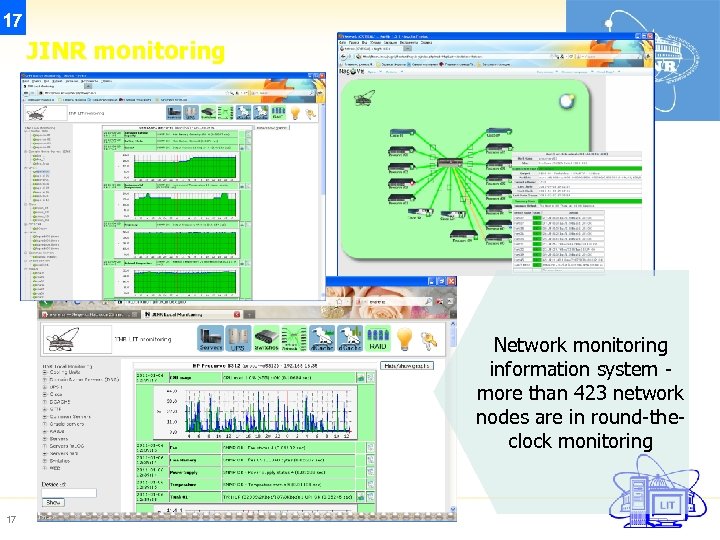

JINR monitoring
Network monitoring information system: more than 423 network nodes are under round-the-clock monitoring


Services and Readiness


CMS-specific activity
● Currently commissioning the Tier-1 resource for CMS:
  – Local tests of CMS VO services and CMS SW
  – The PhEDEx LoadTest (tests of data transfer links)
  – Job Robot tests (or tests via HammerCloud)
  – Long-running CPU-intensive jobs
  – Long-running I/O-intensive jobs
● PhEDEx transferred RAW input data to our storage element with a transfer efficiency of around 90%
● Prepared services and data storage for the reprocessing of the 2012 8 TeV data


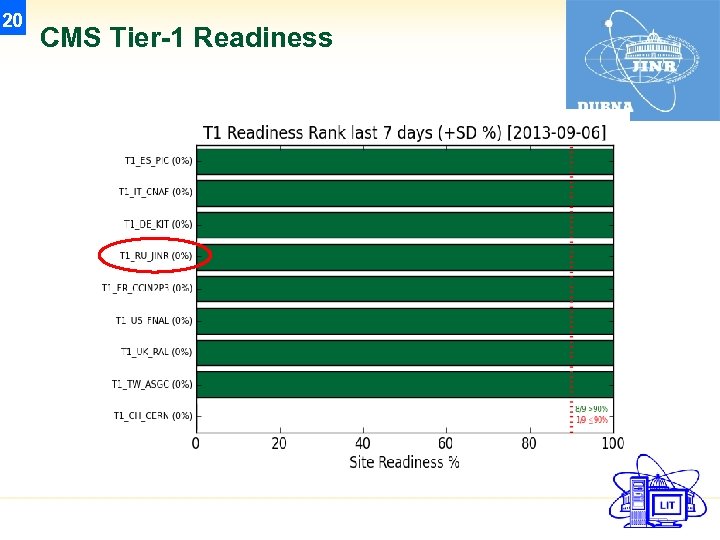

CMS Tier-1 Readiness


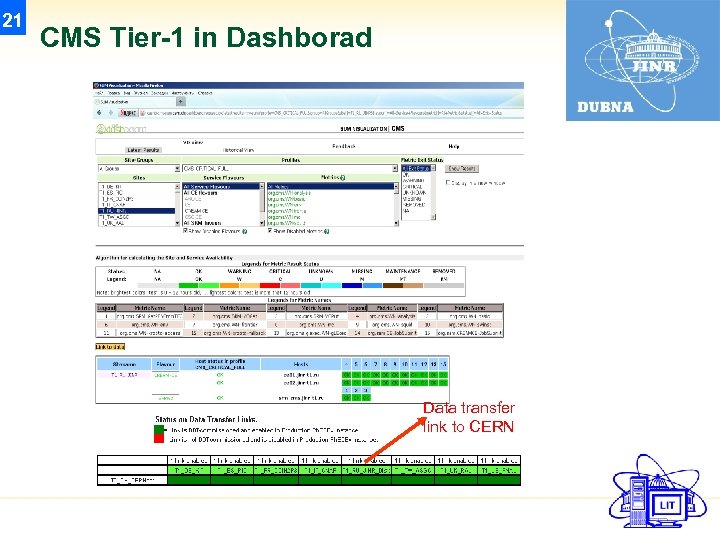

CMS Tier-1 in Dashboard
Data transfer link to CERN


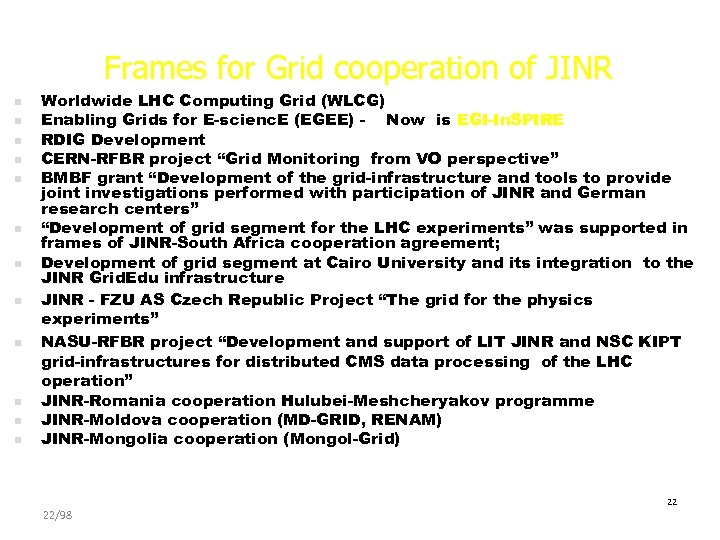

Frameworks for Grid cooperation of JINR
• Worldwide LHC Computing Grid (WLCG)
• Enabling Grids for E-sciencE (EGEE), now EGI-InSPIRE
• RDIG Development
• CERN-RFBR project “Grid Monitoring from VO perspective”
• BMBF grant “Development of the grid-infrastructure and tools to provide joint investigations performed with participation of JINR and German research centers”
• “Development of grid segment for the LHC experiments”, supported within the JINR-South Africa cooperation agreement
• Development of a grid segment at Cairo University and its integration into the JINR GridEdu infrastructure
• JINR - FZU AS Czech Republic project “The grid for the physics experiments”
• NASU-RFBR project “Development and support of LIT JINR and NSC KIPT grid-infrastructures for distributed CMS data processing of the LHC operation”
• JINR-Romania cooperation, Hulubei-Meshcheryakov programme
• JINR-Moldova cooperation (MD-GRID, RENAM)
• JINR-Mongolia cooperation (Mongol-Grid)


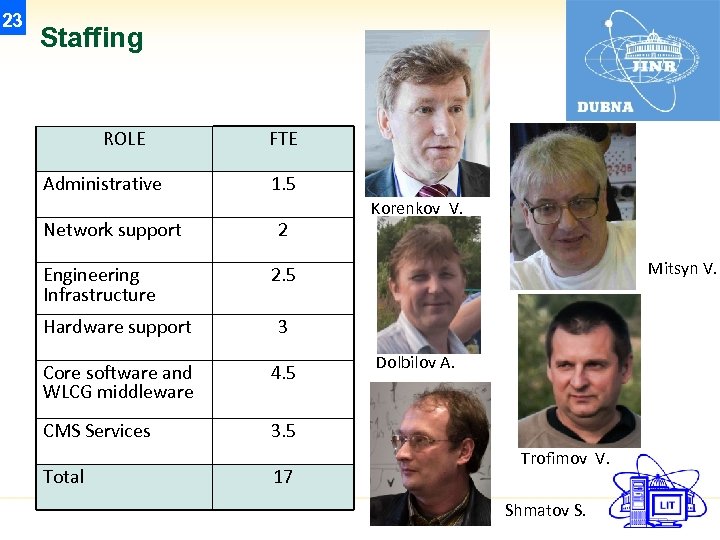

Staffing

| Role | FTE |
|---|---|
| Administrative | 1.5 |
| Network support | 2 |
| Engineering Infrastructure | 2.5 |
| Hardware support | 3 |
| Core software and WLCG middleware | 4.5 |
| CMS Services | 3.5 |
| Total | 17 |

Korenkov V., Mitsyn V., Dolbilov A., Trofimov V., Shmatov S.


Milestones


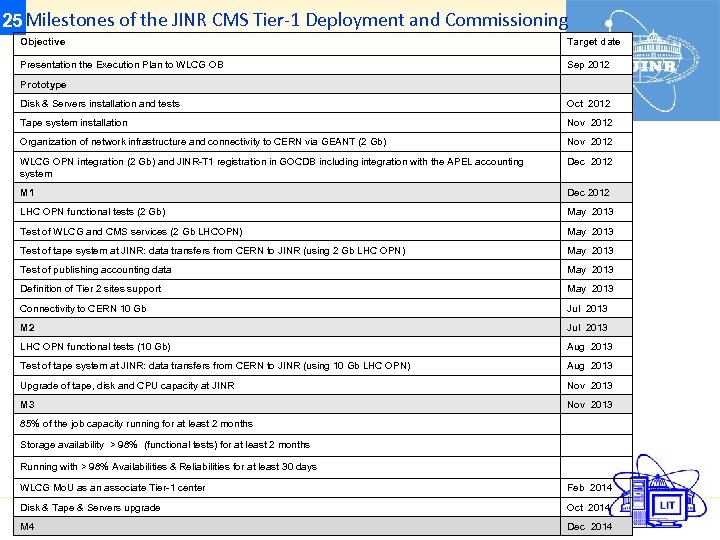

Milestones of the JINR CMS Tier-1 Deployment and Commissioning

| Objective | Target date |
|---|---|
| Presentation of the Execution Plan to WLCG OB | Sep 2012 |
| Prototype Disk & Servers installation and tests | Oct 2012 |
| Tape system installation | Nov 2012 |
| Organization of network infrastructure and connectivity to CERN via GEANT (2 Gb) | Nov 2012 |
| WLCG OPN integration (2 Gb) and JINR-T1 registration in GOCDB including integration with the APEL accounting system | Dec 2012 |
| M1 | Dec 2012 |
| LHC OPN functional tests (2 Gb) | May 2013 |
| Test of WLCG and CMS services (2 Gb LHCOPN) | May 2013 |
| Test of tape system at JINR: data transfers from CERN to JINR (using 2 Gb LHC OPN) | May 2013 |
| Test of publishing accounting data | May 2013 |
| Definition of Tier 2 sites support | May 2013 |
| Connectivity to CERN 10 Gb | Jul 2013 |
| M2 | Jul 2013 |
| LHC OPN functional tests (10 Gb) | Aug 2013 |
| Test of tape system at JINR: data transfers from CERN to JINR (using 10 Gb LHC OPN) | Aug 2013 |
| Upgrade of tape, disk and CPU capacity at JINR | Nov 2013 |
| M3 | Nov 2013 |
| 85% of the job capacity running for at least 2 months | |
| Storage availability > 98% (functional tests) for at least 2 months | |
| Running with > 98% Availabilities & Reliabilities for at least 30 days | |
| WLCG MoU as an associate Tier-1 center | Feb 2014 |
| Disk & Tape & Servers upgrade | Oct 2014 |
| M4 | Dec 2014 |
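The availability criterion among the milestones ("> 98% ... for at least 30 days") can be expressed as a simple check over daily measurements. The Python sketch below is one possible reading, assuming the criterion means 30 consecutive days with a daily value strictly above the threshold; the slides do not say how the period is evaluated, and the function names and sample data are hypothetical.

```python
from typing import Sequence


def longest_streak_above(daily_values: Sequence[float], threshold: float) -> int:
    """Length of the longest run of consecutive days strictly above threshold."""
    longest = 0
    current = 0
    for value in daily_values:
        # Extend the current run, or reset it when a day falls short.
        current = current + 1 if value > threshold else 0
        longest = max(longest, current)
    return longest


def meets_availability_target(
    daily_values: Sequence[float],
    threshold: float = 98.0,
    min_days: int = 30,
) -> bool:
    """True if some run of at least min_days consecutive days exceeds threshold."""
    return longest_streak_above(daily_values, threshold) >= min_days


# Hypothetical daily availability percentages, not real monitoring data:
# 20 good days, one day below the threshold, then 31 good days.
sample = [99.5] * 20 + [97.0] + [99.2] * 31
print(meets_availability_target(sample))
```

An average over the period would be a different, more lenient reading of the same criterion; the consecutive-day version is used here only because it is the stricter interpretation.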


Main tasks for the next years
• Engineering infrastructure (uninterruptible power supply and climate-control systems)
• High-speed, reliable network infrastructure with a dedicated reserved channel to CERN (LHCOPN)
• Computing system and storage system based on high-capacity disk arrays and tape libraries
• 100% reliability and availability


Tier-0 and Tier-1 centers: CERN; US-BNL; Amsterdam/NIKHEF-SARA; Bologna/CNAF; Ca-TRIUMF; Taipei/ASGC; Russia: NRC KI, JINR; NDGF; US-FNAL; De-FZK; Barcelona/PIC; Lyon/CCIN2P3; UK-RAL (26 June 2009)


The 6th International Conference "Distributed Computing and Grid-technologies in Science and Education" (GRID’2014)
Dubna, 30 June–5 July 2014

GRID’2012 Conference: 22 countries, 256 participants, 40 universities and institutes from Russia, 31 plenary and 89 section talks


Conclusions
• In 2012–2013 the CMS Tier 1 prototype was created in Dubna:
  ✓ Disk & server installation and tests
  ✓ Prototype tape system installation and tests
  ✓ Organization of network infrastructure and connectivity to CERN via GEANT
  ✓ Registration in GOC DB and APEL
  ✓ Tests of WLCG services via Nagios
  ✓ CMS-specific tests
  ✓ Commissioning of data transfer links (T0-T1, T1-T2) in progress
• We expect to meet the start of the next LHC run with the full required resources (by the end of 2014)
