- Number of slides: 12

CMS Online Data Quality Monitoring: Real-Time Event Processing Infrastructure

Srećko Morović
Institute Ruđer Bošković
On behalf of the CMS Collaboration

CHEP 2010, October 19, 2010
Academia Sinica, Taipei, Taiwan

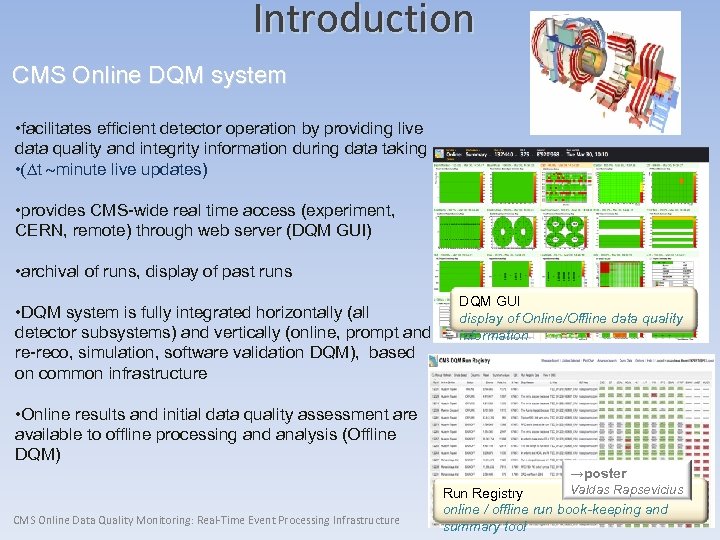

Introduction

CMS Online DQM system
• facilitates efficient detector operation by providing live data quality and integrity information during data taking (Δt ~ minute live updates)
• provides CMS-wide real-time access (experiment, CERN, remote) through a web server (DQM GUI)
• archival of runs, display of past runs
• DQM system is fully integrated horizontally (all detector subsystems) and vertically (online, prompt and re-reco, simulation, software validation DQM), based on common infrastructure
• Online results and initial data quality assessment are available to offline processing and analysis (Offline DQM)

[Figure: DQM GUI display of Online/Offline data quality information]

→ poster: Valdas Rapsevicius, Run Registry (online/offline run book-keeping and summary tool)

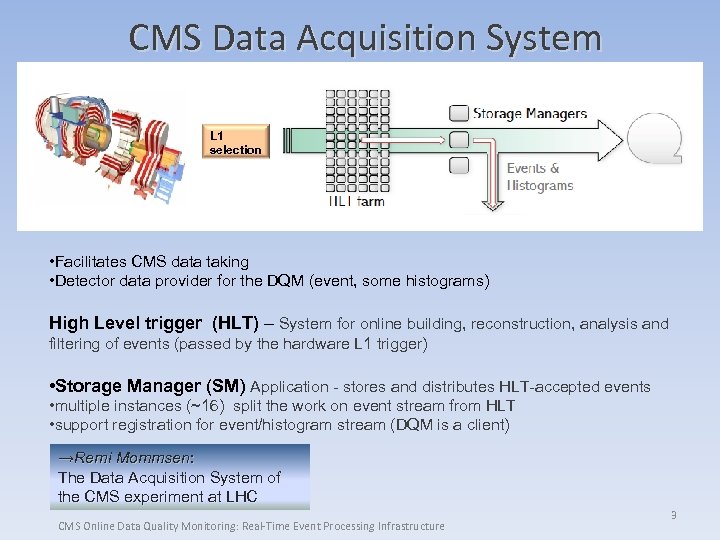

CMS Data Acquisition System

• Facilitates CMS data taking
• Detector data provider for the DQM (events, some histograms)
• High Level Trigger (HLT): system for online building, reconstruction, analysis and filtering of events (passed by the hardware L1 trigger)
• Storage Manager (SM) application: stores and distributes HLT-accepted events
  – multiple instances (~16) split the work on the event stream from the HLT
  – supports registration for event/histogram streams (DQM is a client)

[Diagram label: L1 selection]

→ Remi Mommsen: The Data Acquisition System of the CMS experiment at LHC

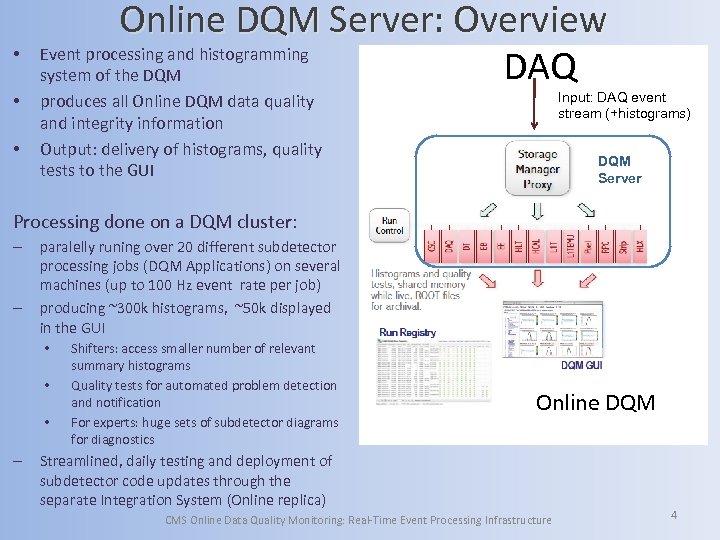

Online DQM Server: Overview

• Event processing and histogramming system of the DQM
• Input: DAQ event stream (+ histograms)
• Produces all Online DQM data quality and integrity information
• Output: delivery of histograms and quality tests to the GUI
• Processing done on a DQM cluster:
  – over 20 different subdetector processing jobs (DQM Applications) running in parallel on several machines (up to 100 Hz event rate per job)
  – producing ~300k histograms, ~50k displayed in the GUI
• Shifters: access a smaller number of relevant summary histograms
• Quality tests for automated problem detection and notification
• For experts: huge sets of subdetector diagrams for diagnostics
• Streamlined, daily testing and deployment of subdetector code updates through the separate Integration System (Online replica)

[Diagram labels: DAQ, DQM Server, Online DQM]
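
The automated quality tests listed on this slide can be pictured with a small Python sketch. This is not the DQM Core interface: the function name `content_in_range_test`, the `QualityResult` type, the thresholds, and the three status levels are all assumptions made for illustration. The sketch only shows the general idea of turning a histogram into a status that can trigger a notification.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class QualityResult:
    fraction_ok: float  # fraction of bins inside the allowed range
    status: str         # "OK", "WARNING" or "ERROR" (illustrative levels)


def content_in_range_test(bins: Sequence[float], low: float, high: float,
                          warn_below: float = 0.95,
                          error_below: float = 0.80) -> QualityResult:
    """Flag a histogram when too many bins fall outside [low, high].

    Hypothetical helper: thresholds and status names are assumptions.
    """
    if not bins:
        # An empty histogram carries no information, so report a problem.
        return QualityResult(0.0, "ERROR")
    n_ok = sum(1 for b in bins if low <= b <= high)
    fraction = n_ok / len(bins)
    if fraction < error_below:
        status = "ERROR"
    elif fraction < warn_below:
        status = "WARNING"
    else:
        status = "OK"
    return QualityResult(fraction, status)
```

A shifter-level summary histogram would then only need to show the resulting status per subdetector, while the full bin contents stay available to experts.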

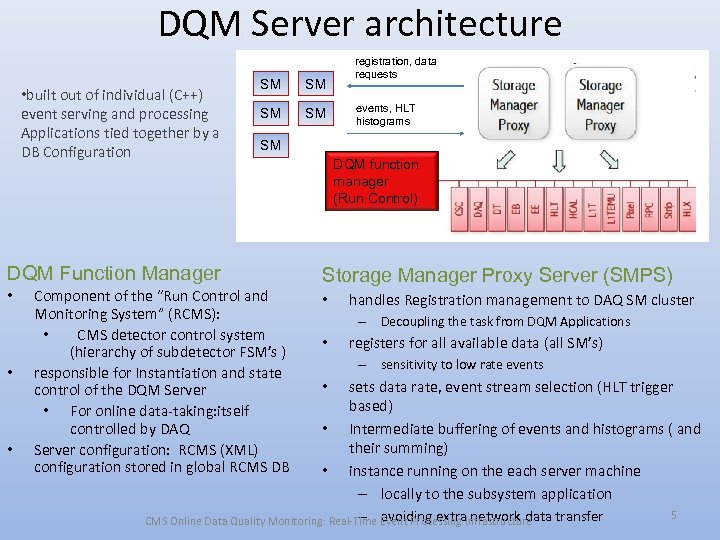

DQM Server architecture

• built out of individual (C++) event serving and processing Applications tied together by a DB configuration

DQM Function Manager (Run Control)
• Component of the “Run Control and Monitoring System” (RCMS): the CMS detector control system (hierarchy of subdetector FSMs)
• responsible for instantiation and state control of the DQM Server
• For online data-taking: itself controlled by DAQ
• Server configuration: RCMS (XML) configuration stored in the global RCMS DB

Storage Manager Proxy Server (SMPS)
• handles registration management to the DAQ SM cluster
  – decoupling the task from DQM Applications
• registers for all available data (all SMs)
  – sensitivity to low-rate events
• sets data rate, event stream selection (HLT trigger based)
• intermediate buffering of events and histograms (and their summing)
• an instance runs on each server machine, local to the subsystem application
  – avoiding extra network data transfer

[Diagram labels: SM, DQM Function Manager; events, HLT histograms; registration, data requests]
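
The SMPS role described above (trigger-based stream selection plus intermediate buffering) can be sketched roughly as follows. This is a toy model, not the SMPS implementation: the class name `ProxyBuffer`, the dictionary event layout, and the policy of dropping the oldest event when the buffer is full are assumptions that the slides do not specify.

```python
from collections import deque
from typing import Deque, Dict, Optional, Set


class ProxyBuffer:
    """Toy stand-in for a proxy that selects and buffers events."""

    def __init__(self, accepted_triggers: Set[str], max_events: int = 100):
        self.accepted = accepted_triggers
        # Bounded buffer: appending to a full deque discards the oldest
        # event (assumed policy, chosen to keep the consumer up to date).
        self.events: Deque[Dict] = deque(maxlen=max_events)

    def offer(self, event: Dict) -> bool:
        """Buffer the event if any of its trigger paths was requested."""
        if self.accepted.isdisjoint(event["triggers"]):
            return False
        self.events.append(event)
        return True

    def next_event(self) -> Optional[Dict]:
        """Hand the oldest buffered event to the local application."""
        return self.events.popleft() if self.events else None
```

Running one such proxy per machine, next to the application it serves, matches the stated goal of avoiding extra network transfers.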

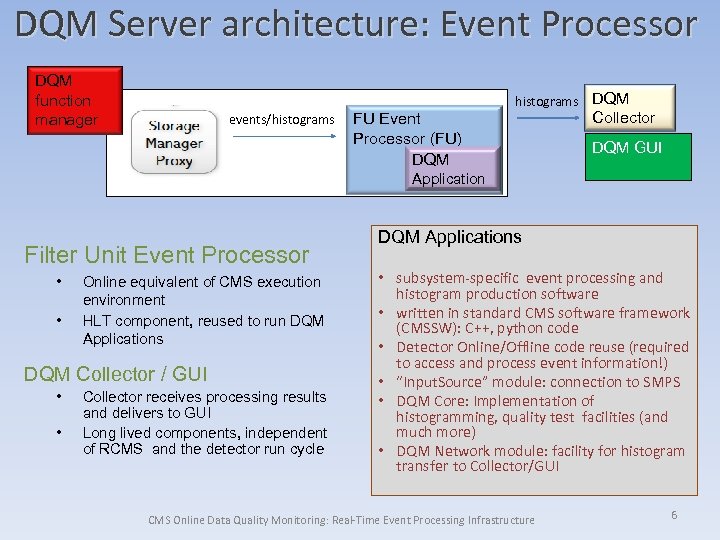

DQM Server architecture: Event Processor

Filter Unit Event Processor (FU)
• Online equivalent of the CMS execution environment
• HLT component, reused to run DQM Applications

DQM Collector / GUI
• Collector receives processing results and delivers them to the GUI
• Long-lived components, independent of RCMS and the detector run cycle

DQM Applications
• subsystem-specific event processing and histogram production software
• written in the standard CMS software framework (CMSSW): C++ and Python code
• Detector Online/Offline code reuse (required to access and process event information!)
• “InputSource” module: connection to SMPS
• DQM Core: implementation of histogramming, quality test facilities (and much more)
• DQM Network module: facility for histogram transfer to Collector/GUI

[Diagram labels: DQM function manager, FU Event Processor, DQM Application, DQM Collector, DQM GUI; events/histograms, DQM histograms]

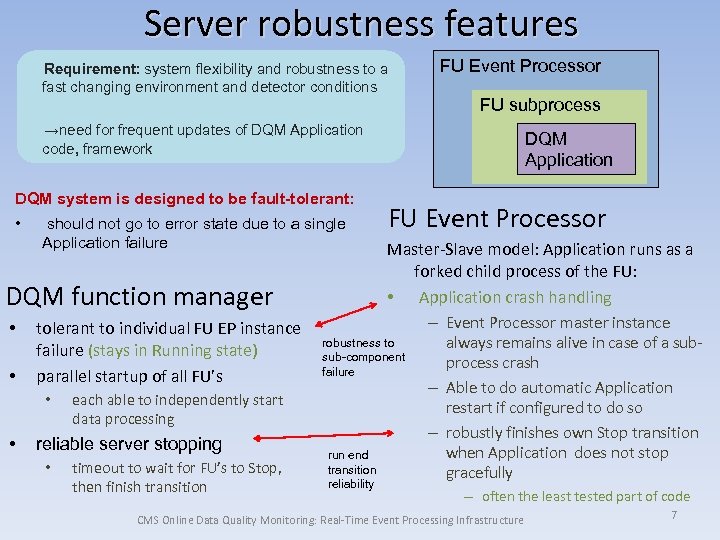

Server robustness features

Requirement: system flexibility and robustness to a fast changing environment and detector conditions
→ need for frequent updates of DQM Application code and framework

DQM system is designed to be fault-tolerant:
• should not go to error state due to a single Application failure

DQM function manager
• robustness to sub-component failure: tolerant to individual FU EP instance failure (stays in Running state)
• parallel startup of all FUs: each able to independently start data processing
• reliable server stopping: timeout to wait for FUs to Stop, then finish transition

FU Event Processor
Master-Slave model: Application runs as a forked child process of the FU
• Application crash handling
  – Event Processor master instance always remains alive in case of a subprocess crash
  – able to do automatic Application restart if configured to do so
• DQM Application run end transition reliability
  – robustly finishes own Stop transition when the Application does not stop gracefully
  – often the least tested part of code

[Diagram labels: FU Event Processor, FU subprocess]
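
The master-slave behaviour described on this slide (master survives a child crash, optional automatic restart, Stop completes even if the child does not exit gracefully) can be illustrated with a minimal Python sketch. The real FU Event Processor is a C++ component that forks its child; here `subprocess.Popen` stands in for the fork, and the class name `ApplicationSupervisor`, its parameters, and the returned status strings are assumptions made for the example.

```python
import subprocess
import sys
from typing import List, Optional


class ApplicationSupervisor:
    """Master process that keeps running when its child application dies."""

    def __init__(self, command: List[str], auto_restart: bool = True,
                 max_restarts: int = 3, stop_timeout_s: float = 2.0):
        self.command = command
        self.auto_restart = auto_restart
        self.max_restarts = max_restarts
        self.stop_timeout_s = stop_timeout_s
        self.restarts = 0
        self.child: Optional[subprocess.Popen] = None

    def start(self) -> None:
        self.child = subprocess.Popen(self.command)

    def poll(self) -> str:
        """Check the child once; restart it after a crash if configured."""
        if self.child is None:
            return "stopped"
        code = self.child.poll()
        if code is None:
            return "running"
        if code == 0:
            return "finished"
        # Non-zero exit: the child crashed, but the master stays alive.
        if self.auto_restart and self.restarts < self.max_restarts:
            self.restarts += 1
            self.start()
            return "restarted"
        return "crashed"

    def stop(self) -> str:
        """Ask the child to stop; kill it if it ignores the request."""
        if self.child is None or self.child.poll() is not None:
            return "already stopped"
        self.child.terminate()
        try:
            self.child.wait(timeout=self.stop_timeout_s)
            return "stopped gracefully"
        except subprocess.TimeoutExpired:
            # The Stop transition must finish even if the child hangs.
            self.child.kill()
            self.child.wait()
            return "killed after timeout"
```

For example, `ApplicationSupervisor([sys.executable, "-c", "import time; time.sleep(60)"])` starts a long-running child whose `stop()` returns within the configured timeout either way, which mirrors the timeout-then-finish Stop transition on the slide.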

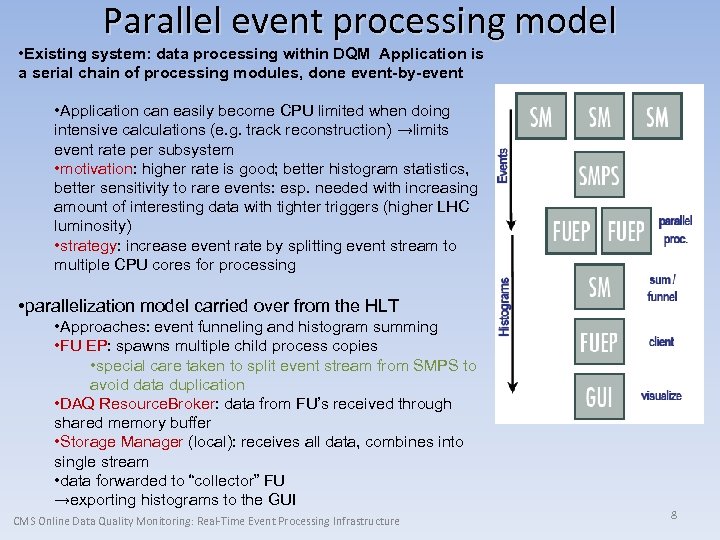

Parallel event processing model

• Existing system: data processing within a DQM Application is a serial chain of processing modules, done event-by-event
• Application can easily become CPU limited when doing intensive calculations (e.g. track reconstruction) → limits event rate per subsystem
• Motivation: a higher rate gives better histogram statistics and better sensitivity to rare events; especially needed with the increasing amount of interesting data with tighter triggers (higher LHC luminosity)
• Strategy: increase event rate by splitting the event stream to multiple CPU cores for processing
• Parallelization model carried over from the HLT
• Approaches: event funneling and histogram summing
• FU EP: spawns multiple child process copies
  – special care taken to split the event stream from SMPS to avoid data duplication
• DAQ ResourceBroker: data from FUs received through a shared memory buffer
• Storage Manager (local): receives all data, combines into a single stream
• data forwarded to the “collector” FU → exporting histograms to the GUI
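
The slide notes that the event stream must be split so that no event is processed twice. The slides do not say how the split is done; one simple way to guarantee it, shown here purely as an assumed illustration, is to assign each event to exactly one worker by its event number modulo the number of workers. The function names `assign_worker` and `split_stream` are hypothetical.

```python
from typing import Dict, Iterable, List


def assign_worker(event_number: int, n_workers: int) -> int:
    """Map an event to exactly one worker using its event number."""
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    return event_number % n_workers


def split_stream(event_numbers: Iterable[int],
                 n_workers: int) -> Dict[int, List[int]]:
    """Partition a stream so that every event lands in one share only."""
    shares: Dict[int, List[int]] = {w: [] for w in range(n_workers)}
    for number in event_numbers:
        shares[assign_worker(number, n_workers)].append(number)
    return shares
```

Because the assignment is a pure function of the event number, independent child processes can apply it without coordinating with each other, and the shares are disjoint by construction.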

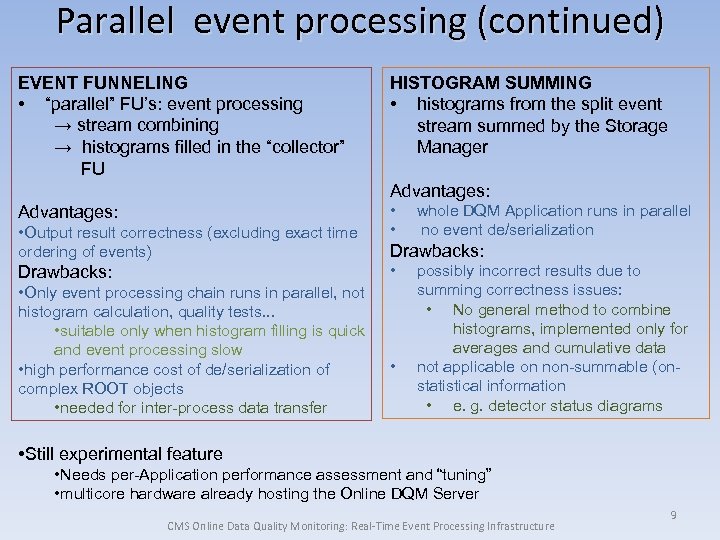

Parallel event processing (continued)

EVENT FUNNELING
• “parallel” FUs: event processing → stream combining → histograms filled in the “collector” FU
Advantages:
• Output result correctness (excluding exact time ordering of events)
Drawbacks:
• Only the event processing chain runs in parallel, not histogram calculation, quality tests...
  – suitable only when histogram filling is quick and event processing slow
• high performance cost of de/serialization of complex ROOT objects
  – needed for inter-process data transfer

HISTOGRAM SUMMING
• histograms from the split event stream summed by the Storage Manager
Advantages:
• whole DQM Application runs in parallel
• no event de/serialization
Drawbacks:
• possibly incorrect results due to summing correctness issues:
  – no general method to combine histograms; implemented only for averages and cumulative data
  – not applicable to non-summable (non-statistical) information, e.g. detector status diagrams

• Still an experimental feature
• Needs per-Application performance assessment and “tuning”
• multicore hardware already hosting the Online DQM Server
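
The summing correctness issue can be made concrete with a short Python sketch. It is not the Storage Manager code: the names `sum_counts`, `MeanBin`, and `merge_means` are hypothetical, and the sketch only covers the two cases the slide says are implemented (cumulative data and averages). Counts add bin by bin, while averages must be weighted by the number of entries behind each partial result.

```python
from dataclasses import dataclass
from typing import List


def sum_counts(parts: List[List[int]]) -> List[int]:
    """Bin-by-bin sum; valid for cumulative (counting) histograms."""
    if not parts:
        return []
    n_bins = len(parts[0])
    if any(len(p) != n_bins for p in parts):
        raise ValueError("histograms must share the same binning")
    return [sum(column) for column in zip(*parts)]


@dataclass
class MeanBin:
    mean: float   # average value seen by one worker
    entries: int  # number of entries behind that average


def merge_means(parts: List[MeanBin]) -> MeanBin:
    """Combine per-worker averages, weighting each by its entries."""
    total = sum(p.entries for p in parts)
    if total == 0:
        return MeanBin(0.0, 0)
    weighted = sum(p.mean * p.entries for p in parts)
    return MeanBin(weighted / total, total)
```

A status-like quantity (for example a per-channel on/off flag) has no such rule: adding or averaging the flags from several workers does not produce a meaningful flag, which is why the slide lists non-statistical information as not applicable.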

Summary

• Online (and offline) DQM was in place and working robustly on day 1 of the LHC and has proven a cornerstone of CMS data-taking efficiency and data certification (selection of runs for analysis).
• Up-to-date detector information (and a history of runs) is available to the experiment through real-time event processing
• Infrastructure: application building blocks (FU EP, SMPS, SM...) put together by RCMS configuration
• Built-in robustness (fault-tolerant design) → less problem-solving for shifters and experts (valuable in night hours)
• Fast and flexible update policy allows up-to-date code use in production → important in a fast changing environment (early data-taking period...)
• Limitations of the existing system (processing power) are being looked at; extensions are being developed (still experimental)

→ Aaron Soha: Web Based Monitoring in the CMS Experiment at CERN

BACKUP

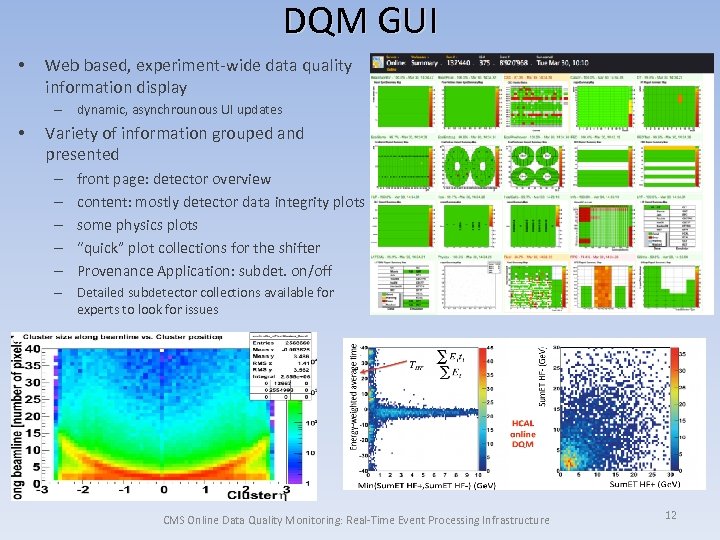

DQM GUI

• Web based, experiment-wide data quality information display
  – dynamic, asynchronous UI updates
• Variety of information grouped and presented
  – front page: detector overview
  – content: mostly detector data integrity plots, some physics plots
  – “quick” plot collections for the shifter
  – Provenance Application: subdet. on/off
  – detailed subdetector collections available for experts to look for issues
