c33f23f5f4d3751c7a57bf03f6137aa6.ppt

- Number of slides: 25

CM HOW-TO DETAILED DISCUSSION

OBJECTIVE
- Upon completion of this training you will demonstrate an understanding of how to perform a CM evaluation.

REVIEW QUESTIONS
- Why are both a macro and a micro approach taken when evaluating CM?
- Describe Phase 1 and Phase 2 work at INPO.
- What is the relationship of Appendix 2 to Appendix 1 of the How-To?
- How should Appendix 1 and 2 be used during the evaluation?
- How might the perception of margin differ between engineering and operators?
- What is the main difference in the CM delta assessment sheet?

REVIEW QUESTIONS
- Why are Phase 1 and 2 key to a successful CM evaluation?
- Of what value is the CM questionnaire?
- Why must a CM evaluator always keep in mind the status of the enablers?
- What are some bases for limiting on-site scope?
- Explain the difference between a fundamental and a high-level attribute.
- For what two functional areas must the CM evaluator/analyst determine if a focus is needed on-site?

CM HOW-TO
- CM is a broad area to evaluate
- Many plant functional departments support CM

APPROACH
- Method based on 3 pilots
- Addressing enablers/attributes
  – General approach
  – Macro & micro approach
  – 1st and 2nd pilots addressed all attributes
  – 3rd pilot limited work on site (reduced team size); station buy-in on site scope
- Must narrow scope of site work
- Must identify issues early for E3 success
- FOPs are the organizational weaknesses
- Must make early call on functional areas

TWO PHASES OF REVIEW/ANALYSIS AT INPO: KEYS TO SUCCESS
- Phase 1
- Phase 2
  – Determine focus areas
  – Review detailed documents
  – Determine additional specific information needed
  – Prepare details for site work

PHASE 1
- Review normally requested info
  – Includes CM questionnaire
  – Determine vertical reviews and potential issues
  – Request specific documents
  – Document examples: ODs, DBDs, mods, AOPs, NOPs, engineering evaluations, 50.59s, calcs, complete condition reports, specs, program documents, etc.

PHASE 2
- Review specific documents
- Limit on-site scope
  – Call on functional focus (RE & DE)
  – Enablers/attributes not being pursued on site
  – Focus areas
- Develop preliminary PDSs
- Determine site activities

PHASE 2 Cont’d
- Vertical slice (potential for new issues) example
- Status enabler/attribute list
- Evaluation plan 95% complete
- Functional area focus call

PILOT EXPERIENCE
- It took 10 man-days for Phases 1 & 2
- Counterpart relationship is key to a successful CM evaluation

OBSERVATIONS
- Observation for each enabler
- Team Manager reports to site management on an enabler basis
- Paints a better CM picture
- CM evaluator owns CM enabler observations

COUNTERPARTS
- Counterpart team part of eval team
- Continually reinforce E3 process (counterpart appreciation of CM effect on other departments)
- Explain precursor AFIs
- Explain less focus on examples and more on whys

HEADS UP
- Expected to provide input to management model (late first week)
- Key in discussing whys
- Mark OEO bubble chart to indicate strong or weak areas by 2nd Thursday (do not assess)
- Debrief as a team (focus on whys – example)

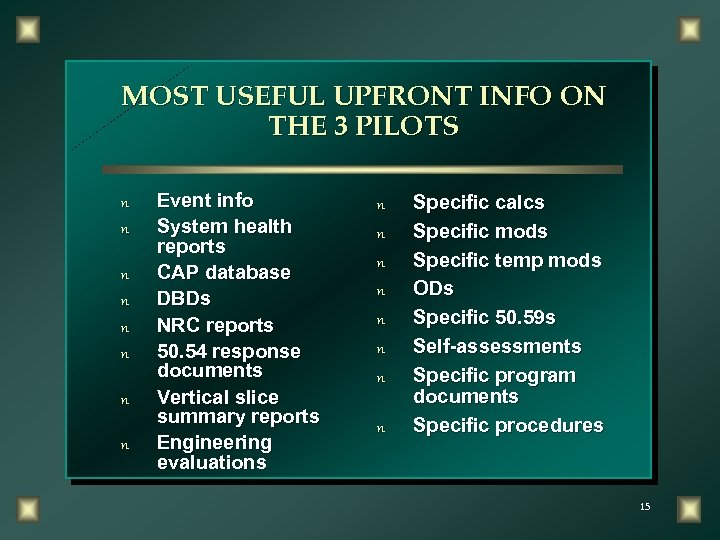

MOST USEFUL UPFRONT INFO ON THE 3 PILOTS
- Event info
- System health reports
- CAP database
- DBDs
- NRC reports
- 50.54 response documents
- Vertical slice summary reports
- Engineering evaluations
- Specific calcs
- Specific mods
- Specific temp mods
- ODs
- Specific 50.59s
- Self-assessments
- Specific program documents
- Specific procedures

HOW TO WALKTHROUGH
- Main document (Key Points)
  – Must narrow scope of work on site
  – Have observations and preliminary PDSs
  – INPO must status all OEOs to utility CEO
  – Responsible for functional area calls on RE and DE
  – Analysis at INPO is part of evaluation
  – Fundamental and high level attributes
  – Micro and Macro approach
  – CM performance problems (example)

ATTACHMENT 1, EVALUATION APPROACH
- An attempt to get to specific actions
  – Vertical slice
  – Specific activities
  – Program reviews
  – Process reviews
  – Passive component reviews
  – Power Uprates
  – Operating Margins

ATTACHMENT 2, ENABLER/ATTRIBUTE LIST
- Cross reference to info request items and attachment 1 items
- Suggested actions
- Attribute meaning
- Status during eval

ATTACHMENT 3, MARGIN MODEL
- Common terminology for discussions with counterparts
- Based on NSAC/125
- Does not include safety margin terminology

ATTACHMENT 4, CM QUESTIONNAIRE
- Analyze for trends and insights
- Pilot results
- Share results with counterpart (opportunity to get counterparts involved)
- Support services puts data on Excel spreadsheet

ATTACHMENT 5, INFORMATION REQUEST
- A lot of information to look at during Phase 1

ATTACHMENT 6, CM PROGRAMS
- Pilot evaluations were based on margin management
- Going forward, focus is on specific programs (listed in Attachment 1)
- Going forward, evaluate programmatic aspects also
- Evaluate programs identified in Attachment 1 (depth of review should be based on indications of weaknesses)
- Other programs identified in Attachment 6 should be evaluated based on review/analysis of plant info

ATTACHMENT 7, CM ASSESSMENT
- Process similar to existing process
- Evaluator to provide information to the group
- Delta sheet to contain a narrative for each enabler (see Attachment 9)

ASSESSMENT CRITERIA, ATTACHMENT 8
- Latent weaknesses that are not affecting plant performance (1 & 2)
- CM weaknesses are affecting plant performance (3-5)

DISCUSSION EXAMPLES
  – CNS EQ
  – Comanche Peak AFW mod
  – Seabrook equipment data base
  – Comanche Peak power uprate
  – CNS CCW
  – Comanche Peak fuel
