c450a2d6de1f03f9fe629118fb9f4dd3.ppt

- Number of slides: 19

CLIVAR GSOP Coordinated Quality-Control of Global Subsurface Ocean Climate Observations "End-user perspective, from measurement to research" Alison Macdonald (WHOI) & Jim Swift (SIO) with assistance from Steve Diggs (SIO) "an international collaborative project... maximizing the quality and consistency of the historical ocean subsurface temperature data..." "move from... independent automated quality control... to a unified and more effective system based on a semi-automated approach..." (An effective semi-automated approach may already exist, but it may not address some calibration/correction errors.)

End User Considerations • Data discovery – finding the data of interest • Flexible data access in both x/y/z/t and also in terms of quality/suitability for the intended use • Seamless continual updating • Documentation/Metadata
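As an illustration of access "in both x/y/z/t and also in terms of quality", the following is a minimal sketch, not part of the original slides. The `Profile` record, the `select` function, and the three-level quality code are all hypothetical and stand in for whatever a real data system would define.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Profile:
    lon: float
    lat: float
    max_depth_m: float
    day: date
    quality: int  # hypothetical code: 1 = good, 2 = questionable, 3 = bad


def select(profiles, lon_range, lat_range, min_depth_m, date_range, accepted_quality):
    """Return profiles inside the box and time window whose quality code is accepted."""
    return [
        p for p in profiles
        if lon_range[0] <= p.lon <= lon_range[1]
        and lat_range[0] <= p.lat <= lat_range[1]
        and p.max_depth_m >= min_depth_m
        and date_range[0] <= p.day <= date_range[1]
        and p.quality in accepted_quality
    ]


# Made-up example records, for illustration only.
profiles = [
    Profile(-30.0, 45.0, 2000.0, date(1995, 6, 1), 1),
    Profile(-31.0, 46.0, 500.0, date(1995, 7, 1), 2),
    Profile(10.0, -20.0, 3000.0, date(2001, 1, 15), 1),
]

window = (date(1990, 1, 1), date(2000, 1, 1))
# Same region and period, two different suitability thresholds.
strict = select(profiles, (-40, -20), (40, 50), 0.0, window, {1})
relaxed = select(profiles, (-40, -20), (40, 50), 0.0, window, {1, 2})
```

The same spatial and temporal query returns a different subset depending on which quality codes the user accepts for the intended use.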

Data Integration • = Joint use of disparate data types, e.g. locating and using all temperature profile data from a certain region and time. • Typically initiated by scientific oversight bodies, not the data specialists for the disparate individual measurement types.

Data Integration • Data origins must remain identifiable due to inherent quality/use differences. • Improved data information and searchability are useful first outcomes. • Integration must include ‘Reconciliation’ – Special attention must be given to resolving differences between data centers in crucial metadata for the same data, such as the expedition/cruise designators, cruise dates, etc. – Very useful to include techs at meetings
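A first step in reconciliation can be sketched as a field-by-field comparison of the metadata two centers hold for the same cruise. This is an illustrative sketch only; the field names, the `metadata_differences` helper, and the example records are hypothetical.

```python
def metadata_differences(record_a, record_b, fields):
    """Return {field: (value_a, value_b)} for every field on which the records disagree."""
    return {
        f: (record_a.get(f), record_b.get(f))
        for f in fields
        if record_a.get(f) != record_b.get(f)
    }


# Made-up records for the same cruise as held by two hypothetical data centers.
center_a = {
    "cruise_id": "EXAMPLE-01",
    "start_date": "1994-03-02",
    "end_date": "1994-04-10",
    "ship": "Example Ship",
}
center_b = {
    "cruise_id": "EX01",
    "start_date": "1994-03-02",
    "end_date": "1994-04-11",
    "ship": "Example Ship",
}

diffs = metadata_differences(
    center_a, center_b, ["cruise_id", "start_date", "end_date", "ship"]
)
for field, (a, b) in diffs.items():
    print(f"{field}: center A has {a!r}, center B has {b!r}")
```

A report like this only flags the disagreements; deciding which value is correct still needs people who know the cruise.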

Quality Control The need for data quality examination is based upon the experience of compilers of global data sets, who know that many data (including some well-known data) require adjustments (i.e. corrections for calibrations) and identification (preferred) or removal (acceptable if necessary) of bad and questionable values.

Quality Control • The QC process can differ depending on data type. • It is best, when possible, for data originators to assign the quality codes (following a sanctioned scheme) for each measured parameter. • The work of external Data Quality Experts on past data must usually proceed without guidance and information from data originators. • Integrate quality codes with data & documentation. • When underlying definitions are clear, quality codes can be translated into other schemes.
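The last point, translating quality codes between schemes, can be sketched by routing each code through its written definition. Both schemes below are hypothetical and invented for this example; they are not any sanctioned scheme.

```python
# Hypothetical scheme A: numeric codes with written definitions.
SCHEME_A_MEANING = {0: "not examined", 1: "good", 2: "questionable", 3: "bad"}

# Hypothetical scheme B: letter codes keyed by the same definitions.
SCHEME_B_CODE = {"not examined": "U", "good": "G", "questionable": "Q", "bad": "B"}


def translate_a_to_b(code_a):
    """Translate a scheme A code to scheme B via the shared definition."""
    if code_a not in SCHEME_A_MEANING:
        # Without a clear definition there is nothing reliable to translate.
        raise ValueError(f"scheme A code {code_a!r} has no definition")
    return SCHEME_B_CODE[SCHEME_A_MEANING[code_a]]


codes_a = [1, 1, 2, 3, 0]
codes_b = [translate_a_to_b(c) for c in codes_a]
print(codes_b)
```

Going through the definition rather than mapping code to code directly makes the dependence on "clear underlying definitions" explicit: an undefined code is rejected instead of guessed.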

Documentation • Key to creation of a reference-quality data set • Provides huge added value • and a long-term service life. The most important documentation (key 'metadata' elements) should be permanently attached to the data.

Documentation (Metadata) Maintaining data pedigree is crucial, so • Metadata should be embedded in the data whenever possible – as succinct data histories – and version numbers – further details can be kept in a metadatabase
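One possible way to keep a succinct history and a version number attached to the data is sketched below. The dictionary layout and the `new_dataset` and `revise` helpers are assumptions made for illustration, not a format described in the slides.

```python
import json


def new_dataset(values, origin):
    """Create version 1 of a dataset with its metadata embedded alongside the values."""
    return {
        "metadata": {
            "origin": origin,
            "version": 1,
            "history": ["v1: original submission"],
        },
        "values": list(values),
    }


def revise(dataset, new_values, note):
    """Return a new version of the dataset; the input is left unchanged."""
    meta = dataset["metadata"]
    version = meta["version"] + 1
    return {
        "metadata": {
            "origin": meta["origin"],
            "version": version,
            "history": meta["history"] + [f"v{version}: {note}"],
        },
        "values": list(new_values),
    }


# Made-up values and origin label, for illustration only.
original = new_dataset([10.0, 10.5], "hypothetical cruise EX01")
revised = revise(original, [10.0, 10.4], "calibration correction applied")

# The pedigree travels with the data when the file is written out.
serialized = json.dumps(revised)
```

Because the history and version are part of the same object as the values, any copy of the file carries its own pedigree; fuller details can still live in a separate metadatabase.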

Data Management Too important to be left to data managers Both active scientists and technical teams must be involved in data management and its oversight

Data Management • Recognition of issues including lack of history & limitations in funding and available time • Shortage of data quality experts (busy doing their ‘day job’) • Keep in mind technical staff's competing assignments and their cultural and technical origins • Avoid only providing pages of requirements • Human nature is not changed by issuance of requirements

Data Management • What can one offer a data originator or quality examiner in return for data or scarce time? • A data committee, a DAC system, and a team focused on data discovery are a strong trio. • Best to work closely with individual data providers. Many can & do perform credible internal data quality control. • Community data examination works when well-defined

Data Management Priorities • Acquire data & metadata (documentation) • Secure the data and metadata • Provide data access – ease of discovery and access – easy to use formats (e.g., both ASCII & netCDF) – If you can read one data file you can read every data file. • Maintain data ‘pedigree’ (version control) • Learn about data holdings (especially new holdings) from & for research community. • Know who is using the data, what their needs are, and what features they would like to see in the dataset and how it is queried.

(data management priorities, continued) • Strategic alliances between tech teams and between teams and data management center are important • There is no reason not to have options for data in various formats – can even write them on the fly if the translators are easy to understand and use • Improved metadata and cruise documentation • Can provide links to originator’s websites
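Writing formats on the fly can be sketched with one small translator per format behind a single `export` call. This is an illustrative sketch: the function names and column names are hypothetical, and CSV and JSON from the Python standard library stand in here for the ASCII and netCDF options mentioned above.

```python
import csv
import io
import json


def to_ascii(rows, columns):
    """Write rows as comma-separated ASCII text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(columns)
    for row in rows:
        writer.writerow([row[c] for c in columns])
    return buf.getvalue()


def to_json(rows, columns):
    """Write rows as a JSON list of objects restricted to the requested columns."""
    return json.dumps([{c: row[c] for c in columns} for row in rows])


# Adding a format means adding one small, readable translator here.
TRANSLATORS = {"ascii": to_ascii, "json": to_json}


def export(rows, columns, fmt):
    """Translate the same in-memory rows into the requested format."""
    if fmt not in TRANSLATORS:
        raise ValueError(f"unsupported format: {fmt!r}")
    return TRANSLATORS[fmt](rows, columns)


# Made-up rows, for illustration only.
rows = [{"depth_m": 10, "temp_c": 15.2}, {"depth_m": 20, "temp_c": 14.8}]
ascii_text = export(rows, ["depth_m", "temp_c"], "ascii")
json_text = export(rows, ["depth_m", "temp_c"], "json")
```

Every format is generated from the same stored rows, so a user who can read one file of a given format can read every file of that format.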

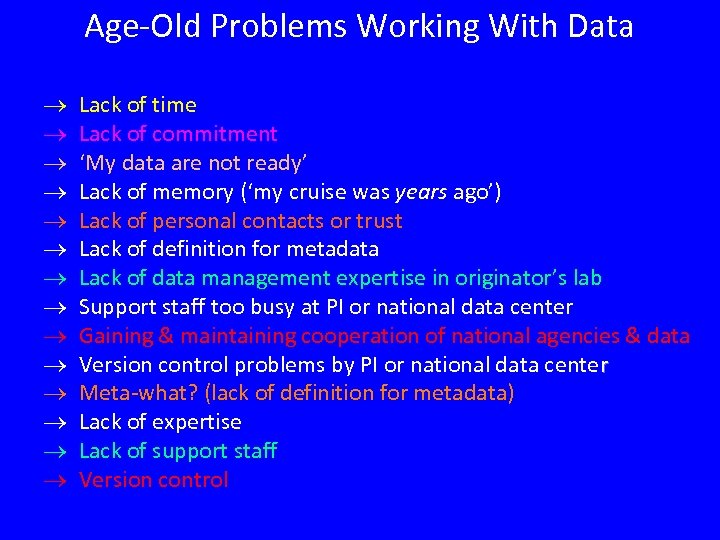

Age-Old Problems Working With Data • Lack of time • Lack of commitment • ‘My data are not ready’ • Lack of memory (‘my cruise was years ago’) • Lack of personal contacts or trust • Lack of definition for metadata • Lack of data management expertise in originator’s lab • Support staff too busy at PI or national data center • Gaining & maintaining cooperation of national agencies & data • Version control problems by PI or national data center • Meta-what? (lack of definition for metadata) • Lack of expertise • Lack of support staff • Version control

Is It Possible To: • Agree upon the design of a system which satisfies all/most users? • Quantify the cost of this not-yet-conceived data system in adequate detail? • Find funds to construct and maintain this not-yet-conceived data system?

What’s Needed • Agree on a firm, written definition of the project. • Assign a value to the project in terms of priority and $$. • Design a low-cost system composed of existing semi-permanent data sites aggregated through a single well-designed portal. • Group data intelligently; keep synthesis efforts in mind. • Include deadlines and milestones • Design the system so that it's never "finished" • Work should continue indefinitely (a "finished" web/data project will be obsolete before it’s complete)

Don't worry about non-inclusiveness or partial failure. It is inevitable. But do work hard to limit disappointment.

Take Home Message • Focus on improving the usability of the data to make products, not on making a product • Documentation/Metadata • Data Management: Requires Data Experts and Scientists • Flexible quality control • Data discovery - flexible and continuous updating of the product • Recognition of limitations including lack of history, funding cycles and available time
