be61081f074ee7195ca6d5ded7c640c7.ppt

- Number of slides: 137

Client/Server Distributed Systems
240-322, Semester 1, 2005-2006

8. Sockets (2)

Objectives
- look at concurrent servers and clients (using TCP)
- look at other networking models

240-322 Cli/Serv.: sockets 2/8

Overview 1. Concurrent Servers 2. Concurrent Servers with fork() 3. Concurrent Servers with select() 4. Data Distribution 5. A Processor Farm 240 -322 Cli/Serv. : sockets 2/8 2

1. Concurrent Servers v An iterative server makes other clients wait while it processes the current client. v If the connection is long-lived, the other clients must wait for a long time. – e. g. the hangman server 240 -322 Cli/Serv. : sockets 2/8 continued 3

- Solution: a concurrent server can process many clients at once
  - no waiting is necessary
- Two basic concurrency approaches:
  - use fork() to create child processes
  - use select() to multiplex (switch) between clients

2. Concurrent Servers with fork() v The server forks a child to handle each client. v The best approach if: – each client uses a lot of complex local data on the server (e. g. in hangman). Each child process automatically makes its own copy of the data. – the clients do not access ‘global’ server data or communicate with each other through the server u global data/comms requires shared memory, pipes, semaphores, etc. 240 -322 Cli/Serv. : sockets 2/8 5
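The point that each child automatically gets its own copy of the server's data can be checked with a small sketch. The variable `lives` and the function `lives_after_child()` are hypothetical names used only for this illustration; they are not part of the hangman server.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical per-client data; stands in for the hangman game state. */
static int lives = 12;

/* Fork a child that changes its copy of `lives`, then report the value
   the parent still sees. Returns -1 if fork() fails. */
int lives_after_child(void)
{
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {            /* child: works on its own copy */
        lives = 0;
        _exit(0);
    }
    waitpid(pid, NULL, 0);     /* parent: collect the child */
    return lives;              /* not affected by the child's change */
}
```

Calling `lives_after_child()` in the parent returns 12, because the child's assignment only touched the child's copy. This is also why clients that need to share data cannot rely on ordinary variables after a fork().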

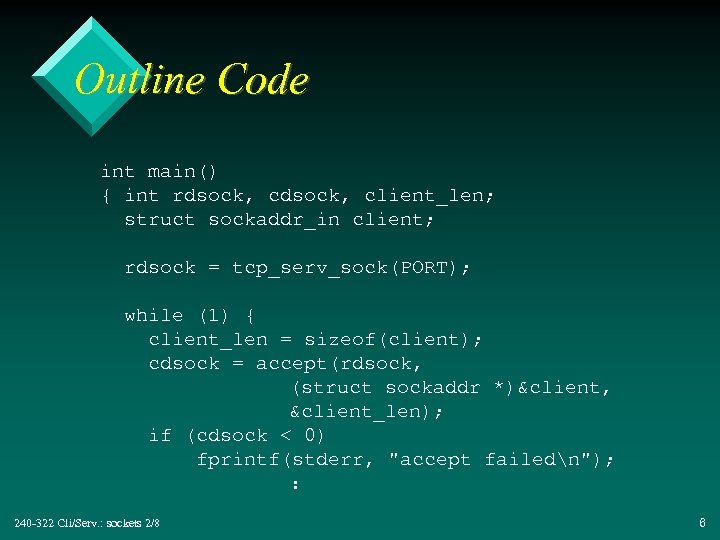

Outline Code

    int main()
    {
      int rdsock, cdsock, client_len;
      struct sockaddr_in client;

      rdsock = tcp_serv_sock(PORT);
      while (1) {
        client_len = sizeof(client);
        cdsock = accept(rdsock, (struct sockaddr *)&client, &client_len);
        if (cdsock < 0)
          fprintf(stderr, "accept failed\n");
        :

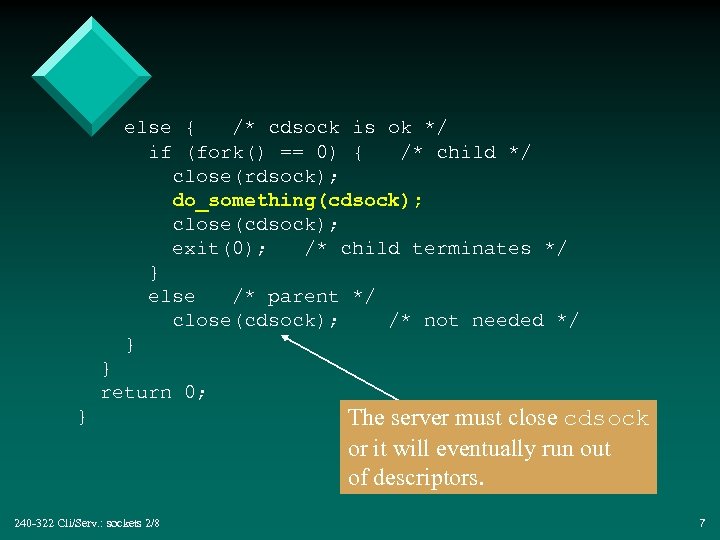

        else {   /* cdsock is ok */
          if (fork() == 0) {    /* child */
            close(rdsock);
            do_something(cdsock);
            close(cdsock);
            exit(0);            /* child terminates */
          }
          else                  /* parent */
            close(cdsock);      /* not needed */
        }
      }
      return 0;
    }

The server (parent) must close cdsock or it will eventually run out of descriptors.

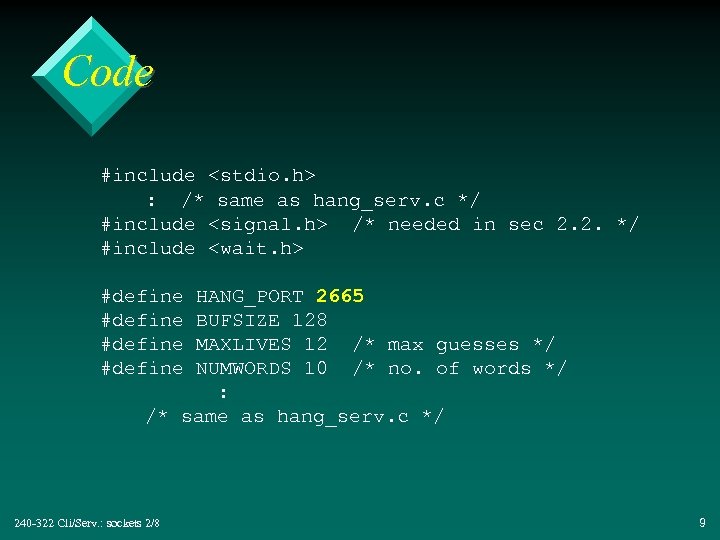

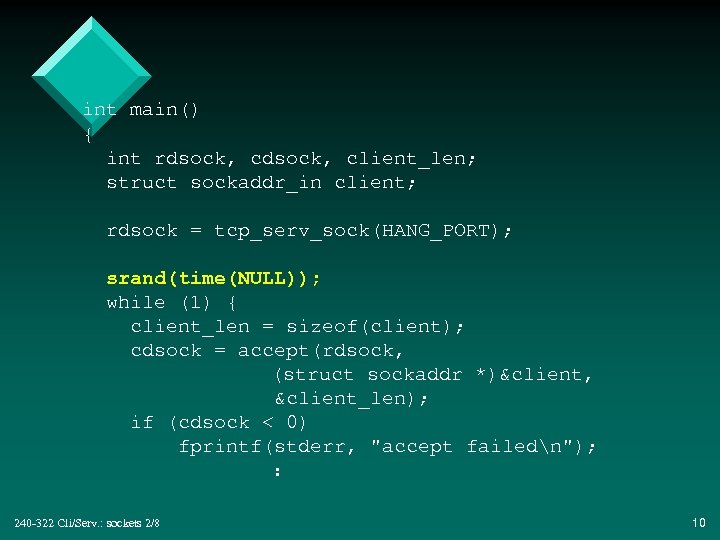

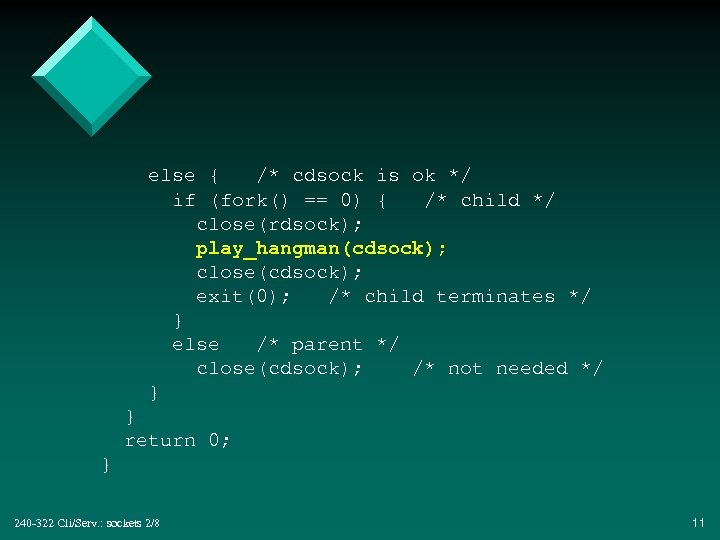

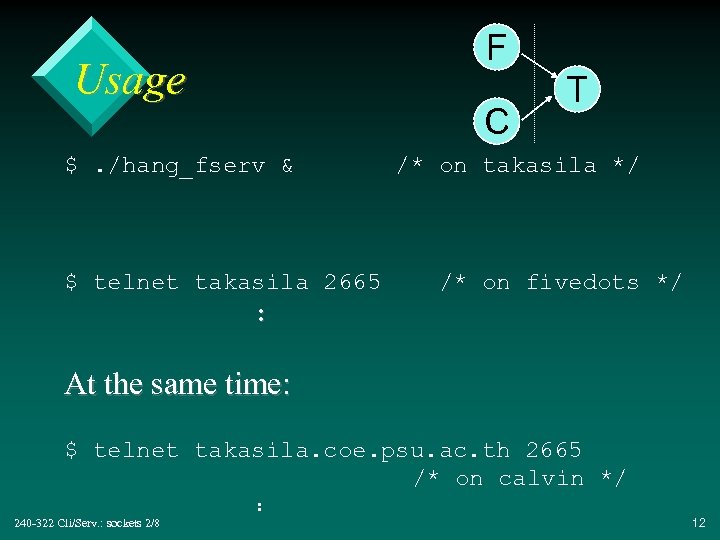

2.1. hang_fserv.c
- A concurrent version of the hangman server, using fork().
- Runs on takasila.coe.psu.ac.th (172.30.0.82) at port 2665.

Code

    #include <stdio.h>
       :                      /* same as hang_serv.c */
    #include <signal.h>       /* needed in sec 2.2 */
    #include <wait.h>

    #define HANG_PORT 2665
    #define BUFSIZE 128
    #define MAXLIVES 12       /* max guesses */
    #define NUMWORDS 10       /* no. of words */
       :                      /* same as hang_serv.c */

    int main()
    {
      int rdsock, cdsock, client_len;
      struct sockaddr_in client;

      rdsock = tcp_serv_sock(HANG_PORT);
      srand(time(NULL));
      while (1) {
        client_len = sizeof(client);
        cdsock = accept(rdsock, (struct sockaddr *)&client, &client_len);
        if (cdsock < 0)
          fprintf(stderr, "accept failed\n");
        else {   /* cdsock is ok */
          if (fork() == 0) {    /* child */
            close(rdsock);
            play_hangman(cdsock);
            close(cdsock);
            exit(0);            /* child terminates */
          }
          else                  /* parent */
            close(cdsock);      /* not needed */
        }
      }
      return 0;
    }

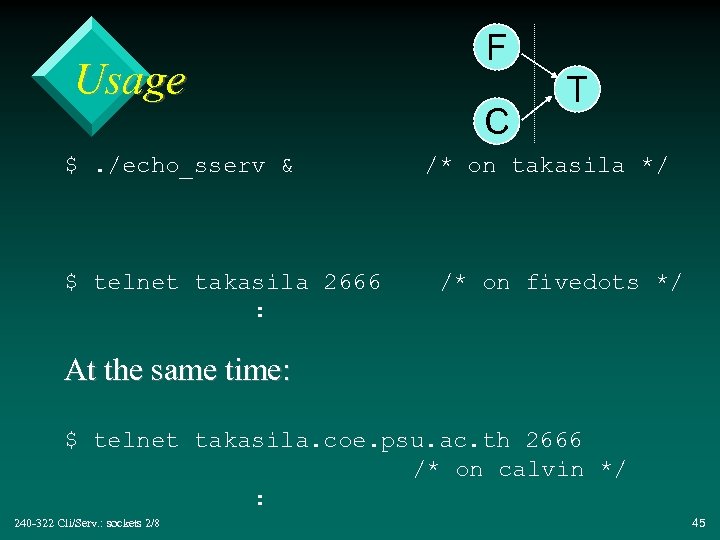

Usage

    $ ./hang_fserv &                        /* on takasila */
    $ telnet takasila 2665                  /* on fivedots */
       :

At the same time:

    $ telnet takasila.coe.psu.ac.th 2665    /* on calvin */
       :

2. 2. Problems with Zombies v The server parent does not wait for its children to terminate: – they will become zombies – their exit information will fill up the process table – the information only disappears when the parent exits 240 -322 Cli/Serv. : sockets 2/8 13

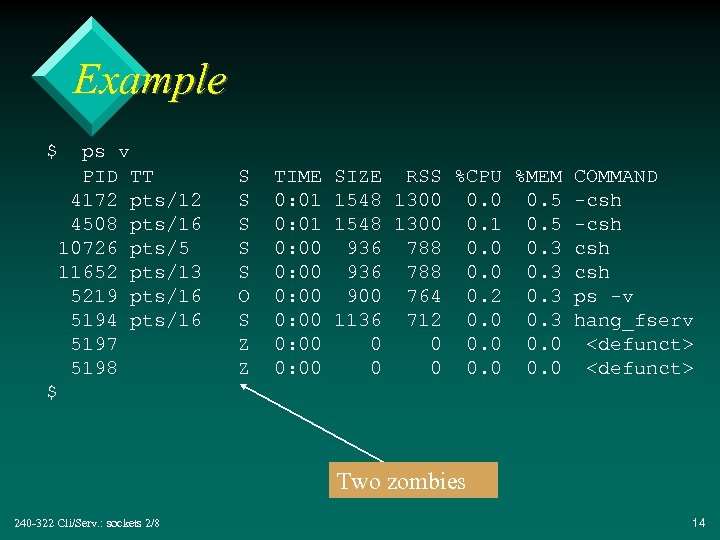

Example $ ps v PID TT 4172 pts/12 4508 pts/16 10726 pts/5 11652 pts/13 5219 pts/16 5194 pts/16 5197 5198 S S S O S Z Z TIME 0: 01 0: 00 0: 00 SIZE RSS %CPU %MEM COMMAND 1548 1300 0. 5 -csh 1548 1300 0. 1 0. 5 -csh 936 788 0. 0 0. 3 csh 900 764 0. 2 0. 3 ps -v 1136 712 0. 0 0. 3 hang_fserv 0 0 0. 0 <defunct> $ Two zombies 240 -322 Cli/Serv. : sockets 2/8 14

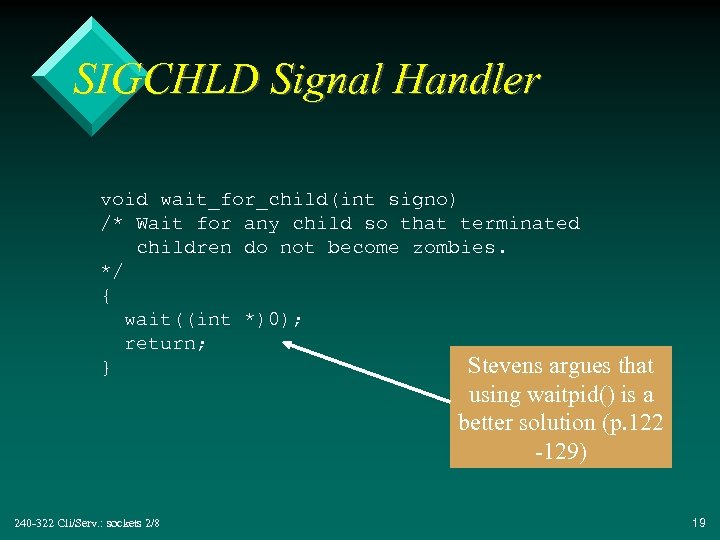

Zombie Removal v Modify the parent to catch the SIGCHLD signal: – it is issued when a child terminates – have the handler call wait() v Problem: SIGCHLD can make certain system calls return with an error: – e. g. accept(), read(), write() – must add extra checks to restart these calls if they are interrupted 240 -322 Cli/Serv. : sockets 2/8 15

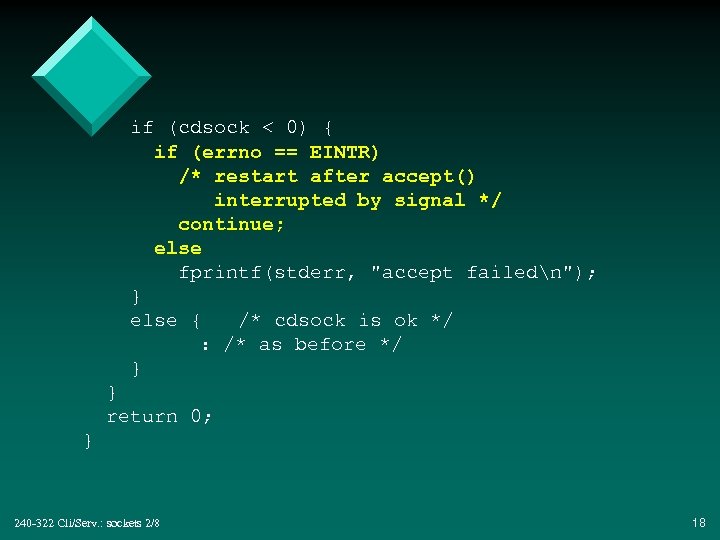

Zombie Removal in hang_fserv. c v In hang_fserv. c, the server spends most time waiting in accept(), so we will only add error checking to that call. v When interrupted by a signal, accept() sets errno to have the value EINTR. 240 -322 Cli/Serv. : sockets 2/8 16

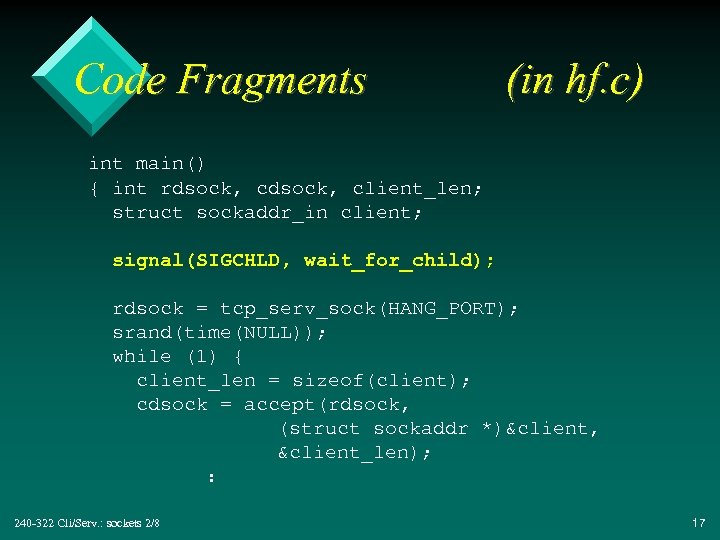

Code Fragments (in hf.c)

    int main()
    {
      int rdsock, cdsock, client_len;
      struct sockaddr_in client;

      signal(SIGCHLD, wait_for_child);
      rdsock = tcp_serv_sock(HANG_PORT);
      srand(time(NULL));
      while (1) {
        client_len = sizeof(client);
        cdsock = accept(rdsock, (struct sockaddr *)&client, &client_len);
        if (cdsock < 0) {
          if (errno == EINTR)
            /* restart after accept() interrupted by signal */
            continue;
          else
            fprintf(stderr, "accept failed\n");
        }
        else {   /* cdsock is ok */
          :      /* as before */
        }
      }
      return 0;
    }

SIGCHLD Signal Handler

    void wait_for_child(int signo)
    /* Wait for any child so that terminated
       children do not become zombies. */
    {
      wait((int *)0);
      return;
    }

Stevens argues that using waitpid() is a better solution (p. 122-129).

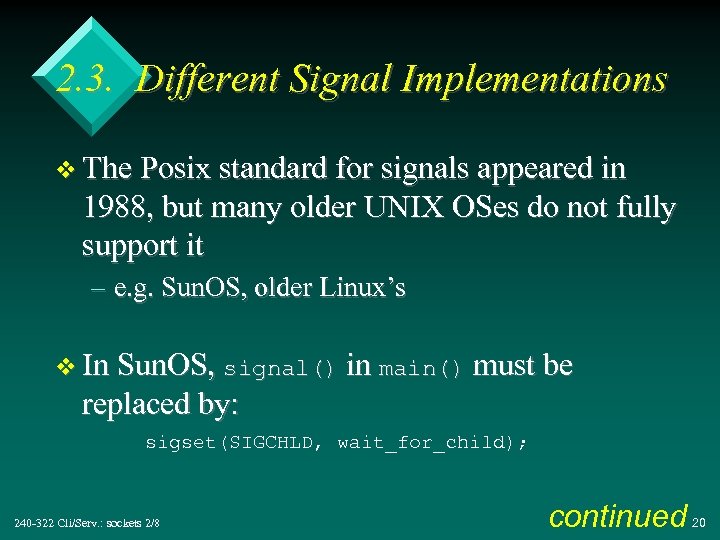

2.3. Different Signal Implementations
- The POSIX standard for signals appeared in 1988, but many older UNIX OSes do not fully support it
  - e.g. SunOS, older versions of Linux
- In SunOS, signal() in main() must be replaced by:

    sigset(SIGCHLD, wait_for_child);

v Debian Linux requires that wait_for_child() be ‘reinstalled’ after each signal is caught. 240 -322 Cli/Serv. : sockets 2/8 21

3. Concurrent Servers with select() v The select() system call can be used by a server to multiplex (switch) between clients – spend a bit of time with each client v Advantage: – the server doesn’t need to fork children, so it uses less resources – easier for data to be shared between client processing parts 240 -322 Cli/Serv. : sockets 2/8 continued 22

v Disadvantages: – the control flow in the server becomes more complex – the server may have to use extra data structures for storing all the clients data 240 -322 Cli/Serv. : sockets 2/8 23

Why are extra data structures needed? v In hang_fserv. c, each client’s data is stored automatically in its own process after a fork(). v Each process has its own information for the guess word, real word, and the number of turns remaining. 240 -322 Cli/Serv. : sockets 2/8 continued 24

v select() does not fork processes, so the server code must include extra data structures for storing all the different client’s data: – e. g. arrays of guess words, actual words, turns remaining; one entry for each client 240 -322 Cli/Serv. : sockets 2/8 25
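One possible layout for these extra data structures is an array of per-client records indexed by socket descriptor. This is only a sketch: the struct, the `MAX_CLIENTS` limit and the three helper functions are assumptions made for illustration, not code from the hangman server.

```c
#include <string.h>

#define MAX_CLIENTS 64     /* assumed cap on descriptors in use */
#define WORD_LEN    32
#define MAXLIVES    12

struct client_state {
    int  in_use;
    char word[WORD_LEN];   /* the real word */
    char guess[WORD_LEN];  /* the guess so far, '-' for unknown letters */
    int  lives;            /* turns remaining */
};

/* One entry per possible descriptor; static storage starts zeroed. */
static struct client_state clients[MAX_CLIENTS];

/* Start a game for the client on descriptor sd.
   Returns 0, or -1 if sd is out of range or the word is too long. */
int client_start(int sd, const char *word)
{
    size_t len = strlen(word);
    struct client_state *c;

    if (sd < 0 || sd >= MAX_CLIENTS || len >= WORD_LEN)
        return -1;
    c = &clients[sd];
    c->in_use = 1;
    strcpy(c->word, word);
    memset(c->guess, '-', len);
    c->guess[len] = '\0';
    c->lives = MAXLIVES;
    return 0;
}

/* Return the state for sd, or NULL if no game is in progress. */
struct client_state *client_find(int sd)
{
    if (sd < 0 || sd >= MAX_CLIENTS || !clients[sd].in_use)
        return NULL;
    return &clients[sd];
}

/* Forget the client when its connection is closed. */
void client_end(int sd)
{
    if (sd >= 0 && sd < MAX_CLIENTS)
        clients[sd].in_use = 0;
}
```

Indexing by descriptor keeps the lookup trivial when select() reports that a descriptor is ready: the server calls `client_find(sd)` and carries on with that client's game.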

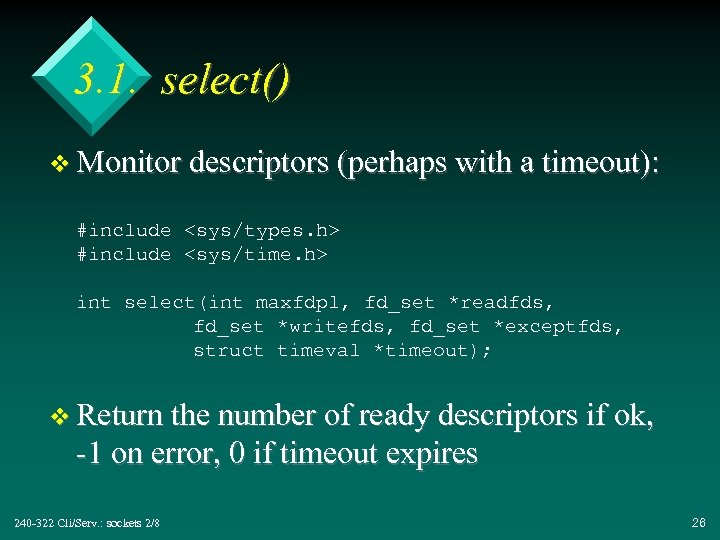

3.1. select()
- Monitor descriptors (perhaps with a timeout):

    #include <sys/types.h>
    #include <sys/time.h>

    int select(int maxfdpl, fd_set *readfds,
               fd_set *writefds, fd_set *exceptfds,
               struct timeval *timeout);

- Returns the number of ready descriptors if ok, -1 on error, 0 if the timeout expires

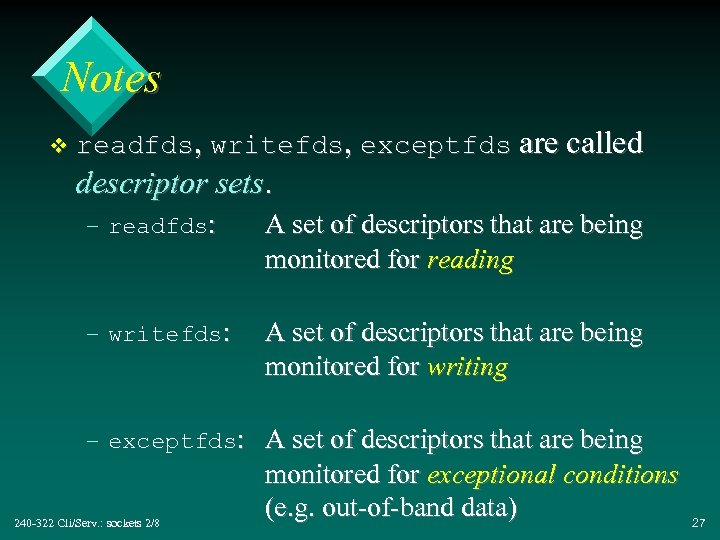

Notes
- readfds, writefds, exceptfds are called descriptor sets.
  - readfds: a set of descriptors that are being monitored for reading
  - writefds: a set of descriptors that are being monitored for writing
  - exceptfds: a set of descriptors that are being monitored for exceptional conditions (e.g. out-of-band data)

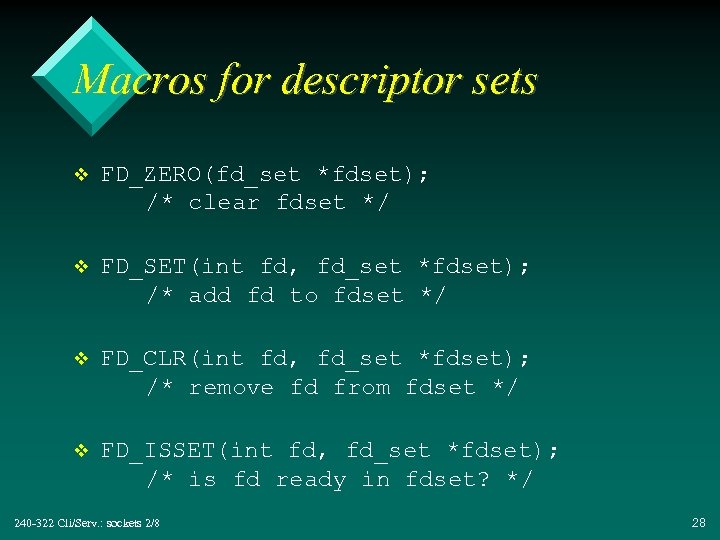

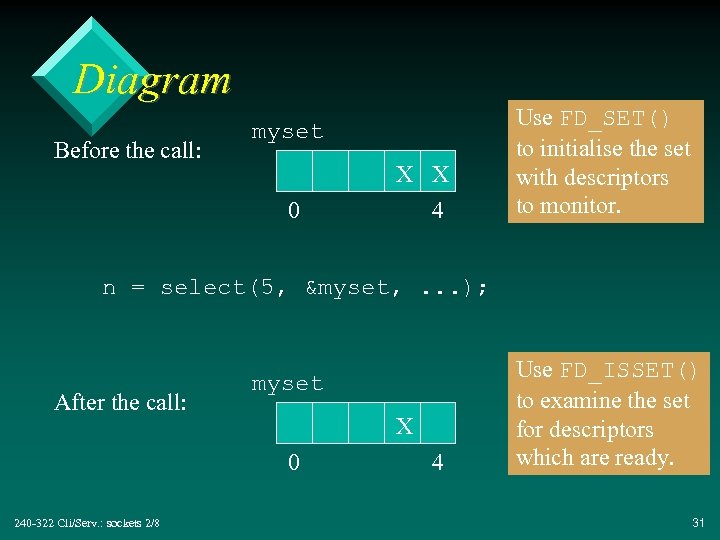

Macros for descriptor sets v FD_ZERO(fd_set *fdset); /* clear fdset */ v FD_SET(int fd, fd_set *fdset); /* add fd to fdset */ v FD_CLR(int fd, fd_set *fdset); /* remove fd from fdset */ v FD_ISSET(int fd, fd_set *fdset); /* is fd ready in fdset? */ 240 -322 Cli/Serv. : sockets 2/8 28

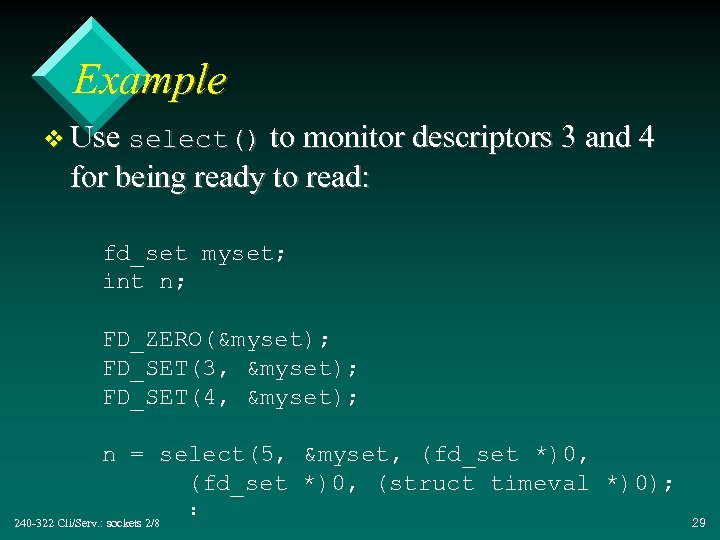

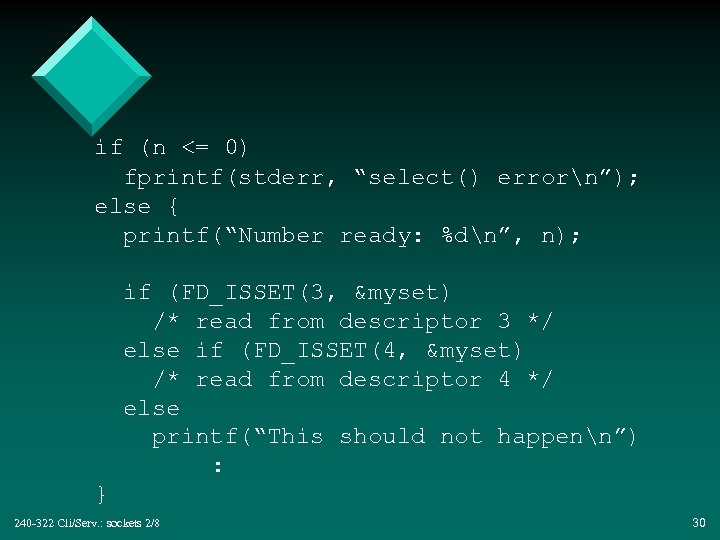

Example
- Use select() to monitor descriptors 3 and 4 for being ready to read:

    fd_set myset;
    int n;

    FD_ZERO(&myset);
    FD_SET(3, &myset);
    FD_SET(4, &myset);
    n = select(5, &myset, (fd_set *)0, (fd_set *)0,
               (struct timeval *)0);

    if (n <= 0)
      fprintf(stderr, "select() error\n");
    else {
      printf("Number ready: %d\n", n);
      if (FD_ISSET(3, &myset))
        { /* read from descriptor 3 */ }
      else if (FD_ISSET(4, &myset))
        { /* read from descriptor 4 */ }
      else
        printf("This should not happen\n");
      :
    }

Diagram
- Before the call: use FD_SET() to initialise myset with the descriptors to monitor (descriptors 3 and 4 are marked).
- The call: n = select(5, &myset, ...);
- After the call: use FD_ISSET() to examine myset for descriptors which are ready (only the ready descriptors are still marked).

Notes v If the descriptor set arguments of select() are (fd_set *)0, then they are ignored. v If the timeout argument is (struct timeval *)0, then select() will wait indefinitely until a descriptor is ready. 240 -322 Cli/Serv. : sockets 2/8 continued 32

v The maxfdpl argument of select() must be (at least) 1 more than the largest descriptor in the sets – all descriptors 0, 1, . . . , maxfdpl-1 in the sets are tested v select() returns the no. of ready descriptors: – does not say which ones – must use FD_ISSET() to check all the descriptors 240 -322 Cli/Serv. : sockets 2/8 continued 33

v select() overwrites its descriptor set arguments to indicate which descriptors are ready: – for this reason, programs usually call select() with a copy of the descriptor set v More details about select(): man select 240 -322 Cli/Serv. : sockets 2/8 34

timeout argument
- Its type:

    struct timeval {
      long int tv_sec;     /* seconds */
      long int tv_usec;    /* micro secs */
    };

- Used to specify a timeout for select(), after which it will return whether or not there are any ready descriptors.
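The notes on copying the descriptor set and on the timeout argument can be combined in one small helper. This is a sketch: `wait_readable()` is a name invented here, and it only watches for readability.

```c
#include <sys/select.h>
#include <sys/time.h>
#include <sys/types.h>
#include <unistd.h>

/* Wait up to ms milliseconds for any descriptor in *watch to become
   readable. *watch is left untouched; the result is written to *ready.
   Returns the number of ready descriptors, 0 on timeout, -1 on error. */
int wait_readable(const fd_set *watch, int maxfdp1, fd_set *ready, long ms)
{
    struct timeval tv;

    tv.tv_sec  = ms / 1000;
    tv.tv_usec = (ms % 1000) * 1000;
    *ready = *watch;      /* select() overwrites its set, so pass a copy */
    return select(maxfdp1, ready, NULL, NULL, &tv);
}
```

A fresh struct timeval is built on every call because some systems modify the timeout argument to show the time left. Passing a null pointer instead of `&tv` would make select() wait indefinitely, as described in the earlier notes.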

3. 2. Example: Concurrent Echo Server v Convert the iterative echo server , echo_serv. c (part 7, slide 36), into a concurrent server using select(). v Since echo clients do not use ‘global’ server data or communicate, a fork() version would be possible as well. 240 -322 Cli/Serv. : sockets 2/8 36

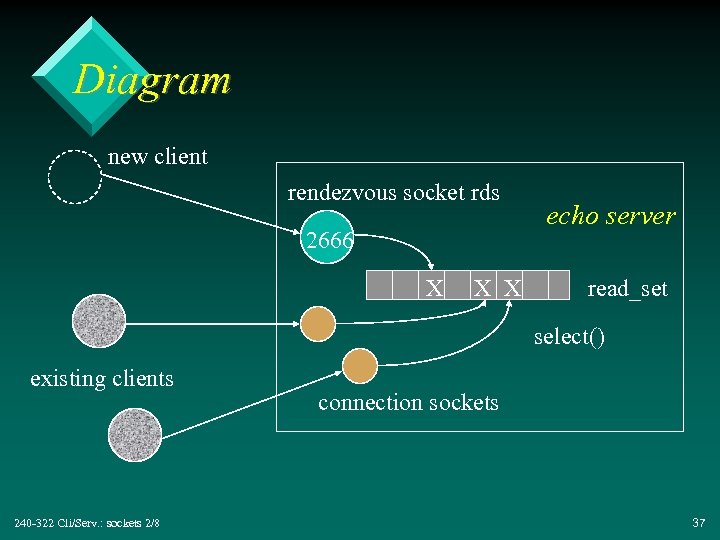

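Diagram
- A new client connects to the rendezvous socket (rds) at port 2666.
- Existing clients are attached to the echo server through connection sockets.
- read_set marks the rendezvous socket and the connection sockets; the echo server passes it to select().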

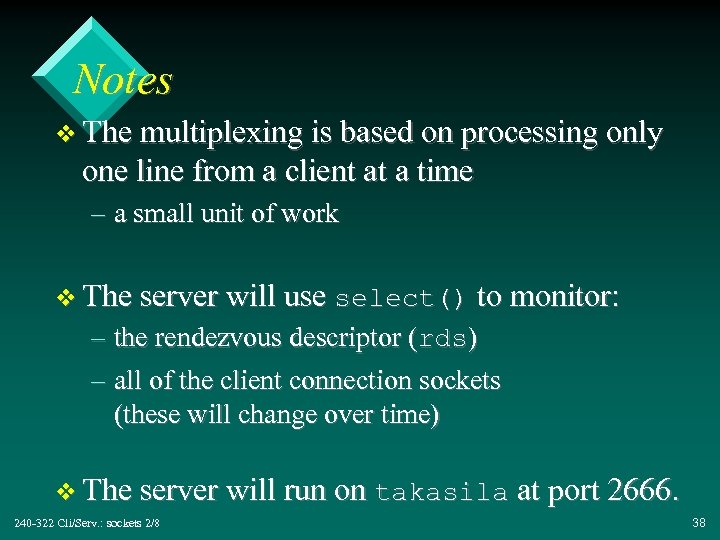

Notes v The multiplexing is based on processing only one line from a client at a time – a small unit of work v The server will use select() to monitor: – the rendezvous descriptor (rds) – all of the client connection sockets (these will change over time) v The server will run on takasila at port 2666. 240 -322 Cli/Serv. : sockets 2/8 38

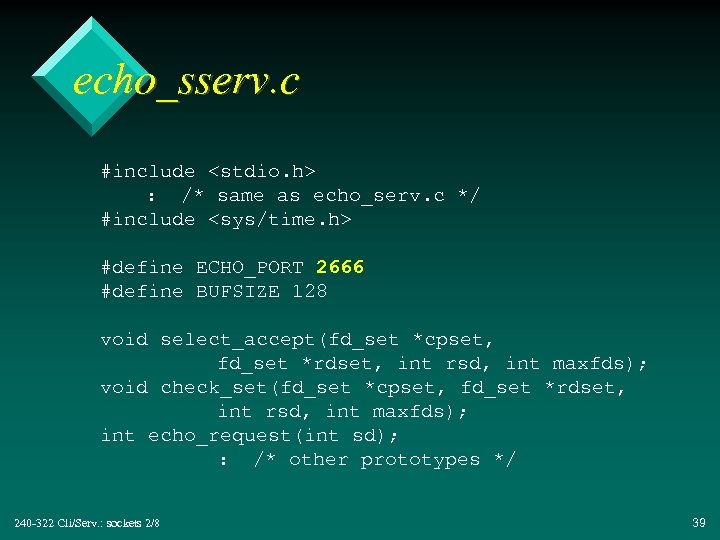

echo_sserv.c

    #include <stdio.h>
       :                     /* same as echo_serv.c */
    #include <sys/time.h>

    #define ECHO_PORT 2666
    #define BUFSIZE 128

    void select_accept(fd_set *cpset, fd_set *rdset, int rsd, int maxfds);
    void check_set(fd_set *cpset, fd_set *rdset, int rsd, int maxfds);
    int echo_request(int sd);
       :                     /* other prototypes */

    int main()
    {
      int rsd;                     /* rendezvous socket desc. */
      fd_set read_set, copy_set;
      int maxfds = FD_SETSIZE;     /* max no. of descriptors allowed */

      rsd = tcp_serv_sock(ECHO_PORT);
      FD_ZERO(&read_set);          /* initialise read set */
      FD_SET(rsd, &read_set);

      while(1) {
        select_accept(&copy_set, &read_set, rsd, maxfds);
        check_set(&copy_set, &read_set, rsd, maxfds);
      }
      return 0;
    }

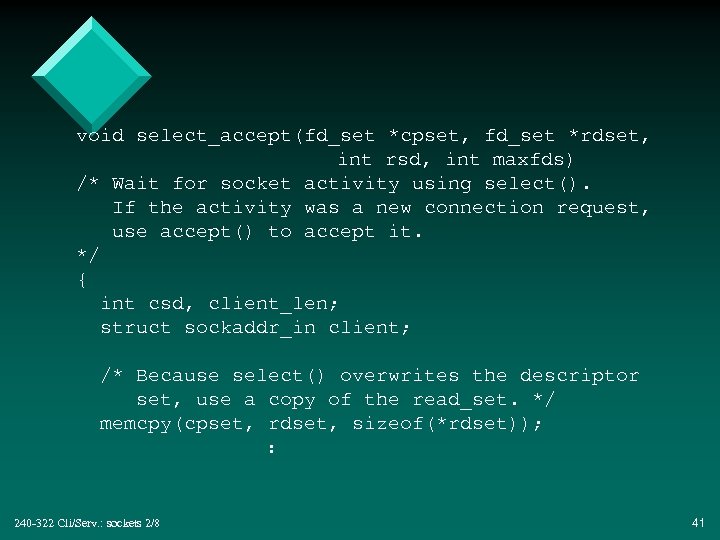

void select_accept(fd_set *cpset, fd_set *rdset, int rsd, int maxfds) /* Wait for socket activity using select(). If the activity was a new connection request, use accept() to accept it. */ { int csd, client_len; struct sockaddr_in client; /* Because select() overwrites the descriptor set, use a copy of the read_set. */ memcpy(cpset, rdset, sizeof(*rdset)); : 240 -322 Cli/Serv. : sockets 2/8 41

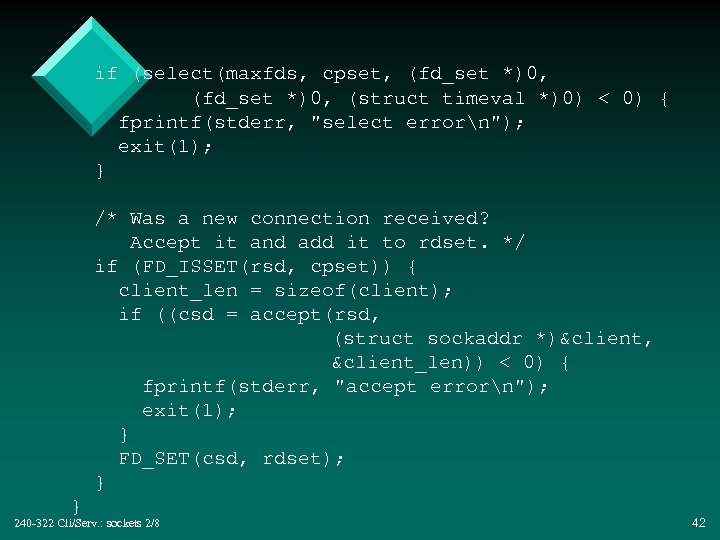

      if (select(maxfds, cpset, (fd_set *)0, (fd_set *)0,
                 (struct timeval *)0) < 0) {
        fprintf(stderr, "select error\n");
        exit(1);
      }

      /* Was a new connection received?
         Accept it and add it to rdset. */
      if (FD_ISSET(rsd, cpset)) {
        client_len = sizeof(client);
        if ((csd = accept(rsd, (struct sockaddr *)&client,
                          &client_len)) < 0) {
          fprintf(stderr, "accept error\n");
          exit(1);
        }
        FD_SET(csd, rdset);
      }
    }

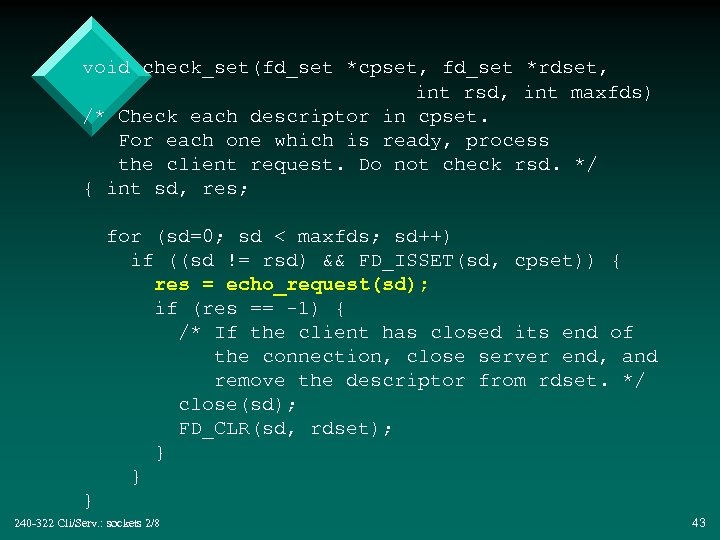

void check_set(fd_set *cpset, fd_set *rdset, int rsd, int maxfds) /* Check each descriptor in cpset. For each one which is ready, process the client request. Do not check rsd. */ { int sd, res; for (sd=0; sd < maxfds; sd++) if ((sd != rsd) && FD_ISSET(sd, cpset)) { res = echo_request(sd); if (res == -1) { /* If the client has closed its end of the connection, close server end, and remove the descriptor from rdset. */ close(sd); FD_CLR(sd, rdset); } } } 240 -322 Cli/Serv. : sockets 2/8 43

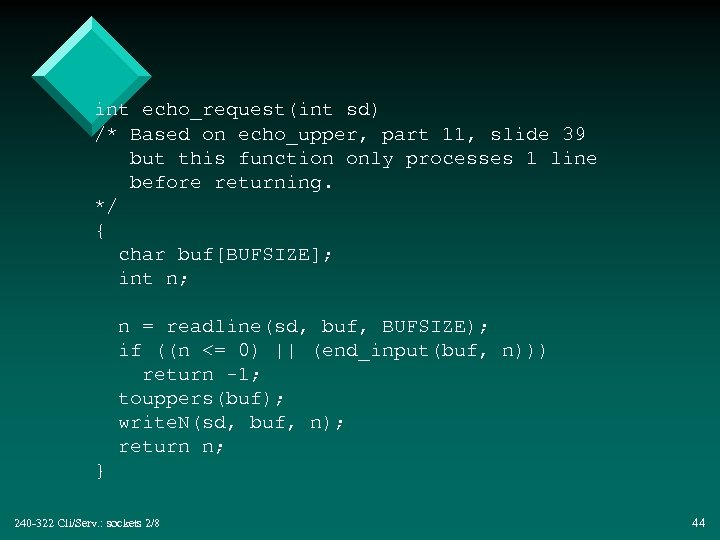

    int echo_request(int sd)
    /* Based on echo_upper, part 11, slide 39 but this
       function only processes 1 line before returning. */
    {
      char buf[BUFSIZE];
      int n;

      n = readline(sd, buf, BUFSIZE);
      if ((n <= 0) || (end_input(buf, n)))
        return -1;
      touppers(buf);
      writeN(sd, buf, n);
      return n;
    }

Usage

    $ ./echo_sserv &                        /* on takasila */
    $ telnet takasila 2666                  /* on fivedots */
       :

At the same time:

    $ telnet takasila.coe.psu.ac.th 2666    /* on calvin */
       :

Notes v copy_set rather than read_set is used in select() since it is altered by the call. v check_set() does not use the select() result. It looks at every descriptor in the descriptor set (apart from rds). 240 -322 Cli/Serv. : sockets 2/8 46

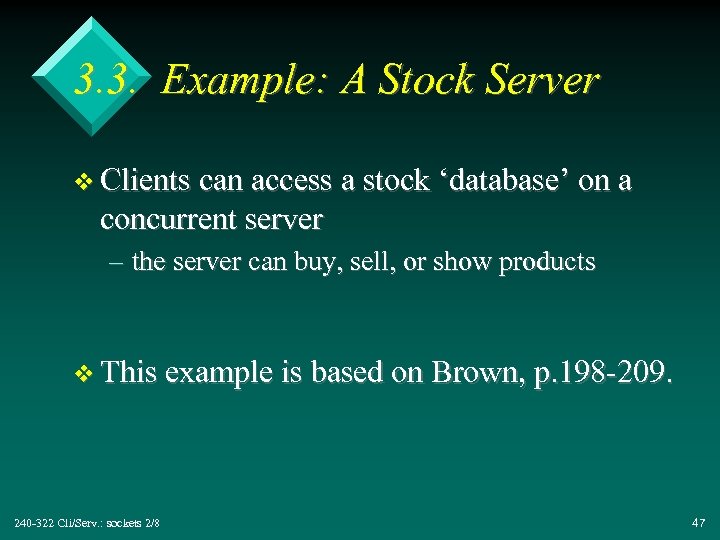

3. 3. Example: A Stock Server v Clients can access a stock ‘database’ on a concurrent server – the server can buy, sell, or show products v This example is based on Brown, p. 198 -209. 240 -322 Cli/Serv. : sockets 2/8 47

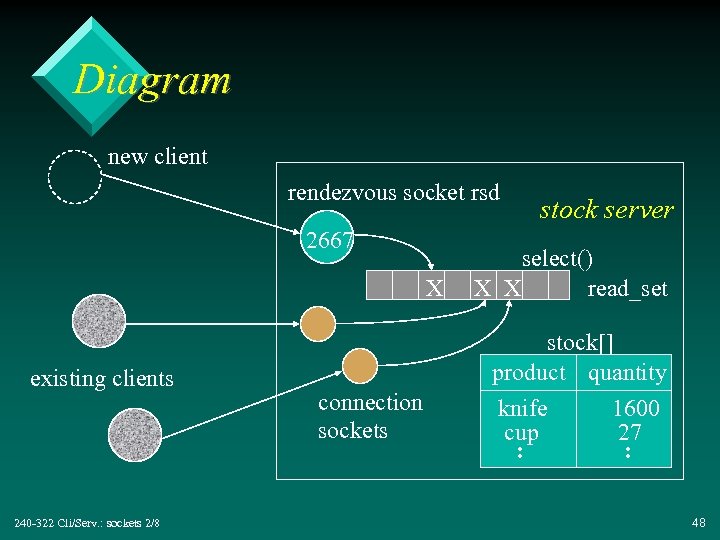

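Diagram
- A new client connects to the rendezvous socket (rsd) at port 2667.
- Existing clients are attached to the stock server through connection sockets.
- read_set marks the rendezvous socket and the connection sockets; the stock server passes it to select().
- The server holds the stock[] array of (product, quantity) entries, e.g. (knife, 1600), (cup, 27), ...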

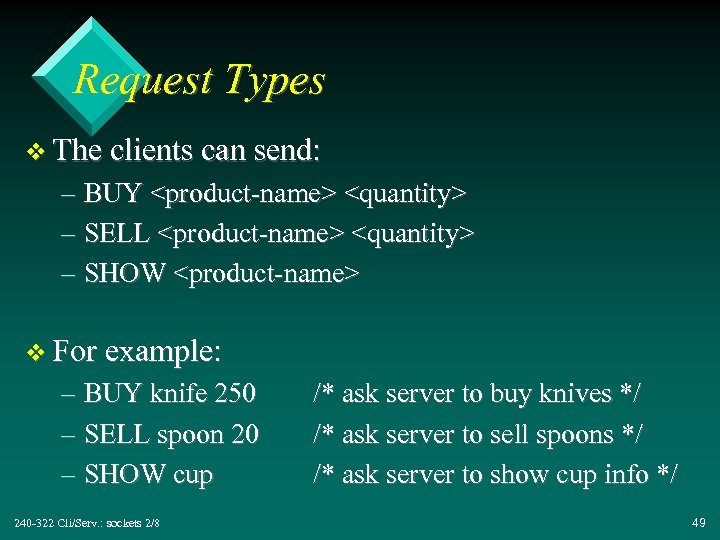

Request Types
- The clients can send:
  - BUY <product-name> <quantity>
  - SELL <product-name> <quantity>
  - SHOW <product-name>
- For example:
  - BUY knife 250 /* ask server to buy knives */
  - SELL spoon 20 /* ask server to sell spoons */
  - SHOW cup /* ask server to show cup info */
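The three request formats can be parsed and validated with sscanf() by checking how many fields were converted. This is a hedged sketch rather than the code in stock_sserv.c: the enum, the function `parse_request()` and the rule that a quantity must be positive are assumptions made for this example.

```c
#include <stdio.h>
#include <string.h>

#define WORDSIZE 30

enum req_kind { REQ_BAD, REQ_BUY, REQ_SELL, REQ_SHOW };

/* Parse one request line. On success the product name is copied into
   prodnm (at least WORDSIZE bytes) and, for BUY and SELL, *quantity is
   set. Returns REQ_BAD for anything malformed. */
enum req_kind parse_request(const char *line, char prodnm[], int *quantity)
{
    char verb[WORDSIZE];
    int fields;

    *quantity = 0;
    /* %29s limits each word to WORDSIZE-1 characters plus the '\0' */
    fields = sscanf(line, "%29s %29s %d", verb, prodnm, quantity);
    if (fields < 2)
        return REQ_BAD;                 /* no product name */
    if (strcmp(verb, "SHOW") == 0)
        return REQ_SHOW;                /* no quantity needed */
    if (fields < 3 || *quantity <= 0)
        return REQ_BAD;                 /* BUY/SELL need a positive quantity */
    if (strcmp(verb, "BUY") == 0)
        return REQ_BUY;
    if (strcmp(verb, "SELL") == 0)
        return REQ_SELL;
    return REQ_BAD;
}
```

Checking the sscanf() return value means a line such as "SELL spoon" is rejected instead of being processed with an uninitialised quantity, and the width limits stop an over-long word from overflowing the buffers.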

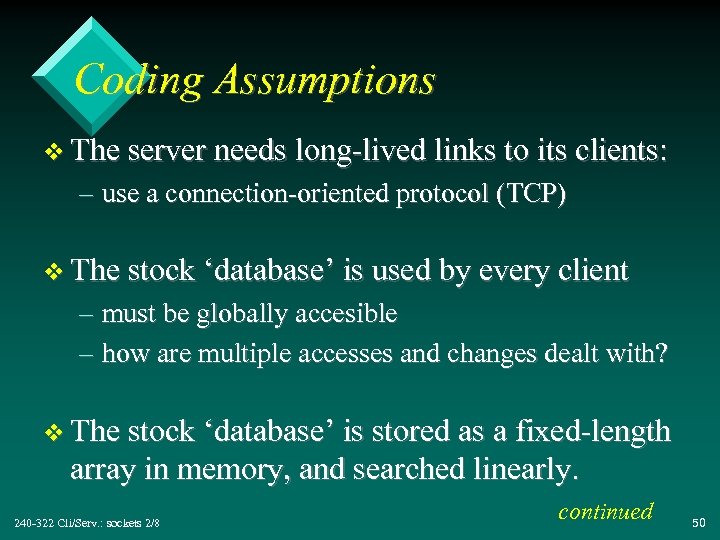

Coding Assumptions
- The server needs long-lived links to its clients:
  - use a connection-oriented protocol (TCP)
- The stock 'database' is used by every client
  - must be globally accessible
  - how are multiple accesses and changes dealt with?
- The stock 'database' is stored as a fixed-length array in memory, and searched linearly.

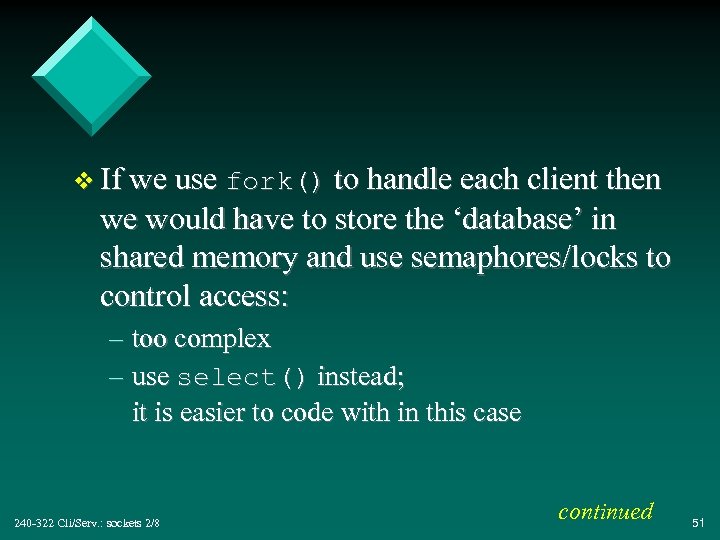

v If we use fork() to handle each client then we would have to store the ‘database’ in shared memory and use semaphores/locks to control access: – too complex – use select() instead; it is easier to code with in this case 240 -322 Cli/Serv. : sockets 2/8 continued 51

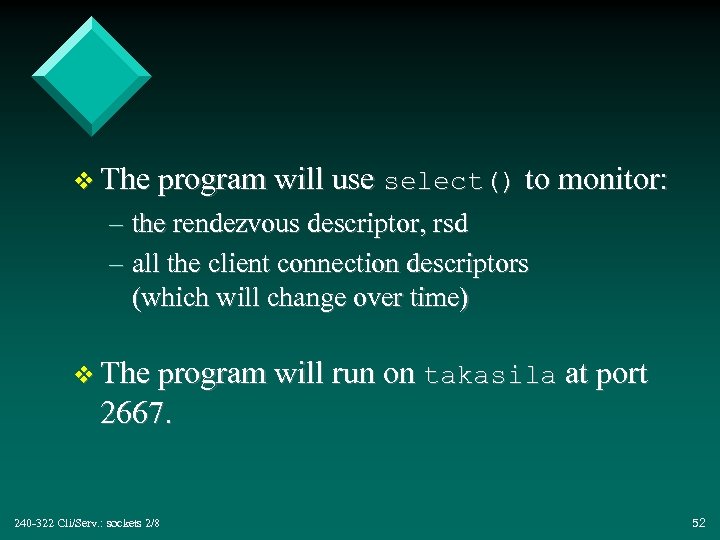

v The program will use select() to monitor: – the rendezvous descriptor, rsd – all the client connection descriptors (which will change over time) v The program will run on takasila at port 2667. 240 -322 Cli/Serv. : sockets 2/8 52

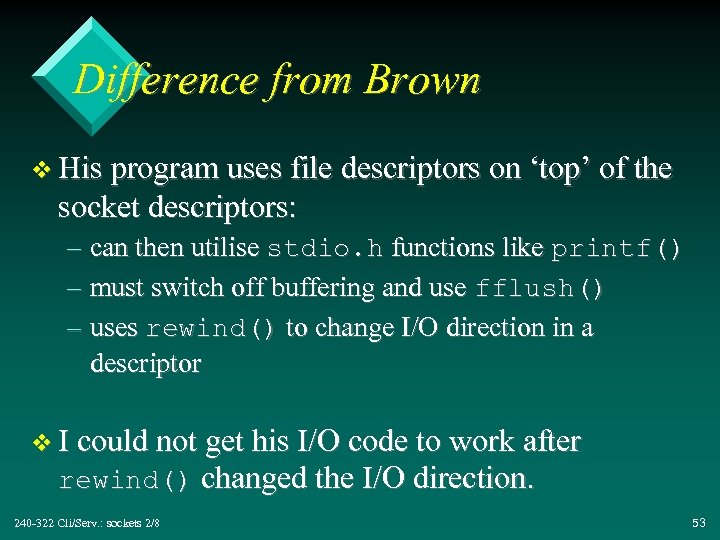

Difference from Brown v His program uses file descriptors on ‘top’ of the socket descriptors: – can then utilise stdio. h functions like printf() – must switch off buffering and use fflush() – uses rewind() to change I/O direction in a descriptor v I could not get his I/O code to work after rewind() changed the I/O direction. 240 -322 Cli/Serv. : sockets 2/8 53

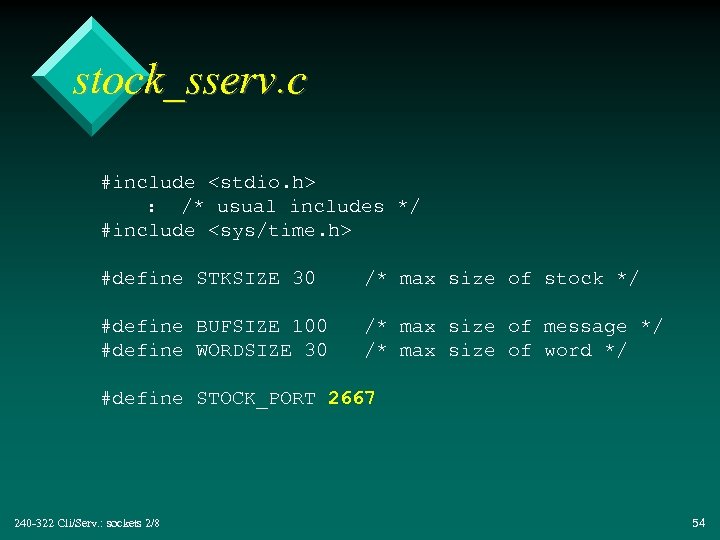

stock_sserv.c

    #include <stdio.h>
       :                     /* usual includes */
    #include <sys/time.h>

    #define STKSIZE 30       /* max size of stock */
    #define BUFSIZE 100      /* max size of message */
    #define WORDSIZE 30      /* max size of word */

    #define STOCK_PORT 2667


struct stock_item { char product[WORDSIZE]; /* name of product */ int quantity; }; typedef struct stock_item Stock; /* Global stock array, and number of stock items */ Stock stock[STKSIZE]; /* stock "database" */ int stock_num = 0; : 240 -322 Cli/Serv. : sockets 2/8 55

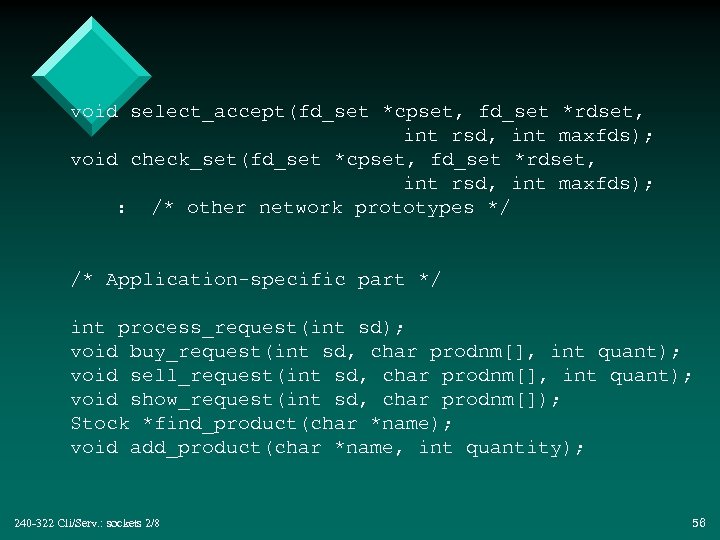

void select_accept(fd_set *cpset, fd_set *rdset, int rsd, int maxfds); void check_set(fd_set *cpset, fd_set *rdset, int rsd, int maxfds); : /* other network prototypes */ /* Application-specific part */ int process_request(int sd); void buy_request(int sd, char prodnm[], int quant); void sell_request(int sd, char prodnm[], int quant); void show_request(int sd, char prodnm[]); Stock *find_product(char *name); void add_product(char *name, int quantity); 240 -322 Cli/Serv. : sockets 2/8 56

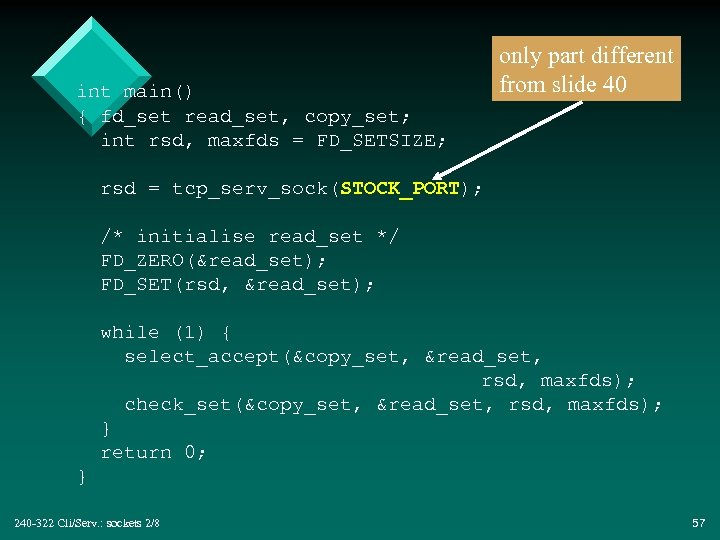

    int main()
    {
      fd_set read_set, copy_set;
      int rsd, maxfds = FD_SETSIZE;

      rsd = tcp_serv_sock(STOCK_PORT);    /* only part different from slide 40 */

      /* initialise read_set */
      FD_ZERO(&read_set);
      FD_SET(rsd, &read_set);

      while (1) {
        select_accept(&copy_set, &read_set, rsd, maxfds);
        check_set(&copy_set, &read_set, rsd, maxfds);
      }
      return 0;
    }

void select_accept(fd_set *cpset, fd_set *rdset, int rsd, int maxfds) /* Wait for socket activity using select(). If the activity is a new connection request, use accept() to accept it. */ { /* same as slides 40 -41 */ } 240 -322 Cli/Serv. : sockets 2/8 58

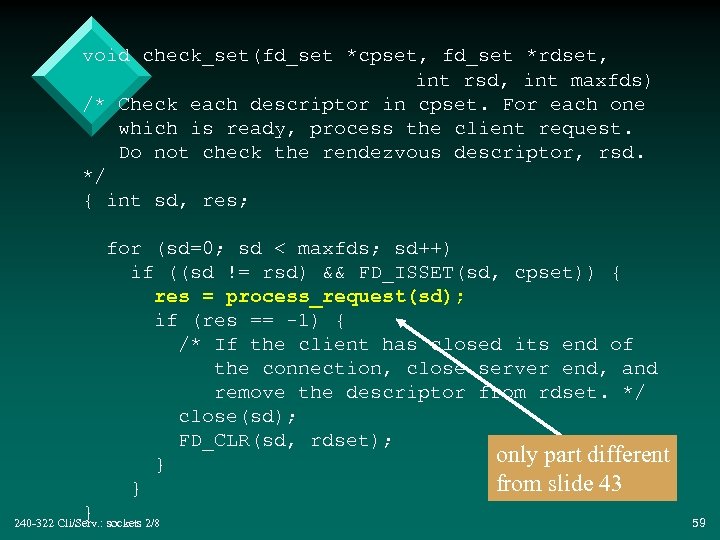

    void check_set(fd_set *cpset, fd_set *rdset, int rsd, int maxfds)
    /* Check each descriptor in cpset. For each one which
       is ready, process the client request. Do not check
       the rendezvous descriptor, rsd. */
    {
      int sd, res;

      for (sd=0; sd < maxfds; sd++)
        if ((sd != rsd) && FD_ISSET(sd, cpset)) {
          res = process_request(sd);    /* only part different from slide 43 */
          if (res == -1) {
            /* If the client has closed its end of the
               connection, close server end, and remove
               the descriptor from rdset. */
            close(sd);
            FD_CLR(sd, rdset);
          }
        }
    }

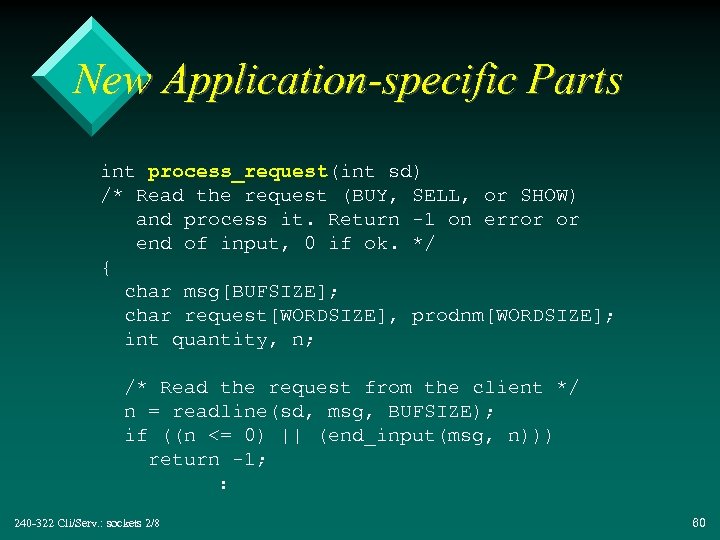

New Application-specific Parts int process_request(int sd) /* Read the request (BUY, SELL, or SHOW) and process it. Return -1 on error or end of input, 0 if ok. */ { char msg[BUFSIZE]; char request[WORDSIZE], prodnm[WORDSIZE]; int quantity, n; /* Read the request from the client */ n = readline(sd, msg, BUFSIZE); if ((n <= 0) || (end_input(msg, n))) return -1; : 240 -322 Cli/Serv. : sockets 2/8 60

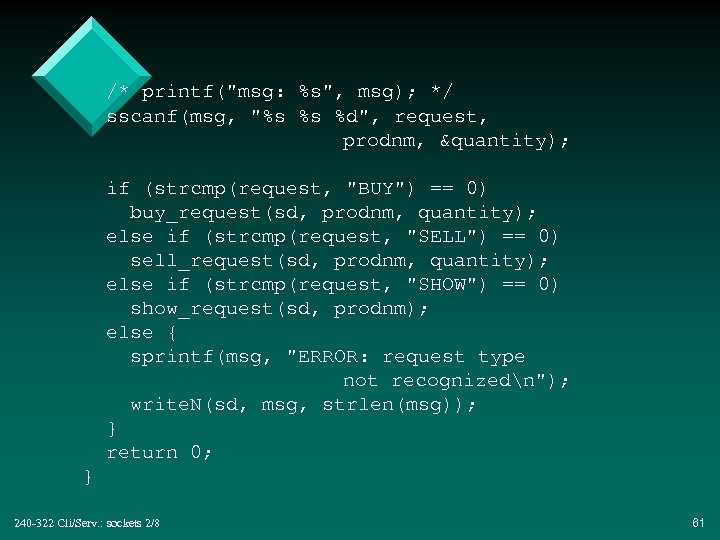

      /* printf("msg: %s", msg); */
      sscanf(msg, "%s %s %d", request, prodnm, &quantity);

      if (strcmp(request, "BUY") == 0)
        buy_request(sd, prodnm, quantity);
      else if (strcmp(request, "SELL") == 0)
        sell_request(sd, prodnm, quantity);
      else if (strcmp(request, "SHOW") == 0)
        show_request(sd, prodnm);
      else {
        sprintf(msg, "ERROR: request type not recognized\n");
        writeN(sd, msg, strlen(msg));
      }
      return 0;
    }


    void buy_request(int sd, char prodnm[], int quant)
    /* Increase stock level for prodnm by the quant amount */
    {
      char msg[BUFSIZE];
      Stock *p;

      if ((p = find_product(prodnm)) == NULL)
        add_product(prodnm, quant);
      else
        p->quantity += quant;

      /* Confirm transaction with the client */
      sprintf(msg, "BOUGHT %s %d\n", prodnm, quant);
      writeN(sd, msg, strlen(msg));
    }


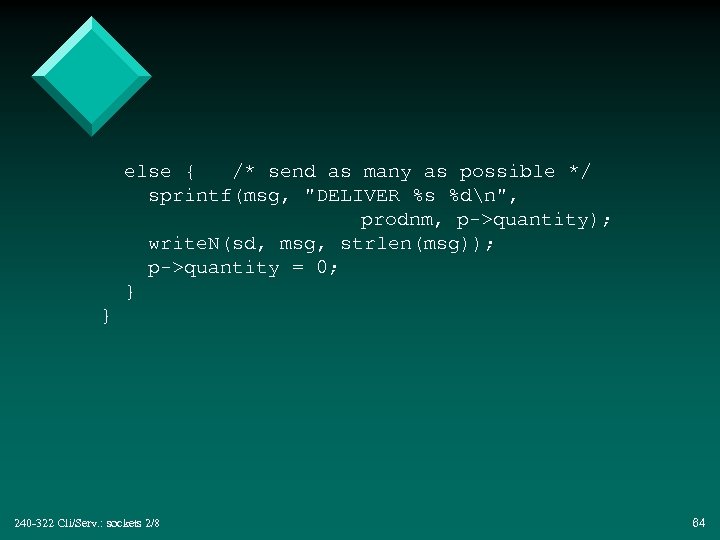

    void sell_request(int sd, char prodnm[], int quant)
    /* Sell quant number of prodnm */
    {
      char msg[BUFSIZE];
      Stock *p;

      if ((p = find_product(prodnm)) == NULL) {
        sprintf(msg, "ERROR: product %s is unknown\n", prodnm);
        writeN(sd, msg, strlen(msg));
      }
      else if (p->quantity >= quant) {
        sprintf(msg, "DELIVER %s %d\n", prodnm, quant);
        writeN(sd, msg, strlen(msg));
        p->quantity -= quant;
      }
      else {   /* send as many as possible */
        sprintf(msg, "DELIVER %s %d\n", prodnm, p->quantity);
        writeN(sd, msg, strlen(msg));
        p->quantity = 0;
      }
    }


    void show_request(int sd, char prodnm[])
    /* Show details on prodnm */
    {
      char msg[BUFSIZE];
      Stock *p;

      if ((p = find_product(prodnm)) == NULL) {
        sprintf(msg, "ERROR: product %s is unknown\n", prodnm);
        writeN(sd, msg, strlen(msg));
      }
      else {
        sprintf(msg, "STOCK %s %d\n", prodnm, p->quantity);
        writeN(sd, msg, strlen(msg));
      }
    }

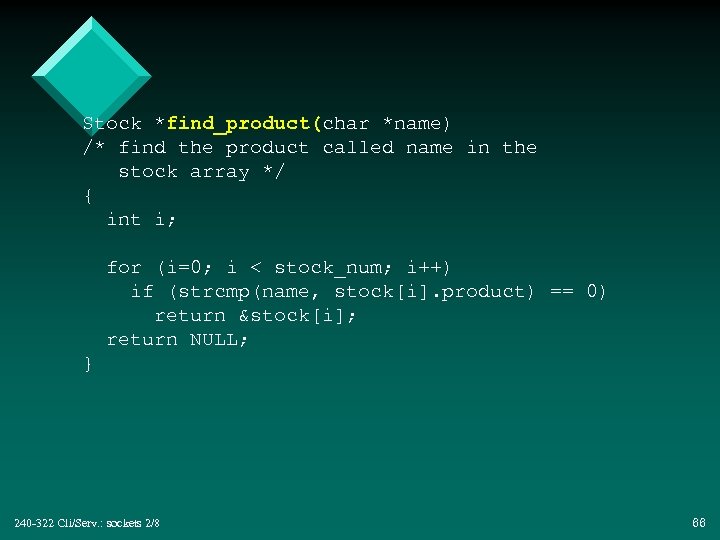

    Stock *find_product(char *name)
    /* find the product called name in the stock array */
    {
      int i;

      for (i=0; i < stock_num; i++)
        if (strcmp(name, stock[i].product) == 0)
          return &stock[i];
      return NULL;
    }

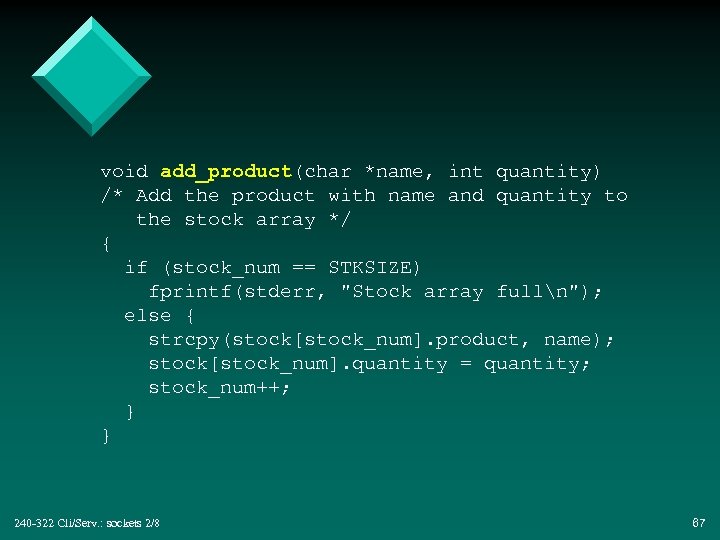

    void add_product(char *name, int quantity)
    /* Add the product with name and quantity to the
       stock array */
    {
      if (stock_num == STKSIZE)
        fprintf(stderr, "Stock array full\n");
      else {
        strcpy(stock[stock_num].product, name);
        stock[stock_num].quantity = quantity;
        stock_num++;
      }
    }

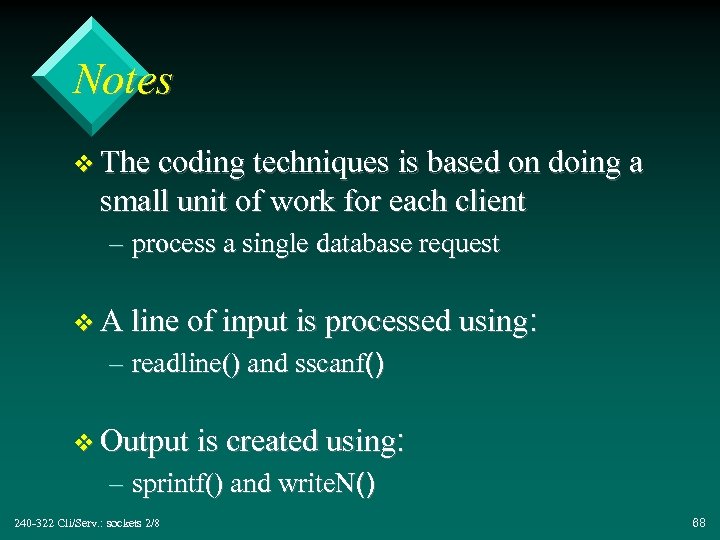

Notes
- The coding technique is based on doing a small unit of work for each client
  - process a single database request
- A line of input is processed using:
  - readline() and sscanf()
- Output is created using:
  - sprintf() and writeN()

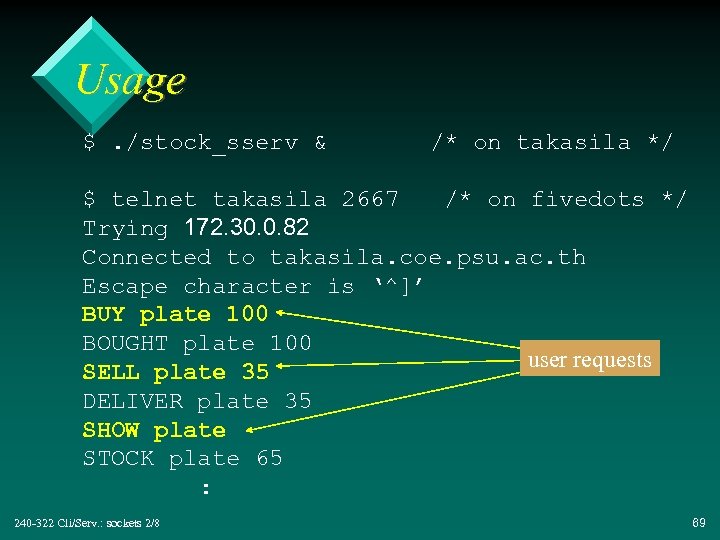

Usage

    $ ./stock_sserv &            /* on takasila */

    $ telnet takasila 2667       /* on fivedots */
    Trying 172.30.0.82
    Connected to takasila.coe.psu.ac.th
    Escape character is '^]'
    BUY plate 100                /* user request */
    BOUGHT plate 100
    SELL plate 35                /* user request */
    DELIVER plate 35
    SHOW plate                   /* user request */
    STOCK plate 65
       :

4. Data Distribution v Illustrate how to use data distribution with clients and servers (see part 2, section 4): – several servers working concurrently on different parts of a client’s problem – try to reduce the overall time to find a solution 240 -322 Cli/Serv. : sockets 2/8 70

Problem Area
- Count the number of primes between 1 and 24,000,000 (24 million)
  - very time consuming
  - simple problem to understand
  - easy to divide the problem into independent sub-parts
  - servers do not need to communicate to calculate their part of the answer

Overview 4. 1. primes. c – count the number of primes sequentially 4. 2. prime_serv. c – a “counting primes” server 4. 3. prime_mcli. c – a client that sends counting sub-problems to multiple prime_servers 240 -322 Cli/Serv. : sockets 2/8 72

4.1. primes.c
- A sequential primes counter:
  - for developing/debugging the calculation code
  - for comparing the concurrent calculation time against the sequential calculation time
    - the concurrent version should be quicker!!
  - for checking the results of the concurrent version

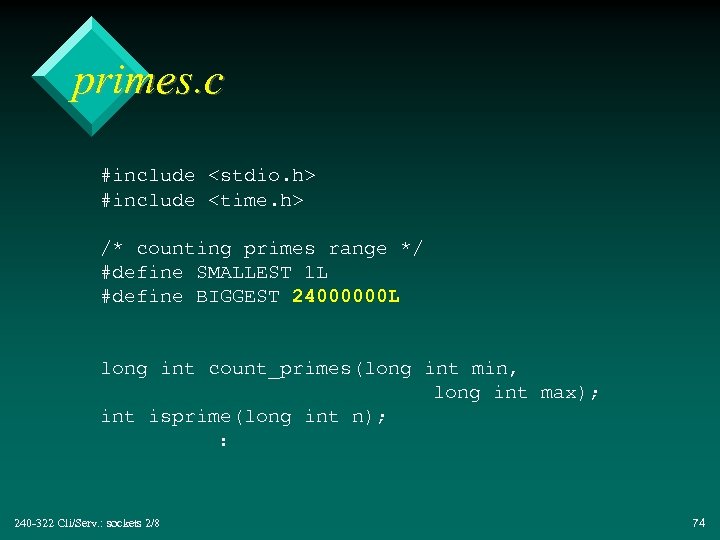

primes.c

    #include <stdio.h>
    #include <time.h>

    /* counting primes range */
    #define SMALLEST 1L
    #define BIGGEST 24000000L

    long int count_primes(long int min, long int max);
    int isprime(long int n);
       :

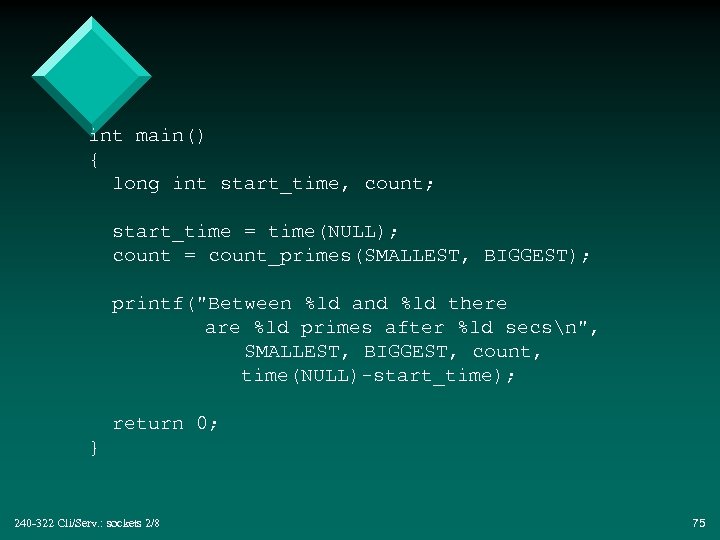

    int main()
    {
      long int start_time, count;

      start_time = time(NULL);
      count = count_primes(SMALLEST, BIGGEST);
      printf("Between %ld and %ld there are %ld primes after %ld secs\n",
             SMALLEST, BIGGEST, count, time(NULL)-start_time);
      return 0;
    }

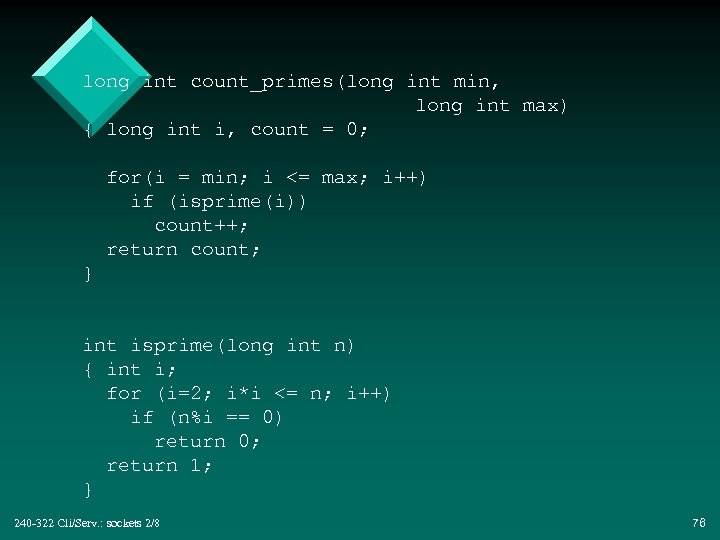

long int count_primes(long int min, long int max) { long int i, count = 0; for(i = min; i <= max; i++) if (isprime(i)) count++; return count; } int isprime(long int n) { int i; for (i=2; i*i <= n; i++) if (n%i == 0) return 0; return 1; } 240 -322 Cli/Serv. : sockets 2/8 76

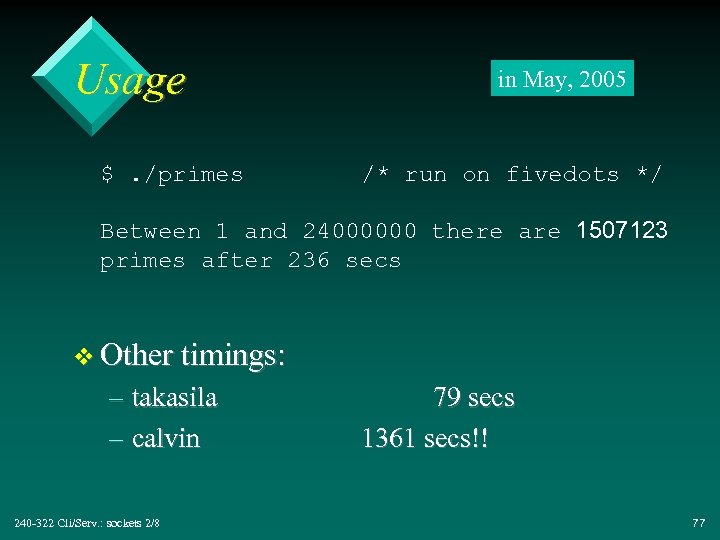

Usage (in May, 2005)

    $ ./primes      /* run on fivedots */
    Between 1 and 24000000 there are 1507123 primes after 236 secs

- Other timings:
  - takasila: 79 secs
  - calvin: 1361 secs!!

4. 2. prime_serv. c v A server for counting primes in a user- specified range. v Two major design issues: – how to divide the work between the client and the server? – should the server be connection-oriented (TCP) or connectionless (UDP)? 240 -322 Cli/Serv. : sockets 2/8 78

4. 2. 1. Client versus Server work? v The main aim is that the server’s computation time should be much bigger than the communication overheads to reach it – this makes the networking costs less important 240 -322 Cli/Serv. : sockets 2/8 continued 79

- Solution: put a "large enough" sub-problem on the server.
- But it is very hard to know what "large enough" is. It depends on:
  - the difficulty of the calculation
  - the machine/network load
  - the machine type

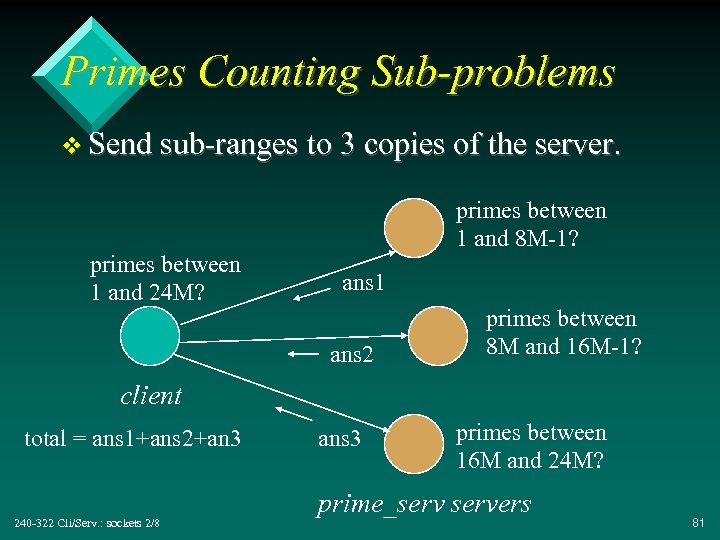

Primes Counting Sub-problems
- Send sub-ranges to 3 copies of the server.
- The client's problem: primes between 1 and 24M?
  - prime_server 1: primes between 1 and 8M-1? (returns ans1)
  - prime_server 2: primes between 8M and 16M-1? (returns ans2)
  - prime_server 3: primes between 16M and 24M? (returns ans3)
- The client calculates total = ans1 + ans2 + ans3

prime_serv.c Functionality
- Call count_primes() to count the primes in the client-specified range.
- No need for communication with other servers.
- The client adds together the answers from all the servers to get the final total.

4. 2. 2. TCP or UDP? v The client sub-range message does not require a long-lived link – UDP would be adequate v With UDP, the programmer must code up a timeout and resend mechanism to deal with the possibly unreliable network – too hard – use TCP to get reliability for free 240 -322 Cli/Serv. : sockets 2/8 83

Client-server I/O
- The client will send a string holding two long integers, and ending in a newline
  - the min and max values for the range to be counted
  - e.g. "8000000 15999999\n"
- The server will send back a string holding a single long integer and a newline
  - the count of the primes in the range
  - e.g. "491353\n"
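The two message formats are simple enough to build and parse with snprintf() and sscanf(). The helpers below are a sketch with invented names (`format_range()`, `parse_range()`, `parse_count()`); the validity checks are assumptions for this example and are not taken from prime_serv.c.

```c
#include <stdio.h>

/* Build the client's request line, e.g. "8000000 15999999\n".
   Returns the message length, or -1 if buf is too small. */
int format_range(char *buf, size_t size, long min, long max)
{
    int n = snprintf(buf, size, "%ld %ld\n", min, max);

    return (n < 0 || (size_t)n >= size) ? -1 : n;
}

/* Parse a request line on the server side.
   Returns 0 if ok, -1 if the line is malformed or min > max. */
int parse_range(const char *line, long *min, long *max)
{
    if (sscanf(line, "%ld %ld", min, max) != 2 || *min > *max)
        return -1;
    return 0;
}

/* Parse the server's reply, e.g. "491353\n".
   Returns 0 if ok, -1 if there is no non-negative count. */
int parse_count(const char *line, long *count)
{
    if (sscanf(line, "%ld", count) != 1 || *count < 0)
        return -1;
    return 0;
}
```

Keeping the messages as newline-terminated text means they can be typed by hand through telnet for testing, at the cost of a small conversion step at each end.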

4. 2. 3. prime_serv. c v Very similar to echo_serv. c (part 7, slide 35) v The main difference is that echo_upper() is replaced by cnt_primes() which calls count_primes() (from primes. c). v The server will run at port 2669. 240 -322 Cli/Serv. : sockets 2/8 85

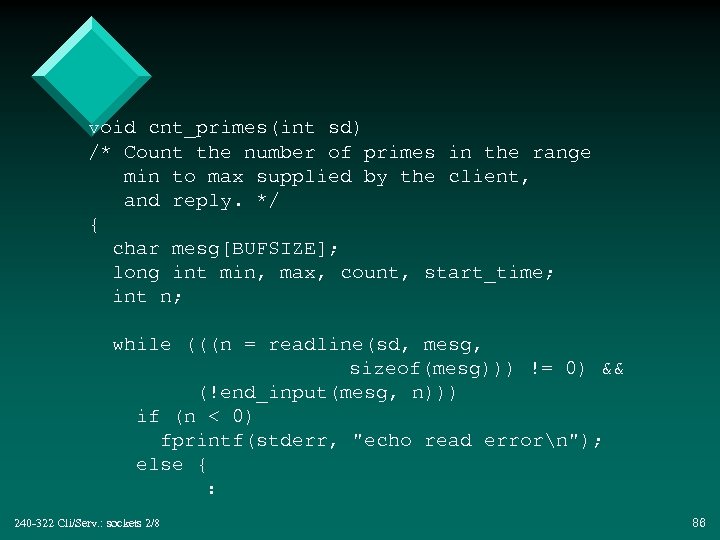

void cnt_primes(int sd) /* Count the number of primes in the range min to max supplied by the client, and reply. */ { char mesg[BUFSIZE]; long int min, max, count, start_time; int n; while (((n = readline(sd, mesg, sizeof(mesg))) != 0) && (!end_input(mesg, n))) if (n < 0) fprintf(stderr, "echo read errorn"); else { : 240 -322 Cli/Serv. : sockets 2/8 86

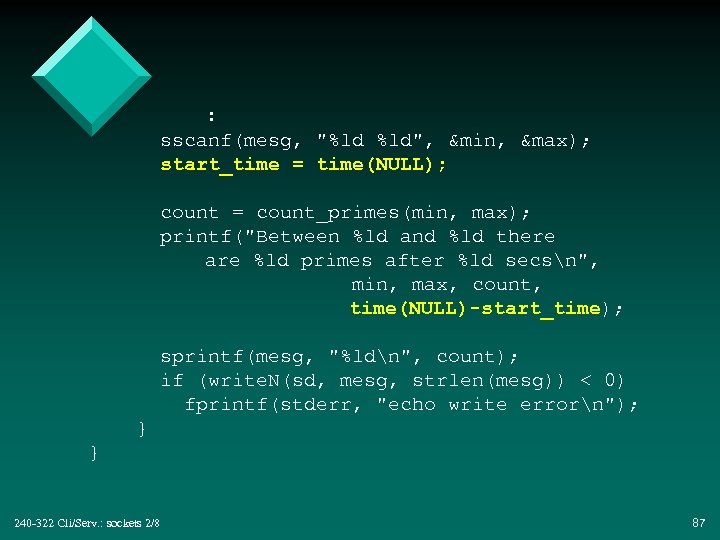

: sscanf(mesg, "%ld %ld", &min, &max); start_time = time(NULL); count = count_primes(min, max); printf("Between %ld and %ld there are %ld primes after %ld secsn", min, max, count, time(NULL)-start_time); sprintf(mesg, "%ldn", count); if (write. N(sd, mesg, strlen(mesg)) < 0) fprintf(stderr, "echo write errorn"); } } 240 -322 Cli/Serv. : sockets 2/8 87

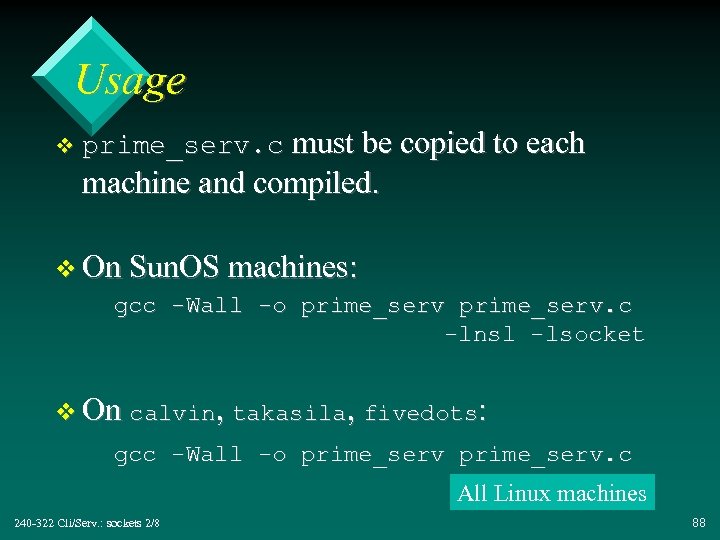

Usage
- prime_serv.c must be copied to each machine and compiled.
- On SunOS machines:

    gcc -Wall -o prime_serv prime_serv.c -lnsl -lsocket

- On calvin, takasila, fivedots (all Linux machines):

    gcc -Wall -o prime_serv prime_serv.c

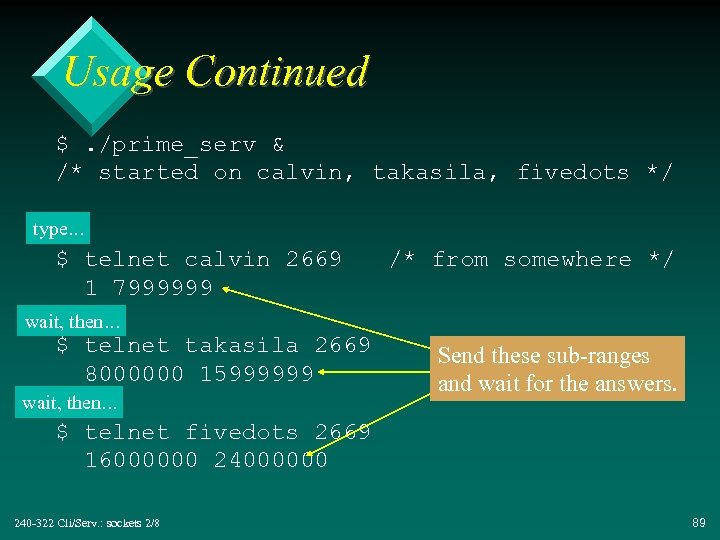

Usage Continued

    $ ./prime_serv &    /* started on calvin, takasila, fivedots */

Send these sub-ranges and wait for the answers:

    $ telnet calvin 2669          /* from somewhere */
    1 7999999
       wait, then...
    $ telnet takasila 2669
    8000000 15999999
       wait, then...
    $ telnet fivedots 2669
    16000000 :

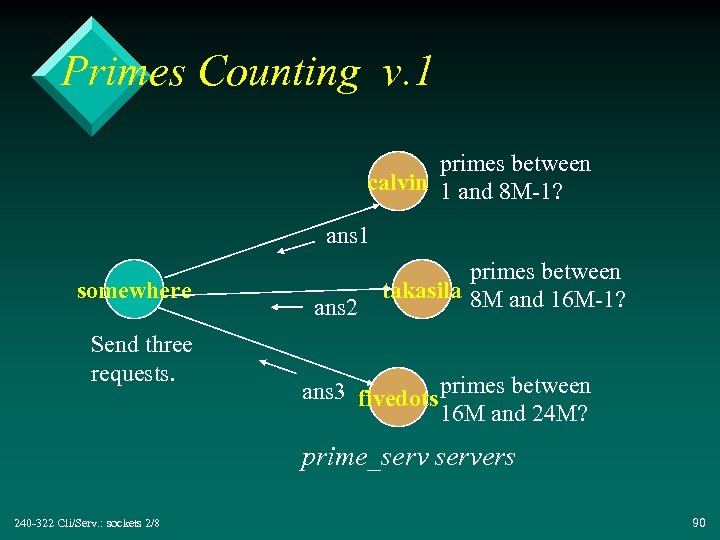

Primes Counting v.1
- Send three requests from 'somewhere' to the prime_servers:
  - calvin: primes between 1 and 8M-1? (returns ans1)
  - takasila: primes between 8M and 16M-1? (returns ans2)
  - fivedots: primes between 16M and 24M? (returns ans3)

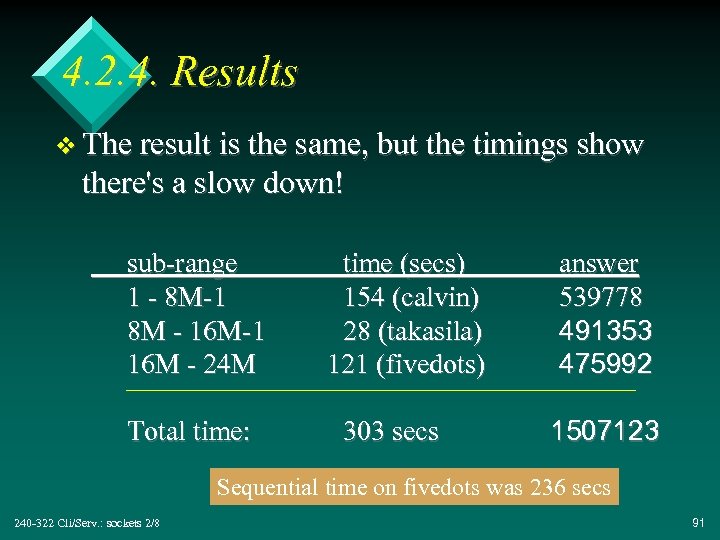

4.2.4. Results
- The result is the same, but the timings show there's a slow down!

| sub-range | time (secs) | answer |
|---|---|---|
| 1 - 8M-1 | 154 (calvin) | 539778 |
| 8M - 16M-1 | 28 (takasila) | 491353 |
| 16M - 24M | 121 (fivedots) | 475992 |
| Total | 303 | 1507123 |

Sequential time on fivedots was 236 secs.

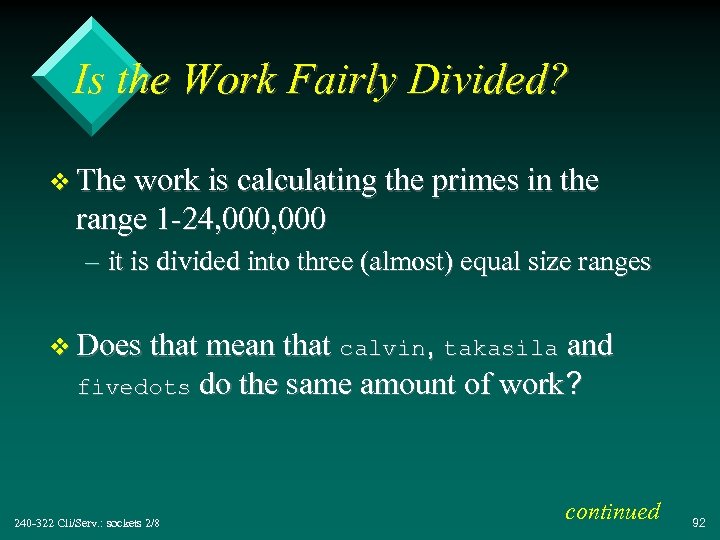

Is the Work Fairly Divided?
- The work is calculating the primes in the range 1 to 24,000,000
  - it is divided into three (almost) equal size ranges
- Does that mean that calvin, takasila and fivedots do the same amount of work?

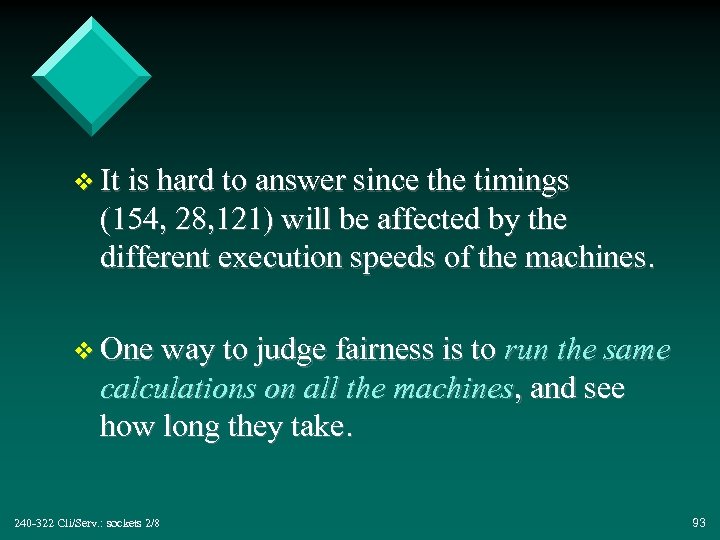

v It is hard to answer since the timings (154, 28, 121) will be affected by the different execution speeds of the machines. v One way to judge fairness is to run the same calculations on all the machines, and see how long they take. 240 -322 Cli/Serv. : sockets 2/8 93

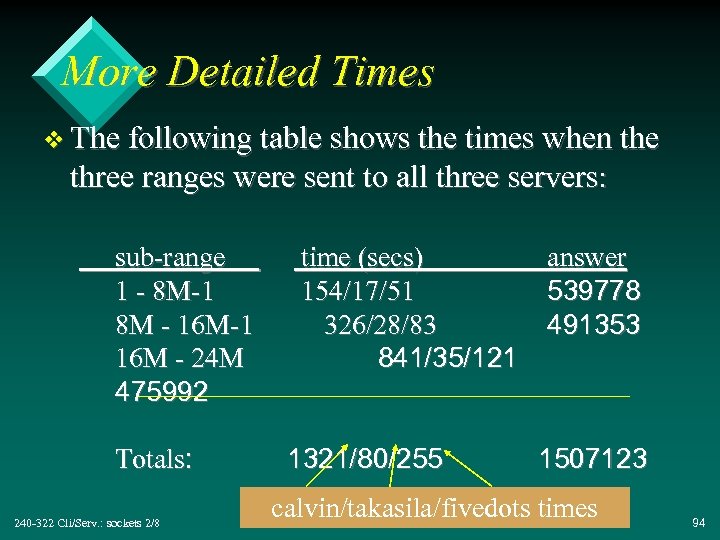

More Detailed Times
- The following table shows the times when the three ranges were sent to all three servers (times are calvin/takasila/fivedots):

| sub-range | time (secs) | answer |
|---|---|---|
| 1 - 8M-1 | 154/17/51 | 539778 |
| 8M - 16M-1 | 326/28/83 | 491353 |
| 16M - 24M | 841/35/121 | 475992 |
| Totals | 1321/80/255 | 1507123 |

Again: Is the Work Fairly Divided?
- Reading down a column shows the speed of one machine for the three ranges
  - e.g. the takasila times are (17, 28, 35)
- The slower times at the higher ranges show that the primes calculation takes longer when the numbers are bigger. Why?

- The server uses count_primes() on the range, calling isprime() for each number in the range
  - isprime(100) will loop at most about 10 times
  - isprime(10000) will loop at most about 100 times
  - isprime(1000000) will loop at most about 1000 times
    - since the loop goes from i=2 to sqrt(number), stopping early if a divisor is found
- This means that a low range of numbers (e.g. 1 to 8M-1) will be faster to check than a high range (e.g. 16M to 24M).

v That means that dividing the work into three equal size ranges is not fair: – fivedots has more work to do than takasila – takasila has more work to do than calvin v Sharing the work more evenly would speed things up – this is called load balancing 240 -322 Cli/Serv. : sockets 2/8 97
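One way to share the work more evenly is to make the higher sub-ranges narrower. The sketch below assumes a simplified cost model in which testing the number n costs about sqrt(n) loop iterations, so the work for 1..b grows like b^(3/2). That model is an assumption of this example (composites usually exit much earlier), and `balanced_split()` is an invented name, not part of the course code.

```c
/* Split 1..max into `parts` contiguous sub-ranges of roughly equal
   cost under the ASSUMED model cost(n) ~ sqrt(n).
   The work up to b is then ~ b^(3/2), so the k-th upper bound is the
   smallest b with  b^3 * parts^2 >= k^2 * max^3.
   upper[k-1] receives the upper bound of sub-range k. */
void balanced_split(long max, int parts, long upper[])
{
    long double total = (long double)max * max * max;
    int k;

    for (k = 1; k <= parts; k++) {
        long double target = total * k * k;
        long lo = 1, hi = max;

        while (lo < hi) {                  /* binary search for b */
            long mid = lo + (hi - lo) / 2;
            long double lhs = (long double)mid * mid * mid * parts * parts;

            if (lhs >= target)
                hi = mid;
            else
                lo = mid + 1;
        }
        upper[k - 1] = lo;
    }
}
```

For max = 24M and three servers, this places the first boundary at roughly 11.5M and the second at roughly 18.3M, instead of 8M and 16M. It balances the ranges only; it does not account for the machines running at different speeds.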

Which is the Fastest Machine? v A measure of the relative speeds of the machines can be seen by looking at the calculation times for the same range across the three machines. v For example, the range (1 - 8 M-1) takes: – on calvin: 154 secs – on takasila: 17 secs – on fivedots: 51 secs 240 -322 Cli/Serv. : sockets 2/8 continued 98

- The ratios:
  - calvin/takasila: 154/17 = about 9x slower
  - fivedots/takasila: 51/17 = 3x slower
- takasila is the fastest machine (maybe)
  - conclusions should not be based on just one series of numbers
  - many tests should be carried out

- At different times of day, execution timings can vary by large amounts, due to:
  - machine load
  - network load
- The type of calculation may also affect the running speed
  - e.g. a maths co-processor speeds up numerical work

4.2.5. Some Conclusions

- The work is not fairly divided between the servers.
- The machines are not equally fast: calvin is much slower than takasila and fivedots.
- It might be simplest just to move the sequential calculation to takasila, and not distribute it.

- In a more typical LAN, all the machines will be of the same or similar type (e.g. Pentiums)
  - we will carry on assuming that it is worth distributing the task among the three machines
- Always do test timings:
  - a distributed version of an application is not necessarily faster
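A test timing can be as simple as taking time() before and after the work, which is how the clients later in these slides report elapsed seconds. The sketch below assumes one-second resolution is good enough; time_call() and count_to() are hypothetical names, and count_to() is only a stand-in for the real calculation.

```c
#include <assert.h>
#include <time.h>

/* Run work(arg) once and return the elapsed wall-clock time in seconds. */
double time_call(void (*work)(void *), void *arg)
{
    time_t start, end;

    assert(work != NULL);
    start = time(NULL);
    work(arg);
    end = time(NULL);
    return difftime(end, start);
}

/* Stand-in workload: count up to the long value that arg points to. */
void count_to(void *arg)
{
    long limit = *(long *)arg;
    volatile long i;     /* volatile so the loop is not optimised away */

    for (i = 0; i < limit; i++)
        ;
}
```

Timing the sequential and the distributed versions the same way, several times and at different times of day, gives figures that can be compared fairly.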

- The only cast-iron reason for using another (server) machine is that it contains a resource not found on the home (client) machine. ("cast-iron" means "very strong")

4.3. prime_mcli.c

- This client sends sub-ranges to prime_servers running on multiple hosts
  - but it sends them without waiting for answers
- The host names are supplied by the user on the command line:

      $ ./prime_mcli calvin takasila fivedots

- The user must start the prime_servers on these hosts (i.e. start them manually).

- The sub-ranges are calculated by dividing the total range (e.g. 24M) by the number of hosts (e.g. 3).
- prime_mcli is only as fast as the slowest server response.
- This example is based on Brown, pp. 233-235.
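The division of the total range can be sketched as a pure function that fills an array of sub-ranges. This is an illustrative variant, not the client's own code: struct range and split_range() are hypothetical names, and each part here is exactly (biggest - smallest + 1)/nparts numbers long, with the last part absorbing any remainder.

```c
#include <assert.h>

struct range { long min, max; };   /* inclusive bounds */

/* Fill out[0..nparts-1] with contiguous sub-ranges that together cover
   [smallest, biggest]. The last part absorbs any remainder. */
void split_range(long smallest, long biggest, int nparts, struct range out[])
{
    long size, min = smallest;
    int i;

    assert(nparts > 0 && smallest <= biggest);
    size = (biggest - smallest + 1) / nparts;   /* numbers per part */
    assert(size > 0);
    for (i = 0; i < nparts; i++) {
        out[i].min = min;
        out[i].max = (i == nparts - 1) ? biggest : min + size - 1;
        min = out[i].max + 1;                   /* next part starts here */
    }
}
```

For the range 1 to 24000000 and three hosts, this gives three parts of 8000000 numbers each. Equal-sized parts are still not equal amounts of work, as the timings above show.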

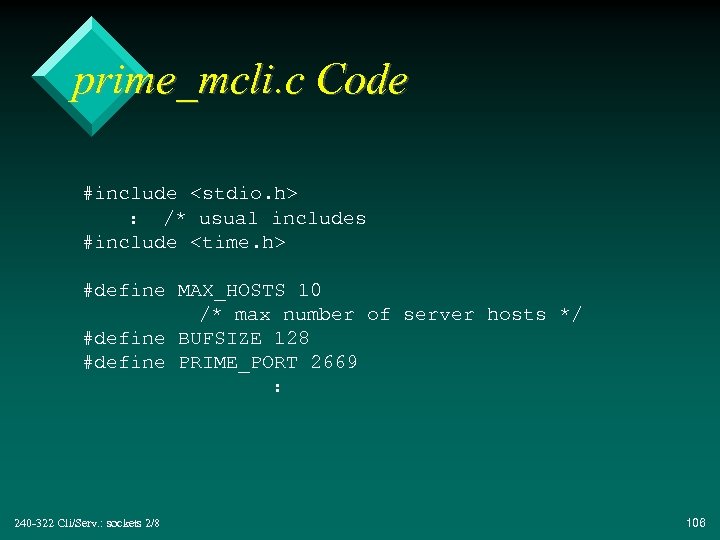

prime_mcli.c Code

    #include <stdio.h>
      :                      /* usual includes */
    #include <time.h>

    #define MAX_HOSTS 10     /* max number of server hosts */
    #define BUFSIZE 128
    #define PRIME_PORT 2669
      :

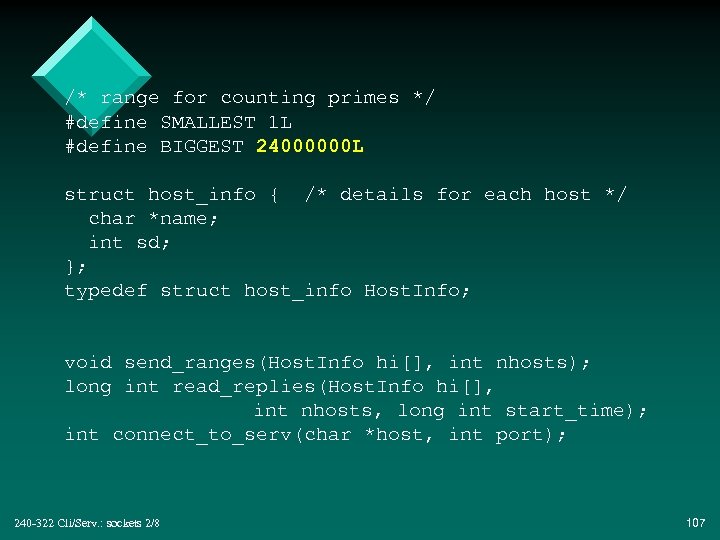

    /* range for counting primes */
    #define SMALLEST 1L
    #define BIGGEST 24000000L

    struct host_info {       /* details for each host */
        char *name;
        int sd;
    };
    typedef struct host_info HostInfo;

    void send_ranges(HostInfo hi[], int nhosts);
    long int read_replies(HostInfo hi[], int nhosts, long int start_time);
    int connect_to_serv(char *host, int port);

    int main(int argc, char *argv[])
    {
        HostInfo hi[MAX_HOSTS];
        int i, nhosts = argc-1;
        long int total, start_time;

        for (i=0; i < nhosts; i++) {     /* initialise hi[] */
            hi[i].name = argv[i+1];
            hi[i].sd = connect_to_serv(hi[i].name, PRIME_PORT);
            printf("Connected to host %s\n", hi[i].name);
        }
          :

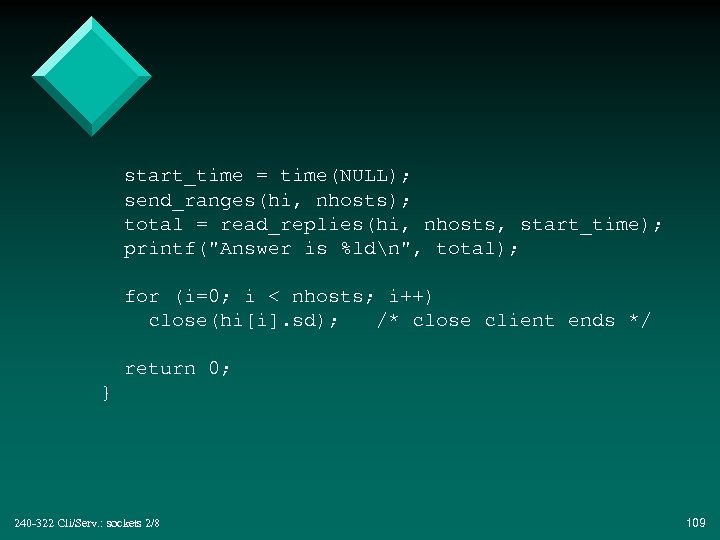

        start_time = time(NULL);
        send_ranges(hi, nhosts);
        total = read_replies(hi, nhosts, start_time);
        printf("Answer is %ld\n", total);

        for (i=0; i < nhosts; i++)
            close(hi[i].sd);             /* close client ends */
        return 0;
    }

    void send_ranges(HostInfo hi[], int nhosts)
    /* Send a subrange to each server, in the range SMALLEST to BIGGEST */
    {
        long int min, max = SMALLEST-1;
        int i;
        char mesg[BUFSIZE];

        for (i=0; i < nhosts; i++) {
            min = max + 1;
            max = min + (BIGGEST - SMALLEST + 1)/nhosts;
            if (i == nhosts-1)
                max = BIGGEST;           /* last host takes the remainder */
            printf("sent (%ld, %ld) to host %s\n", min, max, hi[i].name);
            sprintf(mesg, "%ld %ld\n", min, max);
            write(hi[i].sd, mesg, strlen(mesg));
        }
    }

    long int read_replies(HostInfo hi[], int nhosts, long int start_time)
    /* Read responses back from servers, in same order */
    {
        long int total = 0, count;
        int i;
        char mesg[BUFSIZE];

        for (i=0; i < nhosts; i++) {
            read(hi[i].sd, mesg, BUFSIZE);   /* blocks until host i replies */
            sscanf(mesg, "%ld", &count);
            printf("Reply = %ld from host %10s after %ld secs\n",
                   count, hi[i].name, time(NULL)-start_time);
            total += count;
        }
        return total;
    }

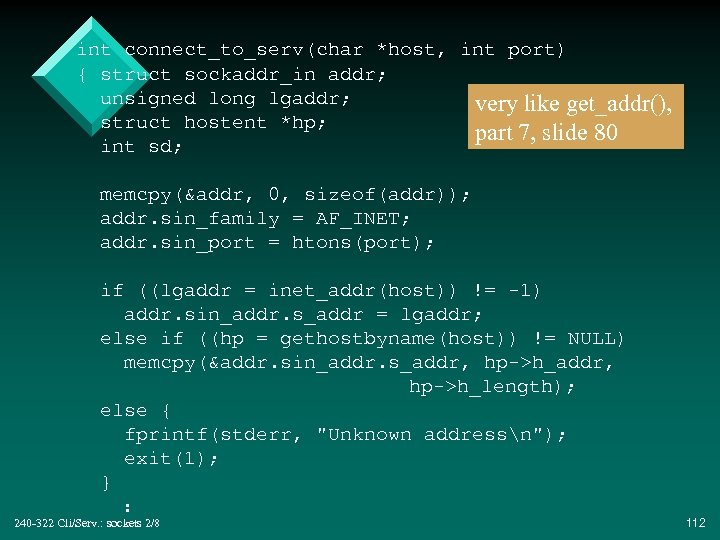

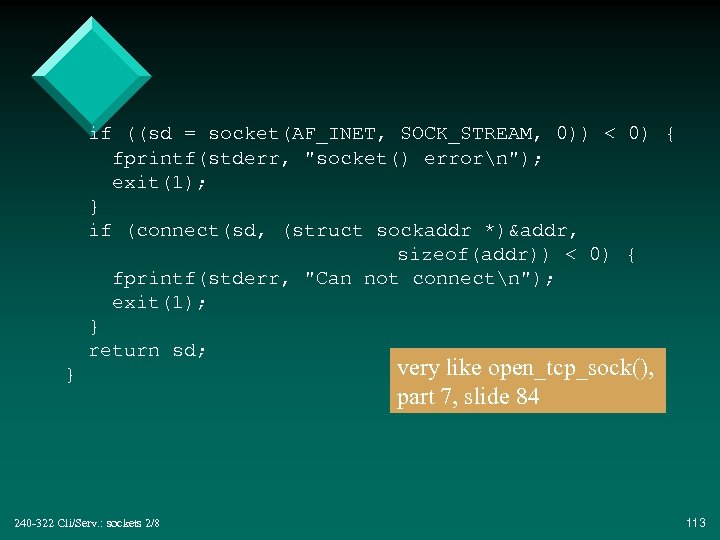

    int connect_to_serv(char *host, int port)
    /* very like get_addr(), part 7, slide 80 */
    {
        struct sockaddr_in addr;
        in_addr_t lgaddr;
        struct hostent *hp;
        int sd;

        memset(&addr, 0, sizeof(addr));      /* clear the address structure */
        addr.sin_family = AF_INET;
        addr.sin_port = htons(port);

        if ((lgaddr = inet_addr(host)) != INADDR_NONE)   /* dotted-decimal address */
            addr.sin_addr.s_addr = lgaddr;
        else if ((hp = gethostbyname(host)) != NULL)      /* host name lookup */
            memcpy(&addr.sin_addr.s_addr, hp->h_addr, hp->h_length);
        else {
            fprintf(stderr, "Unknown address\n");
            exit(1);
        }
          :

        /* very like open_tcp_sock(), part 7, slide 84 */
        if ((sd = socket(AF_INET, SOCK_STREAM, 0)) < 0) {
            fprintf(stderr, "socket() error\n");
            exit(1);
        }
        if (connect(sd, (struct sockaddr *)&addr, sizeof(addr)) < 0) {
            fprintf(stderr, "Can not connect\n");
            exit(1);
        }
        return sd;
    }
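connect_to_serv() looks up the host with inet_addr() and gethostbyname(), which handle IPv4 only. On current systems the same job is often done with getaddrinfo(). The following is a sketch of such a variant, not part of the original client: the name connect_to_serv_gai() is hypothetical, and it returns -1 on failure instead of calling exit().

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <netinet/in.h>
#include <netdb.h>

/* Hypothetical variant of connect_to_serv(): returns a connected TCP
   socket descriptor, or -1 on failure. */
int connect_to_serv_gai(const char *host, int port)
{
    struct addrinfo hints, *res, *p;
    char portstr[16];
    int sd = -1;

    assert(host != NULL);
    memset(&hints, 0, sizeof(hints));
    hints.ai_family = AF_UNSPEC;       /* IPv4 or IPv6 */
    hints.ai_socktype = SOCK_STREAM;   /* TCP */
    snprintf(portstr, sizeof(portstr), "%d", port);

    if (getaddrinfo(host, portstr, &hints, &res) != 0)
        return -1;                     /* unknown host */

    for (p = res; p != NULL; p = p->ai_next) {   /* try each address */
        sd = socket(p->ai_family, p->ai_socktype, p->ai_protocol);
        if (sd < 0)
            continue;
        if (connect(sd, p->ai_addr, p->ai_addrlen) == 0)
            break;                     /* connected */
        close(sd);
        sd = -1;
    }
    freeaddrinfo(res);
    return sd;
}
```

Returning -1 rather than exiting lets a multi-host client decide for itself whether to carry on with the hosts that did connect.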

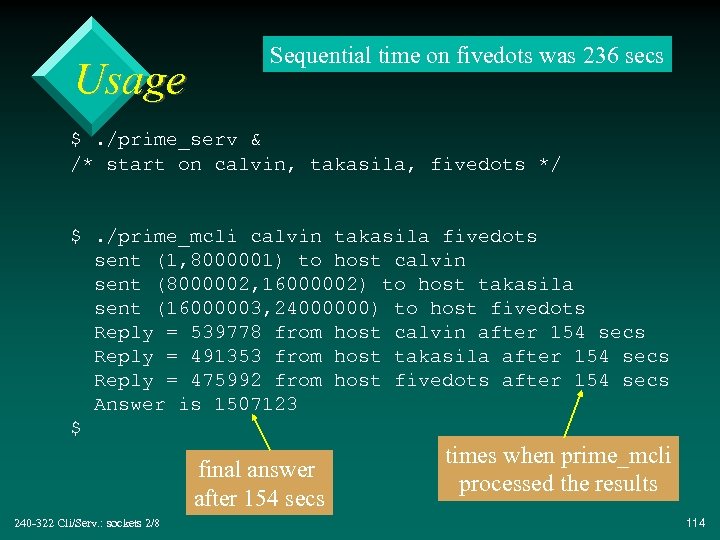

Usage

    $ ./prime_serv &     /* start on calvin, takasila, fivedots */

    $ ./prime_mcli calvin takasila fivedots
    sent (1, 8000001) to host calvin
    sent (8000002, 16000002) to host takasila
    sent (16000003, 24000000) to host fivedots
    Reply = 539778 from host calvin after 154 secs
    Reply = 491353 from host takasila after 154 secs
    Reply = 475992 from host fivedots after 154 secs
    Answer is 1507123
    $

- The "after ... secs" values are the times when prime_mcli processed the results.
- The final answer arrives after 154 secs.
- Sequential time on fivedots was 236 secs.

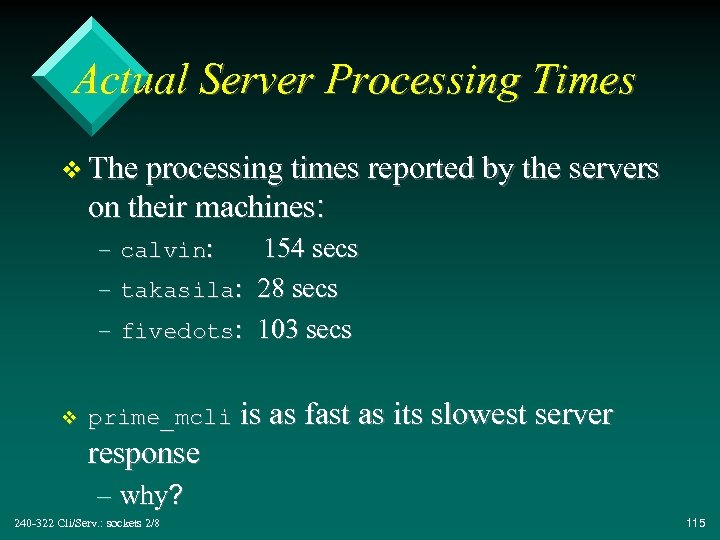

Actual Server Processing Times

- The processing times reported by the servers on their machines:
  - calvin: 154 secs
  - takasila: 28 secs
  - fivedots: 103 secs
- prime_mcli is as fast as its slowest server response
  - why?

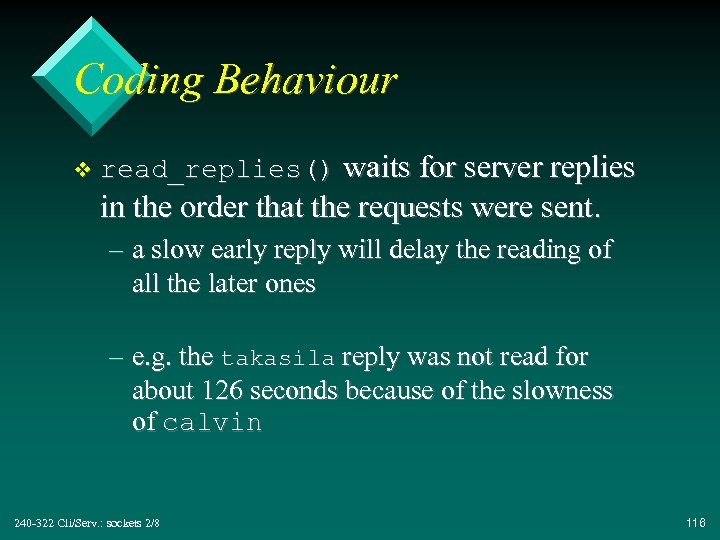

Coding Behaviour

- read_replies() waits for server replies in the order that the requests were sent.
  - a slow early reply will delay the reading of all the later ones
  - e.g. the takasila reply was not read for about 126 seconds (154 - 28) because of the slowness of calvin
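The effect of reading in a fixed order can be worked through with a few lines of arithmetic. The sketch below is not part of prime_mcli; in_order_read_times() is a hypothetical helper that takes the time at which each server finishes and computes when an in-order reader actually gets each reply.

```c
#include <assert.h>

/* Given the time (secs) at which each server finishes, compute the time
   at which a client that reads replies strictly in order 0..n-1 gets
   each one: reply i cannot be read before replies 0..i-1. */
void in_order_read_times(const long finish[], long read_at[], int n)
{
    long latest = 0;
    int i;

    assert(n > 0);
    for (i = 0; i < n; i++) {
        if (finish[i] > latest)
            latest = finish[i];   /* blocked until this server is done */
        read_at[i] = latest;
    }
}
```

With the server times 154, 28 and 103 secs (calvin, takasila, fivedots), every reply is read at 154 secs, which matches the three "after 154 secs" lines printed by prime_mcli.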

5. A Processor Farm

- The “processor farm” client distributes work to the servers when they become free:
  - uses the servers more fully
  - load is more balanced
  - the final answer is obtained quicker
- This approach requires that the work can be divided into many parts
  - best if there are many more parts than servers so that there is always work to be allocated

- The client must monitor all the servers at once so that it can detect when any one is free:
  - use select()
- This approach is often called a master-slave configuration
  - client == master
  - servers == slaves
- This example is based on Brown, pp. 235-241.
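Monitoring several descriptors at once with select() can be sketched as below. This is an illustration only, not the farm client itself: read_from_any() is a hypothetical helper, and it passes the highest descriptor plus one as the first argument to select().

```c
#include <assert.h>
#include <stddef.h>
#include <sys/select.h>
#include <unistd.h>

/* Wait until one of fds[0..n-1] is readable, read up to len-1 bytes from
   it into buf (NUL-terminated), and return its index; -1 on error. */
int read_from_any(const int fds[], int n, char *buf, int len)
{
    fd_set rdset;
    ssize_t nr;
    int i, maxfd = -1;

    assert(n > 0 && buf != NULL && len > 1);
    FD_ZERO(&rdset);
    for (i = 0; i < n; i++) {
        FD_SET(fds[i], &rdset);        /* watch this descriptor */
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }
    /* block (no timeout) until at least one descriptor has data */
    if (select(maxfd + 1, &rdset, NULL, NULL, NULL) < 0)
        return -1;

    for (i = 0; i < n; i++) {
        if (FD_ISSET(fds[i], &rdset)) {
            nr = read(fds[i], buf, len - 1);
            if (nr < 0)
                return -1;
            buf[nr] = '\0';
            return i;                  /* index of the ready descriptor */
        }
    }
    return -1;
}
```

Because select() overwrites the set it is given, a client that calls it in a loop must rebuild the set, or copy it from a saved one, before each call.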

prime_farm.c

- Reimplement the primes counter using the “processor farm” approach.
- Send sub-ranges to servers (coded as before), but the sub-range values are based on GRANULARITY (800), which generates ~800 ‘work packets’.
- There are many more work packets than servers.

Code

    #include <stdio.h>
      :                      /* usual #includes */
    #include <time.h>

    #define MAX_HOSTS 10     /* max number of hosts */
    #define BUFSIZE 128
    #define PRIME_PORT 2669
      :

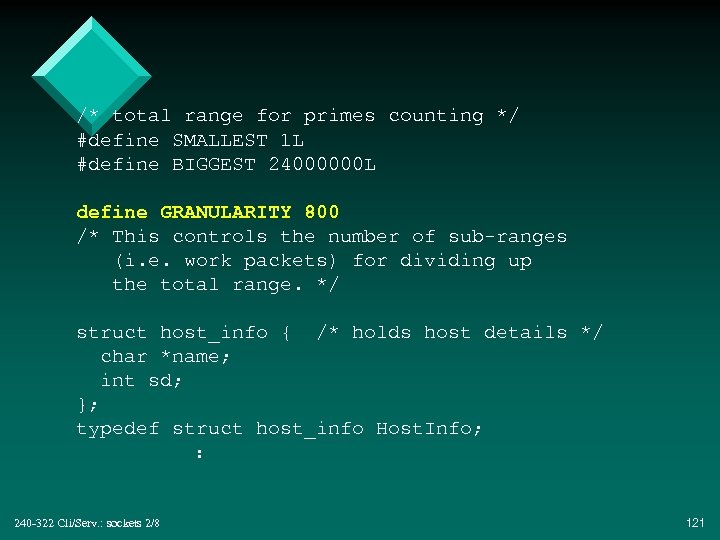

    /* total range for primes counting */
    #define SMALLEST 1L
    #define BIGGEST 24000000L

    #define GRANULARITY 800
      /* This controls the number of sub-ranges (i.e. work packets)
         for dividing up the total range. */

    struct host_info {       /* holds host details */
        char *name;
        int sd;
    };
    typedef struct host_info HostInfo;
      :

    void send_work(HostInfo hi[], int nhosts, long int *min, fd_set *rdset);
    long read_send_more(HostInfo hi[], int nhosts, long start_time,
                        long *min, fd_set *rdset);
    void send_work_packet(HostInfo hst, long int *min);
    int connect_to_serv(char *host, int port);
    int readline(int sd, char *buf, int maxlen);
    int writeN(int sd, char *buf, int nbytes);
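readline() and writeN() are declared here but their bodies are not shown in this part of the slides. The following is a sketch of what such helpers might look like, assuming that replies are newline-terminated lines. The signatures follow the prototypes above; the bodies and return conventions are assumptions.

```c
#include <assert.h>
#include <errno.h>
#include <unistd.h>

/* Write exactly nbytes from buf to sd, retrying after short writes.
   Returns nbytes on success, -1 on error. */
int writeN(int sd, char *buf, int nbytes)
{
    int nleft = nbytes;
    ssize_t nw;

    assert(buf != NULL && nbytes >= 0);
    while (nleft > 0) {
        nw = write(sd, buf, nleft);
        if (nw < 0) {
            if (errno == EINTR)
                continue;          /* interrupted: try again */
            return -1;
        }
        buf += nw;
        nleft -= (int)nw;
    }
    return nbytes;
}

/* Read one line (up to and including '\n') from sd into buf, which holds
   maxlen bytes. The result is NUL-terminated. Returns the number of
   characters stored (0 at end-of-file), or -1 on error. */
int readline(int sd, char *buf, int maxlen)
{
    int n = 0;
    char c;
    ssize_t nr;

    assert(buf != NULL && maxlen > 0);
    while (n < maxlen - 1) {
        nr = read(sd, &c, 1);      /* one byte at a time: simple, not fast */
        if (nr < 0) {
            if (errno == EINTR)
                continue;
            return -1;
        }
        if (nr == 0)
            break;                 /* end-of-file */
        buf[n++] = c;
        if (c == '\n')
            break;                 /* end of line */
    }
    buf[n] = '\0';
    return n;
}
```

Helpers like these matter on a TCP connection because a single read() or write() may transfer fewer bytes than requested, and two replies may arrive back to back in one read.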

    int main(int argc, char *argv[])
    {
        HostInfo hi[MAX_HOSTS];
        int i, nhosts = argc-1;
        long int min, total, start_time;
        fd_set read_set;

        for (i=0; i < nhosts; i++) {     /* initialise hi[] */
            hi[i].name = argv[i+1];
            hi[i].sd = connect_to_serv(hi[i].name, PRIME_PORT);
            printf("Connected to host %s\n", hi[i].name);
        }
          :

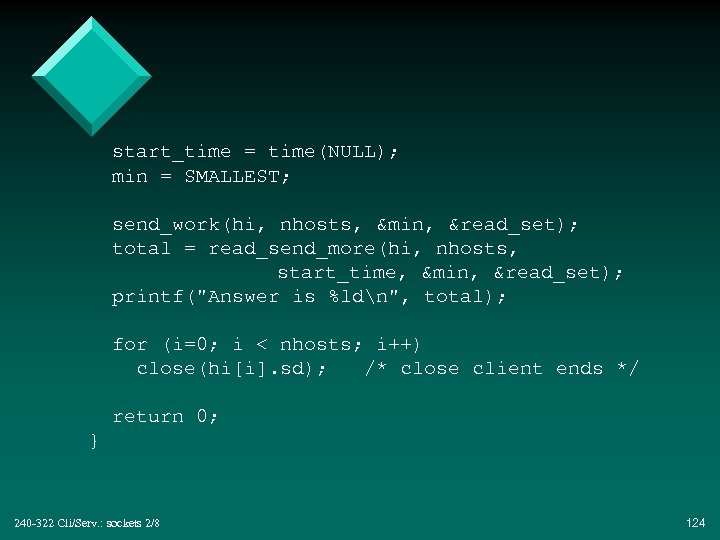

        start_time = time(NULL);
        min = SMALLEST;
        send_work(hi, nhosts, &min, &read_set);
        total = read_send_more(hi, nhosts, start_time, &min, &read_set);
        printf("Answer is %ld\n", total);

        for (i=0; i < nhosts; i++)
            close(hi[i].sd);             /* close client ends */
        return 0;
    }

    void send_work(HostInfo hi[], int nhosts, long int *min, fd_set *rdset)
    /* Send an initial packet to each server */
    {
        int i;

        FD_ZERO(rdset);
        for (i=0; i < nhosts; i++) {
            FD_SET(hi[i].sd, rdset);     /* remember the socket for select() */
            send_work_packet(hi[i], min);
        }
    }

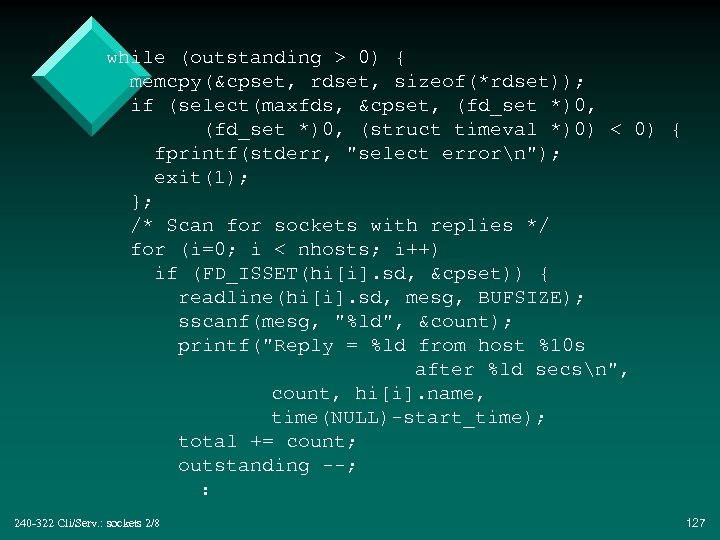

    long read_send_more(HostInfo hi[], int nhosts, long start_time,
                        long *min, fd_set *rdset)
    /* Use select() to wait for responses from servers. When a response
       arrives, add the count to the on-going total, and send the server
       another packet of work. */
    {
        fd_set cpset;
        int outstanding, maxfds, i;
        char mesg[BUFSIZE];
        long int total = 0, count;

        outstanding = nhosts;            /* packets sent but not yet answered */
        maxfds = FD_SETSIZE;
          :

        while (outstanding > 0) {
            memcpy(&cpset, rdset, sizeof(*rdset));   /* select() modifies its set */
            if (select(maxfds, &cpset, (fd_set *)0, (fd_set *)0,
                       (struct timeval *)0) < 0) {
                fprintf(stderr, "select error\n");
                exit(1);
            }

            /* Scan for sockets with replies */
            for (i=0; i < nhosts; i++)
                if (FD_ISSET(hi[i].sd, &cpset)) {
                    readline(hi[i].sd, mesg, BUFSIZE);
                    sscanf(mesg, "%ld", &count);
                    printf("Reply = %ld from host %10s after %ld secs\n",
                           count, hi[i].name, time(NULL)-start_time);
                    total += count;
                    outstanding--;
                      :

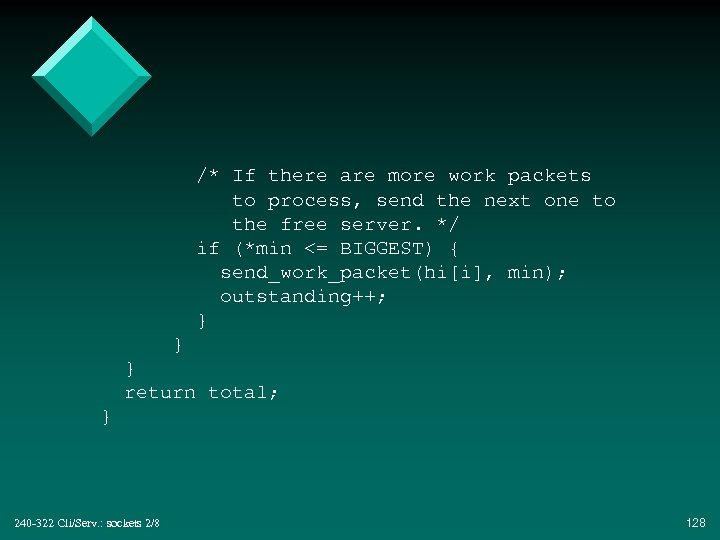

                    /* If there are more work packets to process,
                       send the next one to the free server. */
                    if (*min <= BIGGEST) {
                        send_work_packet(hi[i], min);
                        outstanding++;
                    }
                }
        }
        return total;
    }

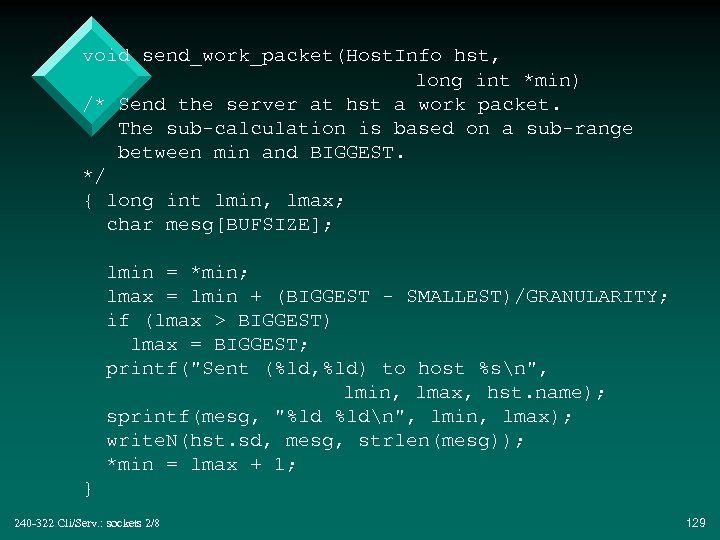

    void send_work_packet(HostInfo hst, long int *min)
    /* Send the server at hst a work packet. The sub-calculation is
       based on a sub-range between min and BIGGEST. */
    {
        long int lmin, lmax;
        char mesg[BUFSIZE];

        lmin = *min;
        lmax = lmin + (BIGGEST - SMALLEST)/GRANULARITY;
        if (lmax > BIGGEST)
            lmax = BIGGEST;
        printf("Sent (%ld, %ld) to host %s\n", lmin, lmax, hst.name);
        sprintf(mesg, "%ld %ld\n", lmin, lmax);
        writeN(hst.sd, mesg, strlen(mesg));
        *min = lmax + 1;                 /* start of the next packet */
    }
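The packet arithmetic in send_work_packet() can be checked without any networking. The sketch below copies the same calculation into two hypothetical helpers, next_packet() and count_packets(), to show how many packets the constants produce.

```c
#include <assert.h>

#define SMALLEST 1L
#define BIGGEST 24000000L
#define GRANULARITY 800

/* Compute the next work packet [*lmin, *lmax] starting at *min and
   advance *min past it, using the arithmetic of send_work_packet(). */
void next_packet(long *min, long *lmin, long *lmax)
{
    assert(*min <= BIGGEST);
    *lmin = *min;
    *lmax = *lmin + (BIGGEST - SMALLEST) / GRANULARITY;
    if (*lmax > BIGGEST)
        *lmax = BIGGEST;
    *min = *lmax + 1;
}

/* Count how many packets are needed to cover SMALLEST..BIGGEST. */
int count_packets(void)
{
    long min = SMALLEST, lo, hi;
    int n = 0;

    while (min <= BIGGEST) {
        next_packet(&min, &lo, &hi);
        n++;
    }
    return n;
}
```

With these constants each packet holds 30000 numbers, the first packet is (1, 30000), and count_packets() returns 800, which agrees with the ~800 work packets mentioned for GRANULARITY.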

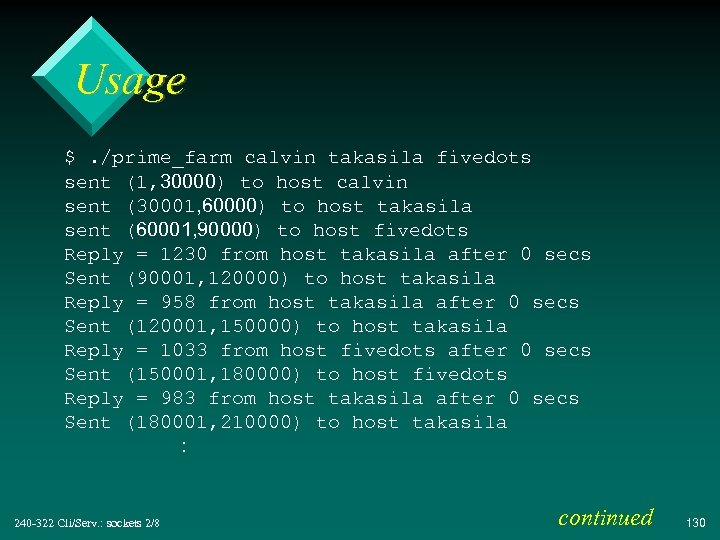

Usage

    $ ./prime_farm calvin takasila fivedots
    sent (1, 30000) to host calvin
    sent (30001, 60000) to host takasila
    sent (60001, 90000) to host fivedots
    Reply = 1230 from host takasila after 0 secs
    Sent (90001, 120000) to host takasila
    Reply = 958 from host takasila after 0 secs
    Sent (120001, 150000) to host takasila
    Reply = 1033 from host fivedots after 0 secs
    Sent (150001, 180000) to host fivedots
    Reply = 983 from host takasila after 0 secs
    Sent (180001, 210000) to host takasila
      :

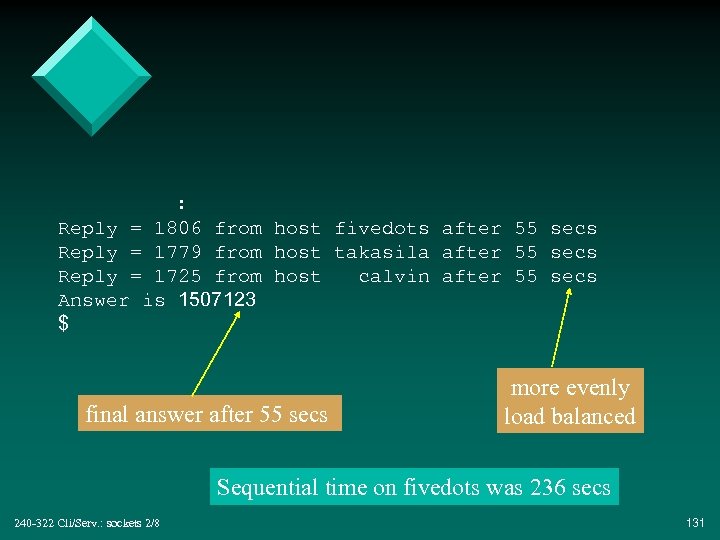

      :
    Reply = 1806 from host fivedots after 55 secs
    Reply = 1779 from host takasila after 55 secs
    Reply = 1725 from host calvin after 55 secs
    Answer is 1507123
    $

- The final answer arrives after 55 secs: the work is more evenly load balanced.
- Sequential time on fivedots was 236 secs.

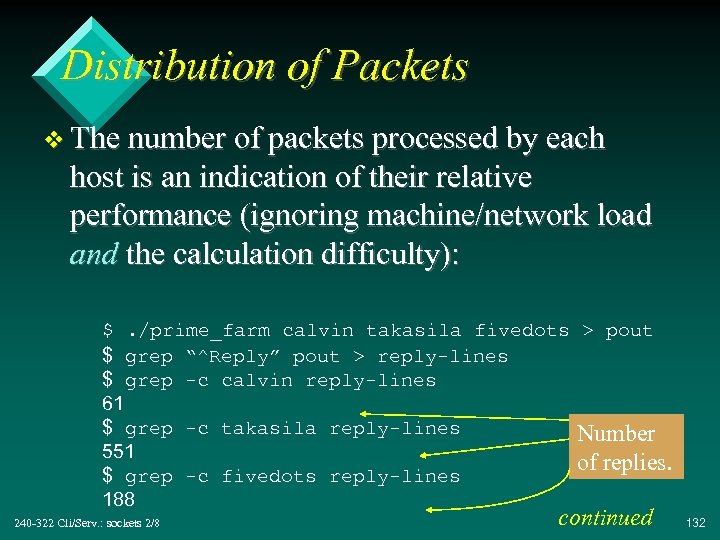

Distribution of Packets

- The number of packets processed by each host is an indication of their relative performance (ignoring machine/network load and the calculation difficulty):

      $ ./prime_farm calvin takasila fivedots > pout
      $ grep "^Reply" pout > reply-lines
      $ grep -c calvin reply-lines
      61
      $ grep -c takasila reply-lines
      551
      $ grep -c fivedots reply-lines
      188

- The numbers printed by grep -c are the numbers of replies from each host.

- Packet ratios:
  - takasila/calvin: 551/61 = about 9.0x more packets
  - takasila/fivedots: 551/188 = about 2.9x more packets
- These ratios match fairly closely the machine speed differences that we saw earlier (slide 99: calvin about 9x and fivedots 3x slower than takasila).
  - give more packets to faster machines

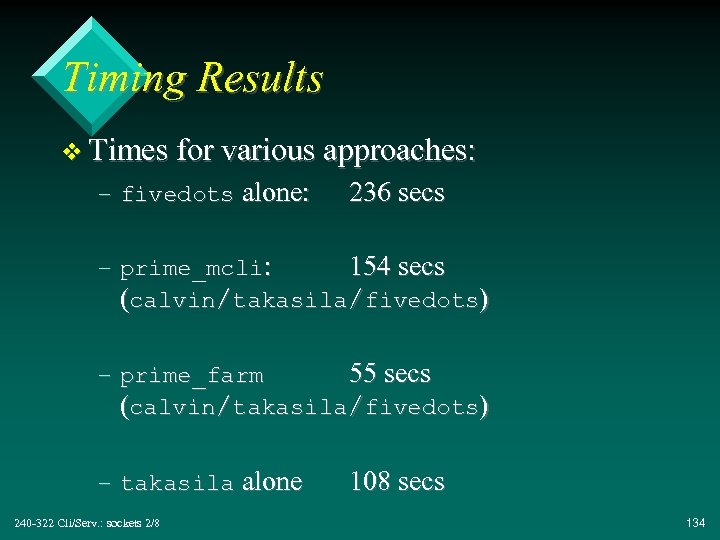

Timing Results

- Times for various approaches:
  - fivedots alone: 236 secs
  - prime_mcli: 154 secs (calvin/takasila/fivedots)
  - prime_farm: 55 secs (calvin/takasila/fivedots)
  - takasila alone: 108 secs

Some Conclusions

- prime_farm calculates the result much faster than prime_mcli, and its work is better load balanced across the servers.
- “Processor farming” is much better suited for distributing work among servers of widely differing performance (e.g. calvin vs the others).

- The load balancing is dynamic
  - packets are sent out depending on the run-time performance of the servers
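Dynamic load balancing can be illustrated with a small simulation that involves no networking. The sketch below is hypothetical and makes two simplifying assumptions: every packet costs the same on a given server, and a free server is handed the next packet immediately. farm_simulate() is an illustrative name.

```c
#include <assert.h>

#define MAX_SERVERS 16

/* Simulate handing npackets equal-sized packets to nservers servers.
   cost[s] is the time server s needs per packet (arbitrary units).
   Each packet goes to the server that becomes free first, as in a
   processor farm. counts[s] receives the packets handled by server s.
   Returns the time at which the last packet finishes. */
long farm_simulate(const long cost[], int nservers, int npackets, int counts[])
{
    long free_at[MAX_SERVERS] = {0};   /* when each server is next free */
    long finish = 0;
    int p, s, best;

    assert(nservers > 0 && nservers <= MAX_SERVERS);
    for (s = 0; s < nservers; s++)
        counts[s] = 0;

    for (p = 0; p < npackets; p++) {
        best = 0;
        for (s = 1; s < nservers; s++)
            if (free_at[s] < free_at[best])
                best = s;              /* earliest free server */
        counts[best]++;
        free_at[best] += cost[best];
        if (free_at[best] > finish)
            finish = free_at[best];
    }
    return finish;
}
```

Calling it with per-packet costs in the ratio 9:1:3 (calvin, takasila, fivedots, taken from the timing ratios) and 800 packets gives the fastest server most of the packets and the slowest the fewest, in proportions close to the observed 61/551/188 split. The real packets are not equally costly, since higher ranges take longer, so the match is only approximate.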

Final Thoughts

- Distributing the primes calculation produces the result faster:
  - speedup of 236/55 = about 4.3x faster
  - but is this speedup worth the increased code complexity?
- Recall: the only cast-iron reason for using another (server) machine is that it contains a resource not found on the home (client) machine.
