- Number of slides: 40

Client-Driven Pointer Analysis
Samuel Z. Guyer and Calvin Lin
The University of Texas at Austin
June 2003

Client-Driven Pointer Analysis Samuel Z. Guyer Calvin Lin June 2003 THE UNIVERSITY OF TEXAS AT AUSTIN 1

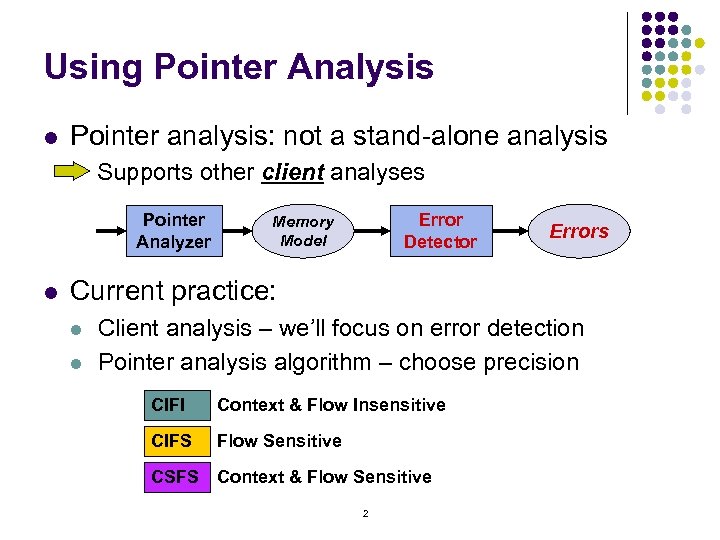

Using Pointer Analysis
- Pointer analysis is not a stand-alone analysis
  - It supports other client analyses
  [Diagram: Pointer Analyzer -> Memory Model -> Client Analysis (Error Detector) -> Output Errors]
- Current practice:
  - Client analysis: we'll focus on error detection
  - Pointer analysis algorithm: choose a precision
    - CIFI: context- and flow-insensitive
    - CIFS: context-insensitive, flow-sensitive
    - CSFS: context- and flow-sensitive

Using Pointer Analysis l Pointer analysis: not a stand-alone analysis Supports other client analyses Pointer Analyzer l Client Error Analysis Detector Memory Model Output Errors Current practice: l l Client analysis – we’ll focus on error detection Pointer analysis algorithm – choose precision CIFI Context & Flow Insensitive CIFS Flow Sensitive CSFS Context & Flow Sensitive 2

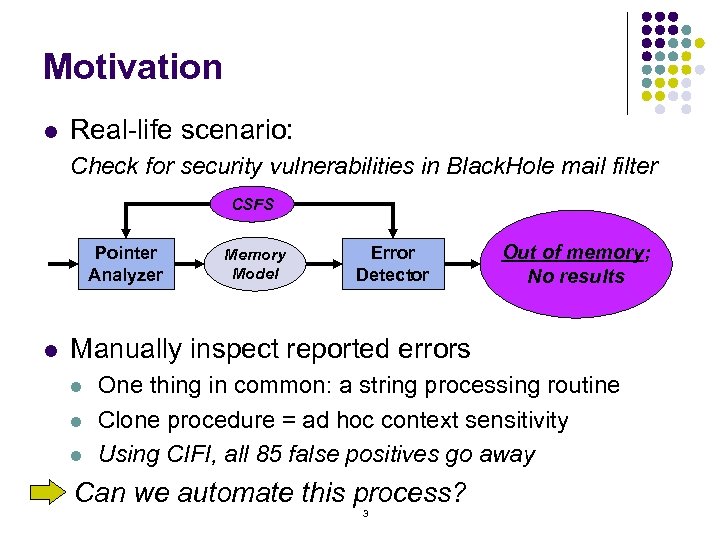

Motivation
- Real-life scenario: check for security vulnerabilities in the BlackHole mail filter
  [Diagram: Pointer Analyzer (CIFI or CSFS) -> Memory Model -> Error Detector]
  - CIFI: fast analysis; 85 possible errors
  - CSFS: slower; 25X memory; out of memory, no results
- Manually inspect the reported errors
  - One thing in common: a string processing routine
  - Clone that procedure: ad hoc context sensitivity
  - Using CIFI on the program with the cloned routine, all 85 false positives go away
- Can we automate this process?

Motivation l Real-life scenario: Check for security vulnerabilities in Black. Hole mail filter CSFS CIFI Pointer Analyzer l Memory Model Error Detector Fast slower; 25 X memory; Out ofanalysis; 85 possible No results errors Manually inspect reported errors l l l One thing in common: a string processing routine Clone procedure = ad hoc context sensitivity Using CIFI, all 85 false positives go away Can we automate this process? 3

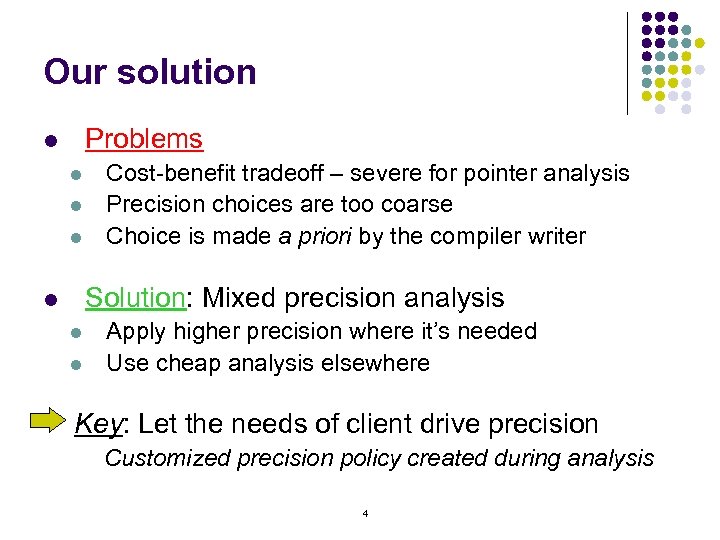

Our solution
- Problems:
  - Cost-benefit tradeoff: severe for pointer analysis
  - Precision choices are too coarse
  - The choice is made a priori by the compiler writer
- Solution: mixed-precision analysis
  - Apply higher precision where it's needed
  - Use cheap analysis elsewhere
- Key: let the needs of the client drive precision
  - A customized precision policy is created during analysis

Our solution Problems l l Cost-benefit tradeoff – severe for pointer analysis Precision choices are too coarse Choice is made a priori by the compiler writer Solution: Mixed precision analysis l l l Apply higher precision where it’s needed Use cheap analysis elsewhere Key: Let the needs of client drive precision Customized precision policy created during analysis 4

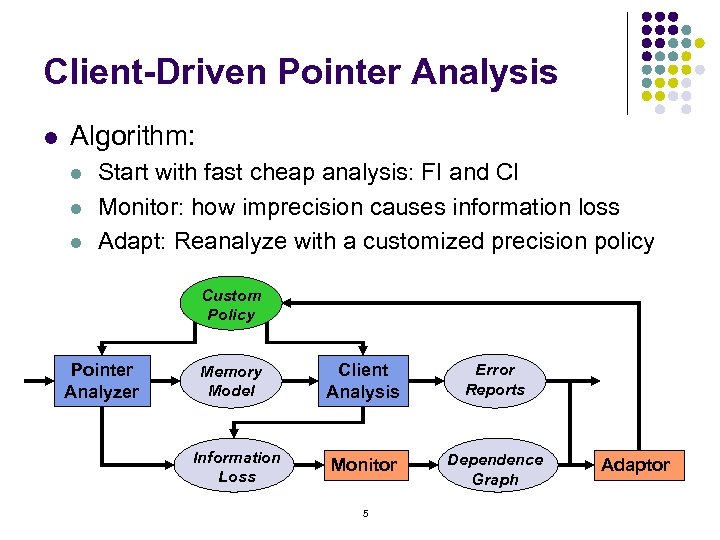

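Client-Driven Pointer Analysis
- Algorithm:
  - Start with a fast, cheap analysis: flow-insensitive (FI) and context-insensitive (CI)
  - Monitor: track how imprecision causes information loss
  - Adapt: reanalyze with a customized precision policy
[Diagram: a precision policy (CIFI at first, then custom) drives the Pointer Analyzer; its Memory Model feeds the Client Analysis, which produces Error Reports; the Monitor records Information Loss in a Dependence Graph, which the Adaptor turns into a custom policy]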

Overview
- Motivation
- Our algorithm
  - Automatically discover what the client needs
- Experiments
  - Real programs and challenging error detection problems
- Related work and conclusions

Overview l Motivation l Our algorithm Automatically discover what the client needs l Experiments Real programs and challenging error detection problems l Related work and conclusions 6

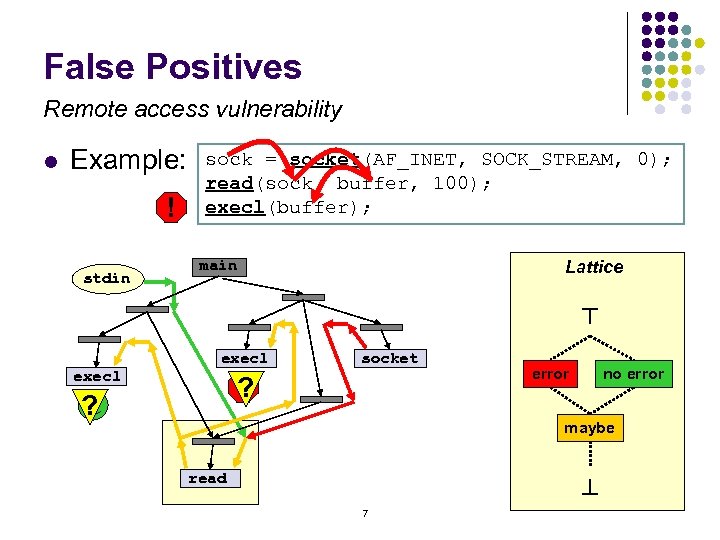

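False Positives
- Example: remote access vulnerability

    sock = socket(AF_INET, SOCK_STREAM, 0);
    read(sock, buffer, 100);
    execl(buffer, buffer, (char *) NULL);

  [Diagram: main calls socket, read, and execl, with input from stdin or the socket; execl is marked "!" (error) or "?" (maybe)]
- Typestate lattice: error (!), no error, maybe (?)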
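
One way to encode this lattice in C is shown below. It assumes bottom means "no information yet" and that "maybe" sits above both precise answers. Each precise fact gets its own bit, so join is bitwise OR. The hypothetical helper `ci_summary` shows how a context-insensitive `read` can produce a false positive. If one call site passes data from stdin (no error) and another passes data from the socket (error), the merged summary is "maybe", and every caller sees that merged value.

```c
#include <assert.h>

/* Typestate lattice from the slide, encoded as bits so that join is OR:
   bottom (no information yet), the precise facts "no error" and
   "error" (!), and "maybe" (?), which covers both. */
typedef enum {
    TS_BOTTOM   = 0,
    TS_NO_ERROR = 1,
    TS_ERROR    = 2,
    TS_MAYBE    = 3
} Typestate;

static Typestate ts_join(Typestate a, Typestate b) {
    assert(a <= TS_MAYBE && b <= TS_MAYBE);
    return (Typestate)(a | b);
}

/* Context-insensitive summary of a shared routine such as read():
   the values arriving from every call site are merged into one. */
static Typestate ci_summary(const Typestate *call_sites, int n) {
    Typestate v = TS_BOTTOM;
    for (int i = 0; i < n; i++)
        v = ts_join(v, call_sites[i]);
    return v;
}
```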

False Positives Remote access vulnerability Example: ! stdin sock = socket(AF_INET, SOCK_STREAM, 0); read(sock, buffer, 100); execl(buffer); main Lattice ^ l execl socket ? ! ? error no error maybe read ^ 7

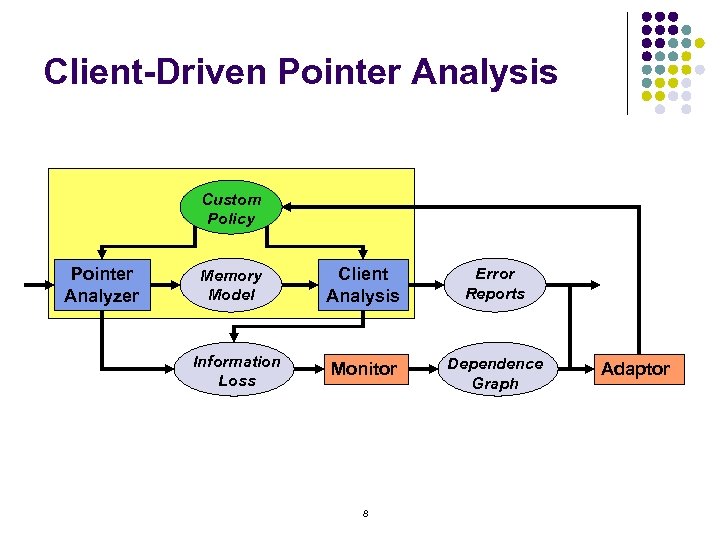

Client-Driven Pointer Analysis
[Architecture diagram: Custom/CIFI Policy, Pointer Analyzer, Memory Model, Client Analysis, Error Reports, Monitor, Information Loss, Dependence Graph, Adaptor]

Client-Driven Pointer Analysis Custom CIFI Policy Pointer Analyzer Memory Model Information Loss Client Analysis Error Reports Monitor Dependence Graph 8 Adaptor

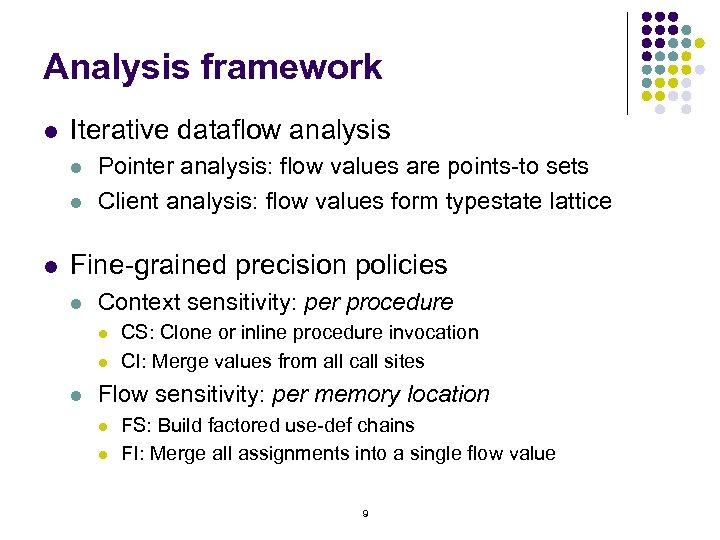

Analysis framework
- Iterative dataflow analysis
  - Pointer analysis: flow values are points-to sets
  - Client analysis: flow values form a typestate lattice
- Fine-grained precision policies
  - Context sensitivity: per procedure
    - CS: clone or inline the procedure at each invocation
    - CI: merge values from all call sites
  - Flow sensitivity: per memory location
    - FS: build factored use-def chains
    - FI: merge all assignments into a single flow value

Analysis framework l Iterative dataflow analysis l l l Pointer analysis: flow values are points-to sets Client analysis: flow values form typestate lattice Fine-grained precision policies l Context sensitivity: per procedure l l l CS: Clone or inline procedure invocation CI: Merge values from all call sites Flow sensitivity: per memory location l l FS: Build factored use-def chains FI: Merge all assignments into a single flow value 9

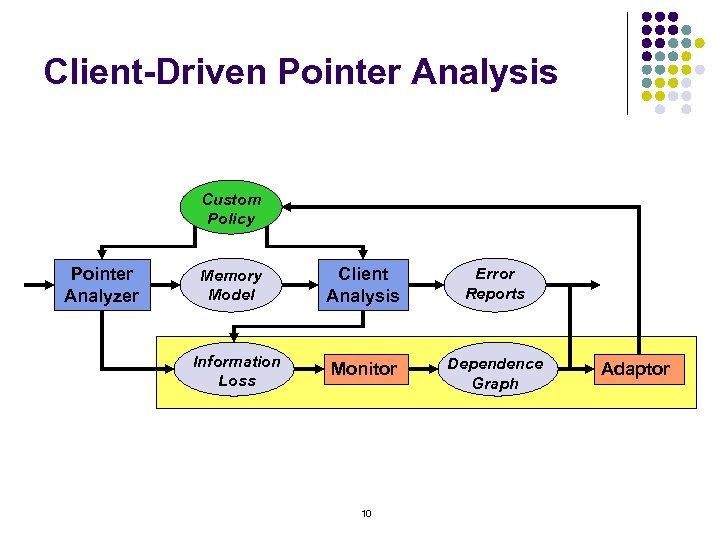

Client-Driven Pointer Analysis
[Architecture diagram: Custom/CIFI Policy, Pointer Analyzer, Memory Model, Client Analysis, Error Reports, Monitor, Information Loss, Dependence Graph, Adaptor]

Client-Driven Pointer Analysis Custom CIFI Policy Pointer Analyzer Memory Model Information Loss Client Analysis Error Reports Monitor Dependence Graph 10 Adaptor

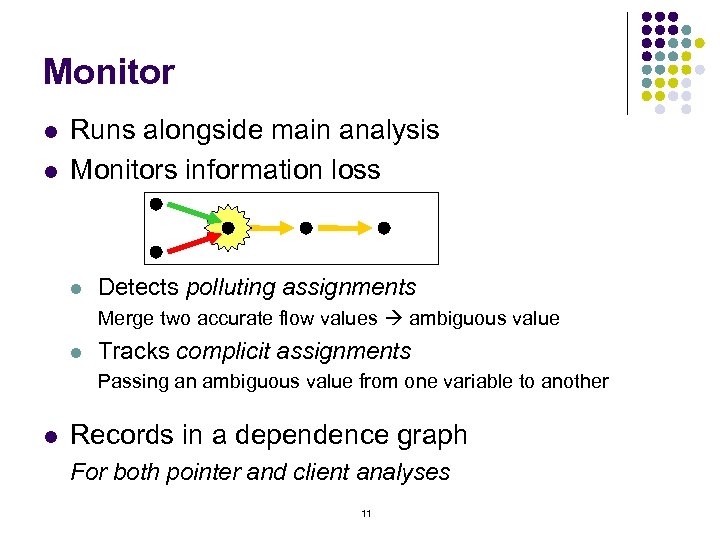

Monitor
- Runs alongside the main analysis
- Monitors information loss
  - Detects polluting assignments: merging two accurate flow values produces an ambiguous value
  - Tracks complicit assignments: an ambiguous value is passed from one variable to another
- Records both kinds in a dependence graph
  - For both the pointer and the client analyses
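
A monitor of this kind might classify each merge as shown below. The sketch reuses a four-point typestate lattice (bottom, no error, error, maybe). `classify` and `AssignKind` are hypothetical names. An assignment is polluting when two accurate values meet and produce "maybe". It is complicit when the incoming value is already "maybe". For a plain copy `x = y`, pass `TS_BOTTOM` as the old value.

```c
#include <assert.h>
#include <stdbool.h>

/* Four-point typestate lattice: bottom, two accurate values, and "maybe". */
typedef enum { TS_BOTTOM = 0, TS_NO_ERROR = 1, TS_ERROR = 2, TS_MAYBE = 3 } Typestate;

typedef enum { ASSIGN_NO_LOSS, ASSIGN_POLLUTING, ASSIGN_COMPLICIT } AssignKind;

static bool accurate(Typestate t) {
    return t == TS_NO_ERROR || t == TS_ERROR;
}

/* Classify the merge of `incoming` into a variable whose current flow value
   is `old` (join is bitwise OR in this encoding). */
static AssignKind classify(Typestate old, Typestate incoming) {
    assert(old <= TS_MAYBE && incoming <= TS_MAYBE);
    Typestate result = (Typestate)(old | incoming);
    if (result != TS_MAYBE)
        return ASSIGN_NO_LOSS;   /* still accurate (or bottom) */
    if (incoming == TS_MAYBE)
        return ASSIGN_COMPLICIT; /* ambiguity copied from elsewhere */
    if (accurate(old) && accurate(incoming))
        return ASSIGN_POLLUTING; /* ambiguity created right here */
    return ASSIGN_NO_LOSS;       /* old value was already "maybe" */
}
```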

Monitor l l Runs alongside main analysis Monitors information loss l Detects polluting assignments Merge two accurate flow values ambiguous value l Tracks complicit assignments Passing an ambiguous value from one variable to another l Records in a dependence graph For both pointer and client analyses 11

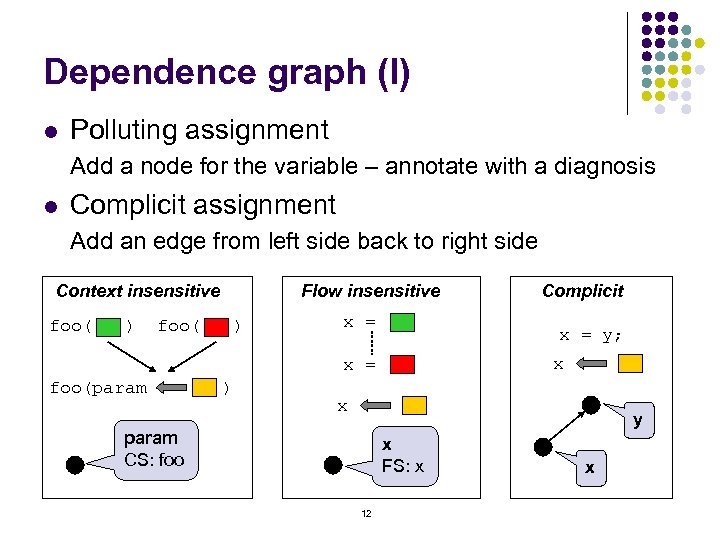

Dependence graph (I)
- Polluting assignment: add a node for the variable and annotate it with a diagnosis
- Complicit assignment: add an edge from the left-hand side back to the right-hand side
[Diagram: examples of a context-insensitive call to foo (node param, diagnosis "CS: foo"), a flow-insensitive assignment to x (node x, diagnosis "FS: x"), and the complicit assignment x = y (edge x -> y)]
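
The two recording rules can be sketched as a small fixed-capacity graph. The node names and diagnosis strings mirror the slide's examples ("CS: foo", "FS: x"). The types and functions are hypothetical, and the capacities are fixed only to keep the sketch short.

```c
#include <assert.h>
#include <string.h>

#define MAX_NODES 16
#define MAX_DIAG  32

/* One node per variable. `diagnosis` stays empty unless the monitor saw a
   polluting assignment to it (e.g. "CS: foo" or "FS: x"). */
typedef struct {
    char name[16];
    char diagnosis[MAX_DIAG];
    int  edges[MAX_NODES]; /* complicit edges: this node (lhs) -> rhs */
    int  num_edges;
} Node;

typedef struct {
    Node nodes[MAX_NODES];
    int  num_nodes;
} DepGraph;

/* Find or create the node for a variable. */
static int node_for(DepGraph *g, const char *name) {
    for (int i = 0; i < g->num_nodes; i++)
        if (strcmp(g->nodes[i].name, name) == 0)
            return i;
    assert(g->num_nodes < MAX_NODES && strlen(name) < sizeof g->nodes[0].name);
    Node *n = &g->nodes[g->num_nodes];
    strcpy(n->name, name);
    n->diagnosis[0] = '\0';
    n->num_edges = 0;
    return g->num_nodes++;
}

/* Polluting assignment: annotate the variable's node with a diagnosis. */
static void record_polluting(DepGraph *g, const char *var, const char *diag) {
    Node *n = &g->nodes[node_for(g, var)];
    assert(strlen(diag) < MAX_DIAG);
    strcpy(n->diagnosis, diag);
}

/* Complicit assignment "lhs = rhs": edge from the left side back to the right. */
static void record_complicit(DepGraph *g, const char *lhs, const char *rhs) {
    int l = node_for(g, lhs);
    int r = node_for(g, rhs);
    Node *n = &g->nodes[l];
    assert(n->num_edges < MAX_NODES);
    n->edges[n->num_edges++] = r;
}
```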

Dependence graph (I) l Polluting assignment Add a node for the variable – annotate with a diagnosis l Complicit assignment Add an edge from left side back to right side Context insensitive foo( ) Flow insensitive x = foo(param ) Complicit x = y; x x param CS: foo y x FS: x 12 x

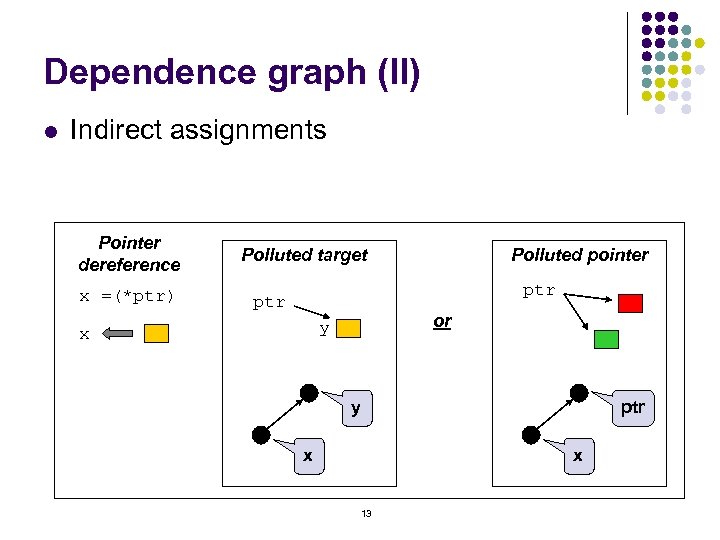

Dependence graph (II)
- Indirect assignments through a pointer dereference: x = (*ptr)
  - Polluted target: edge from x to the target y that ptr points to
  - Polluted pointer: edge from x to ptr
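
For an indirect assignment, the monitor needs the pointer's points-to set. The sketch below identifies variables by hypothetical integer ids and assumes the pollution flags come from the monitor. It records one edge to `ptr` when the pointer itself is polluted, plus one edge to each polluted target.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_EDGES 16

/* Dependence edge "from -> to" between hypothetical variable ids. */
typedef struct {
    int from, to;
} Edge;

/* Record the dependences of "x = *ptr". `targets` is ptr's points-to set.
   Polluted pointer: x depends on ptr. Polluted target y: x depends on y.
   Writes edges into `out` and returns how many were written. */
static int record_indirect(int x, int ptr, bool ptr_polluted,
                           const int *targets, const bool *target_polluted,
                           int num_targets, Edge *out) {
    assert(num_targets + 1 <= MAX_EDGES);
    int n = 0;
    if (ptr_polluted)
        out[n++] = (Edge){x, ptr};
    for (int i = 0; i < num_targets; i++)
        if (target_polluted[i])
            out[n++] = (Edge){x, targets[i]};
    return n;
}
```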

Dependence graph (II) l Indirect assignments Pointer dereference x =(*ptr) Polluted target Polluted pointer ptr or y x ptr x 13

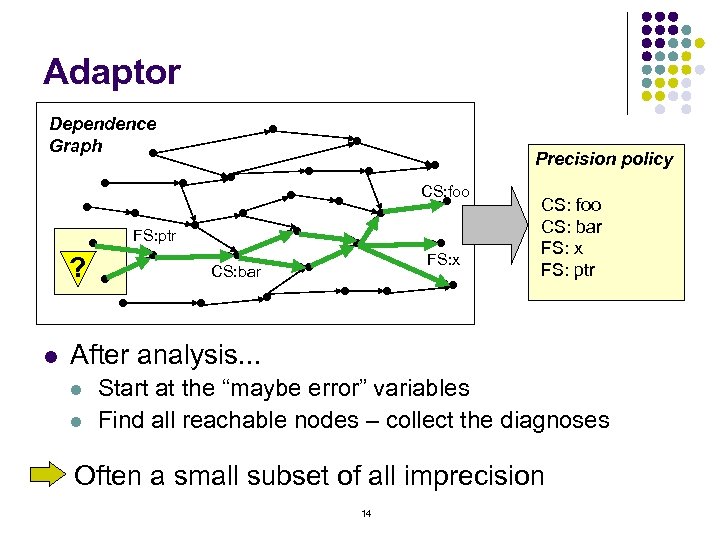

Adaptor
[Diagram: a dependence graph, starting from a "?" (maybe error) node, reaches nodes diagnosed CS: foo, CS: bar, FS: x, and FS: ptr; these diagnoses become the precision policy]
- After analysis...
  - Start at the "maybe error" variables
  - Find all reachable nodes and collect their diagnoses
  - Often a small subset of all imprecision
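
The adaptor's traversal is a plain graph reachability search. This sketch uses hypothetical names, an adjacency matrix, and diagnoses encoded as small integer ids. A depth-first search from every "maybe error" variable switches on each diagnosis it reaches. Diagnoses on unreachable nodes are ignored, which is why the resulting policy can be a small subset of all the imprecision the monitor recorded.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_NODES 16
#define MAX_DIAGS 8

/* edge[a][b]: a's ambiguity may come from b (complicit or indirect edge).
   diag[v]: 0 = no diagnosis, otherwise a hypothetical diagnosis id
   (for example 1 = "CS: foo", 2 = "FS: ptr"). */
typedef struct {
    int  num_nodes;
    bool edge[MAX_NODES][MAX_NODES];
    int  diag[MAX_NODES];
} DepGraph;

/* Depth-first search that enables every diagnosis it reaches. */
static void visit(const DepGraph *g, int v, bool *seen, bool *policy) {
    if (seen[v])
        return;
    seen[v] = true;
    assert(g->diag[v] >= 0 && g->diag[v] < MAX_DIAGS);
    if (g->diag[v] != 0)
        policy[g->diag[v]] = true;
    for (int w = 0; w < g->num_nodes; w++)
        if (g->edge[v][w])
            visit(g, w, seen, policy);
}

/* Adaptor: start from each "maybe error" variable and collect the
   diagnoses of all reachable nodes into policy[]. */
static void adapt(const DepGraph *g, const int *maybe_vars, int n, bool *policy) {
    bool seen[MAX_NODES] = {false};
    assert(g->num_nodes <= MAX_NODES);
    for (int i = 0; i < n; i++)
        visit(g, maybe_vars[i], seen, policy);
}
```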

Adaptor Dependence Graph Precision policy CS: foo FS: ptr ? l FS: x CS: bar CS: foo CS: bar FS: x FS: ptr After analysis. . . l l Start at the “maybe error” variables Find all reachable nodes – collect the diagnoses Often a small subset of all imprecision 14

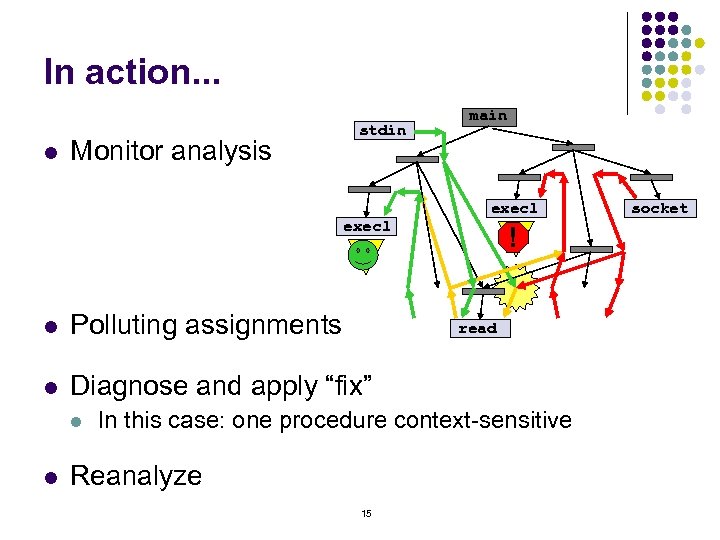

In action...
- Monitor the analysis
  [Diagram: main, stdin, socket, read, and execl; the execl calls are marked "?" (maybe), "!" (error), and "?" (maybe)]
- Find the polluting assignments
- Diagnose and apply the "fix"
  - In this case: make one procedure context-sensitive
- Reanalyze

In action. . . l Monitor analysis stdin execl main execl ? ! ? l Polluting assignments l Diagnose and apply “fix” l l read In this case: one procedure context-sensitive Reanalyze 15 socket

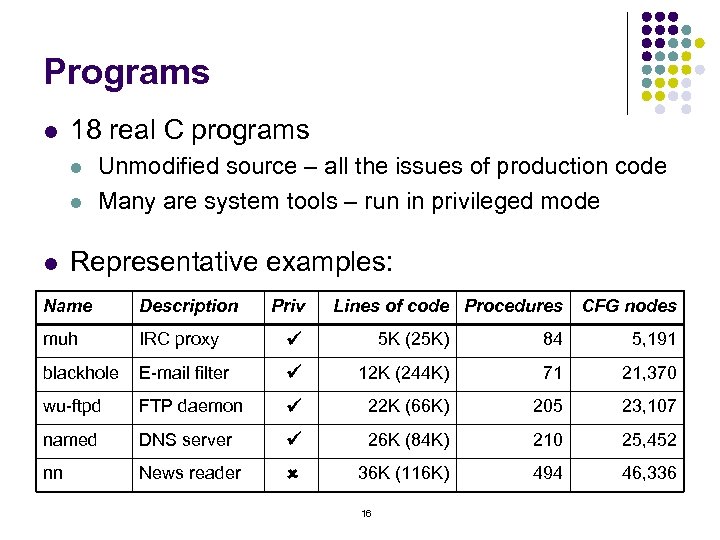

Programs
- 18 real C programs
  - Unmodified source: all the issues of production code
  - Many are system tools that run in privileged mode
- Representative examples:

    Name       Description    Priv  Lines of code  Procedures  CFG nodes
    muh        IRC proxy      yes   5K (25K)       84          5,191
    blackhole  E-mail filter  yes   12K (244K)     71          21,370
    wu-ftpd    FTP daemon     yes   22K (66K)      205         23,107
    named      DNS server     yes   26K (84K)      210         25,452
    nn         News reader    no    36K (116K)     494         46,336

Methodology
- 5 typestate error checkers:
  - Represent non-trivial program properties
  - Stress the pointer analyzer
- Compare client-driven analysis with fixed-precision analysis
- Goals:
  - First, reduce the number of errors reported (the analysis is conservative, so fewer is better)
  - Second, reduce analysis time

Methodology 5 typestate error checkers: l l l Represent non-trivial program properties Stress the pointer analyzer l Compare client-driven with fixed-precision l Goals: l l First, reduce number of errors reported Conservative analysis – fewer is better Second, reduce analysis time 17

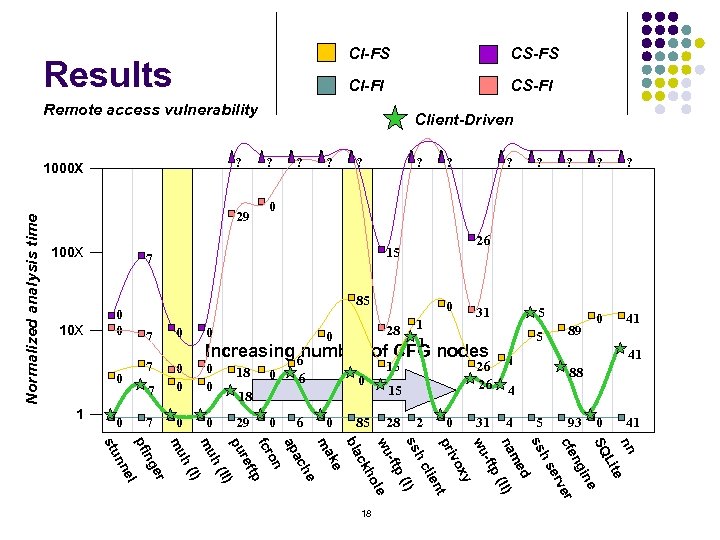

Results
[Chart: remote access vulnerability. Normalized analysis time (10X to 1000X) for CI-FI, CI-FS, CS-FI, CS-FS, and Client-Driven on each benchmark, with benchmarks ordered by increasing number of CFG nodes; each bar is labeled with the number of errors reported]

CI-FS CI-FI Results CS-FS CS-FI Remote access vulnerability ? 29 100 X 10 X ? ? 0 7 0 0 28 0 1 1 31 7 0 0 mu 0 0 18 29 0 6 0 18 0 6 0 85 ? ? 5 26 26 15 15 28 5 2 0 0 41 4 31 89 88 4 5 93 0 41 nn e Lit SQ ine ng cfe er erv hs ss d me na (II) -ftp wu xy vo pri nt lie hc ss (I) -ftp wu le ho ck bla ke ma he ac ap on fcr tp ref pu II) h( I) er el nn h( 0 ng 7 0 0 mu Increasing 6 number of CFG nodes pfi 7 ? 26 85 0 0 ? 0 7 0 1 ? 15 stu Normalized analysis time 1000 X Client-Driven 18

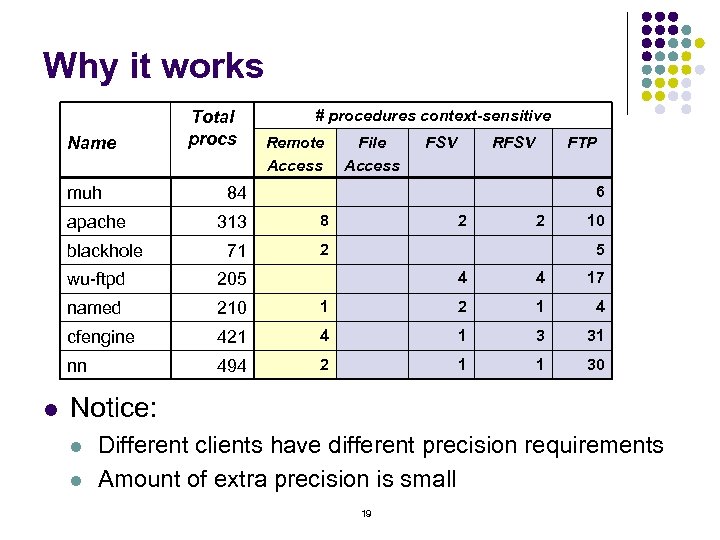

Why it works
[Table: number of procedures made context-sensitive for each client (Remote Access, File Access, FSV, RFSV, FTP). Total procedures per program: muh 84, apache 313, blackhole 71, wu-ftpd 205, named 210, cfengine 421, nn 494]
- Notice:
  - Different clients have different precision requirements
  - The amount of extra precision is small

Related work
- Pointer analysis and typestate error checking
- Iterative flow analysis [Plevyak & Chien '94]
- Demand-driven pointer analysis [Heintze & Tardieu '01]
- Combined pointer analysis [Zhang, Ryder, Landi '98]
- Effects of pointer analysis precision [Hind '01 & others]
  - More precision is more costly
  - Does it help? Is it worth the cost?

Conclusions
- Client-driven pointer analysis
  - Precision should match the client and the program
  - Not all pointers are equal
  - Need fine-grained precision policies
- Key: knowing where to add more precision and what kind
- Roadmap for scalability
  - Use more expensive analysis on small parts of programs

Thank You

Thank You 22

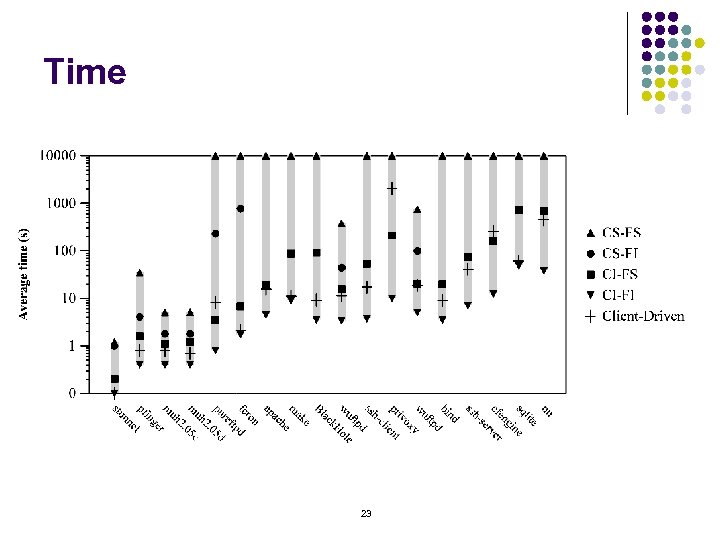

Time
[Chart]

Time 23

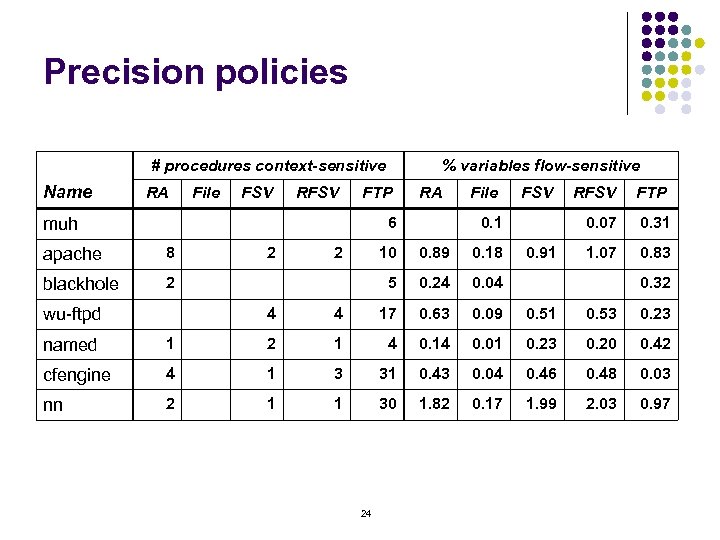

Precision policies
[Tables: number of procedures context-sensitive and percentage of variables flow-sensitive, per program (muh, apache, blackhole, wu-ftpd, named, cfengine, nn) and per client (RA, File, FSV, RFSV, FTP)]

Thank You

Thank You 25

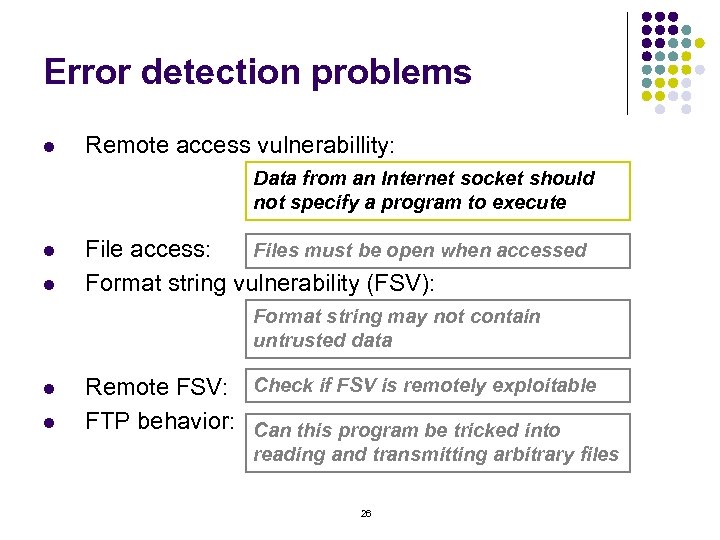

Error detection problems
- Remote access vulnerability: data from an Internet socket should not specify a program to execute
- File access: files must be open when accessed
- Format string vulnerability (FSV): a format string may not contain untrusted data
- Remote FSV: check whether an FSV is remotely exploitable
- FTP behavior: can this program be tricked into reading and transmitting arbitrary files?

Error detection problems l Remote access vulnerabillity: Data from an Internet socket should not specify a program to execute l l File access: Files must be open when accessed Format string vulnerability (FSV): Format string may not contain untrusted data l l Remote FSV: Check if FSV is remotely exploitable FTP behavior: Can this program be tricked into reading and transmitting arbitrary files 26

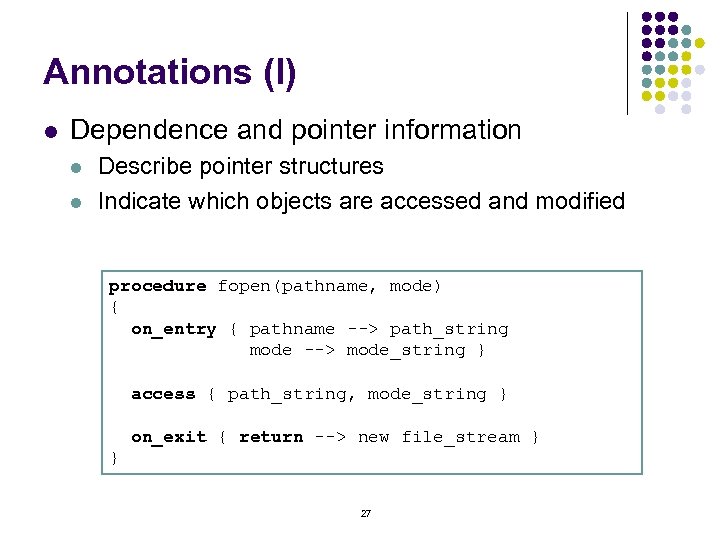

Annotations (I)
- Dependence and pointer information
  - Describe pointer structures
  - Indicate which objects are accessed and modified

    procedure fopen(pathname, mode)
    {
      on_entry { pathname --> path_string
                 mode --> mode_string }
      access   { path_string, mode_string }
      on_exit  { return --> new file_stream }
    }

Annotations (I) l Dependence and pointer information l l Describe pointer structures Indicate which objects are accessed and modified procedure fopen(pathname, mode) { on_entry { pathname --> path_string mode --> mode_string } access { path_string, mode_string } on_exit { return --> new file_stream } } 27

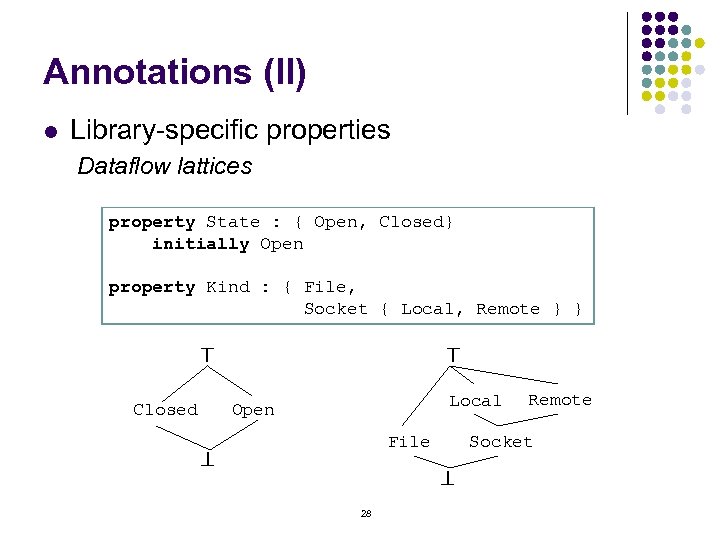

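Annotations (II)
- Library-specific properties: dataflow lattices

    property State : { Open, Closed } initially Open
    property Kind  : { File, Socket { Local, Remote } }

[Lattice diagrams: State with Open and Closed; Kind with File and Socket, and with Local and Remote nested under Socket; each lattice has top and bottom elements]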
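
The nested `Kind` declaration can be read as a tree. The sketch below assumes, for illustration, that a parent is more general than its children and that an artificial top element sits above `File` and `Socket`; bottom is omitted. Under those assumptions, joining two kinds is a lowest-common-ancestor walk. The enum and function names are hypothetical, not Broadway's.

```c
#include <assert.h>

/* Kind property: { File, Socket { Local, Remote } }, plus an assumed TOP. */
typedef enum { K_TOP, K_FILE, K_SOCKET, K_LOCAL, K_REMOTE, K_COUNT } Kind;

/* Parent of each kind in the tree (TOP is its own parent). */
static const Kind parent[K_COUNT] = {
    [K_TOP] = K_TOP, [K_FILE] = K_TOP, [K_SOCKET] = K_TOP,
    [K_LOCAL] = K_SOCKET, [K_REMOTE] = K_SOCKET,
};

static int depth(Kind k) {
    int d = 0;
    while (k != K_TOP) {
        k = parent[k];
        d++;
    }
    return d;
}

/* Join = lowest common ancestor: the most specific kind covering both. */
static Kind kind_join(Kind a, Kind b) {
    assert(a < K_COUNT && b < K_COUNT);
    int da = depth(a), db = depth(b);
    while (da > db) { a = parent[a]; da--; }
    while (db > da) { b = parent[b]; db--; }
    while (a != b) { a = parent[a]; b = parent[b]; }
    return a;
}
```

For example, joining `Local` and `Remote` gives `Socket`, and joining `File` with `Local` gives the top element.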

Annotations (II) Library-specific properties Dataflow lattices property State : { Open, Closed} initially Open property Kind : { File, Socket { Local, Remote } } ^ Closed ^ l Local Open File ^ Socket ^ 28 Remote

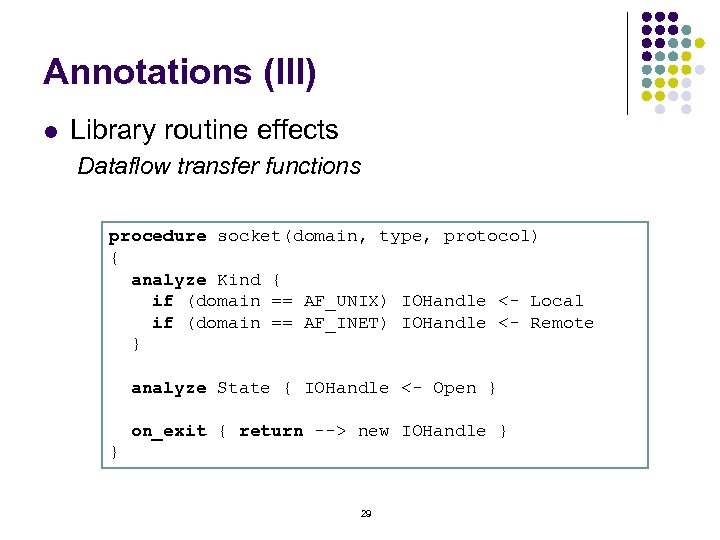

Annotations (III)
- Library routine effects: dataflow transfer functions

    procedure socket(domain, type, protocol)
    {
      analyze Kind {
        if (domain == AF_UNIX) IOHandle <- Local
        if (domain == AF_INET) IOHandle <- Remote
      }
      analyze State {
        IOHandle <- Open
      }
      on_exit { return --> new IOHandle }
    }

Annotations (III) l Library routine effects Dataflow transfer functions procedure socket(domain, type, protocol) { analyze Kind { if (domain == AF_UNIX) IOHandle <- Local if (domain == AF_INET) IOHandle <- Remote } analyze State { IOHandle <- Open } on_exit { return --> new IOHandle } } 29

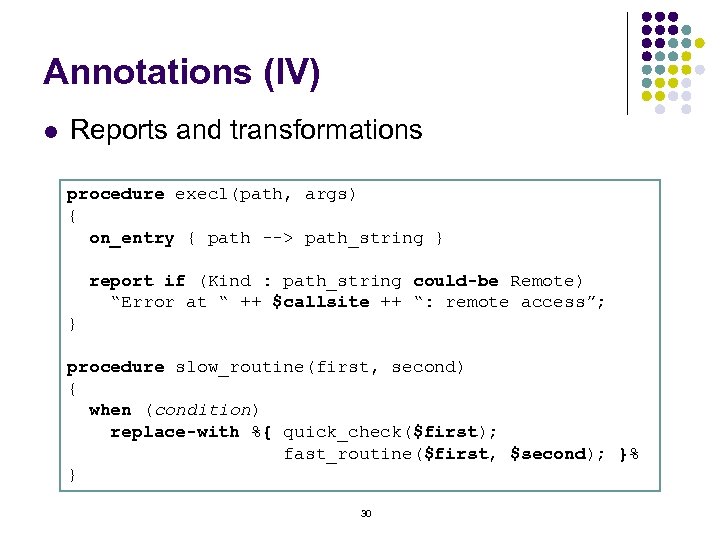

Annotations (IV)
- Reports and transformations

    procedure execl(path, args)
    {
      on_entry { path --> path_string }
      report if (Kind : path_string could-be Remote)
        "Error at " ++ $callsite ++ ": remote access";
    }

    procedure slow_routine(first, second)
    {
      when (condition)
        replace-with %{
          quick_check($first);
          fast_routine($first, $second);
        }%
    }
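
Below is a sketch of the `execl` report rule. It assumes a Kind flow value is stored as the set of concrete kinds an object might have, one bit each, so that "could-be Remote" becomes a bit test. The encoding and `execl_report` are hypothetical; the message text follows the annotation.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical encoding: a Kind flow value is the set of concrete kinds the
   object might have, one bit each. */
enum { KIND_FILE = 1u << 0, KIND_LOCAL = 1u << 1, KIND_REMOTE = 1u << 2 };

/* execl report rule: if the path string could be Remote, write
   "Error at <callsite>: remote access" into buf and return 1; otherwise
   return 0 and leave buf untouched. */
static int execl_report(unsigned path_kind, const char *callsite,
                        char *buf, size_t len) {
    assert(callsite != NULL && strlen(callsite) > 0 && buf != NULL);
    if ((path_kind & KIND_REMOTE) == 0)
        return 0;
    snprintf(buf, len, "Error at %s: remote access", callsite);
    return 1;
}
```

With the value `KIND_LOCAL | KIND_REMOTE` and call site `main.c:12`, the buffer receives `Error at main.c:12: remote access`.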

Annotations (IV) l Reports and transformations procedure execl(path, args) { on_entry { path --> path_string } report if (Kind : path_string could-be Remote) “Error at “ ++ $callsite ++ “: remote access”; } procedure slow_routine(first, second) { when (condition) replace-with %{ quick_check($first); fast_routine($first, $second); }% } 30

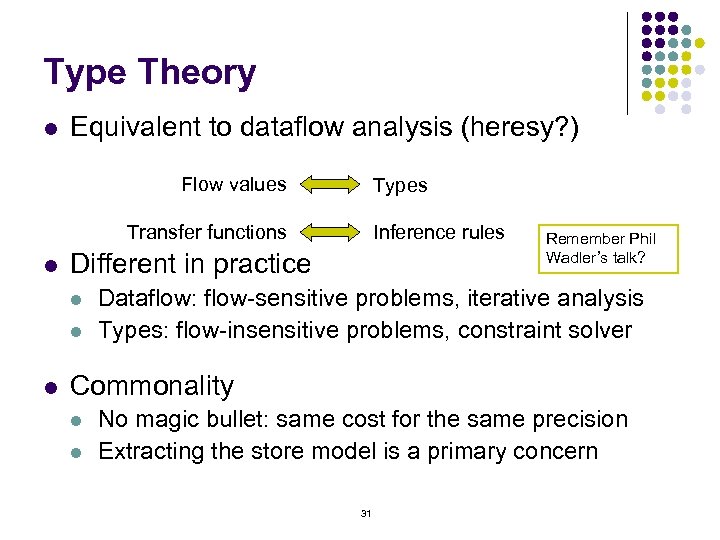

Type Theory
- Equivalent to dataflow analysis (heresy?)
  - Flow values correspond to types
  - Transfer functions correspond to inference rules
- Different in practice (remember Phil Wadler's talk?)
  - Dataflow: flow-sensitive problems, iterative analysis
  - Types: flow-insensitive problems, constraint solver
- Commonality
  - No magic bullet: same cost for the same precision
  - Extracting the store model is a primary concern

Type Theory l Equivalent to dataflow analysis (heresy? ) Flow values Types Transfer functions l Different in practice l l l Inference rules Remember Phil Wadler’s talk? Dataflow: flow-sensitive problems, iterative analysis Types: flow-insensitive problems, constraint solver Commonality l l No magic bullet: same cost for the same precision Extracting the store model is a primary concern 31

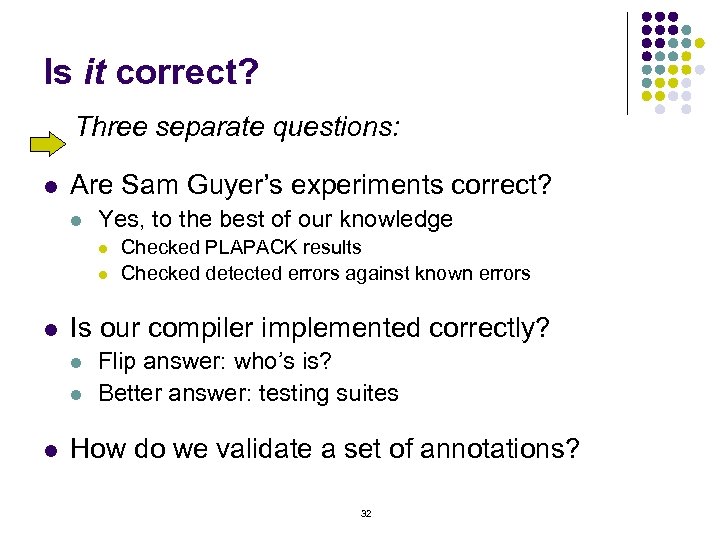

Is it correct?
Three separate questions:
- Are Sam Guyer's experiments correct?
  - Yes, to the best of our knowledge
  - Checked PLAPACK results
  - Checked detected errors against known errors
- Is our compiler implemented correctly?
  - Flip answer: whose is?
  - Better answer: testing suites
- How do we validate a set of annotations?

Is it correct? Three separate questions: l Are Sam Guyer’s experiments correct? l Yes, to the best of our knowledge l l l Is our compiler implemented correctly? l l l Checked PLAPACK results Checked detected errors against known errors Flip answer: who’s is? Better answer: testing suites How do we validate a set of annotations? 32

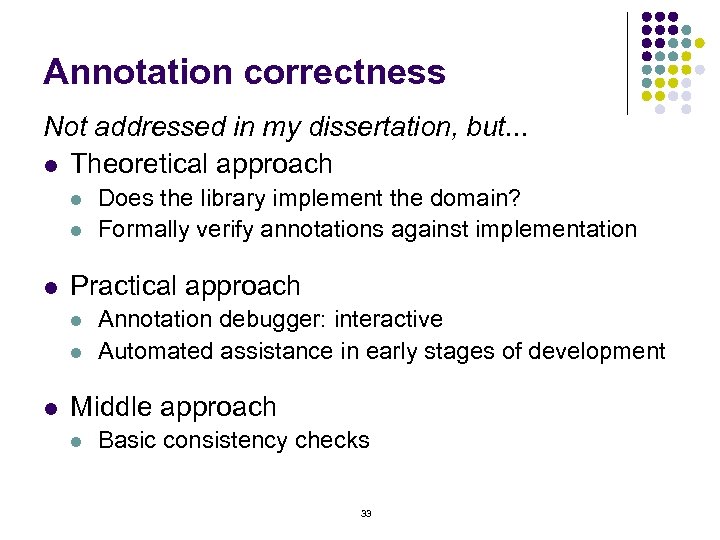

Annotation correctness
Not addressed in my dissertation, but...
- Theoretical approach
  - Does the library implement the domain?
  - Formally verify annotations against the implementation
- Practical approach
  - Annotation debugger: interactive
  - Automated assistance in early stages of development
- Middle approach
  - Basic consistency checks

Annotation correctness Not addressed in my dissertation, but. . . l Theoretical approach l l l Practical approach l l l Does the library implement the domain? Formally verify annotations against implementation Annotation debugger: interactive Automated assistance in early stages of development Middle approach l Basic consistency checks 33

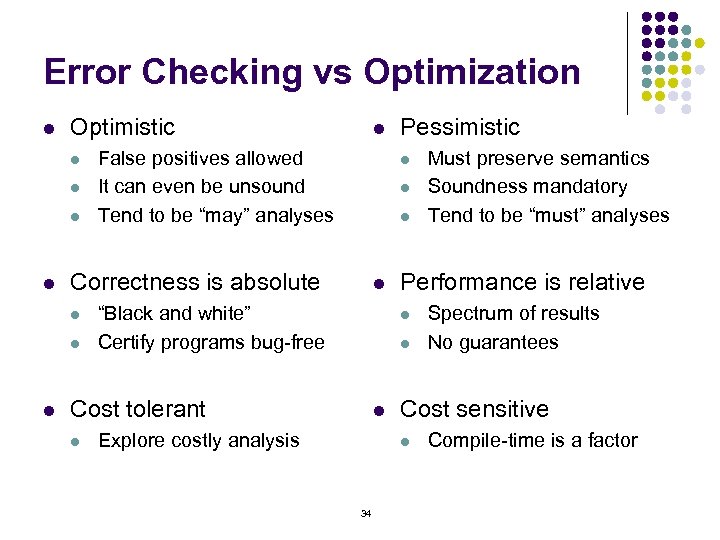

Error Checking vs Optimization
Error checking:
- Optimistic
  - False positives allowed
  - It can even be unsound
  - Tend to be "may" analyses
- Correctness is absolute
  - "Black and white"
  - Certify programs bug-free
- Cost tolerant
  - Explore costly analysis
Optimization:
- Pessimistic
  - Must preserve semantics
  - Soundness mandatory
  - Tend to be "must" analyses
- Performance is relative
  - Spectrum of results
  - No guarantees
- Cost sensitive
  - Compile time is a factor

Error Checking vs Optimization l Optimistic l l False positives allowed It can even be unsound Tend to be “may” analyses l l “Black and white” Certify programs bug-free l l Explore costly analysis Spectrum of results No guarantees Cost sensitive l 34 Must preserve semantics Soundness mandatory Tend to be “must” analyses Performance is relative l Cost tolerant l Pessimistic l Correctness is absolute l l l Compile-time is a factor

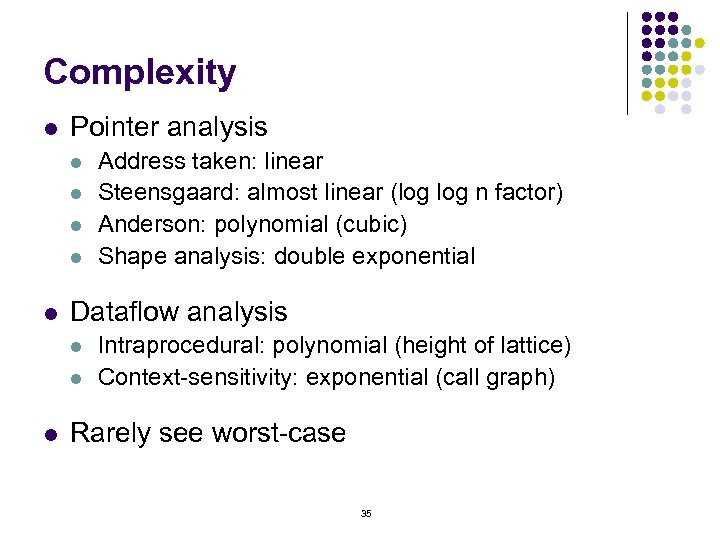

Complexity
- Pointer analysis
  - Address taken: linear
  - Steensgaard: almost linear (inverse Ackermann factor)
  - Andersen: polynomial (cubic)
  - Shape analysis: double exponential
- Dataflow analysis
  - Intraprocedural: polynomial (height of lattice)
  - Context sensitivity: exponential (call graph)
- We rarely see the worst case
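
To make the Steensgaard entry concrete, here is a minimal unification-based points-to sketch for the two statement forms `p = &x` and `p = q`, built on union-find. It is a teaching sketch with hypothetical names and a fixed capacity. It uses path halving but omits union by rank, so on its own it does not achieve the almost-linear bound.

```c
#include <assert.h>

#define MAX_VARS 64

/* Each abstract location belongs to an equivalence class (union-find).
   Each class root has at most one pointee class (-1 = none yet). */
static int uf_parent[MAX_VARS];
static int pointee[MAX_VARS];
static int num_vars;

static int new_var(void) {
    assert(num_vars < MAX_VARS);
    uf_parent[num_vars] = num_vars;
    pointee[num_vars] = -1;
    return num_vars++;
}

static int find(int v) {
    while (uf_parent[v] != v) {
        uf_parent[v] = uf_parent[uf_parent[v]]; /* path halving */
        v = uf_parent[v];
    }
    return v;
}

/* Merge two classes and, recursively, the classes they point to. */
static void unify(int a, int b) {
    a = find(a);
    b = find(b);
    if (a == b)
        return;
    int pa = pointee[a], pb = pointee[b];
    uf_parent[b] = a;
    pointee[a] = (pa >= 0) ? pa : pb;
    if (pa >= 0 && pb >= 0)
        unify(pa, pb);
}

/* Class that p points to, creating an empty one if needed. */
static int deref(int p) {
    p = find(p);
    if (pointee[p] < 0)
        pointee[p] = new_var();
    return pointee[p];
}

static void assign_addr(int p, int x) { unify(deref(p), x); }         /* p = &x */
static void assign_copy(int p, int q) { unify(deref(p), deref(q)); } /* p = q  */
```

After `p = &x; q = &y; p = q;`, x and y end up in one class. Andersen's analysis would keep them apart, and this merging is the precision Steensgaard's algorithm gives up in exchange for speed.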

Complexity l Pointer analysis l l l Dataflow analysis l l l Address taken: linear Steensgaard: almost linear (log n factor) Anderson: polynomial (cubic) Shape analysis: double exponential Intraprocedural: polynomial (height of lattice) Context-sensitivity: exponential (call graph) Rarely see worst-case 35

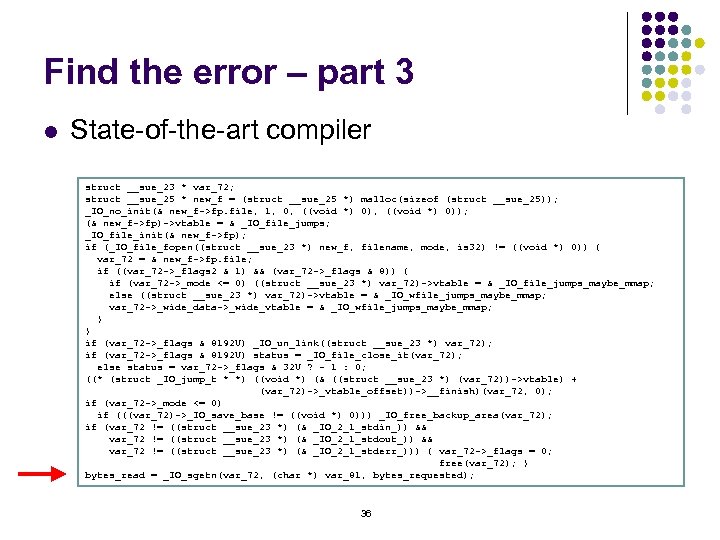

Find the error: part 3
- State-of-the-art compiler

    struct __sue_23 *var_72;
    struct __sue_25 *new_f = (struct __sue_25 *) malloc(sizeof (struct __sue_25));
    _IO_no_init(&new_f->fp.file, 1, 0, ((void *) 0));
    (&new_f->fp)->vtable = &_IO_file_jumps;
    _IO_file_init(&new_f->fp);
    if (_IO_file_fopen((struct __sue_23 *) new_f, filename, mode, is32) != ((void *) 0)) {
      var_72 = &new_f->fp.file;
      if ((var_72->_flags2 & 1) && (var_72->_flags & 8)) {
        if (var_72->_mode <= 0)
          ((struct __sue_23 *) var_72)->vtable = &_IO_file_jumps_maybe_mmap;
        else
          ((struct __sue_23 *) var_72)->vtable = &_IO_wfile_jumps_maybe_mmap;
        var_72->_wide_data->_wide_vtable = &_IO_wfile_jumps_maybe_mmap;
      }
    }
    if (var_72->_flags & 8192U)
      _IO_un_link((struct __sue_23 *) var_72);
    if (var_72->_flags & 8192U)
      status = _IO_file_close_it(var_72);
    else
      status = var_72->_flags & 32U ? -1 : 0;
    ((*(struct _IO_jump_t **) ((void *) (&((struct __sue_23 *) (var_72))->vtable) + (var_72)->_vtable_offset))->__finish)(var_72, 0);
    if (var_72->_mode <= 0)
      if (((var_72)->_IO_save_base != ((void *) 0)))
        _IO_free_backup_area(var_72);
    if (var_72 != ((struct __sue_23 *) (&_IO_2_1_stdin_))
        && var_72 != ((struct __sue_23 *) (&_IO_2_1_stdout_))
        && var_72 != ((struct __sue_23 *) (&_IO_2_1_stderr_))) {
      var_72->_flags = 0;
      free(var_72);
    }
    bytes_read = _IO_sgetn(var_72, (char *) var_81, bytes_requested);

Find the error – part 3 l State-of-the-art compiler struct __sue_23 * var_72; struct __sue_25 * new_f = (struct __sue_25 *) malloc(sizeof (struct __sue_25)); _IO_no_init(& new_f->fp. file, 1, 0, ((void *) 0)); (& new_f->fp)->vtable = & _IO_file_jumps; _IO_file_init(& new_f->fp); if (_IO_file_fopen((struct __sue_23 *) new_f, filename, mode, is 32) != ((void *) 0)) { var_72 = & new_f->fp. file; if ((var_72 ->_flags 2 & 1) && (var_72 ->_flags & 8)) { if (var_72 ->_mode <= 0) ((struct __sue_23 *) var_72)->vtable = & _IO_file_jumps_maybe_mmap; else ((struct __sue_23 *) var_72)->vtable = & _IO_wfile_jumps_maybe_mmap; var_72 ->_wide_data->_wide_vtable = & _IO_wfile_jumps_maybe_mmap; } } if (var_72 ->_flags & 8192 U) _IO_un_link((struct __sue_23 *) var_72); if (var_72 ->_flags & 8192 U) status = _IO_file_close_it(var_72); else status = var_72 ->_flags & 32 U ? - 1 : 0; ((* (struct _IO_jump_t * *) ((void *) (& ((struct __sue_23 *) (var_72))->vtable) + (var_72)->_vtable_offset))->__finish)(var_72, 0); if (var_72 ->_mode <= 0) if (((var_72)->_IO_save_base != ((void *) 0))) _IO_free_backup_area(var_72); if (var_72 != ((struct __sue_23 *) (& _IO_2_1_stdin_)) && var_72 != ((struct __sue_23 *) (& _IO_2_1_stdout_)) && var_72 != ((struct __sue_23 *) (& _IO_2_1_stderr_))) { var_72 ->_flags = 0; free(var_72); } bytes_read = _IO_sgetn(var_72, (char *) var_81, bytes_requested); 36

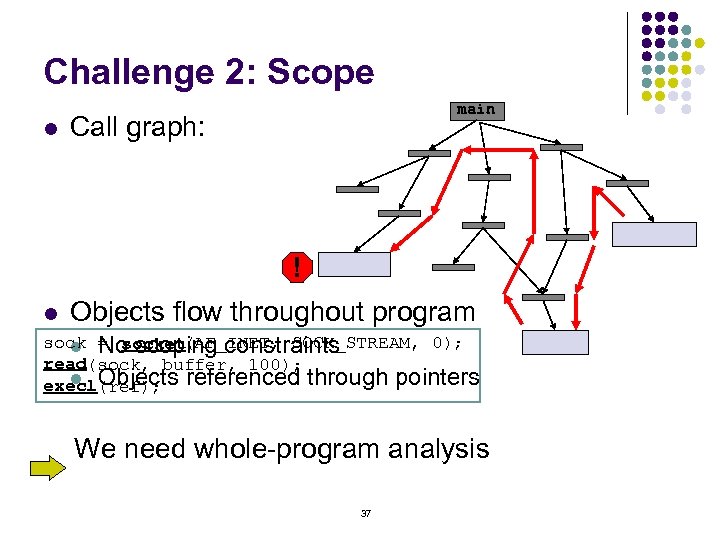

Challenge 2: Scope
[Call graph: main calls socket, read, and execl; "!" marks the error]
- Objects flow throughout the program

    sock = socket(AF_INET, SOCK_STREAM, 0);
    read(sock, buffer, 100);
    execl(ref, ref, (char *) NULL);

- No scoping constraints
- Objects are referenced through pointers
- We need whole-program analysis

Challenge 2: Scope l main Call graph: ! l Objects flow throughout program sock = (AF_INET, SOCK_STREAM, 0); socket l No scoping constraints read(sock, buffer, 100); l Objects execl(ref); referenced through pointers We need whole-program analysis 37

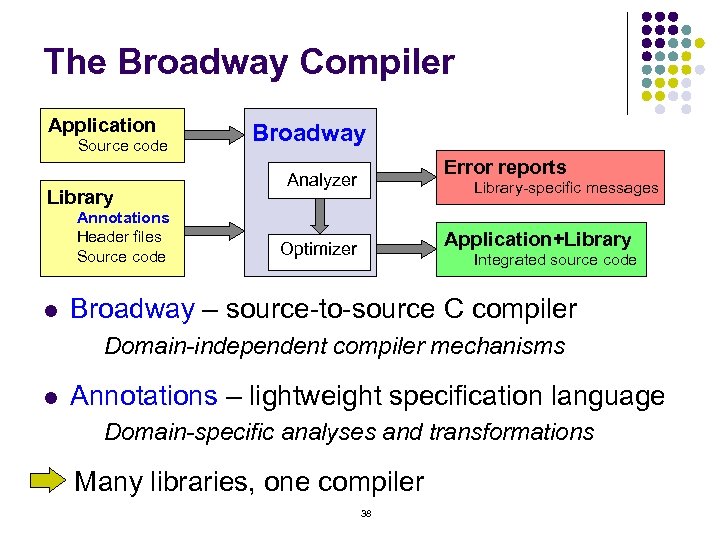

The Broadway Compiler
[Diagram: application source code and the library (annotations, header files, source code) enter Broadway; its Analyzer emits error reports with library-specific messages, and its Optimizer emits integrated application+library source code]
- Broadway: source-to-source C compiler
  - Domain-independent compiler mechanisms
- Annotations: lightweight specification language
  - Domain-specific analyses and transformations
  - Many libraries, one compiler

The Broadway Compiler Application Source code Library Annotations Header files Source code l Broadway Error reports Analyzer Library-specific messages Application+Library Optimizer Integrated source code Broadway – source-to-source C compiler Domain-independent compiler mechanisms l Annotations – lightweight specification language Domain-specific analyses and transformations Many libraries, one compiler 38

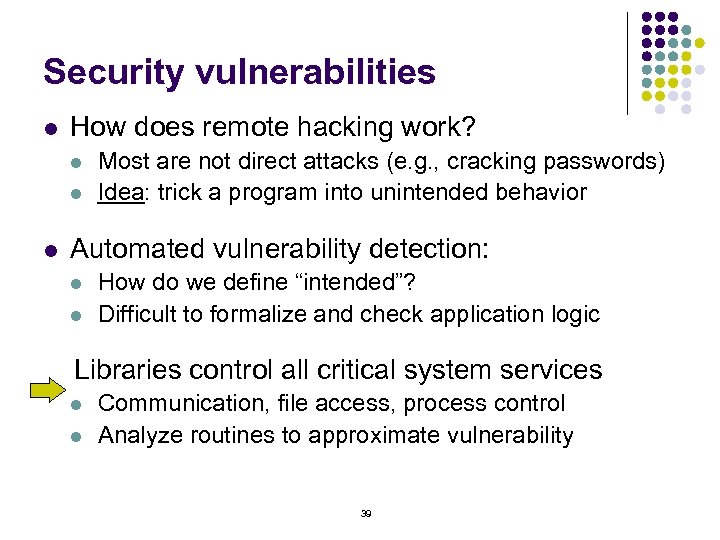

Security vulnerabilities
- How does remote hacking work?
  - Most attacks are not direct (e.g., cracking passwords)
  - Idea: trick a program into unintended behavior
- Automated vulnerability detection:
  - How do we define "intended"?
  - Application logic is difficult to formalize and check
- Libraries control all critical system services
  - Communication, file access, process control
  - Analyze library routines to approximate vulnerability

End of backup slides
Conditions
[Diagram fragments: if (cond); x = ...; ... = f( , )]
