8876c7b8cc5fc02803b3c1a241295968.ppt

- Number of slides: 25

CLARIN-PL
A Large Wordnet-based Sentiment Lexicon for Polish
Monika Zaśko-Zielińska, University of Wrocław
Maciej Piasecki, Wrocław University of Technology, G4.19 Research Group
Stanisław Szpakowicz, University of Ottawa

Background: plWordNet 2.3
§ a comprehensive description of the lexico-semantic system of Polish
§ a rich set of lexico-semantic relations
§ the largest wordnet in the world: 171,000 lemmas, 244,000 word senses, 184,000 synsets
§ most of it (150,000 synsets) manually mapped to Princeton WordNet 3.1
§ mapped to SUMO
§ includes enWordNet 0.1: WordNet 3.1 extended with 8,000 lemmas and 9,000 word senses
§ everything is available on open licences
RANLP 2015, Hissar, 2015-09-07, CLARIN-PL

Background
Impact
§ available as a Web page, a Web service, a mobile application, downloadable files and the WordnetLoom Viewer desktop application
§ hundreds of thousands of Web visits
§ > 700 registered users
  § scientific, commercial, educational
§ > 100 citations
§ WoSeDon: a Word Sense Disambiguation tool of practical accuracy
One significant limitation
§ no support for the crucially important area of sentiment analysis and opinion mining

Assumptions and Goal
Assumptions
§ wordnets are reference resources, relied upon to be free of lexical errors
§ automatic annotation of lexical material is not a viable option
§ plWordNet is much too large for complete, affordable manual annotation
Goal
§ to annotate manually a large portion of plWordNet with sentiment and basic emotions: around 30,000 lexical units, 15% (a scale several times larger than SentiWordNet)
§ as a pilot study
§ and to build a core for further automated expansion

Annotation Model: Principles
The basis: plWordNet
§ not based on a translation from Princeton WordNet
§ lexical units (lemma plus sense ID) are the basic building blocks
§ a synset groups lexical units which share relations
§ glosses and examples are assigned to lexical units
Lexical units are therefore a natural place for sentiment and emotion annotation.
Speaker perspective for annotation
§ it is difficult to describe sentiment polarity in isolation from context
§ too many factors govern sentiment perception
§ focus on the word sense intended by the speaker and the intended sentiment polarity
§ emotions which typify the source of the polarity

Annotation Model: Attributes
§ Sentiment polarity
§ Basic emotions
§ Fundamental values

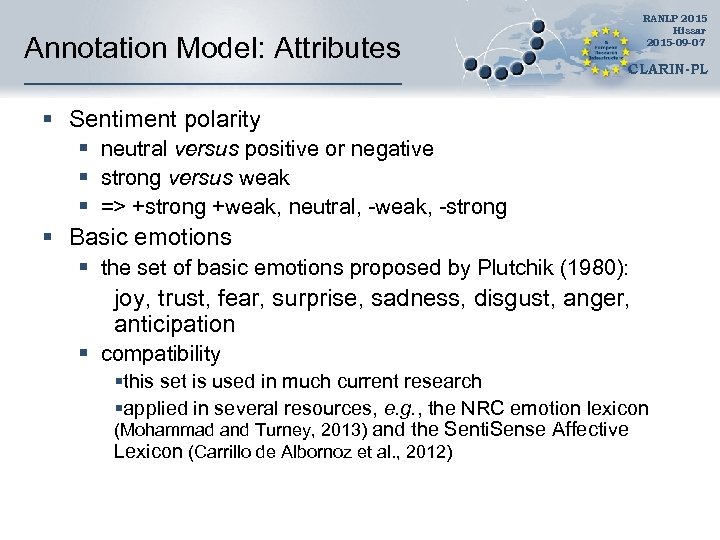

Annotation Model: Attributes
§ Sentiment polarity
  § neutral versus positive or negative
  § strong versus weak
  § => +strong, +weak, neutral, -weak, -strong
§ Basic emotions
  § the set of basic emotions proposed by Plutchik (1980): joy, trust, fear, surprise, sadness, disgust, anger, anticipation
  § compatibility
    § this set is used in much current research
    § it is applied in several resources, e.g., the NRC emotion lexicon (Mohammad and Turney, 2013) and the SentiSense Affective Lexicon (Carrillo de Albornoz et al., 2012)

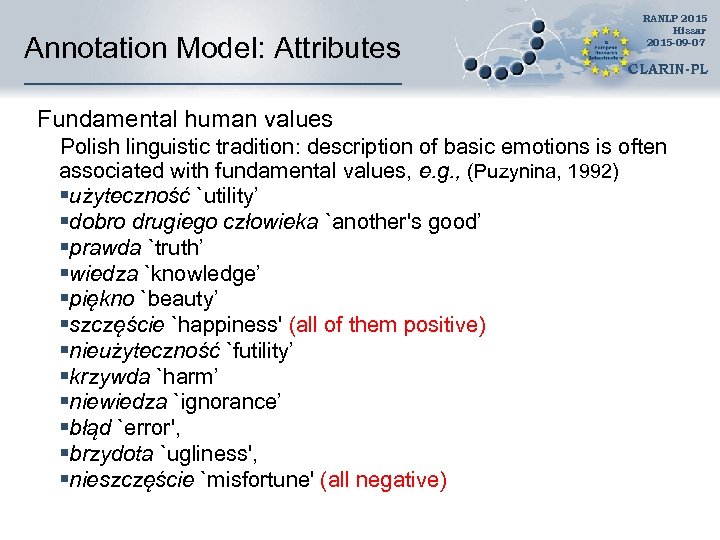
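The two attributes above can be pictured as closed value sets. A minimal Python sketch, assuming the abbreviations used in the statistics tables of this talk (-s, -w, n, +w, +s, plus amb for ambiguous units); the class and member names are illustrative and are not part of plWordNet's data format.

```python
from enum import Enum


class Polarity(Enum):
    """Sentiment polarity values; 'amb' is the extra value for ambiguous units."""
    STRONG_NEGATIVE = "-s"
    WEAK_NEGATIVE = "-w"
    NEUTRAL = "n"
    WEAK_POSITIVE = "+w"
    STRONG_POSITIVE = "+s"
    AMBIGUOUS = "amb"

    @property
    def is_marked(self) -> bool:
        # Every value except neutral marks the unit for sentiment.
        return self is not Polarity.NEUTRAL


class Emotion(Enum):
    """Plutchik's (1980) eight basic emotions."""
    JOY = "joy"
    TRUST = "trust"
    FEAR = "fear"
    SURPRISE = "surprise"
    SADNESS = "sadness"
    DISGUST = "disgust"
    ANGER = "anger"
    ANTICIPATION = "anticipation"


# Look a value up by its table abbreviation.
print(Polarity("+w"), Polarity("+w").is_marked)
print(len(Emotion))
```

Enumerations make an annotation with an out-of-scale value fail at construction time rather than later in the analysis.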

Annotation Model: Attributes
Fundamental human values
In the Polish linguistic tradition, the description of basic emotions is often associated with fundamental values, e.g., (Puzynina, 1992):
§ użyteczność ‘utility’
§ dobro drugiego człowieka ‘another's good’
§ prawda ‘truth’
§ wiedza ‘knowledge’
§ piękno ‘beauty’
§ szczęście ‘happiness’ (all of them positive)
§ nieużyteczność ‘futility’
§ krzywda ‘harm’
§ niewiedza ‘ignorance’
§ błąd ‘error’
§ brzydota ‘ugliness’
§ nieszczęście ‘misfortune’ (all negative)

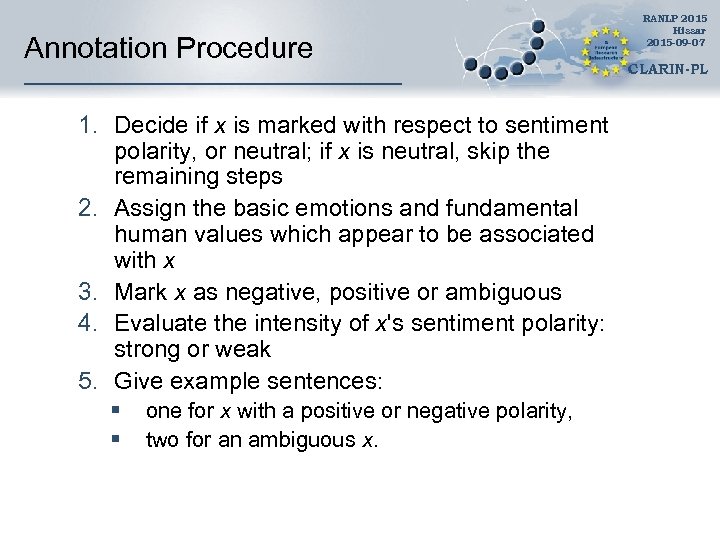
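The twelve values split evenly into six positive and six negative ones. A small Python sketch that stores each value with its Polish label and its sign, so the labels used in the annotation examples can be mapped back to a sign; the names `FundamentalValue` and `from_polish` are hypothetical.

```python
from enum import Enum


class FundamentalValue(Enum):
    """Puzynina's (1992) fundamental values as (Polish label, sign)."""
    UTILITY = ("użyteczność", +1)
    ANOTHERS_GOOD = ("dobro drugiego człowieka", +1)
    TRUTH = ("prawda", +1)
    KNOWLEDGE = ("wiedza", +1)
    BEAUTY = ("piękno", +1)
    HAPPINESS = ("szczęście", +1)
    FUTILITY = ("nieużyteczność", -1)
    HARM = ("krzywda", -1)
    IGNORANCE = ("niewiedza", -1)
    ERROR = ("błąd", -1)
    UGLINESS = ("brzydota", -1)
    MISFORTUNE = ("nieszczęście", -1)

    def __init__(self, polish: str, sign: int) -> None:
        # The tuple value is unpacked into these two attributes.
        self.polish = polish
        self.sign = sign

    @classmethod
    def from_polish(cls, label: str) -> "FundamentalValue":
        """Find a value by the Polish label used in the annotations."""
        for member in cls:
            if member.polish == label:
                return member
        raise KeyError(label)


print([v.name for v in FundamentalValue if v.sign > 0])
print(FundamentalValue.from_polish("krzywda").sign)
```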

Annotation Procedure
1. Decide if x is marked with respect to sentiment polarity, or neutral; if x is neutral, skip the remaining steps.
2. Assign the basic emotions and fundamental human values which appear to be associated with x.
3. Mark x as negative, positive or ambiguous.
4. Evaluate the intensity of x's sentiment polarity: strong or weak.
5. Give example sentences:
   § one for x with a positive or negative polarity,
   § two for an ambiguous x.

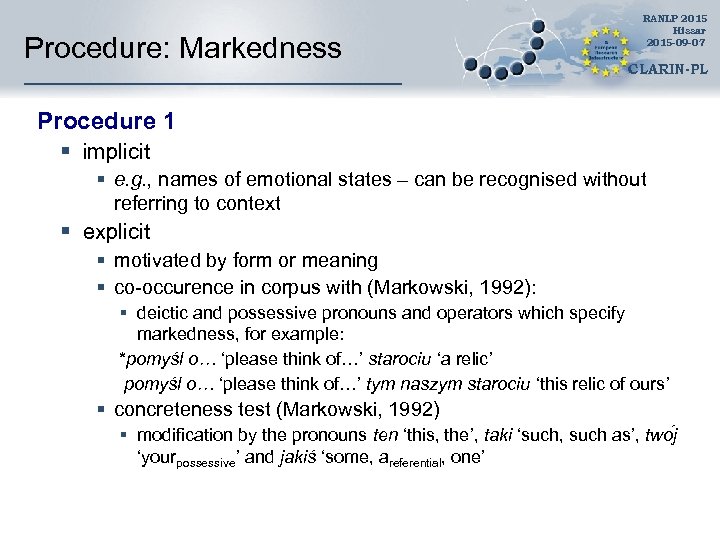
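The five steps imply a few consistency rules for a finished annotation. A minimal Python sketch, assuming a hypothetical record layout (`Annotation`, `problems`) and assuming that strength is recorded only for positive and negative units, since the statistics tables list `amb` without a strength.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Annotation:
    """One annotator's decisions for one lexical unit (hypothetical layout)."""
    lexical_unit: str
    marked: bool                                  # step 1
    emotions: set = field(default_factory=set)    # step 2
    values: set = field(default_factory=set)      # step 2
    polarity: Optional[str] = None                # step 3
    strength: Optional[str] = None                # step 4
    examples: list = field(default_factory=list)  # step 5


def problems(a: Annotation) -> list:
    """List the ways in which `a` departs from the five-step procedure."""
    if not a.marked:
        # Step 1: a neutral unit skips all remaining steps.
        if a.emotions or a.values or a.polarity or a.strength or a.examples:
            return ["step 1: a neutral unit must not carry further annotation"]
        return []
    issues = []
    if a.polarity not in ("negative", "positive", "ambiguous"):
        issues.append("step 3: polarity must be negative, positive or ambiguous")
    # Assumption: strength applies only to positive and negative units.
    if a.polarity in ("negative", "positive") and a.strength not in ("strong", "weak"):
        issues.append("step 4: strength must be 'strong' or 'weak'")
    needed = 2 if a.polarity == "ambiguous" else 1
    if len(a.examples) < needed:
        issues.append(f"step 5: at least {needed} example sentence(s) needed")
    return issues


draft = Annotation("starzec 1", marked=True, polarity="ambiguous",
                   examples=["Ten starzec wyglądał coraz gorzej, było mi go żal."])
print(problems(draft))
```

Emotions and values are not checked, because step 2 only asks for those which "appear to be associated" with x.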

Procedure: Markedness
Procedure 1
§ implicit
  § e.g., names of emotional states: can be recognised without referring to context
§ explicit
  § motivated by form or meaning
  § co-occurrence in a corpus (Markowski, 1992) with:
    § deictic and possessive pronouns and operators which specify markedness, for example: *pomyśl o starociu ‘please think of a relic’ (* marks an unacceptable form) versus pomyśl o tym naszym starociu ‘please think of this relic of ours’
  § concreteness test (Markowski, 1992)
    § modification by the pronouns ten ‘this, the’, taki ‘such, such as’, twój ‘your (possessive)’ and jakiś ‘some, one (non-referential)’

Procedure: Markedness
Procedure 2
§ the presence of pragmatic elements in the wordnet glosses for the analysed lexical units and in their definitions in various dictionaries
§ the presence of genre qualifiers in the wordnet glosses of the analysed lexical units
Result: neutral versus marked
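The gloss check in Procedure 2 amounts to looking for pragmatic qualifiers in the gloss text. A minimal Python sketch of that single signal, assuming a hypothetical, deliberately tiny qualifier list; its two entries are taken from the plWordNet glosses of szatan and bubek quoted under the test of dictionary definitions. It supports the annotator's decision and does not replace it.

```python
# Hypothetical qualifier list; both entries come from glosses quoted in this talk:
# "z podziwem" 'admiringly' (szatan) and "z niechęcią" 'with dislike' (bubek).
PRAGMATIC_QUALIFIERS = ("z podziwem", "z niechęcią")


def gloss_signals_markedness(gloss: str, qualifiers=PRAGMATIC_QUALIFIERS) -> bool:
    """Return True if the gloss contains one of the given pragmatic qualifiers."""
    text = gloss.lower()
    return any(q in text for q in qualifiers)


print(gloss_signals_markedness(
    "z podziwem o człowieku bardzo zdolnym, sprytnym, odważnym"))
print(gloss_signals_markedness("stary mężczyzna"))
```

A real list would also cover genre qualifiers, which are not spelled out on the slides.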

Procedure: Emotions and Values
§ A supplementary step in relation to sentiment
§ Assignment of fundamental values was optional but rarely omitted
§ Complex emotions are expressed by combinations of the basic ones
§ Perfect agreement was not expected, but the overlap was very high, e.g., for one lexical unit (A1, A2: the two annotators; emotions first, then values):
  § A1: {smutek ‘sadness’, wstręt ‘disgust’}; {nieużyteczność ‘futility’, niewiedza ‘ignorance’}
  § A2: {smutek ‘sadness’, złość ‘anger’, wstręt ‘disgust’}; {nieużyteczność ‘futility’, niewiedza ‘ignorance’}
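The slide does not name an overlap measure. One common choice for comparing label sets is Jaccard similarity (size of the intersection divided by size of the union); the Python sketch below applies it to the A1/A2 example, as an illustration rather than the measure used in the project.

```python
def jaccard(a: set, b: set) -> float:
    """Size of the intersection over size of the union; two empty sets agree fully."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# The two annotators' choices from the example (A1, A2).
a1_emotions = {"smutek", "wstręt"}
a2_emotions = {"smutek", "złość", "wstręt"}
a1_values = {"nieużyteczność", "niewiedza"}
a2_values = {"nieużyteczność", "niewiedza"}

print(jaccard(a1_emotions, a2_emotions))  # 2 shared labels out of 3 distinct ones
print(jaccard(a1_values, a2_values))      # identical sets
```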

Procedure: Sentiment polarity
1. Congruence test
2. Discord test
3. Test of collocations
4. Test of dictionary definitions
Congruence test
§ all occurrences of the given lexical unit x (not a lemma/word) in the usage examples should have the same sentiment polarity as that considered for x
§ co-occurring adjectives, nouns and verbs do not change the polarity value, but support it
§ diverse examples for the value ambiguous
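The congruence test can be read as a check of the candidate value against the polarity of each usage example of x. A minimal Python sketch, assuming each example has already been labelled 'positive', 'negative' or 'neutral' by the annotator and reading "diverse examples" as at least one positive and one negative use; the function name is hypothetical.

```python
def congruence_test(candidate: str, example_polarities: list) -> bool:
    """Check a candidate polarity of x against the polarity of its usage examples."""
    seen = set(example_polarities)
    if candidate == "ambiguous":
        # Assumption: ambiguity needs both a positive and a negative use.
        return {"positive", "negative"} <= seen
    # Every occurrence of x must carry exactly the candidate polarity.
    return bool(seen) and seen == {candidate}


print(congruence_test("negative", ["negative", "negative"]))
print(congruence_test("ambiguous", ["positive", "negative"]))
```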

Procedure: Sentiment polarity
Discord test
§ the presence of a proper antonymy link between the lexical unit considered and some other lexical units with clear sentiment polarity
§ e.g., nadzieja ‘hope’ [positive] – rozczarowanie ‘disappointment’ [negative]
Test of collocations
§ words included in collocations for the given lexical units are examined with respect to their sentiment polarity
§ the strength of the observed tendency is taken into account

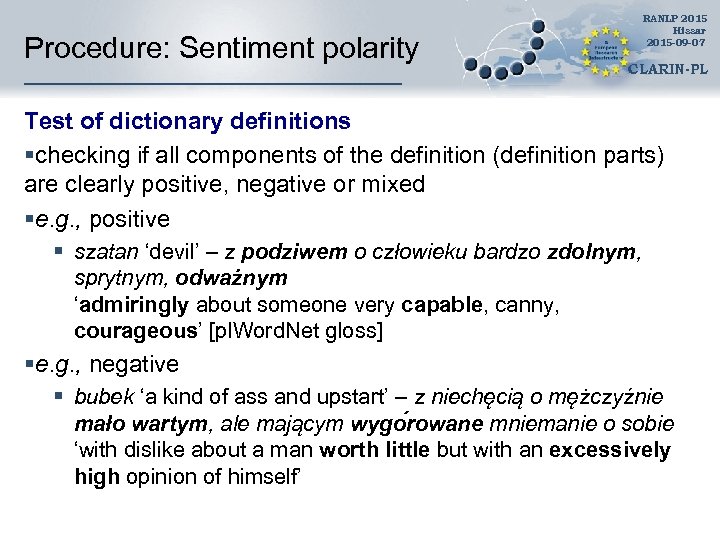
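Both tests reduce to simple checks once the related units have polarity labels. A minimal Python sketch, assuming the polarities of the antonyms and of the collocates are already known; measuring the "strength of the observed tendency" as the share of the dominant polarity among marked collocates is our assumption, and the function names are hypothetical.

```python
from collections import Counter

OPPOSITE = {"positive": "negative", "negative": "positive"}


def discord_test(candidate: str, antonym_polarities: list) -> bool:
    """Supported if some antonym of x has the clear opposite polarity."""
    return OPPOSITE.get(candidate) in antonym_polarities


def collocation_tendency(collocate_polarities: list) -> tuple:
    """Dominant polarity among the collocates of x and its share.

    Neutral collocates are ignored; with no marked collocates the result
    is ('neutral', 0.0)."""
    marked = [p for p in collocate_polarities if p in OPPOSITE]
    if not marked:
        return ("neutral", 0.0)
    label, count = Counter(marked).most_common(1)[0]
    return (label, count / len(marked))


# nadzieja 'hope' considered positive; its antonym rozczarowanie 'disappointment' is negative.
print(discord_test("positive", ["negative"]))
print(collocation_tendency(["negative", "negative", "positive", "neutral"]))
```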

Procedure: Sentiment polarity
Test of dictionary definitions
§ checking whether all components of the definition (definition parts) are clearly positive, clearly negative, or mixed
§ e.g., positive
  § szatan ‘devil’ – z podziwem o człowieku bardzo zdolnym, sprytnym, odważnym ‘admiringly about someone very capable, canny, courageous’ [plWordNet gloss]
§ e.g., negative
  § bubek ‘a kind of ass and upstart’ – z niechęcią o mężczyźnie mało wartym, ale mającym wygórowane mniemanie o sobie ‘with dislike about a man worth little but with an excessively high opinion of himself’

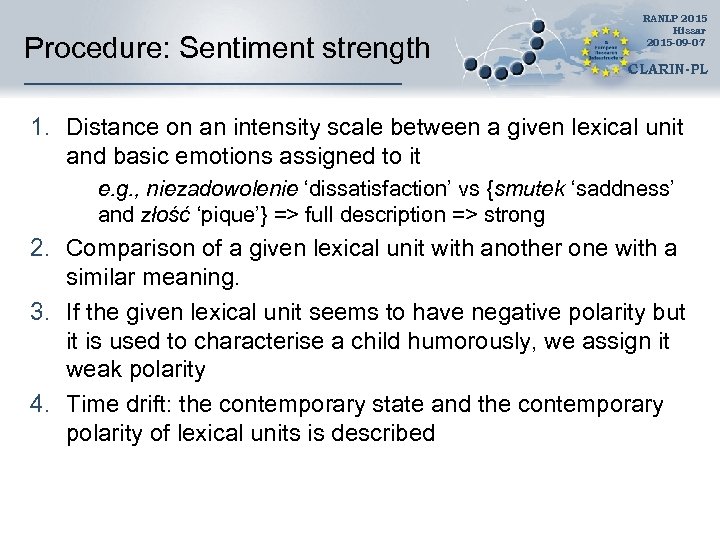
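The definition test classifies a gloss by the polarity of its parts. A minimal Python sketch, assuming the gloss has been split into parts and each part hand-labelled; treating parts without clear polarity as irrelevant is our assumption. The labels for the szatan gloss below are illustrative only.

```python
def definition_test(part_polarities: list) -> str:
    """Classify a gloss from the polarities of its parts.

    Assumption: 'neutral' parts are ignored; all-positive gives 'positive',
    all-negative gives 'negative', any other combination gives 'mixed'."""
    marked = {p for p in part_polarities if p != "neutral"}
    if marked == {"positive"}:
        return "positive"
    if marked == {"negative"}:
        return "negative"
    return "mixed" if marked else "neutral"


# szatan gloss split into parts, hand-labelled for illustration only:
# z podziwem | o człowieku | bardzo zdolnym | sprytnym | odważnym
szatan = ["positive", "neutral", "positive", "positive", "positive"]
print(definition_test(szatan))
```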

Procedure: Sentiment strength
1. Distance on an intensity scale between a given lexical unit and the basic emotions assigned to it, e.g., niezadowolenie ‘dissatisfaction’ vs {smutek ‘sadness’, złość ‘pique’} => full description => strong
2. Comparison of a given lexical unit with another one with a similar meaning
3. If the given lexical unit seems to have negative polarity but is used humorously to characterise a child, it is assigned weak polarity
4. Time drift: the present-day meaning and polarity of lexical units are described

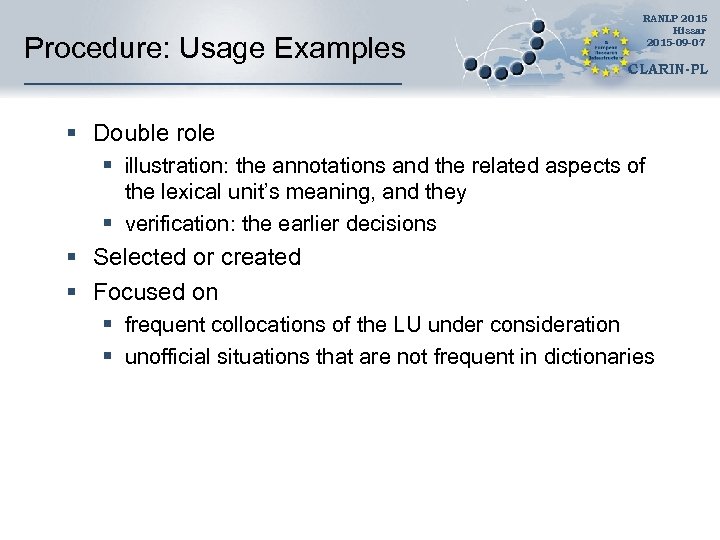

Procedure: Usage Examples
§ Double role
  § illustration of the annotations and of the related aspects of the lexical unit’s meaning
  § verification of the earlier decisions
§ Selected or created
§ Focused on
  § frequent collocations of the LU under consideration
  § informal situations, which are rarely represented in dictionaries

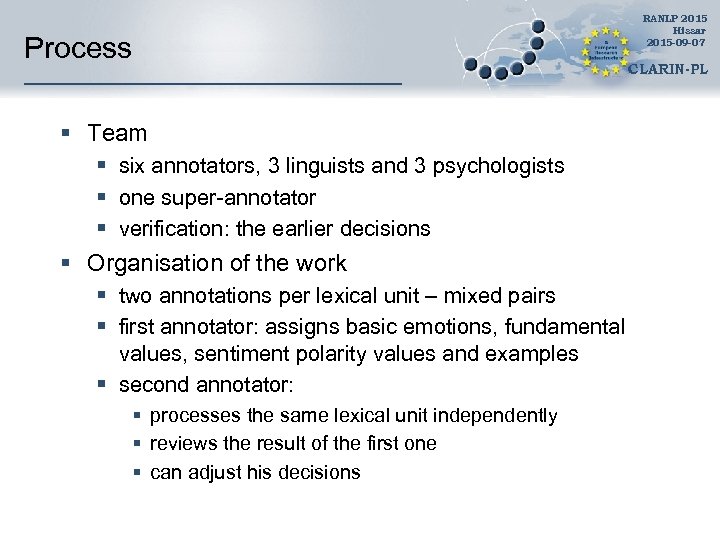

Process
§ Team
  § six annotators: 3 linguists and 3 psychologists
  § one super-annotator
§ Organisation of the work
  § two annotations per lexical unit, by mixed pairs
  § first annotator: assigns basic emotions, fundamental values, sentiment polarity values and examples
  § second annotator:
    § processes the same lexical unit independently
    § reviews the result of the first one
    § can then adjust her own decisions

Process
§ Organisation of the work
  § if the second annotator disagreed, a report went to the coordinator
  § if the coordinator found an annotator’s error, a re-analysis was requested
  § if a potential error was found in plWordNet, the annotation of the given lexical unit was suspended until the error had been corrected
§ Scope
  § Nouns
    § hypernymy subgraphs most significant for sentiment polarity:
    § affect, feelings and emotions, people, features of people and animals, events evaluated as negative
  § Selected adjectives

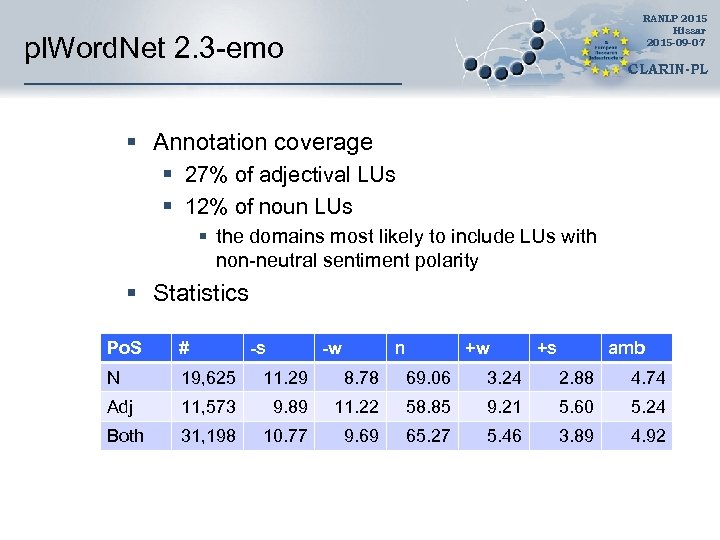
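The decision flow for one lexical unit can be summarised as a small routing function. A Python sketch under stated assumptions: the outcome names are hypothetical, the two flags stand for the coordinator's findings, and checking a plWordNet error first is our reading of "suspended till the error has been corrected".

```python
from enum import Enum, auto


class Outcome(Enum):
    ACCEPTED = auto()
    REPORTED = auto()      # disagreement reported to the coordinator
    REANALYSIS = auto()    # coordinator found an annotator's error
    SUSPENDED = auto()     # waiting for a plWordNet correction


def route(first: str, second: str, *, annotator_error: bool = False,
          wordnet_error: bool = False) -> Outcome:
    """Route one lexical unit through the two-annotator workflow.

    `first` and `second` are the two annotators' decisions, e.g. polarity labels."""
    if wordnet_error:
        # Annotation waits until the error in plWordNet has been corrected.
        return Outcome.SUSPENDED
    if first == second:
        return Outcome.ACCEPTED
    if annotator_error:
        return Outcome.REANALYSIS
    return Outcome.REPORTED


print(route("-w", "-s"))
print(route("-w", "-s", annotator_error=True))
```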

plWordNet 2.3-emo
§ Annotation coverage
  § 27% of adjectival LUs
  § 12% of noun LUs
  § in the domains most likely to include LUs with non-neutral sentiment polarity
§ Statistics (# = number of annotated LUs; the other columns give the share of each polarity value, in %)

| PoS | # | -s | -w | n | +w | +s | amb |
|---|---|---|---|---|---|---|---|
| N | 19,625 | 11.29 | 8.78 | 69.06 | 3.24 | 2.88 | 4.74 |
| Adj | 11,573 | 9.89 | 11.22 | 58.85 | 9.21 | 5.60 | 5.24 |
| Both | 31,198 | 10.77 | 9.69 | 65.27 | 5.46 | 3.89 | 4.92 |
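Because the Both row pools nouns and adjectives, each of its percentages should be the count-weighted average of the N and Adj rows. A short Python check using only the figures from the statistics table; the names `ROWS` and `pooled_percentages` are ours.

```python
# Counts and polarity percentages per part of speech, from the statistics table.
COLUMNS = ("-s", "-w", "n", "+w", "+s", "amb")
ROWS = {
    "N": (19_625, (11.29, 8.78, 69.06, 3.24, 2.88, 4.74)),
    "Adj": (11_573, (9.89, 11.22, 58.85, 9.21, 5.60, 5.24)),
}


def pooled_percentages(rows):
    """Total count and count-weighted average of each percentage column."""
    total = sum(count for count, _ in rows.values())
    pooled = tuple(
        sum(count * shares[j] for count, shares in rows.values()) / total
        for j in range(len(COLUMNS))
    )
    return total, pooled


total, both = pooled_percentages(ROWS)
print(total)
for name, share in zip(COLUMNS, both):
    print(f"{name:>4}: {share:6.2f}")
```

Every recomputed value lies within 0.01 percentage points of the published Both row; the small differences come from rounding in the per-PoS percentages.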

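plWordNet 2.3-emo: Examples
§ Ambiguous: {starzec 1, staruszek 1, dziadek 1} (per), gloss: “stary mężczyzna” ‘old man’
  § A1: {zaufanie ‘trust’, smutek ‘sadness’, złość ‘anger’; dobro ‘(another's) good’, wiedza ‘knowledge’, nieużyteczność ‘futility’, nieszczęście ‘misfortune’} amb
    [Chętnie pomagam temu starcowi, ponieważ zawsze opowiada mi niezwykłe historie z lat swej młodości.] ‘I gladly help this old man, because he always tells me remarkable stories from his youth.’
    [Ten starzec wyglądał coraz gorzej, było mi go żal.] ‘This old man looked worse and worse; I felt sorry for him.’
  § A2: {zaufanie ‘trust’, smutek ‘sadness’, wstręt ‘disgust’; wiedza ‘knowledge’, nieużyteczność ‘futility’, brzydota ‘ugliness’} amb
    [W pierwszym rzędzie, tuż przed ołtarzem, zasiadł nobliwy starzec - gość biskupa.] ‘In the first row, right in front of the altar, sat a dignified old man, the bishop’s guest.’
    [Jadwiga szukała sposobu, jak może pomóc sponiewieranemu, ubogiemu starcowi.] ‘Jadwiga was looking for a way to help the mistreated, poor old man.’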

plWordNet 2.3-emo: Examples
§ Ambiguous: {starzec 1, staruszek 1, dziadek 1} (per), gloss: “stary mężczyzna” ‘old man’
  § A1: {złość ‘anger’, wstręt ‘disgust’; nieużyteczność ‘futility’, niewiedza ‘ignorance’} -m (mocny, ‘-strong’)
    [Stary dziad nie powinien podrywać młodych dziewczyn.] ‘An old geezer shouldn’t be chatting up young girls.’
  § A2: {wstręt ‘disgust’; nieużyteczność ‘futility’, brzydota ‘ugliness’} -s (słaby, ‘-weak’)
    [Jakiś dziad się dosiadł do naszego przedziału i wyciągnął śmierdzące kanapki z jajkiem.] ‘Some old geezer joined us in our compartment and took out smelly egg sandwiches.’
  § A3: {wstręt ‘disgust’; nieużyteczność ‘futility’, brzydota ‘ugliness’} -s (słaby, ‘-weak’)
    [Kilkanaście lat minęło i zrobił się z niego stary dziad.] ‘A dozen or so years went by and he turned into an old geezer.’

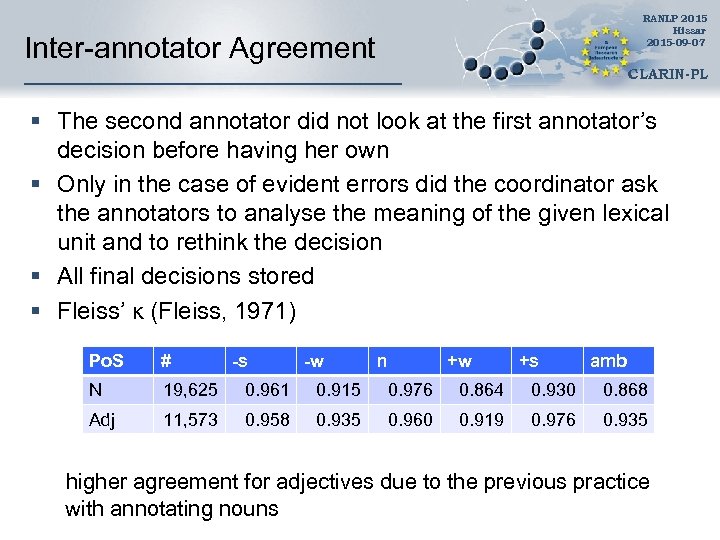

Inter-annotator Agreement
§ The second annotator did not look at the first annotator’s decision before making her own
§ Only in the case of evident errors did the coordinator ask the annotators to re-analyse the meaning of the given lexical unit and to rethink the decision
§ All final decisions were stored
§ Fleiss’ κ (Fleiss, 1971) per polarity value

| PoS | # | -s | -w | n | +w | +s | amb |
|---|---|---|---|---|---|---|---|
| N | 19,625 | 0.961 | 0.915 | 0.976 | 0.864 | 0.930 | 0.868 |
| Adj | 11,573 | 0.958 | 0.935 | 0.960 | 0.919 | 0.976 | 0.935 |

Agreement is mostly higher for adjectives, owing to the annotators' earlier practice with nouns.
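For reference, a minimal Python sketch of Fleiss' κ: the overall coefficient and the category-specific κ that Fleiss (1971) also defines. The slides do not say exactly how the per-value figures were computed, so reading them as category-specific κ is an assumption, and the toy data below is invented for illustration, not taken from the project.

```python
def fleiss_kappa(counts):
    """Overall Fleiss' kappa; counts[i][j] = raters who put item i in category j."""
    N, n, k = len(counts), sum(counts[0]), len(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # P_i: proportion of agreeing rater pairs on item i
    P = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar, P_e = sum(P) / N, sum(pj * pj for pj in p)
    if P_e == 1:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (P_bar - P_e) / (1 - P_e)


def category_kappa(counts, j):
    """Fleiss' (1971) kappa for category j alone."""
    N, n = len(counts), sum(counts[0])
    p_j = sum(row[j] for row in counts) / (N * n)
    if p_j in (0.0, 1.0):
        raise ValueError("category kappa is undefined for p_j = 0 or 1")
    disagreement = sum(row[j] * (n - row[j]) for row in counts)
    return 1 - disagreement / (N * n * (n - 1) * p_j * (1 - p_j))


def to_counts(labels_per_item, categories):
    """Turn per-item label lists (one label per annotator) into a count matrix."""
    return [[labels.count(c) for c in categories] for labels in labels_per_item]


# Toy data (not from the project): two annotators, four lexical units.
CATEGORIES = ["-s", "-w", "n"]
toy = to_counts([["n", "n"], ["-s", "-s"], ["-s", "-w"], ["n", "n"]], CATEGORIES)
print(round(fleiss_kappa(toy), 3))
print([round(category_kappa(toy, j), 3) for j in range(len(CATEGORIES))])
```

With two annotators per unit, as in this project, each row of the count matrix sums to 2.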

Conclusions
§ A first, important step towards sentiment annotation of the whole plWordNet
§ The size achieved is very large in comparison with other manual annotation projects
§ A good starting point for algorithms that spread sentiment polarity across the wordnet
§ Because plWordNet was developed independently of Princeton WordNet (PWN), it might be interesting to compare our annotation with the automatic annotation added to PWN in other projects

Thank you very much for your attention!
http://www.clarin-pl.eu
Supported by the Polish Ministry of Science and Higher Education [CLARIN-PL]
