- Number of slides: 46

Chen on Program Theory and Apt Evaluation Methods: Applications to Team Read

PA 522, Professor Mario Rivera

Articulating and testing program theory

- Chen addresses the role of stakeholders with respect to program theory by positing two kinds of validity: scientific validity and stakeholder validity.
- One can determine a program's change and action models by reviewing existing program documents and interviewing program principals and stakeholders. Evaluators may also facilitate discussions on topics such as strategic planning, logic models, and change models.
- Discussion of program theory entails forward and backward reasoning: either (1) projecting forward from program premises ("feed-forward") or (2) reasoning back from actual or desired program outcomes ("feedback").
- An action model may be articulated in facilitated discussion, then distributed to stakeholders for further consideration.
- The evaluation design will incorporate the explicitly articulated program theory, so that single or multiple program stages can be tested against it.

Evaluation and threats to internal and external validity

Are you sure that it is the program that caused the effect?

- Typical concerns: secular trends, program history (attrition, turnover), testing effects, regression to the mean, etc.
- Mixed-methods evaluation can help uncover complex causal relationships. As with Team Read, evaluating for multiple purposes and at multiple levels at the same time (e.g., learning, self-efficacy, organizational/program capacity) may be uniquely revealing.
- Program evaluation for partnered programs often means assessment along several lines of inquiry, and therefore also necessarily evaluating more inclusively than is usually the case.
- Formative evaluation occurs at the organizational and program levels (internal validity), while impacts are assessed more comprehensively, encompassing more actors (external validity).
- Context is important: the program environment, and broader strains of causation that include exogenous influences.

"Integrative Validity" (from Chen, Huey T., 2010. "The bottom-up approach to integrative validity: A new perspective for program evaluation," Evaluation and Program Planning, 33(3), pp. 205-214, August)

- "Evaluators and researchers have... increasingly recognized that in an evaluation, the over-emphasis on internal validity reduces that evaluation's usefulness and contributes to the gulf between academic and practical communities regarding interventions" (p. 205).
- Chen proposes an alternative, integrative validity model for program evaluation, premised on viability and on "bottom-up" incorporation of stakeholders' views and concerns. The integrative validity model and the bottom-up approach enable evaluators to meet scientific and practical requirements, help them advance external validity, and give them a new perspective on methods.
- For integrative validity to obtain, stakeholders must be centrally involved. This is consistent with Chen's emphasis on addressing both scientific and stakeholder validity.
- Was there sufficient stakeholder involvement in the Team Read evaluation?

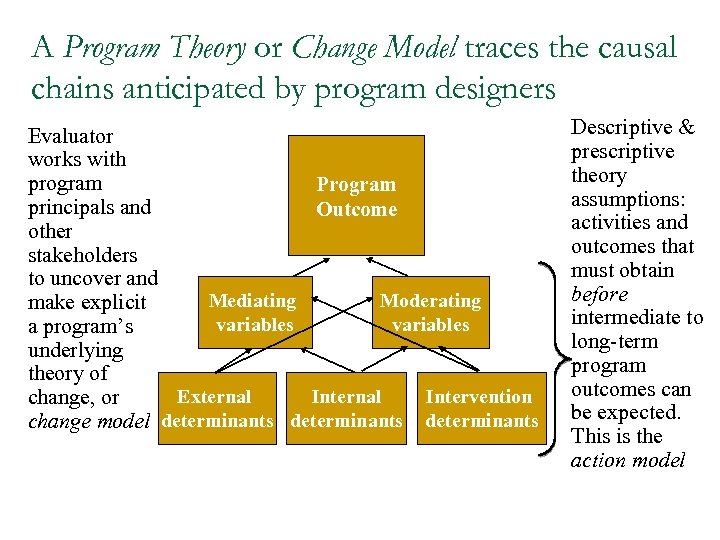

A Program Theory or Change Model traces the causal chains anticipated by program designers

- The evaluator works with program principals and other stakeholders to uncover and make explicit a program's underlying theory of change, or change model.
- (Diagram labels: Program; Intervention; Determinants; External; Mediating variables; Moderating variables; Outcome.)
- Descriptive and prescriptive theory assumptions: the activities and outcomes that must obtain before intermediate to long-term program outcomes can be expected. This is the action model.

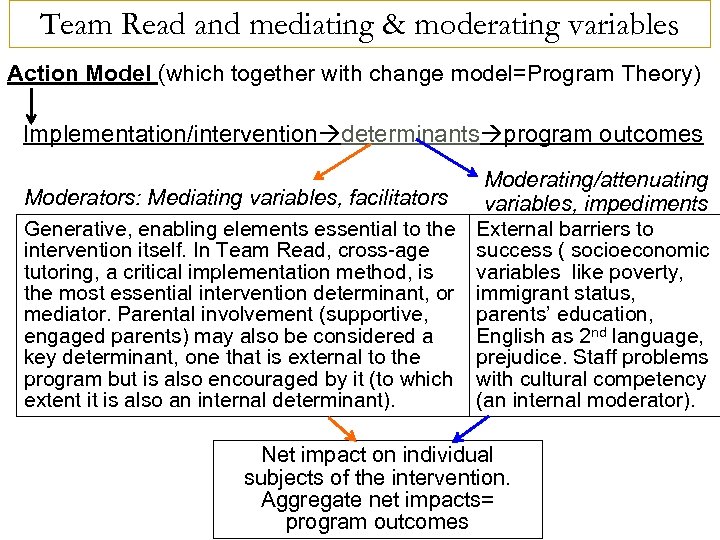

Team Read and mediating & moderating variables

Action model (which, together with the change model, makes up the program theory). Diagram labels: implementation/intervention determinants; program outcomes.

- Mediating variables, facilitators: generative, enabling elements essential to the intervention itself. In Team Read, cross-age tutoring, a critical implementation method, is the most essential intervention determinant, or mediator. Parental involvement (supportive, engaged parents) may also be considered a key determinant, one that is external to the program but is also encouraged by it (to that extent it is also an internal determinant).
- Moderating/attenuating variables, impediments. Moderators: external barriers to success (socioeconomic variables like poverty or immigrant status, parents' education, English as a second language, prejudice), as well as staff problems with cultural competency (an internal moderator).
- Net impact on individual subjects of the intervention; aggregate net impacts = program outcomes.

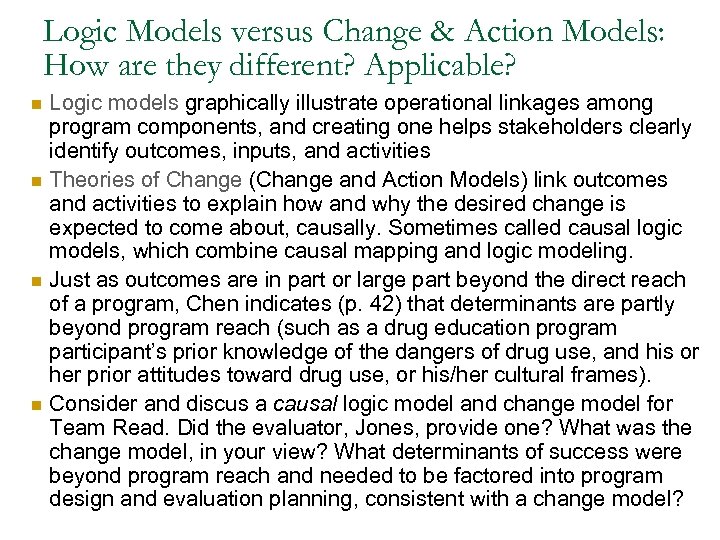

Logic Models versus Change & Action Models: How are they different? Are they applicable?

- Logic models graphically illustrate operational linkages among program components, and creating one helps stakeholders clearly identify outcomes, inputs, and activities.
- Theories of change (change and action models) link outcomes and activities to explain how and why the desired change is expected to come about, causally. They are sometimes called causal logic models, since they combine causal mapping and logic modeling.
- Just as outcomes are partly or largely beyond the direct reach of a program, Chen indicates (p. 42) that determinants are partly beyond program reach (for example, a drug education program participant's prior knowledge of the dangers of drug use, his or her prior attitudes toward drug use, or his or her cultural frames).
- Consider and discuss a causal logic model and change model for Team Read. Did the evaluator, Jones, provide one? What was the change model, in your view? What determinants of success were beyond program reach and needed to be factored into program design and evaluation planning, consistent with a change model?

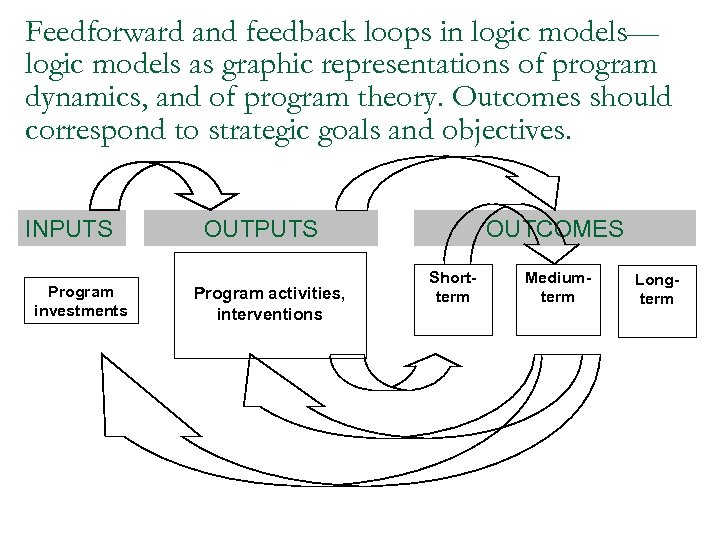

Feedforward and feedback loops in logic models: logic models as graphic representations of program dynamics and of program theory. Outcomes should correspond to strategic goals and objectives.

(Diagram: INPUTS (program investments) → OUTPUTS (program activities, interventions) → OUTCOMES (short-term, medium-term, long-term).)

Intervening Variables, Confounding Factors

- Intervening variables can take various forms:
  - direct and indirect effects of one's intervention (for instance, stakeholder support or resistance);
  - other interventions, messages, or influences;
  - interactions among variables.
- Reciprocal relations among variables involve mutual causation (for example, the interaction between [1] lack of parental inclusion and [2] the limited effectiveness of tutoring in the Team Read case).
- How context-dependent is the intervention? The more complex it is, the more dependent on context both program implementation and program evaluation are (cf. Holden text). Why would that be? Many interventions are very sensitive to context and can be implemented successfully only if adapted or changed to suit the social, political, and other dimensions of their context.

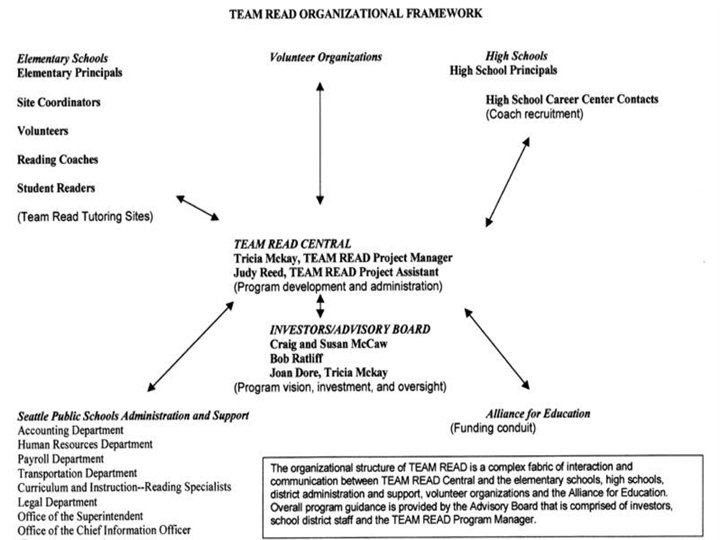

Evaluating complex, partnered programs

- Program evaluation in collaborative/partnership contexts: questions pertinent to Team Read:
  - Does it matter to the functioning and success of a program that it involves different sectors, settings, stakeholders, and standards? What is the role of funders such as foundations?
  - How do we determine whether partnerships have been strengthened or new linkages formed as a result of a particular program? How can we evaluate the development of organizational capacity along with program capacity and outcomes?
  - To what extent have program managers and evaluators consulted with each other and with key constituencies and stakeholders in establishing goals and designing programs?
- In after-school programs, working partnerships between teachers and after-school personnel, and between these and parents, are essential.

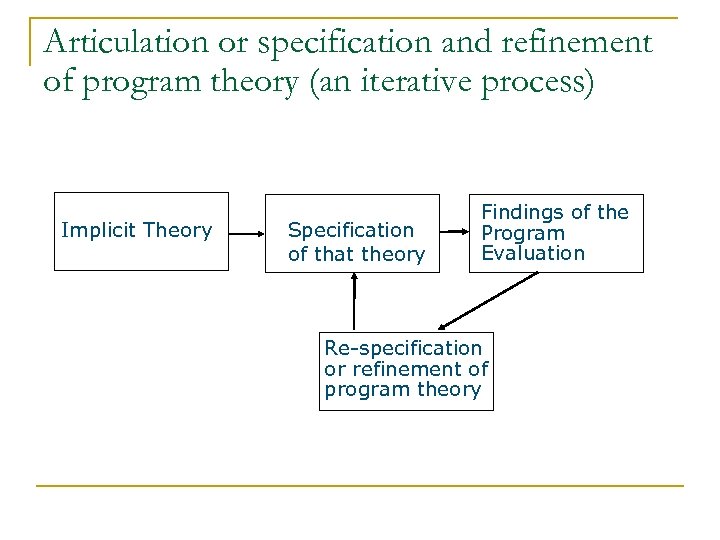

Articulation or specification and refinement of program theory (an iterative process)

Implicit theory → specification of that theory → findings of the program evaluation → re-specification or refinement of program theory

Team Read Evaluation

- Evaluation conducted by Margo Jones, a statistician.
- 17 schools were in the program by June 2000, but only 10 were used in the evaluation.
- Three main goals or objectives relating to Team Read's impact:
  - Goal #1: Do the reading skills of the student readers improve significantly during their participation in the Team Read program?
  - Goal #2: How does the program affect the reading coaches? How do the coaches see the program?
  - Goal #3: What is working well, and what can be improved?

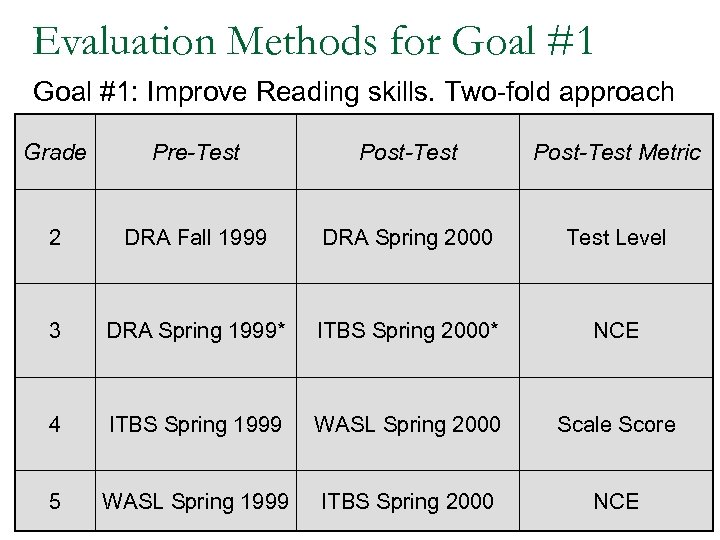

Evaluation Methods for Goal #1: Improve reading skills (two-fold approach)

| Grade | Pre-Test | Post-Test | Metric |
|---|---|---|---|
| 2 | DRA Fall 1999 | DRA Spring 2000 | Test Level |
| 3 | DRA Spring 1999* | ITBS Spring 2000* | NCE |
| 4 | ITBS Spring 1999 | WASL Spring 2000 | Scale Score |
| 5 | WASL Spring 1999 | ITBS Spring 2000 | NCE |
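
Grades 3 and 5 in this comparison report results in NCEs (normal curve equivalents). As background only, and not as a reconstruction of Jones's procedure: an NCE maps a percentile rank onto an equal-interval normal scale with mean 50 and standard deviation 21.06, so that NCEs of 1, 50, and 99 coincide with percentile ranks 1, 50, and 99. Because the scale is equal-interval, NCE differences can be averaged in a way percentile differences cannot, but only when both scores come from the same normed test. A minimal Python sketch of the conversion (the function and constant names are illustrative):

```python
from statistics import NormalDist

# Conventional NCE scale: mean 50, SD 21.06, chosen so that NCEs of
# 1, 50, and 99 line up with percentile ranks 1, 50, and 99.
NCE_MEAN = 50.0
NCE_SD = 21.06
STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def percentile_to_nce(percentile_rank: float) -> float:
    """Convert a percentile rank (strictly between 0 and 100) to an NCE."""
    if not 0 < percentile_rank < 100:
        raise ValueError("percentile rank must be strictly between 0 and 100")
    z = STANDARD_NORMAL.inv_cdf(percentile_rank / 100)  # normal deviate for that rank
    return NCE_MEAN + NCE_SD * z


def nce_to_percentile(nce: float) -> float:
    """Inverse conversion: map an NCE back to a percentile rank."""
    z = (nce - NCE_MEAN) / NCE_SD
    return 100 * STANDARD_NORMAL.cdf(z)


for rank in (10, 25, 50, 75, 90):
    print(rank, round(percentile_to_nce(rank), 1))
```

For example, a student at the 25th percentile sits at an NCE of about 35.8, so moving to the 50th percentile is a gain of roughly 14 NCE points.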

Jones was comparing apples and oranges

(Slide figure: the grade 2-5 Pre-Test / Post-Test / Metric table, repeated.)

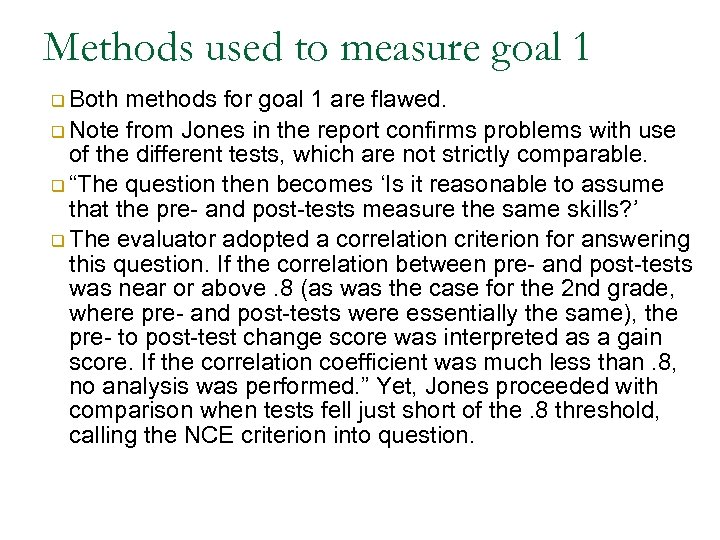

Methods used to measure Goal #1

- Both methods for Goal #1 are flawed.
- A note from Jones in the report confirms problems with the use of different tests, which are not strictly comparable: "The question then becomes 'Is it reasonable to assume that the pre- and post-tests measure the same skills?' The evaluator adopted a correlation criterion for answering this question. If the correlation between pre- and post-tests was near or above .8 (as was the case for the 2nd grade, where pre- and post-tests were essentially the same), the pre- to post-test change score was interpreted as a gain score. If the correlation coefficient was much less than .8, no analysis was performed."
- Yet Jones proceeded with the comparison when tests fell just short of the .8 threshold, calling into question how consistently the correlation criterion was applied to the NCE comparisons.

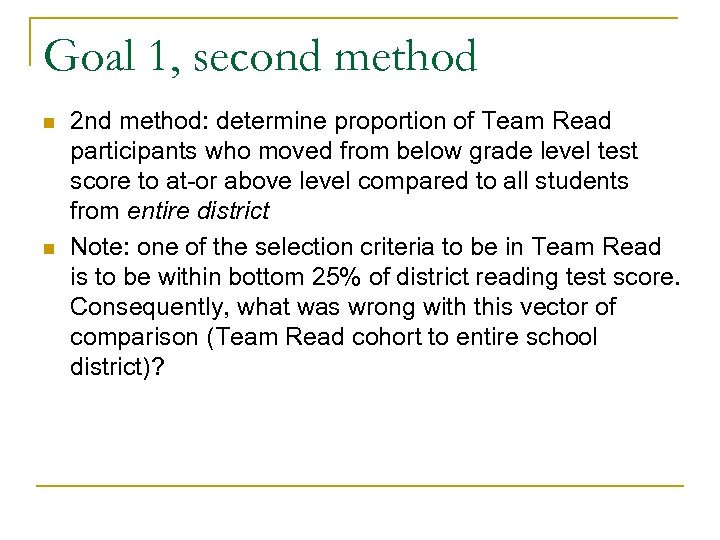

Goal #1, second method

- Second method: determine the proportion of Team Read participants who moved from a below-grade-level test score to at or above grade level, compared with all students in the entire district.
- Note: one of the selection criteria for Team Read is a reading test score in the bottom 25% of the district. Consequently, what was wrong with this vector of comparison (Team Read cohort versus the entire school district)?

Testing problems

- Major, obvious problems with the before-and-after testing approach used in the evaluation:
  - Pre- and post-tests do not measure the same skills except in the 2nd grade.
  - All Team Read participants are within the bottom quartile of reading test scores and should not be compared with students district-wide. They should instead be compared pre- and post-program in a cross-panel evaluation, if possible against lowest-quartile nonparticipants in Team Read (or in other after-school reading programs).
  - Analysis could not be carried out for some grades (3rd) due to incompatibility of reading tests.
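
A small simulation can show why the district-wide comparison misleads. The Python sketch below rests on hypothetical assumptions: normally distributed reading skill, independent measurement error on each test, enrollment drawn at random from the lowest pretest quartile, and a `true_effect` parameter for the program's real impact. It uses no Team Read data. Students selected for low pretest scores tend to score closer to the mean on retest (regression to the mean), so their gain relative to the whole district is inflated even when the program does nothing. Comparing them with lowest-quartile nonparticipants cancels that artifact.

```python
import random
from statistics import fmean


def simulate_evaluation(n=20_000, true_effect=0.0, noise_sd=10.0, seed=1):
    """Return (naive, comparison) estimates of the program effect.

    naive:      participants' mean gain minus the whole district's mean gain
    comparison: participants' mean gain minus lowest-quartile nonparticipants'
    """
    rng = random.Random(seed)
    skill = [rng.gauss(50, 15) for _ in range(n)]        # stable reading skill
    pre = [s + rng.gauss(0, noise_sd) for s in skill]    # pretest with measurement error
    post = [s + rng.gauss(0, noise_sd) for s in skill]   # posttest: fresh error, no general growth
    cutoff = sorted(pre)[n // 4]                          # 25th-percentile pretest score
    bottom = [i for i in range(n) if pre[i] < cutoff]     # eligible: lowest pretest quartile
    rng.shuffle(bottom)
    half = len(bottom) // 2
    participants, nonparticipants = bottom[:half], bottom[half:]
    for i in participants:
        post[i] += true_effect                            # the program's real impact

    def mean_gain(indices):
        return fmean(post[i] - pre[i] for i in indices)

    participant_gain = mean_gain(participants)
    naive = participant_gain - mean_gain(range(n))
    comparison = participant_gain - mean_gain(nonparticipants)
    return naive, comparison


naive, comparison = simulate_evaluation(true_effect=0.0)
print(f"naive estimate: {naive:.1f}, comparison-group estimate: {comparison:.1f}")
```

With `true_effect=0.0` the naive estimate comes out clearly positive while the comparison-group estimate stays near zero; with `true_effect=5.0` the comparison-group estimate moves to about 5.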

Evaluation Method, Goal #2

- Method: survey questionnaire given to reading coaches during their last week of coaching.
- Goal: to assess the impact of Team Read on coaches.
- What are some built-in weaknesses of satisfaction surveys in evaluating impact? Did Jones take these shortcomings into account?
- Three main areas of job satisfaction were identified:
  - How positive is their experience with TR?
  - To what extent do the coaches feel that TR helps student readers?
  - How supportive are TR's site coordinators and staff?

Evaluation Method, Goal #2: Assessment of Coaches' Satisfaction

- Jones's survey results showed that coaches were satisfied with their jobs and felt a sense of pride and accomplishment. The program therefore appeared successful in meeting this goal, unlike Goal #1.
- Some questions sought ways to improve the program (a formative purpose). The survey results were useful to the program manager, in ways that the evaluation as a whole was not useful managerially as a formative evaluation.

Evaluation Method, Goal #3

- Method: interviews with major stakeholders, one site visit, a review of the last 5 years' research literature on cross-age tutoring, and a review of the last 3 years' research on best practices.
- Goal: assess the overall progress of the program.
- Were stakeholder interviews adequate? Can stakeholder interviews be adequate if stakeholder involvement has been inadequate? Did the interviews work together with other methods to produce a fully dimensional picture of the Team Read program?

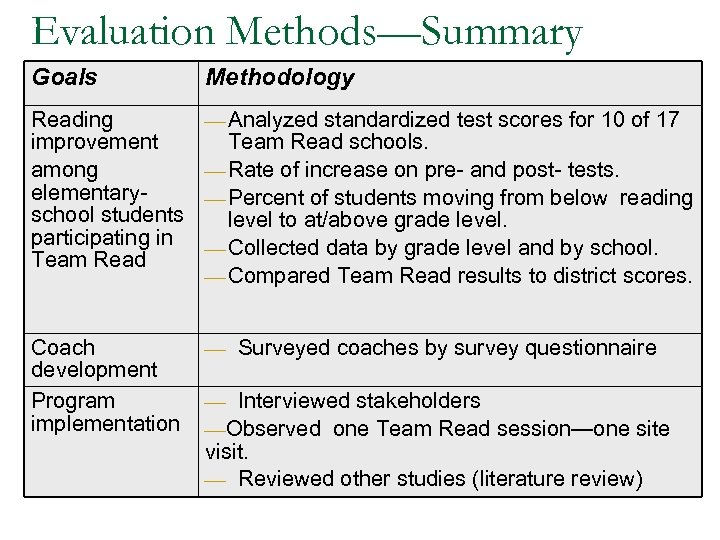

Evaluation Methods: Summary

| Goals | Methodology |
|---|---|
| Reading improvement among elementary-school students participating in Team Read | Analyzed standardized test scores for 10 of 17 Team Read schools; rate of increase on pre- and post-tests; percent of students moving from below reading level to at/above grade level; collected data by grade level and by school; compared Team Read results to district scores |
| Coach development | Surveyed coaches by survey questionnaire |
| Program implementation | Interviewed stakeholders; observed one Team Read session (one site visit); reviewed other studies (literature review) |

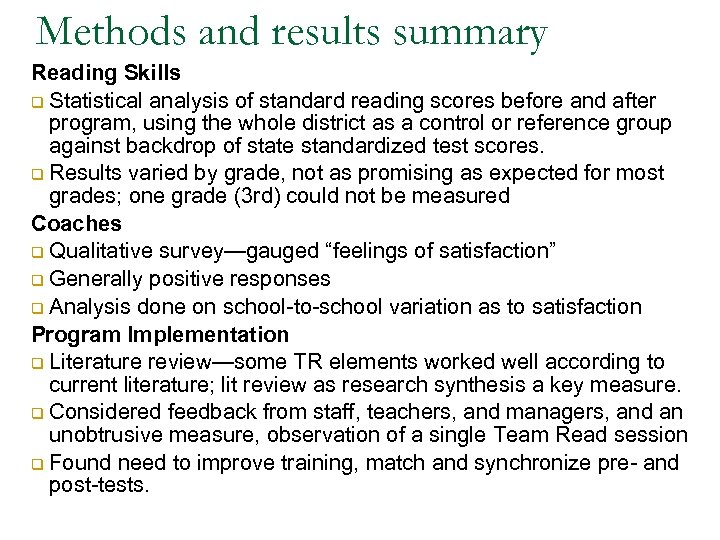

Methods and results summary

- Reading skills:
  - Statistical analysis of standard reading scores before and after the program, using the whole district as a control or reference group, against the backdrop of state standardized test scores.
  - Results varied by grade and were not as promising as expected for most grades; one grade (3rd) could not be measured.
- Coaches:
  - Qualitative survey gauging "feelings of satisfaction."
  - Generally positive responses.
  - Analysis of school-to-school variation in satisfaction.
- Program implementation:
  - Literature review: some TR elements worked well according to current literature; the literature review, as research synthesis, was a key measure.
  - Considered feedback from staff, teachers, and managers, plus an unobtrusive measure: observation of a single Team Read session.
  - Found a need to improve training and to match and synchronize pre- and post-tests.

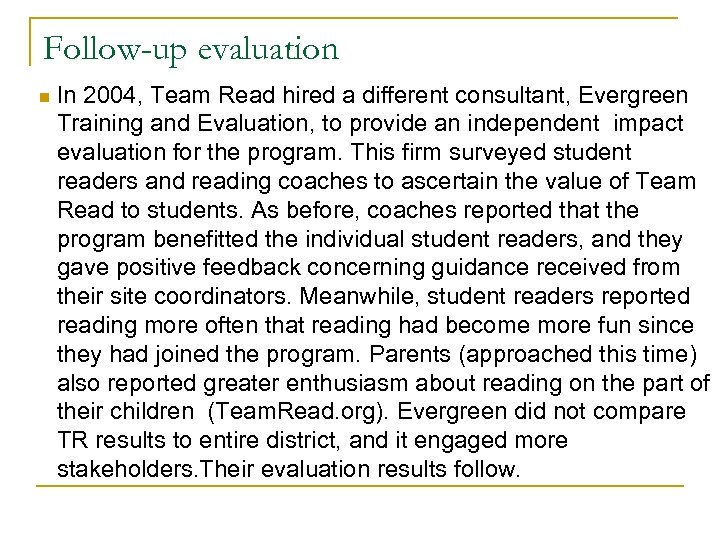

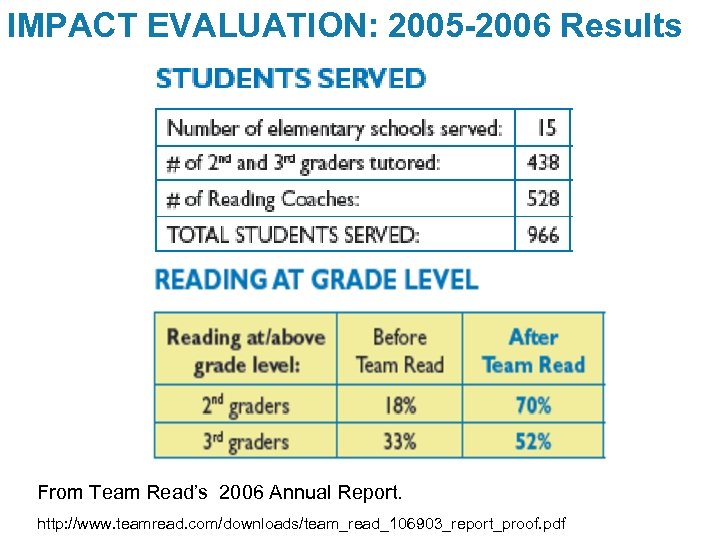

Follow-up evaluation

- In 2004, Team Read hired a different consultant, Evergreen Training and Evaluation, to provide an independent impact evaluation of the program. This firm surveyed student readers and reading coaches to ascertain the value of Team Read to students. As before, coaches reported that the program benefited the individual student readers, and they gave positive feedback concerning guidance received from their site coordinators. Meanwhile, student readers reported reading more often and said that reading had become more fun since they had joined the program. Parents (approached this time) also reported greater enthusiasm about reading on the part of their children (TeamRead.org). Evergreen did not compare TR results with the entire district, and it engaged more stakeholders. Its evaluation results follow.

IMPACT EVALUATION: 2005-2006 Results

From Team Read's 2006 Annual Report: http://www.teamread.com/downloads/team_read_106903_report_proof.pdf

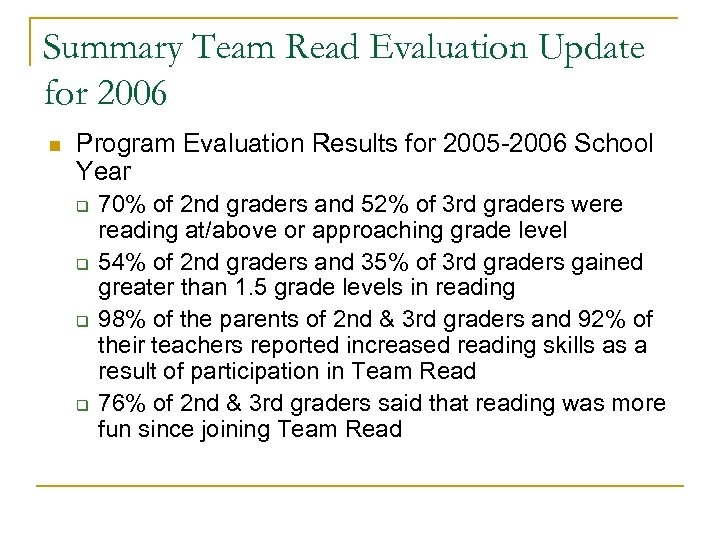

Summary: Team Read Evaluation Update for 2006

- Program evaluation results for the 2005-2006 school year:
  - 70% of 2nd graders and 52% of 3rd graders were reading at/above or approaching grade level.
  - 54% of 2nd graders and 35% of 3rd graders gained more than 1.5 grade levels in reading.
  - 98% of the parents of 2nd and 3rd graders and 92% of their teachers reported increased reading skills as a result of participation in Team Read.
  - 76% of 2nd and 3rd graders said that reading was more fun since joining Team Read.

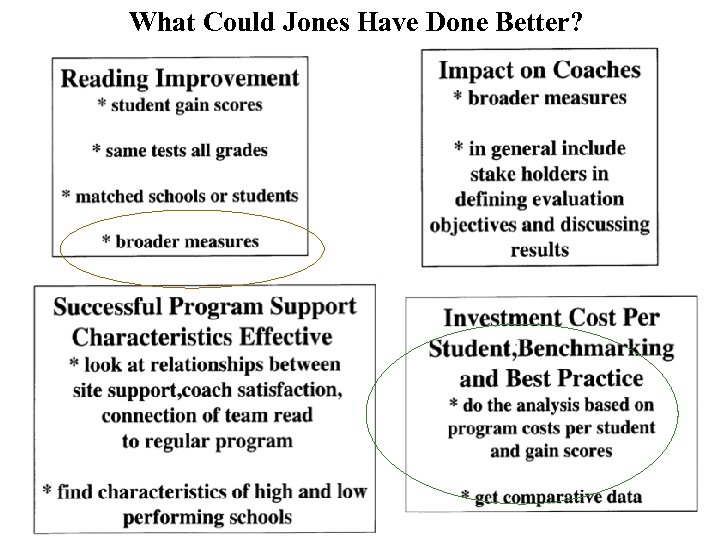

What Could Jones Have Done Better?

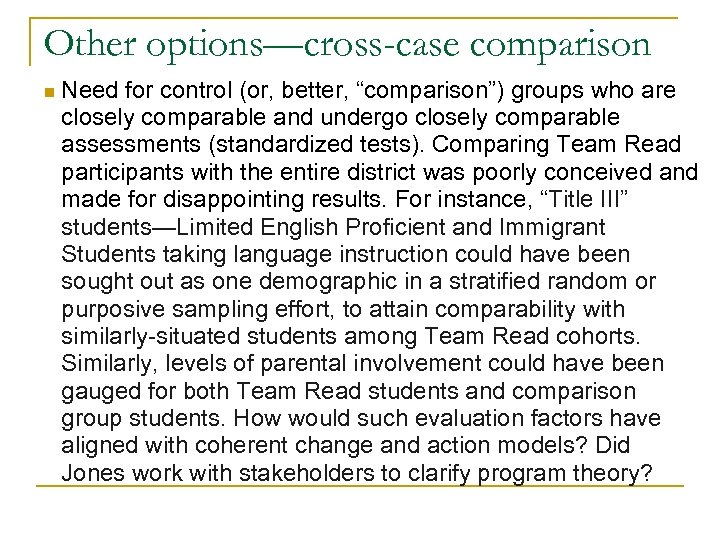

Other options: cross-case comparison

- There is a need for control (or, better, "comparison") groups that are closely comparable and undergo closely comparable assessments (standardized tests). Comparing Team Read participants with the entire district was poorly conceived and made for disappointing results.
- For instance, "Title III" students (Limited English Proficient and Immigrant Students taking language instruction) could have been sought out as one demographic in a stratified random or purposive sampling effort, to attain comparability with similarly situated students in Team Read cohorts. Similarly, levels of parental involvement could have been gauged for both Team Read students and comparison-group students.
- How would such evaluation factors have aligned with coherent change and action models? Did Jones work with stakeholders to clarify program theory?
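
One way to carry out the stratified sampling idea is frequency matching. For every combination of stratifying characteristics in the Team Read cohort, draw the same number of comparison students at random from nonparticipants with that combination. In the Python sketch below, the student records, the field names `title_iii` and `parent_involvement`, and the data are all hypothetical. A real design would also have to decide how parental involvement is measured.

```python
import random
from collections import Counter, defaultdict


def stratified_comparison(participants, pool, strata_keys, seed=0):
    """Draw a comparison group whose stratum counts match the participants'.

    participants, pool: lists of dicts describing students.
    strata_keys: field names that define the strata.
    """
    rng = random.Random(seed)

    def stratum(student):
        return tuple(student[key] for key in strata_keys)

    needed = Counter(stratum(s) for s in participants)  # participants per stratum
    candidates = defaultdict(list)
    for s in pool:
        candidates[stratum(s)].append(s)

    sample = []
    for key, count in needed.items():
        available = candidates[key]
        if len(available) < count:
            raise ValueError(f"only {len(available)} candidates in stratum {key}, need {count}")
        sample.extend(rng.sample(available, count))  # random draw within the stratum
    return sample


# Hypothetical records: Title III status and a coarse parental-involvement rating
cohort = [
    {"id": 1, "title_iii": True, "parent_involvement": "low"},
    {"id": 2, "title_iii": False, "parent_involvement": "high"},
    {"id": 3, "title_iii": True, "parent_involvement": "low"},
]
nonparticipants = [
    {"id": 100 + i, "title_iii": i % 2 == 0, "parent_involvement": "low" if i % 3 else "high"}
    for i in range(30)
]
keys = ("title_iii", "parent_involvement")
matched = stratified_comparison(cohort, nonparticipants, keys)
print(sorted(s["id"] for s in matched))
```

Matching on observed strata balances only the characteristics used to define them. Unmeasured differences between participants and comparison students, such as a family's motivation to enroll, can remain.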

Chen Ch. 9: efficacy & effectiveness evaluation

Chen differentiates “efficacy evaluation” from “effectiveness evaluation.” The main difference is that effectiveness evaluation does not need a “perfect setting,” random assignment of subjects, or the other elements of a controlled experiment in order to work. The contrast corresponds to the distinction Chen draws between scientific and stakeholder validity. Effectiveness evaluation can work well in the uncontrolled real world (p. 205); it is much more feasible, and works better, in community and human services contexts than efficacy evaluation does. The case suggests that the evaluator, Margo Jones, wanted to approximate scientific rigor (particularly in her use of statistics) but failed to do so, both by using different, incommensurable tests and by relying on a statistical equivalency adjustment that was poorly justified and, in any case, not applied consistently. At the same time, there was little consideration of cultural or demographic factors or of the need for stakeholder inclusion.

Efficacy evaluation & effectiveness evaluation

Chen notes that many researchers believe efficacy evaluation should precede effectiveness evaluation. The argument is that only after efficacy evaluation has established desirable outcomes and an appropriate program design, especially the program's change and action models, can effectiveness evaluation step in to check actual outcomes in a real-world setting.

Chen explains how this sequence is used in the biomedical field, where it is often successful. However, while this sequencing may work in clinical settings where variables can be tightly controlled, such controls are not possible with social programs like Team Read, for several reasons: cost, ethics, and the variability introduced by human behavior.

Efficacy evaluation & effectiveness evaluation

Even if effectiveness evaluation was the appropriate approach for the Team Read program, a problem remains: Jones did not involve stakeholders nearly enough in the evaluation. Chen explains that when this happens, stakeholders are prone to become suspicious of the evaluator's motives. In this case, moreover, they were not able to contribute to (and therefore buy into) the evaluation.

Jones evidently did not make enough visits to the different schools and sites (site visits are also a means of stakeholder involvement). The evaluation could have been stronger if an evaluation team (incorporating stakeholders, including TR Advisory Board members) had attended tutoring sessions at different sites. An engaged evaluation team could have built greater trust and better working relationships with key stakeholders (teachers, coaches, parents, students, and program funders and administrators).

Evaluating complex, partnered programs

Collaborative management involves creating and operating through multi-organizational associations and partnerships to address difficult problems that lie beyond the capacity of any single organization. Student educational performance in K-12 presents many such problems, because multiple constituencies (federal and state governments, school districts, foundations and other private funders, nonprofit agencies, business interests, and others) set disparate expectations and requirements. An additional challenge is that communication and coordination are more complex and taxing than in single-organization programs, and evaluation is correspondingly more difficult. To become aligned and effective, partnered management and evaluation require closer, more continuous communication than their single-agency counterparts do. It is important to gauge partnership functioning early on and to move judiciously from formative to summative evaluation. Did Jones move prematurely into impact evaluation of TR?

Effective Collaborations

Building relationships is fundamental to the success of collaboration in program planning, design, implementation, and evaluation. Effective collaborations are characterized by:

- building clear expectations
- defining relationships
- identifying tasks, roles, and responsibilities
- identifying work plans
- reaching agreement on desired outcomes
- consulting participants and stakeholders

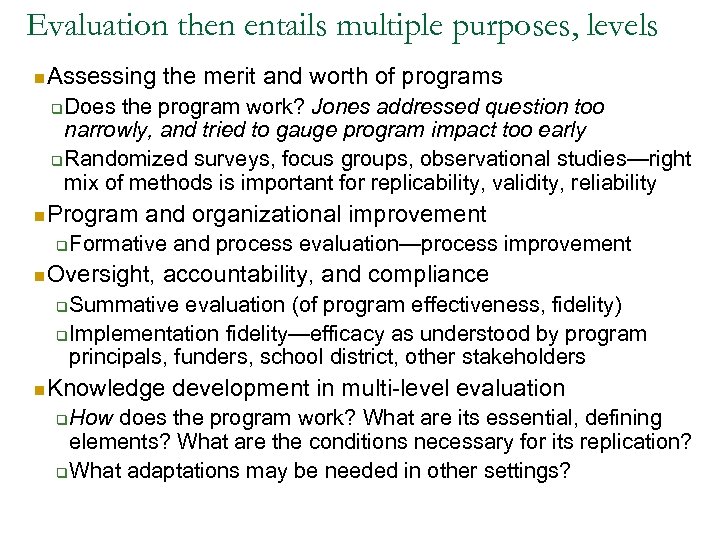

Evaluation then entails multiple purposes and levels

- Assessing the merit and worth of programs
  - Does the program work? Jones addressed this question too narrowly and tried to gauge program impact too early.
  - Randomized surveys, focus groups, observational studies: the right mix of methods is important for replicability, validity, and reliability.
- Program and organizational improvement
  - Formative and process evaluation, for process improvement
- Oversight, accountability, and compliance
  - Summative evaluation (of program effectiveness and fidelity)
  - Implementation fidelity: efficacy as understood by program principals, funders, the school district, and other stakeholders
- Knowledge development in multi-level evaluation
  - How does the program work? What are its essential, defining elements?
  - What conditions are necessary for its replication?
  - What adaptations may be needed in other settings?

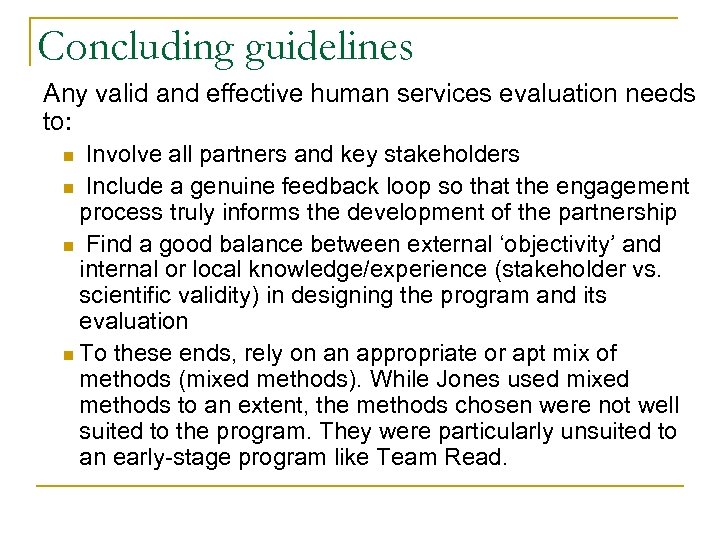

Concluding guidelines

Any valid and effective human services evaluation needs to:

- Involve all partners and key stakeholders
- Include a genuine feedback loop, so that the engagement process truly informs the development of the partnership
- Find a good balance between external ‘objectivity’ and internal or local knowledge and experience (stakeholder vs. scientific validity) in designing the program and its evaluation
- To these ends, rely on an apt mix of methods (mixed methods)

While Jones used mixed methods to an extent, the methods chosen were not well suited to the program, and they were particularly unsuited to an early-stage program like Team Read.

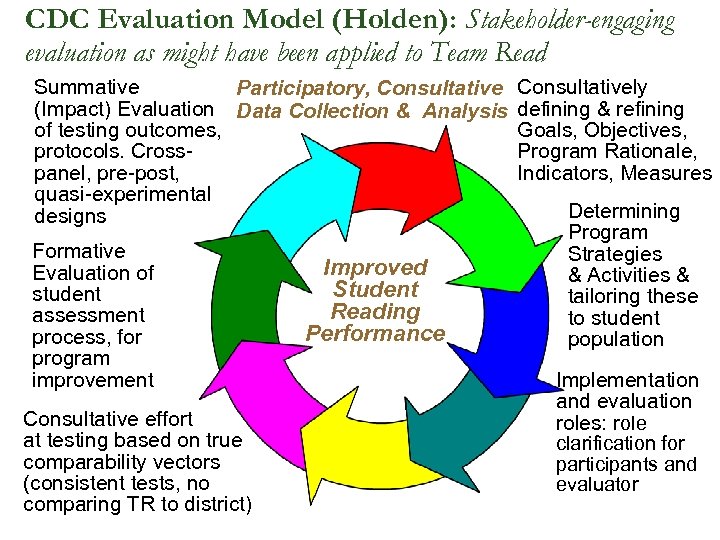

CDC Evaluation Model (Holden): stakeholder-engaging evaluation as it might have been applied to Team Read

Diagram elements:

- Summative participatory, consultative (impact) evaluation
- Data collection and analysis of testing outcomes and protocols: cross-panel, pre-post, quasi-experimental designs
- Formative evaluation of the student assessment process, for program improvement
- Consultative effort at testing based on true comparability vectors (consistent tests; no comparing TR to the district)
- Improved student reading performance
- Consultatively defining and refining goals, objectives, program rationale, indicators, and measures
- Determining program strategies and activities, and tailoring these to the student population
- Implementation and evaluation roles: role clarification for participants and evaluator
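
Testing based on true comparability implies comparing gains on the same test for Team Read students and a comparable group, rather than comparing Team Read with the district as a whole. The sketch below is a hypothetical illustration of the simplest pre-post comparison-group estimate (the difference between the two groups' mean gains, a basic difference-in-differences); the function names and all scores are invented, and a real quasi-experimental analysis would also need to address baseline differences and sampling error.

```python
from statistics import mean


def mean_gain(pre, post):
    """Mean pre-to-post gain for one group. pre[i] and post[i] must be the same
    student's scores on the same test (e.g., in grade-level equivalents)."""
    if not pre or len(pre) != len(post):
        raise ValueError("pre and post scores must be non-empty and paired")
    return mean(after - before for before, after in zip(pre, post))


def gain_difference(tr_pre, tr_post, cmp_pre, cmp_post):
    """Team Read mean gain minus comparison-group mean gain on the same test."""
    return mean_gain(tr_pre, tr_post) - mean_gain(cmp_pre, cmp_post)


# Hypothetical grade-level scores, not data from the Team Read evaluation.
team_read_pre, team_read_post = [2.0, 2.5, 1.5, 3.0], [3.5, 4.0, 2.5, 4.0]
comparison_pre, comparison_post = [2.0, 2.5, 1.5, 3.0], [2.5, 3.5, 2.5, 3.5]
print(gain_difference(team_read_pre, team_read_post, comparison_pre, comparison_post))
# prints 0.5 (mean gains of 1.25 vs. 0.75 grade levels)
```

Because both groups take the same test before and after, the estimate avoids the incommensurable-test problem, though it says nothing on its own about whether the groups were comparable to begin with.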

Becoming aware of the ‘filters’ or biases one brings to the task of evaluation

- Preconceptions, assumptions, and possibly prejudices
- Cultural/political ‘lens’
- Personal values/belief system
- Professional discipline/training (leading, for example, to a predilection for some methods over others that may be better suited to the program under review). Did Jones exhibit this bias? How?

Capacity to make sense of complex, multi-source data through evaluation methods that match the program's level of complexity: using mixed methods that closely match program features is a form of “requisite complexity” (Ashby).

Human services evaluation requires:

- Active engagement and interest
- Attention to detail
- Willingness to explore contradictions
- Sifting and selecting data and methods with due sensitivity to the problem and populations addressed
- Interpreting and clarifying data and findings accordingly, in a consultative fashion, learning from program principals and stakeholders
- ‘Triangulation’ (confirmation) of findings with multiple sources of data and multiple methods, avoiding overreliance on a single method (as Jones did)
- Realistic movement from formative to outcome evaluation
- A focus on real-world effectiveness evaluation rather than ‘ideal-circumstances’ efficacy evaluation, when warranted

A Few Evaluation Resources

- http://www.cdc.gov/eval/resources.htm
- http://www.cdc.gov/evalcbph.pdf

Request a print copy from Laurie or Chris.

A Few Evaluation Resources

- http://ctb.ku.edu/en/
- http://www.wkkf.org/Pubs/Tools/Evaluation/Pub770.pdf

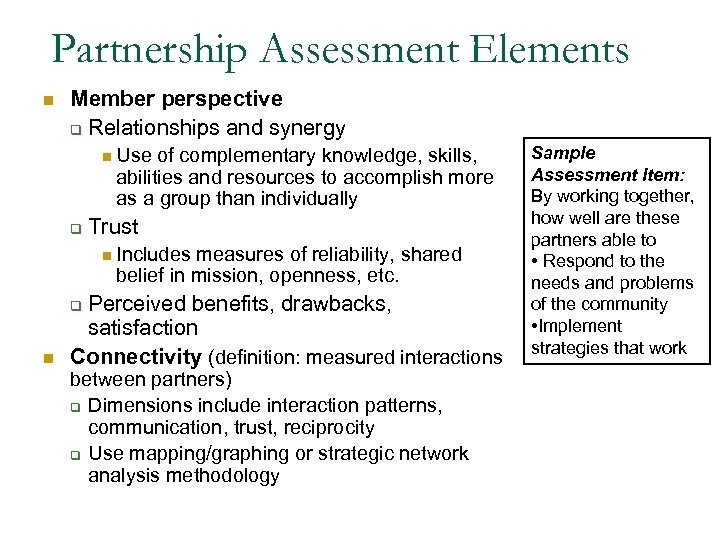

Partnership Assessment Elements

- Member perspective
  - Relationships and synergy: use of complementary knowledge, skills, abilities, and resources to accomplish more as a group than individually
  - Trust: includes measures of reliability, shared belief in mission, openness, etc.
  - Perceived benefits, drawbacks, and satisfaction
- Connectivity (definition: measured interactions between partners)
  - Dimensions include interaction patterns, communication, trust, and reciprocity
  - Use mapping/graphing or strategic network analysis methodology

Sample assessment item: By working together, how well are these partners able to:

- Respond to the needs and problems of the community
- Implement strategies that work
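
For the connectivity element, one simple network-analysis measure is density: the share of possible partner pairs that actually report interacting. The sketch below assumes interactions have already been collected as undirected (partner, partner) pairs, for example from a survey asking each partner whom it works with; the partner names are hypothetical, and density and per-partner link counts (degree) are only two of many possible network measures.

```python
def _links(partners, interactions):
    """Distinct undirected links between listed partners (self-links and
    unknown partners are ignored; repeated reports count once)."""
    members = set(partners)
    return {
        frozenset(pair)
        for pair in interactions
        if len(set(pair)) == 2 and set(pair) <= members
    }


def density(partners, interactions):
    """Observed links divided by the n*(n-1)/2 possible links."""
    n = len(partners)
    possible = n * (n - 1) // 2
    return len(_links(partners, interactions)) / possible if possible else 0.0


def degrees(partners, interactions):
    """Number of distinct partners each partner is linked to (0 flags isolation)."""
    counts = {p: 0 for p in partners}
    for link in _links(partners, interactions):
        for p in link:
            counts[p] += 1
    return counts


# Hypothetical partners and reported interactions.
partners = ["Team Read", "School district", "Funder", "Parent group"]
reported = [
    ("Team Read", "School district"),
    ("School district", "Team Read"),  # same link reported twice, counted once
    ("Team Read", "Funder"),
    ("Funder", "Parent group"),
]
print(density(partners, reported))  # 3 of 6 possible links -> 0.5
print(degrees(partners, reported))
```

Treating links as undirected is a simplifying choice; a directed version would be needed to examine reciprocity, one of the dimensions listed above.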

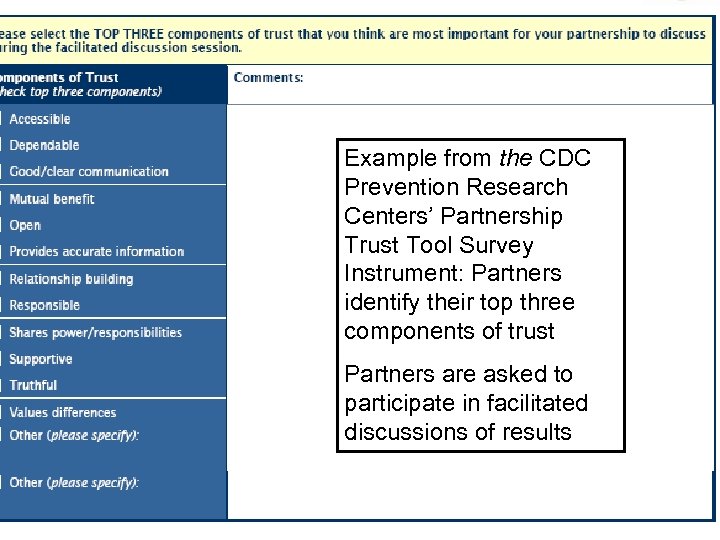

Example from the CDC Prevention Research Centers’ Partnership Trust Tool

Survey instrument:

- Partners identify their top three components of trust
- Partners are asked to participate in facilitated discussions of the results
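
Summarizing the top-three responses before the facilitated discussion can be as simple as counting how often each component is chosen. The sketch below is a hypothetical illustration, not part of the CDC tool: the component names are placeholders (borrowed loosely from trust measures such as reliability and openness), and selections are counted without weighting by rank.

```python
from collections import Counter


def tally_top_three(responses):
    """Count how often each trust component appears in partners' top-three lists.

    responses: one list per partner, holding up to three distinct component names.
    Returns (component, count) pairs, most frequently chosen first."""
    counts = Counter()
    for picks in responses:
        if len(picks) > 3 or len(set(picks)) != len(picks):
            raise ValueError("each partner lists at most three distinct components")
        counts.update(picks)
    return counts.most_common()


# Hypothetical responses from three partners.
responses = [
    ["reliability", "openness", "shared mission"],
    ["openness", "reliability", "respect"],
    ["reliability", "shared mission"],
]
for component, count in tally_top_three(responses):
    print(f"{component}: {count}")
```

The counts are meant to seed discussion, not replace it: a component chosen by only one partner may still matter a great deal to that partner.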

Resources for Partnership Assessments

- http://www.cdc.gov/dhdsp/state_program/evaluation_guides/evaluating_partnerships.htm
- http://www.cdc.gov/prc/about-prc-program/partnership-trust-tools.htm

Other Resources

- CDC Division for Heart Disease and Stroke Prevention, “Fundamentals of Evaluating Partnerships: Evaluation Guide” (2008): http://www.cdc.gov/dhdsp/state_program/evaluation_guides/evaluating_partnerships.htm
- CDC Prevention Research Centers’ Partnership Trust Tool: http://www.cdc.gov/prc/about-prc-program/partnership-trust-tools.htm
- Center for Advancement of Collaborative Strategies in Health, Partnership Self-Assessment Tool: http://www.cacsh.org/
- Journal of Extension article “Assessing Your Collaboration: A Self-Evaluation Tool” by L. M. Borden and D. F. Perkins (1999): http://www.joe.org/joe/1999april/tt1.php
