4690da1003df69e5b4532491823c714a.ppt

- Number of slides: 85

Testing Principles (Pressman, Chapter 16) Slide 1

What Is Software Testing?
- Software testing is a process of executing a program with the intent of finding an error.
- A good test case is one that has a high probability of finding an as-yet-undiscovered error.
- A successful test is one that uncovers an as-yet-undiscovered error.
TCS 2411 Software Engineering
Slide 2

The software testing process Slide 3

Testing Activities
[Figure: Subsystem Code → Unit Test → Tested Subsystem (one chain per subsystem); the tested subsystems → Integration Test (guided by the System Design Document) → Integrated Subsystems → Functional Test (guided by the Requirements Analysis Document and the User Manual) → Functioning System. All tests by developer.]
Slide 4

Testing Activities (continued)
[Figure: Functioning System → Performance Test (guided by the Global Requirements, part of the System Test) → Validated Functioning System → Acceptance Test (guided by the Client's Understanding of Requirements) → Accepted System → Installation Test (in the User Environment) → Usable System → use according to the User's understanding → System in Use. Annotations: tests by developer; tests by client; tests (?) by user.]
Slide 5

Software Testing Terms
- Testing: the execution of a program to find its faults.
- Verification: the process of proving the program's correctness.
  - Verification answers the question: Am I building the product right?
- Validation: the process of evaluating software at the end of software development to ensure compliance with the customer's needs and requirements; the process of finding errors by executing the program in a real environment.
  - Validation answers the question: Am I building the right product?
- Debugging: diagnosing an error and correcting it.
Slide 6

Software Testability Checklist
(Software testability is simply how easily [a computer program] can be tested.)
- Operability: the better it works, the more efficiently it can be tested.
- Observability: what you see is what you test.
- Controllability: the better the software can be controlled, the more the testing can be automated and optimized.
- Decomposability: by controlling the scope of testing, problems can be isolated more quickly and retested intelligently. The software system is built from independent modules, and the modules can be tested independently.
- Simplicity: the less there is to test, the more quickly we can test it.
- Stability: the fewer the changes, the fewer the disruptions to testing.
- Understandability: the more information we have, the smarter the testing will be.
Slide 7

Good Test Attributes
- A good test has a high probability of finding an error.
- A good test is not redundant.
- A good test should be "best of breed": the test with the highest likelihood of uncovering a whole class of errors.
- A good test should be neither too simple nor too complex.
Slide 8

Testing Principles
- All tests should be traceable to customer requirements.
- Tests should be planned before testing begins.
- Testing should begin with individual components and move toward integrated clusters of components.
- Exhaustive testing is not possible.
- To be most effective, testing should be conducted by an independent third party.
Slide 9

Who Should Test the Software?
- Developer (individual units)
- Independent test group (ITG)
  - removes the conflict of interest
  - reports to the SQA team
Slide 10

Test Data and Test Cases
- Test data: inputs that have been devised to test the system.
- Test cases: inputs to test the system, together with the outputs predicted from these inputs if the system operates according to its specification.
Slide 11

Classification of Testing Techniques
- Classification based on the source of information used to derive test cases:
  - black-box testing (functional/behavioral, specification-based)
  - white-box testing (structural, program-based, glass-box)
Slide 12

White-box Testing Slide 13

White-box/Program-Based Testing
- Based on knowledge of the internal logic of an application's code.
- Based on coverage of code statements, branches, and paths.
- Guarantee that all independent paths have been exercised at least once.
- Exercise all logical decisions on their true and false sides.
- Execute all loops at their boundaries and within their operational bounds.
Slide 14

Basis Path Testing Steps
- A white-box technique, usually based on the program flow graph.
- Compute the cyclomatic complexity of the program from its flow graph, using V(G) = E - N + 2, or V(G) = P + 1, or V(G) = number of regions.
- Determine the basis set of linearly independent paths (the cardinality of this set is the program's cyclomatic complexity).
- Prepare test cases that will force the execution of each path in the basis set.
Slide 15

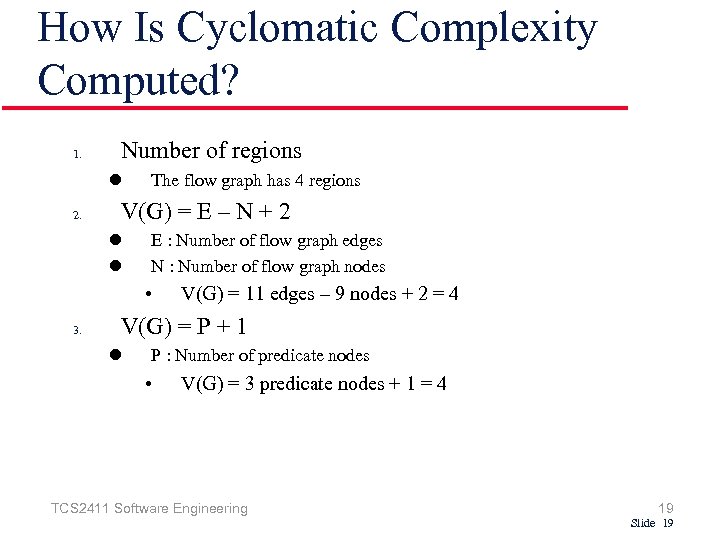

Cyclomatic Complexity: How Is It Computed?
- Cyclomatic complexity is a software metric that provides a quantitative measure of the logical complexity of a program.
- It defines the number of independent paths in the basis set.
- It can be computed in three ways:
  1. Number of regions of the flow graph.
  2. V(G) = E - N + 2, where E is the number of flow graph edges and N is the number of flow graph nodes.
  3. V(G) = P + 1, where P is the number of predicate nodes.
Slide 16

Example of a Control Flow Graph (CFG) 1 s := 0; d := 0; while (x

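Flow Graph Notation
[Figure: a flow graph showing edges, nodes, and regions. Nodes: 1, 2,3, 4,5, 6, 7, 8, 9, 10, 11 (statements 2-3 and 4-5 are merged into single nodes). Edges: 1→2,3; 1→11; 2,3→4,5; 2,3→6; 4,5→10; 6→7; 6→8; 7→9; 8→9; 9→10; 10→1. Regions: R1 to R4.]
Slide 18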

How Is Cyclomatic Complexity Computed?
1. Number of regions: the flow graph has 4 regions.
2. V(G) = E - N + 2, where E is the number of flow graph edges and N is the number of flow graph nodes:
   V(G) = 11 edges - 9 nodes + 2 = 4
3. V(G) = P + 1, where P is the number of predicate nodes:
   V(G) = 3 predicate nodes + 1 = 4
Slide 19
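The three computations on this slide can be cross-checked mechanically. The C sketch below is illustrative only. It assumes the flow graph is stored as a list of directed edges with nodes renumbered from 0. The names `Edge`, `vg_edges_nodes`, `vg_predicates` and `pressman_edges` are hypothetical. The edge list is read off the basis paths on Slide 20, with the merged nodes "2,3" and "4,5" each counted once. A predicate node is taken to be any node with two or more outgoing edges, which gives P exactly only for binary decisions. The region count is not coded separately: for a connected planar flow graph, Euler's formula makes the number of regions (outer region included) equal to E - N + 2.

```c
#include <stddef.h>

#define MAX_NODES 64 /* assumed upper bound on the number of nodes */

/* One directed edge of a flow graph (hypothetical representation). */
typedef struct {
    int from;
    int to;
} Edge;

/* Method 2: V(G) = E - N + 2. */
int vg_edges_nodes(size_t num_edges, int num_nodes)
{
    return (int)num_edges - num_nodes + 2;
}

/* Method 3: V(G) = P + 1, counting as a predicate node every node with
 * two or more outgoing edges (exact only for binary decisions).
 * Assumes 0 <= from < MAX_NODES for every edge. */
int vg_predicates(const Edge *edges, size_t num_edges, int num_nodes)
{
    int out_degree[MAX_NODES] = {0};
    int predicates = 0;

    for (size_t i = 0; i < num_edges; i++)
        out_degree[edges[i].from]++;
    for (int n = 0; n < num_nodes; n++)
        if (out_degree[n] >= 2)
            predicates++;
    return predicates + 1;
}

/* Flow graph of Slide 18. Index -> slide node:
 * 0:1, 1:"2,3", 2:"4,5", 3:6, 4:7, 5:8, 6:9, 7:10, 8:11. */
const Edge pressman_edges[] = {
    {0, 1}, {0, 8}, /* 1 -> 2,3    1 -> 11 */
    {1, 2}, {1, 3}, /* 2,3 -> 4,5  2,3 -> 6 */
    {2, 7},         /* 4,5 -> 10 */
    {3, 4}, {3, 5}, /* 6 -> 7      6 -> 8 */
    {4, 6}, {5, 6}, /* 7 -> 9      8 -> 9 */
    {6, 7},         /* 9 -> 10 */
    {7, 0},         /* 10 -> 1 (back to the loop test) */
};
#define PRESSMAN_NUM_EDGES (sizeof pressman_edges / sizeof pressman_edges[0])
```

With this data, `vg_edges_nodes(PRESSMAN_NUM_EDGES, 9)` and `vg_predicates(pressman_edges, PRESSMAN_NUM_EDGES, 9)` should both give 4. The same functions applied to the six-node graph of f1 on Slide 42 give 3.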

Independent Program Paths
- Basis set for the flow graph on the previous slide:
  - Path 1: 1-11
  - Path 2: 1-2-3-4-5-10-1-11
  - Path 3: 1-2-3-6-8-9-10-1-11
  - Path 4: 1-2-3-6-7-9-10-1-11
- The number of paths in the basis set is determined by the cyclomatic complexity.
Slide 20

EX 1: Deriving Test Cases for the average Procedure (PDL)
    …
    i = 1;                                           (node 1)
    total.input = total.valid = 0;
    sum = 0;
    do while value[i] <> -999                        (node 2)
             and total.input < 100                   (node 3)
        increment total.input by 1;                  (node 4)
        if value[i] >= minimum                       (node 5)
           AND value[i] <= maximum                   (node 6)
        then increment total.valid by 1;             (node 7)
             sum = sum + value[i]
        else skip
        end if                                       (node 8)
        increment i by 1;                            (node 9)
    end do
    if total.valid > 0                               (node 10)
    then average = sum / total.valid;                (node 11)
    else average = -999;                             (node 12)
    end if                                           (node 13)
    …
Slide 21

EX 1: Deriving Test Cases for the average Procedure (PDL)
Step 1: Draw a flow graph from the design or code as a foundation.
[Figure: flow graph of the average procedure with nodes 1 to 13.]
Slide 22

Ex 1 (cont.)
Step 2: Determine the cyclomatic complexity.
- V(G) = 6 regions
- V(G) = 17 edges - 13 nodes + 2 = 6
- V(G) = 5 predicate nodes + 1 = 6
Step 3: Determine a basis set of linearly independent paths.
- path 1: 1-2-10-11-13
- path 2: 1-2-10-12-13
- path 3: 1-2-3-10-11-13
- path 4: 1-2-3-4-5-8-9-2-...
- path 5: 1-2-3-4-5-6-8-9-2-...
- path 6: 1-2-3-4-5-6-7-8-9-2-...
The ellipsis (...) following paths 4, 5, and 6 indicates that any path through the remainder of the control structure is acceptable. It is often worthwhile to identify predicate nodes as an aid in the derivation of test cases; in this case, nodes 2, 3, 5, 6, and 10 are predicate nodes.
Slide 23
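Step 4 is to prepare test cases that force each basis path. As a hedged sketch, here is a C transcription of the PDL average procedure from Slide 21, with the flow-graph node numbers kept as comments. It makes three assumptions:
- It uses 0-based indexing instead of i = 1.
- The three outputs are returned in a hypothetical `AverageResult` struct.
- The loop tests value[i] before total.input, exactly as the PDL does. The caller must therefore supply a -999 sentinel within the first 100 entries or at least 101 readable entries.

```c
#define SENTINEL (-999.0)
#define MAX_INPUTS 100

/* The three outputs of the PDL procedure (hypothetical struct). */
typedef struct {
    double average;
    int total_input;
    int total_valid;
} AverageResult;

/* Averages the values lying in [minimum, maximum], stopping at the -999
 * sentinel or after MAX_INPUTS values. value[i] is tested before
 * total_input, so the array needs a sentinel within its first MAX_INPUTS
 * entries or at least MAX_INPUTS + 1 readable entries. */
AverageResult average(const double value[], double minimum, double maximum)
{
    AverageResult r = {0.0, 0, 0};               /* node 1 */
    double sum = 0.0;
    int i = 0;                                   /* 0-based, unlike i = 1 */

    while (value[i] != SENTINEL                  /* node 2 */
           && r.total_input < MAX_INPUTS) {      /* node 3 */
        r.total_input++;                         /* node 4 */
        if (value[i] >= minimum                  /* node 5 */
            && value[i] <= maximum) {            /* node 6 */
            r.total_valid++;                     /* node 7 */
            sum = sum + value[i];
        }                                        /* node 8 */
        i++;                                     /* node 9 */
    }
    if (r.total_valid > 0)                       /* node 10 */
        r.average = sum / r.total_valid;         /* node 11 */
    else
        r.average = SENTINEL;                    /* node 12 */
    return r;                                    /* node 13 */
}
```

Possible inputs for the feasible paths, with minimum = 0 and maximum = 100:
- value = {-999} forces path 2 (expected average -999).
- 101 values of 1.0 force path 3, where the loop exits through node 3 after 100 inputs (expected average 1.0).
- {-5, 200, 10, -999} drives the loop body through paths 4, 5 and 6 in turn (expected total.input 3, total.valid 1, average 10).

Path 1 cannot be forced on its own. If the loop body never runs, total.valid stays 0 and node 10 leads to node 12. Its tail 10-11-13 is therefore exercised only as part of a run such as the last one.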

EX: Binary search flow graph Slide 24

Independent Paths
- 1, 2, 3, 8, 9
- 1, 2, 3, 4, 6, 7, 2
- 1, 2, 3, 4, 5, 7, 2
- 1, 2, 3, 4, 6, 7, 2, 8, 9
- Test cases should be derived so that all of these paths are executed.
- A dynamic program analyser may be used to check that paths have been executed.
Slide 25
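The figure's node numbering is not recoverable from the text, so the sketch below does not map nodes. It is a conventional iterative binary search in C, assumed (not confirmed) to match the flow graph, with each decision commented. The name `binary_search` and the -1 "not found" convention are assumptions.

```c
/* Iterative binary search over an array sorted in ascending order.
 * Returns the index of key, or -1 if key is absent. */
int binary_search(const int *a, int n, int key)
{
    int bottom = 0;
    int top = n - 1;

    while (bottom <= top) {                  /* loop decision */
        int mid = bottom + (top - bottom) / 2;
        if (a[mid] == key)                   /* found? */
            return mid;
        if (a[mid] < key)                    /* continue in upper half */
            bottom = mid + 1;
        else                                 /* continue in lower half */
            top = mid - 1;
    }
    return -1;                               /* range empty: not found */
}
```

Searching {1, 3, 5, 7, 9} for four keys exercises every decision outcome:
- 5 is found at the first probe.
- 9 makes the search move right.
- 1 makes the search move left.
- 4 is absent, so the loop exits with bottom > top.

A dynamic analyser such as gcov could then confirm which branches actually ran.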

White-box Testing Example: Determining the Paths
    FindMean(FILE ScoreFile)
    {
        float SumOfScores = 0.0;                      /* node 1 */
        int NumberOfScores = 0;
        float Mean = 0.0;
        float Score;
        Read(ScoreFile, Score);
        while (!EOF(ScoreFile)) {                     /* node 2 */
            if (Score > 0.0) {                        /* node 3 */
                SumOfScores = SumOfScores + Score;    /* node 4 */
                NumberOfScores++;
            }                                         /* node 5 */
            Read(ScoreFile, Score);                   /* node 6 */
        }
        /* Compute the mean and print the result */
        if (NumberOfScores > 0) {                     /* node 7 */
            Mean = SumOfScores / NumberOfScores;      /* node 8 */
            printf("The mean score is %f\n", Mean);
        } else
            printf("No scores found in file\n");      /* node 9 */
    }
Slide 26

Constructing the Logic Flow Diagram Slide 27

Determining McCabe's Complexity Measure
- The easiest formula to evaluate by reading the code: number of predicates (3) + 1 = 4.
- Check the answer by counting regions: number of closed regions (3) + number of outside regions (always 1) = 4.
- Determine a basis (a linearly independent set of paths); it will contain 4 paths. Use the following technique:
  - Take the shortest path.
  - For each next path, add a single predicate.
- Basis:
  - Path 1: 1, 2, 7, 9, Exit (the exit could be numbered 10) // end of file reached on the first read
  - Path 2: 1, 2, 3, 5, 6, 2, 7, 9, Exit // reads a value that is negative or zero, then reaches end of file
  - Path 3: 1, 2, 3, 4, 5, 6, 2, 7, 8, Exit // reads a single positive value, so the mean is computed
  - Path 4: 1, 2, 7, 8, Exit // infeasible path: no score was read, so NumberOfScores > 0 cannot be true
Slide 28
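A runnable C adaptation of FindMean makes the basis paths concrete. It is a sketch under three stated assumptions:
- The file is replaced by an array and a count.
- The hypothetical helper `read_score` stands in for Read, and its zero return plays the role of EOF.
- Instead of printing, `find_mean` returns NumberOfScores and writes the mean through a pointer.

Node numbers from Slide 26 are kept as comments.

```c
#include <stddef.h>

/* Stands in for Read(ScoreFile, Score): stores the next score and
 * returns 1, or returns 0 once the input is exhausted (the EOF case). */
static int read_score(const float *scores, int count, int *pos, float *score)
{
    if (*pos >= count)
        return 0;
    *score = scores[(*pos)++];
    return 1;
}

/* FindMean over an array. Returns NumberOfScores; *mean is written only
 * when at least one positive score was read (instead of printing). */
int find_mean(const float *scores, int count, float *mean)
{
    float sum_of_scores = 0.0f;
    int number_of_scores = 0;
    float score = 0.0f;
    int pos = 0;
    int more = read_score(scores, count, &pos, &score);   /* node 1 */

    while (more) {                                        /* node 2 */
        if (score > 0.0f) {                               /* node 3 */
            sum_of_scores = sum_of_scores + score;        /* node 4 */
            number_of_scores++;
        }                                                 /* node 5 */
        more = read_score(scores, count, &pos, &score);   /* node 6 */
    }
    if (number_of_scores > 0)                             /* node 7 */
        *mean = sum_of_scores / number_of_scores;         /* node 8 */
    /* otherwise: node 9, "No scores found in file" */
    return number_of_scores;
}
```

The inputs for each feasible path:
- Path 1 is forced by an empty input (returns 0).
- Path 2 is forced by a single non-positive score such as {-2} (returns 0).
- Path 3 is forced by a single positive score such as {4} (returns 1 with mean 4).

Path 4 stays infeasible. Node 8 requires number_of_scores > 0, and only node 4 increments it, which path 4 never reaches.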

Graph Matrix for White-Box Testing Slide 29
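In Pressman's treatment, a graph matrix (connection matrix) has one row and one column per flow-graph node, with a 1 in entry (i, j) when an edge runs from node i to node j. Each row with two or more entries is a predicate node. V(G) is the sum over all rows of (connections - 1), plus 1. Below is a minimal C sketch of that rule. For illustration it is applied to the flow graph of f1 from Slide 42, with nodes renumbered from 0. The names `vg_from_matrix`, `f1_matrix` and `GM_NODES` are hypothetical.

```c
#define GM_NODES 6

/* Cyclomatic complexity from a connection matrix: sum over the rows of
 * (connections - 1), plus 1. A row with no connections (the exit node)
 * contributes nothing. */
int vg_from_matrix(const int m[GM_NODES][GM_NODES])
{
    int total = 0;
    for (int i = 0; i < GM_NODES; i++) {
        int connections = 0;
        for (int j = 0; j < GM_NODES; j++)
            connections += m[i][j];
        if (connections > 0)
            total += connections - 1;
    }
    return total + 1;
}

/* f1's flow graph: slide node k is row/column k - 1. */
const int f1_matrix[GM_NODES][GM_NODES] = {
    /* 1 */ {0, 1, 0, 0, 0, 1},   /* 1 -> 2, 1 -> 6 */
    /* 2 */ {0, 0, 1, 1, 0, 0},   /* 2 -> 3, 2 -> 4 */
    /* 3 */ {0, 0, 0, 0, 1, 0},   /* 3 -> 5 */
    /* 4 */ {0, 0, 0, 0, 1, 0},   /* 4 -> 5 */
    /* 5 */ {1, 0, 0, 0, 0, 0},   /* 5 -> 1 */
    /* 6 */ {0, 0, 0, 0, 0, 0},   /* exit */
};
```

Rows 1 and 2 each contribute 1 and every other row contributes 0. The function therefore returns 2 + 1 = 3, matching the E - N + 2 result on Slide 45.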

White-box Testing
- Focus: thoroughness (coverage). Every statement in the component is executed at least once.
- Four types of white-box testing:
  - Statement testing
  - Branch testing
  - Path testing
  - Loop testing
Slide 30

White-box Testing (Continued)
- Statement testing: every statement should be tested at least once.
- Branch testing (conditional testing): make sure that each possible outcome of a condition is tested at least once.
      if (i == TRUE) printf("YES\n"); else printf("NO\n");
  Test cases: 1) i = TRUE; 2) i = FALSE
- Path testing: make sure all paths in the program are executed. In general this is infeasible (combinatorial explosion).
Slide 31

Types of White-Box Testing
There are several popular white-box testing methodologies:
- Statement coverage
- Branch coverage
- Path coverage (basis path)
- Loop coverage
Slide 32

Statement Coverage
- Statement coverage methodology: design test cases so that every statement in a program is executed at least once.
Slide 33

Example
    int f1(int x, int y) {
        while (x != y) {        /* 1 */
            if (x > y)          /* 2 */
                x = x - y;      /* 3 */
            else
                y = y - x;      /* 4 */
        }                       /* 5 */
        return x;               /* 6 */
    }
Slide 34

Euclid's GCD Computation Algorithm
- By choosing the test set {(x=3, y=3), (x=4, y=3), (x=3, y=4)}, all statements are executed at least once.
Slide 35
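The claim can be checked by instrumenting f1 by hand. This stands in for what a coverage tool does automatically, for example GCC's `--coverage` option with gcov. In this C sketch, the names `f1_hits`, `f1_traced` and `all_statements_covered` are hypothetical. The inputs are assumed positive, since f1 does not terminate if, say, x is 0 and y is positive.

```c
#include <string.h>

#define F1_STATEMENTS 6

/* f1_hits[s] counts executions of numbered statement s (1..6). */
int f1_hits[F1_STATEMENTS + 1];

/* f1 instrumented by hand; assumes x > 0 and y > 0. */
int f1_traced(int x, int y)
{
    while (f1_hits[1]++, x != y) {     /* 1: loop test */
        f1_hits[2]++;                  /* 2: if (x > y) */
        if (x > y) {
            f1_hits[3]++;              /* 3 */
            x = x - y;
        } else {
            f1_hits[4]++;              /* 4 */
            y = y - x;
        }
        f1_hits[5]++;                  /* 5: end of loop body */
    }
    f1_hits[6]++;                      /* 6: return */
    return x;
}

/* Runs the test set (xs[i], ys[i]) and returns 1 if every numbered
 * statement executed at least once, 0 otherwise. */
int all_statements_covered(const int *xs, const int *ys, int n)
{
    memset(f1_hits, 0, sizeof f1_hits);
    for (int i = 0; i < n; i++)
        f1_traced(xs[i], ys[i]);
    for (int s = 1; s <= F1_STATEMENTS; s++)
        if (f1_hits[s] == 0)
            return 0;
    return 1;
}
```

Run on the test set {(3, 3), (4, 3), (3, 4)}, every counter for statements 1 to 6 is non-zero. By contrast, (3, 3) alone reaches only statements 1 and 6.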

Branch Coverage
- Test cases are designed such that the different branch conditions are given true and false values in turn.
Slide 36

Branch Coverage
- Branch testing guarantees statement coverage: it is a stronger form of testing than statement-coverage-based testing.
Slide 37

Example: the function f1 (Euclid's GCD computation), as on Slide 34.
Slide 38

Example
- Test cases for branch coverage can be {(x=3, y=3), (x=3, y=2), (x=4, y=3), (x=3, y=4)}.
Slide 39
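Branch coverage can be checked mechanically by logging the outcome of each decision. In this C sketch, the hypothetical helper `record` counts whether a condition was true or false and returns it unchanged, so f1's control flow is untouched. `f1_outcome`, `f1_branch_traced` and `all_branches_covered` are likewise hypothetical names, and inputs are assumed positive so that f1 terminates.

```c
#include <string.h>

/* f1_outcome[d][0] and f1_outcome[d][1] count the false and true outcomes
 * of decision d: d = 0 is while (x != y), d = 1 is if (x > y). */
int f1_outcome[2][2];

/* Logs one outcome of a decision and passes it through unchanged. */
static int record(int decision, int outcome)
{
    f1_outcome[decision][outcome != 0]++;
    return outcome;
}

/* f1 with both decisions routed through record(); assumes x, y > 0. */
int f1_branch_traced(int x, int y)
{
    while (record(0, x != y)) {
        if (record(1, x > y))
            x = x - y;
        else
            y = y - x;
    }
    return x;
}

/* Runs the test set and returns 1 if both decisions took both outcomes. */
int all_branches_covered(const int *xs, const int *ys, int n)
{
    memset(f1_outcome, 0, sizeof f1_outcome);
    for (int i = 0; i < n; i++)
        f1_branch_traced(xs[i], ys[i]);
    for (int d = 0; d < 2; d++)
        for (int v = 0; v < 2; v++)
            if (f1_outcome[d][v] == 0)
                return 0;
    return 1;
}
```

For {(3, 3), (3, 2), (4, 3), (3, 4)}, both f1's loop test and its if test come out true and false at least once, so `all_branches_covered` returns 1. The input (3, 3) alone never makes the loop test true.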

Path Coverage / Basis Path Testing
- Design test cases such that all linearly independent paths in the program are executed at least once.
Slide 40

Example: the function f1 (Euclid's GCD computation), as on Slide 34.
Slide 41

Example Control Flow Graph
[Figure: control flow graph of f1 with nodes 1 to 6; edges 1→2, 1→6, 2→3, 2→4, 3→5, 4→5, 5→1.]
Slide 42

McCabe's Cyclomatic Metric
- Given a control flow graph G, the cyclomatic complexity is V(G) = E - N + 2, where:
  - N is the number of nodes in G
  - E is the number of edges in G
Slide 43

Example Control Flow Graph
[Figure: the control flow graph of f1, as on Slide 42.]
Slide 44

Example
- Cyclomatic complexity = 7 - 6 + 2 = 3 (7 edges, 6 nodes).
Slide 45

Cyclomatic Complexity
- Another way of computing cyclomatic complexity:
  - inspect the control flow graph
  - determine the number of bounded areas in the graph
- V(G) = total number of bounded areas + 1
Slide 46

Example Control Flow Graph
[Figure: the control flow graph of f1, as on Slide 42.]
Slide 47

Example
- From a visual examination of the CFG, the number of bounded areas is 2, so the cyclomatic complexity = 2 + 1 = 3.
Slide 48

Cyclomatic Complexity
- Defines the number of independent paths in a program.
- Provides a lower bound for the number of test cases needed for path coverage.
Slide 49

Cyclomatic Complexity
- Knowing the number of test cases required does not make it any easier to derive the test cases; it only gives an indication of the minimum number of test cases required.
Slide 50

Path Testing
- The tester proposes an initial set of test data using their experience and judgement.
Slide 51

Path Testing
- A dynamic program analyzer is used to indicate which parts of the program have been tested.
- The output of the dynamic analysis is used to guide the tester in selecting additional test cases.
Slide 52

Derivation of Test Cases
- Let us discuss the steps to derive path-coverage-based test cases for a program.
Slide 53

Derivation of Test Cases
- Draw the control flow graph.
- Determine V(G).
- Determine the set of linearly independent paths.
- Prepare test cases to force execution along each path.
Slide 54

Example: the function f1 (Euclid's GCD computation), as on Slide 34.
Slide 55

Example Control Flow Diagram
[Figure: the control flow graph of f1, as on Slide 42.]
Slide 56

Derivation of Test Cases
- Number of independent paths: 3
  - 1, 6: test case (x=1, y=1)
  - 1, 2, 3, 5, 1, 6: test case (x=2, y=1)
  - 1, 2, 4, 5, 1, 6: test case (x=1, y=2)
Slide 57
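To confirm that each test case really follows its intended path, f1 can record the sequence of flow-graph nodes it visits. This is a hand-made version of the dynamic program analyzer mentioned on Slide 52. In this C sketch, `visit` and `f1_path` are hypothetical names, the trace format "1, 2, 3, ..." mirrors the path notation on this slide, and inputs are assumed positive.

```c
#include <stdio.h>
#include <string.h>

/* Appends ", node" (or just "node" for the first entry) to the trace;
 * snprintf truncates safely if the buffer fills up. */
static void visit(char *trace, size_t size, int node)
{
    size_t len = strlen(trace);
    snprintf(trace + len, size - len, len ? ", %d" : "%d", node);
}

/* f1 instrumented to record, in order, the flow-graph nodes it passes
 * through; assumes x, y > 0 and size >= 1. */
int f1_path(int x, int y, char *trace, size_t size)
{
    trace[0] = '\0';
    visit(trace, size, 1);                  /* loop test */
    while (x != y) {
        visit(trace, size, 2);              /* if (x > y) */
        if (x > y) {
            visit(trace, size, 3);
            x = x - y;
        } else {
            visit(trace, size, 4);
            y = y - x;
        }
        visit(trace, size, 5);              /* end of loop body */
        visit(trace, size, 1);              /* back to the loop test */
    }
    visit(trace, size, 6);                  /* return */
    return x;
}
```

The three test cases should produce these traces:
- "1, 6" for (1, 1)
- "1, 2, 3, 5, 1, 6" for (2, 1)
- "1, 2, 4, 5, 1, 6" for (1, 2)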

Loop Testing
- A white-box testing technique that focuses exclusively on the validity of loop constructs.
- Four different classes of loops exist:
  - Simple loops
  - Nested loops
  - Concatenated loops
  - Unstructured loops
Slide 58
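For simple loops, Pressman recommends a set of pass counts, where n is the maximum number of allowable passes (this guideline is not spelled out on this slide):
- skip the loop entirely;
- one pass;
- two passes;
- a typical m passes, with m < n;
- n - 1, n and n + 1 passes.

A minimal C sketch that lists those counts; the function name `simple_loop_tests` is hypothetical.

```c
/* Pass counts for testing a simple loop that allows at most n passes:
 * skip the loop (0), one pass, two passes, a typical m < n, then
 * n - 1, n and n + 1 passes. Assumes 2 < m < n - 1 so all seven counts
 * differ. Writes them to out and returns how many were written. */
int simple_loop_tests(int n, int m, int out[7])
{
    const int counts[7] = {0, 1, 2, m, n - 1, n, n + 1};

    for (int i = 0; i < 7; i++)
        out[i] = counts[i];
    return 7;
}
```

The average procedure of Slide 21 has a loop with n = 100. For it, `simple_loop_tests(100, 50, out)` yields 0, 1, 2, 50, 99, 100 and 101. The last case is an attempt to supply 101 values, which the loop should cut off after 100.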

Loop Testing
[Figure: examples of simple loops, nested loops, concatenated loops, and unstructured loops.]
Slide 59

Discussion on White-Box Testing
- Advantages:
  - Finds errors at the code level.
  - Typically based on a very systematic approach, covering the complete internal module structure.
- Constraints:
  - Does not find missing or additional functionality.
  - Does not really check the interface.
  - Difficult for large and complex modules.
Slide 60

Black-box Testing Slide 61

Specification-Based Testing
[Figure: the specification yields the expected output; the input is applied to the program, which produces the observed output; the observed output is then validated against the expected output.]
Slide 62
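The process in the diagram maps naturally onto a table-driven test: apply an input, observe the output, and compare it with the output the specification predicts. In this C sketch, the program under test is assumed to be the GCD function f1 from Slide 34, specified only as "returns the greatest common divisor of two positive integers". The `TestCase` type and `run_tests` are hypothetical names. Each expected value comes from that specification rather than from the code.

```c
#include <stdio.h>

/* A test case pairs an input with the output the specification predicts. */
typedef struct {
    int x, y;        /* input */
    int expected;    /* predicted output: gcd(x, y) */
} TestCase;

/* Program under test, treated as a black box (assumes x, y > 0). */
int f1(int x, int y)
{
    while (x != y) {
        if (x > y)
            x = x - y;
        else
            y = y - x;
    }
    return x;
}

/* Applies each input, validates the observed output against the expected
 * output, reports mismatches, and returns the number of failures. */
int run_tests(const TestCase *cases, int n)
{
    int failures = 0;

    for (int i = 0; i < n; i++) {
        int observed = f1(cases[i].x, cases[i].y);
        if (observed != cases[i].expected) {
            printf("FAIL: f1(%d, %d) = %d, expected %d\n",
                   cases[i].x, cases[i].y, observed, cases[i].expected);
            failures++;
        }
    }
    return failures;
}
```

The cases {12, 18} → 6, {7, 5} → 1 and {9, 9} → 9 should all pass, so `run_tests` returns 0. A case with a wrong expectation, such as {12, 18} → 4, is reported and counted as a failure.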

Black-Box / Functional / Behavioral / Specification-Based / Closed-Box Testing
- Test cases are derived from the program specification.
- Functional testing of a component of a system.
- Examines behaviour through inputs and the corresponding outputs.
- Used during the later stages of testing, after white-box testing has been performed.
- The tester identifies a set of input conditions that will fully exercise all functional requirements for a program.
Slide 63

Black-box testing Slide 64

Black Box Testing (Continued)
Attempts to find the following errors:
- Incorrect or missing functions
- Interface errors
- Errors in data structures or external database access
- Performance errors
- Initialisation and termination errors
Slide 65

Black Box Testing Techniques
- Equivalence Partitioning
- Boundary Value Analysis
- Comparison Testing
- Orthogonal Array Testing
- Graph-Based Testing Methods
Slide 66

Equivalence Partitioning
- A black-box technique that divides the input domain into classes of data with common characteristics from which test cases can be derived.
- Test cases should be chosen from each partition.
- Goal: reduce the number of test cases by equivalence partitioning.
Slide 67

Equivalence partitioning Slide 68

Equivalence Partitioning (cont.)
Once you have identified a set of partitions, you choose test cases from each of these partitions. A good rule of thumb for test case selection is to choose test cases on the boundaries of the partitions, plus cases close to the midpoint of the partition.
Slide 69

Equivalence Class Guidelines
1. If an input condition specifies a range, one valid and two invalid equivalence classes are defined.
2. If an input condition requires a specific value, one valid and two invalid equivalence classes are defined.
3. If an input condition specifies a member of a set, one valid and one invalid equivalence class are defined.
4. If an input condition is Boolean, one valid and one invalid equivalence class are defined.
Slide 70

Black-box Testing (continued)
• Selection of equivalence classes (no rules, only guidelines):
  • Input is valid across a range of values. Select test cases from 3 equivalence classes:
    • Below the range
    • Within the range
    • Above the range
  • Input is valid if it is from a discrete set. Select test cases from 2 equivalence classes:
    • Valid set member
    • Invalid set member
  • Input is valid if it is the Boolean value true or false. Select:
    • a true value
    • a false value
  • Input is valid if it is an exact value of a particular data type. Select:
    • the value
    • any other value of that type
Slide 71
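These selections can be turned into a table-driven test. The unit under test, `accept_order`, is hypothetical, as are its valid month range 1..12 and its currency set. Each case changes one input to a representative of one equivalence class and keeps the other inputs valid, so a failing case points at a single class.

```python
# Hypothetical unit under test (assumption): a month number 1..12,
# a currency from a fixed set, and a "confirmed" flag that must be True.
VALID_CURRENCIES = {"EUR", "USD", "GBP"}

def accept_order(month: int, currency: str, confirmed: bool) -> bool:
    return 1 <= month <= 12 and currency in VALID_CURRENCIES and confirmed is True

# Known-valid baseline; each case overrides a single input.
BASE = {"month": 6, "currency": "EUR", "confirmed": True}
cases = [
    ({"month": 0}, False),          # range: below
    ({"month": 6}, True),           # range: within
    ({"month": 13}, False),         # range: above
    ({"currency": "USD"}, True),    # set: valid member
    ({"currency": "JPY"}, False),   # set: invalid member
    ({"confirmed": True}, True),    # Boolean: true value
    ({"confirmed": False}, False),  # Boolean: false value
]

failures = []
for override, expected in cases:
    args = {**BASE, **override}
    if accept_order(**args) != expected:
        failures.append((args, expected))

print(f"{len(cases) - len(failures)}/{len(cases)} cases passed")
```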

Boundary Value Analysis
• Focuses on the boundaries of the input domain rather than its center
• Complements equivalence partitioning
• Test both sides of each boundary
• Test min, min-1, max, max+1, and typical values
Slide 72
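Below is a sketch of boundary value selection for an integer range. It is paired with a hypothetical unit that has a seeded off-by-one fault (`is_valid_percentage`, assumed valid range 0..100). The sketch also includes min+1 and max-1, so that both sides of each boundary are tested.

```python
def boundary_values(lo: int, hi: int) -> list[int]:
    """min-1, min, min+1, a typical value, max-1, max, max+1 for the integer range lo..hi."""
    typical = (lo + hi) // 2
    return sorted({lo - 1, lo, lo + 1, typical, hi - 1, hi, hi + 1})

def is_valid_percentage(x: int) -> bool:
    # Hypothetical unit with a seeded off-by-one fault;
    # the (assumed) specification is 0 <= x <= 100.
    return 0 <= x < 100

LO, HI = 0, 100
# Oracle taken from the specification, not from the implementation.
expected = {v: LO <= v <= HI for v in boundary_values(LO, HI)}
failing = [v for v, ok in expected.items() if is_valid_percentage(v) != ok]
print(failing)  # [100]
```

The typical value 50 passes either way. Only the value at the upper boundary exposes the fault.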

Guidelines for Boundary Value Analysis
1. If an input condition specifies a range bounded by values a and b, test cases should be designed with values a and b as well as values just above and just below a and b
2. If an input condition specifies a number of values, test cases should be developed that exercise the minimum and maximum numbers. Values just above and just below the minimum and maximum are also tested
3. Apply guidelines 1 and 2 to output conditions: produce output that reflects the minimum and the maximum values expected, and also test the values just below and just above
4. If internal program data structures have prescribed boundaries (e.g., an array), design a test case to exercise the data structure at its minimum and maximum boundaries
Slide 73
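Guidelines 2 and 4 can be illustrated with a data structure of bounded size. The `BoundedStack` class and its capacity are hypothetical. The check exercises the structure when empty (minimum) and when exactly full (maximum), and it also goes one step beyond each of these boundaries.

```python
class BoundedStack:
    """Hypothetical fixed-capacity stack used as the unit under test."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = []

    def push(self, item):
        if len(self._items) >= self.capacity:
            raise OverflowError("stack full")
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

def check_boundaries(capacity: int) -> list[str]:
    results = []
    s = BoundedStack(capacity)
    # Minimum boundary: the empty structure, then one step below it.
    try:
        s.pop()
        results.append("pop on empty: no error (unexpected)")
    except IndexError:
        results.append("pop on empty: IndexError")
    # Maximum boundary: fill exactly to capacity, then go one past it.
    for i in range(capacity):
        s.push(i)
    results.append(f"filled to {capacity}: ok")
    try:
        s.push(capacity)
        results.append("push past capacity: no error (unexpected)")
    except OverflowError:
        results.append("push past capacity: OverflowError")
    return results

for line in check_boundaries(3):
    print(line)
```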

Comparison Testing
• Black-box testing for safety-critical systems in which independently developed implementations of redundant systems are tested for conformance to specifications
• When redundant software is developed, separate software engineering teams develop independent versions of an application using the same specification. In such situations, each version can be tested with the same test data
• All versions are executed in parallel with real-time comparison of results to ensure consistency
Slide 74
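The following is a sequential sketch of comparison testing. Two independently written integer square root functions (hypothetical "team A" and "team B" versions) run on the same test data. Python's `math.isqrt` (Python 3.8+) serves as a third version. A real redundant system would run the versions in parallel and compare results in real time; this sketch shows only the comparison logic.

```python
import math

def isqrt_team_a(n: int) -> int:
    """Version A: binary search for the largest r with r*r <= n."""
    lo, hi = 0, n + 1  # invariant: lo*lo <= n < hi*hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if mid * mid <= n:
            lo = mid
        else:
            hi = mid
    return lo

def isqrt_team_b(n: int) -> int:
    """Version B: integer Newton iteration."""
    if n < 2:
        return n
    x = n
    y = (x + n // x) // 2
    while y < x:
        x = y
        y = (x + n // x) // 2
    return x

def compare_versions(versions: dict, inputs) -> list:
    """Run every version on the same inputs; return the inputs where outputs disagree."""
    disagreements = []
    for n in inputs:
        outputs = {name: f(n) for name, f in versions.items()}
        if len(set(outputs.values())) > 1:
            disagreements.append((n, outputs))
    return disagreements

versions = {"A": isqrt_team_a, "B": isqrt_team_b, "reference": math.isqrt}
print(compare_versions(versions, range(0, 10_000)))  # [] when all versions agree
```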

Orthogonal Array Testing
• Black-box technique that enables the design of a reasonably small set of test cases that provide maximum test coverage
• Focus is on categories of faulty logic likely to be present in the software component (without examining the code)
• Priorities for assessing tests using an orthogonal array:
  1. Detect and isolate all single-mode faults
  2. Detect all double-mode faults
  3. Multimode faults
Slide 75
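As an illustration, the standard L9 orthogonal array covers four parameters with three levels each in 9 test cases, instead of the 3^4 = 81 exhaustive combinations. Every pair of parameters still sees all 9 level combinations, which is what makes double-mode faults detectable. The parameter values `a1` through `d3` are placeholders, and `covers_all_pairs` is an illustrative helper.

```python
from itertools import combinations, product

# Standard L9 orthogonal array: 4 factors, 3 levels each (levels 1..3).
L9 = [
    (1, 1, 1, 1),
    (1, 2, 2, 2),
    (1, 3, 3, 3),
    (2, 1, 2, 3),
    (2, 2, 3, 1),
    (2, 3, 1, 2),
    (3, 1, 3, 2),
    (3, 2, 1, 3),
    (3, 3, 2, 1),
]

def covers_all_pairs(array, levels=(1, 2, 3)) -> bool:
    """True if every pair of columns contains every combination of levels."""
    n_cols = len(array[0])
    for i, j in combinations(range(n_cols), 2):
        seen = {(row[i], row[j]) for row in array}
        if seen != set(product(levels, repeat=2)):
            return False
    return True

# Placeholder values for four hypothetical parameters P1..P4.
values = [["a1", "a2", "a3"], ["b1", "b2", "b3"], ["c1", "c2", "c3"], ["d1", "d2", "d3"]]
test_cases = [tuple(values[col][level - 1] for col, level in enumerate(row)) for row in L9]

print(len(test_cases), "test cases instead of", 3 ** 4)  # 9 test cases instead of 81
print(covers_all_pairs(L9))                              # True
```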

Test documentation (IEEE 829)
• Test plan
• Test design specification
• Test case specification
• Test procedure specification
• Test item transmittal report
• Test log
• Test incident report
• Test summary report
Slide 76

Comparison of White-box & Black-box Testing
• White-box testing:
  • Potentially infinite number of paths have to be tested
  • White-box testing often tests what is done, instead of what should be done
  • Cannot detect missing use cases
• Black-box testing:
  • Potential combinatorial explosion of test cases (valid & invalid data)
  • Often not clear whether the selected test cases uncover a particular error
  • Does not discover extraneous use cases ("features")
Slide 77

The 4 Testing Steps
1. Select what has to be measured
  • Analysis: completeness of requirements
  • Design: test for cohesion
  • Implementation: code tests
2. Decide how the testing is done
  • Code inspection
  • Proofs (Design by Contract)
  • Black-box, white-box
  • Select an integration testing strategy (big bang, bottom-up, top-down, sandwich)
3. Develop test cases
  • A test case is a set of test data or situations that will be used to exercise the unit (code, module, system) being tested or to measure the attribute in question
4. Create the test oracle
  • An oracle contains the predicted results for a set of test cases
  • The test oracle has to be written down before the actual testing takes place
Slide 78
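Here is a minimal sketch of step 4, using a hypothetical unit `is_leap_year`. The oracle is a table of predicted results derived from the specification (the Gregorian leap-year rule) and written down before the unit is run. `run_against_oracle` is an illustrative helper, not a standard API.

```python
# Step 4: the oracle is written first, from the specification
# (Gregorian rule: divisible by 4, except centuries not divisible by 400).
ORACLE = {
    2023: False,
    2024: True,
    1900: False,
    2000: True,
}

# Hypothetical unit under test.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

def run_against_oracle(unit, oracle: dict) -> list:
    """Return (input, expected, actual) for every case where the unit disagrees with the oracle."""
    return [(x, exp, unit(x)) for x, exp in oracle.items() if unit(x) != exp]

print(run_against_oracle(is_leap_year, ORACLE))  # []
```

A naive `year % 4 == 0` implementation would be reported as `[(1900, False, True)]`, because the oracle was fixed in advance rather than derived from the code.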

Guidance for Test Case Selection
• Use analysis knowledge about functional requirements (black-box testing):
  • Use cases
  • Expected input data
  • Invalid input data
• Use design knowledge about system structure, algorithms, data structures (white-box testing):
  • Control structures: test branches, loops, ...
  • Data structures: test record fields, arrays, ...
• Use implementation knowledge about algorithms, for example:
  • Force division by zero
  • Use a sequence of test cases for an interrupt handler
Slide 79
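The sketch below applies design- and implementation-driven test selection to a hypothetical `average` function. The loop is exercised with zero, one, and many iterations. The zero-iteration case also forces the division by zero.

```python
def average(values: list[float]) -> float:
    """Hypothetical unit under test: arithmetic mean of a list."""
    total = 0.0
    for v in values:             # loop under test: 0, 1 and many iterations
        total += v
    return total / len(values)   # divides by zero when the list is empty

def run_selected_cases() -> dict:
    results = {}
    # Zero iterations: this case also forces the division by zero.
    try:
        average([])
        results["empty"] = "no error"
    except ZeroDivisionError:
        results["empty"] = "ZeroDivisionError"
    results["one"] = average([4.0])                  # exactly one iteration
    results["many"] = average([1.0, 2.0, 3.0, 6.0])  # several iterations
    return results

print(run_selected_cases())
```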

Terminology
• Reliability: the measure of success with which the observed behavior of a system conforms to some specification of its behavior. IEEE: "The ability of a system or component to perform its required functions under stated conditions for a specified period of time. It is often expressed as a probability."
• Failure: any deviation of the observed behavior from the specified behavior
• Error: the system is in a state such that further processing by the system will lead to a failure
  • Stress or overload errors, capacity or boundary errors, timing errors, throughput or performance errors
• Fault (bug): the mechanical or algorithmic cause of an error
• Quality: IEEE: "The degree to which a system, component, or process meets customer or user needs or expectations."
Slide 80
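A small, deliberately faulty function shows how fault, error, and failure differ. The function is hypothetical, and its specification is assumed to be "return the largest element of a non-empty list". The fault is the wrong initial value. It only leads to an erroneous state, and then an observable failure, for inputs where every element is negative.

```python
# Assumed specification: max_of(xs) returns the largest element of a non-empty list.
def max_of(xs: list[int]) -> int:
    best = 0  # FAULT: should start from xs[0]
    for x in xs:
        if x > best:
            best = x
    return best

def observe(xs: list[int]) -> str:
    expected = max(xs)  # behavior required by the specification
    actual = max_of(xs)
    return "ok" if actual == expected else f"FAILURE: expected {expected}, got {actual}"

# The faulty line runs, but processing does not lead to a failure:
print(observe([3, 7, 5]))     # ok
# best == 0 is an erroneous state here, and it leads to a failure:
print(observe([-4, -2, -9]))  # FAILURE: expected -2, got 0
```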

Examples of Faults and Errors
• Faults in the interface specification
  • Mismatch between what the client needs and what the server offers
  • Mismatch between requirements and implementation
• Algorithmic faults
  • Missing initialization
  • Branching errors (too soon, too late)
  • Missing test for nil
• Mechanical faults (very hard to find)
  • Documentation does not match actual conditions or operating procedures
• Errors
  • Stress or overload errors
  • Capacity or boundary errors
  • Timing errors
  • Throughput or performance errors
Slide 81

Dealing with Errors
• Verification:
  • Assumes a hypothetical environment that does not match the real environment
  • Proof might be buggy (omits important constraints; simply wrong)
• Modular redundancy:
  • Expensive
• Declaring a bug to be a “feature”:
  • Bad practice
• Patching:
  A patch (sometimes called a "fix") is a quick-repair job for a piece of programming. During a software product's beta test distribution or try-out period, and later after the product is formally released, problems (called bugs) will almost invariably be found. A patch is the immediate solution that is provided to users; it can sometimes be downloaded from the software maker's Web site. The patch is not necessarily the best solution for the problem, and the product developers often find a better solution to provide when they package the product for its next release. A patch is usually developed and distributed as a replacement for or an insertion in compiled code (that is, in a binary file or object module). In larger operating systems, a special program is provided to manage and keep track of the installation of patches.
  • Slows down performance
• Testing (this lecture):
  • Testing is never good enough
Slide 82

Another View on How to Deal with Errors
• Error prevention (before the system is released):
  • Use good programming methodology to reduce complexity
  • Use version control to prevent an inconsistent system
  • Apply verification to prevent algorithmic bugs
• Error detection (while the system is running):
  • Testing: create failures in a planned way
  • Debugging: start with an unplanned failure
  • Monitoring: deliver information about state; find performance bugs
• Error recovery (recover from failure once the system is released):
  • Database systems (atomic transactions)
  • Modular redundancy
  • Recovery blocks
Slide 83
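Here is a minimal sketch of a recovery block. It uses two hypothetical routines: `fast_sort`, a primary with a seeded fault that drops duplicates, and `simple_sort`, an alternate. Each alternate runs on a fresh copy of the input (the checkpoint). The first result that passes the acceptance test is returned.

```python
from collections import Counter
from typing import Callable, Sequence

def recovery_block(alternates: Sequence[Callable], acceptance_test: Callable, inputs: list):
    """Try each alternate in order; return the first result that passes the acceptance test."""
    for alternate in alternates:
        checkpoint = list(inputs)  # fresh copy, so a failed alternate cannot corrupt the input
        try:
            result = alternate(checkpoint)
        except Exception:
            continue  # an exception counts as a failed attempt
        if acceptance_test(inputs, result):
            return result
    raise RuntimeError("all alternates failed the acceptance test")

def fast_sort(xs):
    # Hypothetical primary routine with a seeded fault: it drops duplicates.
    return sorted(set(xs))

def simple_sort(xs):
    # Hypothetical alternate: simpler, assumed to be trustworthy.
    return sorted(xs)

def is_sorted_permutation(original, result) -> bool:
    # Acceptance test: the result is ordered and has exactly the same elements.
    ordered = all(a <= b for a, b in zip(result, result[1:]))
    return ordered and Counter(original) == Counter(result)

print(recovery_block([fast_sort, simple_sort], is_sorted_permutation, [3, 1, 3, 2]))  # [1, 2, 3, 3]
```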

Fault Handling Techniques
Fault Handling
  • Fault Avoidance
    • Design Methodology
    • Verification
    • Configuration Management
  • Fault Detection
    • Reviews
    • Testing
      • Unit Testing
      • Integration Testing
      • System Testing
    • Debugging
      • Correctness Debugging
      • Performance Debugging
  • Fault Tolerance
    • Atomic Transactions
    • Modular Redundancy
Slide 84

Quality Assurance encompasses Testing
Quality Assurance
  • Usability Testing
    • Scenario Testing
    • Prototype Testing
    • Product Testing
  • Fault Avoidance
    • Verification
    • Configuration Management
  • Fault Tolerance
    • Atomic Transactions
    • Modular Redundancy
  • Fault Detection
    • Reviews
      • Walkthrough
      • Inspection
    • Testing
      • Unit Testing
      • Integration Testing
      • System Testing
    • Debugging
      • Correctness Debugging
      • Performance Debugging
Slide 85
