2641626adc1e397b991c6064be770252.ppt

- Number of slides: 74

Chapter 7

Scaling up from one client at a time: All server code in the book, up to now, has dealt with one client at a time, except for our last chatroom homework. Options for scaling up:
- event driven: see the chatroom example. Its problem is that it is restricted to a single CPU or core.
- multiple threads
- multiple processes (in Python, this really exercises all CPUs or cores, because CPython's global interpreter lock lets only one thread at a time execute Python bytecode)
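As a sketch of the "multiple threads" option, here is the Lancelot question/answer service written with Python 3's standard `socketserver` module, which runs each connection's handler in its own thread. This is an illustration rather than the book's code; the names `LancelotHandler`, `start_server` and `ask` are hypothetical, and it assumes the same three questions and answers as lancelot.py (as bytes, since Python 3 sockets carry bytes).

```python
import socket
import socketserver
import threading

# Lancelot question/answer pairs, as bytes for Python 3 sockets.
QADICT = {
    b'What is your name?': b'My name is Sir Lancelot of Camelot.',
    b'What is your quest?': b'To seek the Holy Grail.',
    b'What is your favorite color?': b'Blue.',
}


class LancelotHandler(socketserver.BaseRequestHandler):
    """Serves one connection; ThreadingTCPServer runs each one in its own thread."""

    def handle(self):
        buffer = b''
        while True:
            data = self.request.recv(4096)
            if not data:                      # client closed the connection
                return
            buffer += data
            # Answer every complete question (ending in '?') received so far.
            while b'?' in buffer:
                question, _, buffer = buffer.partition(b'?')
                self.request.sendall(QADICT[question + b'?'])


def start_server(host='127.0.0.1', port=0):
    """Run a threaded server in the background; port 0 lets the OS pick a free port."""
    server = socketserver.ThreadingTCPServer((host, port), LancelotHandler)
    server.daemon_threads = True              # client threads don't keep the process alive
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def ask(address, questions):
    """Hypothetical test client: send each question, wait for its '.'-terminated answer."""
    answers = []
    with socket.create_connection(address, timeout=5) as sock:
        for question in questions:
            sock.sendall(question)
            reply = b''
            while not reply.endswith(b'.'):
                data = sock.recv(4096)
                if not data:
                    raise EOFError('server closed the connection early')
                reply += data
            answers.append(reply)
    return answers
```

The point of the design: while one client sits idle on its connection, its thread blocks in `recv()`, and other clients are still served by their own threads.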

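Load Balancing I: Balancing that happens before your server code is involved, via DNS round-robin. The name server returns all the addresses listed for a name, rotating their order from one query to the next, so successive clients tend to connect to different machines:

```
; zone file fragment
ftp  IN  A  192.168.0.4
ftp  IN  A  192.168.0.5
ftp  IN  A  192.168.0.6
www  IN  A  192.168.0.7
www  IN  A  192.168.0.8
; or use this format which gives exactly the same result
ftp  IN  A  192.168.0.4
     IN  A  192.168.0.5
     IN  A  192.168.0.6
www  IN  A  192.168.0.7
     IN  A  192.168.0.8
```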
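To see why the two zone-file layouts are equivalent, here is a small Python 3 sketch. `parse_a_records` is a hypothetical mini-parser, not a real DNS library: it understands only `owner IN A address` lines and ignores TTLs, `$ORIGIN` and other record types. A line that starts with whitespace reuses the previous owner name, which is the rule the second layout relies on. `rotated` mimics one common round-robin policy (cyclic rotation), as an assumption about how the name server orders its answers.

```python
# Both layouts, built line by line so the leading blanks are exact.
ZONE_REPEATED = '\n'.join([
    '; zone file fragment',
    'ftp  IN  A  192.168.0.4',
    'ftp  IN  A  192.168.0.5',
    'ftp  IN  A  192.168.0.6',
    'www  IN  A  192.168.0.7',
    'www  IN  A  192.168.0.8',
])
ZONE_CONTINUED = '\n'.join([
    '; or use this format which gives exactly the same result',
    'ftp  IN  A  192.168.0.4',
    '     IN  A  192.168.0.5',
    '     IN  A  192.168.0.6',
    'www  IN  A  192.168.0.7',
    '     IN  A  192.168.0.8',
])


def parse_a_records(zone_text):
    """Map each owner name to its list of A-record addresses (simplified)."""
    records = {}
    owner = None
    for raw in zone_text.splitlines():
        line = raw.split(';', 1)[0]           # drop comments
        if not line.strip():
            continue
        fields = line.split()
        if not line[0].isspace():             # owner name given explicitly
            owner, fields = fields[0], fields[1:]
        rclass, rtype, address = fields       # e.g. 'IN', 'A', '192.168.0.4'
        if rclass == 'IN' and rtype == 'A':
            records.setdefault(owner, []).append(address)
    return records


def rotated(addresses, query_number):
    """Cyclic round-robin: each successive query sees the list rotated by one."""
    k = query_number % len(addresses)
    return addresses[k:] + addresses[:k]
```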

Load Balancing II: Have your own machine front an array of machines that all run the same service, and forward service requests to them in round-robin fashion.
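The front machine's choice of back end can be sketched in Python 3 as a thread-safe rotation over a list of `(host, port)` pairs. The class name and the back-end addresses are hypothetical, and the part that actually relays bytes to the chosen machine is not shown.

```python
import itertools
import threading


class RoundRobinBalancer:
    """Hands out back-end (host, port) pairs in strict rotation.

    The lock makes next_backend() safe to call from several connection-handling threads."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError('need at least one backend')
        self._cycle = itertools.cycle(backends)
        self._lock = threading.Lock()

    def next_backend(self):
        with self._lock:
            return next(self._cycle)


# Hypothetical back-end machines, each running the same service on port 1060.
balancer = RoundRobinBalancer([('192.168.0.4', 1060),
                               ('192.168.0.5', 1060),
                               ('192.168.0.6', 1060)])
```

In this sketch the front end would call `next_backend()` once per accepted connection, so balancing is per connection rather than per request.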

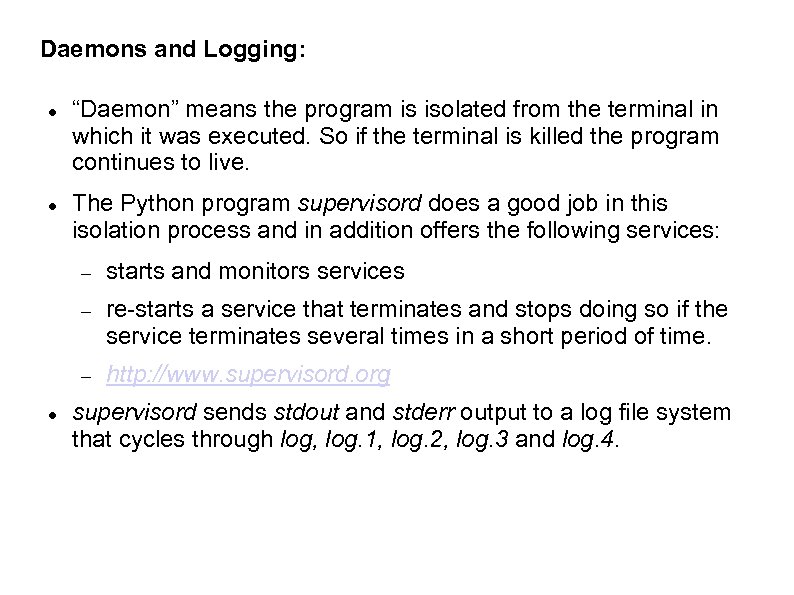

Daemons and Logging: “Daemon” means the program is isolated from the terminal in which it was started, so if the terminal is killed the program continues to live. The Python program supervisord does a good job of this isolation and in addition offers the following services:
- it restarts a service that terminates, but stops doing so if the service terminates several times in a short period of time;
- it starts and monitors services (http://www.supervisord.org);
- it sends stdout and stderr output to a log file system that cycles through log, log.1, log.2, log.3 and log.4.
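supervisord does its own log rotation. Purely to illustrate the same naming scheme, here is a Python 3 sketch using the standard library's `logging.handlers.RotatingFileHandler` instead (a swapped-in technique, not supervisord's implementation). The 200-byte limit, the logger name and the file name `log` are arbitrary demo choices.

```python
import logging
import logging.handlers
import os


def demo_rotation(directory, records=100):
    """Write enough records to force several rollovers; return the log files left behind."""
    path = os.path.join(directory, 'log')
    # Roll over near 200 bytes and keep at most 4 old files: log.1 ... log.4.
    handler = logging.handlers.RotatingFileHandler(path, maxBytes=200, backupCount=4)
    logger = logging.getLogger('rotation-demo')
    logger.setLevel(logging.INFO)
    logger.propagate = False                  # keep the demo out of the root logger
    logger.addHandler(handler)
    try:
        for i in range(records):
            logger.info('record number %03d', i)
    finally:
        logger.removeHandler(handler)
        handler.close()
    return sorted(os.listdir(directory))
```

On each rollover `log` becomes `log.1`, `log.1` becomes `log.2`, and so on; the oldest file beyond `log.4` is discarded.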

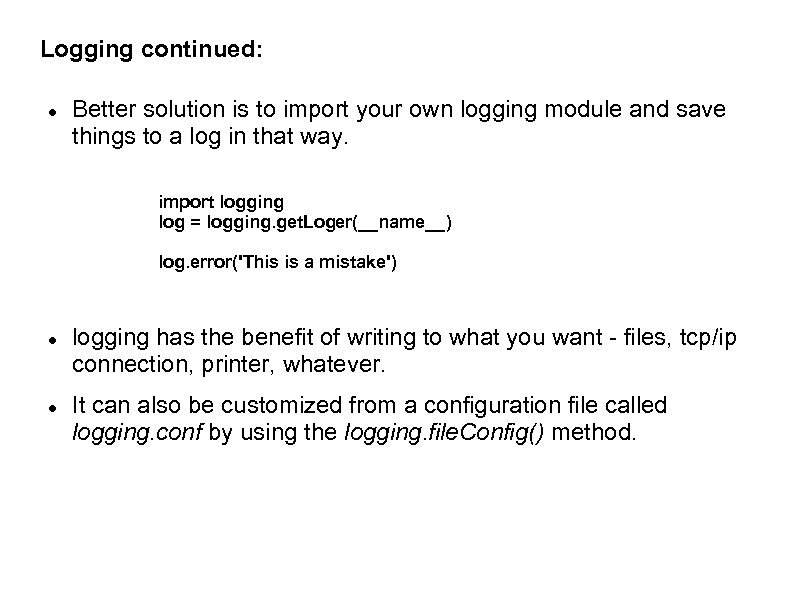

Logging continued: A better solution is to import Python's logging module and save things to a log that way:

```python
import logging

log = logging.getLogger(__name__)
log.error('This is a mistake')
```

logging has the benefit of writing to whatever you want: files, a TCP/IP connection, a printer, whatever. It can also be customized from a configuration file, conventionally called logging.conf, by using the logging.config.fileConfig() function (after `import logging.config`).
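Here is a minimal Python 3 sketch of configuring logging from a file with `logging.config.fileConfig()`. The configuration text, the `configure()` helper and the log file location are hypothetical; the config sends everything at INFO or above to a file through a single `FileHandler`.

```python
import logging
import logging.config
import os

# Contents of a hypothetical logging.conf; {logfile!r} is filled in by configure().
LOGGING_CONF = """\
[loggers]
keys=root

[handlers]
keys=file

[formatters]
keys=plain

[logger_root]
level=INFO
handlers=file

[handler_file]
class=FileHandler
level=INFO
formatter=plain
args=({logfile!r}, 'a')

[formatter_plain]
format=%(levelname)s %(name)s: %(message)s
"""


def configure(conf_dir, logfile):
    """Write logging.conf into conf_dir and load it with logging.config.fileConfig()."""
    conf_path = os.path.join(conf_dir, 'logging.conf')
    with open(conf_path, 'w') as f:
        f.write(LOGGING_CONF.format(logfile=logfile))
    # Keep loggers created before this call (e.g. module-level ones) enabled.
    logging.config.fileConfig(conf_path, disable_existing_loggers=False)
```

After `configure(...)`, `logging.getLogger(__name__).error('This is a mistake')` ends up in the file. The code that logs does not change when the destination does.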

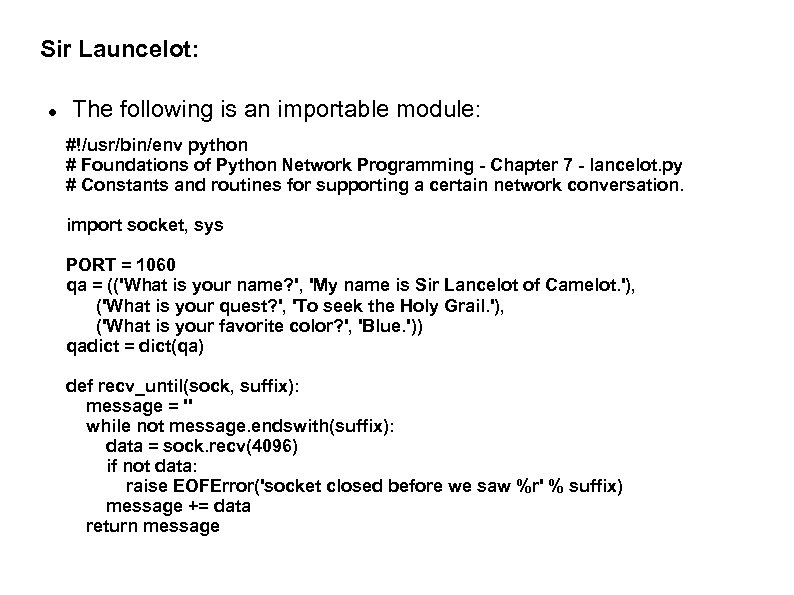

Sir Launcelot: The following is an importable module:

```python
#!/usr/bin/env python
# Foundations of Python Network Programming - Chapter 7 - lancelot.py
# Constants and routines for supporting a certain network conversation.

import socket, sys

PORT = 1060
qa = (('What is your name?', 'My name is Sir Lancelot of Camelot.'),
      ('What is your quest?', 'To seek the Holy Grail.'),
      ('What is your favorite color?', 'Blue.'))
qadict = dict(qa)

def recv_until(sock, suffix):
    # Keep reading until the accumulated message ends with suffix.
    message = ''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:
            raise EOFError('socket closed before we saw %r' % suffix)
        message += data
    return message
```
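The module above is Python 2, where sockets carry `str`. Here is a Python 3 sketch of the same constants and `recv_until()`, assuming bytes throughout (the upper-case names are chosen for this sketch):

```python
import socket

PORT = 1060
QA = (
    (b'What is your name?', b'My name is Sir Lancelot of Camelot.'),
    (b'What is your quest?', b'To seek the Holy Grail.'),
    (b'What is your favorite color?', b'Blue.'),
)
QADICT = dict(QA)


def recv_until(sock, suffix):
    """Keep calling recv() until the accumulated bytes end with suffix."""
    message = b''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:                          # peer closed before the suffix arrived
            raise EOFError('socket closed before we saw %r' % suffix)
        message += data
    return message
```

Like the original, it assumes the peer sends nothing more until it gets a reply. Extra bytes arriving in the same `recv()` would simply become part of the returned message.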

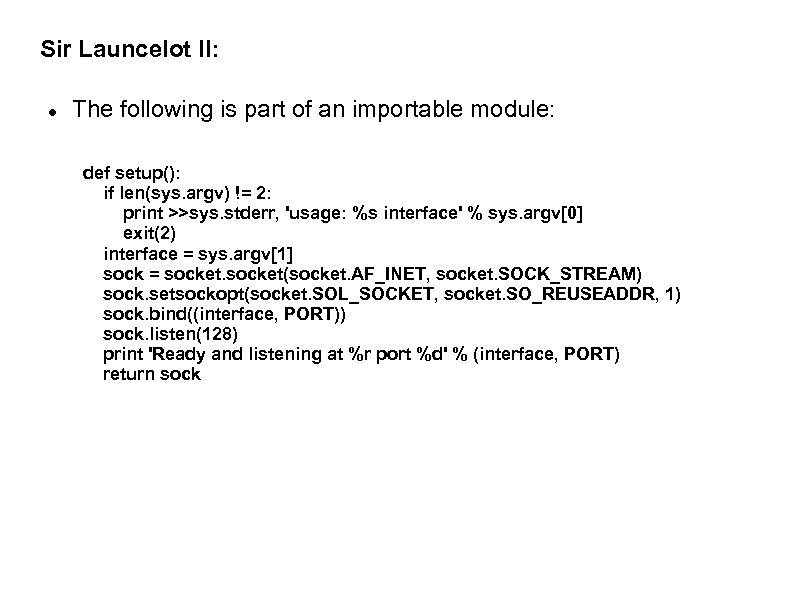

Sir Launcelot II: The following is part of an importable module:

```python
def setup():
    # Expect exactly one argument: the interface (IP address) to listen on.
    if len(sys.argv) != 2:
        print >>sys.stderr, 'usage: %s interface' % sys.argv[0]
        sys.exit(2)
    interface = sys.argv[1]
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((interface, PORT))
    sock.listen(128)  # backlog: connections that may wait for accept()
    print 'Ready and listening at %r port %d' % (interface, PORT)
    return sock
```

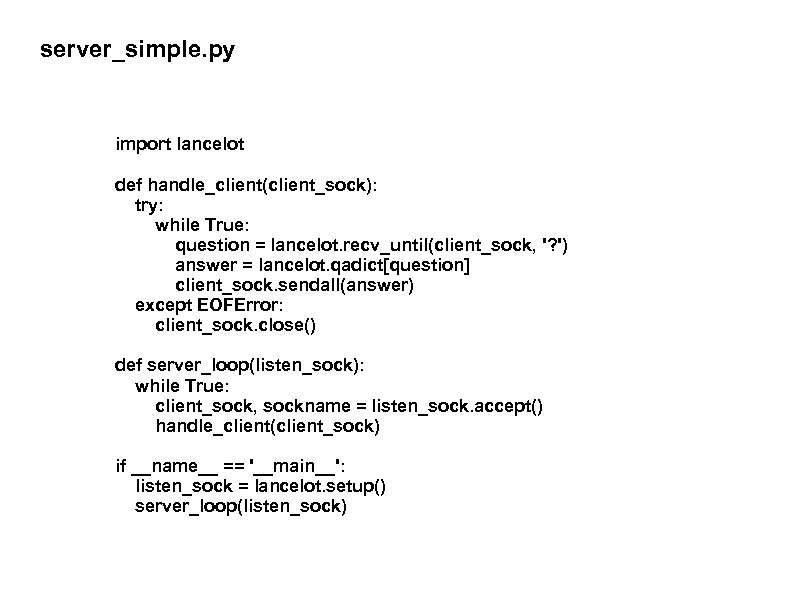

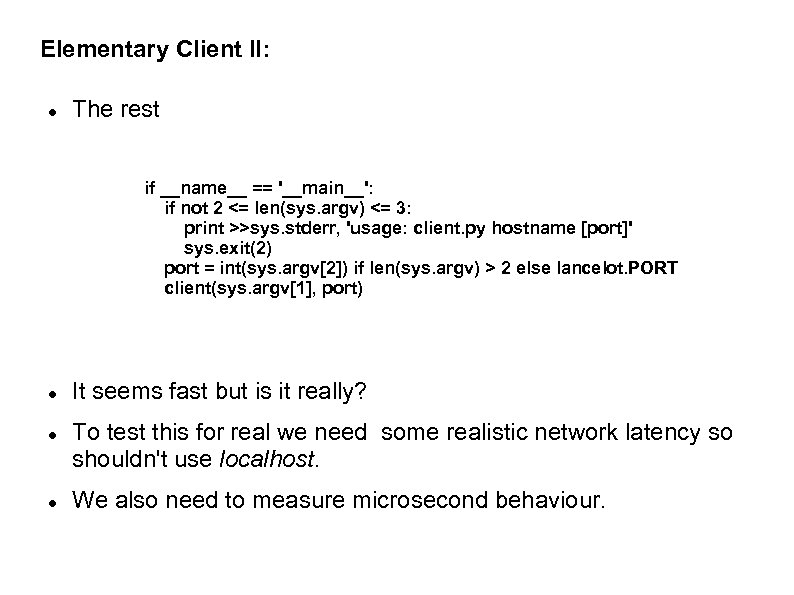

server_simple.py:

```python
import lancelot

def handle_client(client_sock):
    # Answer questions on one connection until the client closes it.
    try:
        while True:
            question = lancelot.recv_until(client_sock, '?')
            answer = lancelot.qadict[question]
            client_sock.sendall(answer)
    except EOFError:
        client_sock.close()

def server_loop(listen_sock):
    # The next client is not accepted until handle_client() returns.
    while True:
        client_sock, sockname = listen_sock.accept()
        handle_client(client_sock)

if __name__ == '__main__':
    listen_sock = lancelot.setup()
    server_loop(listen_sock)
```
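A Python 3 sketch of the same iterative server, self-contained and assuming bytes on the wire. The test drives `handle_client()` directly through a `socket.socketpair()`, so no listening socket is needed.

```python
import socket

QADICT = {
    b'What is your name?': b'My name is Sir Lancelot of Camelot.',
    b'What is your quest?': b'To seek the Holy Grail.',
    b'What is your favorite color?': b'Blue.',
}


def recv_until(sock, suffix):
    """Keep calling recv() until the accumulated bytes end with suffix."""
    message = b''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:
            raise EOFError('socket closed before we saw %r' % suffix)
        message += data
    return message


def handle_client(client_sock):
    """Answer one client's questions until it closes the connection."""
    try:
        while True:
            question = recv_until(client_sock, b'?')
            client_sock.sendall(QADICT[question])
    except EOFError:
        client_sock.close()


def server_loop(listen_sock):
    """Iterative server: the next client waits until handle_client() returns."""
    while True:
        client_sock, sockname = listen_sock.accept()
        handle_client(client_sock)
```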

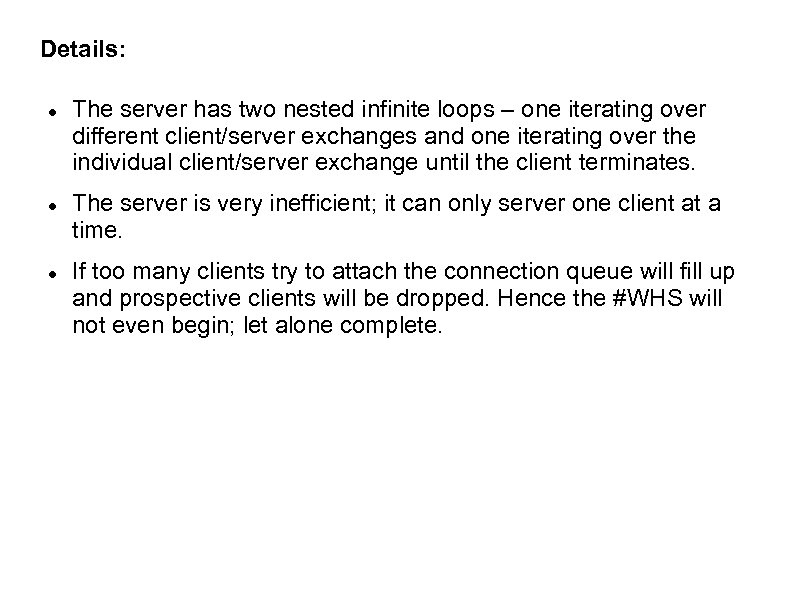

Details: The server has two nested infinite loops: the outer one iterates over successive clients (one accept() per connection), and the inner one iterates over the questions of a single client until that client disconnects. The server is very inefficient; it can only serve one client at a time. If too many clients try to connect, the connection queue (sized by the backlog passed to listen()) fills up and prospective clients are dropped. Their three-way handshake (3WHS) will then not even begin, let alone complete.
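The connection queue can be seen in a small Python 3 experiment: the kernel completes a client's handshake and even buffers its first data while the server has not yet called `accept()`. What happens once the queue is full varies by operating system, so this sketch only shows the queued case; the function name is hypothetical.

```python
import socket


def handshake_before_accept():
    """Show that connect() succeeds before the server calls accept()."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(('127.0.0.1', 0))
    listener.listen(1)                        # deliberately tiny backlog
    client = socket.create_connection(listener.getsockname(), timeout=5)
    # connect() has already returned: the handshake finished while nobody was
    # calling accept(), and the connection now waits in the listen queue.
    client.sendall(b'What is your name?')     # buffered by the server's kernel
    conn, _ = listener.accept()               # only now does the server pick it up
    data = conn.recv(4096)
    for s in (conn, client, listener):
        s.close()
    return data
```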

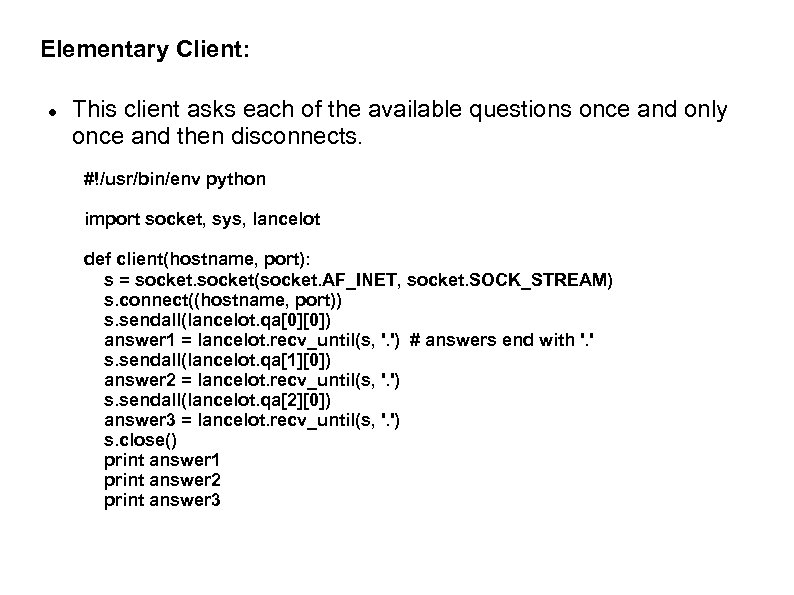

Elementary Client: This client asks each of the available questions once and only once, and then disconnects.

```python
#!/usr/bin/env python
import socket, sys, lancelot

def client(hostname, port):
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.connect((hostname, port))
    s.sendall(lancelot.qa[0][0])
    answer1 = lancelot.recv_until(s, '.')  # answers end with '.'
    s.sendall(lancelot.qa[1][0])
    answer2 = lancelot.recv_until(s, '.')
    s.sendall(lancelot.qa[2][0])
    answer3 = lancelot.recv_until(s, '.')
    s.close()
    print answer1
    print answer2
    print answer3
```

Elementary Client II: The rest:

```python
if __name__ == '__main__':
    if not 2 <= len(sys.argv) <= 3:
        print >>sys.stderr, 'usage: client.py hostname [port]'
        sys.exit(2)
    port = int(sys.argv[2]) if len(sys.argv) > 2 else lancelot.PORT
    client(sys.argv[1], port)
```

It seems fast, but is it really? To test this for real we need some realistic network latency, so we shouldn't use localhost. We also need to measure behaviour at microsecond resolution.
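A Python 3 sketch of the elementary client, returning the answers instead of printing them. `serve_one_client` is a hypothetical stand-in server included only so the client can be exercised locally; like the original, the client waits for each answer before sending the next question.

```python
import socket

QA = (
    (b'What is your name?', b'My name is Sir Lancelot of Camelot.'),
    (b'What is your quest?', b'To seek the Holy Grail.'),
    (b'What is your favorite color?', b'Blue.'),
)


def recv_until(sock, suffix):
    """Keep calling recv() until the accumulated bytes end with suffix."""
    message = b''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:
            raise EOFError('socket closed before we saw %r' % suffix)
        message += data
    return message


def client(hostname, port):
    """Ask each question once, in order, and return the three answers."""
    answers = []
    with socket.create_connection((hostname, port), timeout=10) as s:
        for question, _ in QA:
            s.sendall(question)
            answers.append(recv_until(s, b'.'))   # answers end with '.'
    return answers


def serve_one_client(listen_sock):
    """Hypothetical stand-in server for testing: answer a single client, then stop."""
    conn, _ = listen_sock.accept()
    answers = dict(QA)
    try:
        while True:
            conn.sendall(answers[recv_until(conn, b'?')])
    except EOFError:
        conn.close()
```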

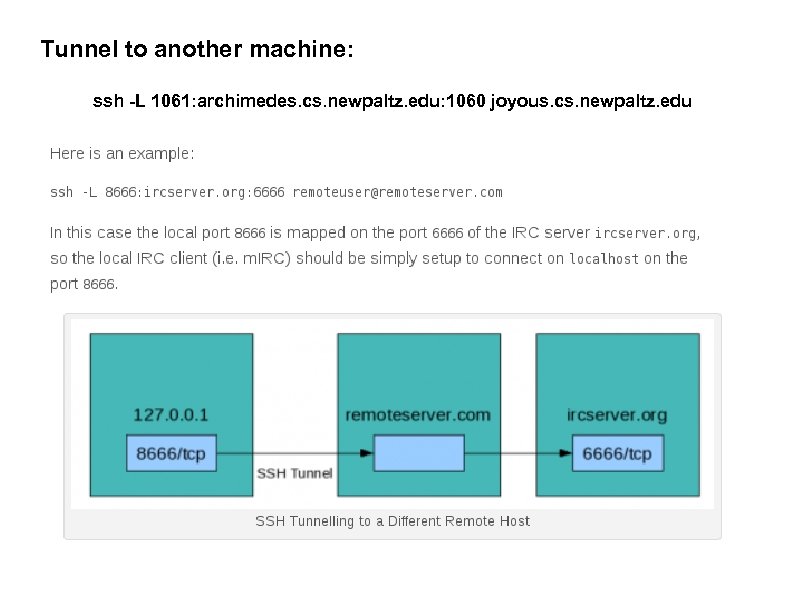

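Tunnel to another machine: `ssh -L 1061:archimedes.cs.newpaltz.edu:1060 joyous.cs.newpaltz.edu`. This makes ssh listen on local port 1061 and carry each connection made to it through joyous, which then connects to port 1060 on archimedes.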
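To make the "listen locally, forward elsewhere" half of `ssh -L` concrete, here is a toy Python 3 TCP relay. It is explicitly not ssh: nothing is encrypted and there is no intermediate SSH server, so it only mirrors the byte-forwarding idea. The function names are hypothetical.

```python
import socket
import threading


def pipe(src, dst):
    """Copy bytes from src to dst until src reports end-of-file."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass                                  # reset or closed: stop copying
    try:
        dst.shutdown(socket.SHUT_WR)          # pass the end-of-file on
    except OSError:
        pass


def relay(local, remote):
    """Shuttle bytes both ways between two connected sockets, then close them."""
    back = threading.Thread(target=pipe, args=(remote, local), daemon=True)
    back.start()
    pipe(local, remote)
    back.join()
    local.close()
    remote.close()


def forward(local_port, remote_host, remote_port):
    """Listen on 127.0.0.1:local_port and relay each connection to remote_host:remote_port."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(('127.0.0.1', local_port))
    listener.listen(5)

    def accept_loop():
        while True:
            try:
                local, _ = listener.accept()
            except OSError:                   # listener was closed
                return
            try:
                remote = socket.create_connection((remote_host, remote_port))
            except OSError:
                local.close()                 # back end unreachable: drop this client
                continue
            threading.Thread(target=relay, args=(local, remote), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return listener
```

For example, `forward(1061, 'archimedes.cs.newpaltz.edu', 1060)` would accept on local port 1061 like the ssh command above, but connect to archimedes directly from this machine rather than from joyous.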

Tunneling: See page 289 for this feature. Alternatively, here are good explanations of various possible scenarios:
- http://www.zulutown.com/blog/2009/02/28/ssh-tunnelling-to-remote-servers-and-with-local-address-binding/
- http://toic.org/blog/2009/reverse-ssh-port-forwarding/#.Uzr2zTnfHsY

More on SSHD Port Forwarding: Uses:
- access a back-end database that is only visible on the local subnet: `ssh -L 3306:mysql.mysite.com:3306 user@sshd.mysite.com`
- your ISP gives you a shell account but expects email to be sent from their browser mail client to their server: `ssh -L 8025:smtp.homeisp.net:25 username@shell.homeisp.net`
- reverse port forwarding: `ssh -R 8022:localhost:22 username@my.home.ip.address`, then, on the home machine, `ssh -p 8022 username@localhost`

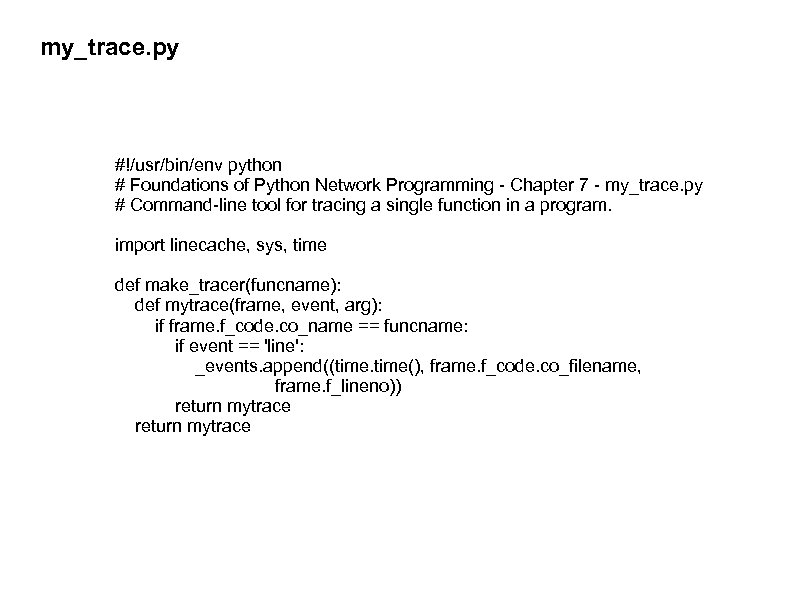

Waiting for Things to Happen: Now we have traffic that takes some time to actually move around, so we need to time things. If your function, say foo(), is in a file called myfile.py, then the script my_trace.py will time the running of foo() from myfile.py: `python my_trace.py foo myfile.py`.

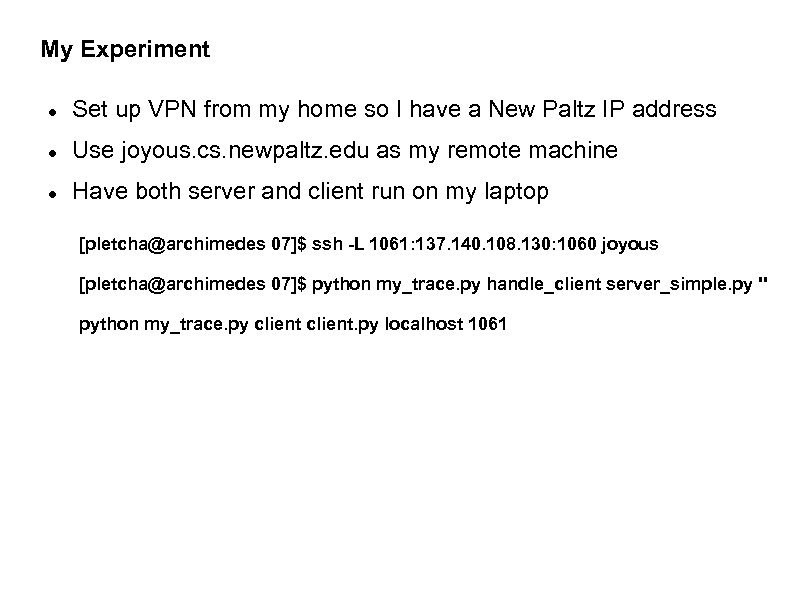

My Experiment:
- Set up a VPN from my home so I have a New Paltz IP address.
- Use joyous.cs.newpaltz.edu as my remote machine.
- Have both server and client run on my laptop.

```
[pletcha@archimedes 07]$ ssh -L 1061:137.140.108.130:1060 joyous
[pletcha@archimedes 07]$ python my_trace.py handle_client server_simple.py ''
python my_trace.py client client.py localhost 1061
```

my_trace.py:

```python
#!/usr/bin/env python
# Foundations of Python Network Programming - Chapter 7 - my_trace.py
# Command-line tool for tracing a single function in a program.

import linecache, sys, time

def make_tracer(funcname):
    def mytrace(frame, event, arg):
        # Only frames of the function we care about get a local tracer,
        # and for them we record the time of every executed line.
        if frame.f_code.co_name == funcname:
            if event == 'line':
                _events.append((time.time(), frame.f_code.co_filename,
                                frame.f_lineno))
            return mytrace
    return mytrace
```

my_trace.py (continued):

```python
if __name__ == '__main__':
    _events = []
    if len(sys.argv) < 3:
        print >>sys.stderr, 'usage: my_trace.py funcname other_script.py ...'
        sys.exit(2)
    sys.settrace(make_tracer(sys.argv[1]))
    del sys.argv[0:2]  # show the script only its own name and arguments
    try:
        execfile(sys.argv[0])
    finally:
        for t, filename, lineno in _events:
            s = linecache.getline(filename, lineno)
            sys.stdout.write('%9.6f %s' % (t % 60.0, s))
```
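`execfile()` no longer exists in Python 3. Here is a Python 3 sketch of the whole tool, using `runpy.run_path(..., run_name='__main__')` as the stand-in (a swapped-in technique, not the book's code). `trace_script`, `print_events` and `main` are hypothetical names. `main()` returns an exit status rather than calling `sys.exit()`, so the module can also be imported.

```python
import linecache
import runpy
import sys
import time


def make_tracer(funcname, events):
    """sys.settrace() hook recording (time, filename, lineno) for each line run in funcname."""
    def mytrace(frame, event, arg):
        if frame.f_code.co_name == funcname:
            if event == 'line':
                events.append((time.time(), frame.f_code.co_filename, frame.f_lineno))
            return mytrace                    # keep receiving line events for this frame
        return None                           # ignore every other function
    return mytrace


def trace_script(funcname, script_args, events):
    """Run script_args[0] as __main__ while tracing funcname into events."""
    saved_argv = sys.argv
    sys.argv = list(script_args)              # show the script only its own name and arguments
    sys.settrace(make_tracer(funcname, events))
    try:
        runpy.run_path(script_args[0], run_name='__main__')
    finally:
        sys.settrace(None)
        sys.argv = saved_argv


def print_events(events, out=None):
    """Print each traced line prefixed by its time in seconds, mod 60."""
    if out is None:
        out = sys.stdout
    for t, filename, lineno in events:
        out.write('%9.6f %s' % (t % 60.0, linecache.getline(filename, lineno)))


def main(argv):
    """Command-line entry point: my_trace.py funcname other_script.py [args...]."""
    if len(argv) < 3:
        sys.stderr.write('usage: my_trace.py funcname other_script.py ...\n')
        return 2
    events = []
    try:
        trace_script(argv[1], argv[2:], events)
    finally:
        print_events(events)                  # print even if the script raised
    return 0
```

Saved as my_trace.py with `if __name__ == '__main__': sys.exit(main(sys.argv))` appended, it would be invoked like the original, e.g. `python my_trace.py foo myfile.py`.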

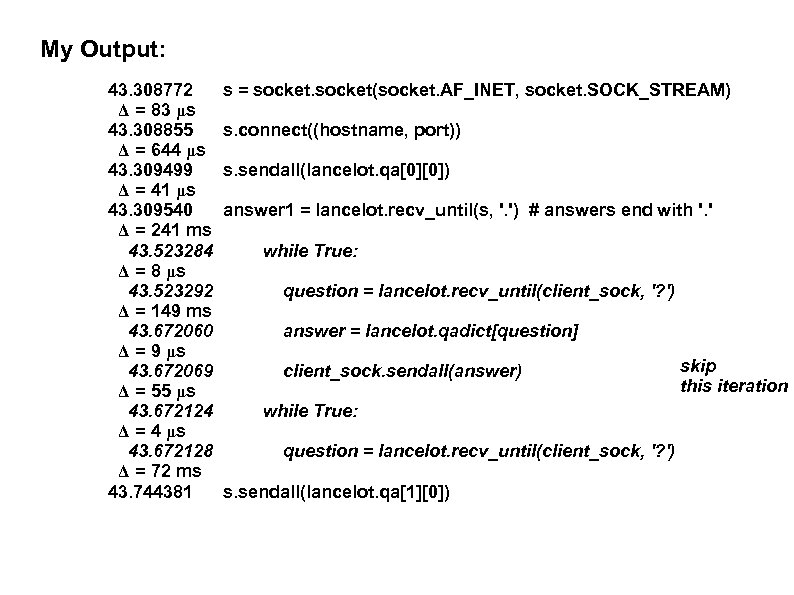

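My Output (time in seconds mod 60, as printed by my_trace.py; Δ is the time until the next traced line; client and server lines are interleaved):

| Time (s) | Δ to next | Process | Line executed |
|---|---|---|---|
| 43.308772 | 83 μs | client | `s = socket(socket.AF_INET, socket.SOCK_STREAM)` |
| 43.308855 | 644 μs | client | `s.connect((hostname, port))` |
| 43.309499 | 41 μs | client | `s.sendall(lancelot.qa[0][0])` |
| 43.309540 | 241 ms | client | `answer1 = lancelot.recv_until(s, '.')  # answers end with '.'` |
| 43.523284 | 8 μs | server | `while True:` |
| 43.523292 | 149 ms | server | `question = lancelot.recv_until(client_sock, '?')` |
| 43.672060 | 9 μs | server | `answer = lancelot.qadict[question]` |
| 43.672069 | 55 μs | server | `client_sock.sendall(answer)` |
| 43.672124 | 4 μs | server | `while True:` |
| 43.672128 | 72 ms | server | `question = lancelot.recv_until(client_sock, '?')` |
| 43.744381 | | client | `s.sendall(lancelot.qa[1][0])` |

(Skip this iteration.)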

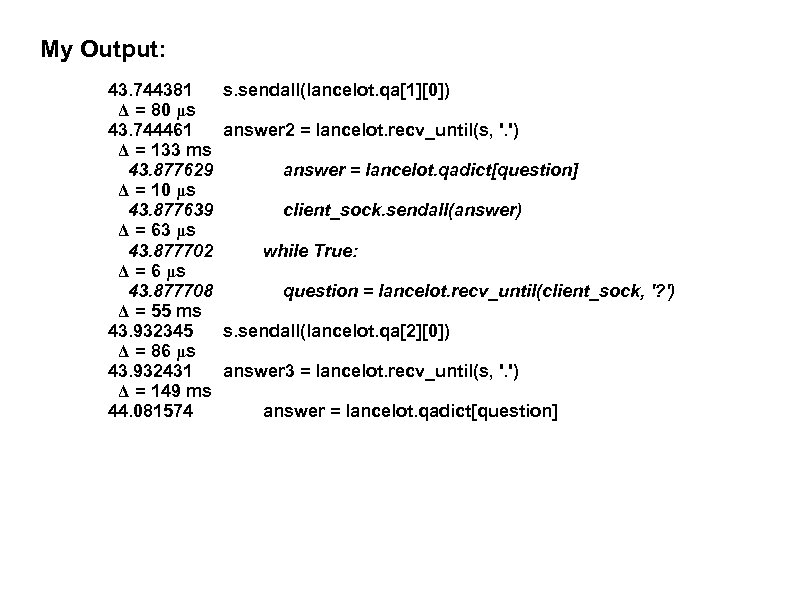

My Output:

| Time (s) | Δ to next | Process | Line executed |
|---|---|---|---|
| 43.744381 | 80 μs | client | `s.sendall(lancelot.qa[1][0])` |
| 43.744461 | 133 ms | client | `answer2 = lancelot.recv_until(s, '.')` |
| 43.877629 | 10 μs | server | `answer = lancelot.qadict[question]` |
| 43.877639 | 63 μs | server | `client_sock.sendall(answer)` |
| 43.877702 | 6 μs | server | `while True:` |
| 43.877708 | 55 ms | server | `question = lancelot.recv_until(client_sock, '?')` |
| 43.932345 | 86 μs | client | `s.sendall(lancelot.qa[2][0])` |
| 43.932431 | 149 ms | client | `answer3 = lancelot.recv_until(s, '.')` |
| 44.081574 | | server | `answer = lancelot.qadict[question]` |
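The Δ values are differences between consecutive timestamps. A small Python 3 helper (hypothetical, not part of my_trace.py) computes them and picks a readable unit. The sample is the first five timestamps of the second output slide.

```python
def deltas(timestamps):
    """Differences between consecutive timestamps, in seconds."""
    return [b - a for a, b in zip(timestamps, timestamps[1:])]


def human(seconds):
    """Whole microseconds below one millisecond, otherwise whole milliseconds."""
    if seconds < 1e-3:
        return '%d μs' % round(seconds * 1e6)
    return '%d ms' % round(seconds * 1e3)


SAMPLE = [43.744381, 43.744461, 43.877629, 43.877639, 43.877702]
print([human(d) for d in deltas(SAMPLE)])
```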

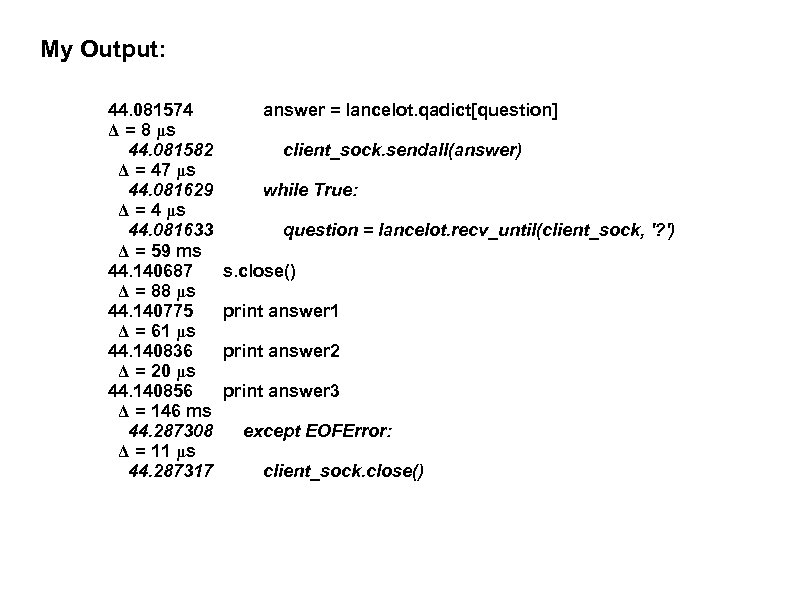

My Output:

| Time (s) | Δ to next | Process | Line executed |
|---|---|---|---|
| 44.081574 | 8 μs | server | `answer = lancelot.qadict[question]` |
| 44.081582 | 47 μs | server | `client_sock.sendall(answer)` |
| 44.081629 | 4 μs | server | `while True:` |
| 44.081633 | 59 ms | server | `question = lancelot.recv_until(client_sock, '?')` |
| 44.140687 | 88 μs | client | `s.close()` |
| 44.140775 | 61 μs | client | `print answer1` |
| 44.140836 | 20 μs | client | `print answer2` |
| 44.140856 | 146 ms | client | `print answer3` |
| 44.287308 | 11 μs | server | `except EOFError:` |
| 44.287317 | | server | `client_sock.close()` |

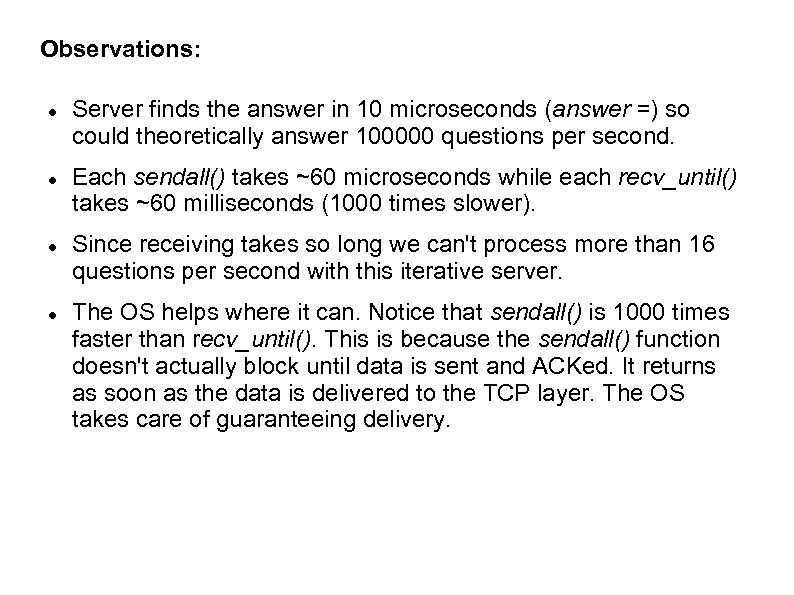

Observations: The server finds the answer (the `answer = ...` line) in about 10 microseconds, so it could theoretically answer 100,000 questions per second. Each sendall() takes ~60 microseconds, while each recv_until() takes ~60 milliseconds (1000 times slower). Since receiving takes so long, we can't process more than about 16 questions per second with this iterative server. The OS helps where it can: sendall() is 1000 times faster than recv_until() because sendall() does not block until the data has been sent and ACKed. It returns as soon as the data has been handed to the TCP layer (copied into the kernel's send buffer), and the OS takes care of guaranteeing delivery.
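A quick Python 3 check of the sendall() claim on a loopback TCP connection. Because this runs in a single thread and the peer only calls `recv()` afterwards, `sendall()` cannot be waiting for the peer to read: it returns once the kernel has buffered the bytes. The function name is hypothetical, and loopback timings say nothing about real network latency.

```python
import socket
import time


def sendall_returns_early(payload=b'My name is Sir Lancelot of Camelot.'):
    """Time sendall() to a peer that has not called recv() yet."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(('127.0.0.1', 0))
    listener.listen(1)
    sender = socket.create_connection(listener.getsockname())
    receiver, _ = listener.accept()
    start = time.perf_counter()
    sender.sendall(payload)                   # copies into the send buffer and returns
    elapsed = time.perf_counter() - start
    received = receiver.recv(4096)            # the peer reads only afterwards
    for s in (sender, receiver, listener):
        s.close()
    return elapsed, received
```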

Observations: 219 milliseconds elapse between the moment the client executes connect() and the moment the server executes recv_until(). If all client requests came sequentially from the same process, this means we could not expect more than about 4 sessions per second. Meanwhile, the server itself is capable of handling about 33,000 sessions per second (three lookups of ~10 μs each). So communication, and above all sequentiality, really slows things down. With so much server time going unused, there has to be a better way. It takes 15-20 milliseconds for one question to be answered, so roughly 40-50 questions per second. Can we do better than this by increasing the number of clients?
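The throughput bounds above all come from one rule: if items are handled strictly one after another, at most 1/latency of them fit into a second. A Python 3 sketch with the slides' rounded latencies (the constant names are chosen for this sketch):

```python
def max_rate(seconds_each):
    """Most items per second when each takes seconds_each and they run one after another."""
    return 1.0 / seconds_each


LOOKUP = 10e-6      # server's qadict lookup, ~10 μs
RECV = 60e-3        # one recv_until(), ~60 ms
SESSION = 219e-3    # connect() to the server's first receive, ~219 ms

questions_if_cpu_bound = max_rate(LOOKUP)       # ~100,000 questions/s
questions_if_recv_bound = max_rate(RECV)        # ~16.7 questions/s
sessions_sequential = max_rate(SESSION)         # ~4.6 sessions/s
sessions_if_cpu_bound = max_rate(3 * LOOKUP)    # 3 questions per session: ~33,333 sessions/s
```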

Benchmarks: See page 289 for the ssh -L feature. FunkLoad: a benchmarking tool, written in Python, that lets you run more and more copies of something you are testing, to see how it struggles with the increased load.
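FunkLoad itself is not shown here. As a rough illustration of the idea (several virtual users running the same test concurrently for a fixed time), here is a Python 3 sketch built on `concurrent.futures` threads. The function names are hypothetical, and the server is assumed to speak the Lancelot protocol.

```python
import socket
import time
from concurrent.futures import ThreadPoolExecutor

QA = (
    (b'What is your name?', b'My name is Sir Lancelot of Camelot.'),
    (b'What is your quest?', b'To seek the Holy Grail.'),
    (b'What is your favorite color?', b'Blue.'),
)


def recv_until(sock, suffix):
    """Keep calling recv() until the accumulated bytes end with suffix."""
    message = b''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:
            raise EOFError('socket closed before we saw %r' % suffix)
        message += data
    return message


def one_dialog(address, questions=10):
    """One 'test', like test_dialog: ask `questions` questions over one connection."""
    with socket.create_connection(address, timeout=10) as sock:
        for i in range(questions):
            question, answer = QA[i % len(QA)]
            sock.sendall(question)
            reply = recv_until(sock, b'.')
            if reply != answer:
                raise AssertionError('expected %r, got %r' % (answer, reply))


def run_cycle(address, users, duration=1.0):
    """Run `users` concurrent virtual users for about `duration` seconds.

    Returns completed tests per second (times 10 for questions per second)."""
    deadline = time.monotonic() + duration

    def virtual_user(_):
        done = 0
        while time.monotonic() < deadline:
            one_dialog(address)
            done += 1
        return done

    with ThreadPoolExecutor(max_workers=users) as pool:
        completed = sum(pool.map(virtual_user, range(users)))
    return completed / duration
```

Calling `run_cycle` with 1, 2, 4, 8, ... users against a server gives a crude version of FunkLoad's cycles.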

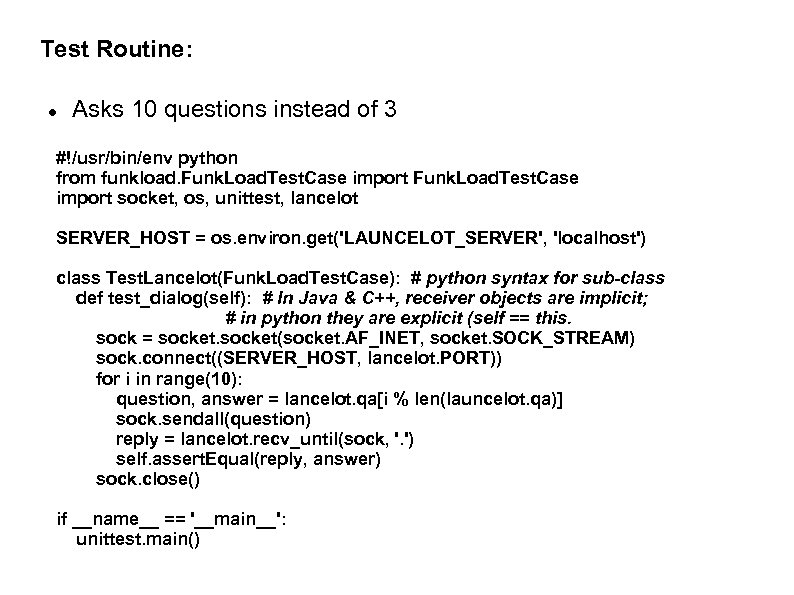

Test Routine: Asks 10 questions instead of 3.

```python
#!/usr/bin/env python
from funkload.FunkLoadTestCase import FunkLoadTestCase
import socket, os, unittest, lancelot

SERVER_HOST = os.environ.get('LAUNCELOT_SERVER', 'localhost')

class TestLancelot(FunkLoadTestCase):  # Python syntax for a subclass
    def test_dialog(self):  # In Java & C++, receiver objects are implicit;
                            # in Python they are explicit (self == this).
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        sock.connect((SERVER_HOST, lancelot.PORT))
        for i in range(10):
            question, answer = lancelot.qa[i % len(lancelot.qa)]
            sock.sendall(question)
            reply = lancelot.recv_until(sock, '.')
            self.assertEqual(reply, answer)
        sock.close()

if __name__ == '__main__':
    unittest.main()
```

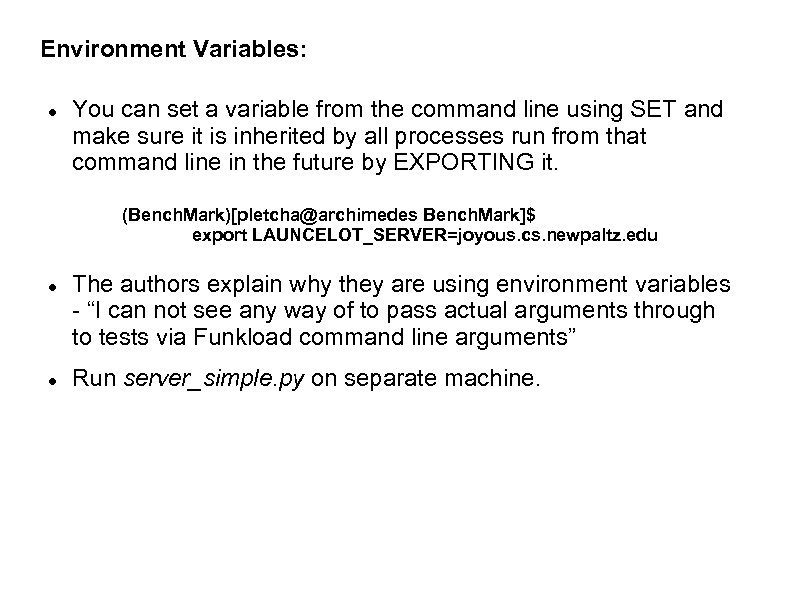

Environment Variables: You can set a variable from the command line and, by exporting it, make sure it is inherited by all processes you run from that shell afterwards:

```
(BenchMark)[pletcha@archimedes BenchMark]$ export LAUNCELOT_SERVER=joyous.cs.newpaltz.edu
```

The authors explain why they are using environment variables: “I can not see any way to pass actual arguments through to tests via Funkload command line arguments.” Run server_simple.py on a separate machine.
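A Python 3 sketch of both halves: the test module reads `LAUNCELOT_SERVER` with a default, and a variable placed in `os.environ` (Python's analogue of `export`) is inherited by child processes started afterwards. `child_sees` is a hypothetical helper.

```python
import os
import subprocess
import sys

# How the test module picks its target, falling back to localhost.
SERVER_HOST = os.environ.get('LAUNCELOT_SERVER', 'localhost')


def child_sees(name, value):
    """Set an environment variable here and report what a child process sees."""
    os.environ[name] = value                  # inherited by children started from now on
    result = subprocess.run(
        [sys.executable, '-c',
         'import os, sys; print(os.environ.get(sys.argv[1], "<unset>"))', name],
        capture_output=True, text=True, check=True)
    return result.stdout.strip()
```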

Config file on laptop: `TestLauncelot.conf` (named `<test class name>.conf`), beginning with a `[main]` section (`title=Load`…).

Testing FunkLoad: Big mixup on Lancelot vs. Launcelot.

```
(BenchMark)[pletcha@archimedes BenchMark]$ fl-run-test lancelot_tests.py TestLancelot.test_dialog
.
-----------------------------------
Ran 1 test in 0.010s

OK
```

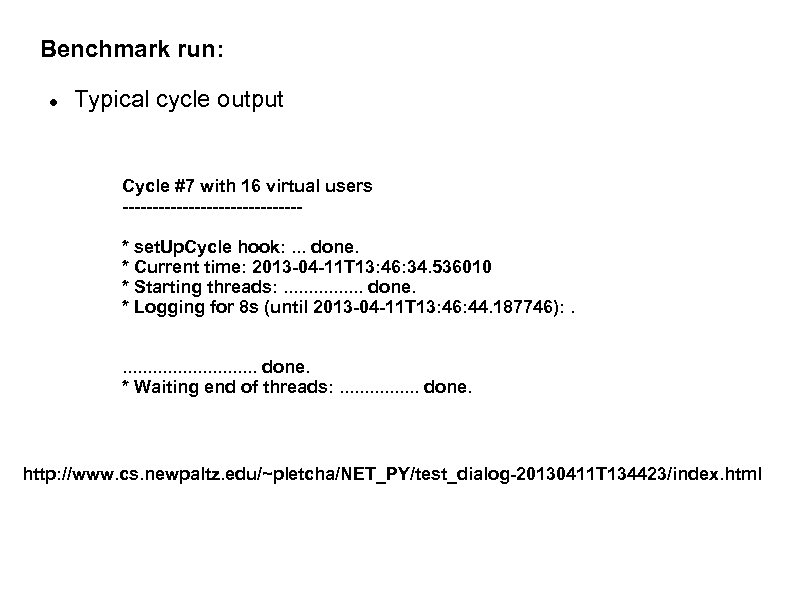

Benchmark run: Typical cycle output:

```
Cycle #7 with 16 virtual users
---------------

* setUpCycle hook: ... done.
* Current time: 2013-04-11T13:46:34.536010
* Starting threads: .... done.
* Logging for 8s (until 2013-04-11T13:46:44.187746): .... done.
* Waiting end of threads: .... done.
```

http://www.cs.newpaltz.edu/~pletcha/NET_PY/test_dialog-20130411T134423/index.html

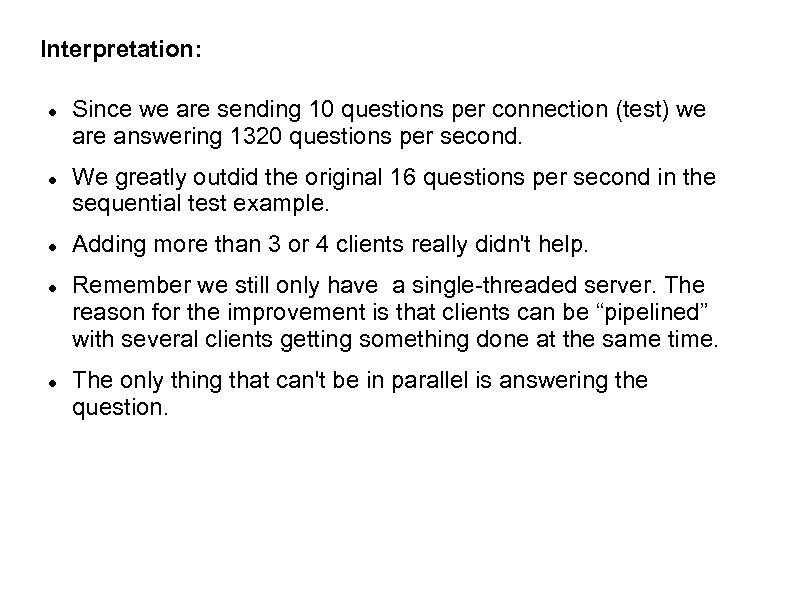

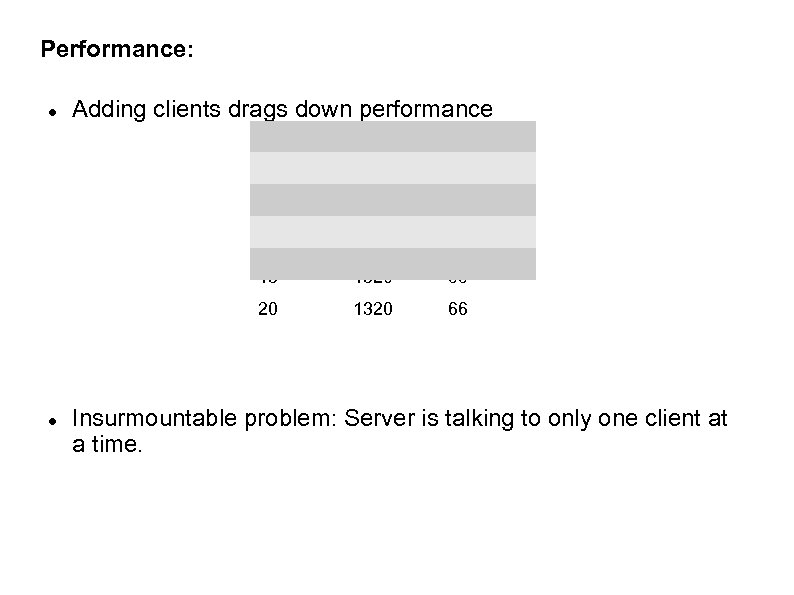

Interpretation: Since we send 10 questions per connection (test), we are answering 1320 questions per second, that is, about 132 completed tests per second. We greatly outdid the original 16 questions per second of the sequential test. Adding more than 3 or 4 clients really didn't help. Remember, we still have only a single-threaded server. The improvement comes from the clients being “pipelined”: while one client's question or answer is in transit over the network, the server can be working on another's, so several clients get something done at the same time. The only thing that can't happen in parallel is answering the questions.

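Performance: Adding clients drags down performance (questions answered per second):

| # Clients | # Ques/client | # Questions |
|---|---|---|
| 3 | 403 | 1320 |
| 5 | 264 | 1320 |
| 10 | 132 | 1320 |
| 15 | 99 | 1320 |
| 20 | 66 | 1320 |

Insurmountable problem: the server is talking to only one client at a time.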

Event-driven Servers: The simple server blocks until data arrives. At that point it can be efficient. What would happen if we never called recv() unless we knew data was already waiting? Meanwhile we could be watching a whole array of connected clients to see which one has sent us something to respond to.
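"Never call recv() unless data is already waiting" can be sketched in Python 3 with `select.select()`, which reports which of many sockets are readable without blocking (timeout 0) or with a bounded wait. `ready_clients` is a hypothetical helper.

```python
import select
import socket


def ready_clients(client_socks, timeout=0.0):
    """Return the sockets on which recv() would not block (data or end-of-file waiting)."""
    readable, _, _ = select.select(client_socks, [], [], timeout)
    return readable
```

With timeout 0 the call only checks and returns at once. With a positive timeout it returns as soon as any socket becomes ready, which is exactly what lets one thread watch a whole array of clients.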

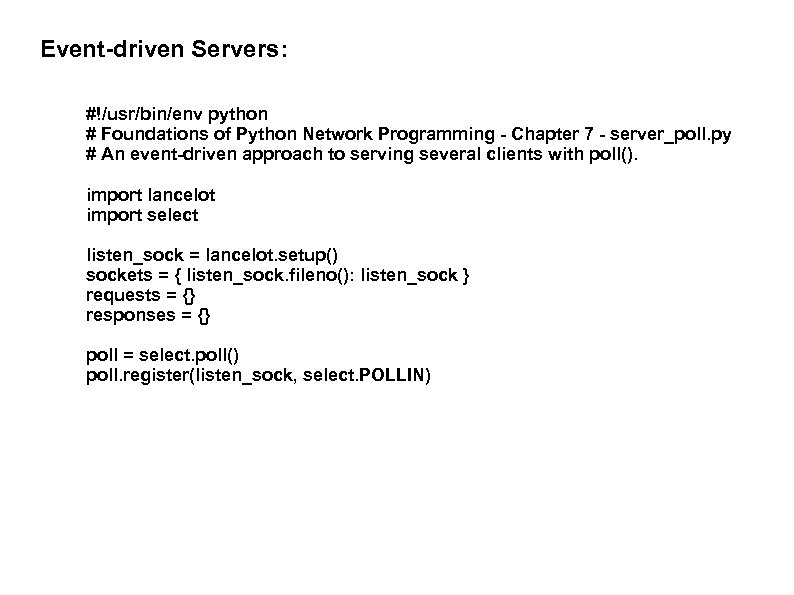

Event-driven Servers:

```python
#!/usr/bin/env python
# Foundations of Python Network Programming - Chapter 7 - server_poll.py
# An event-driven approach to serving several clients with poll().

import lancelot
import select

listen_sock = lancelot.setup()
sockets = {listen_sock.fileno(): listen_sock}
requests = {}
responses = {}
poll = select.poll()
poll.register(listen_sock, select.POLLIN)
```

Event-driven Servers:

```python
while True:
    for fd, event in poll.poll():
        sock = sockets[fd]

        # Remove closed sockets from our list.
        if event & (select.POLLHUP | select.POLLERR | select.POLLNVAL):
            poll.unregister(fd)
            del sockets[fd]
            requests.pop(sock, None)
            responses.pop(sock, None)

        # Accept connections from new sockets.
        elif sock is listen_sock:
            newsock, sockname = sock.accept()
            newsock.setblocking(False)
            fd = newsock.fileno()
            sockets[fd] = newsock
            poll.register(fd, select.POLLIN)
            requests[newsock] = ''
```

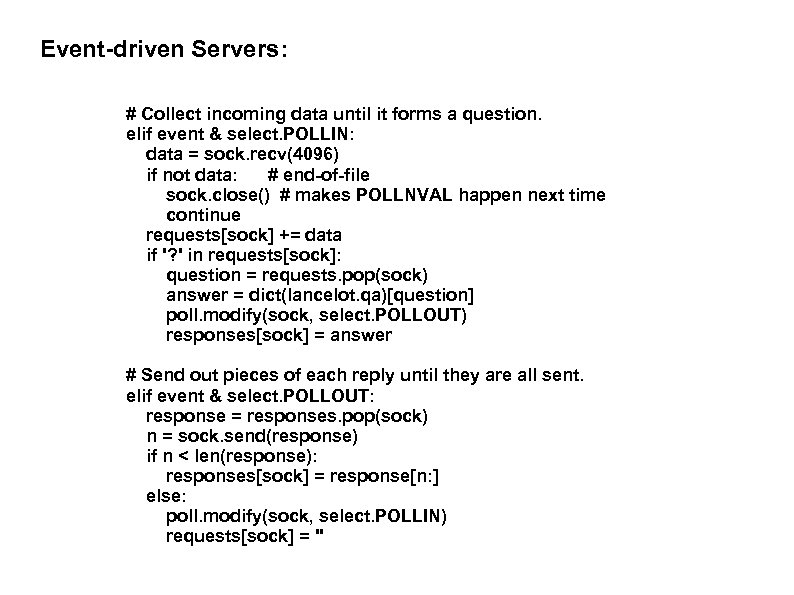

Event-driven Servers:

```python
        # Collect incoming data until it forms a question.
        elif event & select.POLLIN:
            data = sock.recv(4096)
            if not data:      # end-of-file
                sock.close()  # makes POLLNVAL happen next time
                continue
            requests[sock] += data
            if '?' in requests[sock]:
                question = requests.pop(sock)
                answer = lancelot.qadict[question]
                poll.modify(sock, select.POLLOUT)
                responses[sock] = answer

        # Send out pieces of each reply until they are all sent.
        elif event & select.POLLOUT:
            response = responses.pop(sock)
            n = sock.send(response)
            if n < len(response):
                responses[sock] = response[n:]
            else:
                poll.modify(sock, select.POLLIN)
                requests[sock] = ''
```
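A Python 3 sketch of the same event-driven server, using the standard `selectors` module instead of `select.poll()` (a swapped-in module that picks epoll, kqueue, poll or select for the platform). The state machine is the same: per-socket request and response buffers, switching between read and write interest. Two differences are deliberate. A `stop` event and a select timeout let the loop end, and a socket is unregistered before it is closed, because a selector must not be left holding a closed file object. `serve` is a hypothetical name.

```python
import selectors
import socket

QADICT = {
    b'What is your name?': b'My name is Sir Lancelot of Camelot.',
    b'What is your quest?': b'To seek the Holy Grail.',
    b'What is your favorite color?': b'Blue.',
}


def serve(listen_sock, stop, timeout=0.1):
    """Event-driven Lancelot server; loops until the threading.Event `stop` is set."""
    sel = selectors.DefaultSelector()
    listen_sock.setblocking(False)
    sel.register(listen_sock, selectors.EVENT_READ)
    requests, responses = {}, {}

    def drop(sock):
        sel.unregister(sock)                  # unregister before closing
        requests.pop(sock, None)
        responses.pop(sock, None)
        sock.close()

    while not stop.is_set():
        for key, events in sel.select(timeout):    # the timeout lets us notice `stop`
            sock = key.fileobj
            if sock is listen_sock:                  # a completed handshake is waiting
                newsock, _ = sock.accept()
                newsock.setblocking(False)
                sel.register(newsock, selectors.EVENT_READ)
                requests[newsock] = b''
            elif events & selectors.EVENT_READ:      # collect data until a question forms
                try:
                    data = sock.recv(4096)
                except ConnectionError:
                    data = b''
                if not data:                         # end-of-file (or reset): forget client
                    drop(sock)
                    continue
                requests[sock] += data
                if b'?' in requests[sock]:
                    question = requests.pop(sock)
                    responses[sock] = QADICT[question]
                    sel.modify(sock, selectors.EVENT_WRITE)
            elif events & selectors.EVENT_WRITE:     # send as much of the reply as fits
                response = responses.pop(sock)
                try:
                    n = sock.send(response)
                except ConnectionError:
                    drop(sock)
                    continue
                if n < len(response):
                    responses[sock] = response[n:]
                else:
                    sel.modify(sock, selectors.EVENT_READ)
                    requests[sock] = b''

    for key in list(sel.get_map().values()):         # shutting down: close client sockets
        if key.fileobj is not listen_sock:
            key.fileobj.close()
    sel.close()
```

One thread serves every client. A client that has sent only half a question does not hold up the others.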

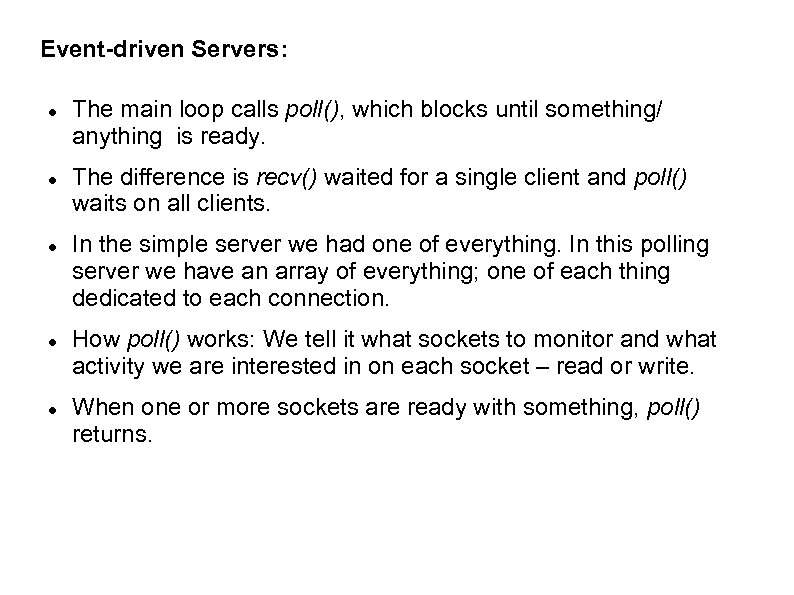

Event-driven Servers: The main loop calls poll(), which blocks until something (anything) is ready. The difference is that recv() waited for a single client, while poll() waits on all clients. In the simple server we had one of everything; in this polling server we have an array of everything, one of each thing dedicated to each connection (the sockets, requests and responses dictionaries). How poll() works: we tell it which sockets to monitor and what activity we are interested in on each socket (read or write). When one or more sockets are ready, poll() returns.
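A Python 3 sketch of register/modify on a single socket, assuming a Unix-like OS (`select.poll()` does not exist on Windows). It shows interest switching from reading to writing just as the server does once a whole question has arrived.

```python
import select
import socket

client, server_side = socket.socketpair()
fd = server_side.fileno()
poller = select.poll()

# Phase 1: only interested in whether server_side has something to read.
poller.register(server_side, select.POLLIN)
before = dict(poller.poll(0))            # 0 ms timeout: nothing has been sent yet

client.sendall(b'What is your name?')
after = dict(poller.poll(1000))          # returns as soon as the bytes are readable

# Phase 2: a whole question arrived, so ask to be told when we may write.
poller.modify(server_side, select.POLLOUT)
writable = dict(poller.poll(0))          # an idle socket's send buffer has room

poller.unregister(fd)
client.close()
server_side.close()
```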

Event-driven Servers: The life-span of one client:

1. A client connects and the listening socket becomes "ready". poll() returns, and since the ready socket is the listening socket, a three-way handshake (3WHS) must have completed. We accept() the connection and tell poll() we want to read from it. To make sure we never block on any one client, we put the new socket into non-blocking mode (setblocking(False)).
2. When data is available, poll() returns; we read a string and append it to this connection's entry in a dictionary.
3. We know we have an entire question when '?' arrives. At that point we ask poll() to tell us when we can write to the same connection.
4. Once the socket is ready for writing (poll() has returned), we send as much of the answer as we can, and keep sending until the whole answer, which ends in '.', has gone out.
5. Next we switch the client socket back to waiting-for-new-data (POLLIN) mode.
6. Errors and hang-ups show up as POLLHUP, POLLERR and POLLNVAL events. When recv() returns 0 bytes (end-of-file), we close() the socket, which makes POLLNVAL appear for it on our next poll(), where the socket is cleaned up.
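The six steps can be put together into one small, self-contained loop. This is a Python 3 sketch, not Listing 7-7 itself: the `QA` table is a hypothetical stand-in for `lancelot.qa`, unknown questions get a made-up default answer, and, unlike the fragment above, a socket is unregistered and closed as soon as recv() returns b'' instead of waiting for POLLNVAL on the next poll().

```python
import select
import socket

# Hypothetical stand-in for the book's lancelot.qa question/answer table.
QA = {b'What is your quest?': b'To seek the Holy Grail.'}


def serve_poll(listen_sock):
    """Serve every client from one thread using poll(); never returns."""
    sockets = {listen_sock.fileno(): listen_sock}  # fd -> socket object
    requests = {}                                  # socket -> partial question
    responses = {}                                 # socket -> unsent answer bytes
    poller = select.poll()
    poller.register(listen_sock.fileno(), select.POLLIN)

    def forget(sock):
        """Stop watching a socket and drop its per-connection state."""
        poller.unregister(sock.fileno())
        del sockets[sock.fileno()]
        requests.pop(sock, None)
        responses.pop(sock, None)
        sock.close()

    while True:
        for fd, event in poller.poll():
            sock = sockets[fd]
            if event & (select.POLLHUP | select.POLLERR | select.POLLNVAL):
                forget(sock)                          # step 6: dead connection
            elif sock is listen_sock:                 # step 1: handshake done
                conn, _ = listen_sock.accept()
                conn.setblocking(False)               # never block on one client
                sockets[conn.fileno()] = conn
                requests[conn] = b''
                poller.register(conn.fileno(), select.POLLIN)
            elif event & select.POLLIN:               # step 2: collect data
                data = sock.recv(4096)
                if not data:                          # end-of-file from client
                    forget(sock)
                    continue
                requests[sock] += data
                if requests[sock].endswith(b'?'):     # step 3: whole question
                    question = requests.pop(sock)
                    responses[sock] = QA.get(question, b'I do not know.')
                    poller.modify(sock.fileno(), select.POLLOUT)
            elif event & select.POLLOUT:              # step 4: send what fits
                response = responses.pop(sock)
                n = sock.send(response)
                if n < len(response):
                    responses[sock] = response[n:]
                else:                                 # step 5: back to reading
                    requests[sock] = b''
                    poller.modify(sock.fileno(), select.POLLIN)
```

Usage: bind and listen() a TCP socket, then call `serve_poll(listen_sock)`. Because poll() hands back plain file descriptors, the `sockets` dictionary is what maps each fd back to its socket object.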

Event-driven Servers: The life-span of one client: 1: A client connects and the listening socket is “ready”. poll() returns and since it is the listening socket, it must be a completed 3 WHS. We accept() the connection and tell our poll() function we want to read from this connection. To make sure they never block we set blocking “not allowed”. 2: When data is available, poll() returns and we read a string and append the string to a dictionary entry for this connection. 3: We know we have an entire question when '? ' arrives. At that point we ask poll() to write to the same connection. 4: Once the socket is ready for writing (poll() has returned) we send as much of we can of the answer and keep sending until we have sent '. '. 5: Next we swap the client socket back to listening-for-new-data mode. 6: POLLHUP, POLLERR and POLLNOVAL events occur on send() so when recv() receives 0 bytes we do a send() to get the error on our next poll().

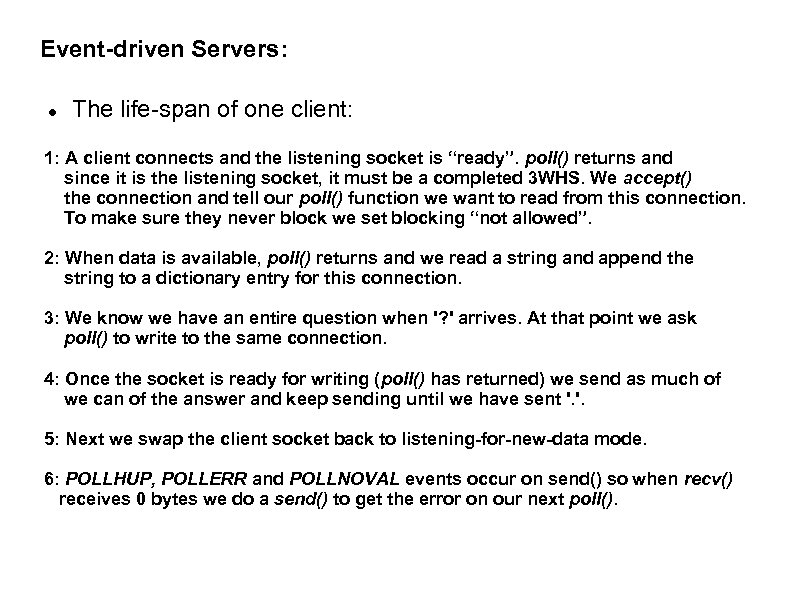

server_poll.py benchmark: http://www.cs.newpaltz.edu/~pletcha/NET_PY/test_dialog-20130412T081140/index.html

| # Clients | # Questions/client | # Questions |
|---|---|---|
| 3 | 600 | 1800 |
| 5 | 500 | 2500 |

So we see some performance degradation.

server_poll. py benchmark: server_poll. py benchmark http: //www. cs. newpaltz. edu/~pletcha/NET_PY/ test_dialog-20130412 T 081140/index. html # Clients # Ques/client 3 # Questioons 1800 600 So we see some performance degradation. 5 2500

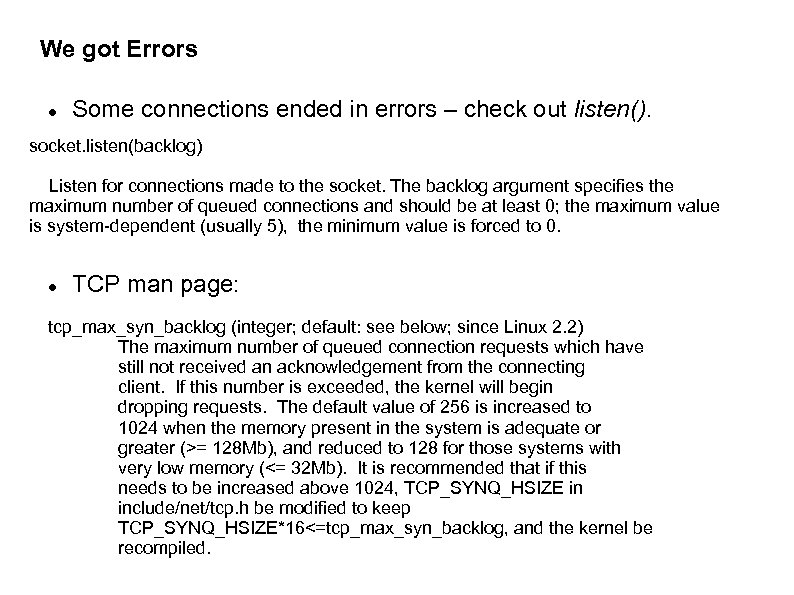

We got Errors: some connections ended in errors. Check out listen():

socket.listen(backlog): "Listen for connections made to the socket. The backlog argument specifies the maximum number of queued connections and should be at least 0; the maximum value is system-dependent (usually 5), the minimum value is forced to 0."

From the Linux tcp(7) man page: "tcp_max_syn_backlog (integer; default: see below; since Linux 2.2) The maximum number of queued connection requests which have still not received an acknowledgement from the connecting client. If this number is exceeded, the kernel will begin dropping requests. The default value of 256 is increased to 1024 when the memory present in the system is adequate or greater (>= 128Mb), and reduced to 128 for those systems with very low memory (<= 32Mb). It is recommended that if this needs to be increased above 1024, TCP_SYNQ_HSIZE in include/net/tcp.h be modified to keep TCP_SYNQ_HSIZE*16<=tcp_max_syn_backlog, and the kernel be recompiled."
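A sketch of what the backlog buys you, assuming Python 3 on Linux or a similar OS: the kernel completes the three-way handshake and queues connections even before the server calls accept(). `make_listener` and `queue_then_accept` are hypothetical helpers; `socket.SOMAXCONN` is the standard library's constant for the system's maximum backlog, and Linux silently caps any larger request at `net.core.somaxconn`.

```python
import socket


def make_listener(backlog=socket.SOMAXCONN):
    """Listening TCP socket on an ephemeral localhost port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(('127.0.0.1', 0))
    # The backlog caps how many completed handshakes the kernel queues
    # while the server is busy and not calling accept().
    sock.listen(backlog)
    return sock


def queue_then_accept(n_clients, backlog=socket.SOMAXCONN):
    """Connect n_clients before any accept(), then drain the queue."""
    listener = make_listener(backlog)
    listener.settimeout(5.0)  # don't hang forever if a connection is lost
    clients = [socket.create_connection(listener.getsockname())
               for _ in range(n_clients)]
    accepted = [listener.accept()[0] for _ in range(n_clients)]
    for s in clients + accepted + [listener]:
        s.close()
    return len(accepted)
```

With a tiny backlog and a server that is slow to call accept(), the same experiment starts to see dropped or delayed connection attempts, which matches the errors in the benchmark.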

Poll vs Select: poll() code is cleaner, but select(), which does the same job, is also available on Windows (poll() is not). The author's suggestion: don't write this kind of code yourself; use an event-driven framework instead.
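For comparison, the same readiness check written with select(), sketched in Python 3 with a `socketpair()` standing in for a client connection (`select_demo` is hypothetical). Note the different calling convention: select() takes lists of sockets to watch for reading, writing and errors plus a timeout in seconds, and returns three lists, instead of registering interest once as poll() does.

```python
import select
import socket


def select_demo():
    """The poll() readiness check done with select()."""
    server_side, client_side = socket.socketpair()  # stand-in connection
    try:
        # Three lists (read, write, error) and a timeout in *seconds*;
        # poll() takes its timeout in milliseconds.
        before, _, _ = select.select([server_side], [], [], 0)
        client_side.sendall(b'What is your quest?')
        after, _, _ = select.select([server_side], [], [], 1.0)
        return before == [], after == [server_side]
    finally:
        server_side.close()
        client_side.close()
```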

Non-blocking Semantics: In non-blocking mode, recv() acts as follows:

- If data is ready, it is returned.
- If no data has arrived yet, socket.error is raised.
- If the connection is closed, '' is returned.

Why does a closed connection return data ('') while "no data yet" raises an error? Think about the blocking situation:

- The first and last cases can happen there too, and behave as above.
- The second case cannot happen: a blocking recv() simply waits until data arrives. So the new, non-blocking-only case had to be signalled in some different way, and an exception was the choice.
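The three cases can be checked directly. A Python 3 sketch (`nonblocking_recv_cases` is a hypothetical name) using a `socketpair()` as the connection: in Python 3 the "no data" exception is `BlockingIOError`, a subclass of `OSError`, which `socket.error` now aliases, and a closed connection yields `b''` rather than `''`. The `select()` calls only wait until each change is visible, so the sketch does not depend on timing.

```python
import select
import socket


def nonblocking_recv_cases():
    """Show the three outcomes of recv() on a non-blocking socket."""
    ours, peer = socket.socketpair()      # stand-in for a client connection
    ours.setblocking(False)
    outcomes = []

    try:
        ours.recv(4096)                   # nothing has arrived yet
    except BlockingIOError:               # an OSError (socket.error) subclass
        outcomes.append('no data: exception')

    peer.sendall(b'What is your quest?')
    select.select([ours], [], [], 1.0)    # wait until the bytes are readable
    outcomes.append(ours.recv(4096))      # data ready: it is returned

    peer.close()
    select.select([ours], [], [], 1.0)    # wait until end-of-file is visible
    outcomes.append(ours.recv(4096))      # connection closed: b'' is returned

    ours.close()
    return outcomes
```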

Non-blocking Semantics: send() semantics:

- If data is sent, its length is returned (possibly less than the full string).
- If the socket buffers are full, socket.error is raised.
- If the connection is closed, socket.error is raised.

The last case is interesting. Suppose poll() says a socket is ready to write, but before we call send(), the client closes the connection. Listing 7-7 doesn't code for this situation.
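A Python 3 sketch of the send() cases, again on a `socketpair()` (the helper names are hypothetical). Python ignores SIGPIPE by default, so writing to a connection whose peer has gone away raises an `OSError` subclass (typically `BrokenPipeError`) instead of killing the process.

```python
import socket


def fill_send_buffer(sock, chunk=b'x' * 65536):
    """send() on a non-blocking socket until the kernel buffers are full."""
    sock.setblocking(False)
    total = 0
    while True:
        try:
            total += sock.send(chunk)   # returns how many bytes were queued
        except BlockingIOError:         # buffers full: send() would block
            return total


def send_after_peer_closed():
    """Return the name of the error raised by send() once the peer is gone."""
    ours, peer = socket.socketpair()
    peer.close()
    try:
        ours.send(b'too late')
    except OSError as exc:              # e.g. BrokenPipeError
        return type(exc).__name__
    finally:
        ours.close()
    return None
```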

Non-blocking Semantics: send() semantics: – – socket buffers full: socket. error raised – if data is sent, its length is returned connection closed: socket. error raised Last case is interesting. Suppose poll() says a socket is ready to write but before we call send(), the client sends a FIN. Listing 7 -7 doesn't code for this situation.

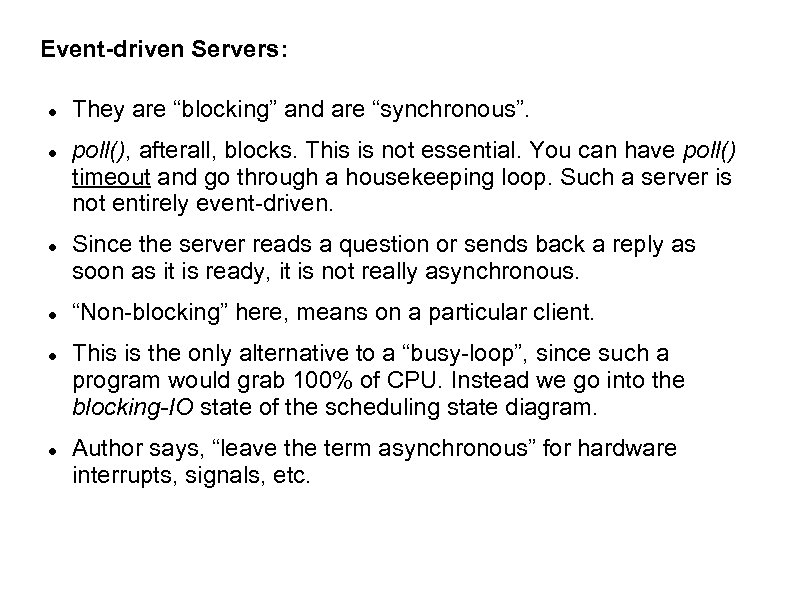

Event-driven Servers: They are in fact "blocking" and "synchronous": poll(), after all, blocks. This is not essential: you can give poll() a timeout and go through a housekeeping loop each time it expires, though such a server is then not entirely event-driven. And since the server reads a question or sends back a reply as soon as it is ready, it is not really asynchronous either. "Non-blocking" here refers to each particular client socket: the server never blocks waiting on one client. Blocking in poll() is the only alternative to a "busy loop" that keeps re-checking every socket, which would grab 100% of the CPU; instead, the process goes into the blocked-on-I/O state of the scheduler's state diagram until something is ready. The author says to "leave the term asynchronous" for hardware interrupts, signals, etc.
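A sketch of the timeout-plus-housekeeping variant mentioned above, in Python 3 on a Unix-like OS. `event_loop`, `on_event` and `on_idle` are hypothetical names, and the `rounds` parameter exists only so the loop can be tested; a server would pass `rounds=None` and loop forever.

```python
import select
import socket


def event_loop(poller, on_event, on_idle, timeout_ms=100, rounds=None):
    """poll() with a timeout, doing housekeeping whenever it times out."""
    done = 0
    while rounds is None or done < rounds:
        events = poller.poll(timeout_ms)   # blocks at most timeout_ms
        if not events:
            on_idle()                      # nothing was ready: housekeeping
        for fd, event in events:
            on_event(fd, event)
        done += 1
```

Housekeeping here might mean closing idle connections or flushing a log; the process still sleeps inside poll() between rounds rather than spinning.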

Event-driven Servers: They are “blocking” and are “synchronous”. poll(), afterall, blocks. This is not essential. You can have poll() timeout and go through a housekeeping loop. Such a server is not entirely event-driven. Since the server reads a question or sends back a reply as soon as it is ready, it is not really asynchronous. “Non-blocking” here, means on a particular client. This is the only alternative to a “busy-loop”, since such a program would grab 100% of CPU. Instead we go into the blocking-IO state of the scheduling state diagram. Author says, “leave the term asynchronous” for hardware interrupts, signals, etc.

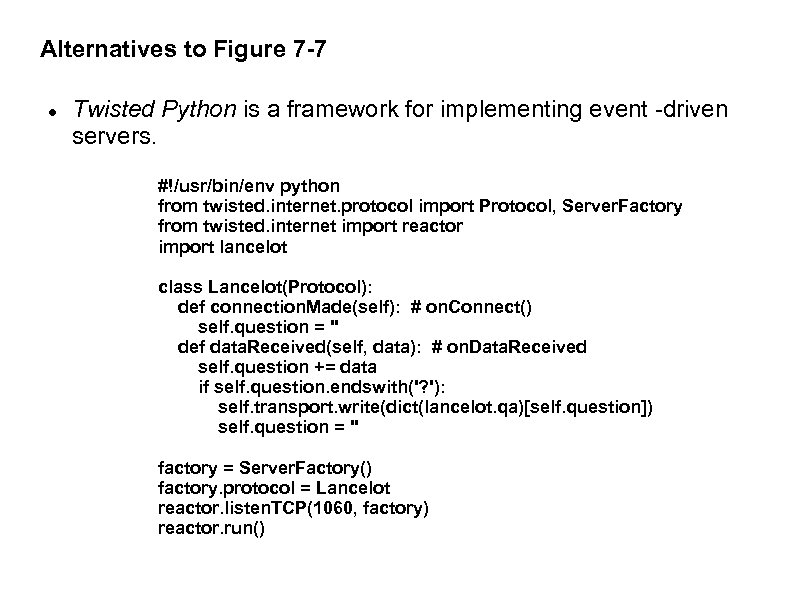

Alternatives to Listing 7-7: Twisted Python is a framework for implementing event-driven servers.

```python
#!/usr/bin/env python
from twisted.internet.protocol import Protocol, ServerFactory
from twisted.internet import reactor
import lancelot

class Lancelot(Protocol):
    def connectionMade(self):      # onConnect()
        self.question = ''

    def dataReceived(self, data):  # onDataReceived()
        self.question += data
        if self.question.endswith('?'):
            self.transport.write(dict(lancelot.qa)[self.question])
            self.question = ''

factory = ServerFactory()
factory.protocol = Lancelot
reactor.listenTCP(1060, factory)
reactor.run()
```
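If Twisted is not installed, the same callback structure can be tried with the standard library's asyncio, whose `Protocol` class has matching `connection_made()`/`data_received()` hooks. This is a swapped-in Python 3 sketch, not the book's code: `QA` is a hypothetical stand-in for `lancelot.qa`, and, like the Twisted version, it assumes the client waits for each answer before sending the next question.

```python
import asyncio

# Hypothetical stand-in for the book's lancelot.qa question/answer table.
QA = {
    b'What is your name?': b'My name is Sir Lancelot of Camelot.',
    b'What is your quest?': b'To seek the Holy Grail.',
}


class Lancelot(asyncio.Protocol):
    def connection_made(self, transport):   # like Twisted's connectionMade()
        self.transport = transport
        self.question = b''

    def data_received(self, data):          # like Twisted's dataReceived()
        self.question += data
        if self.question.endswith(b'?'):
            self.transport.write(QA[self.question])
            self.question = b''


async def ask(port, question):
    """Client side: send one question and read the answer up to its '.'."""
    reader, writer = await asyncio.open_connection('127.0.0.1', port)
    writer.write(question)
    await writer.drain()
    answer = await reader.readuntil(b'.')
    writer.close()
    await writer.wait_closed()
    return answer


async def main():
    loop = asyncio.get_running_loop()
    # Plays the role of reactor.listenTCP(); port 0 lets the OS pick a port.
    server = await loop.create_server(Lancelot, '127.0.0.1', 0)
    port = server.sockets[0].getsockname()[1]
    try:
        return await ask(port, b'What is your quest?')
    finally:
        server.close()
        await server.wait_closed()


if __name__ == '__main__':
    print(asyncio.run(main()))   # b'To seek the Holy Grail.'
```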

Alternatives to Figure 7 -7 Twisted Python is a framework for implementing event -driven servers. #!/usr/bin/env python from twisted. internet. protocol import Protocol, Server. Factory from twisted. internet import reactor import lancelot class Lancelot(Protocol): def connection. Made(self): # on. Connect() self. question = '' def data. Received(self, data): # on. Data. Received self. question += data if self. question. endswith('? '): self. transport. write(dict(lancelot. qa)[self. question]) self. question = '' factory = Server. Factory() factory. protocol = Lancelot reactor. listen. TCP(1060, factory) reactor. run()

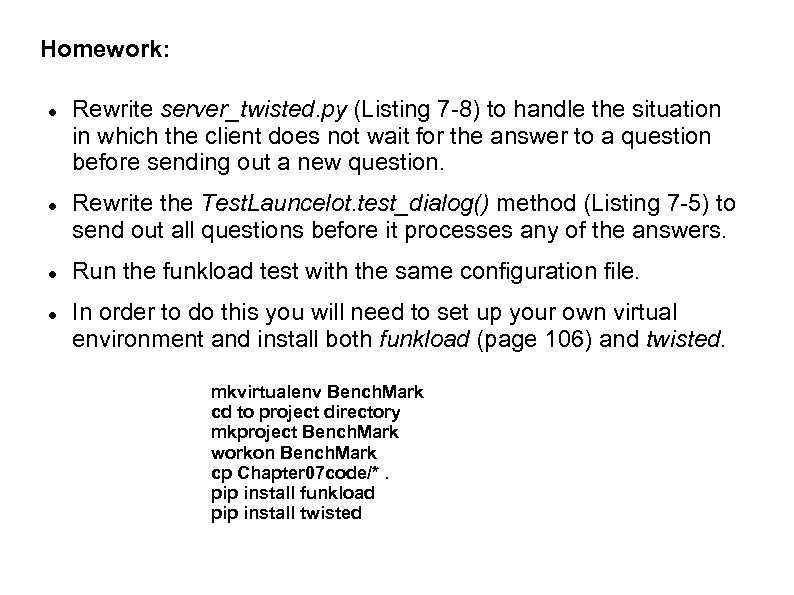

Homework: Rewrite server_twisted.py (Listing 7-8) to handle the situation in which the client does not wait for the answer to a question before sending out a new question. Rewrite the TestLauncelot.test_dialog() method (Listing 7-5) to send out all questions before it processes any of the answers. Run the funkload test with the same configuration file. To do this you will need to set up your own virtual environment and install both funkload (page 106) and twisted:

```shell
mkvirtualenv BenchMark
# cd to the project directory
mkproject BenchMark
workon BenchMark
cp <Chapter 07 code>/* .
pip install funkload
pip install twisted
```

Homework: Rewrite server_twisted. py (Listing 7 -8) to handle the situation in which the client does not wait for the answer to a question before sending out a new question. Rewrite the Test. Launcelot. test_dialog() method (Listing 7 -5) to send out all questions before it processes any of the answers. Run the funkload test with the same configuration file. In order to do this you will need to set up your own virtual environment and install both funkload (page 106) and twisted. mkvirtualenv Bench. Mark cd to project directory mkproject Bench. Mark workon Bench. Mark cp Chapter 07 code/*. pip install funkload pip install twisted

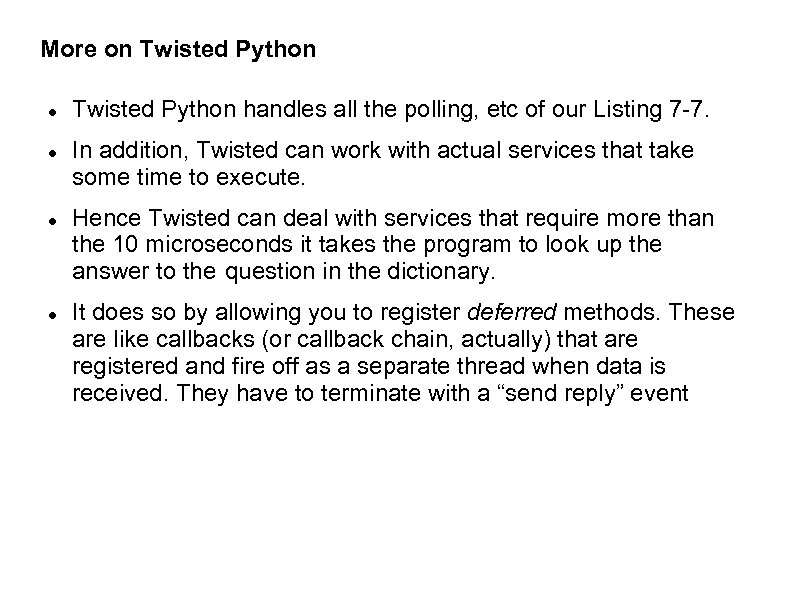

More on Twisted: Twisted handles all the polling, etc. of our Listing 7-7. In addition, Twisted can work with real services that take some time to execute, that is, services that need more than the roughly 10 microseconds our program takes to look up an answer in a dictionary. It does so through deferreds: a Deferred is a chain of callbacks registered to fire when a result becomes available. Slow, blocking work can be handed to a thread pool (for example with twisted.internet.threads.deferToThread()) so the reactor keeps serving other clients, and the callback chain has to end by sending the reply.
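A rough analogue of that pattern using only the standard library (not Twisted's API): `concurrent.futures` runs the slow job on a worker thread and fires a callback when the result is ready, and the callback's job is to send the reply. `slow_lookup`, `answer_in_background` and the `QA` table are hypothetical, and `time.sleep()` merely simulates a slow service.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for the book's lancelot.qa table.
QA = {'What is your quest?': 'To seek the Holy Grail.'}


def slow_lookup(question):
    """Pretend the answer comes from a slow service, not a dict lookup."""
    time.sleep(0.05)
    return QA[question]


def answer_in_background(pool, question, send_reply):
    """Run slow_lookup on a worker thread; send the reply when it is done.

    The caller (think: the event loop) returns immediately and can keep
    serving other clients while the lookup runs."""
    future = pool.submit(slow_lookup, question)
    # Fires once the result is ready; its last act is to "send the reply".
    future.add_done_callback(lambda f: send_reply(f.result()))
    return future
```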

Load Balancing and Proxies: Our server in Listing 7-7 is a single thread of control. So is Twisted (except for its deferred methods). A single-threaded approach has a clear upper limit: 100% of one CPU's time. The solution is to run several instances of the server (on different machines) and distribute clients among them. This requires a load balancer that listens on the server port and distributes client requests to the different server instances. It is thus a proxy: to the clients it looks like the server, and to each server instance it looks like a client.
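A minimal sketch of such a proxy in Python 3: it accepts each client, opens a connection to the next back end in round-robin order, and copies bytes in both directions with one thread per direction. All names and addresses are hypothetical; a production load balancer (HAProxy, for example) also does health checks, timeouts and far more efficient I/O.

```python
import itertools
import socket
import threading


def pipe(src, dst):
    """Copy bytes from src to dst until src reports end-of-file."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass                                  # a peer vanished; stop copying
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)      # pass the end-of-file along
        except OSError:
            pass


def relay(client, server):
    """Shuttle bytes both ways between one client and one back end."""
    back = threading.Thread(target=pipe, args=(server, client), daemon=True)
    back.start()
    pipe(client, server)
    back.join()
    client.close()
    server.close()


def round_robin_proxy(listen_sock, backends):
    """Accept clients forever, handing each to the next back end in turn."""
    for backend in itertools.cycle(backends):
        client, _ = listen_sock.accept()
        server = socket.create_connection(backend)
        threading.Thread(target=relay, args=(client, server), daemon=True).start()
```

To clients, `listen_sock` looks like the server; to each back end, the proxy looks like an ordinary client.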

Load Balancers: Some are built into network hardware. HAProxy is a TCP/HTTP load balancer. Firewall rules on Linux let you simulate a load balancer. The traditional method is DNS: one domain name with several IP addresses. The problem with the DNS solution is that once a client has been assigned a server, it is stuck if that server goes down. Modern load balancers can recover from a server crash by moving live connections to a different server instance. DNS is still used for reasons of geography: in Canada, enter www.google.com and you'll get www.google.ca.

Load Balancers: The authors feel load balancing is the only approach to server saturation that truly scales up. It is intended to distribute work across different physical machines, but it can also distribute work among server instances on the same physical machine. Threading and forking are special cases of load balancing: if several threads or processes all call accept() on the same listening socket, the OS balances incoming connections among them. Open a listening port, then fork the program (or create threads) so that every copy shares that listening socket; all of them can then call accept() and wait for a three-way handshake (3WHS) to complete. Load balancing is an alternative to writing multi-threaded code.

Threading and Multi-processing: Take a simple, one-client-at-a-time server and run several instances of it, all spawned from the same process. Our event-driven example is responsible for deciding which client is ready for service; with threading, the OS does that work. Each server instance blocks in recv() and send() but uses very few server resources while doing so. The OS wakes it up (moves it out of the blocked-on-I/O state) when traffic arrives. Apache offers two solutions, prefork and worker:

- prefork: forks off several instances of httpd.
- worker: runs multiple threads of control in a single httpd instance.

Worker Example: `[pletcha@archimedes 07]$ cat server_multi.py`

```python
#!/usr/bin/env python
# Foundations of Python Network Programming - Chapter 7 - server_multi.py
# Using multiple threads or processes to serve several clients in parallel.

import sys, time, lancelot
from multiprocessing import Process
from server_simple import server_loop  # actual server code
from threading import Thread

WORKER_CLASSES = {'thread': Thread, 'process': Process}
WORKER_MAX = 10

def start_worker(Worker, listen_sock):
    worker = Worker(target=server_loop, args=(listen_sock,))
    worker.daemon = True  # exit when the main process does
    worker.start()        # thread or process starts running server_loop
    return worker
```

Worker Example (continued):

```python
if __name__ == '__main__':
    if len(sys.argv) != 3 or sys.argv[2] not in WORKER_CLASSES:
        print >>sys.stderr, 'usage: server_multi.py interface thread|process'
        sys.exit(2)
    Worker = WORKER_CLASSES[sys.argv.pop()]  # setup() wants len(argv)==2

    # Every worker will accept() forever on the same listening socket.

    listen_sock = lancelot.setup()
    workers = []
    for i in range(WORKER_MAX):
        workers.append(start_worker(Worker, listen_sock))
```

Worker Example: if __name__ == '__main__': if len(sys. argv) != 3 or sys. argv[2] not in WORKER_CLASSES: print >>sys. stderr, 'usage: server_multi. py interface thread|process' sys. exit(2) Worker = WORKER_CLASSES[sys. argv. pop()] # setup() wants len(argv)==2 # Every worker will accept() forever on the same listening socket. listen_sock = lancelot. setup() workers = [] for i in range(WORKER_MAX): workers. append(start_worker(Worker, listen_sock))

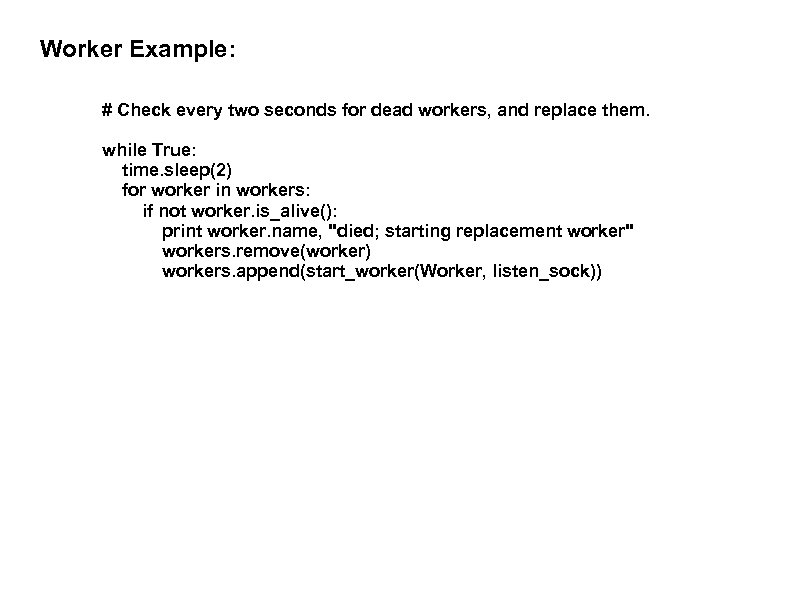

Worker Example (continued):

```python
    # Check every two seconds for dead workers, and replace them.

    while True:
        time.sleep(2)
        for worker in workers[:]:  # iterate over a copy: the list is modified below
            if not worker.is_alive():
                print worker.name, "died; starting replacement worker"
                workers.remove(worker)
                workers.append(start_worker(Worker, listen_sock))
```

Observations: Now we have multiple instances of the simple server loop from Listing 7-2. The OS allows different threads of execution (threads or processes) to accept() on the same listening socket; our thanks to POSIX. Let's run this server against our benchmark software.

Observations: Now we have multiple instances of the simple server loop from Listing 7 -2. The OS is allowing different threads of execution (threads or processes) to listen on the same socket. Our thanks to POSIX. Let's run this server against our benchmark software.

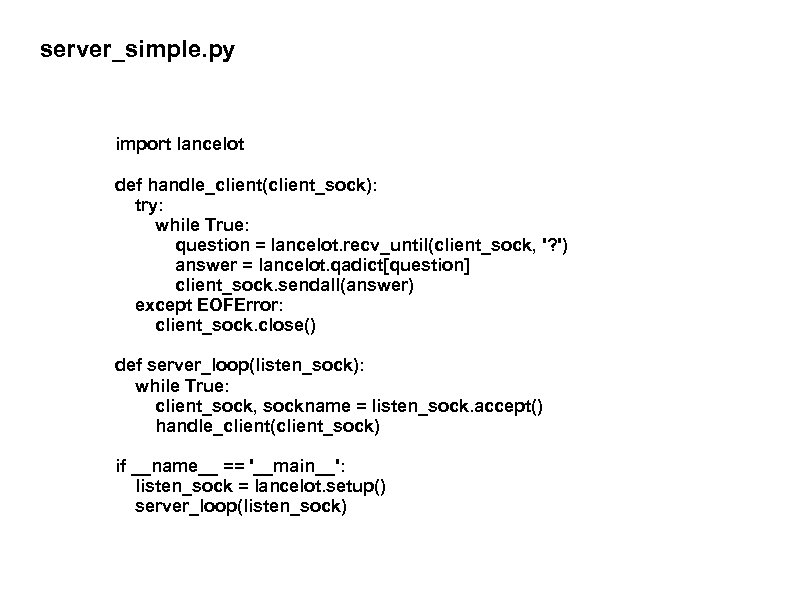

server_simple.py:

```python
import lancelot

def handle_client(client_sock):
    try:
        while True:
            question = lancelot.recv_until(client_sock, '?')
            answer = lancelot.qadict[question]
            client_sock.sendall(answer)
    except EOFError:
        client_sock.close()

def server_loop(listen_sock):
    while True:
        client_sock, sockname = listen_sock.accept()
        handle_client(client_sock)

if __name__ == '__main__':
    listen_sock = lancelot.setup()
    server_loop(listen_sock)
```
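`lancelot.recv_until()` is not shown in this section. A hypothetical Python 3 version consistent with how `handle_client()` uses it reads until the accumulated bytes end with the suffix and raises `EOFError` when the client closes, which is what ends the loop above. This simplified sketch raises `EOFError` even if a partial question was received, and it assumes the client never sends anything after the '?' in the same chunk.

```python
def recv_until(sock, suffix):
    """Hypothetical recv_until(): read until the data ends with suffix.

    Raises EOFError if the peer closes first, which is the signal
    handle_client() uses to stop serving a client."""
    message = b''
    while not message.endswith(suffix):
        data = sock.recv(4096)
        if not data:
            raise EOFError('socket closed before %r arrived' % (suffix,))
        message += data
    return message
```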

ps -ef: 10 workers serving data connections and 1 "watcher" (the parent):

```
[pletcha@joyous ~]$ ps -ef | grep 'python [s]'
pletcha 14587 29926 0 08:31 pts/2 00:00 python server_multi.py
pletcha 14588 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14589 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14590 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14591 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14592 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14593 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14594 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14595 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14596 14587 0 08:31 pts/2 00:05 python server_multi.py
pletcha 14597 14587 0 08:31 pts/2 00:05 python server_multi.py
```

All ten workers (PIDs 14588 to 14597) have the same parent process, 14587.

Threading: Note that standard CPython greatly restricts the parallelism of our "threads": all of them run inside one Python interpreter, whose Global Interpreter Lock lets only one thread execute Python bytecode at a time. Other implementations of Python (Jython or IronPython) handle this better, using finer-grained locking of shared Python data structures. You need to experiment to find the right number of server children to run: the number of server cores, the speed of the clients, and the speed of the server's RAM bus can all affect the optimum number of servers. Benchmarks:

- http://www.cs.newpaltz.edu/~pletcha/NET_PY/test_dialog-20130416T084007/index.html
- http://www.cs.newpaltz.edu/~pletcha/NET_PY/test_dialog-20130416T085520/index.html

Handling multiple, related processes: How do we kill things so that all the processes die? multiprocessing cleans up your workers if you stop the parent with Ctrl-C or it exits normally. But if you kill the parent with a signal (e.g. `kill PID`), the child processes become orphans, re-parented to PID 1, and you have to kill them too, individually.

Killing processes:

```
[pletcha@joyous ~]$ ps -ef | grep 'python [s]'
pletcha 15107 29926 0 08:54 pts/2 00:00 python server_multi.py process
pletcha 15108 15107 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15109 15107 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15110 15107 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15111 15107 0 08:54 pts/2 00:05 python server_multi.py process
[pletcha@joyous ~]$ kill 15107
[pletcha@joyous ~]$ ps -ef | grep 'python [s]'
pletcha 15108 1 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15109 1 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15110 1 0 08:54 pts/2 00:05 python server_multi.py process
pletcha 15111 1 0 08:54 pts/2 00:05 python server_multi.py process
[pletcha@joyous ~]$ ps -ef | grep 'python [s]' | awk '{print $2}'
15108
15109
15110
15111
[pletcha@joyous ~]$ kill $(ps -ef | grep 'python [s]' | awk '{print $2}')
```
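One way to avoid hunting down orphans, sketched in Python 3 (the function name is hypothetical): convert SIGTERM into a normal `sys.exit()` in the parent. A normal exit runs Python's exit handlers, and multiprocessing's handler terminates daemonic child processes, which is what `worker.daemon = True` in server_multi.py makes the workers. Nothing can catch `kill -9` (SIGKILL), so that case still leaves orphans.

```python
import signal
import sys


def exit_cleanly_on_sigterm():
    """Make `kill PID` (SIGTERM) behave like a normal exit in this process."""
    def handler(signum, frame):
        # Raises SystemExit in the main thread, so Python's exit handlers run;
        # multiprocessing's exit handler terminates daemonic child processes.
        sys.exit(0)
    signal.signal(signal.SIGTERM, handler)
```

Call it at the start of the `if __name__ == '__main__':` block, before any workers are started.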

Threading and Multi-process Frameworks: Let somebody else do the work. Worker pools (either threads or processes) come in two designs: either every worker calls accept() on a single shared listener socket (as in server_multi.py), or a master thread calls accept() and distributes each new data connection to a worker. Module multiprocessing has a Pool object for distributing work from a master across multiple child processes. Module SocketServer in the standard Python library follows the master-accepts approach.

Threading and Mulit-process Frameworks: Let somebody else do the work. Worker pools (either threads or processes) Module multiprocessing has a Pool object for distributing work across multiple child processes with all work distributed from a master thread after accept() returns vs single listener socket. Module Socket. Server in standard Python library follows latter approach.

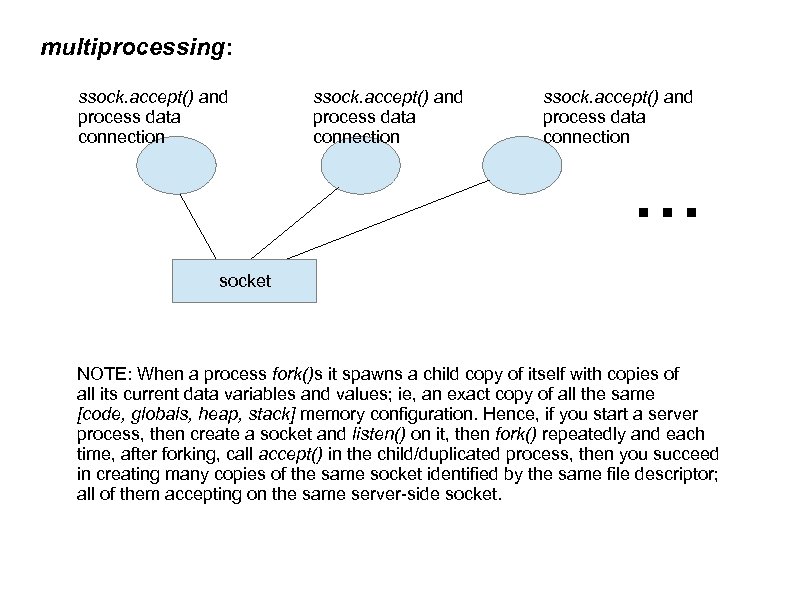

multiprocessing: every worker calls ssock.accept() on the one shared listening socket and then processes its own data connection.

NOTE: When a process fork()s, it spawns a child copy of itself with copies of all its current data variables and values, i.e., an exact copy of the same [code, globals, heap, stack] memory configuration. Hence, if you start a server process, create a socket and listen() on it, and then fork() repeatedly, calling accept() in each child (duplicated) process after the fork, you end up with many copies of the same file descriptor, all referring to the same server-side listening socket and all accepting on it.

multiprocessing: ssock. accept() and process data connection . . . socket NOTE: When a process fork()s it spawns a child copy of itself with copies of all its current data variables and values; ie, an exact copy of all the same [code, globals, heap, stack] memory configuration. Hence, if you start a server process, then create a socket and listen() on it, then fork() repeatedly and each time, after forking, call accept() in the child/duplicated process, then you succeed in creating many copies of the same socket identified by the same file descriptor; all of them accepting on the same server-side socket.

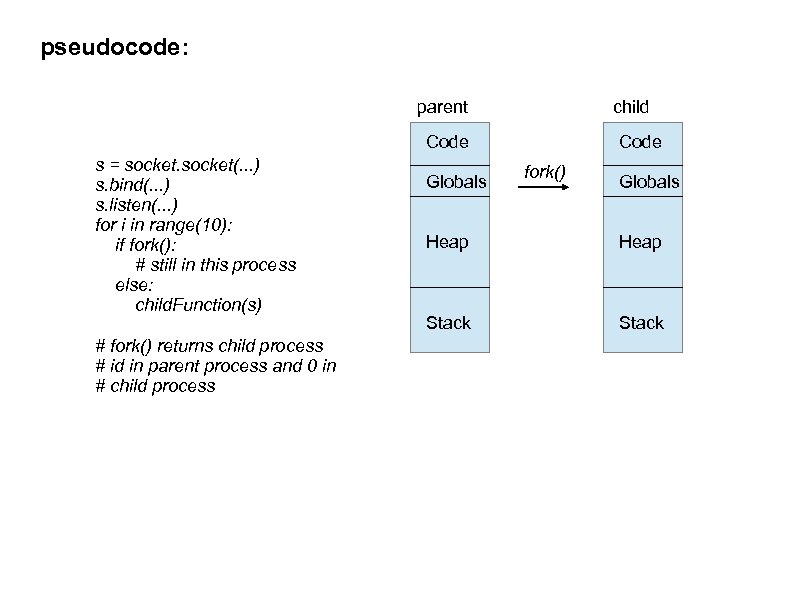

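Pseudocode (fork() gives the child its own copy of the parent's Code, Globals, Heap and Stack; it returns the child's process id in the parent and 0 in the child):

```python
import os
import socket

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
s.bind(('', 1060))          # hypothetical address
s.listen(5)
for i in range(10):
    if os.fork():
        pass                # got the child's PID: still in the parent
    else:
        child_function(s)   # got 0: in the child (hypothetical function)
        os._exit(0)         # the child must not fall back into this loop
```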

pseudocode: parent child Code s = socket(. . . ) s. bind(. . . ) s. listen(. . . ) for i in range(10): if fork(): # still in this process else: child. Function(s) # fork() returns child process # id in parent process and 0 in # child process Globals Code fork() Globals Heap Stack

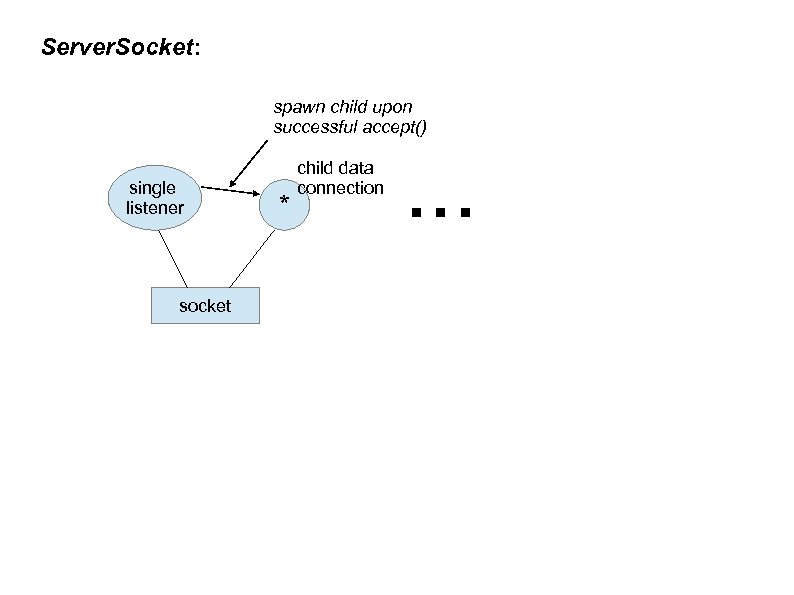

SocketServer: a single listener socket; upon each successful accept(), spawn a child to handle that data connection.

Server. Socket: spawn child upon successful accept() single listener socket * child data connection . . .

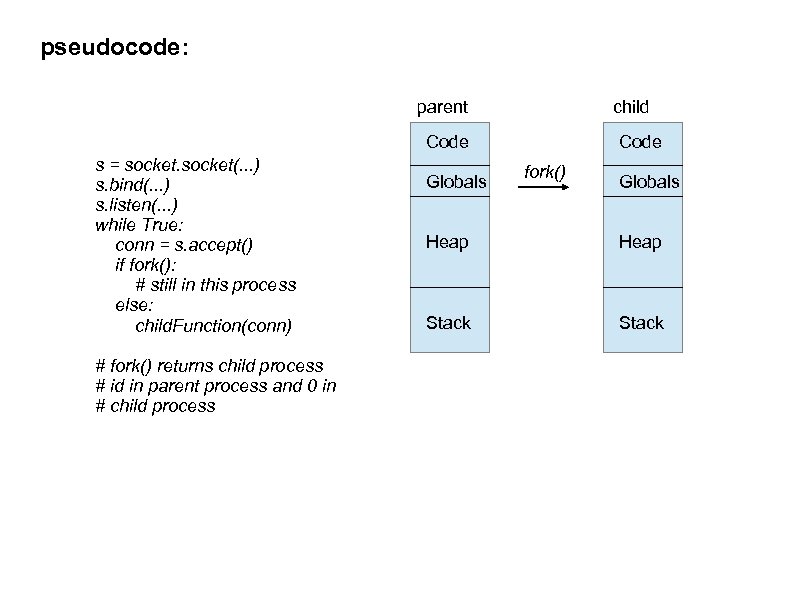

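Pseudocode (fork() returns the child's process id in the parent and 0 in the child):

```python
import os
import socket

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
s.bind(('', 1060))             # hypothetical address
s.listen(5)
while True:
    conn, addr = s.accept()    # accept() returns (socket, address)
    if os.fork():
        conn.close()           # parent: the child owns this connection now
    else:
        s.close()              # child: it never accepts, so drop the listener
        child_function(conn)   # hypothetical per-connection handler
        os._exit(0)
# (a real server must also reap finished children, e.g. with os.waitpid())
```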

pseudocode: parent child Code s = socket(. . . ) s. bind(. . . ) s. listen(. . . ) while True: conn = s. accept() if fork(): # still in this process else: child. Function(conn) # fork() returns child process # id in parent process and 0 in # child process Globals Code fork() Globals Heap Stack

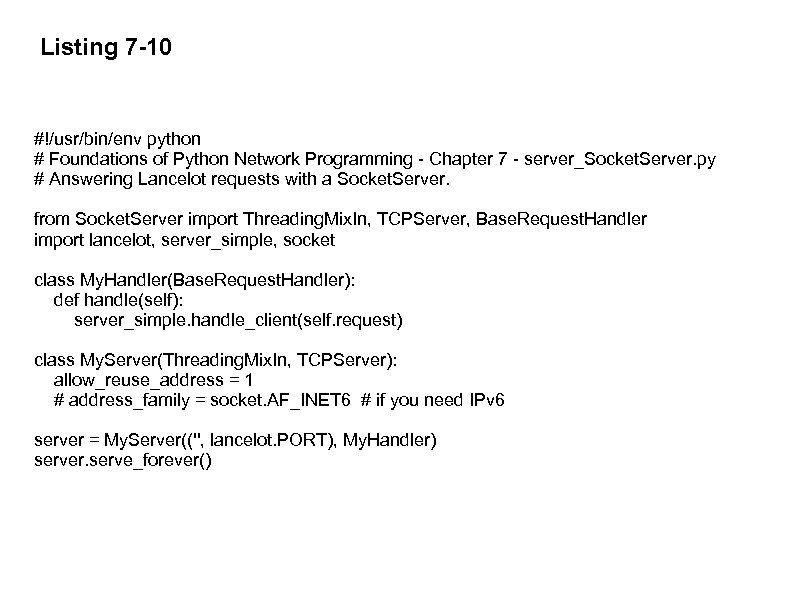

Listing 7-10

```python
#!/usr/bin/env python
# Foundations of Python Network Programming - Chapter 7 - server_SocketServer.py
# Answering Lancelot requests with a SocketServer.

from SocketServer import ThreadingMixIn, TCPServer, BaseRequestHandler
import lancelot, server_simple, socket

class MyHandler(BaseRequestHandler):
    def handle(self):
        server_simple.handle_client(self.request)

class MyServer(ThreadingMixIn, TCPServer):
    allow_reuse_address = 1
    # address_family = socket.AF_INET6  # if you need IPv6

server = MyServer(('', lancelot.PORT), MyHandler)
server.serve_forever()
```
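For Python 3 readers: the module is now spelled `socketserver`, and it still provides `ThreadingMixIn` (and, on Unix, `ForkingMixIn`). A self-contained sketch of the same server, with a hypothetical `QA` table in place of `lancelot` and an inline loop in place of `server_simple.handle_client()`:

```python
import socketserver
import threading

# Hypothetical stand-in for the book's lancelot question/answer table.
QA = {b'What is your quest?': b'To seek the Holy Grail.'}


class LancelotHandler(socketserver.BaseRequestHandler):
    def handle(self):
        # self.request is the connected socket for this one client.
        question = b''
        while True:
            data = self.request.recv(4096)
            if not data:                    # client closed the connection
                return
            question += data
            if question.endswith(b'?'):
                self.request.sendall(QA.get(question, b'I do not know.'))
                question = b''


class LancelotServer(socketserver.ThreadingMixIn, socketserver.TCPServer):
    allow_reuse_address = True
    daemon_threads = True   # don't keep the process alive for client threads
```

Usage: `LancelotServer(('', 1060), LancelotHandler).serve_forever()` starts a new thread for every accepted connection, just as Listing 7-10 does.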

Listing 7 -10 #!/usr/bin/env python # Foundations of Python Network Programming - Chapter 7 - server_Socket. Server. py # Answering Lancelot requests with a Socket. Server. from Socket. Server import Threading. Mix. In, TCPServer, Base. Request. Handler import lancelot, server_simple, socket class My. Handler(Base. Request. Handler): def handle(self): server_simple. handle_client(self. request) class My. Server(Threading. Mix. In, TCPServer): allow_reuse_address = 1 # address_family = socket. AF_INET 6 # if you need IPv 6 server = My. Server(('', lancelot. PORT), My. Handler) server. serve_forever()

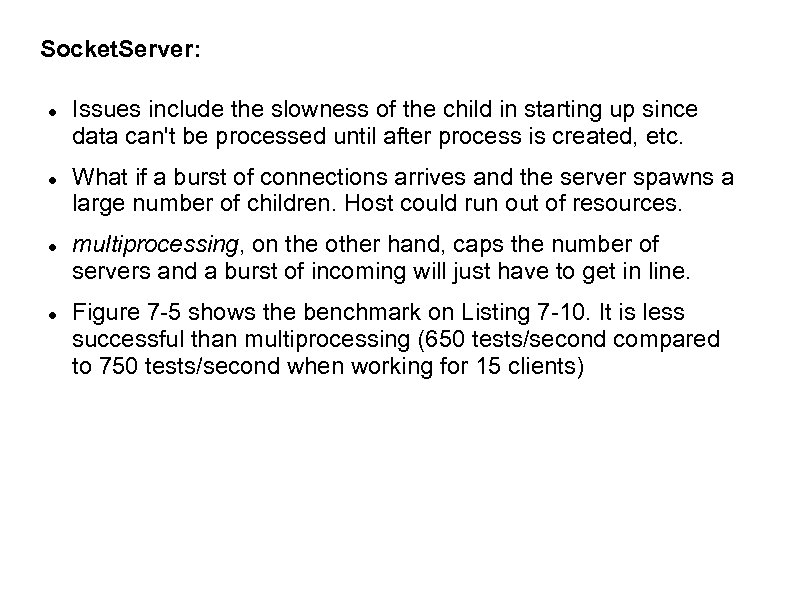

SocketServer: Issues include the slowness of starting each child: data can't be processed until the thread or process has been created. And what if a burst of connections arrives and the server spawns a large number of children? The host could run out of resources. multiprocessing (server_multi.py), on the other hand, caps the number of servers, and a burst of incoming connections simply has to wait in line. Figure 7-5 shows the benchmark of Listing 7-10: it is less successful than multiprocessing (650 tests/second compared to 750 tests/second with 15 clients).
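A sketch of the capped alternative in Python 3: a master thread accepts connections only while a worker slot is free, so a burst waits in the kernel's listen() backlog instead of spawning unlimited threads. It combines the standard library's `ThreadPoolExecutor` with a `BoundedSemaphore`; it is not SocketServer's or multiprocessing's own mechanism, and `serve_capped` and its parameters are hypothetical (`max_clients` only exists so the sketch can stop).

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def serve_capped(listen_sock, handle_client, max_workers=10, max_clients=None):
    """Master accepts; at most max_workers clients are served at once."""
    slots = threading.BoundedSemaphore(max_workers)

    def run(client_sock):
        try:
            handle_client(client_sock)
        finally:
            client_sock.close()
            slots.release()               # let the master accept another client

    served = 0
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while max_clients is None or served < max_clients:
            slots.acquire()               # wait while every worker is busy...
            client_sock, _ = listen_sock.accept()  # ...so bursts queue in the backlog
            pool.submit(run, client_sock)
            served += 1
```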


Process and Thread Coordination: So far we have assumed that the threads or processes serving one data connection need not share data with those serving other connections. If, for example, the worker threads all connect to the same database, we assume you are using a thread-safe database API, and that no problems arise when the workers share a resource (writing to the same log file, for example). If this is not so, you need to be skilled in concurrent programming. Our author's advice:
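As an illustration of a shared resource, the sketch below lets several worker threads write to one log file and serializes the writes with a threading.Lock, so lines from different workers never interleave. SharedLog and demo() are hypothetical names; in real code, the handlers of Python's logging module already do this locking for you.

```python
import os
import tempfile
import threading


class SharedLog:
    """One log file shared by many worker threads; a lock serializes writes."""

    def __init__(self, path):
        self._file = open(path, "a", encoding="utf-8")
        self._lock = threading.Lock()

    def write(self, worker_id, message):
        with self._lock:                   # only one thread writes at a time
            self._file.write(f"[worker {worker_id}] {message}\n")
            self._file.flush()

    def close(self):
        with self._lock:
            self._file.close()


def demo(n_workers=4, n_messages=100):
    """Hypothetical workload: each worker logs n_messages lines."""
    path = os.path.join(tempfile.mkdtemp(), "server.log")
    log = SharedLog(path)

    def work(worker_id):
        for i in range(n_messages):
            log.write(worker_id, f"handled request {i}")

    threads = [threading.Thread(target=work, args=(w,)) for w in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    log.close()
    with open(path, encoding="utf-8") as f:
        return f.read().splitlines()
```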


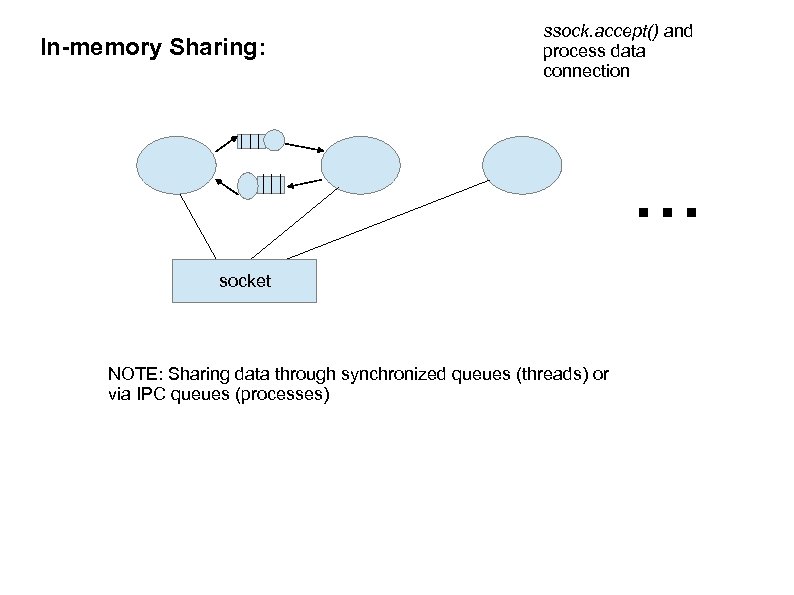

In-memory Sharing: [Figure: each worker runs ssock.accept() and processes its data connection socket] NOTE: Data is shared through synchronized queues (threads) or through IPC queues (processes).
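The two kinds of queue in the note can be compared in a short sketch: worker threads report results through a queue.Queue, and worker processes report the same results through a multiprocessing.Queue, which pickles each item and sends it through a pipe. count_words() is a hypothetical stand-in for per-connection work, and the "fork" start method assumes a POSIX system.

```python
import multiprocessing
import queue
import threading


def count_words(text, out_q):
    """Hypothetical per-connection work: report a result through a queue."""
    out_q.put(len(text.split()))


def total_with_threads(texts):
    q = queue.Queue()              # synchronized in-memory queue shared by threads
    workers = [threading.Thread(target=count_words, args=(t, q)) for t in texts]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return sum(q.get() for _ in texts)


def total_with_processes(texts):
    ctx = multiprocessing.get_context("fork")   # assumption: POSIX system
    q = ctx.Queue()                # IPC queue: items are pickled and sent through a pipe
    workers = [ctx.Process(target=count_words, args=(t, q)) for t in texts]
    for w in workers:
        w.start()
    results = [q.get() for _ in texts]   # drain the queue before join() to avoid a deadlock
    for w in workers:
        w.join()
    return sum(results)
```

Both functions use the same put()/get() interface, which is what makes the high-level queues convenient: the worker code does not change when threads are swapped for processes.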


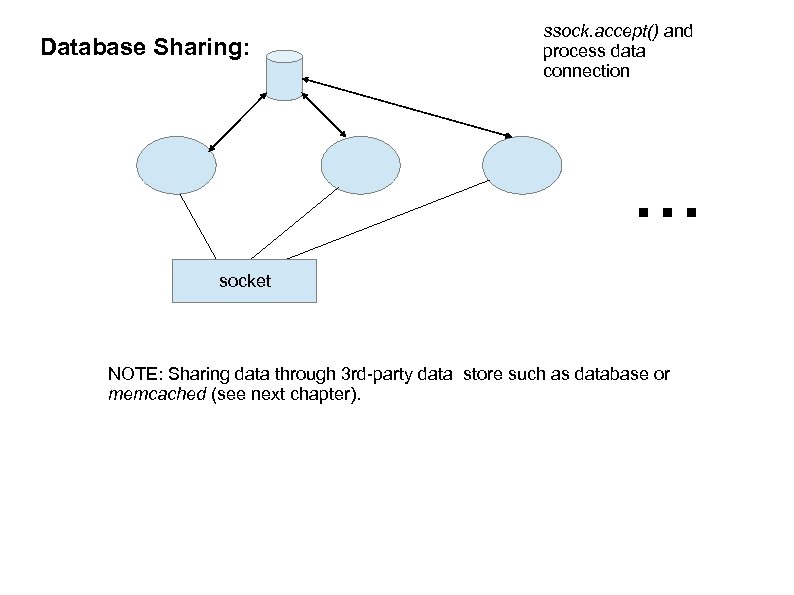

Database Sharing: [Figure: each worker runs ssock.accept() and processes its data connection socket] NOTE: Data is shared through a third-party data store such as a database or memcached (see next chapter).
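A minimal sketch of sharing through a data store, with SQLite (the standard-library sqlite3 module) standing in for the database or memcached mentioned above: each worker thread opens its own connection to the same database file, and the database serializes the writes. The table layout and the function names are hypothetical.

```python
import os
import sqlite3
import tempfile
import threading


def init_db(path):
    db = sqlite3.connect(path)
    try:
        db.execute("CREATE TABLE IF NOT EXISTS hits (worker INTEGER, request INTEGER)")
        db.commit()
    finally:
        db.close()


def worker(path, worker_id, n_requests):
    # Each worker opens its own connection; the database serializes the writes.
    db = sqlite3.connect(path, timeout=10)   # wait up to 10 s if another writer holds the lock
    try:
        for i in range(n_requests):
            with db:                         # one transaction per request, committed on success
                db.execute("INSERT INTO hits VALUES (?, ?)", (worker_id, i))
    finally:
        db.close()


def demo(n_workers=4, n_requests=25):
    path = os.path.join(tempfile.mkdtemp(), "shared.db")
    init_db(path)
    threads = [threading.Thread(target=worker, args=(path, w, n_requests))
               for w in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    db = sqlite3.connect(path)
    try:
        (count,) = db.execute("SELECT COUNT(*) FROM hits").fetchone()
    finally:
        db.close()
    return count
```

The workers share no Python objects at all; the only shared state lives in the database, which is the approach web programmers usually take.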


Process and Thread Coordination:

1. Make sure you understand the difference between threads and processes. Threads keep sharing memory after they are created, while processes share only their initial state and then diverge. Threads are “convenient”, while processes are “safe”.
2. Use the high-level data structures that Python provides. For example, there are thread-safe queues, and special queues designed to move data between processes.
3. Limit shared data.
4. Check the Standard Library and the Python Package Index for something others have already written.
5. Follow the path taken by web programmers, where shared data is kept in a database.
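Point 1 can be seen directly: a change made by a thread is visible to the rest of the program, while a change made in a forked child process stays in the child's copy of memory. The sketch assumes a POSIX system for the "fork" start method; counter, bump() and demo() are hypothetical names.

```python
import multiprocessing
import threading

counter = {"value": 0}   # module-level state: shared by threads, copied into forked children


def bump():
    counter["value"] += 1


def demo():
    counter["value"] = 0

    t = threading.Thread(target=bump)
    t.start()
    t.join()
    after_thread = counter["value"]            # the thread changed our memory

    ctx = multiprocessing.get_context("fork")  # assumption: POSIX system
    p = ctx.Process(target=bump)
    p.start()
    p.join()
    after_process = counter["value"]           # the child changed only its own copy

    return after_thread, after_process
```

demo() returns (1, 1): the thread's increment is visible, the child process's is not.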


Inetd: An old-timer that saves on daemons: a single daemon listens on several well-known ports simultaneously, using select() or poll(). The program to run for each port once accept() returns is listed in /etc/inetd.conf. Problem: how do you give each new process the file descriptor of the newly connected client socket? Answer: duplicate the connected socket onto stdin and stdout, then start the program that handles the connection, which inherits them just as it would inherit redirected input and output. The service routine is written for stdin and stdout only; it does not even know it is connected to a remote client.
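The descriptor trick can be sketched in a few lines: after accept(), fork, use os.dup2() to copy the connected socket onto file descriptors 0 and 1, and exec the service program. This shows only that mechanism, not a real inetd (no /etc/inetd.conf, one port, one client). SERVICE and serve_once() are hypothetical, and the sketch assumes a POSIX system.

```python
import os
import socket
import sys

# Hypothetical service program: it reads stdin and writes stdout, nothing else.
SERVICE = [sys.executable, "-c",
           "import sys; sys.stdout.write(sys.stdin.readline().upper())"]


def serve_once(listener: socket.socket, argv: list) -> None:
    """Accept one client and run argv with the connected socket as stdin and stdout."""
    conn, _addr = listener.accept()
    pid = os.fork()
    if pid == 0:                          # child process
        try:
            listener.close()
            os.dup2(conn.fileno(), 0)     # socket becomes file descriptor 0 (stdin)
            os.dup2(conn.fileno(), 1)     # ... and file descriptor 1 (stdout)
            conn.close()                  # fds 0 and 1 still refer to the connection
            os.execvp(argv[0], argv)      # replace this process with the service program
        finally:
            os._exit(127)                 # reached only if something above failed
    conn.close()                          # parent: the child owns the connection now
    os.waitpid(pid, 0)
```

The service program in SERVICE contains no networking code at all; it simply reads a line from stdin and writes to stdout.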


Event-driven Servers: See page 289 for this feature
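An event-driven server handles every client in a single thread by asking the operating system which sockets are ready, instead of blocking on any one of them. The sketch below uses the standard selectors module, which wraps select(), poll() or epoll as available. The uppercase-echo service, the one-recv()-per-request simplification and the max_clients stopping condition are assumptions for the demo, not the book's code.

```python
import selectors
import socket


def serve_events(listener: socket.socket, max_clients: int) -> None:
    """Serve clients from one thread; stop after max_clients (demo assumption)."""
    sel = selectors.DefaultSelector()
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="listener")
    served = 0
    while served < max_clients:
        for key, _mask in sel.select():
            sock = key.fileobj
            if key.data == "listener":
                # The listening socket is readable: a new client is waiting.
                conn, _addr = sock.accept()
                conn.setblocking(False)
                sel.register(conn, selectors.EVENT_READ, data="client")
            else:
                # A client socket is readable. Assumes each request fits in one recv().
                data = sock.recv(1024)
                if data:
                    sock.sendall(data.upper())   # assumes a small reply that fits the send buffer
                sel.unregister(sock)
                sock.close()
                served += 1
    sel.close()
```

Like the chatroom example, this runs on a single CPU core; its advantage is that no thread or process is created per connection.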
