- Number of slides: 35

Chapter 7 Multicores, Multiprocessors, and Clusters

§ 7.1 Introduction
- Goal: connecting multiple computers to get higher performance
  - Multiprocessors
  - Scalability, availability, power efficiency
- Task-level (process-level) parallelism
  - Independent tasks running in parallel
  - High throughput for independent jobs
- Parallel processing program
  - Single program run on multiple processors

Chapter 7 — Multicores, Multiprocessors, and Clusters — 2

§ 7.1 Introduction
- Multicore microprocessors
  - Chips with multiple processors (cores); processors are called cores in a multicore chip
  - Almost always shared memory multiprocessors (SMPs)
- Clusters
  - Microprocessors housed in many independent servers
  - Search engines, web servers, email servers, and databases
  - Memory not shared

§ 7.1 Introduction
- The number of cores is expected to increase with Moore’s Law
- The challenge is to create hardware and software that make it easy to write correct parallel processing programs that execute efficiently, in both performance and energy, as the number of cores per chip increases.

Hardware and Software
- Hardware
  - Serial: e.g., Pentium 4
  - Parallel: e.g., Intel Core i7
- Software
  - Sequential: e.g., matrix multiplication
  - Concurrent: e.g., operating system
- Sequential/concurrent software can run on serial/parallel hardware
  - Challenge: making effective use of parallel hardware

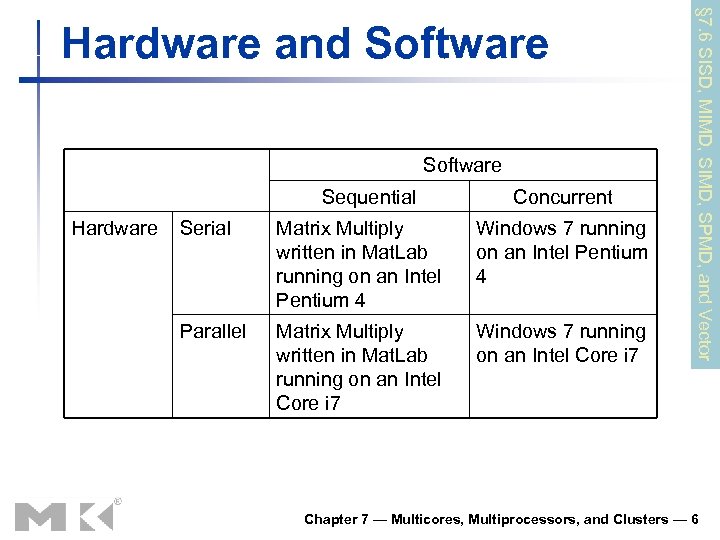

Hardware and Software (§ 7.6 SISD, MIMD, SPMD, and Vector)

| Hardware | Sequential software | Concurrent software |
|---|---|---|
| Serial | Matrix Multiply written in MATLAB running on an Intel Pentium 4 | Windows 7 running on an Intel Pentium 4 |
| Parallel | Matrix Multiply written in MATLAB running on an Intel Core i7 | Windows 7 running on an Intel Core i7 |

Parallel Processing Programs
- Difficulties
  - Not with the hardware
  - There are too few important application programs written to complete tasks sooner on multiprocessors
  - It is difficult to write software that uses multiple processors to complete one task faster
  - The problem gets worse as the number of processors increases

Parallel Programming (§ 7.2 The Difficulty of Creating Parallel Processing Programs)
- Parallel software is the problem
- Need to get significant performance improvement
  - Otherwise, just use a faster uniprocessor, since it’s easier!

Parallel Programming (§ 7.2 The Difficulty of Creating Parallel Processing Programs)
- Challenges
  - Scheduling the workload
  - Partitioning the work into parallel pieces
  - Balancing the load evenly between the processors
  - Synchronizing the work
  - Communication overhead between the processors

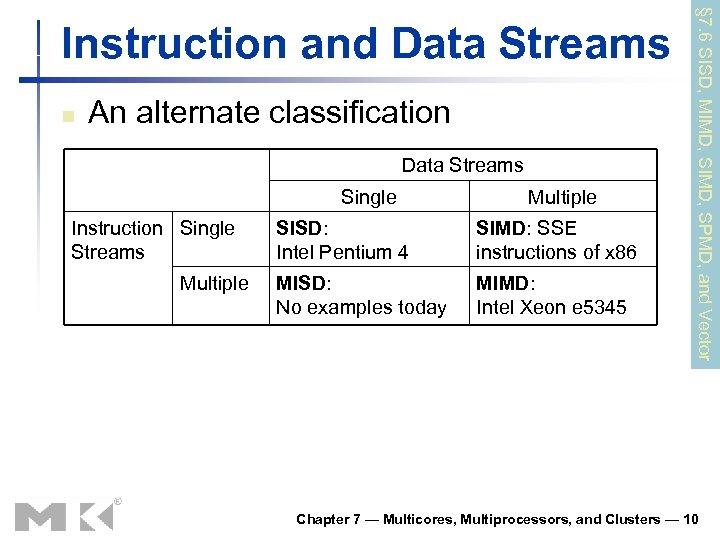

Instruction and Data Streams (§ 7.6 SISD, MIMD, SPMD, and Vector)
- An alternate classification

| Instruction streams | Single data stream | Multiple data streams |
|---|---|---|
| Single | SISD: Intel Pentium 4 | SIMD: SSE instructions of x86 |
| Multiple | MISD: No examples today | MIMD: Intel Xeon E5345 |

Instruction and Data Streams (§ 7.6 SISD, MIMD, SPMD, and Vector)
- Conventional uniprocessor
  - SISD: single instruction stream and single data stream
- Conventional multiprocessor
  - MIMD: multiple instruction streams and multiple data streams
  - Can be separate programs running on different processors, or
  - A single program that runs on all processors (SPMD)
    - Relies on conditional statements when different processors should execute different sections of code
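
To make the single-program idea concrete, here is a minimal C sketch. It assumes a hypothetical processor count `NPROCS` and hypothetical functions `spmd_body` and `spmd_sum`; for simplicity the "processors" are simulated by an ordinary loop rather than real threads, so it only illustrates how one program branches on its processor ID `Pn`.

```c
#include <stddef.h>

#define NPROCS 4 /* hypothetical number of processors */

/* The single program every processor runs. Pn selects the slice of data;
   the `if (Pn == 0)` branch is the conditional that makes one processor
   execute a different section of code (here: the leftover elements). */
static long spmd_body(int Pn, const int *a, size_t n)
{
    size_t chunk = n / NPROCS;
    long s = 0;
    for (size_t i = (size_t)Pn * chunk; i < (size_t)(Pn + 1) * chunk; i++)
        s += a[i];
    if (Pn == 0) {                      /* only processor 0 runs this */
        for (size_t i = NPROCS * chunk; i < n; i++)
            s += a[i];
    }
    return s;
}

/* Stand-in for launching the program on all processors: a plain loop. */
long spmd_sum(const int *a, size_t n)
{
    long total = 0;
    for (int Pn = 0; Pn < NPROCS; Pn++)
        total += spmd_body(Pn, a, n);
    return total;
}
```

For example, with the numbers 1 through 10, `spmd_sum(a, 10)` gives 55: processors 0 to 3 each sum two elements, and processor 0 also sums the last two.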

Instruction and Data Streams (§ 7.6 SISD, MIMD, SPMD, and Vector)
- SIMD: single instruction stream and multiple data streams
  - Operates on vectors of data, e.g., one instruction adds 64 numbers by sending 64 data streams to 64 ALUs to form 64 sums within a single clock cycle
  - Works best when dealing with arrays in for loops
  - Must have a lot of identically structured data
  - E.g., graphics processing
- MISD: multiple instruction streams and single data stream
  - Performs a series of computations on a single data stream in a pipelined fashion
  - E.g., parse input from the network, decrypt data, decompress data, search for a match
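
The SSE example of SIMD can be illustrated with a short C sketch. SSE adds four single-precision floats per instruction rather than the 64 in the slide's example; the function name `add_arrays` is hypothetical, and the `#if defined(__SSE__)` guard (a macro GCC and Clang define on x86 targets with SSE enabled) falls back to a plain loop elsewhere.

```c
#include <stddef.h>
#if defined(__SSE__)
#include <xmmintrin.h>   /* SSE intrinsics on x86 */
#endif

/* c[i] = a[i] + b[i]. With SSE, one _mm_add_ps instruction adds four
   floats at once (single instruction, multiple data); leftover elements,
   or the whole array on non-SSE targets, use a scalar loop. */
void add_arrays(const float *a, const float *b, float *c, size_t n)
{
    size_t i = 0;
#if defined(__SSE__)
    for (; i + 4 <= n; i += 4) {
        __m128 va = _mm_loadu_ps(a + i);        /* load 4 floats (unaligned ok) */
        __m128 vb = _mm_loadu_ps(b + i);
        _mm_storeu_ps(c + i, _mm_add_ps(va, vb)); /* 4 sums, 1 add instruction */
    }
#endif
    for (; i < n; i++)                          /* scalar tail */
        c[i] = a[i] + b[i];
}
```

The loop over an array is exactly the "arrays in for loops" case: every element gets the same operation, so the data can be handled in vector-width chunks.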

§ 7.5 Hardware Multithreading
- While MIMD relies on multiple processes or threads to keep multiple processors busy, hardware multithreading allows multiple threads to share the functional units of a single processor in an overlapping fashion, to utilize the hardware resources efficiently.

§ 7.5 Hardware Multithreading
- Performing multiple threads of execution in parallel
  - The processor must duplicate the independent state of each thread
    - Replicate registers, program counter, etc.
  - Must have fast switching between threads
    - A process switch can take thousands of cycles
    - A thread switch can be instantaneous

§ 7.5 Hardware Multithreading
- Fine-grain multithreading
  - Switch threads after each cycle
  - Interleave instruction execution
  - If one thread stalls, others are executed
  - Instruction-level parallelism
- Coarse-grain multithreading
  - Only switch on a long stall (e.g., L2-cache miss)
  - Disadvantage: pipeline start-up cost
  - No instruction-level parallelism
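
The difference between the two switching policies can be sketched as two thread-selection functions in C. This is a toy model, not how a real pipeline is built: `fine_grain_pick`, `coarse_grain_pick`, and the `ready` array are hypothetical, and the pipeline start-up cost of a coarse-grain switch is left to the caller.

```c
#include <stdbool.h>

/* Fine-grain: switch every cycle, round-robin starting from the thread
   after `last`, skipping stalled threads (ready[t] == false).
   Returns -1 if every thread is stalled (the cycle goes idle). */
int fine_grain_pick(const bool *ready, int nthreads, int last)
{
    for (int k = 1; k <= nthreads; k++) {
        int t = (last + k) % nthreads;
        if (ready[t])
            return t;
    }
    return -1;
}

/* Coarse-grain: keep running `current` unless it hit a long stall
   (e.g., an L2-cache miss); only then switch to the next ready thread.
   A real switch would also pay a pipeline refill penalty. */
int coarse_grain_pick(const bool *ready, int nthreads, int current,
                      bool long_stall)
{
    if (!long_stall)
        return current;
    return fine_grain_pick(ready, nthreads, current);
}
```

With threads 0, 2, and 3 ready, fine-grain selection after thread 0 picks thread 2 and after thread 3 wraps around to thread 0, while coarse-grain selection stays on the current thread until a long stall is reported.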

Simultaneous Multithreading
- In a multiple-issue, dynamically scheduled pipelined processor
  - Schedule instructions from multiple threads
  - Instructions from independent threads execute when function units are available
  - Within threads, dependencies are handled by scheduling and register renaming
- Example: Intel Pentium 4 HT
  - Two threads: duplicated registers, shared function units and caches
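
As a rough illustration of SMT issuing from several threads in one cycle, the C function below fills a fixed number of issue slots from per-thread counts of ready instructions. The name `smt_issue_cycle`, the round-robin starting thread, and the greedy policy are assumptions for the sketch; real processors use more elaborate fetch and issue policies.

```c
/* One SMT issue cycle: fill up to `width` issue slots with ready
   instructions from several threads. avail[t] is how many independent,
   ready instructions thread t has this cycle; issued[t] receives how many
   were issued. Threads are visited round-robin starting at `first` so no
   thread is always last in line. Returns the number of slots filled. */
int smt_issue_cycle(int width, const int *avail, int nthreads, int first,
                    int *issued)
{
    int used = 0;
    for (int t = 0; t < nthreads; t++)
        issued[t] = 0;
    for (int k = 0; k < nthreads && used < width; k++) {
        int t = (first + k) % nthreads;
        int take = avail[t];
        if (take > width - used)
            take = width - used;      /* only as many as slots remain */
        issued[t] = take;
        used += take;
    }
    return used;
}
```

For instance, with 4 slots and threads offering 2, 0, and 3 ready instructions starting at thread 0, the slots are filled as 2, 0, 2; with only 1 and 1 ready, two of the four slots stay idle.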

Multithreading Example

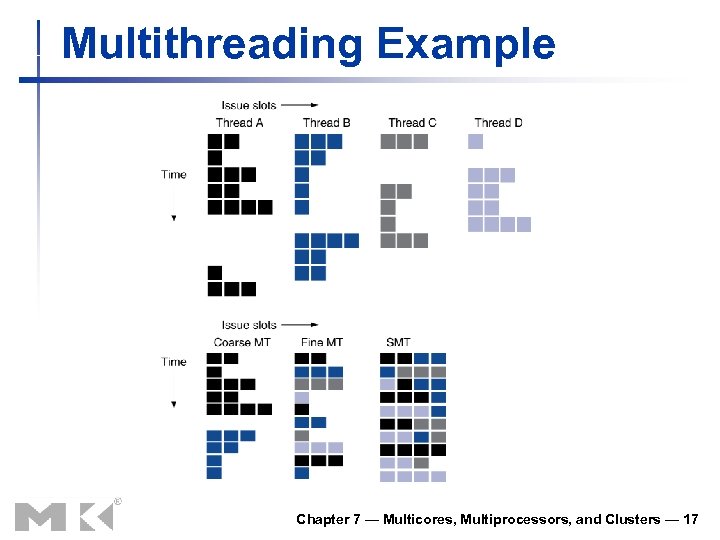

Hardware Multithreading Example (§ 7.5 Hardware Multithreading)
- The four threads at the top show how each would execute running alone on a standard superscalar processor without multithreading support
  - A major stall, such as an instruction cache miss, can leave the entire processor idle.
- Coarse-grained: the long stalls are partially hidden by switching to another thread that uses the resources of the processor.
  - Pipeline start-up overhead still leads to idle cycles

Hardware Multithreading Example (§ 7.5 Hardware Multithreading)
- Fine-grained
  - Instruction-level parallelism
  - The interleaving of threads mostly eliminates idle clock cycles
- SMT
  - Thread-level parallelism and instruction-level parallelism are both exploited
  - Multiple threads use the issue slots in a single clock cycle

Future of Multithreading
- Will it survive? In what form?
- Power considerations lead to simplified microarchitectures
  - Simpler forms of multithreading
- Tolerating cache-miss latency
  - Thread switch may be most effective
- Multiple simple cores might share resources more effectively

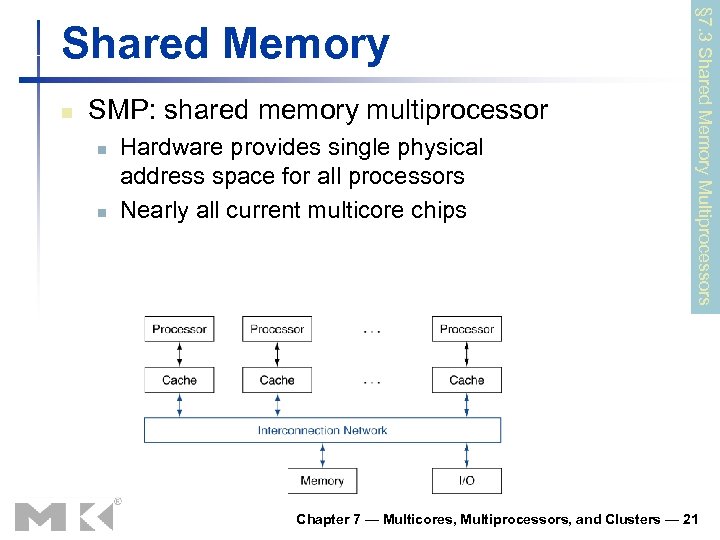

Shared Memory (§ 7.3 Shared Memory Multiprocessors)
- SMP: shared memory multiprocessor
  - Hardware provides a single physical address space for all processors
  - Nearly all current multicore chips

Shared Memory (§ 7.3 Shared Memory Multiprocessors)
- Memory access time can be either:
  - Uniform memory access (UMA): the access time to a word does not depend on which processor asks for it, or
  - Nonuniform memory access (NUMA): some memory accesses are much faster than others, depending on which processor asks for which word
    - Because main memory is divided and attached to different microprocessors or to different memory controllers
- Synchronize shared variables using locks
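
Lock-based synchronization of a shared variable can be sketched with POSIX threads. `pthread_mutex_lock` and `pthread_mutex_unlock` are standard POSIX calls; the shared `counter`, the `worker` and `locked_count` functions, and the limit of 16 threads are hypothetical choices for this example. Compile with `-pthread`.

```c
#include <pthread.h>
#include <stddef.h>

/* Shared variable and the lock that protects it. */
static long counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;
static int iters_per_thread;

static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < iters_per_thread; i++) {
        pthread_mutex_lock(&counter_lock);   /* acquire the lock */
        counter = counter + 1;               /* only one thread at a time here */
        pthread_mutex_unlock(&counter_lock); /* release the lock */
    }
    return NULL;
}

/* Starts up to 16 threads that each add 1 to the shared counter `iters`
   times, waits for them, and returns the final counter value. */
long locked_count(int nthreads, int iters)
{
    pthread_t tid[16];
    int started = 0;
    if (nthreads > 16)
        nthreads = 16;
    counter = 0;
    iters_per_thread = iters;
    for (int t = 0; t < nthreads; t++)
        if (pthread_create(&tid[started], NULL, worker, NULL) == 0)
            started++;
    for (int t = 0; t < started; t++)
        pthread_join(tid[t], NULL);
    return counter;
}
```

Without the lock, two threads could both read the same old value of `counter` and one update would be lost; with it, `locked_count(4, 10000)` returns 40000.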

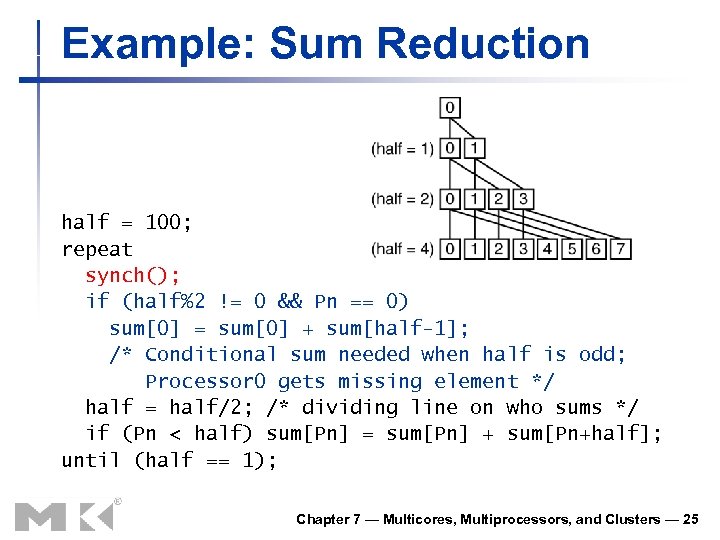

Example: Sum Reduction
- Sum 100,000 numbers on a 100-processor UMA
  - Each processor has ID: 0 ≤ Pn ≤ 99

Example: Sum Reduction
- Sum 100,000 numbers on a 100-processor UMA
  - Each processor has ID: 0 ≤ Pn ≤ 99
  - Partition 1000 numbers per processor
  - Initial summation on each processor:

        sum[Pn] = 0;
        for (i = 1000*Pn; i < 1000*(Pn+1); i = i + 1)
            sum[Pn] = sum[Pn] + A[i];

- Now need to add these partial sums
  - Reduction: divide and conquer
  - Half the processors add pairs, then a quarter, …
  - Need to synchronize between reduction steps

Example: Sum Reduction

    half = 100;
    repeat
        synch();
        if (half%2 != 0 && Pn == 0)
            sum[0] = sum[0] + sum[half-1];
            /* Conditional sum needed when half is odd;
               Processor 0 gets missing element */
        half = half/2; /* dividing line on who sums */
        if (Pn < half) sum[Pn] = sum[Pn] + sum[Pn+half];
    until (half == 1);
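
The two phases of the example can be checked with a runnable C version. It is a sequential simulation, not a parallel program: the loops over `Pn` play the role of the 100 processors, and each pass of the `do`/`while` loop corresponds to one `synch()` step. This is valid because, within a step, each simulated processor writes only its own `sum[Pn]` (with `Pn < half`) and reads only its own entry and entries at index `half` or above, so the order in which the simulated processors run does not matter. The function name `reduce_sum` and the `long long` element type are assumptions.

```c
#define P 100        /* processors in the example */
#define N 100000     /* numbers to sum */
_Static_assert(P >= 2 && N % P == 0, "sketch assumes P >= 2 and an even split");

static long long sum[P];   /* one partial sum per (simulated) processor */

long long reduce_sum(const long long *A)
{
    /* Initial summation: processor Pn sums its own N/P = 1000 numbers. */
    for (int Pn = 0; Pn < P; Pn++) {
        sum[Pn] = 0;
        for (int i = (N / P) * Pn; i < (N / P) * (Pn + 1); i = i + 1)
            sum[Pn] = sum[Pn] + A[i];
    }

    /* Reduction: one loop pass per synch() step. */
    int half = P;
    do {
        if (half % 2 != 0)                     /* processor 0 only */
            sum[0] = sum[0] + sum[half - 1];   /* pick up the odd element */
        half = half / 2;                       /* dividing line on who sums */
        for (int Pn = 0; Pn < half; Pn++)      /* every processor with Pn < half */
            sum[Pn] = sum[Pn] + sum[Pn + half];
    } while (half != 1);
    return sum[0];
}
```

Filling `A` with 1, 2, …, 100000 gives 5000050000, the expected n(n + 1)/2. With P = 100, `half` takes the values 50, 25, 12, 6, 3, 1, and processor 0 picks up the odd element at the 25 and 3 steps. Requires C11 or later for `_Static_assert`.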

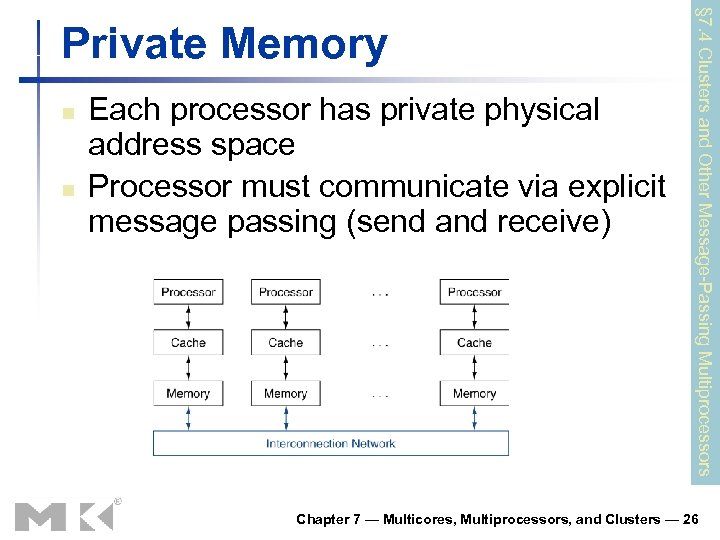

Private Memory (§ 7.4 Clusters and Other Message-Passing Multiprocessors)
- Each processor has a private physical address space
- Processors must communicate via explicit message passing (send and receive)
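
Explicit send and receive between private address spaces can be imitated on one POSIX machine with two processes and a pipe: after `fork()` the parent and child no longer share memory, so the child's partial sum has to be sent with `write()` and received with `read()`. This is only an analogy for a cluster (the pipe stands in for the network), and `two_process_sum` is a hypothetical name.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Sums a[0..n-1] with two processes that share no memory after fork():
   the child sums the upper half and sends its partial sum through a pipe;
   the parent sums the lower half and receives the child's result.
   Returns -1 on error. */
long two_process_sum(const long *a, int n)
{
    int fd[2];
    if (pipe(fd) != 0)
        return -1;

    int mid = n / 2;
    pid_t pid = fork();
    if (pid < 0) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }

    if (pid == 0) {                          /* child: private copy of memory */
        long partial = 0;
        for (int i = mid; i < n; i++)
            partial += a[i];
        close(fd[0]);
        ssize_t w = write(fd[1], &partial, sizeof partial);   /* send */
        _exit(w == (ssize_t)sizeof partial ? 0 : 1);
    }

    close(fd[1]);                            /* parent */
    long mine = 0;
    for (int i = 0; i < mid; i++)
        mine += a[i];

    long theirs = 0;
    ssize_t r = read(fd[0], &theirs, sizeof theirs);          /* receive */
    close(fd[0]);
    int status;
    waitpid(pid, &status, 0);
    if (r != (ssize_t)sizeof theirs)
        return -1;
    return mine + theirs;
}
```

On the array {1, 2, 3, 4, 5}, `two_process_sum` returns 15: the parent adds 1 + 2 and receives 12 from the child.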

Loosely Coupled Clusters
- Network of independent computers
  - Each has private memory and OS
  - Connected using the I/O system
    - E.g., Ethernet/switch, Internet
- Suitable for applications with independent tasks
  - Web servers, databases, simulations, …
- High availability, scalable, affordable
- Problems
  - Administration cost (prefer virtual machines)
  - Low interconnect bandwidth
    - cf. processor/memory bandwidth on an SMP

Clusters
- Clusters with high-performance, dedicated message-passing networks
- Many supercomputers today use custom networks
- Much more expensive than local area networks

Warehouse-Scale Computers
- A building to house, power, and cool 100,000 servers with a dedicated network
- Like large clusters, but their architecture and operation are more sophisticated
- They act as one giant computer

Grid Computing
- Separate computers interconnected by long-haul networks
  - E.g., Internet connections
  - Work units farmed out, results sent back
- Can make use of idle time on PCs

Networks inside a chip
- Thanks to Moore’s Law and the increasing number of cores per chip, we now need networks inside a chip as well.

Network Characteristics
- Performance
  - Latency: time per message on an unloaded network to send and receive a message
  - Throughput
    - The maximum number of messages that can be transmitted in a given time period
  - Congestion delays caused by contention for a portion of the network
- Fault tolerance: work with broken components
- Collision detection

Network Characteristics
- Cost, which includes:
  - Number of switches
  - Number of links on a switch to connect to the network
  - The width (number of bits) per link
  - The length of the links when the network is mapped into silicon
- Routability in silicon
- Power: energy efficiency

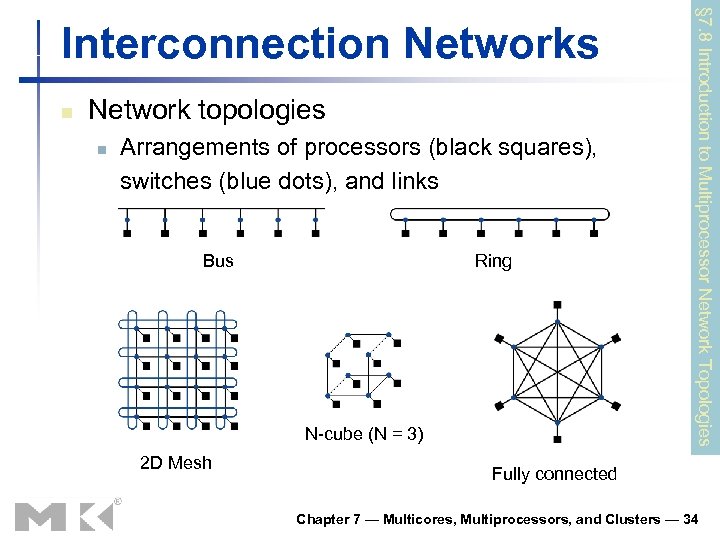

Interconnection Networks (§ 7.8 Introduction to Multiprocessor Network Topologies)
- Network topologies
  - Arrangements of processors (black squares), switches (blue dots), and links
- Pictured: Bus, Ring, N-cube (N = 3), 2D Mesh, Fully connected
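
One ingredient of network cost is the number of links. For the pictured topologies, the node-to-node link counts follow directly from their shapes: a ring of P nodes has P links, a fully connected network P(P - 1)/2, a k × k 2D mesh 2k(k - 1), and an n-cube with 2^n nodes n · 2^(n-1). The C sketch below encodes these counts; the function names are hypothetical, links from each processor to its own switch are not counted, and the bus is left out because it is a single shared medium.

```c
/* Node-to-node link counts for the pictured topologies (processor-to-
   switch links are not counted). */

/* Ring of P nodes: each node links to its successor, P links in total. */
long ring_links(long P)            { return P; }

/* Fully connected: one link per unordered pair of nodes. */
long fully_connected_links(long P) { return P * (P - 1) / 2; }

/* 2D mesh of k x k nodes: k-1 horizontal links in each of k rows,
   and the same number of vertical links in each of k columns. */
long mesh2d_links(long k)          { return 2 * k * (k - 1); }

/* n-cube with 2^n nodes: each node has n links, and each link is
   shared by two nodes. */
long ncube_links(int n)            { return (long)n * (1L << n) / 2; }
```

With 8 nodes, a ring needs 8 links, a 3-cube 12, and a fully connected network 28; a 4 × 4 mesh (16 nodes) needs 24. The quadratic growth of the fully connected count is why it is rarely used for large processor counts despite its performance.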

§ 7.13 Concluding Remarks
- Goal: higher performance by using multiple processors
- Difficulties
  - Developing parallel software
  - Devising appropriate architectures
- Many reasons for optimism
  - Changing software and application environment
  - Chip-level multiprocessors with lower latency, higher bandwidth interconnect
- An ongoing challenge for computer architects!
