- Number of slides: 157

Chapter 6 Testing Techniques for Software Validation

Compiled by: Prof. Bingchiang Jeng (鄭炳強), Department of Information Management, National Sun Yat-sen University

Email: jeng@mail.nsysu.edu.tw

Copyright Statement © Copyright 2004 by Bingchiang Jeng 鄭炳強. Permission is granted to academic use provided this notice is included and credit to the source.

Learning Objective

- Understand the role of testing and its difficulties as well as limits
- Understand the principles of software testing and their rationales
- Be able to differentiate between different approaches to software verification
- Be able to produce test cases using black-box and white-box testing approaches
- Be able to apply static verification techniques
- Be able to define a testing process

Outline

- Testing Basics & Terminology
- Dynamic Testing
  - Black Box, White Box, etc.
- Static Testing
  - Inspection, Walkthrough, etc.
- Testing Implementation
  - Test plan, Unit, System …
- Summary

How to Obtain Quality?

- Quality management
  - which actions contribute to quality, and how
- Well organized software process
  - requirements management
  - design traceability
  - configuration management
  - ……
  - Testing, validation, verification
- Well educated people
- Appropriate techniques and tools
- ……
- And then: How to check that quality goals are satisfied?

No Silver Bullet ……

(Diagram: many techniques surround QUALITY: quality control, software process, requirement management, verification, reviewing, inspection, testing, CMM, static analysis, certification, debugging, walk-through.)

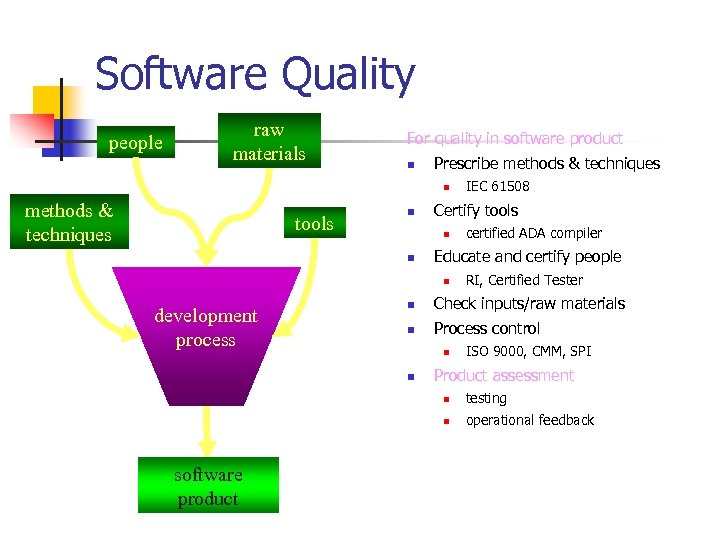

Software Quality

For quality in a software product:

- Prescribe methods & techniques
- Certify tools
  - certified ADA compiler
- Educate and certify people
  - IEC 61508 RI, Certified Tester
- Check inputs/raw materials
- Process control
  - ISO 9000, CMM, SPI
- Product assessment
  - testing
  - operational feedback

(Diagram labels: people, raw materials, methods & techniques, tools, development process, software product.)

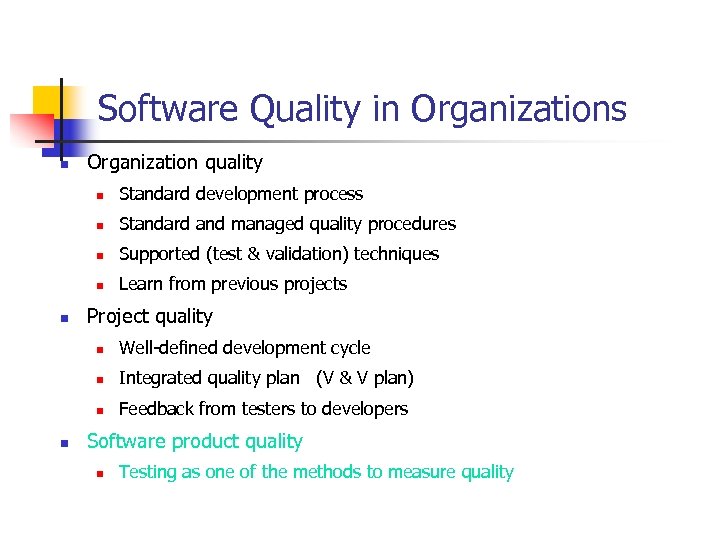

Software Quality in Organizations

- Organization quality
  - Standard development process
  - Standard and managed quality procedures
  - Supported (test & validation) techniques
  - Learn from previous projects
- Project quality
  - Well-defined development cycle
  - Integrated quality plan (V&V plan)
  - Feedback from testers to developers
- Software product quality
  - Testing as one of the methods to measure quality

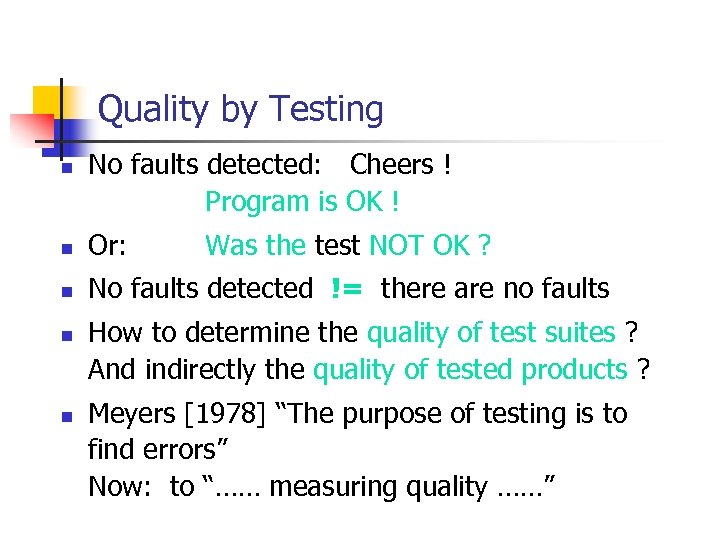

Quality by Testing

- No faults detected: Cheers! Program is OK!
- Or: no faults detected != there are no faults
  - Was the test NOT OK?
  - How to determine the quality of test suites? And indirectly the quality of tested products?
- Myers [1978]: "The purpose of testing is to find errors"
- Now: to "…… measuring quality ……"

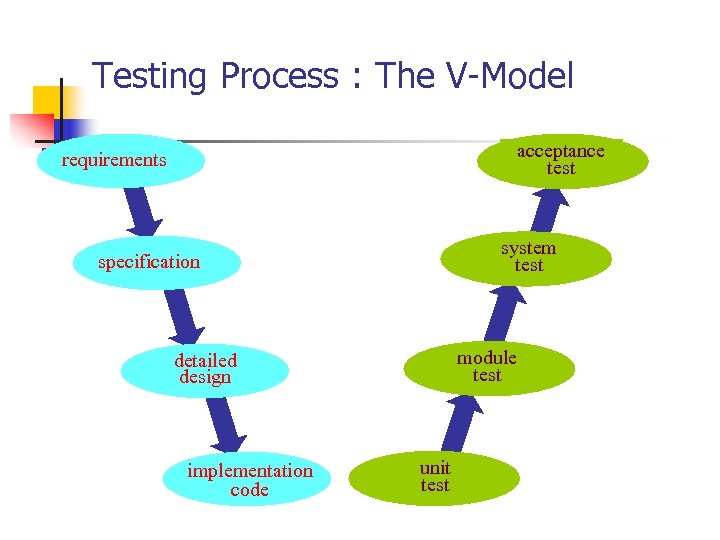

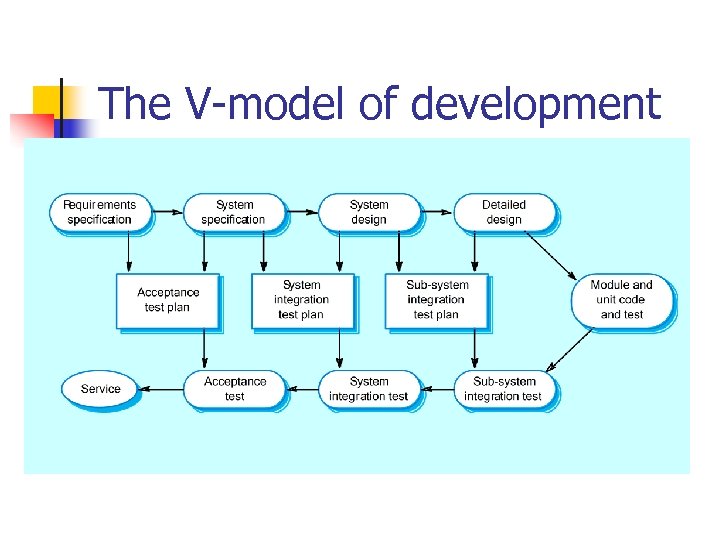

Testing Process: The V-Model

- requirements ↔ acceptance test
- specification ↔ system test
- detailed design ↔ module test
- implementation code ↔ unit test

The Role of Testing in Software Development The more realistic your testing. . . … the less shocking reality will be. - unknown ancient Zen Software Master

PART I TESTING BASICS

What Software Testing is … ?

- Checking programs against specifications
- Finding bugs in programs
- Determining user acceptability
- Ensuring that a system is ready for use
- Showing that a program performs correctly
- Demonstrating that errors are not present
- Understanding the limits of performance
- Learning what a system is not able to do
- Evaluating the capabilities of a system
- Etc …

Researchers' Definitions

- Testing is the process of executing a program or system with the intent of finding errors. (Myers)
- Testing is the process of establishing confidence that a program or system does what it is supposed to. (Hetzel)
- Testing is obviously concerned with errors, faults, failures and incidents. A test is the act of exercising software with test cases with an objective of 1) finding failures or 2) demonstrating correct execution. (Jorgensen)

Testing Scope

- Depending on the target, testing can be used in either of the following ways
  - Verification
    - Process of determining whether the output of one phase of development conforms to its previous phase, i.e., building the product right
  - Validation
    - Process of determining whether a fully developed system conforms to its SRS document, i.e., building the right product

Testing Objective

- Which objective of testing a program is the true one?
  - To show that this program is correct?
  - To find errors in this program?
- The first goal is not achievable in general and will not lead to effective testing
- The second is not easily achieved either
- Today, the purpose of most testing is to measure quality

Difficulties in Effectively Testing a Program

- Even given that testing can only show the presence, not the absence, of errors in a program, it is still a difficult task
  - The number of distinct paths in a program may be infinite if it has loops
  - Even if the number of paths is finite, exhaustive testing is usually impractical
  - One execution of a path is insufficient to determine the correctness of that path
- Exhaustive testing is impossible

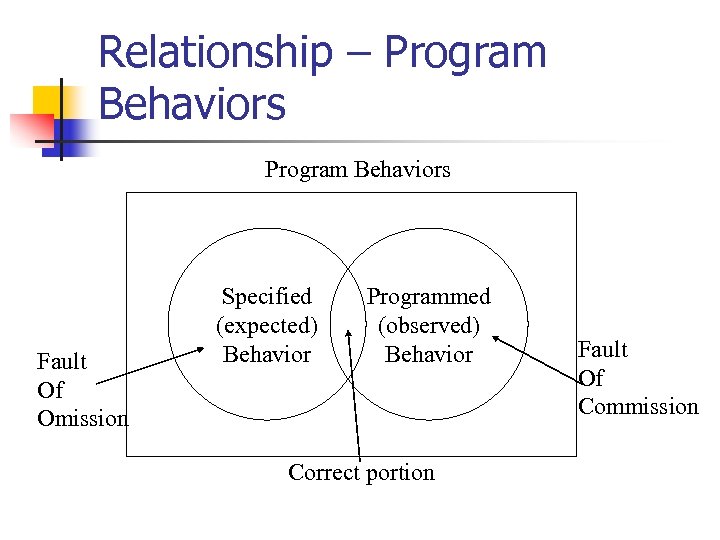

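Relationship: Program Behaviors

(Venn diagram of two overlapping sets: Specified (expected) Behavior and Programmed (observed) Behavior. The overlap is the correct portion; specified but not programmed behavior is a Fault of Omission; programmed but not specified behavior is a Fault of Commission.)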

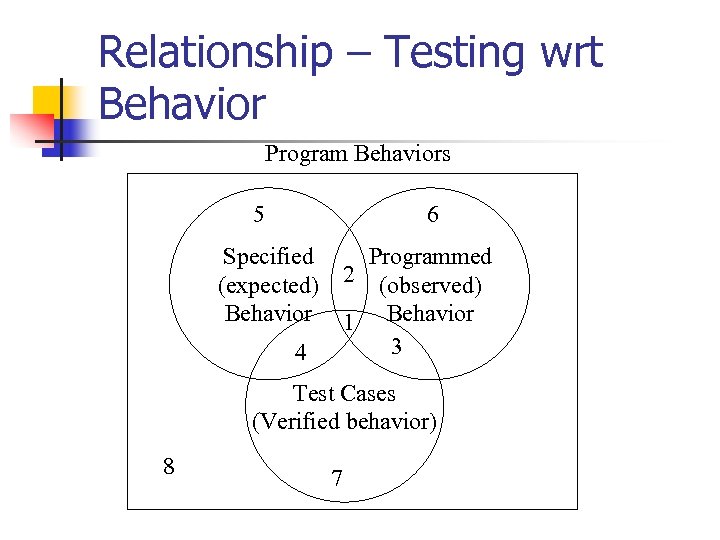

Relationship: Testing wrt Behavior

(Venn diagram of three overlapping sets, Specified (expected) Behavior, Programmed (observed) Behavior, and Test Cases (verified behavior), with regions numbered 1 to 8.)

Cont…

- Regions 2, 5
  - Specified behaviors that are not tested
- Regions 1, 4
  - Specified behaviors that are tested
- Regions 3, 7
  - Test cases corresponding to unspecified behaviors

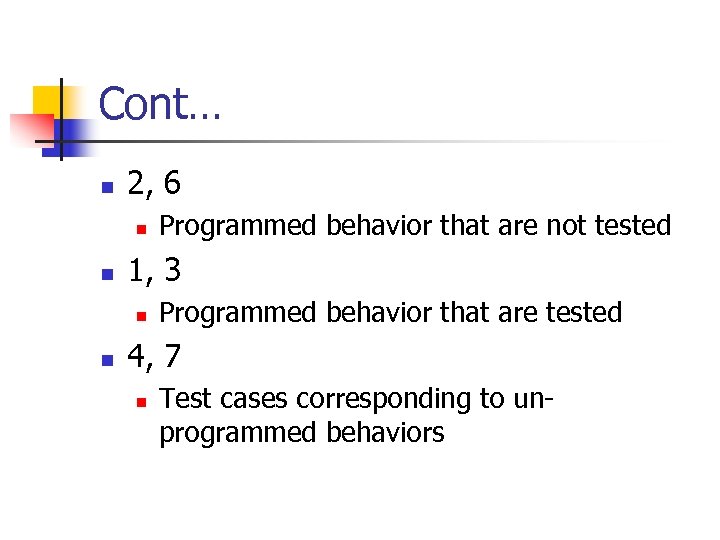

Cont…

- Regions 2, 6
  - Programmed behaviors that are not tested
- Regions 1, 3
  - Programmed behaviors that are tested
- Regions 4, 7
  - Test cases corresponding to unprogrammed behaviors

Therefore, …

- If there are specified behaviors for which there are no test cases, the testing is incomplete
- If there are test cases that correspond to unspecified behaviors
  - Either such test cases are unwarranted, or
  - the specification is deficient
- It also implies that testers should participate in specification and design reviews
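The region numbering on the preceding slides can be checked mechanically. The sketch below is only an illustration: it assumes the three behavior sets are made up of exactly the regions listed on those slides, and the variable names are hypothetical.

```python
# Region numbers as listed on the slides (region 8 lies outside all three sets).
specified = {1, 2, 4, 5}    # specified (expected) behavior
programmed = {1, 2, 3, 6}   # programmed (observed) behavior
tested = {1, 3, 4, 7}       # behavior covered by test cases

untested_specified = specified - tested     # testing is incomplete here
unspecified_tests = tested - specified      # unwarranted tests or deficient spec
untested_programmed = programmed - tested
unprogrammed_tests = tested - programmed

print(sorted(untested_specified))   # [2, 5]
print(sorted(unspecified_tests))    # [3, 7]
print(sorted(untested_programmed))  # [2, 6]
print(sorted(unprogrammed_tests))   # [4, 7]
```

Region 1 is the only region that is specified, programmed, and tested at the same time.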

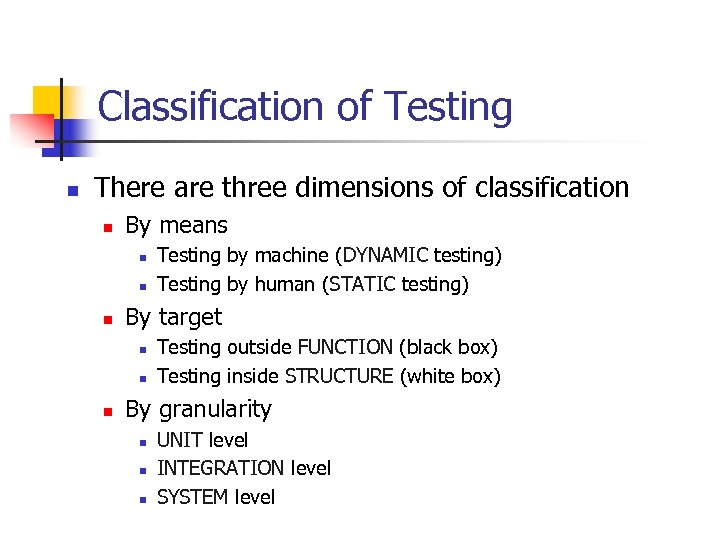

Classification of Testing

- There are three dimensions of classification
  - By means
    - Testing by machine (DYNAMIC testing)
    - Testing by human (STATIC testing)
  - By target
    - Testing outside FUNCTION (black box)
    - Testing inside STRUCTURE (white box)
  - By granularity
    - UNIT level
    - INTEGRATION level
    - SYSTEM level

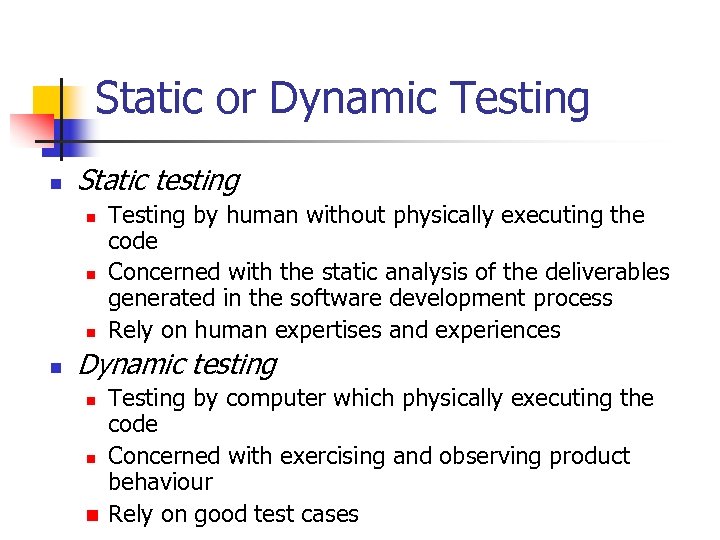

Static or Dynamic Testing

- Static testing
  - Testing by humans without physically executing the code
  - Concerned with the static analysis of the deliverables generated in the software development process
  - Relies on human expertise and experience
- Dynamic testing
  - Testing by computer, which physically executes the code
  - Concerned with exercising and observing product behaviour
  - Relies on good test cases

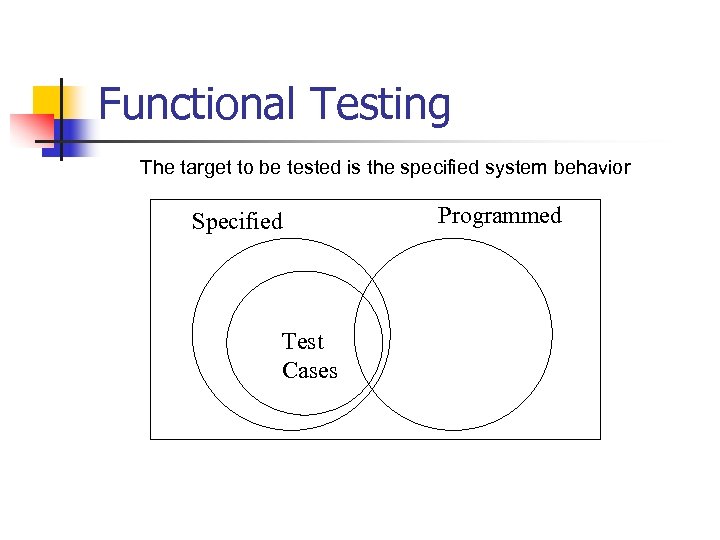

Functional Testing

The target to be tested is the specified system behavior.

(Diagram labels: Specified, Test Cases, Programmed.)

Structural Testing

The target to be tested is the programmed system behavior.

(Diagram labels: Specified, Programmed, Test Cases.)

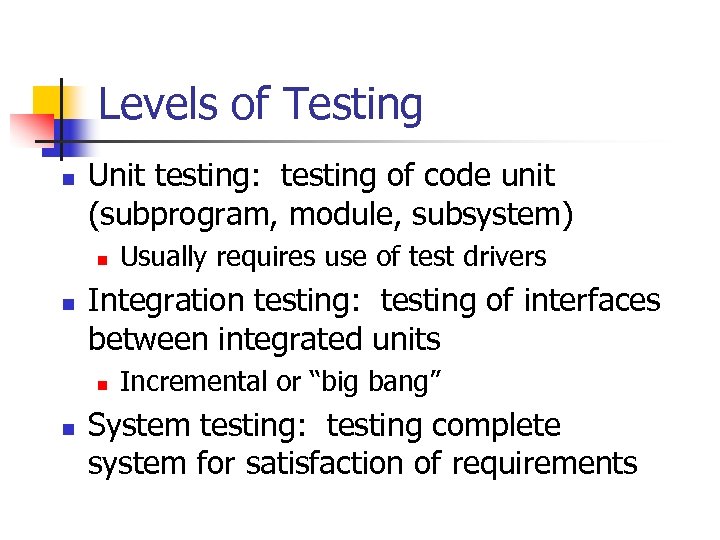

Levels of Testing

- Unit testing: testing of a code unit (subprogram, module, subsystem)
  - Usually requires use of test drivers
- Integration testing: testing of interfaces between integrated units
  - Incremental or "big bang"
- System testing: testing the complete system for satisfaction of requirements

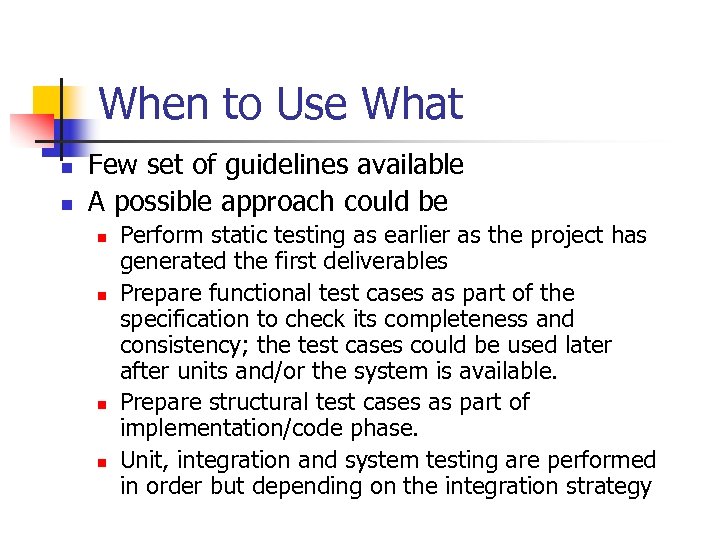

When to Use What

- Few guidelines are available
- A possible approach could be
  - Perform static testing as soon as the project has generated its first deliverables
  - Prepare functional test cases as part of the specification to check its completeness and consistency; the test cases can be used later, once units and/or the system are available
  - Prepare structural test cases as part of the implementation/code phase
  - Unit, integration and system testing are performed in order, but depending on the integration strategy

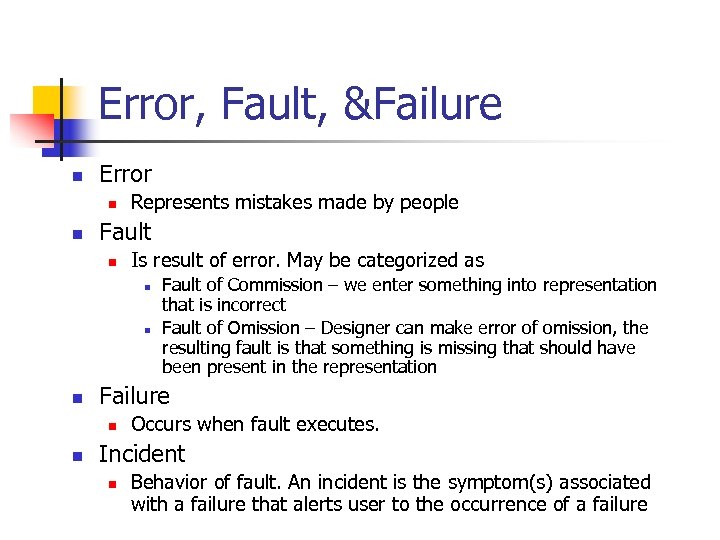

Error, Fault, & Failure

- Error
  - Represents mistakes made by people
- Fault
  - Is the result of an error. May be categorized as
    - Fault of Commission: we enter something into the representation that is incorrect
    - Fault of Omission: the designer makes an error of omission; the resulting fault is that something is missing that should have been present in the representation
- Failure
  - Occurs when a fault executes
- Incident
  - Behavior of a fault. An incident is the symptom(s) associated with a failure that alerts the user to the occurrence of a failure

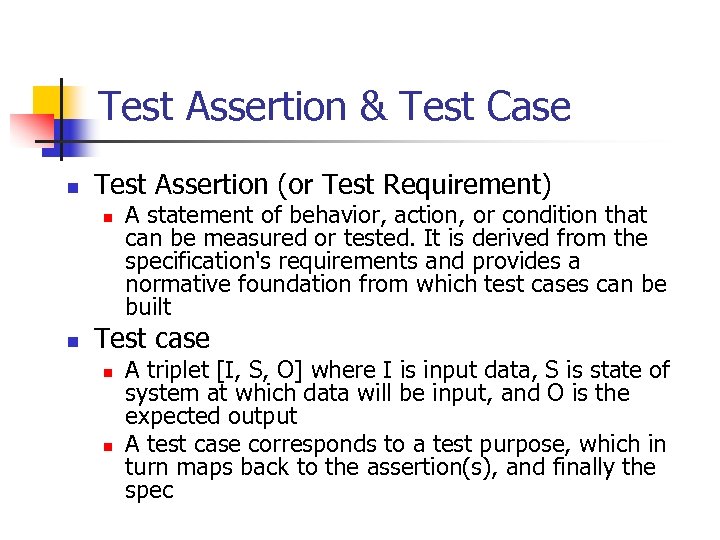

Test Assertion & Test Case

- Test Assertion (or Test Requirement)
  - A statement of behavior, action, or condition that can be measured or tested
  - It is derived from the specification's requirements and provides a normative foundation from which test cases can be built
- Test case
  - A triplet [I, S, O] where I is the input data, S is the state of the system at which the data will be input, and O is the expected output
  - A test case corresponds to a test purpose, which in turn maps back to the assertion(s), and finally the spec

Test Purpose & Test Suite

- Test Purpose
  - An explanation of why the test was written; it must map directly to one or more test assertions
- Test Suite
  - The set of all test cases (common definition)
  - A set of documents and tools providing tool developers with an objective methodology to verify the level of conformance of an implementation for a given standard (definition from W3C)

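Example

- To test the following code: if (x > y) max = x; else max = x;
- This test suite {(x=3, y=2); (x=2, y=3)} is good: it exercises both branches, so the faulty else branch (which should assign y) is exposed
- But this one {(x=3, y=2); (x=4, y=3); (x=5, y=1)} is bad: although larger, all of its cases have x > y and never reach the else branch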
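The point of this example can be reproduced in a few lines. The following is a minimal sketch, assuming the slide's fragment is meant to compute the maximum of x and y; `buggy_max` and `failing_cases` are hypothetical names introduced only for illustration.

```python
def buggy_max(x, y):
    """Transcription of the slide's code, including its faulty else branch."""
    if x > y:
        return x
    else:
        return x  # fault kept on purpose: this should return y


def failing_cases(suite):
    """Return the inputs for which the actual result differs from the expected maximum."""
    return [(x, y) for (x, y) in suite if buggy_max(x, y) != max(x, y)]


good_suite = [(3, 2), (2, 3)]
bad_suite = [(3, 2), (4, 3), (5, 1)]

print(failing_cases(good_suite))  # [(2, 3)]
print(failing_cases(bad_suite))   # []
```

The larger suite reveals nothing because its three cases are redundant with respect to the fault, which is why the number of test cases alone says little about effectiveness.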

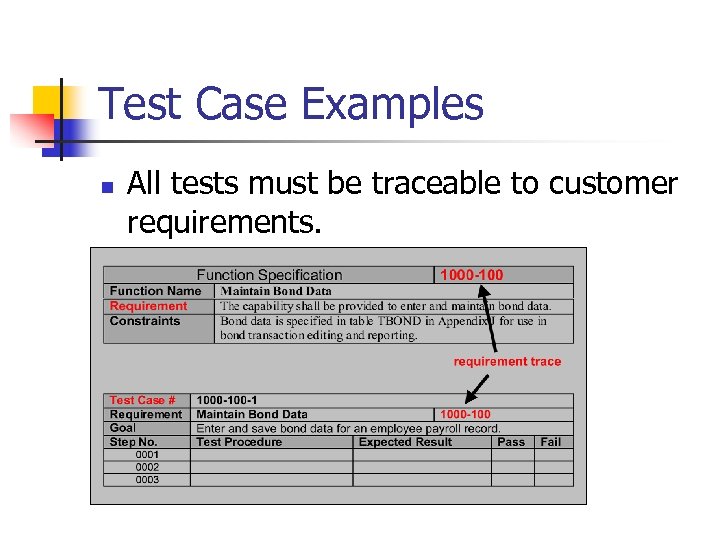

Test Case Examples

- All tests must be traceable to customer requirements.

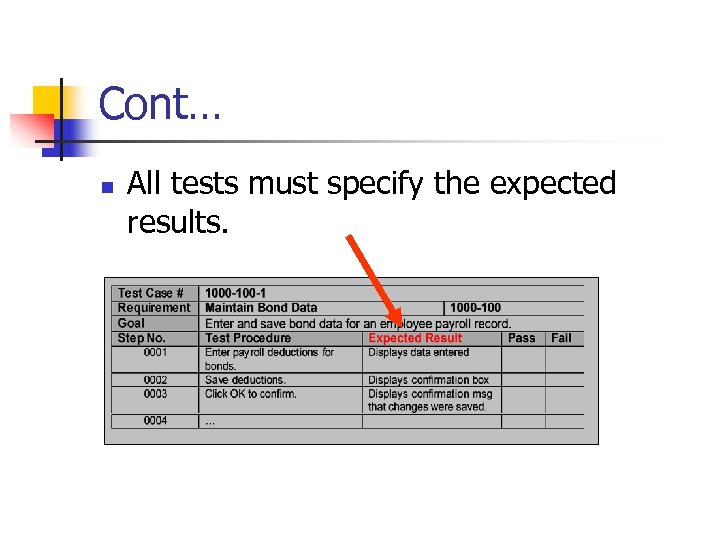

Cont…

- All tests must specify the expected results.

PART II DYNAMIC TESTING

Designing Test Cases

- Dynamic testing relies on good test cases
- Testing techniques are characterized by their different strategies for generating test cases
- Testing effectiveness is not maximized by arbitrarily selected test cases, since they may expose a fault already detected by some other test case and so waste effort
  - The number of test cases does not determine the effectiveness
  - Each test case should detect different faults

Testing from External

- Treat the code as a black box and verify whether its requirements have been met, e.g., design test cases for
  - incorrect or missing functions
  - interface faults in external database access
  - behavior faults
  - initialization or termination faults
  - Etc.

Functional Testing Strategies

- Equivalence class partitioning
- Boundary value analysis
- Cause-effect graph
- Decision table based testing

Equivalence Class Partitioning

- Input values to a program are partitioned into equivalence classes.
- Partitioning is done such that
  - the program behaves in similar ways for every input value belonging to an equivalence class.
- Test the code with just one representative value from each equivalence class
  - this is as good as testing with any other value from the same equivalence class.

Cont…

- How do you determine the equivalence classes?
  - examine the input data.
  - a few general guidelines exist for determining the equivalence classes
- If the input is an enumerated set of values, e.g. {a, b, c}
  - one equivalence class for valid input values
  - another equivalence class for invalid input values should be defined.

Example

- A program (SQRT) reads an input value in the range of 1 to 5000
  - and computes the square root of the input number

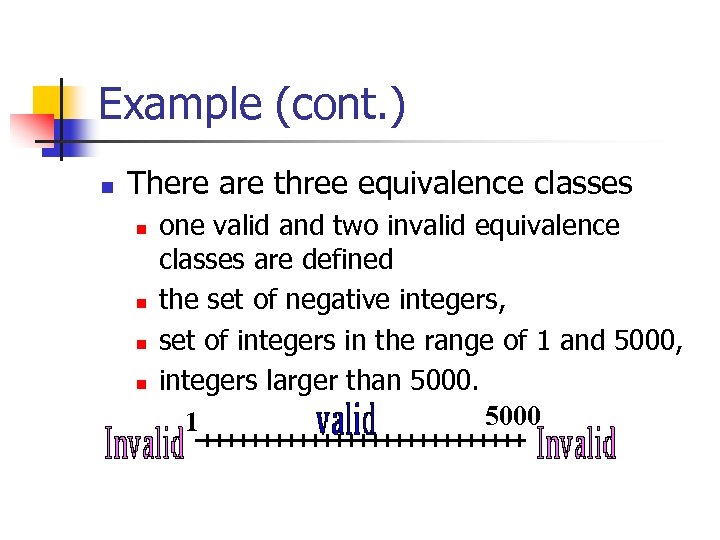

Example (cont.)

- There are three equivalence classes
  - one valid and two invalid equivalence classes are defined
  - the set of integers less than 1, the set of integers in the range of 1 to 5000, and the set of integers larger than 5000

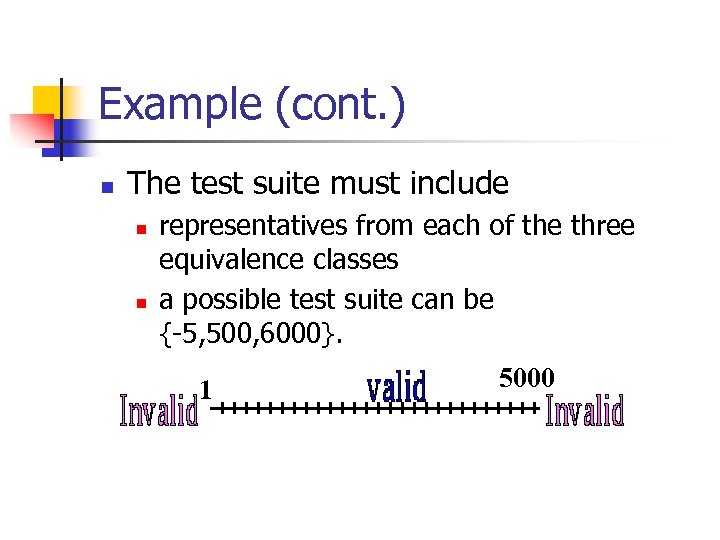

Example (cont.)

- The test suite must include
  - representatives from each of the three equivalence classes
  - a possible test suite can be {-5, 500, 6000}.
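The square-root example can be sketched as follows. This is only an illustration under stated assumptions: the function names, the class labels, and the choice to reject invalid input with `ValueError` are hypothetical, since the slides do not say how the program reacts to out-of-range values.

```python
import math

LOW, HIGH = 1, 5000  # valid input range from the example


def classify(n):
    """Map an integer input to its equivalence class."""
    if n < LOW:
        return "invalid_low"
    if n > HIGH:
        return "invalid_high"
    return "valid"


def sqrt_in_range(n):
    """Hypothetical program under test: square root for valid inputs only."""
    if classify(n) != "valid":
        raise ValueError(f"input {n} is outside {LOW}..{HIGH}")
    return math.sqrt(n)


suite = [-5, 500, 6000]  # one representative per equivalence class
classes_covered = {classify(n) for n in suite}
print(sorted(classes_covered))  # ['invalid_high', 'invalid_low', 'valid']
```

Each of the three values stands in for every other member of its class, so adding, say, 700 to the suite would cover no new class.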

Boundary Value Analysis

- Some typical programming faults occur
  - at the boundaries of equivalence classes
  - this might be purely due to psychological factors.
- Programmers often fail to see
  - the special processing required at the boundaries of equivalence classes.

Boundary Value Analysis

- Programmers may improperly use < instead of <=
- Boundary value analysis
  - Select test cases at the boundaries of different equivalence classes.

Example

- For a function that computes the square root of an integer in the range of 1 to 5000
  - Test cases must include the values {0, 1, 5000, 5001}.
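Why the boundary values matter can be seen with a small sketch. It assumes a hypothetical range check in which `<` was written instead of `<=` at the upper bound; both function names are illustrative only.

```python
LOW, HIGH = 1, 5000


def accepts(n):
    """Intended range check: both bounds are inclusive."""
    return LOW <= n <= HIGH


def accepts_faulty(n):
    """Hypothetical fault: '<' used instead of '<=' at the upper bound."""
    return LOW <= n < HIGH


def disagreements(suite):
    """Inputs on which the faulty check differs from the intended one."""
    return [n for n in suite if accepts(n) != accepts_faulty(n)]


boundary_suite = [0, 1, 5000, 5001]
partition_suite = [-5, 500, 6000]

print(disagreements(boundary_suite))   # [5000]
print(disagreements(partition_suite))  # []
```

The equivalence-class representatives alone miss the off-by-one fault; only the value sitting exactly on the boundary exposes it.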

Cause-Effect Graphs

- Restate the requirements in terms of logical relationships between inputs and outputs
- Represent the results as a Boolean graph, called a cause-effect graph
- Provide a systematic view for designing functional test cases from requirements specifications
- Work first done at IBM

Steps to Create a Cause-Effect Graph

- Study the functional requirements.
- Mark and number all causes and effects.
- Numbered causes and effects
  - become nodes of the graph.
- Draw causes on the LHS
- Draw effects on the RHS
- Draw logical relationships between causes and effects
  - as edges in the graph.
- Extra nodes can be added
  - to simplify the graph

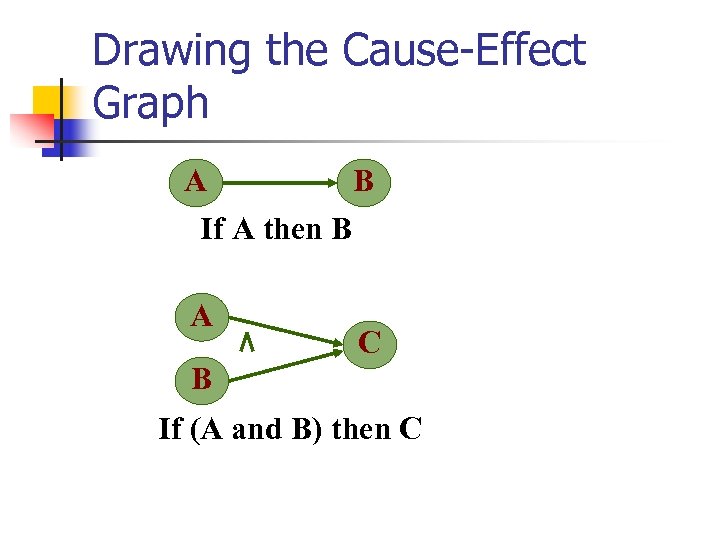

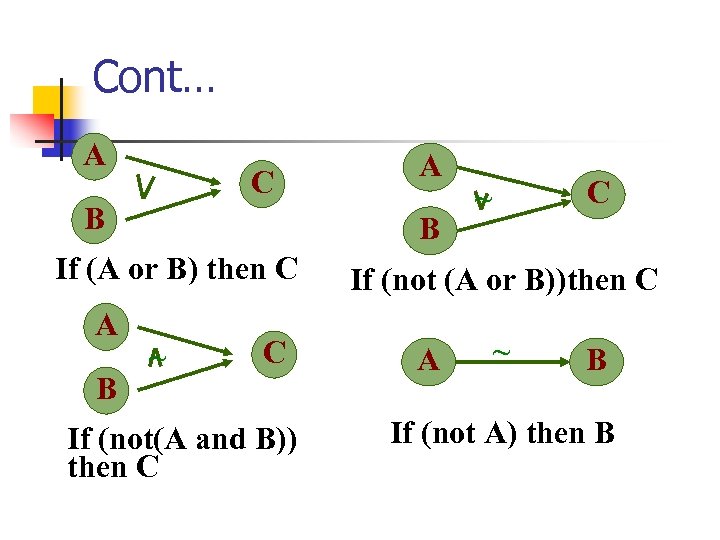

Drawing the Cause-Effect Graph

- If A then B
- If (A and B) then C

Cont…

- If (A or B) then C
- If (not (A and B)) then C
- If (not (A or B)) then C
- If (not A) then B

(In the graph, ~ on an edge denotes negation.)

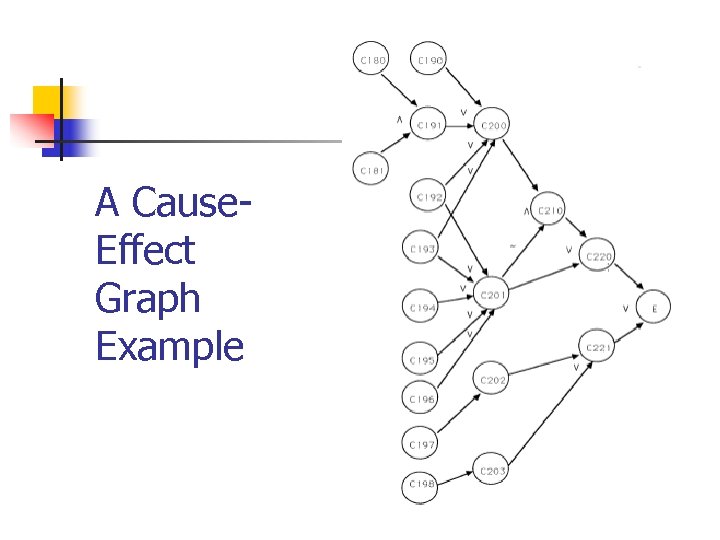

A Cause-Effect Graph Example

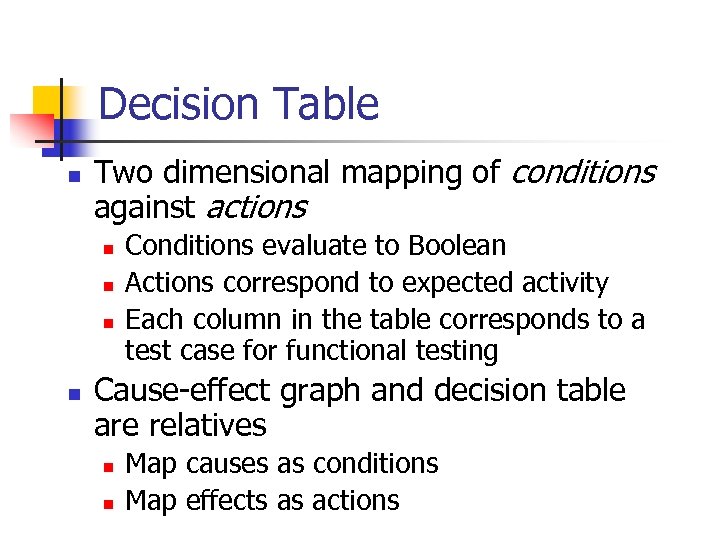

Decision Table

- Two dimensional mapping of conditions against actions
  - Conditions evaluate to Boolean
  - Actions correspond to expected activity
- Each column in the table corresponds to a test case for functional testing
- Cause-effect graph and decision table are relatives
  - Map causes as conditions
  - Map effects as actions

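Cause-Effect Graph and Decision Table

|          | Test 1 | Test 2 | Test 3 | Test 4 | Test 5 |
|----------|--------|--------|--------|--------|--------|
| Cause 1  | I      | I      | I      | S      | I      |
| Cause 2  | I      | I      | I      | X      | S      |
| Effect 1 | P      | P      | A      | A      | A      |
| Effect 2 | A      | A      | P      | A      | A      |
| Effect 3 | A      | A      | A      | P      | P      |

Rows for Cause 3, Cause 4 and Cause 5 (cell values as extracted, column alignment lost): I S S I X X S S S I S X X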

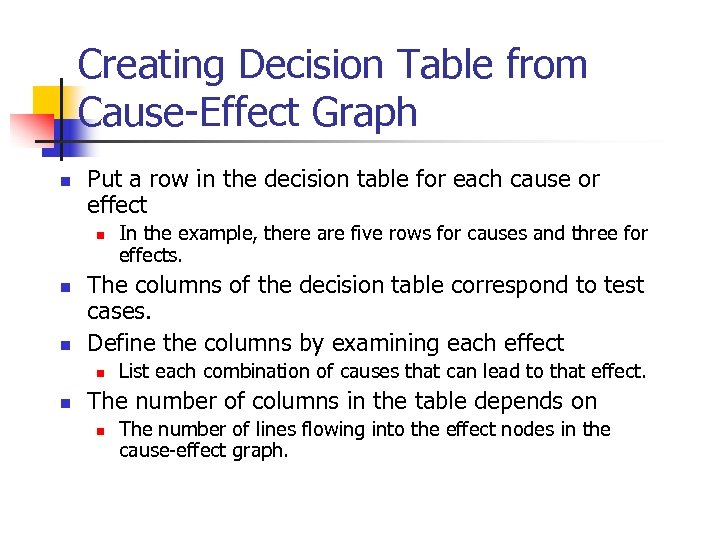

Creating Decision Table from Cause-Effect Graph

- Put a row in the decision table for each cause or effect
  - In the example, there are five rows for causes and three for effects.
- The columns of the decision table correspond to test cases.
- Define the columns by examining each effect
  - List each combination of causes that can lead to that effect.
- The number of columns in the table depends on
  - the number of lines flowing into the effect nodes in the cause-effect graph.

The Benefit

- Reduce the number of unnecessary test cases
  - For example, there are theoretically 2^5 = 32 test cases since there are 5 causes
  - Using the cause-effect graphing technique reduces that number to 5, as seen in the table
- However, this graphing technique is not suitable for systems which include
  - timing constraints, or
  - feedback from some other processes.

Testing from Internal

- Test code by looking into its internal structure and verifying whether it satisfies the requirements, i.e., design test cases to cover
  - Program structures, e.g., statements, branches, conditions, and/or paths
  - Data flow definitions and usages
  - Fault sensitive parts, e.g., mutation or domain testing

White-Box Testing

- Statement coverage
- Branch coverage
- Path coverage
- Condition coverage
- Mutation testing
- Data flow-based testing

Statement Coverage

- Statement coverage methodology
  - Design test cases so that every statement in a program is executed at least once
- The principal idea
  - Unless a statement is executed, we have no way of knowing if a fault exists in that statement
  - But executing a statement with only one test input gives no guarantee that it will behave correctly for all input values.

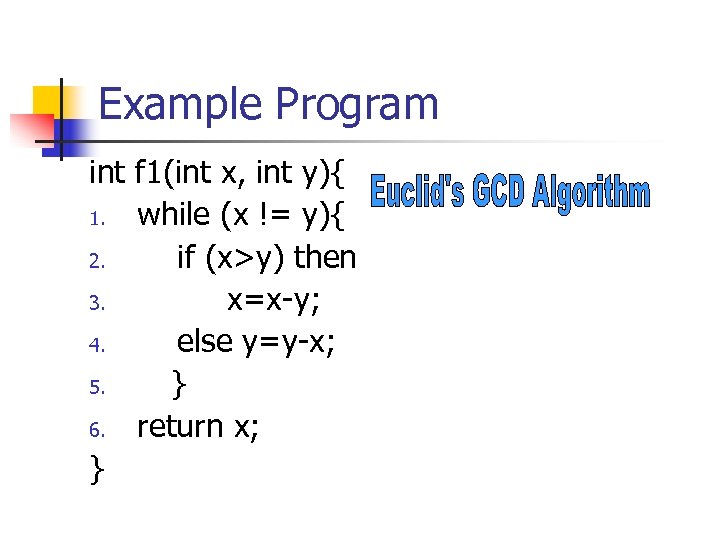

Example Program

    int f1(int x, int y) {
        /* Subtraction-based GCD; assumes x and y are positive */
        while (x != y) {
            if (x > y)
                x = x - y;
            else
                y = y - x;
        }
        return x;
    }

Test Cases for Statement Coverage

- An example test set could be the following
  - {(x=3, y=3), (x=4, y=3), (x=3, y=4)}
  - All statements are executed at least once here.
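One way to confirm this claim is to instrument the example program by hand. The sketch below is a Python transcription of f1 that records which statements run; the statement labels and the `statement_coverage` helper are hypothetical, and a real project would normally rely on a coverage tool instead.

```python
ALL_STATEMENTS = {"while", "if", "x=x-y", "y=y-x", "return"}


def f1(x, y, executed):
    """Subtraction-based GCD from the example; records each statement it executes."""
    while True:
        executed.add("while")   # loop condition is evaluated
        if x == y:
            break
        executed.add("if")      # branch condition is evaluated
        if x > y:
            executed.add("x=x-y")
            x = x - y
        else:
            executed.add("y=y-x")
            y = y - x
    executed.add("return")
    return x


def statement_coverage(suite):
    """Union of the statements executed by every test input in the suite."""
    executed = set()
    for x, y in suite:
        f1(x, y, executed)
    return executed


suite = [(3, 3), (4, 3), (3, 4)]
print(statement_coverage(suite) == ALL_STATEMENTS)  # True
print(sorted(statement_coverage([(3, 3)])))         # ['return', 'while']
```

Under this instrumentation, the single input (x=4, y=3) already executes every statement, which illustrates how weak a criterion statement coverage can be.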

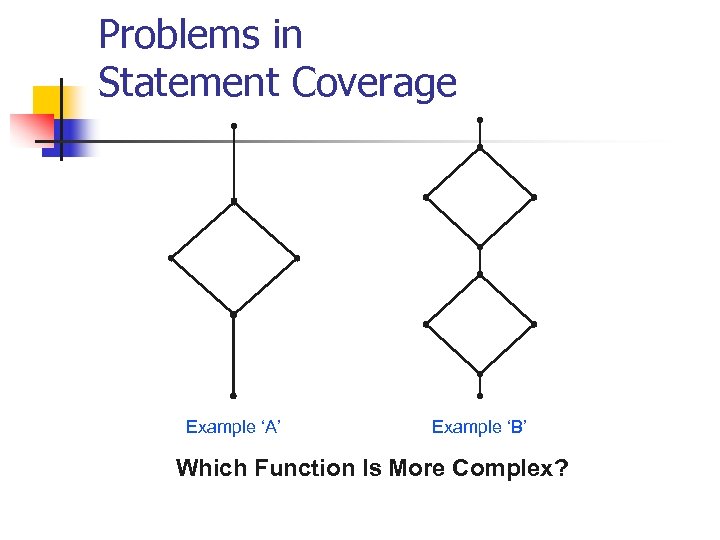

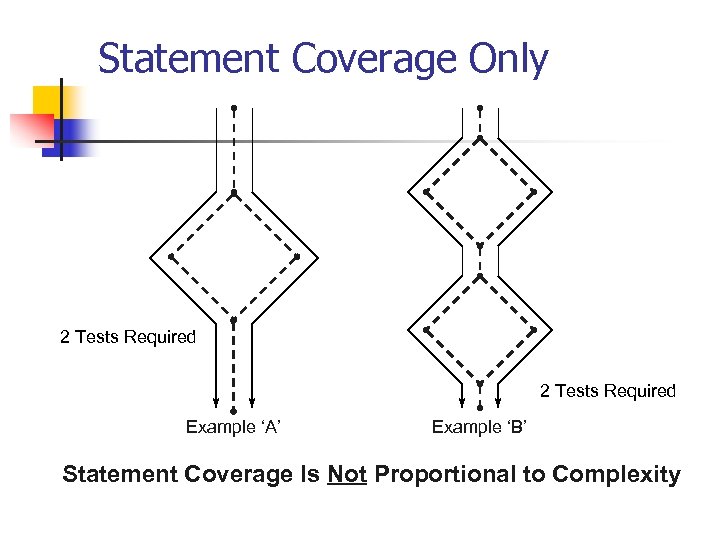

Problems in Statement Coverage

(Figure: flow graphs of Example 'A' and Example 'B'.)

Which function is more complex?

Statement Coverage: Only 2 Tests Required

(Figure: flow graphs of Example 'A' and Example 'B'.)

Statement coverage is not proportional to complexity.

Branch Coverage

- Test cases are designed such that
  - each branch condition is given true and false values in turn.
- Branch testing subsumes statement coverage
  - i.e., it is a stronger form of testing than statement coverage-based testing

Test Cases for Branch Coverage

- An example test set could be the following
  - {(x=3, y=3), (x=4, y=3), (x=3, y=4)}
  - All branches here are executed at least once
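Branch coverage can be checked in the same spirit by recording each decision together with its outcome. This is a minimal sketch with hypothetical names; it treats the `while` condition and the `if` condition of the example program as the two decisions.

```python
REQUIRED = {("while", True), ("while", False), ("if", True), ("if", False)}


def f1_branches(x, y, taken):
    """Example program f1, recording every (decision, outcome) pair it takes."""
    while True:
        loop_again = x != y
        taken.add(("while", loop_again))
        if not loop_again:
            return x
        x_larger = x > y
        taken.add(("if", x_larger))
        if x_larger:
            x -= y
        else:
            y -= x


def branch_outcomes(suite):
    """All (decision, outcome) pairs exercised by the suite."""
    taken = set()
    for x, y in suite:
        f1_branches(x, y, taken)
    return taken


suite = [(3, 3), (4, 3), (3, 4)]
print(branch_outcomes(suite) == REQUIRED)  # True
print(branch_outcomes([(3, 3)]))           # {('while', False)}
```

The input (x=3, y=3) on its own only takes the loop-exit outcome, so it contributes to branch coverage without satisfying it.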

Condition Coverage

- Test cases are designed such that
  - each component of a composite conditional expression is given both true and false values.
  - stronger than branch testing
- Example
  - Consider the conditional expression ((c1 .and. c2) .or. c3)
  - Each of c1, c2, and c3 is exercised with both true and false values.

Problems in Condition Coverage Testing

- If a Boolean expression has n components
  - exercising every combination of their truth values requires 2^n test cases
  - Thus, it is practical only if n (the number of conditions) is small.
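The growth in test cases is easy to see by enumerating truth-value combinations for the example expression ((c1 and c2) or c3). This is a sketch with hypothetical helper names. It also separates two readings of the criterion: giving each component both values at least once, versus exercising all combinations.

```python
from itertools import product


def decision(c1, c2, c3):
    """The composite condition from the example."""
    return (c1 and c2) or c3


def all_combinations(n):
    """Every assignment of truth values to n conditions (2**n of them)."""
    return list(product([False, True], repeat=n))


def covers_each_condition(tests, n):
    """True if every condition takes both True and False somewhere in tests."""
    return all({t[i] for t in tests} == {False, True} for i in range(n))


exhaustive = all_combinations(3)
minimal = [(True, True, False), (False, False, True)]

print(len(exhaustive))                                # 8
print(covers_each_condition(minimal, 3))              # True
print(sorted({decision(*t) for t in minimal}))        # [True]
```

The two-test suite gives every component both values, yet the whole decision is true in both tests. The claim that condition coverage is stronger than branch testing therefore holds safely only when all 2^n combinations are exercised.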

Independent Path & Cyclomatic Metric Testing

- A path through a program is
  - a node and edge sequence from the starting node to a terminal node of the control flow graph
- An independent path is one through the program that
  - introduces at least one new node not included in any other independent paths.
- Design test cases such that
  - all linearly independent paths in the program are executed at least once

McCabe's Cyclomatic Complexity

- McCabe's complexity metric counts the number of independent paths through the program control graph G
  - i.e., the number of basic paths (all paths are composed of basic paths)
- The cyclomatic complexity (or number) is defined as V(G) = L - N + 2 * P, where
  - L is the number of links in the graph,
  - N is the number of nodes in the graph, and
  - P is the number of connected parts in the graph
  - P = 1 when there is only one program, no subroutines

Cont …

- This metric can also be calculated by
  - adding one to the number of binary decisions in a structured flow graph with only one entry and one exit
  - counting a three-way decision as two binary decisions and N-way case statements as N - 1 binary decisions
- The rationale behind this counting of N-way decisions is that it would take a string of N - 1 binary decisions to implement an N-way case statement.
- Thus V(G) can be used as an upper bound for the number of test cases needed for branch coverage
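As a worked example, the formula V(G) = L - N + 2 * P can be applied to the control flow graph of the earlier example program f1. The edge list below is an assumption about how that graph is drawn (one node per statement), and `cyclomatic_complexity` is a hypothetical helper.

```python
def cyclomatic_complexity(edges, parts=1):
    """V(G) = L - N + 2 * P for a graph given as a list of (source, target) links."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * parts


# Assumed control flow graph of f1: one node per statement.
f1_edges = [
    ("while", "if"),      # loop condition true
    ("while", "return"),  # loop condition false
    ("if", "x=x-y"),      # x > y
    ("if", "y=y-x"),      # otherwise
    ("x=x-y", "while"),   # back to the loop test
    ("y=y-x", "while"),   # back to the loop test
]

v = cyclomatic_complexity(f1_edges)
print(v)  # 3
```

The graph has L = 6 links, N = 5 nodes and P = 1, so V(G) = 6 - 5 + 2 = 3, which agrees with the shortcut of adding one to the two binary decisions (`while` and `if`).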

Cont …

(Figure: McCabe's cyclomatic complexity v(G) equals the number of linearly independent paths; one additional path is required to determine the independence of the 2 decisions.)

Cyclomatic Complexity & Quality

- McCabe's metric provides
  - a quantitative measure for estimating testing difficulty,
  - also the psychological complexity of a program, and
  - the difficulty level of understanding the program
- since it increases with the number of decision nodes and loops.

Applications of Cyclomatic Complexity

- An informal correlation exists among
  - McCabe's metric,
  - the number of faults existing in the code, and
  - the time spent to test the code.
- Thus, this metric can be used to
  - focus testing on high-risk or hard-to-test areas
  - objectively measure testing progress and know when to stop testing
  - assess the time and resources needed to ensure a well-tested application

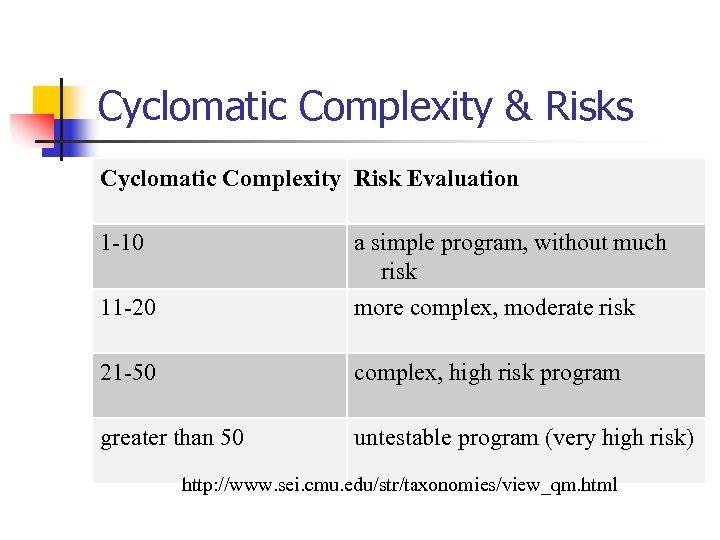

Cyclomatic Complexity & Risks

Cyclomatic complexity and the corresponding risk evaluation:

- 1-10: a simple program, without much risk
- 11-20: more complex, moderate risk
- 21-50: complex, high risk program
- greater than 50: untestable program (very high risk)

Source: http://www.sei.cmu.edu/str/taxonomies/view_qm.html

Some Limitations of Cyclomatic Complexity

- The cyclomatic complexity is a measure of the program's control complexity and not the data complexity
- The same weight is placed on nested and non-nested loops. However, deeply nested conditional structures are harder to understand than non-nested structures.
- It may give a misleading figure for code with a lot of simple comparisons and decision structures. The dataflow method would probably be more applicable there, as it can track the data flow and its usage.

Data-Flow Based Testing

- A program performs its function through a series of computations, with intermediate results retrieved from and stored into different variables
- In contrast to checking program structure, a good place to look for faults is these variable definition-and-usage chains
- The data flow testing strategy is to select test cases of a program
  - according to the locations of definitions and uses of different variables in a program.

Cont …

- For a statement numbered S,
  - DEF(S) = {X | variables that are defined in statement S}
  - USES(S) = {X | variables that are referenced in statement S}
- For example, let statement 1 be a=b, then DEF(1)={a}, USES(1)={b};
- Or let statement 2 be a=a+b, then DEF(2)={a}, USES(2)={a, b}.
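For simple assignments, DEF and USES can be computed automatically. The sketch below uses Python's standard `ast` module on Python-syntax statements as a stand-in for the slide's notation; `def_use` is a hypothetical helper and handles only plain assignments and expressions.

```python
import ast


def def_use(statement):
    """Return (DEF, USES) for one simple statement given as source text."""
    defs, uses = set(), set()
    for node in ast.walk(ast.parse(statement)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                defs.add(node.id)   # variable is assigned (defined)
            elif isinstance(node.ctx, ast.Load):
                uses.add(node.id)   # variable is read (referenced)
    return defs, uses


print(def_use("a = b") == ({"a"}, {"b"}))           # True
print(def_use("a = a + b") == ({"a"}, {"a", "b"}))  # True
```

The second statement both defines and uses a, which is exactly the situation in which definition-use chains become interesting.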

Definition-Use chain (DU chain)

- A variable X is said to be live at statement S1, if
  - X is defined at a statement S
  - there exists a path from S to S1 not containing any definition of X, i.e., the value of X is not redefined.
- [X, S, S1] is a DU chain where
  - S and S1 are statement numbers,
  - X in DEF(S)
  - X in USES(S1), and
  - the definition of X in the statement S is live at statement S1.

Data-Flow Based Testing Strategies

- There are a number of testing strategies based on data flow information, e.g., all-def, all-use, all-DU-chain, etc., which form a hierarchy similar to that of the structure coverage testing criteria
- The simplest one is all-def, which requires
  - every variable definition in a program be covered at least once
- All-DU-chain, on the other hand, is more comprehensive and requires
  - every DU chain in a program be covered at least once.

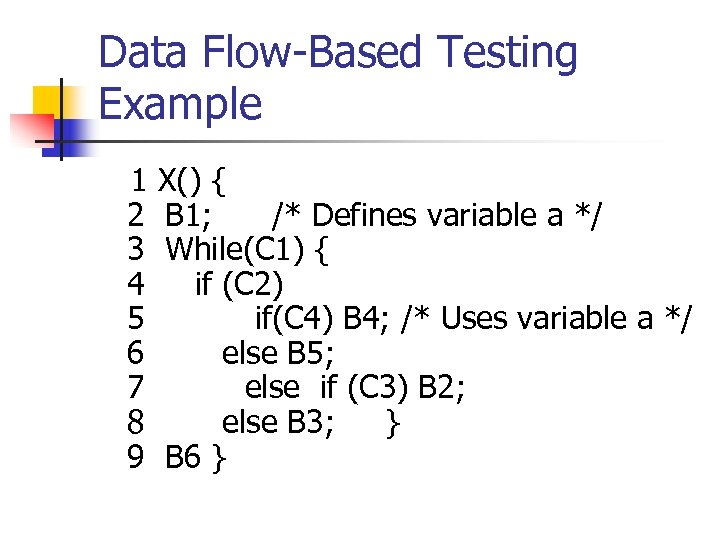

Data Flow-Based Testing Example

    1  X() {
    2    B1;              /* Defines variable a */
    3    while (C1) {
    4      if (C2)
    5        if (C4) B4;  /* Uses variable a */
    6        else B5;
    7      else if (C3) B2;
    8      else B3; }
    9    B6 }

Cont …

- [a, 1, 5] is a DU chain.
- Assume
  - DEF(X) = {B1, B2, B3, B4, B5}
  - USED(X) = {B2, B3, B4, B5, B6}
- There are 25 DU chains.
- However, only 5 paths are needed to cover these chains.

Structure Based or Data-Flow Based

- Structure based testing criteria, e.g., statement coverage, branch coverage, etc., are easier to implement;
- However, data-flow based testing strategies are more
  - useful for selecting test paths of a program containing nested if and loop statements

Measuring Testing Quality - Mutation Testing

- First test the software
  - using any testing method or strategy that has been discussed.
- After the planned testing is complete,
  - mutation testing is applied.
- The idea behind mutation testing is to
  - create a number of mutants, each making a few arbitrary small changes to the program at a time;
  - test each mutated program against the full test suite of the program; and then
  - check the test results.

Check the Testing Results

- If there exists at least one test case in the test suite for which
  - a mutant gives an incorrect result, then the mutant is said to be dead or 'killed'.
- If a mutant remains alive
  - even after all test cases have been exhausted, the test suite is enhanced to kill the mutant.
- The process of generation and killing of mutants
  - can be automated by predefining a set of primitive changes that can be applied to the program.
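The kill-or-survive check can be sketched with hand-written mutants of a small maximum function. Everything here is hypothetical and for illustration only: real mutation tools generate the mutants automatically from the source, whereas this sketch lists two of them by hand.

```python
def original(x, y):
    """Program under test: maximum of two numbers."""
    return x if x > y else y


def mutant_relational(x, y):
    """Mutant: relational operator '>' altered to '<'."""
    return x if x < y else y


def mutant_operand(x, y):
    """Mutant: operand y in the else branch replaced by x."""
    return x if x > y else x


MUTANTS = {"relational": mutant_relational, "operand": mutant_operand}


def surviving(suite):
    """Names of mutants that give the original's result on every test input."""
    return sorted(
        name for name, mutant in MUTANTS.items()
        if all(mutant(x, y) == original(x, y) for x, y in suite)
    )


weak_suite = [(3, 2), (4, 3)]
enhanced_suite = weak_suite + [(2, 3)]

print(surviving(weak_suite))      # ['operand']
print(surviving(enhanced_suite))  # []
```

The surviving mutant shows that the weak suite never exercises the else branch; adding (2, 3) kills it, which is the "enhance the test suite" step described above.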

Mutation Testing Operators

- The primitive changes can be
  - altering an arithmetic operator,
  - changing the value of a constant,
  - adding or removing a constant,
  - altering a relational operator, and
  - changing a data type, etc.

Problems in Mutation Testing

- Although mutation testing can be automated, its major problems remain:
  - it is very computationally expensive, and
  - a very large number of possible mutants can be generated, which makes it very hard to implement

PART III STATIC TESTING

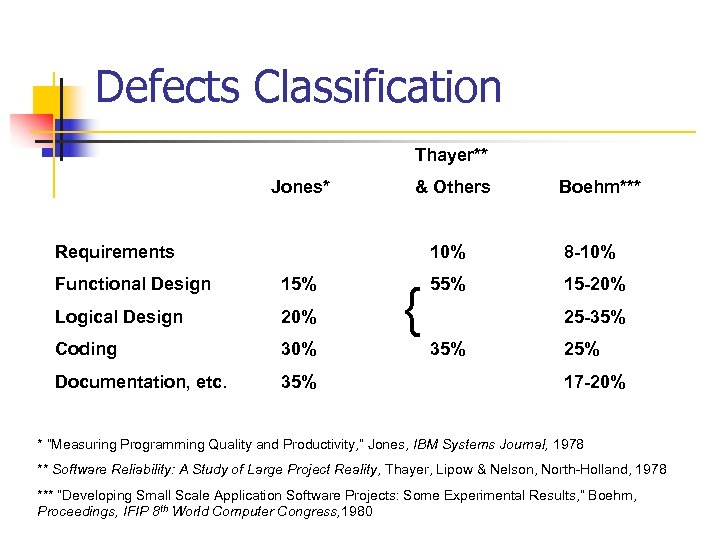

Defects Classification

(Table of defect origins by phase, comparing Jones*, Thayer** & Others, and Boehm***. Rows: Requirements, Functional Design, Logical Design, Coding, Documentation, etc. Cell values as extracted, with column alignment lost: 10%, 15%, 20%, 30%, 35%, 8-10%, 55%, 15-20%, 25-35%, 25%, 17-20%.)

- \* "Measuring Programming Quality and Productivity," Jones, IBM Systems Journal, 1978
- \*\* Software Reliability: A Study of Large Project Reality, Thayer, Lipow & Nelson, North-Holland, 1978
- \*\*\* "Developing Small Scale Application Software Projects: Some Experimental Results," Boehm, Proceedings, IFIP 8th World Computer Congress, 1980

The Need for Non-execution Based Testing

- Many errors are introduced in the requirements and design phases, and need to be discovered as early as possible
- The person creating a product should not be the only one responsible for reviewing it
  - "Many eyes make all bugs shallow," as said in the open-source world.
- The solution is to have a document checked by a team of software professionals with a range of skills, in a meeting called a Review

Types of Review

- There are a number of types of review, ranging in formality and effect. These include:
- Buddy Checking
  - having a person other than the author informally review a piece of work.
  - generally does not require collection of data
  - difficult to put under managerial control
  - generally does not involve the use of checklists to guide inspection and is therefore not repeatable.

Types of Review

- Walkthroughs
  - generally involve the author of an artifact presenting that document or program to an audience of their peers
  - the audience asks questions and makes comments on the artifact being presented in an attempt to identify defects
  - often break down into arguments about an issue
  - usually involve no prior preparation on behalf of the audience
  - usually involve minimal documentation of the process and of the issues found
  - process improvement and defect tracking are therefore not easy

Types of Review

- Review by Circulation
  - similar in concept to a walkthrough
  - the artifact to be reviewed is circulated to a group of the author's peers for comment
  - avoids potential arguments over issues; however, it also loses the benefits of discussion
  - a reviewer may be able to spend longer reviewing the artifact
  - there is documentation of the issues found, enabling defect tracking
  - usually minimal data collection

Types of Review

- Inspection (Fagan 76)
  - formally structured and managed peer review processes
  - involve a review team with clearly defined roles
  - specific data is collected during inspections
  - have quantitative goals set
  - reviewers check an artifact against an unambiguous set of inspection criteria for that type of artifact
  - the purpose is to find problems and see what's missing, not to fix anything.
  - the required data collection promotes process improvement, and subsequent improvements in quality.

Inspection: A Static Defect Detecting Approach

- Proposed by Michael Fagan in the early 1970's at IBM; initially inspection was used to verify software designs or source code, but it was later extended to product development.
- Gilb and Graham expand this three stage process into the inspection steps: Entry, Planning, Kickoff Meeting, Individual Checking, Logging Meeting, Root Cause Analysis, Edit, Follow Up, Exit.
- It is a formal method which aims at assessing the quality of the software in question, not the quality of the software development process.

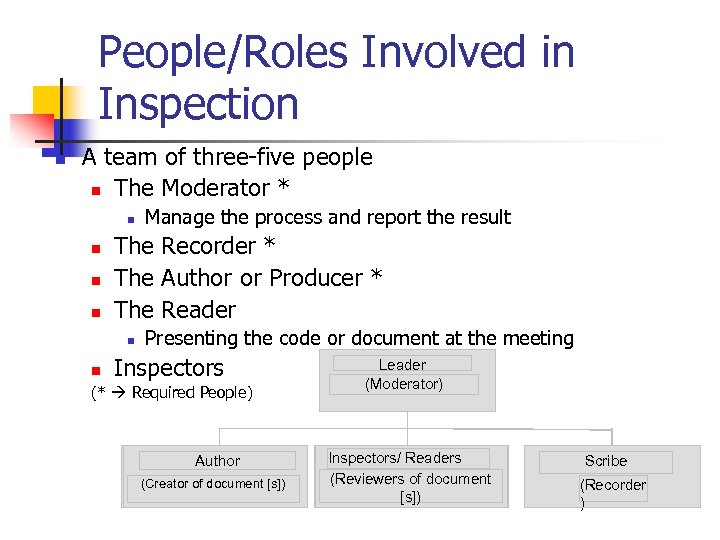

People/Roles Involved in Inspection

- A team of three to five people
  - The Moderator *
    - Manages the process and reports the result
  - The Recorder *
  - The Author or Producer *
  - The Reader
    - Presents the code or document at the meeting
  - Inspectors
- (* Required people)

(Diagram labels: Author (creator of document[s]); Leader (Moderator); Inspectors/Readers (reviewers of document[s]); Scribe (Recorder).)

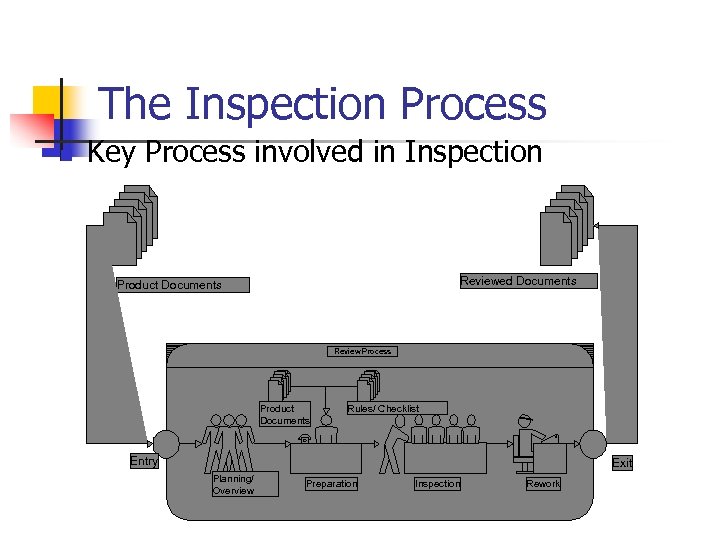

The Inspection Process

- The inspection process is divided into five (or six) stages
- Stage 1 - Planning/Overview
  - Overview document (specs/design/code/plan) to be prepared by the person responsible for producing the product.
  - The document is distributed to participants.
- Stage 2 - Preparation
  - Understand the document in detail.
  - A list of fault types found in inspections, ranked by frequency, is used for concentrating efforts.

Cont …

- Stage 3 - Inspection
  - Walk through the document and ensure that
    - Each item is covered
    - Every branch is taken at least once
  - Find faults and document them (don't correct)
  - Leader (moderator) produces a written report
- Stage 4 - Rework
  - Resolve all faults and problems
- Stage 5 - Follow-up
  - Moderator must ensure that every issue has been resolved in some way

The Inspection Process

- Key process involved in inspection

(Diagram: Product Documents enter the review process at Entry, pass through Planning/Overview, Preparation, Inspection and Rework, and leave at Exit as Reviewed Documents; Rules/Checklist feed into the review process.)

A Sample Procedure

- Announce the review meeting in advance (a week?)
- Provide design document, implementation overview, and pointer to code
- Reviewers read code (and make notes) in advance of meeting
- During meeting, directives recorded by Scribe
- Testers/documenters attend too

What to Look for in Inspections

- A checklist of common errors should be used to drive the inspection
  - Is each item in a specs document adequately and correctly addressed?
  - Do actual and formal parameters match?
  - Error handling mechanisms identified?
  - Design compatible with hardware resources?
  - What about with software resources?
  - Etc.

Inspection Checklist

- Error checklists are programming language dependent and reflect the characteristic errors that are likely to arise in the language, e.g.,
  - Control flow analysis. Checks for loops with multiple exit or entry points, finds unreachable code, etc.
  - Data use analysis. Detects uninitialised variables, variables written twice without an intervening assignment, variables which are declared but never used, etc.
  - Interface analysis. Checks the consistency of routine and procedure declarations and their use

Cont …

- Information flow analysis. Identifies the dependencies of output variables. Does not detect anomalies itself but highlights information for code inspection or review
- Path analysis. Identifies paths through the program and sets out the statements executed in that path. Again, potentially useful in the review process
- Both these stages generate vast amounts of information. They must be used with care.
- And there are many others to be checked …

What to Record in Inspections

- Record fault statistics
- Categorize by severity, fault type
- Compare # faults with average # faults in same stage of development
- Find disproportionate # in some modules, then begin checking other modules
- Too many faults => redesign the module
- Information on fault types will help in code inspection in the same module

Inspection Rate

- 500 statements/hour during overview.
- 125 source statements/hour during individual preparation.
- 90-125 statements/hour can be inspected.
- Inspection is therefore an expensive process.
- Inspecting 500 lines costs about 40 man-hours of effort, about £2800 at UK rates.

Human Factor

- Why are software engineers against inspection?
  - Fear of being exposed
  - Fear of losing control
  - No time for inspection
  - Don't touch the process
  - Overconfidence in testing effectiveness
  - Overconfidence in testing tools
  - Misunderstood responsibilities.

Keys to Success

- To make inspection successful, attend to
  - Ego involvement and personality conflict
  - Issue resolution and meeting digression
  - Importance of checklist
  - Training of the inspection team
  - Management support: Return On Investment
- ROI = [(Total cost without Peer Review) - (Total cost with Peer Review)] / (Actual cost of the Peer Review)

Some Statistics about Inspection
- Typical savings of 35-50% in development
- IBM removed 82% of defects before testing
- Inspection of test plans, designs, and test cases can save 85% in unit testing
- UK: maintenance 1/10th for inspected software
- Standard Bank: 28x less maintenance cost
- Space Shuttle: 0 defects in 6/9 missions ('85)

Inspections vs. Testing
- Inspections and testing are complementary techniques
  - Both should be used during the V&V process
- Pros of inspection
  - A high number of faults are found even before testing (design and code inspections)
  - Higher programmer productivity, less time spent on module testing
  - Fewer faults are found later in a product that was inspected
  - Detecting faults early in the process yields large savings
- Cons of inspection
  - Inspections can check conformance with a specification but not conformance with the customer's real requirements
  - Inspections cannot check non-functional characteristics such as performance, usability, etc.

Automated Static Analysis
- Static analysers are software tools for source text processing.
- They parse the program text, try to discover potentially erroneous conditions, and bring these to the attention of the V&V team.
- They are very effective as an aid to inspections: a supplement to, but not a replacement for, inspections.

Tools for Inspection
- JStyle: Java code review tool
- AUDIT: mobile, paperless application for accreditation, regulatory compliance, quality assurance, and performance review
- ASSIST: tool for automatic collation of defect lists

Walkthrough
- Less formal than inspection
- Evaluation by a team of experts, similar to the inspection team, who go through a set of tasks to verify the product's functions
- The author or an SQA representative runs the meeting
- Agenda: to prove it works
  - Discussion dissolves into discussion of fixes, architecture, etc.

Walkthrough Team
- Team of 3-5 people
- Moderator, as before
- Secretary, who records errors
- Tester, who plays the role of a computer, working through some test suites on paper and on the board

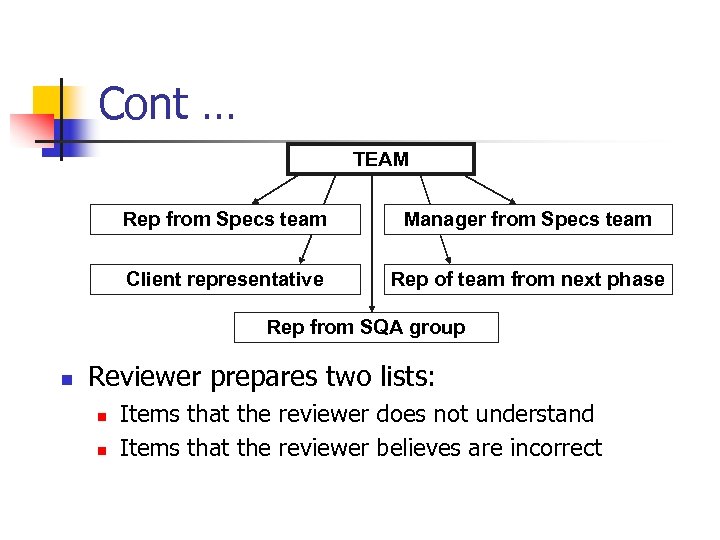

Walkthrough Team (cont.)
- Team
  - Representative from the specifications team
  - Manager from the specifications team
  - Client representative
  - Representative of the team from the next phase
  - Representative from the SQA group
- Each reviewer prepares two lists:
  - Items that the reviewer does not understand
  - Items that the reviewer believes are incorrect

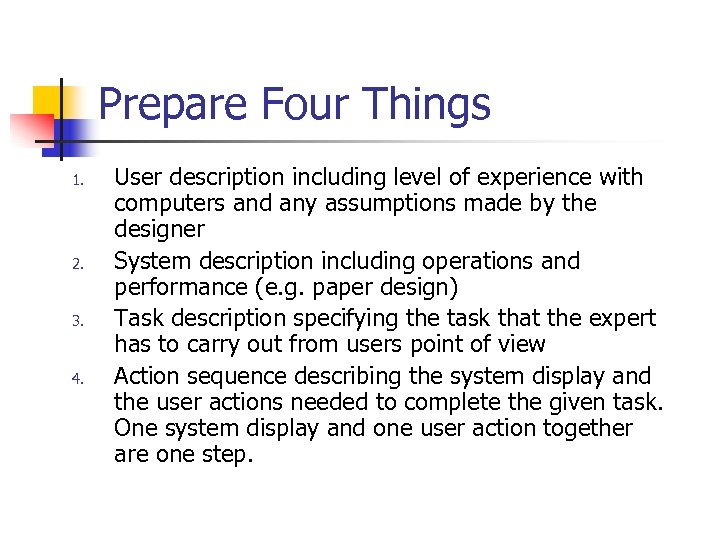

Prepare Four Things
1. User description, including level of experience with computers and any assumptions made by the designer
2. System description, including operations and performance (e.g. paper design)
3. Task description, specifying the task that the expert has to carry out from the user's point of view
4. Action sequence, describing the system display and the user actions needed to complete the given task. One system display and one user action together are one step.

Walkthrough Process
- Distribute material for the walkthrough in advance.
- The experts read the descriptions.
- Chaired by the SQA representative.
- The goal is to record faults for later correction.
- The expert carries out the task by following the action list.
- The expert asks the following questions at EACH step of the action list.

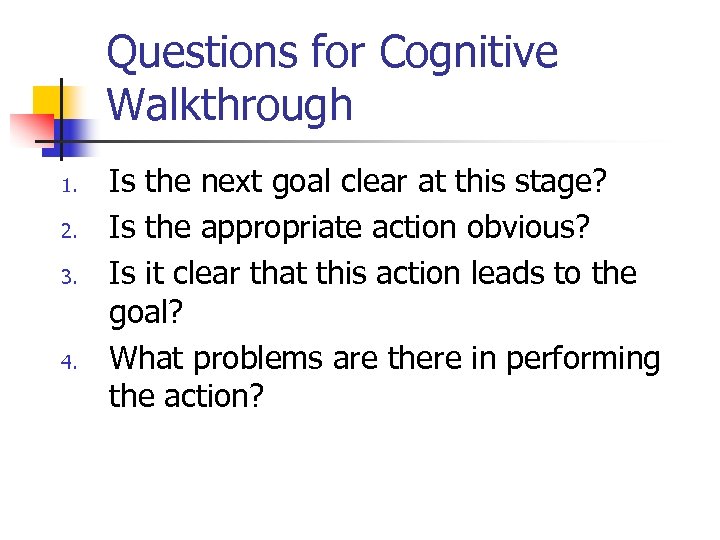

Questions for Cognitive Walkthrough
1. Is the next goal clear at this stage?
2. Is the appropriate action obvious?
3. Is it clear that this action leads to the goal?
4. What problems are there in performing the action?

Walkthrough Process (cont.)
- Two ways of doing walkthroughs
  - Participant driven
    - Reviewers present their lists of unclear items and incorrect items
    - The representative from the specifications team responds to each query
  - Document driven
    - The person responsible for the document walks the participants through it
    - Reviewers interrupt with prepared comments or comments triggered by the presentation
- An interactive process
- Not to be used for the evaluation of participants

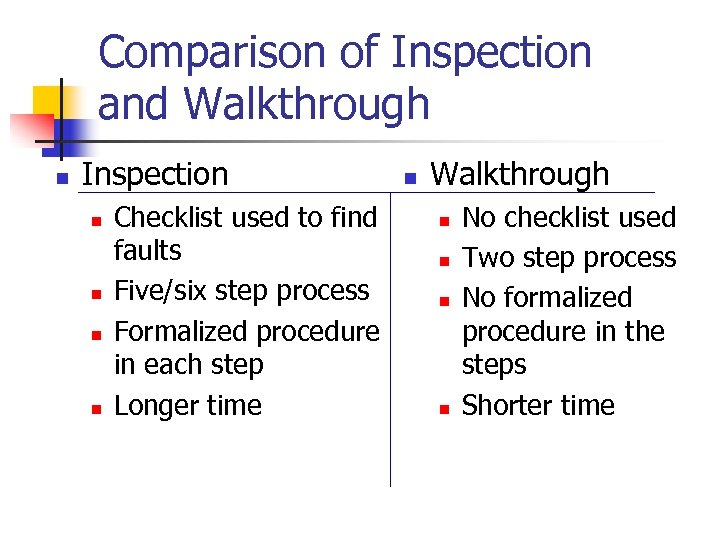

Comparison of Inspection and Walkthrough
- Inspection
  - Checklist used to find faults
  - Five/six step process
  - Formalized procedure in each step
  - Longer time
- Walkthrough
  - No checklist used
  - Two step process
  - No formalized procedure in the steps
  - Shorter time

PART IV TESTING IMPLEMENTATION

The V-model of development

Testing Implementation
- Unit Test
- Integration/Interface Test
- System Test
- Acceptance Test

Unit Testing
- Test individual modules one by one
- Primary goals
  - Conformance to specifications
    - Determine to what extent the processing logic satisfies the functions assigned to the module
  - Locate a fault in a smaller region
    - In an integrated system, it is not easy to determine which module has caused the fault
    - Reduces debugging effort

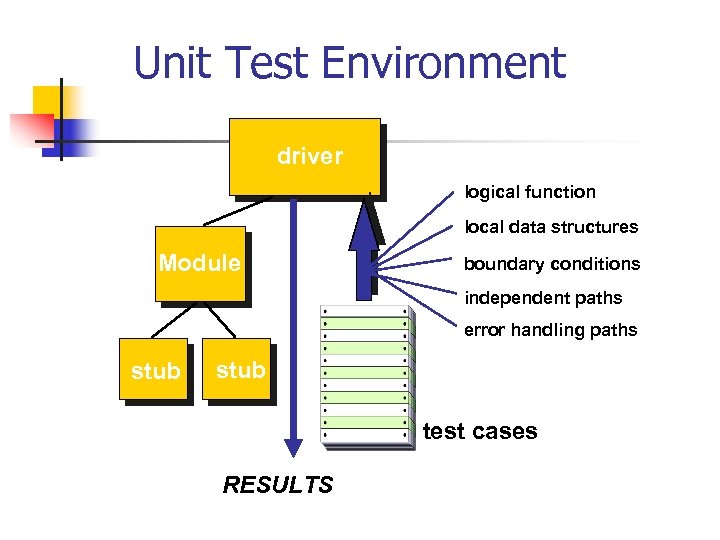

Unit Testing
- Target: the basic unit of a software system, usually called a module, which corresponds to a function or, in object-oriented code, a method
- Purpose: to verify its logical function, internal code structure, boundary conditions, etc.

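Unit Test Environment
- Diagram: a driver applies test cases to the module under test, stubs stand in for the modules it calls, and the RESULTS are collected
- Aspects of the module exercised: logical function, local data structures, boundary conditions, independent paths, error handling paths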
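
The driver/stub arrangement can be sketched with Python's standard `unittest` module. Everything named here (`price_with_tax`, `TaxServiceStub`, the 10% rate) is a hypothetical example rather than material from the slides: the test case class plays the driver, and the stub replaces a module that the unit under test would normally call.

```python
import unittest


def price_with_tax(net_price, tax_service):
    """Module under test: adds tax looked up from a collaborating module."""
    if net_price < 0:
        raise ValueError("net_price must be non-negative")
    rate = tax_service.rate_for("default")
    return round(net_price * (1 + rate), 2)


class TaxServiceStub:
    """Stub: stands in for the real tax module and returns a canned rate."""

    def rate_for(self, category):
        return 0.10


class PriceWithTaxDriver(unittest.TestCase):
    """Driver: feeds test cases to the module and checks the results."""

    def setUp(self):
        self.stub = TaxServiceStub()

    def test_logical_function(self):
        self.assertEqual(price_with_tax(100.0, self.stub), 110.0)

    def test_boundary_condition_zero(self):
        self.assertEqual(price_with_tax(0.0, self.stub), 0.0)

    def test_error_handling_path(self):
        with self.assertRaises(ValueError):
            price_with_tax(-1.0, self.stub)
```

Saved to a file, the tests can be run with `python -m unittest <file>`. Each test method targets one of the aspects listed for the unit test environment: logical function, boundary conditions, and error handling paths.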

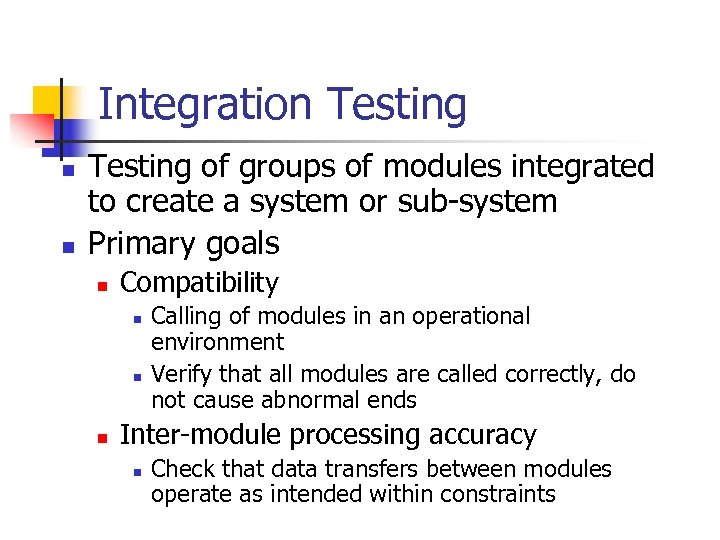

Integration Testing
- Testing of groups of modules integrated to create a system or sub-system
- Primary goals
  - Compatibility
    - Calling of modules in an operational environment
    - Verify that all modules are called correctly and do not cause abnormal ends
  - Inter-module processing accuracy
    - Check that data transfers between modules operate as intended within constraints

Integration Testing
- Takes place when modules or sub-systems are integrated to create larger systems
- Objectives are to detect faults due to interface errors or invalid assumptions about interfaces
- Particularly important for object-oriented development, as objects are defined by their interfaces
- Integration testing should be black-box testing with tests derived from the specification

Interface Types
- Parameter interfaces
  - Data passed from one procedure to another
- Shared memory interfaces
  - Block of memory is shared between procedures
- Procedural interfaces
  - Sub-system encapsulates a set of procedures to be called by other sub-systems
- Message passing interfaces
  - Sub-systems request services from other sub-systems

Some Interface Faults
- Interface misuse
  - A calling component calls another component and makes an error in its use of its interface, e.g. parameters in the wrong order
- Interface misunderstanding
  - A calling component embeds assumptions about the behaviour of the called component which are incorrect
- Timing faults
  - The called and the calling component operate at different speeds and out-of-date information is accessed
- Etc.

Guidelines for Interface Test Design
- Design tests so that parameters to a called procedure are at the extreme ends of their ranges
- Always test pointer parameters with null pointers
- Design tests which cause the component to fail
- Use stress testing in message passing systems
- In shared memory systems, vary the order in which components are activated
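
The first three guidelines can be illustrated with a small sketch. The called procedure `set_volume` and its 0..100 range are hypothetical; Python has no pointers, so `None` is used here as the nearest analogue of a null pointer.

```python
def set_volume(level):
    """Hypothetical called procedure: accepts an integer level in 0..100."""
    if level is None:
        raise TypeError("level must not be None")
    if not 0 <= level <= 100:
        raise ValueError("level out of range")
    return level / 100.0


def run_interface_tests():
    """Return a dict mapping each test name to True (pass) or False (fail)."""
    results = {}
    # Parameters at the extreme ends of their range.
    results["min"] = set_volume(0) == 0.0
    results["max"] = set_volume(100) == 1.0
    # Tests designed to make the component fail: just outside the range.
    for name, bad_level in (("below", -1), ("above", 101)):
        try:
            set_volume(bad_level)
            results[name] = False
        except ValueError:
            results[name] = True
    # Null-style argument.
    try:
        set_volume(None)
        results["null"] = False
    except TypeError:
        results["null"] = True
    return results
```

Stress testing and activation-order testing depend on the messaging or shared-memory infrastructure in use, so they are not sketched here.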

How to Integrate?
- A testing strategy is needed to integrate the subsystems, which are composed of integrated or basic units
- Possible choices
  - the “big bang” approach, or
  - an incremental integration approach
- The main consideration is locating faults
- The incremental approach reduces this problem

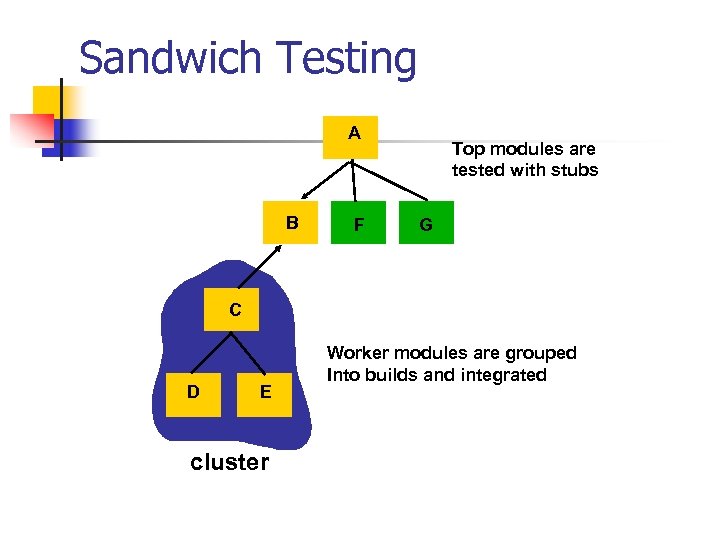

Integration Approaches
- Top-down testing
  - Start with the high-level system and integrate from the top down, replacing individual components by stubs where appropriate
- Bottom-up testing
  - Integrate individual components in levels until the complete system is created
- Sandwich testing
  - In practice, most integration involves a combination of these strategies

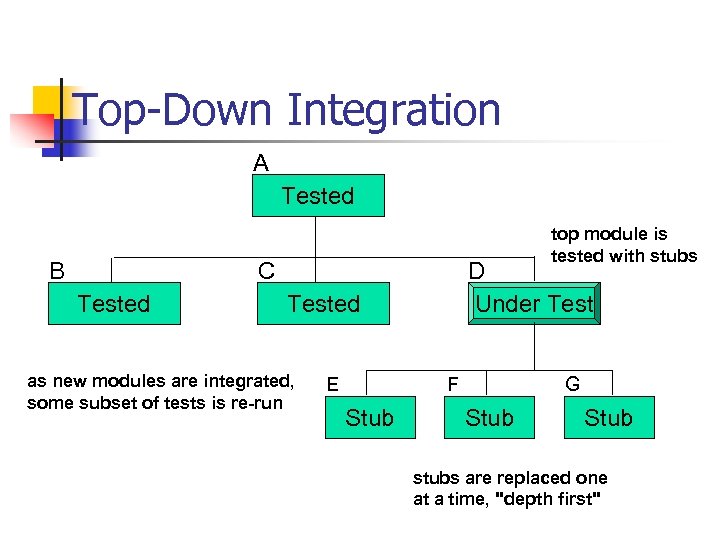

Top-Down Integration
- Diagram: module hierarchy A to G, with modules marked as tested, under test, or stub
- The top module is tested with stubs
- Stubs are replaced one at a time, "depth first"
- As new modules are integrated, some subset of tests is re-run

Top-Down Integration (cont.)
- When a unit at a higher level of the hierarchy is tested, the units it calls have not been implemented yet and are replaced by stubs.
- Stubs are pieces of code that approximate the behavior of the missing component.
- Testing continues by replacing the stubs with the actual units, whose own lower-level units are stubbed in turn.
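
A minimal sketch of this stub replacement, using hypothetical units: `build_report` is the top-level unit, and `load_orders` and `format_line` are the lower-level units it calls. Passing the lower-level units in as arguments is just one convenient way to swap a stub for the real unit.

```python
def load_orders_stub():
    """Stub: returns canned data instead of reading a real data store."""
    return [{"id": 1, "amount": 5}, {"id": 2, "amount": 7}]


def format_line_stub(order):
    """Stub: approximates the missing formatting unit with a fixed string."""
    return "LINE"


def build_report(load_orders, format_line):
    """Top-level unit under test; the units it calls are passed in."""
    return "\n".join(format_line(order) for order in load_orders())


def format_line(order):
    """Real lower-level unit that later replaces format_line_stub."""
    return f"#{order['id']}: {order['amount']}"


# Step 1: test the top unit with both lower-level units stubbed.
step1 = build_report(load_orders_stub, format_line_stub)

# Step 2: replace one stub with the real unit and re-run the test.
step2 = build_report(load_orders_stub, format_line)
```

Step 1 checks only the control logic of the top unit. Step 2 re-runs the same call after one stub has been replaced, which is where the re-testing cost of the top-down approach comes from.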

Top-Down Integration (cont.)
- Strategy used in conjunction with top-down development
- Starts with the highest level of a system and works downwards
- Construction starts with the sub-models at the highest level
- Ends with the sub-models at the lowest level
- When the sub-models at a level are completed, they are integrated and integration testing is performed

Advantages of Top-Down Approach
- Architectural validation
  - Top-down integration testing is better at discovering errors in the system architecture
- System demonstration
  - Top-down integration testing allows a limited demonstration at an early stage in the development
- Model integration testing is minimized
  - Provides an early integration of units before the software integration phase
- Redundant functionality in lower-level units will be identified by top-down unit testing, because there will be no route to test it

Disadvantages of Top-Down Approach
- It may take time or be difficult to develop program stubs
- Typically needs system infrastructure before any testing is possible
- Testing can be expensive (since the whole model must be executed for each test)
- Adequate input data is difficult to obtain
- High cost associated with re-testing when changes are made, and a high maintenance cost

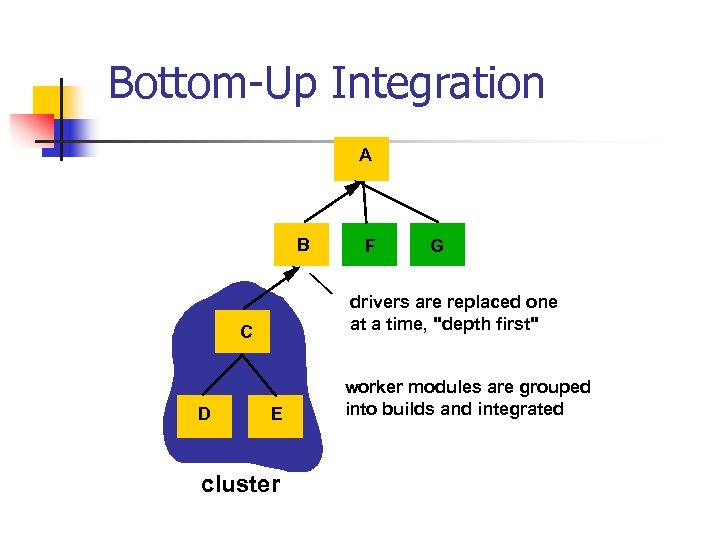

Bottom-Up Integration
- Diagram: module hierarchy A to G, with a cluster of worker modules at the bottom
- Worker modules are grouped into builds (clusters) and integrated
- Drivers are replaced one at a time, "depth first"

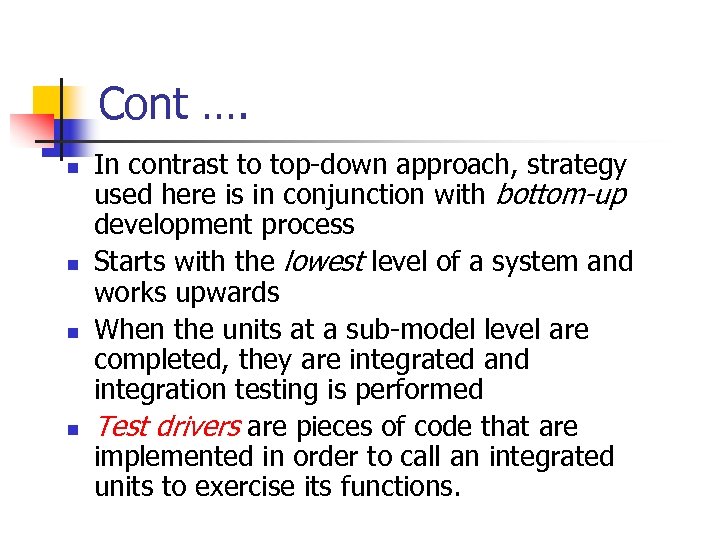

Bottom-Up Integration (cont.)
- In contrast to the top-down approach, this strategy is used in conjunction with a bottom-up development process
- Starts with the lowest level of a system and works upwards
- When the units at a sub-model level are completed, they are integrated and integration testing is performed
- Test drivers are pieces of code implemented in order to call the integrated units and exercise their functions.
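
A test driver for a small integrated cluster might look like the sketch below. The units `parse_amount` and `sum_amounts`, and the test cases, are hypothetical; the point is that the driver takes the place of the not-yet-written higher-level caller.

```python
def parse_amount(text):
    """Lowest-level unit: converts a string such as ' 12.5 ' to a float."""
    return float(text.strip())


def sum_amounts(texts):
    """Next level up: integrates parse_amount over a list of strings."""
    return sum(parse_amount(text) for text in texts)


def driver():
    """Test driver: calls the integrated cluster and returns any failures."""
    cases = [
        (["1", "2", "3"], 6.0),
        ([" 0.5 ", "0.5"], 1.0),
        ([], 0.0),
    ]
    failures = []
    for inputs, expected in cases:
        actual = sum_amounts(inputs)
        if actual != expected:
            failures.append((inputs, expected, actual))
    return failures
```

An empty list from `driver()` means every case passed. Once the real caller of `sum_amounts` exists, it replaces the driver and the next level up is tested the same way.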

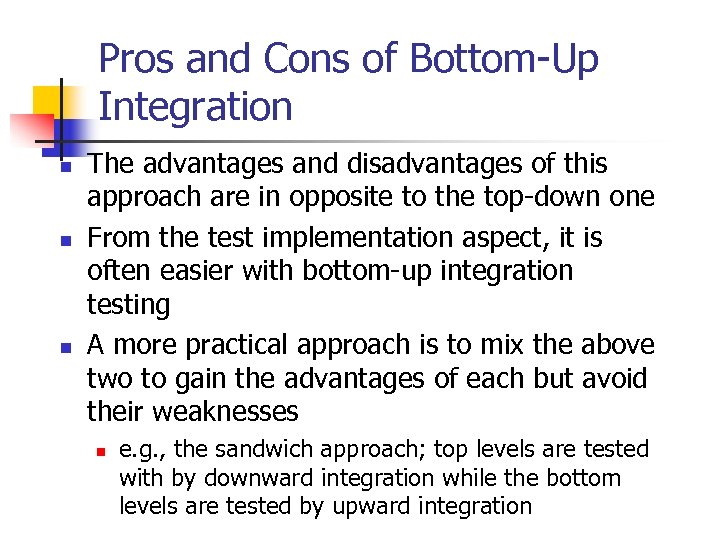

Pros and Cons of Bottom-Up Integration
- The advantages and disadvantages of this approach are the opposite of those of the top-down approach
- From the test implementation aspect, bottom-up integration testing is often easier
- A more practical approach is to mix the two, gaining the advantages of each while avoiding their weaknesses
  - e.g., the sandwich approach: the top levels are tested by downward integration while the bottom levels are tested by upward integration

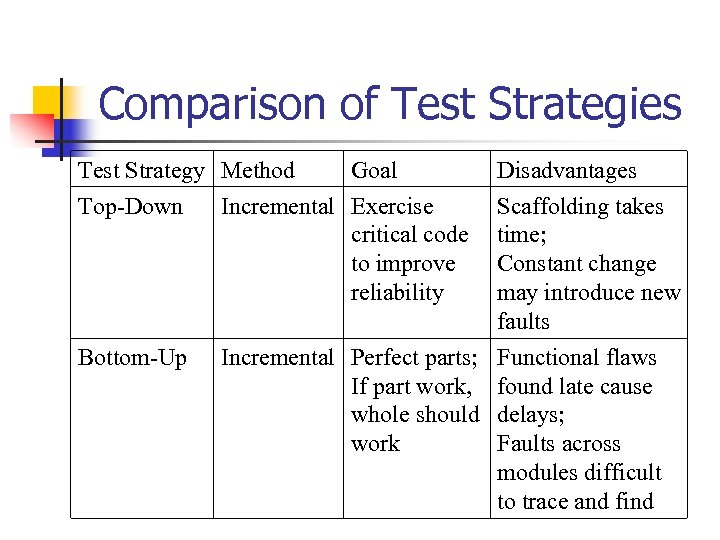

Comparison of Test Strategies

| Test Strategy | Method | Goal | Disadvantages |
| --- | --- | --- | --- |
| Top-Down | Incremental | Exercise critical code to improve reliability | Scaffolding takes time; constant change may introduce new faults |
| Bottom-Up | Incremental | Perfect parts; if parts work, whole should work | Functional flaws found late cause delays; faults across modules difficult to trace and find |

Sandwich Testing
- Diagram: module hierarchy A to G, with a cluster of worker modules at the bottom
- Top modules are tested with stubs
- Worker modules are grouped into builds (clusters) and integrated

High Order Testing
- System or alpha test
- Validation, acceptance or beta test
- Many other special-purpose kinds of testing, as illustrated in the next two slides

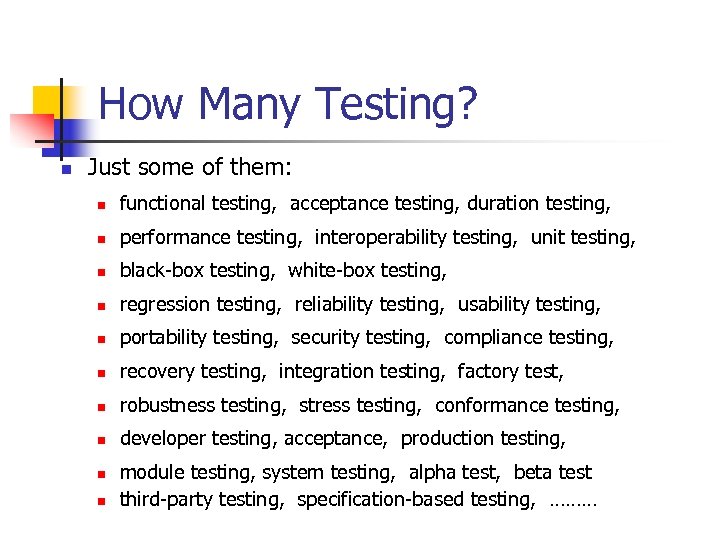

How Many Kinds of Testing?
- Just some of them:
  - functional testing, acceptance testing, duration testing,
  - performance testing, interoperability testing, unit testing,
  - black-box testing, white-box testing,
  - regression testing, reliability testing, usability testing,
  - portability testing, security testing, compliance testing,
  - recovery testing, integration testing, factory test,
  - robustness testing, stress testing, conformance testing,
  - developer testing, production testing,
  - module testing, system testing, alpha test, beta test,
  - third-party testing, specification-based testing, ………

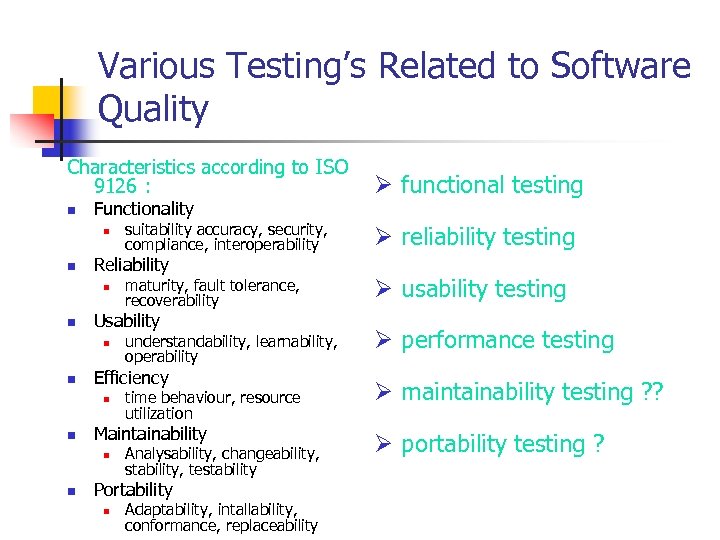

Kinds of Testing Related to Software Quality Characteristics (according to ISO 9126)
- Functionality: suitability, accuracy, security, compliance, interoperability
  - => functional testing
- Reliability: maturity, fault tolerance, recoverability
  - => reliability testing
- Usability: understandability, learnability, operability
  - => usability testing
- Efficiency: time behaviour, resource utilization
  - => performance testing
- Maintainability: analysability, changeability, stability, testability
  - => maintainability testing ??
- Portability: adaptability, installability, conformance, replaceability
  - => portability testing ?

Testing workbenches
- Testing is an expensive process phase. Testing workbenches provide a range of tools to reduce the time required and total testing costs
- Most testing workbenches are open systems because testing needs are organisation-specific
- Difficult to integrate with closed design and analysis workbenches

A testing workbench

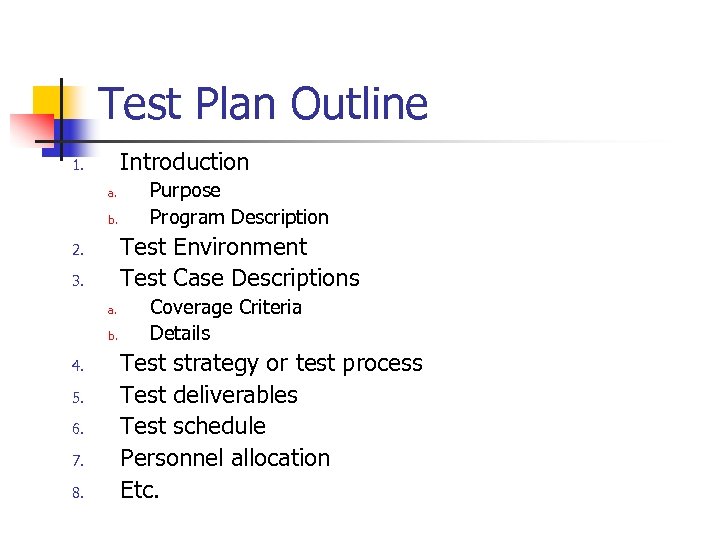

Test Plan Outline
1. Introduction
   a. Purpose
   b. Program Description
2. Test Environment
3. Test Case Descriptions
   a. Coverage Criteria
   b. Details
4. Test strategy or test process
5. Test deliverables
6. Test schedule
7. Personnel allocation
8. Etc.

Test Plan or Test Documentation Set
- The set of test planning documents might include:
  - A Testing Project Plan, which identifies classes of tasks and broadly allocates people and resources to them;
  - Descriptions of the platforms (hardware and software environments) that you will test on, and of the relevant variations among the items that make up your platform. Examples of variables are operating system type and version, browser, printer driver, video card/driver, CPU, hard disk capacity, free memory, and third party utility software.

Test Plan or Test Documentation Set (cont.)
- High-level designs for test cases (individual tests) and test suites (collections of related tests);
- Detailed lists or descriptions of test cases;
- Descriptions (such as protocol specifications) of the interactions of the software under test (SUT) with other applications that the SUT must interact with. Example: the SUT includes a web-based shopping cart, which must obtain credit card authorizations from VISA.
- Anything else that you would put in a hard copy or virtual binder that describes the tests you will develop and run.
- This set of materials is called the test plan or the test documentation set.
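
The platform variations named in the documentation set multiply quickly, which is one reason to write them down. The sketch below enumerates every combination of a few platform variables with the standard `itertools.product`; the variable names and values are hypothetical placeholders, not a recommended test matrix.

```python
from itertools import product

# Hypothetical platform variables and the values to be covered.
PLATFORM_VARIABLES = {
    "os": ["Windows", "Linux"],
    "browser": ["Firefox", "Chrome"],
    "free_memory_mb": [512, 4096],
}


def platform_configurations(variables):
    """Return one dict per combination of the platform variable values."""
    names = list(variables)
    value_lists = [variables[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


configurations = platform_configurations(PLATFORM_VARIABLES)
```

Three variables with two values each already give eight configurations, so a real plan usually selects a subset of combinations rather than testing them all.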

PART V SUMMARY

Bugs are costly
- Pentium bug
  - Intel Pentium chip: a flaw reported in 1994 produced errors in floating-point division
  - Cost: $475 million
- ARIANE failure
  - In June 1996, the Ariane 5 rocket exploded about 40 seconds after take-off
  - A software component threw an exception
  - Cost: $400 million payload
- Therac-25 accident
  - A software failure caused wrong dosages of x-rays
  - Cost: human loss

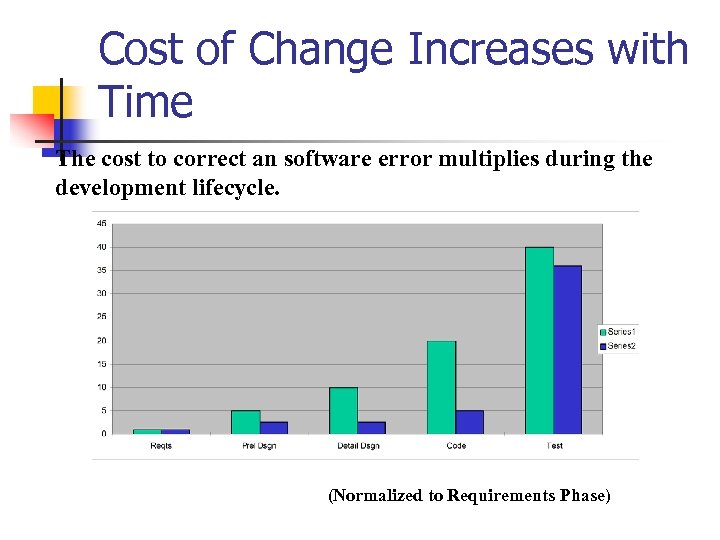

Cost of Change Increases with Time
- Vertical axis: cost scale factor (normalized to the requirements phase)
- The cost to correct a software error multiplies during the development lifecycle.

Bill Gates’ Comments on Testing
- Testing is another area where I have to say I’m a little bit disappointed in the lack of progress. At Microsoft, in a typical development group, there are many more testers than there are engineers writing code.
- Yet engineers spend well over a third of their time doing testing type work. You could say that we spend more time testing than we do writing code. And if you go back through the history of large-scale systems, that’s the way they’ve been.
- But, you know, what kind of new techniques are there in terms of analyzing where those things come from and having constructs that do automatic testing? Very, very little. …

Problems in Current Practices
- There are never sufficiently many test cases
- Late detection of bugs
- Testing does not find all the errors
- Testing is expensive and takes a lot of time
  - ~70% of time spent on V&V
- Testing is still a largely informal task
  - Ad hoc, manual, error prone
- Industrial practices are far from satisfactory
  - Inadequate for safety-critical systems

Maturity of Testing Philosophy
- PHASE 0: Testing == Debugging
- PHASE 1: Testing shows the software works
- PHASE 2: Testing shows the software doesn’t work
- PHASE 3: Testing doesn’t prove anything, it reduces risk of unacceptable software delivery.
- PHASE 4: Testing is not an act, it’s a mental discipline of quality.

The Latest Direction
- Faced with so many persistent problems, some new developments are worth addressing
  - Make testing the heart of programming, e.g., XP
  - Test first, program second
  - More emphasis on prevention than detection
- Doing better testing is not the only purpose; designing a consistent development process with quality assurance built in from the beginning, e.g., CMMI, is more important

