- Number of slides: 87

Chapter 6 Experiences Process Modeling Calgary 2004 Prof. Dr. Michael M. Richter

Additional Recommended Literature
• IESE's Knowledge Management Product Experience Base (KM-PEB): Internet-based information system on knowledge management tools (http://demolab.iese.fhg.de:8080/KM-PEB/)
• R. Bergmann, K.-D. Althoff, S. Breen, M. Göker, M. Manago, R. Traphöner & S. Wess (2003). Developing Industrial Case-Based Reasoning Applications. Springer Verlag, LNAI 1612.
• K.-D. Althoff & M. Nick (2004). How to Support Experience Management with Evaluation: Foundations, Evaluation Methods, and Examples for Case-Based Reasoning and Experience Factory. Springer Verlag, LNAI.
• M. M. Richter: Knowledge Management for E-Commerce. Lecture Notes.

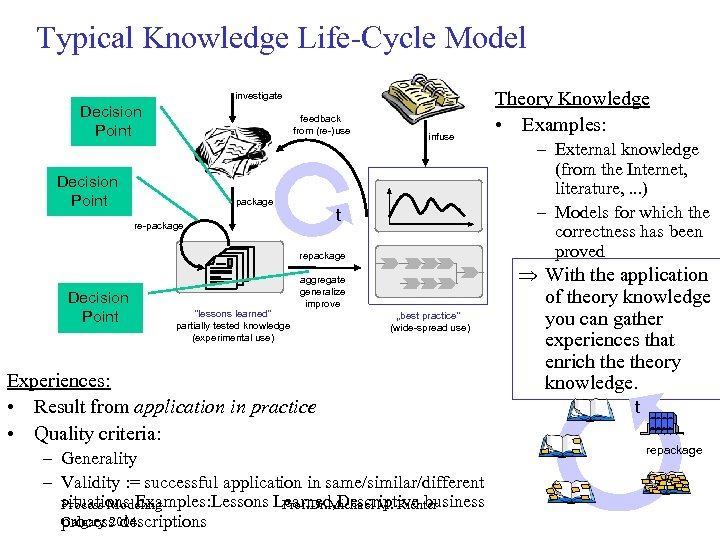

Process Models
• A process model can contain several methods for realizing a process. Each time a new project plan is made, it can be stored and used to enrich the process model.
• In this way a process model constitutes experience and can be part of an experience base.
• This leaves the following problems unsolved:
  – How do we obtain the most useful experience for an actual problem?
  – Much experience is not coded in the form of a process model.
• Therefore the experience base needs to be much broader than just a process model.

PART 1 Lessons Learned and Best Practices

Informal Examples for Lessons Learned
• Experience regarding a customer
  – "Company X wants to change as little as possible." This experience helps in project acquisition talks and when making offers.
• Experience regarding a specific topic (e.g., reports as deliverables)
  – "The effort for report writing must be carefully planned. Plan four weeks for a report for structuring, formatting, layout, and phrasing. This suggestion is based on two reports with approx. 70 pages each."
  – "Do not hand out draft reports without writing 'DRAFT' on each page."
• Experience regarding certain project types
  – "Availability of case study partner: Finding a case study partner IS a risk factor for projects of the combined type 'R&D and transfer'. […]"
• Any combination of the above.

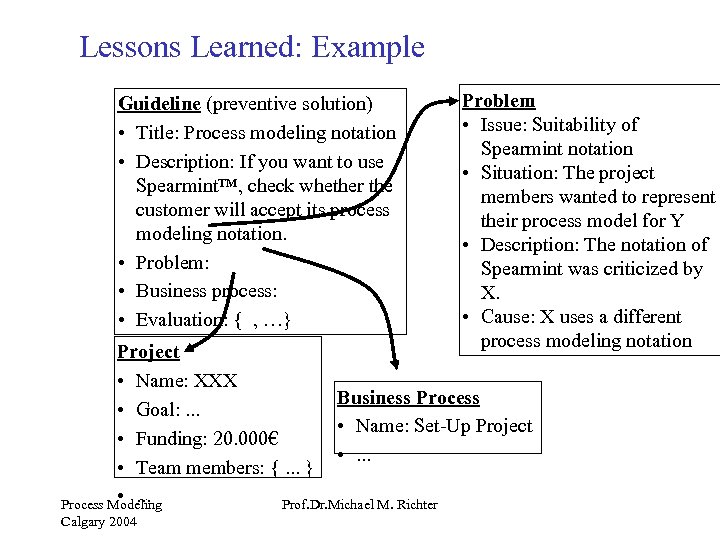

Lessons Learned: Example Guideline (preventive solution)
Guideline
• Title: Process modeling notation
• Description: If you want to use Spearmint™, check whether the customer will accept its process modeling notation.
• Problem:
• Business process:
• Evaluation: { , …}
Problem
• Issue: Suitability of Spearmint notation
• Situation: The project members wanted to represent their process model for Y.
• Description: The notation of Spearmint was criticized by X.
• Cause: X uses a different process modeling notation.
Project
• Name: XXX
• Funding: 20,000 €
• …
• Team members: {…}
• …
Business Process
• Name: Set-Up Project
• Goal: …
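The guideline above links several structured records (guideline, problem, project, business process). A minimal Python sketch of such linked records, assuming plain dataclasses; the class and field names are hypothetical and only mirror the slide's labels, and the direct link from the guideline to its projects is an assumption:

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Project:
    name: str
    funding_eur: Optional[int] = None                       # e.g. 20000 for "20,000 €"
    team_members: list[str] = field(default_factory=list)


@dataclass
class BusinessProcess:
    name: str
    goal: str = ""


@dataclass
class Problem:
    issue: str
    situation: str
    description: str
    cause: str


@dataclass
class Guideline:
    title: str
    description: str
    problem: Problem                                        # linked problem record
    business_process: BusinessProcess                       # linked business process
    projects: list[Project] = field(default_factory=list)   # hypothetical project link
    evaluation: list[str] = field(default_factory=list)


guideline = Guideline(
    title="Process modeling notation",
    description=("If you want to use Spearmint, check whether the customer "
                 "will accept its process modeling notation."),
    problem=Problem(
        issue="Suitability of Spearmint notation",
        situation="The project members wanted to represent their process model for Y.",
        description="The notation of Spearmint was criticized by X.",
        cause="X uses a different process modeling notation.",
    ),
    business_process=BusinessProcess(name="Set-Up Project"),
    projects=[Project(name="XXX", funding_eur=20000)],
)
print(guideline.problem.issue)
```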

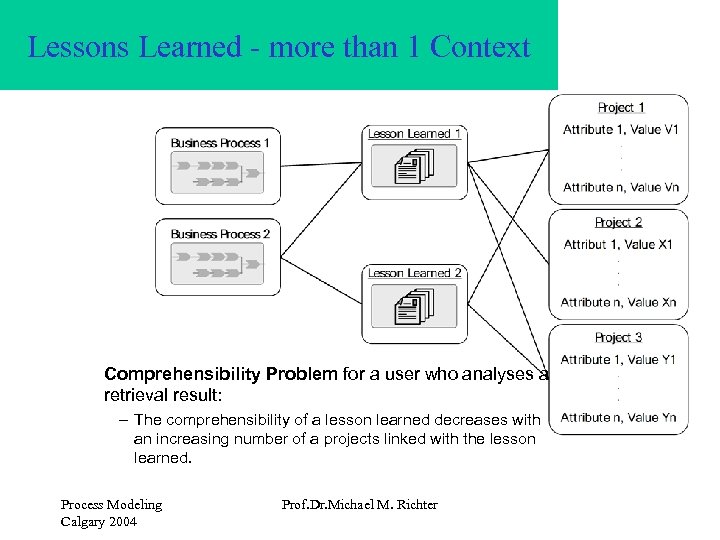

Lessons Learned with More than One Context
Comprehensibility problem for a user who analyzes a retrieval result:
– The comprehensibility of a lesson learned decreases as the number of projects linked to the lesson learned increases.

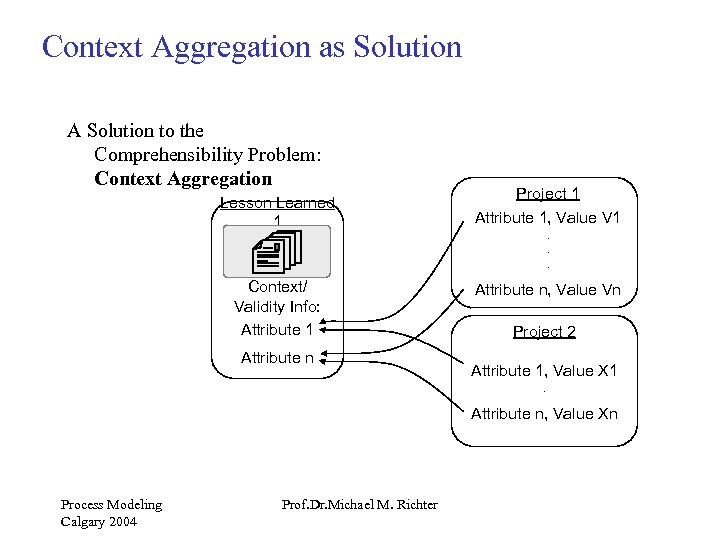

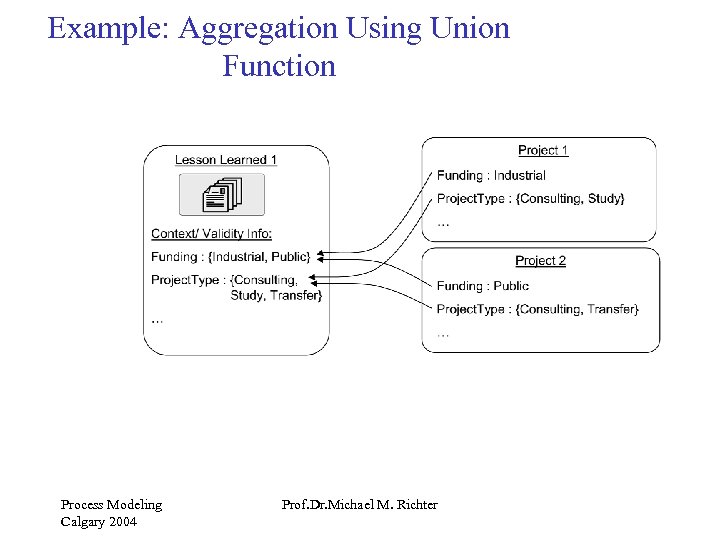

Context Aggregation as Solution
A solution to the comprehensibility problem: context aggregation.
• Lesson Learned 1 is linked to
  – Project 1: Attribute 1 = V1, …, Attribute n = Vn
  – Project 2: Attribute 1 = X1, …, Attribute n = Xn
• Context/validity info of Lesson Learned 1: Attribute 1, …, Attribute n, each aggregated from the values of the linked projects.

Example: Aggregation Using Union Function
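The slide's example itself is not preserved. A minimal sketch of what aggregation with a union function could look like, assuming each linked project's context is a dictionary of attribute values and the aggregated context keeps, per attribute, the set union of those values; the attribute names and values are hypothetical:

```python
def aggregate_contexts(project_contexts: list[dict[str, str]]) -> dict[str, set[str]]:
    """Aggregate the contexts of all projects linked to one lesson learned.

    For every attribute, the aggregated value is the union of the values
    that the linked projects have for that attribute.
    """
    aggregated: dict[str, set[str]] = {}
    for context in project_contexts:
        for attribute, value in context.items():
            aggregated.setdefault(attribute, set()).add(value)
    return aggregated


project_1 = {"customer": "Company A", "project type": "R&D"}
project_2 = {"customer": "Company B", "project type": "R&D"}
print(aggregate_contexts([project_1, project_2]))
```

For the two sample projects, the aggregated context maps "customer" to {"Company A", "Company B"} and "project type" to {"R&D"} (the printed set order may vary). One aggregated context replaces a list of project contexts, which is what addresses the comprehensibility problem.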

Benefits of "Lessons Learned"
• Prevention of the recurrence of undesirable events
• Promotion of the recurrence of good practice
• Improvement of current practices
• Increase in efficiency, productivity, and quality by reducing costs and meeting schedules
• Increase in communication and teamwork
• Lessons learned complement more formal measurement programs by allowing people to articulate insights (usually informally) that are not captured in the measurement programs.
  – As such, they can also be a vehicle for recording the data interpretations given during the feedback sessions that are part of a GQM-based measurement program.

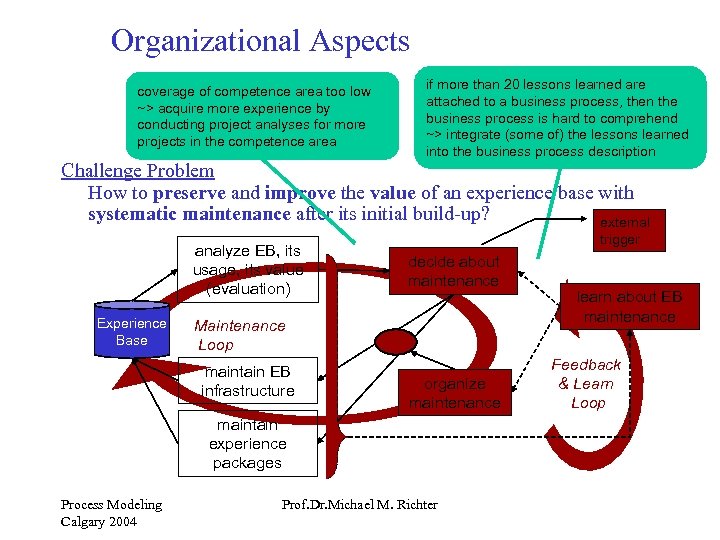

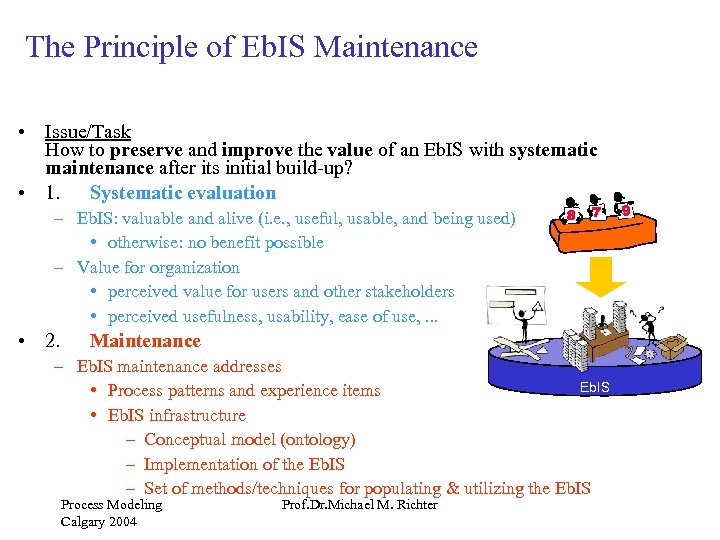

Organizational Aspects
Challenge/problem: How can the value of an experience base (EB) be preserved and improved with systematic maintenance after its initial build-up?
Maintenance loop (may also be triggered externally):
• analyze the EB, its usage, and its value (evaluation)
• decide about maintenance
• organize maintenance
• maintain the experience packages and the EB infrastructure
Feedback & learn loop: learn about EB maintenance.
Example rules (condition ~> maintenance action):
• coverage of a competence area too low ~> acquire more experience by conducting project analyses for more projects in the competence area
• more than 20 lessons learned attached to a business process, which makes the business process hard to comprehend ~> integrate (some of) the lessons learned into the business process description
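The two example rules can be read as condition ~> action pairs that the analysis step of the maintenance loop evaluates. A sketch under stated assumptions: "coverage" is approximated by the number of analyzed projects per competence area, with a hypothetical minimum of 3, while the limit of 20 lessons learned per business process comes from the slide; all names are hypothetical:

```python
from dataclasses import dataclass

MIN_PROJECTS_PER_AREA = 3        # hypothetical threshold for "coverage too low"
MAX_LESSONS_PER_PROCESS = 20     # limit taken from the rule on the slide


@dataclass
class ExperienceBaseStats:
    # Hypothetical figures collected when analyzing the experience base (EB).
    projects_analyzed_per_area: dict[str, int]
    lessons_per_process: dict[str, int]


def maintenance_suggestions(stats: ExperienceBaseStats) -> list[str]:
    """Evaluate the condition ~> action rules and return suggested actions."""
    suggestions = []
    for area, count in stats.projects_analyzed_per_area.items():
        if count < MIN_PROJECTS_PER_AREA:
            suggestions.append(
                f"conduct project analyses for more projects in competence area '{area}'")
    for process, count in stats.lessons_per_process.items():
        if count > MAX_LESSONS_PER_PROCESS:
            suggestions.append(
                f"integrate lessons learned into the description of '{process}'")
    return suggestions


stats = ExperienceBaseStats(
    projects_analyzed_per_area={"testing": 1, "design": 5},
    lessons_per_process={"Set-Up Project": 25, "Review": 3},
)
for suggestion in maintenance_suggestions(stats):
    print(suggestion)
```

The function only suggests actions; deciding and organizing the maintenance remain separate steps of the loop.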

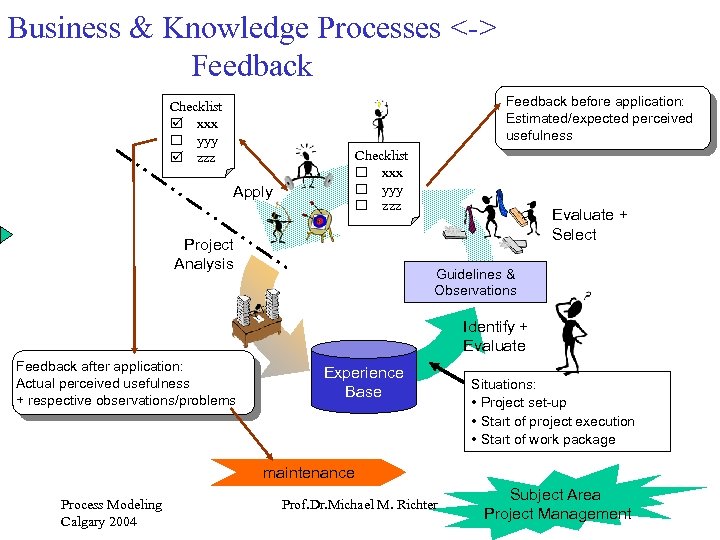

Business & Knowledge Processes <-> Feedback
Subject area: Project Management
Situations:
• Project set-up
• Start of project execution
• Start of work package maintenance
Process:
• Evaluate + select: guidelines and observations are selected from the experience base into a checklist (☐ xxx, ☐ yyy, ☐ zzz). Feedback before application: estimated/expected perceived usefulness.
• Apply: the checklist is applied in the project (e.g., ☑ xxx, ☐ yyy, ☑ zzz).
• Project analysis, identify + evaluate: feedback after application, i.e., the actual perceived usefulness plus the respective observations/problems, goes back to the experience base.

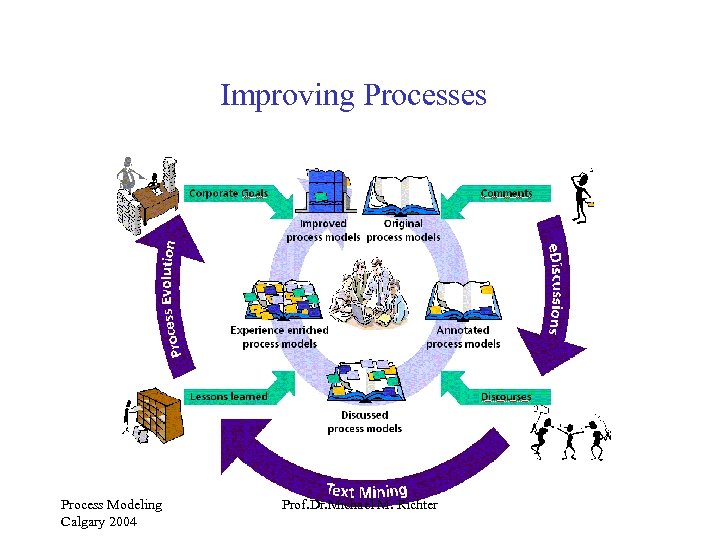

Improving Processes

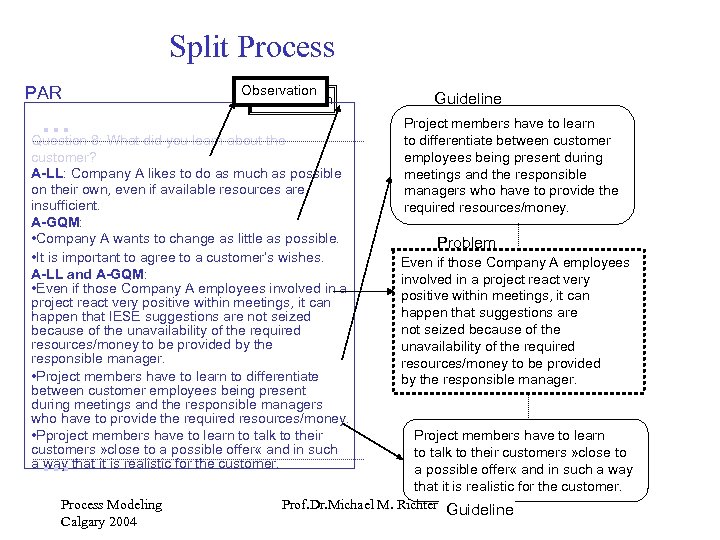

Split Process
PAR …
Observation, Question 8: What did you learn about the customer?
• A-LL: Company A likes to do as much as possible on its own, even if the available resources are insufficient.
• A-GQM:
  – Company A wants to change as little as possible.
  – It is important to agree to a customer's wishes.
• A-LL and A-GQM:
  – Even if the Company A employees involved in a project react very positively in meetings, IESE suggestions may not be taken up because the required resources/money, which the responsible manager would have to provide, are unavailable.
  – Project members have to learn to differentiate between customer employees present during meetings and the responsible managers who have to provide the required resources/money.
  – Project members have to learn to talk to their customers »close to a possible offer« and in a way that is realistic for the customer.
The observation is split into separate items:
• Problem: Even if the Company A employees involved in a project react very positively in meetings, suggestions may not be taken up because the required resources/money, which the responsible manager would have to provide, are unavailable.
• Guideline: Project members have to learn to differentiate between customer employees present during meetings and the responsible managers who have to provide the required resources/money.
• Guideline: Project members have to learn to talk to their customers »close to a possible offer« and in a way that is realistic for the customer.

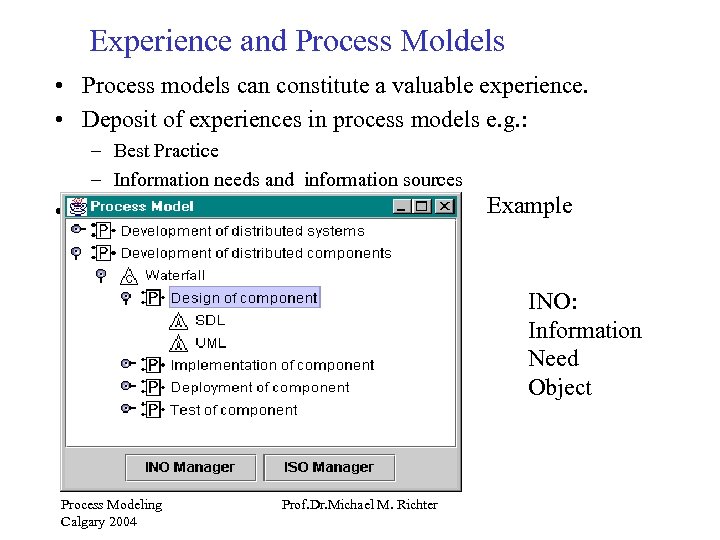

Experience and Process Models
• Process models can constitute valuable experience.
• Experiences can be deposited in process models, e.g.:
  – Best practices
  – Information needs and information sources
Example
• INO: Information Need Object

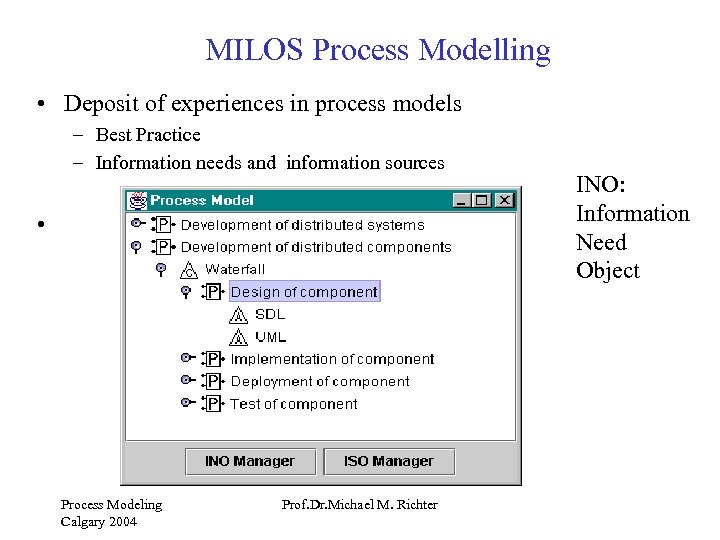

MILOS Process Modelling
• Deposit of experiences in process models:
  – Best practice
  – Information needs and information sources
• INO: Information Need Object

PART 2 Similarity-Based Reasoning (Case-Based Reasoning, CBR)

The Problem
• If there are many experiences, which one is the most suitable in a given situation?
• The difficulty is that usually none of the experiences deals with exactly the same problem as the one in the actual situation.
• We need a ranking of the experiences that corresponds to their usefulness for the actual problem.
• This is achieved by defining numerical functions, the similarity measures. They are dual to distance measures: the smaller the distance between two problems, the greater their similarity.
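One common way to make the duality concrete, assuming a distance d bounded by some maximum d_max (an assumption, not stated on the slide), is a decreasing transformation of distance into similarity. Ranking by descending similarity then gives the same order as ranking by ascending distance:

$$
\mathrm{sim}(x, y) = 1 - \frac{d(x, y)}{d_{\max}}, \qquad d(x, y_1) \le d(x, y_2) \iff \mathrm{sim}(x, y_1) \ge \mathrm{sim}(x, y_2)
$$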

Experience Units
• Experience units are the elementary (atomic) pieces of experience.
• We call such a unit a case. A case is structured into a problem description and a solution description. These descriptions can be more or less detailed; in particular, the solution description can contain many comments.
• Each case took place in a certain context, which should also be recorded.
• A complex experience is a set of cases, organized in a case base.
• The idea is to reuse cases in contexts and for problems that are not identical to the original ones.
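A minimal sketch of a case and a case base as Python dataclasses, assuming an attribute-value dictionary for the problem description, free text for the solution, and a separate dictionary for the context; the names and sample attributes are hypothetical:

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    """An experience unit: problem description, solution description, context."""
    problem: dict[str, object]                                # attribute-value pairs
    solution: str                                             # free text, may hold comments
    context: dict[str, object] = field(default_factory=dict)  # where the case took place


@dataclass
class CaseBase:
    """A complex experience: a collection of cases."""
    cases: list[Case] = field(default_factory=list)

    def add(self, case: Case) -> None:
        self.cases.append(case)

    def __len__(self) -> int:
        return len(self.cases)


case_base = CaseBase()
case_base.add(Case(
    problem={"deliverable": "report", "pages": 70},
    solution="Plan four weeks for structuring, formatting, layout, and phrasing.",
    context={"customer": "Company X"},
))
print(len(case_base))  # 1
```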

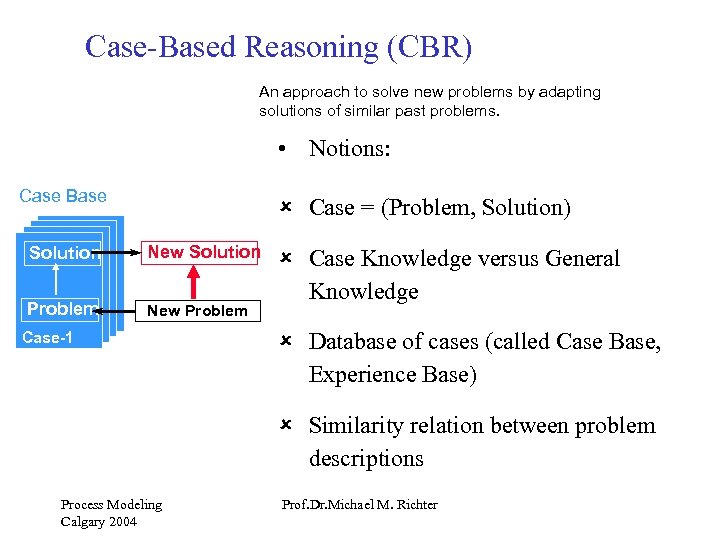

Case-Based Reasoning (CBR)
An approach to solving new problems by adapting the solutions of similar past problems.
(Figure: the case base contains Case-1 = (Problem, Solution); a new problem is matched to the problem of Case-1, and its solution is adapted into a new solution.)
• Notions:
  – Case = (Problem, Solution)
  – Case knowledge versus general knowledge
  – Database of cases (called case base or experience base)
  – Similarity relation between problem descriptions

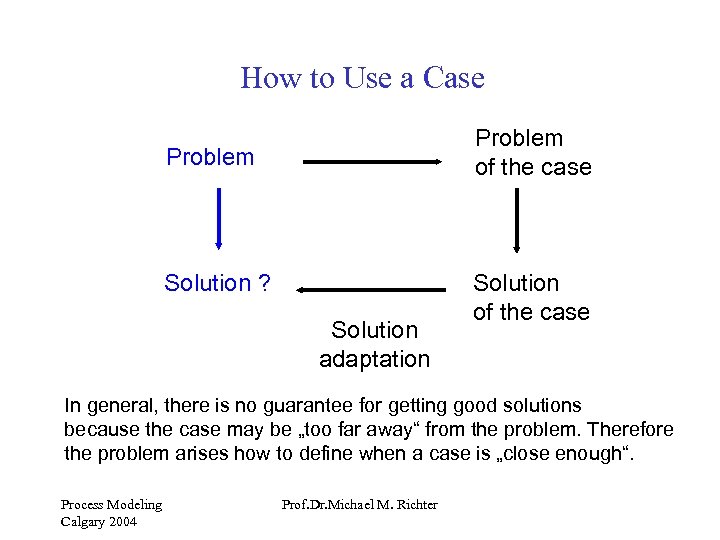

How to Use a Case
(Figure: the new problem is compared with the problem of the case; the solution of the case is adapted to obtain the still unknown solution "?" of the new problem.)
In general, there is no guarantee of obtaining good solutions, because the case may be "too far away" from the problem. This raises the question of how to define when a case is "close enough".

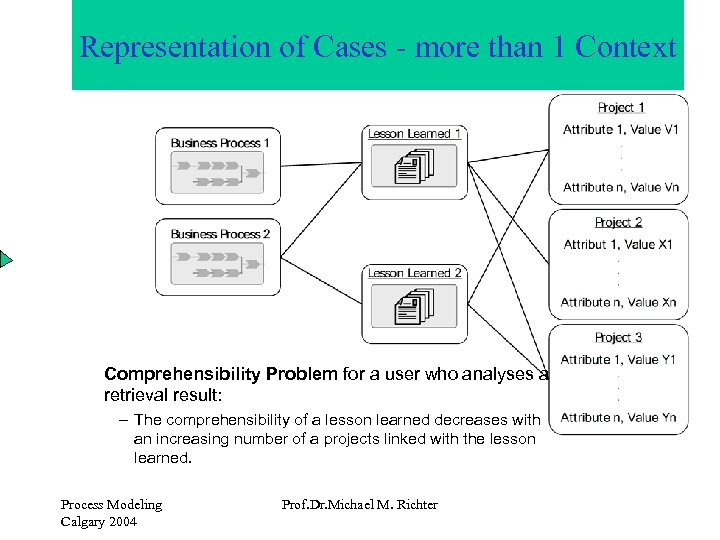

Representation of Cases with More than One Context
Comprehensibility problem for a user who analyzes a retrieval result:
– The comprehensibility of a lesson learned decreases as the number of projects linked to the lesson learned increases.

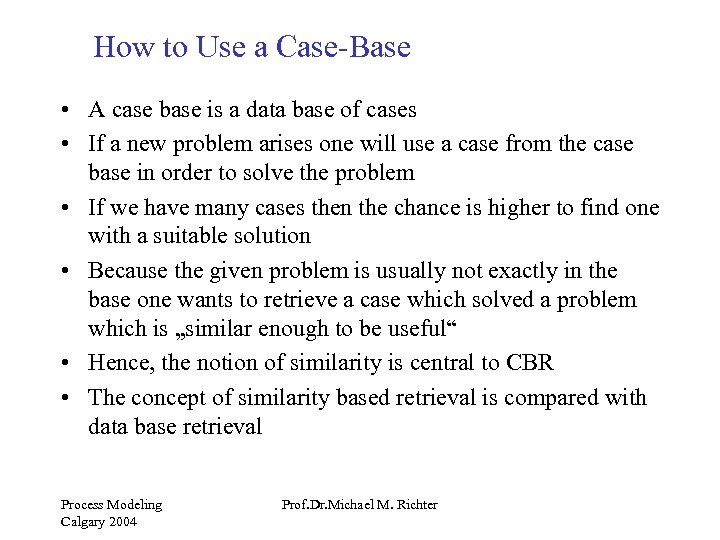

How to Use a Case Base
• A case base is a database of cases.
• If a new problem arises, a case from the case base is used to solve it.
• The more cases there are, the higher the chance of finding one with a suitable solution.
• Because the given problem is usually not exactly in the base, we want to retrieve a case that solved a problem that is "similar enough to be useful".
• Hence, the notion of similarity is central to CBR.
• The concept of similarity-based retrieval is compared with database retrieval.
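To illustrate the contrast with database retrieval, the following sketch (hypothetical names, binary problem vectors, and the share of agreeing attributes as a stand-in similarity measure) shows an exact-match query returning nothing while similarity-based retrieval still returns the closest case:

```python
def hamming_sim(x: tuple[int, ...], y: tuple[int, ...]) -> float:
    """Share of positions on which two equally long binary tuples agree."""
    return sum(a == b for a, b in zip(x, y)) / len(x)


def exact_retrieve(case_base: list[dict], query: tuple[int, ...]) -> list[dict]:
    """Database-style retrieval: only cases whose problem equals the query."""
    return [case for case in case_base if case["problem"] == query]


def similarity_retrieve(case_base: list[dict], query: tuple[int, ...], sim) -> dict:
    """Similarity-based retrieval: the case whose problem is most similar to the query."""
    return max(case_base, key=lambda case: sim(query, case["problem"]))


case_base = [
    {"problem": (1, 0, 1, 1), "solution": "solution A"},
    {"problem": (0, 0, 0, 1), "solution": "solution B"},
]
query = (1, 0, 1, 0)
print(exact_retrieve(case_base, query))                                # []
print(similarity_retrieve(case_base, query, hamming_sim)["solution"])  # solution A
```

The query agrees with case A on three of four attributes and with case B on only one, so A is retrieved even though no stored problem matches exactly.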

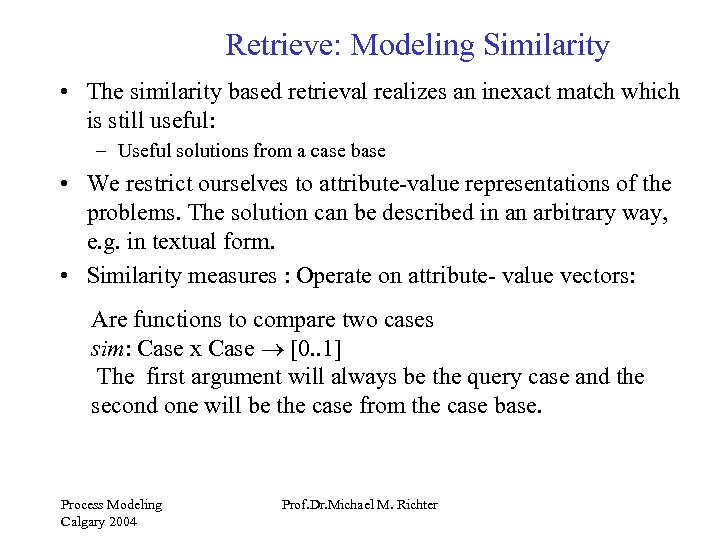

Retrieve: Modeling Similarity
• Similarity-based retrieval realizes an inexact match that is still useful:
  – it yields useful solutions from a case base.
• We restrict ourselves to attribute-value representations of the problems. The solution can be described in an arbitrary way, e.g., in textual form.
• Similarity measures operate on attribute-value vectors. They are functions that compare two cases:
  $\mathrm{sim}\colon \mathit{Case} \times \mathit{Case} \to [0, 1]$
  The first argument is always the query case and the second one is the case from the case base.

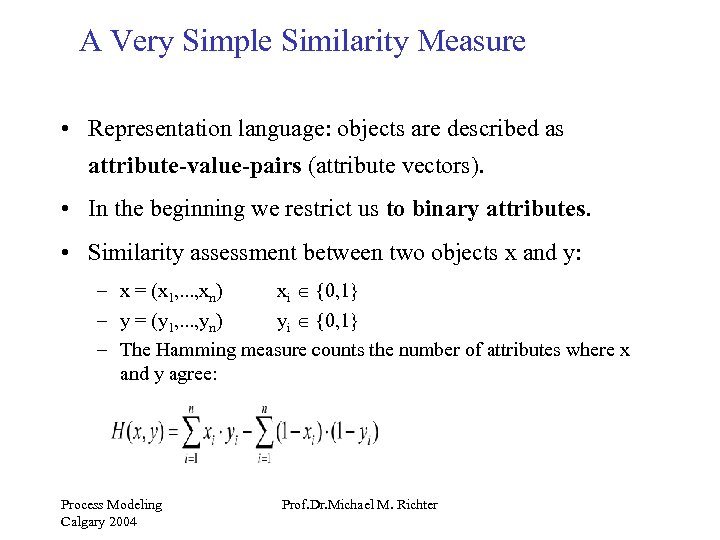

A Very Simple Similarity Measure
• Representation language: objects are described as attribute-value pairs (attribute vectors).
• To begin with, we restrict ourselves to binary attributes.
• Similarity assessment between two objects x and y:
  – $x = (x_1, \dots, x_n)$, $x_i \in \{0, 1\}$
  – $y = (y_1, \dots, y_n)$, $y_i \in \{0, 1\}$
  – The Hamming measure counts the number of attributes on which x and y agree:
    $H(x, y) = n - \sum_{i=1}^{n} |x_i - y_i|$
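A direct Python rendering of the Hamming measure, plus a normalized variant divided by n so that the result lies in [0, 1] (the normalization is added for illustration and is not taken from the slide):

```python
def hamming_similarity(x: list[int], y: list[int]) -> int:
    """Number of attributes on which two binary vectors agree: n - sum |x_i - y_i|."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return len(x) - sum(abs(xi - yi) for xi, yi in zip(x, y))


def normalized_hamming_similarity(x: list[int], y: list[int]) -> float:
    """The same count divided by n, so the result lies in [0, 1]."""
    return hamming_similarity(x, y) / len(x)


x = [1, 0, 1, 1, 0]
y = [1, 1, 1, 0, 0]
print(hamming_similarity(x, y))             # 3
print(normalized_hamming_similarity(x, y))  # 0.6
```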

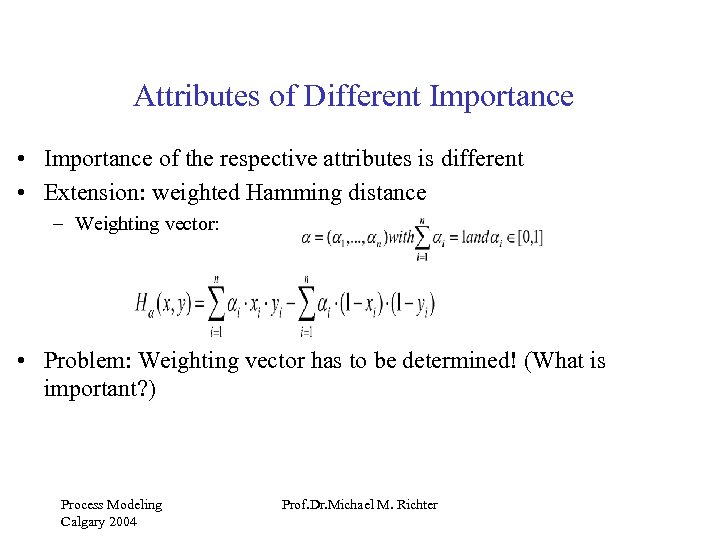

Attributes of Different Importance
• The attributes differ in importance.
• Extension: weighted Hamming distance
  – Weighting vector: $w = (w_1, \dots, w_n)$
• Problem: the weighting vector has to be determined! (What is important?)
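A sketch of a weighted Hamming similarity, assuming non-negative weights that sum to 1 and the form $\sum_i w_i (1 - |x_i - y_i|)$, i.e., the sum of the weights of the agreeing attributes; the weights in the example are made up:

```python
import math


def weighted_hamming_similarity(x: list[int], y: list[int], w: list[float]) -> float:
    """Sum of the weights of the attributes on which x and y agree.

    Assumes non-negative weights that sum to 1, so the result lies in [0, 1].
    """
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    if any(wi < 0 for wi in w) or not math.isclose(sum(w), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    return sum(wi * (1 - abs(xi - yi)) for xi, yi, wi in zip(x, y, w))


x = [1, 0, 1, 1]
y = [1, 1, 1, 0]
w = [0.4, 0.1, 0.3, 0.2]   # hypothetical importance of the four attributes
print(weighted_hamming_similarity(x, y, w))
```

The vectors agree on the first and third attributes, so the result is 0.4 + 0.3, about 0.7. With equal weights 1/n this reduces to the normalized Hamming measure; the open problem from the slide is how to choose w.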

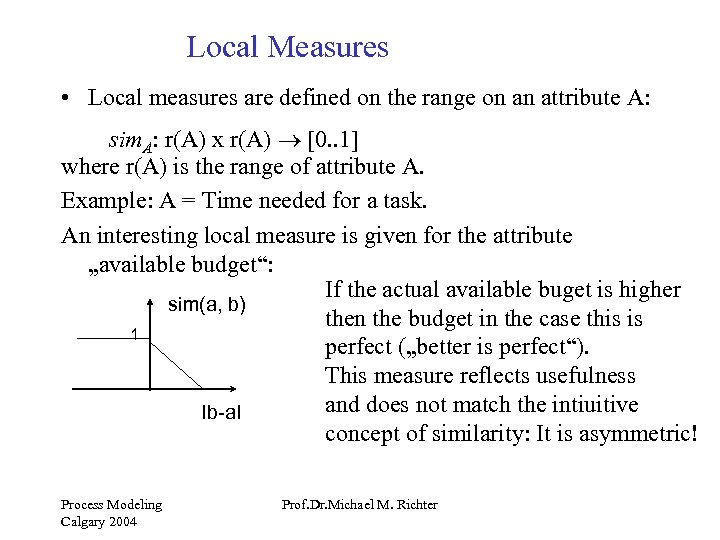

Local Measures
• Local measures are defined on the range of an attribute A:
  $\mathrm{sim}_A\colon r(A) \times r(A) \to [0, 1]$, where $r(A)$ is the range of attribute A.
  Example: A = time needed for a task.
• An interesting local measure is given for the attribute "available budget": if the actually available budget a is higher than the budget b in the case, this is perfect, i.e., sim(a, b) = 1 ("better is perfect"). (Plot: sim(a, b) against the difference between the budgets b and a.)
• This measure reflects usefulness and does not match the intuitive concept of similarity: it is asymmetric!
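A sketch of the asymmetric budget measure, with the query's available budget as the first argument and the case's budget as the second. The slide only fixes the value 1 when the available budget suffices; the linear decrease over a hypothetical `tolerance` is an assumption:

```python
def budget_similarity(query_budget: float, case_budget: float, tolerance: float) -> float:
    """Asymmetric local measure for "available budget" ("better is perfect").

    query_budget: budget actually available now (first argument: the query)
    case_budget:  budget the stored case needed (second argument: the case)
    tolerance:    hypothetical shortfall at which the similarity reaches 0
    """
    if query_budget >= case_budget:
        return 1.0                                  # enough money: perfect
    shortfall = case_budget - query_budget
    return max(0.0, 1.0 - shortfall / tolerance)    # assumed linear decrease


print(budget_similarity(30_000, 20_000, tolerance=10_000))  # 1.0
print(budget_similarity(20_000, 30_000, tolerance=10_000))  # 0.0
print(budget_similarity(25_000, 30_000, tolerance=10_000))  # 0.5
```

Swapping the arguments changes the result (1.0 versus 0.0), which is exactly the asymmetry noted on the slide.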

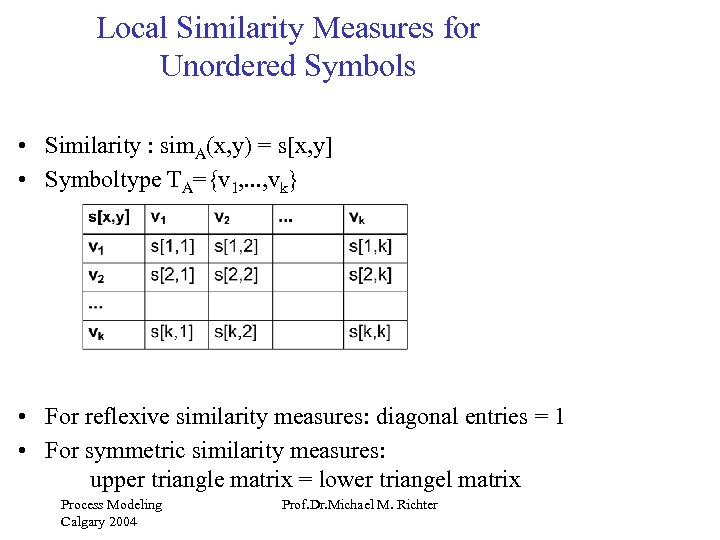

Local Similarity Measures for Unordered Symbols
• Symbol type $T_A = \{v_1, \dots, v_k\}$
• Similarity is given by a table s: $\mathrm{sim}_A(x, y) = s[x, y]$
• For reflexive similarity measures: all diagonal entries are 1.
• For symmetric similarity measures: the upper triangle of the matrix equals the lower triangle, i.e., $s[x, y] = s[y, x]$.
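A sketch of a table-based measure over an unordered symbol type, with checks for the two properties named above; the symbols v1 to v3 and the table entries are hypothetical:

```python
SymbolTable = dict[tuple[str, str], float]


def table_sim(s: SymbolTable, x: str, y: str) -> float:
    """sim_A(x, y) = s[x, y]: look the value up in the similarity table."""
    return s[(x, y)]


def is_reflexive(s: SymbolTable, values: list[str]) -> bool:
    """All diagonal entries s[v, v] are 1."""
    return all(s[(v, v)] == 1.0 for v in values)


def is_symmetric(s: SymbolTable, values: list[str]) -> bool:
    """Upper triangle equals lower triangle: s[x, y] == s[y, x] for all pairs."""
    return all(s[(x, y)] == s[(y, x)] for x in values for y in values)


values = ["v1", "v2", "v3"]
s = {
    ("v1", "v1"): 1.0, ("v1", "v2"): 0.5, ("v1", "v3"): 0.0,
    ("v2", "v1"): 0.5, ("v2", "v2"): 1.0, ("v2", "v3"): 0.3,
    ("v3", "v1"): 0.0, ("v3", "v2"): 0.6, ("v3", "v3"): 1.0,
}
print(is_reflexive(s, values), is_symmetric(s, values))  # True False
```

This table is reflexive but not symmetric, since s[v2, v3] = 0.3 while s[v3, v2] = 0.6; such asymmetric tables are allowed when usefulness, not intuitive similarity, is being modeled.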

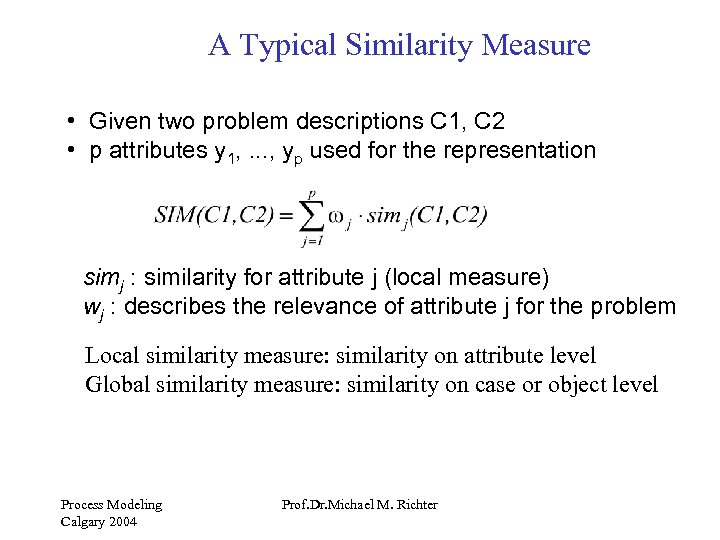

A Typical Similarity Measure
• Given two problem descriptions $C_1, C_2$
• p attributes $y_1, \dots, y_p$ are used for the representation
  – $\mathrm{sim}_j$: similarity for attribute j (local measure)
  – $w_j$: describes the relevance of attribute j for the problem
• Local similarity measure: similarity on the attribute level
• Global similarity measure: similarity on the case or object level
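The slide's formula is not preserved. A common form of such a measure, assumed here, is the weighted average $\mathrm{sim}(C_1, C_2) = \sum_j w_j\,\mathrm{sim}_j(C_{1j}, C_{2j}) / \sum_j w_j$. A Python sketch with hypothetical attributes, local measures, and weights:

```python
from typing import Callable

LocalMeasure = Callable[[object, object], float]


def global_sim(c1: dict, c2: dict,
               local: dict[str, LocalMeasure],
               weights: dict[str, float]) -> float:
    """Weighted average of the local similarities sim_j over all weighted attributes."""
    total_weight = sum(weights.values())
    weighted = sum(w * local[attr](c1[attr], c2[attr]) for attr, w in weights.items())
    return weighted / total_weight


local = {
    "duration": lambda x, y: 1 - abs(x - y) / 10,    # assumes durations in 0..10 weeks
    "method": lambda x, y: 1.0 if x == y else 0.0,   # exact match on a symbol
}
weights = {"duration": 1.0, "method": 3.0}
query = {"duration": 4, "method": "interview"}
case = {"duration": 6, "method": "interview"}
print(global_sim(query, case, local, weights))
```

Here the query and case differ only in duration, so the result is (1.0 · 0.8 + 3.0 · 1.0) / 4.0, about 0.95. The local measures capture attribute-level knowledge, the weights capture relevance.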

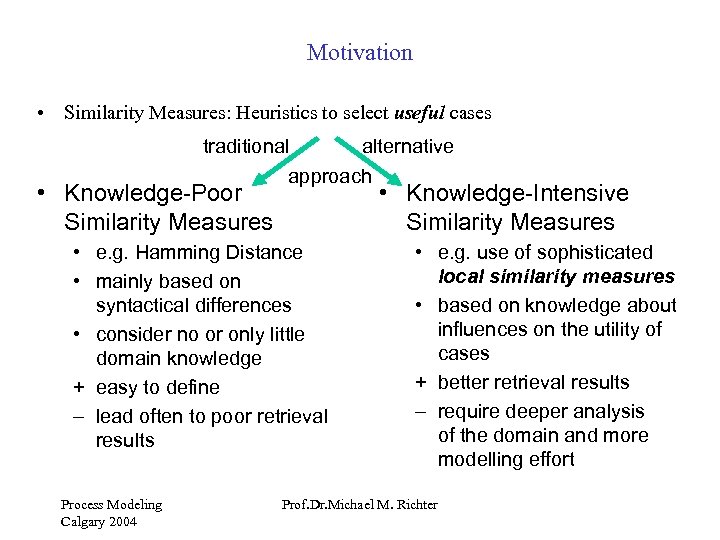

Motivation
• Similarity measures: heuristics to select useful cases
Traditional approach: knowledge-poor similarity measures
• e.g., Hamming distance
• mainly based on syntactical differences
• consider no or only little domain knowledge
+ easy to define
– often lead to poor retrieval results
Alternative approach: knowledge-intensive similarity measures
• e.g., use of sophisticated local similarity measures
• based on knowledge about influences on the utility of cases
+ better retrieval results
– require deeper analysis of the domain and more modelling effort

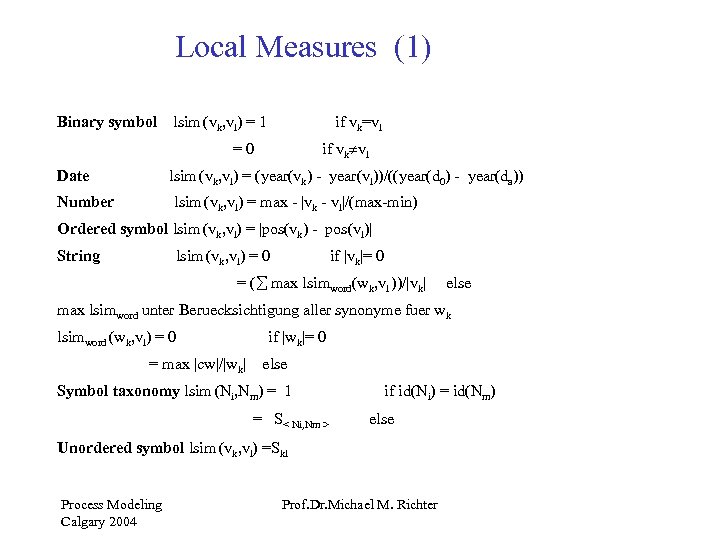

Local Measures (1)
• Binary symbol: $\mathrm{lsim}(v_k, v_l) = 1$ if $v_k = v_l$, and $0$ if $v_k \neq v_l$
• Date: $\mathrm{lsim}(v_k, v_l) = (\mathrm{year}(v_k) - \mathrm{year}(v_l)) / (\mathrm{year}(d_0) - \mathrm{year}(d_a))$
• Number: $\mathrm{lsim}(v_k, v_l) = 1 - |v_k - v_l| / (\max - \min)$
• Ordered symbol: $\mathrm{lsim}(v_k, v_l) = |\mathrm{pos}(v_k) - \mathrm{pos}(v_l)|$
• String: $\mathrm{lsim}(v_k, v_l) = 0$ if $|v_k| = 0$, else $\mathrm{lsim}(v_k, v_l) = \big(\sum_{w_k \in v_k} \max \mathrm{lsim}_{word}(w_k, v_l)\big) / |v_k|$, where the maximum takes all synonyms of $w_k$ into account;
  $\mathrm{lsim}_{word}(w_k, v_l) = 0$ if $|w_k| = 0$, else $\max |c_w| / |w_k|$
• Symbol taxonomy: $\mathrm{lsim}(N_i, N_m) = 1$ if $\mathrm{id}(N_i) = \mathrm{id}(N_m)$, else $S\langle N_i, N_m\rangle$
• Unordered symbol: $\mathrm{lsim}(v_k, v_l) = S_{kl}$
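A sketch of three of the local measures listed above. The ordered-symbol measure on the slide gives only the position difference; turning it into a similarity by normalizing with the largest possible difference is an assumption, as is the sample value order:

```python
def binary_symbol_sim(vk: object, vl: object) -> float:
    """1 if the two symbols are equal, 0 otherwise."""
    return 1.0 if vk == vl else 0.0


def number_sim(vk: float, vl: float, lo: float, hi: float) -> float:
    """1 - |vk - vl| / (max - min) for values from the range [lo, hi]."""
    return 1.0 - abs(vk - vl) / (hi - lo)


def ordered_symbol_sim(vk: str, vl: str, order: list[str]) -> float:
    """Position difference |pos(vk) - pos(vl)|, normalized by the largest
    possible difference and turned into a similarity (normalization assumed)."""
    distance = abs(order.index(vk) - order.index(vl))
    return 1.0 - distance / (len(order) - 1)


levels = ["low", "medium", "high"]                  # hypothetical ordered symbol type
print(ordered_symbol_sim("low", "high", levels))    # 0.0
print(number_sim(2, 4, lo=0, hi=10))                # 0.8
```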

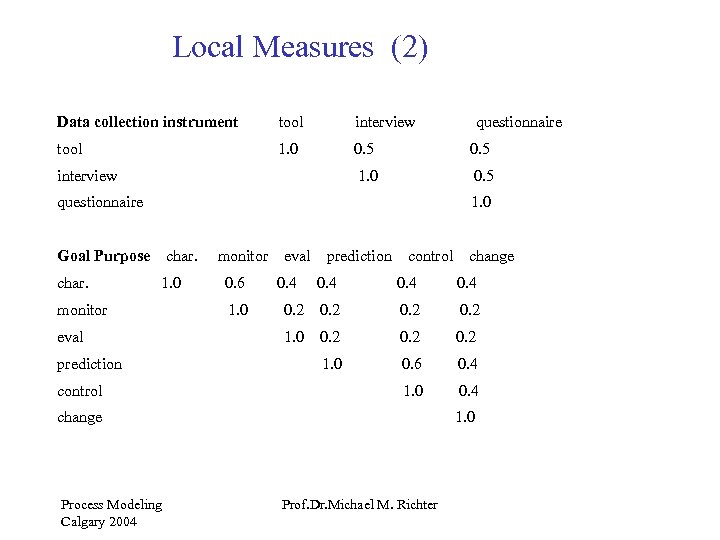

Local Measures (2)
(Figure: similarity tables for the unordered symbol attributes "Data collection instrument" (values: tool, interview, questionnaire) and "Goal purpose" (values: char., monitor, eval, prediction, control, change); all diagonal entries are 1.0.)

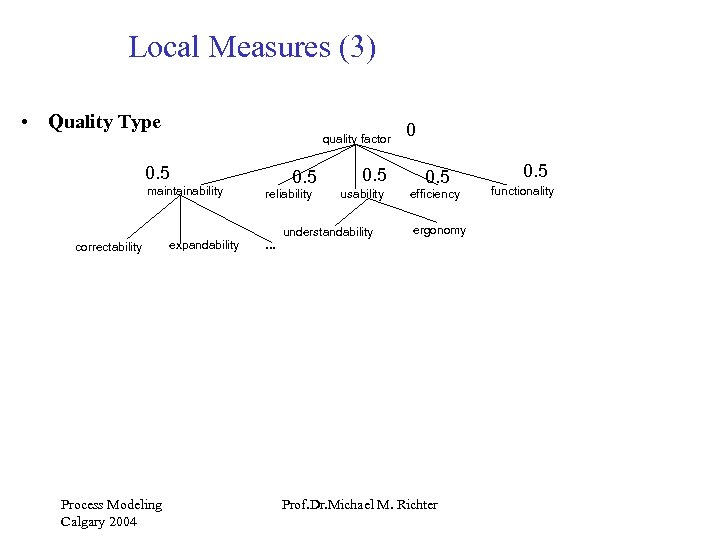

Local Measures (3)
(Figure: taxonomy for the attribute "Quality Type" with root "quality factor"; its nodes include maintainability, reliability, usability, efficiency, functionality, understandability, correctability, expandability, and ergonomy, and inner nodes are annotated with similarity values such as 0.5 and 0.)
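A sketch of a taxonomy measure in the sense of lsim(N_i, N_m) for symbol taxonomies, assuming that S⟨N_i, N_m⟩ is the similarity value stored at the deepest common ancestor of the two nodes; the taxonomy, node names, and values are hypothetical:

```python
def ancestors(node: str, parent: dict[str, str]) -> list[str]:
    """Path from a node up to the root, starting with the node itself."""
    path = [node]
    while node in parent:
        node = parent[node]
        path.append(node)
    return path


def taxonomy_sim(ni: str, nm: str, parent: dict[str, str],
                 value: dict[str, float]) -> float:
    """1 for identical nodes, else the value S stored at the deepest common ancestor."""
    if ni == nm:
        return 1.0
    common = set(ancestors(nm, parent))
    for node in ancestors(ni, parent):   # walks upward, so the first hit is the deepest
        if node in common:
            return value[node]
    raise ValueError("nodes do not belong to the same taxonomy")


parent = {"group A": "root", "group B": "root",
          "a1": "group A", "a2": "group A", "b1": "group B"}
value = {"root": 0.0, "group A": 0.5, "group B": 0.5}
print(taxonomy_sim("a1", "a2", parent, value))  # 0.5
print(taxonomy_sim("a1", "b1", parent, value))  # 0.0
```

Values that grow toward the leaves make siblings deep in the taxonomy more similar than nodes that only share the root.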

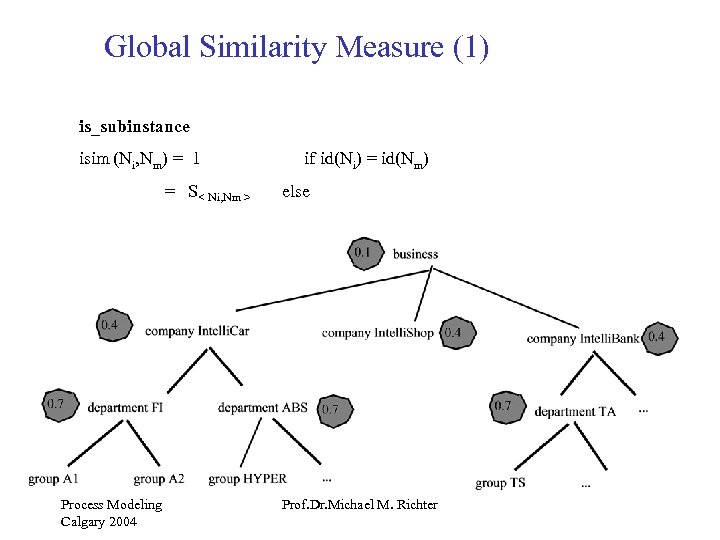

Global Similarity Measure (1)
is_subinstance: $\mathrm{isim}(N_i, N_m) = 1$ if $\mathrm{id}(N_i) = \mathrm{id}(N_m)$, else $S\langle N_i, N_m\rangle$

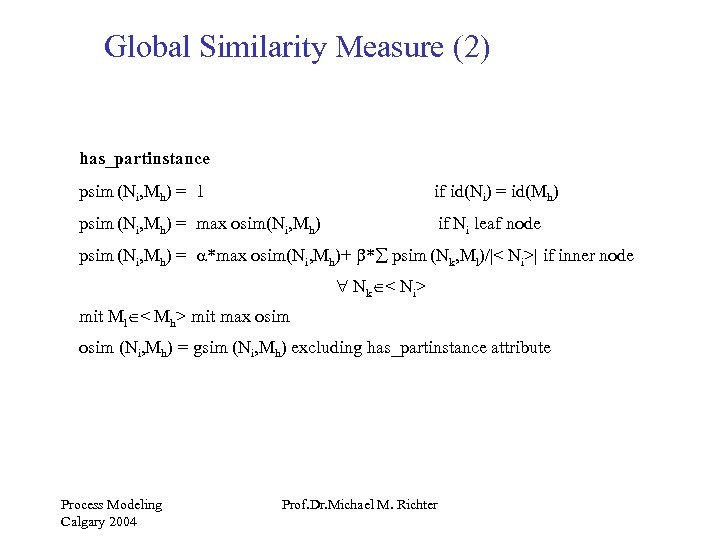

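Global Similarity Measure (2)
has_partinstance:
• $\mathrm{psim}(N_i, M_h) = 1$ if $\mathrm{id}(N_i) = \mathrm{id}(M_h)$
• $\mathrm{psim}(N_i, M_h) = \mathrm{osim}(N_i, M_h)$ if $N_i$ is a leaf node
• $\mathrm{psim}(N_i, M_h) = \alpha \cdot \mathrm{osim}(N_i, M_h) + \beta \cdot \frac{1}{|\langle N_i\rangle|} \sum_{N_k \in \langle N_i\rangle} \max_{M_l \in \langle M_h\rangle} \mathrm{psim}(N_k, M_l)$ if $N_i$ is an inner node
with $\mathrm{osim}(N_i, M_h) = \mathrm{gsim}(N_i, M_h)$ excluding the has_partinstance attribute, where $\langle N\rangle$ denotes the set of parts of N and $\alpha, \beta$ are weights.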
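A recursive sketch of psim, assuming the reading above: α and β are weights (0.5 each by default), ⟨N⟩ is the list of parts of a node, and a part of N_i without any counterpart contributes 0. The object similarity `label_osim` (exact label match) is a hypothetical stand-in for osim:

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Node:
    node_id: str
    label: str
    parts: list["Node"] = field(default_factory=list)   # has_partinstance children


def psim(n: Node, m: Node, osim: Callable[[Node, Node], float],
         alpha: float = 0.5, beta: float = 0.5) -> float:
    """Part-of similarity of node n (query side) to node m (case side)."""
    if n.node_id == m.node_id:
        return 1.0
    if not n.parts:                                   # leaf node
        return osim(n, m)
    # match every part of n with its most similar part of m (0 if m has no parts)
    best = [max((psim(nk, ml, osim, alpha, beta) for ml in m.parts), default=0.0)
            for nk in n.parts]
    return alpha * osim(n, m) + beta * sum(best) / len(n.parts)


def label_osim(n: Node, m: Node) -> float:
    """Hypothetical object similarity ignoring parts: 1 for equal labels, else 0."""
    return 1.0 if n.label == m.label else 0.0


n9 = Node("N9", "test", [Node("N10", "integration testing"),
                         Node("N11", "system testing"),
                         Node("N12", "acceptance testing")])
m10 = Node("M10", "testing", [Node("M11", "system testing"),
                              Node("M12", "acceptance testing")])
print(psim(n9, m10, label_osim))
```

Applied to N9 (test) and M10 (testing) from the example that follows, two of the three parts of N9 find an exact counterpart, so the result is 0.5 · 0 + 0.5 · 2/3, about 0.33.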

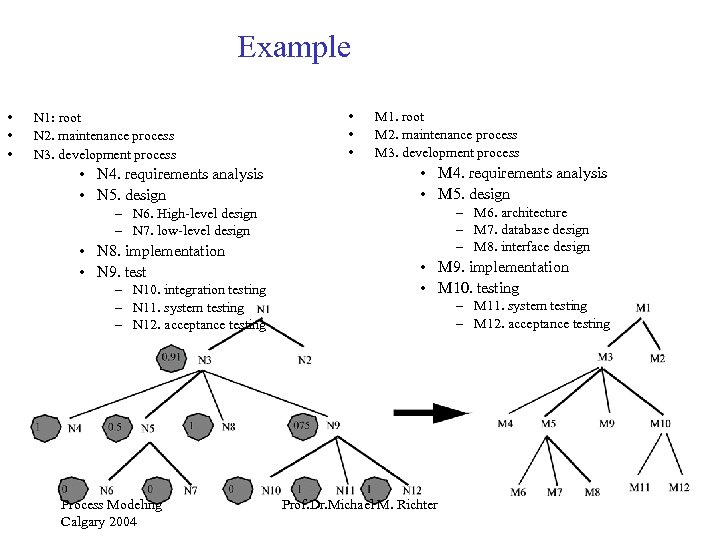

Example
Process model N:
• N1: root
• N2: maintenance process
• N3: development process
  • N4: requirements analysis
  • N5: design
    – N6: high-level design
    – N7: low-level design
  • N8: implementation
  • N9: test
    – N10: integration testing
    – N11: system testing
    – N12: acceptance testing
Process model M:
• M1: root
• M2: maintenance process
• M3: development process
  • M4: requirements analysis
  • M5: design
    – M6: architecture
    – M7: database design
    – M8: interface design
  • M9: implementation
  • M10: testing
    – M11: system testing
    – M12: acceptance testing
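
The recursive part-instance similarity can be tried out on these two trees. This Python sketch rests on three assumptions. Nodes are linked via has_partinstance to the nodes listed beneath them. `osim` is a hypothetical stand-in that only compares node names exactly. An inner node's own similarity and the mean best-match similarity of its parts are averaged with equal weight.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    ident: str
    name: str
    parts: List["Node"] = field(default_factory=list)  # has_partinstance links


def node(ident: str, name: str, *parts: Node) -> Node:
    return Node(ident, name, list(parts))


def osim(n: Node, m: Node) -> float:
    # Hypothetical stand-in for gsim without has_partinstance:
    # these nodes carry only a name, compared for exact equality.
    return 1.0 if n.name == m.name else 0.0


def psim(n: Node, m: Node) -> float:
    if n.ident == m.ident:
        return 1.0
    if not n.parts:  # leaf node
        return osim(n, m)
    # For each part of n, its best-matching part of m (0.0 if m has no parts).
    best = [max((psim(nk, ml) for ml in m.parts), default=0.0) for nk in n.parts]
    # Assumption: own similarity and mean part similarity weigh equally.
    return 0.5 * (osim(n, m) + sum(best) / len(n.parts))


n1 = node("N1", "root",
          node("N2", "maintenance process"),
          node("N3", "development process",
               node("N4", "requirements analysis"),
               node("N5", "design",
                    node("N6", "high-level design"),
                    node("N7", "low-level design")),
               node("N8", "implementation"),
               node("N9", "test",
                    node("N10", "integration testing"),
                    node("N11", "system testing"),
                    node("N12", "acceptance testing"))))

m1 = node("M1", "root",
          node("M2", "maintenance process"),
          node("M3", "development process",
               node("M4", "requirements analysis"),
               node("M5", "design",
                    node("M6", "architecture"),
                    node("M7", "database design"),
                    node("M8", "interface design")),
               node("M9", "implementation"),
               node("M10", "testing",
                    node("M11", "system testing"),
                    node("M12", "acceptance testing"))))

print(round(psim(n1, m1), 3))  # 0.964
```

Under these assumptions, the design subtrees N5/M5 score only 0.5 because none of their parts share a name. The test subtrees N9/M10 score 1/3 because only two of N9's three parts find an exact match.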

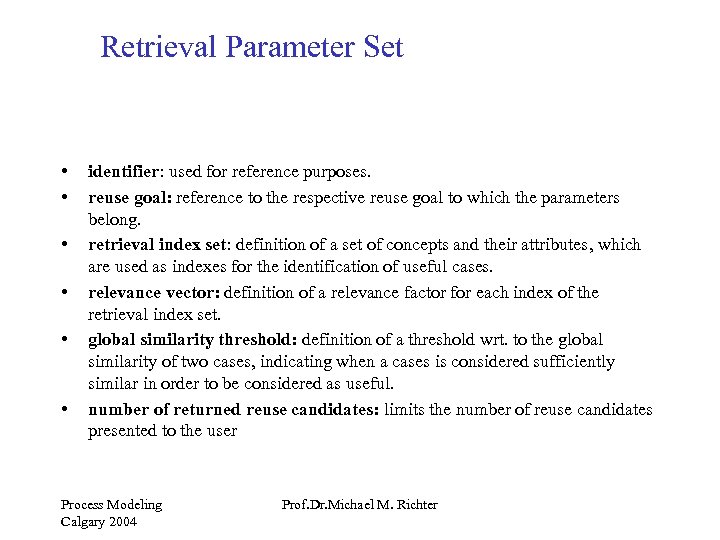

Retrieval Parameter Set
• identifier: used for reference purposes.
• reuse goal: reference to the reuse goal to which the parameters belong.
• retrieval index set: a set of concepts and their attributes that are used as indexes for identifying useful cases.
• relevance vector: a relevance factor for each index of the retrieval index set.
• global similarity threshold: a threshold on the global similarity of two cases, indicating when a case is considered sufficiently similar to be useful.
• number of returned reuse candidates: limits the number of reuse candidates presented to the user.
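
The parameter set maps naturally onto a record plus a retrieval function. This minimal Python sketch assumes that the global similarity is the relevance-weighted average of the local similarities over the retrieval index set. That is a common choice, not necessarily the one used in the original system. All attribute names and values in the usage lines are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Case = Dict[str, str]
LocalSim = Callable[[str, str], float]


@dataclass
class RetrievalParameterSet:
    identifier: str
    reuse_goal: str
    relevance: Dict[str, float]  # retrieval index set -> relevance factor
    threshold: float             # global similarity threshold
    max_candidates: int          # number of returned reuse candidates


def global_sim(query: Case, case: Case, params: RetrievalParameterSet,
               local: Dict[str, LocalSim]) -> float:
    """Relevance-weighted average of the local similarities (assumption)."""
    total = sum(params.relevance.values())
    score = sum(w * local[a](query[a], case[a]) for a, w in params.relevance.items())
    return score / total if total else 0.0


def retrieve(query: Case, cases: Dict[str, Case], params: RetrievalParameterSet,
             local: Dict[str, LocalSim]) -> List[Tuple[str, float]]:
    scored = [(cid, global_sim(query, c, params, local)) for cid, c in cases.items()]
    useful = [(cid, s) for cid, s in scored if s >= params.threshold]
    useful.sort(key=lambda pair: pair[1], reverse=True)  # stable for ties
    return useful[:params.max_candidates]


def exact_match(a: str, b: str) -> float:
    return 1.0 if a == b else 0.0


params = RetrievalParameterSet("rp-1", "reuse of GQM plans",
                               {"purpose": 2.0, "instrument": 1.0}, 0.5, 3)
cases = {
    "c1": {"purpose": "monitor", "instrument": "interview"},
    "c2": {"purpose": "monitor", "instrument": "questionnaire"},
    "c3": {"purpose": "eval", "instrument": "interview"},
    "c4": {"purpose": "monitor", "instrument": "interview"},
}
hits = retrieve({"purpose": "monitor", "instrument": "interview"}, cases, params,
                {"purpose": exact_match, "instrument": exact_match})
```

Here c3 reaches only 1/3 and falls below the threshold. The remaining cases are returned in descending order of similarity, limited to three candidates.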

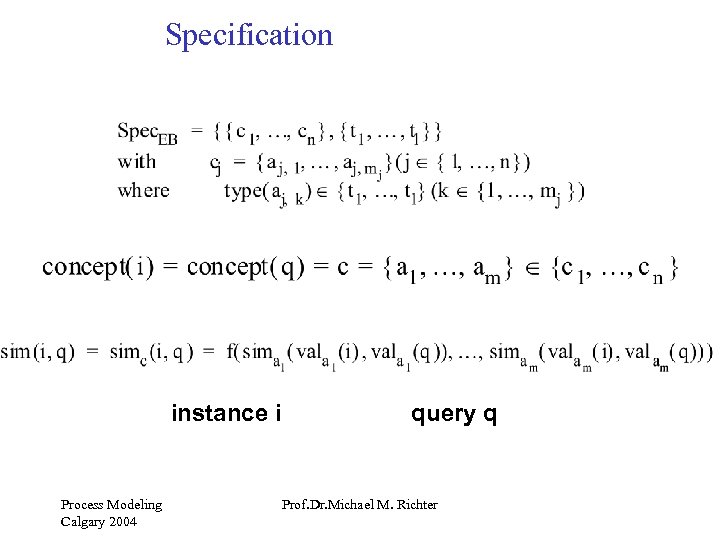

Specification (figure): instance i, query q

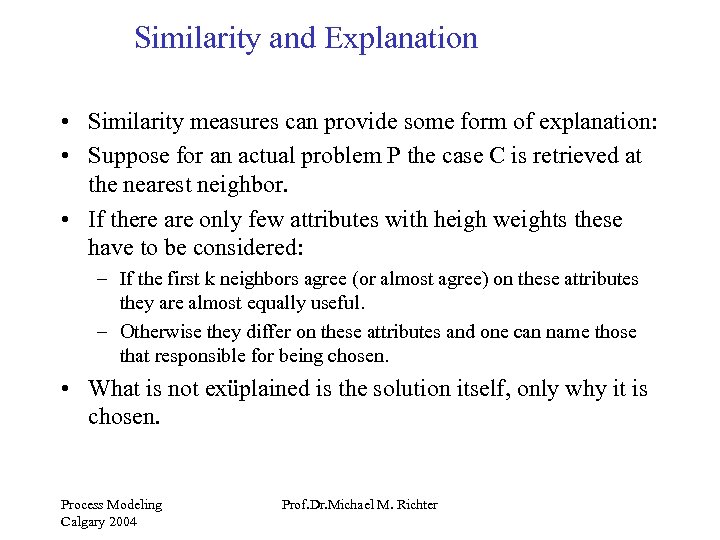

Similarity and Explanation
• Similarity measures can provide a certain form of explanation.
• Suppose that for an actual problem P the case C is retrieved as the nearest neighbor.
• If only a few attributes have high weights, these are the ones to consider:
  – If the first k neighbors agree (or almost agree) on these attributes, they are almost equally useful.
  – Otherwise, they differ on these attributes, and one can name the attributes responsible for C being chosen.
• What is not explained is the solution itself, only why it was chosen.
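
The check described above fits in a few lines of Python. This sketch uses a cut-off `min_weight` to define a "high" weight; that parameter is a hypothetical choice. The sketch only tests exact agreement. Handling "almost agree" would need a tolerance or the local similarity measures.

```python
from typing import Dict, List


def explain(weights: Dict[str, float], neighbors: List[Dict[str, str]],
            min_weight: float) -> Dict[str, List[str]]:
    """Split the high-weight attributes into those on which the first k
    neighbors agree and those on which they differ."""
    important = [a for a, w in weights.items() if w >= min_weight]
    agree = [a for a in important if len({n[a] for n in neighbors}) == 1]
    differ = [a for a in important if a not in agree]
    return {"agree": agree, "differ": differ}


weights = {"purpose": 0.6, "instrument": 0.3, "size": 0.1}
neighbors = [  # first k = 2 neighbors, nearest first (hypothetical cases)
    {"purpose": "monitor", "instrument": "interview", "size": "small"},
    {"purpose": "monitor", "instrument": "questionnaire", "size": "large"},
]
result = explain(weights, neighbors, min_weight=0.3)
print(result)  # {'agree': ['purpose'], 'differ': ['instrument']}
```

If `differ` comes back empty, the first k neighbors are about equally useful. Otherwise, the listed attributes are the ones to name when explaining why the nearest case was chosen.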

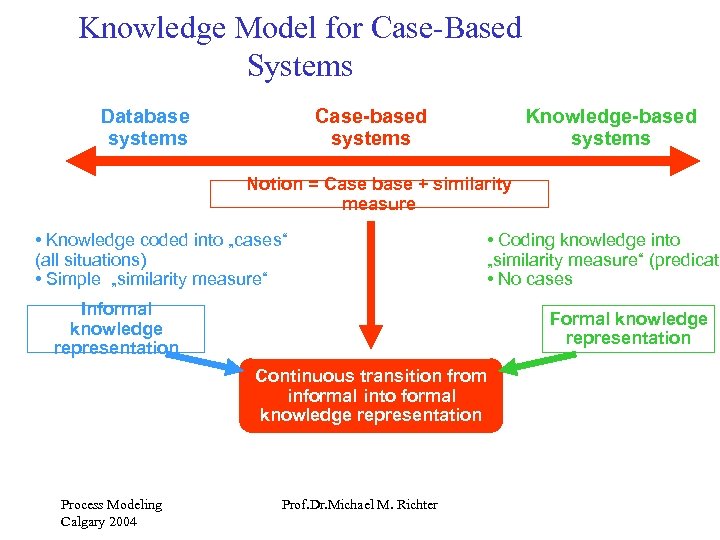

Knowledge Model for Case-Based Systems
Spectrum: database systems, case-based systems, knowledge-based systems
• Notion: case-based system = case base + similarity measure
• Towards database systems: knowledge coded into "cases" (all situations), with only a simple "similarity measure"
• Towards knowledge-based systems: knowledge coded into the "similarity measure" (a predicate), with no cases
• Continuous transition from informal knowledge representation to formal knowledge representation

PART 3 Experience Factory and Experience Management

Experience Factory and Information Sources
• An experience factory contains several information sources.
• These sources are, however, more than just databases.
• They connect the objects the information is about with the possible contexts in which these objects can or should be used:
  – Which method? Which tool? Which agent? Etc.
• Therefore, the experience factory gives recommendations.
• Access to the factory is usually similarity-based.

Experience management, experience-based information systems and case-based systems
• From a technical point of view, the term "case-based system" denotes a knowledge-based software system that employs Case-Based Reasoning technology, i.e., a software system that processes case-specific knowledge in a (largely) automated way.
• From an organizational point of view, in this lecture the term "case-based (reasoning) system" denotes a socio-technical system that processes human-based and automated case knowledge in a combined manner. Such systems are called Experience-based Information Systems (EbIS).
• Experience management is the term used for the management of experience-based information systems as well as all related processes.

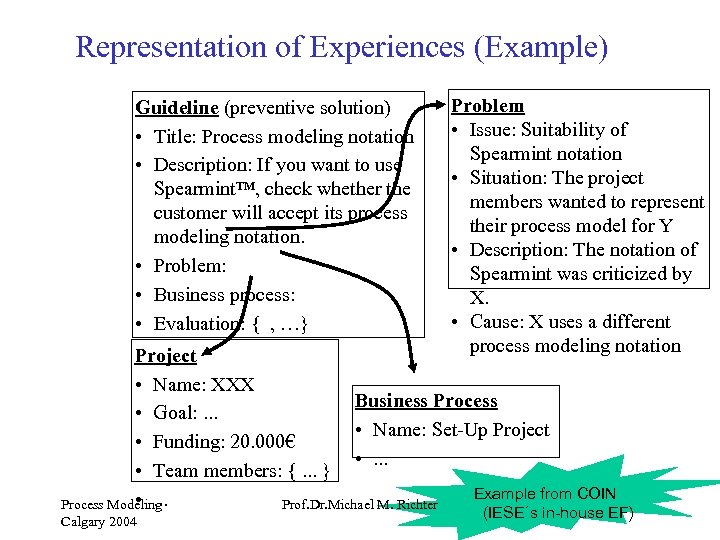

Representation of Experiences (Example from COIN, IESE's in-house EF)
Guideline (preventive solution)
• Title: Process modeling notation
• Description: If you want to use Spearmint™, check whether the customer will accept its process modeling notation.
• Problem: (link to Problem)
• Business process: (link to Business Process)
• Evaluation: {…}
Problem
• Issue: Suitability of Spearmint notation
• Situation: The project members wanted to represent their process model for Y
• Description: The notation of Spearmint was criticized by X.
• Cause: X uses a different process modeling notation
Project
• Name: XXX
• Goal: …
• Funding: €20,000
• …
• Team members: {…}
Business Process
• Name: Set-Up Project
• …
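
Such a guideline is a small network of typed records connected by links. The Python dataclasses below sketch that structure using the example's values. The class and field names, and the link from the problem to its project, are assumptions about how the records on the slide are connected.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Project:
    name: str
    goal: Optional[str] = None
    funding_eur: Optional[int] = None
    team_members: List[str] = field(default_factory=list)


@dataclass
class BusinessProcess:
    name: str


@dataclass
class Problem:
    issue: str
    situation: str
    description: str
    cause: str
    project: Optional[Project] = None  # assumed link to the project


@dataclass
class Guideline:
    """Preventive solution, linked to the problem it helps to avoid."""
    title: str
    description: str
    problem: Problem
    business_process: BusinessProcess
    evaluations: List[str] = field(default_factory=list)


guideline = Guideline(
    title="Process modeling notation",
    description=("If you want to use Spearmint, check whether the customer "
                 "will accept its process modeling notation."),
    problem=Problem(
        issue="Suitability of Spearmint notation",
        situation="The project members wanted to represent their process model for Y",
        description="The notation of Spearmint was criticized by X.",
        cause="X uses a different process modeling notation",
        project=Project(name="XXX", funding_eur=20_000),
    ),
    business_process=BusinessProcess(name="Set-Up Project"),
)
```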

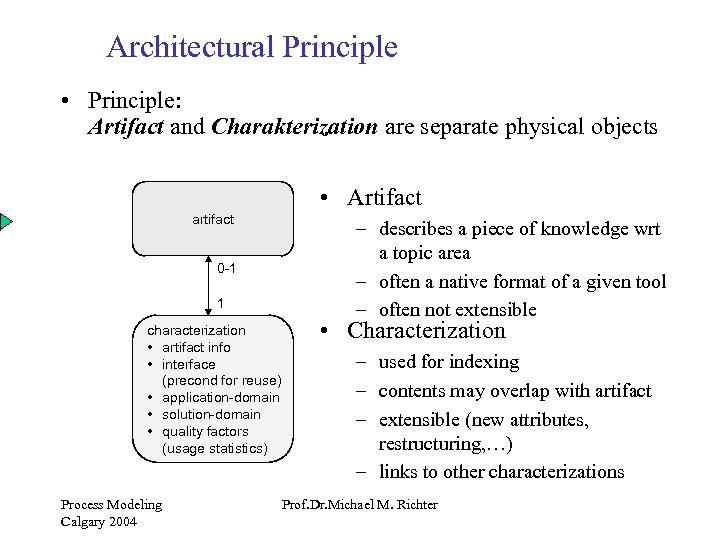

Architectural Principle
• Principle: artifact and characterization are separate physical objects (association artifact 0..1 to 1 characterization: each artifact has exactly one characterization, and each characterization describes at most one artifact).
• Characterization attributes: artifact info; interface (preconditions for reuse); application domain; solution domain; quality factors (usage statistics)
• Artifact
  – describes a piece of knowledge with respect to a topic area
  – often in the native format of a given tool
  – often not extensible
• Characterization
  – used for indexing
  – contents may overlap with the artifact
  – extensible (new attributes, restructuring, …)
  – links to other characterizations
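
The separation can be sketched as two record types. An opaque artifact sits next to an extensible characterization, and only the characterization is searched. In this Python sketch, the field names, the URI and the format string are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Artifact:
    """The piece of knowledge itself, stored in a tool's native format."""
    uri: str            # hypothetical storage location
    native_format: str


@dataclass
class Characterization:
    """Separate, extensible index record; describes at most one artifact."""
    artifact: Optional[Artifact]  # 0..1 artifact per characterization
    attributes: Dict[str, object] = field(default_factory=dict)
    links: List["Characterization"] = field(default_factory=list)


def find(base: List[Characterization], **criteria: object) -> List[Characterization]:
    # Search looks only at characterizations, never inside the artifacts.
    return [c for c in base
            if all(c.attributes.get(k) == v for k, v in criteria.items())]


c = Characterization(Artifact("file:///models/set-up.spm", "Spearmint"),
                     {"application_domain": "process modeling"})
c.attributes["usage_count"] = 3  # new attribute; the artifact is untouched
base = [c, Characterization(None, {"application_domain": "testing"})]
```

Because the artifact is frozen and opaque, extending or restructuring the index never requires converting files from their native tool format.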

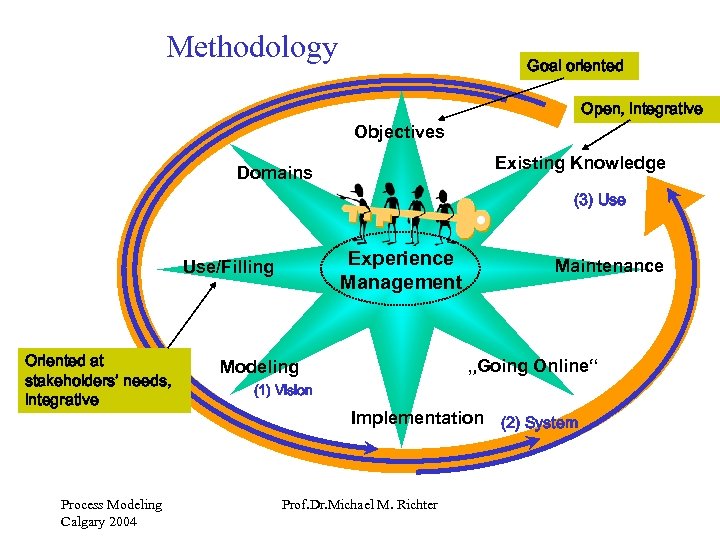

Methodology (diagram)
• Stages: (1) Vision, (2) System, (3) Use
• Activities: modeling, implementation, "going online", use/filling, maintenance
• Starting points: objectives and existing knowledge domains
• Characteristics: goal-oriented; open, integrative; oriented at stakeholders' needs, integrative
• Overall frame: experience management

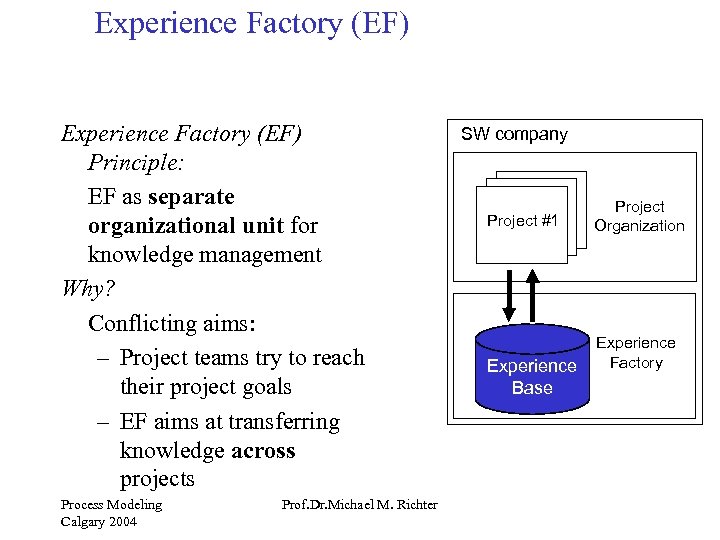

Experience Factory (EF)
• Principle: the EF as a separate organizational unit for knowledge management
• Why? Conflicting aims:
  – Project teams try to reach their project goals.
  – The EF aims at transferring knowledge across projects.
(Diagram: a software company divided into the project organization, with Project #1 …, and the Experience Factory with its Experience Base.)

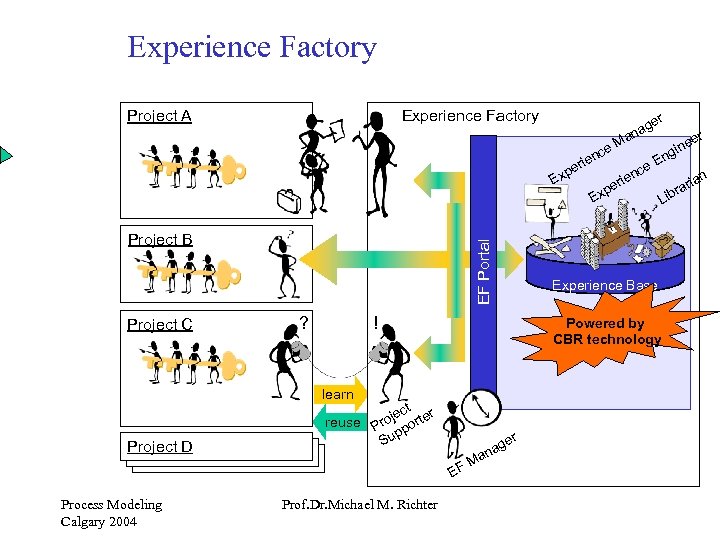

Experience Factory (diagram): projects A to D reuse experience from the Experience Base via the EF portal, and the Experience Factory learns from them. The roles shown are Experience Manager, Experience Engineer, Librarian and Project Supporter. The EF is powered by CBR technology.

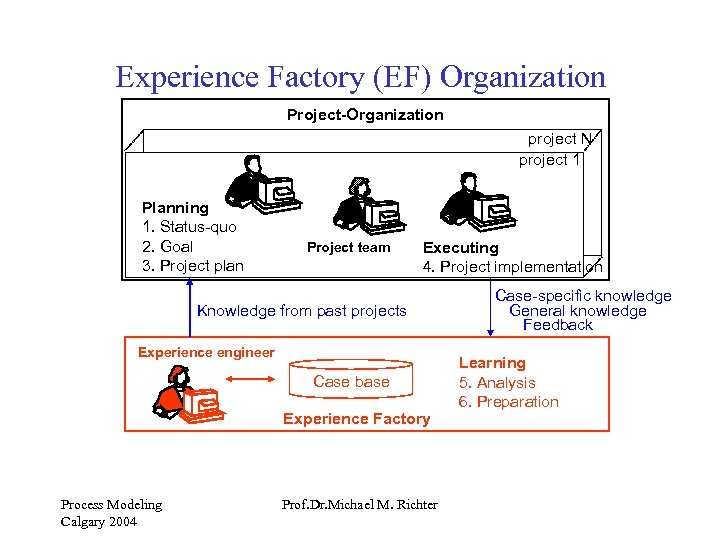

Experience Factory (EF) Organization (diagram)
• Project organization (projects 1 … N), carried out by the project team:
  – Planning: 1. Status quo, 2. Goal, 3. Project plan
  – Executing: 4. Project implementation
• Experience Factory (experience engineer, case base):
  – Learning: 5. Analysis, 6. Preparation
• Flows: knowledge from past projects (case-specific and general knowledge) goes to the projects; feedback goes back to the Experience Factory.

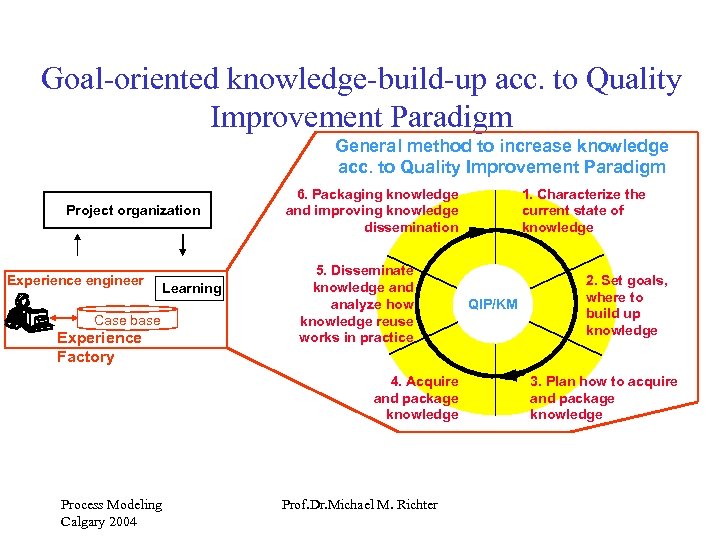

Goal-oriented knowledge build-up according to the Quality Improvement Paradigm (QIP)
General method for increasing knowledge according to the QIP (QIP/KM), involving the project organization and the Experience Factory (experience engineer, case base, learning):
1. Characterize the current state of knowledge.
2. Set goals: where to build up knowledge.
3. Plan how to acquire and package knowledge.
4. Acquire and package knowledge.
5. Disseminate knowledge and analyze how knowledge reuse works in practice.
6. Package knowledge and improve knowledge dissemination.

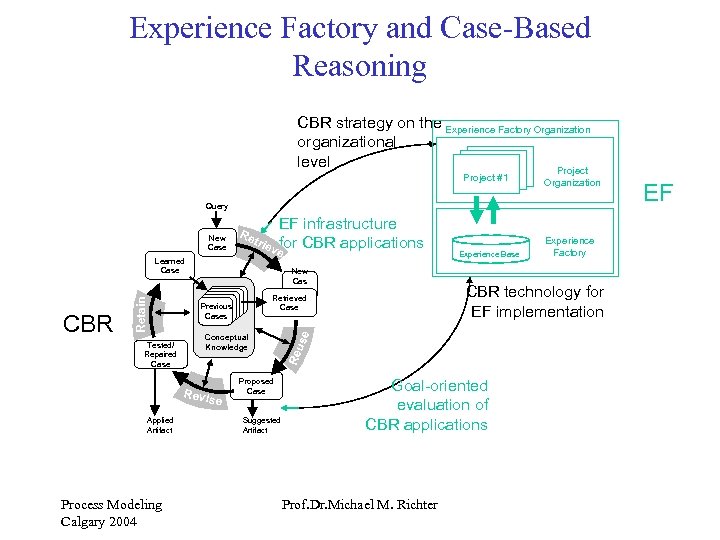

Experience Factory and Case-Based Reasoning (diagram)
• CBR strategy on the organizational level: the Experience Factory organization
• CBR technology for the EF implementation
• EF infrastructure for CBR applications
• Goal-oriented evaluation of CBR applications
• CBR cycle in the Experience Base: a query (new case) from Project #1 is used to retrieve a case from the previous cases and conceptual knowledge; reuse turns it into a proposed case (suggested artifact); revise yields a tested/repaired case (applied artifact); retain stores the learned case.
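
The four steps of this cycle (retrieve, reuse, revise, retain) can be sketched as a single Python function. The distance-based similarity and the numeric case attributes are hypothetical simplifications. The revise step is passed in as a function because it is where the suggested artifact is tested and repaired, which this sketch cannot automate.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Case:
    problem: Dict[str, float]
    solution: str


def similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    # Hypothetical measure: 1 / (1 + sum of absolute differences on shared attributes).
    keys = a.keys() & b.keys()
    return 1.0 / (1.0 + sum(abs(a[k] - b[k]) for k in keys))


def cbr_cycle(query: Dict[str, float], base: List[Case],
              revise: Callable[[Dict[str, float], str], str]) -> Case:
    retrieved = max(base, key=lambda c: similarity(query, c.problem))  # Retrieve
    proposed = retrieved.solution                                       # Reuse
    repaired = revise(query, proposed)                                  # Revise
    learned = Case(dict(query), repaired)                               # Retain
    base.append(learned)
    return learned


base = [Case({"size": 10, "team": 3}, "plan A"),
        Case({"size": 50, "team": 8}, "plan B")]
learned = cbr_cycle({"size": 12, "team": 3}, base,
                    lambda q, s: s + " (checked)")
```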

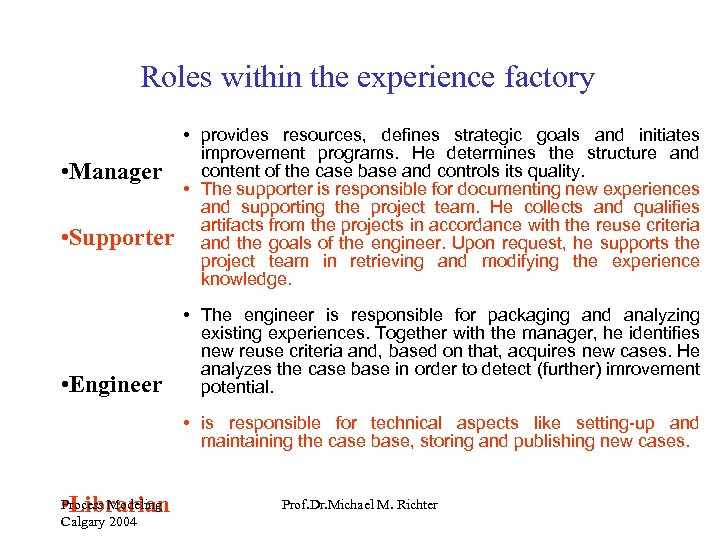

Roles within the experience factory
• Manager: provides resources, defines strategic goals and initiates improvement programs. He determines the structure and content of the case base and controls its quality.
• Supporter: responsible for documenting new experiences and supporting the project team. He collects and qualifies artifacts from the projects in accordance with the reuse criteria and the goals of the engineer. Upon request, he supports the project team in retrieving and modifying experience knowledge.
• Engineer: responsible for packaging and analyzing existing experiences. Together with the manager, he identifies new reuse criteria and, based on them, acquires new cases. He analyzes the case base in order to detect (further) improvement potential.
• Librarian: responsible for technical aspects such as setting up and maintaining the case base and storing and publishing new cases.

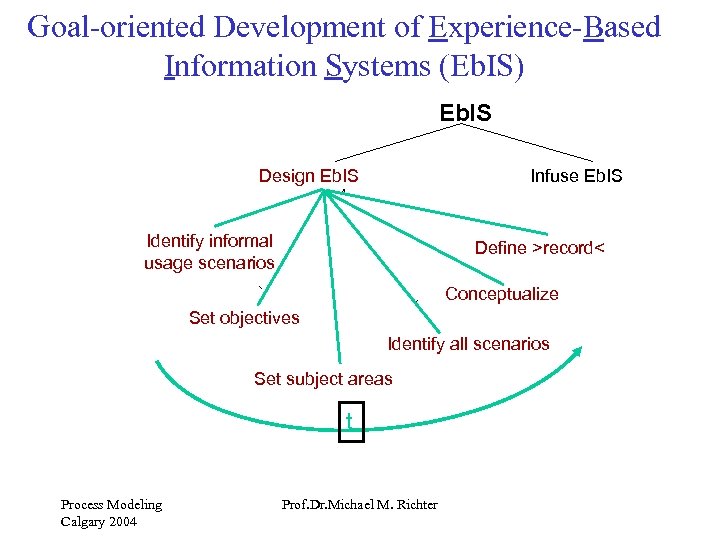

Goal-oriented Development of Experience-Based Information Systems (EbIS) (diagram along a time axis t)
• Phases: design EbIS, infuse EbIS
• Activities: set objectives; set subject areas; identify informal usage scenarios; identify all scenarios; conceptualize; define »record«

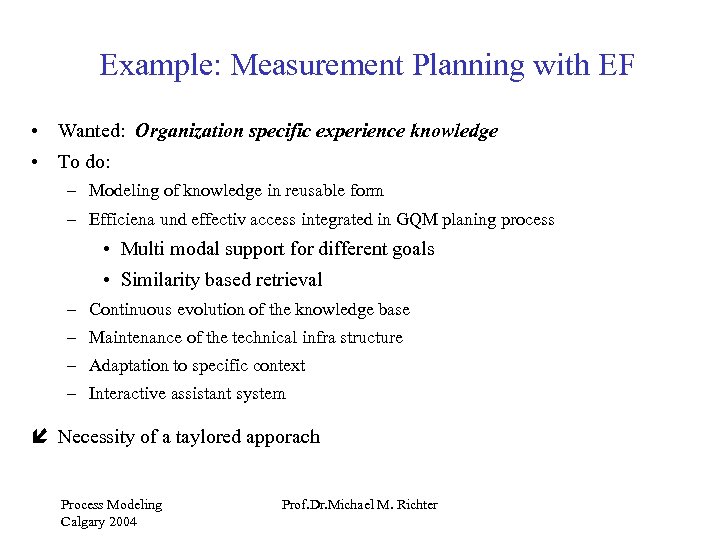

Example: Measurement Planning with EF
• Wanted: organization-specific experience knowledge
• To do:
  – Modeling of knowledge in reusable form
  – Efficient and effective access, integrated into the GQM planning process
    • Multimodal support for different goals
    • Similarity-based retrieval
  – Continuous evolution of the knowledge base
  – Maintenance of the technical infrastructure
  – Adaptation to the specific context
  – Interactive assistant system
⇒ Necessity of a tailored approach

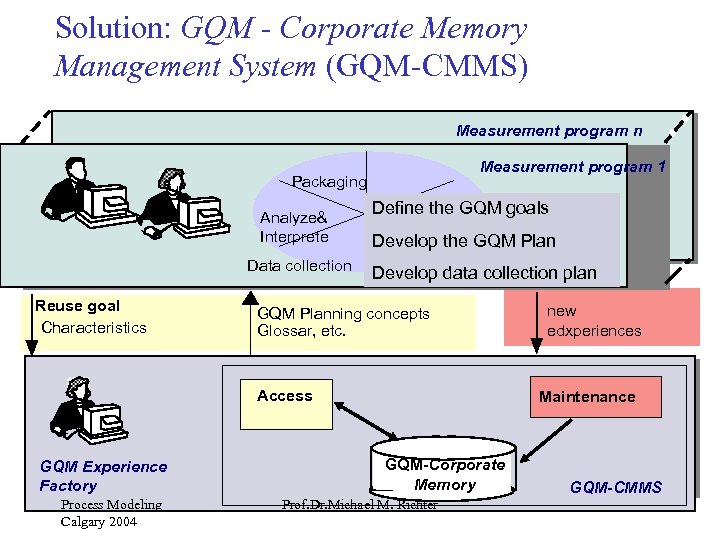

Solution: GQM Corporate Memory Management System (GQM-CMMS) (diagram)
• Measurement programs 1 … n each run through: define the GQM goals, develop the GQM plan, develop the data collection plan, data collection, analyze & interpret, packaging.
• GQM Experience Factory: the GQM-CMMS gives access to the GQM Corporate Memory (GQM planning concepts, glossary, etc.) via reuse goals and characteristics, takes in new experiences from packaging, and is itself maintained.

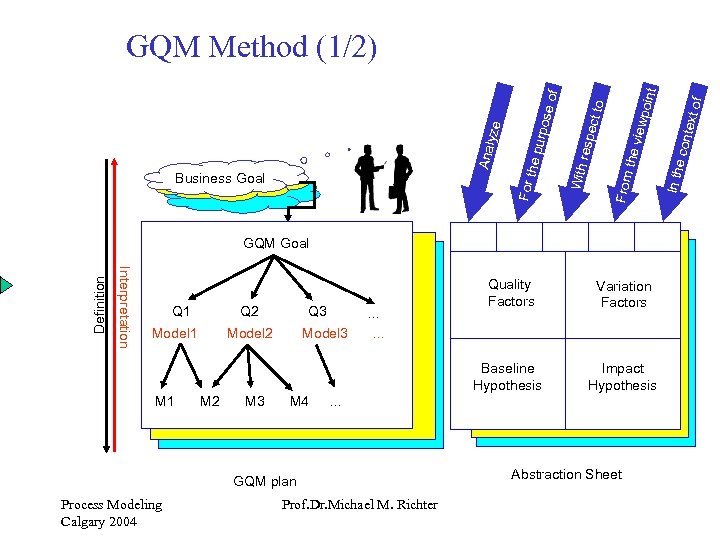

GQM Method (1/2) (diagram)
• Business goal → GQM goal, stated with the template: Analyze … for the purpose of … with respect to … from the viewpoint of … in the context of …
• Abstraction sheet: quality factors, variation factors, baseline hypothesis, impact hypothesis
• GQM plan: the GQM goal is refined into questions (Q1, Q2, Q3, …), which are linked through models (Model 1, Model 2, Model 3, …) to metrics (M1, M3, M4, …); definition proceeds top-down, interpretation bottom-up.

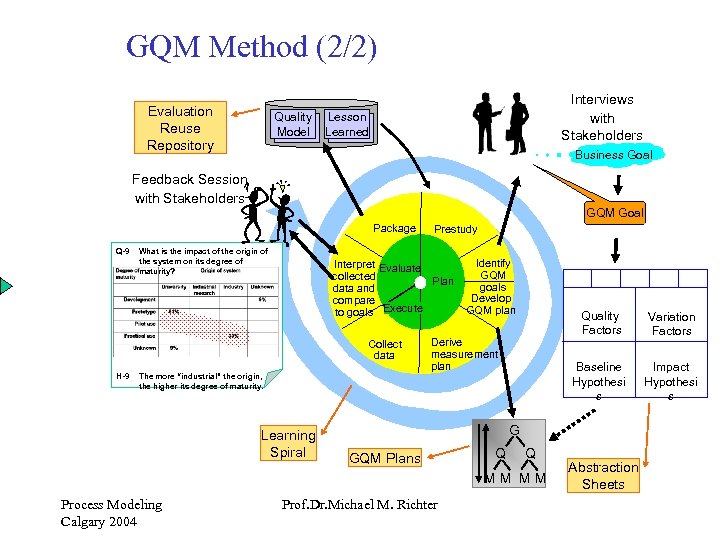

GQM Method (2/2) (diagram)
• Learning spiral: prestudy; plan (identify GQM goals, develop GQM plan, derive measurement plan); execute (collect data); evaluate (interpret collected data and compare to goals); package.
• Artifacts: business goal, quality model, GQM goal, abstraction sheets (quality factors, variation factors, baseline hypothesis, impact hypothesis), GQM plans (G → Q → M), lessons learned in a reuse repository.
• Stakeholder involvement: interviews with stakeholders, feedback sessions with stakeholders.
• Example: Q-9 "What is the impact of the origin of the system on its degree of maturity?" with hypothesis H-9 "The more 'industrial' the origin, the higher its degree of maturity."

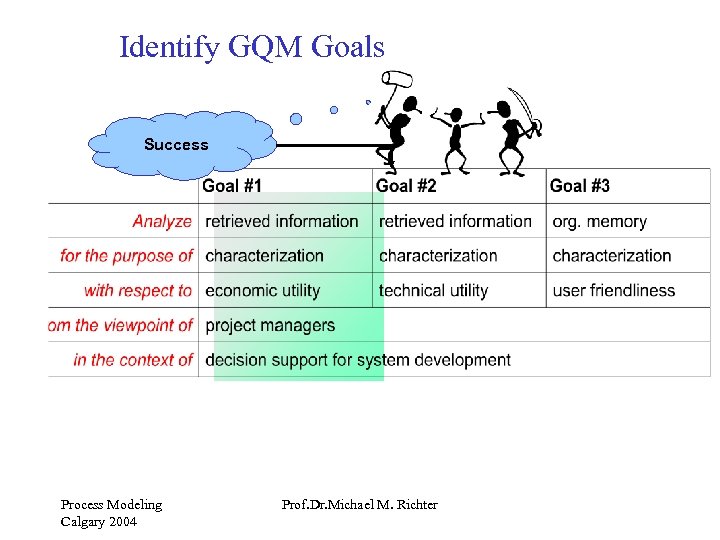

Identify GQM Goals (diagram; label: "Success")

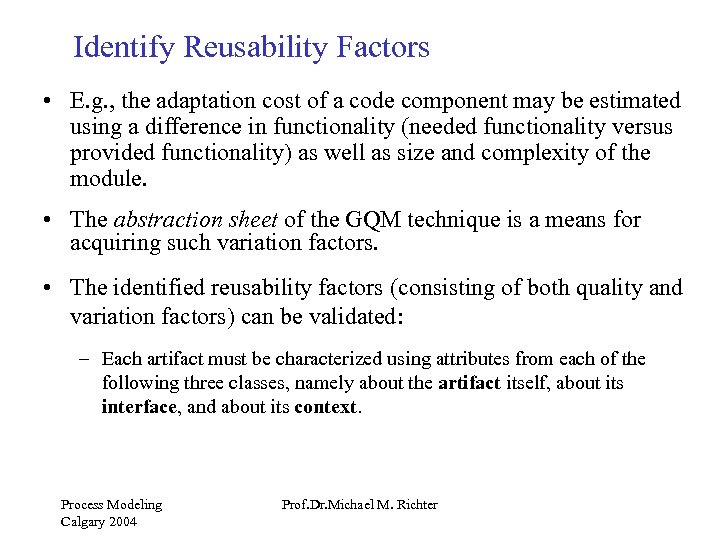

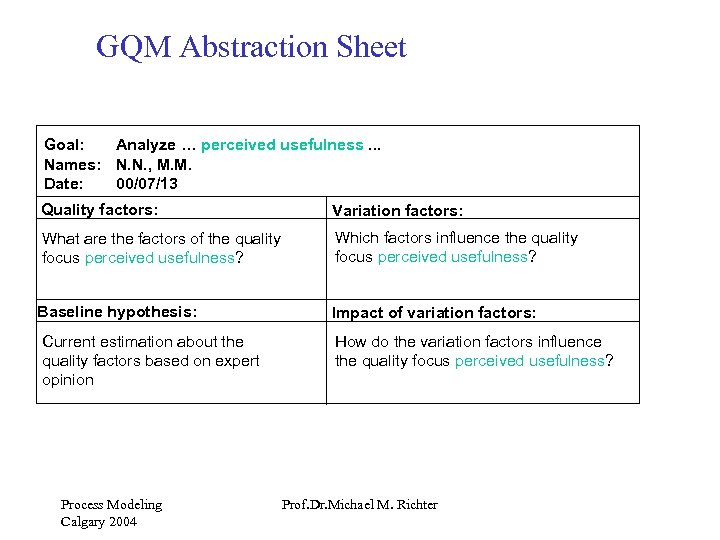

Identify Reusability Factors
• E.g., the adaptation cost of a code component may be estimated from the difference in functionality (needed versus provided functionality) as well as from the size and complexity of the module.
• The abstraction sheet of the GQM technique is a means for acquiring such variation factors.
• The identified reusability factors (consisting of both quality and variation factors) can be validated:
  – Each artifact must be characterized using attributes from each of three classes: attributes about the artifact itself, about its interface, and about its context.

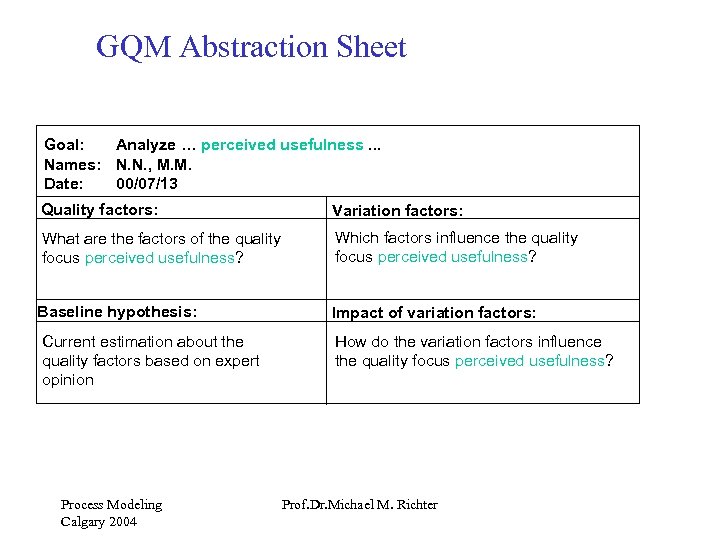

GQM Abstraction Sheet
Goal: Analyze … perceived usefulness … | Names: N. N., M. M. | Date: 00/07/13
• Quality factors: What are the factors of the quality focus "perceived usefulness"?
• Variation factors: Which factors influence the quality focus "perceived usefulness"?
• Baseline hypothesis: current estimation of the quality factors, based on expert opinion.
• Impact of variation factors: How do the variation factors influence the quality focus "perceived usefulness"?
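
An abstraction sheet has four quadrants, and together its quality and variation factors make up the reusability factors discussed above. This Python sketch models a sheet as a record. The field names are assumptions. The example goal and hypothesis text are hypothetical, and the factor names are borrowed from the surrounding slides purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AbstractionSheet:
    goal: str
    quality_factors: List[str] = field(default_factory=list)
    variation_factors: List[str] = field(default_factory=list)
    baseline_hypothesis: Dict[str, str] = field(default_factory=dict)
    impact_of_variation: Dict[str, str] = field(default_factory=dict)

    def reusability_factors(self) -> List[str]:
        """Reusability factors = quality factors + variation factors."""
        return self.quality_factors + self.variation_factors


sheet = AbstractionSheet(
    goal="Analyze code components with respect to reusability",  # hypothetical
    quality_factors=["adaptation effort", "reliability"],
    variation_factors=["size", "complexity"],
    impact_of_variation={"size": "larger modules need more adaptation effort"},  # hypothetical
)
```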

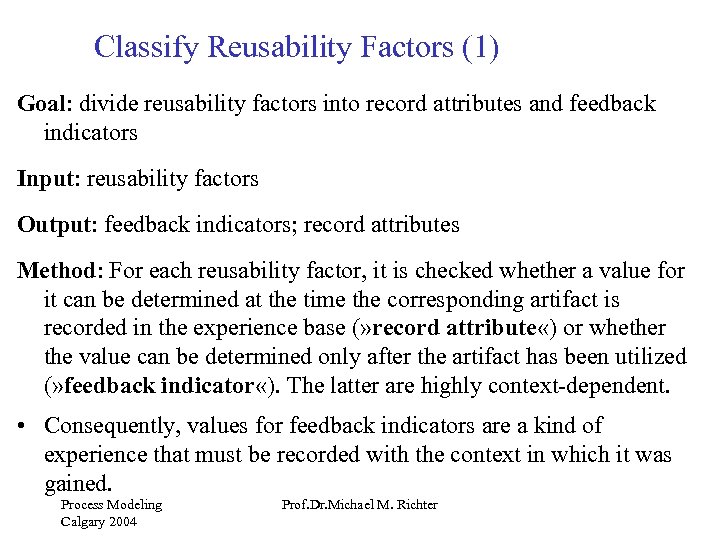

Classify Reusability Factors (1)
• Goal: divide reusability factors into record attributes and feedback indicators
• Input: reusability factors
• Output: feedback indicators; record attributes
• Method: For each reusability factor, check whether its value can be determined at the time the corresponding artifact is recorded in the experience base (»record attribute«) or only after the artifact has been utilized (»feedback indicator«). The latter are highly context-dependent.
• Consequently, values for feedback indicators are a kind of experience that must be recorded together with the context in which it was gained.
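
The classification rule is a single test per factor. This Python sketch assumes it is already known, as a boolean, whether each factor's value is available at recording time. It stores a feedback value together with its context, as the method requires. The names and example values are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


def classify(known_at_recording: Dict[str, bool]) -> Tuple[List[str], List[str]]:
    """Split reusability factors into record attributes (value known when the
    artifact is recorded) and feedback indicators (known only after use)."""
    record_attributes = [f for f, known in known_at_recording.items() if known]
    feedback_indicators = [f for f, known in known_at_recording.items() if not known]
    return record_attributes, feedback_indicators


@dataclass(frozen=True)
class FeedbackValue:
    """A feedback indicator value stored together with its context."""
    indicator: str
    value: str
    context: str  # e.g. the project in which the experience was gained


records, feedback = classify({"size": True, "complexity": True,
                              "adaptation effort": False, "reliability": False})
fb = FeedbackValue("adaptation effort", "two days, interface changed", "project XXX")
```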

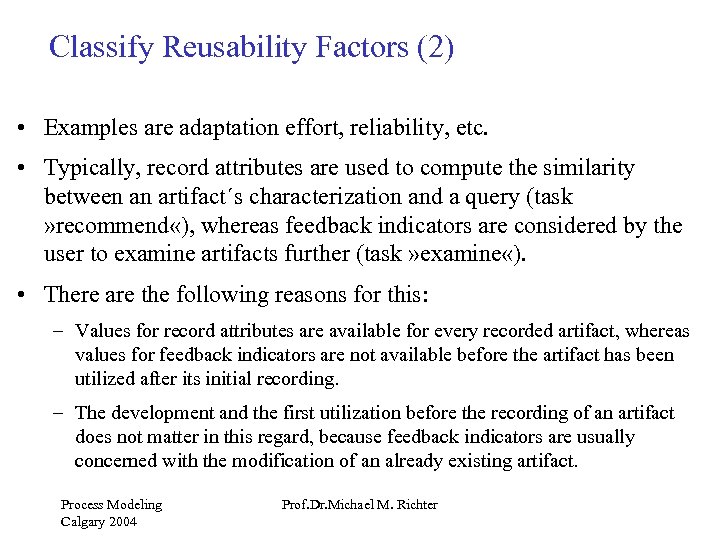

Classify Reusability Factors (2)
• Examples of feedback indicators are adaptation effort, reliability, etc.
• Typically, record attributes are used to compute the similarity between an artifact's characterization and a query (task »recommend«), whereas feedback indicators are considered by the user to examine artifacts further (task »examine«).
• The reasons for this are as follows:
  – Values for record attributes are available for every recorded artifact, whereas values for feedback indicators are not available until the artifact has been utilized after its initial recording.
  – The development and first utilization of an artifact before its recording do not matter in this regard, because feedback indicators are usually concerned with the modification of an already existing artifact.

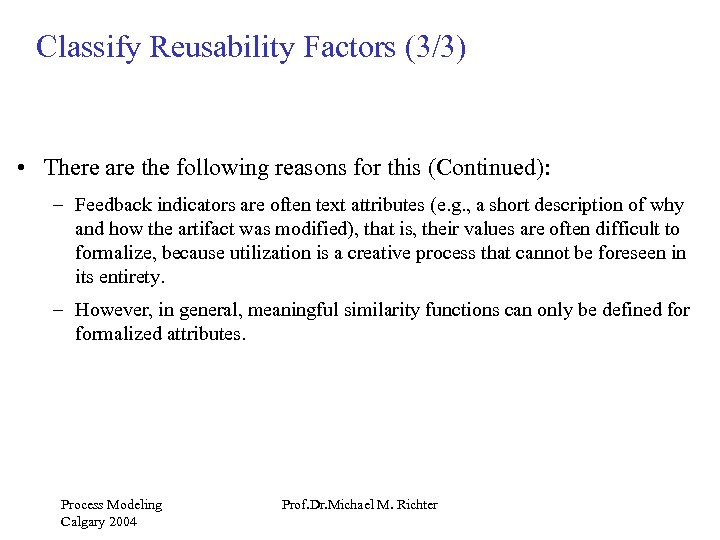

Classify Reusability Factors (3/3)
• The reasons for this (continued):
  – Feedback indicators are often text attributes (e.g., a short description of why and how the artifact was modified); that is, their values are often difficult to formalize, because utilization is a creative process that cannot be foreseen in its entirety.
  – However, in general, meaningful similarity functions can only be defined for formalized attributes.
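
The division of labor between the two tasks can be sketched in Python. »recommend« ranks artifacts using only record attributes, and »examine« simply shows whatever free-text feedback already exists. The match-count score and the example data are hypothetical simplifications.

```python
from typing import Dict, List, Optional

Artifact = Dict[str, Optional[str]]


def recommend(query: Dict[str, str], artifacts: Dict[str, Artifact],
              record_attributes: List[str]) -> List[str]:
    """Rank artifact ids by the share of record attributes matching the query."""
    def score(artifact: Artifact) -> float:
        hits = sum(1 for r in record_attributes if artifact.get(r) == query.get(r))
        return hits / len(record_attributes)
    return sorted(artifacts, key=lambda aid: score(artifacts[aid]), reverse=True)


def examine(artifact: Artifact, feedback_indicators: List[str]) -> Dict[str, Optional[str]]:
    """Return the feedback indicators that already have (free-text) values."""
    return {f: artifact[f] for f in feedback_indicators if artifact.get(f)}


artifacts: Dict[str, Artifact] = {
    "a1": {"language": "Java", "domain": "billing",
           "adaptation effort": "two days; interface had to be changed"},
    "a2": {"language": "C", "domain": "billing", "adaptation effort": None},
}
query = {"language": "Java", "domain": "billing"}
```

Artifact a2 has not been utilized since it was recorded, so examining it yields no feedback. It can still be ranked, because its record attributes are filled.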

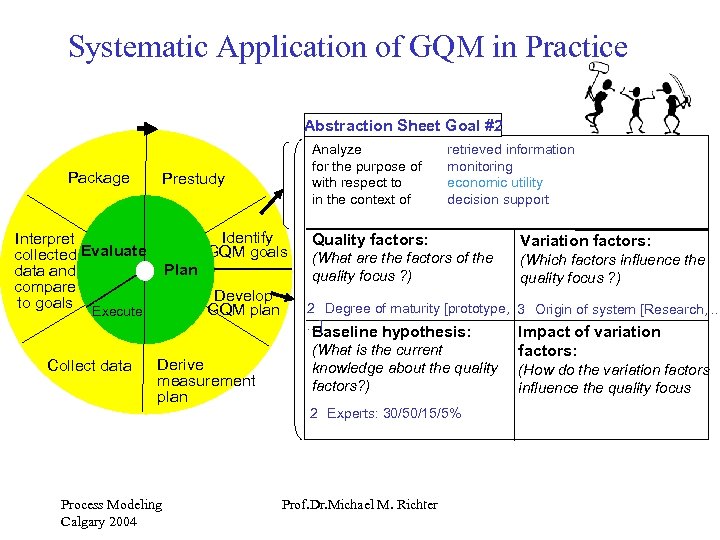

Systematic Application of GQM in Practice (diagram)
• Learning spiral: prestudy; plan (identify GQM goals, develop GQM plan, derive measurement plan); execute (collect data); evaluate (interpret collected data and compare to goals); package.
• Abstraction sheet for goal #2: Analyze retrieved information for the purpose of monitoring with respect to economic utility in the context of decision support.
  – Quality factors (What are the factors of the quality focus?)
  – Variation factors (Which factors influence the quality focus?): 2 degree of maturity [prototype, …]; 3 origin of system [research, …]
  – Baseline hypothesis (What is the current knowledge about the quality factors?): 2 experts: 30/50/15/5%
  – Impact of variation factors (How do the variation factors influence the quality focus?)

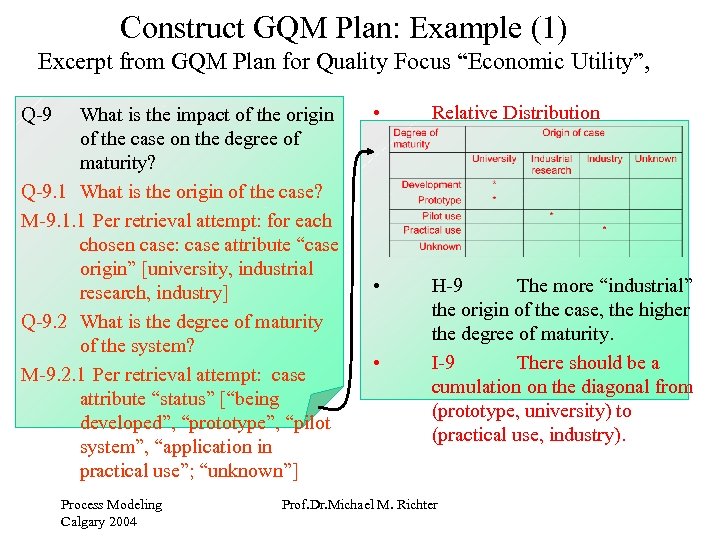

Construct GQM Plan: Example (1)
Excerpt from the GQM plan for the quality focus “Economic Utility”:
• Q-9 What is the impact of the origin of the case on the degree of maturity?
  – Q-9.1 What is the origin of the case?
    M-9.1.1 Per retrieval attempt, for each chosen case: case attribute “case origin” [university, industrial research, industry]
  – Q-9.2 What is the degree of maturity of the system?
    M-9.2.1 Per retrieval attempt: case attribute “status” [“being developed”, “prototype”, “pilot system”, “application in practical use”, “unknown”]
  – Analysis: relative distribution
• H-9 The more “industrial” the origin of the case, the higher the degree of maturity.
• I-9 The observations should concentrate along the diagonal from (prototype, university) to (practical use, industry).
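
The “relative distribution” analysis for Q-9 amounts to a cross-table of the two measures M-9.1.1 and M-9.2.1. The following is a minimal sketch, assuming one (origin, status) pair per chosen case; the pairing of the three origins with prototype, pilot system, and practical use as “the diagonal” is our reading of I-9, and the observations are invented toy data, not measurement results.

```python
from collections import Counter

ORIGINS = ["university", "industrial research", "industry"]
STATUSES = ["being developed", "prototype", "pilot system",
            "application in practical use", "unknown"]

# Assumed reading of I-9: the three origins are paired, in order, with the
# three statuses prototype < pilot system < application in practical use.
DIAGONAL = {("university", "prototype"),
            ("industrial research", "pilot system"),
            ("industry", "application in practical use")}
RANKED = {s for _, s in DIAGONAL}


def relative_distribution(observations):
    """Share of each (origin, status) cell; one observation per chosen case
    per retrieval attempt (measures M-9.1.1 and M-9.2.1)."""
    counts = Counter(observations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {(o, s): counts[(o, s)] / total for o in ORIGINS for s in STATUSES}


def diagonal_share(observations):
    """Fraction of ranked observations that lie on the I-9 diagonal;
    'being developed' and 'unknown' are skipped."""
    ranked = [(o, s) for o, s in observations if s in RANKED]
    if not ranked:
        return 0.0
    return sum((o, s) in DIAGONAL for o, s in ranked) / len(ranked)


# Invented toy observations, for illustration only.
obs = [("university", "prototype"),
       ("industry", "application in practical use"),
       ("industry", "pilot system"),
       ("university", "unknown")]
dist = relative_distribution(obs)
print(dist[("university", "prototype")])  # 0.25
print(diagonal_share(obs))                # 2 of 3 ranked observations
```

A high diagonal share would support H-9; how high is “high enough” is a judgment left to the feedback session with the experts.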

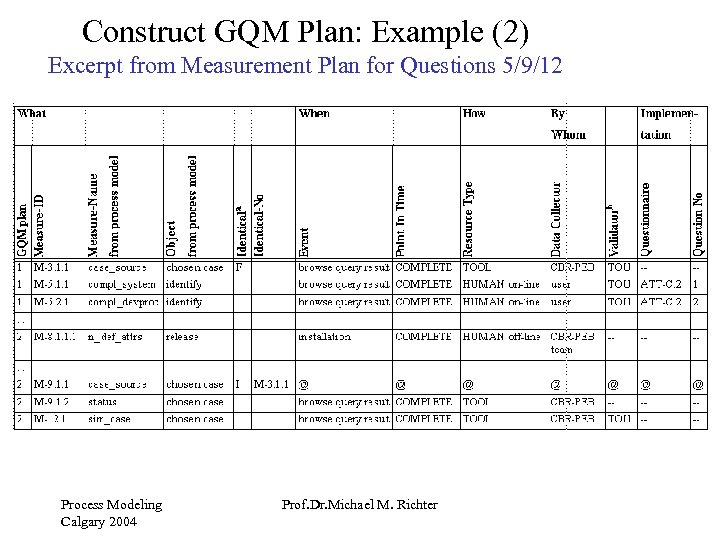

Construct GQM Plan: Example (2)
Excerpt from the measurement plan for Questions 5, 9, and 12

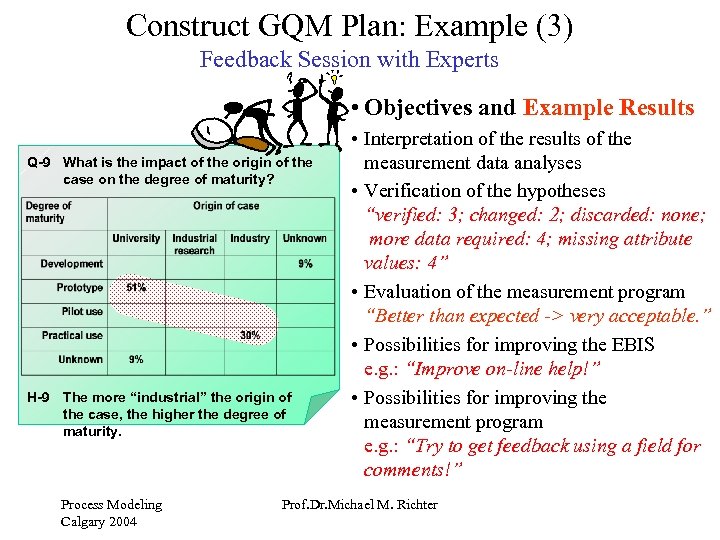

Construct GQM Plan: Example (3): Feedback Session with Experts
Example: Q-9 What is the impact of the origin of the case on the degree of maturity? H-9 The more “industrial” the origin of the case, the higher the degree of maturity.
Objectives and example results:
• Interpretation of the results of the measurement data analyses
• Verification of the hypotheses: “verified: 3; changed: 2; discarded: none; more data required: 4; missing attribute values: 4”
• Evaluation of the measurement program: “Better than expected -> very acceptable.”
• Possibilities for improving the EBIS, e.g., “Improve on-line help!”
• Possibilities for improving the measurement program, e.g., “Try to get feedback using a field for comments!”

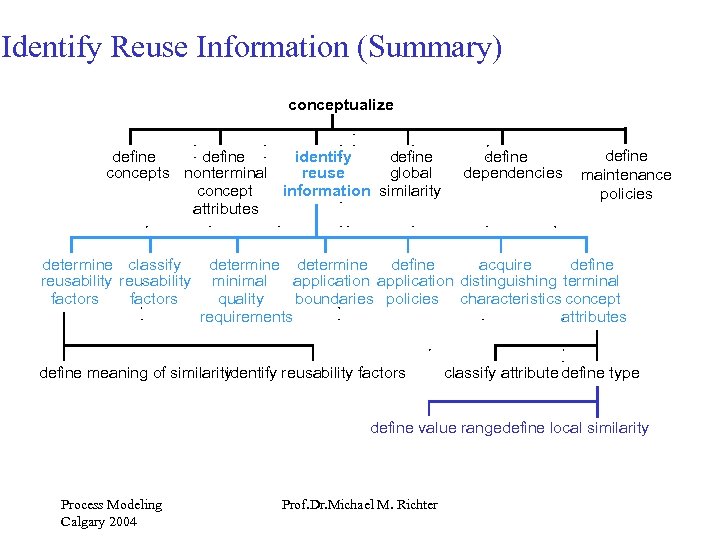

Identify Reuse Information (Summary)
[Figure: task diagram. Recoverable tasks: conceptualize concepts; define nonterminal concept attributes; identify reuse information; define global similarity; classify reusability factors; define dependencies; define maintenance policies; define application boundaries; determine minimal quality requirements; define terminal concept attributes; define meaning of similarity; identify reusability factors; classify attribute; define type; define value range; define local similarity. Further labels refer to acquisition policies and distinguishing characteristics.]

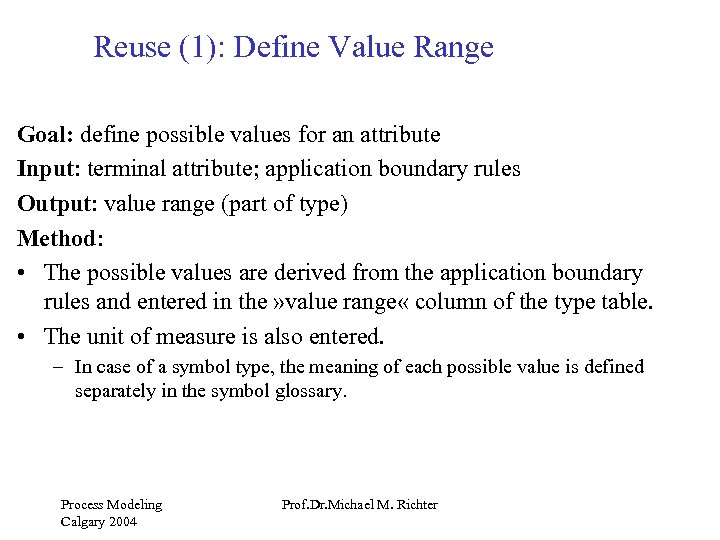

Reuse (1): Define Value Range
Goal: define the possible values for an attribute
Input: terminal attribute; application boundary rules
Output: value range (part of type)
Method:
• The possible values are derived from the application boundary rules and entered in the »value range« column of the type table.
• The unit of measure is also entered.
  – For a symbol type, the meaning of each possible value is defined separately in the symbol glossary.
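
A row of the type table can be pictured as a record holding the value range, the unit of measure, and, for symbol types, the glossary. This is a minimal sketch under our own assumptions: `AttributeType` is a hypothetical name, numeric ranges are modelled as closed intervals, and the “team size” attribute and all glossary texts are placeholders.

```python
from dataclasses import dataclass, field
from typing import Optional, Union


@dataclass
class AttributeType:
    """One row of a type table: value range, unit, and (for symbol types)
    a glossary giving the meaning of each symbol."""
    name: str
    value_range: Union[tuple, list]   # (lo, hi) for numbers, list of symbols otherwise
    unit: Optional[str] = None        # unit of measure, if any
    glossary: dict = field(default_factory=dict)

    def is_symbol_type(self) -> bool:
        return isinstance(self.value_range, list)

    def validate(self) -> None:
        """Every symbol of a symbol type needs a glossary entry."""
        if self.is_symbol_type():
            missing = [v for v in self.value_range if v not in self.glossary]
            if missing:
                raise ValueError(f"{self.name}: no glossary entry for {missing}")

    def contains(self, value) -> bool:
        """True if the value lies in the value range (closed interval for numbers)."""
        if self.is_symbol_type():
            return value in self.value_range
        lo, hi = self.value_range
        return lo <= value <= hi


# Hypothetical attributes; glossary texts are placeholders.
team_size = AttributeType("team size", (1, 500), unit="persons")
origin = AttributeType(
    "case origin", ["university", "industrial research", "industry"],
    glossary={"university": "developed in an academic project",
              "industrial research": "developed in a company research lab",
              "industry": "developed in an industrial business unit"})
origin.validate()
```

The `validate` check mirrors the rule that each symbol's meaning is defined in the glossary; the bounds of a numeric range would come from the application boundary rules.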

Reuse (2): Define Local Similarity
Goal: formally define the meaning of similarity with respect to a single attribute
Input:
• reuse scenarios
• terminal attribute
• value range (part of type)
• application boundary rules
Output: local similarity function (part of type)
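
Two common shapes of local similarity function are a distance-based function for numeric ranges and a similarity table for symbol types. The sketch below assumes those two shapes; the slide does not prescribe a particular function, and the table values for “status” are hypothetical.

```python
def numeric_similarity(lo, hi):
    """Linear local similarity on [lo, hi] (assumes hi > lo): 1.0 for equal
    values, falling to 0.0 at the maximum possible distance."""
    span = hi - lo

    def sim(q, c):
        return 1.0 - abs(q - c) / span
    return sim


def symbol_similarity(table):
    """Local similarity for a symbol type from a symmetric similarity table.
    Equal symbols score 1.0; pairs missing from the table score 0.0."""
    def sim(q, c):
        if q == c:
            return 1.0
        return table.get((q, c), table.get((c, q), 0.0))
    return sim


# Hypothetical values: a real table would come from the reuse scenarios.
status_sim = symbol_similarity({
    ("prototype", "pilot system"): 0.7,
    ("pilot system", "application in practical use"): 0.7,
    ("prototype", "application in practical use"): 0.3,
})
size_sim = numeric_similarity(1, 101)

print(status_sim("pilot system", "prototype"))  # 0.7
print(size_sim(1, 51))                          # 0.5
```

The value range from the previous step fixes `lo` and `hi` for the numeric function and the set of symbols the table has to cover.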

Reuse (3): Define Global Similarity
Goal: formally define the meaning of similarity with respect to a kind of artifact
Input: informal description of global similarity; terminal and nonterminal attributes in the form of concept attribute tables
Output: global similarity (part of the changed schema for the EbIS)
Method:
– Using the defined attributes, the informal description of the global similarity is expressed formally.
– This allows the retrieval system to calculate the similarity automatically (task »calculate similarity«).
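
A frequently used formal global similarity is a weighted average of the local similarities, which the retrieval system can then use to rank cases. The sketch assumes that aggregation (the slide leaves the formula open); the attributes, local functions, weights, and cases are hypothetical.

```python
def global_similarity(query, case, local_sims, weights):
    """Weighted average of the local similarities of all attributes that the
    query specifies and the case has a value for."""
    attrs = [a for a in query if a in case and a in local_sims]
    total_w = sum(weights.get(a, 0.0) for a in attrs)
    if total_w == 0:
        return 0.0
    return sum(weights.get(a, 0.0) * local_sims[a](query[a], case[a])
               for a in attrs) / total_w


def retrieve(query, cases, local_sims, weights, k=1):
    """Rank cases by global similarity and return the k best."""
    ranked = sorted(cases,
                    key=lambda c: global_similarity(query, c, local_sims, weights),
                    reverse=True)
    return ranked[:k]


# Hypothetical attributes, local similarities, and weights.
local_sims = {
    "origin": lambda q, c: 1.0 if q == c else 0.0,
    "team_size": lambda q, c: 1.0 - abs(q - c) / 100,
}
weights = {"origin": 1.0, "team_size": 3.0}
query = {"origin": "industry", "team_size": 20}
cases = [{"id": "A", "origin": "university", "team_size": 30},
         {"id": "B", "origin": "industry", "team_size": 25}]
print(retrieve(query, cases, local_sims, weights)[0]["id"])  # B
```

Attributes the query leaves open are simply skipped, so a sparse query is compared only on what the user actually specified.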

Part 4: Maintenance

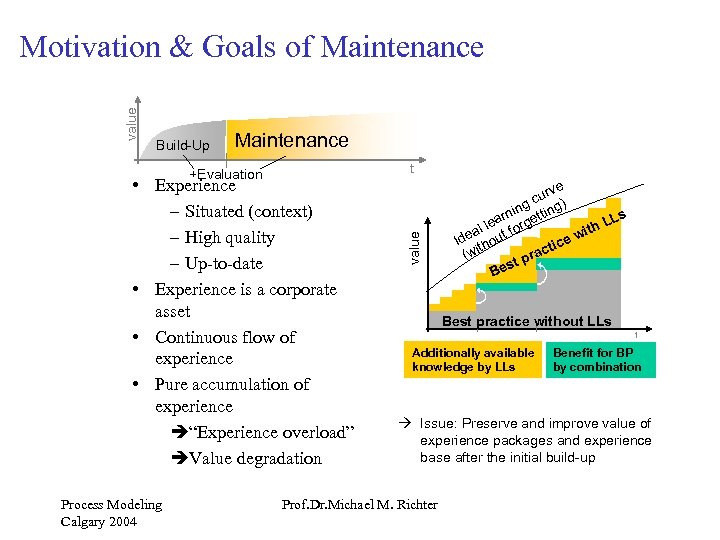

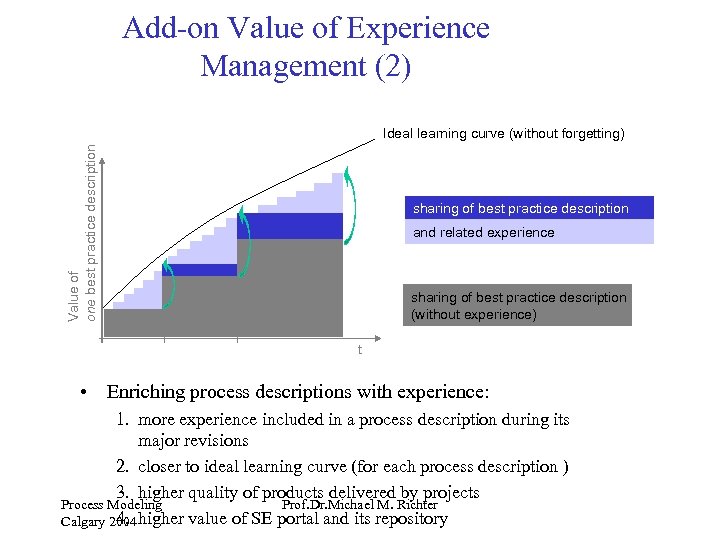

Motivation & Goals of Maintenance (phases: Build-Up, Maintenance + Evaluation)
• Experience:
  – situated (context)
  – high quality
  – up-to-date
• Experience is a corporate asset.
• Continuous flow of experience
• Pure accumulation of experience → “experience overload” → value degradation
[Figure: value over time t. Curves: ideal learning curve (best practice with LLs, without forgetting) and best practice without LLs. Labels: additionally available knowledge by LLs; benefit for BP by combination. (BP = best practice, LLs = lessons learned.)]
→ Issue: Preserve and improve the value of experience packages and of the experience base after the initial build-up.

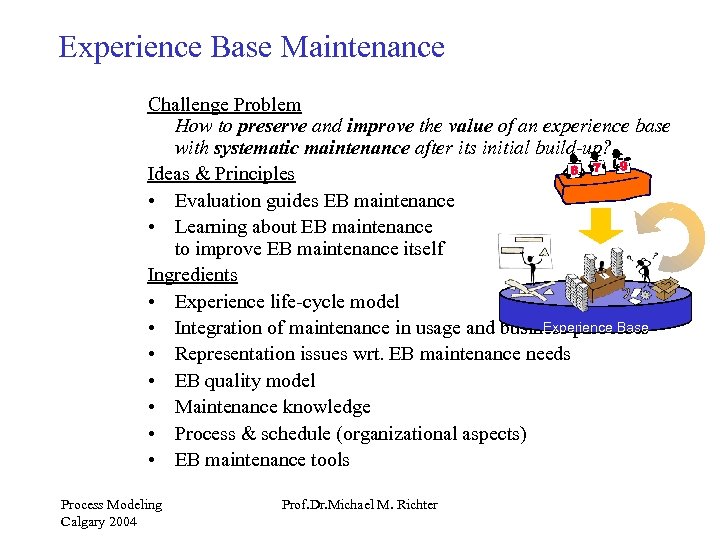

Experience Base Maintenance Challenge
Problem: How can the value of an experience base (EB) be preserved and improved through systematic maintenance after its initial build-up?
Ideas & Principles:
• Evaluation guides EB maintenance.
• Learning about EB maintenance improves EB maintenance itself.
Ingredients:
• Experience life-cycle model
• Integration of maintenance into usage and business processes
• Representation issues w.r.t. EB maintenance needs
• EB quality model
• Maintenance knowledge
• Process & schedule (organizational aspects)
• EB maintenance tools

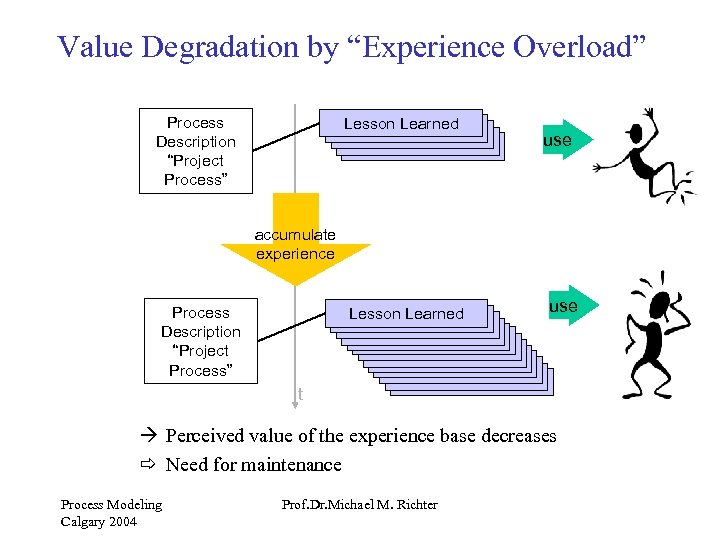

Value Degradation by “Experience Overload”
[Figure: over time t, using the process description “Project Process” and accumulating experience attach more and more lessons learned to it.]
→ Perceived value of the experience base decreases
⇒ Need for maintenance

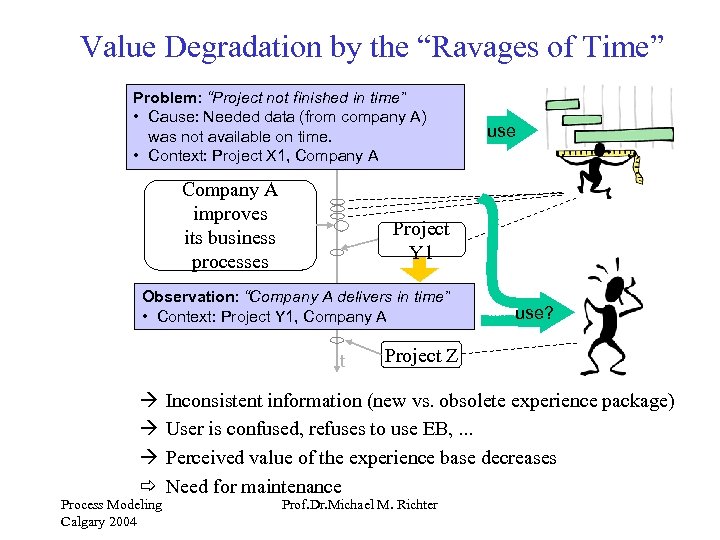

Value Degradation by the “Ravages of Time”
• Problem: “Project not finished in time”
  – Cause: Needed data (from company A) was not available on time.
  – Context: Project X1, Company A
• Company A improves its business processes.
• Observation: “Company A delivers in time”
  – Context: Project Y1, Company A
• Later (time t), Project Z: which experience package should be used?
→ Inconsistent information (new vs. obsolete experience package)
→ User is confused, refuses to use the EB, ...
→ Perceived value of the experience base decreases
⇒ Need for maintenance

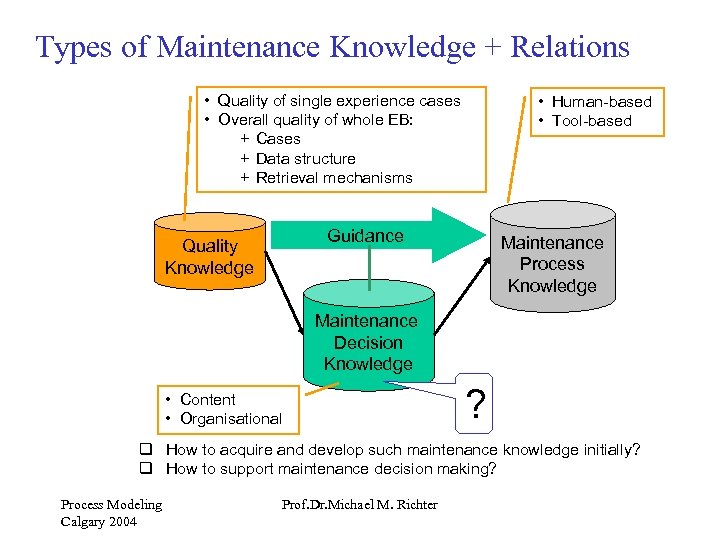

Types of Maintenance Knowledge + Relations
• Quality knowledge
  – quality of single experience cases
  – overall quality of the whole EB: cases, data structure, retrieval mechanisms
• Maintenance process knowledge
• Maintenance decision knowledge
• The three types are linked by relations, one of them labelled “guidance”; further distinctions shown in the figure: human-based vs. tool-based, content vs. organisational.
Open questions:
• How can such maintenance knowledge be acquired and developed initially?
• How can maintenance decision making be supported?

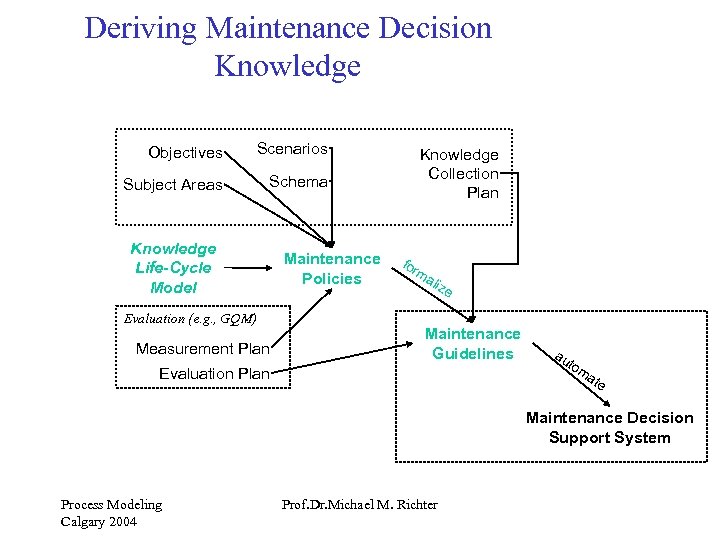

Deriving Maintenance Decision Knowledge
[Figure: from the objectives, scenarios, subject areas, schema, and knowledge life-cycle model, together with an evaluation (e.g., GQM), a measurement plan, maintenance policies, and a knowledge collection plan are derived; these are formalized into maintenance guidelines and an evaluation plan, which are automated in a maintenance decision support system.]

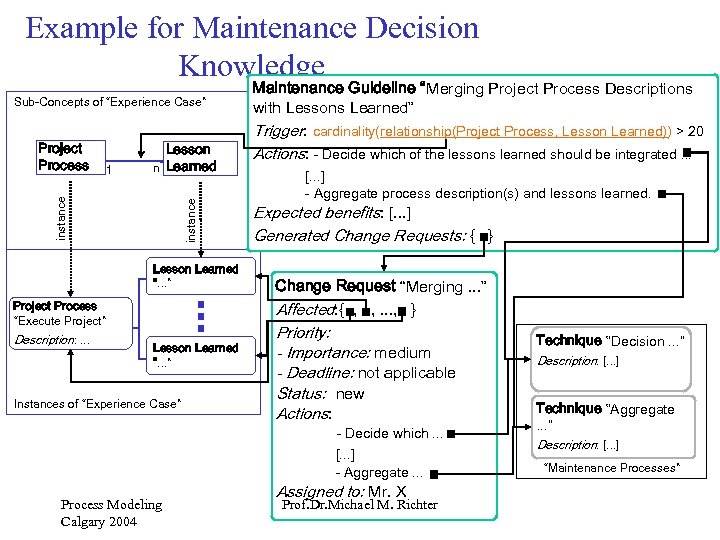

Example for Maintenance Decision Knowledge
• Sub-concepts of “Experience Case”: Project Process and Lesson Learned (relationship 1 : n)
• Instances of “Experience Case”: Project Process “Execute Project” (Description: ...) with Lessons Learned “...”
• Maintenance Guideline “Merging Project Process Descriptions with Lessons Learned”
  – Trigger: cardinality(relationship(Project Process, Lesson Learned)) > 20
  – Actions: Decide which of the lessons learned should be integrated ... [...]; aggregate process description(s) and lessons learned.
  – Expected benefits: [...]
  – Generated Change Requests: { }
• Change Request “Merging ...”
  – Affected: { ... }
  – Priority: Importance: medium; Deadline: not applicable
  – Status: new
  – Actions: Decide which ... [...]; Aggregate ...
  – Assigned to: Mr. X
• “Maintenance Processes”: Technique “Decision ...” (Description: [...]); Technique “Aggregate ...” (Description: [...])
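
The trigger of the guideline is a cardinality check over the 1 : n relationship, and firing it yields a change request with the guideline's actions. The sketch below is a hypothetical model of that mechanism, not the data structure of an actual EB maintenance tool; the class names and the process “Review Design” are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ChangeRequest:
    title: str
    affected: List[str]
    actions: List[str]
    importance: str = "medium"
    deadline: str = "not applicable"
    status: str = "new"
    assigned_to: Optional[str] = None


@dataclass
class MaintenanceGuideline:
    title: str
    threshold: int           # trigger fires when #lessons learned > threshold
    actions: List[str]

    def triggered(self, lessons: List[str]) -> bool:
        return len(lessons) > self.threshold

    def check(self, links: dict) -> List[ChangeRequest]:
        """links: project process name -> ids of its lessons learned.
        Generates one change request per process whose trigger fires."""
        return [ChangeRequest(title=f"{self.title}: {name}",
                              affected=[name, *lessons],
                              actions=list(self.actions))
                for name, lessons in links.items()
                if self.triggered(lessons)]


merge_guideline = MaintenanceGuideline(
    "Merging Project Process Descriptions with Lessons Learned",
    threshold=20,
    actions=["Decide which of the lessons learned should be integrated",
             "Aggregate process description(s) and lessons learned"])

# Hypothetical experience base content.
links = {"Execute Project": [f"LL-{i}" for i in range(23)],
         "Review Design": ["LL-a", "LL-b"]}
requests = merge_guideline.check(links)
print(len(requests), requests[0].affected[0])  # 1 Execute Project
```

Assigning the request to a person (here left as `None`) stays a human decision, in line with the slide's “Assigned to: Mr. X”.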