bbee614137a8862d3ba6ea02dd4d6695.ppt

- Количество слайдов: 99

Chapter 3: Data Warehousing and OLAP Technology: An Overview • What is a data warehouse? • A multi-dimensional data model • Data warehouse architecture • Data warehouse implementation • From data warehousing to data mining 16 March 2018 1

Chapter 3: Data Warehousing and OLAP Technology: An Overview • What is a data warehouse? • A multi-dimensional data model • Data warehouse architecture • Data warehouse implementation • From data warehousing to data mining 16 March 2018 1

3. 1 What is Data Warehouse? • Defined in many different ways, but not rigorously. – A decision support database that is maintained separately from the organization’s operational database – Support information processing by providing a solid platform of consolidated, historical data for analysis. • “A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management’s decision-making process. ”—W. H. Inmon • Data warehousing: – The process of constructing and using data warehouses 16 March 2018 2

3. 1 What is Data Warehouse? • Defined in many different ways, but not rigorously. – A decision support database that is maintained separately from the organization’s operational database – Support information processing by providing a solid platform of consolidated, historical data for analysis. • “A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management’s decision-making process. ”—W. H. Inmon • Data warehousing: – The process of constructing and using data warehouses 16 March 2018 2

Data Warehouse—Subject-Oriented • Organized around major subjects, such as customer, product, sales • Focusing on the modeling and analysis of data for decision makers, not on daily operations or transaction processing • Provide a simple and concise view around particular subject issues by excluding data that are not useful in the decision support process 16 March 2018 3

Data Warehouse—Subject-Oriented • Organized around major subjects, such as customer, product, sales • Focusing on the modeling and analysis of data for decision makers, not on daily operations or transaction processing • Provide a simple and concise view around particular subject issues by excluding data that are not useful in the decision support process 16 March 2018 3

Data Warehouse—Integrated • Constructed by integrating multiple, heterogeneous data sources – relational databases, flat files, on-line transaction records • Data cleaning and data integration techniques are applied. – Ensure consistency in naming conventions, encoding structures, attribute measures, etc. among different data sources • E. g. , Hotel price: currency, tax, breakfast covered, etc. – When data is moved to the warehouse, it is converted. 16 March 2018 4

Data Warehouse—Integrated • Constructed by integrating multiple, heterogeneous data sources – relational databases, flat files, on-line transaction records • Data cleaning and data integration techniques are applied. – Ensure consistency in naming conventions, encoding structures, attribute measures, etc. among different data sources • E. g. , Hotel price: currency, tax, breakfast covered, etc. – When data is moved to the warehouse, it is converted. 16 March 2018 4

Data Warehouse—Time Variant • The time horizon for the data warehouse is significantly longer than that of operational systems – Operational database: current value data – Data warehouse data: provide information from a historical perspective (e. g. , past 5 -10 years) • Every key structure in the data warehouse – Contains an element of time, explicitly or implicitly – But the key of operational data may or may not contain “time element” 16 March 2018 5

Data Warehouse—Time Variant • The time horizon for the data warehouse is significantly longer than that of operational systems – Operational database: current value data – Data warehouse data: provide information from a historical perspective (e. g. , past 5 -10 years) • Every key structure in the data warehouse – Contains an element of time, explicitly or implicitly – But the key of operational data may or may not contain “time element” 16 March 2018 5

Data Warehouse—Nonvolatile • A physically separate store of data transformed from the operational environment • Operational update of data does not occur in the data warehouse environment – Does not require transaction processing, recovery, and concurrency control mechanisms – Requires only two operations in data accessing: • initial loading of data and access of data 16 March 2018 6

Data Warehouse—Nonvolatile • A physically separate store of data transformed from the operational environment • Operational update of data does not occur in the data warehouse environment – Does not require transaction processing, recovery, and concurrency control mechanisms – Requires only two operations in data accessing: • initial loading of data and access of data 16 March 2018 6

3. 2 Data Warehouse vs. Heterogeneous DBMS • Traditional heterogeneous DB integration: A query driven approach – Build wrappers/mediators on top of heterogeneous databases – When a query is posed to a client site, a meta-dictionary is used to translate the query into queries appropriate for individual heterogeneous sites involved, and the results are integrated into a global answer set – Complex information filtering, compete for resources • Data warehouse: update-driven, high performance – Information from heterogeneous sources is integrated in advance and stored in warehouses for direct query and analysis 16 March 2018 7

3. 2 Data Warehouse vs. Heterogeneous DBMS • Traditional heterogeneous DB integration: A query driven approach – Build wrappers/mediators on top of heterogeneous databases – When a query is posed to a client site, a meta-dictionary is used to translate the query into queries appropriate for individual heterogeneous sites involved, and the results are integrated into a global answer set – Complex information filtering, compete for resources • Data warehouse: update-driven, high performance – Information from heterogeneous sources is integrated in advance and stored in warehouses for direct query and analysis 16 March 2018 7

Data Warehouse vs. Operational DBMS • OLTP (on-line transaction processing) – Major task of traditional relational DBMS – Day-to-day operations: purchasing, inventory, banking, manufacturing, payroll, registration, accounting, etc. • OLAP (on-line analytical processing) – Major task of data warehouse system – Data analysis and decision making • Distinct features (OLTP vs. OLAP): – User and system orientation: customer vs. market – Data contents: current, detailed vs. historical, consolidated – Database design: ER + application vs. star + subject – View: current, local vs. evolutionary, integrated – Access patterns: update vs. read-only but complex queries 16 March 2018 8

Data Warehouse vs. Operational DBMS • OLTP (on-line transaction processing) – Major task of traditional relational DBMS – Day-to-day operations: purchasing, inventory, banking, manufacturing, payroll, registration, accounting, etc. • OLAP (on-line analytical processing) – Major task of data warehouse system – Data analysis and decision making • Distinct features (OLTP vs. OLAP): – User and system orientation: customer vs. market – Data contents: current, detailed vs. historical, consolidated – Database design: ER + application vs. star + subject – View: current, local vs. evolutionary, integrated – Access patterns: update vs. read-only but complex queries 16 March 2018 8

OLTP vs. OLAP 16 March 2018 9

OLTP vs. OLAP 16 March 2018 9

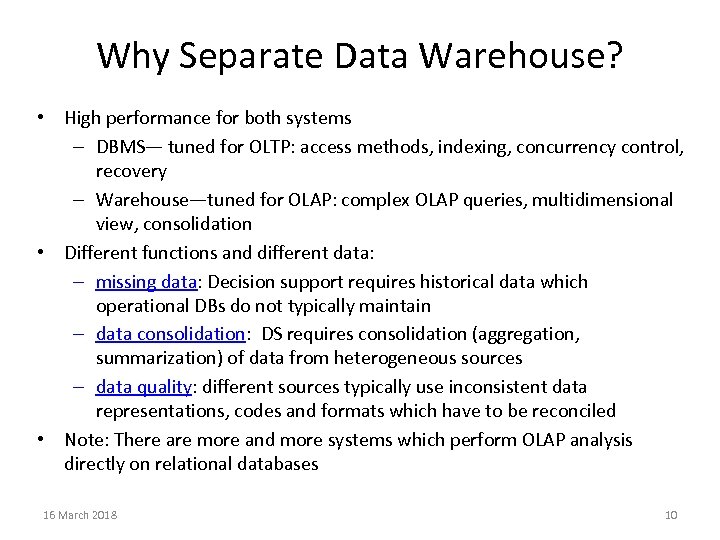

Why Separate Data Warehouse? • High performance for both systems – DBMS— tuned for OLTP: access methods, indexing, concurrency control, recovery – Warehouse—tuned for OLAP: complex OLAP queries, multidimensional view, consolidation • Different functions and different data: – missing data: Decision support requires historical data which operational DBs do not typically maintain – data consolidation: DS requires consolidation (aggregation, summarization) of data from heterogeneous sources – data quality: different sources typically use inconsistent data representations, codes and formats which have to be reconciled • Note: There are more and more systems which perform OLAP analysis directly on relational databases 16 March 2018 10

Why Separate Data Warehouse? • High performance for both systems – DBMS— tuned for OLTP: access methods, indexing, concurrency control, recovery – Warehouse—tuned for OLAP: complex OLAP queries, multidimensional view, consolidation • Different functions and different data: – missing data: Decision support requires historical data which operational DBs do not typically maintain – data consolidation: DS requires consolidation (aggregation, summarization) of data from heterogeneous sources – data quality: different sources typically use inconsistent data representations, codes and formats which have to be reconciled • Note: There are more and more systems which perform OLAP analysis directly on relational databases 16 March 2018 10

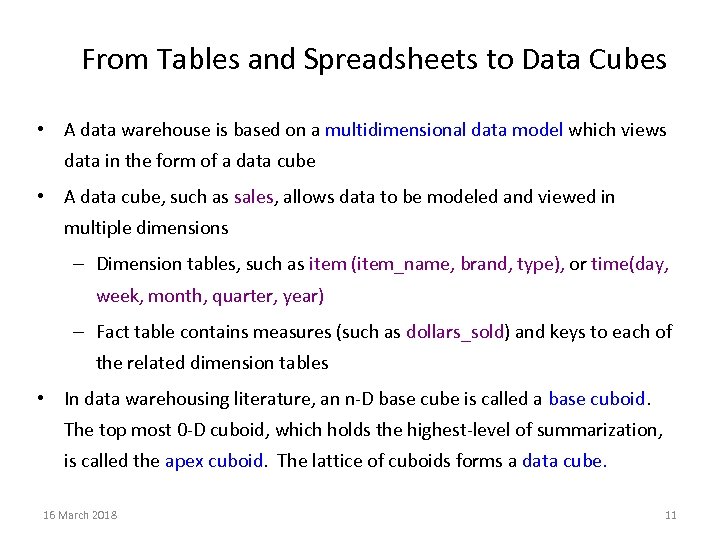

From Tables and Spreadsheets to Data Cubes • A data warehouse is based on a multidimensional data model which views data in the form of a data cube • A data cube, such as sales, allows data to be modeled and viewed in multiple dimensions – Dimension tables, such as item (item_name, brand, type), or time(day, week, month, quarter, year) – Fact table contains measures (such as dollars_sold) and keys to each of the related dimension tables • In data warehousing literature, an n-D base cube is called a base cuboid. The top most 0 -D cuboid, which holds the highest-level of summarization, is called the apex cuboid. The lattice of cuboids forms a data cube. 16 March 2018 11

From Tables and Spreadsheets to Data Cubes • A data warehouse is based on a multidimensional data model which views data in the form of a data cube • A data cube, such as sales, allows data to be modeled and viewed in multiple dimensions – Dimension tables, such as item (item_name, brand, type), or time(day, week, month, quarter, year) – Fact table contains measures (such as dollars_sold) and keys to each of the related dimension tables • In data warehousing literature, an n-D base cube is called a base cuboid. The top most 0 -D cuboid, which holds the highest-level of summarization, is called the apex cuboid. The lattice of cuboids forms a data cube. 16 March 2018 11

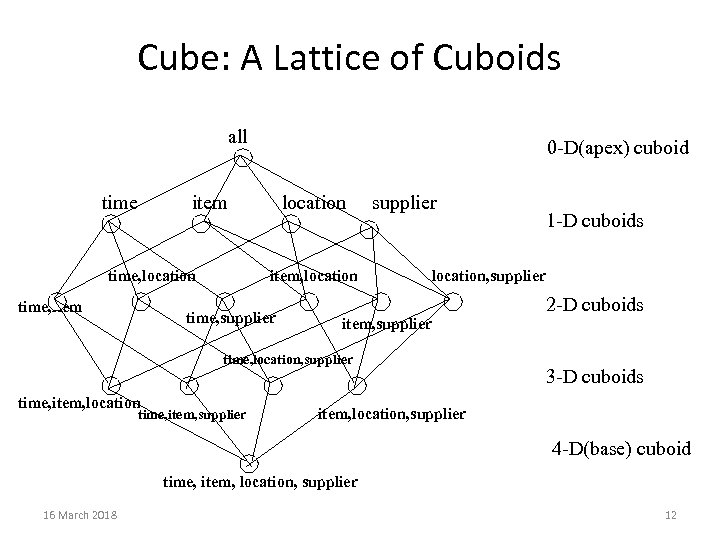

Cube: A Lattice of Cuboids all time 0 -D(apex) cuboid item time, location time, item location item, location time, supplier location, supplier item, supplier time, location, supplier time, item, location time, item, supplier 1 -D cuboids 2 -D cuboids 3 -D cuboids item, location, supplier 4 -D(base) cuboid time, item, location, supplier 16 March 2018 12

Cube: A Lattice of Cuboids all time 0 -D(apex) cuboid item time, location time, item location item, location time, supplier location, supplier item, supplier time, location, supplier time, item, location time, item, supplier 1 -D cuboids 2 -D cuboids 3 -D cuboids item, location, supplier 4 -D(base) cuboid time, item, location, supplier 16 March 2018 12

3. 3 Conceptual Modeling of Data Warehouses • Modeling data warehouses: dimensions & measures – Star schema: A fact table in the middle connected to a set of dimension tables – Snowflake schema: A refinement of star schema where some dimensional hierarchy is normalized into a set of smaller dimension tables, forming a shape similar to snowflake – Fact constellations: Multiple fact tables share dimension tables, viewed as a collection of stars, therefore called galaxy schema or fact constellation 16 March 2018 13

3. 3 Conceptual Modeling of Data Warehouses • Modeling data warehouses: dimensions & measures – Star schema: A fact table in the middle connected to a set of dimension tables – Snowflake schema: A refinement of star schema where some dimensional hierarchy is normalized into a set of smaller dimension tables, forming a shape similar to snowflake – Fact constellations: Multiple fact tables share dimension tables, viewed as a collection of stars, therefore called galaxy schema or fact constellation 16 March 2018 13

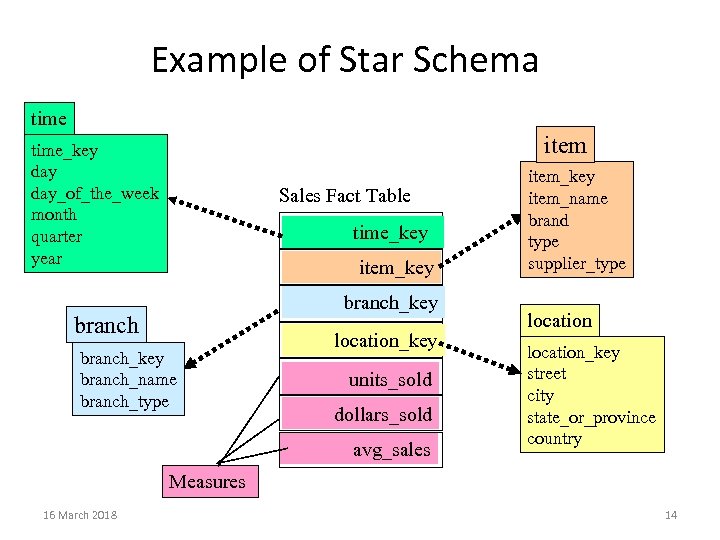

Example of Star Schema time item time_key day_of_the_week month quarter year Sales Fact Table time_key item_key branch_key branch_name branch_type location_key units_sold dollars_sold avg_sales item_key item_name brand type supplier_type location_key street city state_or_province country Measures 16 March 2018 14

Example of Star Schema time item time_key day_of_the_week month quarter year Sales Fact Table time_key item_key branch_key branch_name branch_type location_key units_sold dollars_sold avg_sales item_key item_name brand type supplier_type location_key street city state_or_province country Measures 16 March 2018 14

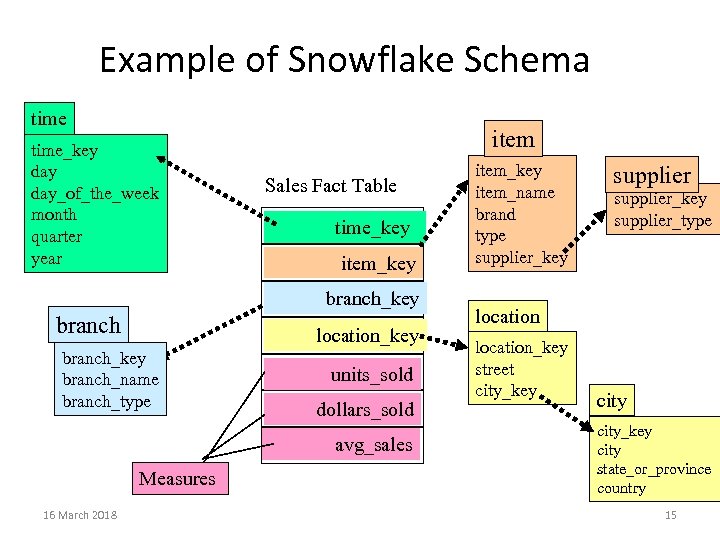

Example of Snowflake Schema time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key branch location_key branch_name branch_type units_sold dollars_sold avg_sales Measures 16 March 2018 item_key item_name brand type supplier_key supplier_type location_key street city_key city state_or_province country 15

Example of Snowflake Schema time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key branch location_key branch_name branch_type units_sold dollars_sold avg_sales Measures 16 March 2018 item_key item_name brand type supplier_key supplier_type location_key street city_key city state_or_province country 15

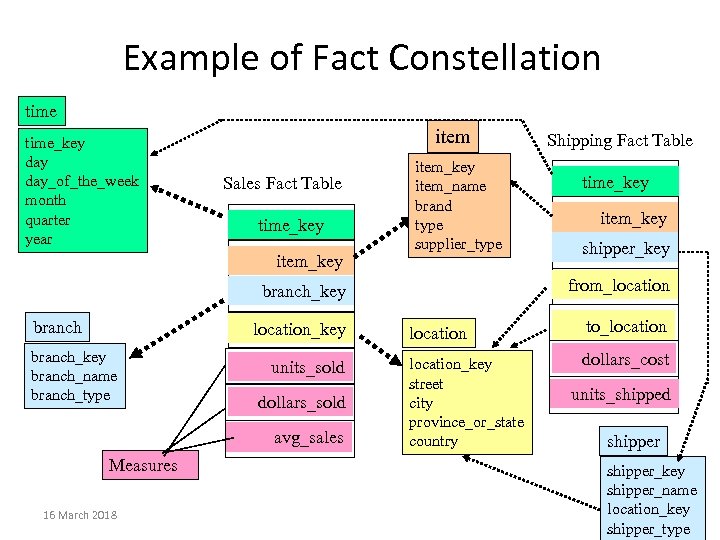

Example of Fact Constellation time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key item_name brand type supplier_type location_key branch_name branch_type units_sold dollars_sold avg_sales Measures 16 March 2018 time_key item_key shipper_key from_location branch_key branch Shipping Fact Table location to_location_key street city province_or_state country dollars_cost units_shipped shipper_key shipper_name location_key 16 shipper_type

Example of Fact Constellation time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key item_name brand type supplier_type location_key branch_name branch_type units_sold dollars_sold avg_sales Measures 16 March 2018 time_key item_key shipper_key from_location branch_key branch Shipping Fact Table location to_location_key street city province_or_state country dollars_cost units_shipped shipper_key shipper_name location_key 16 shipper_type

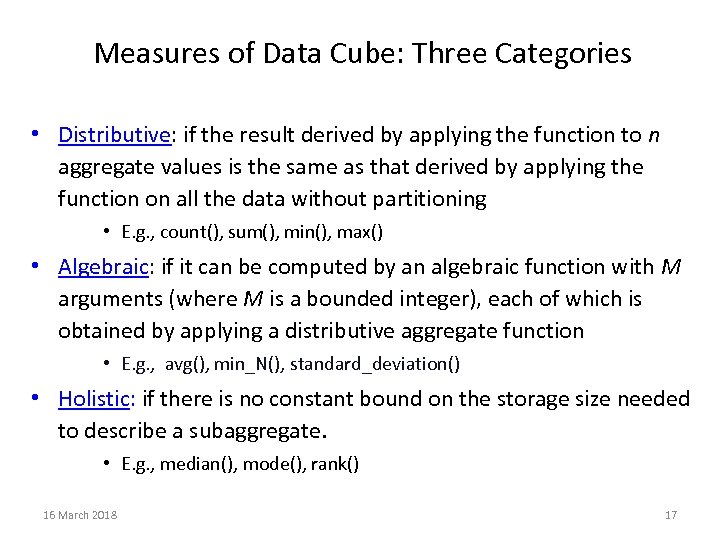

Measures of Data Cube: Three Categories • Distributive: if the result derived by applying the function to n aggregate values is the same as that derived by applying the function on all the data without partitioning • E. g. , count(), sum(), min(), max() • Algebraic: if it can be computed by an algebraic function with M arguments (where M is a bounded integer), each of which is obtained by applying a distributive aggregate function • E. g. , avg(), min_N(), standard_deviation() • Holistic: if there is no constant bound on the storage size needed to describe a subaggregate. • E. g. , median(), mode(), rank() 16 March 2018 17

Measures of Data Cube: Three Categories • Distributive: if the result derived by applying the function to n aggregate values is the same as that derived by applying the function on all the data without partitioning • E. g. , count(), sum(), min(), max() • Algebraic: if it can be computed by an algebraic function with M arguments (where M is a bounded integer), each of which is obtained by applying a distributive aggregate function • E. g. , avg(), min_N(), standard_deviation() • Holistic: if there is no constant bound on the storage size needed to describe a subaggregate. • E. g. , median(), mode(), rank() 16 March 2018 17

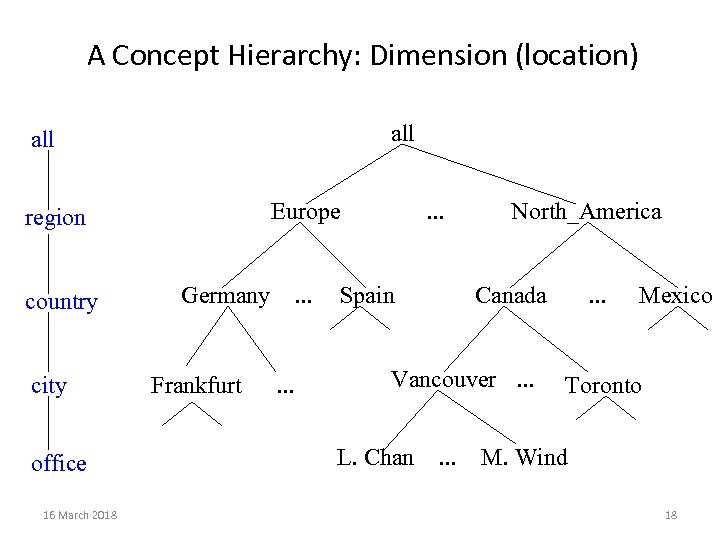

A Concept Hierarchy: Dimension (location) all Europe region country city office 16 March 2018 Germany Frankfurt . . Spain North_America Canada Vancouver. . . L. Chan . . . Mexico Toronto M. Wind 18

A Concept Hierarchy: Dimension (location) all Europe region country city office 16 March 2018 Germany Frankfurt . . Spain North_America Canada Vancouver. . . L. Chan . . . Mexico Toronto M. Wind 18

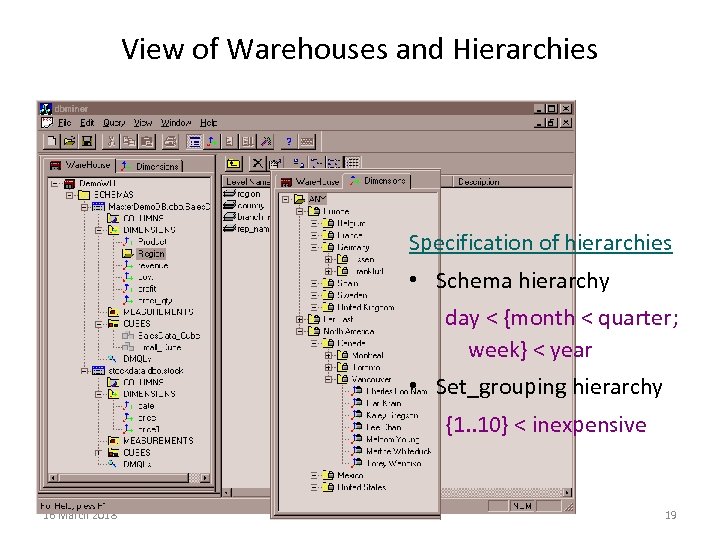

View of Warehouses and Hierarchies Specification of hierarchies • Schema hierarchy day < {month < quarter; week} < year • Set_grouping hierarchy {1. . 10} < inexpensive 16 March 2018 19

View of Warehouses and Hierarchies Specification of hierarchies • Schema hierarchy day < {month < quarter; week} < year • Set_grouping hierarchy {1. . 10} < inexpensive 16 March 2018 19

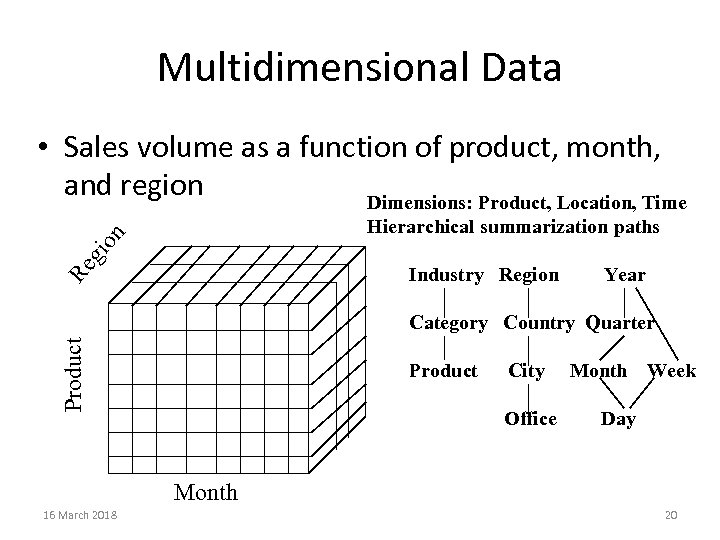

Multidimensional Data • Sales volume as a function of product, month, and region Dimensions: Product, Location, Time gi on Hierarchical summarization paths Re Industry Region Year Product Category Country Quarter Product City Office Month Week Day Month 16 March 2018 20

Multidimensional Data • Sales volume as a function of product, month, and region Dimensions: Product, Location, Time gi on Hierarchical summarization paths Re Industry Region Year Product Category Country Quarter Product City Office Month Week Day Month 16 March 2018 20

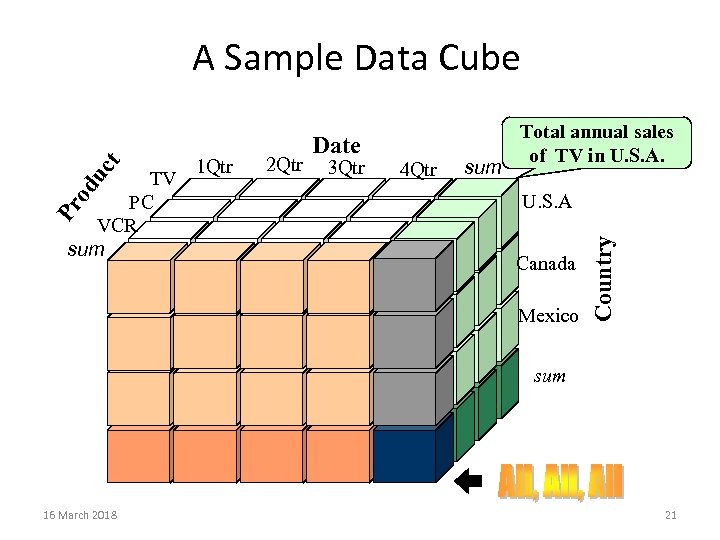

Pr od TV PC VCR sum 1 Qtr 2 Qtr Date 3 Qtr 4 Qtr sum Total annual sales of TV in U. S. A Canada Mexico Country uc t A Sample Data Cube sum 16 March 2018 21

Pr od TV PC VCR sum 1 Qtr 2 Qtr Date 3 Qtr 4 Qtr sum Total annual sales of TV in U. S. A Canada Mexico Country uc t A Sample Data Cube sum 16 March 2018 21

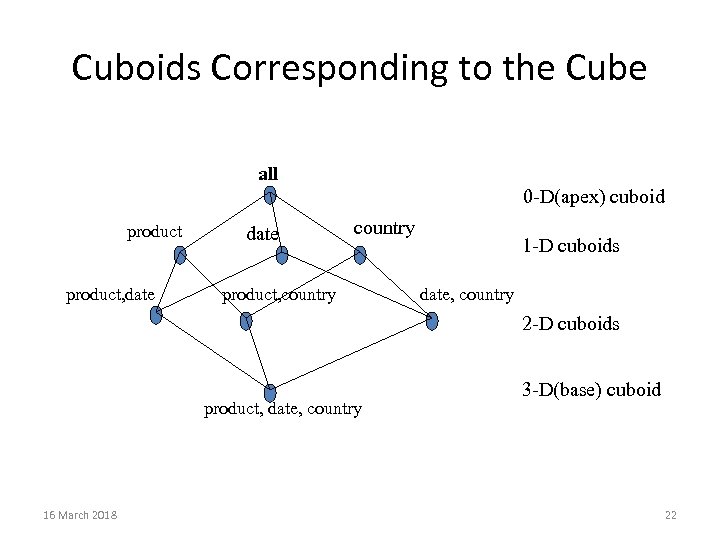

Cuboids Corresponding to the Cube all 0 -D(apex) cuboid product, date country product, country 1 -D cuboids date, country 2 -D cuboids product, date, country 16 March 2018 3 -D(base) cuboid 22

Cuboids Corresponding to the Cube all 0 -D(apex) cuboid product, date country product, country 1 -D cuboids date, country 2 -D cuboids product, date, country 16 March 2018 3 -D(base) cuboid 22

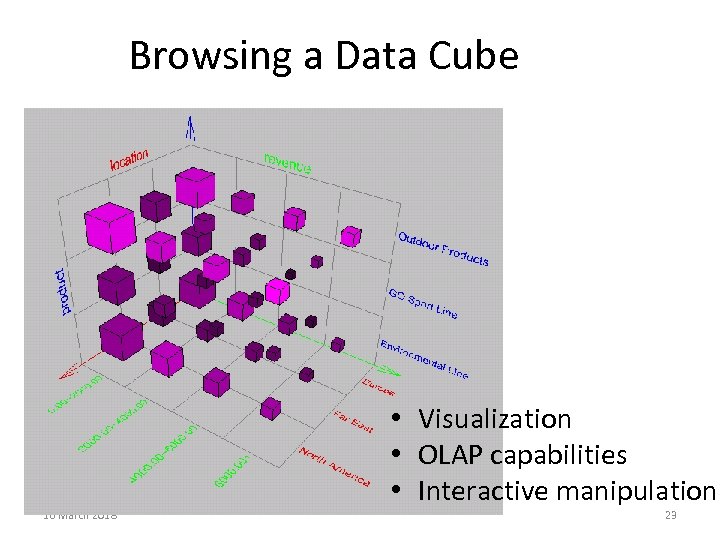

Browsing a Data Cube 16 March 2018 • Visualization • OLAP capabilities • Interactive manipulation 23

Browsing a Data Cube 16 March 2018 • Visualization • OLAP capabilities • Interactive manipulation 23

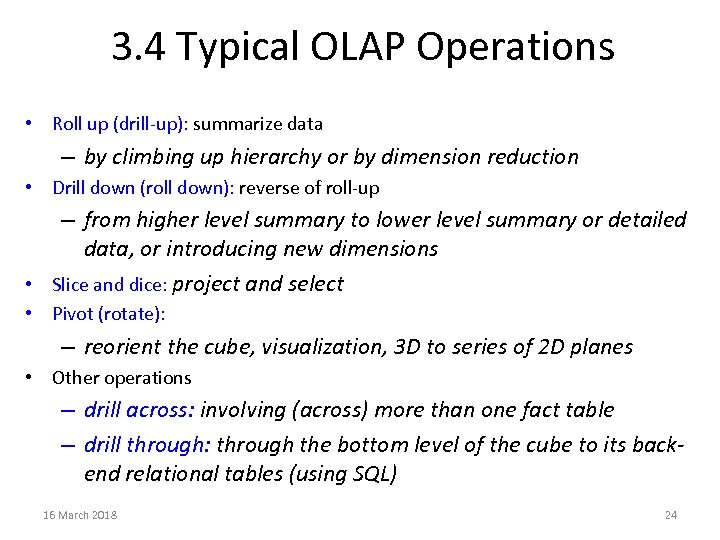

3. 4 Typical OLAP Operations • Roll up (drill-up): summarize data – by climbing up hierarchy or by dimension reduction • Drill down (roll down): reverse of roll-up – from higher level summary to lower level summary or detailed data, or introducing new dimensions • Slice and dice: project and select • Pivot (rotate): – reorient the cube, visualization, 3 D to series of 2 D planes • Other operations – drill across: involving (across) more than one fact table – drill through: through the bottom level of the cube to its backend relational tables (using SQL) 16 March 2018 24

3. 4 Typical OLAP Operations • Roll up (drill-up): summarize data – by climbing up hierarchy or by dimension reduction • Drill down (roll down): reverse of roll-up – from higher level summary to lower level summary or detailed data, or introducing new dimensions • Slice and dice: project and select • Pivot (rotate): – reorient the cube, visualization, 3 D to series of 2 D planes • Other operations – drill across: involving (across) more than one fact table – drill through: through the bottom level of the cube to its backend relational tables (using SQL) 16 March 2018 24

Fig. 3. 10 Typical OLAP Operations 16 March 2018 25

Fig. 3. 10 Typical OLAP Operations 16 March 2018 25

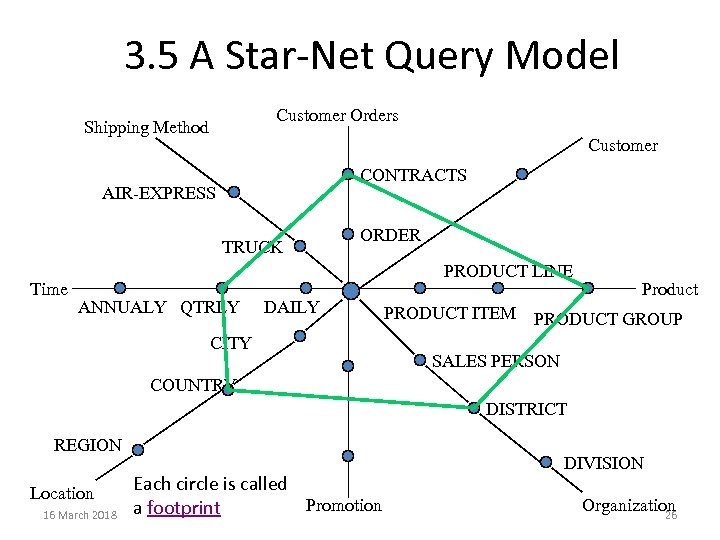

3. 5 A Star-Net Query Model Customer Orders Shipping Method Customer CONTRACTS AIR-EXPRESS TRUCK Time ORDER PRODUCT LINE ANNUALY QTRLY DAILY CITY Product PRODUCT ITEM PRODUCT GROUP SALES PERSON COUNTRY DISTRICT REGION Location 16 March 2018 Each circle is called Promotion a footprint DIVISION Organization 26

3. 5 A Star-Net Query Model Customer Orders Shipping Method Customer CONTRACTS AIR-EXPRESS TRUCK Time ORDER PRODUCT LINE ANNUALY QTRLY DAILY CITY Product PRODUCT ITEM PRODUCT GROUP SALES PERSON COUNTRY DISTRICT REGION Location 16 March 2018 Each circle is called Promotion a footprint DIVISION Organization 26

3. 6 Design of Data Warehouse: A Business Analysis Framework • Four views regarding the design of a data warehouse – Top-down view • allows selection of the relevant information necessary for the data warehouse – Data source view • exposes the information being captured, stored, and managed by operational systems – Data warehouse view • consists of fact tables and dimension tables – Business query view • sees the perspectives of data in the warehouse from the view of end-user 16 March 2018 27

3. 6 Design of Data Warehouse: A Business Analysis Framework • Four views regarding the design of a data warehouse – Top-down view • allows selection of the relevant information necessary for the data warehouse – Data source view • exposes the information being captured, stored, and managed by operational systems – Data warehouse view • consists of fact tables and dimension tables – Business query view • sees the perspectives of data in the warehouse from the view of end-user 16 March 2018 27

3. 6. 1 Data Warehouse Design Process • Top-down, bottom-up approaches or a combination of both – Top-down: Starts with overall design and planning (mature) – Bottom-up: Starts with experiments and prototypes (rapid) • From software engineering point of view – Waterfall: structured and systematic analysis at each step before proceeding to the next – Spiral: rapid generation of increasingly functional systems, short turn around time, quick turn around • Typical data warehouse design process – Choose a business process to model, e. g. , orders, invoices, etc. – Choose the grain (atomic level of data) of the business process – Choose the dimensions that will apply to each fact table record – Choose the measure that will populate each fact table record 16 March 2018 28

3. 6. 1 Data Warehouse Design Process • Top-down, bottom-up approaches or a combination of both – Top-down: Starts with overall design and planning (mature) – Bottom-up: Starts with experiments and prototypes (rapid) • From software engineering point of view – Waterfall: structured and systematic analysis at each step before proceeding to the next – Spiral: rapid generation of increasingly functional systems, short turn around time, quick turn around • Typical data warehouse design process – Choose a business process to model, e. g. , orders, invoices, etc. – Choose the grain (atomic level of data) of the business process – Choose the dimensions that will apply to each fact table record – Choose the measure that will populate each fact table record 16 March 2018 28

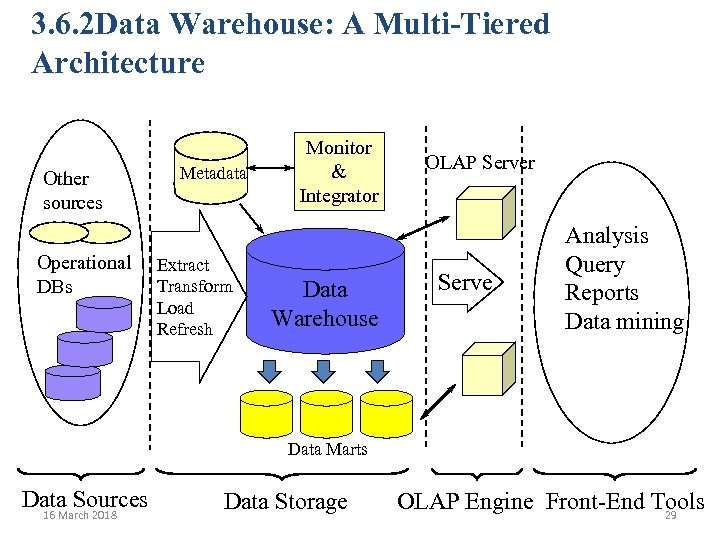

3. 6. 2 Data Warehouse: A Multi-Tiered Architecture Other sources Operational DBs Metadata Extract Transform Load Refresh Monitor & Integrator Data Warehouse OLAP Server Serve Analysis Query Reports Data mining Data Marts Data Sources 16 March 2018 Data Storage OLAP Engine Front-End Tools 29

3. 6. 2 Data Warehouse: A Multi-Tiered Architecture Other sources Operational DBs Metadata Extract Transform Load Refresh Monitor & Integrator Data Warehouse OLAP Server Serve Analysis Query Reports Data mining Data Marts Data Sources 16 March 2018 Data Storage OLAP Engine Front-End Tools 29

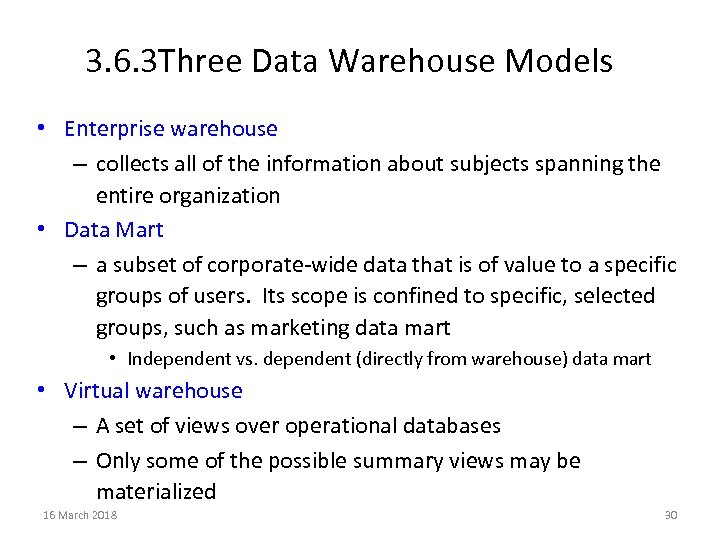

3. 6. 3 Three Data Warehouse Models • Enterprise warehouse – collects all of the information about subjects spanning the entire organization • Data Mart – a subset of corporate-wide data that is of value to a specific groups of users. Its scope is confined to specific, selected groups, such as marketing data mart • Independent vs. dependent (directly from warehouse) data mart • Virtual warehouse – A set of views over operational databases – Only some of the possible summary views may be materialized 16 March 2018 30

3. 6. 3 Three Data Warehouse Models • Enterprise warehouse – collects all of the information about subjects spanning the entire organization • Data Mart – a subset of corporate-wide data that is of value to a specific groups of users. Its scope is confined to specific, selected groups, such as marketing data mart • Independent vs. dependent (directly from warehouse) data mart • Virtual warehouse – A set of views over operational databases – Only some of the possible summary views may be materialized 16 March 2018 30

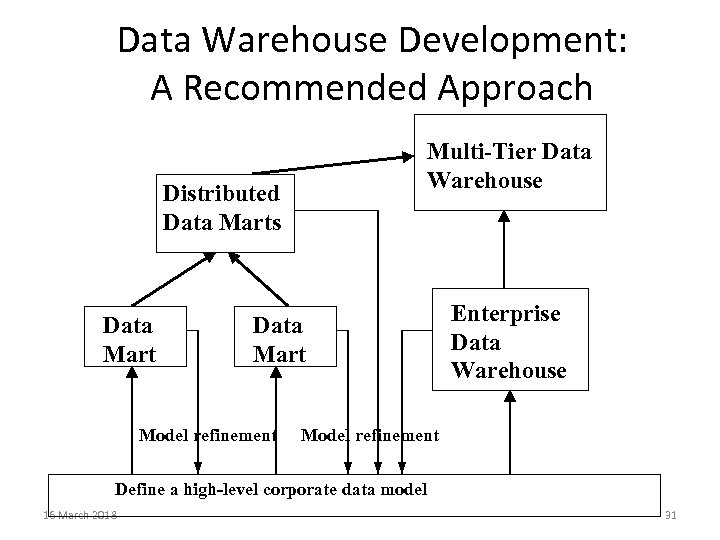

Data Warehouse Development: A Recommended Approach Multi-Tier Data Warehouse Distributed Data Marts Data Mart Model refinement Enterprise Data Warehouse Model refinement Define a high-level corporate data model 16 March 2018 31

Data Warehouse Development: A Recommended Approach Multi-Tier Data Warehouse Distributed Data Marts Data Mart Model refinement Enterprise Data Warehouse Model refinement Define a high-level corporate data model 16 March 2018 31

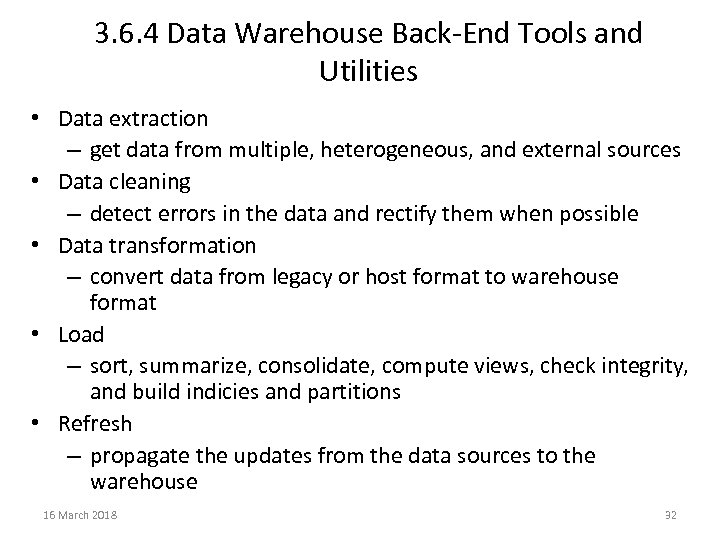

3. 6. 4 Data Warehouse Back-End Tools and Utilities • Data extraction – get data from multiple, heterogeneous, and external sources • Data cleaning – detect errors in the data and rectify them when possible • Data transformation – convert data from legacy or host format to warehouse format • Load – sort, summarize, consolidate, compute views, check integrity, and build indicies and partitions • Refresh – propagate the updates from the data sources to the warehouse 16 March 2018 32

3. 6. 4 Data Warehouse Back-End Tools and Utilities • Data extraction – get data from multiple, heterogeneous, and external sources • Data cleaning – detect errors in the data and rectify them when possible • Data transformation – convert data from legacy or host format to warehouse format • Load – sort, summarize, consolidate, compute views, check integrity, and build indicies and partitions • Refresh – propagate the updates from the data sources to the warehouse 16 March 2018 32

3. 7 Metadata Repository • Meta data is the data defining warehouse objects. It stores: • Description of the structure of the data warehouse – schema, view, dimensions, hierarchies, derived data defn, data mart locations and contents • Operational meta-data – data lineage (history of migrated data and transformation path), currency of data (active, archived, or purged), monitoring information (warehouse usage statistics, error reports, audit trails) • The algorithms used for summarization • The mapping from operational environment to the data warehouse • Data related to system performance – warehouse schema, view and derived data definitions • Business data – business terms and definitions, ownership of data, charging policies 16 March 2018 33

3. 7 Metadata Repository • Meta data is the data defining warehouse objects. It stores: • Description of the structure of the data warehouse – schema, view, dimensions, hierarchies, derived data defn, data mart locations and contents • Operational meta-data – data lineage (history of migrated data and transformation path), currency of data (active, archived, or purged), monitoring information (warehouse usage statistics, error reports, audit trails) • The algorithms used for summarization • The mapping from operational environment to the data warehouse • Data related to system performance – warehouse schema, view and derived data definitions • Business data – business terms and definitions, ownership of data, charging policies 16 March 2018 33

3. 8 OLAP Server Architectures • Relational OLAP (ROLAP) – Use relational or extended-relational DBMS to store and manage warehouse data and OLAP middle ware – Include optimization of DBMS backend, implementation of aggregation navigation logic, and additional tools and services – Greater scalability • Multidimensional OLAP (MOLAP) – Sparse array-based multidimensional storage engine – Fast indexing to pre-computed summarized data • Hybrid OLAP (HOLAP) (e. g. , Microsoft SQLServer) – Flexibility, e. g. , low level: relational, high-level: array • Specialized SQL servers (e. g. , Redbricks) – Specialized support for SQL queries over star/snowflake schemas 16 March 2018 34

3. 8 OLAP Server Architectures • Relational OLAP (ROLAP) – Use relational or extended-relational DBMS to store and manage warehouse data and OLAP middle ware – Include optimization of DBMS backend, implementation of aggregation navigation logic, and additional tools and services – Greater scalability • Multidimensional OLAP (MOLAP) – Sparse array-based multidimensional storage engine – Fast indexing to pre-computed summarized data • Hybrid OLAP (HOLAP) (e. g. , Microsoft SQLServer) – Flexibility, e. g. , low level: relational, high-level: array • Specialized SQL servers (e. g. , Redbricks) – Specialized support for SQL queries over star/snowflake schemas 16 March 2018 34

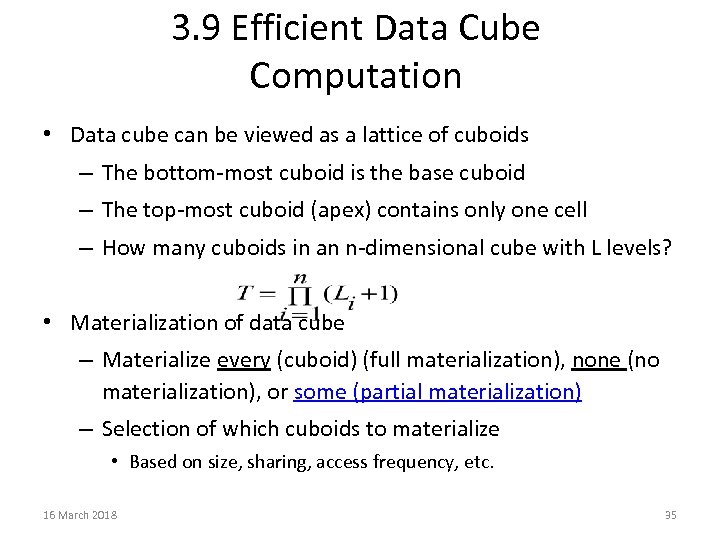

3. 9 Efficient Data Cube Computation • Data cube can be viewed as a lattice of cuboids – The bottom-most cuboid is the base cuboid – The top-most cuboid (apex) contains only one cell – How many cuboids in an n-dimensional cube with L levels? • Materialization of data cube – Materialize every (cuboid) (full materialization), none (no materialization), or some (partial materialization) – Selection of which cuboids to materialize • Based on size, sharing, access frequency, etc. 16 March 2018 35

3. 9 Efficient Data Cube Computation • Data cube can be viewed as a lattice of cuboids – The bottom-most cuboid is the base cuboid – The top-most cuboid (apex) contains only one cell – How many cuboids in an n-dimensional cube with L levels? • Materialization of data cube – Materialize every (cuboid) (full materialization), none (no materialization), or some (partial materialization) – Selection of which cuboids to materialize • Based on size, sharing, access frequency, etc. 16 March 2018 35

![Cube Operation • Cube definition and computation in DMQL define cube sales[item, city, year]: Cube Operation • Cube definition and computation in DMQL define cube sales[item, city, year]:](https://present5.com/presentation/bbee614137a8862d3ba6ea02dd4d6695/image-36.jpg) Cube Operation • Cube definition and computation in DMQL define cube sales[item, city, year]: sum(sales_in_dollars) compute cube sales • Transform it into a SQL-like language (with a new operator cube by, introduced by Gray et al. ’ 96) () SELECT item, city, year, SUM (amount) FROM SALES (city) (item) (year) CUBE BY item, city, year • Need compute the following Group-Bys (city, item) (city, year) (item, year) (date, product, customer), (date, product), (date, customer), (product, customer), (date), (product), (customer) (city, item, year) () 16 March 2018 36

Cube Operation • Cube definition and computation in DMQL define cube sales[item, city, year]: sum(sales_in_dollars) compute cube sales • Transform it into a SQL-like language (with a new operator cube by, introduced by Gray et al. ’ 96) () SELECT item, city, year, SUM (amount) FROM SALES (city) (item) (year) CUBE BY item, city, year • Need compute the following Group-Bys (city, item) (city, year) (item, year) (date, product, customer), (date, product), (date, customer), (product, customer), (date), (product), (customer) (city, item, year) () 16 March 2018 36

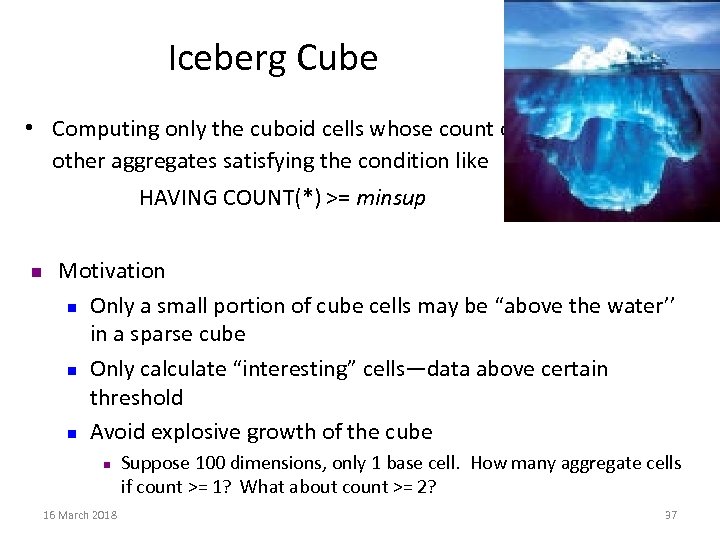

Iceberg Cube • Computing only the cuboid cells whose count or other aggregates satisfying the condition like HAVING COUNT(*) >= minsup n Motivation n Only a small portion of cube cells may be “above the water’’ in a sparse cube n Only calculate “interesting” cells—data above certain threshold n Avoid explosive growth of the cube n 16 March 2018 Suppose 100 dimensions, only 1 base cell. How many aggregate cells if count >= 1? What about count >= 2? 37

Iceberg Cube • Computing only the cuboid cells whose count or other aggregates satisfying the condition like HAVING COUNT(*) >= minsup n Motivation n Only a small portion of cube cells may be “above the water’’ in a sparse cube n Only calculate “interesting” cells—data above certain threshold n Avoid explosive growth of the cube n 16 March 2018 Suppose 100 dimensions, only 1 base cell. How many aggregate cells if count >= 1? What about count >= 2? 37

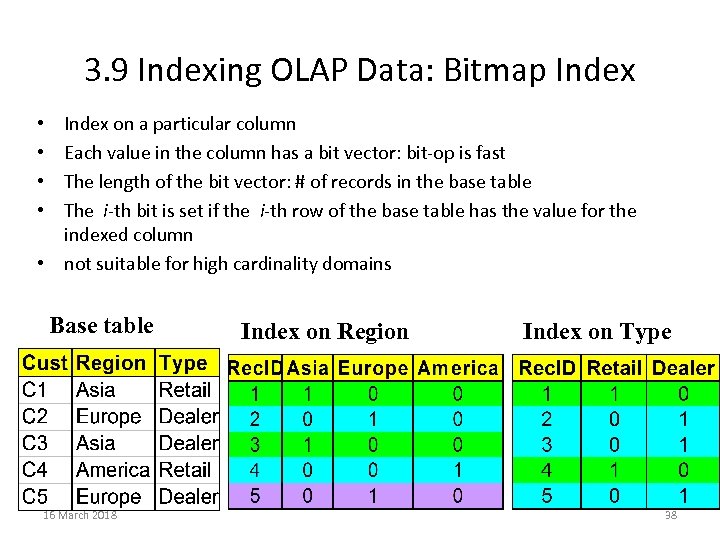

3. 9 Indexing OLAP Data: Bitmap Index on a particular column Each value in the column has a bit vector: bit-op is fast The length of the bit vector: # of records in the base table The i-th bit is set if the i-th row of the base table has the value for the indexed column • not suitable for high cardinality domains • • Base table 16 March 2018 Index on Region Index on Type 38

3. 9 Indexing OLAP Data: Bitmap Index on a particular column Each value in the column has a bit vector: bit-op is fast The length of the bit vector: # of records in the base table The i-th bit is set if the i-th row of the base table has the value for the indexed column • not suitable for high cardinality domains • • Base table 16 March 2018 Index on Region Index on Type 38

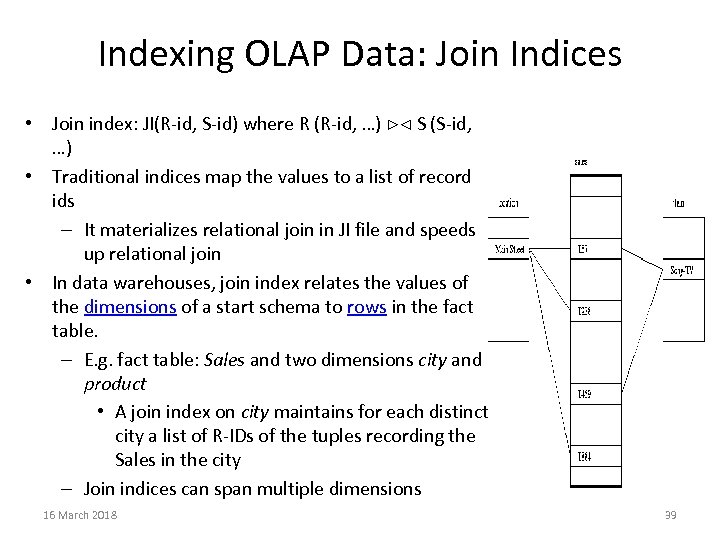

Indexing OLAP Data: Join Indices • Join index: JI(R-id, S-id) where R (R-id, …) S (S-id, …) • Traditional indices map the values to a list of record ids – It materializes relational join in JI file and speeds up relational join • In data warehouses, join index relates the values of the dimensions of a start schema to rows in the fact table. – E. g. fact table: Sales and two dimensions city and product • A join index on city maintains for each distinct city a list of R-IDs of the tuples recording the Sales in the city – Join indices can span multiple dimensions 16 March 2018 39

Indexing OLAP Data: Join Indices • Join index: JI(R-id, S-id) where R (R-id, …) S (S-id, …) • Traditional indices map the values to a list of record ids – It materializes relational join in JI file and speeds up relational join • In data warehouses, join index relates the values of the dimensions of a start schema to rows in the fact table. – E. g. fact table: Sales and two dimensions city and product • A join index on city maintains for each distinct city a list of R-IDs of the tuples recording the Sales in the city – Join indices can span multiple dimensions 16 March 2018 39

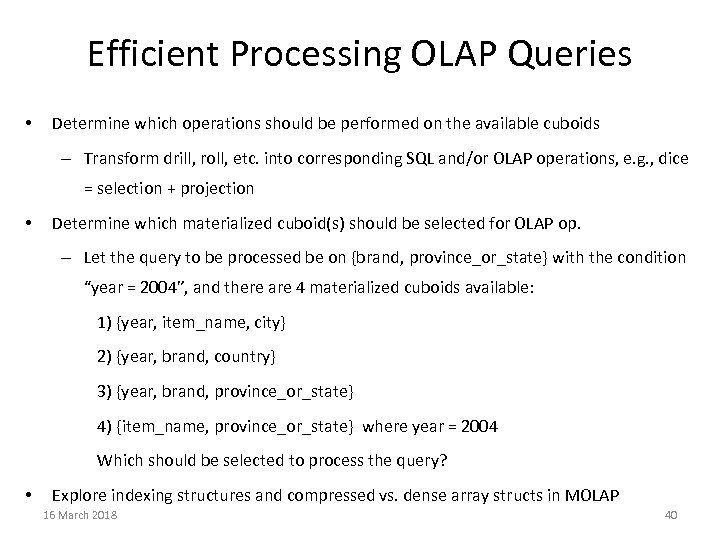

Efficient Processing OLAP Queries • Determine which operations should be performed on the available cuboids – Transform drill, roll, etc. into corresponding SQL and/or OLAP operations, e. g. , dice = selection + projection • Determine which materialized cuboid(s) should be selected for OLAP op. – Let the query to be processed be on {brand, province_or_state} with the condition “year = 2004”, and there are 4 materialized cuboids available: 1) {year, item_name, city} 2) {year, brand, country} 3) {year, brand, province_or_state} 4) {item_name, province_or_state} where year = 2004 Which should be selected to process the query? • Explore indexing structures and compressed vs. dense array structs in MOLAP 16 March 2018 40

Efficient Processing OLAP Queries • Determine which operations should be performed on the available cuboids – Transform drill, roll, etc. into corresponding SQL and/or OLAP operations, e. g. , dice = selection + projection • Determine which materialized cuboid(s) should be selected for OLAP op. – Let the query to be processed be on {brand, province_or_state} with the condition “year = 2004”, and there are 4 materialized cuboids available: 1) {year, item_name, city} 2) {year, brand, country} 3) {year, brand, province_or_state} 4) {item_name, province_or_state} where year = 2004 Which should be selected to process the query? • Explore indexing structures and compressed vs. dense array structs in MOLAP 16 March 2018 40

Chapter 3: Data Warehousing and OLAP Technology: An Overview • What is a data warehouse? • A multi-dimensional data model • Data warehouse architecture • Data warehouse implementation • From data warehousing to data mining 16 March 2018 41

Chapter 3: Data Warehousing and OLAP Technology: An Overview • What is a data warehouse? • A multi-dimensional data model • Data warehouse architecture • Data warehouse implementation • From data warehousing to data mining 16 March 2018 41

Data Warehouse Usage • Three kinds of data warehouse applications – Information processing • supports querying, basic statistical analysis, and reporting using crosstabs, tables, charts and graphs – Analytical processing • multidimensional analysis of data warehouse data • supports basic OLAP operations, slice-dice, drilling, pivoting – Data mining • knowledge discovery from hidden patterns • supports associations, constructing analytical models, performing classification and prediction, and presenting the mining results using visualization tools 16 March 2018 42

Data Warehouse Usage • Three kinds of data warehouse applications – Information processing • supports querying, basic statistical analysis, and reporting using crosstabs, tables, charts and graphs – Analytical processing • multidimensional analysis of data warehouse data • supports basic OLAP operations, slice-dice, drilling, pivoting – Data mining • knowledge discovery from hidden patterns • supports associations, constructing analytical models, performing classification and prediction, and presenting the mining results using visualization tools 16 March 2018 42

3. 10 From On-Line Analytical Processing (OLAP) to On Line Analytical Mining (OLAM) • Why online analytical mining? – High quality of data in data warehouses • DW contains integrated, consistent, cleaned data – Available information processing structure surrounding data warehouses • ODBC, OLEDB, Web accessing, service facilities, reporting and OLAP tools – OLAP-based exploratory data analysis • Mining with drilling, dicing, pivoting, etc. – On-line selection of data mining functions • Integration and swapping of multiple mining functions, algorithms, and tasks 16 March 2018 43

3. 10 From On-Line Analytical Processing (OLAP) to On Line Analytical Mining (OLAM) • Why online analytical mining? – High quality of data in data warehouses • DW contains integrated, consistent, cleaned data – Available information processing structure surrounding data warehouses • ODBC, OLEDB, Web accessing, service facilities, reporting and OLAP tools – OLAP-based exploratory data analysis • Mining with drilling, dicing, pivoting, etc. – On-line selection of data mining functions • Integration and swapping of multiple mining functions, algorithms, and tasks 16 March 2018 43

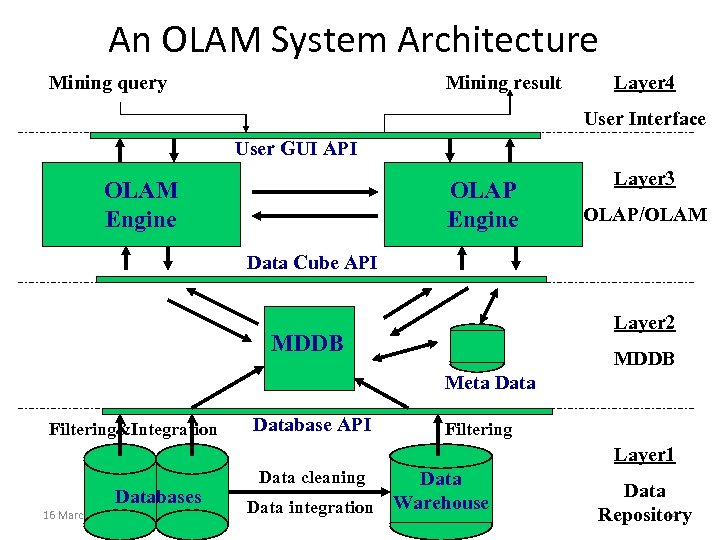

An OLAM System Architecture Mining query Mining result Layer 4 User Interface User GUI API OLAM Engine OLAP Engine Layer 3 OLAP/OLAM Data Cube API Layer 2 MDDB Meta Data Filtering&Integration Database API Filtering Layer 1 Databases 16 March 2018 Data cleaning Data integration Warehouse Data 44 Repository

An OLAM System Architecture Mining query Mining result Layer 4 User Interface User GUI API OLAM Engine OLAP Engine Layer 3 OLAP/OLAM Data Cube API Layer 2 MDDB Meta Data Filtering&Integration Database API Filtering Layer 1 Databases 16 March 2018 Data cleaning Data integration Warehouse Data 44 Repository

Chapter 4

Chapter 4

Chapter 4: Mining Frequent Patterns, Association and Correlations • Basic concepts • Efficient and scalable frequent itemset mining methods • Mining various kinds of association rules • From association mining to correlation analysis • Constraint-based association mining • Summary 16 March 2018 Data Mining: Concepts and Techniques 46

Chapter 4: Mining Frequent Patterns, Association and Correlations • Basic concepts • Efficient and scalable frequent itemset mining methods • Mining various kinds of association rules • From association mining to correlation analysis • Constraint-based association mining • Summary 16 March 2018 Data Mining: Concepts and Techniques 46

4. 1. 1 What Is Frequent Pattern Analysis? • Frequent pattern: a pattern (a set of items, subsequences, substructures, etc. ) that occurs frequently in a data set • First proposed by Agrawal, Imielinski, and Swami [AIS 93] in the context of frequent itemsets and association rule mining • Motivation: Finding inherent regularities in data – What products were often purchased together? — Beer and diapers? ! – What are the subsequent purchases after buying a PC? – What kinds of DNA are sensitive to this new drug? – Can we automatically classify web documents? • Applications – Basket data analysis, cross-marketing, catalog design, sale campaign analysis, Web log (click stream) analysis, and DNA sequence analysis. 16 March 2018 Data Mining: Concepts and Techniques 47

4. 1. 1 What Is Frequent Pattern Analysis? • Frequent pattern: a pattern (a set of items, subsequences, substructures, etc. ) that occurs frequently in a data set • First proposed by Agrawal, Imielinski, and Swami [AIS 93] in the context of frequent itemsets and association rule mining • Motivation: Finding inherent regularities in data – What products were often purchased together? — Beer and diapers? ! – What are the subsequent purchases after buying a PC? – What kinds of DNA are sensitive to this new drug? – Can we automatically classify web documents? • Applications – Basket data analysis, cross-marketing, catalog design, sale campaign analysis, Web log (click stream) analysis, and DNA sequence analysis. 16 March 2018 Data Mining: Concepts and Techniques 47

4. 1. 2 Why Is Freq. Pattern Mining Important? • Discloses an intrinsic and important property of data sets • Forms the foundation for many essential data mining tasks – Association, correlation, and causality analysis – Sequential, structural (e. g. , sub-graph) patterns – Pattern analysis in spatiotemporal, multimedia, time-series, and stream data – Classification: associative classification – Cluster analysis: frequent pattern-based clustering – Data warehousing: iceberg cube and cube-gradient – Semantic data compression: fascicles – Broad applications 16 March 2018 Data Mining: Concepts and Techniques 48

4. 1. 2 Why Is Freq. Pattern Mining Important? • Discloses an intrinsic and important property of data sets • Forms the foundation for many essential data mining tasks – Association, correlation, and causality analysis – Sequential, structural (e. g. , sub-graph) patterns – Pattern analysis in spatiotemporal, multimedia, time-series, and stream data – Classification: associative classification – Cluster analysis: frequent pattern-based clustering – Data warehousing: iceberg cube and cube-gradient – Semantic data compression: fascicles – Broad applications 16 March 2018 Data Mining: Concepts and Techniques 48

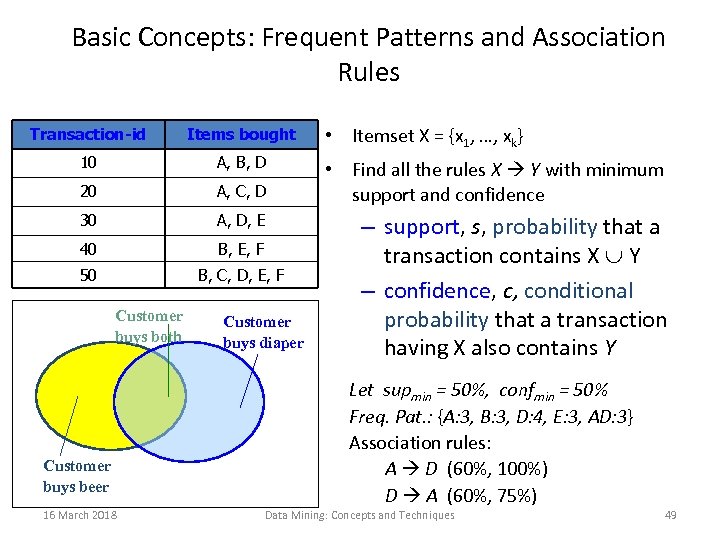

Basic Concepts: Frequent Patterns and Association Rules Transaction-id Items bought 10 A, B, D 20 A, C, D 30 A, D, E 40 B, E, F 50 B, C, D, E, F Customer buys both Customer buys beer 16 March 2018 Customer buys diaper • Itemset X = {x 1, …, xk} • Find all the rules X Y with minimum support and confidence – support, s, probability that a transaction contains X Y – confidence, c, conditional probability that a transaction having X also contains Y Let supmin = 50%, confmin = 50% Freq. Pat. : {A: 3, B: 3, D: 4, E: 3, AD: 3} Association rules: A D (60%, 100%) D A (60%, 75%) Data Mining: Concepts and Techniques 49

Basic Concepts: Frequent Patterns and Association Rules Transaction-id Items bought 10 A, B, D 20 A, C, D 30 A, D, E 40 B, E, F 50 B, C, D, E, F Customer buys both Customer buys beer 16 March 2018 Customer buys diaper • Itemset X = {x 1, …, xk} • Find all the rules X Y with minimum support and confidence – support, s, probability that a transaction contains X Y – confidence, c, conditional probability that a transaction having X also contains Y Let supmin = 50%, confmin = 50% Freq. Pat. : {A: 3, B: 3, D: 4, E: 3, AD: 3} Association rules: A D (60%, 100%) D A (60%, 75%) Data Mining: Concepts and Techniques 49

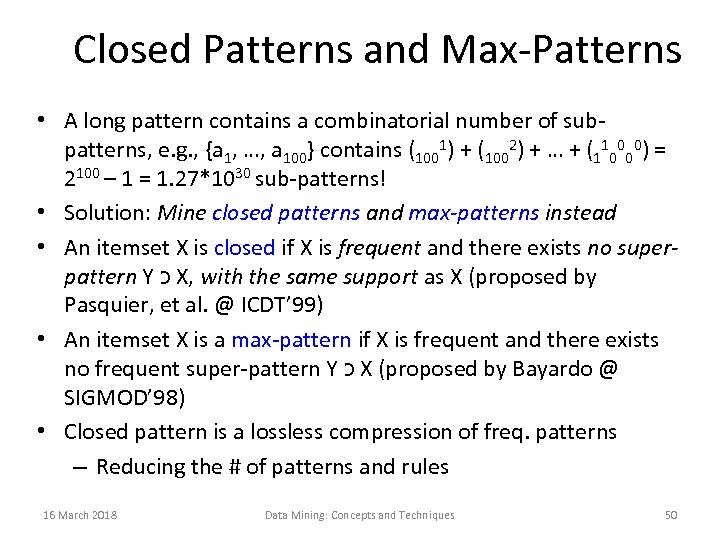

Closed Patterns and Max-Patterns • A long pattern contains a combinatorial number of subpatterns, e. g. , {a 1, …, a 100} contains (1001) + (1002) + … + (110000) = 2100 – 1 = 1. 27*1030 sub-patterns! • Solution: Mine closed patterns and max-patterns instead • An itemset X is closed if X is frequent and there exists no superpattern Y כ X, with the same support as X (proposed by Pasquier, et al. @ ICDT’ 99) • An itemset X is a max-pattern if X is frequent and there exists no frequent super-pattern Y כ X (proposed by Bayardo @ SIGMOD’ 98) • Closed pattern is a lossless compression of freq. patterns – Reducing the # of patterns and rules 16 March 2018 Data Mining: Concepts and Techniques 50

Closed Patterns and Max-Patterns • A long pattern contains a combinatorial number of subpatterns, e. g. , {a 1, …, a 100} contains (1001) + (1002) + … + (110000) = 2100 – 1 = 1. 27*1030 sub-patterns! • Solution: Mine closed patterns and max-patterns instead • An itemset X is closed if X is frequent and there exists no superpattern Y כ X, with the same support as X (proposed by Pasquier, et al. @ ICDT’ 99) • An itemset X is a max-pattern if X is frequent and there exists no frequent super-pattern Y כ X (proposed by Bayardo @ SIGMOD’ 98) • Closed pattern is a lossless compression of freq. patterns – Reducing the # of patterns and rules 16 March 2018 Data Mining: Concepts and Techniques 50

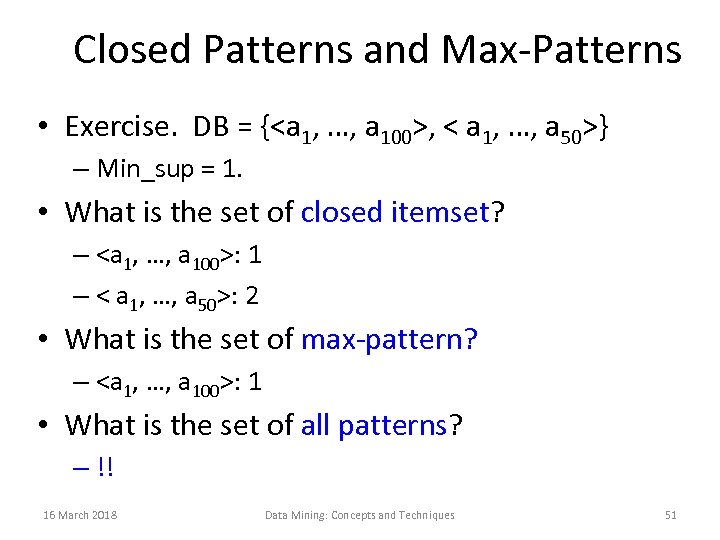

Closed Patterns and Max-Patterns • Exercise. DB = {, < a 1, …, a 50>} – Min_sup = 1. • What is the set of closed itemset? – : 1 – < a 1, …, a 50>: 2 • What is the set of max-pattern? – : 1 • What is the set of all patterns? – !! 16 March 2018 Data Mining: Concepts and Techniques 51

Closed Patterns and Max-Patterns • Exercise. DB = {, < a 1, …, a 50>} – Min_sup = 1. • What is the set of closed itemset? – : 1 – < a 1, …, a 50>: 2 • What is the set of max-pattern? – : 1 • What is the set of all patterns? – !! 16 March 2018 Data Mining: Concepts and Techniques 51

4. 1. 3 Frequent Pattern Mining: Frequent pattern mining can be classified in various ways • Based on the completeness of patterns to be mined: • Based on the levels of abstraction involved in the rule set: buys(X, “computer”))buys(X, “HP printer”) buys(X, “laptop computer”))buys(X, “HP printer”) • Based on the number of data dimensions involved in the rule: single dimension, multidimension

4. 1. 3 Frequent Pattern Mining: Frequent pattern mining can be classified in various ways • Based on the completeness of patterns to be mined: • Based on the levels of abstraction involved in the rule set: buys(X, “computer”))buys(X, “HP printer”) buys(X, “laptop computer”))buys(X, “HP printer”) • Based on the number of data dimensions involved in the rule: single dimension, multidimension

• Based on the types of values handled in the rule-Boolean association rule, quantitative • Based on the kinds of rules to be mined • Based on the kinds of patterns to be mined

• Based on the types of values handled in the rule-Boolean association rule, quantitative • Based on the kinds of rules to be mined • Based on the kinds of patterns to be mined

4. 2 Scalable Methods for Mining Frequent Patterns • The downward closure property of frequent patterns – Any subset of a frequent itemset must be frequent – If {A, B, C} is frequent, so is {A, B} – i. e. , every transaction having {A, B, C} also contains {A, B} • Scalable mining methods: Three major approaches – Apriori – Freq. pattern growth – Vertical data format approach 16 March 2018 Data Mining: Concepts and Techniques 54

4. 2 Scalable Methods for Mining Frequent Patterns • The downward closure property of frequent patterns – Any subset of a frequent itemset must be frequent – If {A, B, C} is frequent, so is {A, B} – i. e. , every transaction having {A, B, C} also contains {A, B} • Scalable mining methods: Three major approaches – Apriori – Freq. pattern growth – Vertical data format approach 16 March 2018 Data Mining: Concepts and Techniques 54

Apriori: A Candidate Generation-and-Test Approach • Apriori pruning principle: If there is any itemset which is infrequent, its superset should not be generated/tested! Or All nonempty subsets of a frequent itemset must also be frequent • Method: – Initially, scan DB once to get frequent 1 -itemset – Generate length (k+1) candidate itemsets from length k frequent itemsets – Test the candidates against DB – Terminate when no frequent or candidate set can be generated 16 March 2018 Data Mining: Concepts and Techniques 55

Apriori: A Candidate Generation-and-Test Approach • Apriori pruning principle: If there is any itemset which is infrequent, its superset should not be generated/tested! Or All nonempty subsets of a frequent itemset must also be frequent • Method: – Initially, scan DB once to get frequent 1 -itemset – Generate length (k+1) candidate itemsets from length k frequent itemsets – Test the candidates against DB – Terminate when no frequent or candidate set can be generated 16 March 2018 Data Mining: Concepts and Techniques 55

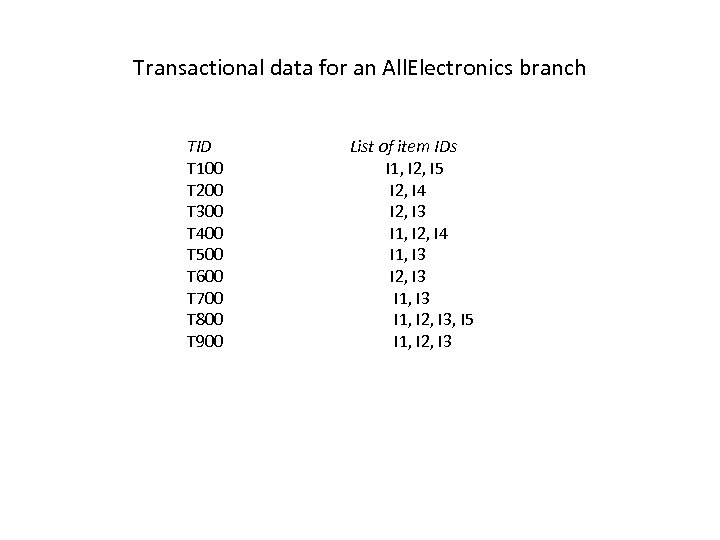

Transactional data for an All. Electronics branch TID T 100 T 200 T 300 T 400 T 500 T 600 T 700 T 800 T 900 List of item IDs I 1, I 2, I 5 I 2, I 4 I 2, I 3 I 1, I 2, I 4 I 1, I 3 I 2, I 3 I 1, I 2, I 3, I 5 I 1, I 2, I 3

Transactional data for an All. Electronics branch TID T 100 T 200 T 300 T 400 T 500 T 600 T 700 T 800 T 900 List of item IDs I 1, I 2, I 5 I 2, I 4 I 2, I 3 I 1, I 2, I 4 I 1, I 3 I 2, I 3 I 1, I 2, I 3, I 5 I 1, I 2, I 3

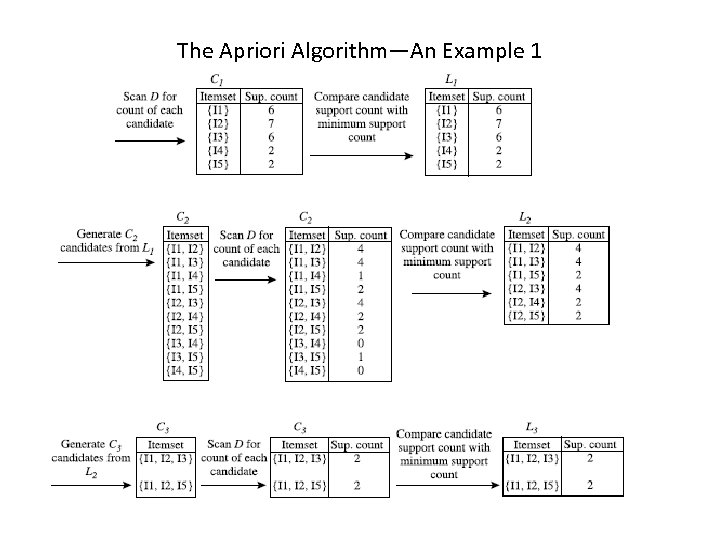

The Apriori Algorithm—An Example 1

The Apriori Algorithm—An Example 1

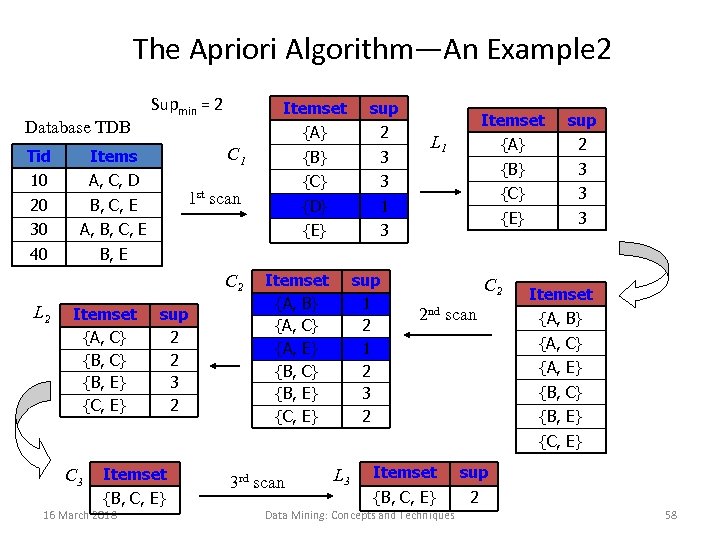

The Apriori Algorithm—An Example 2 Database TDB Tid {B} 3 {C} 3 {D} 1 3 A, B, C, E 40 2 {E} B, C, E 30 sup C 1 A, C, D 20 Itemset {A} Items 10 Supmin = 2 Itemset sup {A} 2 {B} 3 {C} 3 {E} 3 L 1 B, E 1 st scan C 2 L 2 Itemset {A, C} {B, E} {C, E} sup 2 2 3 2 Itemset {A, B} {A, C} {A, E} {B, C} {B, E} {C, E} sup 1 2 3 2 C 2 2 nd scan Itemset {A, B} {A, C} {A, E} {B, C} {B, E} {C, E} C 3 Itemset {B, C, E} 16 March 2018 3 rd scan L 3 Itemset sup {B, C, E} 2 Data Mining: Concepts and Techniques 58

The Apriori Algorithm—An Example 2 Database TDB Tid {B} 3 {C} 3 {D} 1 3 A, B, C, E 40 2 {E} B, C, E 30 sup C 1 A, C, D 20 Itemset {A} Items 10 Supmin = 2 Itemset sup {A} 2 {B} 3 {C} 3 {E} 3 L 1 B, E 1 st scan C 2 L 2 Itemset {A, C} {B, E} {C, E} sup 2 2 3 2 Itemset {A, B} {A, C} {A, E} {B, C} {B, E} {C, E} sup 1 2 3 2 C 2 2 nd scan Itemset {A, B} {A, C} {A, E} {B, C} {B, E} {C, E} C 3 Itemset {B, C, E} 16 March 2018 3 rd scan L 3 Itemset sup {B, C, E} 2 Data Mining: Concepts and Techniques 58

The Apriori Algorithm—An Example 3

The Apriori Algorithm—An Example 3

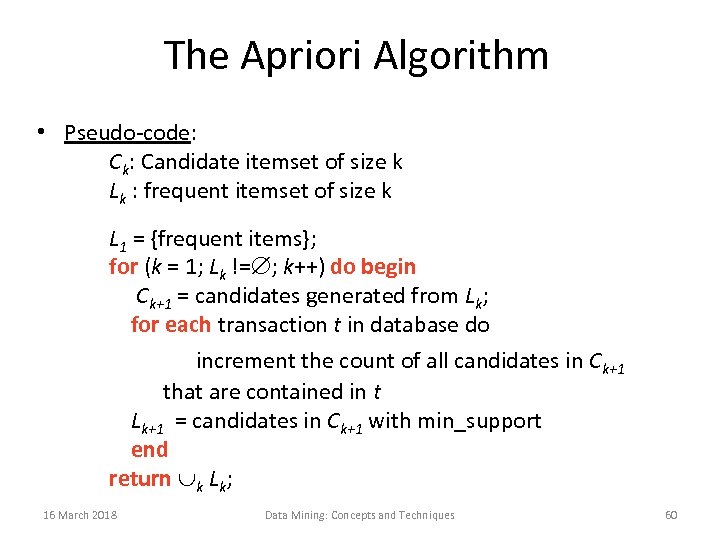

The Apriori Algorithm • Pseudo-code: Ck: Candidate itemset of size k Lk : frequent itemset of size k L 1 = {frequent items}; for (k = 1; Lk != ; k++) do begin Ck+1 = candidates generated from Lk; for each transaction t in database do increment the count of all candidates in Ck+1 that are contained in t Lk+1 = candidates in Ck+1 with min_support end return k Lk; 16 March 2018 Data Mining: Concepts and Techniques 60

The Apriori Algorithm • Pseudo-code: Ck: Candidate itemset of size k Lk : frequent itemset of size k L 1 = {frequent items}; for (k = 1; Lk != ; k++) do begin Ck+1 = candidates generated from Lk; for each transaction t in database do increment the count of all candidates in Ck+1 that are contained in t Lk+1 = candidates in Ck+1 with min_support end return k Lk; 16 March 2018 Data Mining: Concepts and Techniques 60

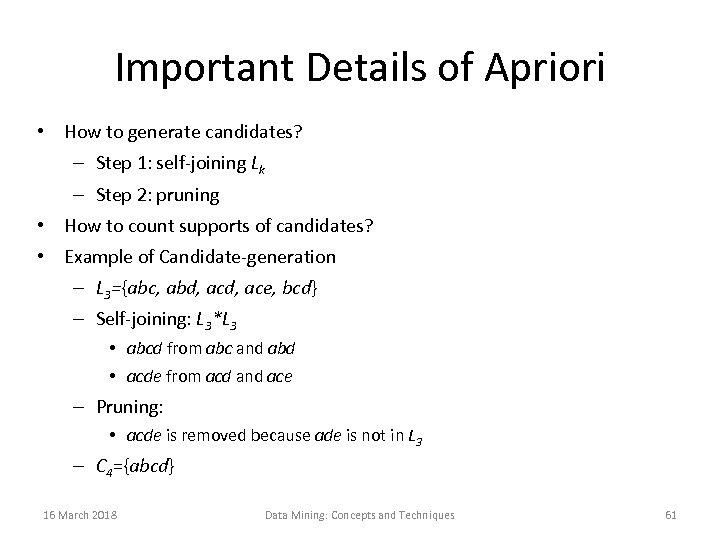

Important Details of Apriori • How to generate candidates? – Step 1: self-joining Lk – Step 2: pruning • How to count supports of candidates? • Example of Candidate-generation – L 3={abc, abd, ace, bcd} – Self-joining: L 3*L 3 • abcd from abc and abd • acde from acd and ace – Pruning: • acde is removed because ade is not in L 3 – C 4={abcd} 16 March 2018 Data Mining: Concepts and Techniques 61

Important Details of Apriori • How to generate candidates? – Step 1: self-joining Lk – Step 2: pruning • How to count supports of candidates? • Example of Candidate-generation – L 3={abc, abd, ace, bcd} – Self-joining: L 3*L 3 • abcd from abc and abd • acde from acd and ace – Pruning: • acde is removed because ade is not in L 3 – C 4={abcd} 16 March 2018 Data Mining: Concepts and Techniques 61

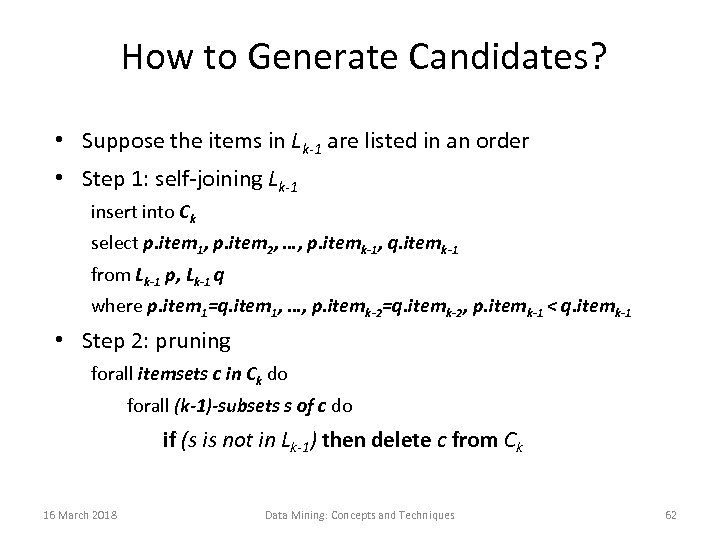

How to Generate Candidates? • Suppose the items in Lk-1 are listed in an order • Step 1: self-joining Lk-1 insert into Ck select p. item 1, p. item 2, …, p. itemk-1, q. itemk-1 from Lk-1 p, Lk-1 q where p. item 1=q. item 1, …, p. itemk-2=q. itemk-2, p. itemk-1 < q. itemk-1 • Step 2: pruning forall itemsets c in Ck do forall (k-1)-subsets s of c do if (s is not in Lk-1) then delete c from Ck 16 March 2018 Data Mining: Concepts and Techniques 62

How to Generate Candidates? • Suppose the items in Lk-1 are listed in an order • Step 1: self-joining Lk-1 insert into Ck select p. item 1, p. item 2, …, p. itemk-1, q. itemk-1 from Lk-1 p, Lk-1 q where p. item 1=q. item 1, …, p. itemk-2=q. itemk-2, p. itemk-1 < q. itemk-1 • Step 2: pruning forall itemsets c in Ck do forall (k-1)-subsets s of c do if (s is not in Lk-1) then delete c from Ck 16 March 2018 Data Mining: Concepts and Techniques 62

4. 2. 3 Improving the Efficiency of Apriori • Hash-based technique (hashing itemsets into corresponding buckets)

4. 2. 3 Improving the Efficiency of Apriori • Hash-based technique (hashing itemsets into corresponding buckets)

DHP: Reduce the Number of Candidates • A k-itemset whose corresponding hashing bucket count is below the threshold cannot be frequent – Candidates: a, b, c, d, e – Hash entries: {ab, ad, ae} {bd, be, de} … – Frequent 1 -itemset: a, b, d, e – ab is not a candidate 2 -itemset if the sum of count of {ab, ad, ae} is below support threshold • J. Park, M. Chen, and P. Yu. An effective hash-based algorithm for mining association rules. In SIGMOD’ 95 16 March 2018 Data Mining: Concepts and Techniques 64

DHP: Reduce the Number of Candidates • A k-itemset whose corresponding hashing bucket count is below the threshold cannot be frequent – Candidates: a, b, c, d, e – Hash entries: {ab, ad, ae} {bd, be, de} … – Frequent 1 -itemset: a, b, d, e – ab is not a candidate 2 -itemset if the sum of count of {ab, ad, ae} is below support threshold • J. Park, M. Chen, and P. Yu. An effective hash-based algorithm for mining association rules. In SIGMOD’ 95 16 March 2018 Data Mining: Concepts and Techniques 64

Sampling for Frequent Patterns • Select a sample of original database, mine frequent patterns within sample using Apriori • Scan database once to verify frequent itemsets found in sample, only borders of closure of frequent patterns are checked – Example: check abcd instead of ab, ac, …, etc. • Scan database again to find missed frequent patterns • H. Toivonen. Sampling large databases for association rules. In VLDB’ 96 16 March 2018 Data Mining: Concepts and Techniques 65

Sampling for Frequent Patterns • Select a sample of original database, mine frequent patterns within sample using Apriori • Scan database once to verify frequent itemsets found in sample, only borders of closure of frequent patterns are checked – Example: check abcd instead of ab, ac, …, etc. • Scan database again to find missed frequent patterns • H. Toivonen. Sampling large databases for association rules. In VLDB’ 96 16 March 2018 Data Mining: Concepts and Techniques 65

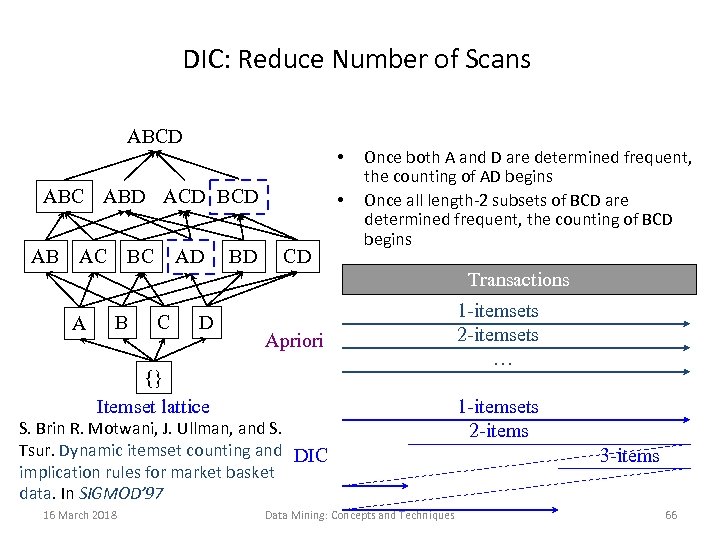

DIC: Reduce Number of Scans ABCD • ABC ABD ACD BCD AB AC BC AD BD • CD Once both A and D are determined frequent, the counting of AD begins Once all length-2 subsets of BCD are determined frequent, the counting of BCD begins Transactions A B C D Apriori {} Itemset lattice S. Brin R. Motwani, J. Ullman, and S. Tsur. Dynamic itemset counting and DIC implication rules for market basket data. In SIGMOD’ 97 16 March 2018 Data Mining: Concepts and Techniques 1 -itemsets 2 -itemsets … 1 -itemsets 2 -items 3 -items 66

DIC: Reduce Number of Scans ABCD • ABC ABD ACD BCD AB AC BC AD BD • CD Once both A and D are determined frequent, the counting of AD begins Once all length-2 subsets of BCD are determined frequent, the counting of BCD begins Transactions A B C D Apriori {} Itemset lattice S. Brin R. Motwani, J. Ullman, and S. Tsur. Dynamic itemset counting and DIC implication rules for market basket data. In SIGMOD’ 97 16 March 2018 Data Mining: Concepts and Techniques 1 -itemsets 2 -itemsets … 1 -itemsets 2 -items 3 -items 66

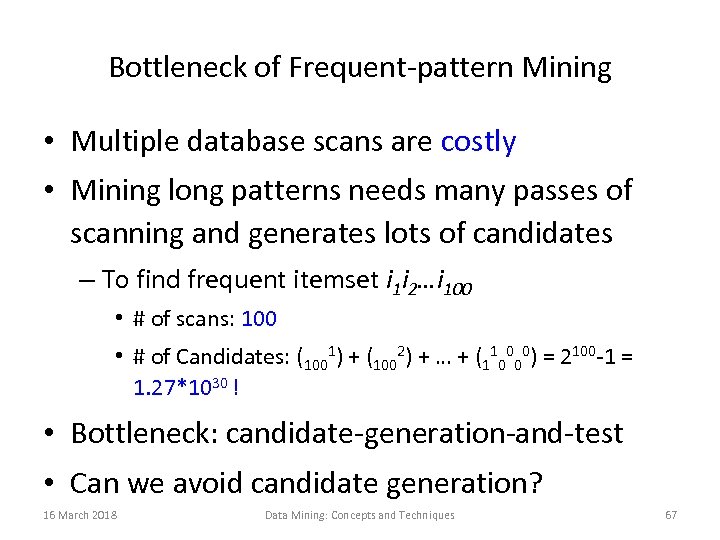

Bottleneck of Frequent-pattern Mining • Multiple database scans are costly • Mining long patterns needs many passes of scanning and generates lots of candidates – To find frequent itemset i 1 i 2…i 100 • # of scans: 100 • # of Candidates: (1001) + (1002) + … + (110000) = 2100 -1 = 1. 27*1030 ! • Bottleneck: candidate-generation-and-test • Can we avoid candidate generation? 16 March 2018 Data Mining: Concepts and Techniques 67

Bottleneck of Frequent-pattern Mining • Multiple database scans are costly • Mining long patterns needs many passes of scanning and generates lots of candidates – To find frequent itemset i 1 i 2…i 100 • # of scans: 100 • # of Candidates: (1001) + (1002) + … + (110000) = 2100 -1 = 1. 27*1030 ! • Bottleneck: candidate-generation-and-test • Can we avoid candidate generation? 16 March 2018 Data Mining: Concepts and Techniques 67

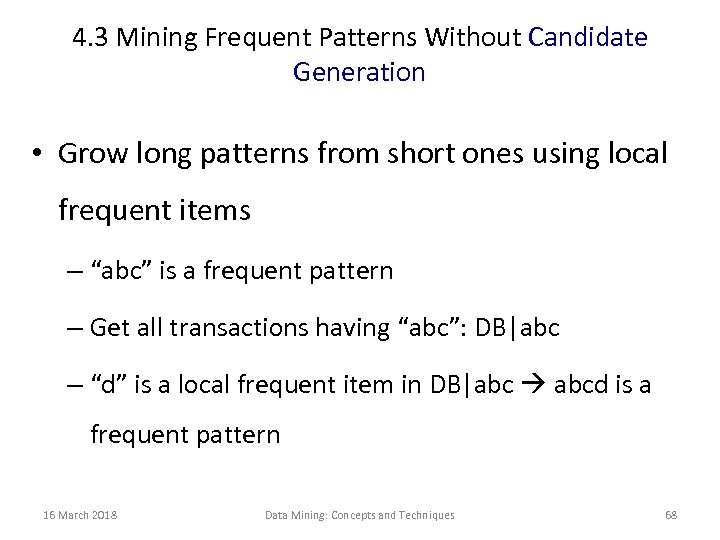

4. 3 Mining Frequent Patterns Without Candidate Generation • Grow long patterns from short ones using local frequent items – “abc” is a frequent pattern – Get all transactions having “abc”: DB|abc – “d” is a local frequent item in DB|abc abcd is a frequent pattern 16 March 2018 Data Mining: Concepts and Techniques 68

4. 3 Mining Frequent Patterns Without Candidate Generation • Grow long patterns from short ones using local frequent items – “abc” is a frequent pattern – Get all transactions having “abc”: DB|abc – “d” is a local frequent item in DB|abc abcd is a frequent pattern 16 March 2018 Data Mining: Concepts and Techniques 68

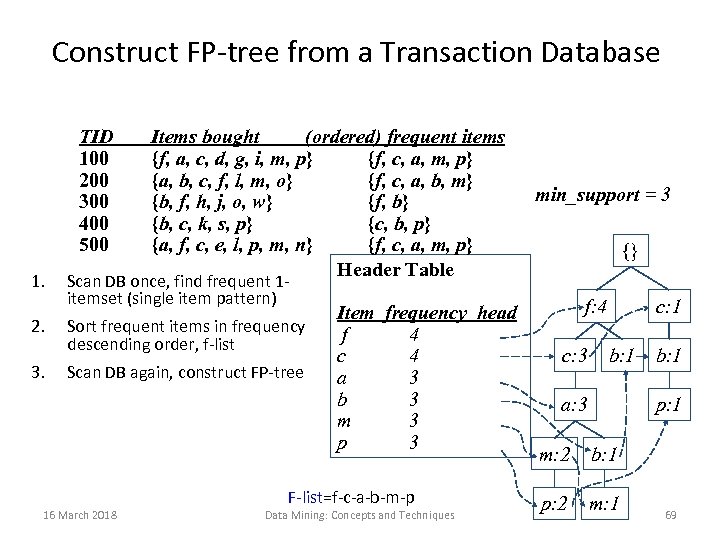

Construct FP-tree from a Transaction Database TID 100 200 300 400 500 1. 2. 3. Items bought (ordered) frequent items {f, a, c, d, g, i, m, p} {f, c, a, m, p} {a, b, c, f, l, m, o} {f, c, a, b, m} {b, f, h, j, o, w} {f, b} {b, c, k, s, p} {c, b, p} {a, f, c, e, l, p, m, n} {f, c, a, m, p} Header Table Scan DB once, find frequent 1 itemset (single item pattern) Sort frequent items in frequency descending order, f-list Scan DB again, construct FP-tree 16 March 2018 Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 F-list=f-c-a-b-m-p Data Mining: Concepts and Techniques min_support = 3 {} f: 4 c: 3 c: 1 b: 1 a: 3 b: 1 p: 1 m: 2 b: 1 p: 2 m: 1 69

Construct FP-tree from a Transaction Database TID 100 200 300 400 500 1. 2. 3. Items bought (ordered) frequent items {f, a, c, d, g, i, m, p} {f, c, a, m, p} {a, b, c, f, l, m, o} {f, c, a, b, m} {b, f, h, j, o, w} {f, b} {b, c, k, s, p} {c, b, p} {a, f, c, e, l, p, m, n} {f, c, a, m, p} Header Table Scan DB once, find frequent 1 itemset (single item pattern) Sort frequent items in frequency descending order, f-list Scan DB again, construct FP-tree 16 March 2018 Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 F-list=f-c-a-b-m-p Data Mining: Concepts and Techniques min_support = 3 {} f: 4 c: 3 c: 1 b: 1 a: 3 b: 1 p: 1 m: 2 b: 1 p: 2 m: 1 69

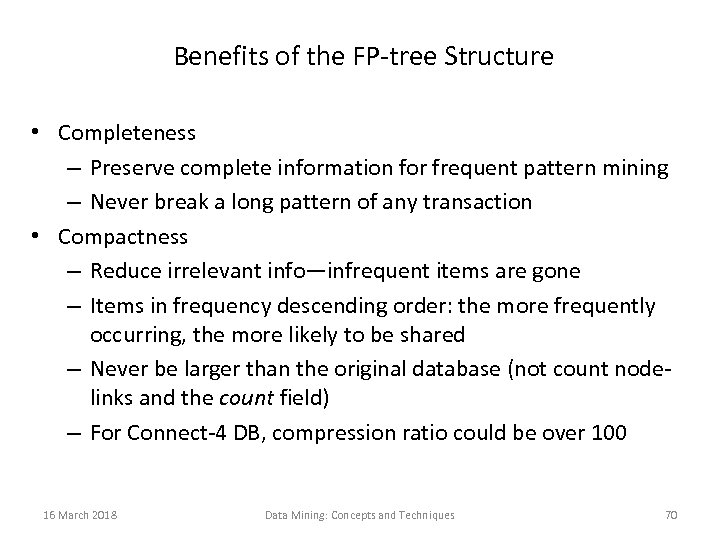

Benefits of the FP-tree Structure • Completeness – Preserve complete information for frequent pattern mining – Never break a long pattern of any transaction • Compactness – Reduce irrelevant info—infrequent items are gone – Items in frequency descending order: the more frequently occurring, the more likely to be shared – Never be larger than the original database (not count nodelinks and the count field) – For Connect-4 DB, compression ratio could be over 100 16 March 2018 Data Mining: Concepts and Techniques 70

Benefits of the FP-tree Structure • Completeness – Preserve complete information for frequent pattern mining – Never break a long pattern of any transaction • Compactness – Reduce irrelevant info—infrequent items are gone – Items in frequency descending order: the more frequently occurring, the more likely to be shared – Never be larger than the original database (not count nodelinks and the count field) – For Connect-4 DB, compression ratio could be over 100 16 March 2018 Data Mining: Concepts and Techniques 70

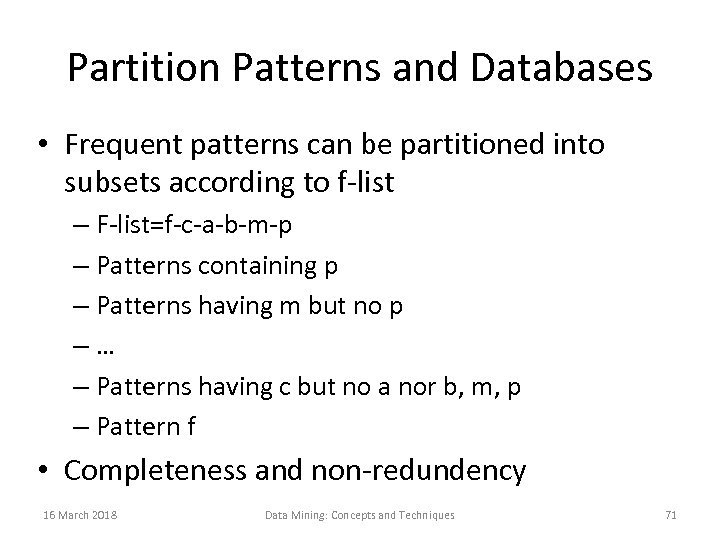

Partition Patterns and Databases • Frequent patterns can be partitioned into subsets according to f-list – F-list=f-c-a-b-m-p – Patterns containing p – Patterns having m but no p –… – Patterns having c but no a nor b, m, p – Pattern f • Completeness and non-redundency 16 March 2018 Data Mining: Concepts and Techniques 71

Partition Patterns and Databases • Frequent patterns can be partitioned into subsets according to f-list – F-list=f-c-a-b-m-p – Patterns containing p – Patterns having m but no p –… – Patterns having c but no a nor b, m, p – Pattern f • Completeness and non-redundency 16 March 2018 Data Mining: Concepts and Techniques 71

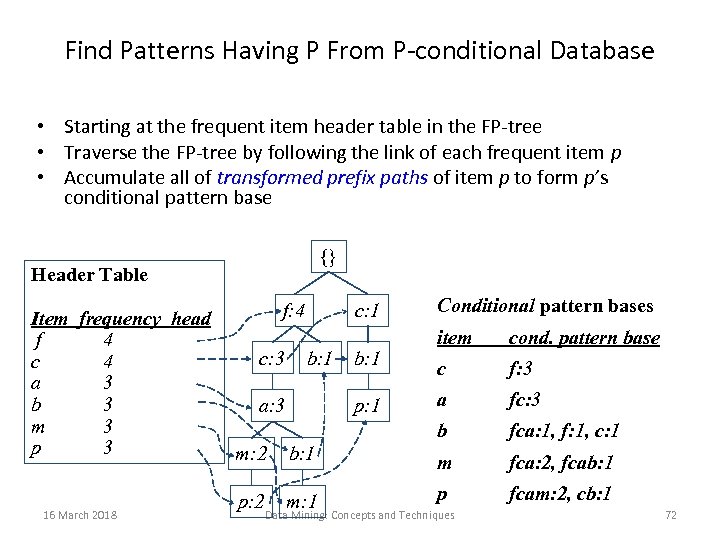

Find Patterns Having P From P-conditional Database • Starting at the frequent item header table in the FP-tree • Traverse the FP-tree by following the link of each frequent item p • Accumulate all of transformed prefix paths of item p to form p’s conditional pattern base {} Header Table Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 16 March 2018 f: 4 c: 1 Conditional pattern bases b: 1 a: 3 b: 1 p: 1 cond. pattern base c f: 3 a fc: 3 b c: 3 item fca: 1, f: 1, c: 1 m: 2 b: 1 m fca: 2, fcab: 1 p: 2 m: 1 p fcam: 2, cb: 1 Data Mining: Concepts and Techniques 72

Find Patterns Having P From P-conditional Database • Starting at the frequent item header table in the FP-tree • Traverse the FP-tree by following the link of each frequent item p • Accumulate all of transformed prefix paths of item p to form p’s conditional pattern base {} Header Table Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 16 March 2018 f: 4 c: 1 Conditional pattern bases b: 1 a: 3 b: 1 p: 1 cond. pattern base c f: 3 a fc: 3 b c: 3 item fca: 1, f: 1, c: 1 m: 2 b: 1 m fca: 2, fcab: 1 p: 2 m: 1 p fcam: 2, cb: 1 Data Mining: Concepts and Techniques 72

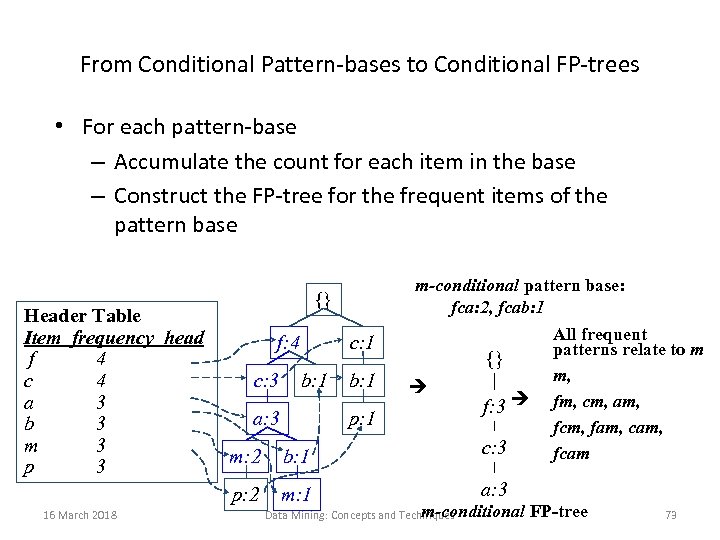

From Conditional Pattern-bases to Conditional FP-trees • For each pattern-base – Accumulate the count for each item in the base – Construct the FP-tree for the frequent items of the pattern base Header Table Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 m-conditional pattern base: fca: 2, fcab: 1 {} f: 4 c: 3 c: 1 b: 1 a: 3 b: 1 p: 1 {} f: 3 b: 1 c: 3 p: 2 16 March 2018 m: 2 m: 1 All frequent patterns relate to m m, fm, cm, am, fcm, fam, cam, fcam a: 3 m-conditional Data Mining: Concepts and Techniques FP-tree 73

From Conditional Pattern-bases to Conditional FP-trees • For each pattern-base – Accumulate the count for each item in the base – Construct the FP-tree for the frequent items of the pattern base Header Table Item frequency head f 4 c 4 a 3 b 3 m 3 p 3 m-conditional pattern base: fca: 2, fcab: 1 {} f: 4 c: 3 c: 1 b: 1 a: 3 b: 1 p: 1 {} f: 3 b: 1 c: 3 p: 2 16 March 2018 m: 2 m: 1 All frequent patterns relate to m m, fm, cm, am, fcm, fam, cam, fcam a: 3 m-conditional Data Mining: Concepts and Techniques FP-tree 73

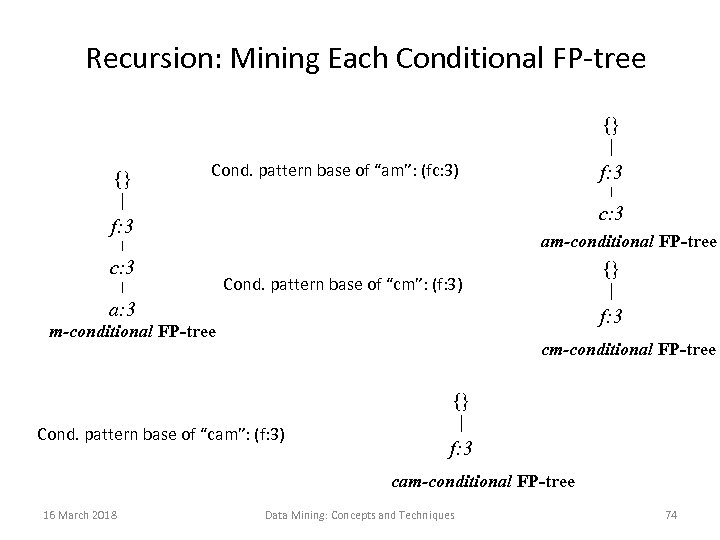

Recursion: Mining Each Conditional FP-tree {} {} Cond. pattern base of “am”: (fc: 3) c: 3 f: 3 am-conditional FP-tree {} Cond. pattern base of “cm”: (f: 3) a: 3 f: 3 m-conditional FP-tree cm-conditional FP-tree {} Cond. pattern base of “cam”: (f: 3) f: 3 cam-conditional FP-tree 16 March 2018 Data Mining: Concepts and Techniques 74

Recursion: Mining Each Conditional FP-tree {} {} Cond. pattern base of “am”: (fc: 3) c: 3 f: 3 am-conditional FP-tree {} Cond. pattern base of “cm”: (f: 3) a: 3 f: 3 m-conditional FP-tree cm-conditional FP-tree {} Cond. pattern base of “cam”: (f: 3) f: 3 cam-conditional FP-tree 16 March 2018 Data Mining: Concepts and Techniques 74

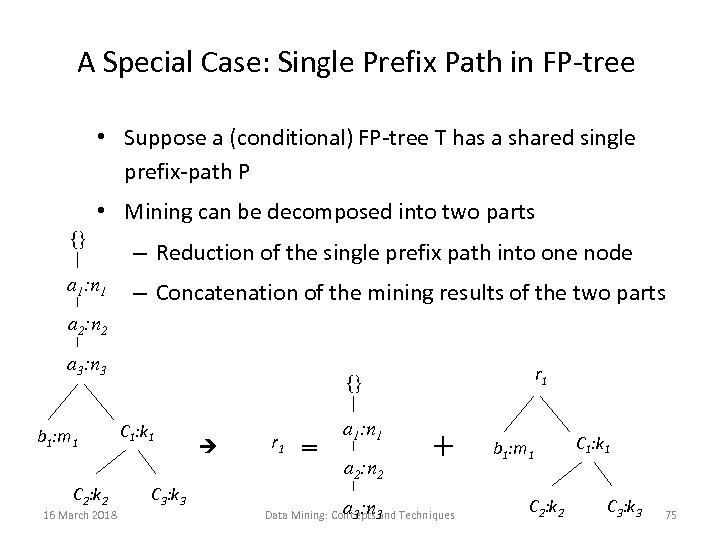

A Special Case: Single Prefix Path in FP-tree • Suppose a (conditional) FP-tree T has a shared single prefix-path P • Mining can be decomposed into two parts {} – Reduction of the single prefix path into one node a 1: n 1 – Concatenation of the mining results of the two parts a 2: n 2 a 3: n 3 b 1: m 1 C 2: k 2 16 March 2018 r 1 {} C 1: k 1 C 3: k 3 r 1 = a 1: n 1 a 2: n 2 a : n + Data Mining: Concepts 3 Techniques 3 and b 1: m 1 C 2: k 2 C 1: k 1 C 3: k 3 75

A Special Case: Single Prefix Path in FP-tree • Suppose a (conditional) FP-tree T has a shared single prefix-path P • Mining can be decomposed into two parts {} – Reduction of the single prefix path into one node a 1: n 1 – Concatenation of the mining results of the two parts a 2: n 2 a 3: n 3 b 1: m 1 C 2: k 2 16 March 2018 r 1 {} C 1: k 1 C 3: k 3 r 1 = a 1: n 1 a 2: n 2 a : n + Data Mining: Concepts 3 Techniques 3 and b 1: m 1 C 2: k 2 C 1: k 1 C 3: k 3 75

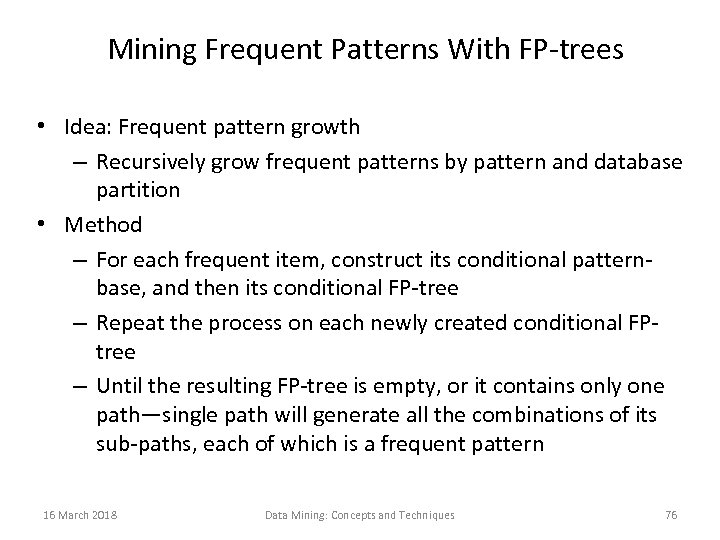

Mining Frequent Patterns With FP-trees • Idea: Frequent pattern growth – Recursively grow frequent patterns by pattern and database partition • Method – For each frequent item, construct its conditional patternbase, and then its conditional FP-tree – Repeat the process on each newly created conditional FPtree – Until the resulting FP-tree is empty, or it contains only one path—single path will generate all the combinations of its sub-paths, each of which is a frequent pattern 16 March 2018 Data Mining: Concepts and Techniques 76

Mining Frequent Patterns With FP-trees • Idea: Frequent pattern growth – Recursively grow frequent patterns by pattern and database partition • Method – For each frequent item, construct its conditional patternbase, and then its conditional FP-tree – Repeat the process on each newly created conditional FPtree – Until the resulting FP-tree is empty, or it contains only one path—single path will generate all the combinations of its sub-paths, each of which is a frequent pattern 16 March 2018 Data Mining: Concepts and Techniques 76

Scaling FP-growth by DB Projection • • FP-tree cannot fit in memory? —DB projection First partition a database into a set of projected DBs Then construct and mine FP-tree for each projected DB Parallel projection vs. Partition projection techniques – Parallel projection is space costly 16 March 2018 Data Mining: Concepts and Techniques 77

Scaling FP-growth by DB Projection • • FP-tree cannot fit in memory? —DB projection First partition a database into a set of projected DBs Then construct and mine FP-tree for each projected DB Parallel projection vs. Partition projection techniques – Parallel projection is space costly 16 March 2018 Data Mining: Concepts and Techniques 77

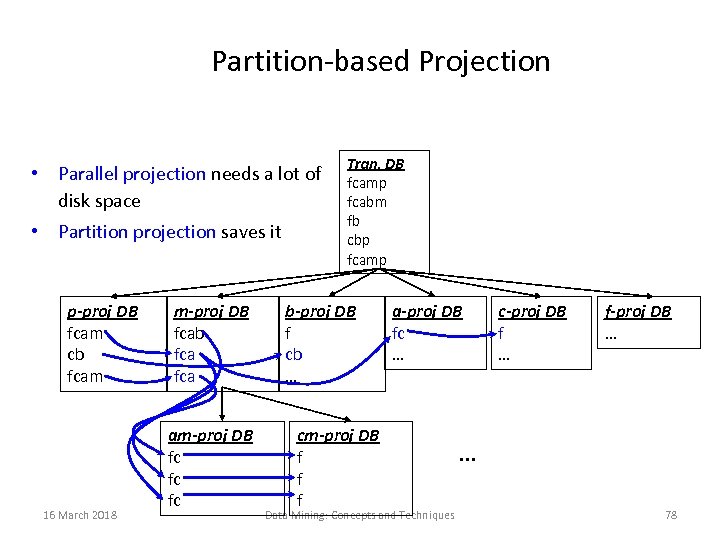

Partition-based Projection • Parallel projection needs a lot of disk space • Partition projection saves it p-proj DB fcam cb fcam 16 March 2018 m-proj DB fcab fca am-proj DB fc fc fc Tran. DB fcamp fcabm fb cbp fcamp b-proj DB f cb … cm-proj DB f f f a-proj DB fc … Data Mining: Concepts and Techniques c-proj DB f … f-proj DB … … 78

Partition-based Projection • Parallel projection needs a lot of disk space • Partition projection saves it p-proj DB fcam cb fcam 16 March 2018 m-proj DB fcab fca am-proj DB fc fc fc Tran. DB fcamp fcabm fb cbp fcamp b-proj DB f cb … cm-proj DB f f f a-proj DB fc … Data Mining: Concepts and Techniques c-proj DB f … f-proj DB … … 78

Why Is FP-Growth the Winner? • Divide-and-conquer: – decompose both the mining task and DB according to the frequent patterns obtained so far – leads to focused search of smaller databases • Other factors – no candidate generation, no candidate test – compressed database: FP-tree structure – no repeated scan of entire database – basic ops—counting local freq items and building sub FPtree, no pattern search and matching 16 March 2018 Data Mining: Concepts and Techniques 79

Why Is FP-Growth the Winner? • Divide-and-conquer: – decompose both the mining task and DB according to the frequent patterns obtained so far – leads to focused search of smaller databases • Other factors – no candidate generation, no candidate test – compressed database: FP-tree structure – no repeated scan of entire database – basic ops—counting local freq items and building sub FPtree, no pattern search and matching 16 March 2018 Data Mining: Concepts and Techniques 79

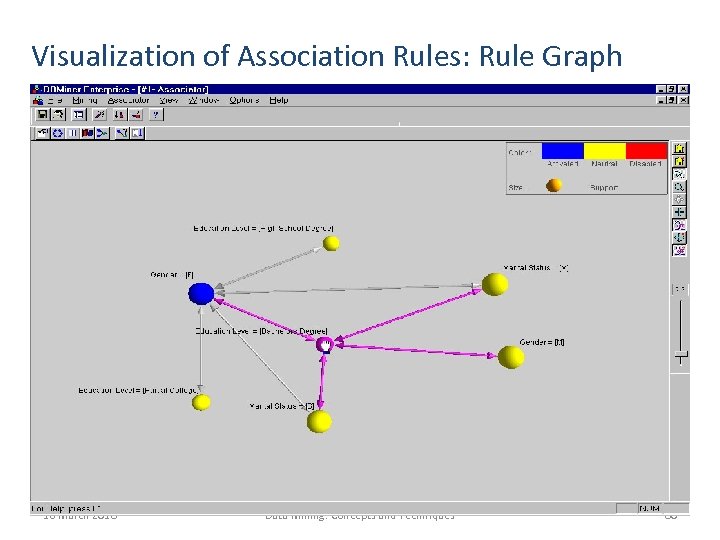

Visualization of Association Rules: Rule Graph 16 March 2018 Data Mining: Concepts and Techniques 80

Visualization of Association Rules: Rule Graph 16 March 2018 Data Mining: Concepts and Techniques 80

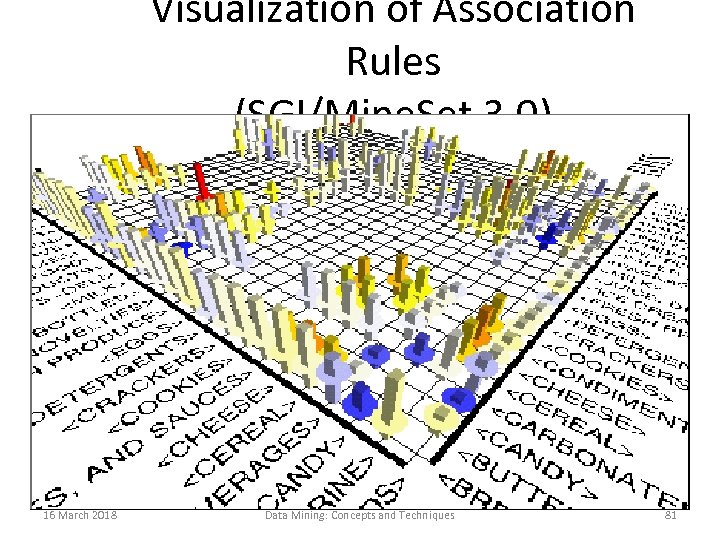

Visualization of Association Rules (SGI/Mine. Set 3. 0) 16 March 2018 Data Mining: Concepts and Techniques 81

Visualization of Association Rules (SGI/Mine. Set 3. 0) 16 March 2018 Data Mining: Concepts and Techniques 81

Chapter 5: Mining Frequent Patterns, Association and Correlations • Basic concepts and a road map • Efficient and scalable frequent itemset mining methods • Mining various kinds of association rules • From association mining to correlation analysis • Constraint-based association mining • Summary 16 March 2018 Data Mining: Concepts and Techniques 82

Chapter 5: Mining Frequent Patterns, Association and Correlations • Basic concepts and a road map • Efficient and scalable frequent itemset mining methods • Mining various kinds of association rules • From association mining to correlation analysis • Constraint-based association mining • Summary 16 March 2018 Data Mining: Concepts and Techniques 82

4. 4 Mining Various Kinds of Association Rules • Mining multilevel association • Miming multidimensional association • Mining quantitative association • Mining interesting correlation patterns 16 March 2018 Data Mining: Concepts and Techniques 83

4. 4 Mining Various Kinds of Association Rules • Mining multilevel association • Miming multidimensional association • Mining quantitative association • Mining interesting correlation patterns 16 March 2018 Data Mining: Concepts and Techniques 83

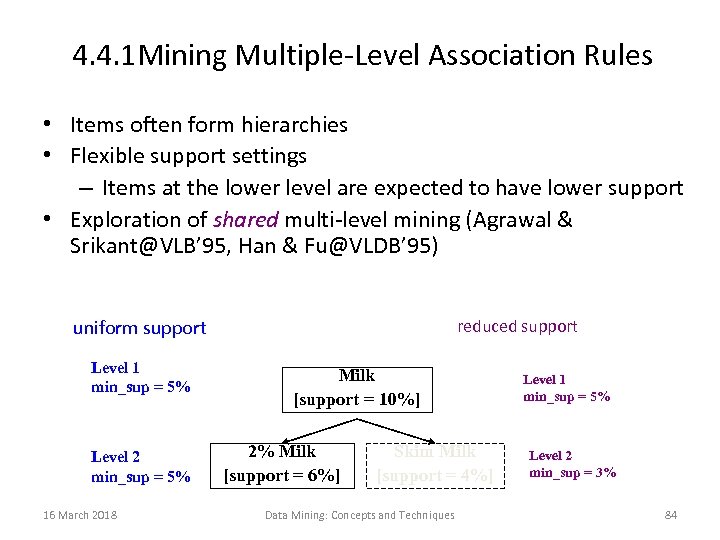

4. 4. 1 Mining Multiple-Level Association Rules • Items often form hierarchies • Flexible support settings – Items at the lower level are expected to have lower support • Exploration of shared multi-level mining (Agrawal & Srikant@VLB’ 95, Han & Fu@VLDB’ 95) uniform support Level 1 min_sup = 5% Level 2 min_sup = 5% 16 March 2018 reduced support Milk [support = 10%] 2% Milk [support = 6%] Skim Milk [support = 4%] Data Mining: Concepts and Techniques Level 1 min_sup = 5% Level 2 min_sup = 3% 84

4. 4. 1 Mining Multiple-Level Association Rules • Items often form hierarchies • Flexible support settings – Items at the lower level are expected to have lower support • Exploration of shared multi-level mining (Agrawal & Srikant@VLB’ 95, Han & Fu@VLDB’ 95) uniform support Level 1 min_sup = 5% Level 2 min_sup = 5% 16 March 2018 reduced support Milk [support = 10%] 2% Milk [support = 6%] Skim Milk [support = 4%] Data Mining: Concepts and Techniques Level 1 min_sup = 5% Level 2 min_sup = 3% 84

4. 4. 2 Multi-level Association: Redundancy Filtering • Some rules may be redundant due to “ancestor” relationships between items. • Example – milk wheat bread [support = 8%, confidence = 70%] – 2% milk wheat bread [support = 2%, confidence = 72%] • We say the first rule is an ancestor of the second rule. • A rule is redundant if its support is close to the “expected” value, based on the rule’s ancestor. 16 March 2018 Data Mining: Concepts and Techniques 85

4. 4. 2 Multi-level Association: Redundancy Filtering • Some rules may be redundant due to “ancestor” relationships between items. • Example – milk wheat bread [support = 8%, confidence = 70%] – 2% milk wheat bread [support = 2%, confidence = 72%] • We say the first rule is an ancestor of the second rule. • A rule is redundant if its support is close to the “expected” value, based on the rule’s ancestor. 16 March 2018 Data Mining: Concepts and Techniques 85

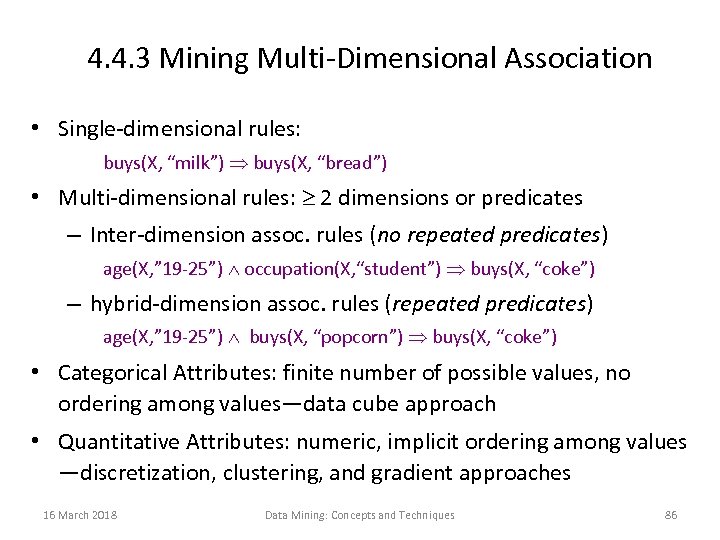

4. 4. 3 Mining Multi-Dimensional Association • Single-dimensional rules: buys(X, “milk”) buys(X, “bread”) • Multi-dimensional rules: 2 dimensions or predicates – Inter-dimension assoc. rules (no repeated predicates) age(X, ” 19 -25”) occupation(X, “student”) buys(X, “coke”) – hybrid-dimension assoc. rules (repeated predicates) age(X, ” 19 -25”) buys(X, “popcorn”) buys(X, “coke”) • Categorical Attributes: finite number of possible values, no ordering among values—data cube approach • Quantitative Attributes: numeric, implicit ordering among values —discretization, clustering, and gradient approaches 16 March 2018 Data Mining: Concepts and Techniques 86

4. 4. 3 Mining Multi-Dimensional Association • Single-dimensional rules: buys(X, “milk”) buys(X, “bread”) • Multi-dimensional rules: 2 dimensions or predicates – Inter-dimension assoc. rules (no repeated predicates) age(X, ” 19 -25”) occupation(X, “student”) buys(X, “coke”) – hybrid-dimension assoc. rules (repeated predicates) age(X, ” 19 -25”) buys(X, “popcorn”) buys(X, “coke”) • Categorical Attributes: finite number of possible values, no ordering among values—data cube approach • Quantitative Attributes: numeric, implicit ordering among values —discretization, clustering, and gradient approaches 16 March 2018 Data Mining: Concepts and Techniques 86

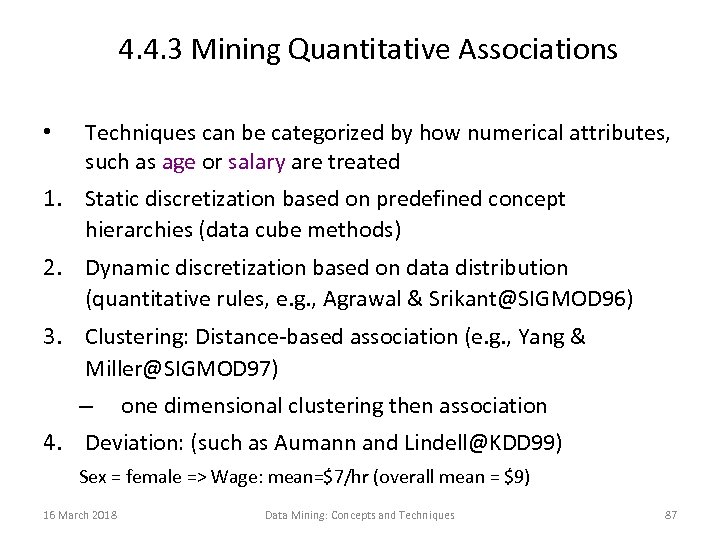

4. 4. 3 Mining Quantitative Associations • Techniques can be categorized by how numerical attributes, such as age or salary are treated 1. Static discretization based on predefined concept hierarchies (data cube methods) 2. Dynamic discretization based on data distribution (quantitative rules, e. g. , Agrawal & Srikant@SIGMOD 96) 3. Clustering: Distance-based association (e. g. , Yang & Miller@SIGMOD 97) – one dimensional clustering then association 4. Deviation: (such as Aumann and Lindell@KDD 99) Sex = female => Wage: mean=$7/hr (overall mean = $9) 16 March 2018 Data Mining: Concepts and Techniques 87

4. 4. 3 Mining Quantitative Associations • Techniques can be categorized by how numerical attributes, such as age or salary are treated 1. Static discretization based on predefined concept hierarchies (data cube methods) 2. Dynamic discretization based on data distribution (quantitative rules, e. g. , Agrawal & Srikant@SIGMOD 96) 3. Clustering: Distance-based association (e. g. , Yang & Miller@SIGMOD 97) – one dimensional clustering then association 4. Deviation: (such as Aumann and Lindell@KDD 99) Sex = female => Wage: mean=$7/hr (overall mean = $9) 16 March 2018 Data Mining: Concepts and Techniques 87

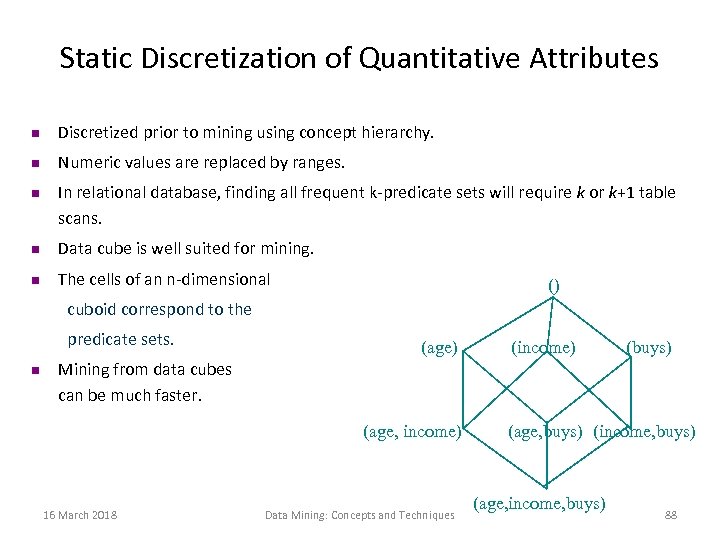

Static Discretization of Quantitative Attributes n Discretized prior to mining using concept hierarchy. n Numeric values are replaced by ranges. n In relational database, finding all frequent k-predicate sets will require k or k+1 table scans. n Data cube is well suited for mining. n The cells of an n-dimensional () cuboid correspond to the predicate sets. n (age) (income) (buys) Mining from data cubes can be much faster. (age, income) 16 March 2018 Data Mining: Concepts and Techniques (age, buys) (income, buys) (age, income, buys) 88

Static Discretization of Quantitative Attributes n Discretized prior to mining using concept hierarchy. n Numeric values are replaced by ranges. n In relational database, finding all frequent k-predicate sets will require k or k+1 table scans. n Data cube is well suited for mining. n The cells of an n-dimensional () cuboid correspond to the predicate sets. n (age) (income) (buys) Mining from data cubes can be much faster. (age, income) 16 March 2018 Data Mining: Concepts and Techniques (age, buys) (income, buys) (age, income, buys) 88

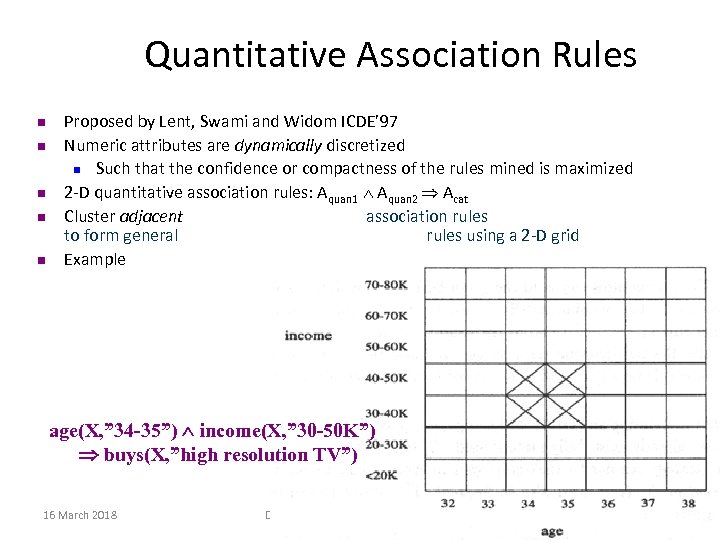

Quantitative Association Rules n n n Proposed by Lent, Swami and Widom ICDE’ 97 Numeric attributes are dynamically discretized n Such that the confidence or compactness of the rules mined is maximized 2 -D quantitative association rules: Aquan 1 Aquan 2 Acat Cluster adjacent association rules to form general rules using a 2 -D grid Example age(X, ” 34 -35”) income(X, ” 30 -50 K”) buys(X, ”high resolution TV”) 16 March 2018 Data Mining: Concepts and Techniques 89

Quantitative Association Rules n n n Proposed by Lent, Swami and Widom ICDE’ 97 Numeric attributes are dynamically discretized n Such that the confidence or compactness of the rules mined is maximized 2 -D quantitative association rules: Aquan 1 Aquan 2 Acat Cluster adjacent association rules to form general rules using a 2 -D grid Example age(X, ” 34 -35”) income(X, ” 30 -50 K”) buys(X, ”high resolution TV”) 16 March 2018 Data Mining: Concepts and Techniques 89

Mining Other Interesting Patterns • Flexible support constraints (Wang et al. @ VLDB’ 02) – Some items (e. g. , diamond) may occur rarely but are valuable – Customized supmin specification and application • Top-K closed frequent patterns (Han, et al. @ ICDM’ 02) – Hard to specify supmin, but top-k with lengthmin is more desirable – Dynamically raise supmin in FP-tree construction and mining, and select most promising path to mine 16 March 2018 Data Mining: Concepts and Techniques 90

Mining Other Interesting Patterns • Flexible support constraints (Wang et al. @ VLDB’ 02) – Some items (e. g. , diamond) may occur rarely but are valuable – Customized supmin specification and application • Top-K closed frequent patterns (Han, et al. @ ICDM’ 02) – Hard to specify supmin, but top-k with lengthmin is more desirable – Dynamically raise supmin in FP-tree construction and mining, and select most promising path to mine 16 March 2018 Data Mining: Concepts and Techniques 90

![4. 5 Interestingness Measure: Correlations (Lift) • play basketball eat cereal [40%, 66. 7%] 4. 5 Interestingness Measure: Correlations (Lift) • play basketball eat cereal [40%, 66. 7%]](https://present5.com/presentation/bbee614137a8862d3ba6ea02dd4d6695/image-91.jpg) 4. 5 Interestingness Measure: Correlations (Lift) • play basketball eat cereal [40%, 66. 7%] is misleading – The overall % of students eating cereal is 75% > 66. 7%. • play basketball not eat cereal [20%, 33. 3%] is more accurate, although with lower support and confidence • Measure of dependent/correlated events: lift Basketball Sum (row) Cereal 2000 1750 3750 Not cereal 1000 250 1250 Sum(col. ) 16 March 2018 Not basketball 3000 2000 5000 Data Mining: Concepts and Techniques 91

4. 5 Interestingness Measure: Correlations (Lift) • play basketball eat cereal [40%, 66. 7%] is misleading – The overall % of students eating cereal is 75% > 66. 7%. • play basketball not eat cereal [20%, 33. 3%] is more accurate, although with lower support and confidence • Measure of dependent/correlated events: lift Basketball Sum (row) Cereal 2000 1750 3750 Not cereal 1000 250 1250 Sum(col. ) 16 March 2018 Not basketball 3000 2000 5000 Data Mining: Concepts and Techniques 91

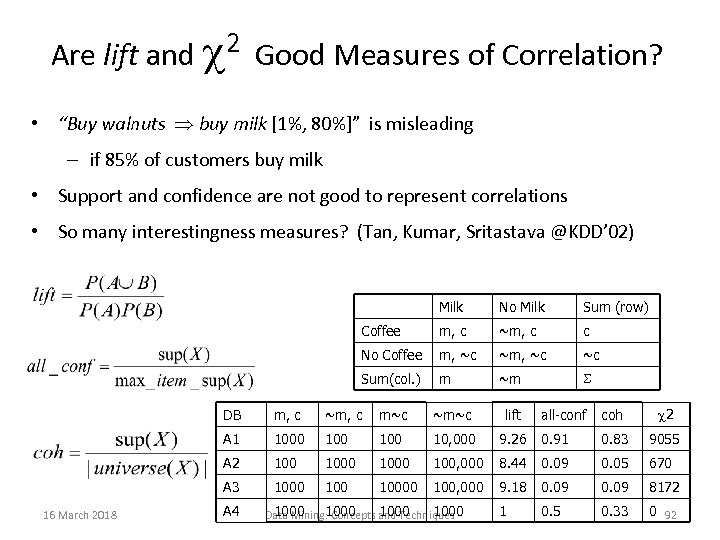

2 Good Measures of Correlation? Are lift and • “Buy walnuts buy milk [1%, 80%]” is misleading – if 85% of customers buy milk • Support and confidence are not good to represent correlations • So many interestingness measures? (Tan, Kumar, Sritastava @KDD’ 02) Milk No Milk Sum (row) Coffee m, c ~m, c c No Coffee m, ~c ~c Sum(col. ) m ~m all-conf coh 2 9. 26 0. 91 0. 83 9055 100, 000 8. 44 0. 09 0. 05 670 100, 000 9. 18 0. 09 8172 1 0. 5 0. 33 0 92 DB ~m, c m~c ~m~c lift A 1 1000 100 10, 000 A 2 1000 A 3 16 March 2018 m, c 10000 A 4 1000 Data Mining: Concepts and Techniques

2 Good Measures of Correlation? Are lift and • “Buy walnuts buy milk [1%, 80%]” is misleading – if 85% of customers buy milk • Support and confidence are not good to represent correlations • So many interestingness measures? (Tan, Kumar, Sritastava @KDD’ 02) Milk No Milk Sum (row) Coffee m, c ~m, c c No Coffee m, ~c ~c Sum(col. ) m ~m all-conf coh 2 9. 26 0. 91 0. 83 9055 100, 000 8. 44 0. 09 0. 05 670 100, 000 9. 18 0. 09 8172 1 0. 5 0. 33 0 92 DB ~m, c m~c ~m~c lift A 1 1000 100 10, 000 A 2 1000 A 3 16 March 2018 m, c 10000 A 4 1000 Data Mining: Concepts and Techniques

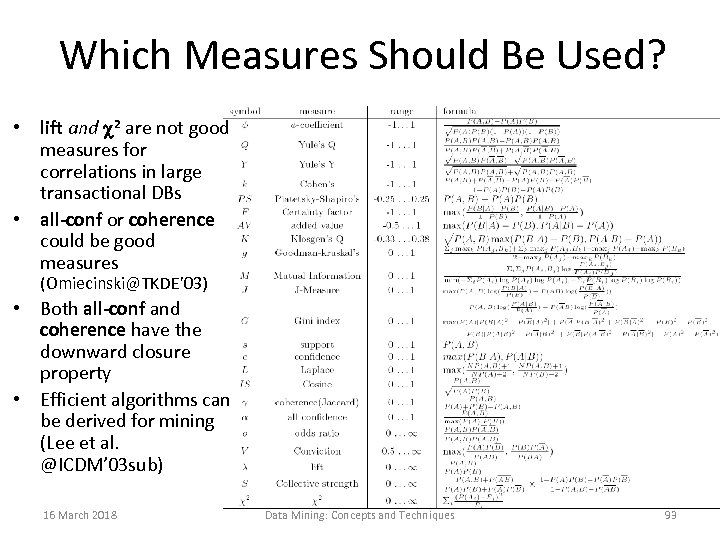

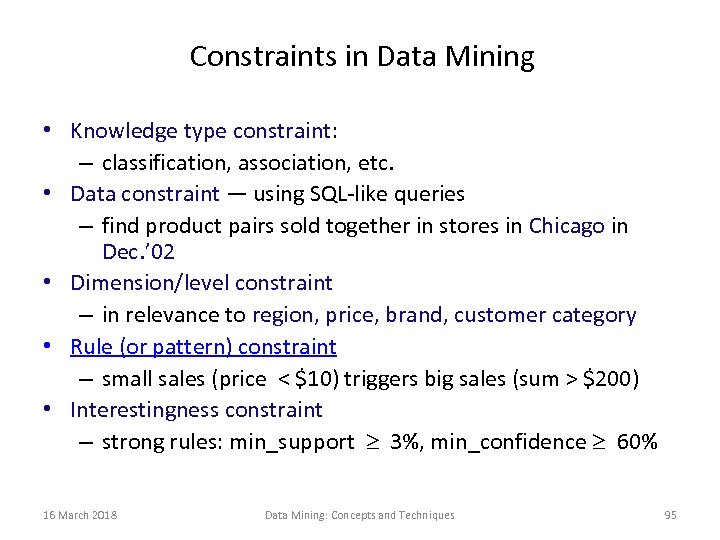

Which Measures Should Be Used? • lift and 2 are not good measures for correlations in large transactional DBs • all-conf or coherence could be good measures (Omiecinski@TKDE’ 03) • Both all-conf and coherence have the downward closure property • Efficient algorithms can be derived for mining (Lee et al. @ICDM’ 03 sub) 16 March 2018 Data Mining: Concepts and Techniques 93