e91ff64d41b7026e3ddff9194cbace3f.ppt

- Number of slides: 69

Chapter 2 Memory and Process Management Part 2: Process Management in OS

Learning Outcome
By the end of this chapter, students will be able to:
1) Explain the major system resource types within a computer system
2) Explain the role of control blocks and interrupts in the dispatching process
3) Describe the various process states
4) Describe the types of process scheduling
5) Describe the life cycle of a process
6) Explain different types of scheduling algorithms
7) Explain how queuing and the scheduler work together
8) Define the purpose of the CPU scheduler
9) Differentiate between preemptive and non-preemptive techniques in scheduling

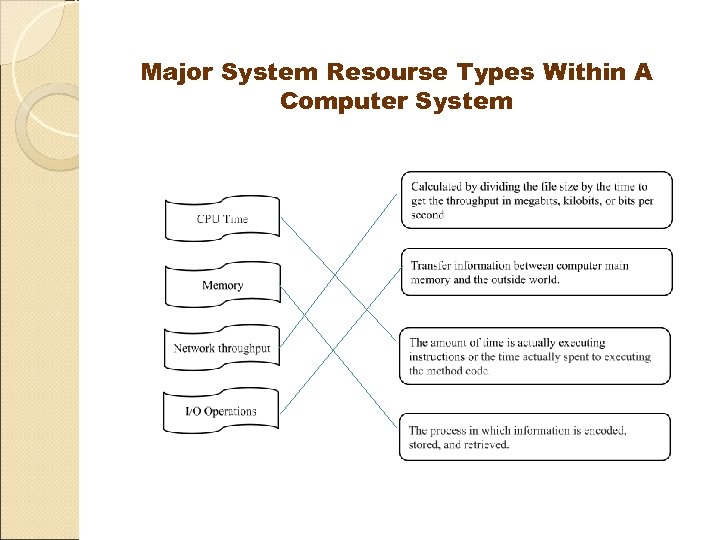

Major System Resource Types Within a Computer System

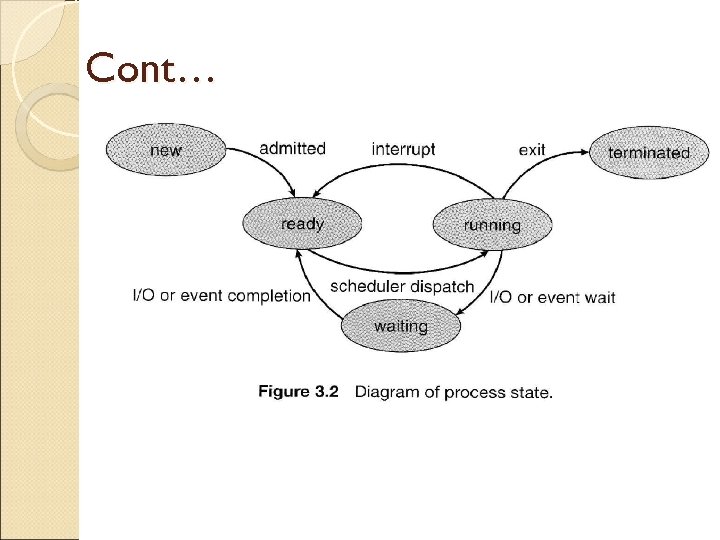

Process State
A process is a program in execution; process execution must progress in a sequential fashion.
A process includes:
1) Program counter
2) Stack
3) Data section
Process states:
- New: the process is being created
- Running: instructions are being executed
- Waiting: the process is waiting for some event to occur
- Ready: the process is waiting to be assigned to a processor
- Terminated: the process has finished execution

Cont…

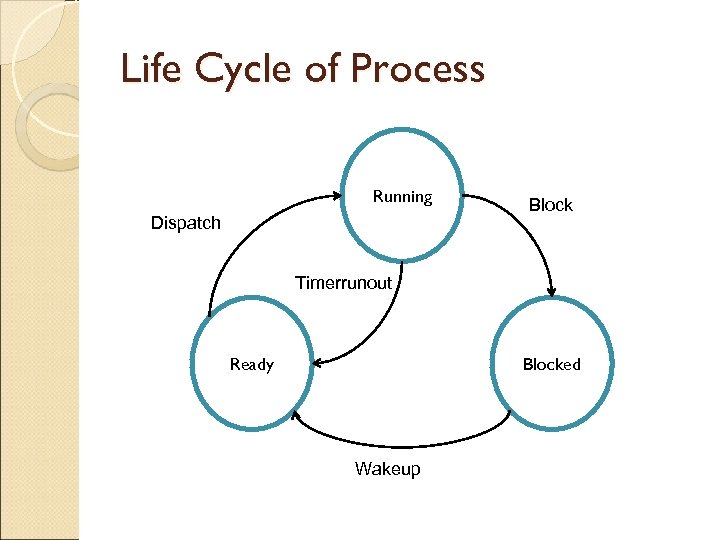

Life Cycle of Process
A process goes through a series of discrete process states, such as the following basic states:
◦ Running state, if it currently has the CPU
◦ Ready state, if it could use a CPU if one were available
◦ Blocked state, if it is waiting for some event to happen (e.g. an I/O completion event) before it can proceed

Cont…
During process state transitions, two types of lists are maintained:
◦ Ready list
◦ Blocked list

Cont…
Ready list
◦ Consists of ready processes
◦ Is maintained in priority order
◦ The next process to receive the CPU is the first process in the list

Cont…
Blocked list
◦ Consists of blocked processes
◦ Is normally unordered
◦ Processes become unblocked in the order in which the events they are waiting for occur
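The two lists can be sketched in Python as follows. This is a minimal illustration, not how any particular operating system stores them: the class name `ProcessLists`, its methods, and the convention that a lower number means a higher priority are all assumptions. The ready list is a heap kept in priority order (an arrival counter keeps equal priorities in FIFO order), and the blocked list is an unordered mapping from the awaited event to the processes waiting on it.

```python
import heapq
import itertools
from collections import defaultdict

class ProcessLists:
    """Hypothetical ready/blocked lists. A lower number means a higher priority."""

    def __init__(self):
        self._ready = []                   # heap of (priority, arrival_seq, name)
        self._seq = itertools.count()      # tie-breaker: FIFO among equal priorities
        self._blocked = defaultdict(list)  # event -> [(name, priority)], unordered

    def make_ready(self, name, priority):
        heapq.heappush(self._ready, (priority, next(self._seq), name))

    def dispatch(self):
        """Remove and return the first (highest-priority) process on the ready list."""
        return heapq.heappop(self._ready)[2]

    def block(self, name, priority, event):
        self._blocked[event].append((name, priority))

    def wakeup(self, event):
        """The event occurred: move every process waiting on it back to the ready list."""
        for name, priority in self._blocked.pop(event, []):
            self.make_ready(name, priority)

lists = ProcessLists()
lists.make_ready("A", 2)
lists.make_ready("B", 1)
lists.make_ready("C", 2)
print(lists.dispatch())          # B (highest priority)
lists.block("B", 1, "disk_io")   # B issues an I/O request and blocks
print(lists.dispatch())          # A (FIFO among the priority-2 processes)
lists.wakeup("disk_io")          # the I/O completes: B is ready again
print(lists.dispatch())          # B (ahead of C)
```

Keying the blocked list by event reflects the slide's point that blocked processes leave in the order their events occur, not in any order the list itself imposes.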

Process State Transition
Dispatching is the act of assigning the CPU to the first process on the ready list (refer to the next diagram).

Life Cycle of Process
[Figure: state transition diagram. Dispatch: Ready → Running; Timer runout: Running → Ready; Block: Running → Blocked; Wakeup: Blocked → Ready]
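The four transitions in the diagram can be written as a small lookup table. A minimal sketch, assuming only the three basic states (New and Terminated are left out); the event strings are taken from the diagram labels, and `State` and `next_state` are hypothetical names.

```python
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

# (current state, event) -> next state, following the life-cycle diagram
TRANSITIONS = {
    (State.READY, "dispatch"): State.RUNNING,
    (State.RUNNING, "timerrunout"): State.READY,
    (State.RUNNING, "block"): State.BLOCKED,
    (State.BLOCKED, "wakeup"): State.READY,
}

def next_state(state, event):
    """Return the state reached by `event`, rejecting transitions the diagram lacks."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {event} from {state.value}") from None

state = State.READY
for event in ["dispatch", "block", "wakeup", "dispatch", "timerrunout"]:
    state = next_state(state, event)
print(state)   # State.READY
```

Note that there is no edge from Blocked straight to Running: a woken process must return to the ready list and be dispatched again.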

Interrupts
An interrupt is an electronic signal. The hardware senses the signal, saves key control information for the currently executing program, and starts the operating system's interrupt handler routine. At that instant, the interrupt ends. The operating system then handles the interrupt. After the interrupt is processed, the dispatcher starts an application program. Eventually, the program that was executing at the time of the interrupt resumes processing.

CPU Scheduler
Selects from among the processes in memory that are ready to execute, and allocates the CPU to one of them.
CPU scheduling decisions may take place when a process:
1. Switches from the running to the waiting state
2. Switches from the running to the ready state
3. Switches from the waiting to the ready state
4. Terminates
Scheduling under 1 and 4 is non-preemptive; all other scheduling is preemptive.

CPU Scheduler
A preemptive scheduling policy interrupts the processing of a job and transfers the CPU to another job.
- The process may be preempted by the operating system when:
1) a new process arrives (perhaps at a higher priority), or
2) an interrupt or signal occurs, or
3) a (frequent) clock interrupt occurs.
A non-preemptive scheduling policy functions without external interrupts. Once a process is executing, it continues to execute until:
- it terminates, or
- it makes an I/O request that would block the process, or
- it makes an operating system call.

CPU Scheduling criteria:
1) CPU utilization
The ratio of the processor's busy time to the total time that elapses while the processes finish.
Processor Utilization = (Processor busy time) / (Processor busy time + Processor idle time)
Goal: maximize, to keep the CPU as busy as possible.
2) Throughput
The amount of work done per unit time interval.
Throughput = (Number of processes completed) / (Time unit)
Goal: maximize.
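Both formulas are simple ratios. A sketch in Python, where the function names and the sample measurements are hypothetical:

```python
def cpu_utilization(busy_time, idle_time):
    """Processor utilization = busy time / (busy time + idle time)."""
    return busy_time / (busy_time + idle_time)

def throughput(completed, elapsed):
    """Number of processes completed per unit of time."""
    return completed / elapsed

# Hypothetical measurements: CPU busy 45 ms and idle 5 ms; 10 processes finish in 2 s.
print(cpu_utilization(45, 5))   # 0.9
print(throughput(10, 2))        # 5.0 processes per second
```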

CPU Scheduling
3) Turnaround time
The sum of the time spent waiting in the ready queue, executing, and doing I/O; that is, the interval from submission to completion.
tat = t(process completed) - t(process submitted)
Goal: minimize.
4) Waiting time
The time spent waiting in the ready queue. It is affected solely by the scheduling policy.
Goal: minimize.
5) Response time
The amount of time it takes to start responding to a request. This criterion is important for interactive systems.
rt = t(first response) - t(submission of request)
Goal: minimize.
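The three time-based criteria can be computed from a per-process record. This sketch assumes the processes do no I/O, so waiting time is simply turnaround time minus CPU time, and it treats the first moment on the CPU as the first response; `Record` and the helper names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Record:
    submitted: int   # time the process was submitted
    first_run: int   # time it first got the CPU (taken as its first response)
    completed: int   # time it finished
    burst: int       # total CPU time it used (no I/O assumed)

def turnaround(r: Record) -> int:
    return r.completed - r.submitted

def waiting(r: Record) -> int:
    # With no I/O, everything in turnaround that is not CPU time is ready-queue time.
    return turnaround(r) - r.burst

def response(r: Record) -> int:
    return r.first_run - r.submitted

# P1 arrives at 0 and runs 0-3; P2 arrives at 1 and runs 3-7 (FIFO order).
p1, p2 = Record(0, 0, 3, 3), Record(1, 3, 7, 4)
print(turnaround(p2), waiting(p2), response(p2))   # 6 2 2
```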

CPU Scheduling
Types of scheduling:
1) Long-term scheduling
2) Medium-term scheduling
3) Short-term scheduling

Long-term scheduling
Determines which programs are admitted to the system for processing, and so controls the degree of multiprogramming.
Once admitted, a program becomes a process and is either:
- added to the queue for the short-term scheduler, or
- swapped out (to disk), and so added to the queue for the medium-term scheduler

Medium-term scheduling
Part of the swapping function between main memory and disk:
- based on how many processes the OS wants available at any one time
- must consider memory management if there is no virtual memory (VM), so it looks at the memory requirements of swapped-out processes

Short-term scheduling (dispatcher)
Executes most frequently, to decide which process to execute next:
- invoked whenever an event occurs that interrupts the current process or provides an opportunity to preempt the current one in favor of another
- events: clock interrupt, I/O interrupt, OS call, signal

Scheduling algorithms:
- First in first out (FIFO)
- Round robin scheduling (RR)
- Shortest job first (SJF)
- Shortest remaining time (SRT)
- Priority (P)
- Multilevel queue (MLQ)
- Multilevel feedback queue (MLFQ)

CPU Scheduling
Types of scheduling algorithm:
Basic strategies
1) First In First Out (FIFO)
2) Shortest Job First (SJF)
3) Round Robin (RR)
4) Priority
Combined strategies
1) Multi-level queue
2) Multi-level feedback queue

First In First Out (FIFO)
The simplest scheduling discipline.
Processes are dispatched according to their arrival time on the ready queue.
The process that has the CPU runs until it completes.
It is a non-preemptive scheduling algorithm.
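A minimal FIFO simulation. The job format `(name, arrival, burst)`, the function name `fifo`, and the sample jobs are assumptions for illustration. Each job is taken from the front of the queue and runs to completion, which also shows how one long job that arrives first makes the short jobs behind it wait.

```python
from collections import deque

def fifo(jobs):
    """jobs: list of (name, arrival, burst). Returns {name: (start, finish)}."""
    # Ready queue ordered by arrival time; sorted() is stable, so ties keep list order.
    ready = deque(sorted(jobs, key=lambda job: job[1]))
    clock, schedule = 0, {}
    while ready:
        name, arrival, burst = ready.popleft()
        start = max(clock, arrival)   # the CPU idles if the next job has not arrived yet
        clock = start + burst         # non-preemptive: the job runs until complete
        schedule[name] = (start, clock)
    return schedule

result = fifo([("A", 0, 24), ("B", 1, 3), ("C", 2, 3)])
print(result)   # {'A': (0, 24), 'B': (24, 27), 'C': (27, 30)}
```

B and C need only 3 time units each, yet they wait 23 and 25 units behind A.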

First In First Out (FIFO)
Disadvantages:
◦ Long jobs make short jobs wait
◦ Unimportant jobs make important jobs wait
◦ Not suitable for scheduling interactive users, because it does not guarantee good response times

First In First Out (FIFO)
Rarely used as a master scheduling algorithm, but often embedded within other algorithms.
◦ E.g. many scheduling algorithms dispatch processes according to priority, but processes with the same priority are dispatched FIFO

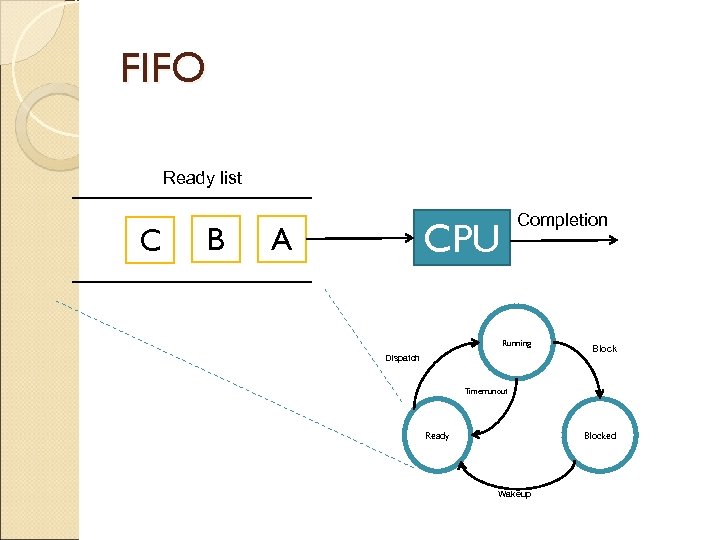

FIFO
[Figure: ready list holding C and B; process A holds the CPU and runs until completion]

First Come First Served (FCFS, i.e. FIFO)
Non-preemptive.
Handles jobs according to their arrival time: the earlier they arrive, the sooner they're served.
Simple algorithm to implement: it uses a FIFO queue.
Good for batch systems; not so good for interactive ones.
Turnaround time is unpredictable.

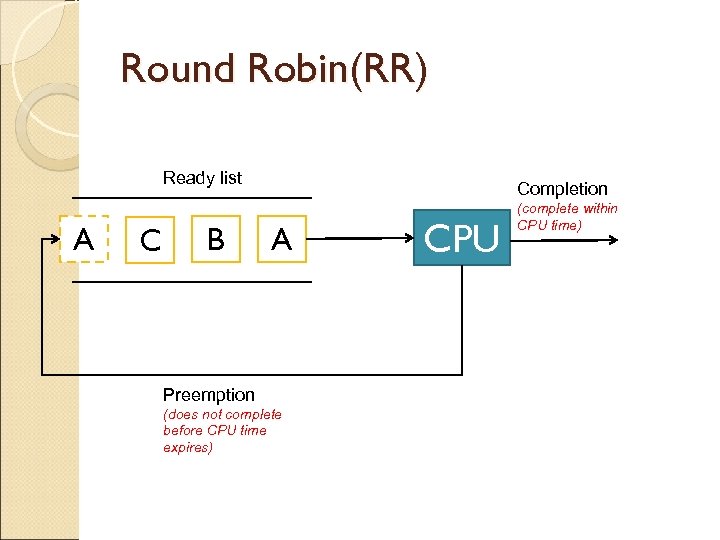

Round Robin (RR)
Processes are dispatched FIFO but are given a limited amount of CPU time (a time slice, or quantum).
If a process does not complete before its CPU time expires, the CPU is preempted and given to the next waiting process.
The preempted process is placed at the back of the ready list.
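A minimal round-robin simulation, assuming all jobs are ready at time 0 and that context-switch overhead is zero; the function name, job format, and quantum value are hypothetical.

```python
from collections import deque

def round_robin(jobs, quantum):
    """jobs: list of (name, burst), all assumed ready at time 0.
    Returns {name: finish_time}; context-switch overhead is ignored."""
    ready = deque(jobs)
    clock, finish = 0, {}
    while ready:
        name, remaining = ready.popleft()        # dispatched FIFO
        run = min(quantum, remaining)            # at most one time slice
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # preempted: back of the ready list
        else:
            finish[name] = clock                 # completed within its time slice
    return finish

print(round_robin([("A", 24), ("B", 3), ("C", 3)], quantum=4))
# {'B': 7, 'C': 10, 'A': 30}
```

The short jobs B and C finish at 7 and 10 instead of waiting for A to run to completion; the cost is that A is interrupted several times.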

Round Robin (RR)
Advantage:
◦ Effective in timesharing environments in which the system needs to guarantee reasonable response times for interactive users

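Round Robin (RR)
[Figure: ready list A, C, B feeding the CPU; a process that completes within its CPU time exits (completion), while a process that does not complete before its CPU time expires is preempted and rejoins the ready list]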

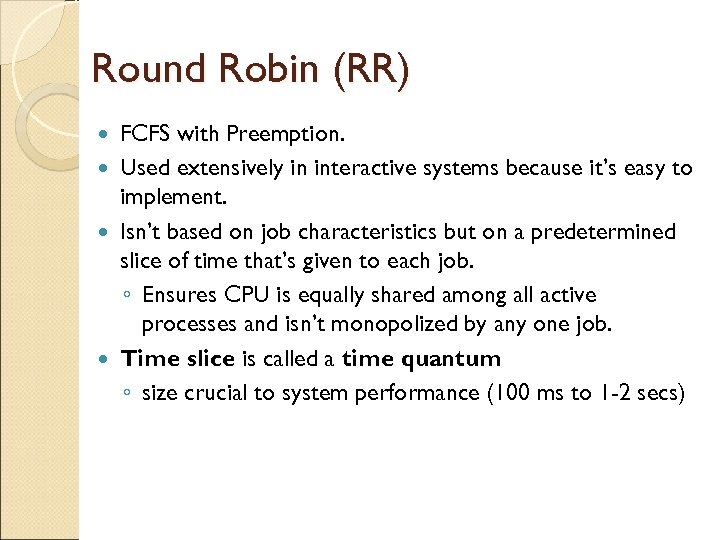

Round Robin (RR)
FCFS with preemption.
Used extensively in interactive systems because it's easy to implement.
Isn't based on job characteristics but on a predetermined slice of time that's given to each job.
◦ Ensures the CPU is equally shared among all active processes and isn't monopolized by any one job.
The time slice is called a time quantum.
◦ Its size is crucial to system performance (100 ms to 1 or 2 s)

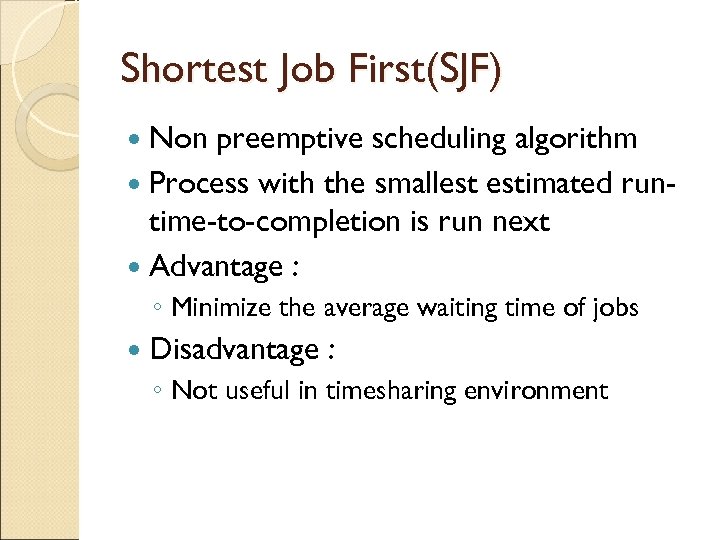

Shortest Job First (SJF)
Non-preemptive scheduling algorithm.
The process with the smallest estimated run time to completion is run next.
Advantage:
◦ Minimizes the average waiting time of jobs
Disadvantage:
◦ Not useful in timesharing environments

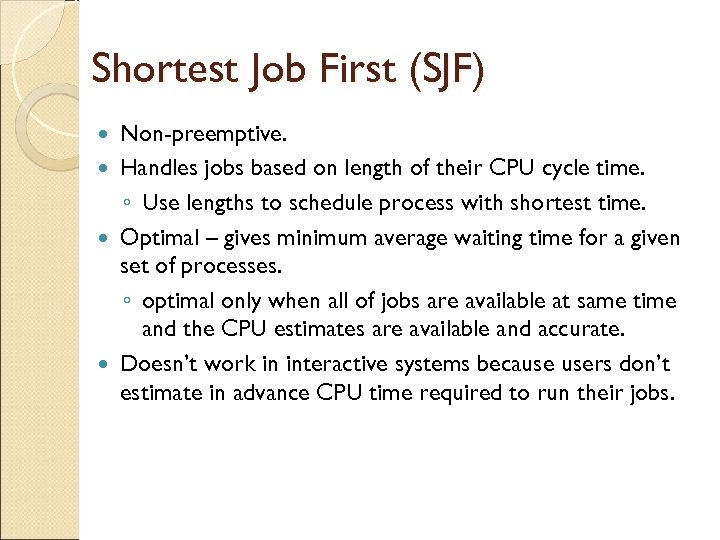

Shortest Job First (SJF)
Non-preemptive.
Handles jobs based on the length of their CPU cycle time.
◦ Uses these lengths to schedule the process with the shortest time.
Optimal: gives the minimum average waiting time for a given set of processes.
◦ Optimal only when all of the jobs are available at the same time and the CPU estimates are available and accurate.
Doesn't work in interactive systems, because users don't estimate in advance the CPU time required to run their jobs.
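A minimal SJF sketch under the conditions the slide gives for optimality: all jobs are available at time 0 and their burst estimates are accurate. The function name and the four burst lengths are hypothetical.

```python
def sjf(jobs):
    """jobs: list of (name, burst), all available at time 0.
    Returns (run order, average waiting time)."""
    order = sorted(jobs, key=lambda job: job[1])   # shortest estimated burst first
    clock, total_wait = 0, 0
    for _, burst in order:
        total_wait += clock    # each job waits for every shorter job ahead of it
        clock += burst         # non-preemptive: runs to completion
    return [name for name, _ in order], total_wait / len(order)

print(sjf([("P1", 6), ("P2", 8), ("P3", 7), ("P4", 3)]))
# (['P4', 'P1', 'P3', 'P2'], 7.0)
```

Running the same jobs in the listed order (P1, P2, P3, P4) would give waits of 0, 6, 14 and 21, an average of 10.25, so putting short jobs first lowers the average.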

Priority scheduling
Non-preemptive.
Gives preferential treatment to important jobs.
◦ Programs with the highest priority are processed first.
◦ They aren't interrupted until their CPU cycles are completed or a natural wait occurs.
If two or more jobs with equal priority are in the READY queue, the processor is allocated to the one that arrived first (first come, first served within a priority).
There are many different methods of assigning priorities, by the system administrator or by the Processor Manager.
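A sketch of non-preemptive priority scheduling with FCFS tie-breaking. The tuple format, the function name, and the convention that a lower number means a higher priority are assumptions (conventions differ between systems).

```python
import heapq

def priority_nonpreemptive(jobs):
    """jobs: list of (name, arrival, burst, priority); a lower number means a higher
    priority (an assumption). Returns the order in which jobs receive the CPU."""
    pending = sorted(jobs, key=lambda job: job[1])   # by arrival time
    ready, order, clock, i = [], [], 0, 0
    while i < len(pending) or ready:
        # Admit every job that has arrived by the current time.
        while i < len(pending) and pending[i][1] <= clock:
            name, arrival, burst, prio = pending[i]
            # Ties on priority are broken by arrival time (FCFS within a priority).
            heapq.heappush(ready, (prio, arrival, i, name, burst))
            i += 1
        if not ready:                 # CPU idle: jump ahead to the next arrival
            clock = pending[i][1]
            continue
        _, _, _, name, burst = heapq.heappop(ready)
        order.append(name)
        clock += burst                # runs until its CPU burst completes
    return order

print(priority_nonpreemptive([("A", 0, 5, 3), ("B", 1, 2, 2), ("C", 2, 4, 1), ("D", 3, 1, 1)]))
# ['A', 'C', 'D', 'B']
```

A is not interrupted even though higher-priority jobs arrive while it runs; afterwards C and D share the top priority and are served in arrival order.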

Priority A preemptive priority scheduling algorithm will preempt the CPU if the priority of the newly arrived process is higher than the priority of the currently running process.
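A preemptive variant can be sketched by re-checking the ready queue every time unit, so a newly arrived higher-priority job takes the CPU at once. The unit-step simulation, the tuple format, and "lower number = higher priority" are assumptions for illustration.

```python
import heapq

def priority_preemptive(jobs):
    """jobs: list of (name, arrival, burst, priority); a lower number means a higher
    priority (an assumption). Advances one time unit at a time.
    Returns {name: finish_time}."""
    pending = sorted(jobs, key=lambda job: job[1])
    ready, finish, clock, i = [], {}, 0, 0
    while len(finish) < len(jobs):
        while i < len(pending) and pending[i][1] <= clock:
            name, arrival, burst, prio = pending[i]
            heapq.heappush(ready, [prio, arrival, i, name, burst])
            i += 1
        if not ready:                 # CPU idle until the next arrival
            clock = pending[i][1]
            continue
        # Re-checked every time unit, so a higher-priority arrival takes over at once.
        job = ready[0]
        job[4] -= 1                   # run the highest-priority job for one unit
        clock += 1
        if job[4] == 0:
            heapq.heappop(ready)
            finish[job[3]] = clock
    return finish

print(priority_preemptive([("A", 0, 5, 3), ("B", 1, 2, 1)]))
# {'B': 3, 'A': 7}
```

A starts at time 0, is preempted when B arrives at time 1, and resumes only after B finishes at time 3.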

Priority
A non-preemptive priority scheduling algorithm does not interrupt the running process; it simply puts the newly arrived (higher-priority) process at the head of the ready queue.

Priority
Disadvantage:
◦ Indefinite blocking, or process starvation: a low-priority process may wait forever while higher-priority processes keep arriving. This can be solved by a technique called aging, in which the priority of a long-waiting process is gradually increased.
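Aging can be sketched by computing an effective priority from the base priority and the time already spent waiting. The boost rate (one level per 10 time units), the floor at 0, "lower number = higher priority", and the function names are all assumptions.

```python
def effective_priority(base, waited, ticks_per_boost=10):
    """Aging: each `ticks_per_boost` time units spent waiting raise the priority one level.
    A lower number means a higher priority; 0 is the highest (both assumptions)."""
    return max(0, base - waited // ticks_per_boost)

def choose(ready):
    """ready: list of (name, base_priority, time_waited).
    Returns the name of the job with the best effective priority."""
    return min(ready, key=lambda job: effective_priority(job[1], job[2]))[0]

# A low-priority job that has waited 60 units now beats a fresh high-priority job.
print(choose([("old", 5, 60), ("new", 1, 0)]))   # old
print(choose([("old", 5, 20), ("new", 1, 0)]))   # new
```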

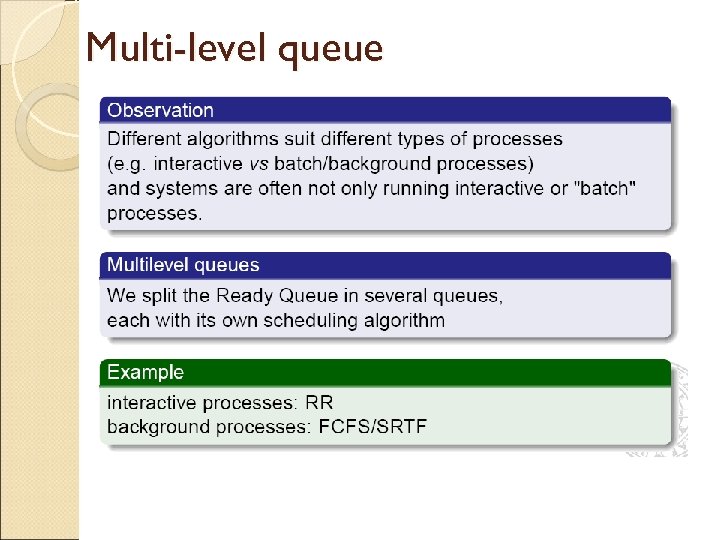

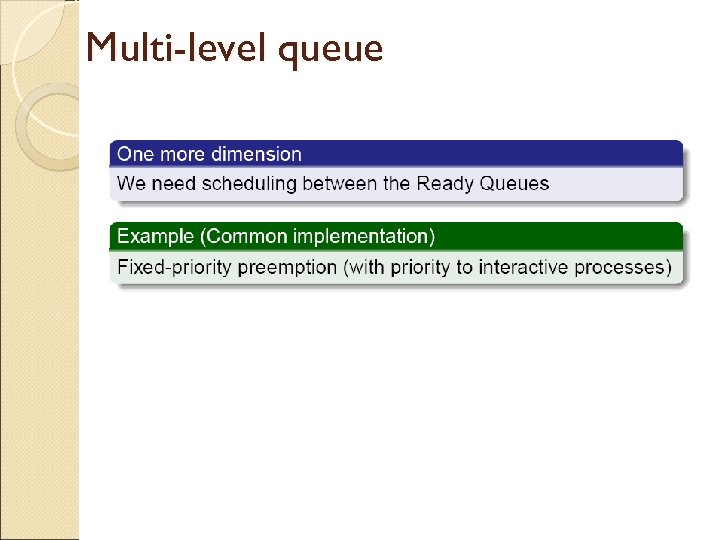

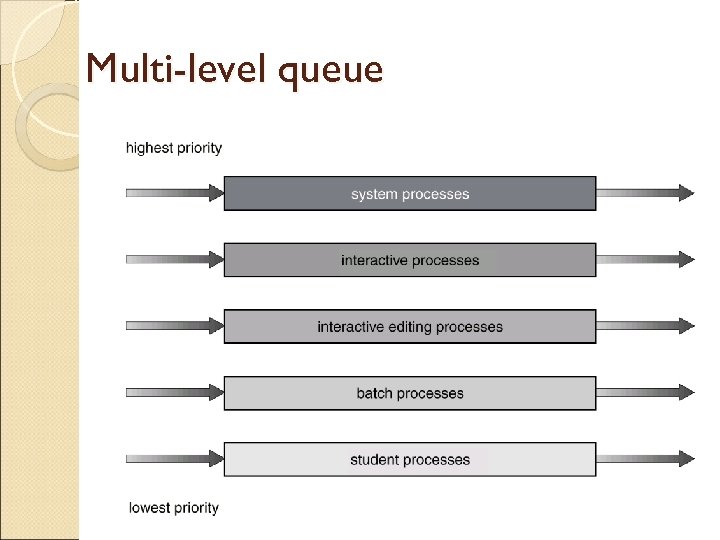

Multilevel Queue
Partitions the ready queue into several separate queues.
Processes are permanently assigned to one queue based on some property of the process, e.g.:
◦ Interactive process
◦ Batch process

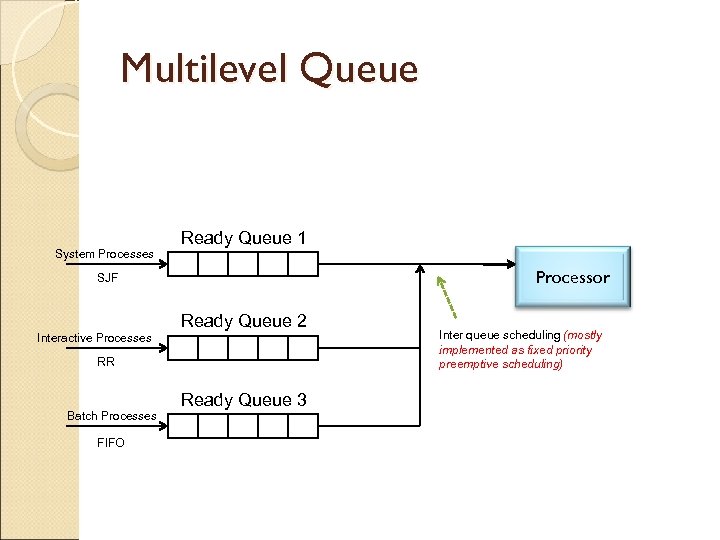

Multilevel Queue
Each queue has its own scheduling algorithm. In addition, there must be scheduling between the queues, which is mostly implemented as fixed-priority preemptive scheduling.

Multilevel Queue
[Figure: system processes → Ready Queue 1 (SJF); interactive processes → Ready Queue 2 (RR); batch processes → Ready Queue 3 (FIFO); all queues feed one processor through inter-queue scheduling, mostly implemented as fixed-priority preemptive scheduling]
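The choice of the next process in a multilevel queue can be sketched as follows. This is a simplification under stated assumptions: the class and method names are hypothetical, every queue is served FIFO here (rather than each queue having its own algorithm such as SJF or RR), and preempting a running process when a higher queue becomes non-empty is not modeled; only the fixed-priority selection between queues is.

```python
from collections import deque

class MultilevelQueue:
    """Queue 0 has the highest priority. A process stays in the queue it was
    assigned to; every queue is served FIFO here for simplicity."""

    def __init__(self, levels):
        self.queues = [deque() for _ in range(levels)]

    def add(self, name, level):
        self.queues[level].append(name)   # permanent assignment by process type

    def next_process(self):
        # Fixed-priority scheduling between queues: a lower queue is served
        # only when every higher queue is empty.
        for queue in self.queues:
            if queue:
                return queue.popleft()
        return None

mlq = MultilevelQueue(3)   # 0 = system, 1 = interactive, 2 = batch processes
mlq.add("batch-job", 2)
mlq.add("editor", 1)
mlq.add("pager", 0)
print([mlq.next_process() for _ in range(3)])   # ['pager', 'editor', 'batch-job']
```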

Multi-level queue

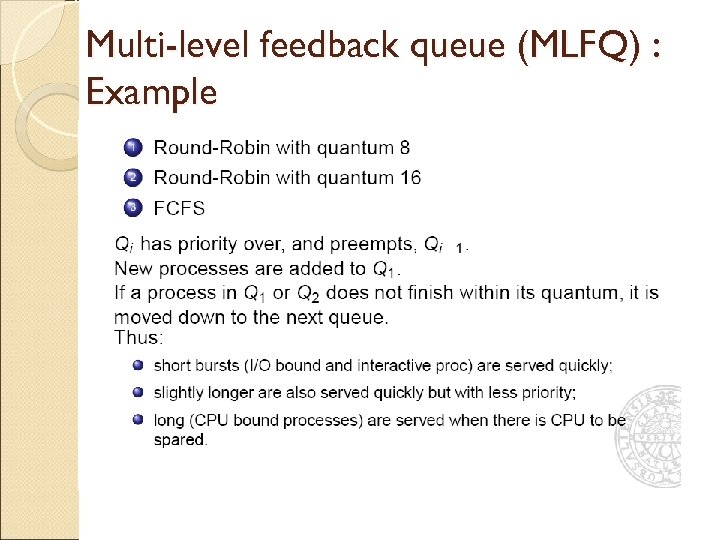

Multilevel Feedback Queue (MLFQ) Has a number of queues, each assigned a different priority level. A job that is ready to run can only be on a single queue.

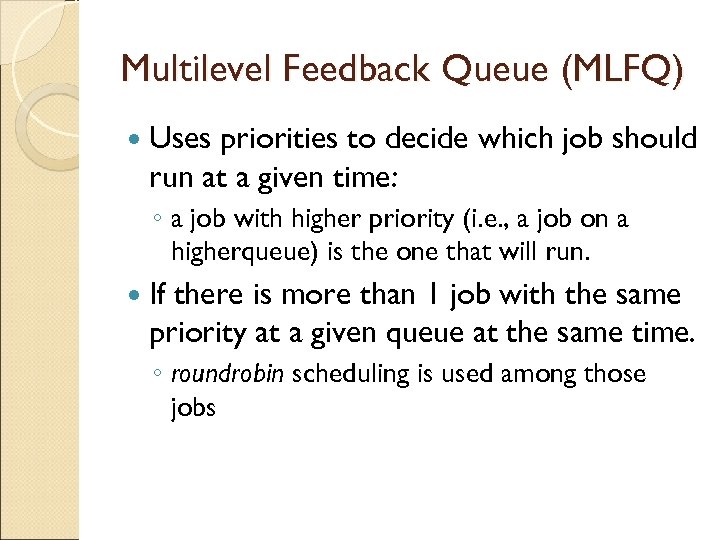

Multilevel Feedback Queue (MLFQ)
Uses priorities to decide which job should run at a given time:
◦ a job with higher priority (i.e., a job on a higher queue) is the one that will run.
If more than one job has the same priority (is on the same queue) at the same time:
◦ round-robin scheduling is used among those jobs

Multilevel Feedback Queue (MLFQ)
MLFQ varies the priority of a job based on its observed behavior. E.g.:
◦ If a job repeatedly releases the CPU while waiting for input from the keyboard, MLFQ keeps its priority high.
◦ If a job uses the CPU intensively, MLFQ reduces its priority.
In this way, MLFQ tries to learn about processes as they run, and uses the history of a job to predict its future behavior.

Multilevel Feedback Queue (MLFQ)
Rules of MLFQ:
◦ Rule 1: If Priority(A) > Priority(B), A runs and B doesn't.
◦ Rule 2: If Priority(A) = Priority(B), A and B run in round-robin fashion.
◦ Rule 3: When a job enters the system, it is placed at the highest priority (the topmost queue).
◦ Rule 4: Once a job uses up its quantum at a given level (regardless of how many times it has given up the CPU), its priority is reduced (it moves down one queue).
◦ Rule 5: After some time period S, move all the jobs in the system to the topmost queue.

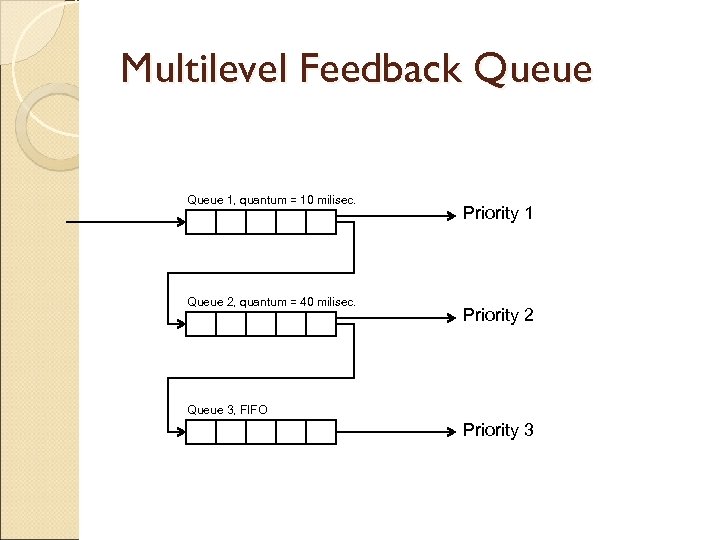

Multilevel Feedback Queue
[Figure: Queue 1 (priority 1), quantum = 10 ms; Queue 2 (priority 2), quantum = 40 ms; Queue 3 (priority 3), FIFO]
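A small simulation of this three-queue configuration (10 ms, 40 ms, then FIFO). It is a sketch under strong assumptions: every job arrives at time 0 and never blocks for I/O, so the priority-boost rule (Rule 5) and preemption by new arrivals are left out; the function name and job lengths are hypothetical.

```python
from collections import deque

# Quanta from the figure: queue 1 = 10 ms, queue 2 = 40 ms, queue 3 = FIFO (None).
QUANTA = [10, 40, None]

def mlfq(jobs):
    """jobs: list of (name, burst_ms), all arriving at time 0 and never blocking.
    Applies Rules 1-4; Rule 5 (the periodic priority boost) is omitted.
    Returns {name: finish_time_ms}."""
    queues = [deque() for _ in QUANTA]
    for name, burst in jobs:
        queues[0].append((name, burst))          # Rule 3: start in the topmost queue
    clock, finish = 0, {}
    while any(queues):
        # Rule 1: always serve the highest-priority non-empty queue.
        level = next(i for i, q in enumerate(queues) if q)
        # Rule 2: jobs at the same level take turns in FIFO order.
        name, remaining = queues[level].popleft()
        quantum = QUANTA[level]
        run = remaining if quantum is None else min(quantum, remaining)
        clock += run
        if remaining > run:
            queues[level + 1].append((name, remaining - run))   # Rule 4: demote one level
        else:
            finish[name] = clock
    return finish

print(mlfq([("A", 5), ("B", 30), ("C", 100)]))
# {'A': 5, 'B': 45, 'C': 135}
```

The short job A finishes inside its first 10 ms quantum, B finishes in queue 2, and the CPU-intensive job C sinks to the FIFO queue, which matches the behavior the rules are designed to produce.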

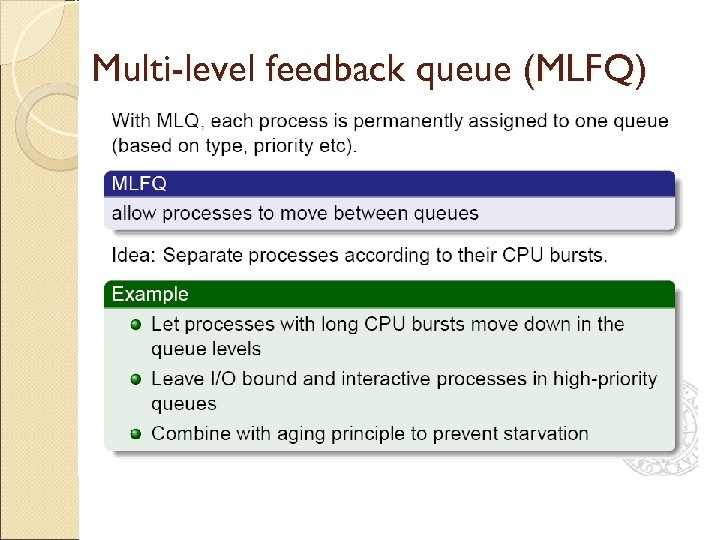

Multi-level feedback queue (MLFQ)

Multi-level feedback queue (MLFQ): Example

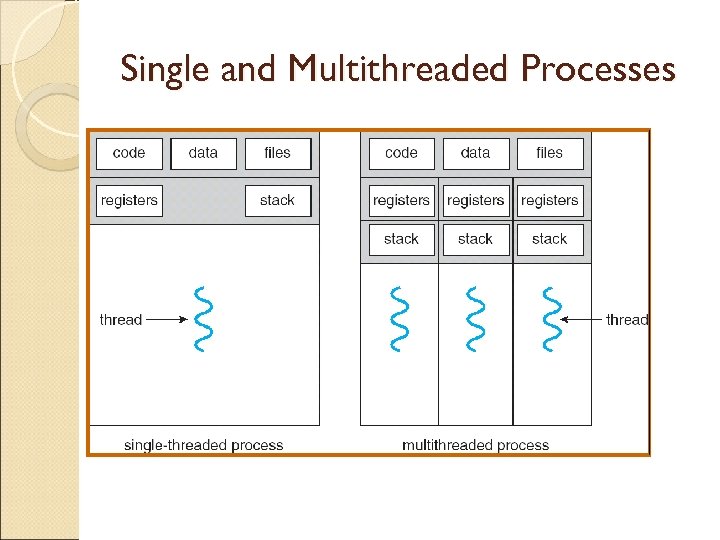

Single and Multithreaded Processes

Benefits
- Responsiveness
- Resource sharing
- Economy
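Resource sharing can be seen with Python's `threading` module, used here as a stand-in for a native thread library: all threads of a process see the same objects, so four threads can update one counter, provided access is synchronized with a lock. The variable and function names are hypothetical.

```python
import threading

shared = {"hits": 0}       # one object in the process's memory, visible to all its threads
lock = threading.Lock()    # shared data still needs synchronization

def worker(times):
    for _ in range(times):
        with lock:
            shared["hits"] += 1

threads = [threading.Thread(target=worker, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(shared["hits"])      # 4000
```

Separate processes would each get their own copy of `shared` and would need an explicit inter-process mechanism to combine results.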

User Threads
Thread management is done by a user-level threads library.
Three primary thread libraries:
◦ POSIX Pthreads
◦ Win32 threads
◦ Java threads

Kernel Threads
Supported by the kernel.
Examples:
◦ Windows XP/2000
◦ Solaris
◦ Linux
◦ Tru64 UNIX
◦ Mac OS X

Multithreading Models
- Many-to-One
- One-to-One
- Many-to-Many

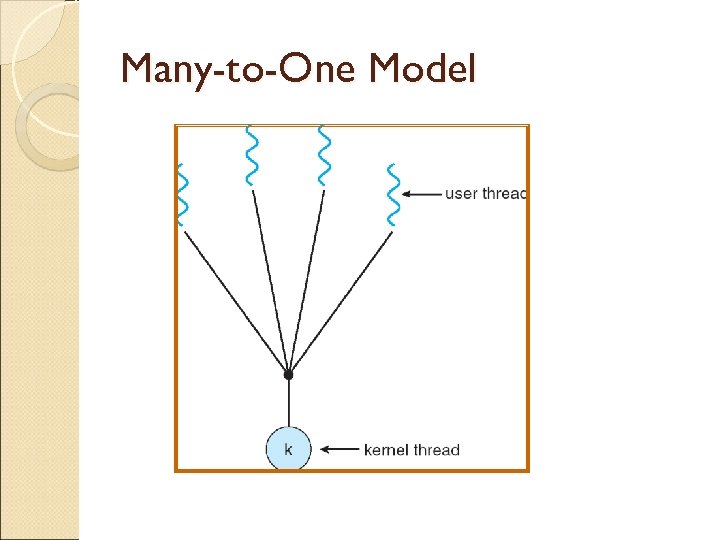

Many-to-One
Many user-level threads are mapped to a single kernel thread.
Examples:
◦ Solaris Green Threads

Many-to-One Model

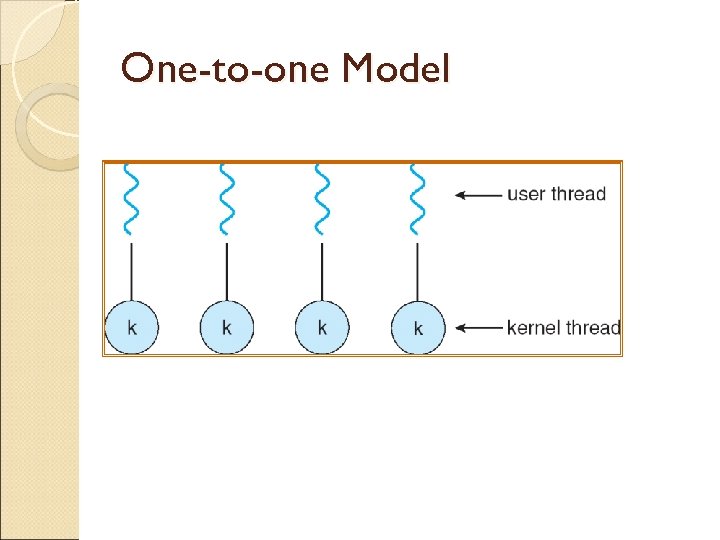

One-to-One
Each user-level thread maps to a kernel thread.
Examples:
◦ Windows NT/XP/2000
◦ Linux
◦ Solaris 9 and later

One-to-one Model

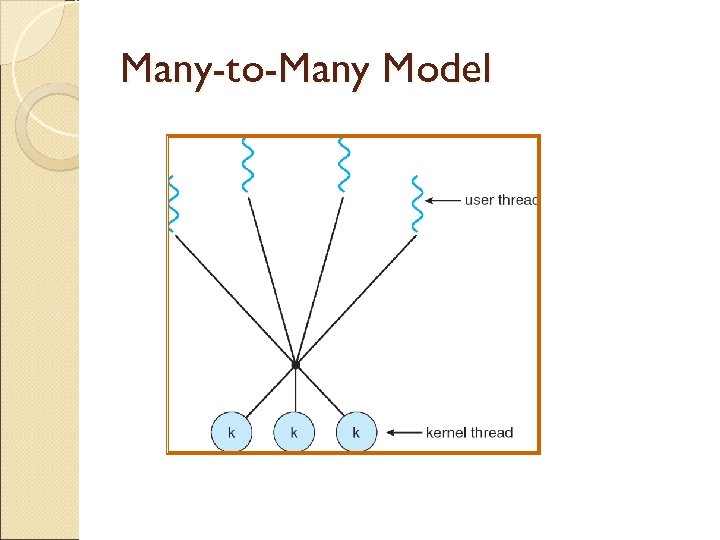

Many-to-Many Model
Allows many user-level threads to be mapped to many kernel threads.
Allows the operating system to create a sufficient number of kernel threads.
Examples:
◦ Solaris prior to version 9
◦ Windows NT/2000 with the ThreadFiber package

Many-to-Many Model

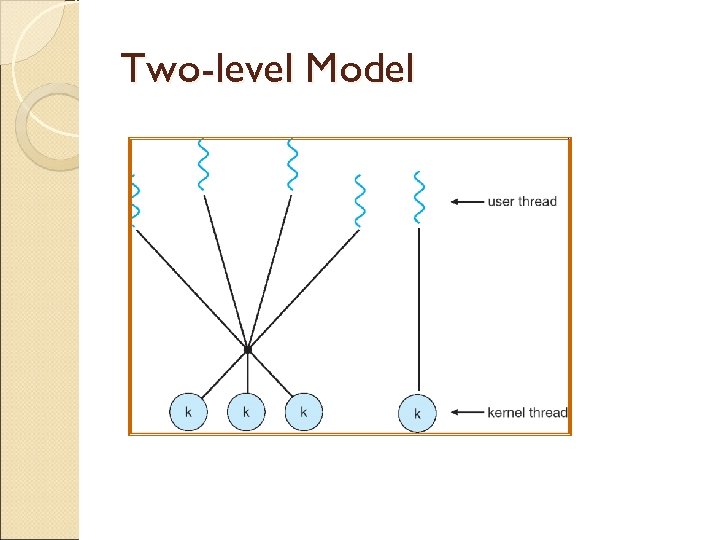

Two-level Model
Similar to M:M, except that it also allows a user thread to be bound to a kernel thread.

Two-level Model

Scheduler Activations
Both the M:M and two-level models require communication to maintain the appropriate number of kernel threads allocated to the application.
Scheduler activations provide upcalls, a communication mechanism from the kernel to the thread library.
This communication allows an application to maintain the correct number of kernel threads.

Pthreads
A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization.
The API specifies the behavior of the thread library; the implementation is up to the developers of the library.
Common in UNIX operating systems (Solaris, Linux, Mac OS X).

Windows XP Threads
Implements the one-to-one mapping.
Each thread contains:
◦ A thread ID
◦ A register set
◦ Separate user and kernel stacks
◦ A private data storage area
The register set, stacks, and private storage area are known as the context of the thread.
The primary data structures of a thread include:
◦ ETHREAD (executive thread block)
◦ KTHREAD (kernel thread block)
◦ TEB (thread environment block)

Linux Threads
Linux refers to them as tasks rather than threads.
Thread creation is done through the clone() system call.
clone() allows a child task to share the address space of the parent task (process).

Java Threads
Java threads are managed by the JVM.
Java threads may be created by:
◦ Extending the Thread class
◦ Implementing the Runnable interface
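The two creation styles have a close counterpart in Python's `threading` module: subclass `threading.Thread` and override `run()` (like extending `Thread`), or pass a callable as `target` (roughly like implementing `Runnable`). This is an analogy, not Java code, and the class and function names are hypothetical.

```python
import threading

results = []

class Greeter(threading.Thread):        # analogue of extending Thread
    def run(self):
        results.append("subclass")

def task():                              # analogue of Runnable.run()
    results.append("target")

workers = [Greeter(), threading.Thread(target=task)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(sorted(results))                   # ['subclass', 'target']
```

As with `Runnable` in Java, passing a callable keeps the task separate from the thread object, so the same function can be handed to several threads.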

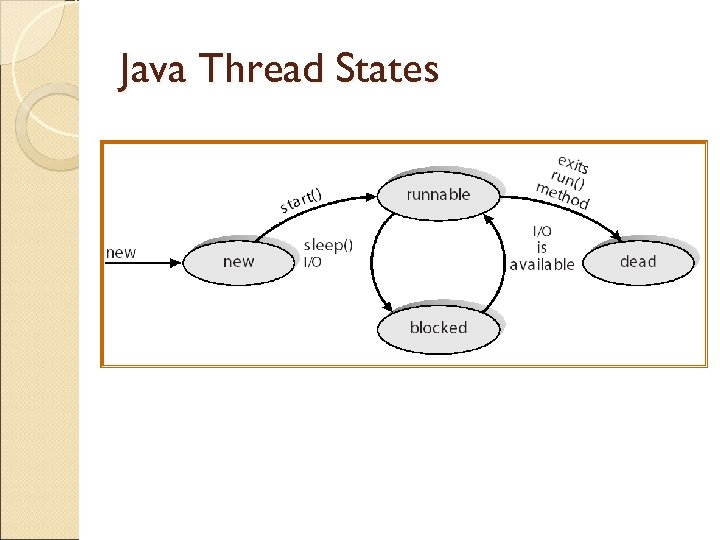

Java Thread States
