6620e68b69a61c72d8ef13f5c399f5c5.ppt

- Number of slides: 130

Chapter 2: Decisions and Games

“Доверяй, но проверяй” (“Trust, but verify”): a Russian proverb, popularized in English by Ronald Reagan.

Criteria for evaluating systems
• Computational efficiency
• Distribution of computation
• Communication efficiency
• Social welfare: $\max_{o} \sum_i u_i(o)$, where $u_i$ is the utility of player $i$ and $o$ ranges over outcomes
• Surplus: social welfare of the outcome minus social welfare of the status quo
  – Constant-sum games have zero surplus; markets are not constant-sum.
• Pareto efficiency: an outcome $o$ is Pareto efficient if there is no other outcome $o'$ such that some agent has higher utility in $o'$ than in $o$ and no agent has lower utility
  – Implied by social-welfare maximization
• Individual rationality: participating in the negotiation (or individual deal) is no worse than not participating
• Stability: no agent can increase its utility by changing its strategy (given that everyone else keeps the same strategy)
• Symmetry: no agent should be inherently preferred (e.g., a dictator)
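
The social-welfare and Pareto-efficiency criteria can be checked mechanically for a finite game. The sketch below is a minimal illustration, assuming outcomes are stored as a Python dict from action profiles to payoff tuples; the function names are hypothetical, and the payoffs are those of the prisoners’ dilemma used later in this chapter.

```python
# Prisoners' dilemma payoffs (Kelly, Ned), indexed by (Kelly's action, Ned's action).
PD = {
    ("Confess", "Confess"): (-10, -10),
    ("Confess", "Don't"): (0, -30),
    ("Don't", "Confess"): (-30, 0),
    ("Don't", "Don't"): (-1, -1),
}


def social_welfare(payoffs):
    """Sum of all players' utilities for one outcome."""
    return sum(payoffs)


def welfare_maximizers(game):
    """Outcomes attaining max over outcomes o of sum_i u_i(o)."""
    best = max(social_welfare(p) for p in game.values())
    return [o for o, p in game.items() if social_welfare(p) == best]


def pareto_dominates(p, q):
    """True if p is at least as good as q for every agent and strictly better for some agent."""
    return all(a >= b for a, b in zip(p, q)) and any(a > b for a, b in zip(p, q))


def pareto_efficient(game):
    """Outcomes that no other outcome Pareto-dominates."""
    return [o for o, p in game.items()
            if not any(pareto_dominates(q, p) for q in game.values())]


print(welfare_maximizers(PD))  # [("Don't", "Don't")]
print(pareto_efficient(PD))    # every outcome except (Confess, Confess)
```

Every welfare maximizer is Pareto efficient (a Pareto improvement would raise the sum), which is why the slide lists Pareto efficiency as implied by social-welfare maximization.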

The term Pareto efficient…
• The term Pareto efficient is named after Vilfredo Pareto, an Italian economist who used the concept in his studies of economic efficiency and income distribution.
• If an economic system is not Pareto efficient, then some individual can be made better off without anyone being made worse off. It is commonly accepted that such inefficient outcomes should be avoided, so Pareto efficiency is an important criterion for evaluating economic systems and political policies.
• Pareto is also credited with the 80/20 rule, which describes the unequal distribution of wealth in his country: he observed that twenty percent of the people owned eighty percent of the wealth.

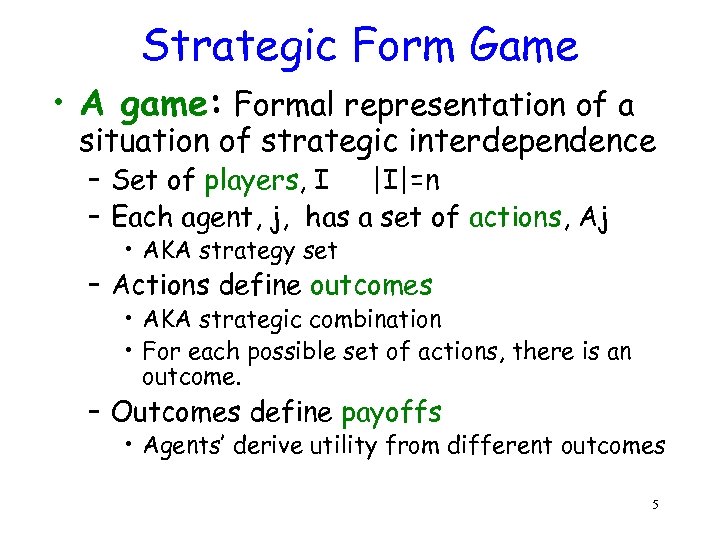

Strategic-form game
• A game is a formal representation of a situation of strategic interdependence.
  – A set of players $I$, with $|I| = n$
  – Each agent $j$ has a set of actions $A_j$ (a.k.a. its strategy set).
  – Actions define outcomes (a.k.a. strategic combinations): for each possible combination of actions, there is an outcome.
  – Outcomes define payoffs: agents derive utility from different outcomes.

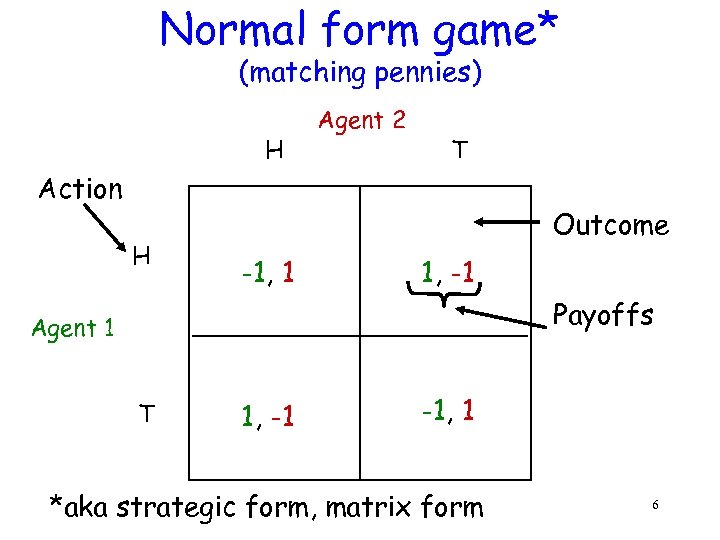

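Normal form game* (matching pennies)

| Agent 1 \ Agent 2 | H | T |
|---|---|---|
| H | -1, 1 | 1, -1 |
| T | 1, -1 | -1, 1 |

Rows and columns are the agents' actions, each cell is an outcome, and each cell lists the payoffs (agent 1, agent 2).

*a.k.a. strategic form, matrix form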

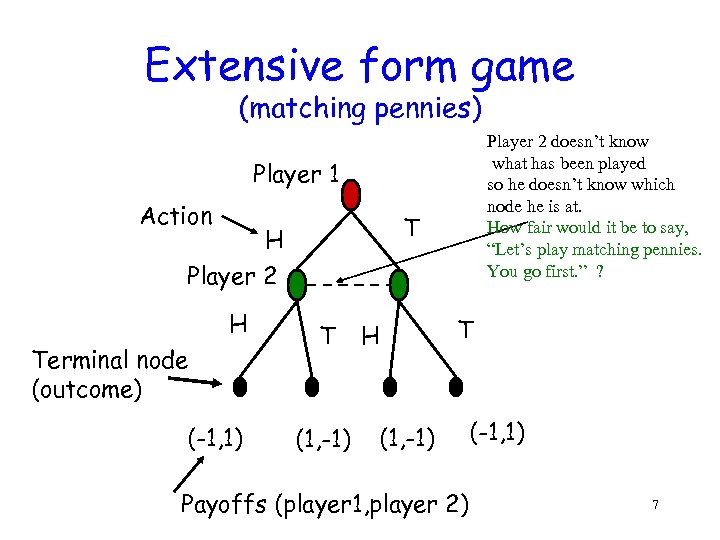

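Extensive form game (matching pennies)
Player 2 doesn’t know what has been played, so he doesn’t know which node he is at (both of his decision nodes lie in one information set). How fair would it be to say, “Let’s play matching pennies. You go first.”?
Player 1 chooses an action (H or T); then Player 2 chooses H or T. Terminal nodes (outcomes), with payoffs (player 1, player 2):
• Player 1 H, Player 2 H: (-1, 1)
• Player 1 H, Player 2 T: (1, -1)
• Player 1 T, Player 2 H: (1, -1)
• Player 1 T, Player 2 T: (-1, 1)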

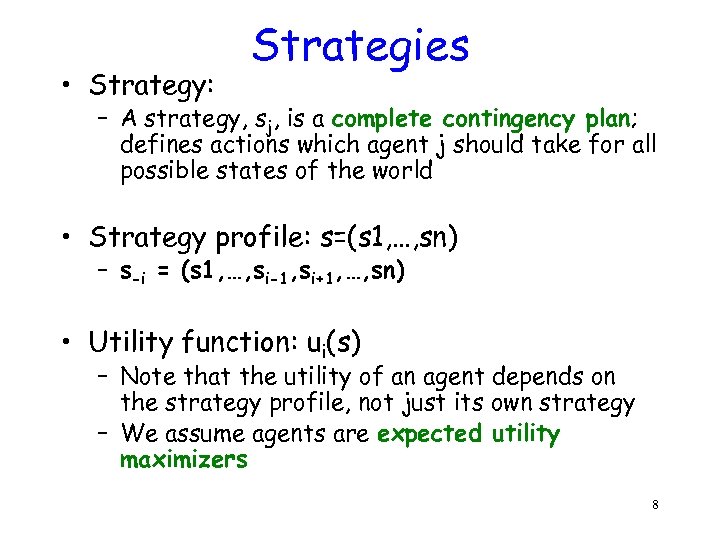

Strategies
• Strategy: a strategy $s_j$ is a complete contingency plan; it defines the action agent $j$ should take in every possible state of the world.
• Strategy profile: $s = (s_1, \dots, s_n)$
  – $s_{-i} = (s_1, \dots, s_{i-1}, s_{i+1}, \dots, s_n)$
• Utility function: $u_i(s)$
  – The utility of an agent depends on the whole strategy profile, not just on its own strategy.
  – We assume agents are expected-utility maximizers.

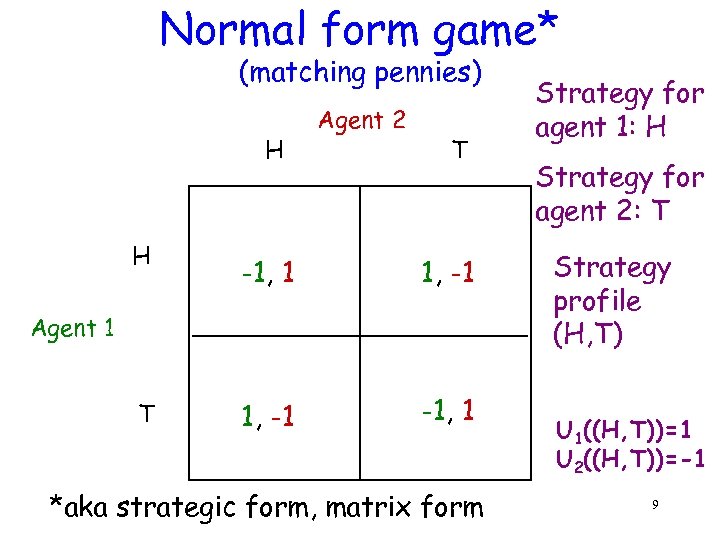

Normal form game* (matching pennies)

| Agent 1 \ Agent 2 | H | T |
|---|---|---|
| H | -1, 1 | 1, -1 |
| T | 1, -1 | -1, 1 |

*a.k.a. strategic form, matrix form
• Strategy for agent 1: H
• Strategy for agent 2: T
• Strategy profile: (H, T)
• $u_1((H, T)) = 1$, $u_2((H, T)) = -1$
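
A normal-form game is essentially a lookup table from strategy profiles to payoff vectors. The sketch below assumes that representation (a Python dict; the names `MATCHING_PENNIES` and `utility` are hypothetical) and evaluates $u_i$ for the profile (H, T) from the slide.

```python
# Matching pennies: each profile (agent 1's action, agent 2's action) maps to (u1, u2).
MATCHING_PENNIES = {
    ("H", "H"): (-1, 1),
    ("H", "T"): (1, -1),
    ("T", "H"): (1, -1),
    ("T", "T"): (-1, 1),
}


def utility(game, profile, player):
    """u_i(s): utility of `player` (0-based index) under the strategy profile `profile`."""
    return game[profile][player]


profile = ("H", "T")
print(utility(MATCHING_PENNIES, profile, 0))  # 1
print(utility(MATCHING_PENNIES, profile, 1))  # -1
```

Note that the utility is looked up from the whole profile, not from a player's own action alone.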

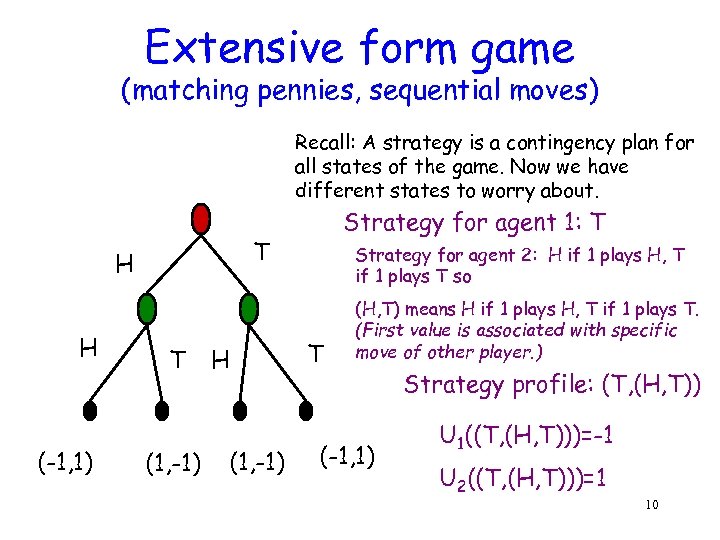

Extensive form game (matching pennies, sequential moves)
Recall: a strategy is a contingency plan for all states of the game. Now we have different states to worry about, because agent 2 sees agent 1's move before choosing.
Terminal payoffs (player 1, player 2): (H, H) → (-1, 1); (H, T) → (1, -1); (T, H) → (1, -1); (T, T) → (-1, 1).
• Strategy for agent 1: T
• Strategy for agent 2: H if 1 plays H, T if 1 plays T, written (H, T). (The first value is the response to agent 1 playing H; the second is the response to agent 1 playing T.)
• Strategy profile: (T, (H, T))
• $u_1((T, (H, T))) = -1$, $u_2((T, (H, T))) = 1$
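
To make “a strategy is a complete contingency plan” concrete, the sketch below enumerates agent 2's strategies in the sequential game as pairs (reply to H, reply to T) and plays out the profile (T, (H, T)). The names `P2_STRATEGIES` and `play` are hypothetical, chosen for illustration.

```python
from itertools import product

ACTIONS = ("H", "T")

# Terminal payoffs (player 1, player 2), indexed by (player 1's move, player 2's move).
PAYOFF = {("H", "H"): (-1, 1), ("H", "T"): (1, -1),
          ("T", "H"): (1, -1), ("T", "T"): (-1, 1)}

# A strategy for player 2 is a plan (reply to H, reply to T),
# so player 2 has 2 * 2 = 4 strategies even though each node offers only 2 actions.
P2_STRATEGIES = list(product(ACTIONS, repeat=2))


def play(s1, s2):
    """Follow the tree: player 1 moves, then player 2 replies according to the plan s2."""
    reply = s2[ACTIONS.index(s1)]
    return PAYOFF[(s1, reply)]


print(len(P2_STRATEGIES))     # 4
print(play("T", ("H", "T")))  # (-1, 1)
```

With the plan (H, T), player 2 always matches player 1, so moving first in sequential matching pennies is a losing proposition for player 1.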

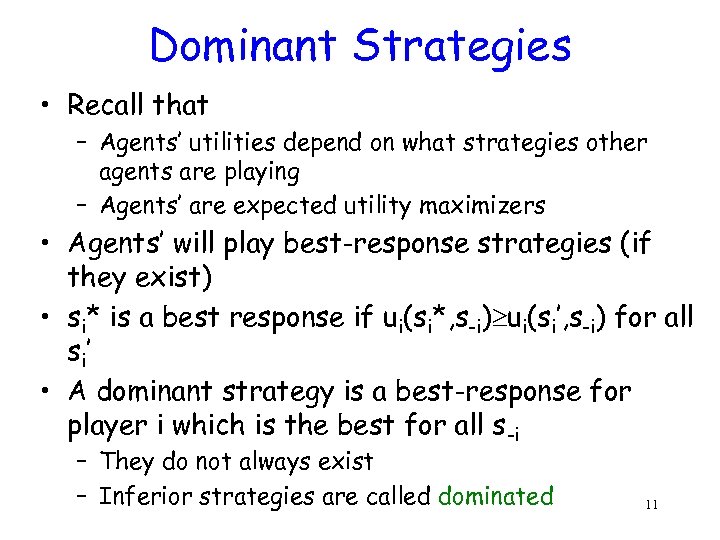

Dominant strategies
• Recall that
  – agents’ utilities depend on what strategies other agents are playing;
  – agents are expected-utility maximizers.
• Agents will play best-response strategies (if they exist).
• $s_i^*$ is a best response if $u_i(s_i^*, s_{-i}) \ge u_i(s_i', s_{-i})$ for all $s_i'$.
• A dominant strategy is a strategy that is a best response for player $i$ against every $s_{-i}$.
  – Dominant strategies do not always exist.
  – Inferior strategies are called dominated.
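
The definitions above translate directly into code. This is a minimal sketch for agent 1 in matching pennies (the function names are hypothetical): it shows that agent 1's best response changes with agent 2's action, so no dominant strategy exists.

```python
# Matching pennies payoffs (u1, u2), indexed by (agent 1's action, agent 2's action).
GAME = {("H", "H"): (-1, 1), ("H", "T"): (1, -1),
        ("T", "H"): (1, -1), ("T", "T"): (-1, 1)}
ACTIONS = ("H", "T")


def best_responses_of_agent1(game, other_action):
    """All s1* with u1(s1*, s_-1) >= u1(s1', s_-1) for every s1', given agent 2's action."""
    values = {a: game[(a, other_action)][0] for a in ACTIONS}
    best = max(values.values())
    return [a for a, v in values.items() if v == best]


def dominant_strategy_of_agent1(game):
    """A strategy that is a best response to every action of agent 2, or None if none exists."""
    for a in ACTIONS:
        if all(a in best_responses_of_agent1(game, b) for b in ACTIONS):
            return a
    return None


print(best_responses_of_agent1(GAME, "H"))  # ['T']
print(best_responses_of_agent1(GAME, "T"))  # ['H']
print(dominant_strategy_of_agent1(GAME))    # None
```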

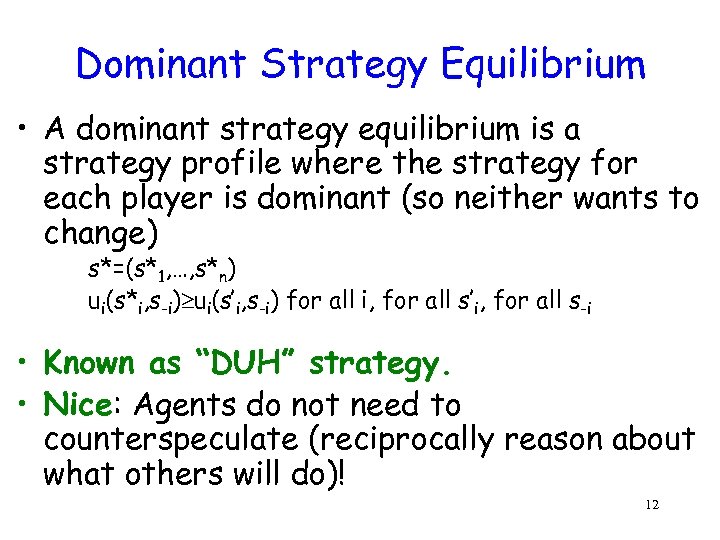

Dominant-strategy equilibrium
• A dominant-strategy equilibrium is a strategy profile in which every player's strategy is dominant (so no one wants to change): $s^* = (s_1^*, \dots, s_n^*)$ with $u_i(s_i^*, s_{-i}) \ge u_i(s_i', s_{-i})$ for all $i$, all $s_i'$, and all $s_{-i}$.
• Known as the “DUH” strategy.
• Nice: agents do not need to counterspeculate (reason reciprocally about what the others will do)!
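
For a two-player game, a dominant-strategy equilibrium is just the combination of each player's dominant strategies, if both exist. The sketch below assumes the dict representation used earlier (hypothetical names) and applies it to the prisoners’ dilemma payoffs from the following slides.

```python
from itertools import product


def dominant_strategies(game, player, actions):
    """Strategies of `player` (0 or 1) that are best responses to every opponent action."""
    own, other = actions[player], actions[1 - player]

    def u(mine, theirs):
        # Rebuild the profile in (row, column) order before looking up the payoff.
        profile = (mine, theirs) if player == 0 else (theirs, mine)
        return game[profile][player]

    return [s for s in own
            if all(u(s, t) >= u(s2, t) for t in other for s2 in own)]


def dominant_strategy_equilibria(game, actions):
    """Profiles in which every player's strategy is dominant (empty if none exists)."""
    return list(product(dominant_strategies(game, 0, actions),
                        dominant_strategies(game, 1, actions)))


PD_ACTIONS = (("Confess", "Don't"), ("Confess", "Don't"))
PD = {("Confess", "Confess"): (-10, -10), ("Confess", "Don't"): (0, -30),
      ("Don't", "Confess"): (-30, 0), ("Don't", "Don't"): (-1, -1)}

print(dominant_strategy_equilibria(PD, PD_ACTIONS))  # [('Confess', 'Confess')]
```

Applied to a game such as Bach or Stravinsky, the same function returns an empty list, matching the later slide's remark that no dominant-strategy equilibrium exists there.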

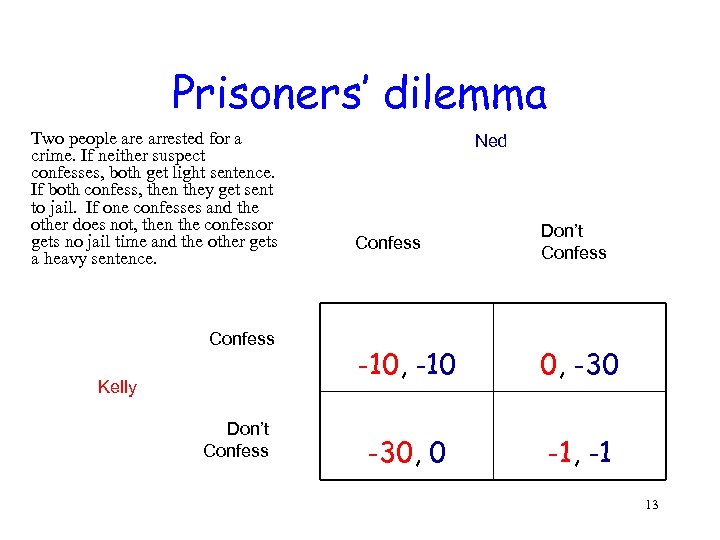

Prisoners’ dilemma
Two people are arrested for a crime. If neither suspect confesses, both get a light sentence. If both confess, both are sent to jail. If one confesses and the other does not, the confessor gets no jail time and the other gets a heavy sentence.

| Kelly \ Ned | Confess | Don’t confess |
|---|---|---|
| Confess | -10, -10 | 0, -30 |
| Don’t confess | -30, 0 | -1, -1 |

Payoffs are (Kelly, Ned).

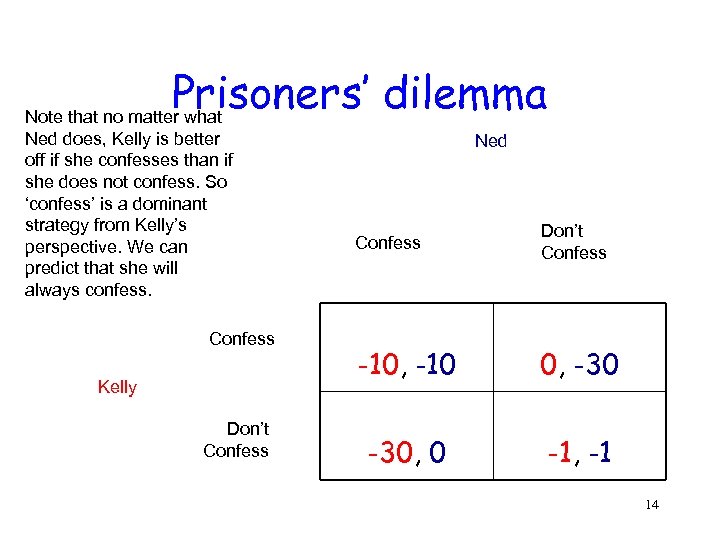

Prisoners’ dilemma
Note that no matter what Ned does, Kelly is better off confessing than not confessing: -10 > -30 if Ned confesses, and 0 > -1 if he does not. So ‘confess’ is a dominant strategy from Kelly’s perspective, and we can predict that she will always confess.

| Kelly \ Ned | Confess | Don’t confess |
|---|---|---|
| Confess | -10, -10 | 0, -30 |
| Don’t confess | -30, 0 | -1, -1 |

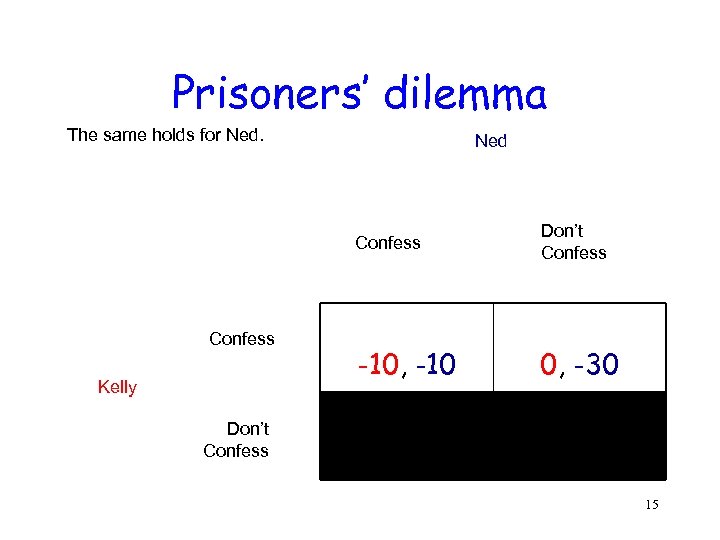

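Prisoners’ dilemma
The same holds for Ned: confessing gives him -10 rather than -30 if Kelly confesses, and 0 rather than -1 if she does not. With Kelly's ‘don’t confess’ row eliminated, the remaining row is:

| Kelly \ Ned | Confess | Don’t confess |
|---|---|---|
| Confess | -10, -10 | 0, -30 |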

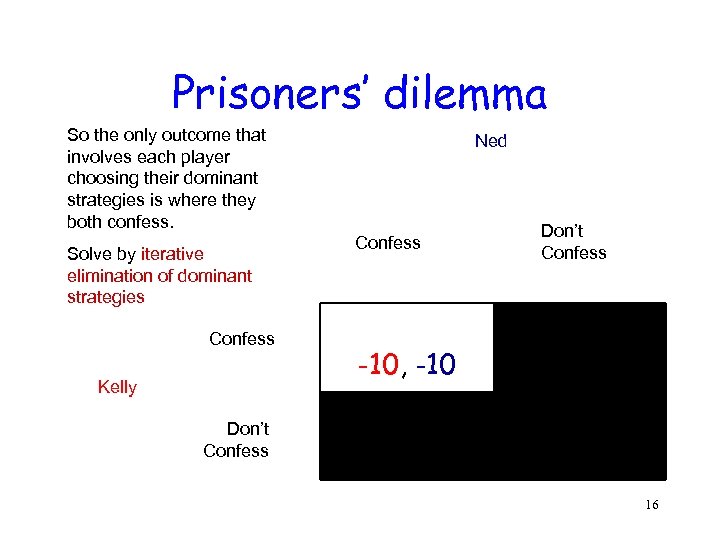

Prisoners’ dilemma
So the only outcome in which each player chooses their dominant strategy is the one where both confess. This can be found by iterated elimination of dominated strategies:

| Kelly \ Ned | Confess |
|---|---|
| Confess | -10, -10 |

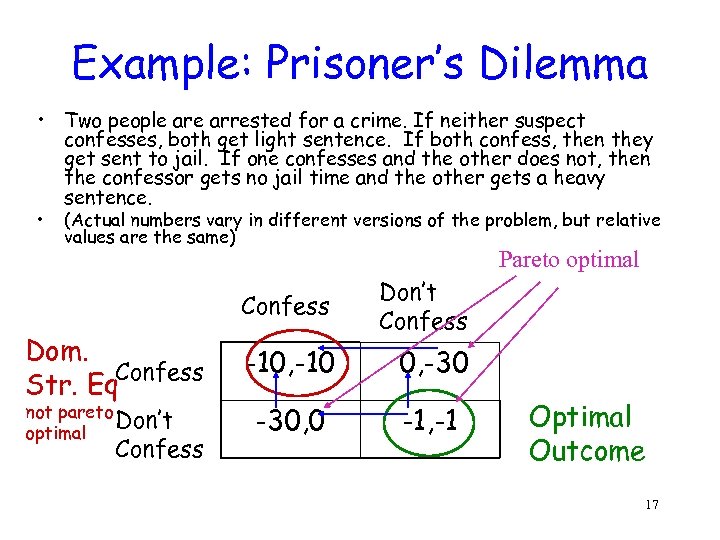

Example: Prisoners’ dilemma
• Two people are arrested for a crime. If neither suspect confesses, both get a light sentence. If both confess, both are sent to jail. If one confesses and the other does not, the confessor gets no jail time and the other gets a heavy sentence.
• (The actual numbers vary across versions of the problem, but the relative values are the same.)

| Kelly \ Ned | Confess | Don’t confess |
|---|---|---|
| Confess | -10, -10 | 0, -30 |
| Don’t confess | -30, 0 | -1, -1 |

• (Confess, Confess) is the dominant-strategy equilibrium, but it is not Pareto optimal.
• (Don’t confess, Don’t confess) is Pareto optimal: it is the optimal outcome for the pair.

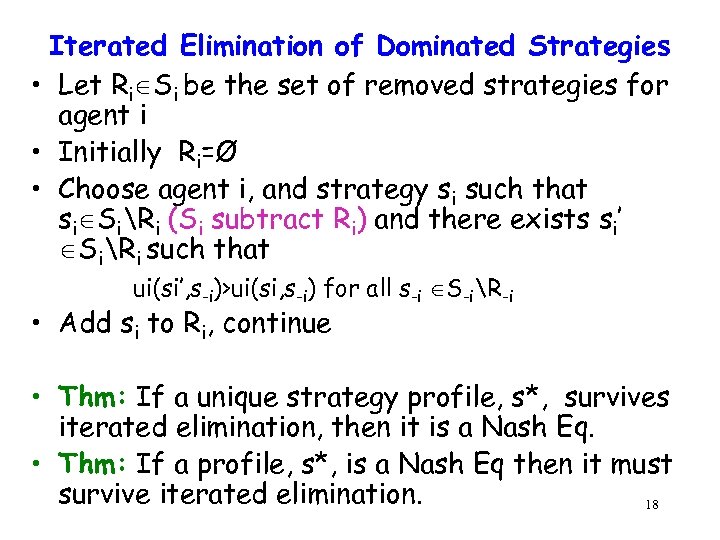

Iterated elimination of dominated strategies
• Let $R_i \subseteq S_i$ be the set of removed strategies for agent $i$.
• Initially $R_i = \emptyset$.
• Choose an agent $i$ and a strategy $s_i \in S_i \setminus R_i$ such that there exists $s_i' \in S_i \setminus R_i$ with $u_i(s_i', s_{-i}) > u_i(s_i, s_{-i})$ for all $s_{-i} \in S_{-i} \setminus R_{-i}$.
• Add $s_i$ to $R_i$ and continue.
• Theorem: if a unique strategy profile $s^*$ survives iterated elimination, then it is a Nash equilibrium.
• Theorem: if a profile $s^*$ is a Nash equilibrium, then it survives iterated elimination.
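
The procedure above can be sketched for two players as follows. This is a minimal illustration assuming the dict representation used earlier (the function name is hypothetical); it applies the procedure to the competition game on the next slides and arrives at (Medium, Medium).

```python
def iterated_elimination(game, actions):
    """Iterated elimination of strictly dominated strategies in a two-player game.

    game maps (row action, column action) to (u_row, u_col); actions = (S_1, S_2).
    Returns the surviving strategy lists, i.e. S_i minus R_i for each player.
    """
    remaining = [list(actions[0]), list(actions[1])]

    def u(player, mine, theirs):
        # Rebuild the profile in (row, column) order before looking up the payoff.
        profile = (mine, theirs) if player == 0 else (theirs, mine)
        return game[profile][player]

    changed = True
    while changed:
        changed = False
        for i in (0, 1):
            others = remaining[1 - i]
            for s in list(remaining[i]):
                # Remove s if another surviving s' is strictly better against every surviving s_-i.
                if any(all(u(i, s2, t) > u(i, s, t) for t in others)
                       for s2 in remaining[i] if s2 != s):
                    remaining[i].remove(s)
                    changed = True
    return remaining


LEVELS = ["High", "Medium", "Low"]
# Competition game: payoffs (Pierce, Donna), with Pierce choosing the row.
COMPETITION = {
    ("High", "High"): (60, 60), ("High", "Medium"): (36, 70), ("High", "Low"): (36, 35),
    ("Medium", "High"): (70, 36), ("Medium", "Medium"): (50, 50), ("Medium", "Low"): (30, 35),
    ("Low", "High"): (35, 36), ("Low", "Medium"): (35, 30), ("Low", "Low"): (25, 25),
}

print(iterated_elimination(COMPETITION, (LEVELS, LEVELS)))  # [['Medium'], ['Medium']]
```

Because only strictly dominated strategies are removed, the order in which agents are chosen does not change the surviving sets in a finite game.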

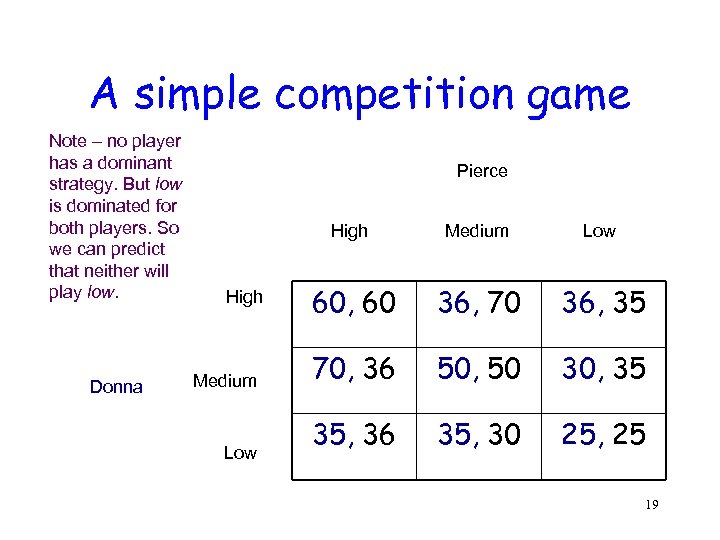

A simple competition game
Note: no player has a dominant strategy. But Low is dominated for both players, so we can predict that neither will play Low.

| Pierce \ Donna | High | Medium | Low |
|---|---|---|---|
| High | 60, 60 | 36, 70 | 36, 35 |
| Medium | 70, 36 | 50, 50 | 30, 35 |
| Low | 35, 36 | 35, 30 | 25, 25 |

Payoffs are (Pierce, Donna).

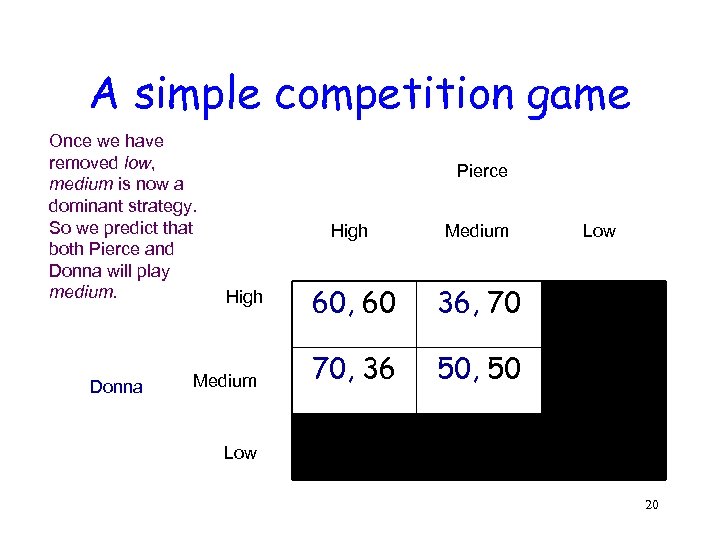

A simple competition game
Once Low has been removed, Medium is a dominant strategy (70 > 60 against High, and 50 > 36 against Medium). So we predict that both Pierce and Donna will play Medium.

| Pierce \ Donna | High | Medium |
|---|---|---|
| High | 60, 60 | 36, 70 |
| Medium | 70, 36 | 50, 50 |

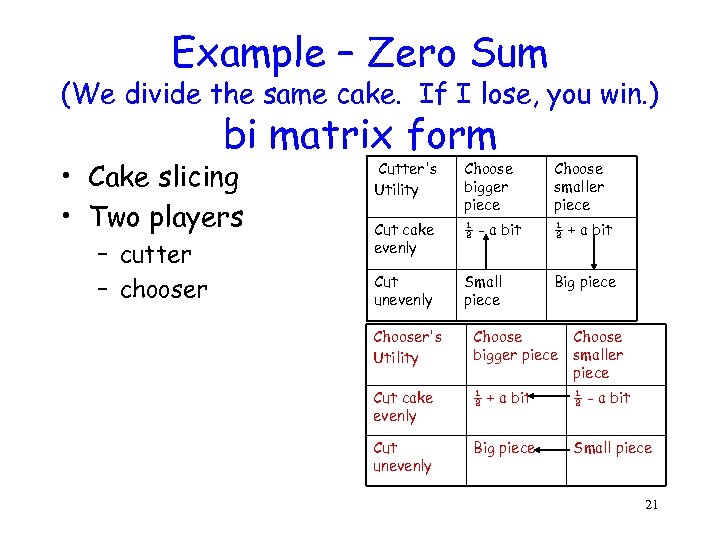

Example: zero sum (we divide the same cake; if I lose, you win), bimatrix form
• Cake slicing
• Two players
  – cutter
  – chooser

Cutter's utility:

| Cutter \ Chooser | Choose bigger piece | Choose smaller piece |
|---|---|---|
| Cut cake evenly | ½ - a bit | ½ + a bit |
| Cut unevenly | Small piece | Big piece |

Chooser's utility:

| Cutter \ Chooser | Choose bigger piece | Choose smaller piece |
|---|---|---|
| Cut cake evenly | ½ + a bit | ½ - a bit |
| Cut unevenly | Big piece | Small piece |

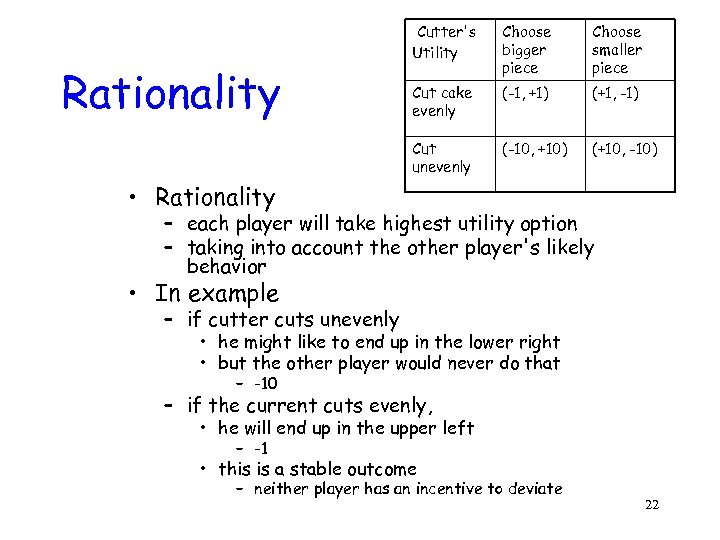

Rationality

| Cutter \ Chooser | Choose bigger piece | Choose smaller piece |
|---|---|---|
| Cut cake evenly | (-1, +1) | (+1, -1) |
| Cut unevenly | (-10, +10) | (+10, -10) |

Payoffs are (cutter, chooser).
• Rationality: each player takes the highest-utility option, taking into account the other player's likely behavior.
• In the example:
  – If the cutter cuts unevenly, he might like to end up in the lower right (+10), but the chooser would never pick the smaller piece, so he gets -10.
  – If the cutter cuts evenly, he ends up in the upper left (-1).
  – This is a stable outcome: neither player has an incentive to deviate.
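
The reasoning on this slide is backward induction: the cutter predicts the chooser's reply to each cut and then picks the cut that is best for him given that reply. The sketch below assumes the chooser observes the cut before choosing (as in real cake cutting); the names are hypothetical.

```python
# Payoffs (cutter, chooser), indexed by (cut, choice).
CAKE = {
    ("even", "bigger"): (-1, 1), ("even", "smaller"): (1, -1),
    ("uneven", "bigger"): (-10, 10), ("uneven", "smaller"): (10, -10),
}
CUTS, CHOICES = ("even", "uneven"), ("bigger", "smaller")


def chooser_reply(cut):
    """The chooser moves second and picks the choice that maximizes her own payoff."""
    return max(CHOICES, key=lambda c: CAKE[(cut, c)][1])


def cutter_plan():
    """The cutter anticipates the chooser's reply and picks the cut that maximizes his payoff."""
    return max(CUTS, key=lambda cut: CAKE[(cut, chooser_reply(cut))][0])


cut = cutter_plan()
print(cut, chooser_reply(cut), CAKE[(cut, chooser_reply(cut))])  # even bigger (-1, 1)
```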

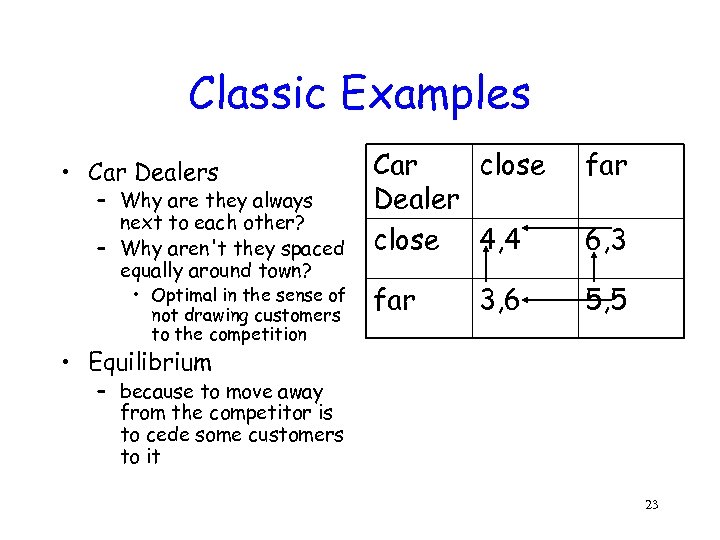

Classic examples
• Car dealers
  – Why are they always next to each other?
  – Why aren't they spaced equally around town?
• Locating close together is optimal in the sense of not drawing customers to the competition.

| Dealer 1 \ Dealer 2 | close | far |
|---|---|---|
| close | 4, 4 | 6, 3 |
| far | 3, 6 | 5, 5 |

• (close, close) is the equilibrium, because moving away from the competitor cedes some customers to it.

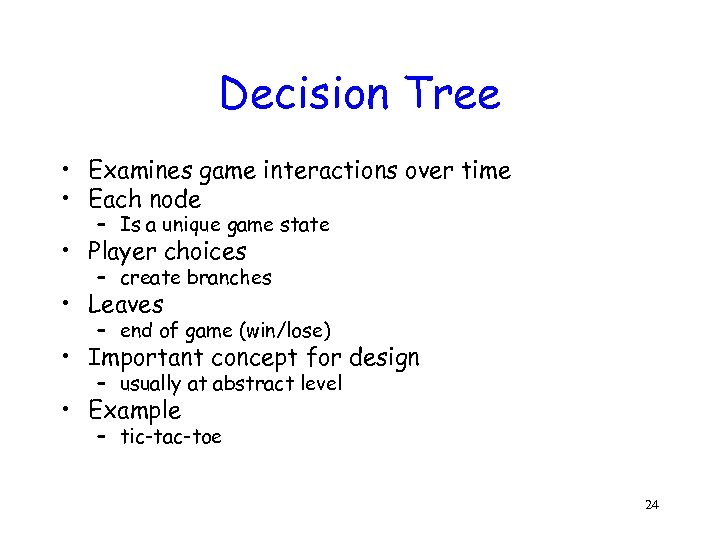

Decision tree
• Examines game interactions over time
• Each node is a unique game state.
• Player choices create branches.
• Leaves are the ends of the game (win/lose).
• An important concept for design, usually at an abstract level
• Example: tic-tac-toe
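
To see how quickly a game tree grows even for tic-tac-toe, the sketch below walks the full tree recursively: each board is a node, each legal move a branch, and a board where someone has won or no cells remain is a leaf. The string-of-nine-cells board encoding and the function names are assumptions made for illustration.

```python
# The eight winning lines of a 3x3 board, as cell indices 0..8 in row-major order.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def count_tree(board=" " * 9, player="X"):
    """Return (nodes, leaves) of the game tree rooted at `board`, with `player` to move."""
    if winner(board) or " " not in board:
        return 1, 1  # a leaf: somebody has won or the board is full
    nodes, leaves = 1, 0
    nxt = "O" if player == "X" else "X"
    for i, cell in enumerate(board):
        if cell == " ":
            child = board[:i] + player + board[i + 1:]
            sub_nodes, sub_leaves = count_tree(child, nxt)
            nodes += sub_nodes
            leaves += sub_leaves
    return nodes, leaves


nodes, leaves = count_tree()
print(leaves)  # 255168 distinct complete games
```

Exhaustive enumeration is feasible here only because the tree is small; for larger games, designers reason about the tree at an abstract level instead.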

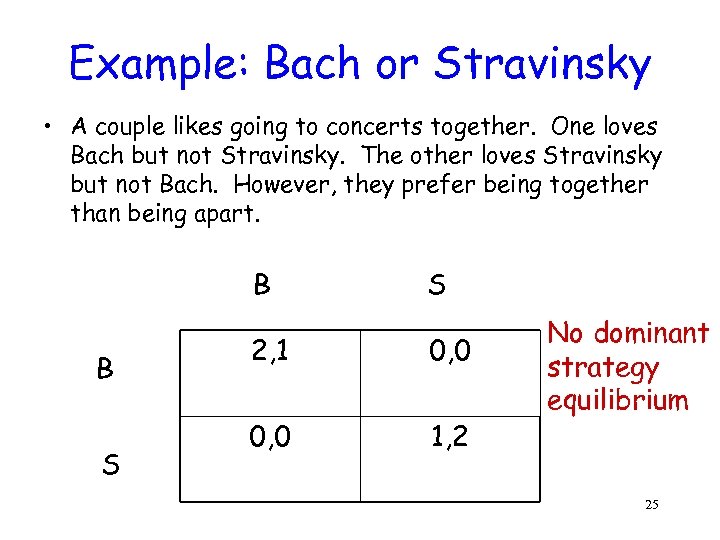

Example: Bach or Stravinsky
• A couple likes going to concerts together. One loves Bach but not Stravinsky; the other loves Stravinsky but not Bach. However, they prefer being together to being apart.

| Player 1 \ Player 2 | B | S |
|---|---|---|
| B | 2, 1 | 0, 0 |
| S | 0, 0 | 1, 2 |

No dominant-strategy equilibrium.

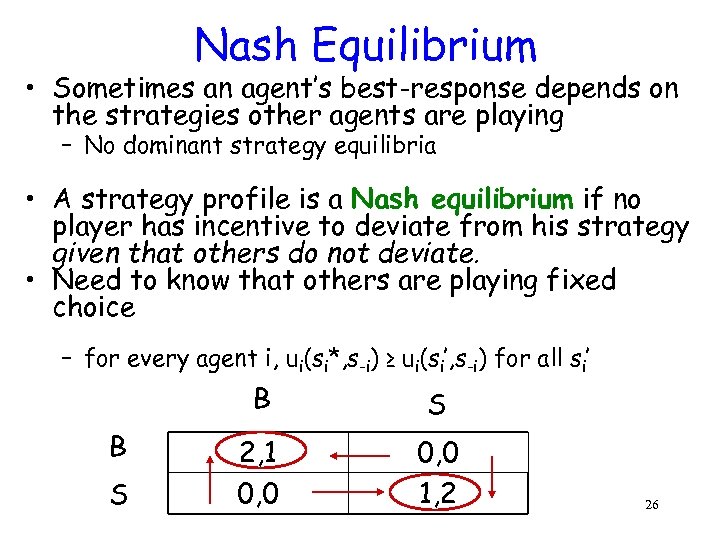

Nash equilibrium
• Sometimes an agent’s best response depends on the strategies the other agents are playing.
  – Then there is no dominant-strategy equilibrium.
• A strategy profile is a Nash equilibrium if no player has an incentive to deviate from his strategy, given that the others do not deviate.
• Each agent needs to know that the others are playing a fixed choice:
  – for every agent $i$, $u_i(s_i^*, s_{-i}^*) \ge u_i(s_i', s_{-i}^*)$ for all $s_i'$.

| Player 1 \ Player 2 | B | S |
|---|---|---|
| B | 2, 1 | 0, 0 |
| S | 0, 0 | 1, 2 |
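
The definition suggests a direct test: a profile is a Nash equilibrium if no single player can raise their own payoff by switching actions while everyone else stays put. The sketch below assumes profiles and payoffs are tuples in a dict (hypothetical names) and works for any number of players; on Bach or Stravinsky it finds both coordinated outcomes.

```python
def is_nash(game, actions, profile):
    """True if no player can gain by a unilateral deviation from `profile`.

    game maps action profiles (tuples) to payoff tuples; actions[i] lists player i's actions.
    """
    for i, own_actions in enumerate(actions):
        current = game[profile][i]
        for deviation in own_actions:
            # Change only player i's action, holding every other player's action fixed.
            alt = profile[:i] + (deviation,) + profile[i + 1:]
            if game[alt][i] > current:
                return False
    return True


BOS = {("B", "B"): (2, 1), ("B", "S"): (0, 0),
       ("S", "B"): (0, 0), ("S", "S"): (1, 2)}
BOS_ACTIONS = (("B", "S"), ("B", "S"))

print([p for p in BOS if is_nash(BOS, BOS_ACTIONS, p)])  # [('B', 'B'), ('S', 'S')]
```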

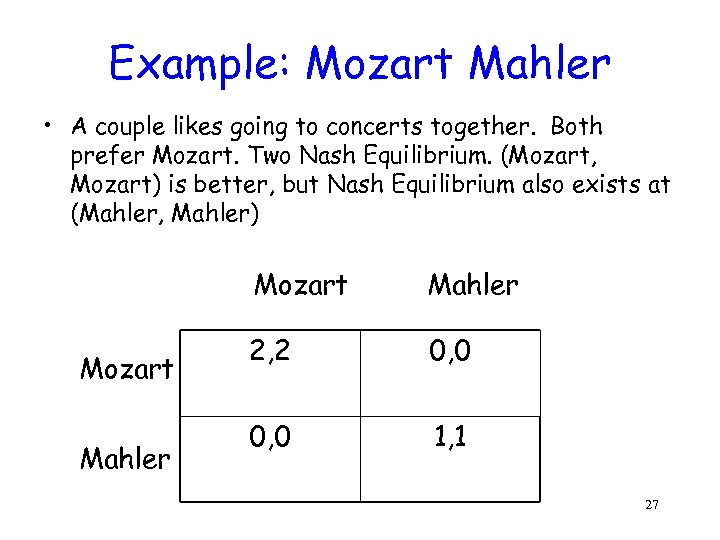

Example: Mozart or Mahler
• A couple likes going to concerts together, and both prefer Mozart. There are two Nash equilibria: (Mozart, Mozart) is better, but a Nash equilibrium also exists at (Mahler, Mahler).

| Player 1 \ Player 2 | Mozart | Mahler |
|---|---|---|
| Mozart | 2, 2 | 0, 0 |
| Mahler | 0, 0 | 1, 1 |

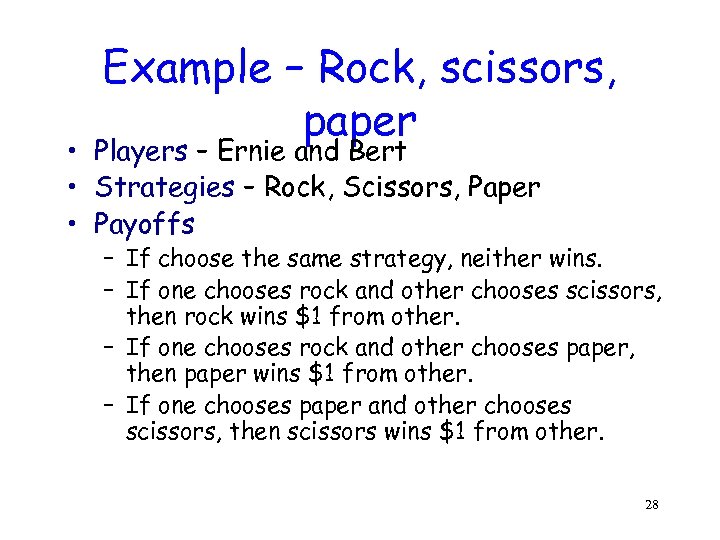

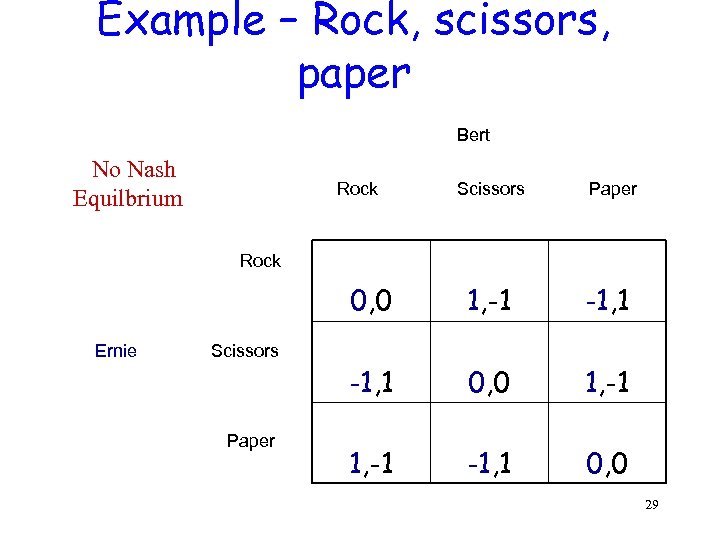

Example: Rock, scissors, paper
• Players: Ernie and Bert
• Strategies: Rock, Scissors, Paper
• Payoffs:
  – If both choose the same strategy, neither wins.
  – If one chooses rock and the other chooses scissors, rock wins $1 from the other.
  – If one chooses rock and the other chooses paper, paper wins $1 from the other.
  – If one chooses paper and the other chooses scissors, scissors wins $1 from the other.

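Example: Rock, scissors, paper
No (pure-strategy) Nash equilibrium.

| Ernie \ Bert | Rock | Scissors | Paper |
|---|---|---|---|
| Rock | 0, 0 | 1, -1 | -1, 1 |
| Scissors | -1, 1 | 0, 0 | 1, -1 |
| Paper | 1, -1 | -1, 1 | 0, 0 |

Payoffs are (Ernie, Bert).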

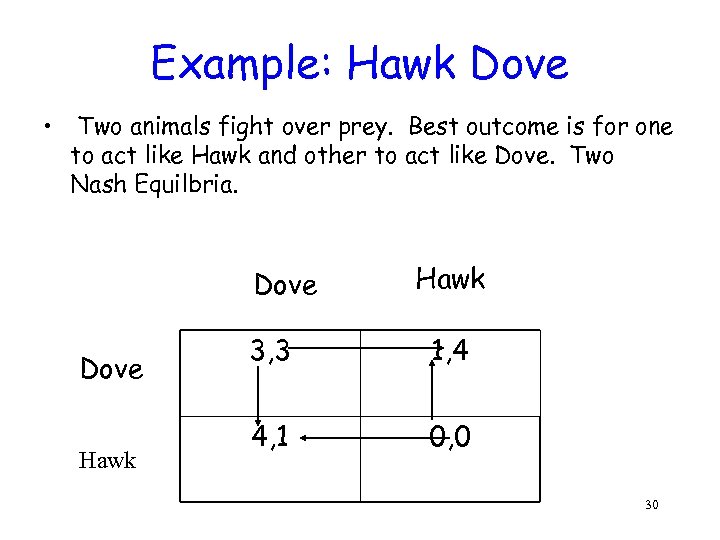

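Example: Hawk-Dove
• Two animals fight over prey. The best outcome is for one to act like a Hawk and the other to act like a Dove. There are two Nash equilibria: (Dove, Hawk) and (Hawk, Dove).

| Player 1 \ Player 2 | Dove | Hawk |
|---|---|---|
| Dove | 3, 3 | 1, 4 |
| Hawk | 4, 1 | 0, 0 |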

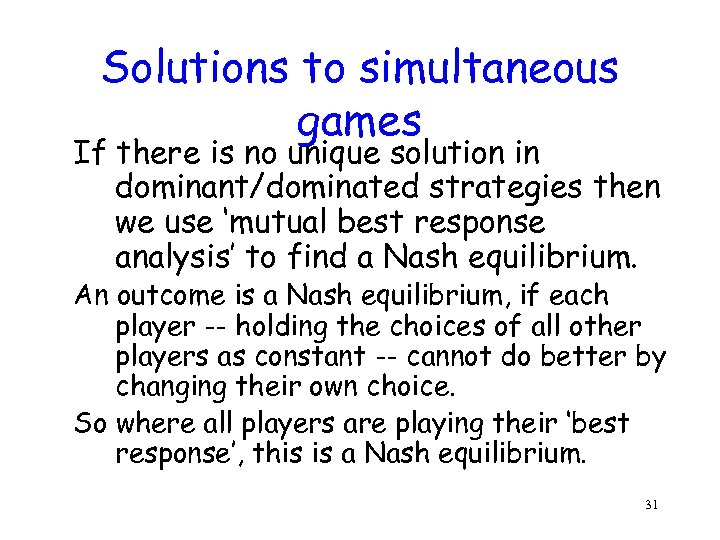

Solutions to simultaneous games
If there is no unique solution in dominant/dominated strategies, we use ‘mutual best-response analysis’ to find a Nash equilibrium. An outcome is a Nash equilibrium if each player, holding the choices of all other players constant, cannot do better by changing their own choice. So an outcome in which all players are playing their ‘best response’ is a Nash equilibrium.

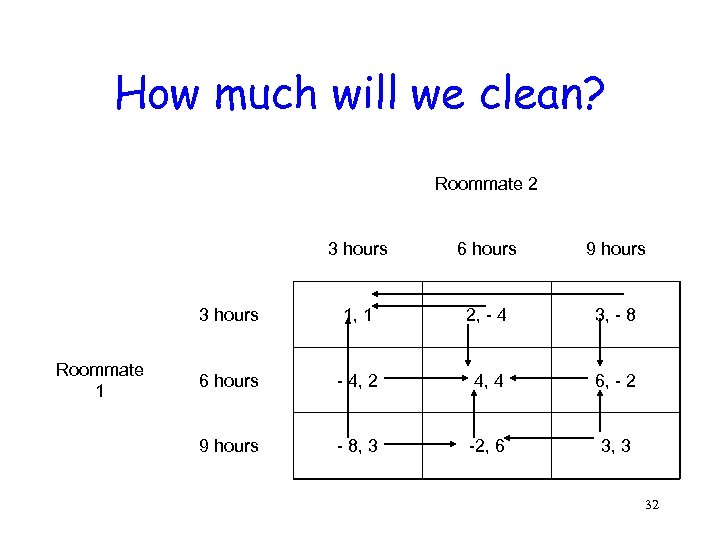

How much will we clean?

| Roommate 1 \ Roommate 2 | 3 hours | 6 hours | 9 hours |
|---|---|---|---|
| 3 hours | 1, 1 | 2, -4 | 3, -8 |
| 6 hours | -4, 2 | 4, 4 | 6, -2 |
| 9 hours | -8, 3 | -2, 6 | 3, 3 |

Payoffs are (Roommate 1, Roommate 2).

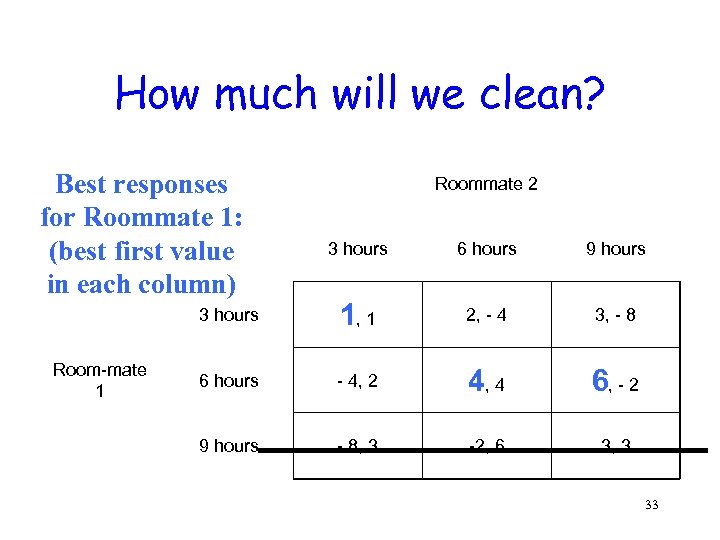

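How much will we clean?
Best responses for Roommate 1 (the best first value in each column, in bold): 3 hours against 3 hours, and 6 hours against either 6 or 9 hours.

| Roommate 1 \ Roommate 2 | 3 hours | 6 hours | 9 hours |
|---|---|---|---|
| 3 hours | **1**, 1 | 2, -4 | 3, -8 |
| 6 hours | -4, 2 | **4**, 4 | **6**, -2 |
| 9 hours | -8, 3 | -2, 6 | 3, 3 |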

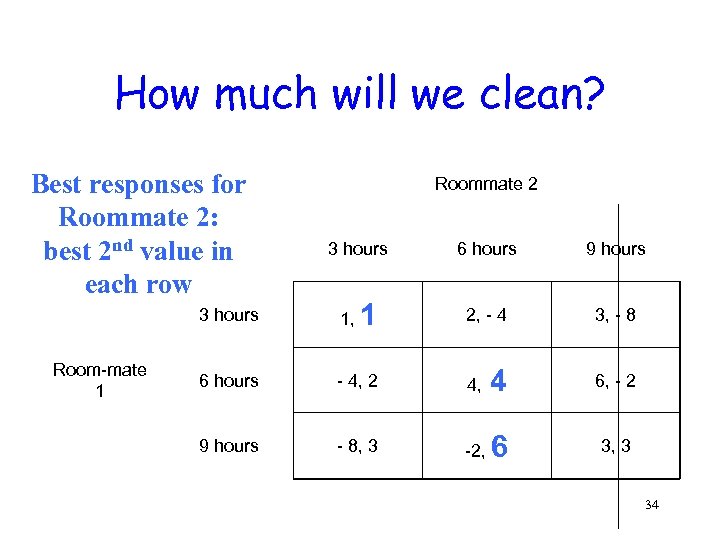

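How much will we clean?
Best responses for Roommate 2 (the best second value in each row, in bold): 3 hours against 3 hours, and 6 hours against either 6 or 9 hours.

| Roommate 1 \ Roommate 2 | 3 hours | 6 hours | 9 hours |
|---|---|---|---|
| 3 hours | 1, **1** | 2, -4 | 3, -8 |
| 6 hours | -4, 2 | 4, **4** | 6, -2 |
| 9 hours | -8, 3 | -2, **6** | 3, 3 |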

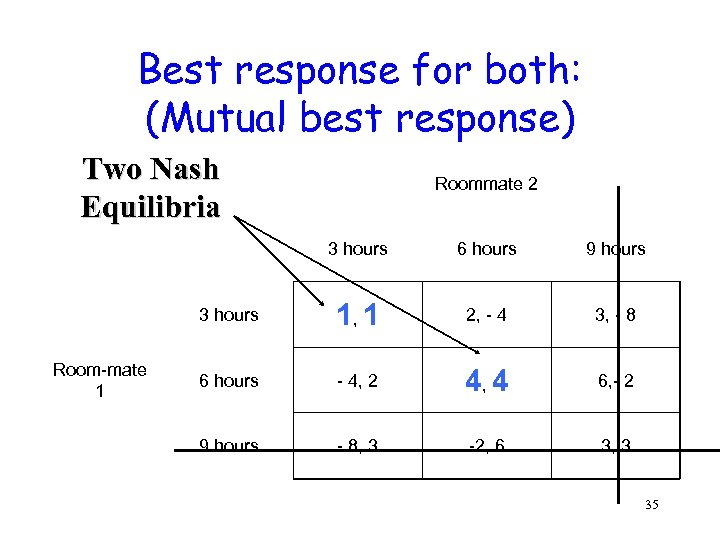

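Best response for both (mutual best response): two Nash equilibria, (3 hours, 3 hours) and (6 hours, 6 hours).

| Roommate 1 \ Roommate 2 | 3 hours | 6 hours | 9 hours |
|---|---|---|---|
| 3 hours | **1, 1** | 2, -4 | 3, -8 |
| 6 hours | -4, 2 | **4, 4** | 6, -2 |
| 9 hours | -8, 3 | -2, 6 | 3, 3 |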
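
The marking procedure from the last three slides (best first value in each column, best second value in each row, then keep the cells marked twice) can be written out directly. This is a sketch assuming the payoff table is stored as a dict keyed by (Roommate 1's hours, Roommate 2's hours); the function names are hypothetical.

```python
HOURS = (3, 6, 9)
# Payoffs (roommate 1, roommate 2), indexed by (roommate 1's hours, roommate 2's hours).
CLEAN = {
    (3, 3): (1, 1), (3, 6): (2, -4), (3, 9): (3, -8),
    (6, 3): (-4, 2), (6, 6): (4, 4), (6, 9): (6, -2),
    (9, 3): (-8, 3), (9, 6): (-2, 6), (9, 9): (3, 3),
}


def best_replies(game, rows, cols):
    """Best first value in each column (player 1) and best second value in each row (player 2)."""
    br1 = {c: {r for r in rows
               if game[(r, c)][0] == max(game[(x, c)][0] for x in rows)}
           for c in cols}
    br2 = {r: {c for c in cols
               if game[(r, c)][1] == max(game[(r, y)][1] for y in cols)}
           for r in rows}
    return br1, br2


def mutual_best_responses(game, rows, cols):
    """Cells where each action is a best response to the other: the pure Nash equilibria."""
    br1, br2 = best_replies(game, rows, cols)
    return [(r, c) for r in rows for c in cols if r in br1[c] and c in br2[r]]


print(mutual_best_responses(CLEAN, HOURS, HOURS))  # [(3, 3), (6, 6)]
```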

![image 36](https://present5.com/presentation/6620e68b69a61c72d8ef13f5c399f5c5/image-36.jpg)

Concepts of rationality [doing the rational thing]
• Undominated strategy (problem: too weak; we can’t always find a single one)
• (Weakly) dominating strategy (alias “duh?”) (problem: too strong; rarely exists)
• Nash equilibrium (or double best response) (problem: may not exist)
• Randomized (mixed) Nash equilibrium: players choose among their options at random, according to assigned probabilities
Theorem [Nash 1950]: a randomized Nash equilibrium always exists in every finite game.
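
Rock, scissors, paper has no pure-strategy equilibrium, but it does have a randomized one. A quick way to check a candidate mixed strategy is to compute the expected payoff of every pure move against it: if no move does better than the others, no deviation helps. The sketch below checks the uniform mix with exact fractions; the uniform mix is the candidate being tested here, and the names are hypothetical.

```python
from fractions import Fraction

MOVES = ("Rock", "Scissors", "Paper")
BEATS = {("Rock", "Scissors"), ("Scissors", "Paper"), ("Paper", "Rock")}


def payoff(a, b):
    """Ernie's payoff when Ernie plays a and Bert plays b (Bert receives the negative)."""
    if (a, b) in BEATS:
        return 1
    if (b, a) in BEATS:
        return -1
    return 0


def expected_payoffs(opponent_mix):
    """Expected payoff of each pure move against the opponent's mixed strategy."""
    return {a: sum(p * payoff(a, b) for b, p in opponent_mix.items()) for a in MOVES}


uniform = {m: Fraction(1, 3) for m in MOVES}
print(expected_payoffs(uniform))  # every pure move earns exactly 0
```

Against any non-uniform mix, some pure move earns a strictly positive expected payoff, so that mix can be exploited; by the symmetry of the game, the same holds for Bert.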

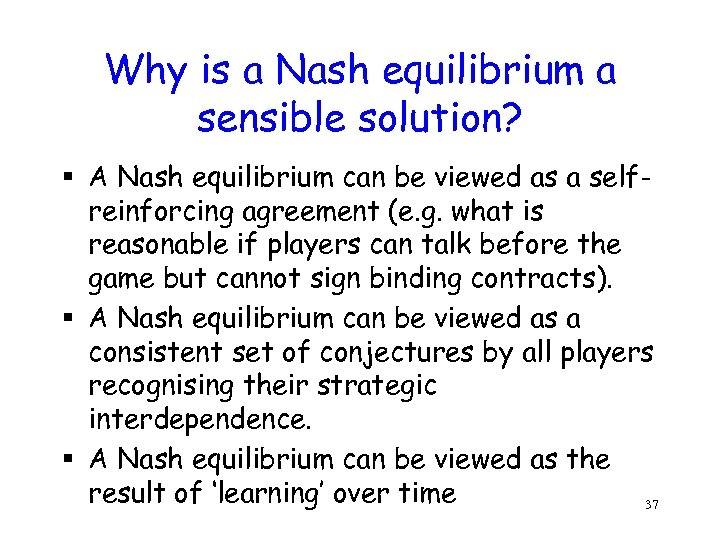

Why is a Nash equilibrium a sensible solution?
• A Nash equilibrium can be viewed as a self-reinforcing agreement (e.g., what is reasonable if players can talk before the game but cannot sign binding contracts).
• A Nash equilibrium can be viewed as a consistent set of conjectures by all players, recognizing their strategic interdependence.
• A Nash equilibrium can be viewed as the result of ‘learning’ over time.

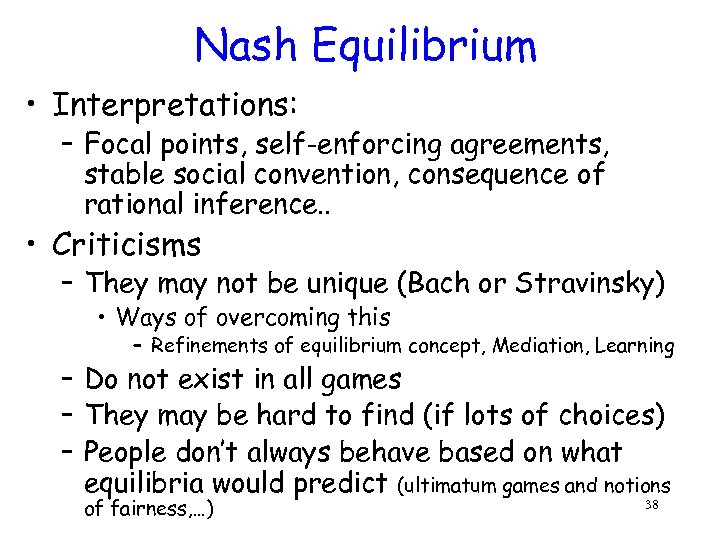

Nash equilibrium
• Interpretations:
  – focal points, self-enforcing agreements, stable social conventions, consequences of rational inference
• Criticisms:
  – They may not be unique (Bach or Stravinsky).
    • Ways of overcoming this: refinements of the equilibrium concept, mediation, learning
  – They do not exist in all games (in pure strategies).
  – They may be hard to find (if there are lots of choices).
  – People don’t always behave as equilibria would predict (ultimatum games and notions of fairness, …).

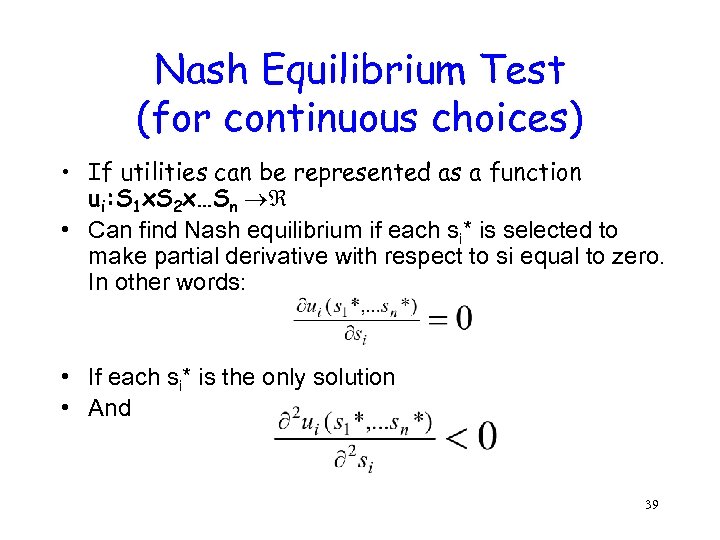

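Nash equilibrium test (for continuous choices)
• If utilities can be represented as functions $u_i: S_1 \times S_2 \times \dots \times S_n \to \mathbb{R}$,
• we can find a Nash equilibrium by selecting each $s_i^*$ so that the partial derivative of $u_i$ with respect to $s_i$ is zero. In other words, $s^*$ is a Nash equilibrium
  – if each $s_i^*$ is the only solution of $\frac{\partial u_i}{\partial s_i}(s_i, s_{-i}^*) = 0$,
  – and $\frac{\partial^2 u_i}{\partial s_i^2}(s^*) < 0$.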

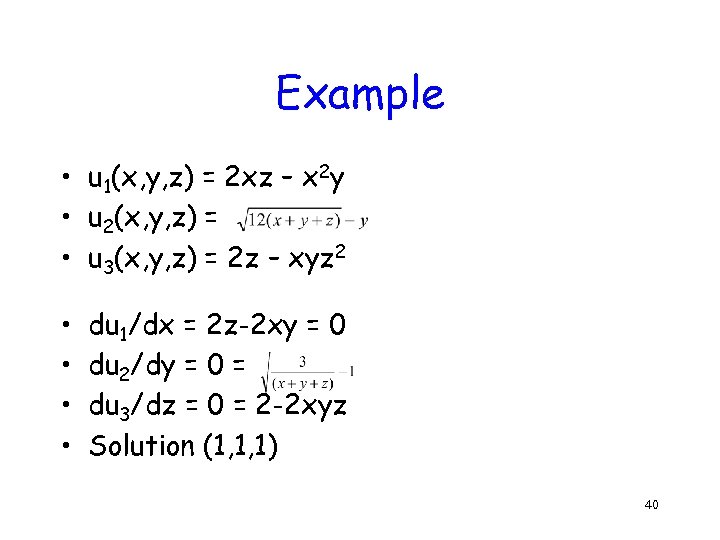

Example
• $u_1(x, y, z) = 2xz - x^2 y$
• $u_2(x, y, z) = \dots$
• $u_3(x, y, z) = 2z - xyz^2$
• $\partial u_1 / \partial x = 2z - 2xy = 0$
• $\partial u_2 / \partial y = \dots = 0$
• $\partial u_3 / \partial z = 2 - 2xyz = 0$
Solution: $(x, y, z) = (1, 1, 1)$
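
The first- and second-order conditions can be checked numerically at the claimed solution. This sketch uses central finite differences (a generic numerical technique, not something from the slide) and, because $u_2$ did not survive in this copy of the slide, checks only players 1 and 3. The helper names are hypothetical.

```python
def _shift(point, k, delta):
    """Copy of `point` with coordinate k moved by delta."""
    moved = list(point)
    moved[k] += delta
    return moved


def partial(f, point, k, h=1e-5):
    """Central-difference estimate of the partial derivative of f w.r.t. coordinate k."""
    return (f(*_shift(point, k, h)) - f(*_shift(point, k, -h))) / (2 * h)


def second_partial(f, point, k, h=1e-4):
    """Central-difference estimate of the second partial derivative w.r.t. coordinate k."""
    return (f(*_shift(point, k, h)) - 2 * f(*point) + f(*_shift(point, k, -h))) / h ** 2


def u1(x, y, z):
    return 2 * x * z - x ** 2 * y


def u3(x, y, z):
    return 2 * z - x * y * z ** 2


s = (1.0, 1.0, 1.0)
print(partial(u1, s, 0), partial(u3, s, 2))                # both close to 0
print(second_partial(u1, s, 0), second_partial(u3, s, 2))  # both close to -2
```

Analytically, $\partial^2 u_1/\partial x^2 = -2y$ and $\partial^2 u_3/\partial z^2 = -2xy$, both equal to $-2 < 0$ at $(1, 1, 1)$, so the second-order condition holds for these two players.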

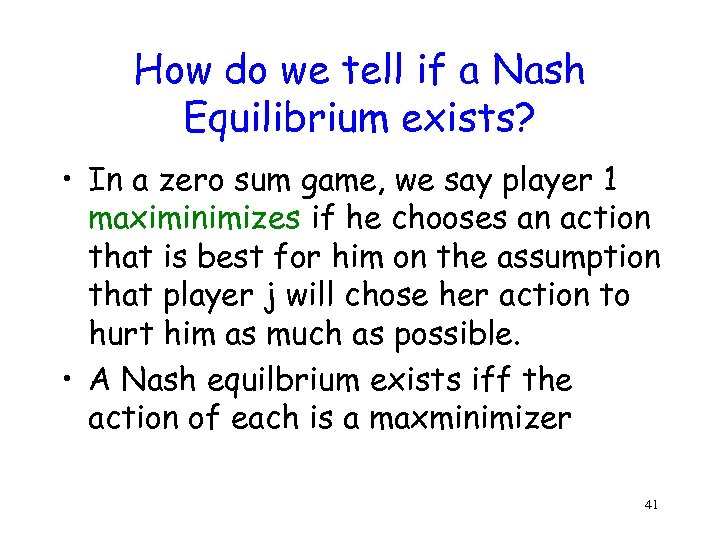

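How do we tell if a Nash equilibrium exists?
• In a zero-sum game, we say player 1 maxminimizes if he chooses an action that is best for him on the assumption that player 2 will choose her action to hurt him as much as possible.
• A (pure-strategy) Nash equilibrium exists iff $\max_{a_1} \min_{a_2} u_1(a_1, a_2) = \min_{a_2} \max_{a_1} u_1(a_1, a_2)$; in that case, the Nash equilibria are exactly the profiles in which each player's action is a maxminimizer.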
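
For a finite zero-sum game given by player 1's payoff matrix, the test above takes a few lines: compare the maxmin and minmax values, and if they agree, pair up the maxminimizers. The sketch below assumes rows are player 1's actions and columns are player 2's (hypothetical function names), and applies it to the cake-cutting and matching-pennies examples.

```python
def maxmin(matrix):
    """Player 1's security level: max over rows of the worst payoff in that row."""
    return max(min(row) for row in matrix)


def minmax(matrix):
    """Lowest payoff player 2 can hold player 1 to: min over columns of the column maximum."""
    return min(max(col) for col in zip(*matrix))


def pure_equilibria(matrix):
    """Pure Nash equilibria (row, column) of a zero-sum game with u2 = -u1.

    They exist iff maxmin == minmax, and are then exactly the pairs of maxminimizers.
    """
    v = maxmin(matrix)
    if v != minmax(matrix):
        return []
    rows = [i for i, row in enumerate(matrix) if min(row) == v]
    cols = [j for j, col in enumerate(zip(*matrix)) if max(col) == v]
    return [(i, j) for i in rows for j in cols]


CAKE = [[-1, 1], [-10, 10]]   # cutter's payoffs: rows even/uneven, columns bigger/smaller
PENNIES = [[-1, 1], [1, -1]]  # agent 1's payoffs in matching pennies
print(pure_equilibria(CAKE))     # [(0, 0)]
print(pure_equilibria(PENNIES))  # []
```

For cake cutting, the equilibrium (0, 0) is (cut evenly, choose bigger piece), matching the stable outcome found earlier; matching pennies has maxmin -1 but minmax 1, so no pure equilibrium exists.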

Fixed points
• Let $a^*$ be a profile of actions such that $a_i^* \in B_i(a_{-i}^*)$ for every player $i$, where $B_i$ is player $i$'s “best response” function. In other words, given the other players' actions, $a_i^*$ is a best response for player $i$.
• Fixed-point theorems give conditions on $B$ under which there exists an $a^*$ with $a^* \in B(a^*)$. In other words, given what everyone else does, no one will change.

• Intuition behind Brouwer’s fixed point theorem: take two sheets of paper, one lying directly above the other. Draw a grid on the paper, number the grid boxes, then photocopy that sheet of paper. Crumple the top sheet and place it on top of the other sheet. You will see that at least one number is on top of the corresponding number on the lower sheet. Brouwer's theorem says that there must be at least one point on the top sheet that is directly above the corresponding point on the bottom sheet.
• In dimension three, Brouwer's theorem says that if you take a cup of coffee and slosh it around, then after the sloshing there must be some point in the coffee that is in exactly the spot where it was before the sloshing (though it might have moved around in between). Moreover, if you try to slosh that point out of its original position, you can't help but slosh another point back into its original position.

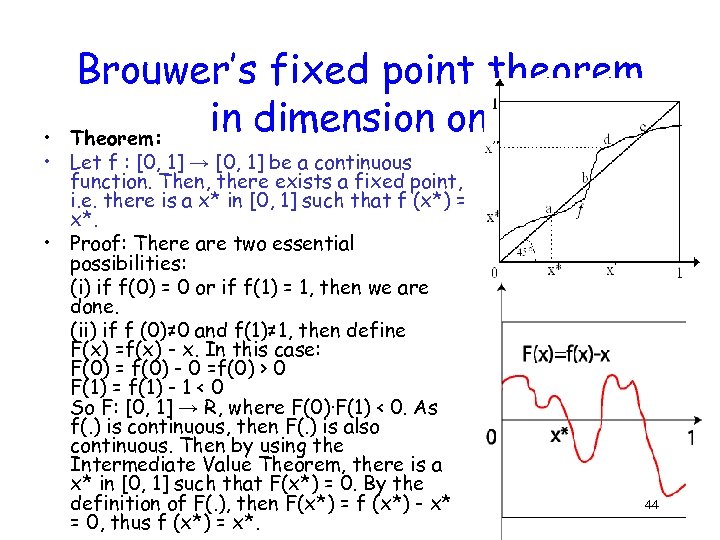

Brouwer’s fixed point theorem in dimension one
• Theorem: Let $f: [0, 1] \to [0, 1]$ be a continuous function. Then $f$ has a fixed point, i.e., there is an $x^* \in [0, 1]$ such that $f(x^*) = x^*$.
• Proof: There are two cases.
  (i) If $f(0) = 0$ or $f(1) = 1$, we are done.
  (ii) If $f(0) \neq 0$ and $f(1) \neq 1$, define $F(x) = f(x) - x$. Since $f$ maps into $[0, 1]$, $F(0) = f(0) - 0 = f(0) > 0$ and $F(1) = f(1) - 1 < 0$. So $F: [0, 1] \to \mathbb{R}$ with $F(0) \cdot F(1) < 0$. As $f$ is continuous, $F$ is also continuous, so by the Intermediate Value Theorem there is an $x^* \in [0, 1]$ such that $F(x^*) = 0$. By the definition of $F$, $F(x^*) = f(x^*) - x^* = 0$, thus $f(x^*) = x^*$.
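
The one-dimensional proof is constructive enough to compute with: because $F(x) = f(x) - x$ changes sign on $[0, 1]$, bisection on the sign of $F$ closes in on a fixed point. The sketch below is a minimal illustration (the function name and tolerance are assumptions), tried on $f = \cos$, which maps $[0, 1]$ into itself.

```python
import math


def fixed_point(f, lo=0.0, hi=1.0, tol=1e-12):
    """Find x* in [lo, hi] with f(x*) = x*, for a continuous f mapping [lo, hi] into itself.

    Mirrors the proof: F(x) = f(x) - x has F(lo) >= 0 and F(hi) <= 0,
    so bisecting on the sign of F keeps a root inside [lo, hi] (Intermediate Value Theorem).
    """
    def F(x):
        return f(x) - x

    if F(lo) == 0:   # case (i) of the proof
        return lo
    if F(hi) == 0:
        return hi
    while hi - lo > tol:  # case (ii): invariant F(lo) > 0 >= F(hi)
        mid = (lo + hi) / 2
        if F(mid) > 0:
            lo = mid   # a root lies to the right of mid
        else:
            hi = mid   # a root lies at or to the left of mid
    return (lo + hi) / 2


x = fixed_point(math.cos)
print(x, math.cos(x))  # both about 0.739085
```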

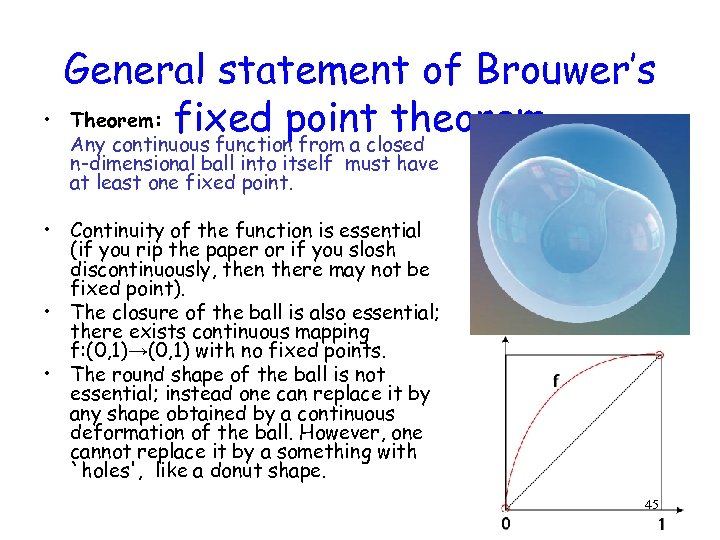

• General statement of Brouwer’s fixed point theorem: any continuous function from a closed n-dimensional ball into itself has at least one fixed point.
• Continuity of the function is essential (if you rip the paper or slosh discontinuously, there may be no fixed point).
• The closedness of the ball is also essential: there exist continuous mappings $f: (0, 1) \to (0, 1)$ with no fixed points (e.g., $f(x) = x/2$).
• The round shape of the ball is not essential; one can replace it by any shape obtained by a continuous deformation of the ball. However, one cannot replace it by something with “holes”, like a donut shape.

Applications of Brouwer’s fixed point theorem • Topology is a branch of pure mathematics devoted to the shape of objects. It ignores issues like size and angle, which are important in geometry. • For this reason, it is sometimes called rubber-sheet geometry. • One important problem in topology is to find the conditions under which every continuous transformation of a given domain has a point that remains fixed. • Fixed point theorems are some of the most important theorems in all of mathematics. Among other applications, they are used to show the existence of solutions to differential equations and the existence of equilibria in game theory. • The Brouwer fixed point theorem was a main mathematical tool in John Nash’s papers on equilibrium. Nash shared the 1994 Nobel Memorial Prize in Economic Sciences for this work. 46

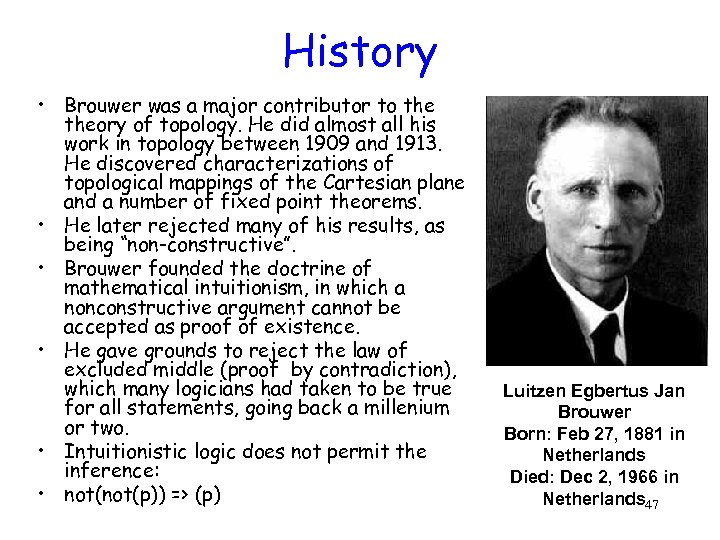

History • Brouwer was a major contributor to topology. He did almost all his work in topology between 1909 and 1913. He discovered characterizations of topological mappings of the Cartesian plane and a number of fixed point theorems. • He later rejected many of his own results as being “non-constructive”. • Brouwer founded the doctrine of mathematical intuitionism, in which a nonconstructive argument cannot be accepted as a proof of existence. • He gave grounds to reject the law of excluded middle, and with it proving a statement by refuting its negation (proof by contradiction). Many logicians, going back a millennium or two, had taken that law to hold for all statements. • Intuitionistic logic does not permit the inference: not(not(p)) ⇒ p. Luitzen Egbertus Jan Brouwer Born: Feb 27, 1881 in Netherlands Died: Dec 2, 1966 in Netherlands 47

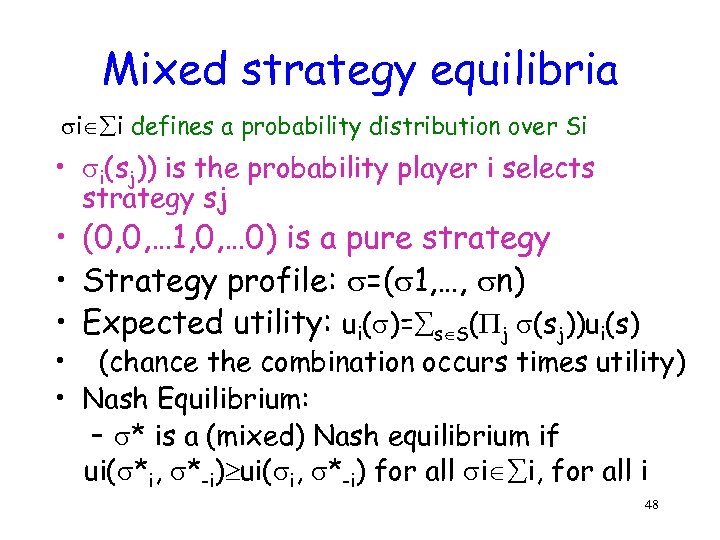

Mixed strategy equilibria • $\sigma_i \in \Sigma_i$ defines a probability distribution over $S_i$ • $\sigma_i(s_j)$ is the probability player $i$ selects strategy $s_j$ • $(0, 0, \dots, 1, 0, \dots, 0)$ is a pure strategy • Strategy profile: $\sigma = (\sigma_1, \dots, \sigma_n)$ • Expected utility: $u_i(\sigma) = \sum_{s \in S} \left( \prod_j \sigma_j(s_j) \right) u_i(s)$ (chance the combination occurs times utility) • Nash Equilibrium: $\sigma^*$ is a (mixed) Nash equilibrium if $u_i(\sigma^*_i, \sigma^*_{-i}) \ge u_i(\sigma_i, \sigma^*_{-i})$ for all $\sigma_i \in \Sigma_i$, for all $i$ 48
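The expected-utility formula can be evaluated directly by enumerating every pure profile s and weighting u_i(s) by the product of the players' probabilities. Below is a minimal sketch. It assumes payoffs are stored in a dictionary keyed by tuples of pure-strategy indices. That representation is chosen here for illustration only.

```python
from fractions import Fraction
from itertools import product
from math import prod


def expected_utility(payoff, profile):
    """Expected utility u_i(sigma) for every player i.

    payoff:  dict mapping a pure profile (tuple of strategy indices) to a tuple
             of utilities, one per player.
    profile: list of mixed strategies; profile[j][k] is the probability that
             player j plays her k-th pure strategy.
    """
    n = len(profile)
    totals = [0] * n
    for s in product(*(range(len(sigma)) for sigma in profile)):
        # Chance this combination occurs: product of each player's probability.
        chance = prod(profile[j][s[j]] for j in range(n))
        for i in range(n):
            totals[i] += chance * payoff[s][i]
    return totals


# Matching pennies (strategy 0 = H, 1 = T), payoffs (player 1, player 2).
pennies = {(0, 0): (-1, 1), (0, 1): (1, -1), (1, 0): (1, -1), (1, 1): (-1, 1)}
half = Fraction(1, 2)
print(expected_utility(pennies, [[half, half], [half, half]]))  # both players expect 0
```

Exact `Fraction` probabilities keep the sums free of rounding error. Plain floats would also work.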

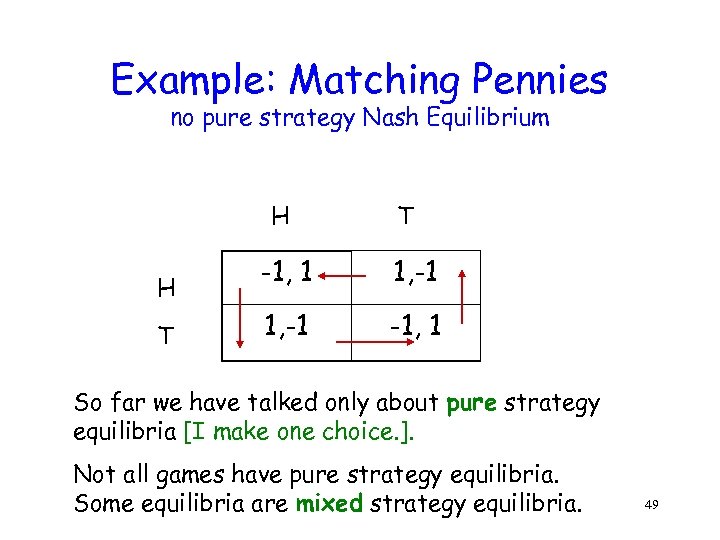

Example: Matching Pennies (no pure strategy Nash equilibrium)

| Player 1 \ Player 2 | H | T |
|---|---|---|
| H | -1, 1 | 1, -1 |
| T | 1, -1 | -1, 1 |

So far we have talked only about pure strategy equilibria [I make one choice.]. Not all games have pure strategy equilibria. Some equilibria are mixed strategy equilibria. 49
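You can check by brute force whether a game has a pure strategy equilibrium. A cell is an equilibrium when neither player gains by switching alone. Below is a sketch. It assumes the bimatrix is stored as two nested lists: A for the row player's payoffs and B for the column player's payoffs.

```python
def pure_nash_equilibria(A, B):
    """Return all (row, col) cells that are pure strategy Nash equilibria.

    A[i][j] and B[i][j] are the row and column player's payoffs when the row
    player picks i and the column player picks j.
    """
    rows, cols = len(A), len(A[0])
    equilibria = []
    for i in range(rows):
        for j in range(cols):
            # Row player cannot do better by switching rows, given column j.
            row_ok = all(A[i][j] >= A[k][j] for k in range(rows))
            # Column player cannot do better by switching columns, given row i.
            col_ok = all(B[i][j] >= B[i][l] for l in range(cols))
            if row_ok and col_ok:
                equilibria.append((i, j))
    return equilibria


# Matching pennies (index 0 = H, 1 = T).
A = [[-1, 1], [1, -1]]
B = [[1, -1], [-1, 1]]
print(pure_nash_equilibria(A, B))  # []
```

For contrast, the Bach/Stravinsky game (A = [[2, 0], [0, 1]], B = [[1, 0], [0, 2]]) returns [(0, 0), (1, 1)]. That game has two pure equilibria, and it also has a mixed one.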

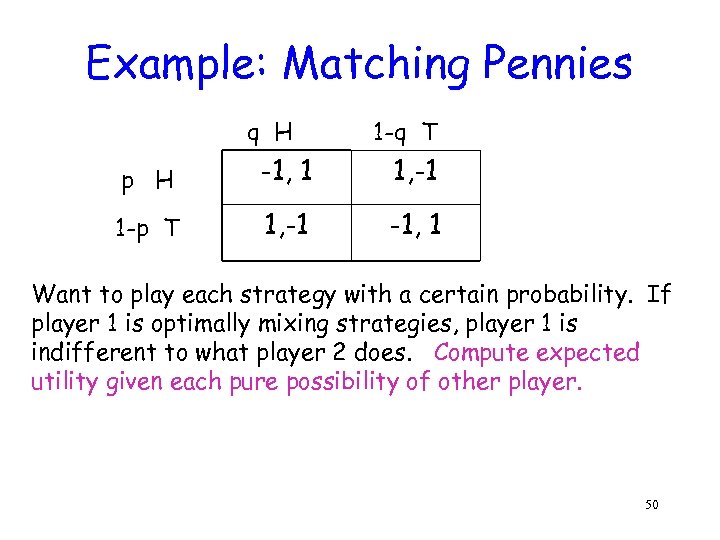

Example: Matching Pennies

| Player 1 \ Player 2 | H (q) | T (1 − q) |
|---|---|---|
| H (p) | -1, 1 | 1, -1 |
| T (1 − p) | 1, -1 | -1, 1 |

We want to play each strategy with a certain probability. If player 2 is optimally mixing, player 1 is indifferent between her own pure strategies. So compute player 1’s expected utility from each of her pure strategies, given player 2’s mix. 50

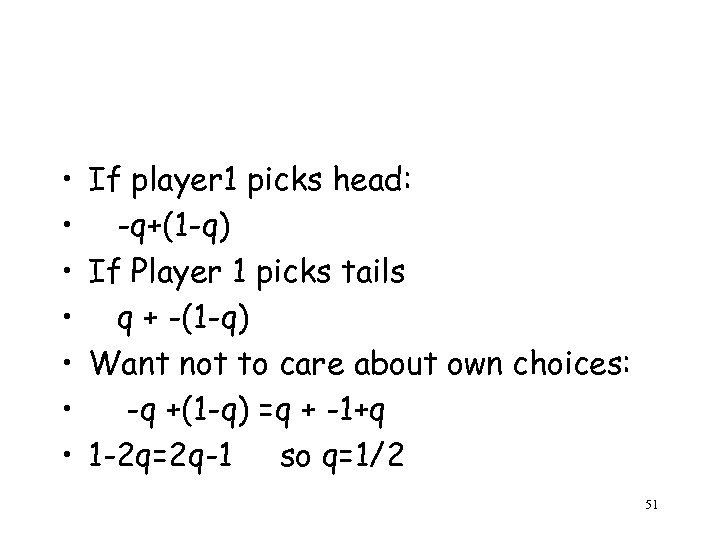

• If player 1 picks heads: −q + (1 − q) • If player 1 picks tails: q − (1 − q) • We want player 1 not to care about her own choice: −q + (1 − q) = q − (1 − q), i.e. 1 − 2q = 2q − 1, so q = 1/2 51

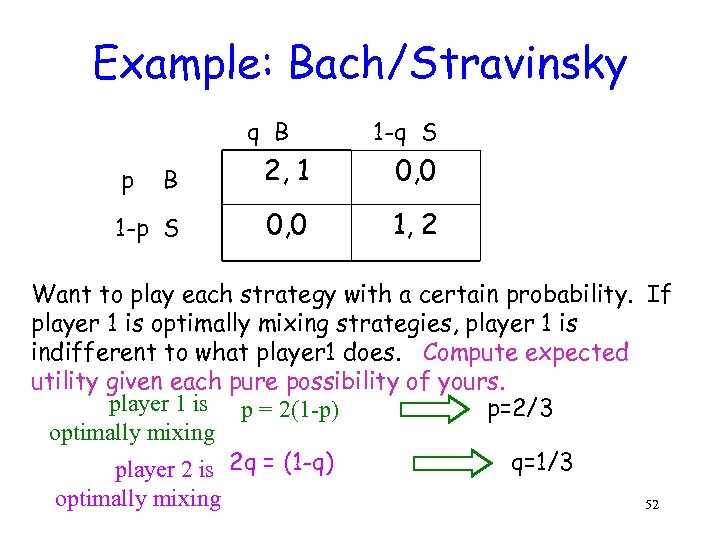

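Example: Bach/Stravinsky

| Player 1 \ Player 2 | B (q) | S (1 − q) |
|---|---|---|
| B (p) | 2, 1 | 0, 0 |
| S (1 − p) | 0, 0 | 1, 2 |

We want to play each strategy with a certain probability. If player 1 is optimally mixing, player 2 is indifferent between her own pure strategies, and vice versa. Compute each player’s expected utility for each of her pure strategies, given the other player’s mix. • Player 1 is optimally mixing when player 2’s payoffs from B and S are equal: p = 2(1 − p), so p = 2/3. • Player 2 is optimally mixing when player 1’s payoffs from B and S are equal: 2q = 1 − q, so q = 1/3. 52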
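The two indifference conditions can be solved in closed form for any 2 × 2 game. Below is a sketch with exact fractions. It assumes the game has a fully mixed equilibrium, and the function raises an error otherwise. As in the table, p is the probability of the first row and q the probability of the first column. The function name is hypothetical.

```python
from fractions import Fraction


def mixed_equilibrium_2x2(A, B):
    """Fully mixed equilibrium (p, q) of a 2 x 2 bimatrix game via indifference.

    q makes the row player indifferent:    q*A00 + (1-q)*A01 = q*A10 + (1-q)*A11
    p makes the column player indifferent: p*B00 + (1-p)*B10 = p*B01 + (1-p)*B11
    """
    A = [[Fraction(v) for v in row] for row in A]
    B = [[Fraction(v) for v in row] for row in B]
    den_q = A[0][0] - A[0][1] - A[1][0] + A[1][1]
    den_p = B[0][0] - B[1][0] - B[0][1] + B[1][1]
    if den_q == 0 or den_p == 0:
        raise ValueError("indifference does not pin down a unique mix")
    q = (A[1][1] - A[0][1]) / den_q
    p = (B[1][1] - B[1][0]) / den_p
    if not (0 <= p <= 1 and 0 <= q <= 1):
        raise ValueError("no fully mixed equilibrium: a solution lies outside [0, 1]")
    return p, q


# Bach/Stravinsky: rows and columns ordered (B, S).
print(mixed_equilibrium_2x2([[2, 0], [0, 1]], [[1, 0], [0, 2]]))  # p = 2/3, q = 1/3
```

The same function also handles matching pennies (p = q = 1/2). It handles the employee monitoring game later in the chapter as well. With rows (Work, Shirk) and columns (Monitor, No Monitor), it gives p = 9/10 work and q = 1/2 monitor.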

“I Used to Think I Was Indecisive - But Now I’m Not So Sure” -Anonymous 53

Mixed Strategies • Unreasonable predictors of one-time human interaction • Reasonable predictors of long-term proportions 54

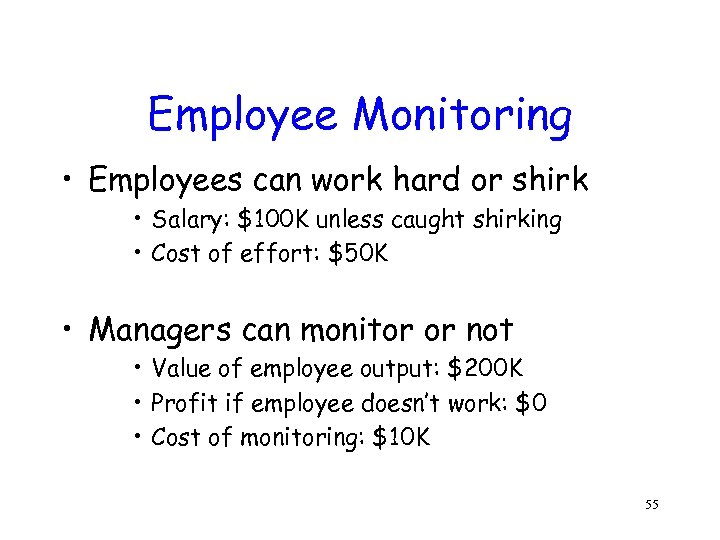

Employee Monitoring • Employees can work hard or shirk • Salary: $100 K unless caught shirking • Cost of effort: $50 K • Managers can monitor or not • Value of employee output: $200 K • Profit if employee doesn’t work: $0 • Cost of monitoring: $10 K 55
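The Employee Monitoring payoff matrix follows from these primitives. Below is a sketch. It assumes two things: a shirker who is caught is paid nothing and produces nothing, and the manager pays the monitoring cost whenever she monitors. The parameter names are ours.

```python
def monitoring_payoffs(salary=100, effort_cost=50, output=200, monitoring_cost=10):
    """Payoffs in $K as (employee, manager) for each (employee action, manager action)."""
    table = {}
    for action in ("work", "shirk"):
        for check in ("monitor", "no monitor"):
            caught = action == "shirk" and check == "monitor"
            wage = 0 if caught else salary
            employee = wage - (effort_cost if action == "work" else 0)
            revenue = output if action == "work" else 0
            manager = revenue - wage - (monitoring_cost if check == "monitor" else 0)
            table[(action, check)] = (employee, manager)
    return table


for cell, payoff in monitoring_payoffs().items():
    print(cell, payoff)
```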

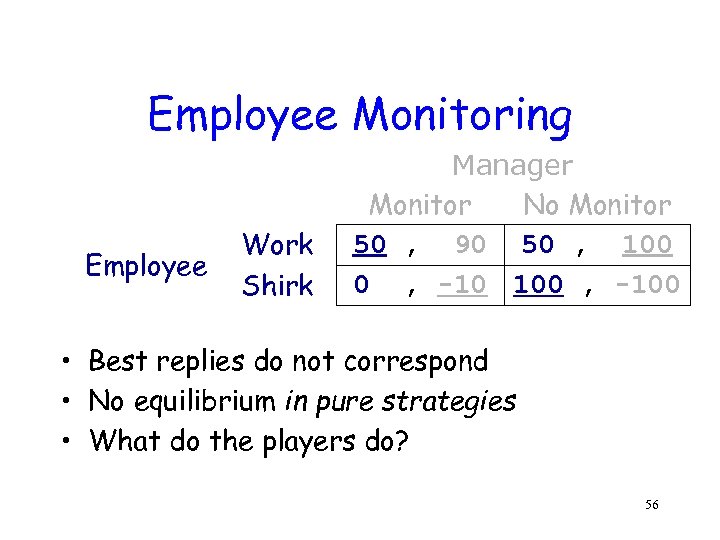

Employee Monitoring (payoffs in $K: employee, manager)

| Employee \ Manager | Monitor | No Monitor |
|---|---|---|
| Work | 50, 90 | 50, 100 |
| Shirk | 0, -10 | 100, -100 |

• Best replies do not correspond • No equilibrium in pure strategies • What do the players do? 56

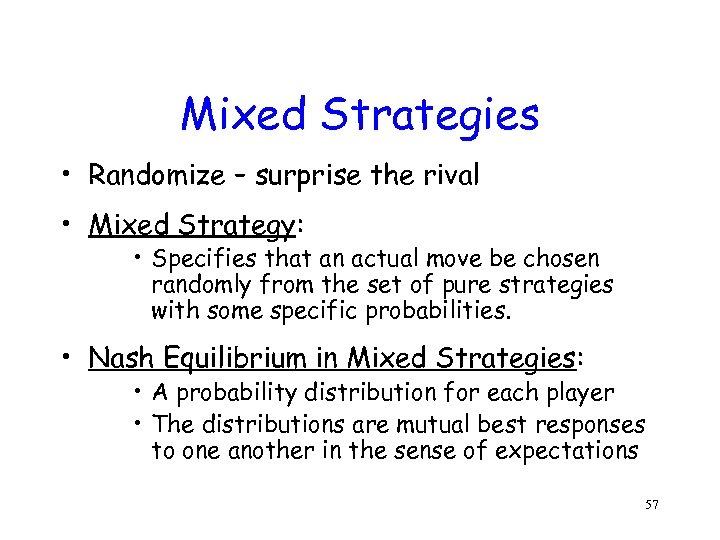

Mixed Strategies • Randomize – surprise the rival • Mixed Strategy: • Specifies that an actual move be chosen randomly from the set of pure strategies with some specific probabilities. • Nash Equilibrium in Mixed Strategies: • A probability distribution for each player • The distributions are mutual best responses to one another in the sense of expectations 57

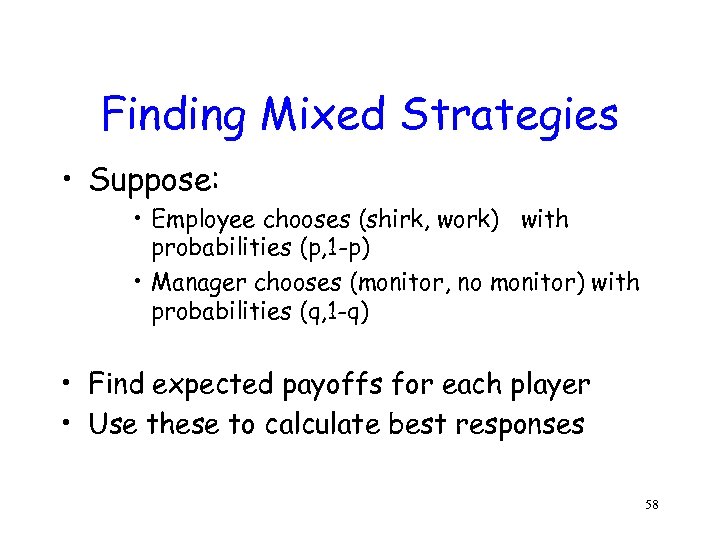

Finding Mixed Strategies • Suppose: • Employee chooses (shirk, work) with probabilities (p, 1 -p) • Manager chooses (monitor, no monitor) with probabilities (q, 1 -q) • Find expected payoffs for each player • Use these to calculate best responses 58

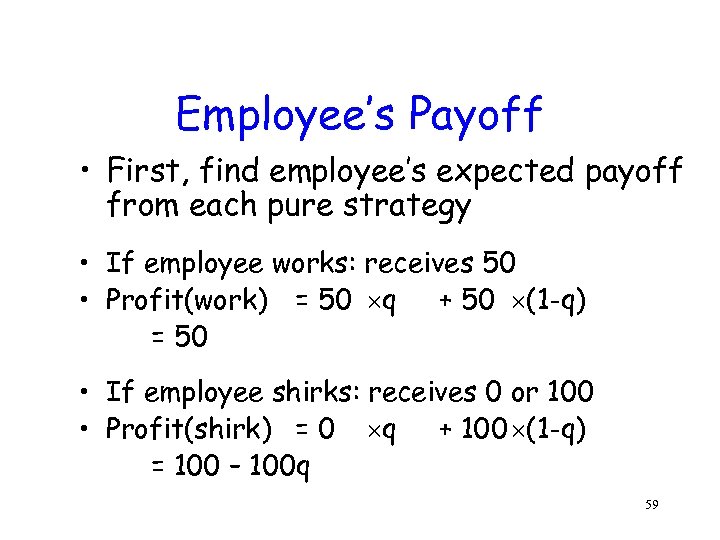

Employee’s Payoff • First, find the employee’s expected payoff from each pure strategy • If the employee works: receives 50 • Profit(work) = 50q + 50(1 − q) = 50 • If the employee shirks: receives 0 or 100 • Profit(shirk) = 0q + 100(1 − q) = 100 − 100q 59

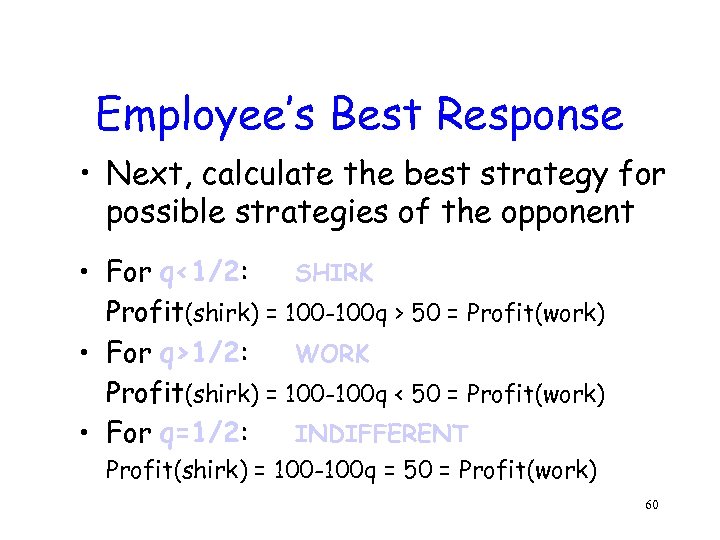

Employee’s Best Response • Next, calculate the best strategy for each possible strategy of the opponent • For q < 1/2: SHIRK, since Profit(shirk) = 100 − 100q > 50 = Profit(work) • For q > 1/2: WORK, since Profit(shirk) = 100 − 100q < 50 = Profit(work) • For q = 1/2: INDIFFERENT, since Profit(shirk) = 100 − 100q = 50 = Profit(work) 60

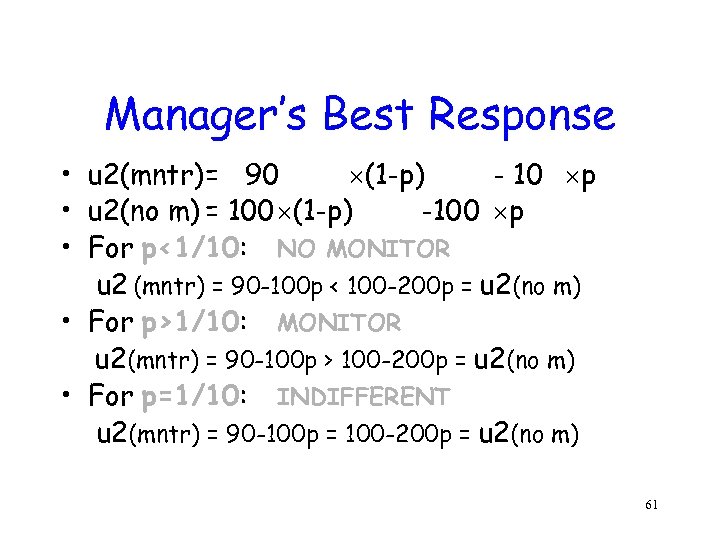

Manager’s Best Response • u2(monitor) = 90(1 − p) − 10p = 90 − 100p • u2(no monitor) = 100(1 − p) − 100p = 100 − 200p • For p < 1/10: NO MONITOR, since u2(monitor) = 90 − 100p < 100 − 200p = u2(no monitor) • For p > 1/10: MONITOR, since u2(monitor) = 90 − 100p > 100 − 200p = u2(no monitor) • For p = 1/10: INDIFFERENT, since u2(monitor) = 90 − 100p = 100 − 200p = u2(no monitor) 61
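The two best-response rules can be coded directly. Iterating pure best replies shows why no pure equilibrium exists: the players chase each other around a cycle. Below is a sketch. It assumes p is the probability that the employee shirks and q the probability that the manager monitors. Exact fractions make the indifference points testable. The function names are hypothetical.

```python
from fractions import Fraction


def employee_best_response(q):
    """Best reply to a manager who monitors with probability q."""
    work, shirk = 50, 100 - 100 * q
    if shirk > work:
        return "shirk"
    if shirk < work:
        return "work"
    return "indifferent"


def manager_best_response(p):
    """Best reply to an employee who shirks with probability p."""
    monitor, no_monitor = 90 - 100 * p, 100 - 200 * p
    if monitor > no_monitor:
        return "monitor"
    if monitor < no_monitor:
        return "no monitor"
    return "indifferent"


def pure_reply_path(rounds=4):
    """Alternate pure best replies, starting from an employee who shirks."""
    p = 1  # employee shirks for sure
    path = []
    for _ in range(rounds):
        q = 1 if manager_best_response(p) == "monitor" else 0
        p = 1 if employee_best_response(q) == "shirk" else 0
        path.append((p, q))  # (shirk probability, monitor probability) after the round
    return path


print(employee_best_response(Fraction(1, 2)), manager_best_response(Fraction(1, 10)))
print(pure_reply_path())  # [(0, 1), (1, 0), (0, 1), (1, 0)]
```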

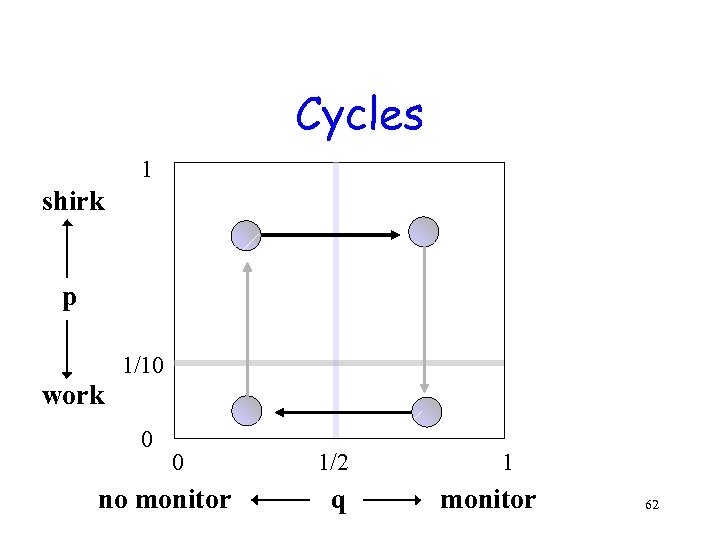

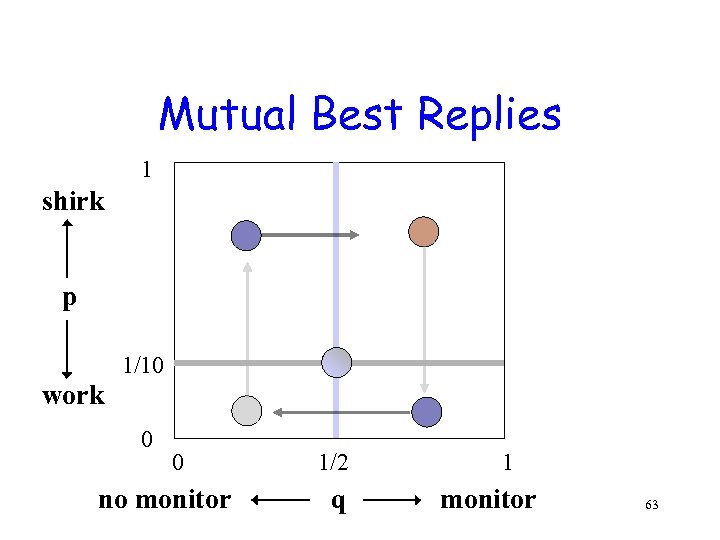

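Cycles [Figure: best-response diagram. Horizontal axis: q, the probability of monitoring, from 0 (no monitor) to 1 (monitor), marked at 1/2. Vertical axis: p, the probability of shirking, from 0 (work) to 1 (shirk), marked at 1/10.] 62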

Mutual Best Replies [Figure: the same diagram. q runs from 0 (no monitor) to 1 (monitor), marked at 1/2; p runs from 0 (work) to 1 (shirk), marked at 1/10.] 63

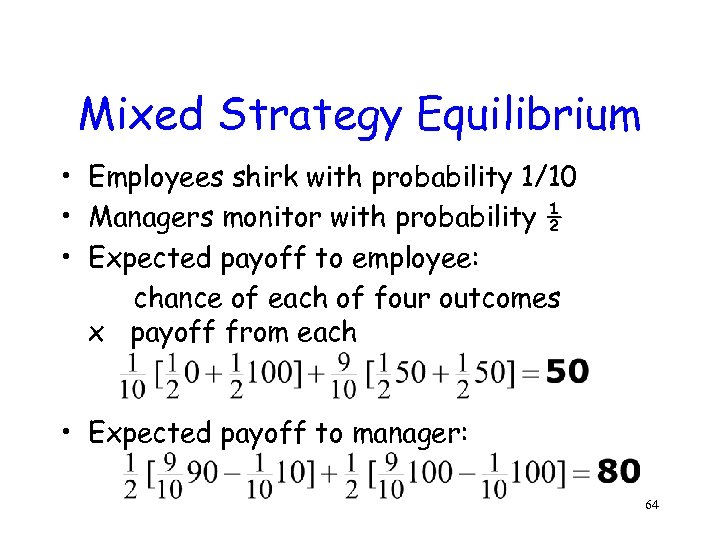

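Mixed Strategy Equilibrium • Employees shirk with probability 1/10 • Managers monitor with probability 1/2 • Expected payoff to employee: chance of each of the four outcomes × payoff from each = (9/10)(1/2)(50) + (9/10)(1/2)(50) + (1/10)(1/2)(0) + (1/10)(1/2)(100) = 50 • Expected payoff to manager: (9/10)(1/2)(90) + (9/10)(1/2)(100) + (1/10)(1/2)(−10) + (1/10)(1/2)(−100) = 80 64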
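The four-outcome sum can be checked with exact arithmetic. Below is a sketch that uses this example's payoff matrix, with payoffs given as (employee, manager) in $K, together with the equilibrium mix. The function name is ours.

```python
from fractions import Fraction

PAYOFFS = {
    ("work", "monitor"): (50, 90),
    ("work", "no monitor"): (50, 100),
    ("shirk", "monitor"): (0, -10),
    ("shirk", "no monitor"): (100, -100),
}


def expected_payoffs(p_shirk, q_monitor):
    """(employee, manager) expected payoffs: sum over outcomes of chance x payoff."""
    employee_mix = {"work": 1 - p_shirk, "shirk": p_shirk}
    manager_mix = {"monitor": q_monitor, "no monitor": 1 - q_monitor}
    employee = manager = 0
    for (action, check), (u_employee, u_manager) in PAYOFFS.items():
        chance = employee_mix[action] * manager_mix[check]
        employee += chance * u_employee
        manager += chance * u_manager
    return employee, manager


print(expected_payoffs(Fraction(1, 10), Fraction(1, 2)))  # 50 for the employee, 80 for the manager
```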

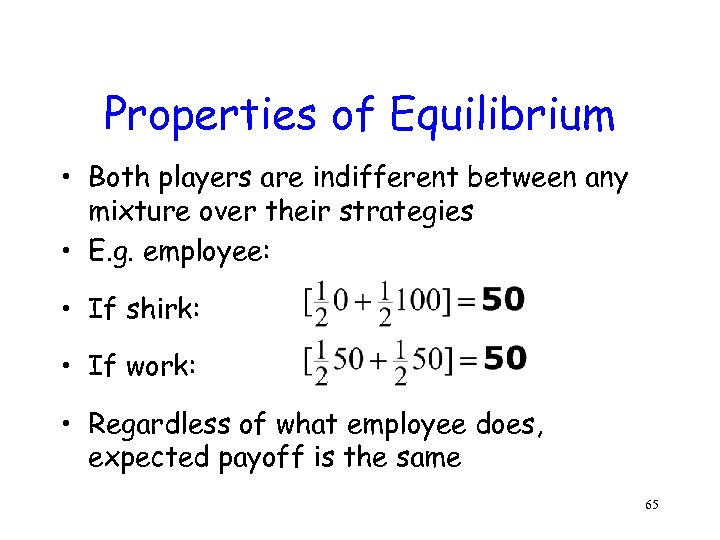

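Properties of Equilibrium • Given the other player’s equilibrium mix, each player is indifferent among all mixtures over her strategies • E.g. the employee, when the manager monitors with probability 1/2: • If shirk: (1/2)(0) + (1/2)(100) = 50 • If work: (1/2)(50) + (1/2)(50) = 50 • Regardless of what the employee does, the expected payoff is the same 65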

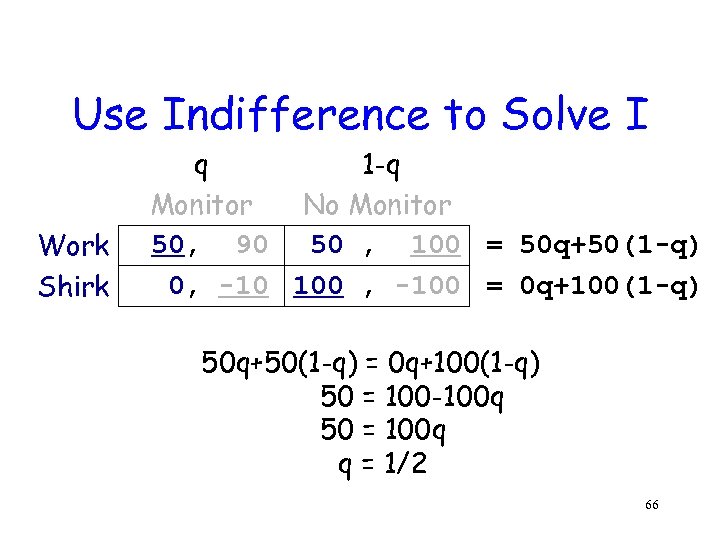

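Use Indifference to Solve I

| Employee \ Manager | Monitor (q) | No Monitor (1 − q) | Employee’s expected payoff |
|---|---|---|---|
| Work | 50, 90 | 50, 100 | 50q + 50(1 − q) |
| Shirk | 0, -10 | 100, -100 | 0q + 100(1 − q) |

50q + 50(1 − q) = 0q + 100(1 − q) ⇒ 50 = 100 − 100q ⇒ 50 = 100q ⇒ q = 1/2 66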

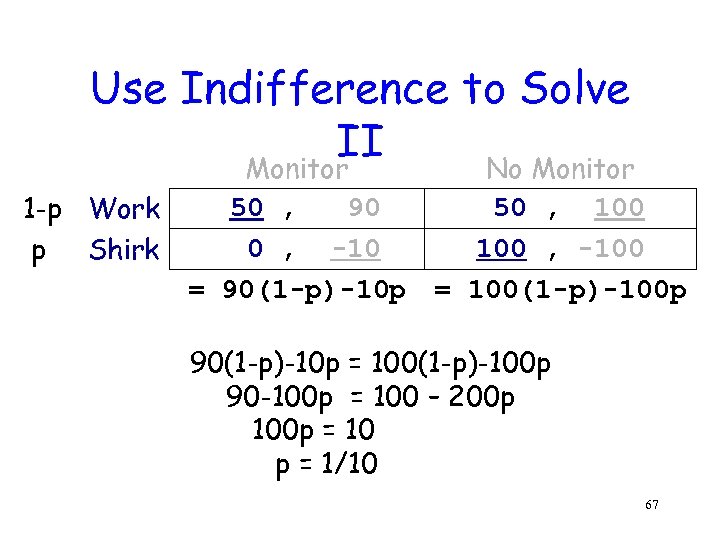

Use Indifference to Solve II

| Employee \ Manager | Monitor | No Monitor |
|---|---|---|
| Work (1 − p) | 50, 90 | 50, 100 |
| Shirk (p) | 0, -10 | 100, -100 |
| Manager’s expected payoff | 90(1 − p) − 10p | 100(1 − p) − 100p |

90(1 − p) − 10p = 100(1 − p) − 100p ⇒ 90 − 100p = 100 − 200p ⇒ 100p = 10 ⇒ p = 1/10 67

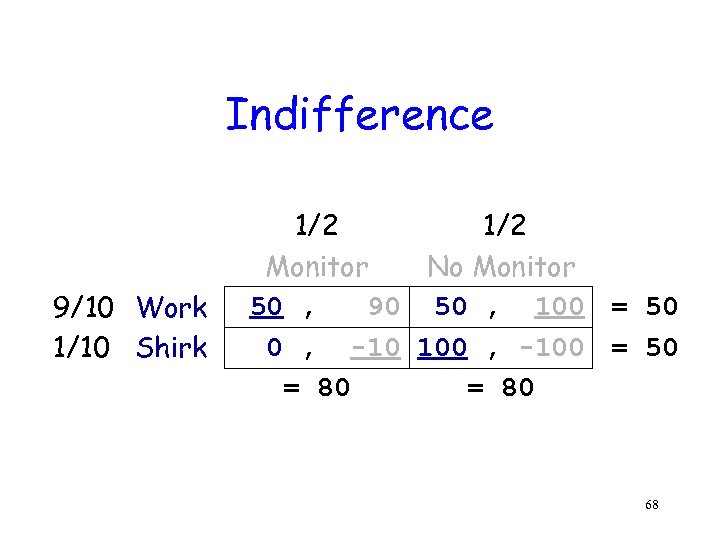

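Indifference

| Employee \ Manager | Monitor (1/2) | No Monitor (1/2) | Employee’s expected payoff |
|---|---|---|---|
| Work (9/10) | 50, 90 | 50, 100 | 50 |
| Shirk (1/10) | 0, -10 | 100, -100 | 50 |
| Manager’s expected payoff | 80 | 80 | |

68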

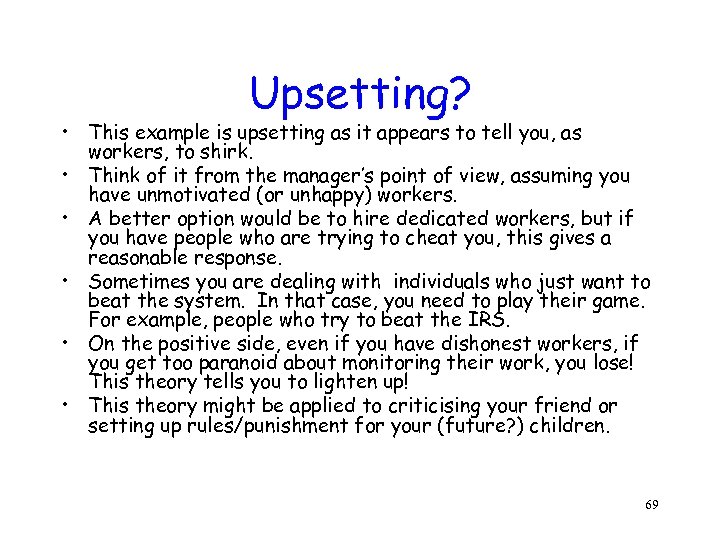

Upsetting? • This example is upsetting because it appears to tell you, as workers, to shirk. • Think of it from the manager’s point of view, assuming you have unmotivated (or unhappy) workers. • A better option would be to hire dedicated workers, but if you have people who are trying to cheat you, this gives a reasonable response. • Sometimes you are dealing with individuals who just want to beat the system, such as people who try to beat the IRS. In that case, you need to play their game. • On the positive side, even if you have dishonest workers, if you get too paranoid about monitoring their work, you lose! This theory tells you to lighten up! • This theory might be applied to criticising your friend or setting up rules and punishments for your (future?) children. 69

Why Do We Mix? • In equilibrium a player does not care which mixture she uses, so she picks the mixture that makes her opponent indifferent! • COMMANDMENT: Use the mixed strategy that keeps your opponent guessing. 70

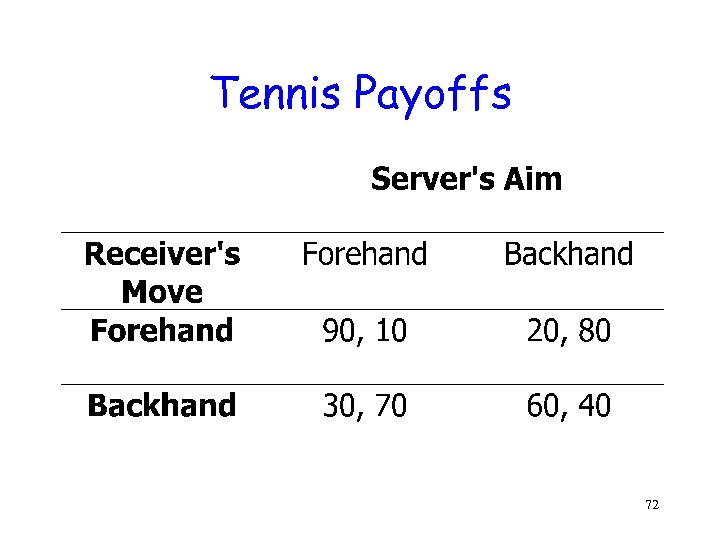

Mixed Strategy Equilibria • Anyone for tennis? – Should you serve to the forehand or the backhand? 71

Tennis Payoffs 72

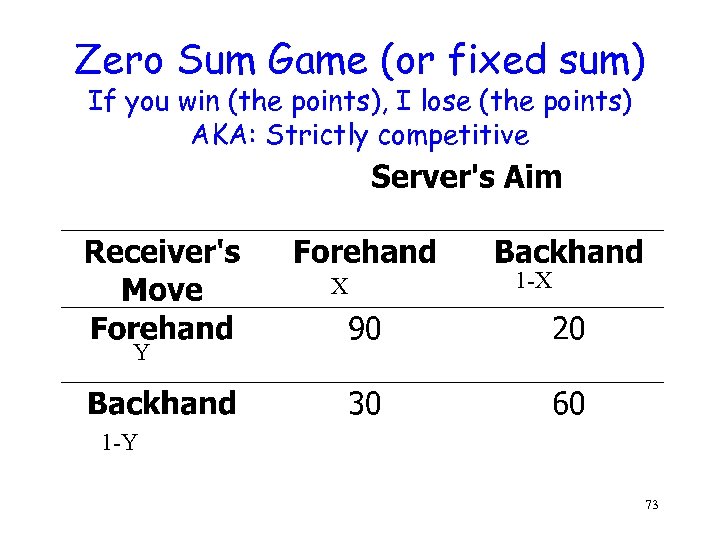

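Zero Sum Game (or fixed sum) • If you win (the points), I lose (the points) • AKA: strictly competitive • The server aims forehand with probability X and backhand with probability 1 − X; the receiver anticipates forehand with probability Y and backhand with probability 1 − Y 73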

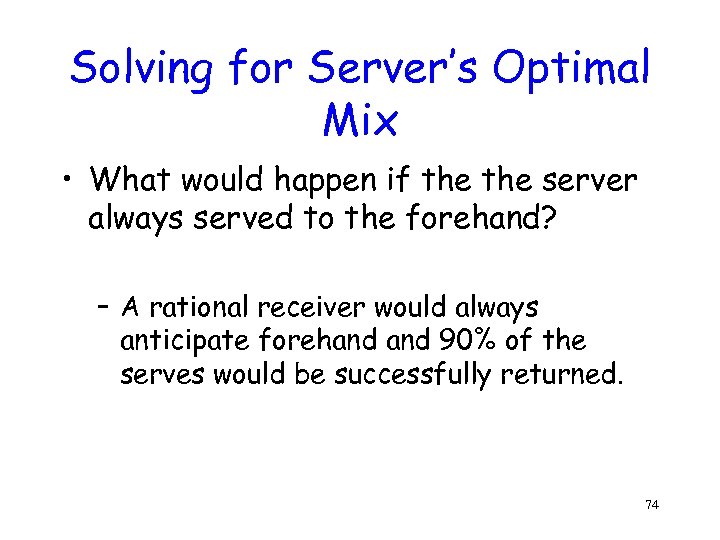

Solving for Server’s Optimal Mix • What would happen if the server always served to the forehand? – A rational receiver would always anticipate forehand, and 90% of the serves would be successfully returned. 74

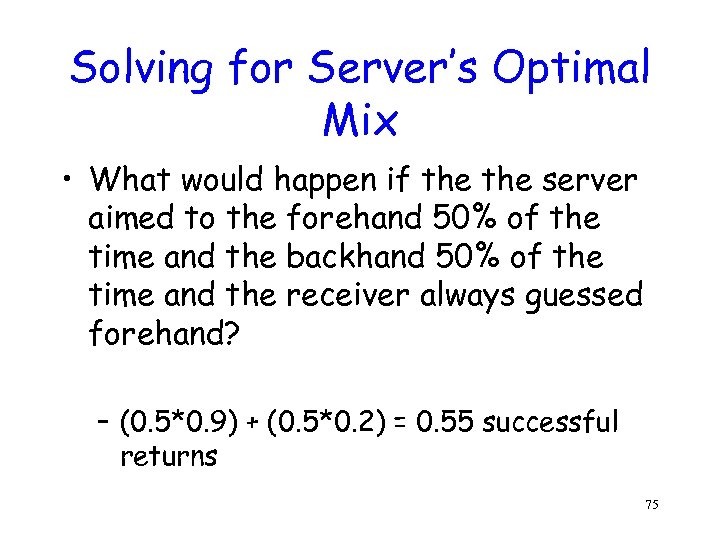

Solving for Server’s Optimal Mix • What would happen if the server aimed to the forehand 50% of the time and to the backhand 50% of the time, and the receiver always guessed forehand? – (0.5 × 0.9) + (0.5 × 0.2) = 0.55, i.e. 55% successful returns 75

Solving for Server’s Optimal Mix • What is the best mix for each player? 76

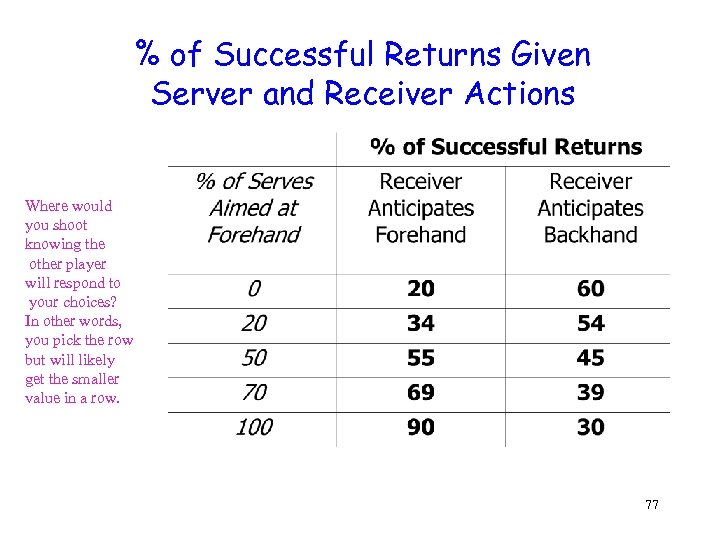

% of Successful Returns Given Server and Receiver Actions • Where would you aim, knowing the other player will respond to your choice? In other words, you pick the row, but you will likely get the outcome in that row that is worst for you. 77

% of Successful Returns Given Server and Receiver Actions • If 20% of the serves are aimed at the forehand and the receiver anticipates forehand, then the fraction of successful returns is: – (0.2 × 0.9) + (0.8 × 0.2) = 0.34 – Therefore, 34% of the serves are returned successfully. 78

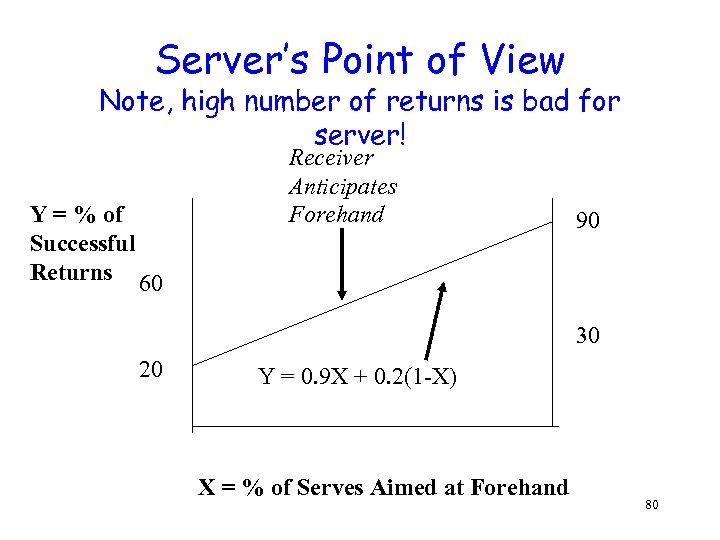

% of Successful Returns Given Server and Receiver Actions • More generally, when the receiver anticipates forehand, the % of successful returns is given by: – X = % of serves aimed at forehand – 1 − X = % of serves aimed at backhand – % of successful returns = 0.90X + 0.20(1 − X) 79

Server’s Point of View • Note: a high number of returns is bad for the server! • [Figure: the line Y = 0.9X + 0.2(1 − X), labeled "Receiver Anticipates Forehand". Horizontal axis: X = % of serves aimed at forehand. Vertical axis: Y = % of successful returns, marked at 20, 30, 60 and 90.] 80

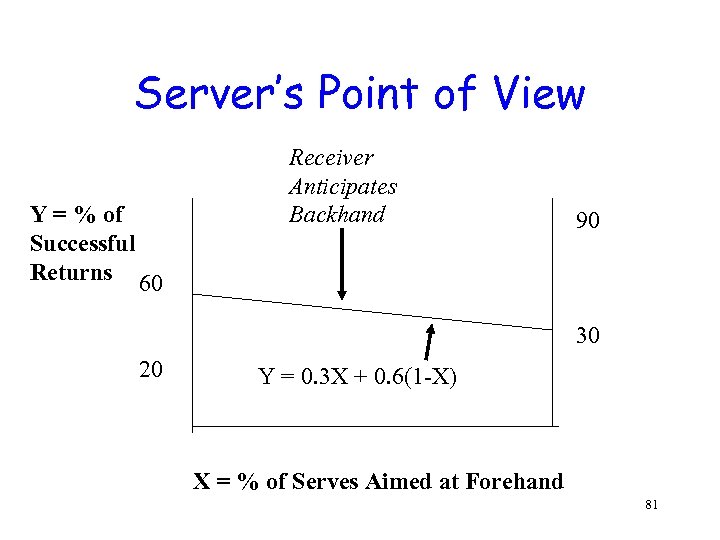

Server’s Point of View • [Figure: the line Y = 0.3X + 0.6(1 − X), labeled "Receiver Anticipates Backhand". Horizontal axis: X = % of serves aimed at forehand. Vertical axis: Y = % of successful returns, marked at 20, 30, 60 and 90.] 81

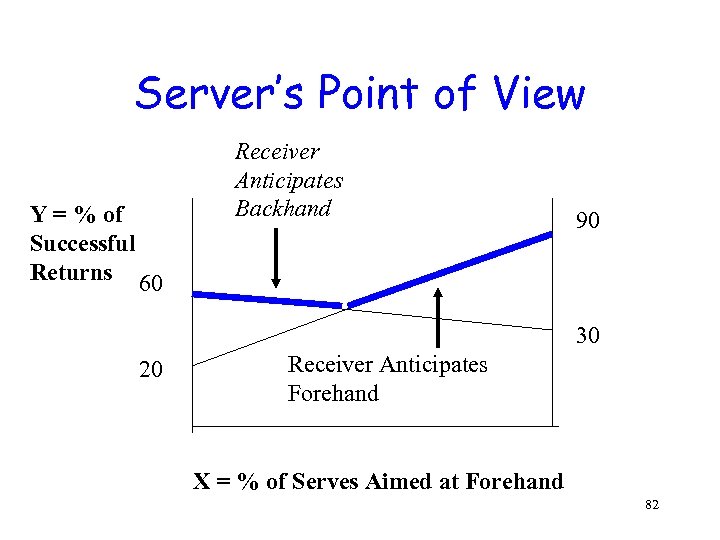

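Server’s Point of View • [Figure: both lines, "Receiver Anticipates Backhand" and "Receiver Anticipates Forehand", on the same axes. Horizontal axis: X = % of serves aimed at forehand. Vertical axis: Y = % of successful returns, marked at 20, 30, 60 and 90.] 82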

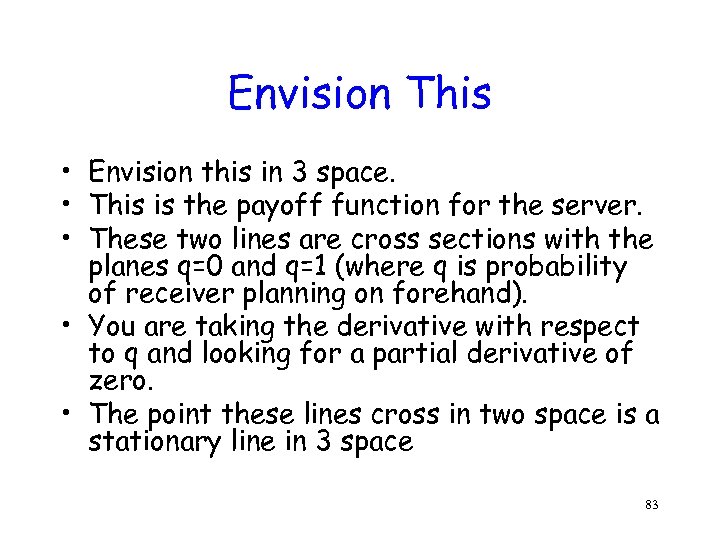

Envision This • Envision this in three-dimensional space. • This is the payoff function for the server, a function of X and q. • These two lines are its cross sections with the planes q = 0 and q = 1 (where q is the probability that the receiver plans on forehand). • You are taking the partial derivative with respect to q and looking for where it is zero. • The point where these lines cross in two dimensions is a stationary line in three dimensions: along it, the payoff does not change with q. 83
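The "stationary line" can be checked numerically. Below is a sketch. It assumes `returns(x, q)` gives the expected fraction of successful returns when the server aims forehand with probability x and the receiver anticipates forehand with probability q. The two lines on the preceding slides are the slices q = 1 and q = 0. The function names are ours.

```python
def returns(x, q):
    """Expected fraction of serves returned.

    x: probability the server aims at the forehand.
    q: probability the receiver anticipates the forehand.
    """
    if_anticipate_forehand = 0.9 * x + 0.2 * (1 - x)  # slice q = 1
    if_anticipate_backhand = 0.3 * x + 0.6 * (1 - x)  # slice q = 0
    return q * if_anticipate_forehand + (1 - q) * if_anticipate_backhand


def d_returns_dq(x):
    """Partial derivative of returns(x, q) with respect to q (it does not depend on q).

    Algebraically this simplifies to x - 0.4, which is zero only at x = 0.4.
    """
    return (0.9 * x + 0.2 * (1 - x)) - (0.3 * x + 0.6 * (1 - x))


for q in (0.0, 0.5, 1.0):
    print(q, returns(0.4, q))  # about 0.48 for every q along the line x = 0.4
```

Because the surface is linear in q, the partial derivative is the same for every q. So the set where it is zero is a whole line, x = 0.4.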

Best Response • Where can the server minimize the receiver’s maximum payoff? 84

Solving for Mixed Strategy Equilibrium • Set the linear equations equal to each other and solve: – 0.9X + 0.2(1 − X) = 0.3X + 0.6(1 − X) – 0.7X + 0.2 = 0.6 − 0.3X – X = 0.40 85
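The same X can be found by brute force. For each mix, assume the receiver picks whichever anticipation returns more serves. Then choose the mix with the smallest such worst case. Below is a sketch over a grid of mixes. The grid step is an arbitrary choice.

```python
def server_minimax(steps=100):
    """Grid-search the server's forehand share x to minimize the worst-case return rate."""
    best_x, best_worst = None, float("inf")
    for k in range(steps + 1):
        x = k / steps
        worst = max(0.9 * x + 0.2 * (1 - x),   # receiver anticipates forehand
                    0.3 * x + 0.6 * (1 - x))   # receiver anticipates backhand
        if worst < best_worst:
            best_x, best_worst = x, worst
    return best_x, best_worst


print(server_minimax())  # x near 0.40 with a worst case near 0.48
```

The minimum of the upper envelope falls exactly where the two lines cross. That is why setting them equal gives the server's optimal mix.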

Solving for Mixed Strategy Equilibrium • If the server mixes his serves 40% forehand / 60% backhand, the receiver is indifferent between anticipating forehand and anticipating backhand, because her payoff (% of successful returns) is the same. 86

Solving for the Optimal Mix • Now we have to do the same thing from the receiver’s point of view to determine how often the receiver should anticipate forehand/backhand. • In equilibrium, if player A is optimally mixing then player B is indifferent to the action player B selects. If a player is not optimally mixing then he can be taken advantage of by his opponent. This fact allows us to easily solve for the optimal mix in zero sum, 2 x 2 games. 87

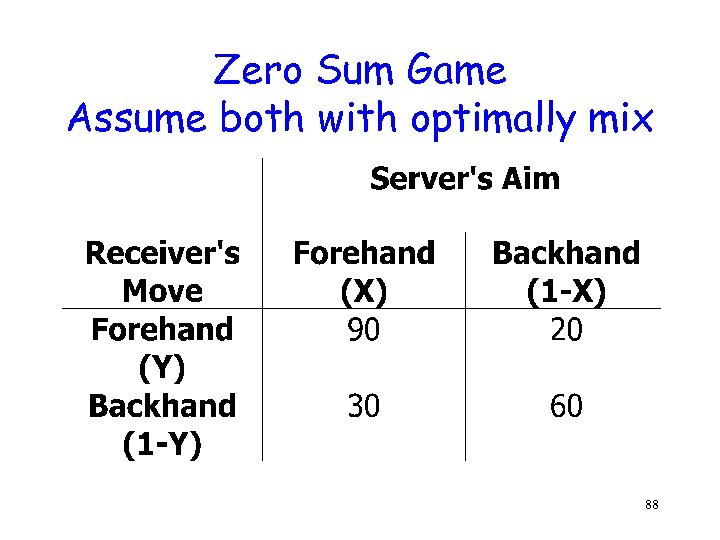

Zero Sum Game • Assume both will optimally mix 88

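Receiver’s Optimal Mix • If the receiver is optimally mixing her anticipation of forehand (Y) and backhand (1 − Y), then the server is indifferent between aiming forehand and aiming backhand, because his payoff is the same. Here the server’s payoff is the % of points he wins, i.e. 100 minus the % of successful returns: • 10Y + 70(1 − Y) = 80Y + 40(1 − Y) • 10Y + 70 − 70Y = 80Y + 40 − 40Y • 70 − 60Y = 40 + 40Y, so 100Y = 30 • Y = 30/100 89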

Receiver’s Optimal Mix • This means that if the receiver is optimally mixing then the server’s payoff for aiming forehand is equal to his payoff for aiming backhand. 90

Similarly: Server’s Optimal Mix • If the server is optimally mixing his forehand (X) and backhand (1 − X), then the receiver is indifferent between anticipating forehand and anticipating backhand, because her payoff is the same. • Solving for X: • 90X + 20(1 − X) = 30X + 60(1 − X) • 90X + 20 − 20X = 30X + 60 − 60X • 70X + 20 = −30X + 60 • 100X = 40, so X = 0.40 • Thus the server should serve to the forehand 40% of the time and to the backhand 60%. 91

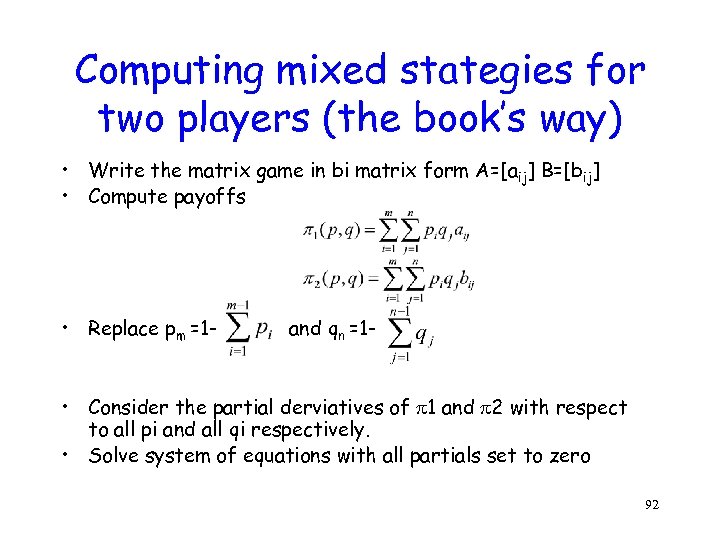

Computing mixed strategies for two players (the book’s way) • Write the matrix game in bimatrix form $A = [a_{ij}]$, $B = [b_{ij}]$ • Compute the payoffs $\pi_1 = \sum_{i,j} p_i a_{ij} q_j$ and $\pi_2 = \sum_{i,j} p_i b_{ij} q_j$ • Replace $p_m = 1 - \sum_{i<m} p_i$ and $q_n = 1 - \sum_{j<n} q_j$ • Consider the partial derivatives of $\pi_1$ and $\pi_2$ with respect to all $p_i$ and all $q_j$, respectively • Solve the system of equations with all partials set to zero 92
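For 2 × 2 games, the recipe reduces to reading off four coefficients of a bilinear function. Below is a sketch. It assumes A and B are nested lists, with player 1 choosing rows (probabilities p, 1 − p) and player 2 choosing columns (q, 1 − q). The helper names are ours and do not come from the book.

```python
from fractions import Fraction


def bilinear_coefficients(M):
    """Expand pi(p, q) = sum_ij p_i M[i][j] q_j for a 2 x 2 matrix M,
    after substituting p_2 = 1 - p and q_2 = 1 - q, into
    c0 + cp*p + cq*q + cpq*p*q. Returns (c0, cp, cq, cpq)."""
    (a, b), (c, d) = M
    return d, b - d, c - d, a - b - c + d


def solve_by_partials(A, B):
    """Set d(pi_1)/dp = 0 and d(pi_2)/dq = 0 for a 2 x 2 bimatrix game (A, B).

    d(pi_1)/dp = cp1 + cpq1*q depends only on q, so it pins down player 2's mix;
    d(pi_2)/dq = cq2 + cpq2*p pins down player 1's mix.
    Assumes cpq1 and cpq2 are nonzero (otherwise there is no interior solution).
    """
    _, cp1, _, cpq1 = bilinear_coefficients(A)
    _, _, cq2, cpq2 = bilinear_coefficients(B)
    q = Fraction(-cp1) / cpq1
    p = Fraction(-cq2) / cpq2
    return (p, 1 - p), (q, 1 - q)


# Example 1: pi_1 = 3 p1 q1 + p2 q2 and pi_2 = p1 q1 + 4 p2 q2.
print(bilinear_coefficients([[3, 0], [0, 1]]))  # (1, -1, -1, 4)
print(solve_by_partials([[3, 0], [0, 1]], [[1, 0], [0, 4]]))
```

The coefficients printed for Example 1 match the expansion 1 − p1 − q1 + 4p1q1. The same call reproduces Example 2 and the tennis example.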

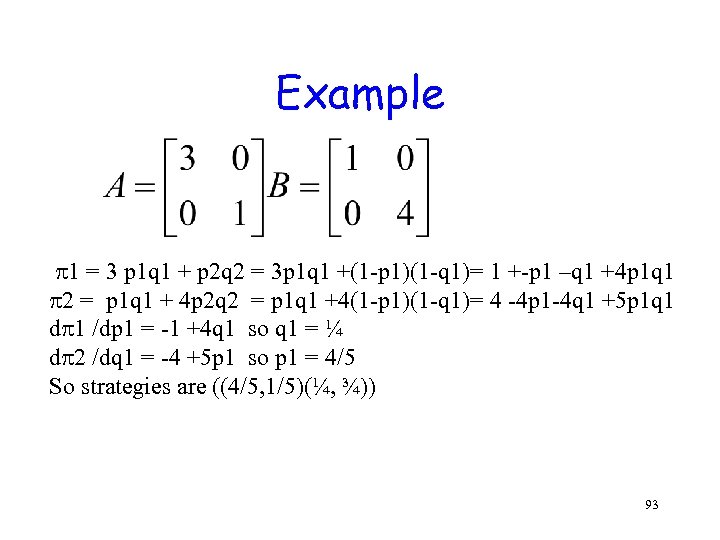

Example • $\pi_1 = 3p_1q_1 + p_2q_2 = 3p_1q_1 + (1-p_1)(1-q_1) = 1 - p_1 - q_1 + 4p_1q_1$ • $\pi_2 = p_1q_1 + 4p_2q_2 = p_1q_1 + 4(1-p_1)(1-q_1) = 4 - 4p_1 - 4q_1 + 5p_1q_1$ • $\partial\pi_1/\partial p_1 = -1 + 4q_1 = 0$, so $q_1 = 1/4$ • $\partial\pi_2/\partial q_1 = -4 + 5p_1 = 0$, so $p_1 = 4/5$ • So the strategies are $((4/5, 1/5), (1/4, 3/4))$ 93

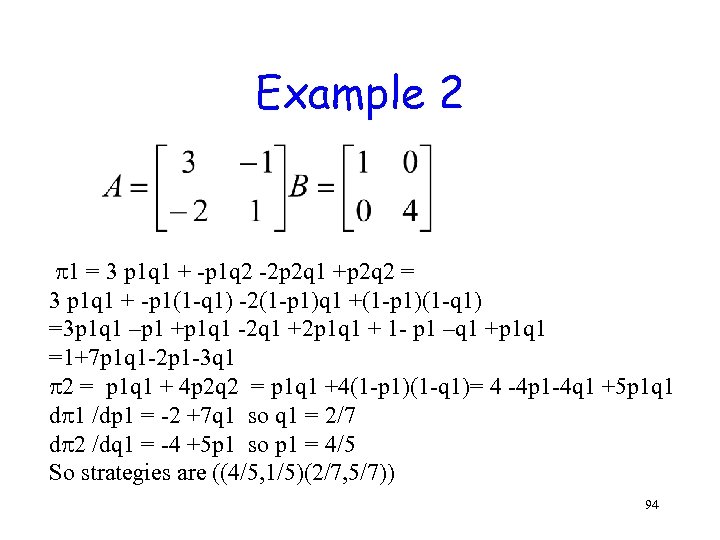

Example 2 • $\pi_1 = 3p_1q_1 - p_1q_2 - 2p_2q_1 + p_2q_2 = 3p_1q_1 - p_1(1-q_1) - 2(1-p_1)q_1 + (1-p_1)(1-q_1) = 3p_1q_1 - p_1 + p_1q_1 - 2q_1 + 2p_1q_1 + 1 - p_1 - q_1 + p_1q_1 = 1 + 7p_1q_1 - 2p_1 - 3q_1$ • $\pi_2 = p_1q_1 + 4p_2q_2 = p_1q_1 + 4(1-p_1)(1-q_1) = 4 - 4p_1 - 4q_1 + 5p_1q_1$ • $\partial\pi_1/\partial p_1 = -2 + 7q_1 = 0$, so $q_1 = 2/7$ • $\partial\pi_2/\partial q_1 = -4 + 5p_1 = 0$, so $p_1 = 4/5$ • So the strategies are $((4/5, 1/5), (2/7, 5/7))$ 94

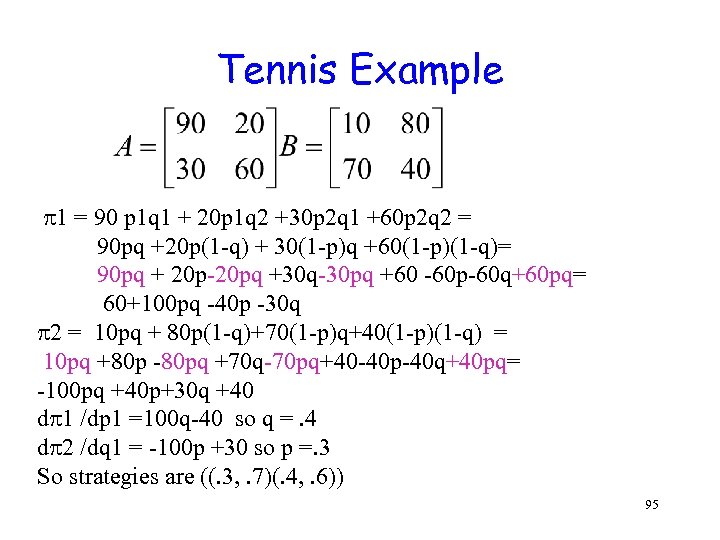

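Tennis Example (player 1 = receiver: p = probability of anticipating forehand, payoff = % of successful returns; player 2 = server: q = probability of aiming forehand, payoff = 100 − % of successful returns) • $\pi_1 = 90p_1q_1 + 20p_1q_2 + 30p_2q_1 + 60p_2q_2 = 90pq + 20p(1-q) + 30(1-p)q + 60(1-p)(1-q) = 90pq + 20p - 20pq + 30q - 30pq + 60 - 60p - 60q + 60pq = 60 + 100pq - 40p - 30q$ • $\pi_2 = 10pq + 80p(1-q) + 70(1-p)q + 40(1-p)(1-q) = 10pq + 80p - 80pq + 70q - 70pq + 40 - 40p - 40q + 40pq = -100pq + 40p + 30q + 40$ • $\partial\pi_1/\partial p = 100q - 40 = 0$, so $q = 0.4$ • $\partial\pi_2/\partial q = -100p + 30 = 0$, so $p = 0.3$ • So the strategies are $((0.3, 0.7), (0.4, 0.6))$ 95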

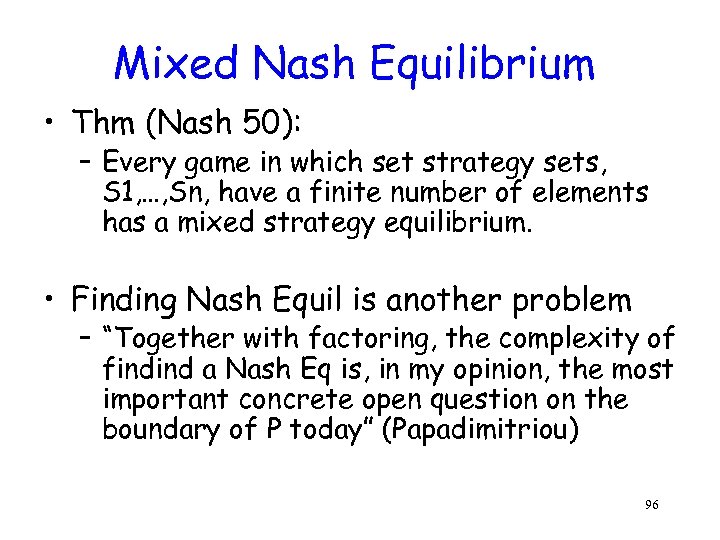

Mixed Nash Equilibrium • Thm (Nash 1950): – Every game with finitely many players in which the strategy sets S1, …, Sn each have a finite number of elements has a mixed strategy equilibrium. • Finding a Nash equilibrium is another problem – “Together with factoring, the complexity of finding a Nash Eq is, in my opinion, the most important concrete open question on the boundary of P today” (Papadimitriou) 96

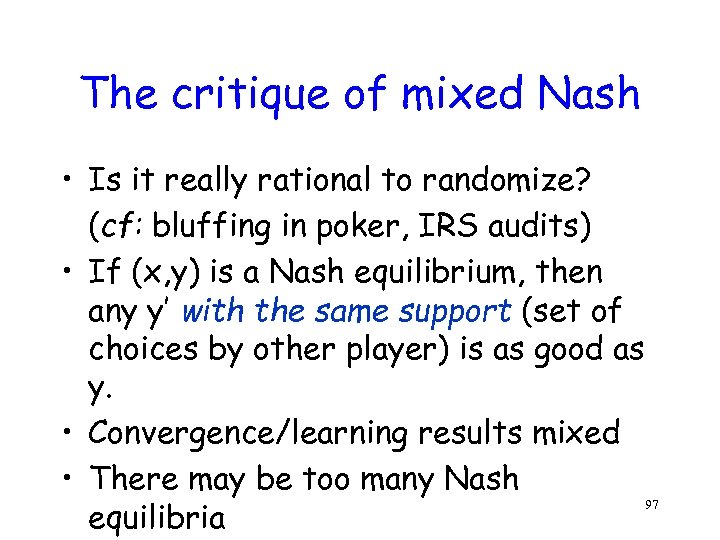

The critique of mixed Nash • Is it really rational to randomize? (cf. bluffing in poker, IRS audits) • If (x, y) is a Nash equilibrium, then any y’ with the same support (the set of choices the other player makes with positive probability) is as good a response to x as y is. • Convergence/learning results are mixed • There may be too many Nash equilibria 97

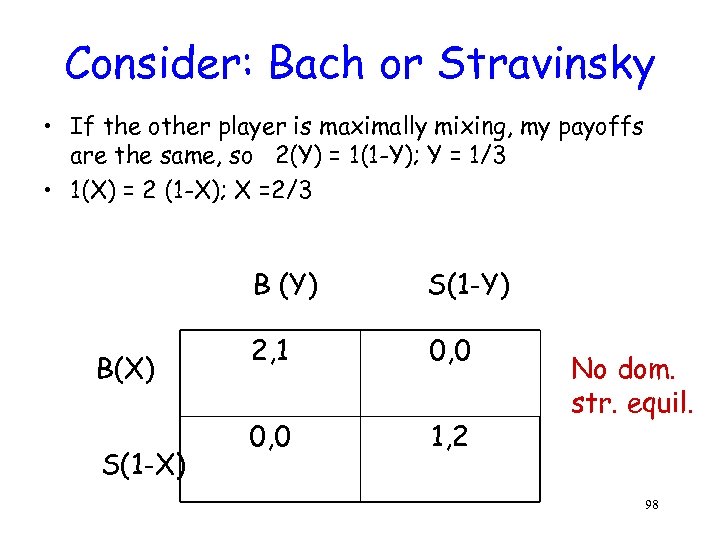

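Consider: Bach or Stravinsky

| Player 1 \ Player 2 | B (Y) | S (1 − Y) |
|---|---|---|
| B (X) | 2, 1 | 0, 0 |
| S (1 − X) | 0, 0 | 1, 2 |

• If the other player is optimally mixing, my payoffs from my two pure strategies are the same. For player 1: 2(Y) = 1(1 − Y); Y = 1/3 • For player 2: 1(X) = 2(1 − X); X = 2/3 • No dominant strategy equilibrium. 98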

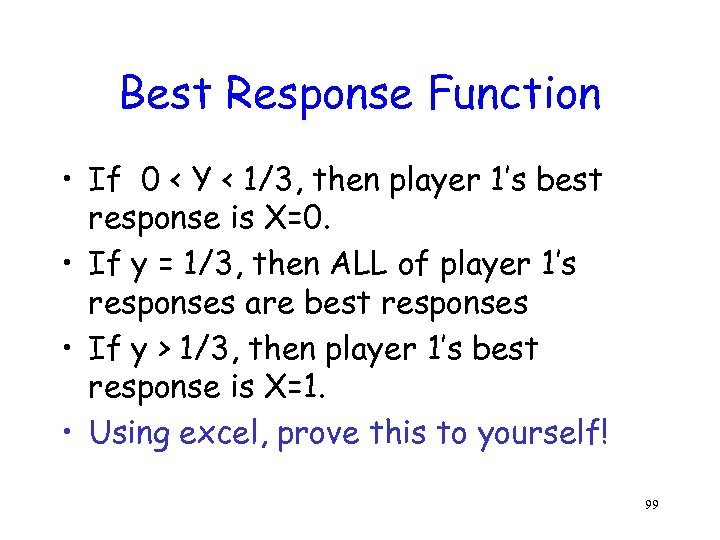

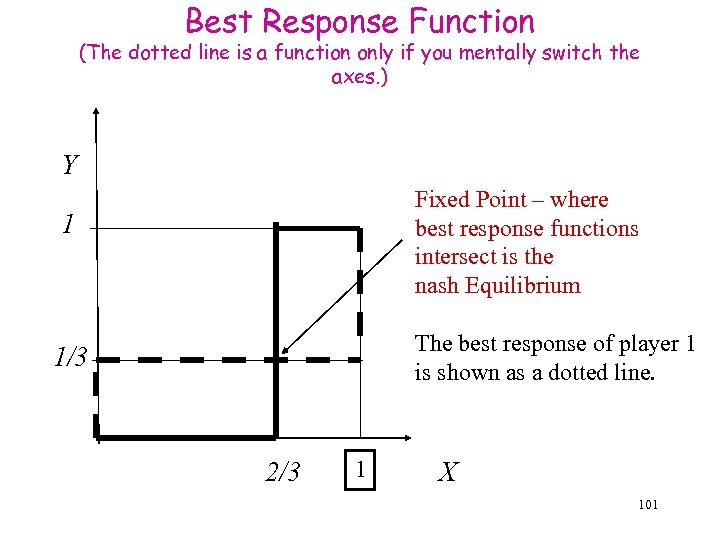

Best Response Function (X = probability player 1 chooses B, Y = probability player 2 chooses B) • If 0 ≤ Y < 1/3, then player 1’s best response is X = 0. • If Y = 1/3, then ALL of player 1’s responses are best responses. • If Y > 1/3, then player 1’s best response is X = 1. • Using Excel, check this for yourself! 99
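A few lines of Python can do the same check as a spreadsheet. Below is a sketch. It assumes X and Y are player 1's and player 2's probabilities of choosing B. It also searches a grid of sixths for points where both best-response conditions hold, i.e. the fixed points of the best-response map on that grid. The function names are ours.

```python
from fractions import Fraction


def is_best_response_1(x, y):
    """Is X = x a best response for player 1 when player 2 plays B with probability y?

    u1 = 2xy + (1 - x)(1 - y), so du1/dx = 3y - 1.
    """
    slope = 3 * y - 1
    if slope < 0:
        return x == 0
    if slope > 0:
        return x == 1
    return True  # y = 1/3: every x is a best response


def is_best_response_2(x, y):
    """Is Y = y a best response for player 2 when player 1 plays B with probability x?

    u2 = xy + 2(1 - x)(1 - y), so du2/dy = 3x - 2.
    """
    slope = 3 * x - 2
    if slope < 0:
        return y == 0
    if slope > 0:
        return y == 1
    return True  # x = 2/3: every y is a best response


grid = [Fraction(k, 6) for k in range(7)]
fixed_points = [(x, y) for x in grid for y in grid
                if is_best_response_1(x, y) and is_best_response_2(x, y)]
print(fixed_points)
```

The search finds the two pure equilibria, (0, 0) and (1, 1), and the mixed one, (2/3, 1/3).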

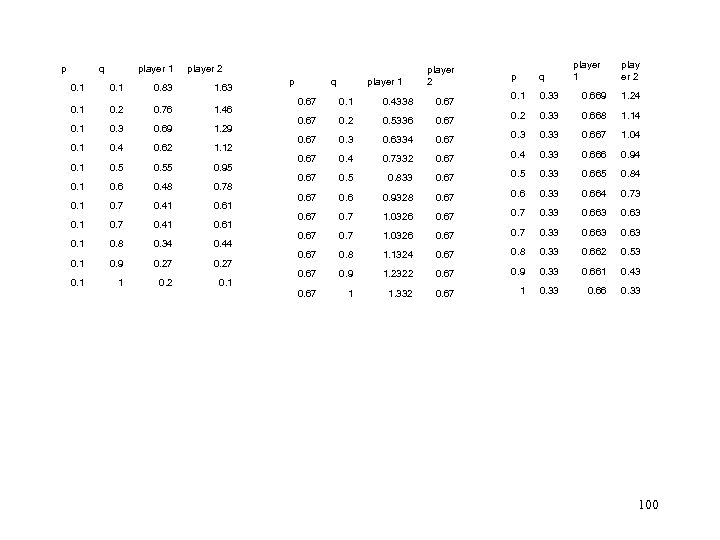

Spreadsheet check for Bach or Stravinsky (p = probability player 1 chooses B, q = probability player 2 chooses B; entries are expected payoffs)

p = 0.1:

| q | player 1 | player 2 |
|---|---|---|
| 0.1 | 0.83 | 1.63 |
| 0.2 | 0.76 | 1.46 |
| 0.3 | 0.69 | 1.29 |
| 0.4 | 0.62 | 1.12 |
| 0.5 | 0.55 | 0.95 |
| 0.6 | 0.48 | 0.78 |
| 0.7 | 0.41 | 0.61 |
| 0.8 | 0.34 | 0.44 |
| 0.9 | 0.27 | 0.27 |
| 1 | 0.2 | 0.1 |

p = 0.67:

| q | player 1 | player 2 |
|---|---|---|
| 0.1 | 0.4338 | 0.67 |
| 0.2 | 0.5336 | 0.67 |
| 0.3 | 0.6334 | 0.67 |
| 0.4 | 0.7332 | 0.67 |
| 0.5 | 0.833 | 0.67 |
| 0.6 | 0.9328 | 0.67 |
| 0.7 | 1.0326 | 0.67 |
| 0.8 | 1.1324 | 0.67 |
| 0.9 | 1.2322 | 0.67 |
| 1 | 1.332 | 0.67 |

q = 0.33:

| p | player 1 | player 2 |
|---|---|---|
| 0.1 | 0.669 | 1.24 |
| 0.2 | 0.668 | 1.14 |
| 0.3 | 0.667 | 1.04 |
| 0.4 | 0.666 | 0.94 |
| 0.5 | 0.665 | 0.84 |
| 0.6 | 0.664 | 0.73 |
| 0.7 | 0.663 | 0.63 |
| 0.8 | 0.662 | 0.53 |
| 0.9 | 0.661 | 0.43 |
| 1 | 0.66 | 0.33 |

100

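Best Response Function • [Figure: the best-response curves of both players in the unit square. Horizontal axis X, vertical axis Y, marked at 1/3, 2/3 and 1.] • The best response of player 1 is shown as a dotted line (it is a function only if you mentally switch the axes). • Fixed point: where the best response functions intersect is a Nash equilibrium. Here they intersect at (X, Y) = (0, 0), (2/3, 1/3) and (1, 1). 101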

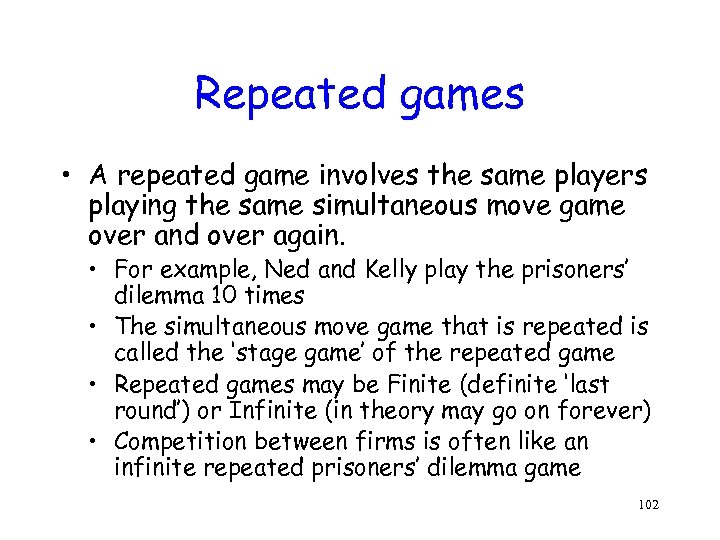

Repeated games • A repeated game involves the same players playing the same simultaneous move game over and over again. • For example, Ned and Kelly play the prisoners’ dilemma 10 times • The simultaneous move game that is repeated is called the ‘stage game’ of the repeated game • Repeated games may be finite (definite ‘last round’) or infinite (in theory may go on forever) • Competition between firms is often like an infinitely repeated prisoners’ dilemma game 102

Repeated Interaction • Review – Simultaneous games • Put yourself in your opponent’s shoes • Iterative reasoning • Outline: – What if interaction is repeated? – What strategies can lead players to cooperate? 103

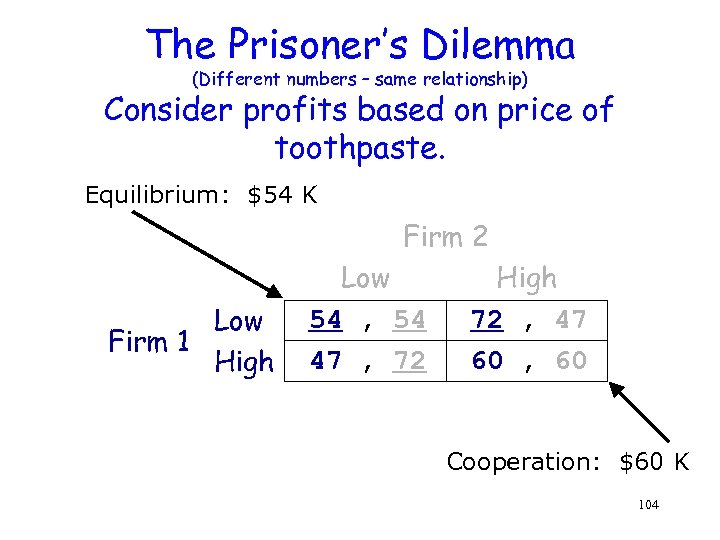

The Prisoner’s Dilemma (Different numbers – same relationship) Consider profits based on the price of toothpaste (in $K; each cell lists Firm 1’s profit, Firm 2’s profit).

| Firm 1 \ Firm 2 | Low | High |
|---|---|---|
| Low | 54, 54 | 72, 47 |
| High | 47, 72 | 60, 60 |

Equilibrium: $54K • Cooperation: $60K 104

Prisoner’s Dilemma • Private rationality leads to collective irrationality • The equilibrium that arises from using dominant strategies is worse for every player than the outcome that would arise if every player used her dominated strategy instead • Goal: • To sustain the mutually beneficial cooperative outcome by overcoming the incentive to cheat (if you have agreed beforehand what you will do) 105
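
The dominant-strategy argument can be checked on the toothpaste profits from slide 104. This Python sketch reads that table in the usual way (undercutting a high-price rival earns 72, being undercut earns 47); the dictionary layout and the function name `dominant` are our own.

```python
# Profits in $K, keyed by (Firm 1 price, Firm 2 price) -> (Firm 1, Firm 2).
PAYOFFS = {
    ("Low", "Low"): (54, 54),
    ("Low", "High"): (72, 47),
    ("High", "Low"): (47, 72),
    ("High", "High"): (60, 60),
}
PRICES = ("Low", "High")

def dominant(firm):
    """Strictly dominant price for firm 0 (Firm 1) or 1 (Firm 2), or None."""
    def pay(mine, theirs):
        key = (mine, theirs) if firm == 0 else (theirs, mine)
        return PAYOFFS[key][firm]
    for s in PRICES:
        # s must beat every other price t against every rival price r
        if all(pay(s, r) > pay(t, r) for t in PRICES if t != s for r in PRICES):
            return s
    return None

d1, d2 = dominant(0), dominant(1)
other = {"Low": "High", "High": "Low"}
print(d1, d2, PAYOFFS[(d1, d2)])         # Low Low (54, 54)
print(PAYOFFS[(other[d1], other[d2])])   # (60, 60): better for both firms
```

Both firms' dominant choice is Low, yet the profile of dominated choices (High, High) pays each firm more: private rationality, collective irrationality.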

Moving Beyond the Prisoner’s Dilemma • Why does the dilemma occur? – Interaction • No fear of punishment • Short-term or myopic play – Firms: • Lack of monopoly power: a firm can’t force others to pick the cooperative choice. • Homogeneity in products and costs: if all products are the same, customers can easily buy from a different firm. • Overcapacity: a firm that can produce more without increasing its costs has a stronger incentive to cut its price and sell more. • Incentives for profit or market share: a firm desperate to gain market share may accept a lower payoff initially (the Wal-Mart strategy). 106

Moving Beyond the Prisoner’s Dilemma • Why does the dilemma occur? – Consumers • Price sensitive: they want the cheaper product regardless of quality. • Price aware: they know the real value and are unwilling to pay more. • Low switching costs: they can switch between brands easily as prices fluctuate. 107

Solution: Altering Interaction • Interaction – No fear of punishment: exploit repeated play – Short-term or myopic play: introduce repeated encounters; introduce uncertainty (players are not sure when the interaction will end) 108

Finite Interaction (Silly Theoretical Trickery) • Suppose the market relationship lasts for only T periods • Use backward induction (rollback) • Period T: no incentive to cooperate • No future loss to worry about in the last period • Period T−1: no incentive to cooperate • No cooperation in period T in any case • So there is no opportunity cost to cheating in period T−1 • Unraveling: the logic goes back to period 1 109
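
Applying the unraveling argument to the toothpaste game from slide 104 for a fixed, known number of periods T gives the sketch below. It assumes no discounting and solves each period with later play already fixed, which is the rollback logic of this slide; the names `pure_nash` and `rollback` are ours.

```python
# Toothpaste profits in $K from slide 104: (Firm 1 price, Firm 2 price) -> (Firm 1, Firm 2).
PAYOFFS = {
    ("Low", "Low"): (54, 54),
    ("Low", "High"): (72, 47),
    ("High", "Low"): (47, 72),
    ("High", "High"): (60, 60),
}
PRICES = ("Low", "High")

def pure_nash(game):
    """Pure-strategy Nash equilibria of a two-firm game given as a payoff dict."""
    return [(a, b) for a in PRICES for b in PRICES
            if all(game[(a, b)][0] >= game[(x, b)][0] for x in PRICES)
            and all(game[(a, b)][1] >= game[(a, y)][1] for y in PRICES)]

def rollback(T):
    """Backward induction for the stage game repeated T times (no discounting).

    Returns the price pair played in each period (1..T) and each firm's total profit.
    """
    continuation = (0, 0)   # total profit from the later periods already solved
    plan = []
    for _ in range(T):      # period T first, then T-1, ..., down to period 1
        # Later play is already fixed, so the continuation value is the same
        # whatever happens now: adding it to every cell cannot change choices.
        game = {k: (v[0] + continuation[0], v[1] + continuation[1])
                for k, v in PAYOFFS.items()}
        (profile,) = pure_nash(game)   # exactly one equilibrium in each period
        plan.append(profile)
        continuation = game[profile]
    plan.reverse()
    return plan, continuation

plan, totals = rollback(10)
print(set(plan), totals)   # {('Low', 'Low')} (540, 540)
```

Whatever T is, every period unravels to (Low, Low), so cooperation never starts.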

Finite Interaction • Cooperation is impossible if the relationship between players is for a fixed and known length of time. • But people think forward (what will my opponent do?) if … – Game length uncertain – Game length unknown – Game length too long to think through to the end 110

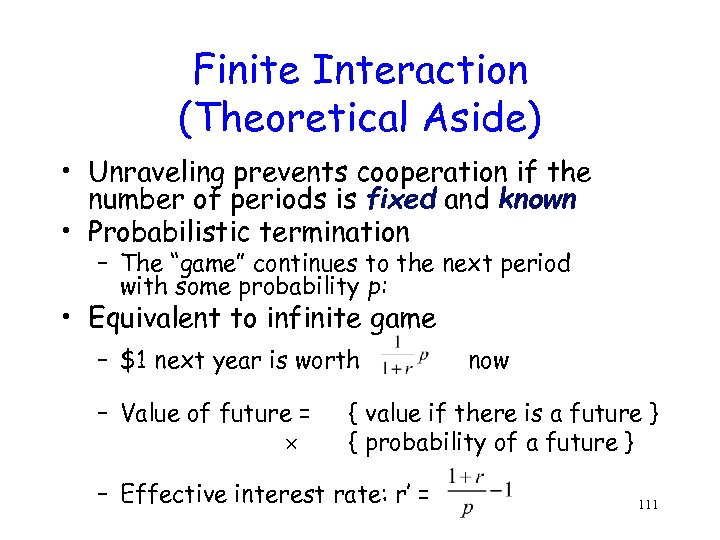

Finite Interaction (Theoretical Aside) • Unraveling prevents cooperation if the number of periods is fixed and known • Probabilistic termination – The “game” continues to the next period with some probability p: • Equivalent to an infinite game – $1 next year is worth \(\frac{p}{1+r}\) today, where r is the interest rate – Value of future now = {value if there is a future} × {probability of a future} – Effective interest rate: \(r' = \frac{1+r}{p} - 1 = \frac{1+r-p}{p}\) 111
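
A quick numerical check of the probabilistic-termination argument, assuming an illustrative interest rate r = 0.05 and continuation probability p = 0.9 (these numbers and the function names are ours, not from the slides). Discounting each period by p/(1 + r) is the same as discounting at the effective rate r′ = (1 + r)/p − 1, so a long stream of $1 per period is worth about 1/r′.

```python
def effective_rate(r, p):
    """Effective per-period rate when $1 next period is worth p / (1 + r) today."""
    return (1 + r) / p - 1

def pv_of_stream(amount, r, p, periods):
    """Present value of `amount` in each of the next `periods` periods,
    each reached only if the game is still going (probability p per step)."""
    factor = p / (1 + r)
    return sum(amount * factor ** t for t in range(1, periods + 1))

r, p = 0.05, 0.9                  # illustrative values only
r_eff = effective_rate(r, p)
print(round(r_eff, 4))            # 0.1667
# A long truncated sum approaches the perpetuity value amount / r_eff.
print(round(pv_of_stream(1, r, p, 500), 4), round(1 / r_eff, 4))   # 6.0 6.0
```

A 10% chance of the game ending each period raises the effective rate from 5% to about 16.7%, so the future counts for less.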

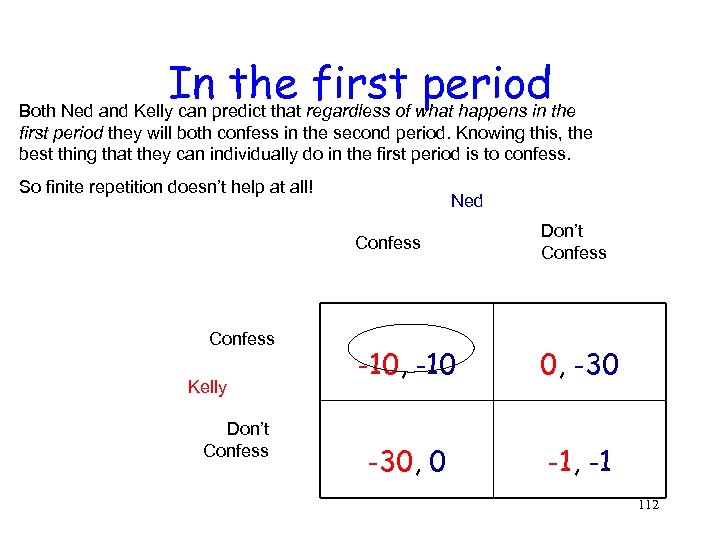

In the twice-repeated game, both Ned and Kelly can predict that, regardless of what happens in the first period, they will both confess in the second period. Knowing this, the best thing that they can individually do in the first period is to confess. So finite repetition doesn’t help at all! (Each cell lists Ned’s payoff, Kelly’s payoff.)

| Ned \ Kelly | Confess | Don’t Confess |
|---|---|---|
| Confess | -10, -10 | 0, -30 |
| Don’t Confess | -30, 0 | -1, -1 |

112

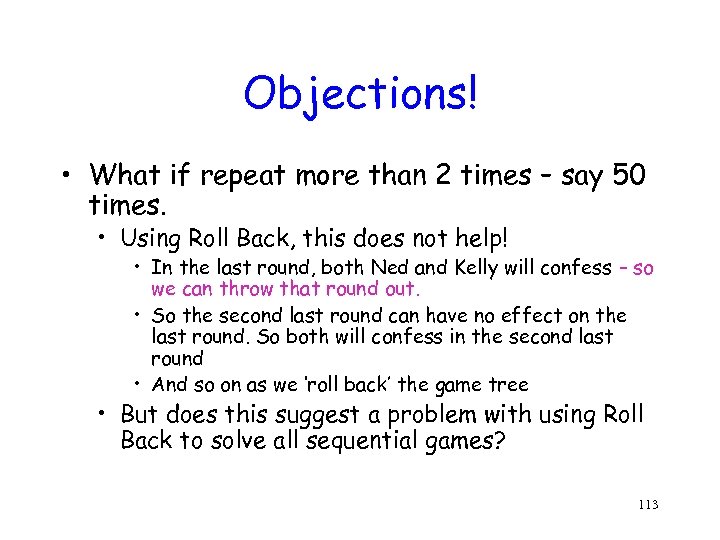

Objections! • What if the game is repeated more than 2 times, say 50 times? • Using roll back, this does not help! • In the last round, both Ned and Kelly will confess, so we can throw that round out. • Play in the second-to-last round then has no effect on the last round, so both will confess in the second-to-last round too • And so on as we ‘roll back’ the game tree • But does this suggest a problem with using roll back to solve all sequential games? 113

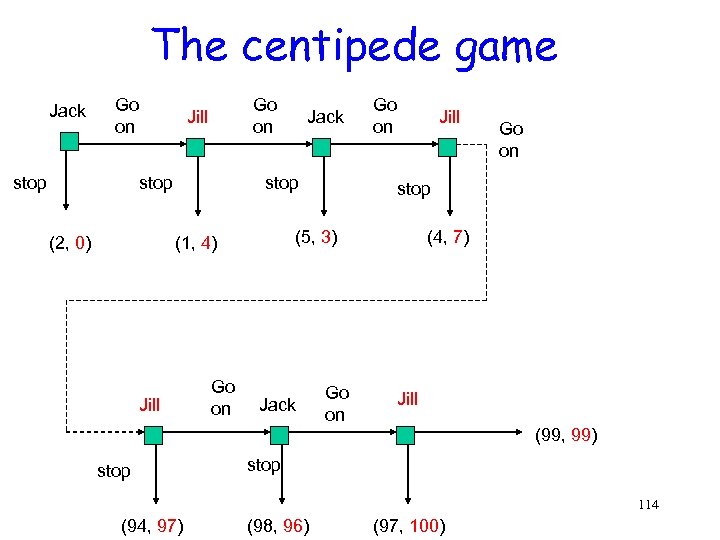

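The centipede game [Figure: game tree. Jack and Jill alternate, each choosing “go on” or “stop”. Payoffs (Jack, Jill) when the mover stops: Jack (2, 0), Jill (1, 4), Jack (5, 3), Jill (4, 7), …, Jill (94, 97), Jack (98, 96), Jill (97, 100); if Jill goes on at the final node the payoffs are (99, 99).] 114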
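
Rollback on the centipede game can be run mechanically. The sketch rebuilds the tree under an assumption: the elided middle of the figure continues the visible pattern, with each player's stopping payoff rising by 3 from one of their nodes to the next. That gives 66 decision nodes ending with Jack (98, 96), Jill (97, 100) and (99, 99) if Jill goes on. The function names are ours.

```python
def centipede():
    """ASSUMED tree: alternating movers, stopping payoffs (Jack, Jill) rising by 3 per round."""
    nodes = []
    for k in range(33):
        nodes.append(("Jack", (2 + 3 * k, 3 * k)))       # (2, 0), (5, 3), ..., (98, 96)
        nodes.append(("Jill", (1 + 3 * k, 4 + 3 * k)))   # (1, 4), (4, 7), ..., (97, 100)
    return nodes, (99, 99)   # payoff if Jill goes on at the last node

def rollback(nodes, end):
    """Solve from the last node backwards; return (node where play stops, payoff)."""
    value, stop_at = end, None
    for i in range(len(nodes) - 1, -1, -1):
        mover, stop_payoff = nodes[i]
        me = 0 if mover == "Jack" else 1
        if stop_payoff[me] > value[me]:   # stopping beats what going on would give
            value, stop_at = stop_payoff, i
    return stop_at, value

nodes, end = centipede()
print(len(nodes), rollback(nodes, end))   # 66 (0, (2, 0))
```

At every node the mover gains 1 by stopping rather than letting the other player stop next, so the rollback path ends at node 0 with Jack stopping.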

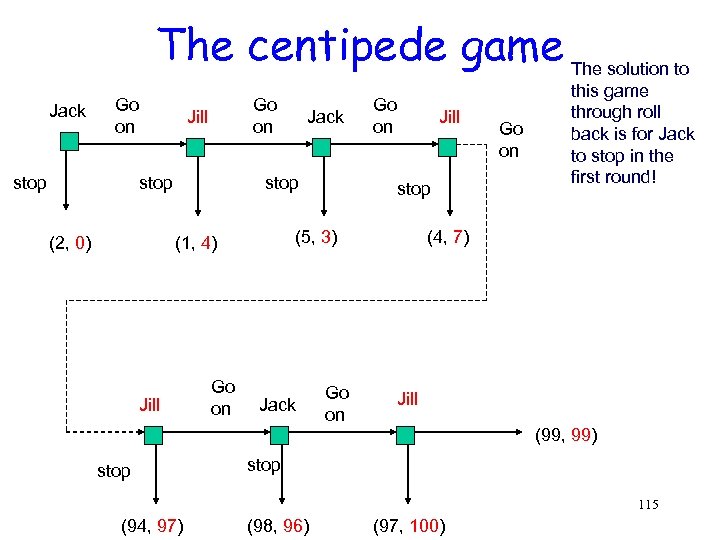

The centipede game • The solution to this game through roll back is for Jack to stop in the first round! [Figure: the centipede game tree from slide 114.] 115

The centipede game • What actually happens? • In experiments the game usually continues for at least a few rounds and occasionally goes close to the end. • But going all the way to the (99, 99) payoff almost never happens: at some stage of the game ‘cooperation’ breaks down. • So we still do not get sustained cooperation, even if we move away from ‘roll back’ as a solution 116

Lessons from finite repeated games – Finite repetition often does not help players to reach better solutions – Often the outcome of the finitely repeated game is simply the one-shot Nash equilibrium repeated again and again. – There are SOME repeated games where finite repetition can create new equilibrium outcomes. But these games tend to have special properties – For a large number of repetitions, there are some games where the Nash equilibrium logic breaks down in practice. 117

Infinitely repeated games • In ‘real life’, there are many times when you do not know for sure that this is the ‘last round’ of the game • When firms interact there is always a chance that they will interact again in the future. So repeated competition is more like an infinitely repeated game. 118

Long-Term Interaction • No last period, so no rollback • Use history-dependent strategies • Trigger strategies: • Begin by cooperating • Cooperate as long as the rivals do • Upon observing a defection: immediately revert to a period of punishment of specified length in which everyone plays non-cooperatively 119

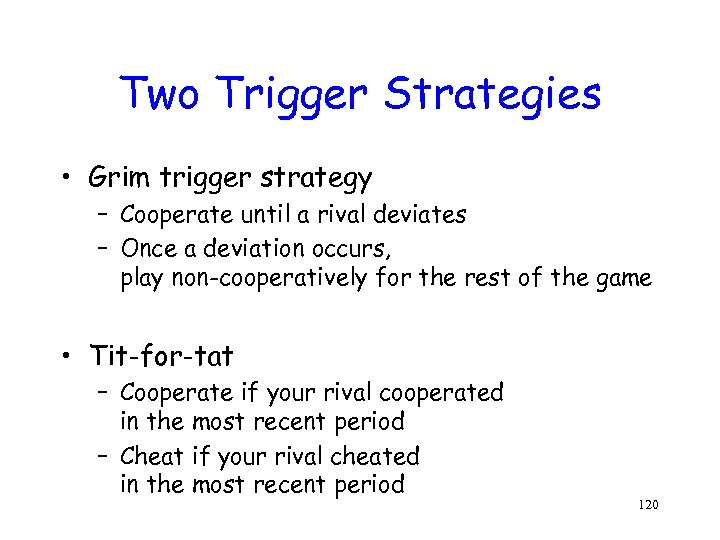

Two Trigger Strategies • Grim trigger strategy – Cooperate until a rival deviates – Once a deviation occurs, play non-cooperatively for the rest of the game • Tit-for-tat – Cooperate if your rival cooperated in the most recent period – Cheat if your rival cheated in the most recent period 120
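
The two trigger strategies differ in how much of the rival's history they act on. A minimal Python sketch, assuming cooperation means pricing High and cheating means pricing Low as in the toothpaste game; the function names are ours. It shows how each strategy responds to a rival who cheats once and then goes back to cooperating.

```python
from typing import Callable, List

COOPERATE, DEFECT = "High", "Low"   # cooperate = high price, cheat = low price

def grim_trigger(rival_history: List[str]) -> str:
    """Cooperate until the rival has ever cheated; after that, cheat forever."""
    return DEFECT if DEFECT in rival_history else COOPERATE

def tit_for_tat(rival_history: List[str]) -> str:
    """Cooperate in the first period, then copy the rival's most recent move."""
    return rival_history[-1] if rival_history else COOPERATE

def respond(strategy: Callable[[List[str]], str], rival_moves: List[str]) -> List[str]:
    """Moves the strategy makes in each period against a fixed sequence of rival moves."""
    return [strategy(rival_moves[:t]) for t in range(len(rival_moves))]

one_time_cheat = [DEFECT, COOPERATE, COOPERATE, COOPERATE]
print(respond(grim_trigger, one_time_cheat))  # ['High', 'Low', 'Low', 'Low']
print(respond(tit_for_tat, one_time_cheat))   # ['High', 'Low', 'High', 'High']
```

Grim trigger never forgives the single deviation, while tit-for-tat punishes once and returns to cooperation as soon as the rival does.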

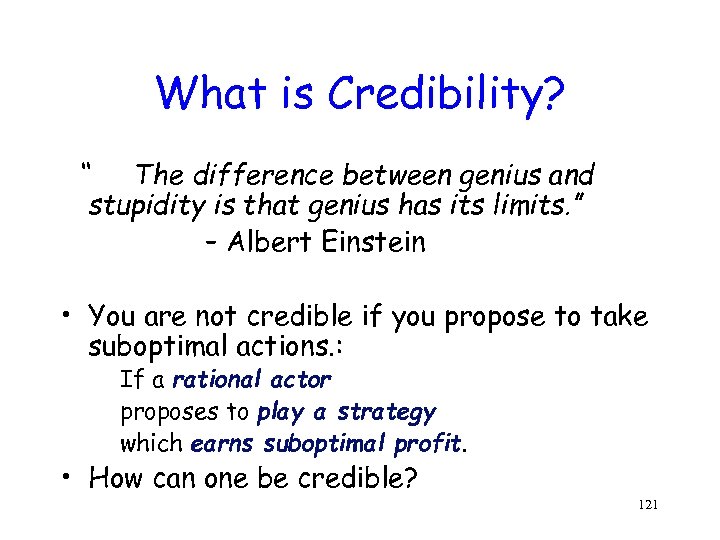

What is Credibility? “The difference between genius and stupidity is that genius has its limits.” – Albert Einstein • You are not credible if you propose to take suboptimal actions, that is, if you are a rational actor proposing to play a strategy that earns suboptimal profit. • How can one be credible? 121

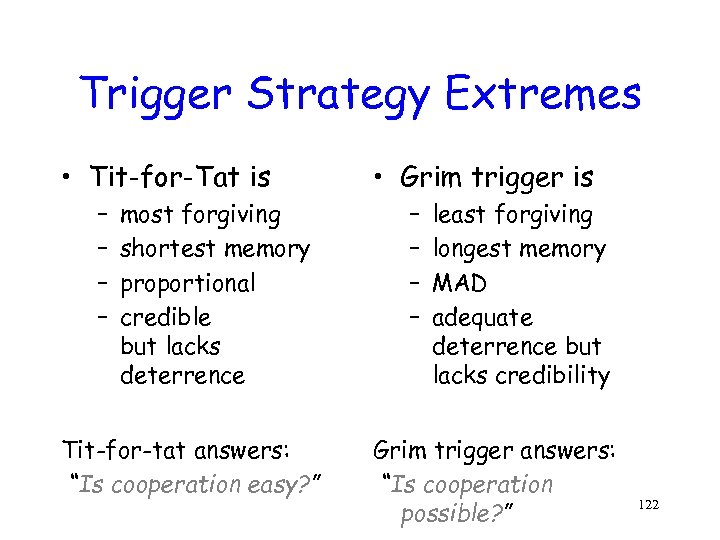

Trigger Strategy Extremes • Tit-for-tat is: – most forgiving – shortest memory – proportional – credible but lacks deterrence • Tit-for-tat answers: “Is cooperation easy?” • Grim trigger is: – least forgiving – longest memory – MAD (mutually assured destruction) – adequate deterrence but lacks credibility • Grim trigger answers: “Is cooperation possible?” 122

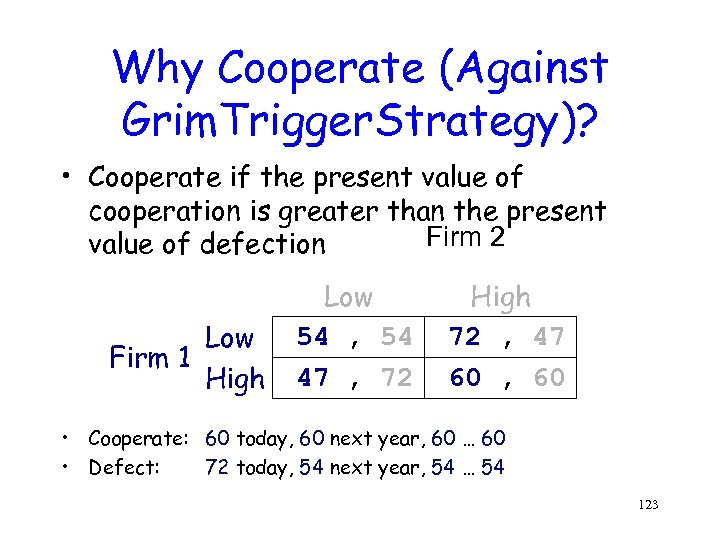

Why Cooperate (Against Grim Trigger Strategy)? • Cooperate if the present value of cooperation is greater than the present value of defection (profits in $K; each cell lists Firm 1’s profit, Firm 2’s profit):

| Firm 1 \ Firm 2 | Low | High |
|---|---|---|
| Low | 54, 54 | 72, 47 |
| High | 47, 72 | 60, 60 |

• Cooperate: 60 today, 60 next year, 60 … 60 • Defect: 72 today, 54 next year, 54 … 54 123

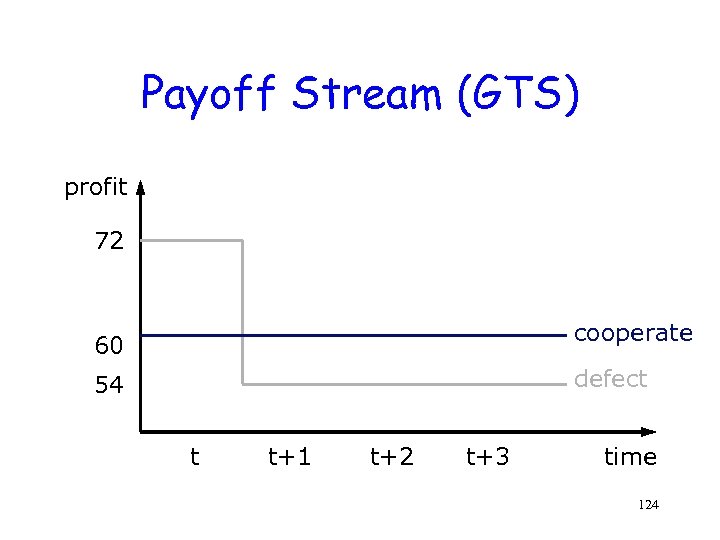

Payoff Stream (GTS) [Figure: profit over time (t, t+1, t+2, t+3) against a grim trigger rival. Cooperate: 60 in every period. Defect: 72 at t, then 54 from t+1 on.] 124

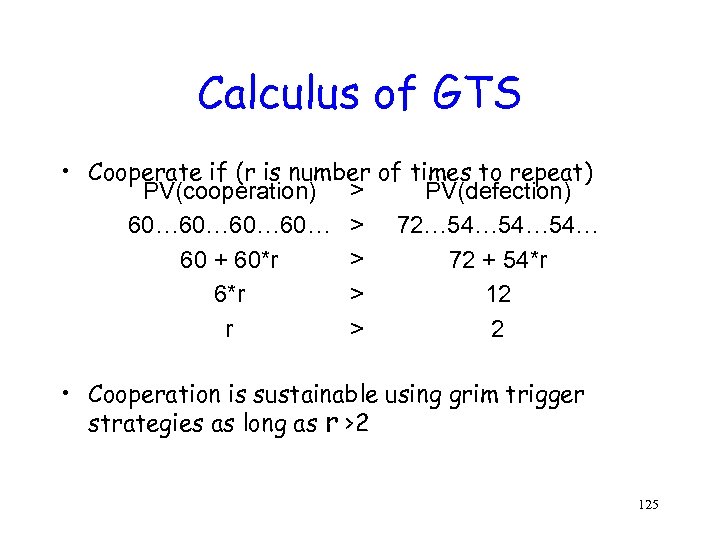

Calculus of GTS • Cooperate if PV(cooperation) > PV(defection), where r is the number of times the game is repeated after the current period: 60 + 60 + … > 72 + 54 + 54 + …, that is, \(60 + 60r > 72 + 54r \;\Rightarrow\; 6r > 12 \;\Rightarrow\; r > 2\) • Cooperation is sustainable using grim trigger strategies as long as r > 2 125
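
The undiscounted comparison on this slide can be tabulated for small r. This sketch follows the slide exactly (r counts the repetitions after today, with no interest or discounting); the function names are ours.

```python
def pv_cooperate(r):
    """60 today plus 60 in each of the r later periods (undiscounted, as on the slide)."""
    return 60 + 60 * r

def pv_defect(r):
    """72 today from undercutting, then 54 in each of the r punishment periods."""
    return 72 + 54 * r

for r in range(5):
    c, d = pv_cooperate(r), pv_defect(r)
    verdict = "cooperate" if c > d else "indifferent" if c == d else "defect"
    print(r, c, d, verdict)
# 0 60 72 defect
# 1 120 126 defect
# 2 180 180 indifferent
# 3 240 234 cooperate
# 4 300 288 cooperate
```

At r = 2 the one-time gain of 12 is exactly offset by two periods of losing 6, so cooperation strictly pays only from r = 3 on.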

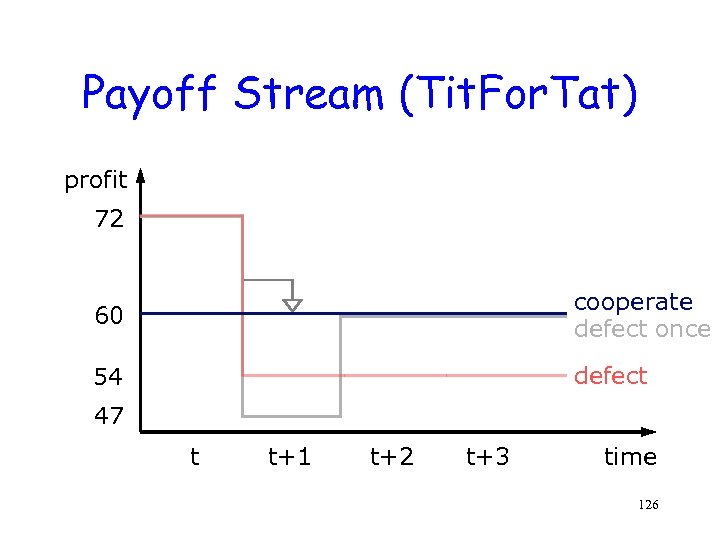

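Payoff Stream (Tit-for-Tat) [Figure: profit over time (t, t+1, t+2, t+3) against a tit-for-tat rival for three choices: cooperate, defect once, and defect; the profit levels marked are 72, 60, 54 and 47.] 126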
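
The three payoff streams can be regenerated by playing against a tit-for-tat rival. This sketch assumes the toothpaste profits from slide 104 and a rival who starts with High and then copies your previous price; "defect once" means pricing Low at t and High afterwards. Names are ours.

```python
# My profit in $K for (my price, rival's price), from slide 104.
MY_PROFIT = {("Low", "Low"): 54, ("Low", "High"): 72,
             ("High", "Low"): 47, ("High", "High"): 60}

def tit_for_tat(opponent_history):
    """Rival's move: High (cooperate) first, then copy my previous move."""
    return opponent_history[-1] if opponent_history else "High"

def stream(my_moves):
    """My profit in each period when the rival plays tit-for-tat."""
    return [MY_PROFIT[(mine, tit_for_tat(my_moves[:t]))]
            for t, mine in enumerate(my_moves)]

print(stream(["High"] * 4))                     # [60, 60, 60, 60]  cooperate
print(stream(["Low", "High", "High", "High"]))  # [72, 47, 60, 60]  defect once
print(stream(["Low"] * 4))                      # [72, 54, 54, 54]  defect
```

Defecting once costs only a single period at 47 before profits return to 60, which is why tit-for-tat's punishment is mild.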

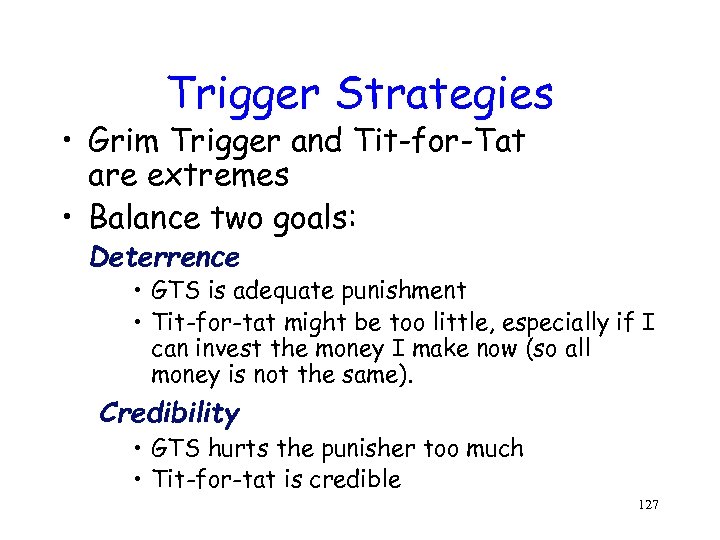

Trigger Strategies • Grim trigger and tit-for-tat are extremes • Balance two goals: – Deterrence: GTS is adequate punishment; tit-for-tat might be too little, especially if I can invest the money I make now (so money now is worth more than the same money later). – Credibility: GTS hurts the punisher too much; tit-for-tat is credible 127

Optimal Punishment COMMANDMENT In announcing a punishment strategy: Punish enough to deter your opponent. Temper punishment to remain credible. 128
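
One way to make "punish enough, but no more" concrete for the toothpaste game is to find the shortest punishment phase whose lost profit outweighs the one-period gain from cheating. This is a sketch under our own assumptions: during a k-period punishment both firms price Low (so the cheater earns 54 instead of 60), cooperation resumes afterwards, and future profit is weighted by a hypothetical discount factor `delta`, echoing slide 127's remark about investing money made now. With `delta = 1` the answer, 3 periods, matches the "more than 2" threshold of slide 125.

```python
def min_punishment_length(delta, gain=72 - 60, loss=60 - 54, max_len=1000):
    """Shortest punishment (in periods) whose discounted loss exceeds the one-shot gain.

    gain:  extra profit from undercutting once (72 instead of 60).
    loss:  profit given up in each punishment period (54 instead of 60).
    delta: ASSUMED per-period discount factor (1.0 means no discounting).
    Returns None if no length up to max_len deters.
    """
    total = 0.0
    for k in range(1, max_len + 1):
        total += loss * delta ** k
        if total > gain:
            return k
    return None

print(min_punishment_length(1.0))   # 3
print(min_punishment_length(0.8))   # 4
print(min_punishment_length(0.5))   # None
```

The shortest deterring length is the tempered punishment: anything longer hurts the punisher without adding deterrence, and when the future is discounted heavily enough no finite punishment deters at all.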
