Challenges in Performance Evaluation and Improvement of Scientific Codes

Boyana Norris, Argonne National Laboratory, http://www.mcs.anl.gov/~norris
Ivana Veljkovic, Pennsylvania State University

SIAM CSE, February 13, 2005

Outline
- Performance evaluation challenges
- Component-based approach
- Motivating example: adaptive linear system solution
- A component infrastructure for performance monitoring and adaptation of applications
- Summary and future work

Acknowledgments
- Ivana Veljkovic, Padma Raghavan (Penn State)
- Sanjukta Bhowmick (ANL/Columbia)
- Lois Curfman McInnes (ANL)
- TAU developers (U. Oregon)
- PERC members
- Sponsors: DOE and NSF

Challenges in performance evaluation
+ Many tools for performance data gathering and analysis
  - PAPI, TAU, SvPablo, Kojak, …
  - Various interfaces, levels of automation, and approaches to information presentation
User’s point of view
- What do the different tools do? Which is most appropriate for a given application?
- (How) can multiple tools be used in concert?
- I have tons of performance data, now what?
- What automatic tuning tools are available, and what exactly do they do?
- How hard is it to install/learn/use tool X?
- Is instrumented code portable? What’s the overhead of instrumentation? How does code evolution affect the performance analysis process?

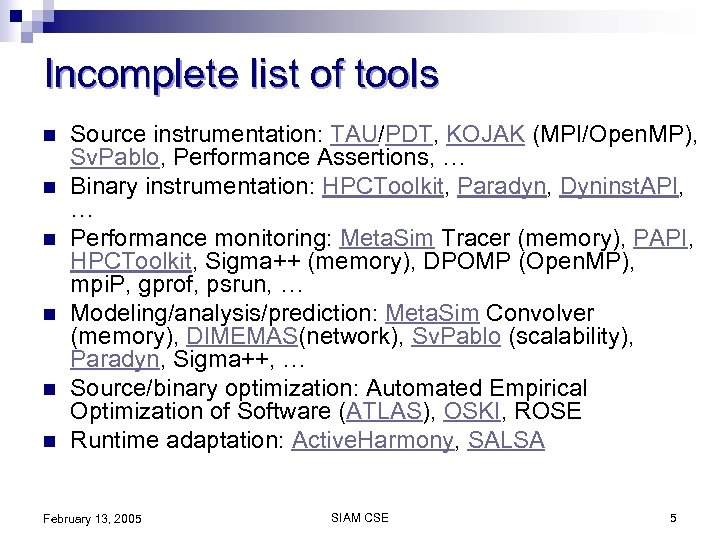

Incomplete list of tools
- Source instrumentation: TAU/PDT, KOJAK (MPI/OpenMP), SvPablo, Performance Assertions, …
- Binary instrumentation: HPCToolkit, Paradyn, DyninstAPI, …
- Performance monitoring: MetaSim Tracer (memory), PAPI, HPCToolkit, Sigma++ (memory), DPOMP (OpenMP), mpiP, gprof, psrun, …
- Modeling/analysis/prediction: MetaSim Convolver (memory), DIMEMAS (network), SvPablo (scalability), Paradyn, Sigma++, …
- Source/binary optimization: Automated Empirical Optimization of Software (ATLAS), OSKI, ROSE
- Runtime adaptation: ActiveHarmony, SALSA

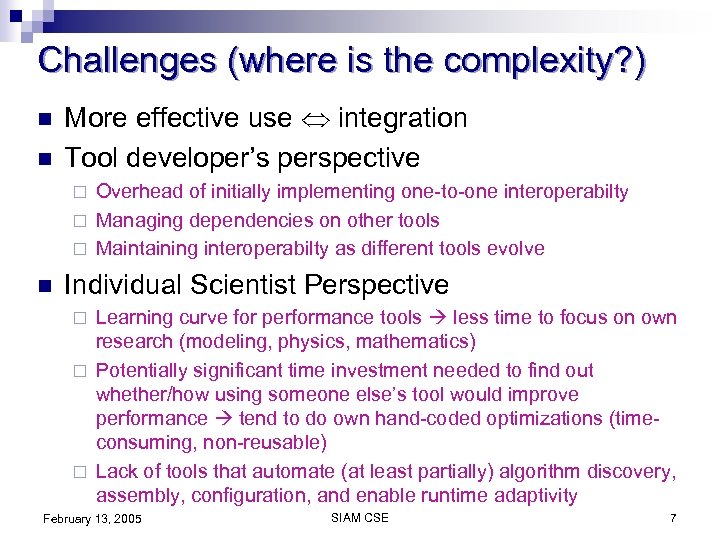

Challenges (where is the complexity?)
- More effective use requires integration
- Tool developer’s perspective
  - Overhead of initially implementing one-to-one interoperability
  - Managing dependencies on other tools
  - Maintaining interoperability as different tools evolve
- Individual scientist’s perspective
  - Learning curve for performance tools leaves less time to focus on own research (modeling, physics, mathematics)
  - Potentially significant time investment needed to find out whether/how using someone else’s tool would improve performance, so scientists tend to do their own hand-coded optimizations (time-consuming, non-reusable)
  - Lack of tools that automate (at least partially) algorithm discovery, assembly, and configuration, and that enable runtime adaptivity

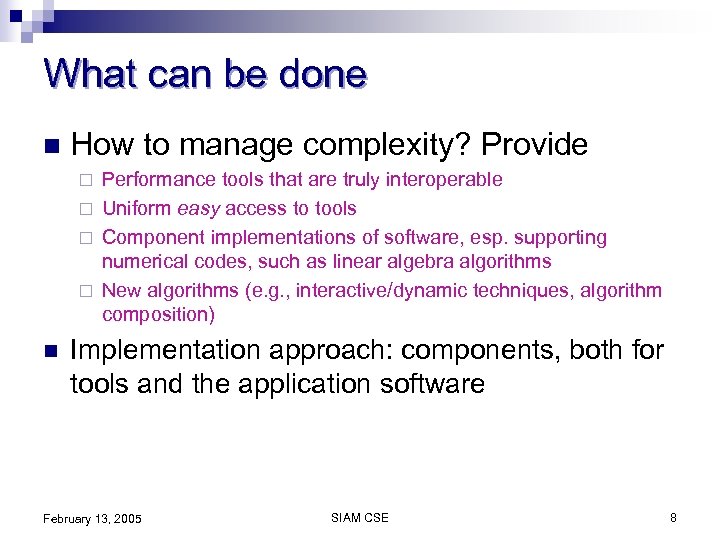

What can be done
- How to manage complexity? Provide
  - Performance tools that are truly interoperable
  - Uniform, easy access to tools
  - Component implementations of software, especially supporting numerical codes such as linear algebra algorithms
  - New algorithms (e.g., interactive/dynamic techniques, algorithm composition)
- Implementation approach: components, both for tools and for the application software

What is being done
- No “integrated” environment for performance monitoring, analysis, and optimization
- Most past efforts
  - One-to-one tool interoperability
- More recently
  - OSPAT (initial meeting at SC’04), focus on common data representation and interfaces
  - Tool-independent performance databases: PerfDMF
  - Eclipse parallel tools project (LANL)
  - …

OSPAT
- The following areas were recommended for OSPAT to investigate:
  - A common instrumentation API for source-level, compiler-level, library-level, and binary instrumentation
  - A common probe interface for routine entry and exit events
  - A common profile database schema
  - An API to walk the call stack and examine the heap memory
  - A common API for thread creation and fork interface
  - Visualization components for drawing histograms and hierarchical displays typically used by performance tools
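
To make the idea of a common probe interface for routine entry and exit events more concrete, here is a minimal sketch in Python. It is not an OSPAT interface: the `Probe` class, its `enter`/`exit`/`instrument` methods, and the event tuple layout are all hypothetical names chosen for illustration. The point is only that any tool could subscribe to the same stream of events.

```python
import functools
import time
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical event record: (kind, routine name, timestamp in seconds).
Event = Tuple[str, str, float]


@dataclass
class Probe:
    """Illustrative probe that forwards entry/exit events to listeners."""
    listeners: List[Callable[[Event], None]] = field(default_factory=list)

    def enter(self, routine: str) -> None:
        self._emit(("enter", routine, time.perf_counter()))

    def exit(self, routine: str) -> None:
        self._emit(("exit", routine, time.perf_counter()))

    def _emit(self, event: Event) -> None:
        # Every registered tool sees the same event stream.
        for listener in self.listeners:
            listener(event)

    def instrument(self, func):
        """Wrap func so entry and exit events surround each call."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            self.enter(func.__name__)
            try:
                return func(*args, **kwargs)
            finally:
                # Emitted even if func raises, so traces stay balanced.
                self.exit(func.__name__)
        return wrapper


probe = Probe()
trace: List[Event] = []
probe.listeners.append(trace.append)  # a trivial "tool": record events


@probe.instrument
def solve(n):
    return sum(i * i for i in range(n))


result = solve(10)
```

A profiler, a tracer, and a database writer could each register a listener here without the instrumented routine knowing which tools are attached.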

Components
- Working definition: a component is a piece of software that can be composed with other components within a framework; composition can be either static (at link time) or dynamic (at run time)
  - “Plug-and-play” model for building applications
  - For more info: C. Szyperski, Component Software: Beyond Object-Oriented Programming, ACM Press, New York, 1998
- Components enable
  - Tool interoperability
  - Automation of performance instrumentation/monitoring
  - Application adaptivity (automated or user-guided)
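
The working definition above can be illustrated with a toy example of dynamic (run-time) composition. This is a sketch under stated assumptions, not a real component framework API: `Framework`, `provide`, `use`, and the port name `"LinearSolver"` are hypothetical, and the two solver components are textbook Jacobi and Gauss-Seidel iterations on a small dense system.

```python
class Framework:
    """Toy framework: a registry of named ports provided by components."""

    def __init__(self):
        self._ports = {}

    def provide(self, port_name, implementation):
        # Registering again under the same name swaps the component.
        self._ports[port_name] = implementation

    def use(self, port_name):
        return self._ports[port_name]


class JacobiSolver:
    name = "jacobi"

    def solve(self, a, b, iterations=50):
        n = len(b)
        x = [0.0] * n
        for _ in range(iterations):
            # Each new entry uses only values from the previous sweep.
            x = [
                (b[i] - sum(a[i][j] * x[j] for j in range(n) if j != i)) / a[i][i]
                for i in range(n)
            ]
        return x


class GaussSeidelSolver:
    name = "gauss-seidel"

    def solve(self, a, b, iterations=50):
        n = len(b)
        x = [0.0] * n
        for _ in range(iterations):
            for i in range(n):
                # Updated entries are used immediately within the sweep.
                x[i] = (b[i] - sum(a[i][j] * x[j] for j in range(n) if j != i)) / a[i][i]
        return x


class Application:
    """Depends only on the port name, never on a concrete solver class."""

    def __init__(self, framework):
        self.framework = framework

    def run(self, a, b):
        solver = self.framework.use("LinearSolver")  # looked up per call
        return solver.name, solver.solve(a, b)


framework = Framework()
app = Application(framework)
a, b = [[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0]

framework.provide("LinearSolver", JacobiSolver())
first_name, first_x = app.run(a, b)

framework.provide("LinearSolver", GaussSeidelSolver())  # plug in another method
second_name, second_x = app.run(a, b)
```

Because the application looks up the port on every call, the solver can be replaced between calls without touching application code, which is the property that adaptive multimethod solvers rely on.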

Example: component infrastructure for multimethod linear solvers
- Goal: provide a framework for
  - Performance monitoring of numerical components
  - Dynamic adaptivity, based on:
    - Off-line analyses of past performance information
    - Online analysis of current execution performance information
- Motivating application examples:
  - Driven cavity flow [Coffey et al., 2003], nonlinear PDE solution
  - FUN3D: incompressible and compressible Euler equations
- Prior work in multimethod linear solvers
  - McInnes et al. ’03, Bhowmick et al. ’03 and ’05, Norris et al. ’05

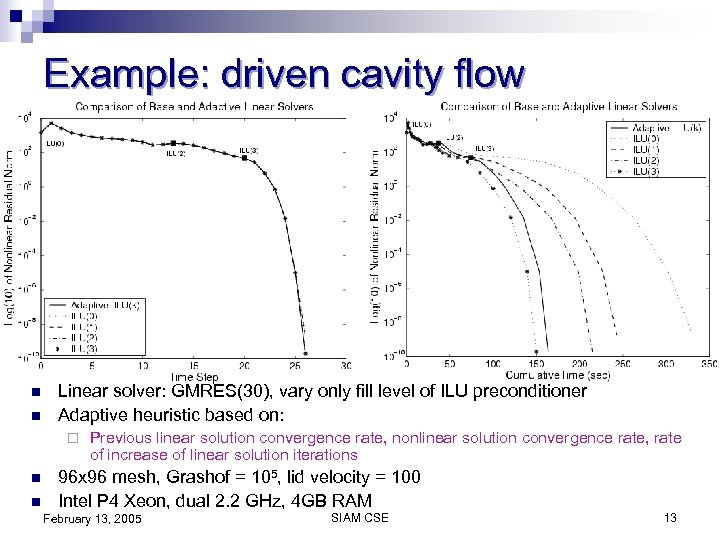

Example: driven cavity flow
- Linear solver: GMRES(30), vary only fill level of ILU preconditioner
- Adaptive heuristic based on:
  - Previous linear solution convergence rate, nonlinear solution convergence rate, rate of increase of linear solution iterations
- 96 x 96 mesh, Grashof = 105, lid velocity = 100
- Intel P4 Xeon, dual 2.2 GHz, 4 GB RAM
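
The slide names the three inputs of the adaptive heuristic but not its rule. The sketch below shows one way such inputs could drive the ILU fill level. The function name `next_fill_level`, every threshold, and the rule itself are assumptions made for illustration; this is not the heuristic used in the talk. Rates are taken here as ratios of successive residual norms, so smaller means faster convergence.

```python
def next_fill_level(level, linear_rate, nonlinear_rate, iteration_growth,
                    min_level=0, max_level=3):
    """Pick the ILU fill level for the next linear solve.

    linear_rate      -- residual reduction ratio of the previous linear solve
    nonlinear_rate   -- residual reduction ratio of the nonlinear iteration
    iteration_growth -- linear iterations in the last solve divided by the
                        count in the solve before it
    All thresholds below are hypothetical.
    """
    struggling = (
        linear_rate > 0.5          # linear solve converging slowly
        or iteration_growth > 1.5  # linear iteration counts climbing
        or nonlinear_rate >= 1.0   # nonlinear iteration stagnating
    )
    comfortable = (
        linear_rate < 0.1
        and iteration_growth <= 1.0
        and nonlinear_rate < 0.5
    )
    if struggling:
        level += 1   # stronger, more expensive preconditioner
    elif comfortable:
        level -= 1   # cheaper preconditioner is probably enough
    # Keep the level inside the allowed range.
    return max(min_level, min(max_level, level))


slow_case = next_fill_level(1, linear_rate=0.8, nonlinear_rate=0.5,
                            iteration_growth=1.0)
fast_case = next_fill_level(1, linear_rate=0.05, nonlinear_rate=0.3,
                            iteration_growth=0.9)
```

With these made-up thresholds, `slow_case` moves up one fill level and `fast_case` moves down one; inputs that fall between the two regimes leave the level unchanged.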

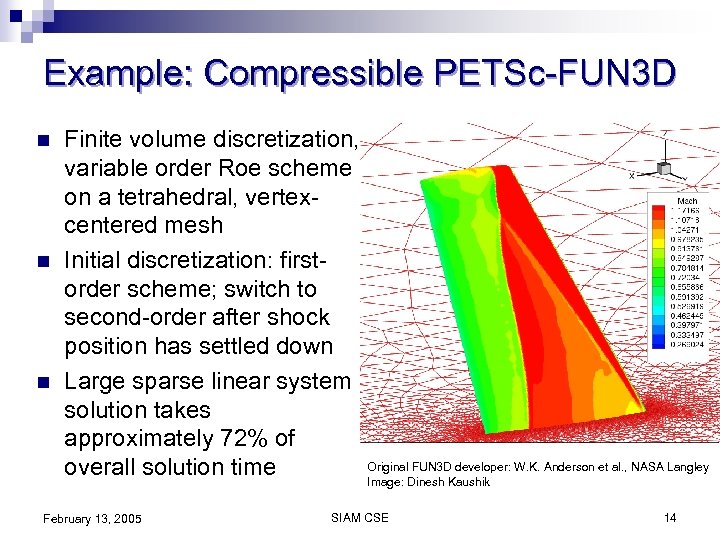

Example: Compressible PETSc-FUN3D
- Finite volume discretization, variable-order Roe scheme on a tetrahedral, vertex-centered mesh
- Initial discretization: first-order scheme; switch to second-order after shock position has settled down
- Large sparse linear system solution takes approximately 72% of overall solution time

Original FUN3D developer: W. K. Anderson et al., NASA Langley
Image: Dinesh Kaushik

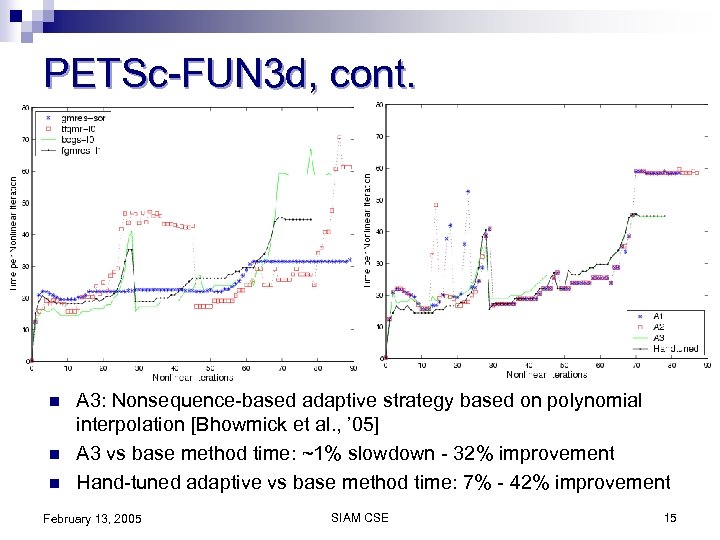

PETSc-FUN3D, cont.
- A3: Nonsequence-based adaptive strategy based on polynomial interpolation [Bhowmick et al., ’05]
- A3 vs. base method time: from ~1% slowdown to 32% improvement
- Hand-tuned adaptive vs. base method time: 7% to 42% improvement

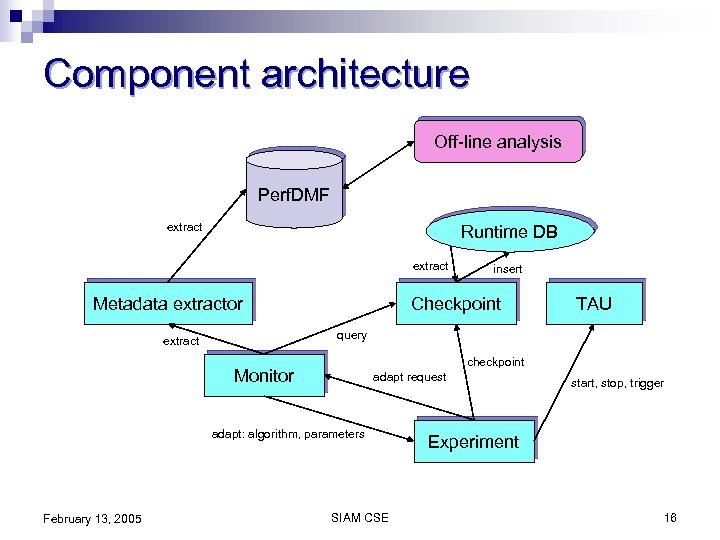

Component architecture
[Diagram. Components: Off-line analysis, PerfDMF, Runtime DB, Metadata extractor, Checkpoint, TAU, Monitor, Experiment. Connection labels: extract, query, insert, checkpoint, adapt request, adapt (algorithm, parameters), start/stop/trigger.]
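
The wiring of the diagram did not survive in the text, so the following is only a guess at how a monitor, a runtime database, and an adapt request might interact. The classes `RuntimeDB` and `Monitor`, the `slowdown` threshold, and the injected `clock` are hypothetical; the real infrastructure builds on TAU and PerfDMF, neither of which is modeled here.

```python
import time


class RuntimeDB:
    """Stand-in for the runtime database: an in-memory list of records."""

    def __init__(self):
        self.records = []

    def insert(self, record):
        self.records.append(record)

    def query(self, name):
        return [r for r in self.records if r["name"] == name]


class Monitor:
    """Times named regions, stores them, and requests adaptation on slowdown."""

    def __init__(self, db, adapt_request, slowdown=1.5, clock=time.perf_counter):
        self.db = db
        self.adapt_request = adapt_request  # callback taking the region name
        self.slowdown = slowdown            # hypothetical trigger threshold
        self.clock = clock
        self._started = {}

    def start(self, name):
        self._started[name] = self.clock()

    def stop(self, name):
        elapsed = self.clock() - self._started.pop(name)
        history = self.db.query(name)       # earlier measurements only
        self.db.insert({"name": name, "seconds": elapsed})
        if history:
            best = min(r["seconds"] for r in history)
            if elapsed > self.slowdown * best:
                self.adapt_request(name)    # ask for a new algorithm/parameters
        return elapsed


# Deterministic demonstration with a fake clock: the first region takes
# 1.0 s and the second takes 2.0 s, which exceeds 1.5 times the best.
ticks = iter([0.0, 1.0, 1.0, 3.0])
db = RuntimeDB()
requests = []
monitor = Monitor(db, requests.append, clock=lambda: next(ticks))
for _ in range(2):
    monitor.start("linear_solve")
    monitor.stop("linear_solve")
```

In this sketch the second measurement triggers a single adapt request for `"linear_solve"`; what the adaptation component does with that request (switching algorithm or parameters) is left out.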

Future work
- Integration of ongoing efforts in
  - Performance tools: common interfaces and data representation (leverage OSPAT, PerfDMF, TAU performance interfaces, and similar efforts)
  - Numerical components: emerging common interfaces (e.g., TOPS solver interfaces) increase the choice of solution method and enable automated composition and adaptation strategies
- Long term
  - Is a more organized (but not too restrictive) environment for scientific software lifecycle development possible/desirable?

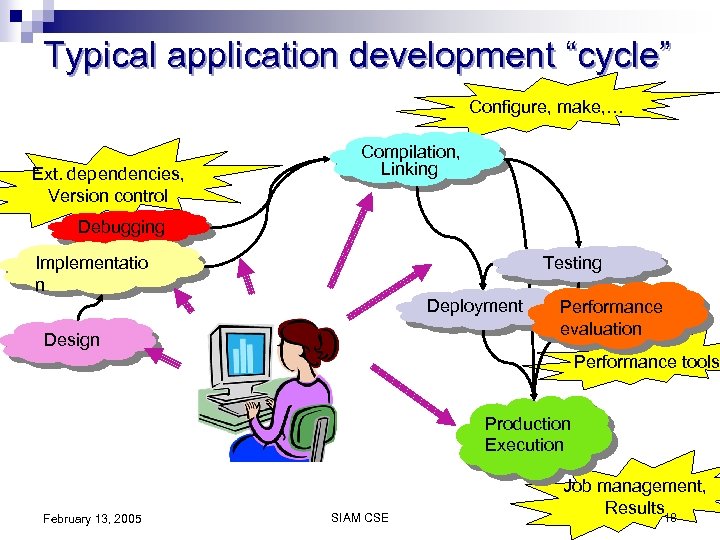

Typical application development “cycle”
[Diagram. Stages: Design, Implementation, Compilation/Linking, Debugging, Testing, Performance evaluation, Deployment, Production execution. Annotations: configure, make, …; external dependencies; version control; performance tools; job management; results.]

Future work
- Beyond components
  - Workflow
  - Reproducible results: associate all necessary information for reproducing a particular application instance
  - Ontology of tools, and tools to guide selection and use

Summary
- No shortage of performance evaluation, analysis, and optimization technology (and new capabilities are continuously added)
- Little shared infrastructure, limiting the utility of performance technology in scientific computing
- Components, both in performance tools and in numerical software, can be used to manage complexity and enable better performance through dynamic adaptation or multimethod solvers
- A life-cycle environment may be the best long-term solution
- Some relevant sites:
  - http://www.mcs.anl.gov/~norris
  - http://perc.nersc.gov (performance tools)
  - http://cca-forum.org (component specification)
