9766b454eb8d62e29d61a1f46d942a12.ppt

- Number of slides: 38

Ch 5 Mining Frequent Patterns, Associations, and Correlations

Dr. Bernard Chen, Ph.D.
University of Central Arkansas

Outline

- Association Rules with FP tree
- Misleading Rules
- Multi-level Association Rules

What Is Frequent Pattern Analysis?

- Frequent pattern: a pattern (a set of items, subsequences, substructures, etc.) that occurs frequently in a data set
- First proposed by Agrawal, Imielinski, and Swami [AIS93] in the context of frequent itemsets and association rule mining

What Is Frequent Pattern Analysis?

- Motivation: finding inherent regularities in data
  - What products were often purchased together? Bread and milk?
  - What are the subsequent purchases after buying a PC?
  - What kinds of DNA are sensitive to this new drug?
  - Can we automatically classify web documents?
- Applications
  - Basket data analysis, cross-marketing, catalog design, sale campaign analysis, Web log (click stream) analysis, and DNA sequence analysis

Association Rules

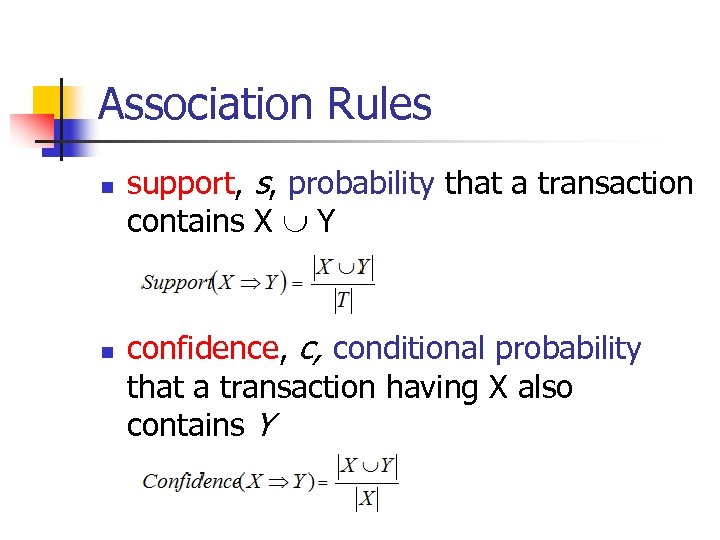

Association Rules

- support, s: probability that a transaction contains X ∪ Y
- confidence, c: conditional probability that a transaction having X also contains Y
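The two measures can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the toy baskets and the function names `support` and `confidence` are assumptions made up for the example.

```python
# Hypothetical toy baskets, invented for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(x, y, transactions):
    """Estimate P(Y | X) as support(X ∪ Y) / support(X)."""
    return support(set(x) | set(y), transactions) / support(x, transactions)

s = support({"bread", "milk"}, transactions)       # 2 of 4 baskets
c = confidence({"bread"}, {"milk"}, transactions)  # 2 of the 3 bread baskets
print(s, round(c, 3))
```

Note that support is symmetric in X and Y, while confidence is not: swapping the two sides of a rule changes the denominator.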

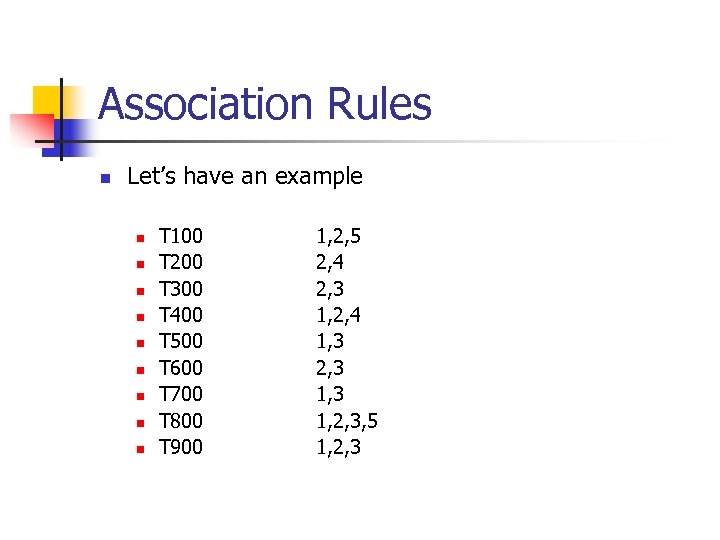

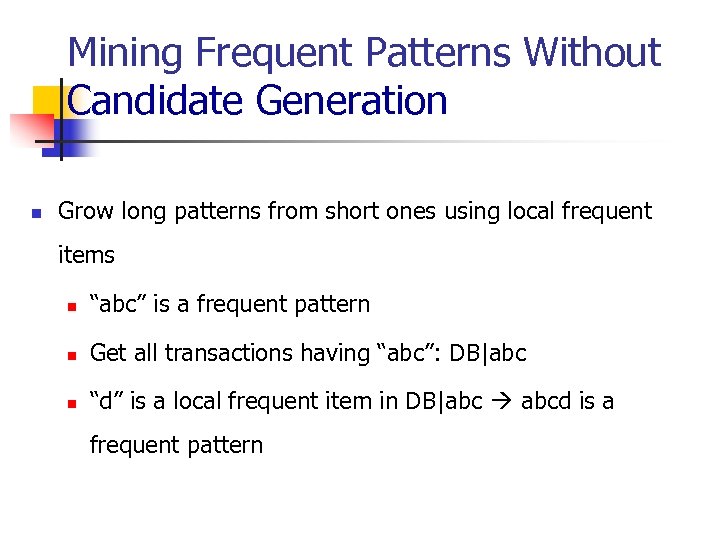

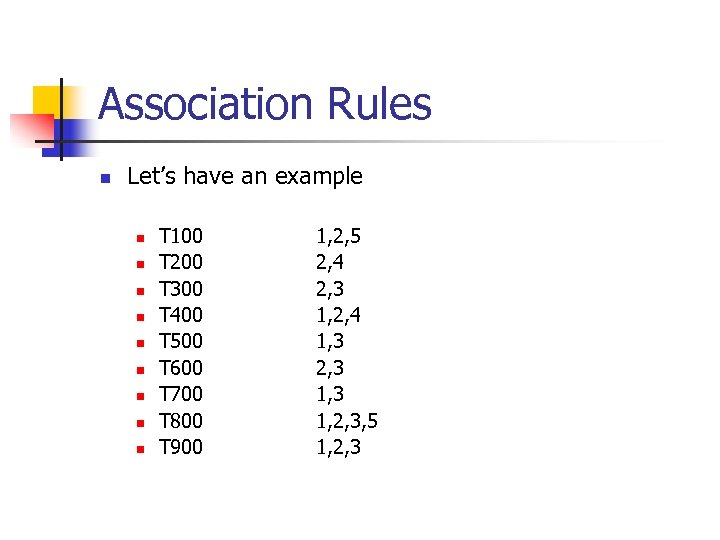

Association Rules

Let's have an example:

| TID | Items |
| --- | --- |
| T100 | 1, 2, 5 |
| T200 | 2, 4 |
| T300 | 2, 3 |
| T400 | 1, 2, 4 |
| T500 | 1, 3 |
| T600 | 2, 3 |
| T700 | 1, 3 |
| T800 | 1, 2, 3, 5 |
| T900 | 1, 2, 3 |

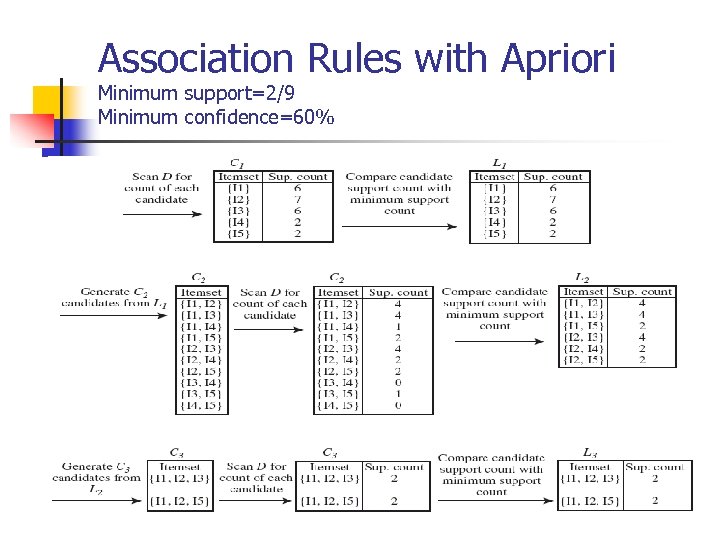

Association Rules with Apriori

- Minimum support = 2/9
- Minimum confidence = 60%

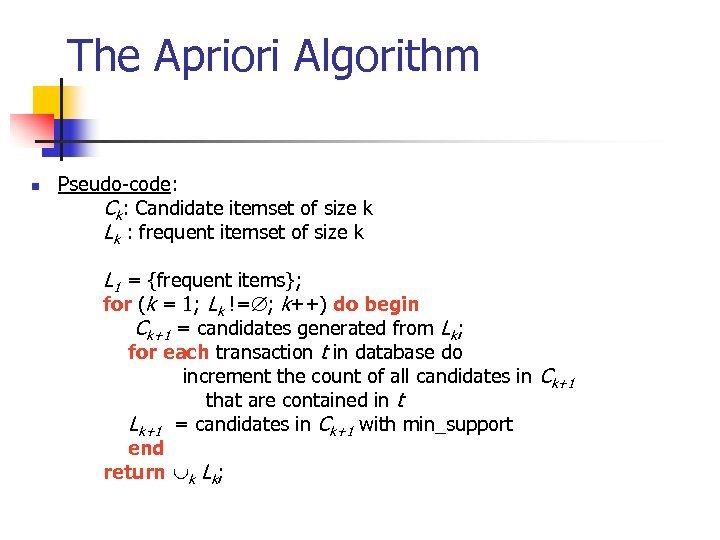

The Apriori Algorithm

Pseudo-code:

    Ck: candidate itemsets of size k
    Lk: frequent itemsets of size k

    L1 = {frequent items};
    for (k = 1; Lk != ∅; k++) do begin
        Ck+1 = candidates generated from Lk;
        for each transaction t in database do
            increment the count of all candidates in Ck+1 that are contained in t
        Lk+1 = candidates in Ck+1 with min_support
    end
    return ∪k Lk;
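The pseudo-code can be sketched as runnable Python. This is a minimal, unoptimized sketch under stated assumptions: the function name `apriori` and the absolute threshold `min_count` are illustrative, and the database is the nine-transaction example with T700 assumed to be {1, 3} (consistent with the counts 4/6 and 4/7 quoted for I1 and I2).

```python
from itertools import combinations

def apriori(transactions, min_count):
    """Return {frozenset: support count} for every itemset with count >= min_count."""
    transactions = [frozenset(t) for t in transactions]
    # L1: count single items in one scan
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    level = {s: c for s, c in counts.items() if c >= min_count}
    frequent = dict(level)
    k = 1
    while level:
        # C(k+1): join frequent k-itemsets and prune any candidate
        # that has an infrequent k-subset
        candidates = set()
        for a, b in combinations(level, 2):
            union = a | b
            if len(union) == k + 1 and all(
                frozenset(sub) in level for sub in combinations(union, k)
            ):
                candidates.add(union)
        # One database scan to count the surviving candidates
        counts = {c: sum(c <= t for t in transactions) for c in candidates}
        level = {s: c for s, c in counts.items() if c >= min_count}
        frequent.update(level)
        k += 1
    return frequent

# Nine-transaction example; T700 = {1, 3} is an assumption (see lead-in).
db = [{1, 2, 5}, {2, 4}, {2, 3}, {1, 2, 4}, {1, 3},
      {2, 3}, {1, 3}, {1, 2, 3, 5}, {1, 2, 3}]
freq = apriori(db, min_count=2)  # minimum support 2/9
for itemset, count in sorted(freq.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
```

With a minimum support count of 2, the candidate {1, 2, 3, 5} is never counted because its subset {1, 3, 5} is not frequent, which is the pruning step that gives Apriori its name.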

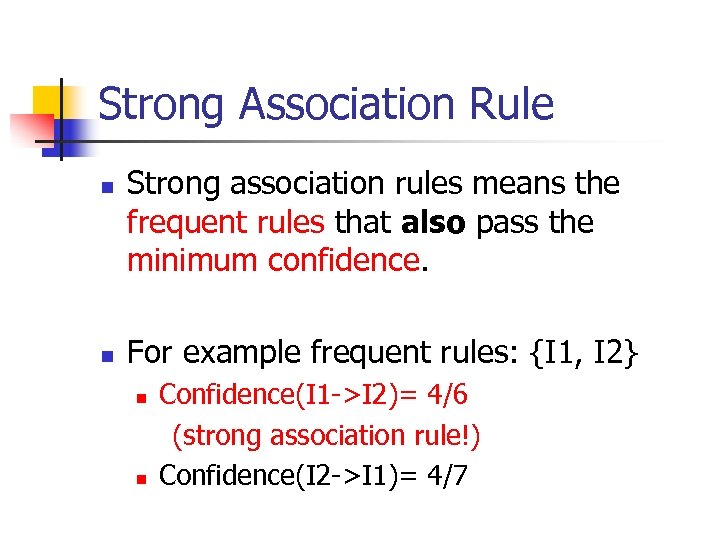

Strong Association Rule

- Strong association rules are frequent rules that also satisfy the minimum confidence.
- For example, for the frequent itemset {I1, I2}:
  - Confidence(I1 -> I2) = 4/6 ≈ 67% (strong association rule!)
  - Confidence(I2 -> I1) = 4/7 ≈ 57% (below the 60% minimum confidence, so not strong)
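The confidence check can be sketched as a small rule generator. The helper name `rules_from_itemset` is hypothetical; the support counts (I1: 6, I2: 7, both together: 4) are the ones quoted on the slide, and 60% is the minimum confidence used earlier.

```python
from itertools import combinations

def rules_from_itemset(itemset, support_count, min_conf):
    """Yield (antecedent, consequent, confidence) for rules that pass min_conf."""
    items = frozenset(itemset)
    for r in range(1, len(items)):
        for antecedent in map(frozenset, combinations(sorted(items), r)):
            conf = support_count[items] / support_count[antecedent]
            if conf >= min_conf:
                yield antecedent, items - antecedent, conf

# Support counts taken from the slide
support_count = {
    frozenset({"I1"}): 6,
    frozenset({"I2"}): 7,
    frozenset({"I1", "I2"}): 4,
}
strong = list(rules_from_itemset({"I1", "I2"}, support_count, min_conf=0.6))
for antecedent, consequent, conf in strong:
    print(sorted(antecedent), "->", sorted(consequent), round(conf, 3))
```

Only I1 -> I2 survives: both directions share the same support, but the denominators differ.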

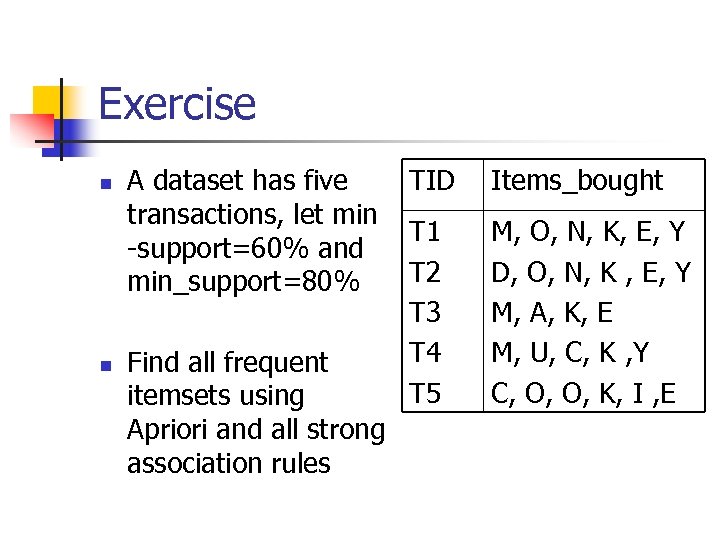

Exercise

- A dataset has five transactions; let min_support = 60% and min_confidence = 80%
- Find all frequent itemsets using Apriori and all strong association rules

| TID | Items_bought |
| --- | --- |
| T1 | M, O, N, K, E, Y |
| T2 | D, O, N, K, E, Y |
| T3 | M, A, K, E |
| T4 | M, U, C, K, Y |
| T5 | C, O, O, K, I, E |

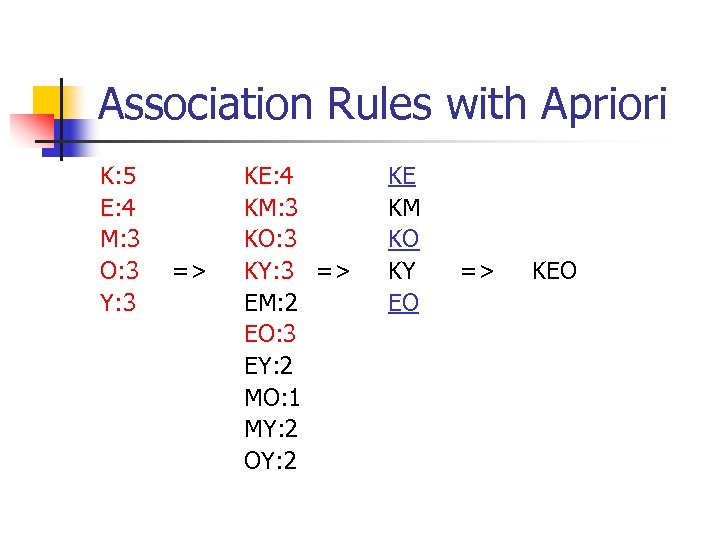

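Association Rules with Apriori

- Frequent 1-itemsets: K: 5, E: 4, M: 3, O: 3, Y: 3
- Candidate 2-itemsets: KE: 4, KM: 3, KO: 3, KY: 3, EM: 2, EO: 3, EY: 2, MO: 1, MY: 2, OY: 2
- Frequent 2-itemsets: KE, KM, KO, KY, EO
- Frequent 3-itemset: KEO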

Outline

- Association Rules with FP tree
- Misleading Rules
- Multi-level Association Rules

Mining Frequent Itemsets without Candidate Generation

- In many cases, the Apriori candidate generate-and-test method significantly reduces the size of candidate sets, leading to good performance gain.
- However, it suffers from two nontrivial costs:
  - It may generate a huge number of candidates (for example, 10^4 frequent 1-itemsets may generate more than 10^7 candidate 2-itemsets)
  - It may need to scan the database many times

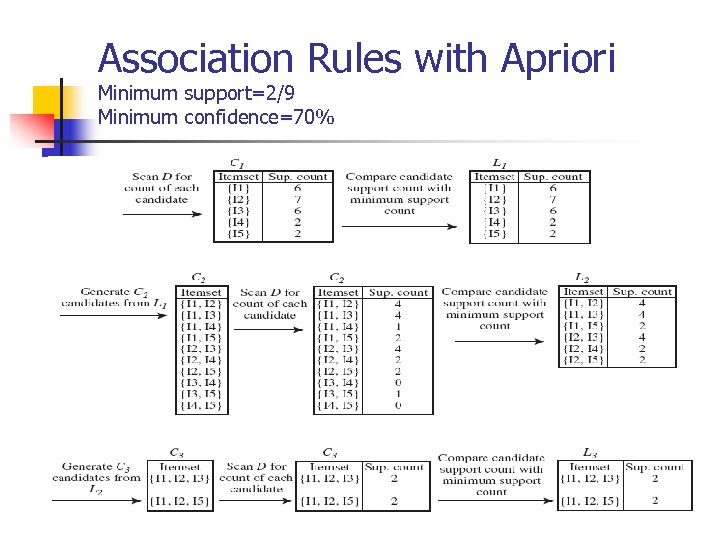

Association Rules with Apriori

- Minimum support = 2/9
- Minimum confidence = 70%

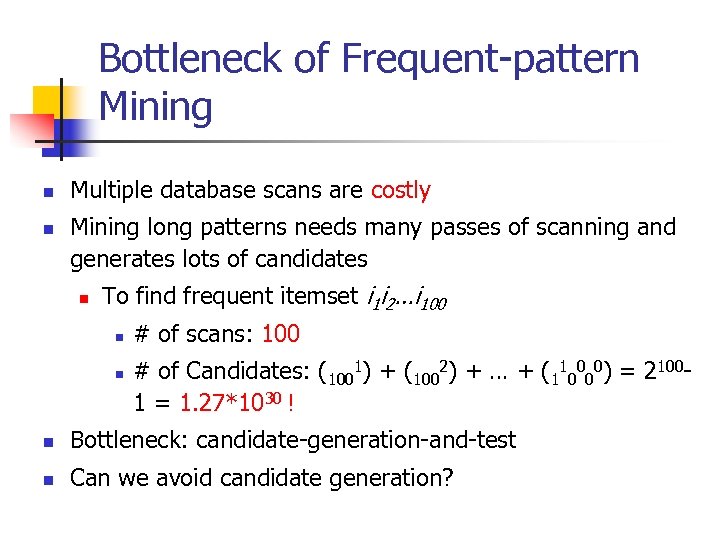

Bottleneck of Frequent-pattern Mining

- Multiple database scans are costly
- Mining long patterns needs many passes of scanning and generates lots of candidates
  - To find the frequent itemset i1 i2 … i100:
    - # of scans: 100
    - # of candidates: C(100, 1) + C(100, 2) + … + C(100, 100) = 2^100 - 1 ≈ 1.27 × 10^30
- Bottleneck: candidate generation and test
- Can we avoid candidate generation?
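The candidate counts are easy to verify with exact integer arithmetic. The short check below uses only Python's standard `math.comb`; the variable names are illustrative.

```python
from math import comb

n = 100
# Every non-empty subset of a frequent 100-itemset is itself a candidate
total = sum(comb(n, k) for k in range(1, n + 1))
print(total == 2**n - 1)  # the binomial sum equals 2^100 - 1
print(f"{total:.3e}")     # order of magnitude of the candidate count

# Candidate 2-itemsets generated from 10^4 frequent 1-itemsets
pairs = comb(10**4, 2)
print(pairs)
```

The second figure, 49,995,000 pairs, is where the "more than 10^7 candidate 2-itemsets" estimate comes from.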

Mining Frequent Patterns Without Candidate Generation

- Grow long patterns from short ones using local frequent items
  - "abc" is a frequent pattern
  - Get all transactions having "abc": DB|abc
  - "d" is a local frequent item in DB|abc → "abcd" is a frequent pattern

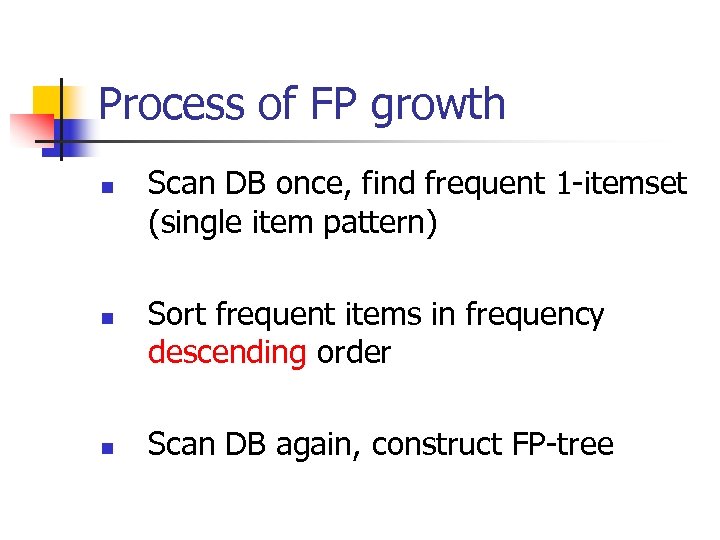

Process of FP growth

- Scan DB once, find frequent 1-itemsets (single-item patterns)
- Sort frequent items in frequency-descending order
- Scan DB again, construct the FP-tree
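The three steps can be sketched as follows. This is a simplified illustration, not a full FP-growth implementation: the header table and node-links are omitted, the names `FPNode` and `build_fp_tree` are made up for the example, ties in frequency are broken by item value (an assumption), and the database is the nine-transaction example with T700 assumed to be {1, 3}.

```python
from collections import Counter

class FPNode:
    def __init__(self, item, parent=None):
        self.item, self.parent = item, parent
        self.count = 0
        self.children = {}

def build_fp_tree(transactions, min_count):
    # Scan 1: count items and keep the frequent ones
    counts = Counter(item for t in transactions for item in set(t))
    frequent = {i: c for i, c in counts.items() if c >= min_count}
    # Frequency-descending order; ties broken by item value (an assumption)
    ordered = sorted(frequent, key=lambda i: (-frequent[i], i))
    rank = {item: r for r, item in enumerate(ordered)}
    # Scan 2: insert each filtered, reordered transaction as a path from the root
    root = FPNode(None)
    for t in transactions:
        node = root
        for item in sorted((i for i in set(t) if i in rank), key=rank.get):
            if item not in node.children:
                node.children[item] = FPNode(item, node)
            node = node.children[item]
            node.count += 1
    return root, frequent

# Nine-transaction example; T700 = {1, 3} is an assumption (see lead-in).
db = [{1, 2, 5}, {2, 4}, {2, 3}, {1, 2, 4}, {1, 3},
      {2, 3}, {1, 3}, {1, 2, 3, 5}, {1, 2, 3}]
root, frequent = build_fp_tree(db, min_count=2)
for item, child in root.children.items():
    print(item, child.count)
```

Because item 2 is the most frequent, seven of the nine transactions share the single branch that starts at node 2, which is the prefix sharing that keeps the tree compact.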

Association Rules

Let's have an example:

| TID | Items |
| --- | --- |
| T100 | 1, 2, 5 |
| T200 | 2, 4 |
| T300 | 2, 3 |
| T400 | 1, 2, 4 |
| T500 | 1, 3 |
| T600 | 2, 3 |
| T700 | 1, 3 |
| T800 | 1, 2, 3, 5 |
| T900 | 1, 2, 3 |

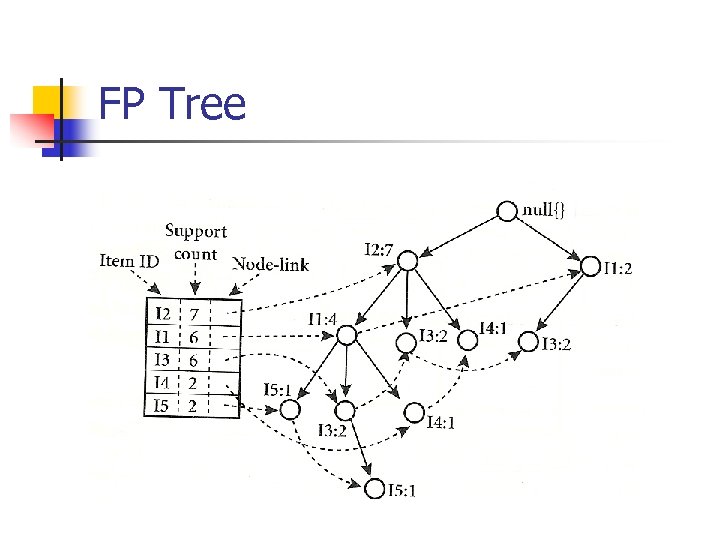

FP Tree

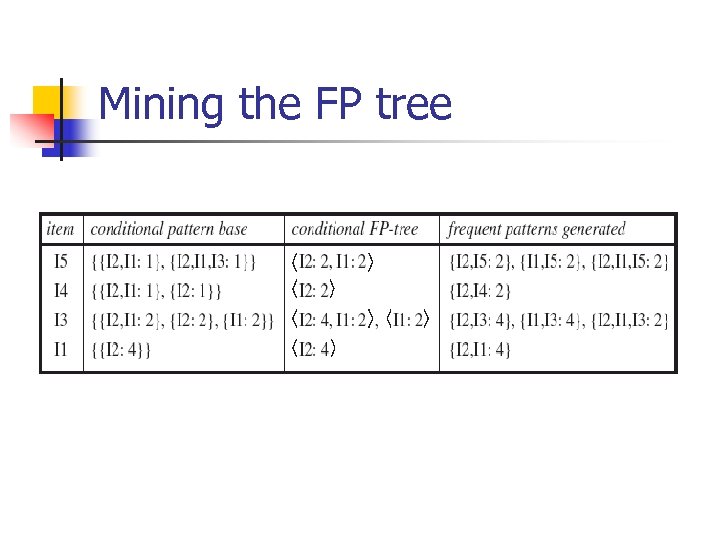

Mining the FP tree

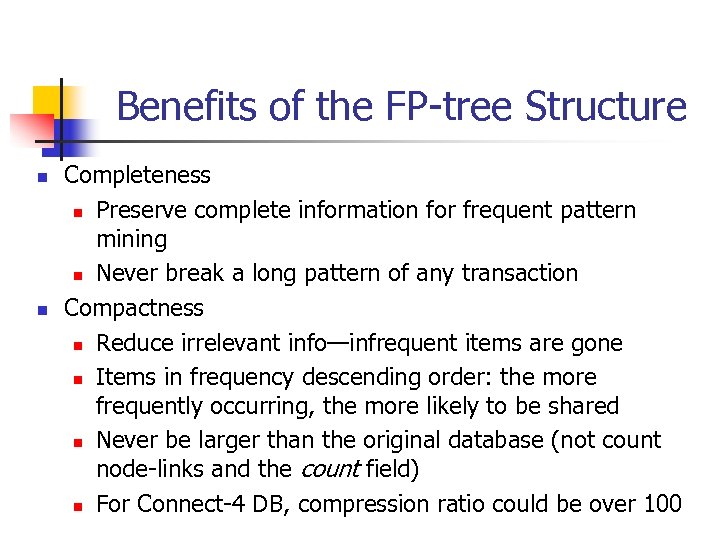

Benefits of the FP-tree Structure

- Completeness
  - Preserves complete information for frequent pattern mining
  - Never breaks a long pattern of any transaction
- Compactness
  - Reduces irrelevant information: infrequent items are gone
  - Items are in frequency-descending order: the more frequently an item occurs, the more likely it is to be shared
  - Never larger than the original database (not counting node-links and the count field)
  - For the Connect-4 DB, the compression ratio could be over 100

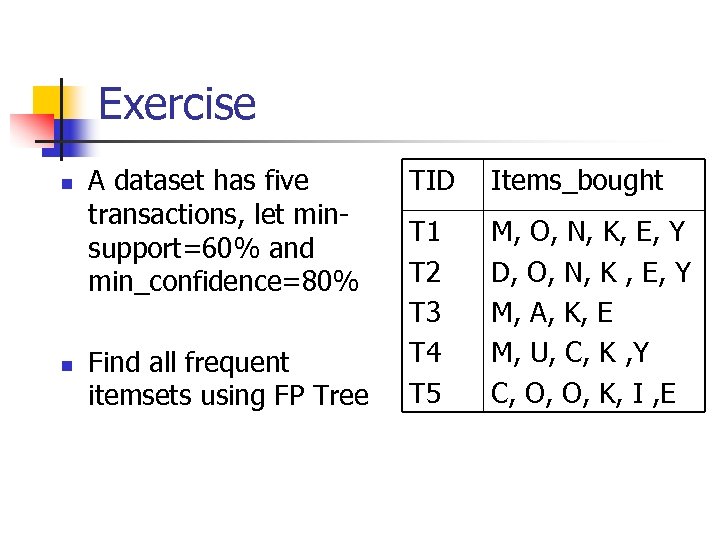

Exercise

- A dataset has five transactions; let min_support = 60% and min_confidence = 80%
- Find all frequent itemsets using the FP tree

| TID | Items_bought |
| --- | --- |
| T1 | M, O, N, K, E, Y |
| T2 | D, O, N, K, E, Y |
| T3 | M, A, K, E |
| T4 | M, U, C, K, Y |
| T5 | C, O, O, K, I, E |

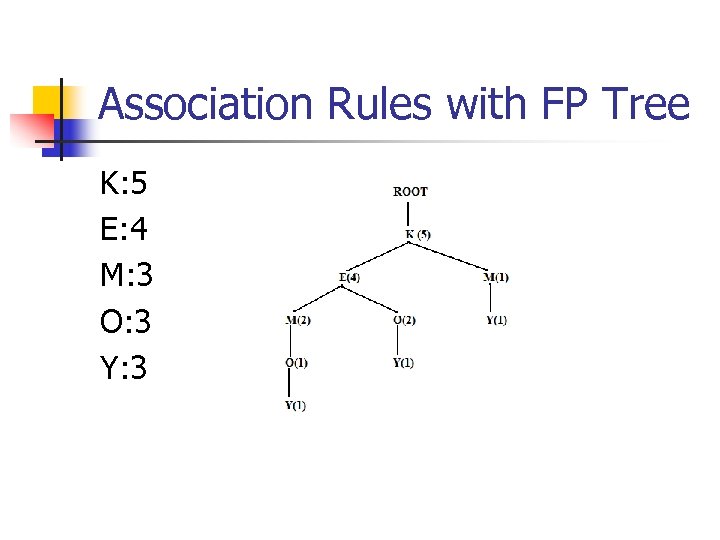

Association Rules with FP Tree

Frequent items with support counts: K: 5, E: 4, M: 3, O: 3, Y: 3

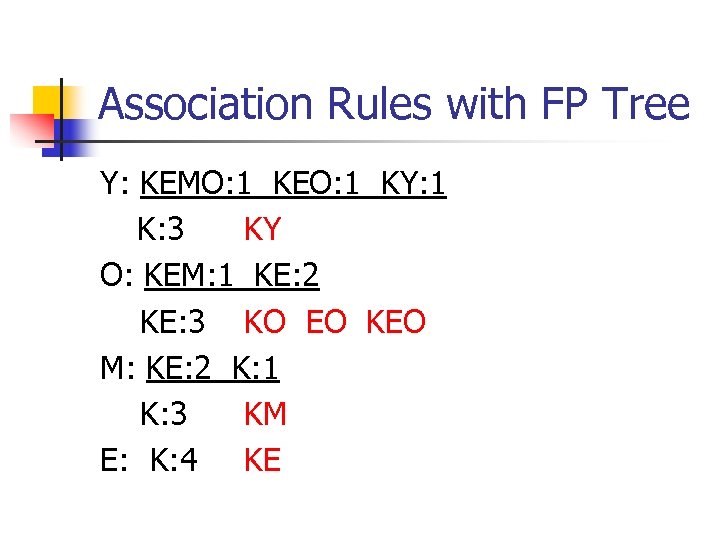

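Association Rules with FP Tree

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns |
| --- | --- | --- | --- |
| Y | KEMO: 1, KEO: 1, KM: 1 | K: 3 | KY |
| O | KEM: 1, KE: 2 | KE: 3 | KO, EO, KEO |
| M | KE: 2, K: 1 | K: 3 | KM |
| E | K: 4 | K: 4 | KE |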
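The conditional pattern bases can be cross-checked with a short script. As a simplification, the sketch below derives the prefix paths directly from the reordered transactions instead of walking node-links in an FP-tree; the two give the same bases. The function name `conditional_pattern_base` is illustrative, and each transaction is written as a string of its item letters.

```python
from collections import Counter

# Frequent items of the exercise in frequency-descending order
order = ["K", "E", "M", "O", "Y"]
# The five exercise transactions, one letter per item
db = ["MONKEY", "DONKEY", "MAKE", "MUCKY", "COOKIE"]

def conditional_pattern_base(item, db, order):
    """Prefix paths that precede `item` in the reordered transactions, with counts."""
    base = Counter()
    for t in db:
        # Keep only frequent items, in global frequency order
        ordered = [i for i in order if i in t]
        if item in ordered:
            prefix = "".join(ordered[:ordered.index(item)])
            if prefix:
                base[prefix] += 1
    return dict(base)

for item in reversed(order[1:]):
    print(item, conditional_pattern_base(item, db, order))
```

For Y, transaction T4 (M, U, C, K, Y) reduces to K, M, Y, so its prefix path is KM. Within Y's base only K reaches the minimum count of 3, which is why KY is the only frequent pattern ending in Y.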

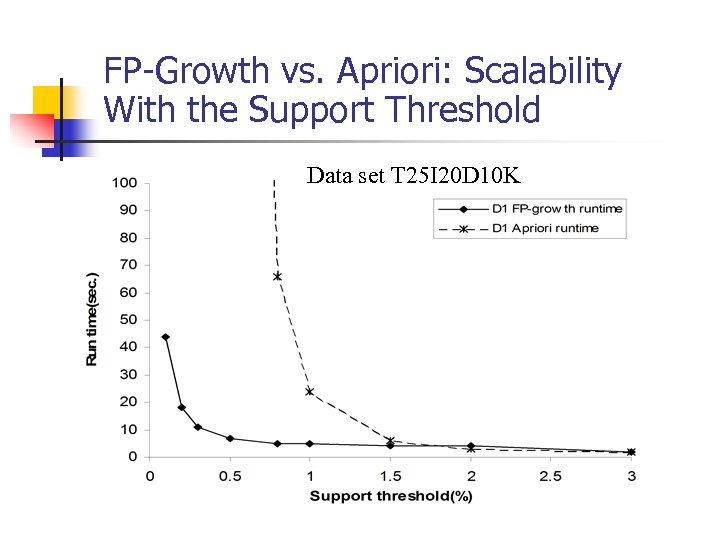

FP-Growth vs. Apriori: Scalability With the Support Threshold

Data set: T25I20D10K

Why Is FP-Growth the Winner?

- Divide-and-conquer:
  - decompose both the mining task and the DB according to the frequent patterns obtained so far
  - leads to focused search of smaller databases
- Other factors
  - no candidate generation, no candidate test
  - compressed database: FP-tree structure
  - no repeated scan of the entire database
  - basic operations are counting local frequent items and building sub FP-trees; no pattern search and matching

Outline

- Association Rules with FP tree
- Misleading Rules
- Multi-level Association Rules

Example 5.8: Misleading "Strong" Association Rule

- Of the 10,000 transactions analyzed, the data show that
  - 6,000 of the customer transactions included computer games,
  - 7,500 included videos,
  - and 4,000 included both computer games and videos

Misleading "Strong" Association Rule

- For this example:
  - Support(Game & Video) = 4,000 / 10,000 = 40%
  - Confidence(Game => Video) = 4,000 / 6,000 ≈ 66.7%
- The rule therefore passes our minimum support and minimum confidence thresholds (30% and 60%, respectively)

Misleading "Strong" Association Rule

- However, the truth is: "computer games and videos are negatively associated"
- This means the purchase of one of these items actually decreases the likelihood of purchasing the other.
- (How do we reach this conclusion?)

Misleading "Strong" Association Rule

- Under normal circumstances, that is, if the two purchases were independent:
  - 60% of customers buy the game
  - 75% of customers buy the video
- Therefore, 60% × 75% = 45% of customers would be expected to buy both
- That equals 4,500 transactions, which is more than the 4,000 actually observed

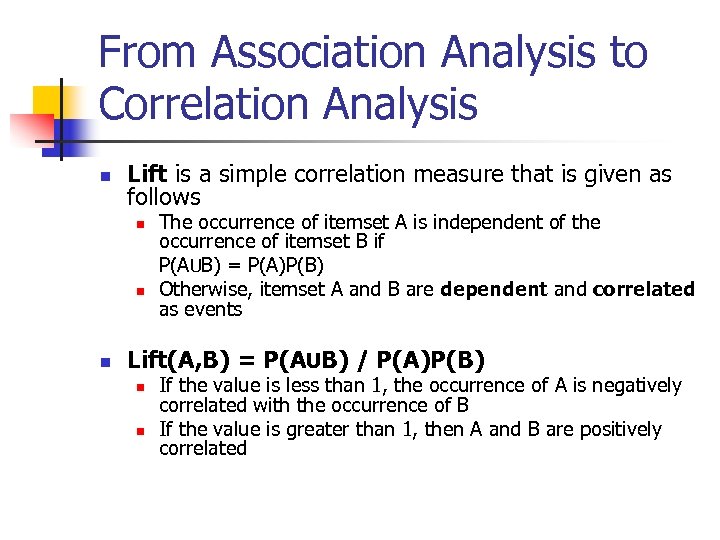

From Association Analysis to Correlation Analysis

- Lift is a simple correlation measure, defined as follows:
  - The occurrence of itemset A is independent of the occurrence of itemset B if P(A ∪ B) = P(A)P(B)
  - Otherwise, itemsets A and B are dependent and correlated as events
- Lift(A, B) = P(A ∪ B) / (P(A)P(B))
  - If the value is less than 1, the occurrence of A is negatively correlated with the occurrence of B
  - If the value is greater than 1, then A and B are positively correlated
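Applying the lift formula to the computer games and videos example makes the negative correlation concrete. The function name `lift` and its parameter names are illustrative; the counts are the ones given in Example 5.8.

```python
def lift(p_a, p_b, p_ab):
    """Lift(A, B) = P(A ∪ B) / (P(A) * P(B))."""
    return p_ab / (p_a * p_b)

n = 10_000                       # transactions analyzed
p_game = 6_000 / n               # P(game)
p_video = 7_500 / n              # P(video)
p_both = 4_000 / n               # P(game and video)

value = lift(p_game, p_video, p_both)
print(round(value, 3))           # 0.4 / 0.45
```

The result is about 0.89, which is below 1, so buying a game makes buying a video less likely than it would be under independence, even though the rule passes both thresholds.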

Outline

- Association Rules with FP tree
- Misleading Rules
- Multi-level Association Rules

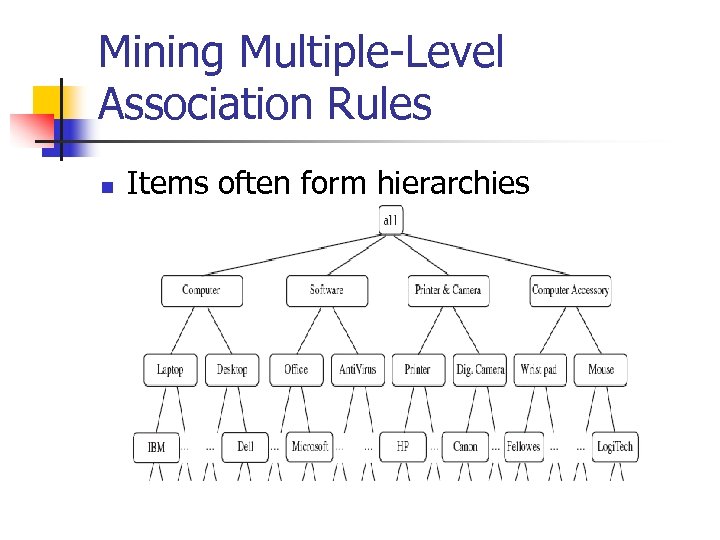

Mining Multiple-Level Association Rules

- Items often form hierarchies

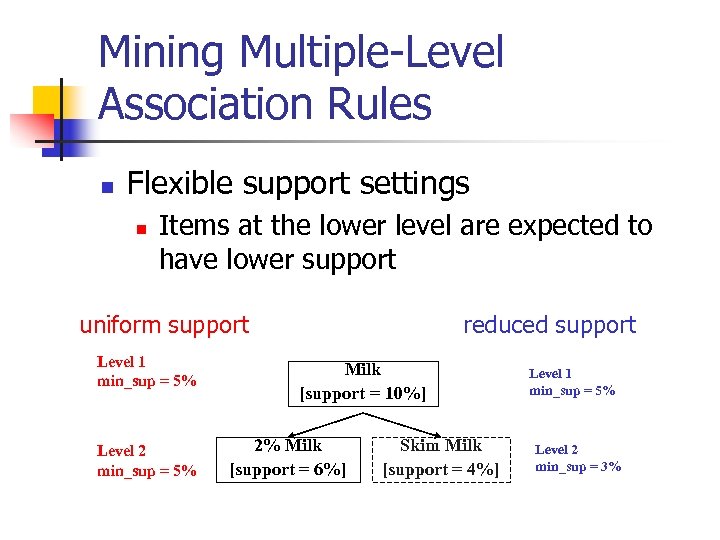

Mining Multiple-Level Association Rules

- Flexible support settings
  - Items at the lower level are expected to have lower support

| Level | Items | Uniform support | Reduced support |
| --- | --- | --- | --- |
| 1 | Milk [support = 10%] | min_sup = 5% | min_sup = 5% |
| 2 | 2% Milk [support = 6%], Skim Milk [support = 4%] | min_sup = 5% | min_sup = 3% |
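The effect of the two settings can be sketched with the support values from the slide. The dictionary layout and the helper name `frequent_items` are assumptions made for this illustration.

```python
# Item supports and hierarchy levels taken from the slide
support = {"Milk": 0.10, "2% Milk": 0.06, "Skim Milk": 0.04}
level = {"Milk": 1, "2% Milk": 2, "Skim Milk": 2}

def frequent_items(min_sup_by_level):
    """Items whose support meets the minimum support of their own level."""
    return [item for item, s in support.items()
            if s >= min_sup_by_level[level[item]]]

uniform = frequent_items({1: 0.05, 2: 0.05})  # same threshold at both levels
reduced = frequent_items({1: 0.05, 2: 0.03})  # lower threshold at level 2
print(uniform)
print(reduced)
```

Under uniform support Skim Milk (4%) is discarded, while the reduced level-2 threshold of 3% keeps it.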

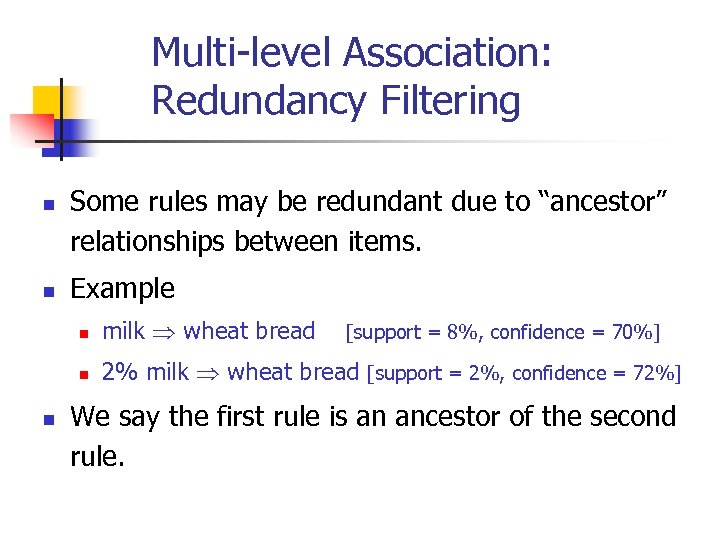

Multi-level Association: Redundancy Filtering

- Some rules may be redundant due to "ancestor" relationships between items.
- Example
  - milk ⇒ wheat bread [support = 8%, confidence = 70%]
  - 2% milk ⇒ wheat bread [support = 2%, confidence = 72%]
- We say the first rule is an ancestor of the second rule.
