
- Number of slides: 28

CERN’s openlab Project

François Fluckiger, Sverre Jarp, Wolfgang von Rüden
IT Division, CERN
November 2002

CERN site: Next to Lake Geneva

Labelled on the picture: Mont Blanc (4810 m), downtown Geneva, Lake Geneva, LHC tunnel.

What is CERN?

- European Centre for Nuclear Research (European Laboratory for Particle Physics)
  - Frontier of Human Scientific Knowledge
  - Creation of ‘Big bang’ like conditions
- Accelerators with latest super-conducting technologies
  - Tunnel is 27 km in circumference
  - Large Electron/Positron Ring (used until 2000)
  - Large Hadron Collider (LHC) as of 2007
- Detectors as ‘big as cathedrals’
  - Four LHC detectors: ALICE, ATLAS, CMS, LHCb
- World-wide participation
  - Europe, plus USA, Canada, Brazil, Japan, China, Russia, Israel, etc.

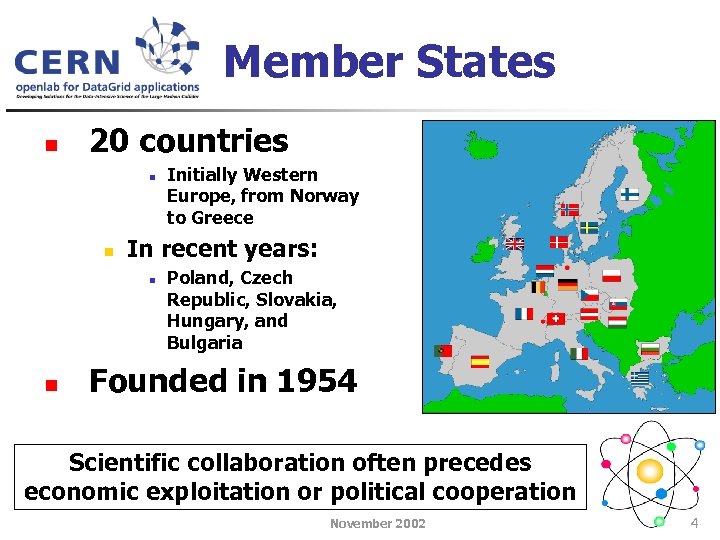

Member States

- 20 countries
  - Initially Western Europe, from Norway to Greece
  - In recent years: Poland, Czech Republic, Slovakia, Hungary, and Bulgaria
- Founded in 1954
- Scientific collaboration often precedes economic exploitation or political cooperation

CERN in more detail

- Organisation with:
  - 2400 staff, plus 6000 visitors (per year)
- Inventor of the World-Wide Web
  - Tim Berners-Lee’s vision: “Tie all the physicists together – no matter where they are”
- CERN’s Budget
  - 1000 MCHF (~685 M€/US$)
  - 50-50 materials/personnel
- Computing budget
  - ~25 MCHF (central infrastructure)
  - Desktop/Departmental computing in addition

Why CERN may be interesting to the computing industry

- Several arguments:
  - Huge computing requirements:
    - The Large Hadron Collider will need unprecedented computing resources, driven by scientists’ needs
    - At CERN, but even more in regional centres... and in hundreds of institutes world-wide
  - Early adopters:
    - Scientific community is willing to ‘take risk’, hoping it is linked to ‘rewards’
    - Lots of source-based applications, easy to port
  - Reference site:
    - Many institutes adopt CERN’s computing policies
    - Applications/Libraries are ported; software runs well
    - Lots of expertise available in relevant areas

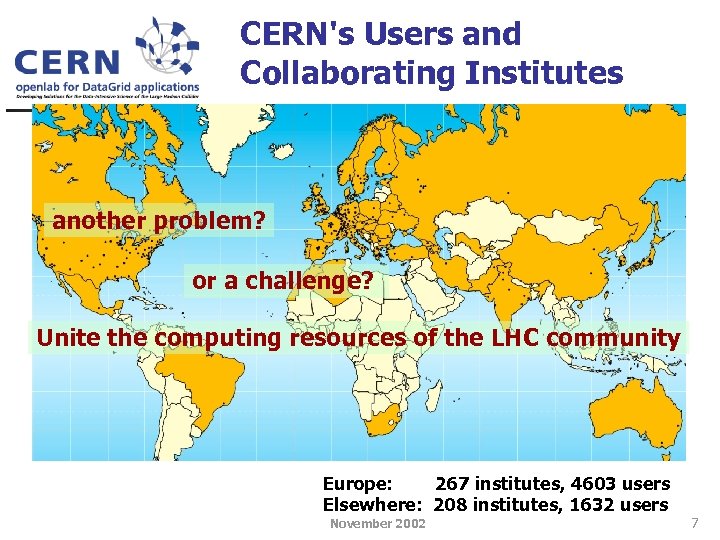

CERN's Users and Collaborating Institutes

- Europe: 267 institutes, 4603 users
- Elsewhere: 208 institutes, 1632 users
- Another problem? Or a challenge? Unite the computing resources of the LHC community

Our ties to IA-64 (IPF)

- A long history already...
  - Nov. 1992: Visit to HP Labs (Bill Worley):
    - “We will soon launch PA-Wide Word!”
  - 1994-96: CERN becomes one of the few external definition partners for IA-64
    - Now a joint effort between HP and Intel
  - 1997-99: Creation of a vector math library for IA-64
    - Full prototype to demonstrate the precision, versatility, and unbeatable speed of execution
  - 2000-01: Port of Linux onto IA-64
    - “Trillian” project
    - Real applications
      - Demonstrated already at Intel’s “Exchange” exhibition in Oct. 2000

IA-64 wish list

- For IA-64 (IPF) to establish itself solidly in the market-place:
  - Better compiler technology
    - Offering better system performance
  - Wider range of systems and processors
    - For instance: Really low-cost entry models, low power systems
  - State-of-the-art process technology
  - Similar “commoditization” as for IA-32

The openlab “advantage”

openlab will be able to build on:

1) CERN-IT’s technical talent
2) CERN’s existing computing environment
3) The size and complexity of the LHC computing needs
4) CERN’s strong role in the development of GRID “middleware”
5) CERN’s ability to embrace emerging technologies

IT Division

- 250 people, about 200 engineers
- 11 groups:
  - Advanced Projects’ Group (part of DI)
  - Applications for Physics and Infrastructure (API)
  - (Farm) Architecture and Data Challenges (ADC)
  - Fabric Infrastructure and Operations (FIO)
  - (Physics) Data Services (DS)
  - Databases (DB)
  - Internet (and Windows) Services (IS)
  - Communications Services (CS)
  - User Services (US)
  - Product Support (PS)
  - (Detector) Controls (CO)
- Groups have both a development and a service responsibility

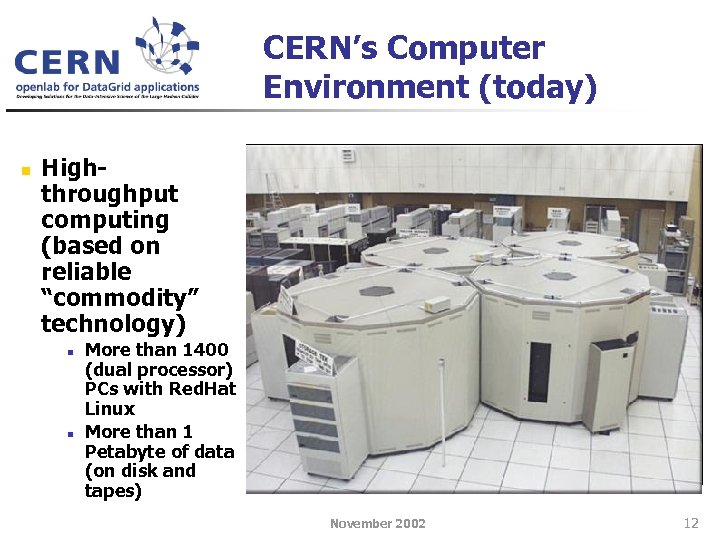

CERN’s Computer Environment (today)

- High-throughput computing (based on reliable “commodity” technology)
  - More than 1400 (dual processor) PCs with Red Hat Linux
  - More than 1 Petabyte of data (on disk and tapes)

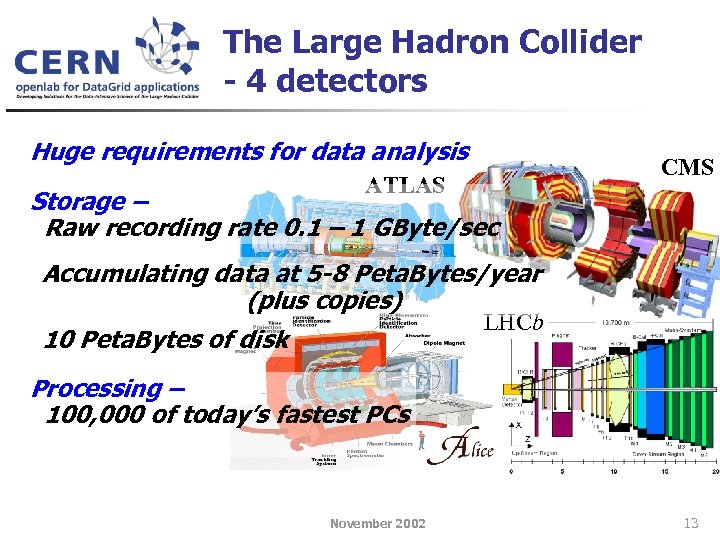

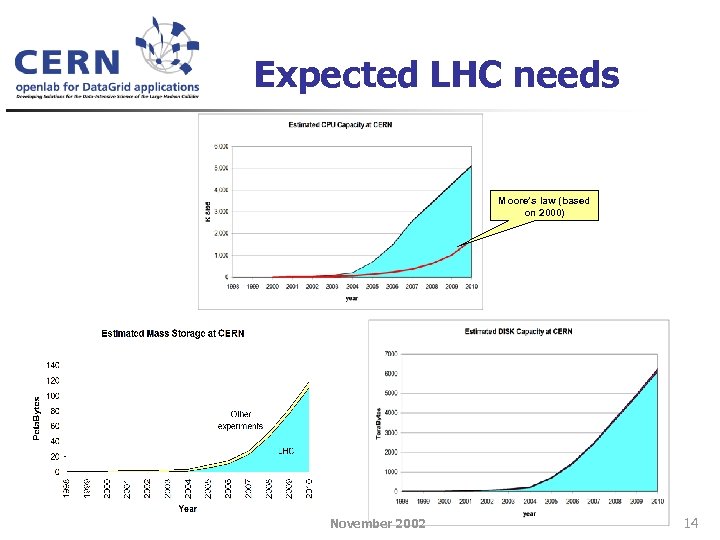

The Large Hadron Collider - 4 detectors

Detector labels on the slide: CMS, ATLAS, LHCb

Huge requirements for data analysis:

- Storage:
  - Raw recording rate 0.1-1 GByte/sec
  - Accumulating data at 5-8 PetaBytes/year (plus copies)
  - 10 PetaBytes of disk
- Processing:
  - 100,000 of today’s fastest PCs
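
The storage figures on this slide can be cross-checked with simple arithmetic. The sketch below is illustrative only: it assumes decimal units (1 PetaByte = 10^6 GByte) and an effective data-taking time of about 10^7 seconds per year, a common rule of thumb for accelerators that is not stated on the slides. The function names are hypothetical.

```python
# Back-of-the-envelope check of the storage figures on this slide.
# ASSUMPTIONS (not from the slides): decimal units, and ~1e7 seconds of
# effective data taking per year.

GB_PER_PB = 1_000_000            # 1 PetaByte = 10^6 GByte (decimal units)
LIVE_SECONDS_PER_YEAR = 1.0e7    # assumed effective data-taking time


def yearly_volume_pb(rate_gb_per_s, live_seconds=LIVE_SECONDS_PER_YEAR):
    """Data volume in PetaBytes accumulated in one year at a constant rate."""
    return rate_gb_per_s * live_seconds / GB_PER_PB


def average_rate_gb_per_s(volume_pb, live_seconds=LIVE_SECONDS_PER_YEAR):
    """Average rate in GByte/sec needed to accumulate volume_pb in one year."""
    return volume_pb * GB_PER_PB / live_seconds


if __name__ == "__main__":
    for rate in (0.1, 1.0):
        print(f"{rate} GByte/sec -> {yearly_volume_pb(rate):.1f} PetaBytes/year")
    for volume in (5, 8):
        print(f"{volume} PetaBytes/year -> {average_rate_gb_per_s(volume):.1f} GByte/sec")
```

Under these assumptions, 5-8 PetaBytes/year corresponds to an average rate of 0.5-0.8 GByte/sec, which falls inside the quoted 0.1-1 GByte/sec range. A different assumed live time shifts the result proportionally.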

Expected LHC needs

Chart series: expected LHC needs; Moore’s law (based on 2000)
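
A “Moore’s law (based on 2000)” curve can be read as an exponential extrapolation from a year-2000 baseline. The following is a minimal sketch of such an extrapolation, assuming a doubling period of 18 months. That period is a common rule of thumb and an assumption here; the slide does not state which period was used. The function name is hypothetical.

```python
# Exponential extrapolation in the spirit of "Moore's law (based on 2000)".
# ASSUMPTION (not from the slides): capacity doubles every 18 months.


def moore_factor(base_year, target_year, doubling_years=1.5):
    """Growth factor relative to base_year for a fixed doubling period."""
    return 2.0 ** ((target_year - base_year) / doubling_years)


if __name__ == "__main__":
    for year in (2000, 2003, 2007):
        print(year, round(moore_factor(2000, year), 1))
```

With an 18-month doubling period, the factor between 2000 and 2007 (the LHC start date given earlier in the talk) is roughly 25. The chart contrasts growth of this kind with the expected LHC needs.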

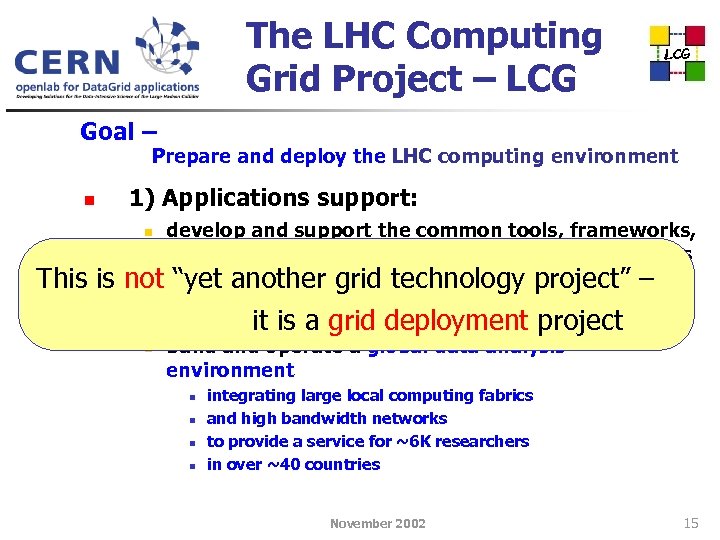

The LHC Computing Grid Project (LCG)

Goal: Prepare and deploy the LHC computing environment

1) Applications support:
   - develop and support the common tools, frameworks, and environment needed by the physics applications
2) Computing system:
   - build and operate a global data analysis environment
     - integrating large local computing fabrics and high bandwidth networks
     - to provide a service for ~6000 researchers in over 40 countries

This is not “yet another grid technology project”: it is a grid deployment project.

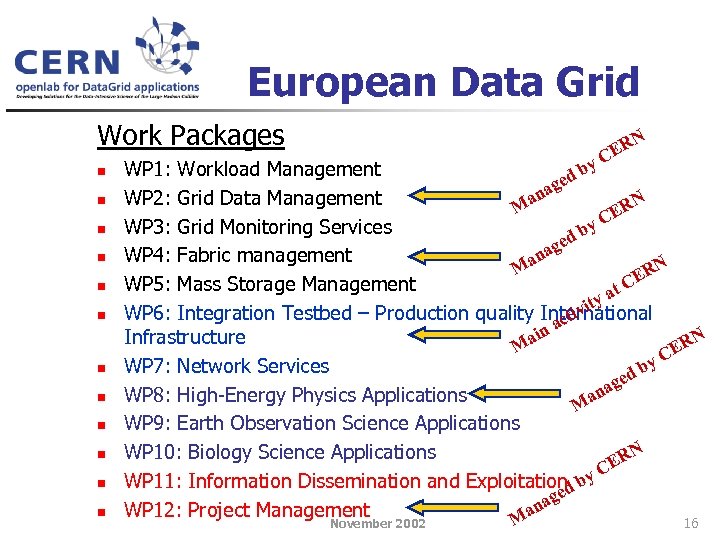

European Data Grid Work Packages

- WP 1: Workload Management
- WP 2: Grid Data Management
- WP 3: Grid Monitoring Services
- WP 4: Fabric management
- WP 5: Mass Storage Management
- WP 6: Integration Testbed: Production quality International Infrastructure
- WP 7: Network Services
- WP 8: High-Energy Physics Applications
- WP 9: Earth Observation Science Applications
- WP 10: Biology Science Applications
- WP 11: Information Dissemination and Exploitation
- WP 12: Project Management

Annotations on the slide: “Managed by CERN” (on several work packages) and “Main activity at CERN”.

“Grid” technology providers

Labels on the slide: CrossGrid, American projects, European projects

The LHC Computing Grid will choose the best parts and integrate them!

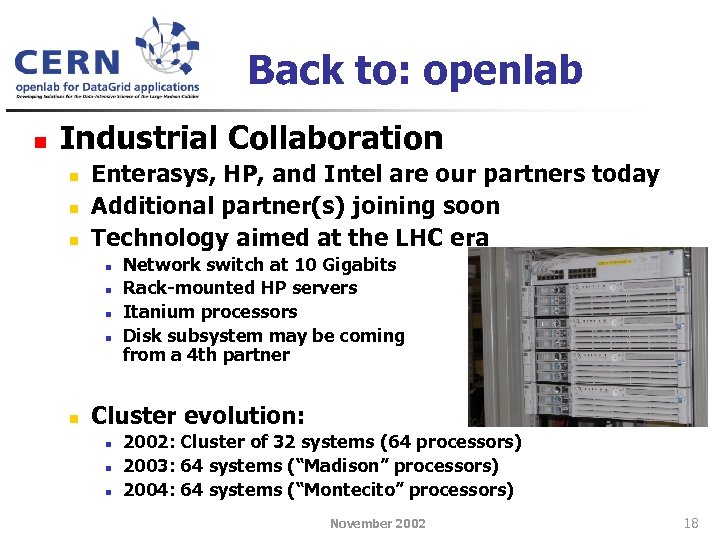

Back to: openlab

- Industrial Collaboration
  - Enterasys, HP, and Intel are our partners today
  - Additional partner(s) joining soon
- Technology aimed at the LHC era
  - Network switch at 10 Gigabits
  - Rack-mounted HP servers
  - Itanium processors
  - Disk subsystem may be coming from a 4th partner
- Cluster evolution:
  - 2002: Cluster of 32 systems (64 processors)
  - 2003: 64 systems (“Madison” processors)
  - 2004: 64 systems (“Montecito” processors)

openlab Staffing

- Management in place:
  - W. von Rüden: Head (Director)
  - F. Fluckiger: Associate Head
  - S. Jarp: Chief Technologist, HP & Intel liaison
  - F. Grey: Communications & Development
  - D. Foster: LCG liaison
  - J. M. Juanigot: Enterasys liaison
- Additional part-time experts
- Help from general IT services
- Actively recruiting the systems experts: 2-3 persons

openlab - phase 1

- Integrate the openCluster
  - 32 nodes + development nodes
    - Rack-mounted DP Itanium-2 systems
  - Red Hat 7.3 Advanced Server
  - OpenAFS, LSF
  - GNU, Intel Compilers (+ ORC?)
  - Database software (MySQL, Oracle?)
  - CERN middleware: Castor data mgmt
- CERN Applications
  - Porting, Benchmarking, Performance improvements
  - CLHEP, GEANT4, ROOT, Anaphe, (CERNLIB?)
- Cluster benchmarks
  - 1 10 Gigabit interfaces
- Also: Prepare porting strategy for phase 2

Estimated time scale: 6 months
Prerequisite: 1 system administrator

openlab - phase 2

- European Data Grid
  - Integrate openCluster alongside EDG testbed
  - Porting, Verification
    - Relevant software packages (hundreds of RPMs)
    - Understand chain of prerequisites
  - Interoperability with WP 6
    - Integration into existing authentication scheme
- GRID benchmarks
  - To be defined later
- Also: Prepare porting strategy for phase 3

Estimated time scale: 9 months (may be subject to change!)
Prerequisite: 1 system programmer
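
“Understand chain of prerequisites” for hundreds of RPMs amounts to ordering packages so that each one is ported after everything it depends on. The sketch below shows one way this could be done, using a topological sort (Kahn's algorithm). It is not the procedure used by openlab or EDG; the function name, package names, and dependencies are hypothetical placeholders.

```python
# Order packages so that every prerequisite is handled before its dependants.
# The package names and dependencies below are HYPOTHETICAL examples,
# not the actual EDG package list.

from collections import deque


def porting_order(prerequisites):
    """Return a topological order of packages (Kahn's algorithm).

    prerequisites maps each package to the packages it requires.
    Raises ValueError if the prerequisites contain a cycle.
    """
    # Collect every package, including those that only appear as requirements.
    packages = set(prerequisites)
    for required in prerequisites.values():
        packages.update(required)

    # remaining[p]: prerequisites of p that have not been ordered yet.
    remaining = {p: set(prerequisites.get(p, ())) for p in packages}
    # dependants[r]: packages that directly require r.
    dependants = {p: [] for p in packages}
    for package, required in remaining.items():
        for r in required:
            dependants[r].append(package)

    # Start with packages that have no prerequisites at all.
    ready = deque(sorted(p for p, req in remaining.items() if not req))
    order = []
    while ready:
        package = ready.popleft()
        order.append(package)
        for d in sorted(dependants[package]):
            remaining[d].discard(package)
            if not remaining[d]:
                ready.append(d)

    if len(order) != len(packages):
        raise ValueError("cyclic prerequisites")
    return order


EXAMPLE = {
    "grid-middleware": ["security-lib", "xml-parser"],
    "security-lib": ["crypto-lib"],
    "xml-parser": [],
    "crypto-lib": [],
}

if __name__ == "__main__":
    print(porting_order(EXAMPLE))
```

In practice the prerequisite map would be extracted from the package metadata rather than written by hand. The cycle check matters because circular dependencies have to be broken manually before a port can proceed.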

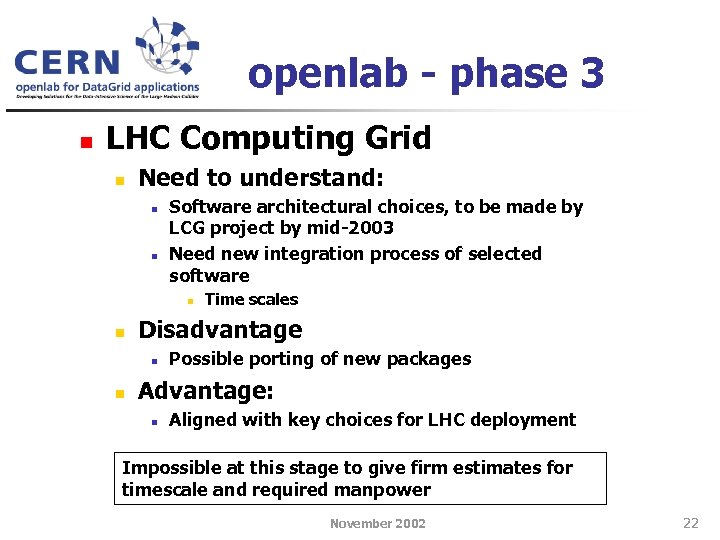

openlab - phase 3

- LHC Computing Grid
  - Need to understand:
    - Software architectural choices, to be made by LCG project by mid-2003
    - Need new integration process of selected software
  - Disadvantage:
    - Time scales
    - Possible porting of new packages
  - Advantage:
    - Aligned with key choices for LHC deployment

Impossible at this stage to give firm estimates for timescale and required manpower

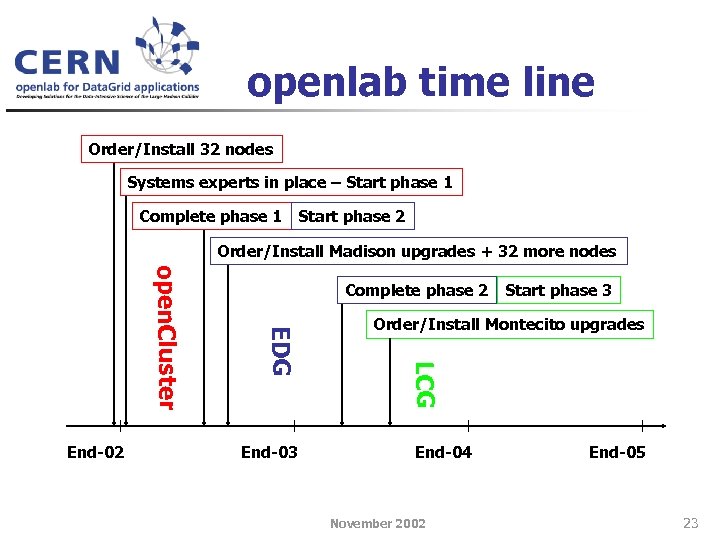

openlab time line

- Time axis: End-02, End-03, End-04, End-05
- Tracks: openCluster, EDG, LCG
- Hardware milestones:
  - Order/Install 32 nodes
  - Order/Install Madison upgrades + 32 more nodes
  - Order/Install Montecito upgrades
- Phase milestones:
  - Systems experts in place; start phase 1
  - Complete phase 1
  - Start phase 2
  - Complete phase 2
  - Start phase 3

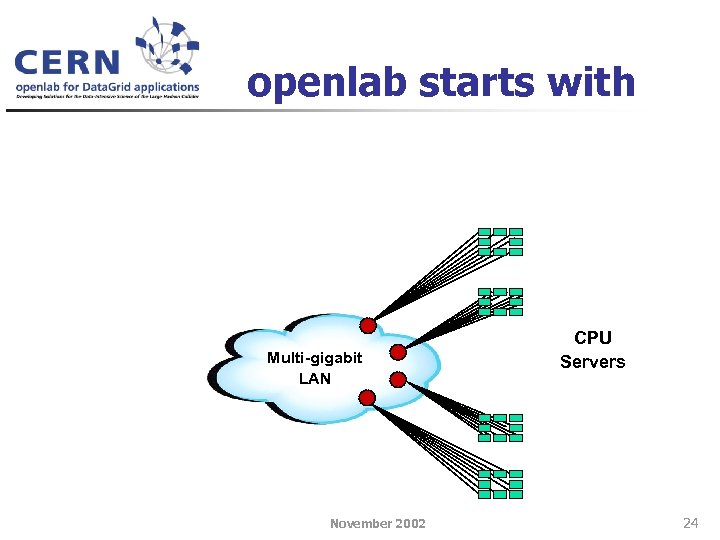

openlab starts with

Diagram labels: CPU Servers, Multi-gigabit LAN

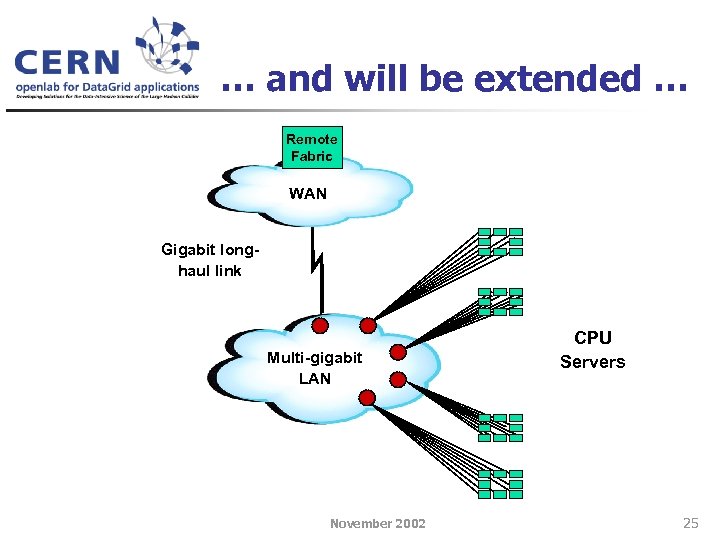

… and will be extended …

Diagram labels: CPU Servers, Multi-gigabit LAN, Gigabit long-haul link, WAN, Remote Fabric

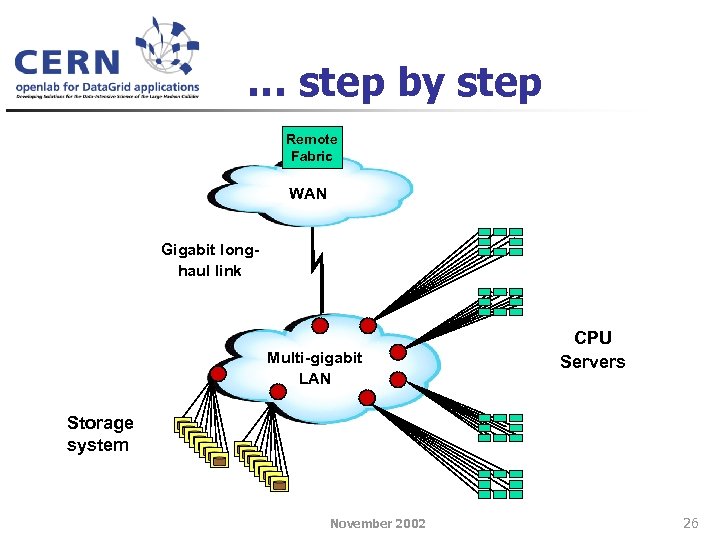

… step by step

Diagram labels: CPU Servers, Storage system, Multi-gigabit LAN, Gigabit long-haul link, WAN, Remote Fabric

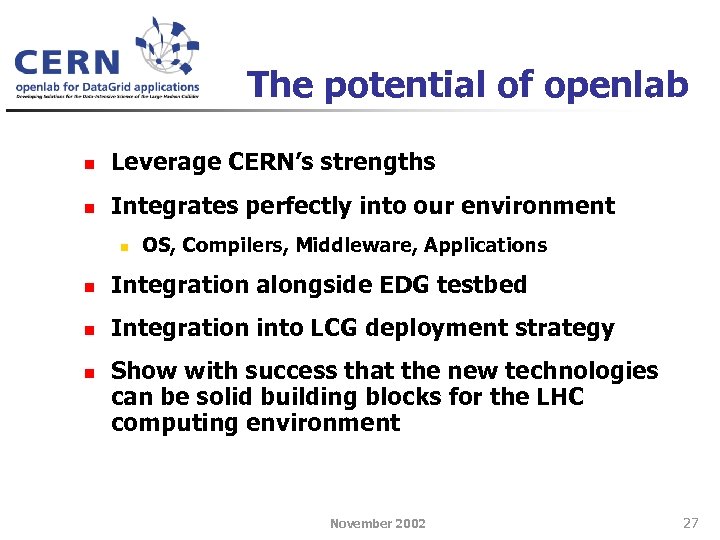

The potential of openlab

- Leverage CERN’s strengths
  - Integrates perfectly into our environment
    - OS, Compilers, Middleware, Applications
  - Integration alongside EDG testbed
  - Integration into LCG deployment strategy
- Show with success that the new technologies can be solid building blocks for the LHC computing environment

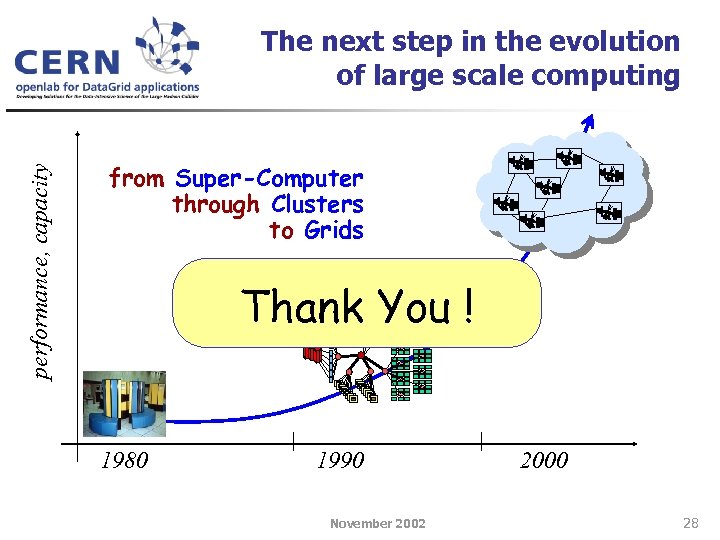

The next step in the evolution of large scale computing

From Super-Computer through Clusters to Grids

Chart: performance and capacity over time (1980, 1990, 2000)

Thank You!
