ac7040557a3d47ad0c85a92752078c41.ppt

- Number of slides: 22

ATLAS Data Challenges
Dario Barberis (CERN & Genoa University)
CERN-Russia JWG, 19 March 2004

ATLAS DC1 (July 2002 – April 2003)
- Primary concern was delivery of events to the High Level Trigger (HLT) and Physics communities
  - HLT-TDR due by June 2003
  - Athens Physics workshop in May 2003
- Put in place the full software chain from event generation to reconstruction
  - Switch to Athena-Root I/O (for event generation)
  - Updated geometry
  - New Event Data Model and Detector Description
  - Reconstruction (mostly OO) moved to Athena
- Put in place the distributed production
  - "ATLAS kit" (rpm) for software distribution
  - Scripts and tools (monitoring, bookkeeping)
    - AMI database; Magda replica catalogue; VDC
    - Job production (AtCom)
  - Quality Control and Validation of the full chain
- Use Grid tools as much as possible

ATLAS DC1 (July 2002 – April 2003)
- DC1 was divided into 3 phases:
  - Phase 1 (July-August 2002)
    - Event generation and detector simulation
  - Phase 2 (December 2002 – April 2003)
    - Pile-up production
      - Classical batch production
      - With Grid tools on NorduGrid and US-ATLAS-Grid
  - Reconstruction (April-May 2003)
    - Offline code only
- Worldwide exercise with many participating institutes

CERN-Russia JWG - 19 Mar. 2004 ATLAS DC 1 (July 2002 -April 2003) l DC 1 was divided in 3 phases n Phase 1 (July-August 2002) Ø Event generation and detector simulation n Phase 2 (December 2002 – April 2003) Ø Pile-up production l Classical batch production l With Grid tools on Nordu. Grid and US-ATLAS-Grid n Reconstruction (April-May 2003) Ø l Offline code only Worldwide exercise with many participating institutes Dario Barberis: ATLAS Data Challenges 3

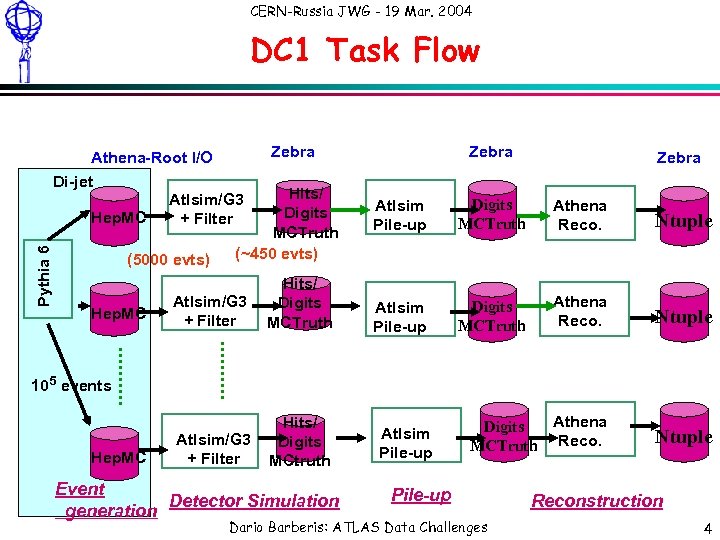

DC1 Task Flow
- Event generation: Pythia 6 di-jets, HepMC output via Athena-Root I/O (5000 evts)
- Detector simulation: Atlsim/G3 + filter, Hits/Digits + MCTruth in Zebra (~450 evts)
- Pile-up: Atlsim, Digits + MCTruth
- Reconstruction: Athena, Digits + MCTruth to Reco Ntuple
- Sample size shown in the diagram: 10^5 events

CERN-Russia JWG - 19 Mar. 2004 DC 1 Task Flow Zebra Athena-Root I/O Di-jet Pythia 6 Hep. MC (5000 evts) Hep. MC Hits/ Digits MCTruth (~450 evts) Atlsim/G 3 + Filter Hits/ Digits MCTruth Atlsim/G 3 + Filter Hits/ Digits MCtruth Zebra Atlsim Pile-up Digits MCTruth Athena Reco. Ntuple Athena Digits MCTruth Reco. Ntuple 105 events Hep. MC Event Detector Simulation generation Atlsim Pile-up Dario Barberis: ATLAS Data Challenges Reconstruction 4

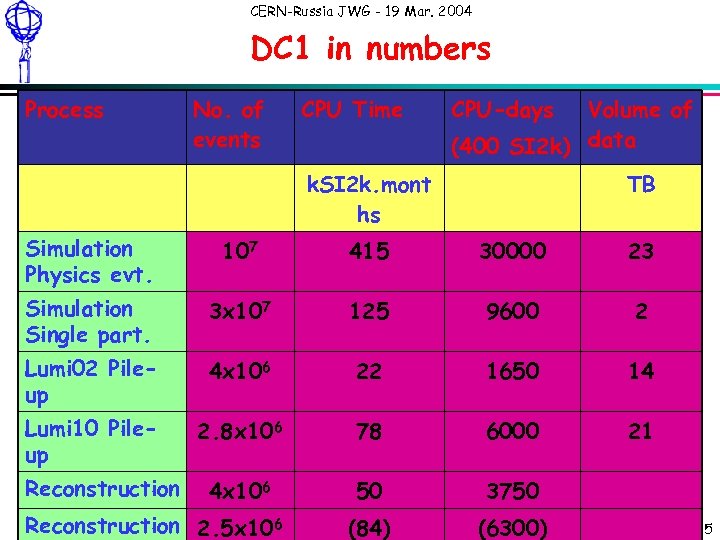

DC1 in numbers

| Process | No. of events | CPU time (kSI2k-months) | CPU-days (400 SI2k) | Volume of data (TB) |
|---|---|---|---|---|
| Simulation, physics events | 10^7 | 415 | 30000 | 23 |
| Simulation, single particles | 3 × 10^7 | 125 | 9600 | 2 |
| Lumi02 pile-up | 4 × 10^6 | 22 | 1650 | 14 |
| Lumi10 pile-up | 2.8 × 10^6 | 78 | 6000 | 21 |
| Reconstruction | 4 × 10^6 | 50 | 3750 | |
| Reconstruction | 2.5 × 10^6 | (84) | (6300) | |
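
The CPU-days column can be cross-checked against the kSI2k-months column by dividing by the 400 SI2k reference CPU. The sketch below assumes 30-day months (the month length is not given on the slide); the rounded slide figures agree with it to within a few percent.

```python
# Cross-check of the DC1 table: kSI2k-months -> CPU-days on a 400 SI2k CPU.
# Assumption (not stated on the slide): a month is taken as 30 days.
DAYS_PER_MONTH = 30
REFERENCE_CPU_SI2K = 400


def ksi2k_months_to_cpu_days(ksi2k_months: float) -> float:
    """Days one 400 SI2k CPU would need to deliver the given amount of work."""
    cpu_months = ksi2k_months * 1000 / REFERENCE_CPU_SI2K
    return cpu_months * DAYS_PER_MONTH


# (process, kSI2k-months, CPU-days quoted on the slide)
DC1_ROWS = [
    ("Simulation, physics events", 415, 30000),
    ("Simulation, single particles", 125, 9600),
    ("Lumi02 pile-up", 22, 1650),
    ("Lumi10 pile-up", 78, 6000),
    ("Reconstruction (4 x 10^6 events)", 50, 3750),
    ("Reconstruction (2.5 x 10^6 events)", 84, 6300),
]

for process, work, quoted in DC1_ROWS:
    computed = ksi2k_months_to_cpu_days(work)
    print(f"{process:36s} computed {computed:7.0f}  slide {quoted:6d}")
```

Three rows (pile-up at Lumi02 and both reconstruction rows) match exactly; the simulation rows differ by 2-4%, consistent with rounding on the slide.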

CERN-Russia JWG - 19 Mar. 2004 DC 1 in numbers Process No. of events CPU Time CPU-days Volume of (400 SI 2 k) data k. SI 2 k. mont hs TB Simulation Physics evt. 107 415 30000 23 Simulation Single part. 3 x 107 125 9600 2 Lumi 02 Pileup 4 x 106 22 1650 14 Lumi 10 Pileup 2. 8 x 106 78 6000 21 4 x 106 50 3750 Reconstruction Dario Barberis: ATLAS Data Challenges Reconstruction 2. 5 x 106 (84) (6300) 5

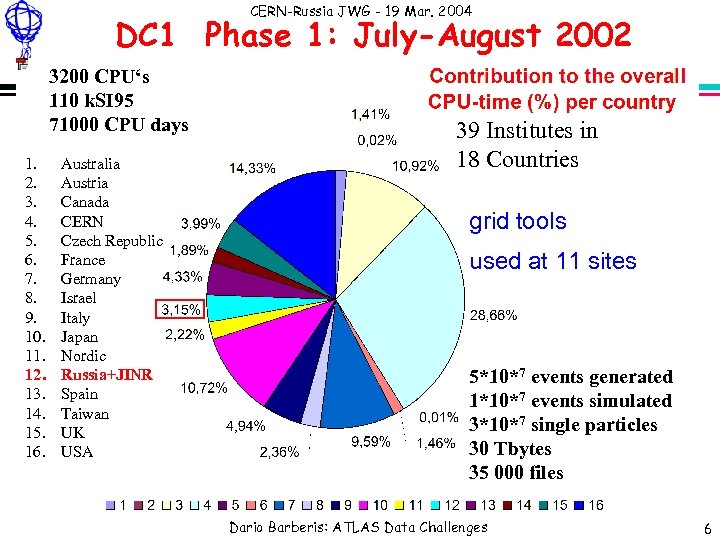

DC1 Phase 1: July-August 2002
- 3200 CPUs, 110 kSI95, 71000 CPU-days
- 39 institutes in 18 countries: Australia, Austria, Canada, CERN, Czech Republic, France, Germany, Israel, Italy, Japan, Nordic countries, Russia+JINR, Spain, Taiwan, UK, USA
- Grid tools used at 11 sites
- 5 × 10^7 events generated
- 1 × 10^7 events simulated
- 3 × 10^7 single particles
- 30 TB in 35 000 files

CERN-Russia JWG - 19 Mar. 2004 DC 1 Phase 1: July-August 2002 3200 CPU‘s 110 k. SI 95 71000 CPU days 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14. 15. 16. Australia Austria Canada CERN Czech Republic France Germany Israel Italy Japan Nordic Russia+JINR Spain Taiwan UK USA 39 Institutes in 18 Countries grid tools used at 11 sites 5*10*7 events generated 1*10*7 events simulated 3*10*7 single particles 30 Tbytes 35 000 files Dario Barberis: ATLAS Data Challenges 6

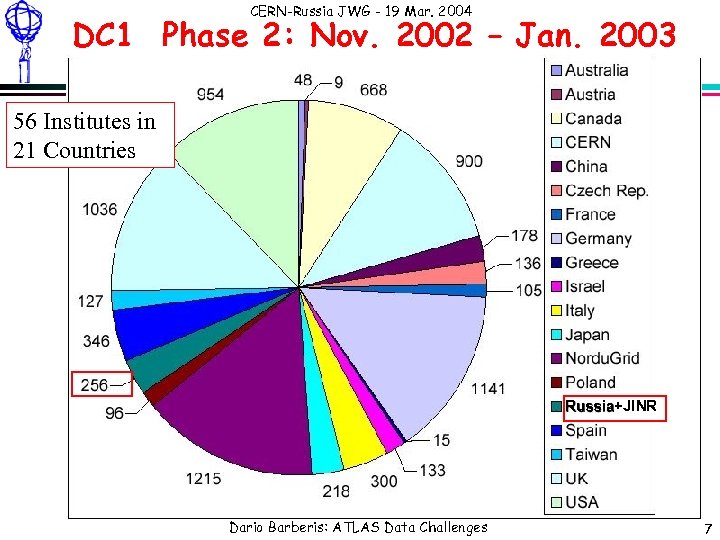

DC1 Phase 2: Nov. 2002 – Jan. 2003
- 56 institutes in 21 countries, including Russia+JINR

CERN-Russia JWG - 19 Mar. 2004 DC 1 Phase 2: Nov. 2002 – Jan. 2003 56 Institutes in 21 Countries Russia+JINR Dario Barberis: ATLAS Data Challenges 7

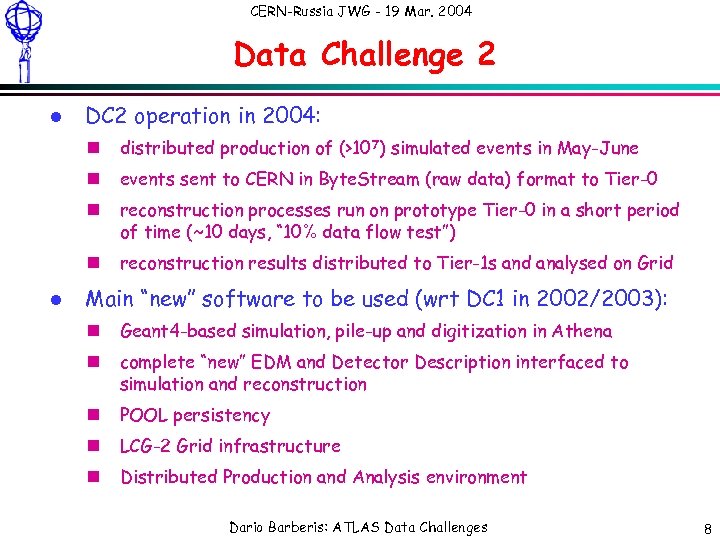

Data Challenge 2
- DC2 operation in 2004:
  - distributed production of >10^7 simulated events in May-June
  - events sent to the CERN Tier-0 in ByteStream (raw data) format
  - reconstruction processes run on the prototype Tier-0 in a short period of time (~10 days, "10% data flow test")
  - reconstruction results distributed to Tier-1s and analysed on the Grid
- Main "new" software to be used (w.r.t. DC1 in 2002/2003):
  - Geant4-based simulation, pile-up and digitization in Athena
  - complete "new" EDM and Detector Description interfaced to simulation and reconstruction
  - POOL persistency
  - LCG-2 Grid infrastructure
  - Distributed Production and Analysis environment

CERN-Russia JWG - 19 Mar. 2004 Data Challenge 2 l DC 2 operation in 2004: n n events sent to CERN in Byte. Stream (raw data) format to Tier-0 n reconstruction processes run on prototype Tier-0 in a short period of time (~10 days, “ 10% data flow test”) n l distributed production of (>107) simulated events in May-June reconstruction results distributed to Tier-1 s and analysed on Grid Main “new” software to be used (wrt DC 1 in 2002/2003): n Geant 4 -based simulation, pile-up and digitization in Athena n complete “new” EDM and Detector Description interfaced to simulation and reconstruction n POOL persistency n LCG-2 Grid infrastructure n Distributed Production and Analysis environment Dario Barberis: ATLAS Data Challenges 8

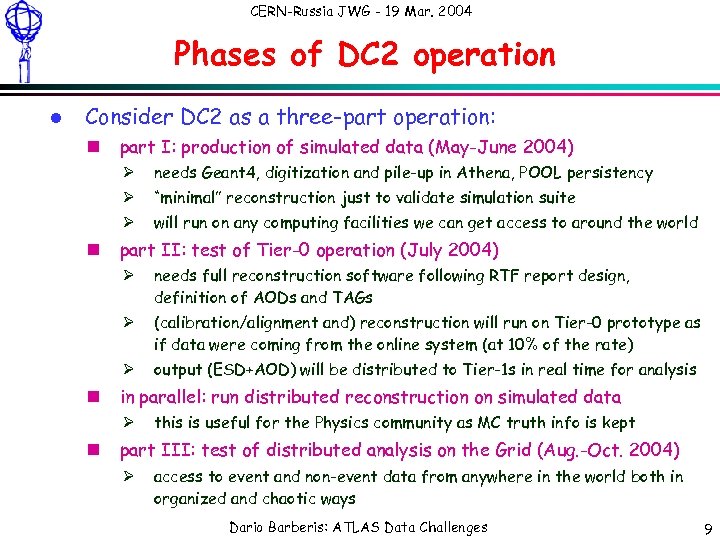

Phases of DC2 operation
- Consider DC2 as a three-part operation:
  - Part I: production of simulated data (May-June 2004)
    - needs Geant4, digitization and pile-up in Athena, POOL persistency
    - "minimal" reconstruction just to validate the simulation suite
    - will run on any computing facilities we can get access to around the world
  - Part II: test of Tier-0 operation (July 2004)
    - needs full reconstruction software following the RTF report design, definition of AODs and TAGs
    - (calibration/alignment and) reconstruction will run on the Tier-0 prototype as if data were coming from the online system (at 10% of the rate)
    - output (ESD+AOD) will be distributed to Tier-1s in real time for analysis
  - In parallel: run distributed reconstruction on simulated data
    - this is useful for the Physics community as MC truth info is kept
  - Part III: test of distributed analysis on the Grid (Aug.-Oct. 2004)
    - access to event and non-event data from anywhere in the world, in both organized and chaotic ways

CERN-Russia JWG - 19 Mar. 2004 Phases of DC 2 operation l Consider DC 2 as a three-part operation: n part I: production of simulated data (May-June 2004) Ø Ø “minimal” reconstruction just to validate simulation suite Ø n needs Geant 4, digitization and pile-up in Athena, POOL persistency will run on any computing facilities we can get access to around the world part II: test of Tier-0 operation (July 2004) Ø Ø (calibration/alignment and) reconstruction will run on Tier-0 prototype as if data were coming from the online system (at 10% of the rate) Ø n needs full reconstruction software following RTF report design, definition of AODs and TAGs output (ESD+AOD) will be distributed to Tier-1 s in real time for analysis in parallel: run distributed reconstruction on simulated data Ø n this is useful for the Physics community as MC truth info is kept part III: test of distributed analysis on the Grid (Aug. -Oct. 2004) Ø access to event and non-event data from anywhere in the world both in organized and chaotic ways Dario Barberis: ATLAS Data Challenges 9

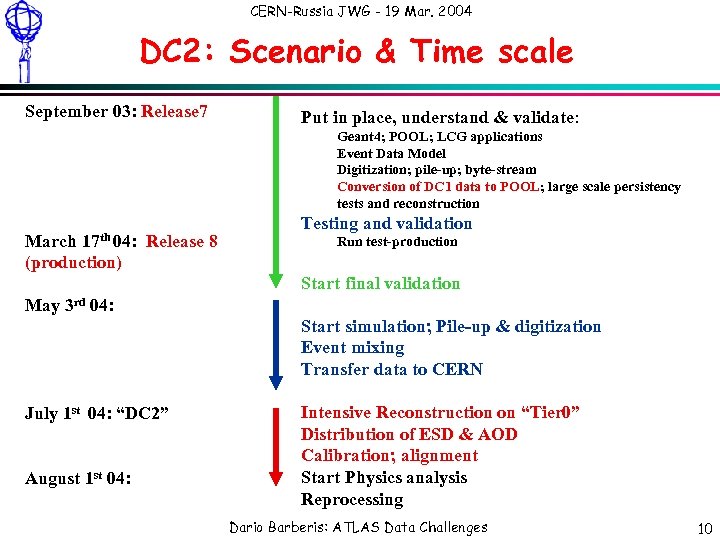

DC2: Scenario & Time scale
- September 2003: Release 7
  - Put in place, understand & validate: Geant4; POOL; LCG applications; Event Data Model; digitization; pile-up; byte-stream; conversion of DC1 data to POOL; large-scale persistency tests and reconstruction
- March 17th 2004: Release 8 (production)
  - Testing and validation
  - Run test-production
  - Start final validation
- May 3rd 2004: start simulation; pile-up & digitization; event mixing; transfer data to CERN
- July 1st 2004: "DC2"
  - Intensive reconstruction on "Tier-0"; distribution of ESD & AOD; calibration; alignment
- August 1st 2004: start physics analysis; reprocessing

CERN-Russia JWG - 19 Mar. 2004 DC 2: Scenario & Time scale September 03: Release 7 Put in place, understand & validate: Geant 4; POOL; LCG applications Event Data Model Digitization; pile-up; byte-stream Conversion of DC 1 data to POOL; large scale persistency tests and reconstruction 17 th 04: March (production) Release 8 Testing and validation Run test-production Start final validation May 3 rd 04: Start simulation; Pile-up & digitization Event mixing Transfer data to CERN July 1 st 04: “DC 2” August 1 st 04: Intensive Reconstruction on “Tier 0” Distribution of ESD & AOD Calibration; alignment Start Physics analysis Reprocessing Dario Barberis: ATLAS Data Challenges 10

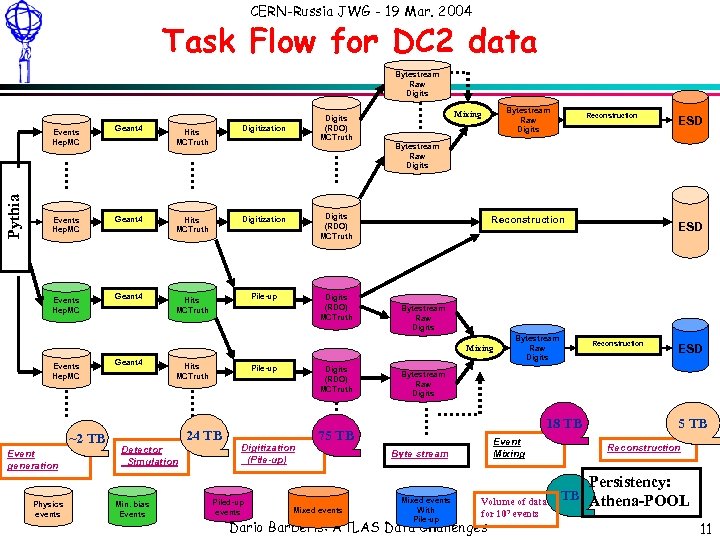

Task Flow for DC2 data (persistency: Athena-POOL; volume of data for 10^7 events)
- Event generation (Pythia; physics events and min. bias events, HepMC): ~2 TB
- Detector simulation (Geant4; Hits + MCTruth): 24 TB
- Digitization, with and without pile-up (Digits (RDO) + MCTruth): 75 TB
- Event mixing and byte-stream conversion (Bytestream raw digits; mixed events, with and without pile-up): 18 TB
- Reconstruction (ESD): 5 TB
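
Dividing each volume by the 10^7 events gives the average event size at each step. A quick sketch, assuming decimal units (1 TB = 10^12 bytes); the ~2 TB generation figure is itself approximate, and the notes slide states that events in POOL are somewhat larger than these DC1/Zebra-based estimates.

```python
# Average event size per processing step implied by the DC2 volumes for 10^7 events.
# Assumption: decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes).
N_EVENTS = 10**7
BYTES_PER_TB = 10**12
BYTES_PER_MB = 10**6

DC2_VOLUMES_TB = {
    "Event generation (HepMC)": 2,
    "Detector simulation (Hits + MCTruth)": 24,
    "Digitization / pile-up (RDO)": 75,
    "Byte-stream (mixed events)": 18,
    "Reconstruction (ESD)": 5,
}


def mb_per_event(volume_tb: float, n_events: int = N_EVENTS) -> float:
    """Average size of one event, in MB, for a sample of the given total volume."""
    return volume_tb * BYTES_PER_TB / n_events / BYTES_PER_MB


for step, volume in DC2_VOLUMES_TB.items():
    print(f"{step:38s} {mb_per_event(volume):5.2f} MB/event")
```

This gives roughly 0.2, 2.4, 7.5, 1.8 and 0.5 MB/event respectively, showing that the piled-up RDO stage dominates storage.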

CERN-Russia JWG - 19 Mar. 2004 Task Flow for DC 2 data Bytestream Raw Digits Events Hep. MC Pythia Events Hep. MC Geant 4 Digits (RDO) MCTruth Hits MCTruth Digitization Geant 4 Hits MCTruth Digitization Digits (RDO) MCTruth Geant 4 Hits MCTruth Pile-up Digits (RDO) MCTruth Bytestream Raw Digits Mixing Events Hep. MC ~2 TB Event generation Physics events Hits MCTruth Pile-up 24 TB Detector Simulation Min. bias Events Digits (RDO) MCTruth Digitization (Pile-up) Piled-up events Reconstruction ESD Bytestream Raw Digits 18 TB 75 TB Mixed events ESD Bytestream Raw Digits Mixing Geant 4 Reconstruction Event Mixing Byte stream Mixed events With Pile-up Volume of data for 107 events Dario Barberis: ATLAS Data Challenges 5 TB Reconstruction Persistency: TB Athena-POOL 11

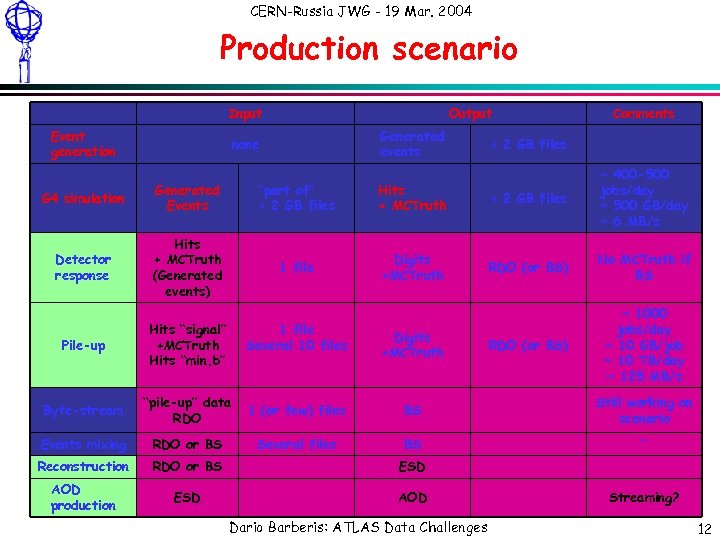

Production scenario

| Process | Input | Output | Comments |
|---|---|---|---|
| Event generation | none | Generated events (< 2 GB files) | |
| G4 simulation | Generated events ("part of" < 2 GB files) | Hits + MCTruth (< 2 GB files) | ~400-500 jobs/day; ~500 GB/day; ~6 MB/s |
| Detector response | Hits + MCTruth (Generated events), 1 file | Digits + MCTruth, RDO (or BS) | No MCTruth if BS |
| Pile-up | Hits "signal" + MCTruth (1 file); Hits "min. b" (several 10 files) | Digits + MCTruth, RDO (or BS) | ~1000 jobs/day; ~10 GB/job; ~10 TB/day; ~125 MB/s |
| Byte-stream | "Pile-up" data RDO, 1 (or few) files | BS | Still working on scenario |
| Events mixing | RDO or BS, several files | BS | Still working on scenario |
| Reconstruction | RDO or BS | ESD | |
| AOD production | ESD | AOD | Streaming? |
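
The MB/s figures in the comments column follow from the daily volumes. A minimal sketch, assuming decimal units and a uniform rate around the clock: it gives ~5.8 MB/s for simulation and ~116 MB/s for pile-up, so the slide's ~6 MB/s and ~125 MB/s are rounded estimates.

```python
# Convert the daily data volumes quoted for DC2 production into sustained rates.
# Assumptions: decimal units and a flat rate spread over 24 hours.
SECONDS_PER_DAY = 24 * 60 * 60  # 86 400 s
GB = 10**9
TB = 10**12


def mb_per_second(bytes_per_day: float) -> float:
    """Average transfer rate in MB/s for a given volume per day."""
    return bytes_per_day / SECONDS_PER_DAY / 10**6


# G4 simulation: ~500 GB/day of Hits + MCTruth.
simulation_rate = mb_per_second(500 * GB)

# Pile-up: ~1000 jobs/day at ~10 GB per job -> ~10 TB/day.
pileup_volume_per_day = 1000 * 10 * GB
pileup_rate = mb_per_second(pileup_volume_per_day)

print(f"simulation: {simulation_rate:.1f} MB/s")
print(f"pile-up: {pileup_volume_per_day / TB:.0f} TB/day, {pileup_rate:.0f} MB/s")
```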

CERN-Russia JWG - 19 Mar. 2004 Production scenario Input Event generation Output Generated events none Comments < 2 GB files G 4 simulation Generated Events “part of” < 2 GB files Hits + MCTruth < 2 GB files ~ 400 -500 jobs/day ~ 500 GB/day ~ 6 MB/s Detector response Hits + MCTruth (Generated events) 1 file Digits +MCTruth RDO (or BS) No MCTruth if BS RDO (or BS) ~ 1000 jobs/day ~ 10 GB/job ~ 10 TB/day ~ 125 MB/s Pile-up Hits “signal” +MCTruth Hits “min. b” 1 file Several 10 files Byte-stream “pile-up” data RDO 1 (or few) files BS Still working on scenario Events mixing RDO or BS Several files BS “ Reconstruction RDO or BS ESD AOD production ESD AOD Digits +MCTruth Dario Barberis: ATLAS Data Challenges Streaming? 12

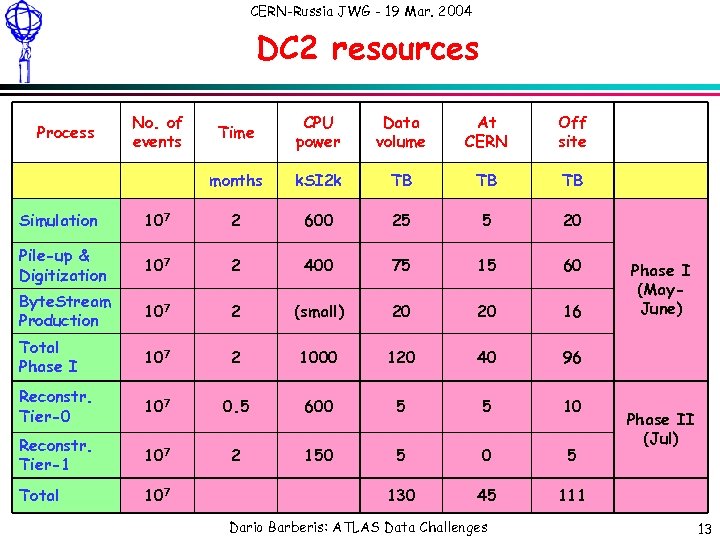

DC2 resources

| Process | No. of events | Time (months) | CPU power (kSI2k) | Data volume (TB) | At CERN (TB) | Off site (TB) |
|---|---|---|---|---|---|---|
| Simulation | 10^7 | 2 | 600 | 25 | 5 | 20 |
| Pile-up & Digitization | 10^7 | 2 | 400 | 75 | 15 | 60 |
| ByteStream Production | 10^7 | 2 | (small) | 20 | 20 | 16 |
| Total Phase I (May-June) | 10^7 | 2 | 1000 | 120 | 40 | 96 |
| Reconstr. Tier-0 (Phase II, July) | 10^7 | 0.5 | 600 | 5 | 5 | 10 |
| Reconstr. Tier-1 | 10^7 | 2 | 150 | 5 | | 5 |
| Total | 10^7 | | | 130 | 45 | 111 |
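
The totals can be recomputed from the individual rows, and the "At CERN" entries for the simulation-type rows checked against the 20% CERN share stated in the notes. In this sketch the "(small)" CPU entry is counted as 0 and the empty at-CERN cell of the Tier-1 row as 0 TB; both are assumptions made to keep the arithmetic explicit.

```python
# Recompute the totals of the DC2 resource table from its individual rows.
# Columns: (CPU kSI2k, data volume TB, at CERN TB, off site TB).
# Assumptions: "(small)" CPU counted as 0; empty Tier-1 at-CERN cell taken as 0.
ROWS = {
    "Simulation": (600, 25, 5, 20),
    "Pile-up & Digitization": (400, 75, 15, 60),
    "ByteStream Production": (0, 20, 20, 16),
    "Reconstr. Tier-0": (600, 5, 5, 10),
    "Reconstr. Tier-1": (150, 5, 0, 5),
}
PHASE_I = ["Simulation", "Pile-up & Digitization", "ByteStream Production"]
CERN_SHARE_PERCENT = 20  # share of simulation done at CERN (resources notes)


def column_sums(names):
    """Sum each numeric column over the named rows."""
    return tuple(sum(ROWS[name][col] for name in names) for col in range(4))


phase_i_totals = column_sums(PHASE_I)
overall_totals = column_sums(ROWS)  # iterating a dict yields its keys
print("Phase I (kSI2k, TB, TB at CERN, TB off site):", phase_i_totals)
print("All rows:", overall_totals)

# The CERN column of the simulation-type rows is 20% of the produced volume.
for name in ("Simulation", "Pile-up & Digitization"):
    _, volume, at_cern, _ = ROWS[name]
    assert at_cern == volume * CERN_SHARE_PERCENT / 100
```

The Phase I sums reproduce the table's 1000 kSI2k, 120 TB, 40 TB and 96 TB, and the data-volume columns over all rows reproduce 130, 45 and 111 TB.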

DC2 resources - notes
- CPU needs are now based on Geant4 processing times
- We assume 20% of simulation is done at CERN
- All data in ByteStream format are copied to CERN for the Tier-0 test (Phase II)
- Event sizes (except ByteStream format) are based on the DC1/Zebra format: events in POOL are a little larger (~1.5 times for simulation)
- Reconstruction output is exported in 2 copies from the CERN Tier-0
- Output of parallel reconstruction on Tier-1s, including links to MC truth, remains local and is accessed for analysis through the Grid(s)

DC2: Grid & Production tools
- We plan to use:
  - 3 Grid flavors (LCG-2; Grid3+; NorduGrid)
  - (perhaps) "batch" systems (LSF; …)
  - Automated production system
    - New production DB (Oracle)
    - Supervisor-executer component model
      - Windmill supervisor project
      - Executers for each Grid and LSF
  - Data management system
    - Don Quijote DMS project
    - Successor of Magda
    - … but uses native catalogs
  - AMI for bookkeeping
    - Moving to web services
    - Integrated with POOL

CERN-Russia JWG - 19 Mar. 2004 DC 2: Grid & Production tools l We foresee to use: n 3 Grid flavors (LCG-2; Grid 3+; Nordu. Grid) n (perhaps) “batch” systems (LSF; …) n Automated production system Ø New production DB (Oracle) Ø Supervisor-executer component model l Windmill supervisor project l Executers for each Grid and LSF n Data management system Ø Don Quijote DMS project Ø Successor of Magda Ø … but uses native catalogs n AMI for bookkeeping Ø Going to web services Ø Integrated to POOL Dario Barberis: ATLAS Data Challenges 15

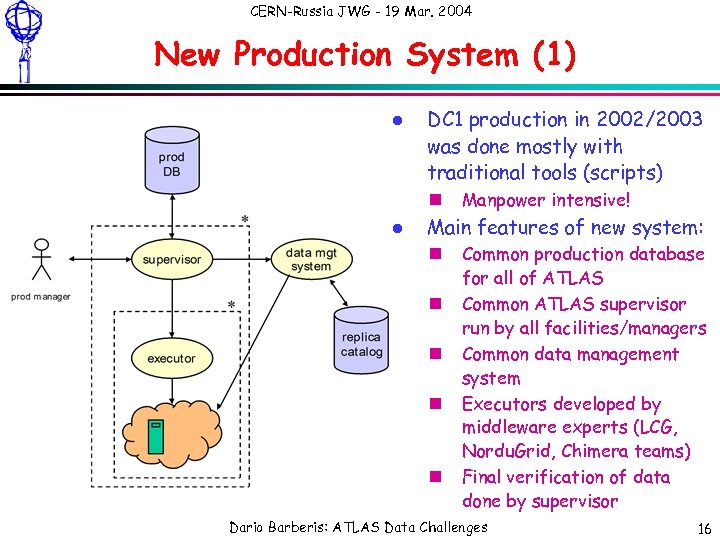

New Production System (1)
- DC1 production in 2002/2003 was done mostly with traditional tools (scripts)
  - Manpower intensive!
- Main features of the new system:
  - Common production database for all of ATLAS
  - Common ATLAS supervisor run by all facilities/managers
  - Common data management system
  - Executors developed by middleware experts (LCG, NorduGrid, Chimera teams)
  - Final verification of data done by the supervisor

New Production System (2)
- Task = [job]*, Dataset = [partition]*
- The production database holds each task (dataset) with its task transformation definition, physics signature and location hint, and each job (partition) with its partition transformation definition (executable name, release version, signature), location hint and job run info; a human-intervention path is also shown
- Supervisors 1-4 pass jobs to the executers: US Grid executer (Chimera), LCG executer and NG executer (each via a resource broker, RB), and LSF executer (local batch); all use the common Data Management System
- Names shown in the diagram: Luc Goossens, Kaushik De, Rob Gardner, Alessandro De Salvo, Oxana Smirnova
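
The data model (a task is a list of jobs, its dataset a list of partitions) and the supervisor-executer split can be illustrated with a toy, in-memory model. This is not the Windmill or production-DB interface: every class, field, executer key and the release tag "8.0.0" below are hypothetical. It shows one job per partition, dispatch by location hint, resubmission of failed jobs only, and a final verification step by the supervisor.

```python
# Toy model of the DC2 production-system data model and supervisor-executer split.
# All class, field and executer names are hypothetical illustrations.
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Job:
    """One job produces one partition of its task's output dataset."""
    partition: str
    executable: str          # transformation: executable name
    release: str             # transformation: software release version
    location_hint: str = ""  # preferred facility; empty means "any"
    status: str = "defined"


@dataclass
class Task:
    """Task = [job]*, and the task's Dataset = [partition]*."""
    dataset: str
    jobs: List[Job] = field(default_factory=list)

    def partitions(self) -> List[str]:
        return [job.partition for job in self.jobs]


@dataclass
class Executer:
    """Stand-in for a Grid- or batch-specific executer (LCG, NorduGrid, LSF, ...)."""
    name: str
    broken_partitions: Set[str] = field(default_factory=set)  # simulated failures
    submitted: List[str] = field(default_factory=list)

    def run(self, job: Job) -> bool:
        self.submitted.append(job.partition)
        return job.partition not in self.broken_partitions


class Supervisor:
    """Takes jobs from a task and hands each one to an executer."""

    def __init__(self, executers: Dict[str, Executer], default: str):
        self.executers = executers
        self.default = default

    def process(self, task: Task) -> None:
        for job in task.jobs:
            if job.status == "done":
                continue  # already produced: do not resubmit
            executer = self.executers.get(job.location_hint, self.executers[self.default])
            job.status = "done" if executer.run(job) else "failed"

    def verify(self, task: Task) -> bool:
        """Final verification: every partition of the dataset has been produced."""
        return all(job.status == "done" for job in task.jobs)


executers = {
    "LCG": Executer("LCG"),
    "NG": Executer("NG", broken_partitions={"part001"}),
    "LSF": Executer("LSF"),
}
task = Task("dc2.example.simul", [  # hypothetical dataset name and release tag
    Job(f"part{i:03d}", "simulate", "8.0.0", hint)
    for i, hint in enumerate(["LCG", "NG", ""])
])
supervisor = Supervisor(executers, default="LSF")

supervisor.process(task)
print(supervisor.verify(task))            # first pass: part001 failed
executers["NG"].broken_partitions.clear()
supervisor.process(task)                  # only the failed job is resubmitted
print(supervisor.verify(task))
```

Keeping the executers behind one small `run` method mirrors the idea on the slides that middleware experts supply one executer per Grid flavour while a common supervisor drives them all.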

CERN-Russia JWG - 19 Mar. 2004 New Production System (2) Task = [job]* Dataset = [partition]* Luc Goossens JOB DESCRIPTION Location Hint (Task) Data Management System Task Transf. Definition Task (Dataset) Luc Goossens + physics signature Human intervention Job Run Info Kaushik De Supervisor 1 Location Hint (Job) Supervisor 2 Rob Gardner US Grid Executer Partition Executable name Transf. Release version Definition signature Job (Partition) Supervisor 3 Alessandro LCG De Salvo Executer Chimera NG Executer RB Supervisor 4 Oxana Smirnova LSF Executer Luc Goossens RB US Grid LCG NG Dario Barberis: ATLAS Data Challenges Local Batch 17

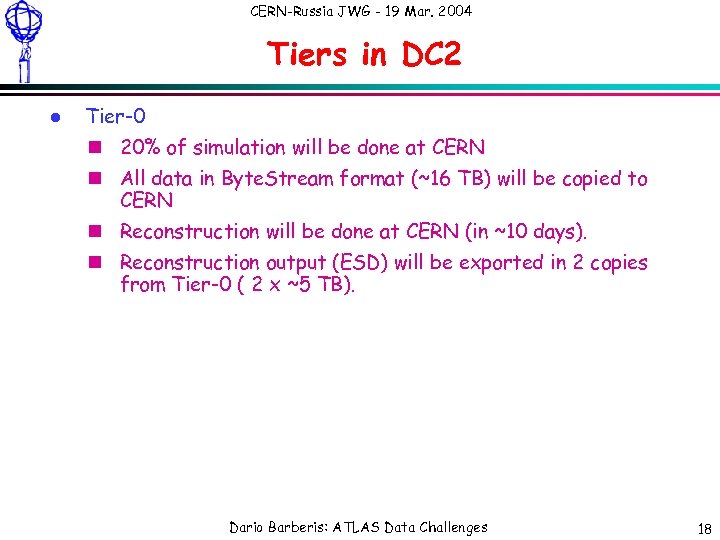

Tiers in DC2
- Tier-0
  - 20% of simulation will be done at CERN
  - All data in ByteStream format (~16 TB) will be copied to CERN
  - Reconstruction will be done at CERN (in ~10 days)
  - Reconstruction output (ESD) will be exported in 2 copies from Tier-0 (2 × ~5 TB)
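
The Tier-0 figures imply average rates that the prototype has to sustain. A back-of-the-envelope sketch, assuming the 10^7 events and ~16 TB of ByteStream are processed uniformly over the ~10 days and that the ESD export happens in the same window (the slide does not say when the export takes place); decimal units throughout.

```python
# Rates implied by the Tier-0 part of DC2, assuming the work is spread
# uniformly over the ~10 days quoted on the slide and decimal units.
SECONDS_PER_DAY = 86_400
N_EVENTS = 10**7          # DC2 sample size
RECO_DAYS = 10            # "~10 days" of reconstruction at CERN
BYTESTREAM_TB = 16        # ByteStream data copied to CERN
ESD_TB = 5                # ESD produced by Tier-0 reconstruction
ESD_COPIES = 2            # ESD exported in 2 copies

window_s = RECO_DAYS * SECONDS_PER_DAY
event_rate_hz = N_EVENTS / window_s
input_rate_mb_s = BYTESTREAM_TB * 10**12 / window_s / 10**6
export_tb = ESD_COPIES * ESD_TB
export_rate_mb_s = export_tb * 10**12 / window_s / 10**6

print(f"reconstruction rate: {event_rate_hz:.1f} events/s")
print(f"ByteStream read: {input_rate_mb_s:.1f} MB/s")
print(f"ESD export: {export_tb} TB total, {export_rate_mb_s:.1f} MB/s")
```

The 10 TB exported in total matches the "Off site" entry for Tier-0 reconstruction in the DC2 resources table.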

CERN-Russia JWG - 19 Mar. 2004 Tiers in DC 2 l Tier-0 n 20% of simulation will be done at CERN n All data in Byte. Stream format (~16 TB) will be copied to CERN n Reconstruction will be done at CERN (in ~10 days). n Reconstruction output (ESD) will be exported in 2 copies from Tier-0 ( 2 x ~5 TB). Dario Barberis: ATLAS Data Challenges 18

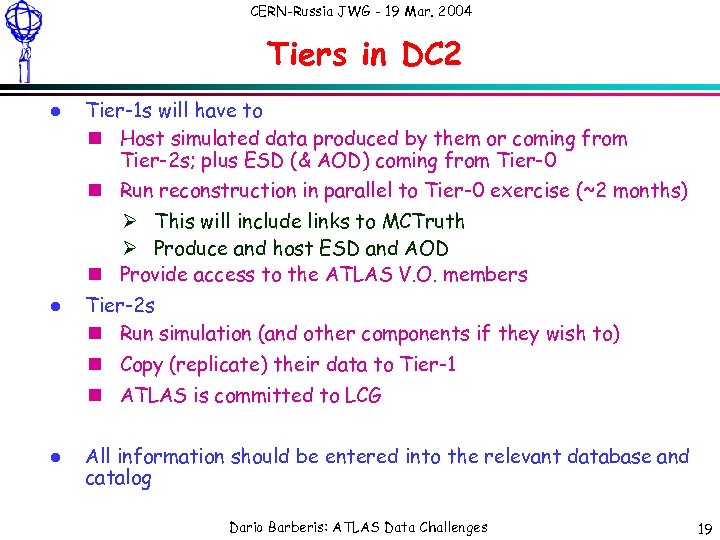

Tiers in DC2
- Tier-1s will have to:
  - Host simulated data produced by them or coming from Tier-2s, plus ESD (& AOD) coming from Tier-0
  - Run reconstruction in parallel to the Tier-0 exercise (~2 months)
    - This will include links to MCTruth
    - Produce and host ESD and AOD
  - Provide access to the ATLAS V.O. members
- Tier-2s will have to:
  - Run simulation (and other components if they wish to)
  - Copy (replicate) their data to Tier-1
- ATLAS is committed to LCG
- All information should be entered into the relevant database and catalog

CERN-Russia JWG - 19 Mar. 2004 Tiers in DC 2 l l l Tier-1 s will have to n Host simulated data produced by them or coming from Tier-2 s; plus ESD (& AOD) coming from Tier-0 n Run reconstruction in parallel to Tier-0 exercise (~2 months) Ø This will include links to MCTruth Ø Produce and host ESD and AOD n Provide access to the ATLAS V. O. members Tier-2 s n Run simulation (and other components if they wish to) n Copy (replicate) their data to Tier-1 n ATLAS is committed to LCG All information should be entered into the relevant database and catalog Dario Barberis: ATLAS Data Challenges 19

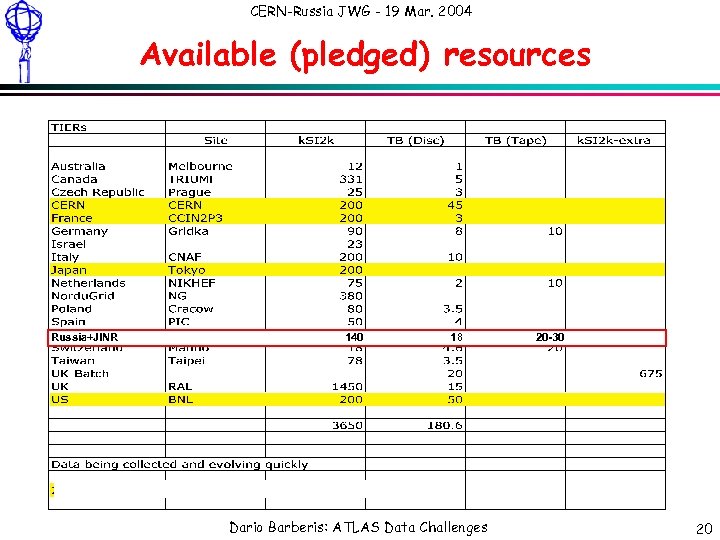

Available (pledged) resources
- Russia+JINR: 140, 18, 20-30

CERN-Russia JWG - 19 Mar. 2004 Available (pledged) resources Russia+JINR 140 18 Dario Barberis: ATLAS Data Challenges 20 -30 20

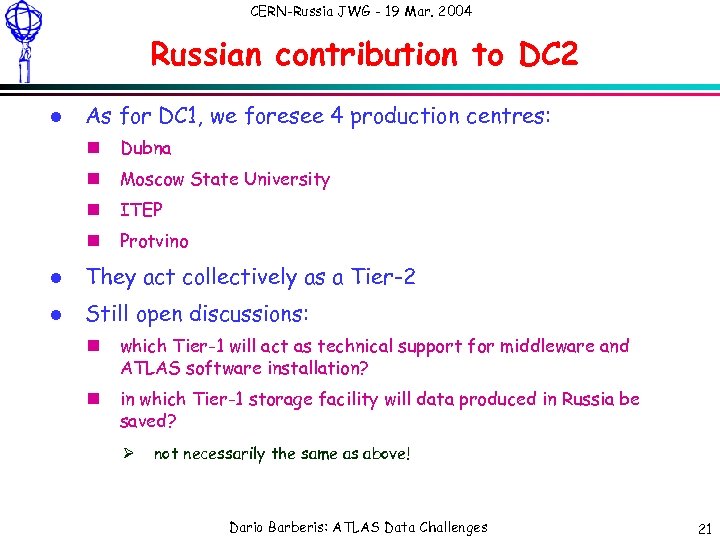

Russian contribution to DC2
- As for DC1, we foresee 4 production centres:
  - Dubna
  - Moscow State University
  - ITEP
  - Protvino
- They act collectively as a Tier-2
- Still open for discussion:
  - which Tier-1 will act as technical support for middleware and ATLAS software installation?
  - in which Tier-1 storage facility will data produced in Russia be saved?
    - not necessarily the same as above!

CERN-Russia JWG - 19 Mar. 2004 Russian contribution to DC 2 l As for DC 1, we foresee 4 production centres: n Dubna n Moscow State University n ITEP n Protvino l They act collectively as a Tier-2 l Still open discussions: n which Tier-1 will act as technical support for middleware and ATLAS software installation? n in which Tier-1 storage facility will data produced in Russia be saved? Ø not necessarily the same as above! Dario Barberis: ATLAS Data Challenges 21

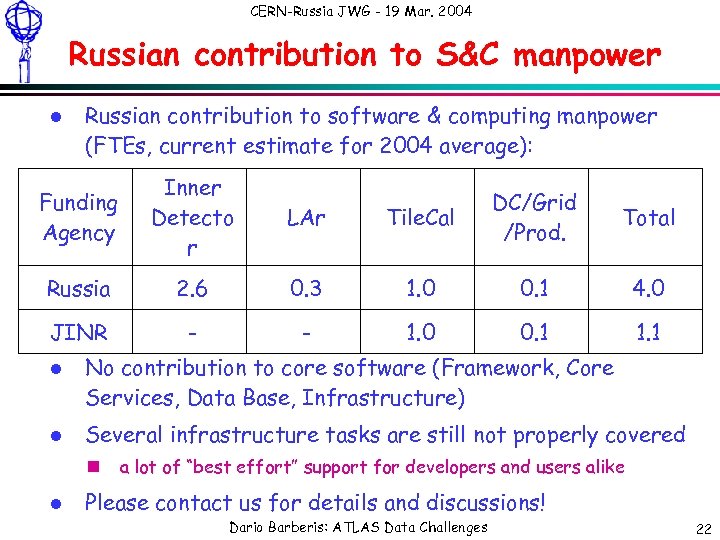

Russian contribution to S&C manpower
- Russian contribution to software & computing manpower (FTEs, current estimate for 2004 average):

| Funding Agency | Inner Detector | LAr | TileCal | DC/Grid/Prod. | Total |
|---|---|---|---|---|---|
| Russia | 2.6 | 0.3 | 1.0 | 0.1 | 4.0 |
| JINR | - | - | 1.0 | 0.1 | 1.1 |

- No contribution to core software (Framework, Core Services, Data Base, Infrastructure)
- Several infrastructure tasks are still not properly covered
  - a lot of "best effort" support for developers and users alike
- Please contact us for details and discussions!