795c7c1305bfe447c1d0fa184beacdcc.ppt

- Number of slides: 23

Building a Regional Centre: A few ideas & a personal view
CHEP 2000, Padova, 10 February 2000
Les Robertson, CERN/IT, les.robertson@cern.ch

Summary
- LHC regional computing centre topology
- Some capacity and performance parameters
- From components to computing fabrics
- Remarks about regional centres
- Policies & sociology
- Conclusions

Why Regional Centres?
- Bring computing facilities closer to home
  - final analysis on a compact cluster in the physics department
- Exploit established computing expertise & infrastructure
- Reduce dependence on links to CERN
  - full ESD available nearby, through a fat, fast, reliable network link
- Tap funding sources not otherwise available to HEP
- Devolve control over resource allocation
  - national interests?
  - regional interests?
  - at the expense of physics interests?

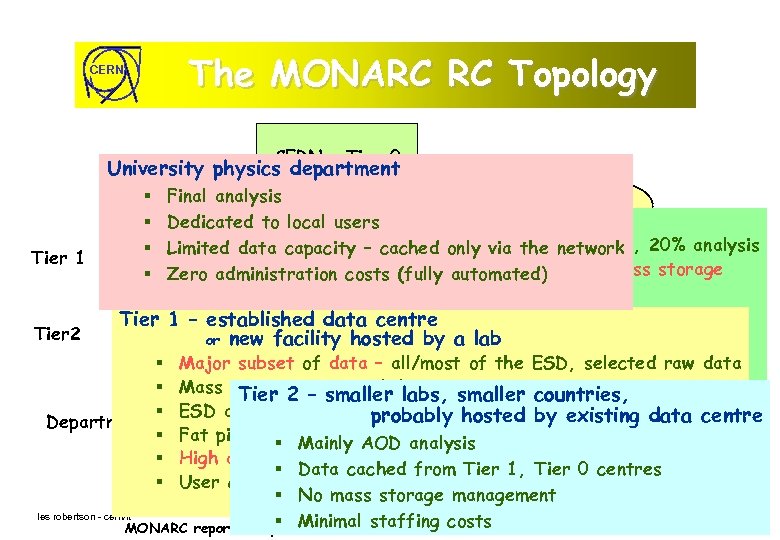

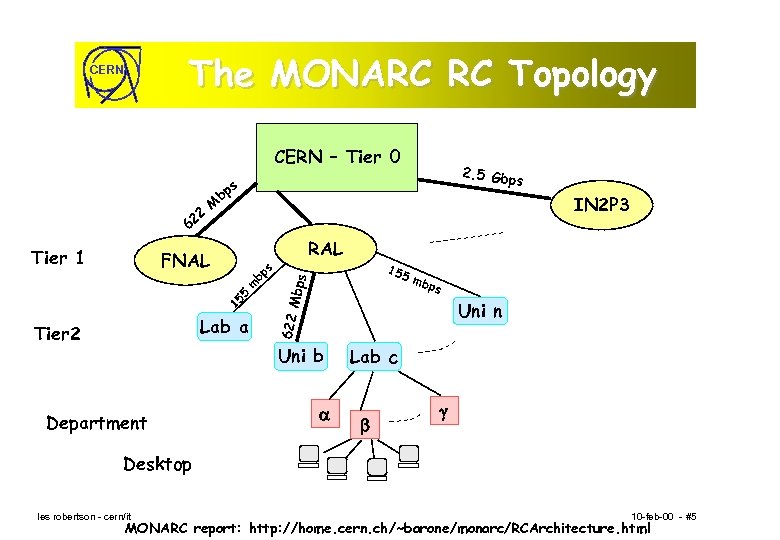

The MONARC RC Topology

[Diagram: CERN (Tier 0) at the top; Tier 1 centres IN2P3, RAL and FNAL; Tier 2 centres Lab a, Uni b, Lab c and Uni n; university physics departments; desktops. Link speeds shown: 2.5 Gbps, 622 Mbps, 155 Mbps.]

CERN, Tier 0
- Data recording, reconstruction, 20% analysis
- Full data sets on permanent mass storage: raw, ESD, simulated data
- Hefty WAN capability
- Range of export-import media
- 24 x 7 availability

Tier 1: established data centre or new facility hosted by a lab
- Major subset of data: all/most of the ESD, selected raw data
- Mass storage, managed data operation
- ESD analysis, AOD generation, major analysis capacity
- Fat pipe to CERN
- High availability
- User consultancy: Library & Collaboration Software support

Tier 2: smaller labs, smaller countries, probably hosted by existing data centre
- Mainly AOD analysis
- Data cached from Tier 1, Tier 0 centres
- No mass storage management
- Minimal staffing costs

University physics department
- Final analysis
- Dedicated to local users
- Limited data capacity: cached only via the network
- Zero administration costs (fully automated)

MONARC report: http://home.cern.ch/~barone/monarc/RCArchitecture.html

The MONARC RC Topology

[Diagram only: CERN (Tier 0); Tier 1 centres IN2P3, RAL and FNAL; Tier 2 centres Lab a, Uni b, Lab c and Uni n; department; desktop. Link speeds shown: 2.5 Gbps, 622 Mbps, 155 Mbps.]

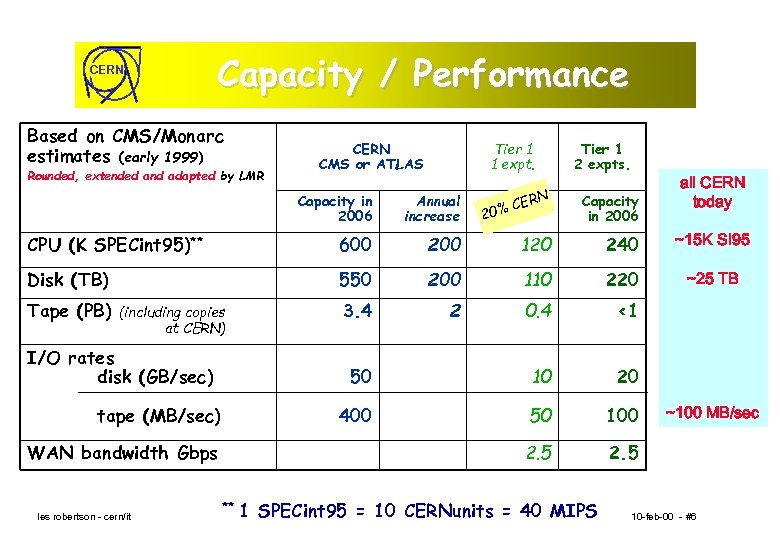

Capacity / Performance
Based on CMS/MONARC estimates (early 1999); rounded, extended and adapted by LMR.

| | CERN, all expts. (2006) | CERN, CMS or ATLAS (2006) | Tier 1, 1 expt. (2006) | Tier 1, 2 expts. (2006) | CERN today |
|---|---|---|---|---|---|
| CPU (K SPECint95)** | 600 | 200 | 120 | 240 | ~15 |
| Disk (TB) | 550 | 200 | 110 | 220 | ~25 |
| Tape (PB) (including copies at CERN) | 3.4 | 2 | 0.4 | <1 | |
| Disk I/O (GB/sec) | 50 | | 10 | 20 | |
| Tape I/O (MB/sec) | 400 | | 50 | 100 | |
| WAN bandwidth (Gbps) | | | | 2.5 | |

** 1 SPECint95 = 10 CERN units = 40 MIPS

Values on the original slide whose table position cannot be recovered: "Annual increase", 20%, ~100 MB/sec.
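The gap between "CERN today" and the 2006 requirement implies steep growth. As a rough check (our arithmetic, not a figure from the slide), here is a minimal Python sketch of the compound annual growth rate, assuming a six-year span from early 2000 to 2006 and using the CERN, all-experiments column:

```python
# Compound annual growth needed to go from CERN's capacity "today" (early 2000)
# to the 2006 requirement. Assumption: a six-year span, 2000 -> 2006.

def annual_growth(today: float, target: float, years: int) -> float:
    """Constant yearly rate r such that today * (1 + r) ** years == target."""
    return (target / today) ** (1.0 / years) - 1.0

YEARS = 6  # assumed span between "today" and 2006

cpu_growth = annual_growth(today=15, target=600, years=YEARS)   # K SPECint95
disk_growth = annual_growth(today=25, target=550, years=YEARS)  # TB

print(f"CPU:  {cpu_growth:.0%} per year")   # about 85%
print(f"Disk: {disk_growth:.0%} per year")  # about 67%
```

Compound growth is just one convenient way to read the gap; the slide does not say how the capacity would be phased in.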

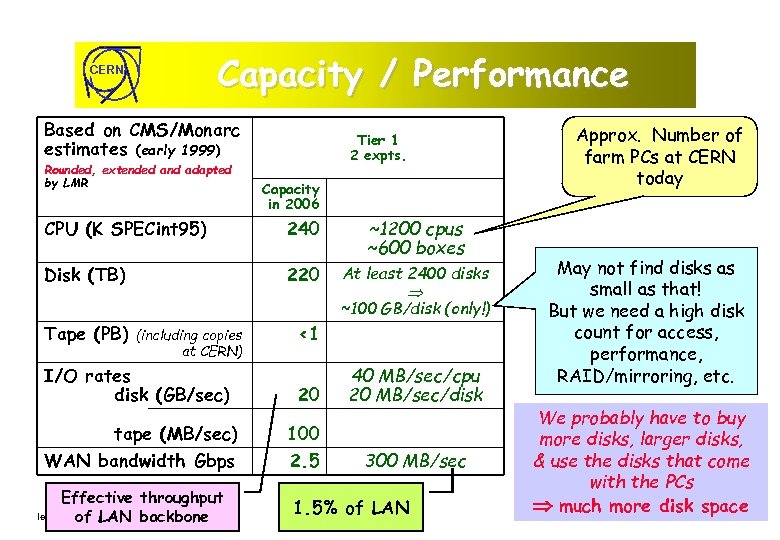

Capacity / Performance: a 2-experiment Tier 1 centre
Based on CMS/MONARC estimates (early 1999); rounded, extended and adapted by LMR.

| | Tier 1, 2 expts.: capacity in 2006 | Notes |
|---|---|---|
| CPU (K SPECint95) | 240 | ~1,200 CPUs, ~600 boxes (approx. the number of farm PCs at CERN today) |
| Disk (TB) | 220 | At least 2,400 disks at ~100 GB/disk (only!) |
| Tape (PB) (including copies at CERN) | <1 | |
| Disk I/O (GB/sec) | 20 | Backbone of LAN; effective throughput 40 MB/sec/CPU, 20 MB/sec/disk |
| Tape I/O (MB/sec) | 100 | |
| WAN bandwidth (Gbps) | 2.5 | 300 MB/sec, 1.5% of LAN |

We may not find disks as small as that! But we need a high disk count for access, performance, RAID/mirroring, etc.
We probably have to buy more disks and larger disks, and use the disks that come with the PCs: much more disk space.
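The annotations on this slide follow from simple arithmetic. A minimal Python sketch reproducing them, assuming 200 SPECint95 per CPU and dual-CPU boxes (the figures used for the 2005 building blocks) and 100 GB per disk; the variable names are illustrative:

```python
import math

# Requirement for a 2-experiment Tier 1 centre in 2006 (from the table).
CPU_KSI95 = 240        # K SPECint95
DISK_TB = 220          # TB
DISK_IO_GBYTES = 20    # aggregate disk I/O, GB/s (~LAN backbone)
WAN_GBITS = 2.5        # WAN bandwidth, Gbit/s

# Assumptions, not all stated on this slide.
SI95_PER_CPU = 200     # per-CPU performance of a 2005-era PC
CPUS_PER_BOX = 2       # dual-processor boxes
GB_PER_DISK = 100      # disk size

cpus = math.ceil(CPU_KSI95 * 1000 / SI95_PER_CPU)
boxes = math.ceil(cpus / CPUS_PER_BOX)
disks = math.ceil(DISK_TB * 1000 / GB_PER_DISK)   # capacity-only lower bound
wan_mbytes = WAN_GBITS * 1000 / 8                 # Gbit/s -> MB/s
wan_share_of_lan = wan_mbytes / (DISK_IO_GBYTES * 1000)

print(cpus, boxes, disks)
print(f"WAN ~{wan_mbytes:.1f} MB/s = {wan_share_of_lan:.2%} of the LAN backbone")
```

Capacity alone gives 2,200 disks; the slide's "at least 2,400" leaves some margin, consistent with its remark that a high disk count is needed for access, performance and mirroring. The exact WAN figure, 312.5 MB/s or about 1.6% of a 20 GB/s backbone, is what the slide rounds to 300 MB/s and 1.5%.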

Building a Regional Centre
- Commodity components are just fine for HEP
  - Masses of experience with inexpensive farms
- LAN technology is going the right way
  - Inexpensive high performance PC attachments
  - Compatible with hefty backbone switches
- Good ideas for improving automated operation and management

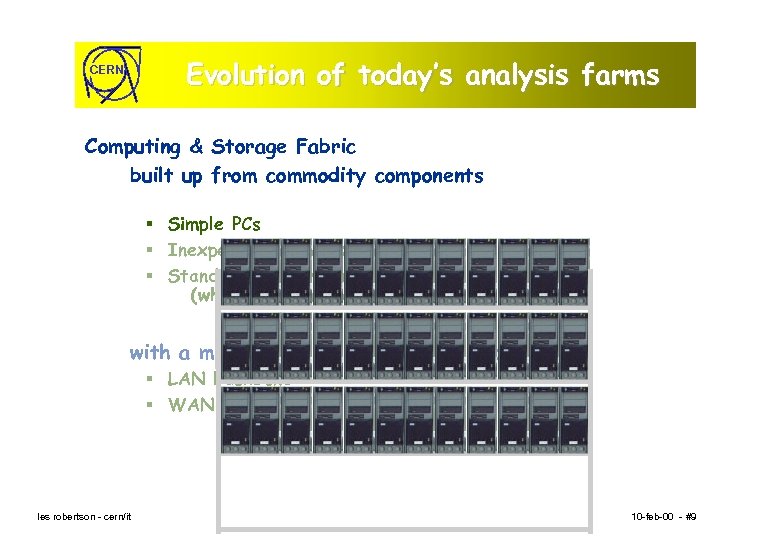

Evolution of today’s analysis farms
Computing & Storage Fabric built up from commodity components
- Simple PCs
- Inexpensive network-attached disk
- Standard network interface (whatever Ethernet happens to be in 2006)

with a minimum of high(er)-end components
- LAN backbone
- WAN connection

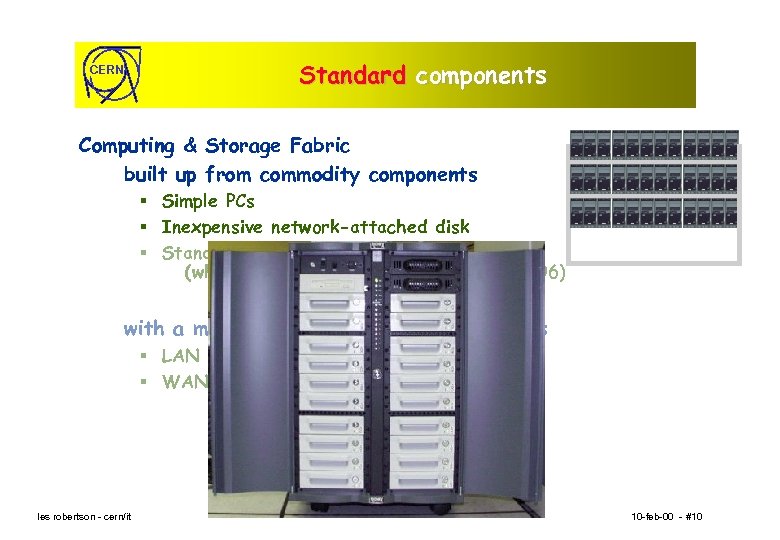

Standard components

HEP’s not special, just more cost conscious

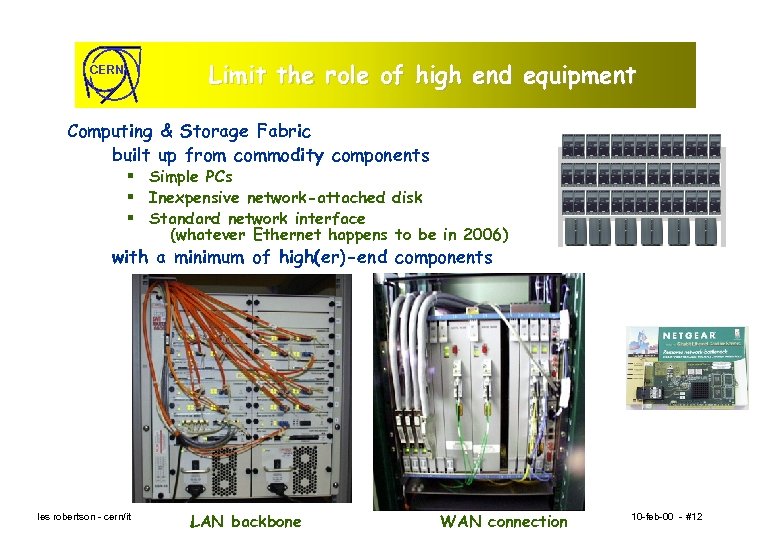

Limit the role of high end equipment

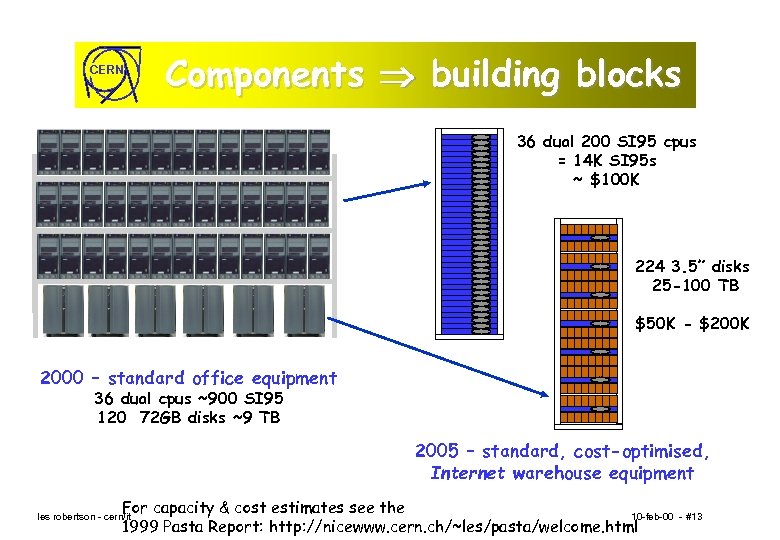

Components: building blocks
- 2000, standard office equipment:
  - 36 dual CPUs, ~900 SI95
  - 120 × 72 GB disks, ~9 TB
- 2005, standard, cost-optimised, Internet warehouse equipment:
  - 36 dual 200 SI95 CPUs = 14K SI95, ~$100K
  - 224 3.5” disks, 25-100 TB, $50K-$200K

For capacity & cost estimates see the 1999 Pasta Report: http://nicewww.cern.ch/~les/pasta/welcome.html

The Physics Department System
- Two 19” racks & $200K
  - CPU: 14K SI95 (10% of a Tier 1 centre)
  - Disk: 50 TB (50% of a Tier 1 centre)
- Rather comfortable analysis machine
- Small Regional Centres are not going to be competitive
- Need to rethink the storage capacity at the Tier 1 centres
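A quick check of the percentages, assuming they compare against the 1-experiment Tier 1 column of the capacity table (120K SI95, 110 TB) and a CPU rack of 36 dual-CPU boxes at 200 SI95 per CPU:

```python
# Physics-department system (two 19" racks, ~$200K) versus a 1-experiment
# Tier 1 centre. Assumption: the slide's percentages refer to that column.

DEPT_CPU_KSI95 = 36 * 2 * 200 / 1000   # 36 dual-CPU boxes, 200 SI95 per CPU
DEPT_DISK_TB = 50

TIER1_CPU_KSI95 = 120   # Tier 1, 1 expt., capacity in 2006
TIER1_DISK_TB = 110

cpu_fraction = DEPT_CPU_KSI95 / TIER1_CPU_KSI95
disk_fraction = DEPT_DISK_TB / TIER1_DISK_TB

print(f"CPU:  {DEPT_CPU_KSI95:.1f} K SI95 = {cpu_fraction:.0%} of a Tier 1")
print(f"Disk: {DEPT_DISK_TB} TB = {disk_fraction:.0%} of a Tier 1")
```

The results, about 12% and 45%, round to the slide's 10% and 50%: a department-sized system already holds close to half the disk of a Tier 1 centre.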

Tier 1, Tier 2 RCs, CERN
A few general remarks:
- A major motivation for the RCs is that we are hard pressed to finance the scale of computing needed for LHC
- We need to start now to work together towards minimising costs
- Standardisation among experiments, regional centres and CERN, so that we can use the same tools and practices to ...
- ... Automate everything
  - Operation & monitoring
  - Disk & data management
  - Work scheduling
  - Data export/import (prefer the network to mail)
- ... in order to minimise operation and staffing
  - Trade off mass storage for disk + network bandwidth
  - Acquire contingency capacity rather than fighting bottlenecks
  - Outsource what you can (at a sensible price)

Keep it simple. Work together.

The middleware
The issues are:
- integration of this amorphous collection of Regional Centres
  - Data
  - Workload
  - Network performance
  - application monitoring
  - quality of data analysis service
- Leverage the “Grid” developments
  - Extending Meta-computing to Mass-computing
  - Emphasis on data management & caching
  - ... and production reliability & quality

Keep it simple. Work together.

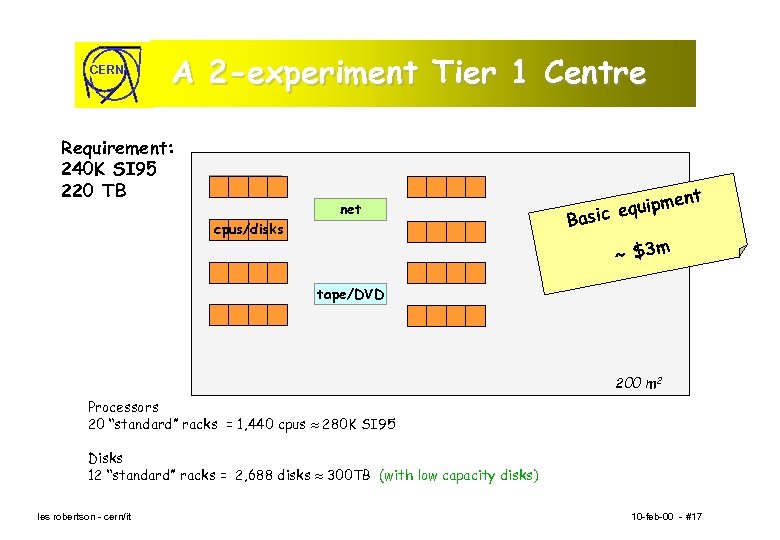

A 2-experiment Tier 1 Centre
- Requirement: 240K SI95, 220 TB
- Basic equipment (CPUs/disks, network, tape/DVD): ~$3M, 200 m²
- Processors: 20 “standard” racks = 1,440 CPUs, 280K SI95
- Disks: 12 “standard” racks = 2,688 disks, 300 TB (with low-capacity disks)
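The rack counts can be checked against the 2005 building blocks (36 dual-CPU boxes per rack, 224 disks per rack). A minimal sketch, assuming 200 SI95 per CPU and ~112 GB per disk (25 TB / 224, the low end of the per-rack disk range); `racks_needed` is an illustrative helper:

```python
import math

CPUS_PER_RACK = 72   # 36 dual-CPU boxes
SI95_PER_CPU = 200   # assumed, as in the 2005 building block
DISKS_PER_RACK = 224
GB_PER_DISK = 112    # assumed: 25 TB per rack / 224 disks

def racks_needed(required: float, per_rack: float) -> int:
    """Smallest whole number of racks delivering at least `required`."""
    return math.ceil(required / per_rack)

# Minimum racks for the requirement (240K SI95, 220 TB).
min_cpu_racks = racks_needed(240_000, CPUS_PER_RACK * SI95_PER_CPU)
min_disk_racks = racks_needed(220_000, DISKS_PER_RACK * GB_PER_DISK)

# What the slide's configuration (20 CPU racks, 12 disk racks) delivers.
cpus = 20 * CPUS_PER_RACK
ksi95 = cpus * SI95_PER_CPU / 1000
disks = 12 * DISKS_PER_RACK
tb = disks * GB_PER_DISK / 1000

print(min_cpu_racks, min_disk_racks)
print(cpus, ksi95, disks, round(tb))
```

The slide's 20 and 12 racks exceed these minima (17 and 9) and give 1,440 CPUs (~288K SI95, quoted as 280K) and 2,688 disks (~300 TB), leaving headroom over the requirement.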

The full costs?
- Space
- Power, cooling
- Software
- LAN
- Replacement/Expansion: 30% per year
- Mass storage
- People
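Replacement/expansion at 30% per year is a recurring cost on top of the initial purchase. A minimal sketch, assuming the 30% applies each year to the initial equipment cost and taking the ~$3M basic equipment of the 2-experiment Tier 1 centre as the base (the other items in the list are not modelled):

```python
def replacement_spend(initial_cost: float, years: int, rate: float = 0.30) -> float:
    """Total replacement/expansion spend over `years`, assuming a flat
    `rate` of the initial equipment cost per year."""
    return initial_cost * rate * years

annual = replacement_spend(3.0e6, years=1)
five_year = replacement_spend(3.0e6, years=5)
breakeven_years = 1 / 0.30   # years until the spend equals the initial purchase

print(f"${annual / 1e6:.1f}M per year, ${five_year / 1e6:.1f}M over five years")
print(f"Matches the initial purchase after ~{breakeven_years:.1f} years")
```

On this reading the equipment budget is spent again roughly every three to four years, before counting space, power, software, LAN, mass storage or people.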

Mass storage?
- Do all Tier 1 centres really need a full mass storage operation?
  - Tapes, robots, storage management software?
- Need support for export/import media
- But think hard before getting into mass storage
- Rather:
  - more disks, bigger disks, mirrored disks
  - cache data across the network from another centre (one that is willing to tolerate the stresses of mass storage management)
- Mass storage is person-power intensive: long term costs
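The "cache data across the network" alternative can be pictured as a read-through disk cache in front of a remote centre's mass storage. A minimal sketch, assuming least-recently-used eviction (our choice; the slide names no policy) and a hypothetical `fetch_remote` callback that stands in for the wide-area transfer and returns the file size:

```python
from collections import OrderedDict
from typing import Callable

class NetworkDiskCache:
    """Local disk cache; misses are copied from a remote centre that runs
    the mass storage. Evicts least recently used files when full."""

    def __init__(self, capacity_gb: float, fetch_remote: Callable[[str], float]):
        self.capacity_gb = capacity_gb
        self.fetch_remote = fetch_remote  # hypothetical: copies file, returns size in GB
        self.files = OrderedDict()        # name -> size in GB, least recent first
        self.used_gb = 0.0
        self.hits = 0
        self.misses = 0

    def open(self, name: str) -> None:
        if name in self.files:
            self.files.move_to_end(name)  # mark as most recently used
            self.hits += 1
            return
        self.misses += 1
        size = self.fetch_remote(name)    # wide-area copy from the remote centre
        if size > self.capacity_gb:
            raise ValueError(f"{name} is larger than the whole cache")
        while self.files and self.used_gb + size > self.capacity_gb:
            _, old_size = self.files.popitem(last=False)  # evict LRU file
            self.used_gb -= old_size
        self.files[name] = size
        self.used_gb += size

# Usage: a 2 GB cache of 1 GB ESD files.
cache = NetworkDiskCache(capacity_gb=2.0, fetch_remote=lambda name: 1.0)
for f in ["esd1", "esd2", "esd1", "esd3", "esd2"]:
    cache.open(f)
print(cache.hits, cache.misses, list(cache.files))  # 1 4 ['esd3', 'esd2']
```

The point of the arrangement is that tapes, robots and storage management software stay at the remote centre; the local site only manages disk.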

Consider outsourcing
- Massive growth in co-location centres, ISP warehouses, ASPs, storage renters, etc.
  - Level 3, Intel, Hot Office, Network Storage Inc, PSI, ...
- There will probably be one near you
- Check it out: compare costs & prices
- Maybe personnel savings can be made

Policies & sociology
- Access policy?
  - Collaboration-wide? Or restricted access (regional, national, ...)?
  - A rich source of unnecessary complexity
- Data distribution policies
- Analysis models
  - MONARC work will help to plan the centres
  - But the real analysis models will evolve when the data arrives
- Keep everything flexible: simple architecture, simple policies, minimal politics

Concluding remarks I
- Lots of experience with farms of inexpensive components
  - We need to scale them up: lots of work, but we think we understand it
- But we have to learn how to integrate distributed farms into a coherent analysis facility
  - Leverage other developments
  - But we need to learn through practice and experience
- Retain a healthy scepticism for scalability theories
  - Check it all out on a realistically sized testbed

Concluding remarks II
- Don’t get hung up on optimising component costs
- Do be very careful with head-count
  - Personnel costs will probably dominate
- Define clear objectives for the centre: efficiency, capacity, quality
- Think hard about whether you really need mass storage
- Discourage empires & egos
- Encourage collaboration & out-sourcing
- In fact, maybe we can just buy all this as an Internet service
