- Number of slides: 21

Cellular Disco: Resource management using virtual clusters on shared-memory multiprocessors

Kinshuk Govil, Dan Teodosiu*, Yongqiang Huang, and Mendel Rosenblum

Computer Systems Laboratory, Stanford University (* Xift, Inc., Palo Alto, CA)

www-flash.stanford.edu

Motivation

- Why buy a large shared-memory machine?
  - Performance, flexibility, manageability, show-off
- These machines are not being used at their full potential
  - Operating system scalability bottlenecks
  - No fault containment support
  - Lack of scalable resource management
- Operating systems are too large to adapt

Previous approaches

- Operating system: Hive, SGI IRIX 6.4, 6.5
  - (+) Knowledge of application resource needs
  - (-) Huge implementation cost (a few million lines)
- Hardware: static and dynamic partitioning
  - (+) Cluster-like (fault containment)
  - (-) Inefficient, granularity, OS changes, large apps
- Virtual machine monitor: Disco
  - (+) Low implementation cost (13K lines of code)
  - (-) Cost of virtualization

Questions

- Can virtualization overhead be kept low?
  - Usually within 10%
- Can fault containment overhead be kept low?
  - In the noise
- Can a virtual machine monitor manage resources as well as an operating system?
  - Yes

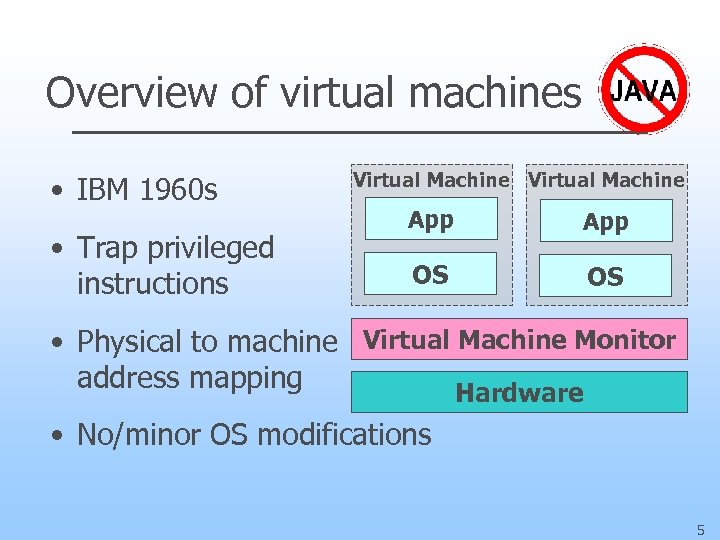

Overview of virtual machines

- IBM, 1960s
- Trap privileged instructions
- Physical-to-machine address mapping
- No/minor OS modifications

Diagram labels: Virtual Machine (App, OS), Virtual Machine Monitor, Hardware.
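
The physical-to-machine mapping bullet can be illustrated with a small sketch. This is not code from Cellular Disco: the class name `PhysToMachineMap`, the dictionary-based table, and the 4 KB page size are all assumptions chosen for illustration. The idea shown is only that the guest OS sees "physical" pages, and the monitor translates them to real machine pages.

```python
# Hypothetical sketch; names and page size are assumptions, not from the slides.
PAGE_SIZE = 4096


class PhysToMachineMap:
    """Per-VM table from guest 'physical' page numbers to machine page numbers."""

    def __init__(self):
        self.table = {}  # physical page number -> machine page number

    def map_page(self, ppn, mpn):
        """Back guest physical page `ppn` with machine page `mpn`."""
        self.table[ppn] = mpn

    def translate(self, phys_addr):
        """Translate a guest physical address to a machine address."""
        ppn, offset = divmod(phys_addr, PAGE_SIZE)
        if ppn not in self.table:
            raise KeyError(f"physical page {ppn} is not backed by machine memory")
        return self.table[ppn] * PAGE_SIZE + offset


pmap = PhysToMachineMap()
pmap.map_page(0, 7)  # guest page 0 lives in machine page 7
pmap.map_page(1, 3)  # guest page 1 lives in machine page 3
machine_addr = pmap.translate(PAGE_SIZE + 16)  # guest page 1, offset 16
```

Because the guest only ever sees physical page numbers, the monitor is free to change which machine page backs them, which is what makes moving a VM's memory possible without OS support.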

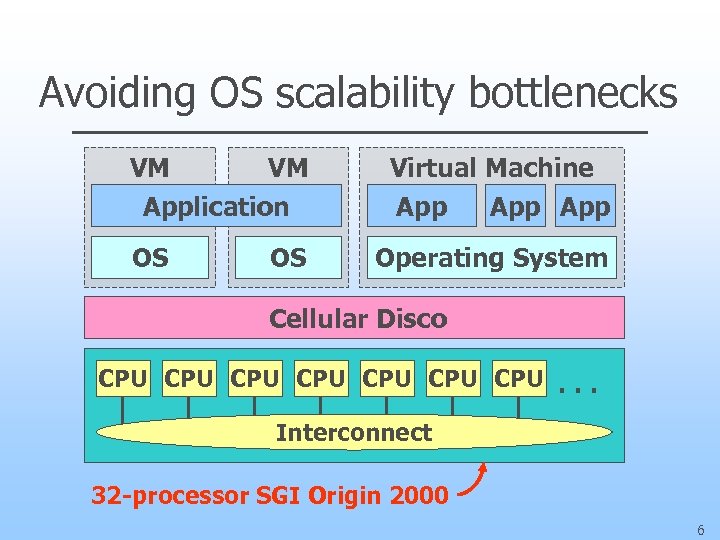

Avoiding OS scalability bottlenecks

Diagram labels: VM, Application (App), Operating System (OS), Virtual Machine, Cellular Disco, CPU ..., Interconnect, 32-processor SGI Origin 2000.

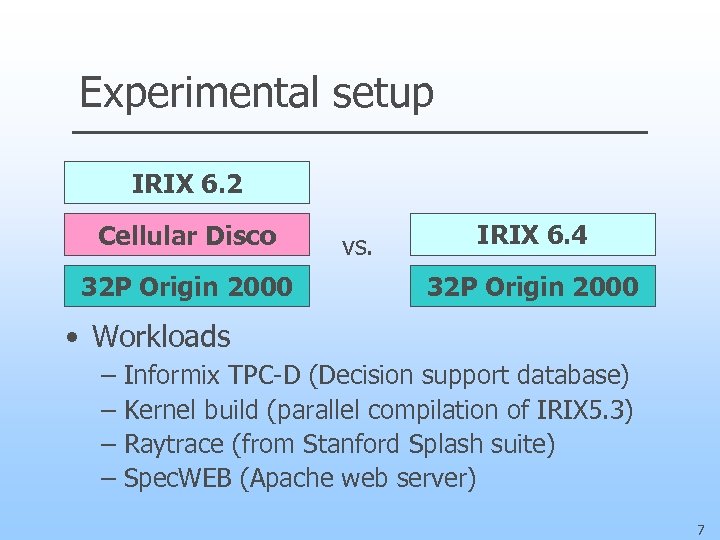

Experimental setup

- IRIX 6.2 on Cellular Disco on a 32P Origin 2000 vs. IRIX 6.4 on a 32P Origin 2000
- Workloads
  - Informix TPC-D (decision support database)
  - Kernel build (parallel compilation of IRIX 5.3)
  - Raytrace (from Stanford Splash suite)
  - SpecWEB (Apache web server)

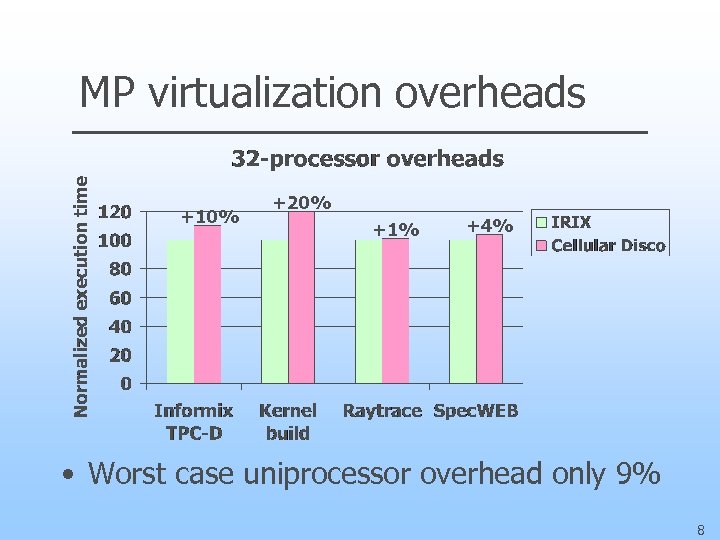

MP virtualization overheads

- Worst case uniprocessor overhead only 9%

Chart labels: +10%, +20%, +1%, +4%.

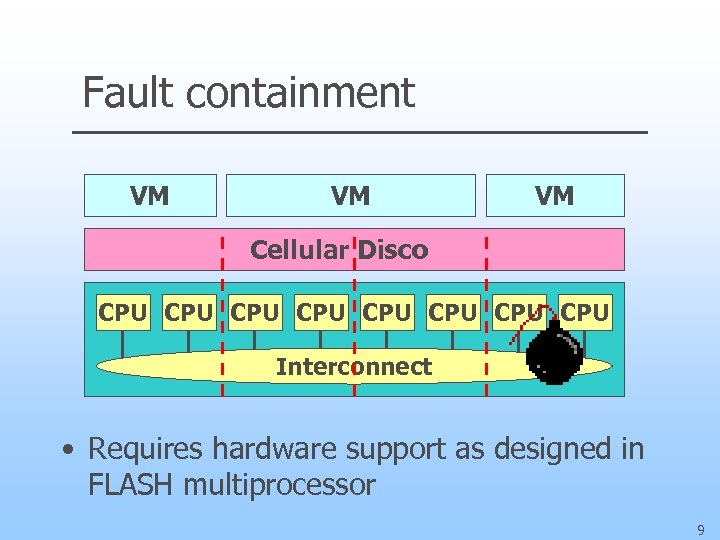

Fault containment

- Requires hardware support as designed in FLASH multiprocessor

Diagram labels: VM, VM, VM, Cellular Disco, CPU, CPU, Interconnect.

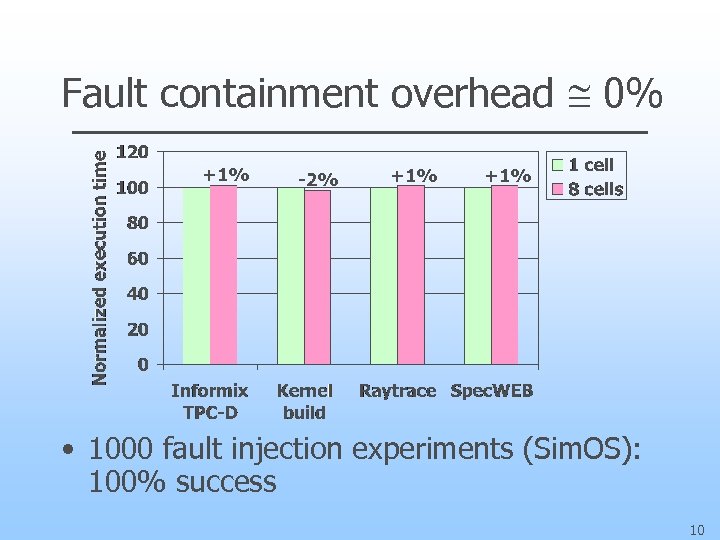

Fault containment overhead

- 1000 fault injection experiments (SimOS): 100% success

Chart labels: ≈ 0%, +1%, -2%, +1%.

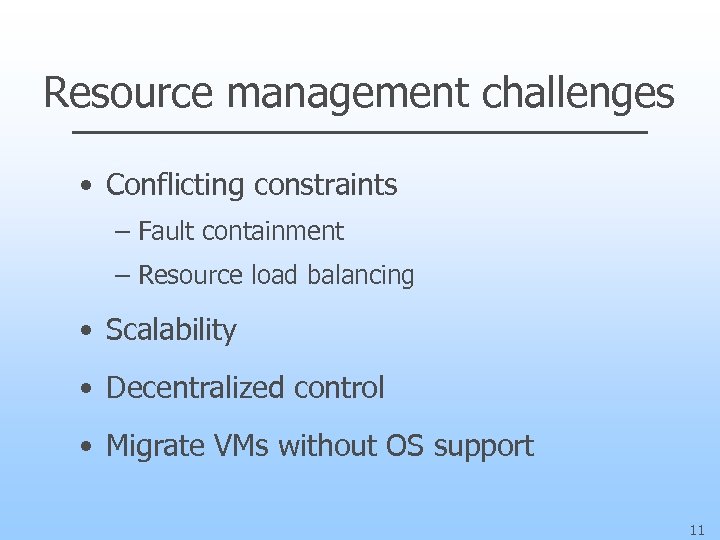

Resource management challenges

- Conflicting constraints
  - Fault containment
  - Resource load balancing
- Scalability
- Decentralized control
- Migrate VMs without OS support

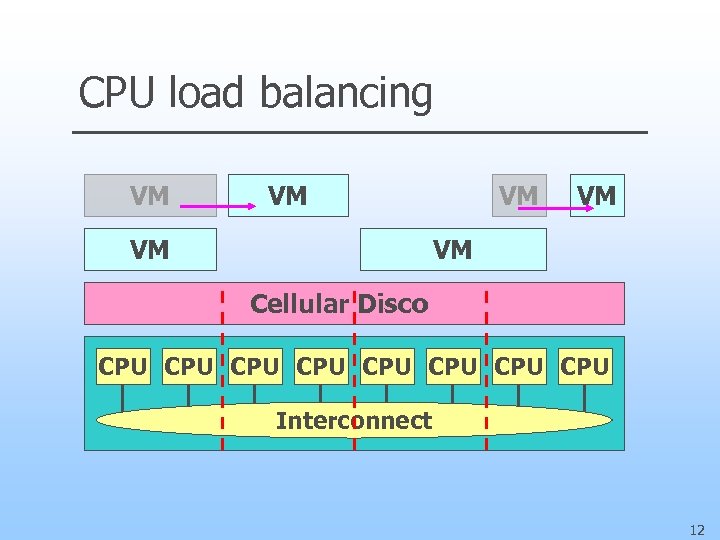

CPU load balancing

Diagram labels: VM, VM, VM, Cellular Disco, CPU, CPU, Interconnect.

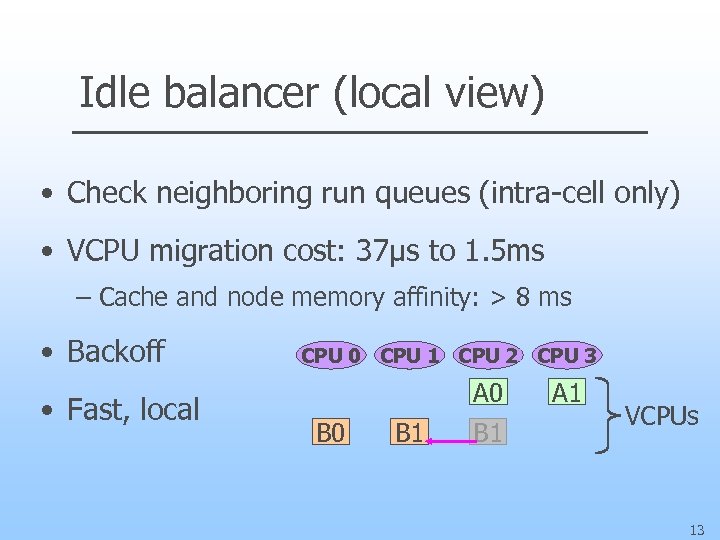

Idle balancer (local view)

- Check neighboring run queues (intra-cell only)
- VCPU migration cost: 37 µs to 1.5 ms
  - Cache and node memory affinity: > 8 ms
- Backoff
- Fast, local

Diagram labels: CPU 0, CPU 1, CPU 2, CPU 3; VCPUs A0, B1, B1, A1.
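
A minimal sketch of the idle balancer idea follows, under stated assumptions. The `Cpu` class, the `idle_balance` function, and the doubling backoff with a cap of 64 are hypothetical; the slides only say that an idle CPU checks neighboring run queues within its own cell and backs off. Migration cost and affinity are not modeled here.

```python
from collections import deque


class Cpu:
    """Hypothetical per-CPU state; field names are assumptions for illustration."""

    def __init__(self, cpu_id, cell_id):
        self.cpu_id = cpu_id
        self.cell_id = cell_id
        self.run_queue = deque()  # runnable VCPUs waiting on this CPU
        self.backoff = 1          # idle iterations to wait before the next scan


def idle_balance(idle_cpu, neighbors, max_backoff=64):
    """Try to move one waiting VCPU from a same-cell neighbor to the idle CPU."""
    for other in neighbors:
        if other.cell_id != idle_cpu.cell_id:
            continue  # intra-cell only: never look across a cell boundary
        if other.run_queue:
            vcpu = other.run_queue.popleft()
            idle_cpu.run_queue.append(vcpu)
            idle_cpu.backoff = 1  # success: scan eagerly again next time
            return vcpu
    # Nothing to take: scan less often (assumed exponential backoff).
    idle_cpu.backoff = min(idle_cpu.backoff * 2, max_backoff)
    return None


cpu0, cpu1 = Cpu(0, cell_id=0), Cpu(1, cell_id=0)
cpu2, cpu3 = Cpu(2, cell_id=1), Cpu(3, cell_id=0)
cpu1.run_queue.append("B1")
cpu2.run_queue.append("A1")

stolen = idle_balance(cpu0, [cpu2, cpu1])  # cpu2 is skipped (other cell)
missed = idle_balance(cpu3, [cpu2, cpu1])  # cpu1's queue is now empty
```

Keeping the scan local to one cell is what makes this path fast and avoids creating new fault dependencies; cross-cell moves are left to the periodic balancer.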

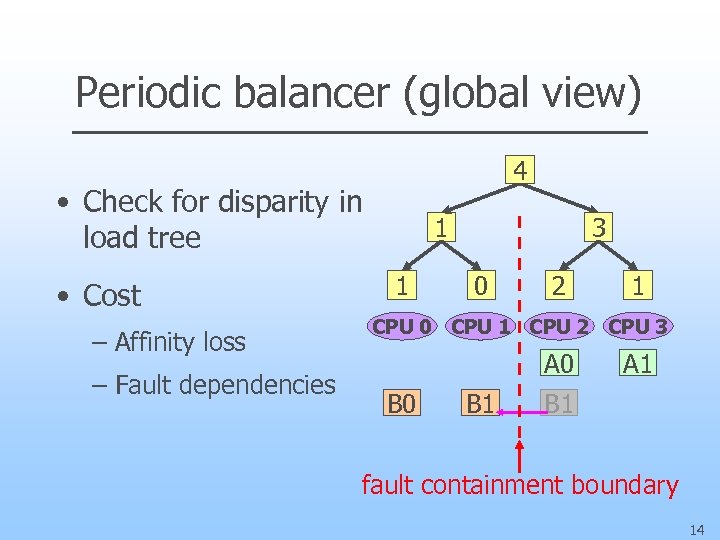

Periodic balancer (global view)

- Check for disparity in load tree
- Cost
  - Affinity loss
  - Fault dependencies

Diagram labels: load tree values 4, 1, 1, 3, 0, 2, 1; CPU 0, CPU 1, CPU 2, CPU 3; VCPUs A0, B1, A1, B1; fault containment boundary.
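
The load tree check can be sketched as below. This is an illustration, not the Cellular Disco implementation: the function names, the binary tree shape, the power-of-two CPU count, and the disparity threshold of 2 are assumptions. The leaf loads `[1, 0, 2, 1]` are one plausible reading of the diagram values (root 4, inner nodes 1 and 3), which is also an assumption. The cost side (affinity loss, fault dependencies) is not modeled.

```python
def build_load_tree(leaf_loads):
    """Return tree levels, leaves first; each parent is the sum of two children.

    Assumes the number of leaves is a power of two.
    """
    levels = [list(leaf_loads)]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([prev[i] + prev[i + 1] for i in range(0, len(prev), 2)])
    return levels


def find_imbalances(levels, threshold=2):
    """List (depth, left_index, right_index, difference) for sibling pairs
    whose loads differ by at least `threshold` (an assumed cutoff)."""
    found = []
    for depth, level in enumerate(levels[:-1]):
        for i in range(0, len(level), 2):
            diff = abs(level[i] - level[i + 1])
            if diff >= threshold:
                found.append((depth, i, i + 1, diff))
    return found


levels = build_load_tree([1, 0, 2, 1])  # per-CPU loads for CPU 0..3 (assumed)
imbalances = find_imbalances(levels)
```

In this example the only disparity is one level above the leaves, between the subtree covering CPU 0 and 1 (load 1) and the subtree covering CPU 2 and 3 (load 3). A real balancer would then weigh the move against affinity loss and any new fault dependency before migrating a VCPU.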

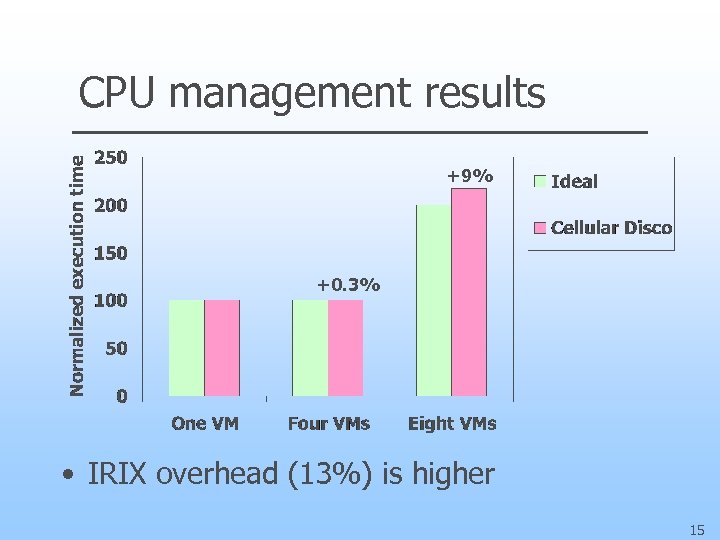

CPU management results

- IRIX overhead (13%) is higher

Chart labels: +9%, +0.3%.

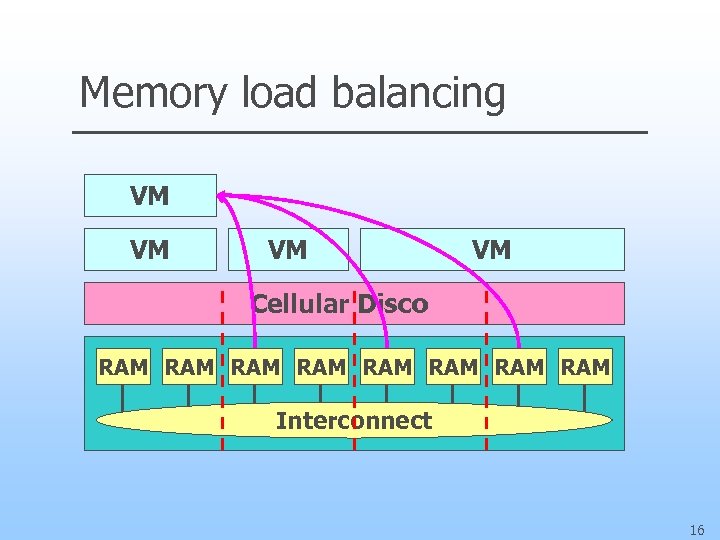

Memory load balancing

Diagram labels: VM, VM, Cellular Disco, RAM, RAM, Interconnect.

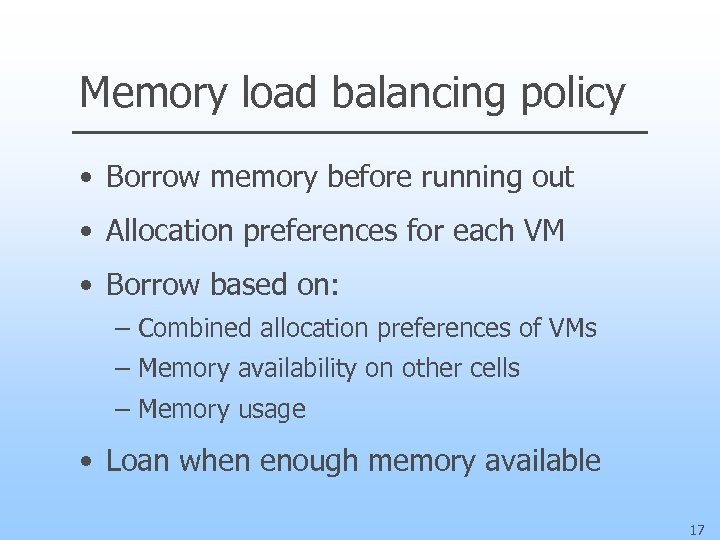

Memory load balancing policy

- Borrow memory before running out
- Allocation preferences for each VM
- Borrow based on:
  - Combined allocation preferences of VMs
  - Memory availability on other cells
  - Memory usage
- Loan when enough memory available
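
One way to read the borrowing policy above is sketched here. Everything concrete in it is an assumption: the `Cell` dataclass, the `choose_lender` function, the 10% "borrow below" and 25% "lend above" thresholds, and the representation of combined VM allocation preferences as a per-cell weight. The slides give the criteria but not the thresholds or data structures.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """Hypothetical view of one cell's memory; fields are assumptions."""
    name: str
    total_pages: int
    free_pages: int


def choose_lender(borrower, cells, preferences, borrow_below=0.10, lend_above=0.25):
    """Pick a cell to borrow memory from, or None.

    `preferences` maps cell name -> combined allocation preference of the
    borrower's VMs for that cell (higher is better). Thresholds are assumed.
    """
    if borrower.free_pages / borrower.total_pages >= borrow_below:
        return None  # enough local memory left; no need to borrow yet

    # Only cells with plenty of free memory are willing to loan.
    candidates = [
        c for c in cells
        if c is not borrower and c.free_pages / c.total_pages > lend_above
    ]
    if not candidates:
        return None

    # Prefer the cell the VMs want most; break ties by free memory.
    return max(candidates, key=lambda c: (preferences.get(c.name, 0), c.free_pages))


a = Cell("A", total_pages=1000, free_pages=50)   # nearly out of memory
b = Cell("B", total_pages=1000, free_pages=600)
c = Cell("C", total_pages=1000, free_pages=900)
d = Cell("D", total_pages=1000, free_pages=100)  # too full to lend
prefs = {"B": 3, "C": 1}

lender = choose_lender(a, [a, b, c, d], prefs)
```

Here cell B is chosen over C despite having less free memory, because the VMs' combined preference for B is higher. The borrow check fires before the cell actually runs out, matching the first bullet.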

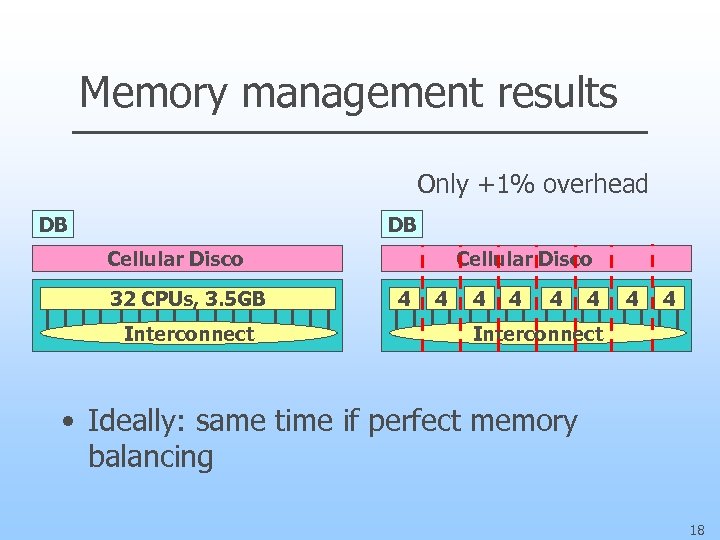

Memory management results

- Only +1% overhead
- Ideally: same time if perfect memory balancing

Diagram labels: DB, DB; Cellular Disco; 32 CPUs, 3.5 GB; Interconnect; Cellular Disco; 4, 4, 4, 4; Interconnect.

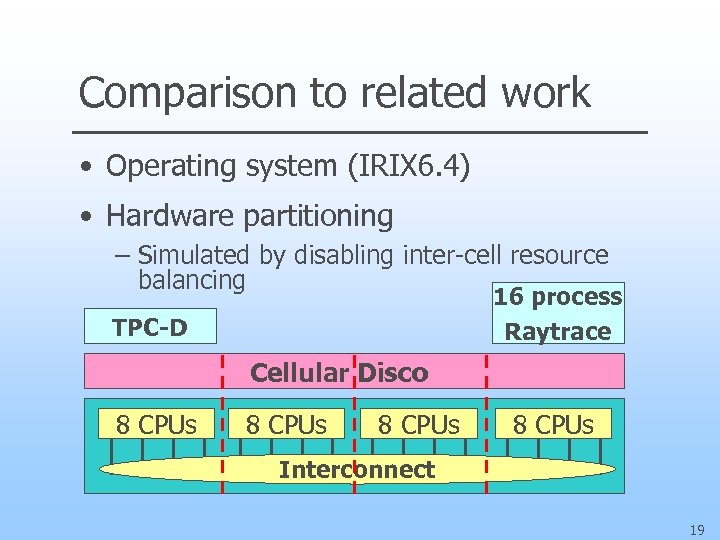

Comparison to related work

- Operating system (IRIX 6.4)
- Hardware partitioning
  - Simulated by disabling inter-cell resource balancing

Diagram labels: 16-process Raytrace, TPC-D, Cellular Disco, 8 CPUs, Interconnect.

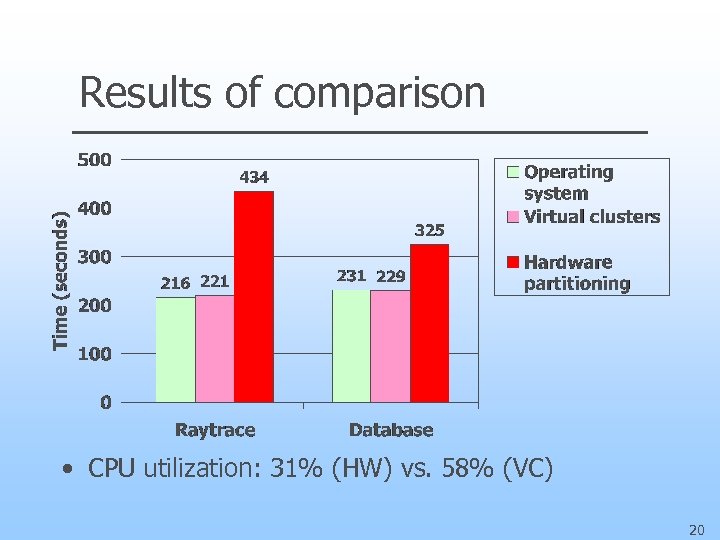

Results of comparison

- CPU utilization: 31% (HW) vs. 58% (VC)

Conclusions

- Virtual machine approach adds flexibility to system at a low development cost
- Virtual clusters address the needs of large shared-memory multiprocessors
  - Avoid operating system scalability bottlenecks
  - Support fault containment
  - Provide scalable resource management
  - Small overheads and low implementation cost
