6c11a3aeca7a0260be6a155684995996.ppt

- Number of slides: 26

CCRC’08: Summary of February phase, preparations for May and later

Jamie.Shiers@cern.ch ~~~ LHCC Referees Meeting, 5th May 2008

Agenda

- Status of CCRC’08
- Summary of February run
- Preparations for May run
- and later…
- Conclusions

Status of CCRC’08

- CCRC’08 was proposed during the WLCG Collaboration workshop held in Victoria prior to CHEP’07
- It builds on many years of work preparing and hardening the services, the experiments’ computing models and offline production chains
  - Data challenges, Service challenges, Dress rehearsals, …
- The name says it all: Common Computing Readiness Challenge
  - Are we (together) ready?

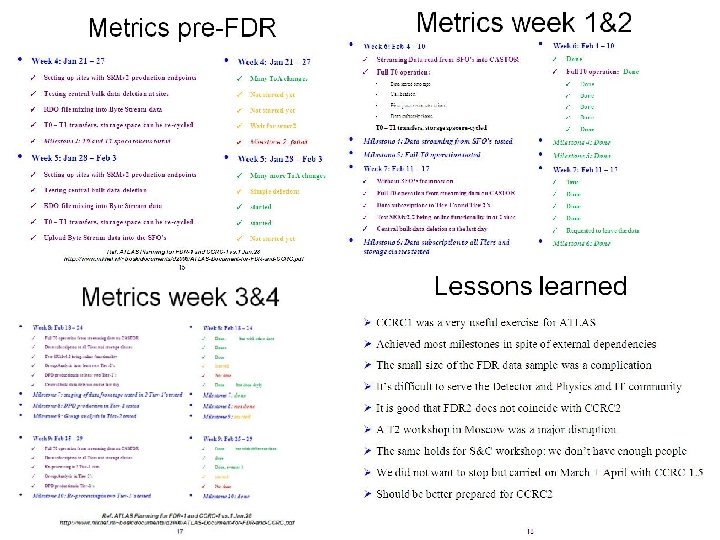

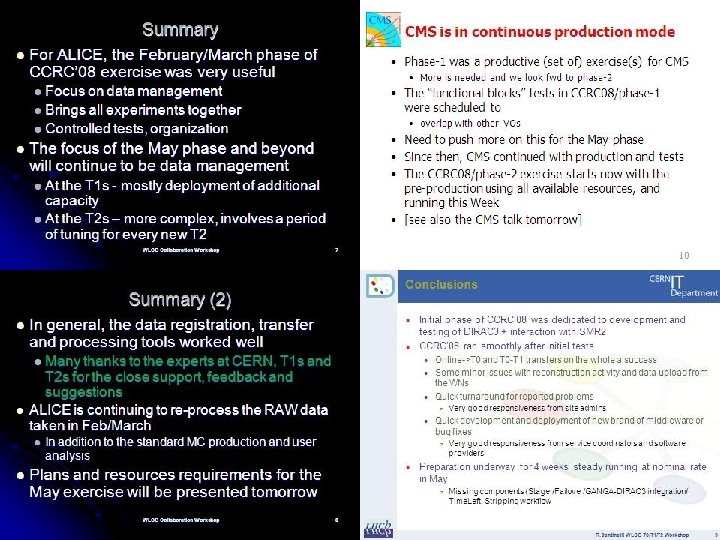

February run

- Preparations started with a “kick-off” F2F meeting in October 2007
- Followed by weekly planning con-calls and monthly F2Fs
  - The weekly calls were suspended end-January 2008 – it is not foreseen to restart them!
- Daily “operations-style” con-calls started late January
  - Except Mondays (weekly operations) and during F2Fs / workshops
- The February run was generally considered to be successful – although the overlap in terms of experiment activities (particularly Tier0-Tier1 export) was lower than foreseen
- SRM v2.2 production deployment, middleware services, operations aspects – generally better than expected!
- Much still left to do in May: more resources, more services, more of everything…

What Did We Achieve? (High Level)

- Even before the official start date of the February challenge, it had proven an extremely useful focusing exercise, helping to understand missing and / or weak aspects of the service and to identify pragmatic solutions
- Although later than desirable, the main bugs in the middleware were fixed (just) in time and many sites upgraded to these versions
- The deployment, configuration and usage of SRM v2.2 went better than had been predicted, with a noticeable improvement during the month
- Despite the high workload, we also demonstrated (most importantly) that we can support this work with the available manpower, although with essentially no remaining effort for longer-term work (more later…)
- If we can do the same in May – when the bar is placed much higher – we will be in a good position for this year’s data taking
- However, there are certainly significant concerns around the available manpower at all sites – not only today, but also in the longer term, when funding is unclear (e.g. post EGEE III)


May Run – Introduction

- 2nd phase of CCRC’08 is from Monday 5th May to Friday 30th
  - This was immediately preceded by a long w/e (Thu-Sun) at CERN
  - May is also a month with quite a few public holidays
    - ~1 per week…
- Everyone is keen that we improve on February in terms of being ready well in time
  - And in terms of defining what “ready” actually means…
- Detailed presentations on middleware, storage-ware, databases, experiments’ plans & requirements at April’s F2F
  - This can be summarized in a couple of tables, on the CCRC’08 wiki, as was done for February – very small updates with respect to February
- Further discussions and clarifications during WLCG Collaboration Workshop, 21 – 25 April at CERN

Critical Services / Service Reliability

- Summary of key techniques (D.I.D.O.: Design, Implementation, Deployment, Operation) and status of deployed middleware
  - Techniques widely used – in particular for Tier0 (“WLCG” & “experiment” services)
  - Quite a few well identified weaknesses – action plan?
- Measuring what we deliver – service availability targets
- Testing experiment services
  - Update on work on ATLAS services
  - To be extended to all 4 LHC experiments
- Top 2 ATLAS services: “ATONR” & DDM catalogs
  - Request(?) to increase availability of latter during DB sessions
  - Possible use of DataGuard to another location in CC?

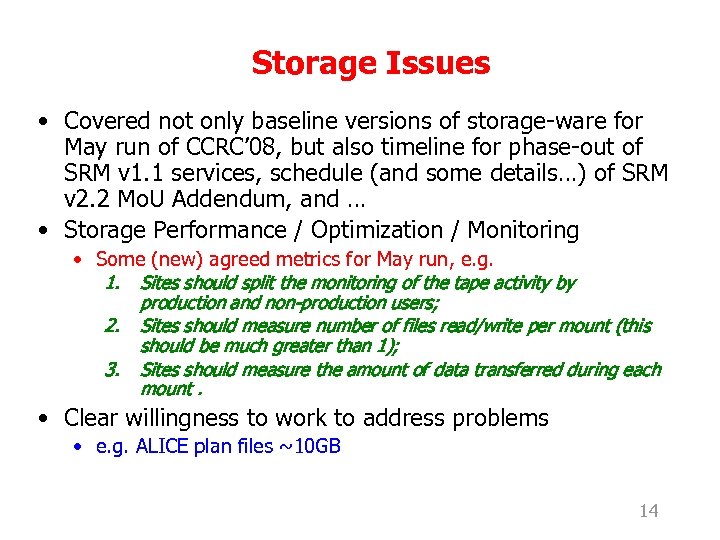

Storage Issues

- Covered not only baseline versions of storage-ware for May run of CCRC’08, but also timeline for phase-out of SRM v1.1 services, schedule (and some details…) of SRM v2.2 MoU Addendum, and …
- Storage Performance / Optimization / Monitoring
  - Some (new) agreed metrics for May run, e.g.
    1. Sites should split the monitoring of the tape activity by production and non-production users;
    2. Sites should measure number of files read/write per mount (this should be much greater than 1);
    3. Sites should measure the amount of data transferred during each mount.
- Clear willingness to work to address problems
  - e.g. ALICE plan files ~10 GB
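The three tape metrics agreed for the May run can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Mount` record and its field names are hypothetical, and the sample numbers are invented, not measurements from any site.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Mount:
    """One tape mount. All field names here are hypothetical."""
    user_class: str   # "production" or "non-production"
    files: int        # files read or written during the mount
    bytes_moved: int  # data transferred during the mount, in bytes


def summarize(mounts):
    """Per user class: mount count, mean files per mount, mean bytes per mount."""
    groups = defaultdict(list)
    for m in mounts:
        groups[m.user_class].append(m)
    summary = {}
    for user_class, items in groups.items():
        n = len(items)
        summary[user_class] = {
            "mounts": n,
            "files_per_mount": sum(m.files for m in items) / n,
            "bytes_per_mount": sum(m.bytes_moved for m in items) / n,
        }
    return summary


# Invented sample data, for illustration only.
sample = [
    Mount("production", 40, 400 * 10**9),
    Mount("production", 60, 600 * 10**9),
    Mount("non-production", 1, 2 * 10**9),
]
report = summarize(sample)
```

A `files_per_mount` value close to 1, as in the invented non-production entry, is the pattern the second metric is meant to expose.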

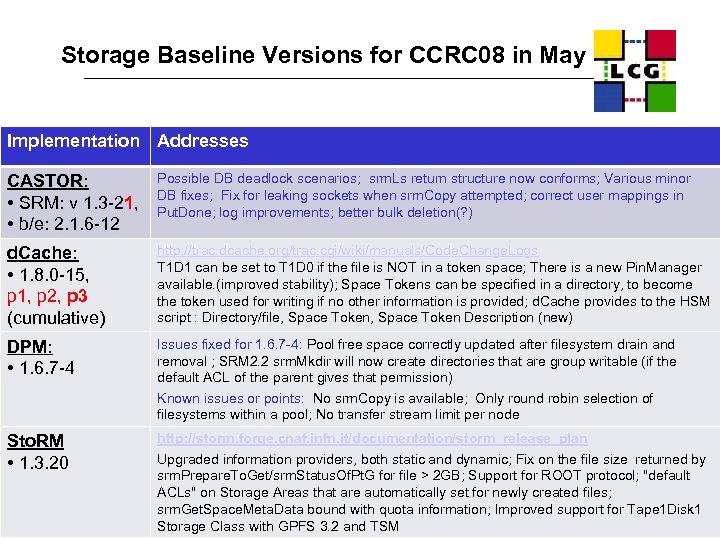

Storage Baseline Versions for CCRC08 in May

(Implementation, followed by what the version addresses. CCRC08 F2F meeting – Flavia Donno)

- CASTOR: SRM v1.3-21, b/e 2.1.6-12
  - Possible DB deadlock scenarios;
  - srmLs return structure now conforms;
  - Various minor DB fixes;
  - Fix for leaking sockets when srmCopy attempted;
  - Correct user mappings in PutDone;
  - Log improvements;
  - Better bulk deletion(?)
- dCache: 1.8.0-15, p1, p2, p3 (cumulative)
  - http://trac.dcache.org/trac.cgi/wiki/manuals/CodeChangeLogs
  - T1D1 can be set to T1D0 if the file is NOT in a token space;
  - There is a new PinManager available (improved stability);
  - Space Tokens can be specified in a directory, to become the token used for writing if no other information is provided;
  - dCache provides to the HSM script: Directory/file, Space Token Description (new)
- DPM: 1.6.7-4
  - Issues fixed for 1.6.7-4: Pool free space correctly updated after filesystem drain and removal; SRM 2.2 srmMkdir will now create directories that are group writable (if the default ACL of the parent gives that permission)
  - Known issues or points: No srmCopy is available; Only round robin selection of filesystems within a pool; No transfer stream limit per node
- StoRM: 1.3.20
  - http://storm.forge.cnaf.infn.it/documentation/storm_release_plan
  - Upgraded information providers, both static and dynamic;
  - Fix on the file size returned by srmPrepareToGet/srmStatusOfPtG for files > 2 GB;
  - Support for ROOT protocol;
  - "default ACLs" on Storage Areas that are automatically set for newly created files;
  - srmGetSpaceMetaData bound with quota information;
  - Improved support for Tape1Disk1 Storage Class with GPFS 3.2 and TSM

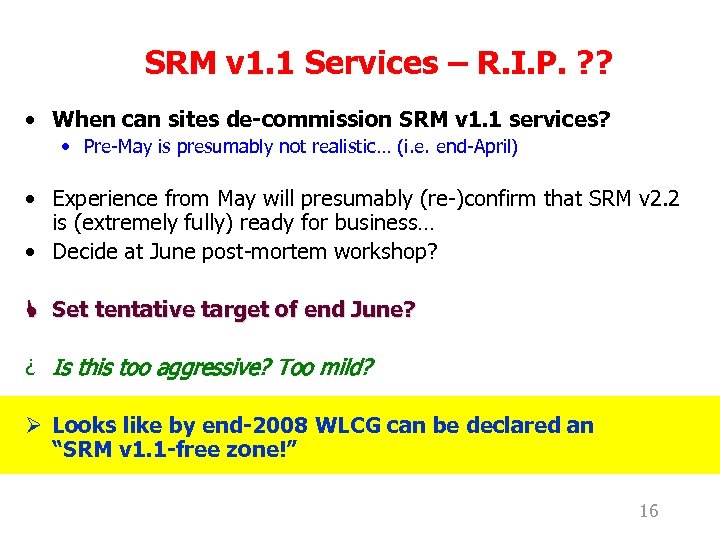

SRM v1.1 Services – R.I.P.??

- When can sites de-commission SRM v1.1 services?
  - Pre-May is presumably not realistic… (i.e. end-April)
  - Experience from May will presumably (re-)confirm that SRM v2.2 is (extremely fully) ready for business…
  - Decide at June post-mortem workshop?
- Set tentative target of end June?
  - Is this too aggressive? Too mild?
- Looks like by end-2008 WLCG can be declared an “SRM v1.1-free zone!”

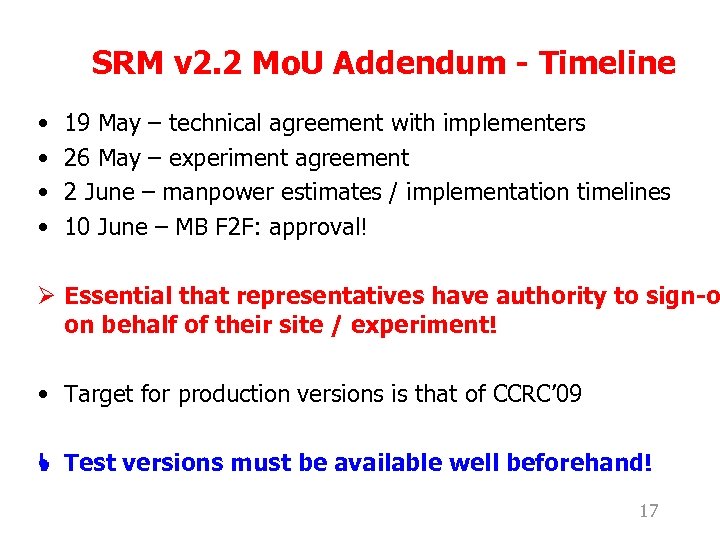

SRM v2.2 MoU Addendum - Timeline

- 19 May – technical agreement with implementers
- 26 May – experiment agreement
- 2 June – manpower estimates / implementation timelines
- 10 June – MB F2F: approval!
- Essential that representatives have authority to sign off on behalf of their site / experiment!
- Target for production versions is that of CCRC’09
- Test versions must be available well beforehand!

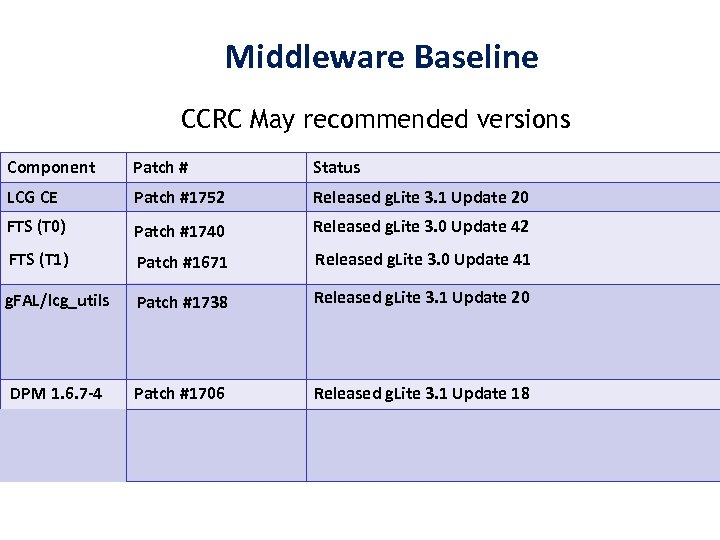

Middleware Baseline

CCRC May recommended versions

| Component | Patch # | Status |
|---|---|---|
| LCG CE | Patch #1752 | Released gLite 3.1 Update 20 |
| FTS (T0) | Patch #1740 | Released gLite 3.0 Update 42 |
| FTS (T1) | Patch #1671 | Released gLite 3.0 Update 41 |
| gFAL/lcg_utils | Patch #1738 | Released gLite 3.1 Update 20 |
| DPM 1.6.7-4 | Patch #1706 | Released gLite 3.1 Update 18 |

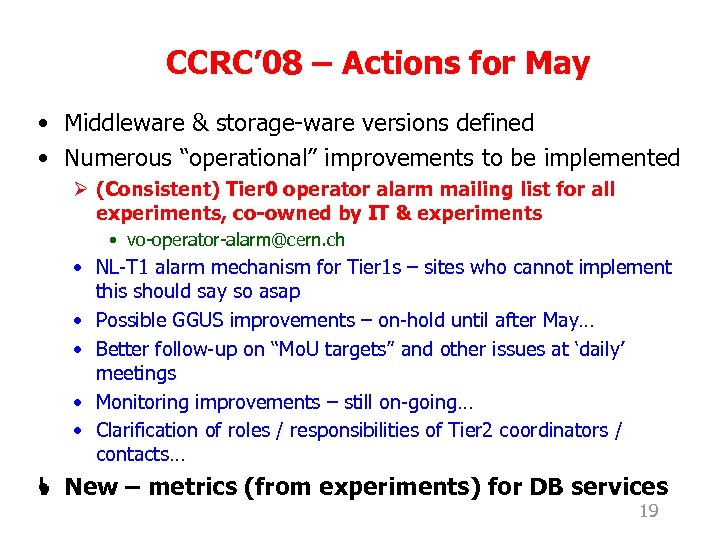

CCRC’08 – Actions for May

- Middleware & storage-ware versions defined
- Numerous “operational” improvements to be implemented
- (Consistent) Tier0 operator alarm mailing list for all experiments, co-owned by IT & experiments
  - vo-operator-alarm@cern.ch
- NL-T1 alarm mechanism for Tier1s – sites who cannot implement this should say so asap
- Possible GGUS improvements – on-hold until after May…
- Better follow-up on “MoU targets” and other issues at ‘daily’ meetings
- Monitoring improvements – still on-going…
- Clarification of roles / responsibilities of Tier2 coordinators / contacts…
- New – metrics (from experiments) for DB services

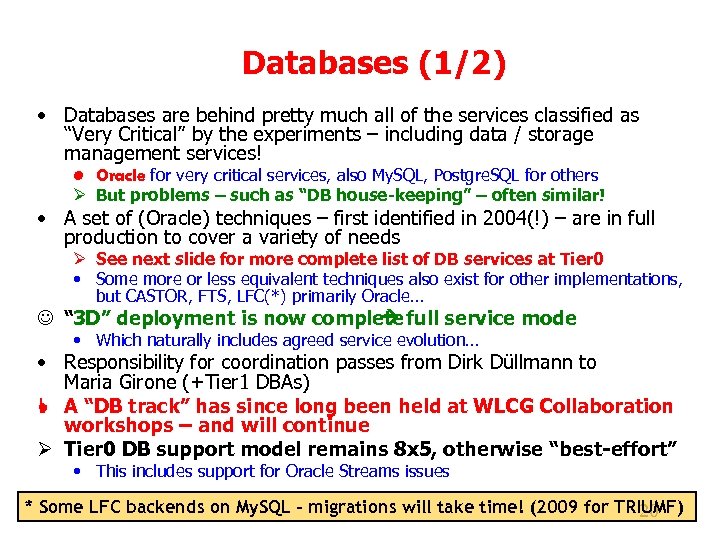

Databases (1/2)

- Databases are behind pretty much all of the services classified as “Very Critical” by the experiments – including data / storage management services!
  - Oracle for very critical services, also MySQL, PostgreSQL for others
  - But problems – such as “DB house-keeping” – are often similar!
- A set of (Oracle) techniques – first identified in 2004(!) – are in full production to cover a variety of needs
  - See next slide for more complete list of DB services at Tier0
  - Some more or less equivalent techniques also exist for other implementations, but CASTOR, FTS, LFC(*) are primarily Oracle…
- “3D” deployment is now complete and in full service mode
  - Which naturally includes agreed service evolution…
  - Responsibility for coordination passes from Dirk Düllmann to Maria Girone (+Tier1 DBAs)
- A “DB track” has long been held at WLCG Collaboration workshops – and will continue
- Tier0 DB support model remains 8x5, otherwise “best-effort”
  - This includes support for Oracle Streams issues

(*) Some LFC backends on MySQL – migrations will take time! (2009 for TRIUMF)

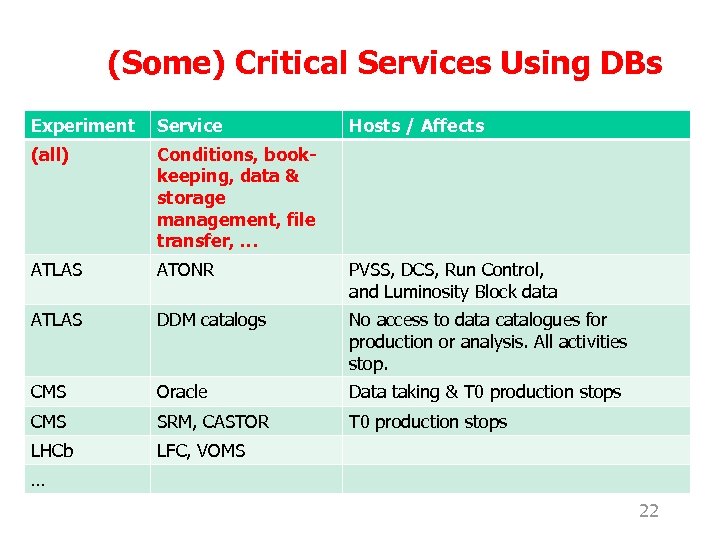

(Some) Critical Services Using DBs

| Experiment | Service | Hosts / Affects |
|---|---|---|
| (all) | | Conditions, bookkeeping, data & storage management, file transfer, … |
| ATLAS | ATONR | PVSS, DCS, Run Control, and Luminosity Block data |
| ATLAS | DDM catalogs | No access to data catalogues for production or analysis. All activities stop. |
| CMS | Oracle | Data taking & T0 production stops |
| CMS | SRM, CASTOR | T0 production stops |
| LHCb | LFC, VOMS | … |

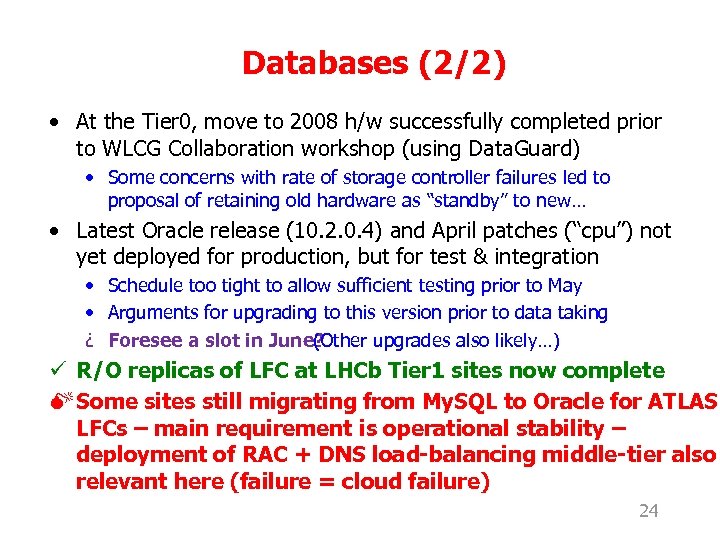

Databases (2/2)

- At the Tier0, move to 2008 h/w successfully completed prior to WLCG Collaboration workshop (using DataGuard)
  - Some concerns with rate of storage controller failures led to proposal of retaining old hardware as “standby” to new…
- Latest Oracle release (10.2.0.4) and April patches (“cpu”) not yet deployed for production, but for test & integration
  - Schedule too tight to allow sufficient testing prior to May
  - Arguments for upgrading to this version prior to data taking
  - Foresee a slot in June? (Other upgrades also likely…)
- R/O replicas of LFC at LHCb Tier1 sites now complete
- Some sites still migrating from MySQL to Oracle for ATLAS LFCs – main requirement is operational stability – deployment of RAC + DNS load-balancing middle-tier also relevant here (failure = cloud failure)

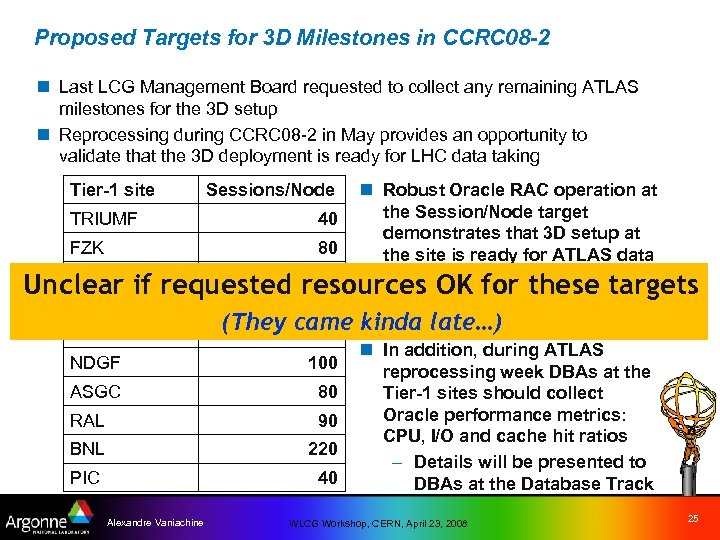

Proposed Targets for 3D Milestones in CCRC08-2

- Last LCG Management Board requested to collect any remaining ATLAS milestones for the 3D setup
- Reprocessing during CCRC08-2 in May provides an opportunity to validate that the 3D deployment is ready for LHC data taking
- Robust Oracle RAC operation at the Sessions/Node target demonstrates that the 3D setup at the site is ready for ATLAS data taking
  - Achieving 50% of the target shows that the site is 50% ready
- In addition, during ATLAS reprocessing week DBAs at the Tier-1 sites should collect Oracle performance metrics: CPU, I/O and cache hit ratios
  - Details will be presented to DBAs at the Database Track

| Tier-1 site | Sessions/Node target |
|---|---|
| TRIUMF | 40 |
| FZK | 80 |
| IN2P3 | 60 |
| CNAF | 40 |
| SARA | 140 |
| NDGF | 100 |
| ASGC | 80 |
| RAL | 90 |
| BNL | 220 |
| PIC | 40 |

Annotation on the slide: Unclear if requested resources OK for these targets (They came kinda late…)

Alexandre Vaniachine, WLCG Workshop, CERN, April 23, 2008
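The rule of thumb on this slide (reaching 50% of the Sessions/Node target means the site is 50% ready) amounts to a simple ratio. A minimal Python sketch follows, assuming that readiness is capped at 100% once the target is met; the cap, the function name and the example numbers are illustrative assumptions, not part of the original proposal.

```python
def readiness(achieved_sessions_per_node, target_sessions_per_node):
    """Fraction of the Sessions/Node target reached.

    Capping at 1.0 is an assumption made for this sketch: a site that
    exceeds its target is simply treated as fully ready.
    """
    if target_sessions_per_node <= 0:
        raise ValueError("target must be positive")
    return min(achieved_sessions_per_node / target_sessions_per_node, 1.0)


# Hypothetical site with a target of 40 sessions per node.
half = readiness(20, 40)   # site sustains half of its target
full = readiness(45, 40)   # site exceeds its target
```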

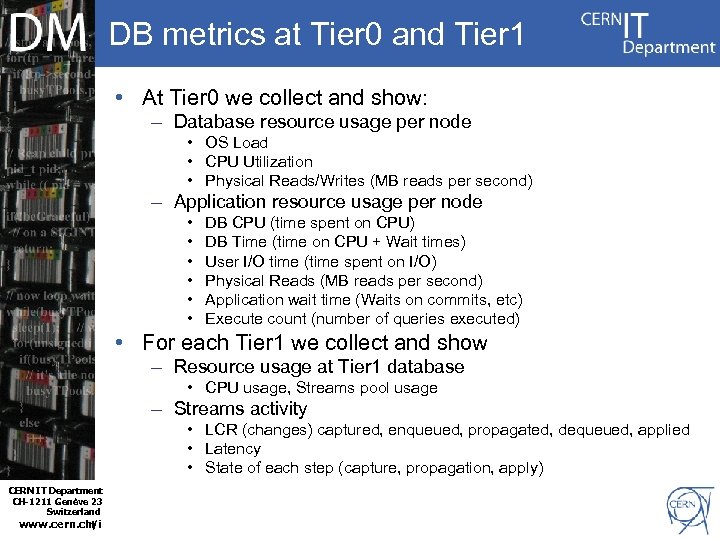

DB metrics at Tier0 and Tier1

- At Tier0 we collect and show:
  - Database resource usage per node
    - OS Load
    - CPU Utilization
    - Physical Reads/Writes (MB reads per second)
  - Application resource usage per node
    - DB CPU (time spent on CPU)
    - DB Time (time on CPU + Wait times)
    - User I/O time (time spent on I/O)
    - Physical Reads (MB reads per second)
    - Application wait time (Waits on commits, etc)
    - Execute count (number of queries executed)
- For each Tier1 we collect and show:
  - Resource usage at Tier1 database
    - CPU usage, Streams pool usage
  - Streams activity
    - LCR (changes) captured, enqueued, propagated, dequeued, applied
    - Latency
    - State of each step (capture, propagation, apply)

CERN IT Department, CH-1211 Genève 23, Switzerland, www.cern.ch/it
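The relation given above for the application metrics (DB Time = time on CPU + wait times) can be made concrete with a small Python sketch. The class and field names are hypothetical and the numbers are invented; this is not how the Tier0 monitoring is implemented.

```python
from dataclasses import dataclass


@dataclass
class AppUsage:
    """Per-node application usage over one sampling interval (hypothetical fields)."""
    db_cpu_s: float  # DB CPU: time spent on CPU, in seconds
    wait_s: float    # total wait time (I/O, commits, ...), in seconds

    @property
    def db_time_s(self):
        # DB Time as defined on the slide: time on CPU plus wait times.
        return self.db_cpu_s + self.wait_s

    @property
    def wait_fraction(self):
        # Share of DB Time spent waiting; 0.0 if nothing was recorded.
        total = self.db_time_s
        return self.wait_s / total if total > 0 else 0.0


# Invented sample values.
usage = AppUsage(db_cpu_s=30.0, wait_s=10.0)
db_time = usage.db_time_s
waiting = usage.wait_fraction
```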

Running The Challenge

- The daily / weekly / monthly meetings are working reasonably well and are regularly optimized
  - e.g. tweaks to agenda and / or representation
  - Suppression of meetings no longer needed
- More work needs to be done on:
  - Awareness of where to find information
  - Awareness of existing summaries
  - Better usage by sites of the above!
- Automated reporting and stream-lined monitoring could ease burden of those involved
- Most likely: incremental changes driven by production need for many months to come!
- Strong motivation for common solutions
  - Across experiments, sites, Grids…

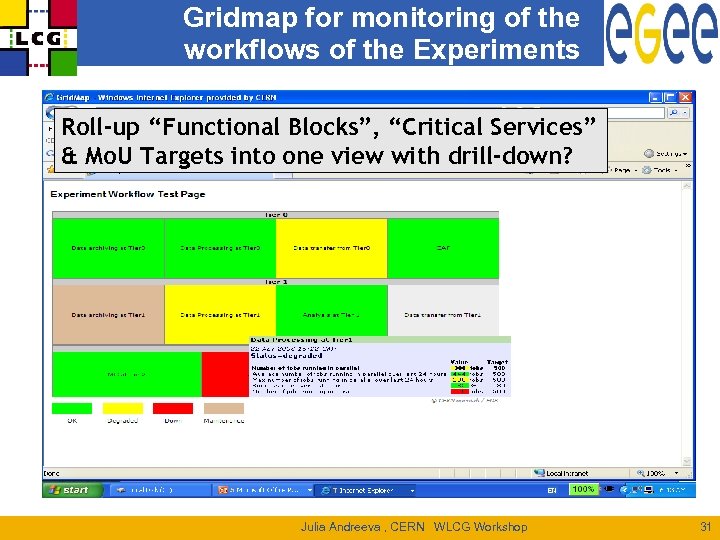

Gridmap for monitoring of the workflows of the Experiments

Roll-up “Functional Blocks”, “Critical Services” & MoU Targets into one view with drill-down?

Julia Andreeva, CERN, WLCG Workshop

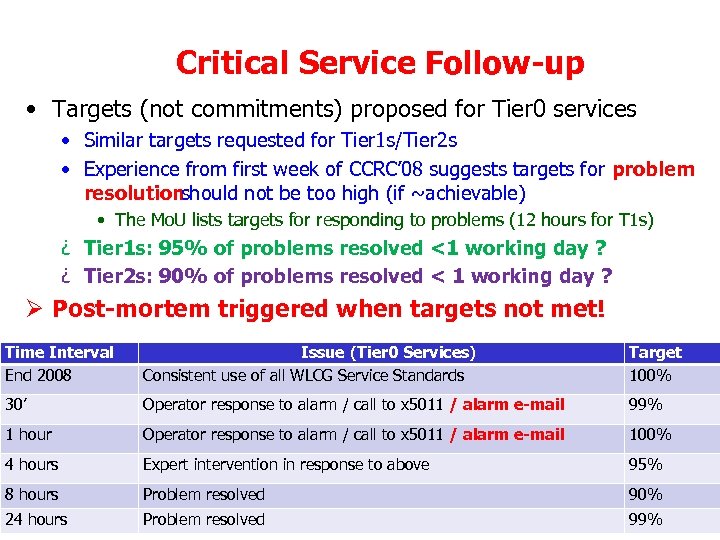

Critical Service Follow-up

- Targets (not commitments) proposed for Tier0 services
  - Similar targets requested for Tier1s/Tier2s
  - Experience from first week of CCRC’08 suggests targets for problem resolution should not be too high (if ~achievable)
- The MoU lists targets for responding to problems (12 hours for T1s)
  - Tier1s: 95% of problems resolved < 1 working day?
  - Tier2s: 90% of problems resolved < 1 working day?
- Post-mortem triggered when targets not met!

| Time Interval | Issue (Tier0 Services) | Target |
|---|---|---|
| End 2008 | Consistent use of all WLCG Service Standards | 100% |
| 30’ | Operator response to alarm / call to x5011 / alarm e-mail | 99% |
| 1 hour | Operator response to alarm / call to x5011 / alarm e-mail | 100% |
| 4 hours | Expert intervention in response to above | 95% |
| 8 hours | Problem resolved | 90% |
| 24 hours | Problem resolved | 99% |
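Checking a set of incidents against targets of this kind (a given percentage of problems resolved within a given interval, with a post-mortem when the target is missed) can be sketched as follows. The function names and the sample resolution times are invented for illustration, and treating "within" as less than or equal to the interval is an assumption.

```python
from datetime import timedelta


def fraction_within(durations, limit):
    """Fraction of incidents whose duration is at most `limit`."""
    if not durations:
        raise ValueError("no incidents to evaluate")
    return sum(d <= limit for d in durations) / len(durations)


def needs_post_mortem(durations, limit, target):
    """True when the achieved fraction falls below the target fraction."""
    return fraction_within(durations, limit) < target


# Invented resolution times for five incidents.
resolution_times = [timedelta(hours=h) for h in (2, 5, 7, 9, 30)]

# "Problem resolved" targets from the table: 90% within 8 hours, 99% within 24 hours.
within_8h = fraction_within(resolution_times, timedelta(hours=8))
missed_8h = needs_post_mortem(resolution_times, timedelta(hours=8), 0.90)
missed_24h = needs_post_mortem(resolution_times, timedelta(hours=24), 0.99)
```

With only five invented incidents, a single slow resolution is enough to miss both targets, which illustrates why the slide cautions against setting resolution targets too high.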

Outlook – 2008

- Stable baseline service with (small) incremental improvements throughout the year
- Detailed post-mortem of May CCRC’08 in June (12-13)
- Still need to converge on additional service issues:
  - SRM v2.2 MoU addendum, monitoring improvements, better use of storage resources (file size, batching of requests, …)
- It is inevitable that both the May run and first pp data taking will bring with them new issues – and solutions!
- Continued (late) deployment of 2008 resources over several months
  - A further CCRC’08 phase in July should be expected to test these resources and any further service enhancements
- First pp data taking in 2nd half of the year…

Outlook – Beyond

- We can still expect some significant increases in resources, plus changes in some of the services / experiment-ware, for the foreseeable future
- Some examples of possible changes:
  - Computing Models – based on real-life experience;
  - LCG OPN (recent proposal rejected);
  - Operations infrastructure – move from EGEE III to EGI(?)
  - …
- Expecting everything to work without a large-scale test of all experiments and all sites is contrary to our experience
- As previously discussed (February), a “readiness challenge” before each year’s data taking should be foreseen
  - And scheduled to be consistent with needs of reprocessing!

CCRC’08 – Conclusions

- The WLCG service is running (reasonably) smoothly
- The functionality matches what has been tested so far – and what is (known to be) required
- We have a good baseline on which to build
- (Big) improvements over the past year are a good indication of what can be expected over the next!
- (Very) detailed analysis of results compared to up-front metrics – in particular from experiments!
