- Number of slides: 22

CARE ASAS Validation Framework
System Performance Metrics
10th October 2002
M F (Mike) Sharples

System Performance Metrics Content
• Aims
• Approach
• Analysis

System Performance Metrics Aims
• Using recognised metrics is fundamental to measuring system performance
• The ASAS Validation Framework requires consistent metrics to provide comparable results
• The ‘System Performance Metrics’ work demonstrates a method for identifying existing metrics for new scenarios

System Performance Metrics Approach
• Considerable existing work in this area
  – PRS
  – C/AFT
  – TORCH
  – INTEGRA
• Collating these required a consistent hierarchy & taxonomy

System Performance Metrics Hierarchy
[Diagram: OBJECTIVES, PERFORMANCE AREAS, METRICS]

System Performance Metrics Hierarchy
• OBJECTIVES
  – Tie in with ATM 2000+ Strategy
  – High level & therefore no direct measure
• PERFORMANCE AREAS
  – Tie in with PRC (as this gives greatest commonality)
  – Lower level & therefore easier to measure
• METRICS
  – The measurements that can be made
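The three levels could be held in a simple nested structure. The following is a minimal Python sketch under stated assumptions, not the framework's own implementation: the class names and the `metrics_for` helper are hypothetical, and the sample entries are borrowed from other slides in this deck (Flight Efficiency and its two example metrics).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Metric:
    """A measurement that can be made."""
    name: str


@dataclass
class PerformanceArea:
    """Lower level than an objective, and therefore easier to measure."""
    name: str
    metrics: List[Metric] = field(default_factory=list)


@dataclass
class Objective:
    """High level, so it has no direct measure of its own."""
    name: str
    areas: List[PerformanceArea] = field(default_factory=list)


def metrics_for(objective: Objective) -> List[str]:
    """Collect the metric names reachable from an objective via its areas."""
    return [m.name for area in objective.areas for m in area.metrics]


# Sample data: placing Flight Efficiency under ENVIRONMENT follows the
# linkage slide; the two metrics come from the perspective example.
environment = Objective(
    "ENVIRONMENT",
    [
        PerformanceArea(
            "Flight Efficiency",
            [
                Metric("Actual fuel burn v. planned fuel burn"),
                Metric("Efficiency of route structure"),
            ],
        ),
        PerformanceArea("Environment regulation"),  # no metrics listed in the deck
    ],
)

print(metrics_for(environment))
```

An objective is only measured indirectly here, by walking down to the metrics of its performance areas, which mirrors the "no direct measure" point on the slide.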

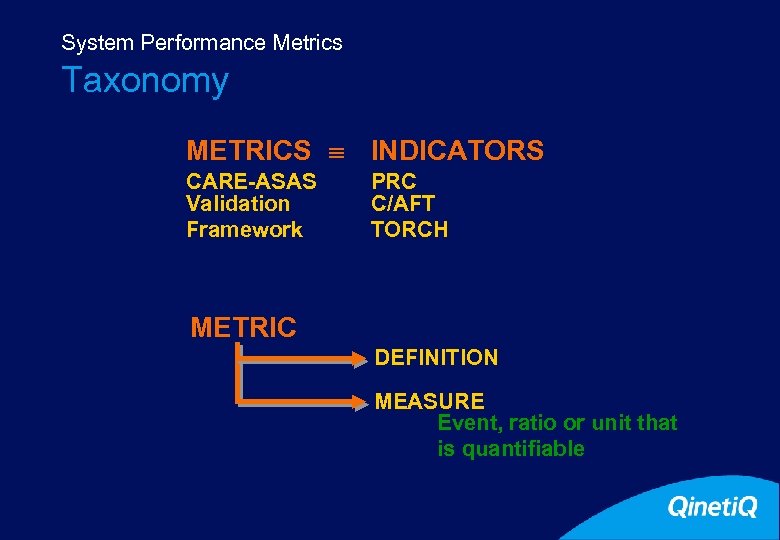

System Performance Metrics Taxonomy
[Diagram: terminology used by each source. Sources: CARE-ASAS Validation Framework, PRC, C/AFT, TORCH. Terms: METRICS, INDICATORS, METRIC, MEASURE]
DEFINITION: Event, ratio or unit that is quantifiable

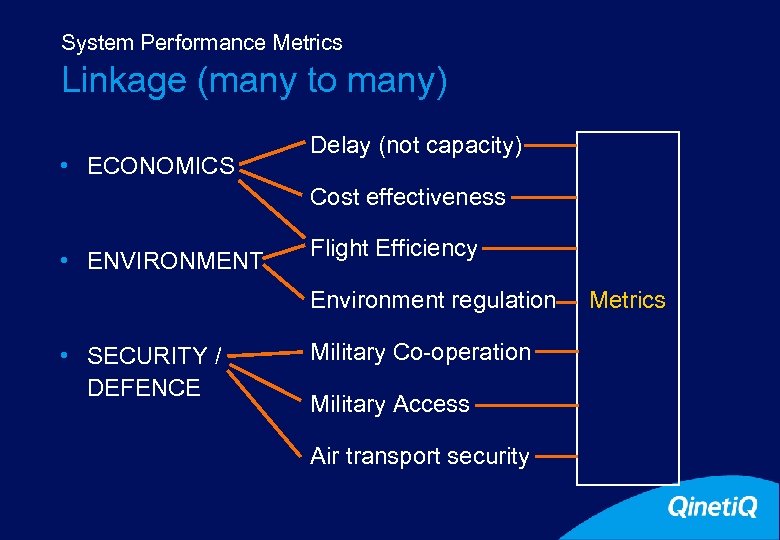

System Performance Metrics Linkage (many to many)
• ECONOMICS
  – Delay (not capacity)
  – Cost effectiveness
• ENVIRONMENT
  – Flight Efficiency
  – Environment regulation
• SECURITY / DEFENCE
  – Military Co-operation
  – Military Access
  – Air transport security
[Diagram label: Metrics]
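A many-to-many linkage is usually stored as a list of pairs rather than as a strict tree. The sketch below is illustrative only. The pairing of objectives with performance areas follows the order in which the items appear on the slide, which is itself an assumption, and the final pair is a hypothetical link added purely to show one area belonging to two objectives.

```python
from collections import defaultdict

# (objective, performance area) pairs, read off the slide in order.
LINKS = [
    ("ECONOMICS", "Delay"),
    ("ECONOMICS", "Cost effectiveness"),
    ("ENVIRONMENT", "Flight Efficiency"),
    ("ENVIRONMENT", "Environment regulation"),
    ("SECURITY / DEFENCE", "Military Co-operation"),
    ("SECURITY / DEFENCE", "Military Access"),
    ("SECURITY / DEFENCE", "Air transport security"),
    # Hypothetical extra link, not from the deck: shows the many-to-many case.
    ("ECONOMICS", "Flight Efficiency"),
]


def build_indexes(links):
    """Index the pair list in both directions for quick lookup."""
    areas_by_objective = defaultdict(set)
    objectives_by_area = defaultdict(set)
    for objective, area in links:
        areas_by_objective[objective].add(area)
        objectives_by_area[area].add(objective)
    return areas_by_objective, objectives_by_area


areas_by_objective, objectives_by_area = build_indexes(LINKS)

# One performance area can now be traced back to several objectives.
print(sorted(objectives_by_area["Flight Efficiency"]))
```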

System Performance Metrics Further breakdown
• PERFORMANCE AREAS broken down into ASPECTS where appropriate
• Example:
  – ACCESS (PERFORMANCE AREA)
    • Airports (ASPECT)
    • Sectors (ASPECT)
    • Routes (ASPECT)
• Assists use with scenarios that look at specific airspace

System Performance Metrics Perspectives
• Different views (perspectives) can be applied to the selection of metrics:
  – Airline perspective as in C/AFT
  – ATM perspective as in PRC
  – Validation technique
• Permits further breakdown and filtering other than purely hierarchical

System Performance Metrics Example of perspective
• Performance Area: Flight efficiency
  – Airline perspective
    • Actual fuel burn v. planned fuel burn
  – ATM perspective
    • Efficiency of route structure
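Filtering by perspective can be sketched as a tag on each metric that is checked alongside its performance area. This is an illustrative Python sketch only; the `Metric` record and the `select` function are assumed names, and the two entries are the examples from this slide.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Metric:
    name: str
    performance_area: str
    perspective: str  # "Airline" or "ATM" in this example


METRICS = [
    Metric("Actual fuel burn v. planned fuel burn", "Flight efficiency", "Airline"),
    Metric("Efficiency of route structure", "Flight efficiency", "ATM"),
]


def select(metrics: List[Metric], performance_area: str, perspective: str) -> List[str]:
    """Return the names of metrics matching both the area and the perspective."""
    return [
        m.name
        for m in metrics
        if m.performance_area == performance_area and m.perspective == perspective
    ]


print(select(METRICS, "Flight efficiency", "Airline"))
print(select(METRICS, "Flight efficiency", "ATM"))
```

The same performance area yields a different metric for each perspective, which is the filtering "other than purely hierarchical" that the previous slide describes.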

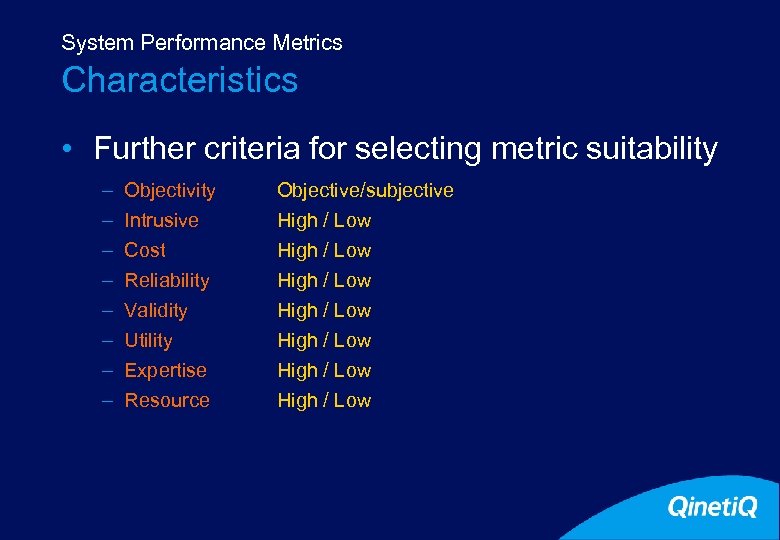

System Performance Metrics Characteristics
• Further criteria for selecting metric suitability
  – Characteristics: Objectivity, Intrusive, Cost, Reliability, Validity, Utility, Expertise, Resource
  – Ratings shown: Objective/subjective; High / Low

System Performance Metrics Analysis
• To illustrate feasibility of approach a ‘demonstrator’ database was created
• 230 System Performance Metrics stored on database
• Derived from recognised sources
• Preliminary metric classification
• Perspectives available
  – ATS provider / Operator / ASAS / Analysis Type (or any combination of these)
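Querying on "any combination" of perspectives can be sketched with a small relational schema. The example uses Python's built-in `sqlite3` module as a stand-in, not the demonstrator itself. The table and column names are hypothetical, and the perspective tags on the two sample rows are assumptions made only so that the query has something to return.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE metric (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        performance_area TEXT NOT NULL
    );
    -- Link table: one metric may carry several perspectives.
    CREATE TABLE metric_perspective (
        metric_id INTEGER NOT NULL REFERENCES metric(id),
        perspective TEXT NOT NULL,
        PRIMARY KEY (metric_id, perspective)
    );
    """
)
conn.executemany(
    "INSERT INTO metric VALUES (?, ?, ?)",
    [
        (1, "Actual fuel burn v. planned fuel burn", "Flight Efficiency"),
        (2, "Efficiency of route structure", "Flight Efficiency"),
    ],
)
# Assumed tagging, for illustration only.
conn.executemany(
    "INSERT INTO metric_perspective VALUES (?, ?)",
    [(1, "Operator"), (1, "ASAS"), (2, "ATS provider"), (2, "ASAS")],
)


def metrics_with_all(conn, perspectives):
    """Return names of metrics tagged with every requested perspective."""
    placeholders = ", ".join("?" for _ in perspectives)
    rows = conn.execute(
        f"""
        SELECT m.name
        FROM metric AS m
        JOIN metric_perspective AS p ON p.metric_id = m.id
        WHERE p.perspective IN ({placeholders})
        GROUP BY m.id
        HAVING COUNT(DISTINCT p.perspective) = ?
        ORDER BY m.name
        """,
        (*perspectives, len(perspectives)),
    ).fetchall()
    return [name for (name,) in rows]


print(metrics_with_all(conn, ["ASAS", "Operator"]))
```

Keeping perspectives in a separate link table, rather than as a single column on `metric`, is what allows one metric to appear under several perspectives at once.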

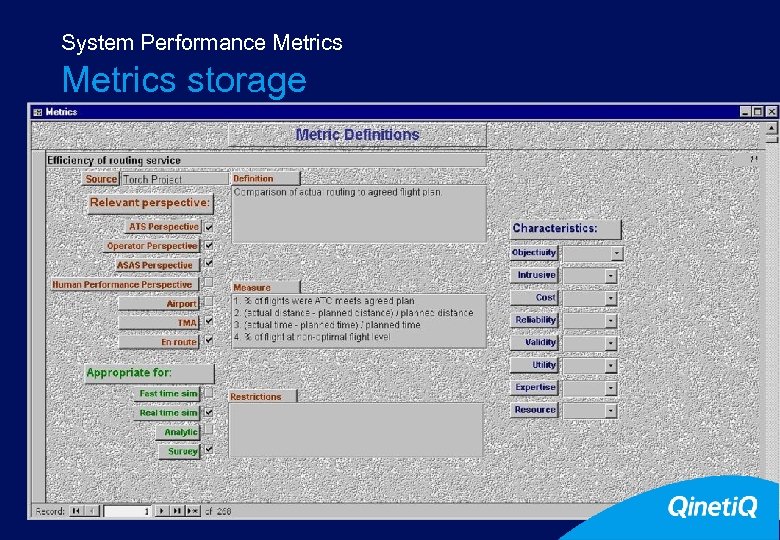

System Performance Metrics storage

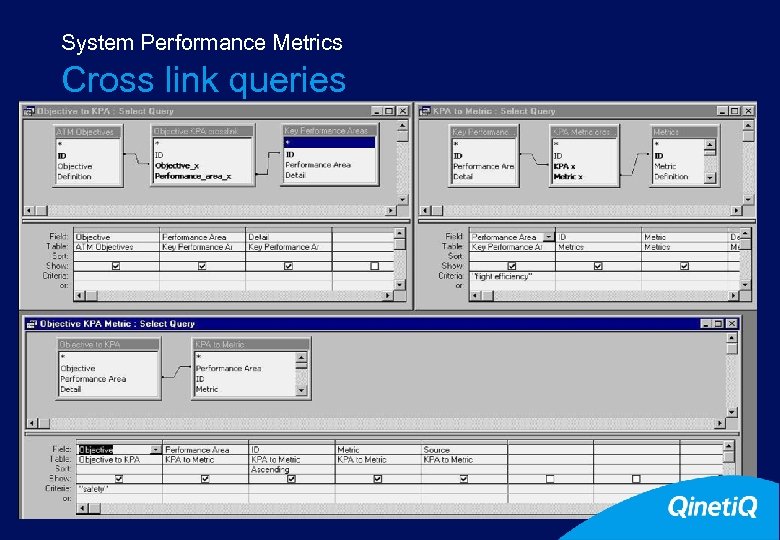

System Performance Metrics Cross link queries

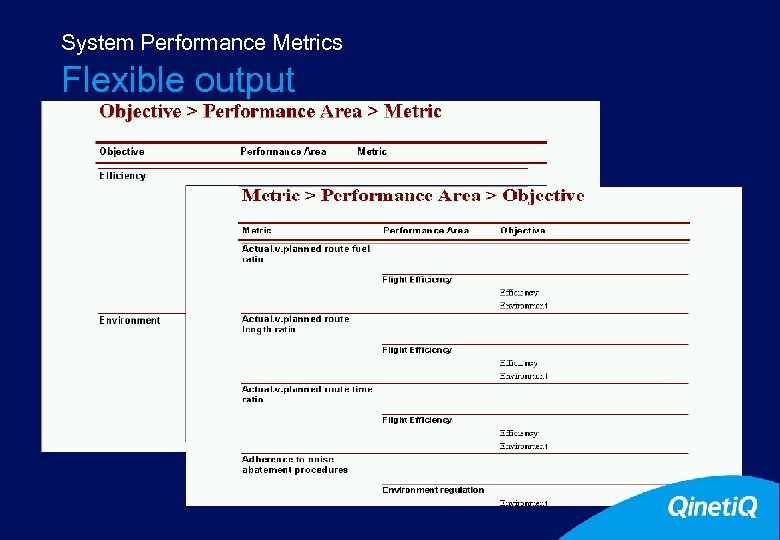

System Performance Metrics Flexible output

System Performance Metrics ASAS case studies
• Time based sequencing in approach
• Airborne self-separation in en-route airspace

System Performance Metrics selection criteria
• Time based sequencing in approach
  – Selected Objectives: Safety; Capacity; Economics
  – Selected Performance Areas: Safety; Delay; Cost Effectiveness; Flexibility; Flight Efficiency
  – Methodology: Each of...
    1. Analytic or fast-time simulation
    2. Real-time simulation
  – Airspace: TMA / Airport
  – Perspective: ASAS & each of...
    1. Operator
    2. Service provider

System Performance Metrics selection criteria
• Airborne self-separation in en-route airspace
  – Selected Objectives: Safety; Capacity; Economics
  – Selected Performance Areas: Safety; Delay; Cost Effectiveness; Predictability; Flexibility; Flight Efficiency; Equity
  – Methodology: Each of...
    1. Analytic or fast-time simulation
    2. Real-time simulation
  – Airspace: En-route
  – Perspective: ASAS & each of...
    1. Operator
    2. Service provider
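The selection criteria for the two case studies can be written down as plain data, which makes them easy to compare. The sketch below is a hedged illustration: `SelectionCriteria` and `runs` are hypothetical names, and reading "each of" as a cross product of methodology and perspective is an assumption about how the slides are meant to be combined.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SelectionCriteria:
    case_study: str
    objectives: frozenset
    performance_areas: frozenset
    airspace: str


# Shared by both case studies on the slides.
METHODOLOGIES = ("Analytic or fast-time simulation", "Real-time simulation")
PERSPECTIVES = ("Operator", "Service provider")  # each is combined with ASAS

sequencing = SelectionCriteria(
    case_study="Time based sequencing in approach",
    objectives=frozenset({"Safety", "Capacity", "Economics"}),
    performance_areas=frozenset(
        {"Safety", "Delay", "Cost Effectiveness", "Flexibility", "Flight Efficiency"}
    ),
    airspace="TMA / Airport",
)

self_separation = SelectionCriteria(
    case_study="Airborne self-separation in en-route airspace",
    objectives=frozenset({"Safety", "Capacity", "Economics"}),
    performance_areas=frozenset(
        {
            "Safety", "Delay", "Cost Effectiveness", "Predictability",
            "Flexibility", "Flight Efficiency", "Equity",
        }
    ),
    airspace="En-route",
)


def runs(criteria: SelectionCriteria):
    """List (methodology, perspective) combinations; the same for any case study here."""
    return [(m, "ASAS + " + p) for m, p in product(METHODOLOGIES, PERSPECTIVES)]


# Performance areas selected only for the en-route case study.
print(sorted(self_separation.performance_areas - sequencing.performance_areas))
print(len(runs(sequencing)))
```

Written this way, the difference between the case studies reduces to the airspace and two additional performance areas (Predictability and Equity); the objectives, methodologies and perspectives are identical.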

System Performance Metrics selection
• Microsoft Access prototype developed to demonstrate the filtering and selection process
• Automated selection process provides guidance
  – Identifies metrics used in previous work
  – List is not definitive or restrictive
• Once automatic selection process is complete, a manual overview can select the most appropriate metrics
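The two-stage process (automated guidance followed by a manual overview) might look like the following in outline. This is a sketch in Python rather than the Microsoft Access prototype; the function names are hypothetical, and the catalogue entries marked as placeholders are invented for illustration and are not metrics from the database.

```python
CATALOGUE = [
    {"name": "Actual fuel burn v. planned fuel burn", "performance_area": "Flight Efficiency"},
    {"name": "Efficiency of route structure", "performance_area": "Flight Efficiency"},
    {"name": "Placeholder delay metric", "performance_area": "Delay"},    # hypothetical
    {"name": "Placeholder access metric", "performance_area": "Access"},  # hypothetical
]


def automated_selection(catalogue, wanted_areas):
    """Stage 1: suggest every metric whose performance area was selected."""
    return [m["name"] for m in catalogue if m["performance_area"] in wanted_areas]


def manual_overview(candidates, keep, extra=()):
    """Stage 2: the analyst keeps some suggestions and may add others.

    The automated list is guidance only, so metrics outside it are allowed.
    """
    chosen = [name for name in candidates if name in keep]
    chosen.extend(name for name in extra if name not in chosen)
    return chosen


candidates = automated_selection(CATALOGUE, {"Delay", "Flight Efficiency"})
final = manual_overview(
    candidates,
    keep={"Efficiency of route structure", "Placeholder delay metric"},
    extra=["Analyst-defined metric"],  # hypothetical addition outside the catalogue
)
print(candidates)
print(final)
```

Allowing `extra` entries in the second stage reflects the slide's point that the generated list is neither definitive nor restrictive.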

System Performance Metrics Conclusions
• System performance metrics can be linked to the strategic objectives of ATM (and ASAS)
• The work has successfully consolidated metrics from a number of sources
• Effective filtering requires effective classification - this will necessarily be an ongoing and iterative process
• Selection process provides guidance - it is not definitive or restrictive
