38c8952a68bf08918375ee676dd940e1.ppt

- Number of slides: 35

Cardiff University Advanced Research Computing Suppliers Briefing

Dr Hugh Beedie (INSRV and ARCCA CTO)

Dr Chris Dickson (SRIF 3 HEC Programme Coordinator)

Cardiff University Supplier Briefing

- Purpose
  - Inform Suppliers of CU requirement and vision
  - Supplier to outline Technology Roadmap and Service capabilities

Morning Session

- 11:00 to 11:40
  - Presentation from CU staff (30-35 minutes)
  - Questions from supplier (5-10 minutes)
- 11:40 to 12:40
  - Presentation from supplier (45-50 minutes)
  - Questions from CU staff (10-15 minutes)
- 12:40 to 13:00
  - Closed session for CU staff (20 minutes)

Afternoon Session

- 14:00 to 14:40
  - Presentation from CU staff (30-35 minutes)
  - Questions from supplier (5-10 minutes)
- 14:40 to 15:40
  - Presentation from supplier (45-50 minutes)
  - Questions from CU staff (10-15 minutes)
- 15:40 to 16:00
  - Closed session for CU staff (20 minutes)

An Overview: Past, Present and Future

Dr Chris Dickson

Cardiff University History

- Founded by Royal Charter in 1883

Cardiff University Mission and Vision

- Mission: To pursue research, learning and teaching of international distinction and impact
- Vision: To be a world class university and to achieve the associated benefits for its students, staff and stakeholders

Cardiff University Scale and Scope

- 26,000 Students
  - 21,000 Undergraduates
  - 5,000 Postgraduates
  - 35,000 Applications for 4,500 Places
- 5,500 Staff
  - 2,500 Academic & Research
- 28 Academic Schools
  - 15 Schools RAE 5, 7 Schools RAE 5*

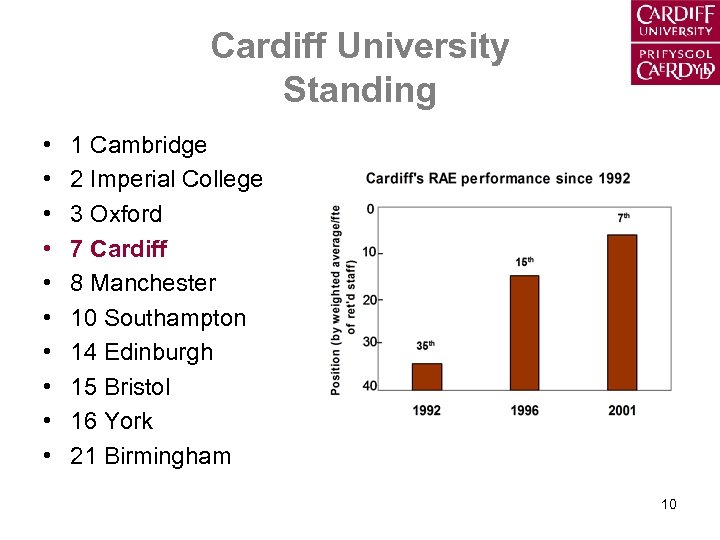

Cardiff University Standing

- A member of the Russell Group of leading research universities
- Research Excellence
  - 7th UK University Ranking
- Research Assessment Exercise 2001
  - Most Recent RAE

Cardiff University Standing

- 1 Cambridge
- 2 Imperial College
- 3 Oxford
- 7 Cardiff
- 8 Manchester
- 10 Southampton
- 14 Edinburgh
- 15 Bristol
- 16 York
- 21 Birmingham

Advanced Research Computing Current Applications

- High Speed Cluster
  - Galaxy Formation
  - Gene Sequencing
  - Mantle Simulation
  - Molecular Simulation
  - Orthodontic Modelling
  - Protein Sequencing
- Gigabit Cluster
  - Gravitational Waves
- Shared Memory
  - Computational Fluid Dynamics
  - Environmental Engineering
  - Star Formation
- Condor
  - Protein Structure Determination
  - Radiotherapy Simulation

Advanced Research Computing Current Schools

- Architecture
- Biosciences
- Business
- Chemistry
- Computer Science
- Dentistry
- Earth, Ocean and Planetary Sciences
- English, Communication and Philosophy
- Engineering
- History and Archaeology
- Mathematics
- Medicine
- Optometry and Vision Sciences
- Pharmacy
- Physics and Astronomy
- Psychology

Cardiff HEC Strategy

- World class facility for the university
- Adopt a more coordinated approach
- University investment
  - New organisation - Advanced Research Computing @ Cardiff (ARCCA)
  - Staff accommodation and machine room
- SRIF 3 investment in equipment and supporting infrastructure

Offices and Machine Room

- Machine Room
  - Area 175 sq m
  - Ground Floor
  - False Flooring & Ceiling
    - Power, Cooling, Network
- Power
  - Separate Bus-Bar + Meter
- Cooling
  - Rack-based Cooling
  - Room-based Cooling
- Security
  - Key Card Access
  - Closed Circuit TV
- Fire + Water Damage
  - Smoke Detection
  - Water Detection
  - Automated Shutdown
- Office space for >12 staff

ARCCA Vision

- To build and sustain a position which takes the university to the forefront of leading research universities in the UK and internationally in this field
- To work very closely with all of the relevant academic schools and administrative directorates

ARCCA Objectives

- To transform the University’s approach to advanced research computing
- To bring together the advanced research computing community
- To introduce and encourage the use of new techniques and technologies
- To encourage and enable new user communities and new applications
- To encourage new and interdisciplinary research using advanced research computing techniques

ARCCA Organisation

- Director (TBC)
- Chief Technology Officer (Hugh Beedie)
- Application Specialists
- Infrastructure Specialists
- e-Research facilitation
- Administrative Assistant

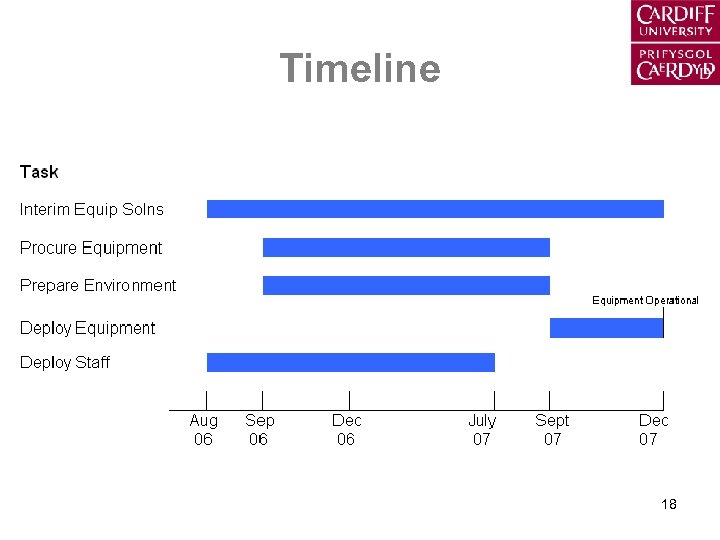

Timeline

Tender Requirements

Dr Hugh Beedie

Cardiff’s Journey

- Separate research projects with project-based HEC facilities
- Some collaborations (e.g. Helix)
- Strategic decision to provide University level solution
  - SRIF 3 grant
  - ARCCA organisation
  - Select strategic partner

Partnership

- State of the art of your technical offerings now and in Autumn 2007?
- Form factor, heat management, power consumption and management?
- HEC landscape over the next 3-7 years?
- How, over 3-7 years, you can help us to achieve our ambitions to offer "best-in-class" research computing capabilities and services on a par with world-leading campus-level centres?
- Innovative and leap-frogging steps you can help us to take whilst at the same time being able to offer a service to today's "classic" HEC users.

Why Should You Partner Cardiff?

- Research quality (7th in UK)
- Condor leader (1500 PCs by end 2006)
- Completing and coordinating the HEC service spectrum
- Current breadth of academic involvement
- WeSC involvement and NGS partner
- Serious ambitions for
  - More Interdisciplinary work
  - Wider research applications
  - New techniques

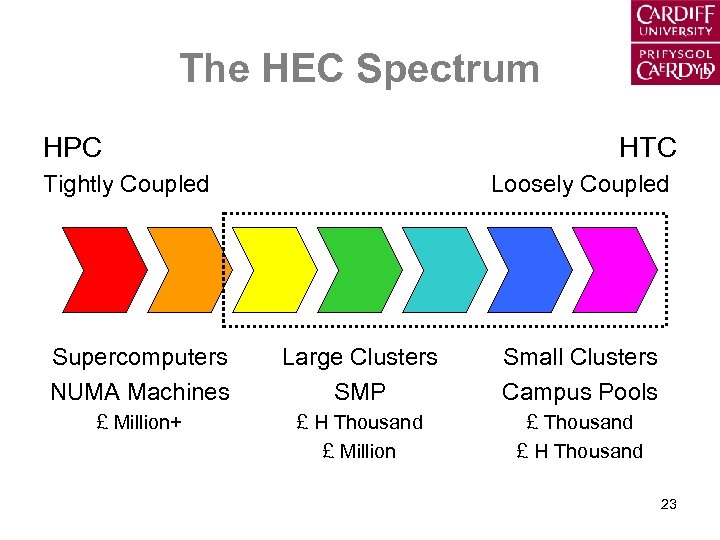

The HEC Spectrum

- Axis: HPC (Tightly Coupled) to HTC (Loosely Coupled)
- Systems: Supercomputers, NUMA Machines, Large Clusters, SMP, Small Clusters, Campus Pools
- Cost labels: £ Million+, £ H Thousand, £ Million, £ Thousand, £ H Thousand

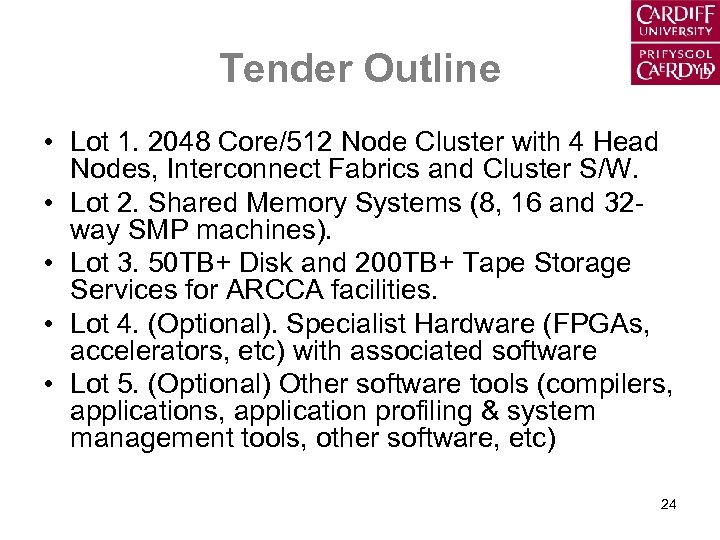

Tender Outline

- Lot 1. 2048 Core/512 Node Cluster with 4 Head Nodes, Interconnect Fabrics and Cluster S/W.
- Lot 2. Shared Memory Systems (8, 16 and 32 way SMP machines).
- Lot 3. 50 TB+ Disk and 200 TB+ Tape Storage Services for ARCCA facilities.
- Lot 4 (Optional). Specialist Hardware (FPGAs, accelerators, etc.) with associated software.
- Lot 5 (Optional). Other software tools (compilers, application profiling & system management tools, other software, etc.).

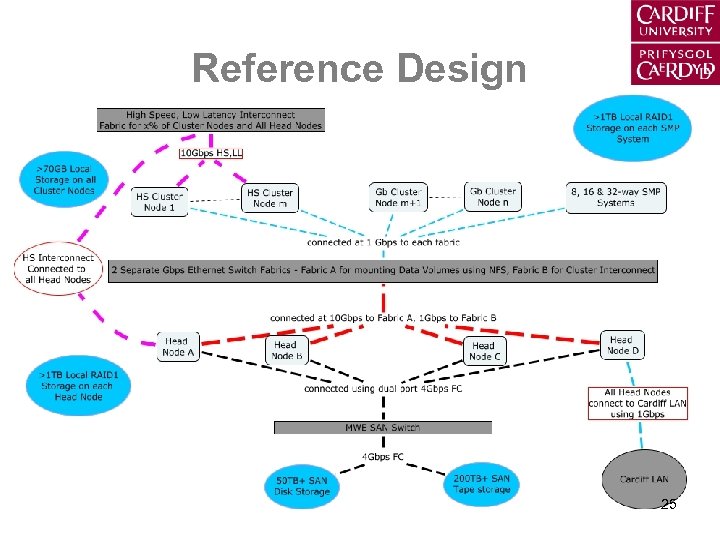

Reference Design

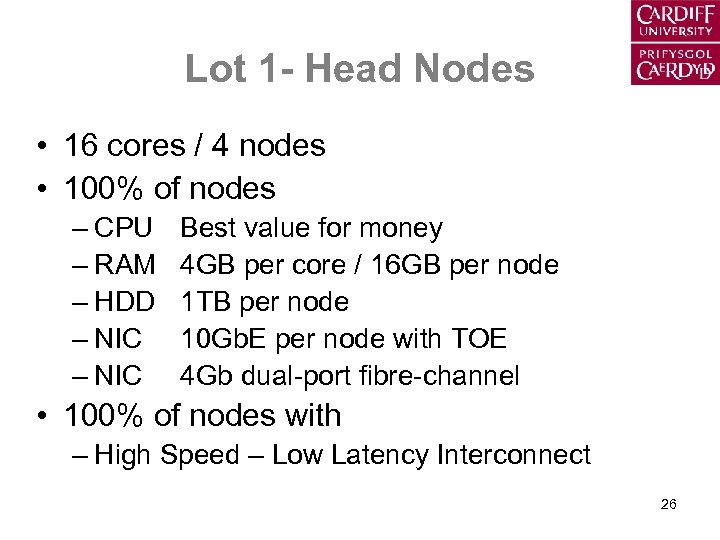

Lot 1 - Head Nodes

- 16 cores / 4 nodes
- 100% of nodes
  - CPU: Best value for money
  - RAM: 4 GB per core / 16 GB per node
  - HDD: 1 TB per node
  - NIC: 10 GbE per node with TOE
  - 4 Gb dual-port fibre-channel
- 100% of nodes with
  - High Speed
  - Low Latency Interconnect

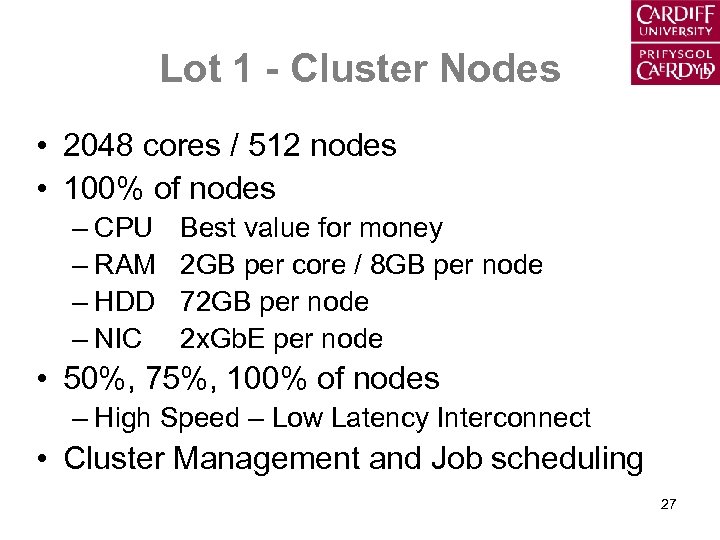

Lot 1 - Cluster Nodes

- 2048 cores / 512 nodes
- 100% of nodes
  - CPU: Best value for money
  - RAM: 2 GB per core / 8 GB per node
  - HDD: 72 GB per node
  - NIC: 2 x GbE per node
- 50%, 75%, 100% of nodes
  - High Speed
  - Low Latency Interconnect
- Cluster Management and Job scheduling
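The per-node and aggregate figures implied by the Lot 1 cluster node slide can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the `NodeSpec` class and the `interconnect_nodes` helper are hypothetical names introduced here, and the only inputs are the numbers quoted on the slide (512 nodes, 2048 cores, 2 GB per core, 72 GB disk per node, and interconnect options at 50%, 75% and 100% of nodes).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical container for the figures quoted on the slide."""
    nodes: int
    cores: int
    ram_gb_per_core: int
    disk_gb_per_node: int

    @property
    def cores_per_node(self) -> int:
        return self.cores // self.nodes

    @property
    def ram_gb_per_node(self) -> int:
        return self.cores_per_node * self.ram_gb_per_core

    @property
    def total_ram_gb(self) -> int:
        return self.cores * self.ram_gb_per_core

    @property
    def total_disk_gb(self) -> int:
        return self.nodes * self.disk_gb_per_node


def interconnect_nodes(spec: NodeSpec, percent: int) -> int:
    """Number of nodes on the high-speed interconnect for a given option."""
    return spec.nodes * percent // 100


# Figures taken from the Lot 1 cluster node slide.
CLUSTER = NodeSpec(nodes=512, cores=2048, ram_gb_per_core=2, disk_gb_per_node=72)

if __name__ == "__main__":
    print("cores per node:", CLUSTER.cores_per_node)
    print("RAM per node (GB):", CLUSTER.ram_gb_per_node)
    print("total RAM (GB):", CLUSTER.total_ram_gb)
    print("total local disk (GB):", CLUSTER.total_disk_gb)
    for pct in (50, 75, 100):
        print(f"{pct}% interconnect option:", interconnect_nodes(CLUSTER, pct), "nodes")
```

The derived 8 GB per node agrees with the figure stated on the slide, which is a quick consistency check on the core and node counts.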

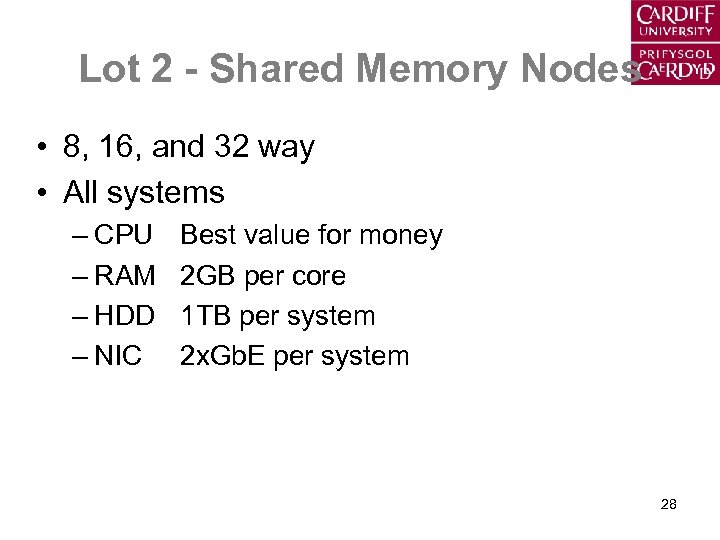

Lot 2 - Shared Memory Nodes

- 8, 16, and 32 way
- All systems
  - CPU: Best value for money
  - RAM: 2 GB per core
  - HDD: 1 TB per system
  - NIC: 2 x GbE per system

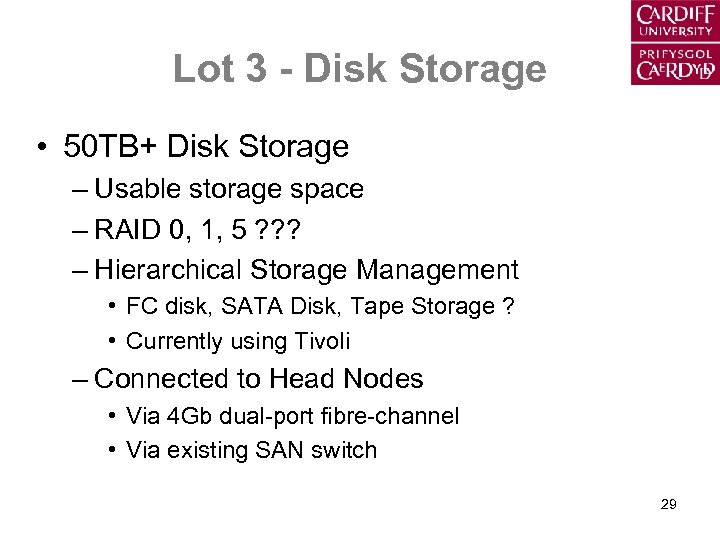

Lot 3 - Disk Storage

- 50 TB+ Disk Storage
  - Usable storage space
  - RAID 0, 1, 5?
  - Hierarchical Storage Management
    - FC disk, SATA Disk, Tape Storage?
    - Currently using Tivoli
  - Connected to Head Nodes
    - Via 4 Gb dual-port fibre-channel
    - Via existing SAN switch
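Because the 50 TB+ figure is for usable space and the RAID level is left as an open question, the raw capacity a supplier would need to provide depends on that choice. The sketch below uses the standard capacity rules for RAID 0, RAID 1 and RAID 5. The function names and the default group size of 8 disks are assumptions made for illustration, not values from the slide, and hot spares and filesystem overhead are ignored.

```python
def usable_fraction(raid_level: int, disks_per_group: int = 8) -> float:
    """Fraction of raw capacity that is usable for a given RAID level.

    disks_per_group is an assumed RAID group size and only affects RAID 5.
    """
    if raid_level == 0:
        return 1.0  # striping only, no redundancy
    if raid_level == 1:
        return 0.5  # two-way mirroring
    if raid_level == 5:
        if disks_per_group < 3:
            raise ValueError("RAID 5 needs at least 3 disks per group")
        # One disk's worth of parity per group.
        return (disks_per_group - 1) / disks_per_group
    raise ValueError(f"unsupported RAID level: {raid_level}")


def raw_tb_needed(usable_tb: float, raid_level: int, disks_per_group: int = 8) -> float:
    """Raw capacity (TB) required to deliver the requested usable capacity."""
    return usable_tb / usable_fraction(raid_level, disks_per_group)


if __name__ == "__main__":
    for level in (0, 1, 5):
        print(f"RAID {level}: {raw_tb_needed(50, level):.1f} TB raw for 50 TB usable")
```

Under these assumptions, RAID 1 doubles the raw capacity required, while RAID 5 adds one disk of parity per group, so the overhead shrinks as the group size grows.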

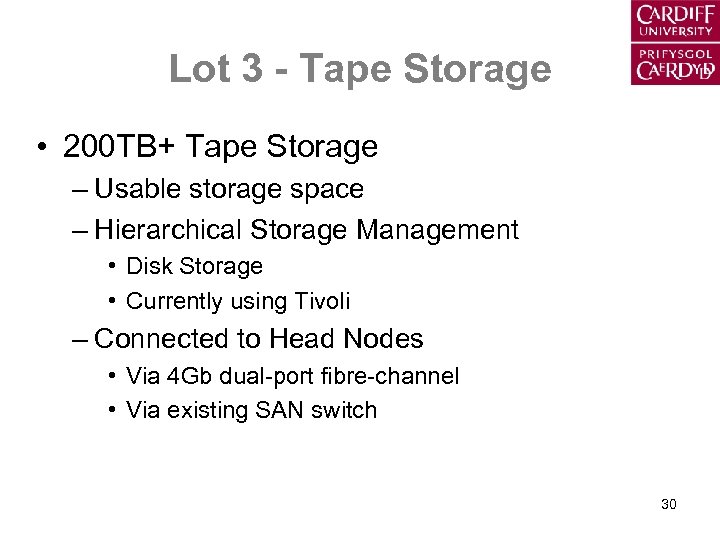

Lot 3 - Tape Storage

- 200 TB+ Tape Storage
  - Usable storage space
  - Hierarchical Storage Management
    - Disk Storage
    - Currently using Tivoli
  - Connected to Head Nodes
    - Via 4 Gb dual-port fibre-channel
    - Via existing SAN switch

Lot 4 - Specialised Hardware

R&D - Innovative Computational Methods

- Computational Accelerators
- Field-Programmable Gate Arrays
- Programmable Graphics Processors
- Cell Processors

Lot 5 - Other Software Tools

- We invite you to comment on the following
  - Extra Cluster Management Tools
  - Extra Cluster Diagnostic Tools
  - Other Development Tools
    - Optimised Compilers & Libraries
      - Intel, Portland
    - Debuggers
      - Parallel Debuggers
  - Applications And Libraries
    - Profiling tools

Support Issues

- We invite you to comment on the following
  - Spares stock vs guaranteed replacement time
  - Development/test nodes x 8
  - Numbers of technical cluster support staff
    - Location
    - Skill sets
  - Single point of contact and responsibility
    - Including direct access to 3rd party tech support

Any Questions?

Procurement contacts:

- Beedie@cardiff.ac.uk (Primary contact)
- Dickson@cardiff.ac.uk (Secondary contact)

Notes
