
- Number of slides: 39

C&M Systems Developed by Local MICE Community

J. Leaver, 03/06/2009

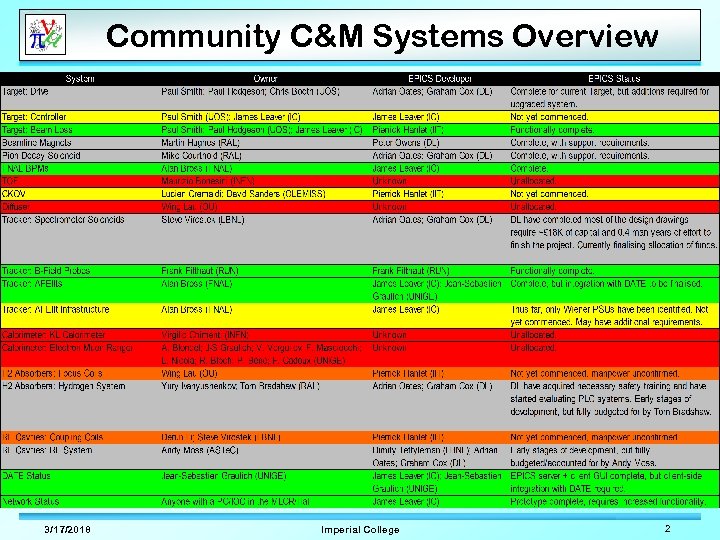

Community C&M Systems Overview 3/17/2018 Imperial College 2

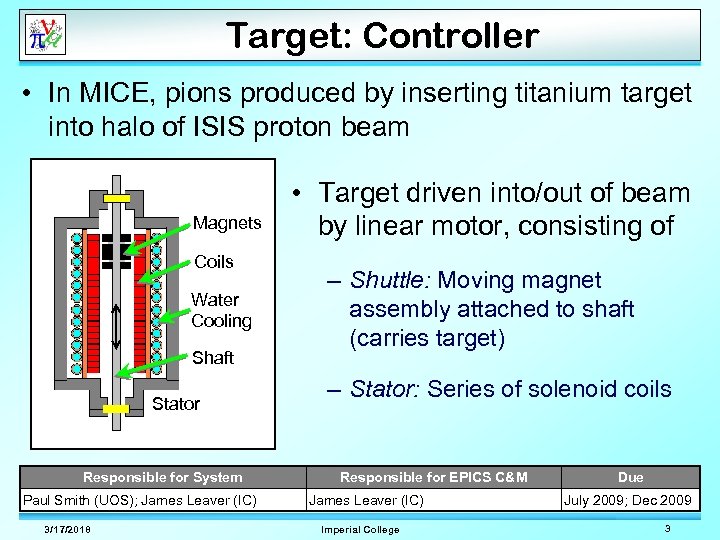

Target: Controller

- In MICE, pions produced by inserting titanium target into halo of ISIS proton beam
- Target driven into/out of beam by linear motor, consisting of
  - Shuttle: Moving magnet assembly attached to shaft (carries target)
  - Stator: Series of solenoid coils
- Diagram labels: Magnets, Coils, Water Cooling, Shaft, Stator

Responsible for System: Paul Smith (UOS); James Leaver (IC). Responsible for EPICS C&M: James Leaver (IC). Due: July 2009; Dec 2009.

Target: Controller

- Target Controller electronics actuates target by modulating Stator coil currents
  - Required motion profiles achieved with position monitoring + feedback algorithm
- In terms of ‘user-level’ controls, need to
  - Enable actuation
  - Specify target dip depth (relative to beamline)
  - Specify timing delays for synchronisation with ISIS

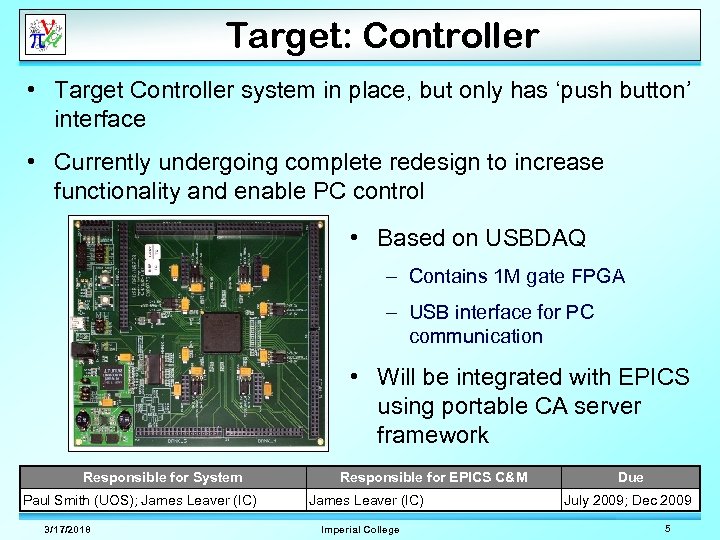

Target: Controller

- Target Controller system in place, but only has ‘push button’ interface
- Currently undergoing complete redesign to increase functionality and enable PC control
- Based on USBDAQ
  - Contains 1 M gate FPGA
  - USB interface for PC communication
- Will be integrated with EPICS using portable CA server framework

Target: Controller

- In hardware/firmware design stage
  - EPICS development not yet commenced
- Stage 1 upgrade will be complete end of July 2009
  - Interfaces USBDAQ with existing analogue electronics
  - EPICS C&M system recreating current ‘push button’ controls
- Stage 2 upgrade to be completed end of December 2009
  - Redesign of analogue electronics
  - Enable fine control of subsystems

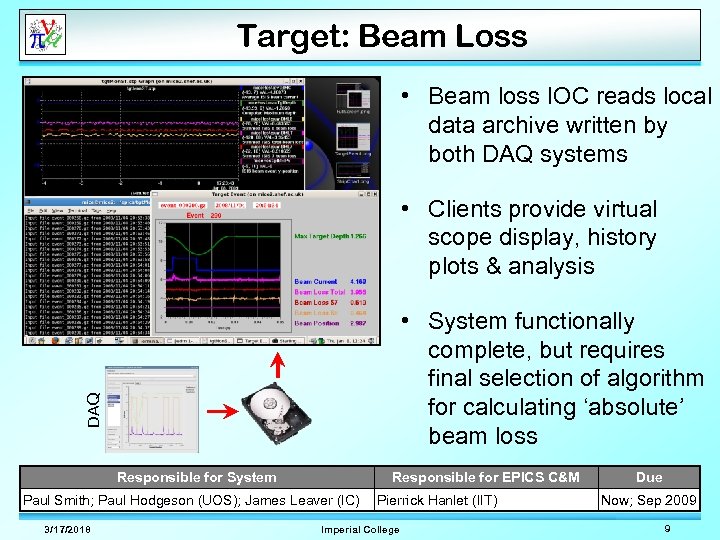

Target: Beam Loss

- While actuating, necessary to record beam loss reported by ISIS
  - Monitor effect of MICE operations on ISIS
  - Ultimately a measure of particle production vs. Target dip depth
- Target depth reported by control electronics
- Beam loss signals provided by ionisation chambers distributed around ISIS synchrotron
- Read using National Instruments PCI 6254 DAQ card
  - To be replaced by custom DAQ board (early 2010)

Responsible for System / Responsible for EPICS C&M (names as listed on the slide): Paul Smith; Paul Hodgeson (UOS); James Leaver (IC); Pierrick Hanlet (IIT). Due: Now; Sep 2009.

Target: Beam Loss

- Beam loss is an important physics parameter
  - Needs to be read directly via DATE, for correct synchronisation and inclusion in DAQ data stream
- However, require capacity to run Target when DATE is inactive (during test & development phases)
  - Additional ‘standalone’ Target DAQ software exists
- EPICS-based monitoring system should operate with either DATE or standalone DAQ

Target: Beam Loss

- Beam loss IOC reads local data archive written by both DAQ systems
- Clients provide virtual scope display, history plots & analysis
- System functionally complete, but requires final selection of algorithm for calculating ‘absolute’ beam loss
- Diagram label: DAQ

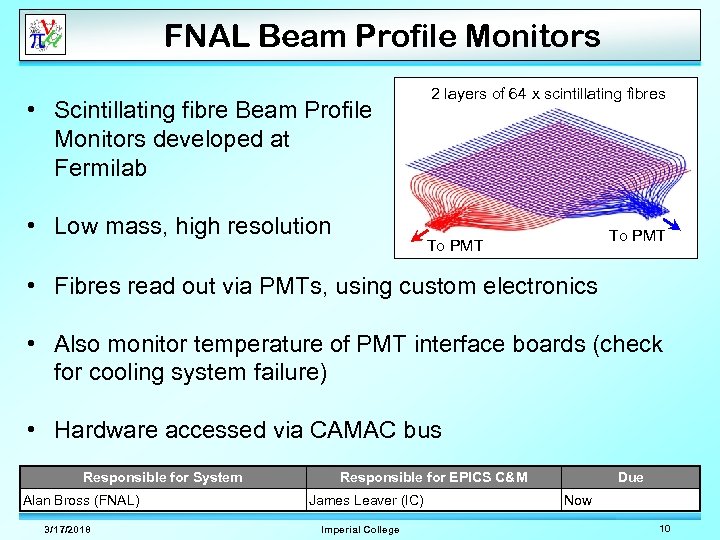

FNAL Beam Profile Monitors

- Scintillating fibre Beam Profile Monitors developed at Fermilab
- Low mass, high resolution
- Fibres read out via PMTs, using custom electronics
- Also monitor temperature of PMT interface boards (check for cooling system failure)
- Hardware accessed via CAMAC bus
- Diagram labels: 2 layers of 64 x scintillating fibres; To PMT

Responsible for System: Alan Bross (FNAL). Responsible for EPICS C&M: James Leaver (IC). Due: Now.

FNAL Beam Profile Monitors

- C&M system developed using MICE portable CA wrapper framework
- 2 server applications
  - Control Server (performs hardware access)
    - Start/stop readout thread (external trigger enable)
    - Set pedestal levels
    - Writes acquired values to Data Server
  - Data Server
    - Contains most recent fibre data & temperature monitoring values
    - Reduces impact of client monitoring load on hardware readout
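
The Control Server / Data Server split can be sketched as follows. This is a minimal illustration, not the MICE portable CA wrapper framework: the class names, the `read_hardware` callable and `fake_readout` are hypothetical stand-ins, and no EPICS calls are made. It only shows why client reads do not add load to the hardware readout.

```python
import threading


class DataServer:
    """Caches the most recent values so clients never touch the hardware."""

    def __init__(self):
        self._lock = threading.Lock()
        self._latest = {}

    def publish(self, values):
        # Called by the Control Server after each hardware readout.
        with self._lock:
            self._latest.update(values)

    def read(self, name):
        # Called by any number of monitoring clients.
        with self._lock:
            return self._latest[name]


class ControlServer:
    """Owns hardware access and pushes acquired values to the Data Server."""

    def __init__(self, read_hardware, data_server):
        self._read_hardware = read_hardware  # hypothetical callable returning a dict
        self._data_server = data_server
        self.hardware_reads = 0

    def acquire_once(self):
        values = self._read_hardware()
        self.hardware_reads += 1
        self._data_server.publish(values)


def fake_readout():
    # Stand-in for the CAMAC readout: 2 layers of 64 fibres plus one
    # board temperature (value and unit are made up for illustration).
    return {"fibres": [[0] * 64 for _ in range(2)], "board_temp_c": 25.0}
```

With this arrangement, one call to `acquire_once()` can serve any number of subsequent `read()` calls, so the hardware access rate is set by the readout thread rather than by the number of clients.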

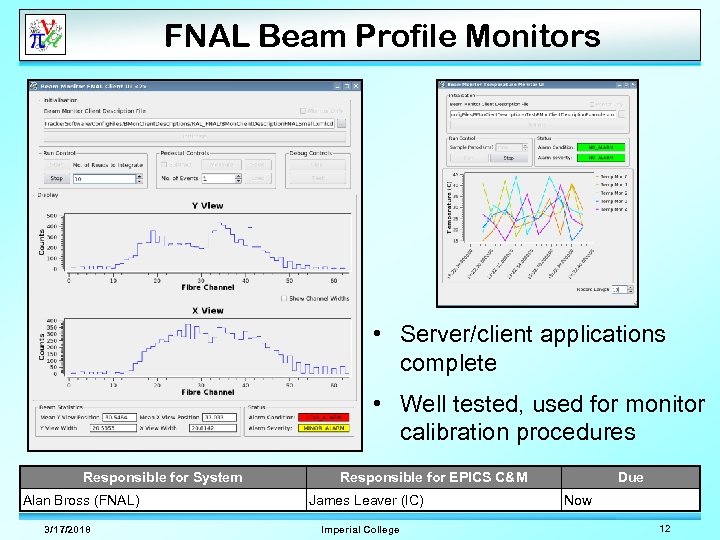

FNAL Beam Profile Monitors

- Server/client applications complete
- Well tested, used for monitor calibration procedures

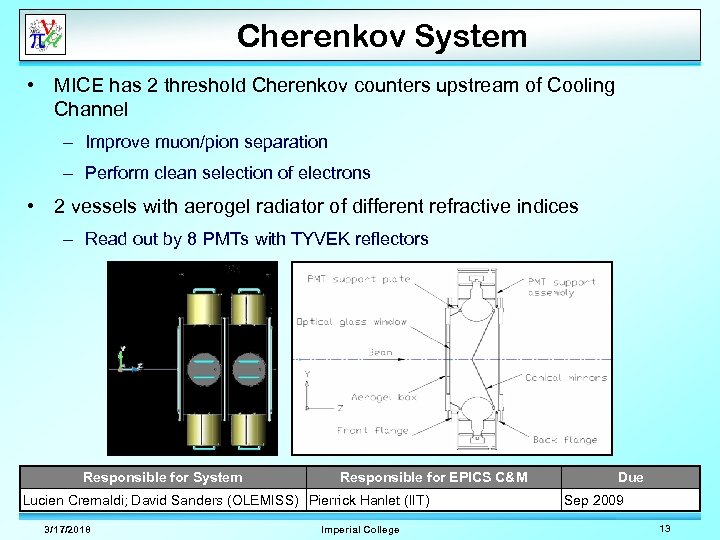

Cherenkov System

- MICE has 2 threshold Cherenkov counters upstream of Cooling Channel
  - Improve muon/pion separation
  - Perform clean selection of electrons
- 2 vessels with aerogel radiator of different refractive indices
  - Read out by 8 PMTs with TYVEK reflectors

Responsible for System: Lucien Cremaldi; David Sanders (OLEMISS). Responsible for EPICS C&M: Pierrick Hanlet (IIT). Due: Sep 2009.

Cherenkov System

- C&M components
  - Temperature + humidity
    - Sensor module read out via SNMP
    - IOC will access sensors using existing devSNMP device support (LNAL)
  - PMT HV supplies
    - CAEN HV modules
    - C++ drivers available: use portable CA server
- P. Hanlet currently investigating requirements
  - Work on IOC implementation not yet commenced
  - Will develop system in September (after completing general infrastructure tasks: AH, CA)

Tracker: Magnetic Field Probes

- Scintillating fibre Trackers enable measurement of muon beam emittance before/after MICE Cooling Channel
- Trackers require 4 T uniform field, provided by Spectrometer Solenoid modules
- Necessary to monitor uniformity/stability of field
- NIKHEF Hall probes will be installed
  - In homogeneous region of Tracker volume (centre)
  - At edges of Tracker volume
  - Outside solenoids (backup check of field polarity)

Responsible for System: Frank Filthaut (RUN). Responsible for EPICS C&M: Frank Filthaut (RUN). Due: Nov 2009.

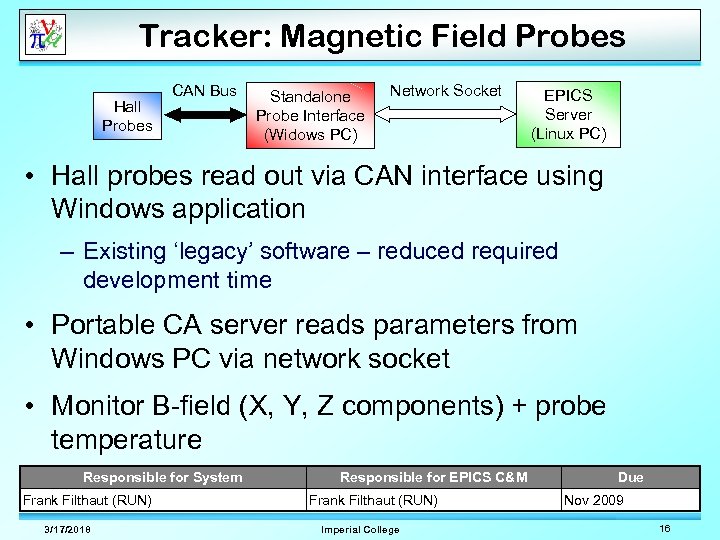

Tracker: Magnetic Field Probes

- Diagram labels: Hall Probes; CAN Bus; Standalone Probe Interface (Windows PC); Network Socket; EPICS Server (Linux PC)
- Hall probes read out via CAN interface using Windows application
  - Existing ‘legacy’ software: reduced required development time
- Portable CA server reads parameters from Windows PC via network socket
- Monitor B-field (X, Y, Z components) + probe temperature
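
A minimal sketch of how the server side might turn one record received over the network socket into monitored values. The wire format is not given in the slides: the whitespace-separated `id bx by bz temp` record, the field names and the units (tesla, degrees Celsius) are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class HallProbeReading:
    probe_id: int
    bx_t: float           # field components, assumed to be in tesla
    by_t: float
    bz_t: float
    temperature_c: float  # probe temperature, assumed to be in degrees Celsius

    @property
    def magnitude_t(self):
        # Field magnitude from the three monitored components.
        return math.sqrt(self.bx_t ** 2 + self.by_t ** 2 + self.bz_t ** 2)


def parse_reading(line):
    """Parse one hypothetical 'id bx by bz temp' text record."""
    fields = line.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {line!r}")
    return HallProbeReading(int(fields[0]), *(float(f) for f in fields[1:]))
```

Rejecting malformed records with an exception keeps a bad line from the Windows application from being published as a valid field value.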

Tracker: Magnetic Field Probes

- C&M system functionally complete
  - Required additions: Error handling refinements & definition of alarm limits
  - To be finalised during installation at RAL
- Installation schedule depends on availability of Tracker hardware & F. Filthaut
  - Expect working system November 2009
- No dedicated client will be written: sufficient to display parameters via Channel Archiver Data Server

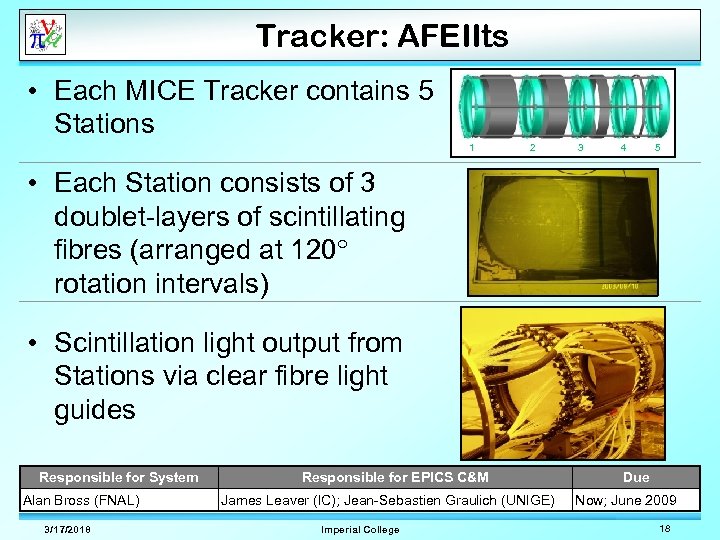

Tracker: AFEIIts

- Each MICE Tracker contains 5 Stations
- Each Station consists of 3 doublet-layers of scintillating fibres (arranged at 120° rotation intervals)
- Scintillation light output from Stations via clear fibre light guides
- Diagram labels: Stations 1, 2, 3, 4, 5

Responsible for System: Alan Bross (FNAL). Responsible for EPICS C&M: James Leaver (IC); Jean-Sebastien Graulich (UNIGE). Due: Now; June 2009.

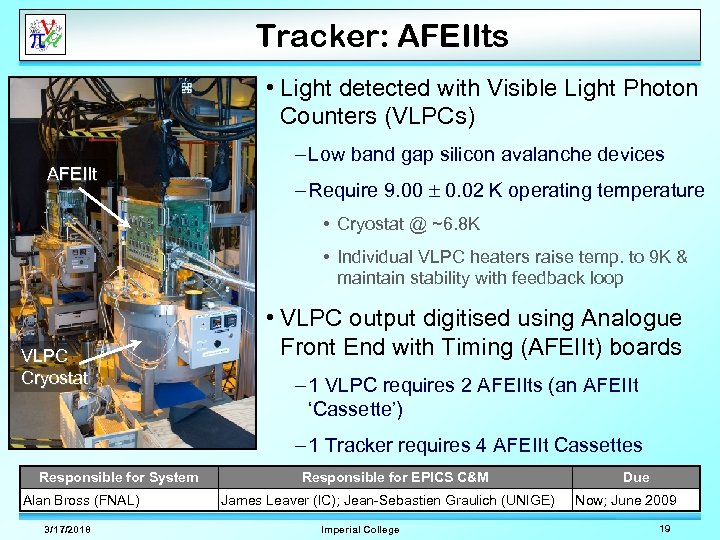

Tracker: AFEIIts

- Light detected with Visible Light Photon Counters (VLPCs)
  - Low band gap silicon avalanche devices
  - Require 9.00 ± 0.02 K operating temperature
    - Cryostat @ ~6.8 K
    - Individual VLPC heaters raise temp. to 9 K & maintain stability with feedback loop
- VLPC output digitised using Analogue Front End with Timing (AFEIIt) boards
  - 1 VLPC requires 2 AFEIIts (an AFEIIt ‘Cassette’)
  - 1 Tracker requires 4 AFEIIt Cassettes
- Diagram labels: AFEIIt; VLPC Cryostat

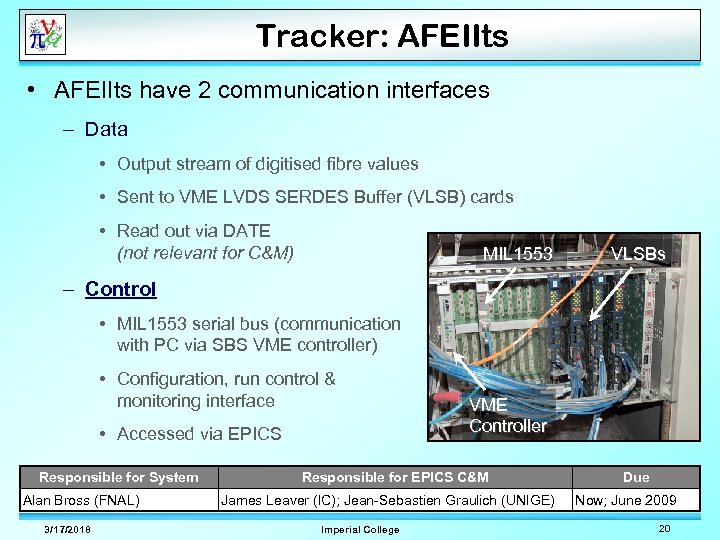

Tracker: AFEIIts

- AFEIIts have 2 communication interfaces
  - Data
    - Output stream of digitised fibre values
    - Sent to VME LVDS SERDES Buffer (VLSB) cards
    - Read out via DATE (not relevant for C&M)
  - Control
    - MIL 1553 serial bus (communication with PC via SBS VME controller)
    - Configuration, run control & monitoring interface
    - Accessed via EPICS
- Diagram labels: MIL 1553; VLSBs; VME Controller

Tracker: AFEIIts

- AFEIIt control requirements
  - VLPC signal digitisation parameters
    - Preamp/opamp gain/drive current, delays, comparator references, etc.
  - VLPC bias settings
  - VLPC heater/feedback loop configuration
  - ~230 parameters per board
- Monitoring requirements
  - VLPC temperatures (verify 9 K operation)
  - VLPC heater values
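
The 'verify 9 K operation' check can be sketched as a simple tolerance test. This assumes the operating temperature quoted on the earlier AFEIIt slide is to be read as 9.00 K with a band of 0.02 K either side; the function names and the dictionary of readings are hypothetical, and real alarm limits are not given in the slides.

```python
VLPC_SETPOINT_K = 9.00   # operating temperature quoted in the slides
VLPC_TOLERANCE_K = 0.02  # assumed to be a +/- band around the setpoint


def vlpc_temperature_ok(temperature_k,
                        setpoint_k=VLPC_SETPOINT_K,
                        tolerance_k=VLPC_TOLERANCE_K):
    """True if a VLPC temperature lies within the assumed operating band."""
    return abs(temperature_k - setpoint_k) <= tolerance_k


def out_of_range(temperatures_k):
    """Return the sorted names of VLPCs whose temperature is outside the band."""
    return sorted(name for name, t in temperatures_k.items()
                  if not vlpc_temperature_ok(t))
```

A VLPC sitting at the bare cryostat temperature of about 6.8 K (heater not regulating) would be flagged by this check.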

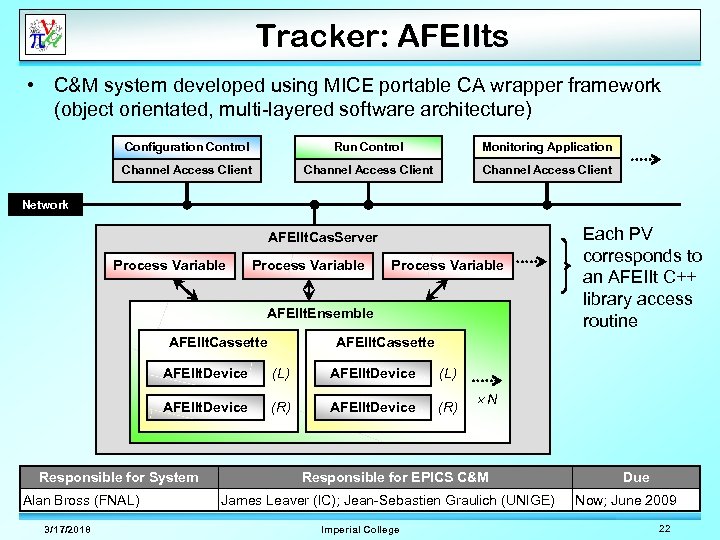

Tracker: AFEIIts

- C&M system developed using MICE portable CA wrapper framework (object orientated, multi-layered software architecture)
- Each PV corresponds to an AFEIIt C++ library access routine
- Diagram labels (as extracted): Configuration Control Run Control Monitoring Application; Channel Access Client; Network; AFEIItCasServer; Process Variable; AFEIItEnsemble; AFEIItCassette; AFEIItDevice (L); AFEIItDevice (R); N
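
A rough sketch of the Ensemble / Cassette / Device (L, R) layering, using the counts quoted in the slides (4 Cassettes per Tracker, 2 AFEIIts per Cassette, ~230 parameters per board). The Python classes and the PV naming scheme are hypothetical; the real system is a C++ library behind a portable CA server.

```python
from dataclasses import dataclass, field


@dataclass
class AFEIItDevice:
    cassette: int
    side: str  # "L" or "R"

    def pv_name(self, parameter):
        # Hypothetical naming scheme, not taken from the slides.
        return f"AFEIIT:CAS{self.cassette}:{self.side}:{parameter}"


@dataclass
class AFEIItCassette:
    index: int
    devices: list = field(default_factory=list)

    def __post_init__(self):
        # One Cassette is a left/right pair of AFEIIt boards.
        if not self.devices:
            self.devices = [AFEIItDevice(self.index, side) for side in ("L", "R")]


@dataclass
class AFEIItEnsemble:
    n_cassettes: int = 4  # 1 Tracker requires 4 AFEIIt Cassettes
    cassettes: list = field(default_factory=list)

    def __post_init__(self):
        if not self.cassettes:
            self.cassettes = [AFEIItCassette(i) for i in range(self.n_cassettes)]

    def devices(self):
        return [d for c in self.cassettes for d in c.devices]

    def pv_names(self, parameters):
        # One PV per (device, parameter) pair.
        return [d.pv_name(p) for d in self.devices() for p in parameters]
```

Under these assumptions a single Tracker has 8 devices, so roughly 230 parameters per board corresponds to about 1840 PVs per Tracker.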

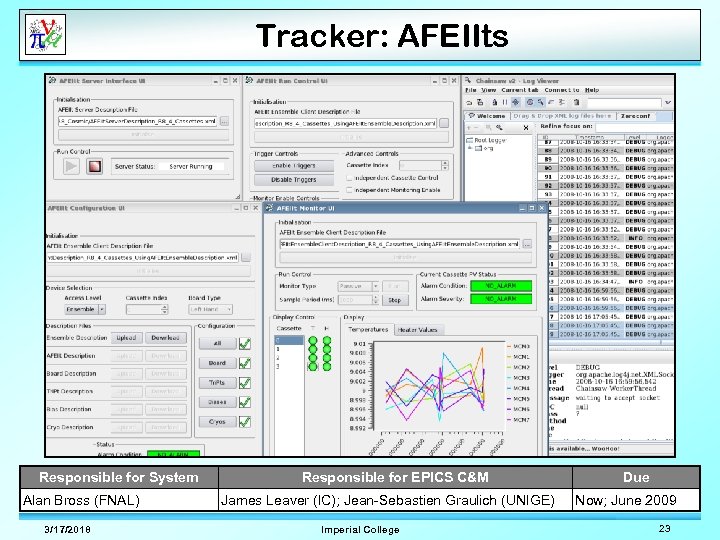

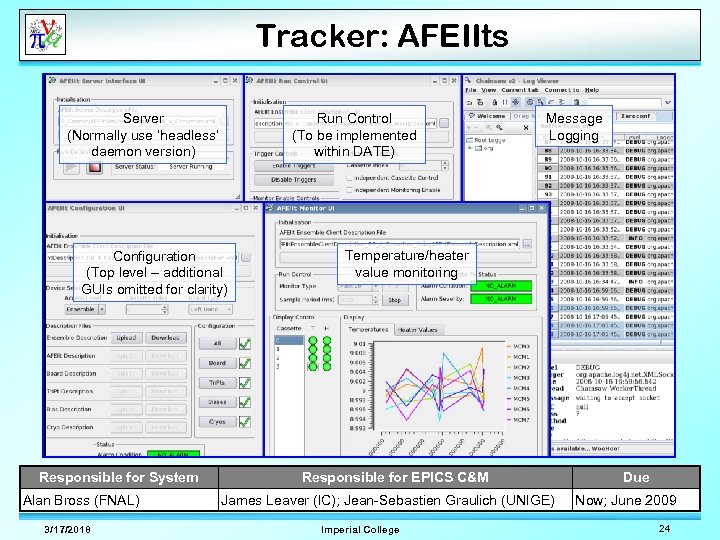

Tracker: AFEIIts

Tracker: AFEIIts

- Screenshot captions:
  - Server (Normally use ‘headless’ daemon version)
  - Run Control (To be implemented within DATE)
  - Configuration (Top level; additional GUIs omitted for clarity)
  - Message Logging
  - Temperature/heater value monitoring

Tracker: AFEIIts

- Server/client applications complete
- Functionality verified using Tracker cosmic ray test stand
  - Successfully configure/monitor 8 AFEIIts required for single Tracker
- Integration with DATE to be finalised
  - Embed very simple client in DATE code
  - Essentially enable/disable triggers at start/end of DAQ run

Tracker: AFEIIt Infrastructure

- ‘Infrastructure’ corresponds to auxiliary hardware necessary for operation of AFEIIts
  - Power supplies, cryo systems (compressors, vacuum pumps, etc.)
  - Currently not well-defined
- Many items (cryo safety hardware interlocks) integrated with DL Spectrometer Solenoid controls
- Tracker group needs to specify full list of requirements & negotiate additions to DL systems (where appropriate/possible)

Responsible for System: Alan Bross (FNAL). Responsible for EPICS C&M: James Leaver (IC). Due: Aug 2009; TBD.

Tracker: AFEIIt Infrastructure

- Known C&M requirements: AFEIIt power supplies
  - 4 Wiener PSUs (1 per VLPC cryo)
  - CAN Bus or RS 232 communication interface
    - Intend to use RS 232: standard PC port, no additional interface hardware
  - No progress yet; expect manpower to be available for completion in August
- Additional systems to be discussed (with consideration of limited resources/manpower)

Hydrogen Absorbers: Focus Coils

- Ionisation cooling achieved by passing muons through low Z absorber to reduce transverse & longitudinal momentum
- Reaccelerated to original longitudinal momentum via RF Cavities
- MICE (primarily) uses liquid hydrogen absorbers
- Each absorber mounted inside a pair of superconducting solenoids (Focus Coil Module)
  - Provides low beam beta region

Responsible for System: Wing Lau (OU). Responsible for EPICS C&M: Pierrick Hanlet (IIT); TBD. Due: May 2010; TBD.

Hydrogen Absorbers: Focus Coils

- Focus Coils expected to require C&M systems very similar to Pion Decay Solenoid & Spectrometer Solenoids
  - See DL’s talk
- Would be most efficient for DL to take over project (wealth of relevant expertise)
  - Unfortunately prevented by MICE funding constraints
  - Task assigned to MOG

Hydrogen Absorbers: Focus Coils

- If possible, will attempt to use DL’s existing magnet designs as template
  - DL C&M systems have vxWorks IOCs
    - For MICE to develop vxWorks software, expensive (~£15.2K) license required
    - Investigate replacement with RTEMS controllers (‘similar’ realtime OS, free to develop)
  - DL systems include custom in-house hardware
    - Not available for general MICE usage; will check alternatives
- However, will consider possibility of entirely new design (perhaps with Linux PC-based IOCs)

Hydrogen Absorbers: Focus Coils

- Work on Focus Coil C&M system has not yet commenced
  - Need to confirm availability of P. Hanlet
  - Assistance from FNAL Controls Group would be highly beneficial; need to discuss
- Expect to start project in September 2009

RF Cavities: Coupling Coils

- Each RF Cavity (used to reaccelerate muons) also surrounded by a superconducting solenoid (Coupling Coil)
  - Confines muon beam within RF Cavity beam windows
- Coupling Coil C&M situation identical to Focus Coils
  - Similar to other MICE magnets
  - MOG responsibility (need to confirm P. Hanlet’s availability)
  - Project should run in parallel with Focus Coil C&M system

Responsible for System: Derun Li; Steve Virostek (LBNL). Responsible for EPICS C&M: Pierrick Hanlet (IIT); TBD. Due: Sep 2010; TBD.

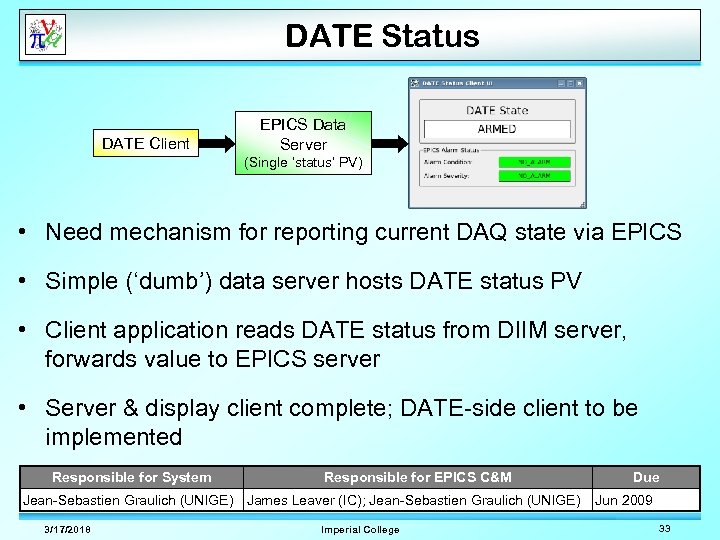

DATE Status

- Need mechanism for reporting current DAQ state via EPICS
- Simple (‘dumb’) data server hosts DATE status PV
- Client application reads DATE status from DIIM server, forwards value to EPICS server
- Server & display client complete; DATE-side client to be implemented
- Diagram labels: DATE Client; EPICS Data Server (Single ‘status’ PV)

Responsible for System: Jean-Sebastien Graulich (UNIGE). Responsible for EPICS C&M: James Leaver (IC); Jean-Sebastien Graulich (UNIGE). Due: Jun 2009.
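
The DATE-side client described above could follow a read-and-forward pattern like the one below. This is only a sketch: `read_date_status` and `write_status_pv` are hypothetical callables standing in for the status read and the Channel Access write, and forwarding only on a change of state is a design choice made here, not something stated in the slides.

```python
def forward_status(read_date_status, write_status_pv, last_status=None):
    """Read the DAQ state once and push it to the status PV if it changed.

    Returns the status just read, to be passed back in as last_status
    on the next call.
    """
    status = read_date_status()
    if status != last_status:
        write_status_pv(status)
    return status
```

Calling this in a polling loop keeps the single ‘status’ PV current while avoiding redundant writes when the DAQ state has not changed.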

Network Status

- Need to verify that all machines on DAQ & control networks are functional throughout MICE operation
- Two types of machine
  - Generic PC (Linux, Windows)
  - ‘Hard’ IOC (vxWorks, potentially RTEMS)
- EPICS Network Status server contains one status PV for each valid MICE IP address

Responsible for System: Anyone with a PC/IOC in the MLCR/Hall. Responsible for EPICS C&M: James Leaver (IC). Due: Aug 2009.

Network Status

- Read status: PC
  - SSH into PC
    - Verifies network connectivity & PC identity
  - If successful, check list of currently running processes for required services
- Read status: ‘Hard’ IOC
  - Check that standard internal status PV is accessible, with valid contents
    - e.g. ‘TIME’ PV, served by all MICE ‘hard’ IOCs
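
The two status checks can be sketched as pure decision functions. The SSH connection and the PV read themselves are left out: their results are passed in as arguments, and the function names and status strings are hypothetical.

```python
def pc_status(ssh_ok, running_processes, required_services):
    """Status of a generic PC from an SSH attempt and its process list."""
    if not ssh_ok:
        # SSH failure means connectivity or identity could not be verified.
        return "UNREACHABLE"
    missing = sorted(set(required_services) - set(running_processes))
    if missing:
        return "MISSING: " + ", ".join(missing)
    return "OK"


def hard_ioc_status(time_pv_value):
    """Status of a 'hard' IOC from the value of its standard status PV.

    None models an inaccessible PV; an empty string models invalid contents.
    """
    return "OK" if time_pv_value else "UNREACHABLE"
```

Separating the decision logic from the network access makes it straightforward to test the rules without a live PC or IOC.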

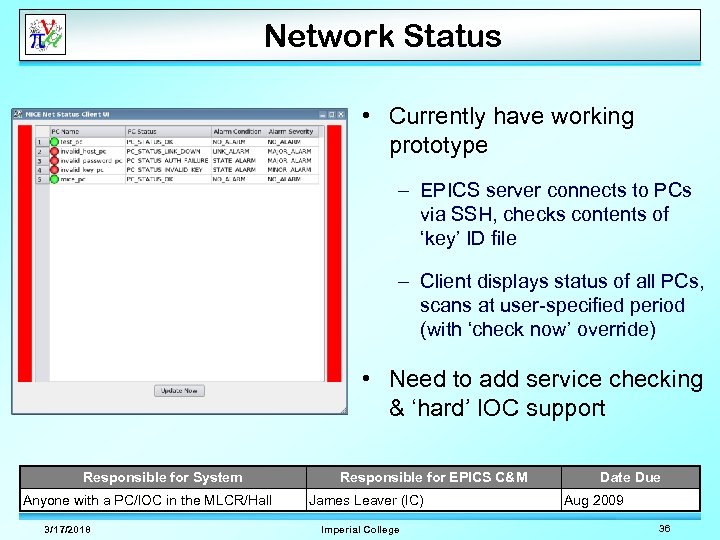

Network Status

- Currently have working prototype
  - EPICS server connects to PCs via SSH, checks contents of ‘key’ ID file
  - Client displays status of all PCs, scans at user-specified period (with ‘check now’ override)
- Need to add service checking & ‘hard’ IOC support

Unassigned Control Systems

- Following systems have no allocated C&M effort
  - Time of Flight System
  - Diffuser
  - Calorimeter: KL Calorimeter
  - Calorimeter: Electron Muon Ranger
- Currently investigating system requirements
- Need to find additional resources within the MICE community
  - MOG operating at full capacity & no funds for DL to undertake these projects
  - Expect those responsible for each system will be required to implement corresponding EPICS controls
  - Possibility of assistance from FNAL Controls Group (to be discussed)

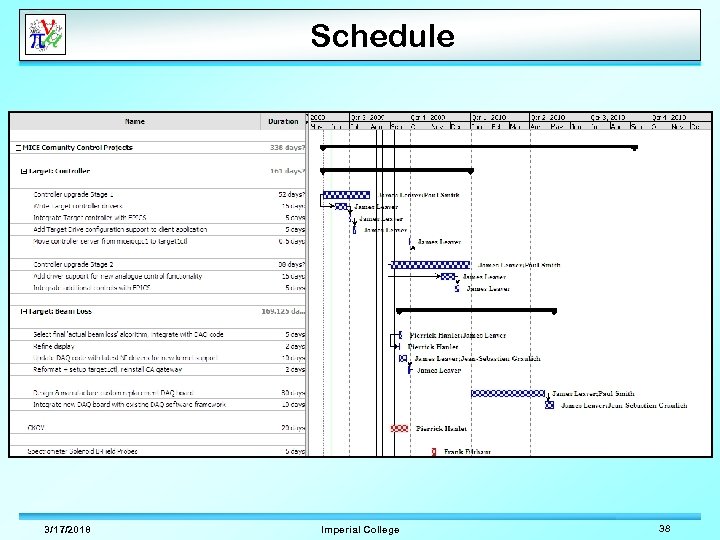

Schedule

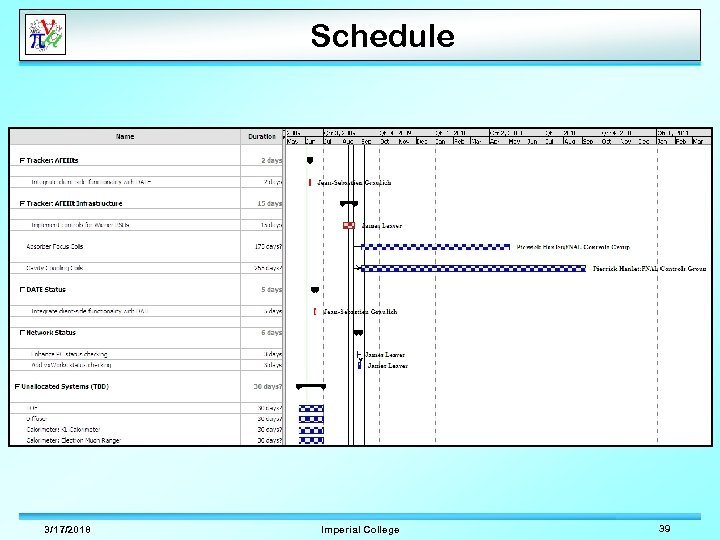

Schedule
