
- Number of slides: 100

BUS 6192 SPECIAL TOPICS IN MANAGEMENT AND ORGANIZATION Source: William G. Zikmund’s Business Research Methods Lectured by Prof. Dr. Lütfihak Alpkan Gebze Institute of Technology

CONTENT I. THEORY & REALITY II. THE PROCESS OF PROBLEM DEFINITION III. SURVEY RESEARCH IV. MEASUREMENT & SCALING V. CRITERIA FOR GOOD MEASUREMENT VI. EXTENDED ABSTRACT FORMAT

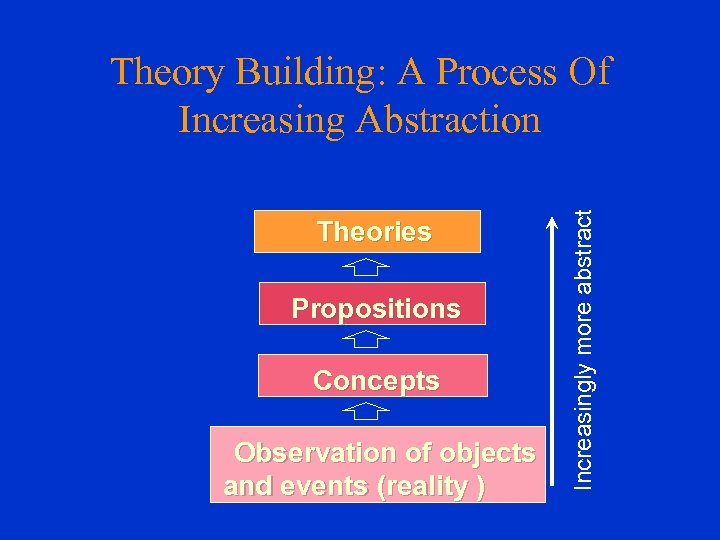

I. THEORY & REALITY Theory is a coherent set of general propositions used as principles of explanation of the apparent relationships of certain observed phenomena. Two Purposes of Theory: • Understanding • Prediction

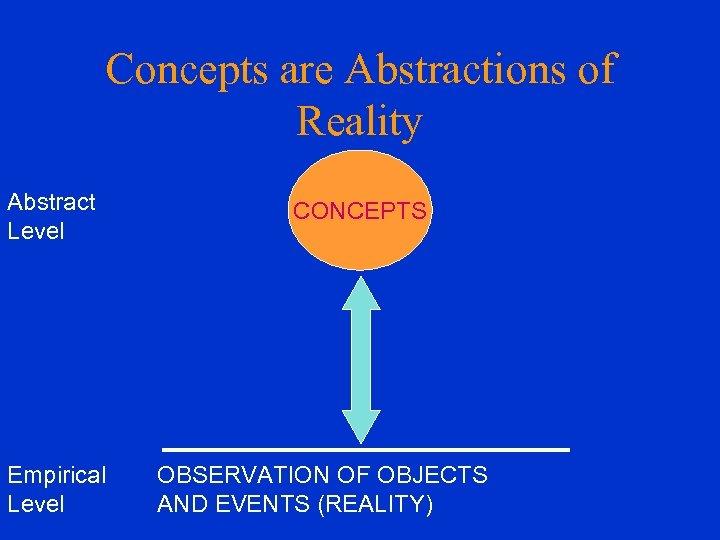

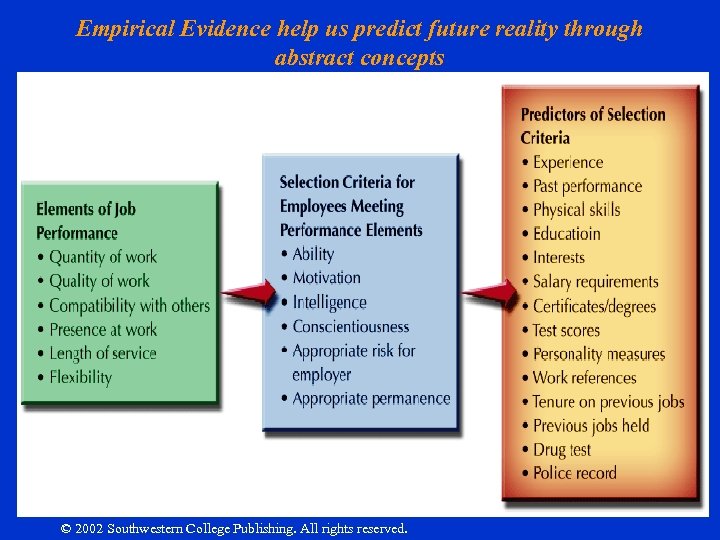

Levels of Reality • Abstract level (concepts & propositions): in theory development, the level of knowledge expressing a concept that exists only as an idea or a quality apart from an object. • Empirical level (variables & hypotheses): level of knowledge reflecting that which is verifiable by experience or observation.

1.1. Concept (or Construct) • A generalized idea about a class of objects, attributes, occurrences, or processes that has been given a name • Building blocks that abstract reality • “leadership,” “productivity,” and “morale” • “gross national product,” “asset,” and “inflation”

Concepts are Abstractions of Reality • Abstract level: CONCEPTS • Empirical level: OBSERVATION OF OBJECTS AND EVENTS (REALITY)

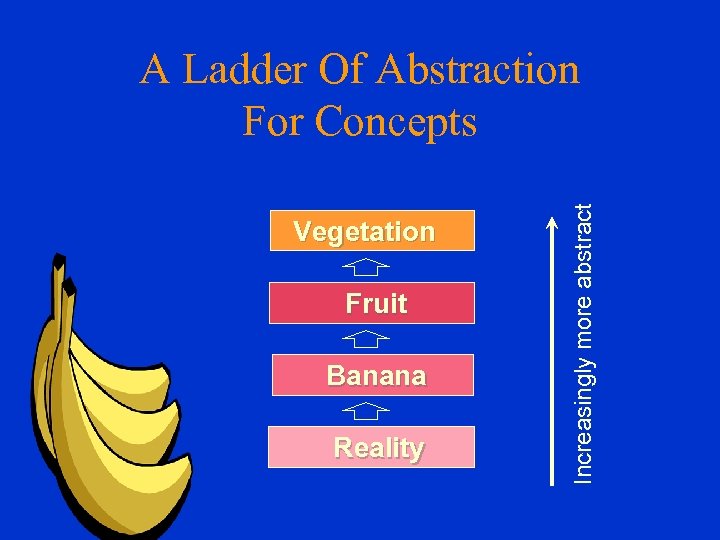

A Ladder of Abstraction for Concepts (increasingly more abstract): Reality → Banana → Fruit → Vegetation

Theory Building: A Process of Increasing Abstraction (increasingly more abstract): Observation of objects and events (reality) → Concepts → Propositions → Theories

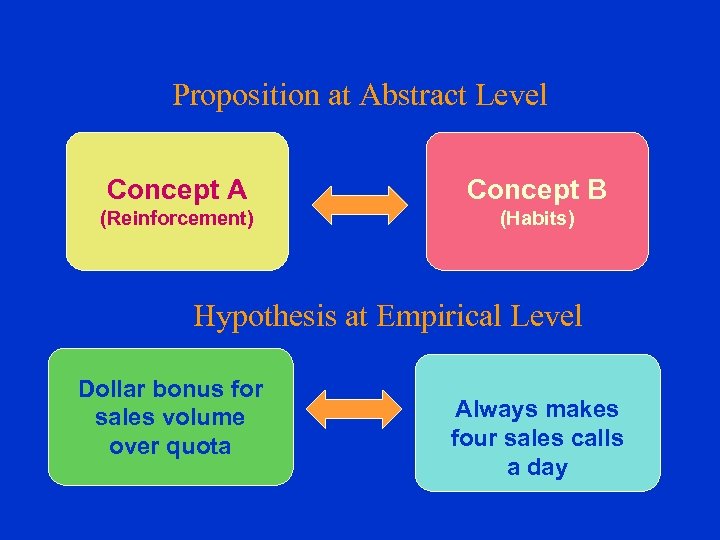

1.2. Propositions • Propositions are statements concerned with the relationships among concepts. • A hypothesis is a proposition that is empirically testable. It is an empirical statement concerned with the relationship among variables. • A variable is anything that may assume different numerical values.

Proposition at Abstract Level: Concept A (Reinforcement) → Concept B (Habits) Hypothesis at Empirical Level: Dollar bonus for sales volume over quota → Always makes four sales calls a day

Empirical evidence helps us predict future reality through abstract concepts. © 2002 Southwestern College Publishing. All rights reserved.

2. Scientific Method The use of a set of prescribed procedures for establishing and connecting theoretical statements about events and for predicting events yet unknown.

2.1. Deductive Reasoning (Turkish: tümdengelim) • The logical process of deriving a conclusion from a known premise or something known to be true. – We know that all managers are human beings. – If we also know that John Smith is a manager, – then we can deduce that John Smith is a human being.

2.2. Inductive Reasoning (Turkish: tümevarım) • The logical process of establishing a general proposition on the basis of observation of particular facts. – All managers that have ever been seen are human beings; – therefore all managers are human beings.

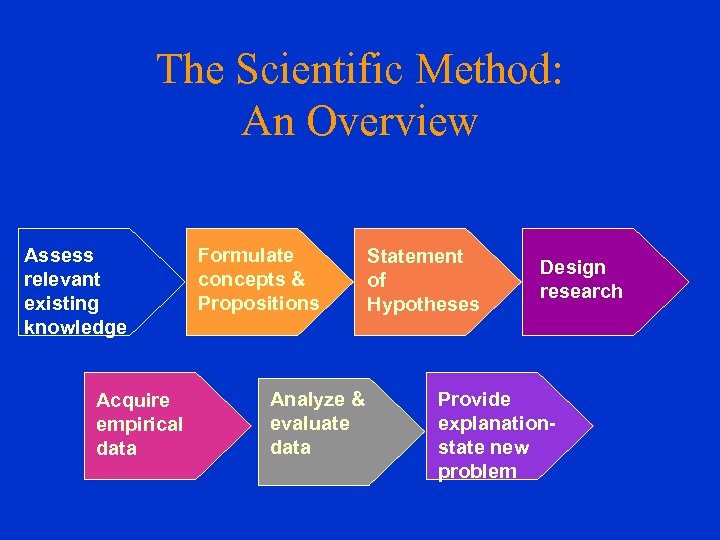

The Scientific Method: An Overview Assess relevant existing knowledge → Formulate concepts & propositions → Statement of hypotheses → Design research → Acquire empirical data → Analyze & evaluate data → Provide explanation and state new problem

3. Qualitative versus Quantitative Research • Purpose: preliminary versus conclusive • Samples: small versus large • Type of questions: broad range of questioning versus structured questions • Results: subjective interpretation versus statistical analysis

4. Ethics • Ethics: The established customs, morals, and fundamental human relationships that exist throughout the world. • Ethical Behavior: Behavior that is morally accepted as good or right as opposed to bad or wrong.

Research Ethics • General ethical rules also apply to researchers. • If a society deems dishonesty to be unethical, then any researcher who behaves dishonestly in the research process is acting unethically.

Types of Ethical Misconduct in Research (see also www.chem.wayne.edu/information/ethics_presentation.pdf) • Falsification: changing data • Fabrication: making up data • Plagiarism: using words or ideas without proper attribution • Duplication: writing exactly the same parts in different publications • Slicing: using the results of the same research project in more than one publication These should be regarded as being as unethical as lying, cheating, copying, etc.

II. THE PROCESS OF PROBLEM DEFINITION • Problem: existence of a difference between the current conditions and a more preferable set of future conditions. • Management Problem: a development that necessitates a decision to cope with difficulties and threats, or to exploit opportunities.

Management Problems Mean Performance Gaps – Business performance is worse than expected business performance. – Actual business performance is less than possible business performance. – Expected business performance is greater than possible business performance.

• Problem Discovery: becoming aware of some symptoms of a management problem • Problem Definition: The indication of a specific business decision area that will be clarified by answering some research questions.

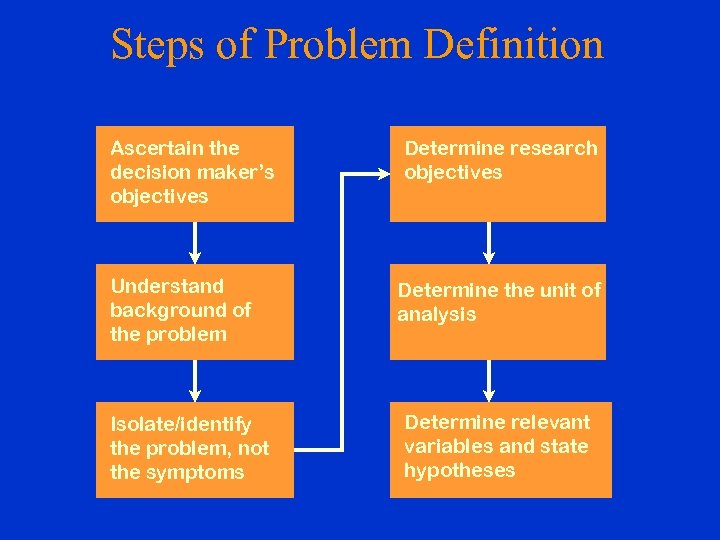

Steps of Problem Definition 1. Ascertain the decision maker’s objectives 2. Understand the background of the problem 3. Isolate/identify the problem, not the symptoms 4. Determine research objectives 5. Determine the unit of analysis 6. Determine relevant variables and state hypotheses

1. Ascertain the Decision Maker’s Objectives • Managerial objectives should be expressed in measurable terms; however, line managers seldom clearly articulate their problems to the researchers. • Researchers should try to understand the problems by interviewing the managers concerned and collecting information from other sources.

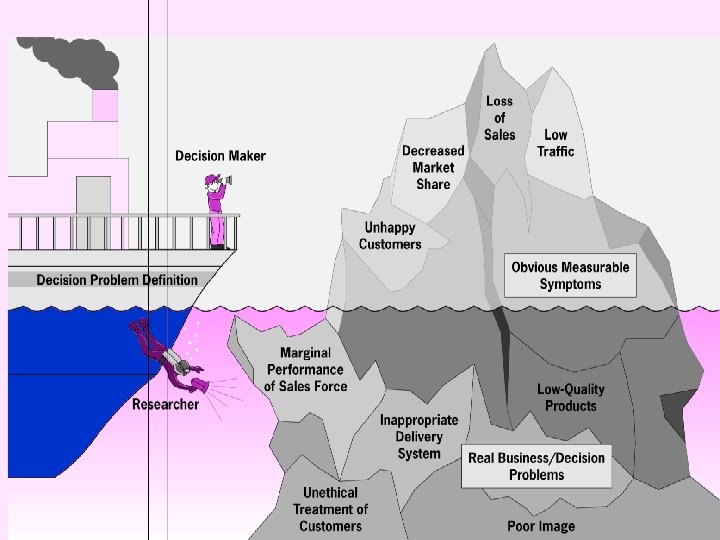

2. Understand the Background of the Problem • Situation analysis: the informal gathering of background information to familiarize researchers or managers with the decision area. • The Iceberg Principle: the dangerous part of many business problems is neither visible to nor understood by managers.

3. Isolate and Identify the Problems, Not the Symptoms *Identify the Symptoms • by interrogative techniques: Asking multiple what, where, who, when, why, and how questions about what has changed. • by probing: An interview technique that tries to draw deeper and more elaborate explanations from the discussion. *Isolate the Symptoms from the True Problem

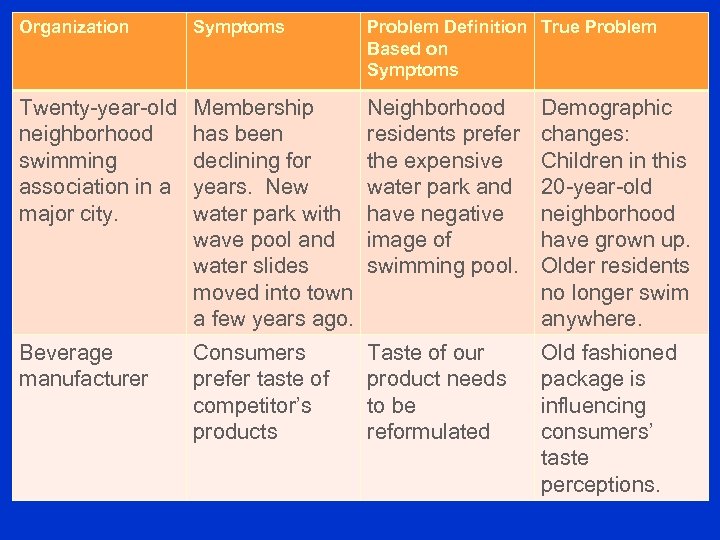

Symptoms can be confusing • The case of a twenty-year-old neighborhood swimming association: • Membership has been declining for years. • Maybe neighborhood residents prefer the expensive water park.

| Organization | Symptoms | Problem Definition Based on Symptoms | True Problem |
|---|---|---|---|
| Twenty-year-old neighborhood swimming association in a major city | Membership has been declining for years. New water park with wave pool and water slides moved into town a few years ago. | Neighborhood residents prefer the expensive water park and have a negative image of the swimming pool. | Demographic changes: Children in this 20-year-old neighborhood have grown up. Older residents no longer swim anywhere. |
| Beverage manufacturer | Consumers prefer the taste of competitor’s products. | Taste of our product needs to be reformulated. | Old-fashioned package is influencing consumers’ taste perceptions. |

What Language Is Written on This Stone Found by Archaeologists? The Language is English: “to tie mules to”

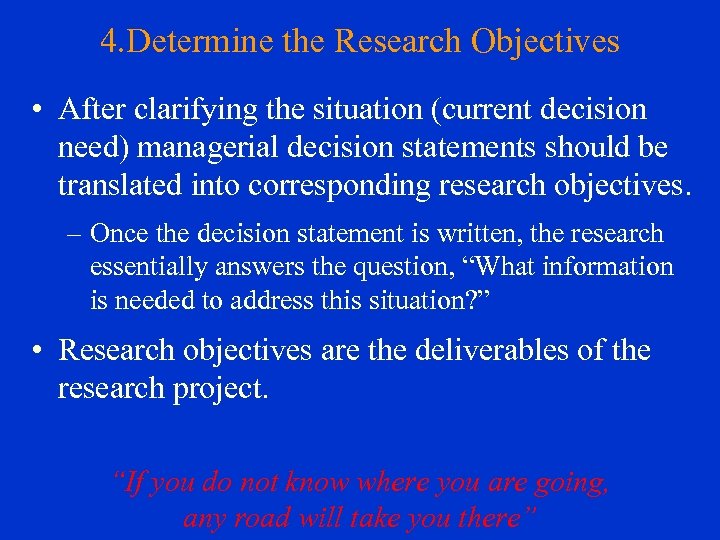

4. Determine the Research Objectives • After clarifying the situation (current decision need), managerial decision statements should be translated into corresponding research objectives. – Once the decision statement is written, the research essentially answers the question, “What information is needed to address this situation?” • Research objectives are the deliverables of the research project. “If you do not know where you are going, any road will take you there”

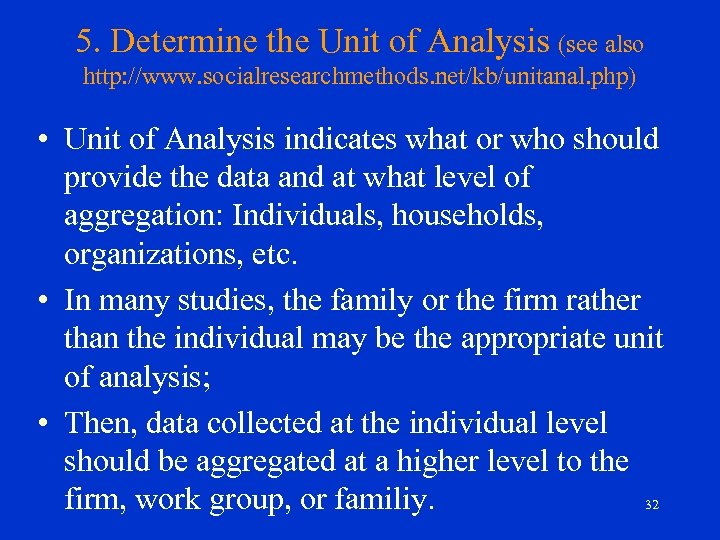

5. Determine the Unit of Analysis (see also http://www.socialresearchmethods.net/kb/unitanal.php) • Unit of Analysis indicates what or who should provide the data and at what level of aggregation: individuals, households, organizations, etc. • In many studies, the family or the firm rather than the individual may be the appropriate unit of analysis. • In that case, data collected at the individual level should be aggregated to a higher level: the firm, work group, or family.
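The aggregation step can be illustrated with a minimal Python sketch. It assumes the firm is the unit of analysis and that the individual-level scores are averaged per firm; the firm names, the scores, and the choice of the mean as the aggregation rule are hypothetical and are not taken from the lecture.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical individual-level responses: (firm, satisfaction score of one employee)
individual_responses = [
    ("Firm A", 4), ("Firm A", 5),
    ("Firm B", 2), ("Firm B", 3), ("Firm B", 4),
]

def aggregate_by_unit(records):
    """Average individual scores within each higher-level unit (here: the firm)."""
    grouped = defaultdict(list)
    for unit, score in records:
        grouped[unit].append(score)
    return {unit: mean(scores) for unit, scores in grouped.items()}

firm_scores = aggregate_by_unit(individual_responses)
print(firm_scores)  # one score per firm instead of one per respondent
```

After aggregation each firm contributes a single observation, so the effective sample size is the number of firms, not the number of respondents.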

Examples of Research Objectives and Unit of Analysis • To identify the critical factors affecting clients’ choice of some specific brands. (individual clients) • To identify the future performance of candidates for a specific job offer. (individual candidates) • To establish the reasons for stagnant sales and suggest means by which sales can be increased. (firms)

6. Determine the Relevant Variables and Hypotheses • To determine what characteristics of the unit of analysis will be measured by the researchers. • These characteristics may vary within the same unit of analysis. • For instance, Research Objective: to identify the ways of increasing marketing performance. • Unit of analysis: firm • Variable: marketing performance • Marketing performance may differ from firm to firm.

Definition of Variable • What is a Variable? – Anything that varies or changes from one instance to another; can exhibit differences in value, usually in magnitude or strength, or in direction. • What is a Constant? – Something that does not change; is not useful in addressing research questions.

Types of Variables • Continuous variable – Can take on a range of quantitative values. • Categorical variable – Indicates membership in some group. – Also called classificatory variable. • Dependent variable – A process outcome or a variable that is predicted and/or explained by other variables. • Independent variable – A variable that is expected to influence the dependent variable in some way.

Research Questions • In order to achieve research objectives, researchers should develop research questions, and try to answer them through research. • Research questions are about the nature of relations among variables. • Examples of research questions: – What are the reasons for the sales decline? – What are the drivers of customer satisfaction? – What are the relations between new designs and customer satisfaction?

Hypothesis • An unsupported proposition that answers a research question and is to be tested by research • H1: Decline in the purchasing power of the clients decreases the total sales of the industry. • H2: New designs increase customer satisfaction.

An exemplary problem definition process • Symptoms: our clients are complaining, they seem unhappy, and we may lose them. • True Problem: our clients have begun to perceive our products as low quality but still expensive. • Research objective: to identify the ways to convince our clients about our products’ quality. • Unit of analysis: individual buyers.

An exemplary problem definition process • Variables: customer satisfaction, re-buying intention, product characteristics, customers’ demographics, etc. • Research questions: what are the drivers of customer satisfaction? What are the relations among customer perceptions of the product characteristics, customer satisfaction, and re-buying intention? • Hypothesis: old-fashioned products are perceived by young customers as low quality.

III. SURVEY RESEARCH Survey: a research technique in which information (primary data) is gathered from a sample of people to make generalizations. Primary data: data gathered and assembled specifically for the project at hand. Sample of the survey: respondents who are asked to provide information, on the assumption that they can represent (possess the same features as) a target population.

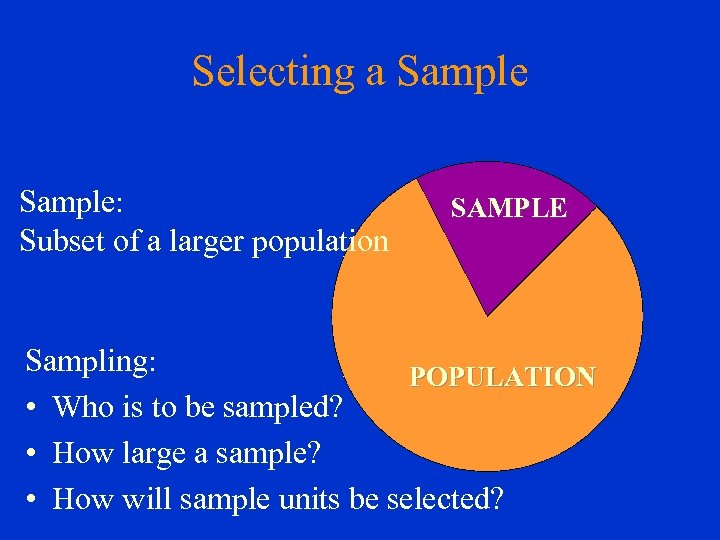

Selecting a Sample Sample: a subset of a larger population. Sampling: • Who is to be sampled? • How large a sample? • How will sample units be selected?

Basic Definitions for sampling (http://www.stats.gla.ac.uk/steps/glossary/sampling.html) Target population: the group about which the researcher wishes to draw conclusions and make generalizations Random sampling: selecting a sample from a larger target population where each respondent is chosen entirely by chance and each member of the population has a known, but possibly unequal, chance of being included in the sample.

Basic Definitions for data collection Surveys ask respondents (who are the subjects of the research) questions by use of a questionnaire. Respondent: The person who provides information (primary data) by answering a questionnaire or an interviewer’s questions. Questionnaire: a list of structured questions designed by the researchers for the purpose of codifying and analyzing the respondents’ answers scientifically. Advantages of Surveys: Quick, Inexpensive, Efficient, Accurate, Flexible way of gathering information.

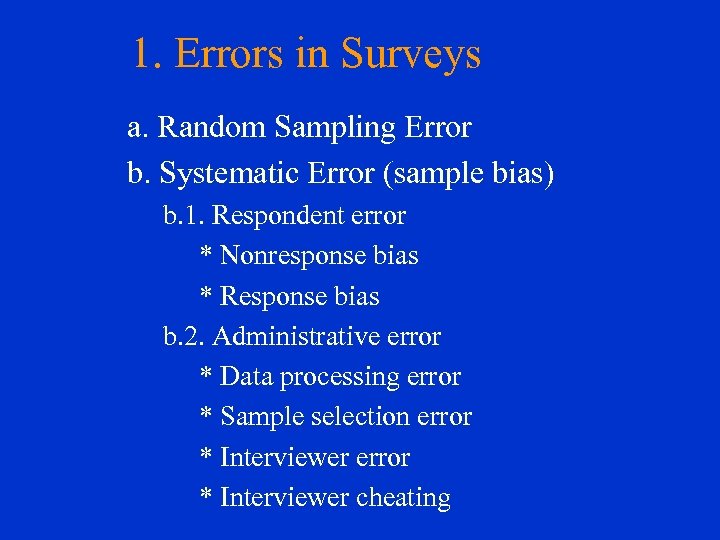

1. Errors in Surveys a. Random Sampling Error b. Systematic Error (sample bias) b.1. Respondent error * Nonresponse bias * Response bias b.2. Administrative error * Data processing error * Sample selection error * Interviewer cheating

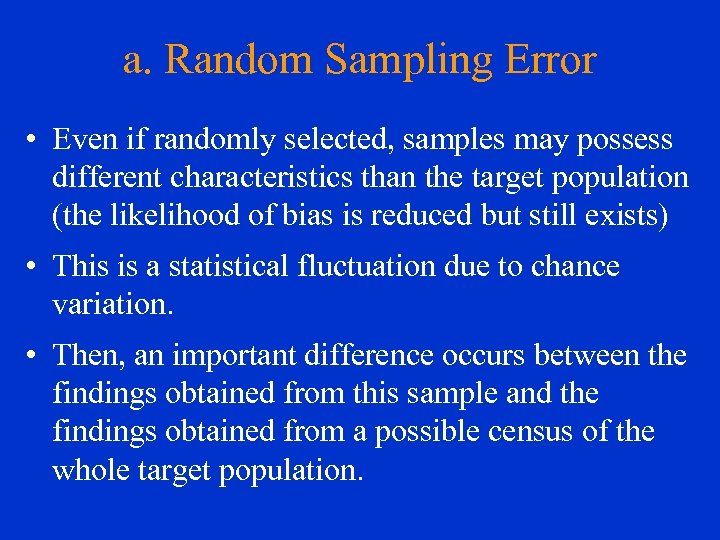

a. Random Sampling Error • Even if randomly selected, samples may possess different characteristics than the target population (the likelihood of bias is reduced but still exists). • This is a statistical fluctuation due to chance variation. • As a result, the findings obtained from the sample may differ from the findings that a census of the whole target population would yield.

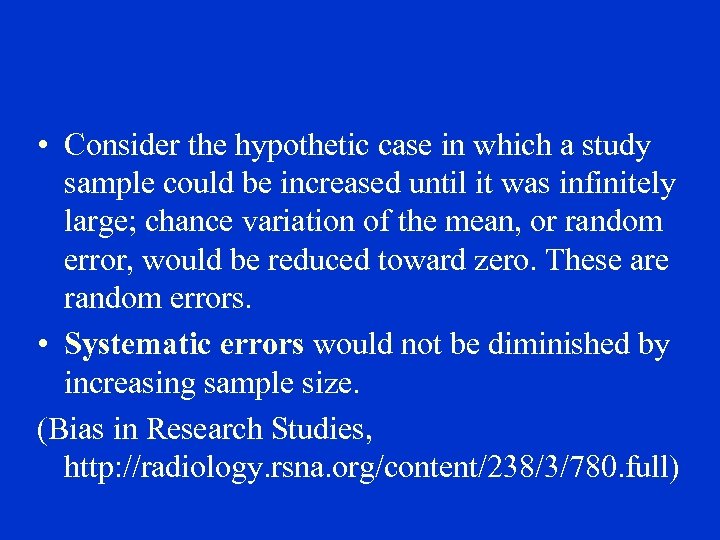

• Consider the hypothetical case in which a study sample could be increased until it was infinitely large; chance variation of the mean, or random error, would be reduced toward zero. Errors that shrink in this way are random errors. • Systematic errors would not be diminished by increasing sample size. (Bias in Research Studies, http://radiology.rsna.org/content/238/3/780.full)
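The contrast between the two kinds of error can be sketched numerically. The Python example below assumes a simple random sample and uses the standard error of the mean (standard deviation divided by the square root of n) for the random part; the population standard deviation and the size of the bias are hypothetical values chosen only for illustration.

```python
import math

def standard_error(population_sd, n):
    """Random sampling error of a sample mean for a simple random sample of size n."""
    return population_sd / math.sqrt(n)

def root_mean_square_error(population_sd, n, bias):
    """Total expected error: the random part shrinks with n, the bias does not."""
    return math.sqrt(standard_error(population_sd, n) ** 2 + bias ** 2)

POPULATION_SD = 10.0  # hypothetical spread of the measured variable
BIAS = 2.0            # hypothetical systematic error, e.g. from a biased sample frame

for n in (25, 100, 10_000, 1_000_000):
    se = standard_error(POPULATION_SD, n)
    total = root_mean_square_error(POPULATION_SD, n, BIAS)
    print(f"n={n:>9}  random error={se:.3f}  total error={total:.3f}")
```

As n grows, the random component approaches zero while the total error levels off at the size of the bias, which is why a larger sample cannot repair a flawed design.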

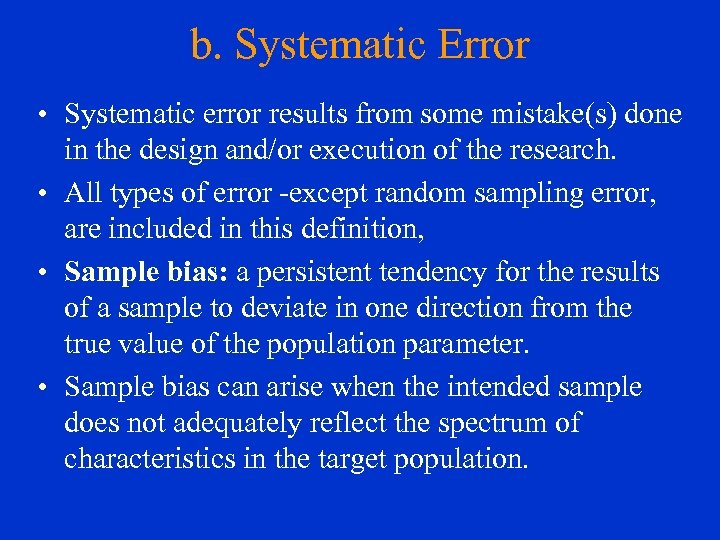

b. Systematic Error • Systematic error results from mistakes made in the design and/or execution of the research. • All types of error except random sampling error are included in this definition. • Sample bias: a persistent tendency for the results of a sample to deviate in one direction from the true value of the population parameter. • Sample bias can arise when the intended sample does not adequately reflect the spectrum of characteristics in the target population.

b.1. Respondent Bias • A classification of sample bias resulting from some respondent action or inaction • Nonresponse bias • Response bias

Nonresponse Error • Nonrespondents: in almost every survey, information cannot be collected from a small or large portion of the sample. These are the people who refuse to respond or who cannot be contacted (not-at-homes). • Self-selection bias: only those people who are strongly interested in the topic of the survey may respond, while those who are within the sample but indifferent or afraid avoid participating. • This leads to the over-representation of some extreme positions and the under-representation of others.

Response Bias • A bias that occurs when respondents tend to answer questions with a certain inclination or viewpoint that consciously (deliberate falsification) or unconsciously (unconscious misinterpretation) misrepresents the truth.

Reasons for response bias • Knowingly or unknowingly, people who answer the interviewer’s questions may feel uncomfortable about sharing the truth with others, and change it in their responses. • They may wish to present themselves as more intelligent, wealthy, sensitive, etc. than they really are.

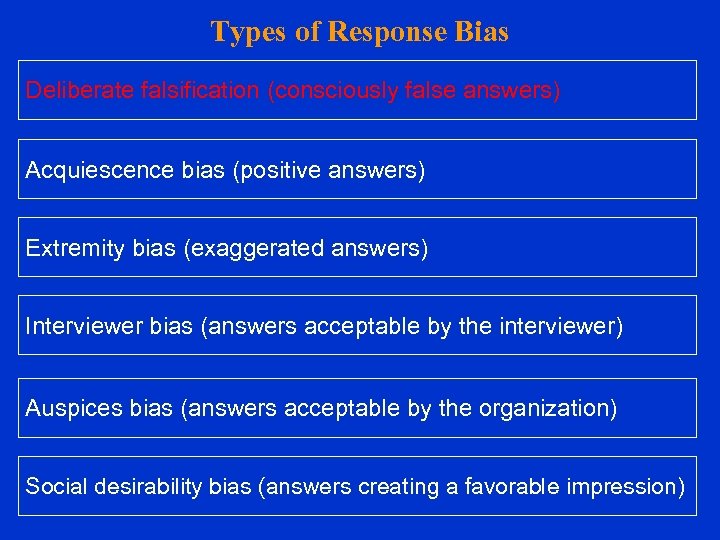

Types of Response Bias Deliberate falsification (consciously false answers) Acquiescence bias (positive answers) Extremity bias (exaggerated answers) Interviewer bias (answers acceptable by the interviewer) Auspices bias (answers acceptable by the organization) Social desirability bias (answers creating a favorable impression)

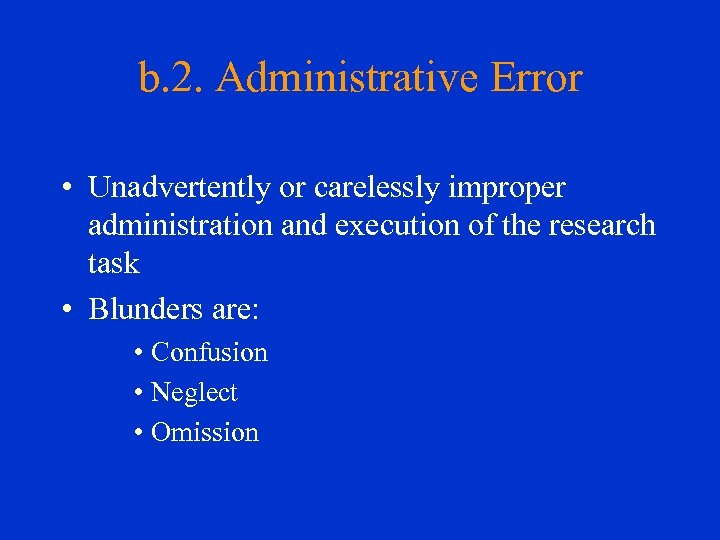

b.2. Administrative Error • Inadvertent or careless improper administration and execution of the research task • Blunders are: • Confusion • Neglect • Omission

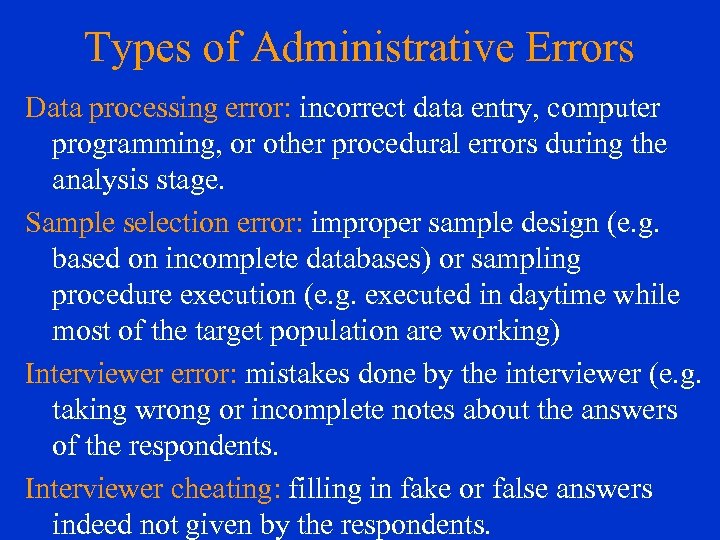

Types of Administrative Errors Data processing error: incorrect data entry, computer programming, or other procedural errors during the analysis stage. Sample selection error: improper sample design (e.g. based on incomplete databases) or sampling procedure execution (e.g. executed in daytime while most of the target population are working). Interviewer error: mistakes made by the interviewer (e.g. taking wrong or incomplete notes about the answers of the respondents). Interviewer cheating: filling in fake or false answers that were not given by the respondents.

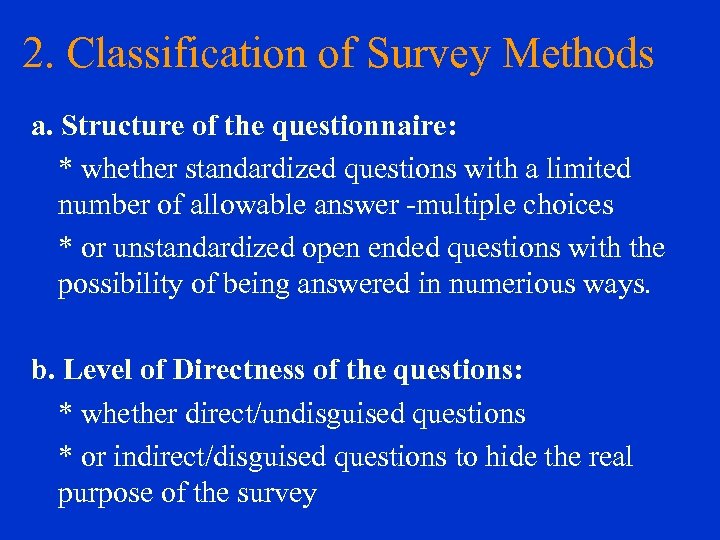

2. Classification of Survey Methods a. Structure of the questionnaire: * whether standardized questions with a limited number of allowable answers (multiple choice) * or unstandardized open-ended questions that can be answered in numerous ways. b. Level of Directness of the questions: * whether direct/undisguised questions * or indirect/disguised questions to hide the real purpose of the survey

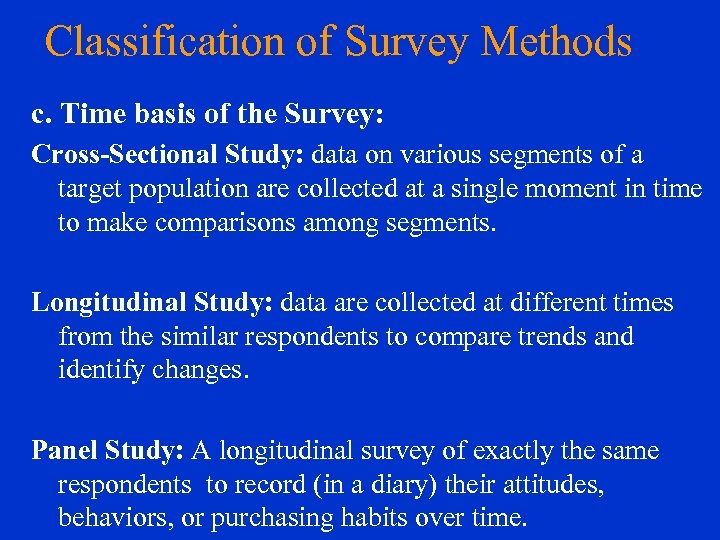

Classification of Survey Methods c. Time basis of the Survey: Cross-Sectional Study: data on various segments of a target population are collected at a single moment in time to make comparisons among segments. Longitudinal Study: data are collected at different times from similar respondents to compare trends and identify changes. Panel Study: A longitudinal survey of exactly the same respondents to record (in a diary) their attitudes, behaviors, or purchasing habits over time.

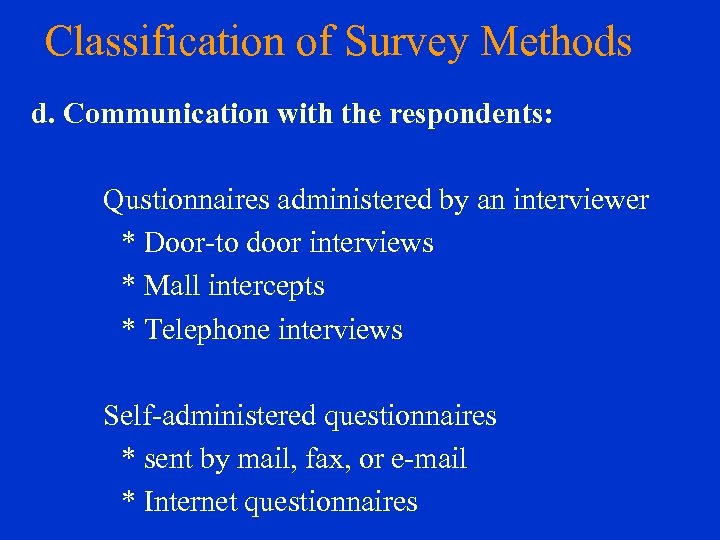

Classification of Survey Methods d. Communication with the respondents: Questionnaires administered by an interviewer * Door-to-door interviews * Mall intercepts * Telephone interviews Self-administered questionnaires * sent by mail, fax, or e-mail * Internet questionnaires

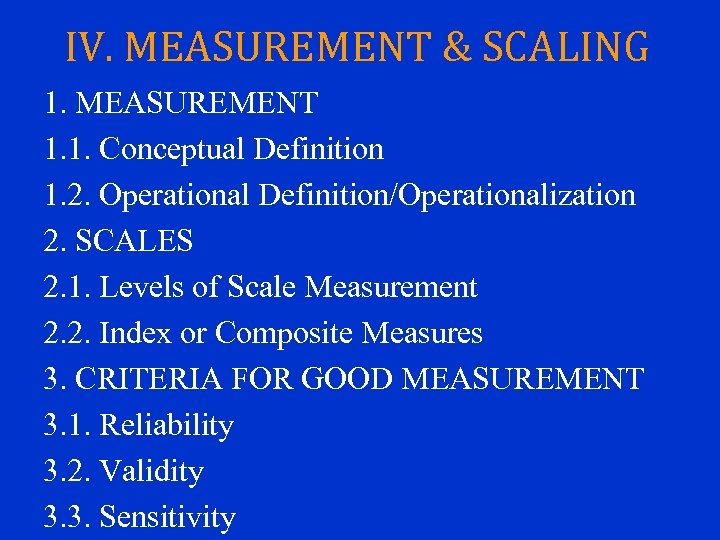

IV. MEASUREMENT & SCALING 1. MEASUREMENT 1.1. Conceptual Definition 1.2. Operational Definition/Operationalization 2. SCALES 2.1. Levels of Scale Measurement 2.2. Index or Composite Measures 3. CRITERIA FOR GOOD MEASUREMENT 3.1. Reliability 3.2. Validity 3.3. Sensitivity

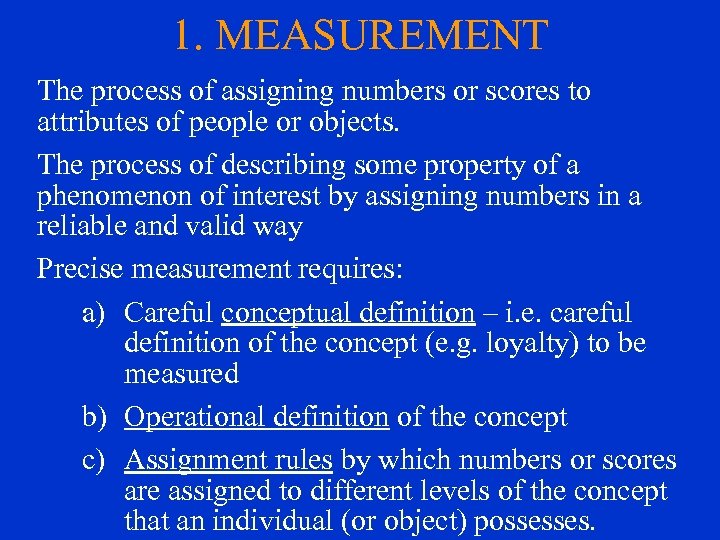

1. MEASUREMENT The process of assigning numbers or scores to attributes of people or objects; that is, the process of describing some property of a phenomenon of interest by assigning numbers in a reliable and valid way. Precise measurement requires: a) Careful conceptual definition, i.e. careful definition of the concept (e.g. loyalty) to be measured b) Operational definition of the concept c) Assignment rules by which numbers or scores are assigned to different levels of the concept that an individual (or object) possesses.

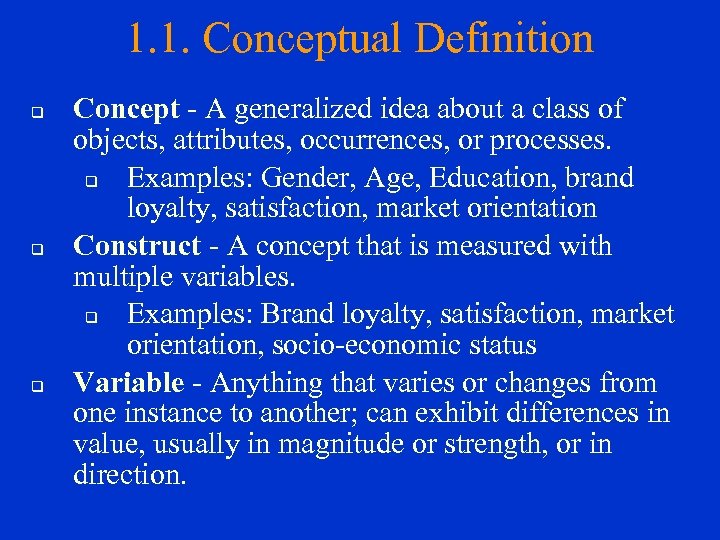

1.1. Conceptual Definition • Concept - A generalized idea about a class of objects, attributes, occurrences, or processes. – Examples: Gender, Age, Education, brand loyalty, satisfaction, market orientation • Construct - A concept that is measured with multiple variables. – Examples: Brand loyalty, satisfaction, market orientation, socio-economic status • Variable - Anything that varies or changes from one instance to another; can exhibit differences in value, usually in magnitude or strength, or in direction.

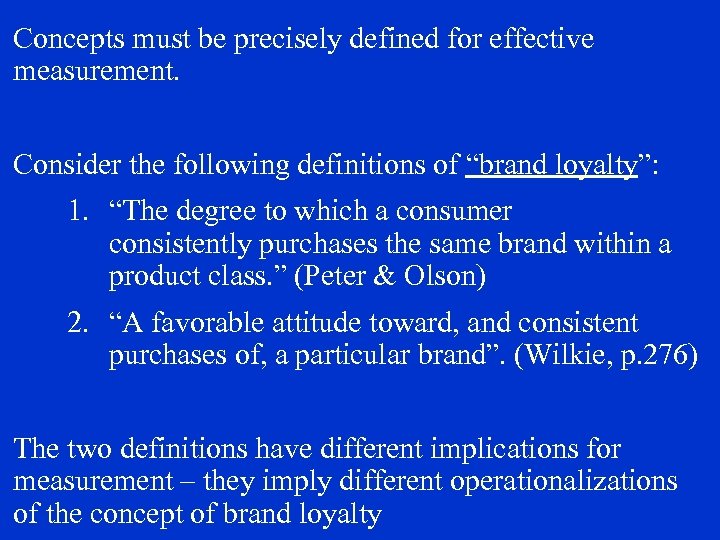

Concepts must be precisely defined for effective measurement. Consider the following definitions of “brand loyalty”: 1. “The degree to which a consumer consistently purchases the same brand within a product class.” (Peter & Olson) 2. “A favorable attitude toward, and consistent purchases of, a particular brand.” (Wilkie, p. 276) The two definitions have different implications for measurement: they imply different operationalizations of the concept of brand loyalty.

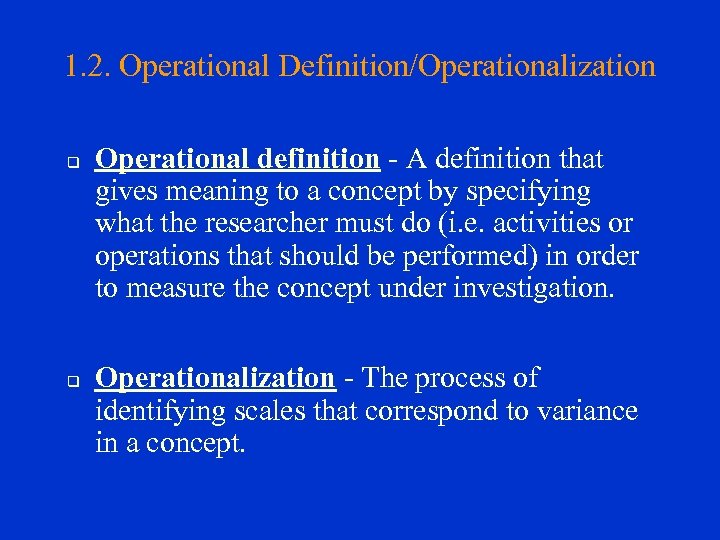

1.2. Operational Definition/Operationalization • Operational definition - A definition that gives meaning to a concept by specifying what the researcher must do (i.e. activities or operations that should be performed) in order to measure the concept under investigation. • Operationalization - The process of identifying scales that correspond to variance in a concept.

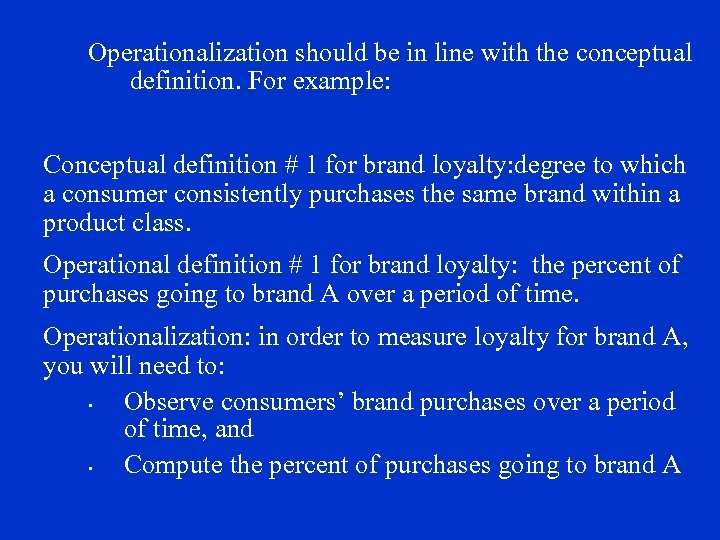

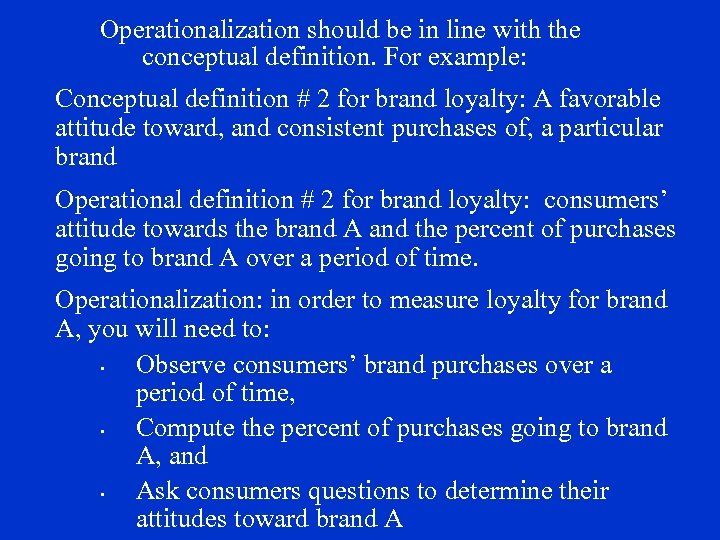

Operationalization should be in line with the conceptual definition. For example: Conceptual definition # 1 for brand loyalty: degree to which a consumer consistently purchases the same brand within a product class. Operational definition # 1 for brand loyalty: the percent of purchases going to brand A over a period of time. Operationalization: in order to measure loyalty for brand A, you will need to: • Observe consumers’ brand purchases over a period of time, and • Compute the percent of purchases going to brand A

Operationalization should be in line with the conceptual definition. For example: Conceptual definition # 2 for brand loyalty: A favorable attitude toward, and consistent purchases of, a particular brand Operational definition # 2 for brand loyalty: consumers’ attitude towards the brand A and the percent of purchases going to brand A over a period of time. Operationalization: in order to measure loyalty for brand A, you will need to: • Observe consumers’ brand purchases over a period of time, • Compute the percent of purchases going to brand A, and • Ask consumers questions to determine their attitudes toward brand A
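The two operational definitions above can be sketched in Python as follows. The purchase log, the attitude ratings, the 1-5 rating range, and the function name `purchase_share` are hypothetical and serve only to show how the measurement steps differ between the definitions.

```python
def purchase_share(purchases, brand):
    """Percent of observed purchases that went to the given brand (definition #1)."""
    if not purchases:
        raise ValueError("no purchases observed")
    return 100.0 * purchases.count(brand) / len(purchases)

# Hypothetical purchases of one consumer within a product class over a period of time
observed_purchases = ["A", "A", "B", "A", "C", "A", "A", "B"]
share_a = purchase_share(observed_purchases, "A")

# Definition #2 also needs an attitude measure; here a hypothetical average of
# three 1-5 ratings given by the same consumer to statements about brand A
attitude_ratings = [4, 5, 4]
attitude_a = sum(attitude_ratings) / len(attitude_ratings)

print(f"Definition 1: {share_a:.1f}% of purchases went to brand A")
print(f"Definition 2: {share_a:.1f}% of purchases, attitude score {attitude_a:.2f}")
```

Under definition #1 the percentage alone is the loyalty measure; under definition #2 the same percentage has to be reported together with the attitude score, so a consumer who buys brand A only out of habit could be loyal by the first definition and not by the second.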

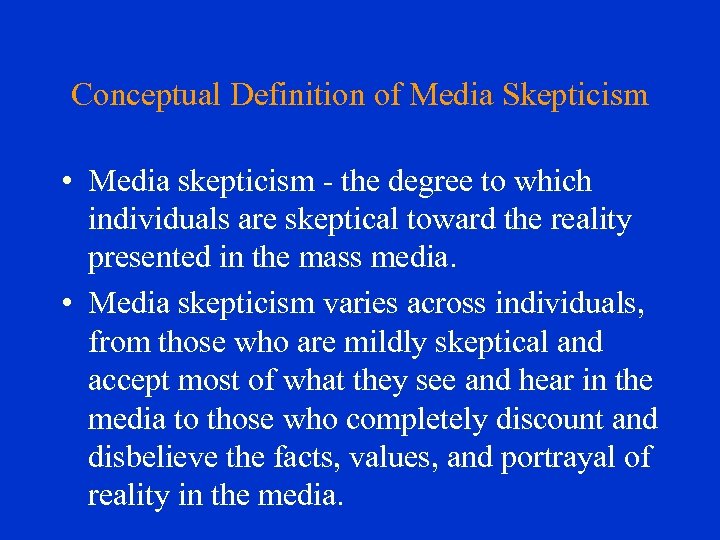

Conceptual Definition of Media Skepticism • Media skepticism - the degree to which individuals are skeptical toward the reality presented in the mass media. • Media skepticism varies across individuals, from those who are mildly skeptical and accept most of what they see and hear in the media to those who completely discount and disbelieve the facts, values, and portrayal of reality in the media.

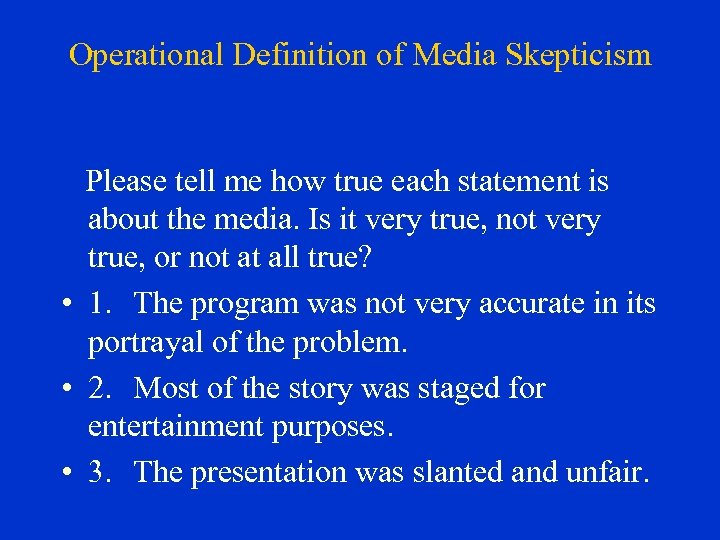

Operational Definition of Media Skepticism Please tell me how true each statement is about the media. Is it very true, not very true, or not at all true? • 1. The program was not very accurate in its portrayal of the problem. • 2. Most of the story was staged for entertainment purposes. • 3. The presentation was slanted and unfair.

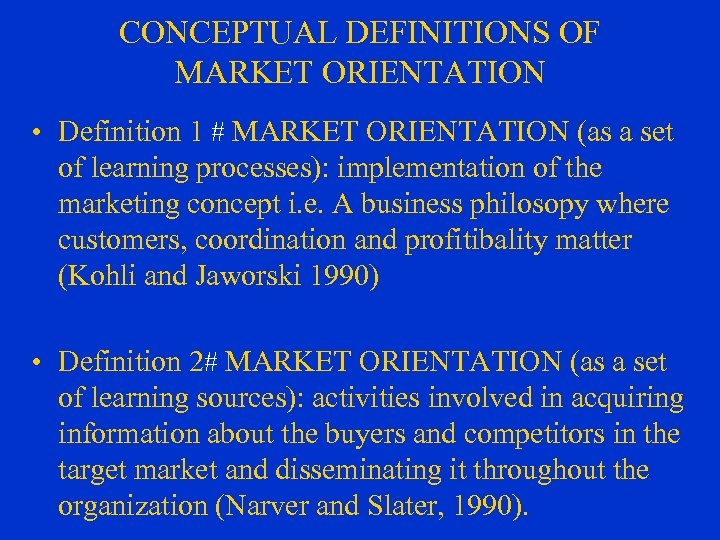

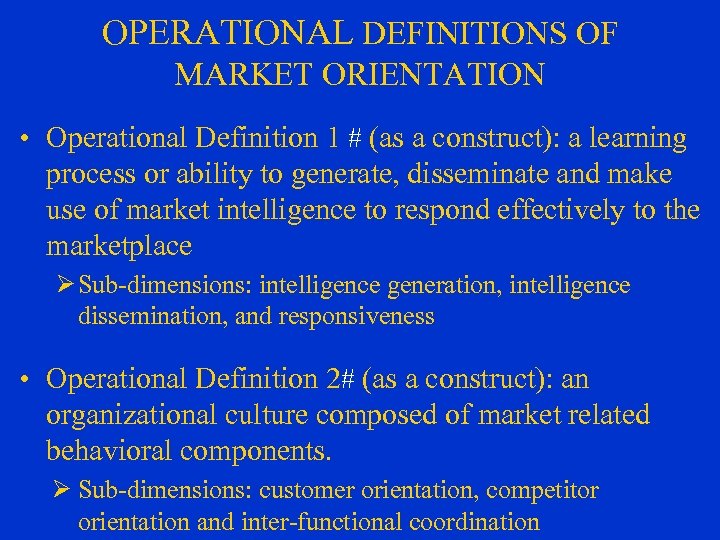

CONCEPTUAL DEFINITIONS OF MARKET ORIENTATION • Definition #1 MARKET ORIENTATION (as a set of learning processes): implementation of the marketing concept, i.e. a business philosophy where customers, coordination, and profitability matter (Kohli and Jaworski, 1990) • Definition #2 MARKET ORIENTATION (as a set of learning sources): activities involved in acquiring information about the buyers and competitors in the target market and disseminating it throughout the organization (Narver and Slater, 1990).

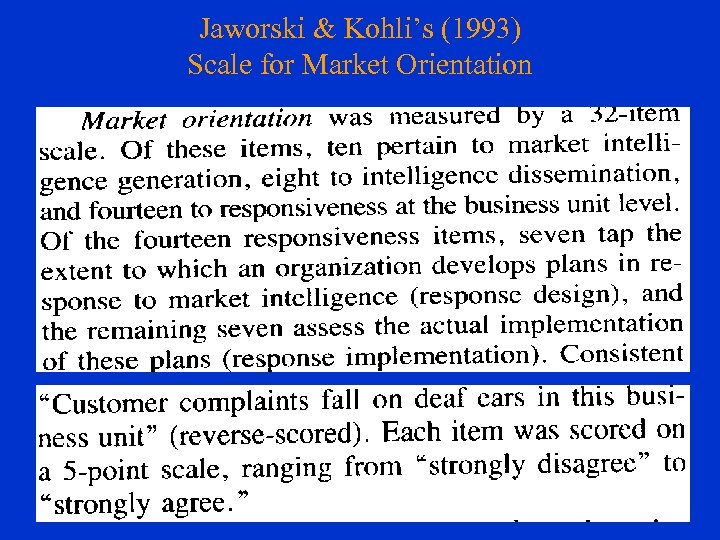

OPERATIONAL DEFINITIONS OF MARKET ORIENTATION • Operational Definition #1 (as a construct): a learning process or ability to generate, disseminate and make use of market intelligence to respond effectively to the marketplace – Sub-dimensions: intelligence generation, intelligence dissemination, and responsiveness • Operational Definition #2 (as a construct): an organizational culture composed of market-related behavioral components. – Sub-dimensions: customer orientation, competitor orientation and inter-functional coordination

2. SCALES To effectively carry out any measurement we need to use some form of a scale. A scale is any series of items (numbers) arranged along a continuous spectrum of values for the purpose of quantification (i.e. for the purpose of placing objects based on how much of an attribute they possess). The thermometer, for instance, consists of numbers arranged in a continuous spectrum to indicate the magnitude of “heat” possessed by an object.

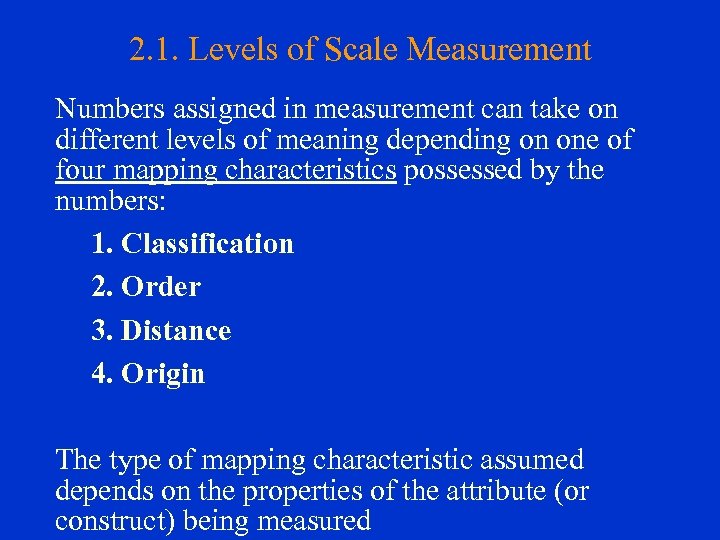

2.1. Levels of Scale Measurement Numbers assigned in measurement can take on different levels of meaning depending on one of four mapping characteristics possessed by the numbers: 1. Classification 2. Order 3. Distance 4. Origin The type of mapping characteristic assumed depends on the properties of the attribute (or construct) being measured.

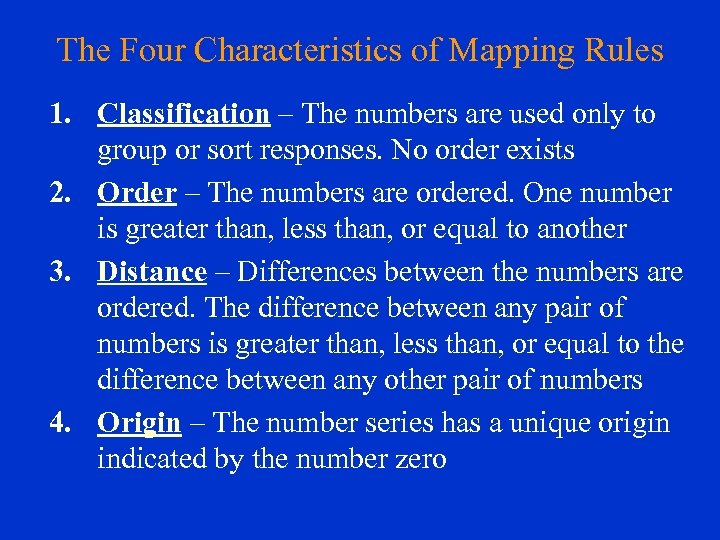

The Four Characteristics of Mapping Rules 1. Classification – The numbers are used only to group or sort responses. No order exists 2. Order – The numbers are ordered. One number is greater than, less than, or equal to another 3. Distance – Differences between the numbers are ordered. The difference between any pair of numbers is greater than, less than, or equal to the difference between any other pair of numbers 4. Origin – The number series has a unique origin indicated by the number zero

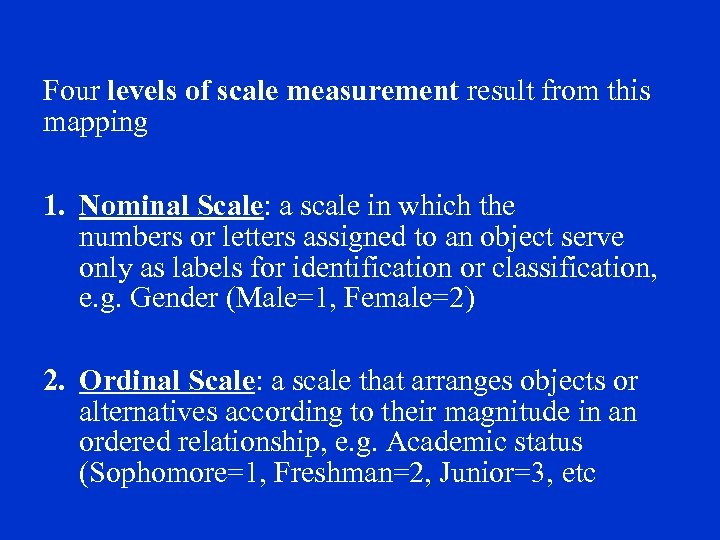

Four levels of scale measurement result from this mapping 1. Nominal Scale: a scale in which the numbers or letters assigned to an object serve only as labels for identification or classification, e.g. Gender (Male=1, Female=2) 2. Ordinal Scale: a scale that arranges objects or alternatives according to their magnitude in an ordered relationship, e.g. Academic status (Freshman=1, Sophomore=2, Junior=3, etc.)

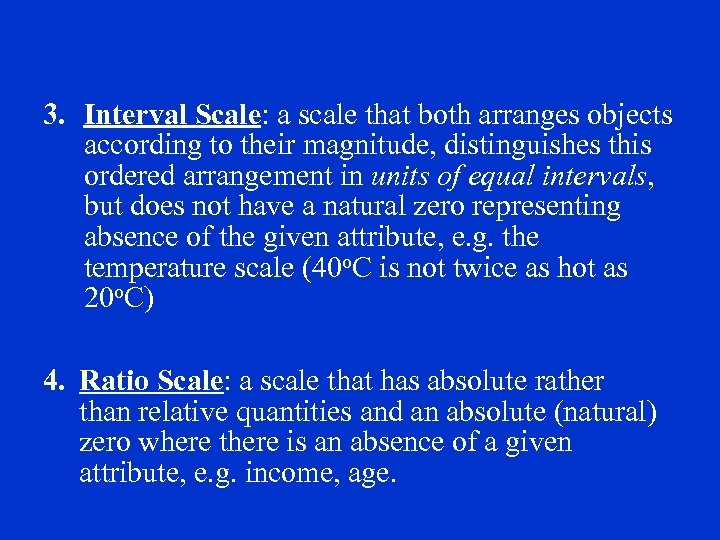

3. Interval Scale: a scale that arranges objects according to their magnitude and distinguishes this ordered arrangement in units of equal intervals, but does not have a natural zero representing absence of the given attribute, e.g. the temperature scale (40 °C is not twice as hot as 20 °C) 4. Ratio Scale: a scale that has absolute rather than relative quantities and an absolute (natural) zero where there is an absence of a given attribute, e.g. income, age.
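The temperature example can be checked with a few lines of Python. The conversion to kelvin is not part of the lecture; it is used here as an assumption-free physical fact (the kelvin scale has an absolute zero and is therefore a ratio scale) to show why ratios of Celsius values are not meaningful while differences are.

```python
def celsius_to_kelvin(celsius):
    """Convert to the kelvin scale, whose zero is the absence of thermal energy."""
    return celsius + 273.15

# Ratio computed on the interval scale: arithmetically 2.0, but not interpretable
naive_ratio = 40 / 20

# Ratio computed on a scale with a natural zero: only about 7% "hotter"
kelvin_ratio = celsius_to_kelvin(40) / celsius_to_kelvin(20)

# Differences (distances) are meaningful on both scales and agree with each other
celsius_difference = 40 - 20
kelvin_difference = celsius_to_kelvin(40) - celsius_to_kelvin(20)

print(naive_ratio, round(kelvin_ratio, 3), celsius_difference, round(kelvin_difference, 2))
```

Statements such as "twice as much" therefore require a ratio scale, whereas "20 degrees more" is already valid at the interval level.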

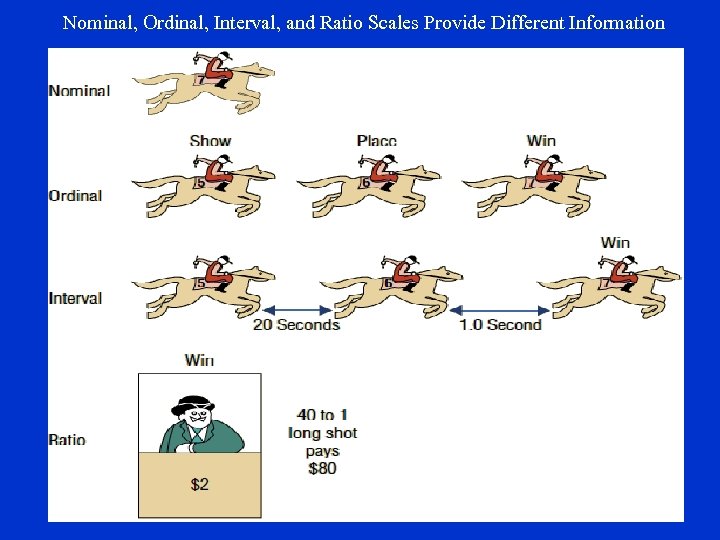

Nominal, Ordinal, Interval, and Ratio Scales Provide Different Information

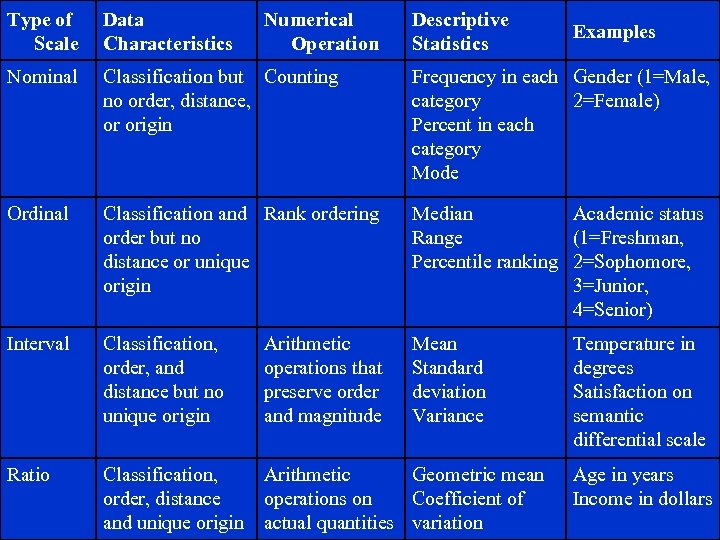

| Type of Scale | Data Characteristics | Numerical Operation | Descriptive Statistics | Examples |
|---|---|---|---|---|
| Nominal | Classification but no order, distance, or origin | Counting | Frequency in each category; Percent in each category; Mode | Gender (1=Male, 2=Female) |
| Ordinal | Classification and order but no distance or unique origin | Rank ordering | Median; Range; Percentile ranking | Academic status (1=Freshman, 2=Sophomore, 3=Junior, 4=Senior) |
| Interval | Classification, order, and distance but no unique origin | Arithmetic operations that preserve order and magnitude | Mean; Standard deviation; Variance | Temperature in degrees; Satisfaction on semantic differential scale |
| Ratio | Classification, order, distance and unique origin | Arithmetic operations on actual quantities | Geometric mean; Coefficient of variation | Age in years; Income in dollars |

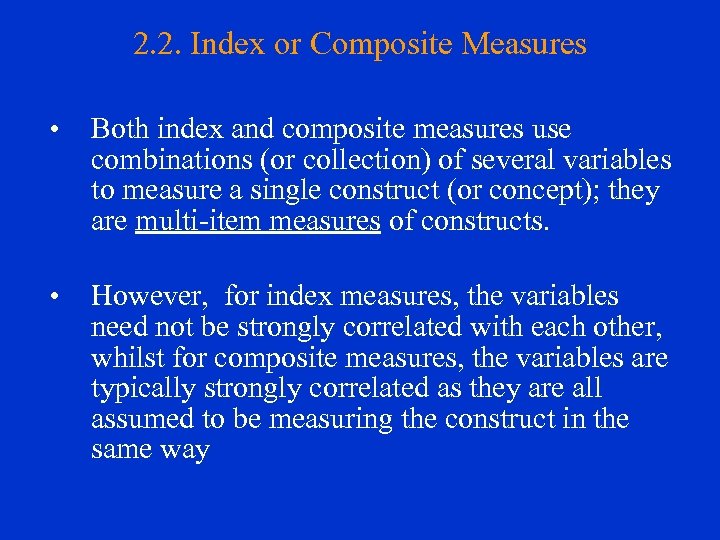

2.2. Index or Composite Measures • Both index and composite measures use combinations (or collections) of several variables to measure a single construct (or concept); they are multi-item measures of constructs. • However, for index measures, the variables need not be strongly correlated with each other, whilst for composite measures, the variables are typically strongly correlated, as they are all assumed to be measuring the construct in the same way.

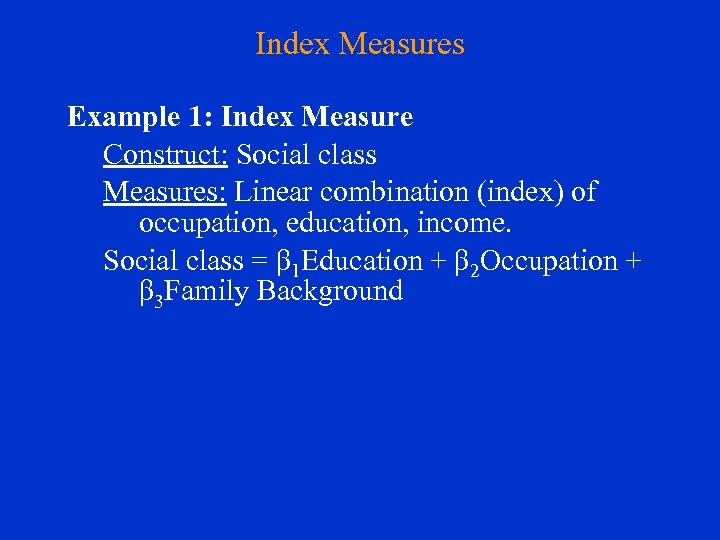

Index Measures Example 1: Index Measure Construct: Social class Measures: Linear combination (index) of occupation, education, income. Social class = β₁ Education + β₂ Occupation + β₃ Family Background

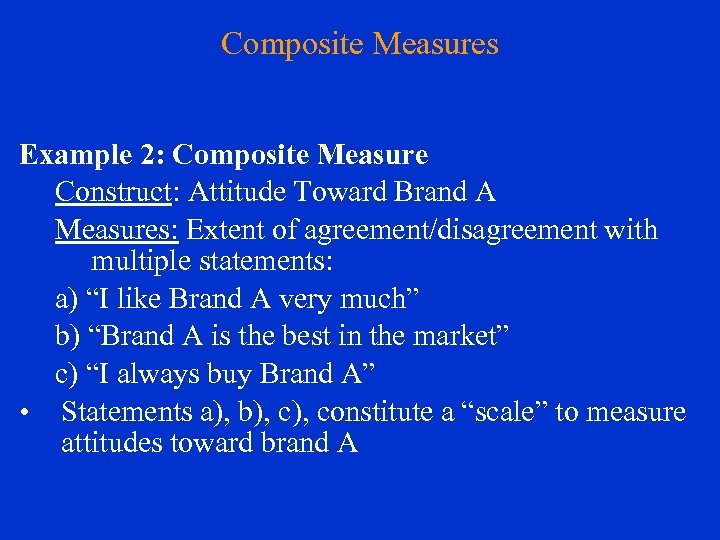

Composite Measures Example 2: Composite Measure Construct: Attitude Toward Brand A Measures: Extent of agreement/disagreement with multiple statements: a) “I like Brand A very much” b) “Brand A is the best in the market” c) “I always buy Brand A” • Statements a), b), and c) constitute a “scale” to measure attitudes toward Brand A

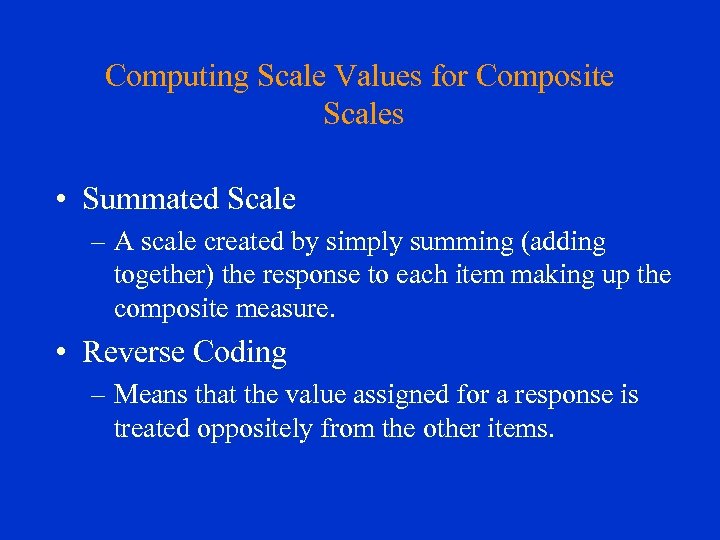

Computing Scale Values for Composite Scales • Summated Scale – A scale created by simply summing (adding together) the response to each item making up the composite measure. • Reverse Coding – Means that the value assigned for a response is treated oppositely from the other items.
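A minimal Python sketch of a summated scale with reverse coding follows. It assumes a 1-5 agreement scale and adds a hypothetical negatively worded item ("would_not_recommend") to the three brand statements; the item names and the responses are invented for illustration.

```python
def reverse_code(value, scale_min=1, scale_max=5):
    """Flip a response so that high agreement with a negative item scores low."""
    return scale_min + scale_max - value

def summated_score(responses, reverse_items=(), scale_min=1, scale_max=5):
    """Sum the item responses, reverse coding the items listed in reverse_items."""
    total = 0
    for item, value in responses.items():
        if not scale_min <= value <= scale_max:
            raise ValueError(f"response to {item!r} is outside the scale range")
        if item in reverse_items:
            value = reverse_code(value, scale_min, scale_max)
        total += value
    return total

# Hypothetical answers of one respondent (1 = strongly disagree, 5 = strongly agree)
responses = {
    "like_brand": 5,
    "best_in_market": 4,
    "always_buy": 4,
    "would_not_recommend": 2,  # negatively worded, so it is reverse coded to 4
}
score = summated_score(responses, reverse_items={"would_not_recommend"})
print(score)
```

Reverse coding before summing keeps all items pointing in the same direction, so a higher total always means a more favorable attitude.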

Jaworski & Kohli’s (1993) Scale for Market Orientation

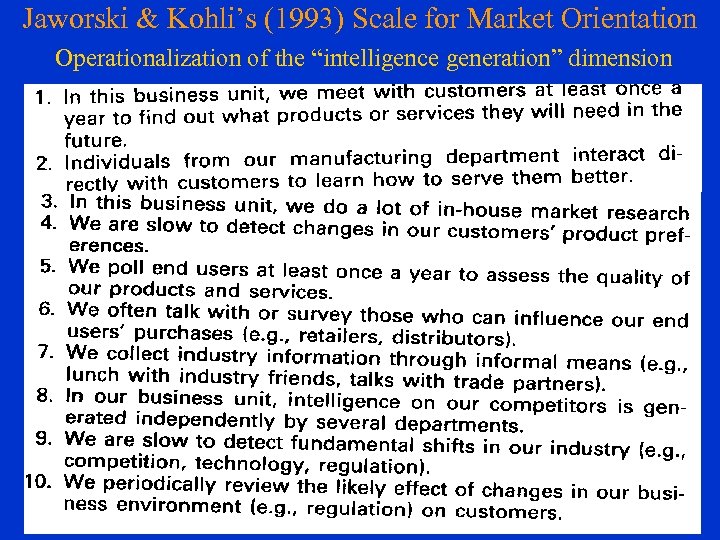

Jaworski & Kohli’s (1993) Scale for Market Orientation Operationalization of the “intelligence generation” dimension

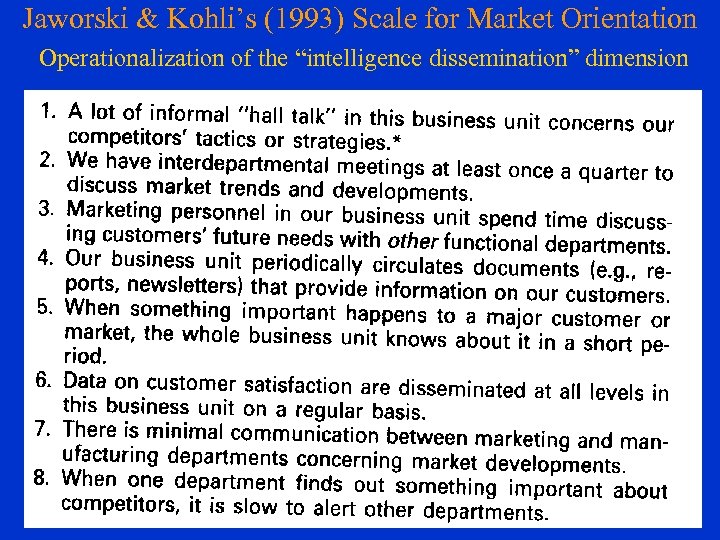

Jaworski & Kohli’s (1993) Scale for Market Orientation Operationalization of the “intelligence dissemination” dimension

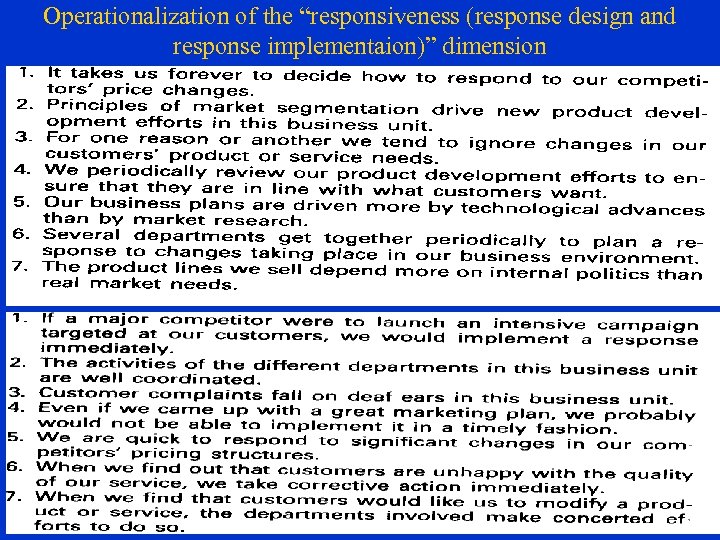

Operationalization of the “responsiveness (response design and response implementation)” dimension

V. CRITERIA FOR GOOD MEASUREMENT Three criteria are commonly used to assess the quality of measurement scales : 1. Reliability 2. Validity 3. Sensitivity

1. RELIABILITY • The degree to which a measure is free from random error and therefore gives consistent results. • An indicator of the measure’s internal consistency

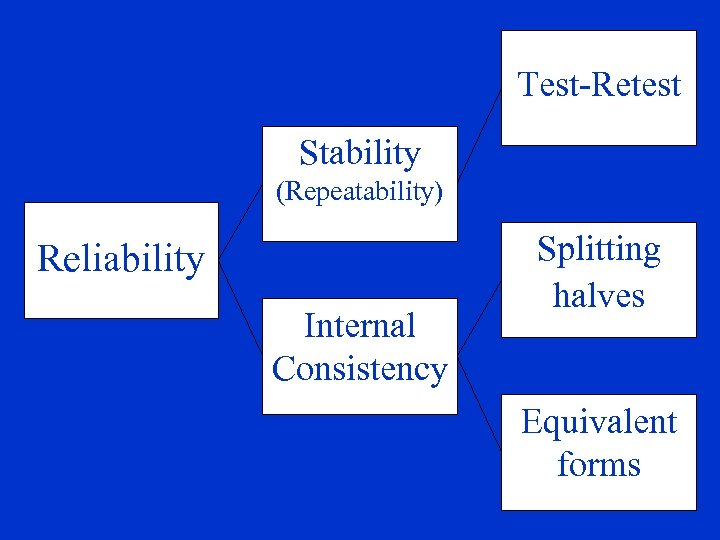

Reliability • Stability (Repeatability): Test-Retest • Internal Consistency: Splitting halves, Equivalent forms

a. Stability (Repeatability) • Stability: the extent to which results obtained with the measure can be reproduced. 1. Test-Retest Method • Administering the same scale or measure to the same respondents at two separate points in time to test for stability. 2. Test-Retest Reliability Problems • The pre-measure, or first measure, may sensitize the respondents and subsequently influence the results of the second measure. • Time effects that produce changes in attitude or other maturation of the subjects.
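One common way to quantify test-retest stability is the correlation between the two administrations. The lecture does not prescribe a statistic, so the use of the Pearson correlation here is an assumption, and the scores below are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariation / (spread_x * spread_y)

# Hypothetical scale scores of the same five respondents at two points in time
time_1 = [10, 12, 15, 18, 20]
time_2 = [11, 12, 14, 19, 21]

retest_r = pearson_r(time_1, time_2)
print(round(retest_r, 2))
```

A coefficient close to 1 suggests stable results, but the sensitization and time effects listed above can inflate or deflate it, so it should be read together with the length of the interval between the two measurements.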

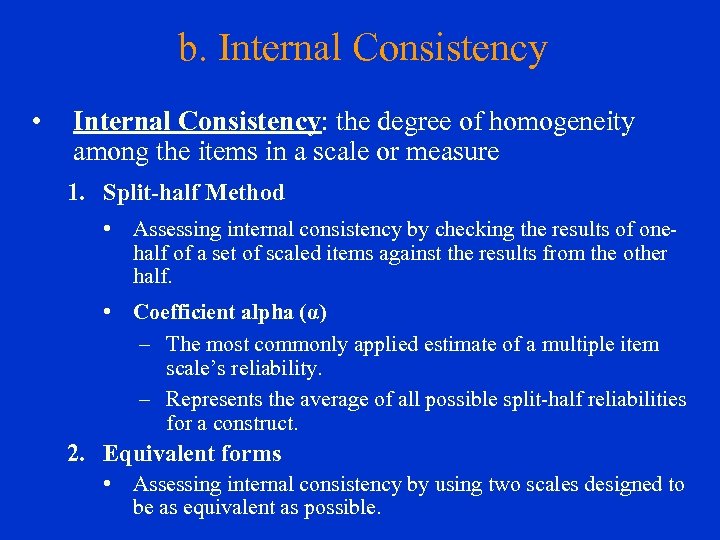

b. Internal Consistency • Internal Consistency: the degree of homogeneity among the items in a scale or measure 1. Split-half Method • Assessing internal consistency by checking the results of one half of a set of scaled items against the results from the other half. • Coefficient alpha (α) – The most commonly applied estimate of a multiple-item scale’s reliability. – Represents the average of all possible split-half reliabilities for a construct. 2. Equivalent forms • Assessing internal consistency by using two scales designed to be as equivalent as possible.

2. VALIDITY • The accuracy of a measure or the extent to which a score truthfully represents a concept. • The ability of a measure (scale) to measure what it is intended to measure. • Establishing validity involves answers to the following: – Is there a consensus that the scale measures what it is supposed to measure? – Does the measure correlate with other measures of the same concept? – Does the behavior expected from the measure predict actual observed behavior?
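Coefficient alpha can be computed with the standard variance-based formula, α = k/(k−1) · (1 − Σ item variances / variance of the total score), where k is the number of items. The sketch below uses that formula rather than literally averaging split halves, and the item scores are hypothetical.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Coefficient alpha; item_scores holds one list of respondent scores per item."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n_respondents = len(item_scores[0])
    if any(len(item) != n_respondents for item in item_scores):
        raise ValueError("every item needs a score from every respondent")
    # Total (summated) score of each respondent across all items
    totals = [sum(answers) for answers in zip(*item_scores)]
    item_variance_sum = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))

# Hypothetical 1-5 responses of five respondents to three items of one scale
item_a = [4, 5, 3, 2, 4]
item_b = [4, 4, 3, 2, 5]
item_c = [5, 5, 2, 3, 4]

alpha = cronbach_alpha([item_a, item_b, item_c])
print(round(alpha, 3))
```

Items that move together make the variance of the total score large relative to the sum of the item variances, which pushes alpha toward 1; unrelated items pull it toward 0.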

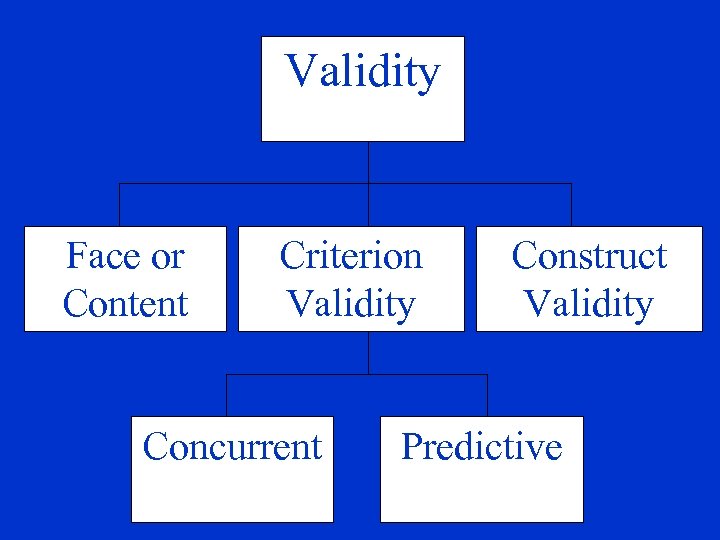

Validity • Face or Content Validity • Criterion Validity: Concurrent, Predictive • Construct Validity

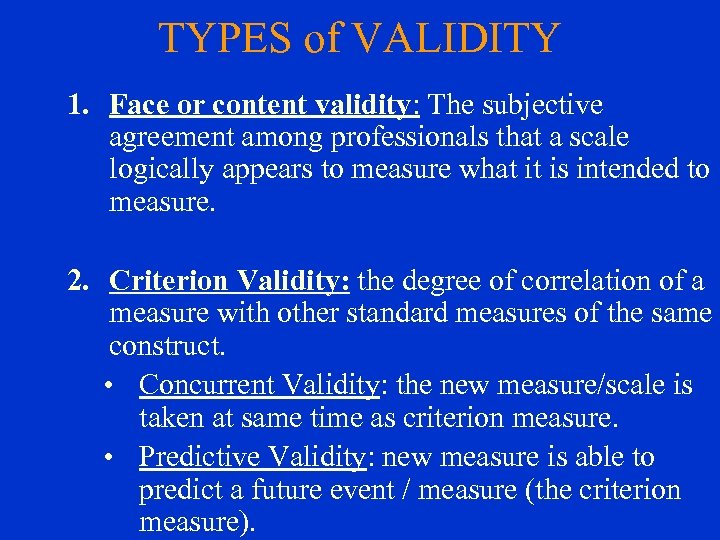

TYPES of VALIDITY 1. Face or content validity: The subjective agreement among professionals that a scale logically appears to measure what it is intended to measure. 2. Criterion Validity: the degree of correlation of a measure with other standard measures of the same construct. • Concurrent Validity: the new measure/scale is taken at same time as criterion measure. • Predictive Validity: new measure is able to predict a future event / measure (the criterion measure).

TYPES of VALIDITY 3. Construct Validity: degree to which a measure/scale confirms a network of related hypotheses generated from theory based on the concepts. • Convergent Validity. • Discriminant Validity.

Relationship Between Reliability & Validity 1. A measure that is not reliable cannot be valid, i.e. for a measure to be valid, it must be reliable. Thus, reliability is a necessary condition for validity. 2. A measure that is reliable is not necessarily valid; indeed, a measure can be reliable but not valid. Thus, reliability is not a sufficient condition for validity. 3. Therefore, reliability is a necessary but not sufficient condition for validity.

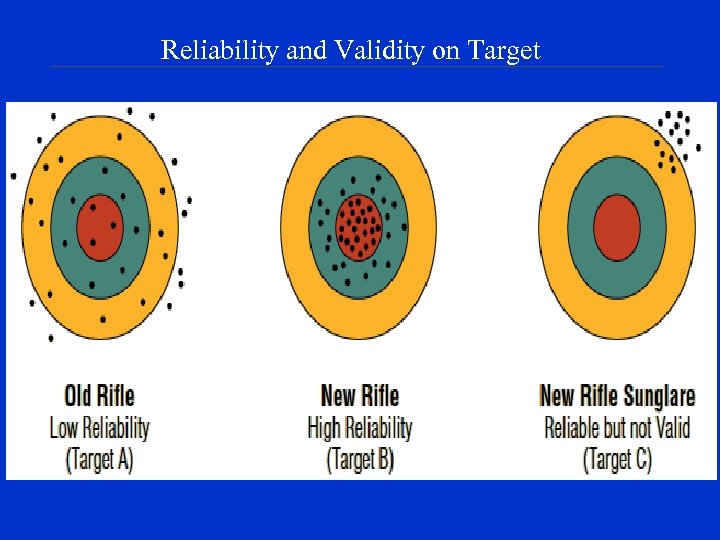

Reliability and Validity on Target

3. SENSITIVITY • The ability of a measure/scale to accurately measure variability in stimuli or responses; • The ability of a measure/scale to make fine distinctions among respondents or objects with different levels of the attribute (construct). – Example - A typical bathroom scale is not sensitive enough to be used to measure the weight of jewelry; it cannot make fine distinctions among objects with very small weights.

• Because composite measures allow for a greater range of possible scores, they are more sensitive than single-item scales. • Sensitivity is generally increased by adding more response points or adding scale items.

VI. EXTENDED ABSTRACT FORMAT • Author(s): • Title: • Journal: • Theoretical context and originality: • Theoretical definitions of the variables: • Research questions: • (Hypothesized) relations and their rationale: • Measurement scales of the variables:

Extended Abstract Format, cont. • Research Method: • Findings: • Managerial Implications: • Limitations of the current research: • Further research implications: • Conclusion:

References Main Textbook: • William G. Zikmund’s Business Research Methods Other Textbook: • Donald R. Cooper and Pamela S. Schindler’s Business Research Methods Lecture Notes: • Dr. Alhassan G. Abdul-Muhmin
