a49ef0187c17c251666427571f982367.ppt

- Number of slides: 62

Building PetaByte Servers

Jim Gray, Microsoft Research (Gray@Microsoft.com, http://www.Research.Microsoft.com/~Gray/talks)

Kilo (10^3), Mega (10^6), Giga (10^9), Tera (10^12), Peta (10^15), Exa (10^18); the slide marks "today, we are here" on this scale.

Outline
- The challenge: building GIANT data stores
  - for example, the EOS/DIS 15 PB system
- Conclusion 1: think about MOX and SCANS
- Conclusion 2: think about clusters

The Challenge: EOS/DIS
- Antarctica is melting: 77% of fresh water liberated
  - sea level rises 70 meters
  - Chico & Memphis are beach-front property
  - New York, Washington, SF, LA, London, Paris
- Let's study it! Mission to Planet Earth
- EOS: Earth Observing System (17 B$ => 10 B$)
  - 50 instruments on 10 satellites, 1997-2001
  - Landsat (added later)
- EOS DIS: Data Information System
  - 3-5 MB/s raw, 30-50 MB/s processed
  - 4 TB/day
  - 15 PB by year 2007
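
A rough check of how the processed-data rate adds up to the daily and archive totals. This sketch assumes decimal units (1 TB = 10^12 bytes), the 50 MB/s upper end of the processed rate sustained around the clock, and about ten years of operation; those assumptions are ours, not the slide's.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def bytes_per_day(rate_mb_per_s: float) -> float:
    """Bytes produced per day by a sustained rate given in MB/s (decimal MB)."""
    return rate_mb_per_s * 1e6 * SECONDS_PER_DAY


def archive_bytes(rate_mb_per_s: float, years: float) -> float:
    """Bytes accumulated after `years` at a constant rate."""
    return bytes_per_day(rate_mb_per_s) * DAYS_PER_YEAR * years


daily_tb = bytes_per_day(50) / 1e12       # about 4.3 TB/day
total_pb = archive_bytes(50, 10) / 1e15   # about 15.8 PB after ten years
print(f"{daily_tb:.1f} TB/day, {total_pb:.1f} PB")
```

At 50 MB/s the results line up with the slide's 4 TB/day and 15 PB by 2007.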

The Process Flow
- Data arrives and is pre-processed
  - instrument data is calibrated, gridded, and averaged
  - geophysical data is derived
- Users ask for stored data OR ask to analyze and combine data
- Can make the pull-push split dynamically
[Figure: push processing, pull processing, other data]

Designing EOS/DIS
- Expect that millions will use the system (online). Three user categories:
  - NASA, 500: funded by NASA to do science
  - Global Change, 10 k: other dirt bags
  - Internet, 20 m: everyone else (grain speculators, environmental impact reports, new applications)
  - => discovery & access must be automatic
- Allow anyone to set up a peer node (DAAC & SCF)
- Design for ad hoc queries, not standard data products
  - If push is 90%, then only 10% of the data is read (on average)
  - => A failure: no one uses the data. In DSS, push is 1% or less
  - => computation demand is enormous (pull:push is 100:1)

The Architecture
- 2+N data center design
- Scalable OR-DBMS
- Emphasize pull vs. push processing
- Storage hierarchy
- Data pump
- Just-in-time acquisition

Obvious Point: EOS/DIS will be a cluster of SMPs
- It needs 16 PB of storage
  - = 1 M disks in current technology
  - = 500 K tapes in current technology
- It needs 100 TeraOps of processing
  - = 100 K processors (current technology)
  - and ~100 terabytes of DRAM
- 1997 requirements are 1000x smaller
  - smaller data rate
  - almost no re-processing work
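
Dividing the requirements by the unit counts shows the per-device capacity and speed the slide assumes for "current technology". Decimal units are assumed throughout.

```python
# Requirements as stated on the slide (decimal units).
STORAGE_BYTES = 16e15   # 16 PB
DISKS = 1_000_000       # 1 M disks
TAPES = 500_000         # 500 K tapes
OPS_PER_S = 100e12      # 100 TeraOps
PROCESSORS = 100_000    # 100 K processors

gb_per_disk = STORAGE_BYTES / DISKS / 1e9     # 16 GB per disk
gb_per_tape = STORAGE_BYTES / TAPES / 1e9     # 32 GB per tape
gops_per_cpu = OPS_PER_S / PROCESSORS / 1e9   # 1 GigaOp/s per processor
print(gb_per_disk, gb_per_tape, gops_per_cpu)
```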

2+N Data Center Design
- Duplex the archive (for fault tolerance)
- Let anyone build an extract (the +N)
- Partition data by time and by space (store 2 or 4 ways)
- Each partition is a free-standing OR-DBMS (similar to Tandem, Teradata designs)
- Clients and partitions interact via standard protocols
  - OLE-DB, DCOM/CORBA, HTTP, …
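
A minimal sketch of partitioning by time and by space. The 7-day buckets, 10-degree cells, and hash-based placement of two replicas (one way to read "duplex the archive") are illustrative assumptions, not the EOS/DIS design.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Observation:
    when: datetime
    lat: float  # degrees, -90..90
    lon: float  # degrees, -180..180


def partition_key(obs: Observation, days_per_bucket: int = 7,
                  cell_deg: float = 10.0) -> tuple[int, int, int]:
    """Map an observation to a (time bucket, latitude cell, longitude cell) key."""
    time_bucket = obs.when.toordinal() // days_per_bucket
    lat_cell = int((obs.lat + 90.0) // cell_deg)
    lon_cell = int((obs.lon + 180.0) // cell_deg)
    return time_bucket, lat_cell, lon_cell


def replica_nodes(key: tuple[int, int, int], nodes: int, copies: int = 2) -> list[int]:
    """Spread `copies` replicas of one partition over distinct nodes (needs nodes >= copies)."""
    base = hash(key) % nodes
    step = nodes // copies
    return [(base + i * step) % nodes for i in range(copies)]


key = partition_key(Observation(datetime(1998, 6, 1), lat=-75.0, lon=0.0))
print(key, replica_nodes(key, nodes=50))
```

Because each key names one time slice of one region, a query over a date range or a geographic box touches only the partitions it needs.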

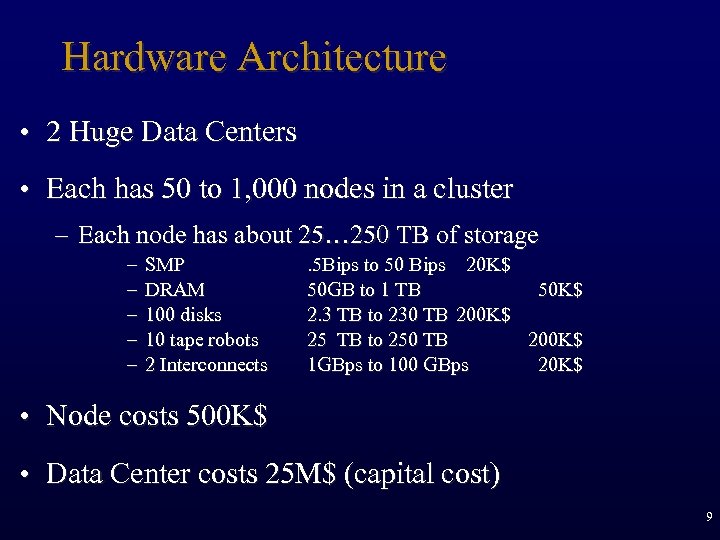

Hardware Architecture
- 2 huge data centers
- Each has 50 to 1,000 nodes in a cluster
  - Each node has about 25…250 TB of storage

| Node component | Capacity | Cost |
|---|---|---|
| SMP | 0.5 Bips to 50 Bips | 20 K$ |
| DRAM | 50 GB to 1 TB | 50 K$ |
| 100 disks | 2.3 TB to 230 TB | 200 K$ |
| 10 tape robots | 25 TB to 250 TB | 200 K$ |
| 2 interconnects | 1 GBps to 100 GBps | 20 K$ |

- Node costs 500 K$
- Data center costs 25 M$ (capital cost)
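
Summing the per-node component costs reproduces the node and data-center totals. The 50-node count behind the 25 M$ figure is our reading (the low end of the stated range).

```python
# Per-node component costs from the hardware table, in K$.
NODE_PARTS_KUSD = {
    "SMP": 20,
    "DRAM": 50,
    "100 disks": 200,
    "10 tape robots": 200,
    "2 interconnects": 20,
}

node_kusd = sum(NODE_PARTS_KUSD.values())   # 490 K$, rounded to 500 K$ on the slide
nodes = 50                                   # low end of "50 to 1,000 nodes"
center_musd = nodes * 500 / 1_000            # 25 M$ at the rounded node price
print(node_kusd, center_musd)
```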

Scalable OR-DBMS
- Adopt cluster approach (Tandem, Teradata, VMScluster, ...)
- System must scale to many processors, disks, links
- OR-DBMS based on standard object model
  - CORBA or DCOM (not vendor specific)
- Grow by adding components
- System must be self-managing

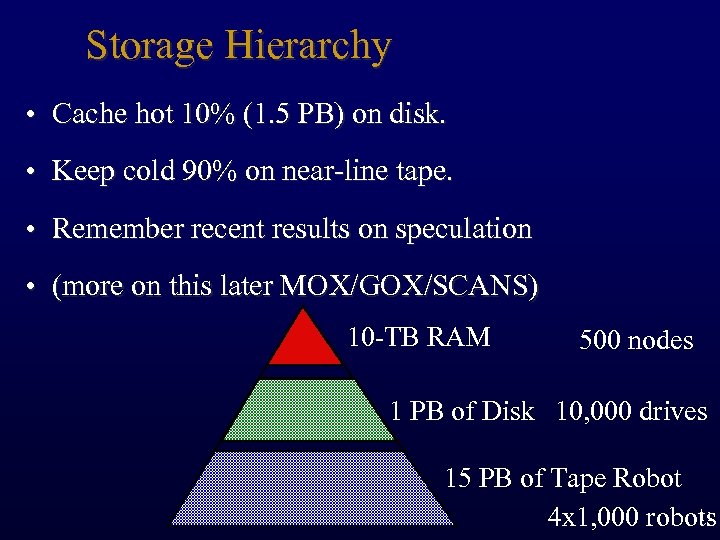

Storage Hierarchy
- Cache the hot 10% (1.5 PB) on disk
- Keep the cold 90% on near-line tape
- Remember recent results on speculation
- (more on this later: MOX/GOX/SCANS)
[Figure: 10 TB of RAM in 500 nodes; 1 PB of disk on 10,000 drives; 15 PB in tape robots (4 x 1,000 robots)]

Data Pump
- Some queries require reading ALL the data (for reprocessing)
- Each data center scans the data every 2 weeks
  - Data rate 10 PB/day = 10 TB/node/day = 120 MB/s
- Compute small jobs on demand
  - less than 1,000 tape mounts
  - less than 100 M disk accesses
  - less than 100 TeraOps
  - (less than 30 minute response time)
- For BIG JOBS, scan the entire 15 PB database
- Queries (and extracts) "snoop" this data pump
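
A data pump can be pictured as a shared scan: instead of each query reading the archive on its own, queries attach to one sequential pass and filter what flows by. The class below is a hypothetical in-memory sketch (partitions are lists of dict records); a real pump would stream from tape and disk on every node in parallel. The helper also converts 10 TB/node/day into a sustained rate: about 116 MB/s, which the slide rounds to 120 MB/s.

```python
from typing import Callable, Iterable

Record = dict  # hypothetical record type: field name -> value


class DataPump:
    """Shared-scan sketch: one sequential pass over the data feeds every attached query."""

    def __init__(self) -> None:
        self._queries: list[tuple[Callable[[Record], bool], list[Record]]] = []

    def attach(self, predicate: Callable[[Record], bool]) -> list[Record]:
        """Register a query; records that satisfy it are appended to the returned list."""
        results: list[Record] = []
        self._queries.append((predicate, results))
        return results

    def run(self, partitions: Iterable[Iterable[Record]]) -> int:
        """Read each partition once, offering every record to all attached queries."""
        scanned = 0
        for partition in partitions:
            for record in partition:
                scanned += 1
                for predicate, results in self._queries:
                    if predicate(record):
                        results.append(record)
        return scanned


def mb_per_s(tb_per_day: float) -> float:
    """Sustained MB/s needed to move `tb_per_day` terabytes per day (decimal units)."""
    return tb_per_day * 1e12 / 86_400 / 1e6


pump = DataPump()
hot = pump.attach(lambda r: r["temp_c"] > 30)
polar = pump.attach(lambda r: abs(r["lat"]) > 60)
scanned = pump.run([
    [{"temp_c": 35, "lat": 10}, {"temp_c": -20, "lat": -75}],
    [{"temp_c": 31, "lat": 65}],
])
print(scanned, len(hot), len(polar), round(mb_per_s(10)))
```

The cost of the scan is paid once however many queries snoop it, which is what makes a full pass over the archive every two weeks affordable.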

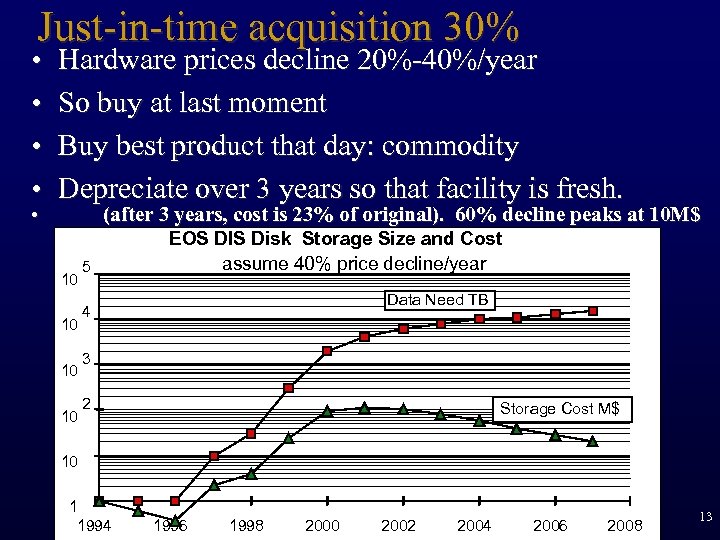

Just-in-Time Acquisition
- Hardware prices decline 20%-40%/year
- So buy at the last moment
- Buy the best product that day: commodity
- Depreciate over 3 years so that the facility is fresh
  - (after 3 years, cost is 23% of original)
[Figure: "EOS DIS Disk Storage Size and Cost", 1994-2008, assuming 40% price decline/year; axes: data need (TB) and storage cost (M$); annotations: 30%, "60% decline peaks at 10 M$"]
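
The 3-year depreciation figure follows from compounding the yearly decline. Assuming a constant rate, 40%/year leaves about 22% after three years, close to the slide's 23%; the slow end of the range (20%/year) leaves about half.

```python
def remaining_fraction(annual_decline: float, years: int) -> float:
    """Fraction of the purchase price that equivalent hardware costs after `years`."""
    return (1.0 - annual_decline) ** years


for decline in (0.20, 0.30, 0.40):
    print(f"{decline:.0%}/year -> {remaining_fraction(decline, 3):.1%} after 3 years")
```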

Problems
- HSM
- Design and meta-data
- Ingest
- Data discovery, search, and analysis
- Reorg-reprocess
- Disaster recovery
- Cost

What This System Teaches Us
- Traditional storage metrics
  - KOX: KB objects accessed per second
  - $/GB: storage cost
- New metrics
  - MOX: megabyte objects accessed per second
  - SCANS: time to scan the archive

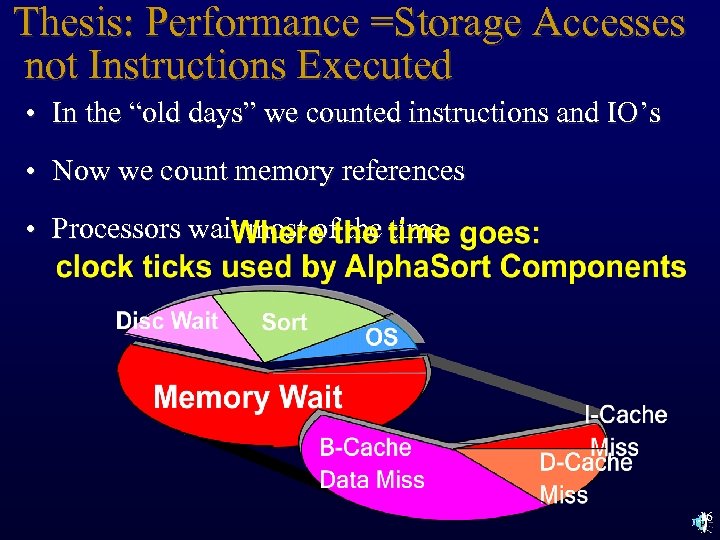

Thesis: Performance = Storage Accesses, Not Instructions Executed
- In the "old days" we counted instructions and I/Os
- Now we count memory references
- Processors wait most of the time

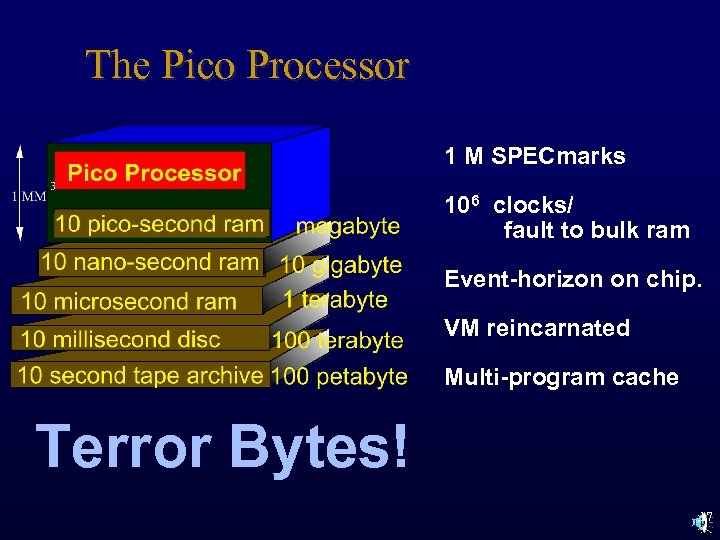

The Pico Processor
- 1 M SPECmarks
- 10^6 clocks per fault to bulk RAM
- Event horizon on chip
- VM reincarnated
- Multi-program cache
- Terror Bytes!

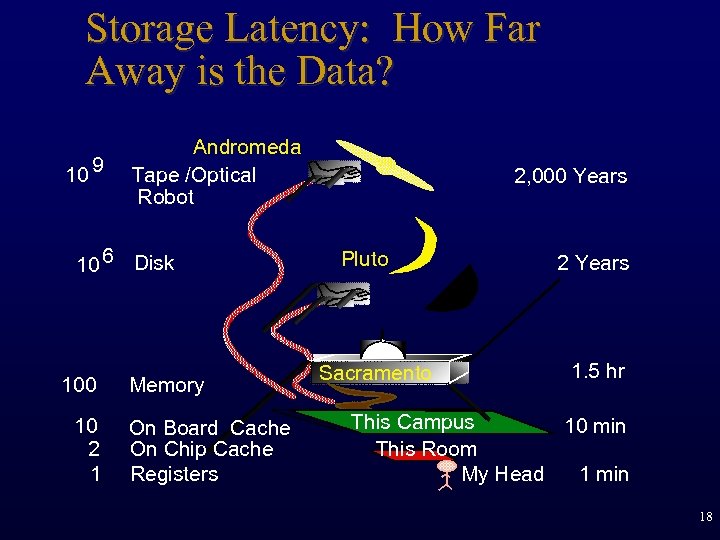

Storage Latency: How Far Away Is the Data?

| Level | Relative latency (clocks) | Analogy |
|---|---|---|
| Registers | 1 | My head (1 min) |
| On-chip cache | 2 | This room |
| On-board cache | 10 | This campus (10 min) |
| Memory | 100 | Sacramento (1.5 hr) |
| Disk | 10^6 | Pluto (2 years) |
| Tape/optical robot | 10^9 | Andromeda (2,000 years) |
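
The analogy column works out if one clock tick is stretched to one minute of human time (an inference from the 1 clock = 1 min and 100 clocks = 1.5 hr rows, not something the slide states). The sketch converts each level; it gives about 1.7 hr for memory and about 1,900 years for tape, which the slide rounds.

```python
MINUTES_PER_HOUR = 60
MINUTES_PER_YEAR = 60 * 24 * 365


def human_time(clocks: float) -> str:
    """Express a latency in clock ticks as human time, at one minute per tick."""
    minutes = clocks
    if minutes < MINUTES_PER_HOUR:
        return f"{minutes:g} min"
    if minutes < MINUTES_PER_YEAR / 12:
        return f"{minutes / MINUTES_PER_HOUR:.1f} hr"
    return f"{minutes / MINUTES_PER_YEAR:,.0f} years"


LEVELS = {
    "Registers": 1,
    "On-chip cache": 2,
    "On-board cache": 10,
    "Memory": 100,
    "Disk": 1e6,
    "Tape/optical robot": 1e9,
}
for name, clocks in LEVELS.items():
    print(f"{name:<20} {human_time(clocks)}")
```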

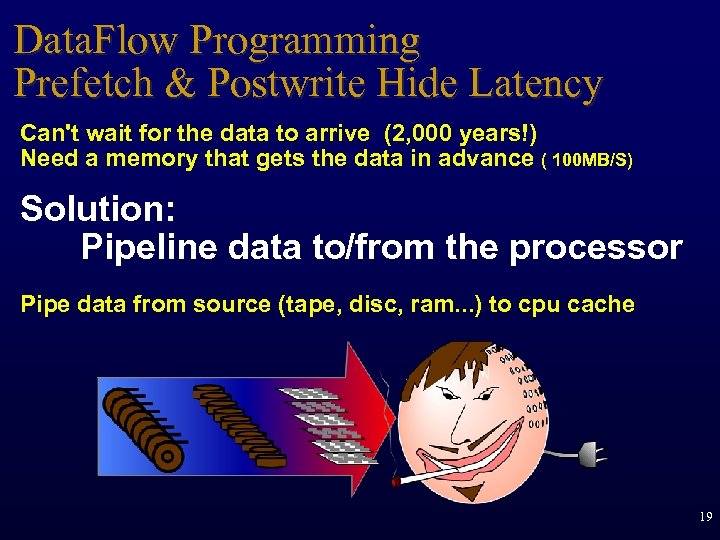

DataFlow Programming: Prefetch & Postwrite Hide Latency
- Can't wait for the data to arrive (2,000 years!)
- Need a memory that gets the data in advance (100 MB/s)
- Solution: pipeline data to/from the processor
  - pipe data from the source (tape, disc, RAM, ...) to the CPU cache
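
A minimal read-ahead pipeline, as one way to picture prefetch: a background thread pulls from the source into a bounded buffer while the consumer works on earlier items. Python threads stand in for a device controller here (an assumption for illustration); postwrite is the mirror image, with the consumer handing writes to a buffer and moving on.

```python
import queue
import threading
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel that marks the end of the stream


def prefetch(source: Iterable[T], depth: int = 4) -> Iterator[T]:
    """Yield items from `source`, read ahead on a background thread.

    Up to `depth` items wait in a bounded buffer, so the consumer rarely
    stalls on the source and the source never runs unboundedly ahead.
    """
    buf: queue.Queue = queue.Queue(maxsize=depth)
    errors: list[BaseException] = []

    def producer() -> None:
        try:
            for item in source:
                buf.put(item)   # blocks while the buffer is full (flow control)
        except BaseException as exc:
            errors.append(exc)  # hand the failure to the consumer
        finally:
            buf.put(_DONE)      # always wake the consumer at the end

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            if errors:
                raise errors[0]
            return
        yield item
```

Usage is a drop-in wrapper, e.g. `for chunk in prefetch(chunks, depth=8): process(chunk)`. If the consumer stops early, the daemon producer simply stays blocked until the process exits.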

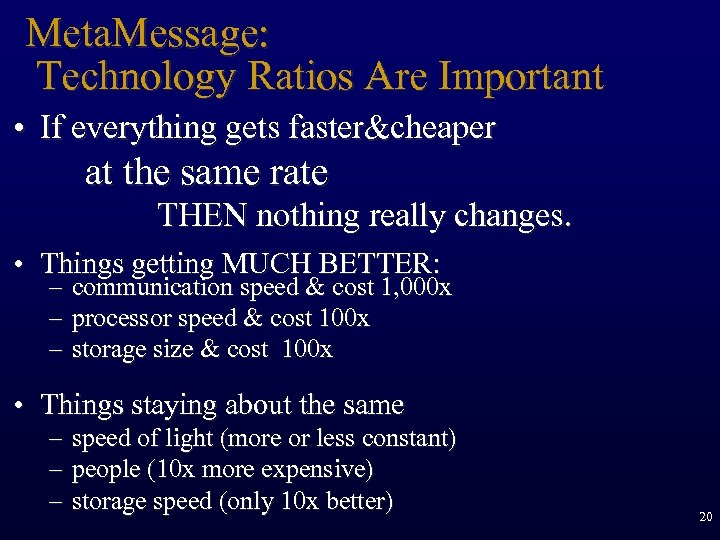

MetaMessage: Technology Ratios Are Important
- If everything gets faster & cheaper at the same rate, THEN nothing really changes.
- Things getting MUCH BETTER:
  - communication speed & cost: 1,000x
  - processor speed & cost: 100x
  - storage size & cost: 100x
- Things staying about the same:
  - speed of light (more or less constant)
  - people (10x more expensive)
  - storage speed (only 10x better)

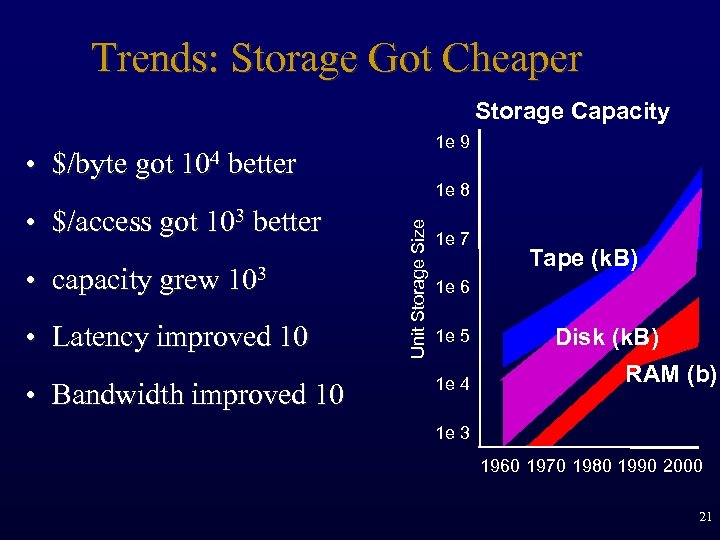

Trends: Storage Got Cheaper
- $/byte got 10^4 better
- Capacity grew 10^3
- Latency improved 10x
- Bandwidth improved 10x
- $/access got 10^3 better
[Figure: "Storage Capacity", unit storage size vs. year (1960-2000) for tape (kB), disk (kB), and RAM (b)]

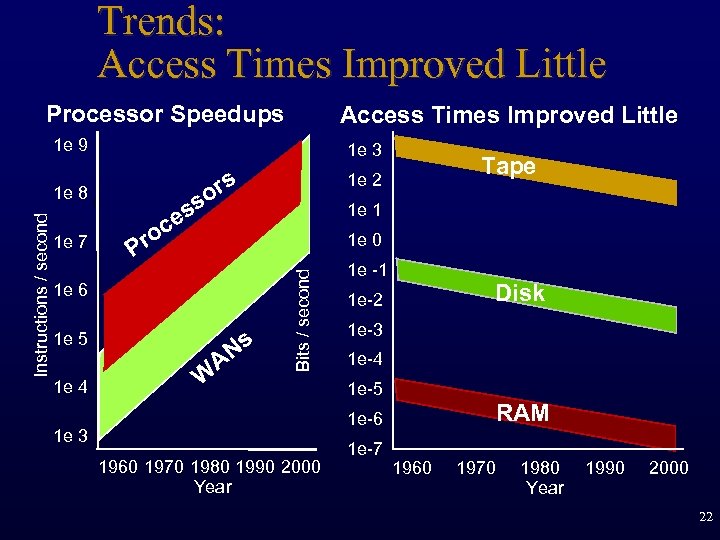

Trends: Access Times Improved Little
[Figure, two panels, 1960-2000: "Processor Speedups" (instructions/second and bits/second; processors, WANs) and "Access Times Improved Little" (seconds; tape, disk, RAM)]

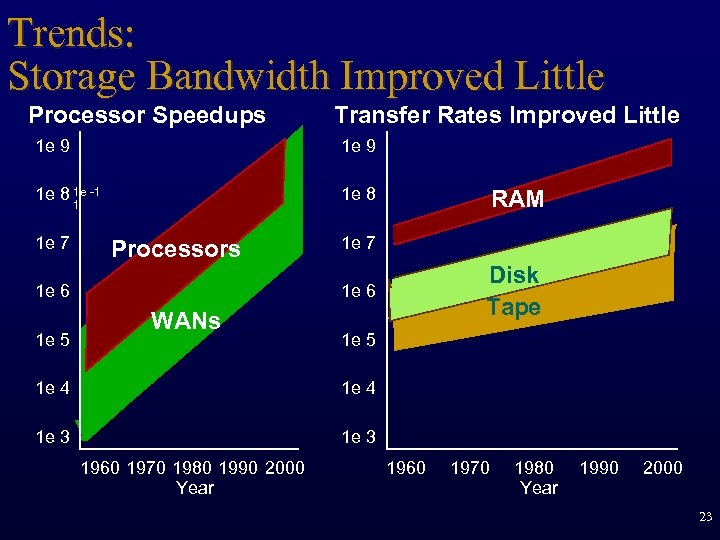

Trends: Storage Bandwidth Improved Little
[Figure, two panels, 1960-2000: "Processor Speedups" (processors) and "Transfer Rates Improved Little" (RAM, disk, tape, WANs)]

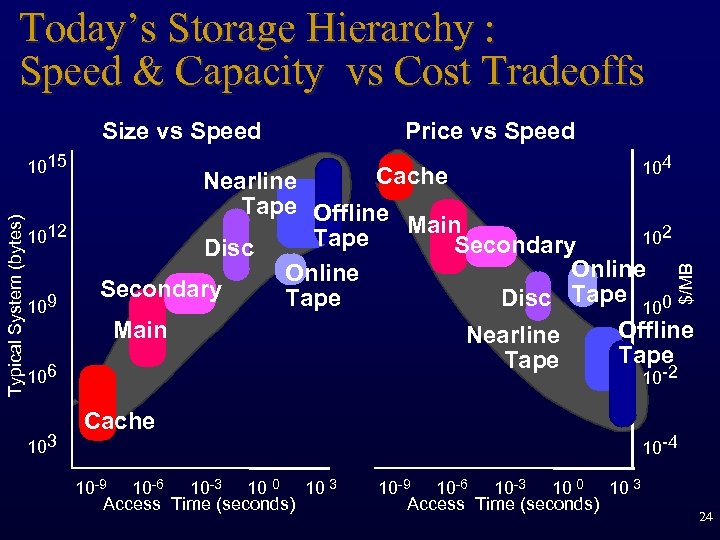

Today's Storage Hierarchy: Speed & Capacity vs. Cost Tradeoffs
[Figure, two panels: "Size vs Speed" (typical system size in bytes vs. access time in seconds) and "Price vs Speed" ($/MB vs. access time in seconds), each placing cache, main memory, secondary disc, online tape, nearline tape, and offline tape]

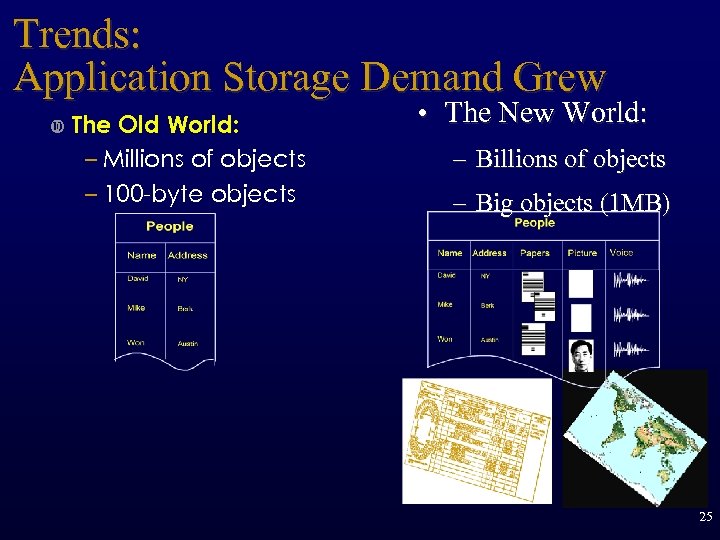

Trends: Application Storage Demand Grew
- The old world:
  - millions of objects
  - 100-byte objects
- The new world:
  - billions of objects
  - big objects (1 MB)

Trends: New Applications
- Multimedia: text, voice, image, video, ...
- The paperless office
- Library of Congress online (on your campus)
- All information comes electronically: entertainment, publishing, business
- Information Network, Knowledge Navigator, Information at Your Fingertips

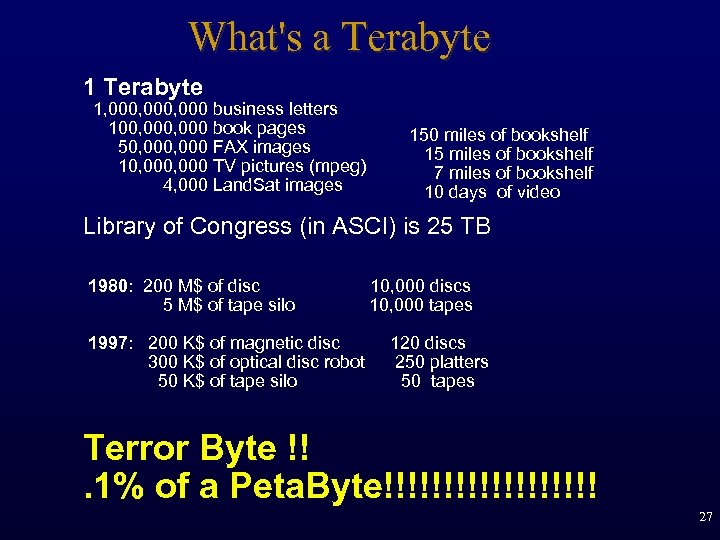

What's a Terabyte?

| Content | Equivalent |
|---|---|
| 1,000,000,000 business letters | 150 miles of bookshelf |
| 100,000,000 book pages | 15 miles of bookshelf |
| 50,000,000 FAX images | 7 miles of bookshelf |
| 10,000,000 TV pictures (mpeg) | 10 days of video |
| 4,000 LandSat images | |

- Library of Congress (in ASCII) is 25 TB
- 1980: 200 M$ of disc (10,000 discs); 5 M$ of tape silo (10,000 tapes)
- 1997: 200 K$ of magnetic disc (120 discs); 300 K$ of optical disc robot (250 platters); 50 K$ of tape silo (50 tapes)
- Terror Byte!! 0.1% of a PetaByte!!!
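
The 1980 and 1997 prices for a terabyte imply an average yearly price decline. The sketch assumes a constant compound rate between the two dates; both results fall inside the 20%-40%/year range quoted on the just-in-time acquisition slide.

```python
def annual_decline(old_price: float, new_price: float, years: float) -> float:
    """Constant yearly decline that turns `old_price` into `new_price` over `years`."""
    return 1.0 - (new_price / old_price) ** (1.0 / years)


YEARS = 1997 - 1980
disc = annual_decline(200e6, 200e3, YEARS)   # about 33% per year
tape = annual_decline(5e6, 50e3, YEARS)      # about 24% per year
print(f"disc {disc:.0%}/year, tape silo {tape:.0%}/year")
```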

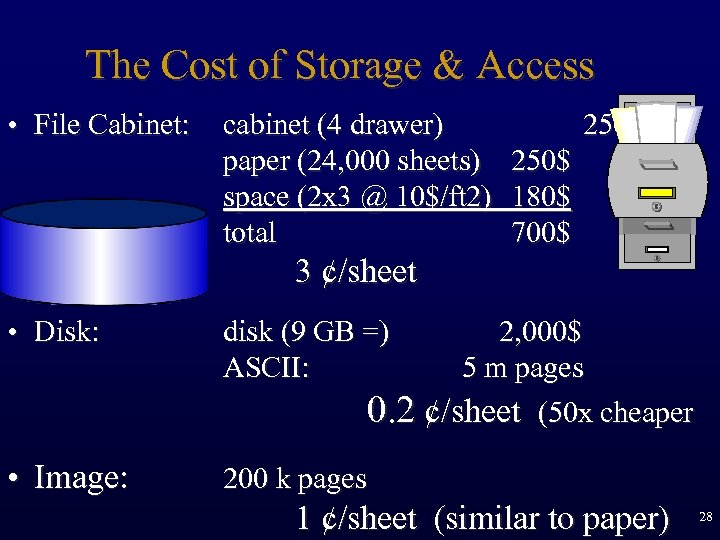

The Cost of Storage & Access
- File cabinet: cabinet (4 drawer) 250$; paper (24,000 sheets) 250$; space (2 x 3 @ 10$/ft²) 180$; total 700$, or 3 ¢/sheet
- Disk: disk (9 GB) = 2,000$
  - ASCII: 5 m pages, 0.2 ¢/sheet (50x cheaper)
  - Image: 200 k pages, 1 ¢/sheet (similar to paper)
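
The per-sheet figures are total cost over sheet count. The sketch checks the file-cabinet line (whose parts add to 680$, which the slide rounds to 700$) and the scanned-image line.

```python
def cents_per_sheet(total_dollars: float, sheets: float) -> float:
    """Storage cost per page, in cents."""
    return 100.0 * total_dollars / sheets


cabinet = cents_per_sheet(250 + 250 + 180, 24_000)   # about 2.8 cents (slide rounds to 3)
disk_image = cents_per_sheet(2_000, 200_000)          # 1.0 cent per scanned page
print(f"cabinet {cabinet:.1f} c/sheet, disk image {disk_image:.1f} c/sheet")
```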

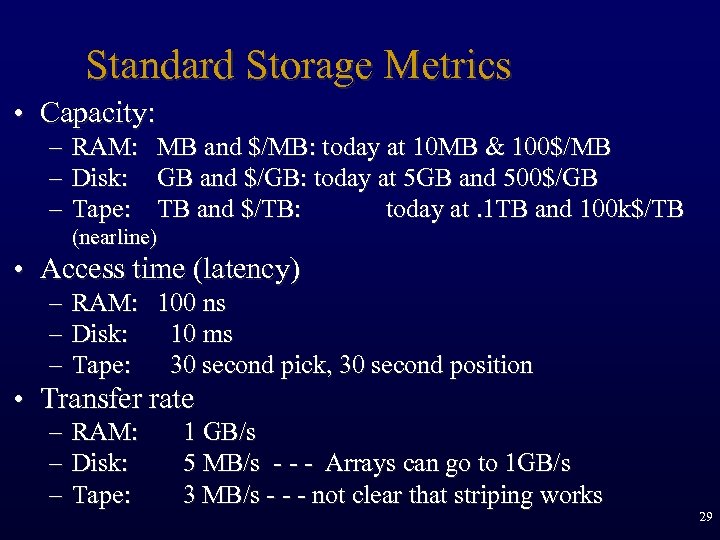

Standard Storage Metrics
- Capacity:
  - RAM: MB and $/MB: today at 10 MB & 100$/MB
  - Disk: GB and $/GB: today at 5 GB and 500$/GB
  - Tape: TB and $/TB: today at 0.1 TB and 100 k$/TB (nearline)
- Access time (latency):
  - RAM: 100 ns
  - Disk: 10 ms
  - Tape: 30 second pick, 30 second position
- Transfer rate:
  - RAM: 1 GB/s
  - Disk: 5 MB/s (arrays can go to 1 GB/s)
  - Tape: 3 MB/s (not clear that striping works)

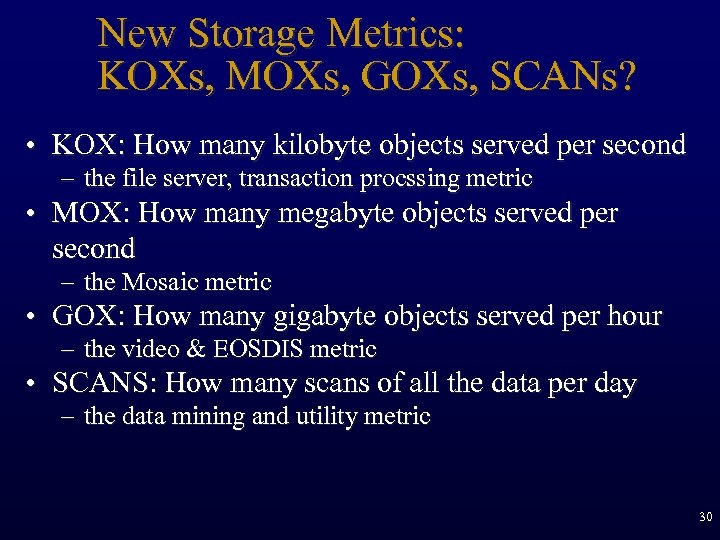

New Storage Metrics: KOXs, MOXs, GOXs, SCANs?
- KOX: how many kilobyte objects served per second
  - the file server, transaction processing metric
- MOX: how many megabyte objects served per second
  - the Mosaic metric
- GOX: how many gigabyte objects served per hour
  - the video & EOSDIS metric
- SCANS: how many scans of all the data per day
  - the data mining and utility metric
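
A simple one-request-at-a-time model (our assumption, not the slide's): each object costs one access latency plus its size divided by the transfer rate. The device figures come from the standard storage metrics slide (disk: 10 ms, 5 MB/s, 5 GB; tape: 30 s pick plus 30 s position, 3 MB/s), with a 20 GB cartridge as on the media-myth slide.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    access_s: float        # latency before data starts to flow, seconds
    bandwidth_bps: float   # sustained transfer rate, bytes/second
    capacity_bytes: float

    def objects_per_second(self, object_bytes: float) -> float:
        """Objects served per second, one request at a time."""
        return 1.0 / (self.access_s + object_bytes / self.bandwidth_bps)

    def kox(self) -> float:
        return self.objects_per_second(1e3)

    def mox(self) -> float:
        return self.objects_per_second(1e6)

    def gox_per_hour(self) -> float:
        return 3_600 * self.objects_per_second(1e9)

    def scans_per_day(self) -> float:
        return 86_400 * self.bandwidth_bps / self.capacity_bytes


disk = Device("disk", 0.010, 5e6, 5e9)   # 10 ms, 5 MB/s, 5 GB
tape = Device("tape", 60.0, 3e6, 20e9)   # 30 s pick + 30 s position, 3 MB/s, 20 GB
for d in (disk, tape):
    print(f"{d.name}: KOX {d.kox():.3g}, MOX {d.mox():.3g}, "
          f"GOX/hr {d.gox_per_hour():.3g}, SCANS/day {d.scans_per_day():.3g}")
```

A single disc delivers about 98 KOX but under 5 MOX; latency-bound tape fares far better on SCANS than on MOX.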

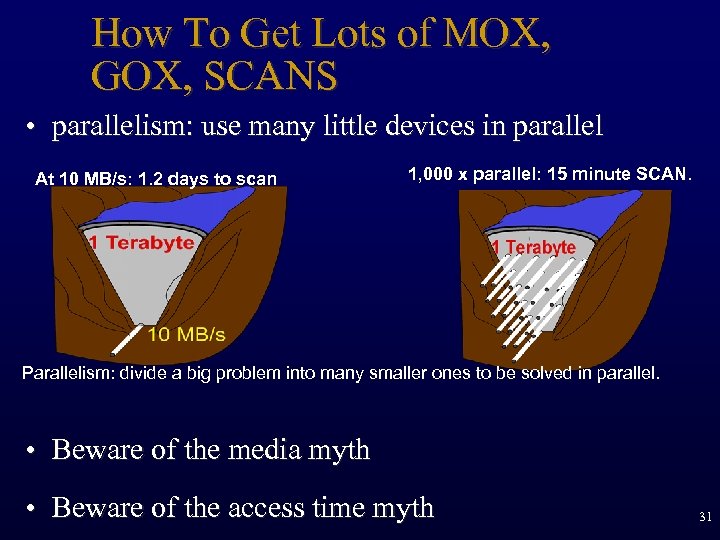

How to Get Lots of MOX, GOX, SCANS
- Parallelism: use many little devices in parallel
  - at 10 MB/s: 1.2 days to scan
  - 1,000x parallel: 1.5 minute SCAN
  - parallelism: divide a big problem into many smaller ones to be solved in parallel
- Beware of the media myth
- Beware of the access time myth
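
Scan time divides by the device count when the data is spread evenly. This sketch assumes a 1 TB archive (the size that makes 1.2 days at 10 MB/s work out) and perfect partitioning with no coordination overhead.

```python
def scan_seconds(archive_bytes: float, device_bps: float, devices: int = 1) -> float:
    """Time to read the whole archive with `devices` equal devices working in parallel."""
    return archive_bytes / (device_bps * devices)


one_device_days = scan_seconds(1e12, 10e6) / 86_400         # about 1.16 days
thousand_devices_min = scan_seconds(1e12, 10e6, 1_000) / 60  # about 1.7 minutes
print(f"{one_device_days:.2f} days vs {thousand_devices_min:.1f} minutes")
```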

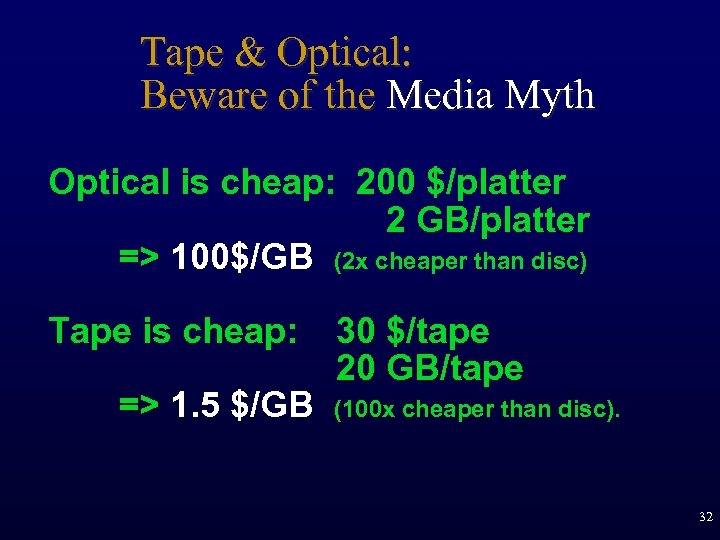

Tape & Optical: Beware of the Media Myth
- Optical is cheap: 200 $/platter, 2 GB/platter => 100 $/GB (2x cheaper than disc)
- Tape is cheap: 30 $/tape, 20 GB/tape => 1.5 $/GB (100x cheaper than disc)

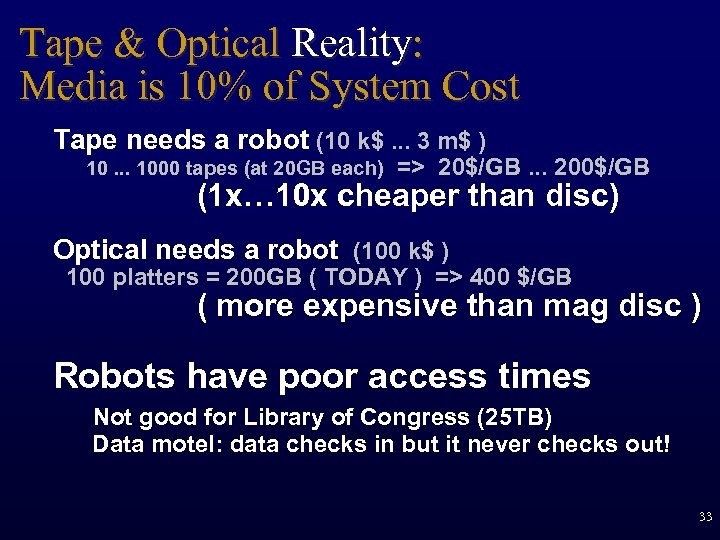

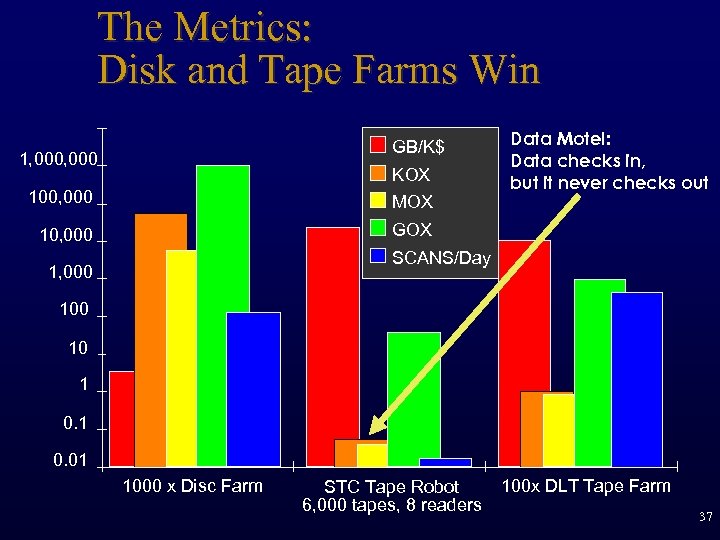

Tape & Optical Reality: Media Is 10% of System Cost
- Tape needs a robot (10 k$ ... 3 M$)
  - 10 ... 1000 tapes (at 20 GB each) => 20 $/GB ... 200 $/GB (1x ... 10x cheaper than disc)
- Optical needs a robot (100 k$)
  - 100 platters = 200 GB (TODAY) => 400 $/GB (more expensive than mag disc)
- Robots have poor access times
  - not good for Library of Congress (25 TB)
  - data motel: data checks in, but it never checks out!
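
Whole-system cost per gigabyte includes the robot, not just the media. The sketch uses the slide's robot prices and 20 GB tapes, plus the 30 $/tape media price from the media-myth slide; both tape configurations land inside the slide's 20...200 $/GB band.

```python
def system_dollars_per_gb(robot_usd: float, media_count: int,
                          media_usd: float, media_gb: float) -> float:
    """Whole-system $/GB: the robot plus its media, divided by total capacity."""
    return (robot_usd + media_count * media_usd) / (media_count * media_gb)


small_tape = system_dollars_per_gb(10e3, 10, 30, 20)    # 10 K$ robot, 10 tapes: 51.5 $/GB
big_tape = system_dollars_per_gb(3e6, 1_000, 30, 20)    # 3 M$ robot, 1,000 tapes: 151.5 $/GB
print(small_tape, big_tape)
```

Against the 1.5 $/GB media-only figure, the robot dominates the price.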

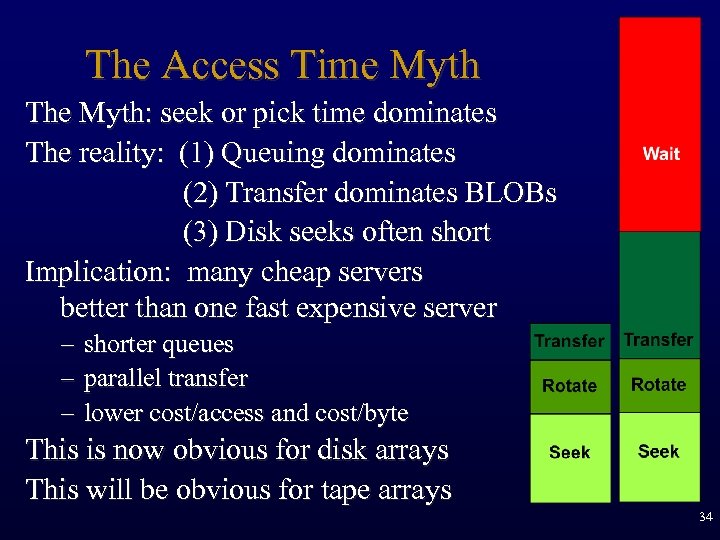

The Access Time Myth
- The myth: seek or pick time dominates
- The reality:
  1. queuing dominates
  2. transfer dominates BLOBs
  3. disk seeks are often short
- Implication: many cheap servers are better than one fast expensive server
  - shorter queues
  - parallel transfer
  - lower cost/access and cost/byte
- This is now obvious for disk arrays
- This will be obvious for tape arrays
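
To see why queuing, not seek time, dominates, here is a standard M/M/1 queue (Poisson arrivals, exponential service; our modeling choice, not the slide's). Mean response time is S/(1 - ρ) for service time S and utilization ρ, so a busy device spends most of each request waiting. Splitting the same load over ten independent devices cuts utilization and the queue with it. The 90 requests/s and 10 ms service time are hypothetical.

```python
def mm1_response(service_s: float, arrivals_per_s: float, servers: int = 1) -> float:
    """Mean response time when load is spread evenly over `servers` independent M/M/1 queues."""
    rho = (arrivals_per_s / servers) * service_s   # utilization of each server
    if rho >= 1.0:
        raise ValueError("offered load exceeds capacity")
    return service_s / (1.0 - rho)


one_device = mm1_response(0.010, 90)       # rho = 0.9: 100 ms, of which 90 ms is queueing
ten_devices = mm1_response(0.010, 90, 10)  # rho = 0.09: about 11 ms
print(f"{one_device * 1e3:.0f} ms vs {ten_devices * 1e3:.1f} ms")
```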

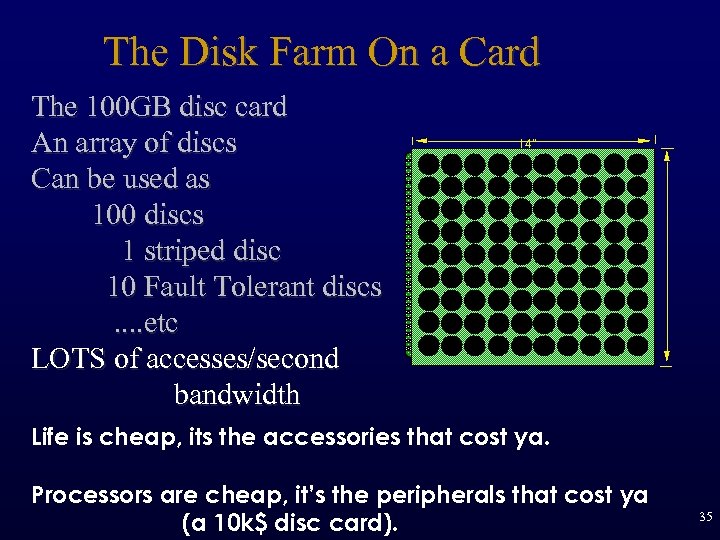

The Disk Farm on a Card
- The 100 GB disc card: an array of discs on a 14" card
- Can be used as 100 discs, 1 striped disc, 10 fault-tolerant discs, etc.
- LOTS of accesses/second and bandwidth
- Life is cheap, it's the accessories that cost ya.
- Processors are cheap, it's the peripherals that cost ya (a 10 k$ disc card).
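
One way the card can present its discs as "1 striped disc" is round-robin (RAID-0 style) striping. The sketch maps a logical block to a disc and an offset on that disc; the stripe unit, in blocks, is a hypothetical parameter, and the fault-tolerant configurations would add mirroring or parity on top.

```python
def stripe(logical_block: int, discs: int, stripe_blocks: int = 1) -> tuple[int, int]:
    """Map a logical block to (disc index, block on that disc) with round-robin striping."""
    stripe_no, within = divmod(logical_block, stripe_blocks)
    disc = stripe_no % discs          # consecutive stripes go to consecutive discs
    row = stripe_no // discs          # how many full rounds precede this stripe
    return disc, row * stripe_blocks + within


print([stripe(b, discs=4) for b in range(6)])
```

Sequential reads then touch every disc in turn, so bandwidth adds up, while independent small requests spread across discs and their accesses/second add up too.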

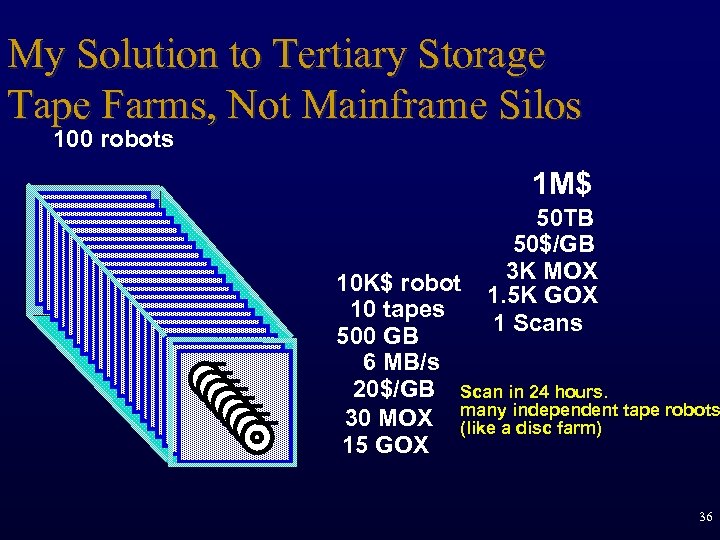

My Solution to Tertiary Storage: Tape Farms, Not Mainframe Silos
- Many independent tape robots (like a disc farm)

| | One robot | 100 robots |
|---|---|---|
| Cost | 10 K$ | 1 M$ |
| Tapes | 10 | |
| Capacity | 500 GB | 50 TB |
| Transfer rate | 6 MB/s | |
| $/GB | 20 $/GB | 50 $/GB |
| MOX | 30 | 3 K |
| GOX | 15 | 1.5 K |
| Scans | scan in 24 hours | 1 |
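
The farm column is the single-robot column scaled by 100, assuming the robots work independently. Scan time does not grow with the farm, because each robot reads only its own tapes: 500 GB at 6 MB/s is about 23 hours, the slide's "scan in 24 hours".

```python
ROBOT = {"cost_usd": 10e3, "capacity_gb": 500, "rate_mb_s": 6, "mox": 30, "gox": 15}


def farm(robots: int) -> dict[str, float]:
    """Aggregate metrics for `robots` independent robots, each scanning its own tapes."""
    hours_to_scan = ROBOT["capacity_gb"] * 1e3 / ROBOT["rate_mb_s"] / 3_600
    return {
        "cost_usd": robots * ROBOT["cost_usd"],
        "capacity_tb": robots * ROBOT["capacity_gb"] / 1e3,
        "mox": robots * ROBOT["mox"],
        "gox": robots * ROBOT["gox"],
        "hours_to_scan": hours_to_scan,  # same for any farm size: robots scan in parallel
    }


print(farm(1))
print(farm(100))
```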

The Metrics: Disk and Tape Farms Win
[Figure: log-scale bar chart (0.01 to 100,000) of GB/K$, KOX, MOX, GOX, and SCANS/day for a 1000x disc farm, an STC tape robot (6,000 tapes, 8 readers), and a 100x DLT tape farm; annotation: "Data Motel: data checks in, but it never checks out"]

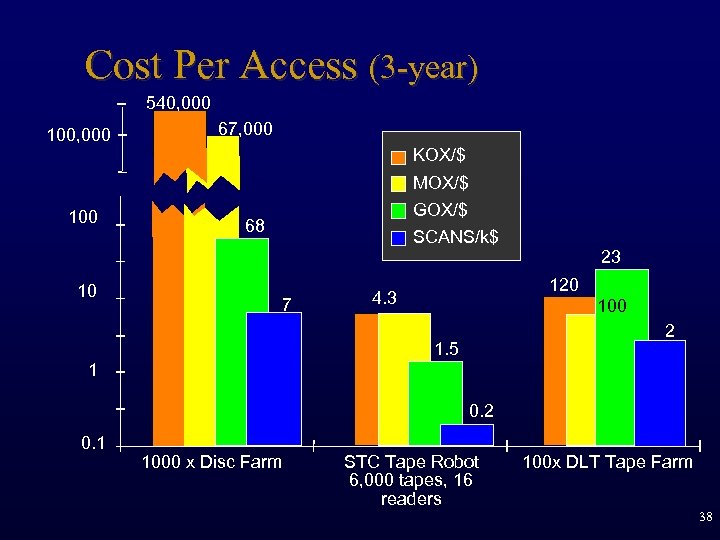

Cost Per Access (3-Year)
[Figure: log-scale bar chart of KOX/$, MOX/$, GOX/$, and SCANS/k$ for a 1000x disc farm, an STC tape robot (6,000 tapes, 16 readers), and a 100x DLT tape farm]

Summary (of New Ideas)
- Storage accesses are the bottleneck
- Accesses are getting larger (MOX, GOX, SCANS)
- Capacity and cost are improving
- BUT latencies and bandwidth are not improving much
- SO use parallel access (disk and tape farms)

MetaMessage: Technology Ratios Are Important
- If everything gets faster & cheaper at the same rate, nothing really changes.
- Some things getting MUCH BETTER:
  - communication speed & cost: 1,000x
  - processor speed & cost: 100x
  - storage size & cost: 100x
- Some things staying about the same:
  - speed of light (more or less constant)
  - people (10x worse)
  - storage speed (only 10x better)

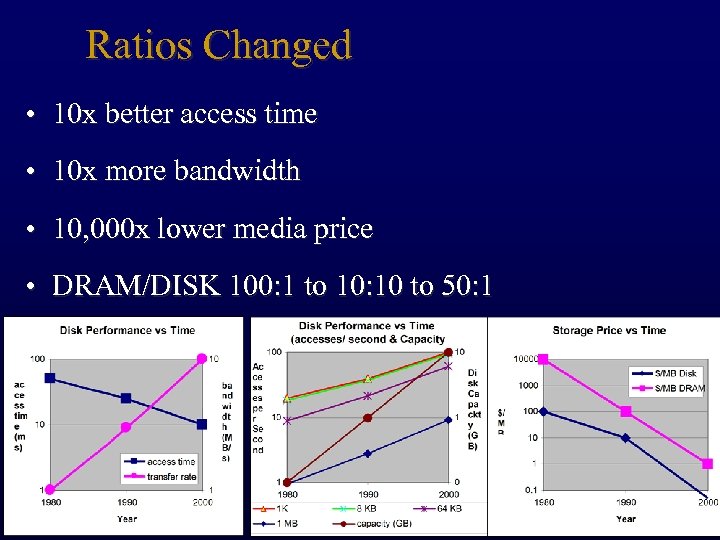

Ratios Changed
- 10x better access time
- 10x more bandwidth
- 10,000x lower media price
- DRAM/DISK: 100:1 to 10:1 to 50:1

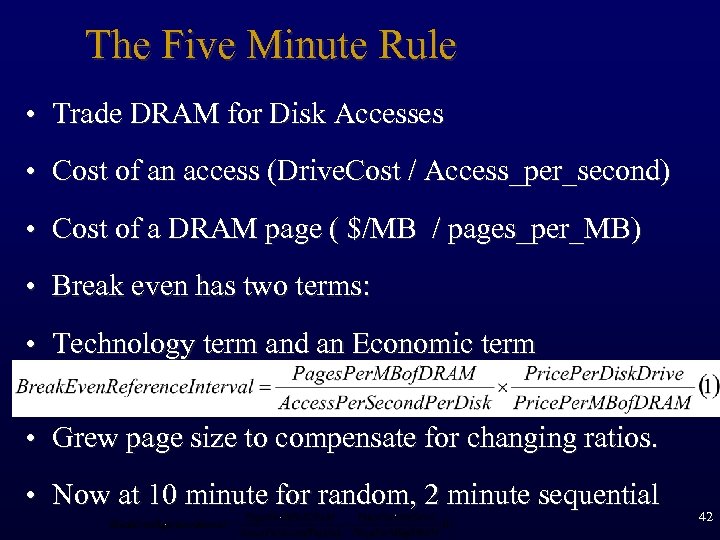

The Five-Minute Rule
- Trade DRAM for disk accesses
- Cost of an access: DriveCost / Accesses_per_second
- Cost of a DRAM page: ($/MB) / pages_per_MB
- Break-even has two terms: a technology term and an economic term
- Page size grew to compensate for changing ratios
- Now at 10 minutes for random access, 2 minutes for sequential
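
The two cost bullets combine into one break-even interval: the cost of an access divided by the cost of keeping a page in DRAM. That ratio factors into a technology term (pages per MB / accesses per second) and an economic term (drive price / DRAM price per MB), the form Gray and Graefe used. The 1997-style inputs below are illustrative assumptions, not figures from this talk.

```python
def break_even_seconds(drive_cost: float, accesses_per_s: float,
                       dram_cost_per_mb: float, pages_per_mb: float) -> float:
    """Re-reference interval below which caching a page in DRAM beats re-reading it."""
    technology = pages_per_mb / accesses_per_s   # depends on page size and disk speed
    economics = drive_cost / dram_cost_per_mb    # depends only on prices
    return technology * economics


# Hypothetical inputs: 8 KB pages (128 per MB), 64 random accesses/s,
# a 2,000$ drive, and DRAM at 15$/MB.
t = break_even_seconds(2_000, 64, 15, 128)   # about 267 s, roughly 4 to 5 minutes
print(f"{t:.0f} s")
```

A page re-referenced more often than every `t` seconds is cheaper to keep in DRAM. Larger pages mean fewer pages per MB, which shortens the interval; that is the lever the slide refers to when it says page size grew.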

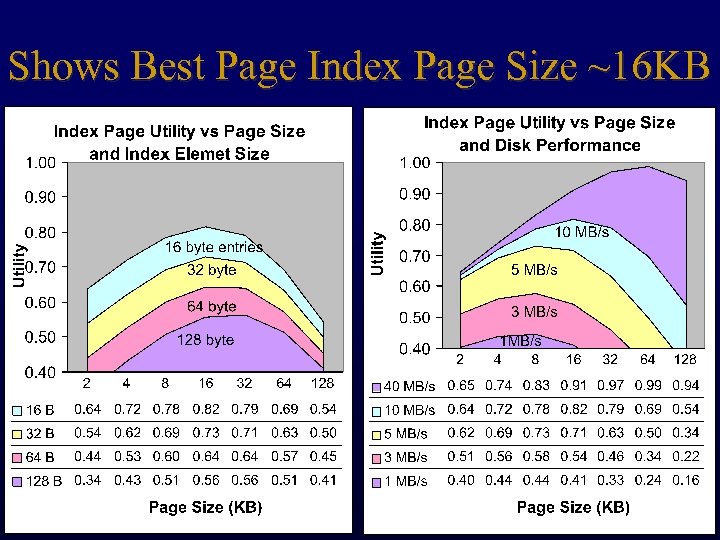

The chart shows the best index page size is ~16 KB.
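
The ~16 KB optimum comes from weighing what an index page buys (log2 of its fan-out, i.e. binary-search steps saved) against what it costs to read (latency plus transfer time). This follows the utility/cost formulation in Gray and Graefe's five-minute-rule revisit as we understand it; the 20-byte entry, 10 ms latency, and 10 MB/s transfer rate are hypothetical parameters chosen for illustration.

```python
import math


def index_page_benefit(page_bytes: int, entry_bytes: float = 20,
                       latency_s: float = 0.010, bandwidth_bps: float = 10e6) -> float:
    """Search steps saved per page read, divided by the time to read the page."""
    fanout = page_bytes / entry_bytes
    return math.log2(fanout) / (latency_s + page_bytes / bandwidth_bps)


sizes = [2 ** k for k in range(11, 18)]        # 2 KB .. 128 KB
best = max(sizes, key=index_page_benefit)      # 16384 with these parameters
print(best)
```

Small pages waste the fixed latency; large pages pay transfer time for fan-out that grows only logarithmically.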

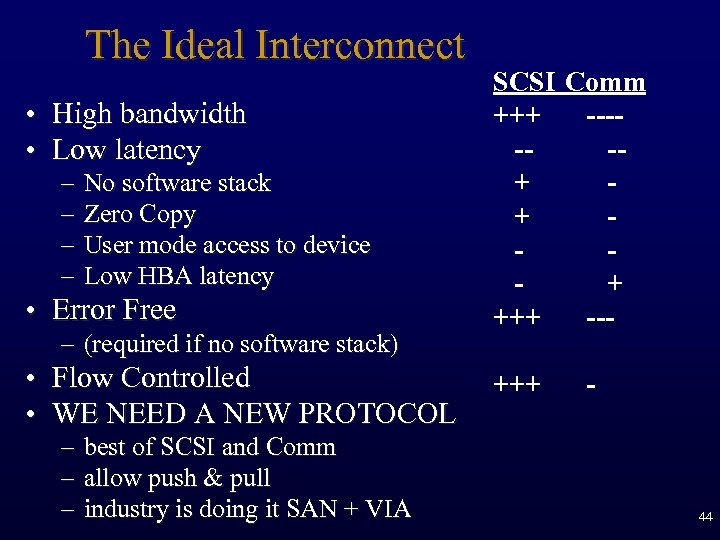

The Ideal Interconnect
- High bandwidth
- Low latency
  - no software stack
  - zero copy
  - user-mode access to device
  - low HBA latency
- Error free
  - (required if no software stack)
- Flow controlled
- WE NEED A NEW PROTOCOL
  - best of SCSI and Comm
  - allow push & pull
  - industry is doing it: SAN + VIA
[Table: SCSI vs. Comm rated on these properties with + and - marks]

Outline
- The challenge: building GIANT data stores
  - for example, the EOS/DIS 15 PB system
- Conclusion 1: think about MOX and SCANS
- Conclusion 2: think about clusters
  - SMP report
  - Cluster report

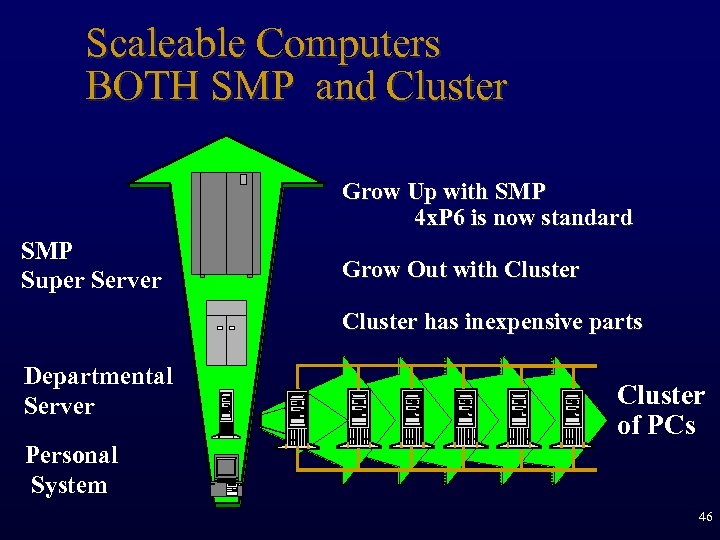

Scalable Computers: BOTH SMP and Cluster
- Grow up with SMP: 4xP6 is now standard
- Grow out with cluster: cluster has inexpensive parts
[Figure: personal system, departmental server, and SMP super server growing up; cluster of PCs growing out]

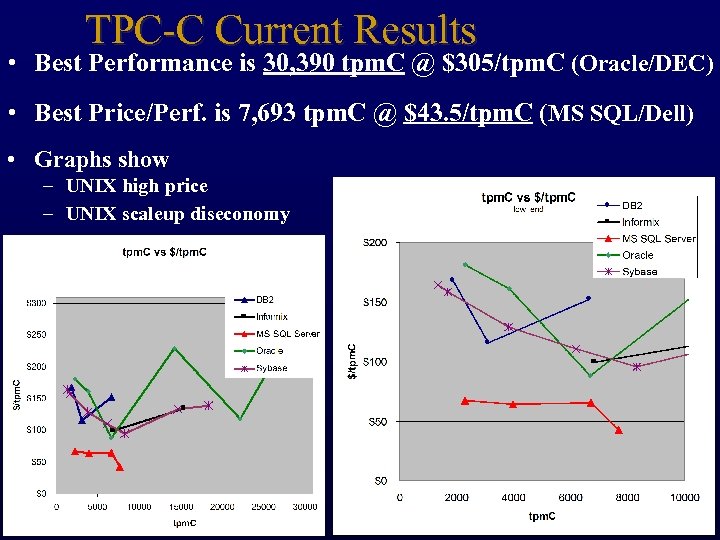

TPC-C Current Results
- Best performance is 30,390 tpmC @ $305/tpmC (Oracle/DEC)
- Best price/performance is 7,693 tpmC @ $43.5/tpmC (MS SQL/Dell)
- Graphs show:
  - UNIX high price
  - UNIX scaleup diseconomy
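
Multiplying throughput by price/performance recovers the approximate total system price behind each result, which makes the "UNIX high price" point concrete: about 4x the throughput for about 28x the price.

```python
RESULTS = {
    "Oracle/DEC": (30_390, 305.0),   # (tpmC, $/tpmC)
    "MS SQL/Dell": (7_693, 43.5),
}

system_price = {name: tpm * price for name, (tpm, price) in RESULTS.items()}
# Oracle/DEC: about 9.27 M$; MS SQL/Dell: about 335 K$
price_ratio = system_price["Oracle/DEC"] / system_price["MS SQL/Dell"]   # about 27.7
print(system_price, round(price_ratio, 1))
```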

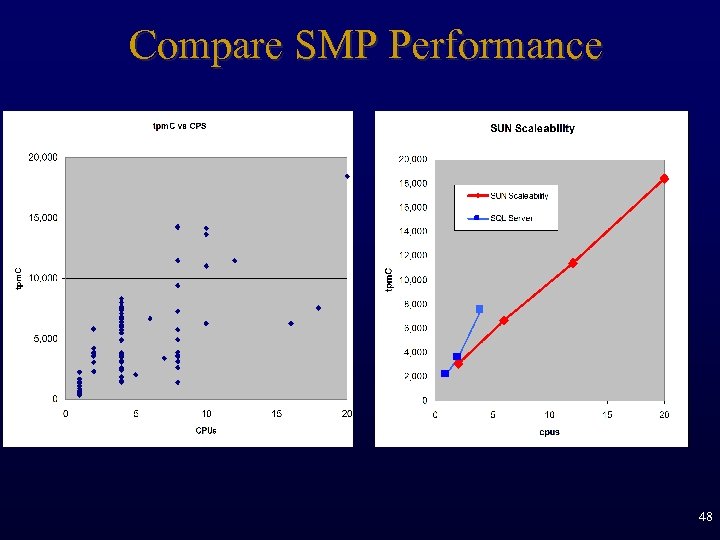

Compare SMP Performance

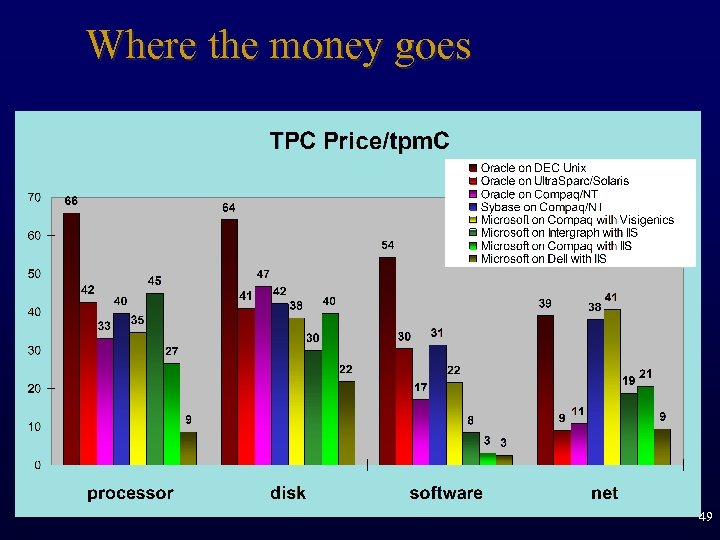

Where the Money Goes

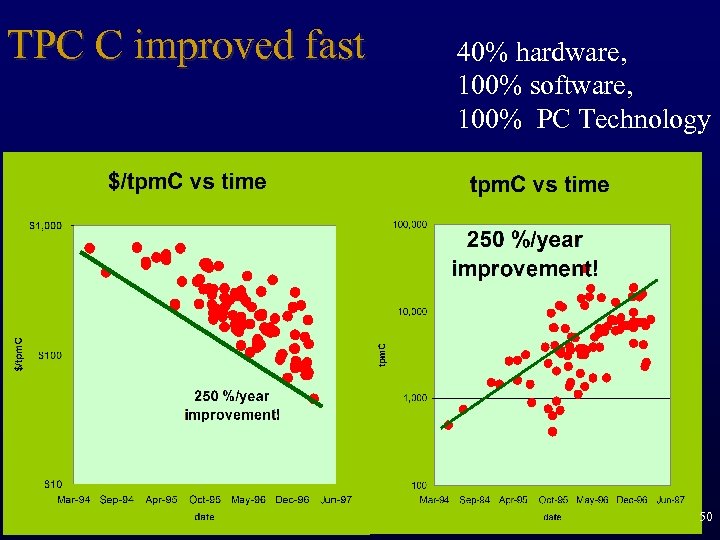

TPC-C improved fast: 40% hardware, 100% software, 100% PC technology

What Does This Mean?
- PC technology is 3x cheaper than high-end SMPs
- PC node performance is 1/2 that of high-end SMPs
  - 4xP6 vs. 20xUltraSparc
- Peak performance is a cluster
  - Tandem 100-node cluster
  - DEC Alpha 4x8 cluster
- Commodity solutions WILL come to this market

Clusters Being Built
- Teradata: 500 nodes (50 k$/slice)
- Tandem, VMScluster: 150 nodes (100 k$/slice)
- Intel: 9,000 nodes @ 55 M$ (6 k$/slice)
- Teradata, Tandem, DEC moving to NT + low slice price
- IBM: 512 nodes @ 100 M$ (200 k$/slice)
- PC clusters (bare handed) at dozens of nodes: web servers (msn, PointCast, …), DB servers
- KEY TECHNOLOGY HERE IS THE APPS
  - apps distribute data
  - apps distribute execution
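
Dividing total price by node count reproduces the per-slice figures for the two clusters whose totals are given; the spread is about 30x per slice.

```python
CLUSTERS = {
    "Intel": (9_000, 55e6),   # (nodes, total price in $)
    "IBM": (512, 100e6),
}

per_slice_k = {name: price / nodes / 1e3 for name, (nodes, price) in CLUSTERS.items()}
# Intel: about 6.1 K$ per slice; IBM: about 195 K$ per slice
print(per_slice_k)
```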

Cluster Advantages
- Clients and servers made from the same stuff
  - inexpensive: built with commodity components
- Fault tolerance
  - spare modules mask failures
- Modular growth
  - grow by adding small modules
- Parallel data search
  - use multiple processors and disks

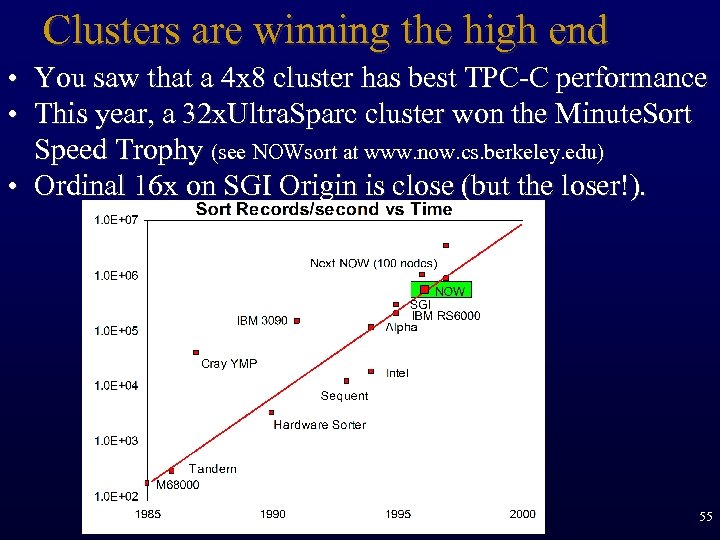

Clusters Are Winning the High End
- You saw that a 4x8 cluster has the best TPC-C performance
- This year, a 32xUltraSparc cluster won the MinuteSort Speed Trophy (see NOWsort at www.now.cs.berkeley.edu)
- Ordinal 16x on SGI Origin is close (but the loser!)

Clusters (Plumbing)
- Single system image
  - naming
  - protection/security
  - management/load balance
- Fault tolerance
  - Wolfpack demo
- Hot-pluggable hardware & software

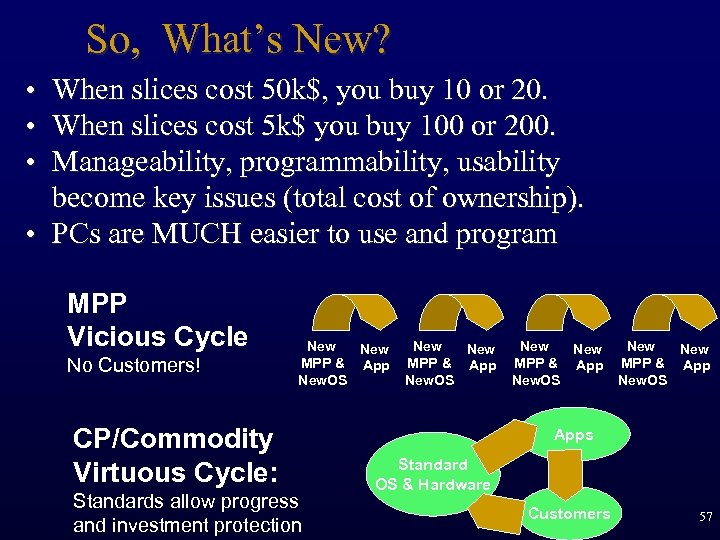

So, What's New?
- When slices cost 50 k$, you buy 10 or 20.
- When slices cost 5 k$, you buy 100 or 200.
- Manageability, programmability, and usability become key issues (total cost of ownership).
- PCs are MUCH easier to use and program.
[Figure: the MPP vicious cycle (new MPP & new OS, new app, no customers!) vs. the commodity virtuous cycle (standard OS & hardware, apps, customers); standards allow progress and investment protection]

Windows NT Server Clustering: High Availability on Standard Hardware
- Standard API for clusters on many platforms
- No special hardware required
- Resource group is the unit of failover
- Typical resources: shared disk, printer, ..., IP address, NetName, service (Web, SQL, File, Print, Mail, MTS, …)
- API to define resource groups, dependencies, resources; GUI administrative interface
- A consortium of 60 HW & SW vendors (everybody who is anybody)
- 2-node cluster in beta test now; available 97 H1
- >2 nodes is next
- SQL Server and Oracle demo on it today
- Key concepts:
  - System: a node
  - Cluster: systems working together
  - Resource: hardware/software module
  - Resource dependency: a resource needs another
  - Resource group: fails over as a unit
  - Dependencies: do not cross group boundaries
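
The key concepts fit together in a small model: resources depend on other resources in the same group, a group comes online in dependency order, and a failed node's groups move as whole units. This is a hypothetical toy, not the Windows NT cluster API; the resource names are illustrative and the node names come from the Wolfpack slide.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Resource:
    name: str
    group: str
    depends_on: list[str] = field(default_factory=list)


class Cluster:
    """Toy model of resource groups and failover (hypothetical, not the NT cluster API)."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = nodes
        self.resources: dict[str, Resource] = {}
        self.owner: dict[str, str] = {}  # group name -> node currently hosting it

    def add(self, res: Resource) -> None:
        """Add a resource; its dependencies must already exist and be in the same group."""
        for dep in res.depends_on:
            if self.resources[dep].group != res.group:
                raise ValueError(f"{res.name} -> {dep} crosses a group boundary")
        self.resources[res.name] = res
        self.owner.setdefault(res.group, self.nodes[0])

    def online_order(self, group: str) -> list[str]:
        """Bring-up order for one group: each resource after everything it depends on."""
        graph = {r.name: set(r.depends_on)
                 for r in self.resources.values() if r.group == group}
        return list(TopologicalSorter(graph).static_order())

    def fail_node(self, node: str) -> list[str]:
        """Move every group hosted on `node` to the first surviving node, as a unit."""
        survivors = [n for n in self.nodes if n != node]
        moved = [g for g, n in self.owner.items() if n == node]
        for g in moved:
            self.owner[g] = survivors[0]
        return moved


cluster = Cluster(["Alice", "Betty"])
cluster.add(Resource("shared disk", "sql"))
cluster.add(Resource("IP address", "sql"))
cluster.add(Resource("SQL service", "sql", ["shared disk", "IP address"]))
order = cluster.online_order("sql")
try:
    cluster.add(Resource("spooler", "print", ["shared disk"]))
    rejected = False
except ValueError:
    rejected = True
moved = cluster.fail_node("Alice")
print(order, rejected, moved, cluster.owner)
```

Keeping dependencies inside a group is what lets the group fail over as a unit: nothing outside it needs to move along.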

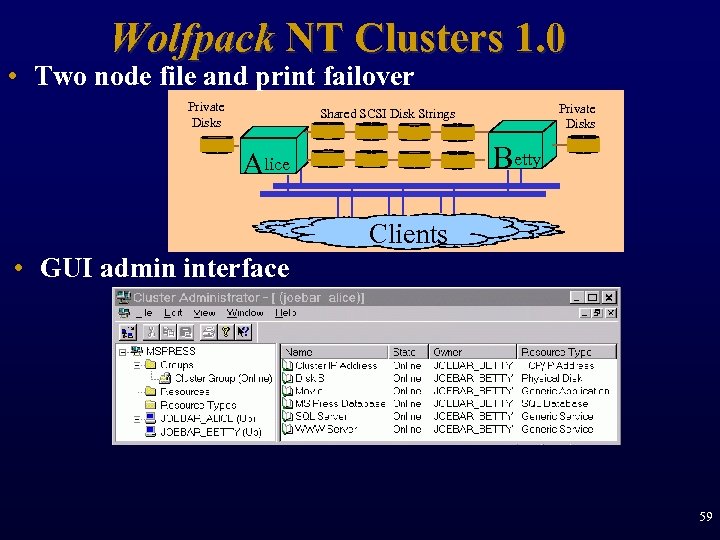

Wolfpack NT Clusters 1.0
- Two-node file and print failover
- GUI admin interface
[Figure: nodes Alice and Betty, each with private disks, sharing SCSI disk strings and serving clients]

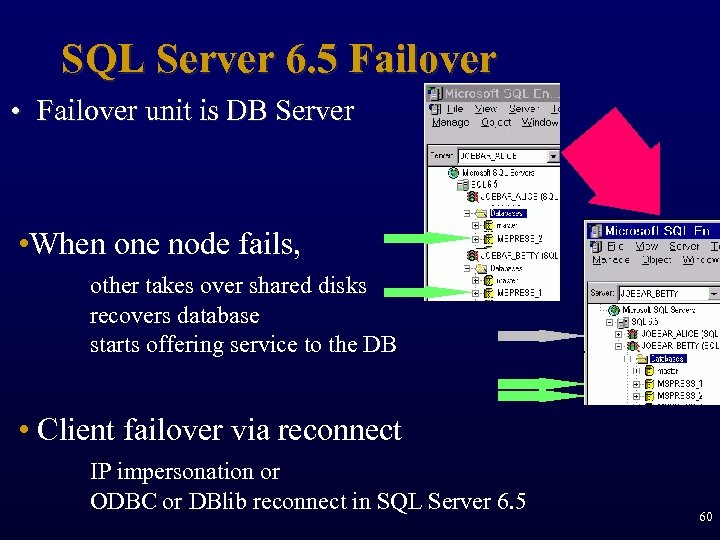

SQL Server 6.5 Failover
- Failover unit is the DB server
- When one node fails, the other:
  - takes over the shared disks
  - recovers the database
  - starts offering service to the DB
- Client failover via reconnect
  - IP impersonation, or ODBC or DBlib reconnect in SQL Server 6.5

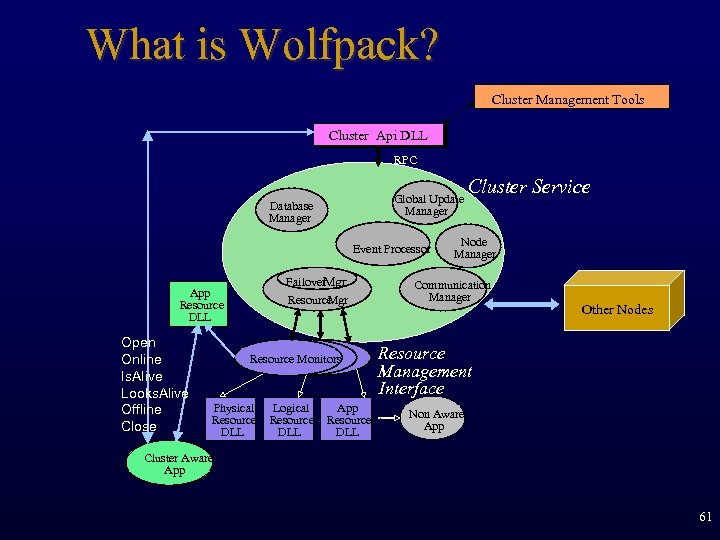

What Is Wolfpack?
[Figure: Wolfpack architecture. Cluster management tools call the Cluster API DLL, which talks over RPC to the Cluster Service (Global Update Manager, Database Manager, Event Processor, Failover Manager, Resource Manager, Node Manager, and Communication Manager linking to other nodes). Resource Monitors host physical, logical, and app resource DLLs through the Resource Management Interface (Open, Online, IsAlive, LooksAlive, Offline, Close); both non-aware and cluster-aware apps appear as managed applications.]
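
The Resource Management Interface names six entry points. The sketch models them as a Python protocol and shows the two-tier health check the names suggest: a frequent, cheap LooksAlive and a periodic, thorough IsAlive. The polling schedule, the method spellings, and the demo resource are all our assumptions; in the real system these are functions exported by a resource DLL, not Python methods.

```python
from typing import Optional, Protocol


class ResourceDll(Protocol):
    """Entry points named on the slide, as Python stand-ins (not the real signatures)."""

    def open(self) -> None: ...
    def online(self) -> None: ...
    def looks_alive(self) -> bool: ...  # cheap check, run often
    def is_alive(self) -> bool: ...     # thorough check, run less often
    def offline(self) -> None: ...
    def close(self) -> None: ...


def monitor(res: ResourceDll, ticks: int, thorough_every: int = 5) -> Optional[int]:
    """Poll a resource; return the tick at which it was found dead, or None if it survived."""
    res.open()
    res.online()
    try:
        for tick in range(1, ticks + 1):
            if tick % thorough_every == 0:
                healthy = res.is_alive()
            else:
                healthy = res.looks_alive()
            if not healthy:
                return tick  # a real monitor would notify the failover manager here
        return None
    finally:
        res.offline()
        res.close()


class FlakyService:
    """Demo resource: the cheap check always passes, the thorough one always fails."""

    def __init__(self) -> None:
        self.closed = False

    def open(self) -> None:
        pass

    def online(self) -> None:
        pass

    def looks_alive(self) -> bool:
        return True

    def is_alive(self) -> bool:
        return False

    def offline(self) -> None:
        pass

    def close(self) -> None:
        self.closed = True


svc = FlakyService()
failed_at = monitor(svc, ticks=20)
print(failed_at)
```

The demo shows why both checks exist: the cheap check alone would never notice this failure, while the thorough check catches it at the first scheduled run.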

Where We Are Today
- Clusters moving fast
  - OLTP
  - Sort
  - WolfPack
- Technology ahead of schedule
  - cpus, disks, tapes, wires, ...
- OR databases are evolving
- Parallel DBMSs are evolving
- HSM still immature

