d6112368f0ea9c203ed42e21f3ed9ce4.ppt

- Number of slides: 41

Building Grids: If Everybody Else Is Doing It, Why Shouldn’t You?
Jay Boisseau, Texas Advanced Computing Center
SURA Grid Application Planning & Implementation Workshop, December 6–8, 2005

Outline
• Welcome!
• Overview of TACC (with Grid Computing Context)
• Some Perspectives on Grid Computing
• Closing Thoughts
• More

Overview of TACC (with Grid Computing Context)

TACC Mission To enhance knowledge discovery & education and to improve society through the application of advanced computing technologies.

TACC Strategic Approach
To accomplish this mission, TACC:
– Evaluates, acquires & operates advanced computing systems and software
– Provides documentation, consulting, and training to users of advanced computing resources
– Conducts R&D to produce new computational technologies & techniques that enhance advanced computing systems
– Collaborates with users to apply advanced computing techniques in their research, development, occupations, etc.
– Educates the community to broaden and deepen the pipeline of talented persons choosing careers in advanced computing
– Informs society about the value of advanced computing technologies in improving knowledge and quality of life
(Activity groups: Resources & Services; Research & Development; PR & EOT)

TACC Advanced Computing Technology Areas
• High Performance Computing (HPC)
• Visualization & Data Analysis (VDA)
• Data & Information Systems (DIS)
• Distributed & Grid Computing (DGC)
  – newest area of R&D, resources, and services at TACC
  – “tying it all together”

TACC Advanced Computing Applications Focus Areas
• Computational Geosciences
  – World-class expertise, programs at UT Austin
  – Strategic to state of Texas
• Computational Life Sciences
  – Broad & deep expertise in Texas higher ed institutions
  – Important to society
• Emergency Situation Assessment & Response
  – Crucial to life, property
  – Leverages TACC expertise, resources, and applications
• Each has need for resource sharing & coordination, workflow, and data/instrument integration: grid computing

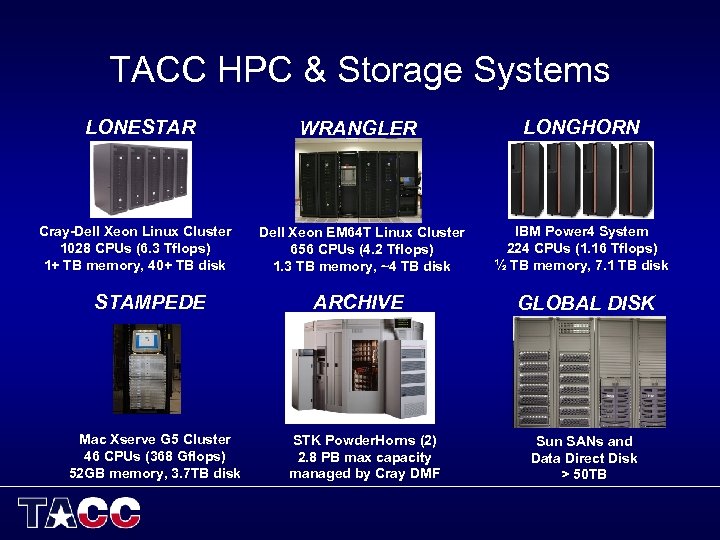

TACC HPC & Storage Systems
• LONESTAR: Cray-Dell Xeon Linux cluster, 1028 CPUs (6.3 Tflops), 1+ TB memory, 40+ TB disk
• STAMPEDE: Mac Xserve G5 cluster, 46 CPUs (368 Gflops), 52 GB memory, 3.7 TB disk
• WRANGLER: Dell Xeon EM64T Linux cluster, 656 CPUs (4.2 Tflops), 1.3 TB memory, ~4 TB disk
• LONGHORN: IBM Power4 system, 224 CPUs (1.16 Tflops), ½ TB memory, 7.1 TB disk
• ARCHIVE: STK PowderHorns (2), 2.8 PB max capacity, managed by Cray DMF
• GLOBAL DISK: Sun SANs and DataDirect disk, > 50 TB

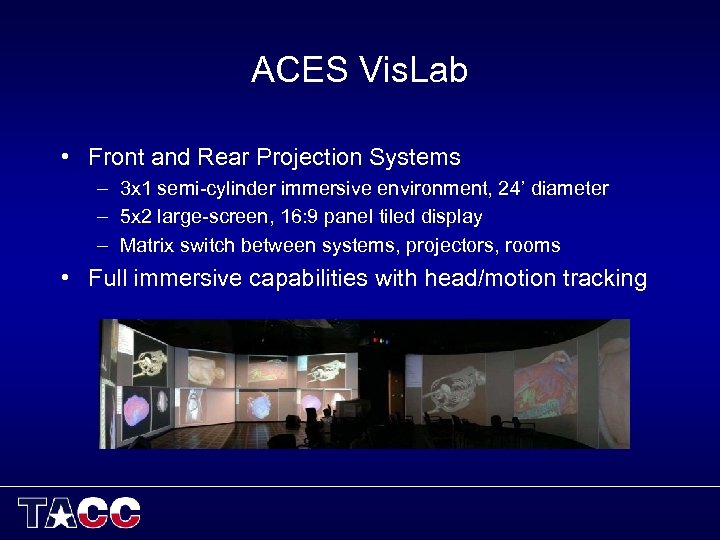

ACES VisLab
• Front and rear projection systems
  – 3 x 1 semi-cylinder immersive environment, 24’ diameter
  – 5 x 2 large-screen, 16:9 panel tiled display
  – Matrix switch between systems, projectors, rooms
• Full immersive capabilities with head/motion tracking

TACC Advanced Visualization Systems
• Sun Terascale Visualization System
  – 128 UltraSPARC IV cores, ½ TB memory
  – 16 commodity graphics cards, > 3 Gpoly/sec
  – Remote to VisLab; very remote to TeraGrid!
• SGI Onyx2
  – 24 CPUs, 6 InfiniteReality2 graphics pipes
  – 25 GB memory, 356 GB disk

TACC Network Connectivity
• Intercampus bandwidth
  – Force10 switch/routers with 1.2 Tbps backplane in TACC machine room and ACES building
  – 10 Gbps between TACC machine room and ACES provided by Nortel DWDM (waiting for 10GigE cards)
• WAN network upgrades
  – UT Internet2 at OC-12
  – TeraGrid connection at 10 Gbps
  – New Lonestar Education And Research Network (LEARN) being built for Texas universities
  – Texas joining National LambdaRail (10 Gbps waves)
• High-bandwidth networks (local and national) to facilitate resource sharing, coordination, data flow…
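To put the link speeds above in perspective, here is a back-of-the-envelope transfer time for a 1 TB dataset. The 1 TB size is an illustrative assumption, not a figure from the slides; the sketch takes 1 TB = 10^12 bytes and ideal line rate, ignoring protocol overhead, contention, and disk limits (OC-12 line rate is 622.08 Mbps):

```latex
\begin{aligned}
S &= 1\,\text{TB} = 8 \times 10^{12}\ \text{bits}, \qquad t = \frac{S}{B} \\
t_{10\,\text{Gbps}} &= \frac{8 \times 10^{12}\ \text{bits}}{10^{10}\ \text{bits/s}} = 800\ \text{s} \approx 13\ \text{min} \\
t_{\text{OC-12}} &= \frac{8 \times 10^{12}\ \text{bits}}{6.2208 \times 10^{8}\ \text{bits/s}} \approx 1.29 \times 10^{4}\ \text{s} \approx 3.6\ \text{h}
\end{aligned}
```

Real throughput is lower in both cases, but the roughly 16x ratio between the two links is what matters for moving terascale data.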

TACC R&D – Distributed & Grid Computing
• Web-based grid portals
  – GridPort, TeraGrid User Portal, SURA portal, TIGRE portal
• Grid resource data collection & information services
  – GPIR
• Overall grid deployment and integration
  – UT Grid, TeraGrid, TIGRE, OSG, SURA
• Grid scheduling and workflow tools
  – GridShell, MyCluster, Metascheduling Prediction Services
• Remote and collaborative grid-enabled visualization
  – For TeraGrid, UT Grid
• Network performance for moving terascale data

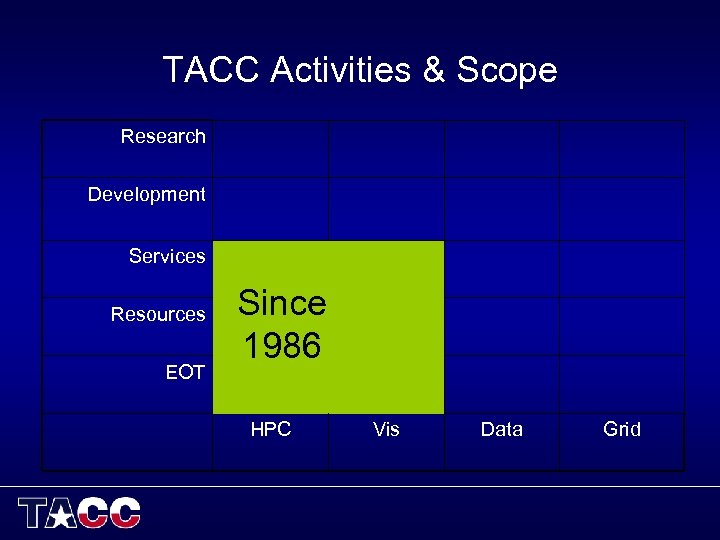

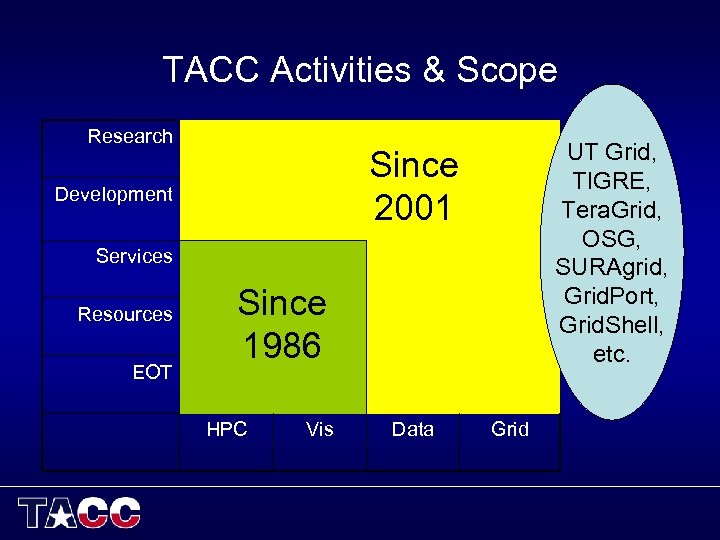

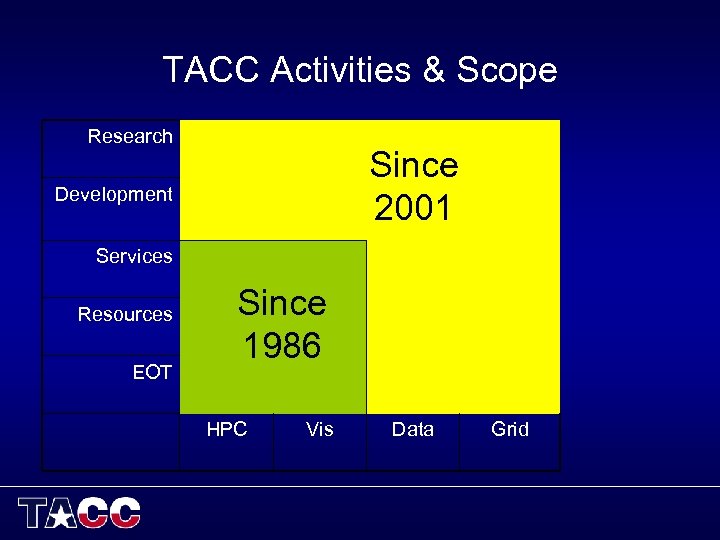

TACC Activities & Scope
[Diagram: activities (Research, Development, Services, Resources, EOT) across technology areas (HPC, Vis, Data, Grid), with timeline labels “Since 1986” and “Since 2001”. Grid work shown: UT Grid, TIGRE, TeraGrid, OSG, SURAgrid, GridPort, GridShell, etc.]

TACC Today

TACC Tomorrow

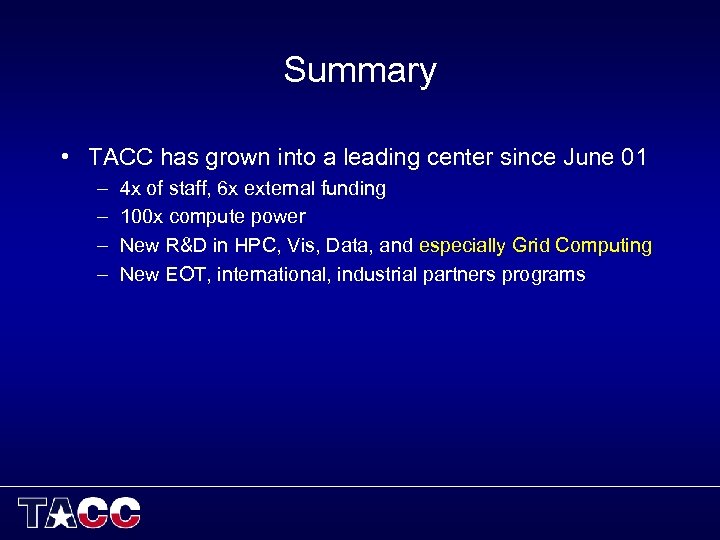

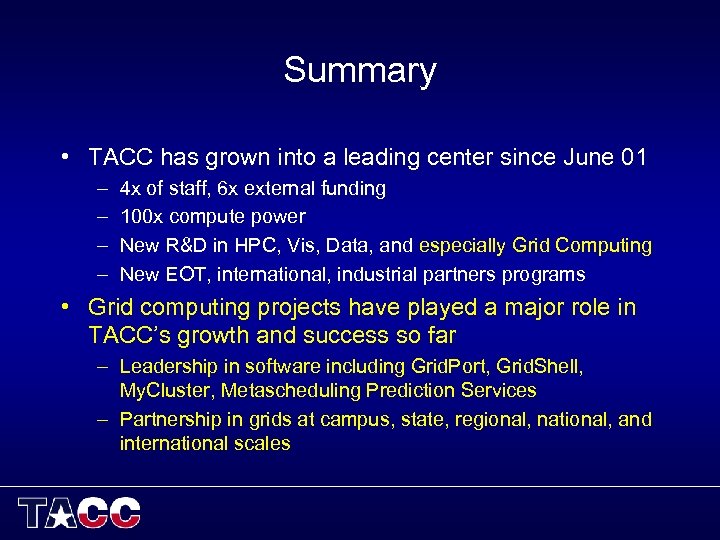

Summary
• TACC has grown into a leading center since June 2001
  – 4x staff, 6x external funding
  – 100x compute power
  – New R&D in HPC, Vis, Data, and especially Grid Computing
  – New EOT, international, and industrial partners programs
• Grid computing projects have played a major role in TACC’s growth and success so far
  – Leadership in software including GridPort, GridShell, MyCluster, Metascheduling Prediction Services
  – Partnership in grids at campus, state, regional, national, and international scales

Some Perspectives on Grid Computing

Researchers Already Use Distributed Computing: Case Is Already Made!
• Researchers already use distributed systems:
  – Local workstations for some development, small simulations
  – HPC at big centers
  – Visualization back in their lab or in a VisLab
  – Archival storage to SANs, NASes, tape silos, etc.
• Researchers already collaborate with peers at other institutions: science is collaborative!
• Grids should enable resource sharing, collaboration, etc. with
  – Greater ease
  – More flexibility
  – More capability

Or in English… “There are talented people everywhere in the world focused on solving the most challenging problems, and there are companies everywhere determined to provide the best products as efficiently as possible… people WILL collaborate and learn to share resources, as well as ideas and data, in order to ‘be first’ … people have been using distributed resources for decades, and this is only increasing… Grid computing to me is the subset of distributed computing that makes it easier… So, ‘Grid computing’ is here today and will remain important, by whatever name you want to call it. ” -- me in GRIDtoday 12/05/05

Grid Computing: My View
• Grid computing is a standard, ‘complete’ set of distributed computing software capabilities
• Grid computing must provide some basic functions:
  – resource discovery and information collection & publishing
  – data management on and between resources
  – process management on and between resources
  – a common security mechanism underlying the above
• No grid computing package provides everything
• Example: the Open Grid Services Architecture (OGSA) (e.g., as implemented in Globus v4) makes it possible to build the components and make them work together
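The first basic function above, resource discovery with information collection & publishing, can be illustrated with a toy in-memory registry. This is a minimal sketch, not GPIR or any Globus component: the `Resource` and `InformationService` classes, their fields, and the sample records (`cluster-a`, `cluster-b`, `smp-c`, loosely modeled on CPU and memory figures from the HPC systems slide) are all hypothetical. Resources publish records about themselves; clients discover the ones that meet their requirements.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Resource:
    """Hypothetical record that a resource publishes about itself."""
    name: str
    kind: str          # e.g. "cluster" or "smp"
    cpus: int
    memory_tb: float


@dataclass
class InformationService:
    """Toy registry: resources publish records, clients discover matches."""
    records: dict[str, Resource] = field(default_factory=dict)

    def publish(self, resource: Resource) -> None:
        # Publishing again under the same name replaces the old record.
        self.records[resource.name] = resource

    def discover(self, min_cpus: int = 0, min_memory_tb: float = 0.0) -> list[str]:
        # Names of resources meeting every requirement, largest CPU count first.
        matches = [
            r for r in self.records.values()
            if r.cpus >= min_cpus and r.memory_tb >= min_memory_tb
        ]
        matches.sort(key=lambda r: r.cpus, reverse=True)
        return [r.name for r in matches]


# Hypothetical sample records.
svc = InformationService()
svc.publish(Resource("cluster-a", "cluster", 1028, 1.0))
svc.publish(Resource("cluster-b", "cluster", 656, 1.3))
svc.publish(Resource("smp-c", "smp", 224, 0.5))

print(svc.discover(min_cpus=500))        # ['cluster-a', 'cluster-b']
print(svc.discover(min_memory_tb=1.2))   # ['cluster-b']
```

A real grid information service would also have to handle record expiry, security, and distribution across sites; the sketch keeps only the publish/discover contract.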

Grid Computing: My View
• TACC focuses on grid computing to
  – enhance our HPC, SciVis, and massive data storage
  – integrate researchers’ local computing systems with ours
  – eventually, integrate research instruments for research that also requires HPC, SciVis, and massive data storage

So TACC Drank The Grid Kool-Aid
• What grids are we participating in?
  – UT Grid: campus-scale
  – TIGRE: state
  – SURA Grid: regional
  – TeraGrid: national
  – Open Science Grid: international
  – And we’re building grid tools to provide capabilities for/in these grids
• Why are we participating in these grids? Some examples will answer that question…

UT Grid: Enable Campus-Wide Terascale Distributed Computing
• Why build it? To move from an ‘island’ of high-end resources to the ‘hub’ of a campus computing continuum
  – provide models for local resources (clusters, vislabs, etc.), training, and documentation
  – develop procedures for integrating local systems into UT Grid
    • single sign-on, data space, compute space
    • leverage every PC, cluster, NAS, etc. on campus!
  – integrate digital assets into UT Grid
  – integrate UT instruments & sensors into UT Grid
  – provide user portals and login nodes to access and use all campus resources!

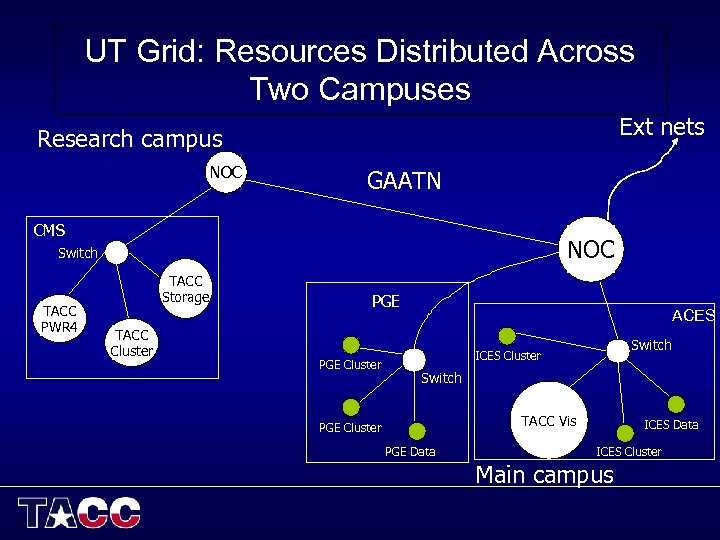

UT Grid: Resources Distributed Across Two Campuses
[Network diagram. Labels: Research campus, Main campus, Ext nets, NOC, GAATN, CMS NOC, ACES switch; resources: TACC PWR4, TACC Storage, TACC Cluster, TACC Vis, PGE Cluster (x2), PGE Data, ICES Cluster (x2).]

UT Grid Status
• First 20 months:
  – Deployed production United Devices ‘grid’ (Roundup)
  – Deployed production Condor pool, integrated with other pools (Rodeo)
  – Developed GridPort v4, GridShell v1
  – Building user portal, downloadable client software stack
  – More to come… (see tomorrow’s talk)

TIGRE: Texas Internet Grid for Research & Education
• Why build it? Help Texas universities & medical centers work together to share resources and advance Texas research, education, and economy
• 2-year project, $2.5M
  – But took 2+ years to get funding!
• 5 funded participants
  – Rice University
  – Texas Tech University
  – Texas A&M
  – University of Houston
  – University of Texas

TIGRE: Texas Internet Grid for Research & Education
• Develop, document, and deploy a grid across the 5 participants
  – Supporting driving applications
• Enable other LEARN members to join TIGRE
  – Package grid software so that others can easily install it
  – Provide good documentation
  – Ensure that it’s easy, lightweight
  – Make it modular: enable institutions to provide just what they can offer
• NOTE: Companion project (LEARN) will provide a high-bandwidth network for use by TIGRE and other Texas institutions

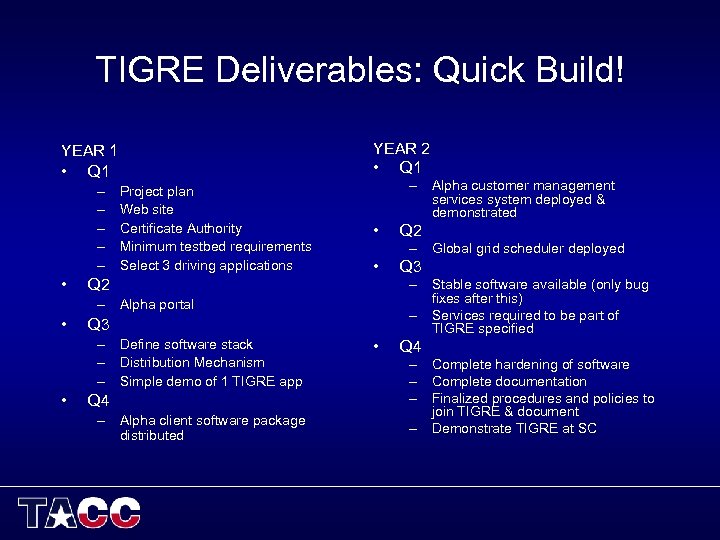

TIGRE Deliverables: Quick Build!
YEAR 1
• Q1
  – Project plan
  – Web site
  – Certificate Authority
  – Minimum testbed requirements
  – Select 3 driving applications
• Q2–Q3
  – Define software stack
  – Distribution mechanism
  – Simple demo of 1 TIGRE app
• Q4
  – Alpha client software package distributed
YEAR 2
• Q1–Q2
  – Alpha customer management services system deployed & demonstrated
  – Global grid scheduler deployed
• Q3
  – Stable software available (only bug fixes after this)
  – Services required to be part of TIGRE specified
  – Alpha portal
• Q4
  – Complete hardening of software
  – Complete documentation
  – Finalized procedures and policies to join TIGRE & document
  – Demonstrate TIGRE at SC

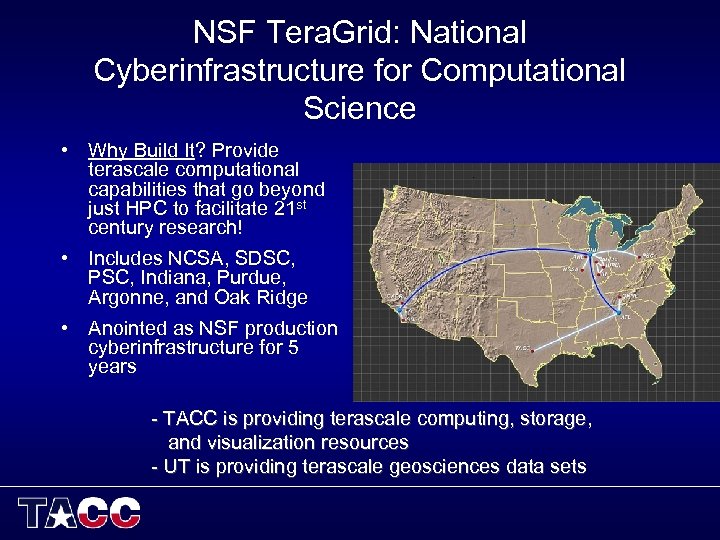

NSF TeraGrid: National Cyberinfrastructure for Computational Science
• Why build it? Provide terascale computational capabilities that go beyond just HPC to facilitate 21st-century research!
• Includes NCSA, SDSC, PSC, Indiana, Purdue, Argonne, and Oak Ridge
• Anointed as NSF production cyberinfrastructure for 5 years
  – TACC is providing terascale computing, storage, and visualization resources
  – UT is providing terascale geosciences data sets

Closing Thoughts

So Should You Or Shouldn’t You?
• Grid computing is here to stay, by one name or another…
  – The possibilities are too great
  – The needs are too great
• But it’s not always needed
  – Simple solutions, powerful tools, and sharp minds get answers
  – Grids can maximize collaboration, but can also keep people from working on the real problem
• Get user requirements and THINK!
  – What is needed? What is overkill?
  – Use mature technologies unless doing grid R&D
  – Use the minimum subset to meet requirements; build on successes incrementally

To Build Useful Grids, Software Must Be:
• Easier
  – No more difficult than CLIs for ‘power users’
  – No more difficult than Web/PC apps for the other 99% of (potential) users (portals, desktop apps, etc.)
  – No more difficult than configuring an office network for admins
• Smarter
  – Smart scheduling, data transfers, workflow
  – Built-in help/advice, like PC apps and portals

To Build Useful Grids, Software Must Be:
• More robust
  – Must not break more often than the individual resources
  – Opportunity is to break less often than any individual resource (but only partially successful so far)
• And standards-based & interoperable
  – Web services, etc.
• So lots of opportunities for us geeks!
  – But let’s not lose sight of the forest for the trees!

Finally, Enjoy Your Time Here While You Learn
• Austin is Fun, Cool, Weird, & Wonderful
  – Mix of hippies, slackers, academics, geeks, politicos, musicians, filmmakers, artists, and even a few cowboys
  – “Keep Austin Weird” is the official slogan
  – Live Music Capital of the World (seriously)
• Also great restaurants, cafes, clubs, bars, theaters, galleries, museums, etc.
  – http://www.austinchronicle.com/
  – http://www.austin360.com/xl/content/xl/index.html
  – http://www.research.ibm.com/arl/austin/index.html (!)

Your Austin To-Do List
• Eat barbecue at Rudy’s, Stubb’s, Iron Works, Green Mesquite, etc.
• Eat Tex-Mex at Chuy’s, Trudy’s, Maudie’s, etc.
• Have a cold Shiner Bock (but not Lone Star)
• Visit 6th Street and Warehouse District at night
• Go to at least one live music show
• Learn to two-step at The Broken Spoke
• Visit the Texas State History Museum
• Walk/jog/bike around Town Lake
• Visit the UT main campus and the ACES VisLab
• See a movie at Alamo Drafthouse Cinema (arrive early, order beer & food)
• Eat Amy’s Ice Cream
• Listen to and buy local music at Waterloo Records
• Buy a bottle each of Rudy’s Barbecue ‘Sause’ and Tito’s Vodka
• Drive into the Hill Country, visit small towns and wineries
• See sketch comedy at Esther’s Follies
• See a million bats emerge from Congress Ave. bridge at sunset

Welcome to TACC and Austin, Y’all!
