4607b64ad719e887cff21fe3959d22dc.ppt

- Number of slides: 16

Bootstrapping language models for dialogue systems
Karl Weilhammer, Matthew N. Stuttle, Steve Young
Presenter: Hsuan-Sheng Chiu

Introduction
• Poor speech recognition performance is often described as the single most important factor prohibiting the wider use of spoken dialogue systems
• Many dialogue systems use recognition grammars instead of statistical language models (SLMs)
• This paper compares the standard grammar-based approach with SLMs trained on different corpora

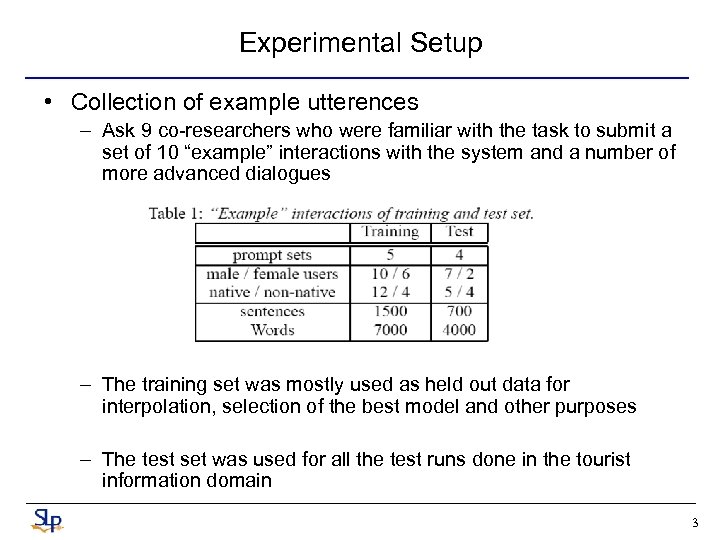

Experimental Setup
• Collection of example utterances
  – 9 co-researchers who were familiar with the task were asked to submit a set of 10 “example” interactions with the system and a number of more advanced dialogues
  – The training set was mostly used as held-out data for interpolation, selection of the best model and other purposes
  – The test set was used for all test runs in the tourist information domain

Experimental Setup (cont.)
• Generation and recognition grammar
  – A simple HTK grammar consisting of around 80 rules in EBNF was written and used in two ways
    • It was converted into a word network (16996 nodes and 40776 transitions)
    • A corpus of random sentences was generated from it
  – The task grammar was structured as follows
    1. Task-specific semantic concepts (prices, hotel names, …)
    2. General concepts (local relations, numbers, dates, …)
    3. Query predicates (Want, Find, Exists, Select, …)
    4. Basic phrases (Yes, No, DontMind, Grumble, …)
    5. List of sub-grammars for user answers to all prompts
    6. Main grammar
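The idea of generating a random sentence corpus from a grammar can be sketched in a few lines of Python. This is only an illustration: the toy grammar below is hypothetical (it is not the 80-rule HTK grammar from the slides), and the names `GRAMMAR`, `generate` and `generate_corpus` are assumptions made for this sketch.

```python
import random

# Hypothetical toy grammar, NOT the HTK grammar described in the slides.
# Keys are non-terminals; each maps to a list of alternative expansions.
GRAMMAR = {
    "MAIN": [["WANT", "PLACE"], ["YES_NO"]],
    "WANT": [["i", "want"], ["find", "me"]],
    "PLACE": [["a", "hotel"], ["a", "cheap", "restaurant"]],
    "YES_NO": [["yes"], ["no"]],
}


def generate(symbol, rng):
    """Expand a symbol by choosing one alternative uniformly at random."""
    if symbol not in GRAMMAR:  # anything that is not a rule name is a word
        return [symbol]
    words = []
    for part in rng.choice(GRAMMAR[symbol]):
        words.extend(generate(part, rng))
    return words


def generate_corpus(n_sentences, seed=0):
    """Generate n_sentences random sentences starting from MAIN."""
    rng = random.Random(seed)
    return [" ".join(generate("MAIN", rng)) for _ in range(n_sentences)]


corpus = generate_corpus(1000)
```

With uniform choices at every rule, a one-word answer such as "yes" is drawn with probability 1/4 in this toy grammar, while each longer request has probability 1/8, so short sentences are repeated more often in the generated corpus.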

Acoustic Models
• Use the trigram decoder in the Application Toolkit for HTK
• Acoustic models:
  – Training: WSJCAM0, internal triphone models (92 speakers of British English, 7900 read sentences, 130k words)
  – Adaptation: SACTI (43 users, 3000 sentences, 20k words)
  – MAP and HLDA

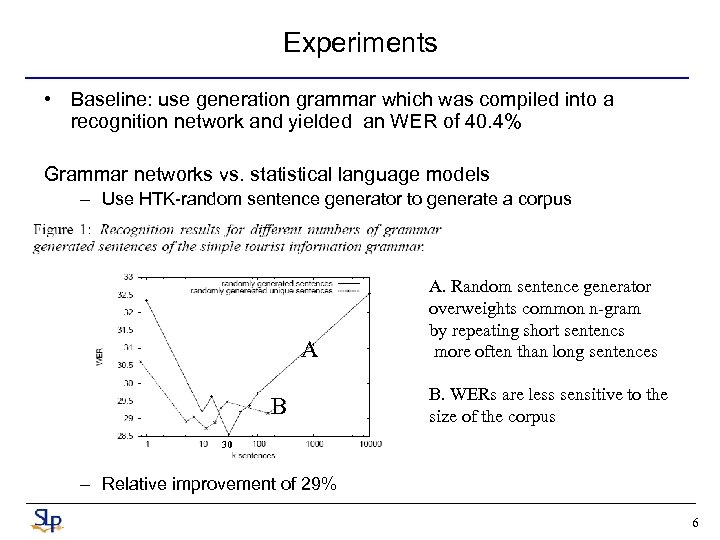

Experiments
• Baseline: the generation grammar was compiled into a recognition network and yielded a WER of 40.4%
• Grammar networks vs. statistical language models
  – Use the HTK random sentence generator to generate a corpus
    A. The random sentence generator overweights common n-grams by repeating short sentences more often than long sentences
    B. WERs are less sensitive to the size of the corpus
  – Relative improvement of 29%
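For readers unfamiliar with the metric, the sketch below shows one common way to compute word error rate (word-level edit distance divided by reference length) and a relative improvement. It is an illustration, not the scoring tool used in the paper; the function names are assumptions. By simple arithmetic, a 29% relative improvement on the 40.4% baseline corresponds to roughly 40.4 × (1 − 0.29) ≈ 28.7% WER, a figure derived here rather than quoted from the slides.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def relative_improvement(baseline_wer, new_wer):
    """Fraction of the baseline error that was removed."""
    return (baseline_wer - new_wer) / baseline_wer


# One deletion ("a") and one substitution ("hotel" -> "hotels") in 5 words.
example_wer = word_error_rate("i want a cheap hotel", "i want cheap hotels")
```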

Experiments (cont.)
• In-domain language model: WOZ corpus
  – A WOZ corpus in the tourist information domain (SACTI) was collected
  – The major part of the dialogues was recorded over a simulated automatic speech recognition channel
  – Half of the corpus consists of speech-only dialogues
  – In the other half, an interactive map interface could also be used along with speech

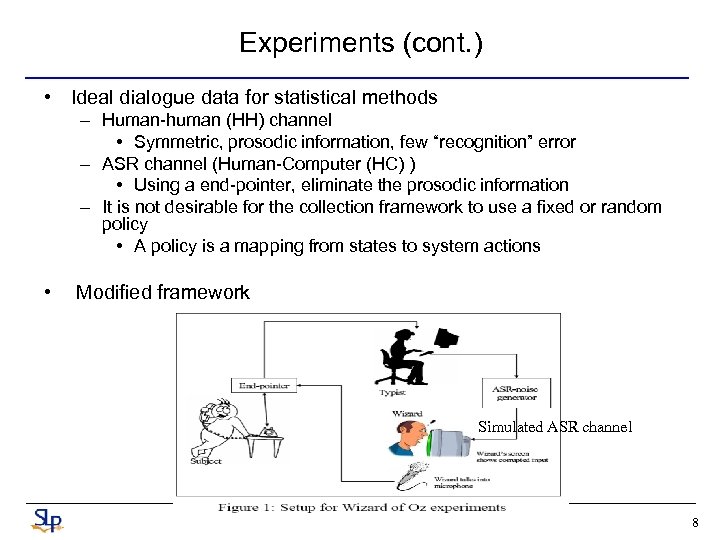

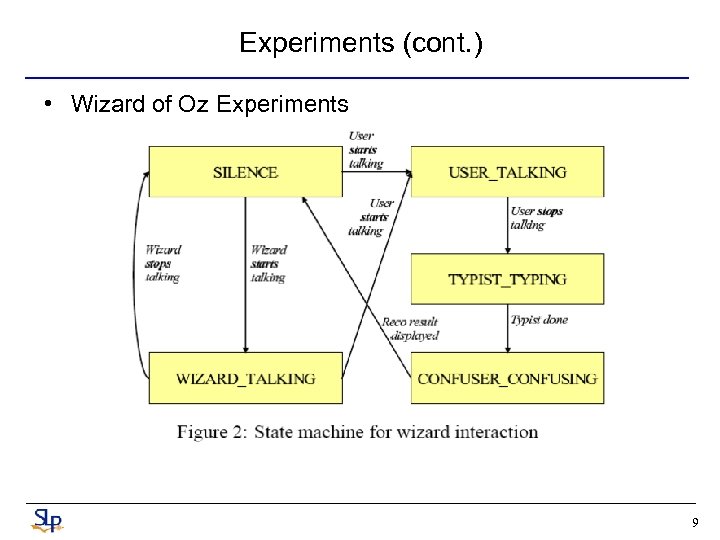

Experiments (cont.)
• Ideal dialogue data for statistical methods
  – Human-human (HH) channel
    • Symmetric, prosodic information, few “recognition” errors
  – ASR channel (human-computer (HC))
    • Uses an end-pointer; eliminates the prosodic information
  – It is not desirable for the collection framework to use a fixed or random policy
    • A policy is a mapping from states to system actions
• Modified framework: simulated ASR channel

Experiments (cont.)
• Wizard of Oz experiments

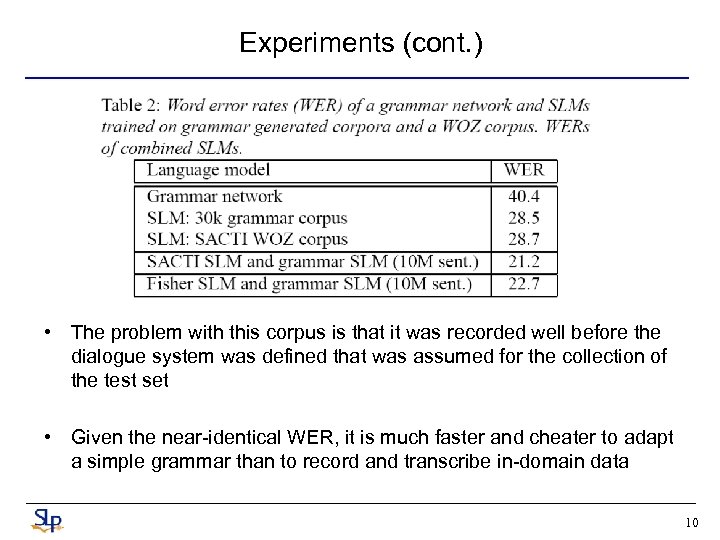

Experiments (cont.)
• The problem with this corpus is that it was recorded well before the dialogue system assumed for the collection of the test set was defined
• Given the near-identical WER, it is much faster and cheaper to adapt a simple grammar than to record and transcribe in-domain data

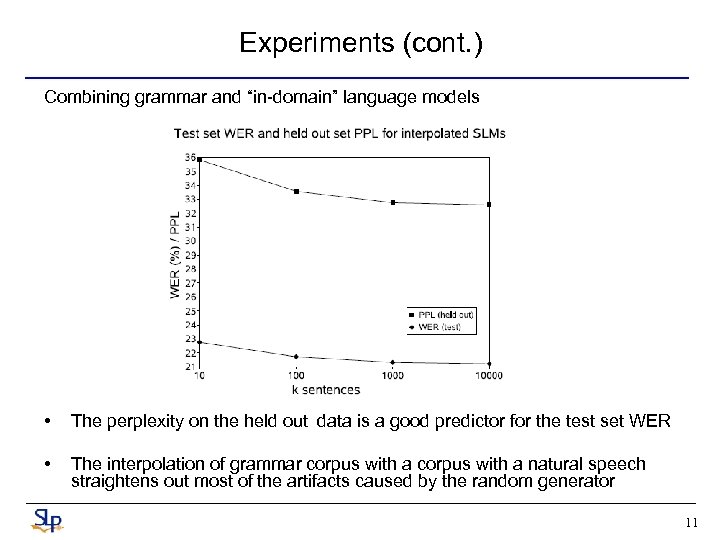

Experiments (cont.)
Combining grammar and “in-domain” language models
• The perplexity on the held-out data is a good predictor of the test set WER
• Interpolating the grammar corpus with natural speech straightens out most of the artifacts caused by the random generator
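A minimal sketch of this procedure: interpolate two language models linearly and pick the weight that minimizes perplexity on held-out data. To keep it short, the sketch uses add-one smoothed unigram models over a shared vocabulary rather than the n-gram SLMs of the paper; the toy corpora and all function names are assumptions.

```python
import math
from collections import Counter


def train_unigram(sentences, vocab):
    """Add-one smoothed unigram model over a fixed, shared vocabulary."""
    counts = Counter(w for s in sentences for w in s.split())
    total = sum(counts[w] for w in vocab) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}


def interpolate(lm_a, lm_b, lam):
    """Linear interpolation: lam * P_a(w) + (1 - lam) * P_b(w)."""
    return {w: lam * lm_a[w] + (1 - lam) * lm_b[w] for w in lm_a}


def perplexity(lm, sentences):
    """Per-word perplexity of the model on a list of sentences."""
    words = [w for s in sentences for w in s.split()]
    log_prob = sum(math.log(lm[w]) for w in words)
    return math.exp(-log_prob / len(words))


def best_weight(lm_a, lm_b, held_out, steps=10):
    """Grid search for the weight with the lowest held-out perplexity."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates,
               key=lambda lam: perplexity(interpolate(lm_a, lm_b, lam), held_out))


# Hypothetical toy data standing in for the grammar corpus, the natural
# speech corpus and the held-out set.
grammar_corpus = ["i want a hotel", "find a hotel", "yes"]
natural_corpus = ["well i would like a hotel please", "um yes please"]
held_out = ["i would like a hotel", "yes please"]

vocab = {w for s in grammar_corpus + natural_corpus + held_out for w in s.split()}
lm_grammar = train_unigram(grammar_corpus, vocab)
lm_natural = train_unigram(natural_corpus, vocab)
lam = best_weight(lm_grammar, lm_natural, held_out)
lm_mixed = interpolate(lm_grammar, lm_natural, lam)
```

Both component models share one vocabulary so that their probabilities can be mixed word by word and the perplexities of different mixtures remain comparable.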

Experiments (cont.)
Interpolating language models derived from a grammar and a standard corpus
  – The Fisher corpus contains transcriptions of conversations about different topics
  – The idea is that the grammar-generated data will contribute in-domain n-grams and the general corpus will add colloquial phrases
  – For comparability, the vocabulary used to build the Fisher SLM contained all words of the grammar and the WOZ data collection
  – The absolute WER improvement of the model that would have been selected by the perplexity minimum on the held-out set is only 0.25%

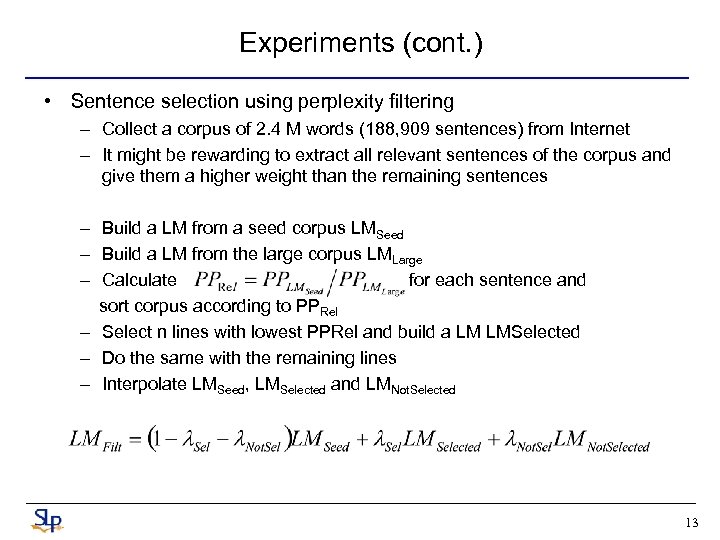

Experiments (cont.)
• Sentence selection using perplexity filtering
  – Collect a corpus of 2.4M words (188,909 sentences) from the Internet
  – It might be rewarding to extract all relevant sentences of the corpus and give them a higher weight than the remaining sentences
  – Build an LM from a seed corpus: LMSeed
  – Build an LM from the large corpus: LMLarge
  – Calculate PPRel for each sentence and sort the corpus according to PPRel
  – Select the n lines with the lowest PPRel and build an LM: LMSelected
  – Do the same with the remaining lines: LMNotSelected
  – Interpolate LMSeed, LMSelected and LMNotSelected
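The filtering steps can be sketched as follows. The formula for PPRel did not survive on the slide, so this sketch ASSUMES PPRel is the ratio of a sentence's perplexity under LMSeed to its perplexity under LMLarge; that definition, the unigram models, the toy corpora, the fixed interpolation weights and all function names are illustrative assumptions, not the authors' implementation.

```python
import math
from collections import Counter


def train_unigram(sentences, vocab):
    """Add-one smoothed unigram model over a fixed, shared vocabulary."""
    counts = Counter(w for s in sentences for w in s.split())
    total = sum(counts[w] for w in vocab) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}


def sentence_perplexity(lm, sentence):
    """Per-word perplexity of a single sentence."""
    words = sentence.split()
    return math.exp(-sum(math.log(lm[w]) for w in words) / len(words))


def perplexity_filter(seed_corpus, large_corpus, n):
    """Split large_corpus into the n most seed-like sentences and the rest."""
    vocab = {w for s in seed_corpus + large_corpus for w in s.split()}
    lm_seed = train_unigram(seed_corpus, vocab)
    lm_large = train_unigram(large_corpus, vocab)

    def pp_rel(sentence):
        # ASSUMED definition: low values mean the sentence fits the seed
        # model better than the general model does.
        return (sentence_perplexity(lm_seed, sentence)
                / sentence_perplexity(lm_large, sentence))

    ranked = sorted(large_corpus, key=pp_rel)
    selected, not_selected = ranked[:n], ranked[n:]
    lms = (lm_seed,
           train_unigram(selected, vocab),
           train_unigram(not_selected, vocab))
    return selected, lms


def interpolate3(lms, weights):
    """Linear interpolation of several models that share one vocabulary."""
    return {w: sum(wt * lm[w] for lm, wt in zip(lms, weights)) for w in lms[0]}


# Hypothetical toy data standing in for the seed and web corpora.
seed = ["i want a hotel", "find a cheap restaurant"]
large = ["the stock market fell today",
         "we want a cheap hotel",
         "football results tonight",
         "find a restaurant near the station"]

selected, lms = perplexity_filter(seed, large, n=2)
lm_final = interpolate3(lms, (0.5, 0.3, 0.2))  # weights chosen arbitrarily
```

In practice the three interpolation weights would be tuned on held-out data rather than fixed by hand.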

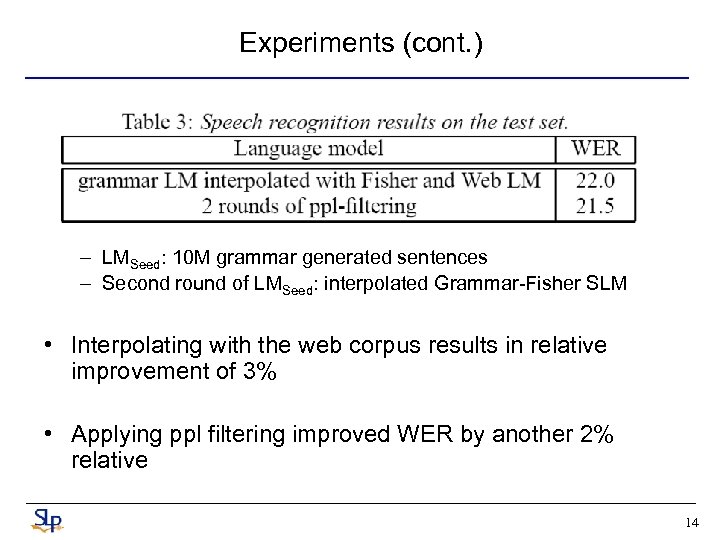

Experiments (cont.)
– LMSeed: 10M grammar-generated sentences
– Second round of LMSeed: interpolated Grammar-Fisher SLM
• Interpolating with the web corpus results in a relative improvement of 3%
• Applying perplexity filtering improved WER by another 2% relative

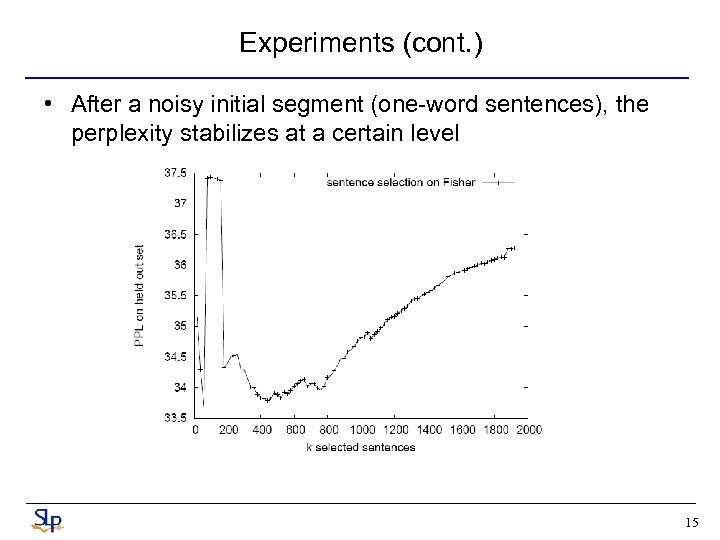

Experiments (cont.)
• After a noisy initial segment (one-word sentences), the perplexity stabilizes at a certain level

Conclusions
• Compared speech recognition results using a recognition grammar with different kinds of SLMs
  1. SLM trained on a corpus of 30k grammar-generated sentences: WER improved by 29% relative
  2. In-domain corpus: 21% relative improvement
  3. Standard corpus with sentence filtering
• Effective language models can be built for bootstrapping a spoken dialogue system without recourse to expensive WOZ data collections
