cb4e8cc0d90719d6edbe2157880999c8.ppt

- Number of slides: 30

Binomial Random Variable Approximations, Conditional Probability Density Functions and Stirling’s Formula

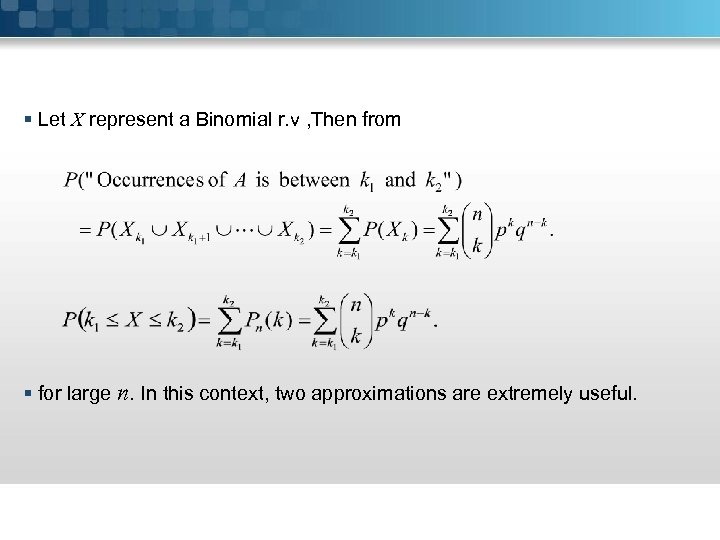

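§ Let X represent a binomial r.v. Then $P(k_1 \le X \le k_2) = \sum_{k=k_1}^{k_2} \binom{n}{k} p^k q^{n-k}$, with $q = 1 - p$, which is difficult to compute directly for large n.
§ In this context, two approximations are extremely useful.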

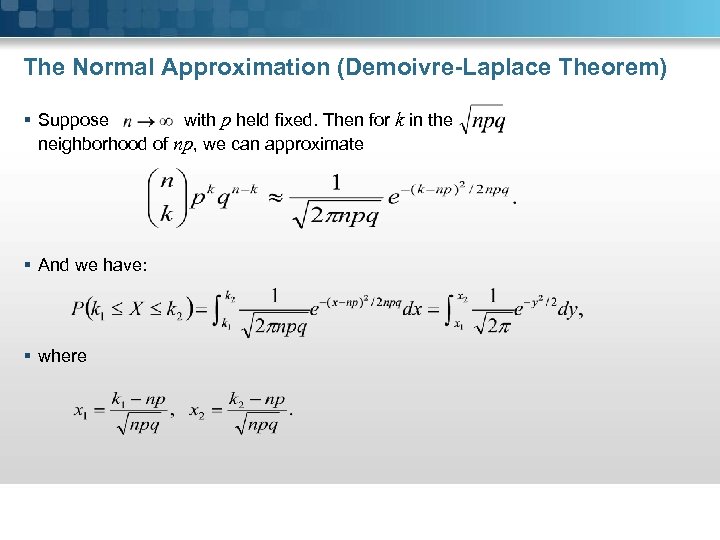

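The Normal Approximation (De Moivre-Laplace Theorem)
§ Suppose $n \to \infty$ with p held fixed. Then for k in the $\sqrt{npq}$ neighborhood of np, we can approximate $\binom{n}{k} p^k q^{n-k} \approx \frac{1}{\sqrt{2\pi npq}}\, e^{-(k-np)^2/(2npq)}$.
§ And we have: $P(k_1 \le X \le k_2) \approx \int_{x_1}^{x_2} \frac{1}{\sqrt{2\pi}}\, e^{-y^2/2}\, dy$,
§ where $x_1 = \frac{k_1 - np}{\sqrt{npq}}$ and $x_2 = \frac{k_2 - np}{\sqrt{npq}}$.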

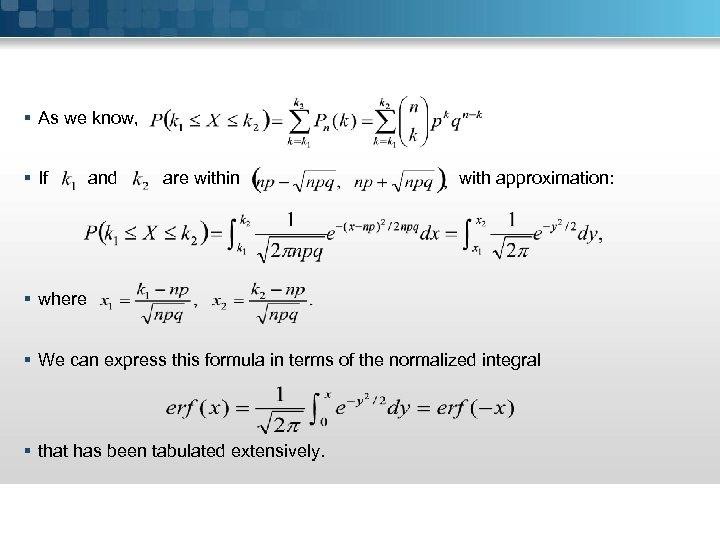

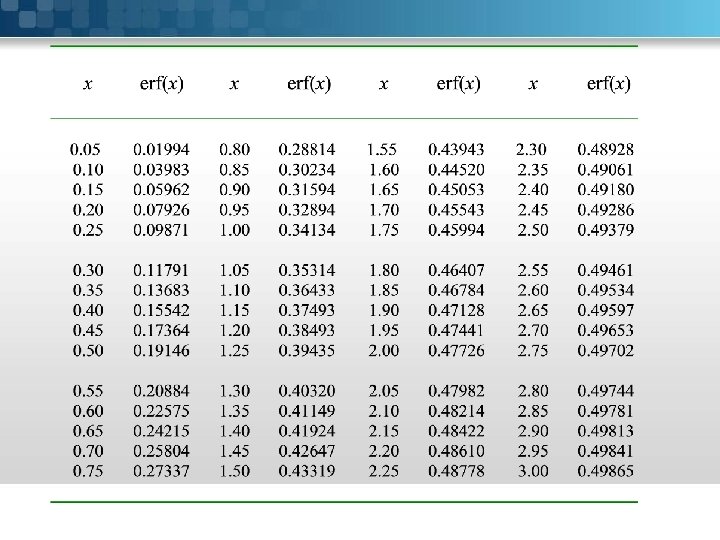

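§ As we know, $P(k_1 \le X \le k_2) = \sum_{k=k_1}^{k_2} \binom{n}{k} p^k q^{n-k}$.
§ If $k_1$ and $k_2$ are within or around the interval $(np - \sqrt{npq},\; np + \sqrt{npq})$, the sum can be replaced by an integral, with approximation: $P(k_1 \le X \le k_2) \approx \int_{x_1}^{x_2} \frac{1}{\sqrt{2\pi}}\, e^{-y^2/2}\, dy$,
§ where $x_1 = \frac{k_1 - np}{\sqrt{npq}}$ and $x_2 = \frac{k_2 - np}{\sqrt{npq}}$.
§ We can express this formula in terms of the normalized integral $\mathrm{erf}(x) = \frac{1}{\sqrt{2\pi}} \int_0^x e^{-y^2/2}\, dy = -\mathrm{erf}(-x)$ that has been tabulated extensively. (This normalization differs from the standard error function $\frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\, dt$.)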

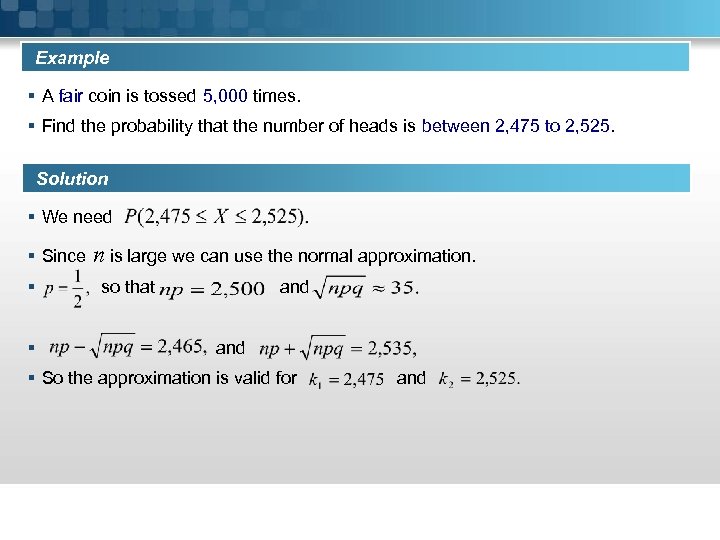

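Example
§ A fair coin is tossed 5,000 times.
§ Find the probability that the number of heads is between 2,475 and 2,525.
Solution
§ We need $P(2475 \le X \le 2525)$.
§ Since n is large, we can use the normal approximation.
§ Here $p = q = 1/2$, so that $np = 2500$ and $\sqrt{npq} = \sqrt{1250} \approx 35$.
§ Since $np - \sqrt{npq} \approx 2465$ and $np + \sqrt{npq} \approx 2535$, the approximation is valid for $k_1 = 2475$ and $k_2 = 2525$.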

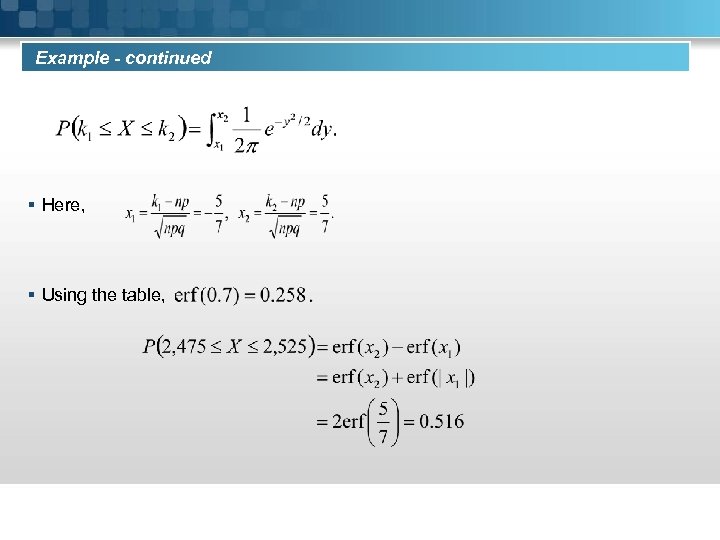

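Example - continued
§ Here, $x_1 = \frac{k_1 - np}{\sqrt{npq}} \approx -\frac{5}{7}$ and $x_2 = \frac{k_2 - np}{\sqrt{npq}} \approx \frac{5}{7}$.
§ Using the table of the normalized integral $\mathrm{erf}(x) = \frac{1}{\sqrt{2\pi}} \int_0^x e^{-y^2/2}\, dy$, $P(2475 \le X \le 2525) \approx \mathrm{erf}(x_2) - \mathrm{erf}(x_1) = 2\,\mathrm{erf}(5/7) \approx 0.52$.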
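
The coin example can be checked numerically. The sketch below is an illustration, not part of the original slides: it evaluates the exact binomial sum with big integers and compares it with the normal approximation. It writes the Gaussian integral through the standard normal CDF $\Phi(x) = \frac{1}{2}\left(1 + \mathrm{erf}(x/\sqrt{2})\right)$, where `math.erf` is the standard error function, not the normalized integral tabulated in the slides. The function names are hypothetical.

```python
import math


def std_normal_cdf(x: float) -> float:
    """Standard normal CDF, built from the standard-library error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def fair_coin_interval_exact(n: int, k1: int, k2: int) -> float:
    """Exact P(k1 <= X <= k2) for n tosses of a fair coin (p = 1/2)."""
    favourable = sum(math.comb(n, k) for k in range(k1, k2 + 1))
    return favourable / 2**n  # big-integer division, rounded to a float


def interval_normal_approx(n: int, p: float, k1: int, k2: int) -> float:
    """De Moivre-Laplace approximation of P(k1 <= X <= k2)."""
    mean = n * p
    sigma = math.sqrt(n * p * (1.0 - p))
    x1 = (k1 - mean) / sigma
    x2 = (k2 - mean) / sigma
    return std_normal_cdf(x2) - std_normal_cdf(x1)


exact = fair_coin_interval_exact(5000, 2475, 2525)
approx = interval_normal_approx(5000, 0.5, 2475, 2525)
print(f"exact binomial sum   : {exact:.4f}")
print(f"normal approximation : {approx:.4f}")
```

The two values agree to within about 0.01. The remaining gap comes mostly from integrating between the integer endpoints without a continuity correction.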

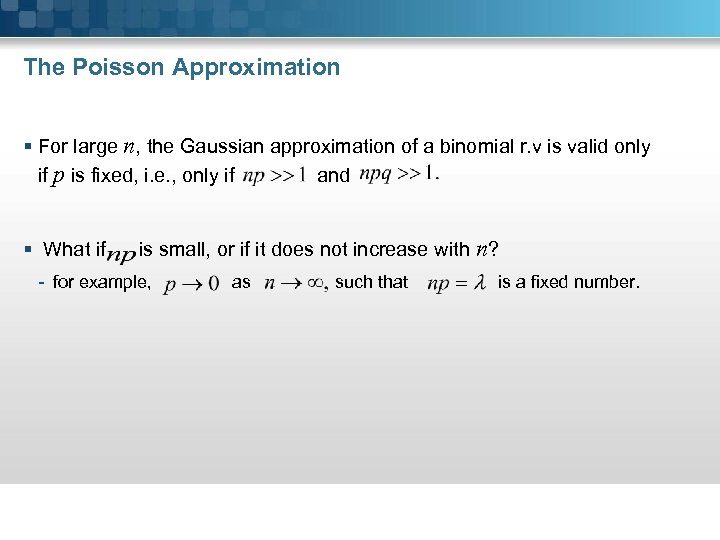

The Poisson Approximation
§ For large n, the Gaussian approximation of a binomial r.v. is valid only if p is fixed, i.e., only if $np \gg 1$ and $npq \gg 1$.
§ What if $np$ is small, or if it does not increase with n?
- for example, as $n \to \infty$, $p \to 0$ such that $np = \lambda$ is a fixed number.

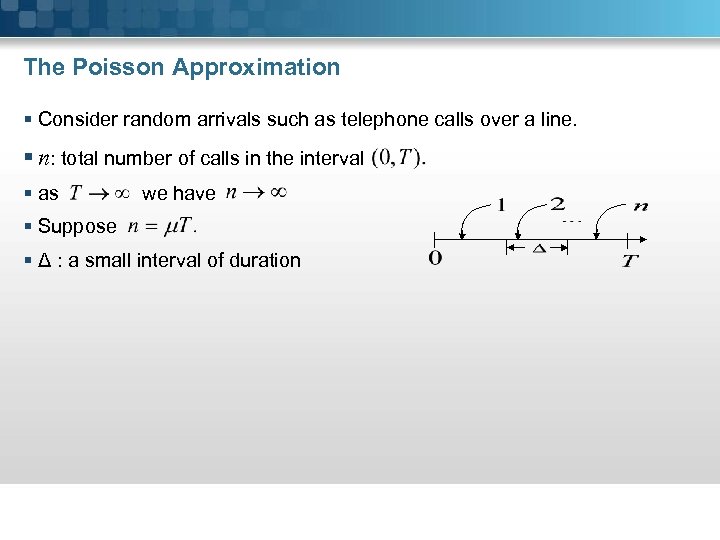

The Poisson Approximation
§ Consider random arrivals such as telephone calls over a line.
§ n: total number of calls in the interval $(0, T)$.
§ As $T \to \infty$ we have $n \to \infty$.
§ Suppose $n = \mu T$.
§ Δ: a small interval of duration Δ.

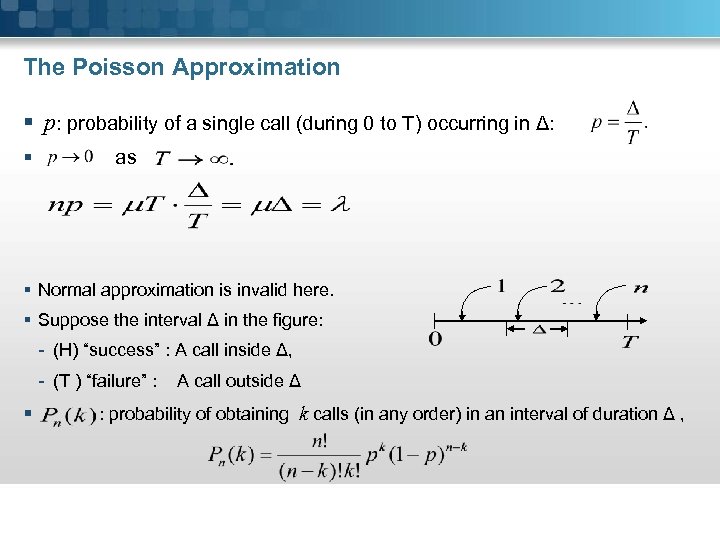

The Poisson Approximation
§ p: probability of a single call (during 0 to T) occurring in Δ: $p = \Delta / T$.
§ As $T \to \infty$, $p \to 0$, while $np = \lambda$ stays fixed.
§ Normal approximation is invalid here.
§ Suppose the interval Δ in the figure:
- (H) “success”: a call inside Δ,
- (T) “failure”: a call outside Δ.
§ $P_n(k)$: probability of obtaining k calls (in any order) in an interval of duration Δ, $P_n(k) = \frac{n!}{(n-k)!\,k!}\, p^k (1-p)^{n-k}$.

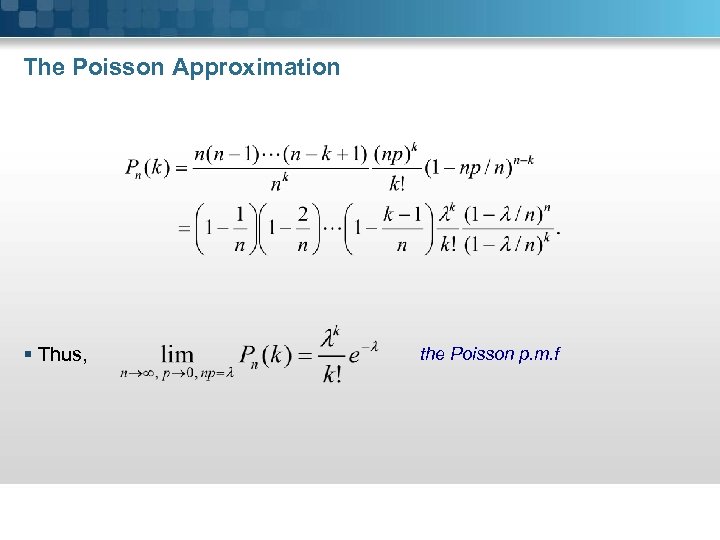

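The Poisson Approximation
§ Thus, as $n \to \infty$ and $p \to 0$ with $np = \lambda$ fixed, we obtain the Poisson p.m.f.: $\lim P_n(k) = \frac{\lambda^k}{k!}\, e^{-\lambda}$, $k = 0, 1, 2, \ldots$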
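
The limit can be observed numerically. The following sketch is an illustration with arbitrarily chosen values $\lambda = 2$ and $k = 3$ (assumptions, not from the slides): it holds $np = \lambda$ fixed while n grows and prints the gap between the binomial p.m.f. and the Poisson p.m.f.

```python
import math


def binomial_pmf(n: int, k: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1.0 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)


lam, k = 2.0, 3  # illustrative values, not taken from the slides
errors = []
for n in (10, 100, 1000, 10000):
    p = lam / n  # keep n * p = lam fixed while n grows
    err = abs(binomial_pmf(n, k, p) - poisson_pmf(k, lam))
    errors.append(err)
    print(f"n = {n:>5}: |binomial - Poisson| = {err:.2e}")
```

The printed gap shrinks roughly in proportion to 1/n, which is the behaviour the Poisson limit predicts.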

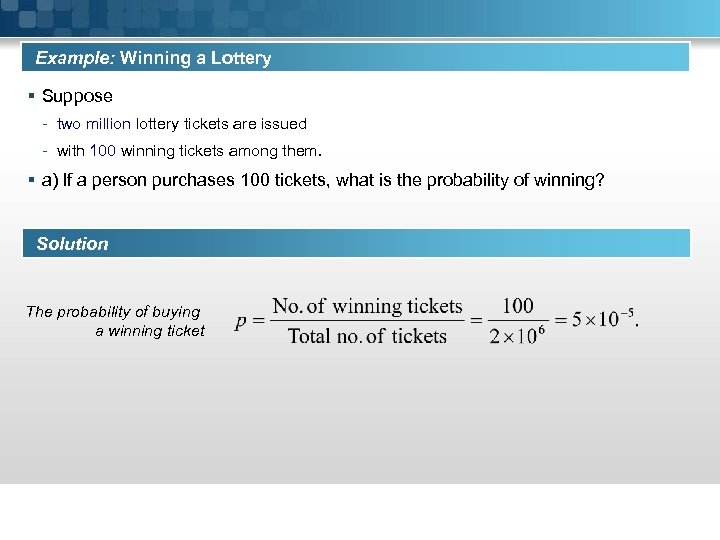

Example: Winning a Lottery
§ Suppose
- two million lottery tickets are issued
- with 100 winning tickets among them.
§ a) If a person purchases 100 tickets, what is the probability of winning?
Solution
§ The probability of buying a winning ticket is $p = \frac{100}{2 \times 10^6} = 5 \times 10^{-5}$.

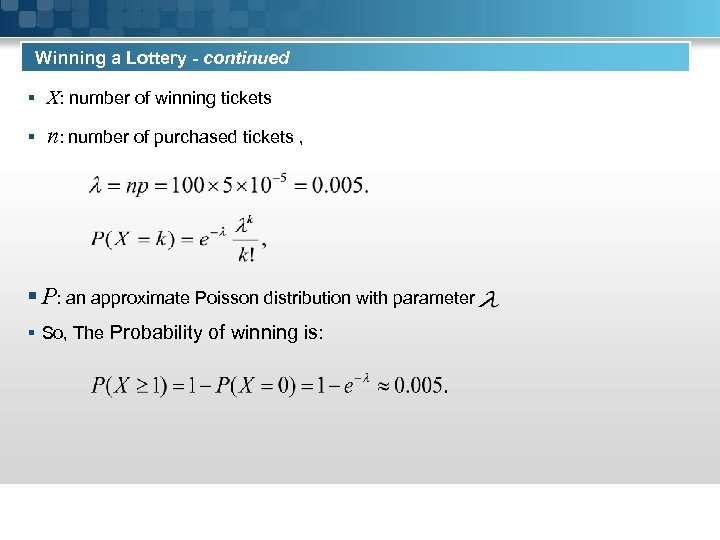

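Winning a Lottery - continued
§ X: number of winning tickets among those purchased.
§ n: number of purchased tickets; here $n = 100$.
§ X has an approximate Poisson distribution with parameter $\lambda = np = 100 \times 5 \times 10^{-5} = 0.005$, i.e. $P(X = k) = e^{-\lambda} \frac{\lambda^k}{k!}$.
§ So, the probability of winning is: $P(X \ge 1) = 1 - P(X = 0) = 1 - e^{-\lambda} \approx 0.005$.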

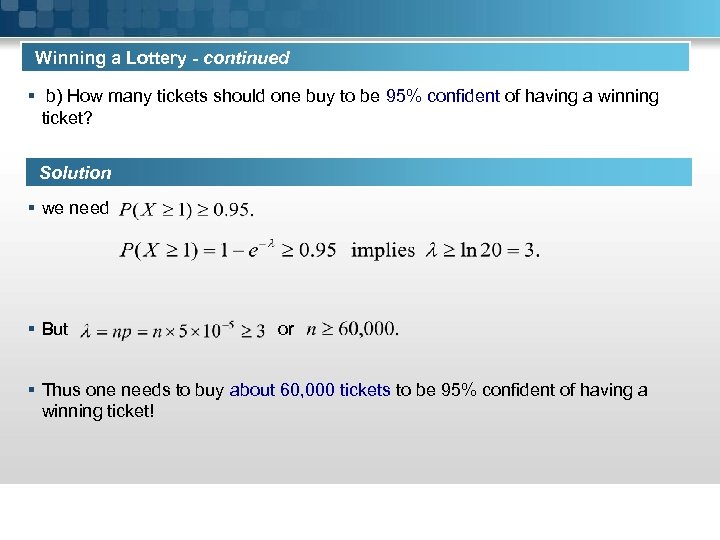

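Winning a Lottery - continued
§ b) How many tickets should one buy to be 95% confident of having a winning ticket?
Solution
§ We need $P(X \ge 1) \ge 0.95$, i.e. $1 - e^{-\lambda} \ge 0.95$, which implies $\lambda \ge \ln 20 \approx 3$.
§ But $\lambda = np = n \times 5 \times 10^{-5} \ge 3$, or $n \ge 60{,}000$.
§ Thus one needs to buy about 60,000 tickets to be 95% confident of having a winning ticket!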
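
Both parts of the lottery example reduce to a few lines of arithmetic. The sketch below is an illustration under the same Poisson approximation, with hypothetical function names: it computes $1 - e^{-np}$ for part (a) and solves $1 - e^{-np} \ge 0.95$ for n in part (b).

```python
import math

total_tickets = 2_000_000
winning_tickets = 100
p = winning_tickets / total_tickets  # probability that one ticket wins


def prob_at_least_one_win(n: int, p: float) -> float:
    """Poisson approximation of P(X >= 1) with lambda = n * p."""
    return 1.0 - math.exp(-n * p)


def tickets_needed(confidence: float, p: float) -> int:
    """Smallest n with 1 - exp(-n * p) >= confidence."""
    lam_min = -math.log(1.0 - confidence)
    return math.ceil(lam_min / p)


p_win_100 = prob_at_least_one_win(100, p)
n_95 = tickets_needed(0.95, p)
print(f"P(win with 100 tickets) = {p_win_100:.6f}")
print(f"tickets for 95% confidence = {n_95}")
```

Without rounding $\ln 20$ up to 3, the threshold comes out slightly below 60,000, consistent with the "about 60,000" stated in the slide.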

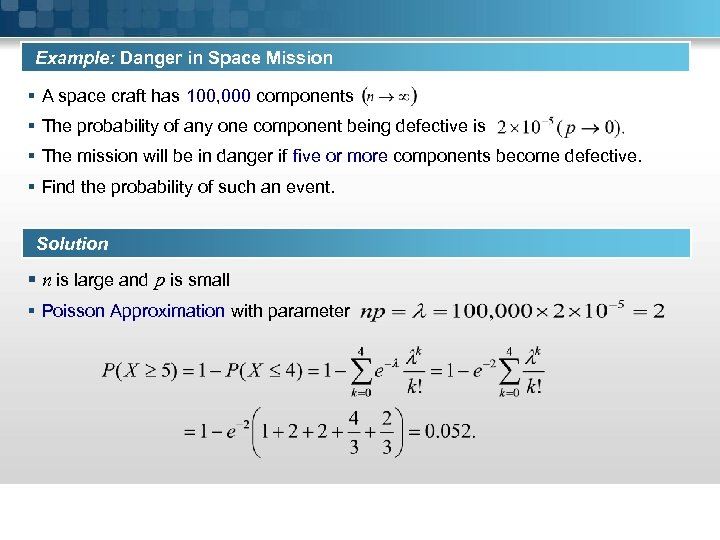

Example: Danger in Space Mission
§ A spacecraft has 100,000 components.
§ The probability of any one component being defective is p.
§ The mission will be in danger if five or more components become defective.
§ Find the probability of such an event.
Solution
§ n is large and p is small, so we use the Poisson approximation with parameter $\lambda = np$: $P(X \ge 5) = 1 - P(X \le 4) = 1 - \sum_{k=0}^{4} e^{-\lambda} \frac{\lambda^k}{k!}$.
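
A minimal sketch of the tail computation follows. The per-component defect probability did not survive in the slide text, so the value `p_defect = 2e-5` below is an assumed, purely illustrative number; only the 100,000 components and the "five or more" threshold come from the example.

```python
import math


def poisson_tail(lam: float, k_min: int) -> float:
    """P(X >= k_min) for X ~ Poisson(lam), via the complement of the lower sum."""
    below = sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(k_min))
    return 1.0 - below


n_components = 100_000
p_defect = 2e-5  # ASSUMED value for illustration; not recoverable from the slide text
lam = n_components * p_defect

risk = poisson_tail(lam, 5)
print(f"lambda = {lam:.3f}, P(five or more defects) = {risk:.4f}")
```

With this assumed p, $\lambda = 2$ and the danger probability is a little over 5%. A different p changes $\lambda$ and the result accordingly.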

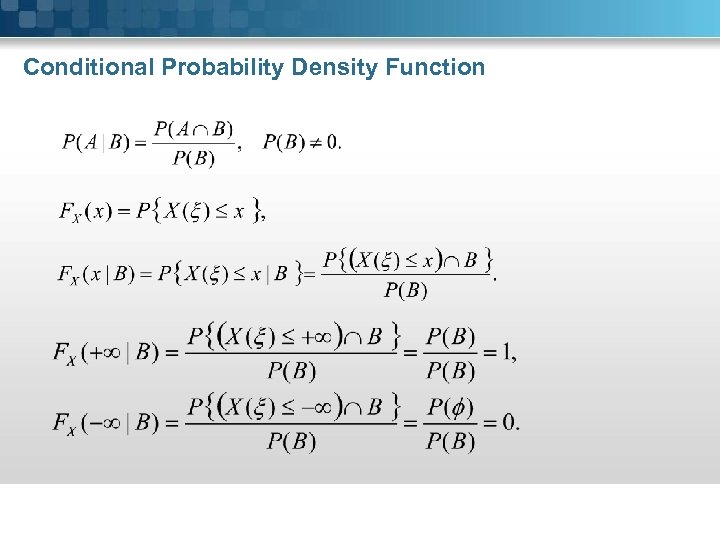

Conditional Probability Density Function

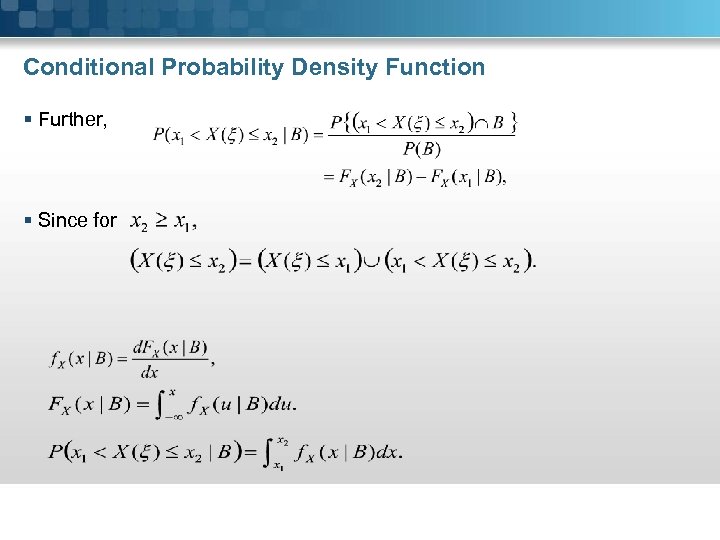

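Conditional Probability Density Function
§ Further, with $F_X(x \mid B) = P(X \le x \mid B)$ the conditional distribution function, $P(x_1 < X \le x_2 \mid B) = F_X(x_2 \mid B) - F_X(x_1 \mid B)$,
§ since for $x_2 \ge x_1$, $(X \le x_2) = (X \le x_1) \cup (x_1 < X \le x_2)$.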

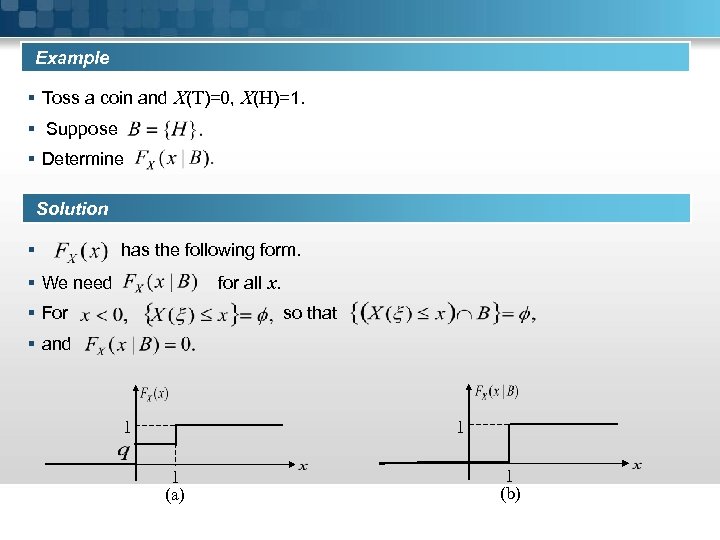

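Example
§ Toss a coin and let X(T) = 0, X(H) = 1.
§ Suppose $B = \{H\}$.
§ Determine $F_X(x \mid B)$.
Solution
§ $F_X(x)$ has the form shown in the figure.
§ We need $F_X(x \mid B)$ for all x.
§ For $x < 0$, $\{X \le x\} = \emptyset$, so that $\{(X \le x) \cap B\} = \emptyset$ and $F_X(x \mid B) = 0$.
Figure: (a) $F_X(x)$, (b) $F_X(x \mid B)$.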

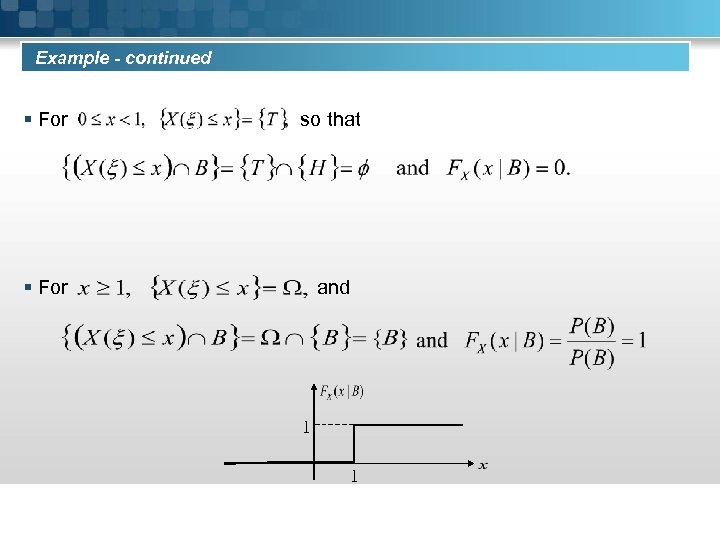

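Example - continued
§ For $0 \le x < 1$, $\{X \le x\} = \{T\}$, so that, with $B = \{H\}$, $\{(X \le x) \cap B\} = \{T\} \cap \{H\} = \emptyset$ and $F_X(x \mid B) = 0$.
§ For $x \ge 1$, $\{X \le x\} = \Omega$ and $\{(X \le x) \cap B\} = B$, so that $F_X(x \mid B) = \frac{P(B)}{P(B)} = 1$.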

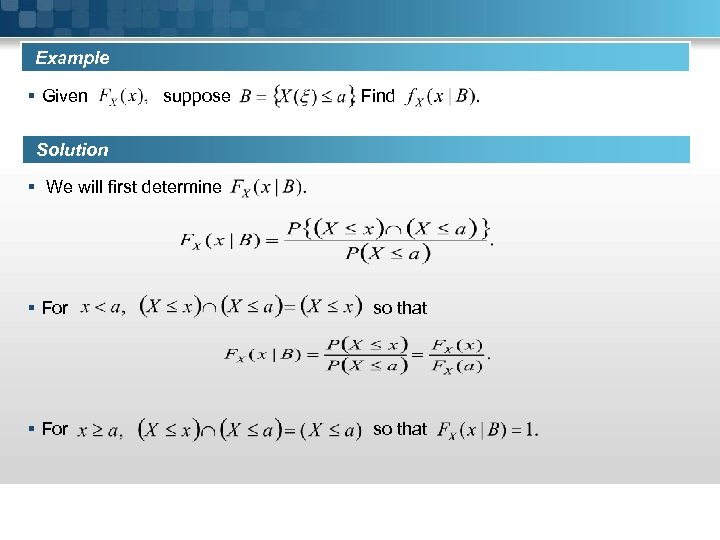

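Example
§ Given $F_X(x)$, suppose $B = \{X \le a\}$. Find $f_X(x \mid B)$.
Solution
§ We will first determine $F_X(x \mid B) = \frac{P\{(X \le x) \cap (X \le a)\}}{P(X \le a)}$.
§ For $x < a$, $(X \le x) \cap (X \le a) = (X \le x)$, so that $F_X(x \mid B) = \frac{F_X(x)}{F_X(a)}$.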

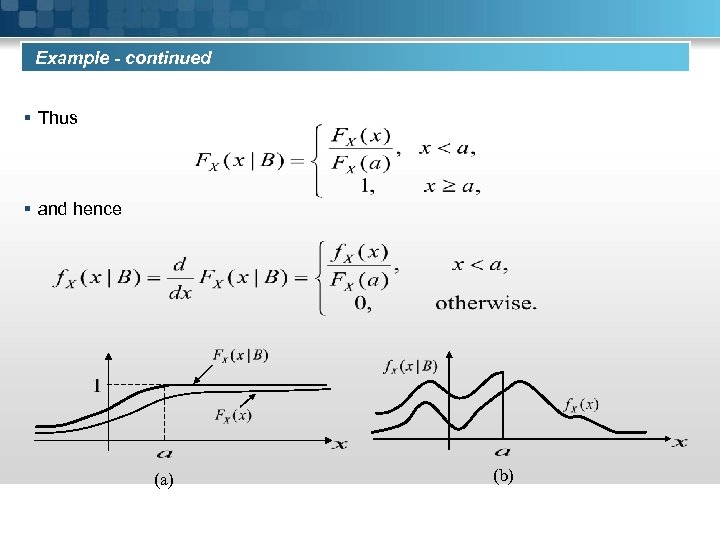

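Example - continued
§ With $B = \{X \le a\}$: for $x \ge a$, $(X \le x) \cap (X \le a) = (X \le a)$, so that $F_X(x \mid B) = 1$.
§ Thus $F_X(x \mid B) = \begin{cases} \frac{F_X(x)}{F_X(a)}, & x < a, \\ 1, & x \ge a, \end{cases}$
§ and hence $f_X(x \mid B) = \frac{d}{dx} F_X(x \mid B) = \begin{cases} \frac{f_X(x)}{F_X(a)}, & x < a, \\ 0, & \text{otherwise.} \end{cases}$
Figure: panels (a) and (b) show the conditional distribution and the conditional density.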

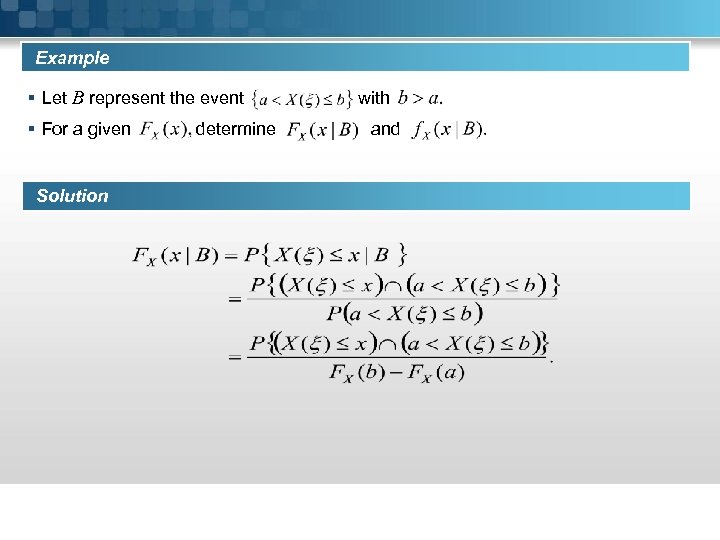

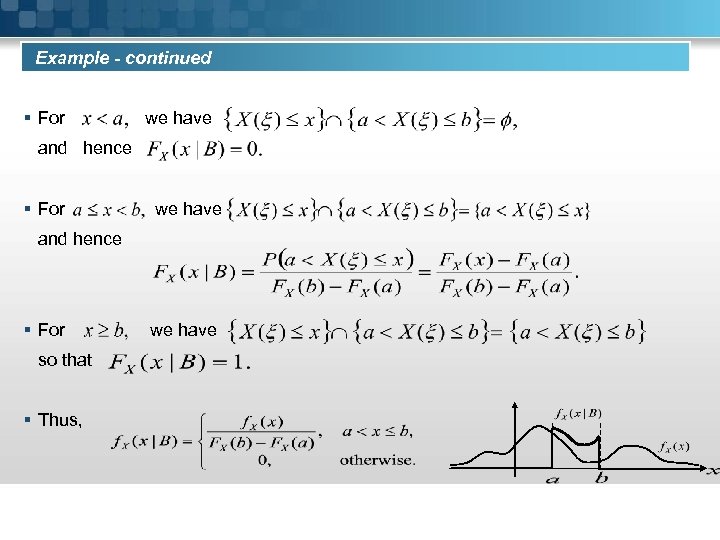

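Example
§ Let B represent the event $\{a < X \le b\}$ with $b > a$.
§ For a given $F_X(x)$, determine $F_X(x \mid B)$ and $f_X(x \mid B)$.
Solution
§ $F_X(x \mid B) = \frac{P\{(X \le x) \cap (a < X \le b)\}}{P(a < X \le b)} = \frac{P\{(X \le x) \cap (a < X \le b)\}}{F_X(b) - F_X(a)}$.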

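Example - continued
§ With $B = \{a < X \le b\}$: for $x < a$, we have $\{(X \le x) \cap (a < X \le b)\} = \emptyset$ and hence $F_X(x \mid B) = 0$.
§ For $a \le x < b$, $\{(X \le x) \cap (a < X \le b)\} = \{a < X \le x\}$, so that $F_X(x \mid B) = \frac{F_X(x) - F_X(a)}{F_X(b) - F_X(a)}$.
§ For $x \ge b$, the intersection is $\{a < X \le b\}$ itself, so that $F_X(x \mid B) = 1$.
§ Thus, we have $f_X(x \mid B) = \begin{cases} \frac{f_X(x)}{F_X(b) - F_X(a)}, & a < x \le b, \\ 0, & \text{otherwise.} \end{cases}$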
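
The piecewise form of the conditional distribution given $B = \{a < X \le b\}$ translates directly into code. The sketch below is an illustration that assumes X is standard normal; the slides keep $F_X$ generic, so that choice and the function names are assumptions.

```python
import math
from typing import Callable


def std_normal_cdf(x: float) -> float:
    """F_X(x) for a standard normal X (an assumed, illustrative choice)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def conditional_cdf(
    x: float, a: float, b: float, cdf: Callable[[float], float] = std_normal_cdf
) -> float:
    """F_X(x | a < X <= b), following the three cases of the example."""
    if x < a:
        return 0.0  # the intersection with B is empty
    if x >= b:
        return 1.0  # the intersection is B itself
    return (cdf(x) - cdf(a)) / (cdf(b) - cdf(a))


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"F_X({x:+.1f} | -1 < X <= 1) = {conditional_cdf(x, -1.0, 1.0):.4f}")
```

Passing a different `cdf` callable applies the same conditioning to any other distribution function.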

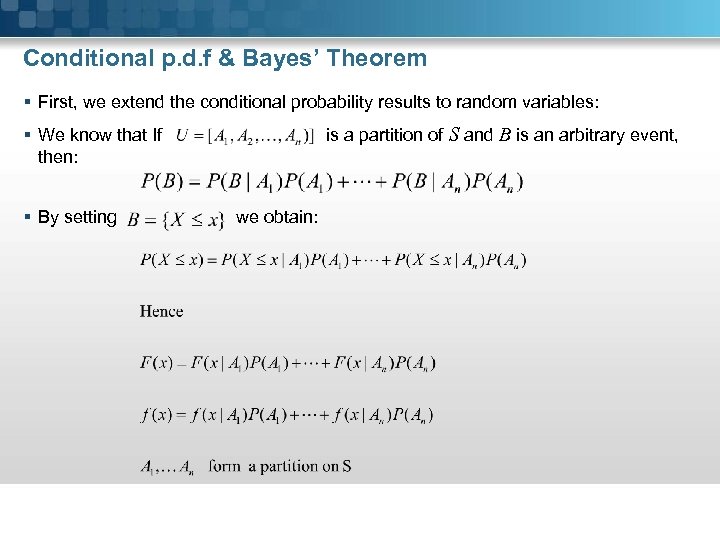

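Conditional p.d.f. & Bayes’ Theorem
§ First, we extend the conditional probability results to random variables.
§ We know that if $A_1, \ldots, A_n$ is a partition of S and B is an arbitrary event, then: $P(B) = \sum_{i=1}^{n} P(B \mid A_i)\, P(A_i)$.
§ By setting $B = \{X \le x\}$ we obtain: $F_X(x) = \sum_{i=1}^{n} F_X(x \mid A_i)\, P(A_i)$ and, by differentiation, $f_X(x) = \sum_{i=1}^{n} f_X(x \mid A_i)\, P(A_i)$.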

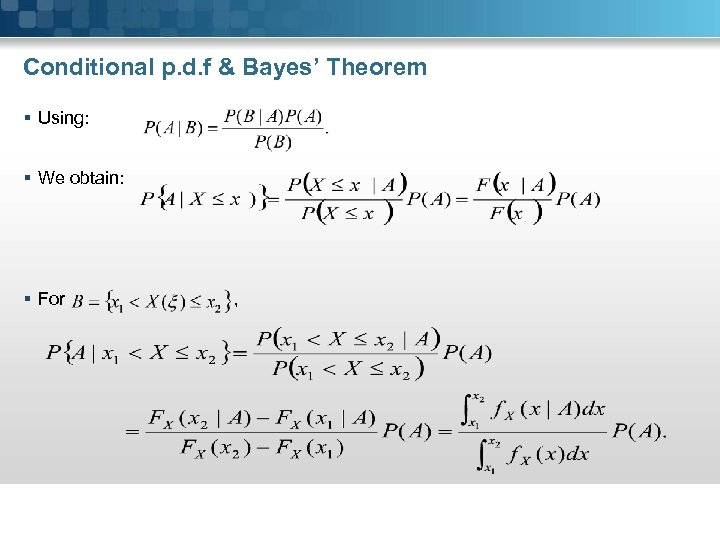

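Conditional p.d.f. & Bayes’ Theorem
§ Using: $P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$,
§ we obtain: $P(A \mid X \le x) = \frac{P(X \le x \mid A)\, P(A)}{P(X \le x)} = \frac{F_X(x \mid A)}{F_X(x)}\, P(A)$.
§ For $B = \{x_1 < X \le x_2\}$, $P(A \mid x_1 < X \le x_2) = \frac{F_X(x_2 \mid A) - F_X(x_1 \mid A)}{F_X(x_2) - F_X(x_1)}\, P(A)$.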

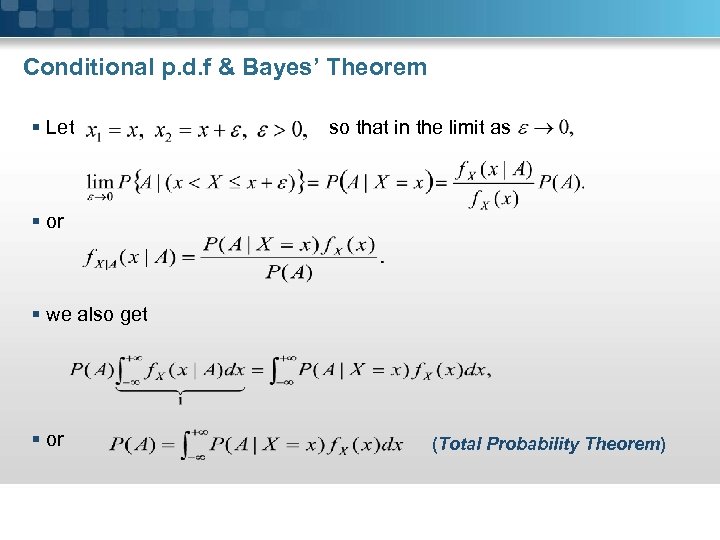

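Conditional p.d.f. & Bayes’ Theorem
§ In the expression for $P(A \mid x_1 < X \le x_2)$, let $x_1 = x$ and $x_2 = x + \varepsilon$, $\varepsilon > 0$, so that in the limit as $\varepsilon \to 0$, $P(A \mid X = x) = \frac{f_X(x \mid A)}{f_X(x)}\, P(A)$,
§ or $f_X(x \mid A) = \frac{P(A \mid X = x)\, f_X(x)}{P(A)}$.
§ Integrating over x, we also get $\int_{-\infty}^{\infty} P(A \mid X = x)\, f_X(x)\, dx = P(A) \int_{-\infty}^{\infty} f_X(x \mid A)\, dx = P(A)$,
§ or $P(A) = \int_{-\infty}^{\infty} P(A \mid X = x)\, f_X(x)\, dx$ (Total Probability Theorem).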

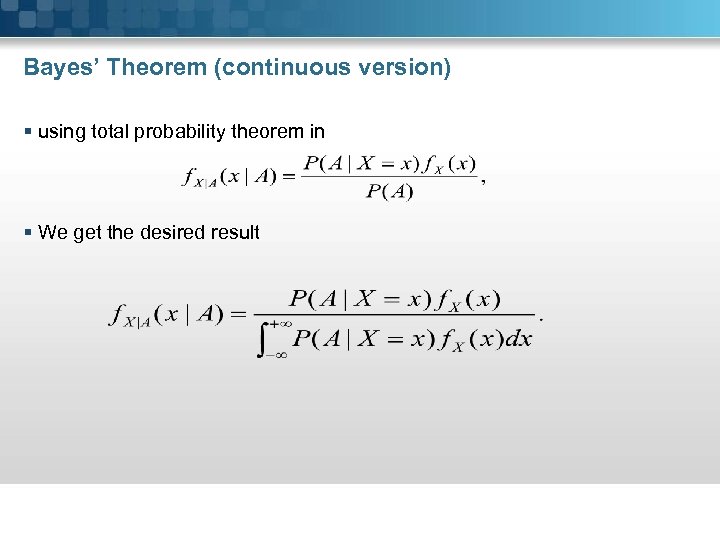

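Bayes’ Theorem (continuous version)
§ Using the total probability theorem in $f_X(x \mid A) = \frac{P(A \mid X = x)\, f_X(x)}{P(A)}$,
§ we get the desired result: $f_X(x \mid A) = \frac{P(A \mid X = x)\, f_X(x)}{\int_{-\infty}^{\infty} P(A \mid X = x)\, f_X(x)\, dx}$.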

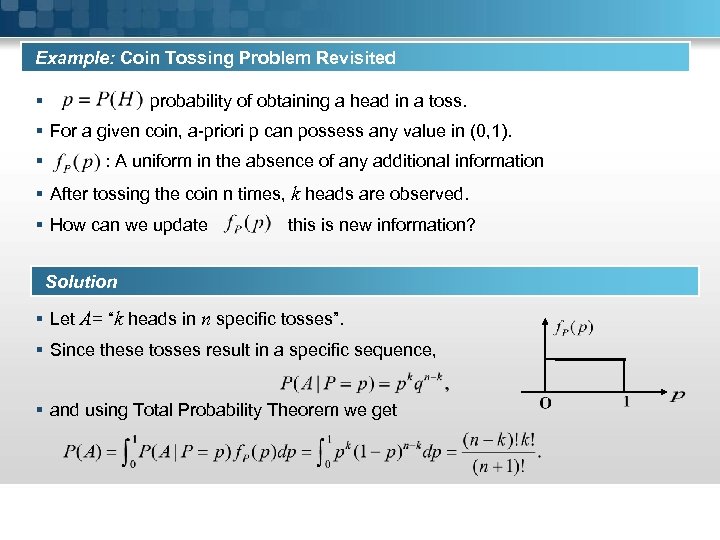

Example: Coin Tossing Problem Revisited
§ $p = P(H)$: probability of obtaining a head in a toss.
§ For a given coin, a-priori p can possess any value in (0, 1).
§ $f_P(p)$: a uniform p.d.f. on (0, 1) in the absence of any additional information.
§ After tossing the coin n times, k heads are observed.
§ How can we update $f_P(p)$ using this new information?
Solution
§ Let A = “k heads in n specific tosses”.
§ Since these tosses result in a specific sequence, $P(A \mid P = p) = p^k q^{n-k}$ with $q = 1 - p$,
§ and using the Total Probability Theorem we get $P(A) = \int_0^1 P(A \mid P = p)\, f_P(p)\, dp = \int_0^1 p^k (1-p)^{n-k}\, dp = \frac{(n-k)!\, k!}{(n+1)!}$.
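
The total-probability integral for a uniform prior is the standard Beta integral $\int_0^1 p^k (1-p)^{n-k}\, dp = \frac{k!\,(n-k)!}{(n+1)!}$. The sketch below is an illustration that checks this identity with a simple midpoint rule; the values n = 10, k = 6 and the function names are assumptions chosen for the demonstration.

```python
import math


def prob_sequence_closed_form(n: int, k: int) -> float:
    """k! (n-k)! / (n+1)!, the Beta-integral value of P(A) under a uniform prior."""
    return math.factorial(k) * math.factorial(n - k) / math.factorial(n + 1)


def prob_sequence_numeric(n: int, k: int, steps: int = 20_000) -> float:
    """Midpoint-rule estimate of the integral of p^k (1-p)^(n-k) over (0, 1)."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * h
        total += p**k * (1.0 - p) ** (n - k)
    return total * h


closed = prob_sequence_closed_form(10, 6)
numeric = prob_sequence_numeric(10, 6)
print(f"closed form : {closed:.6e}")
print(f"midpoint sum: {numeric:.6e}")
```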

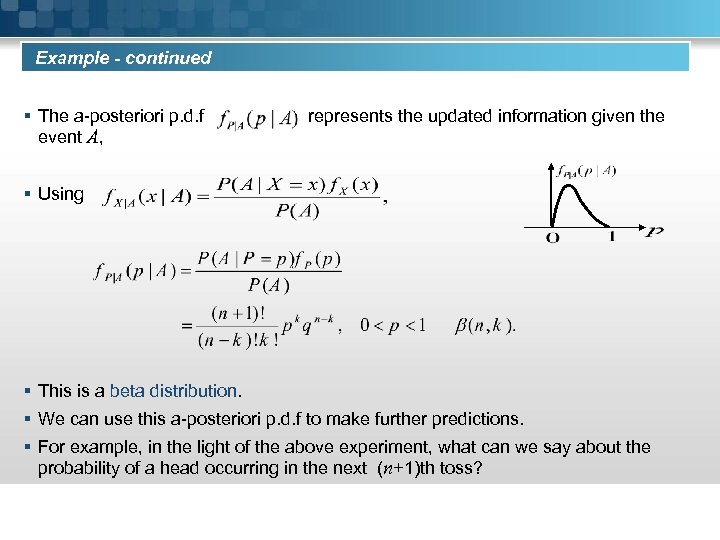

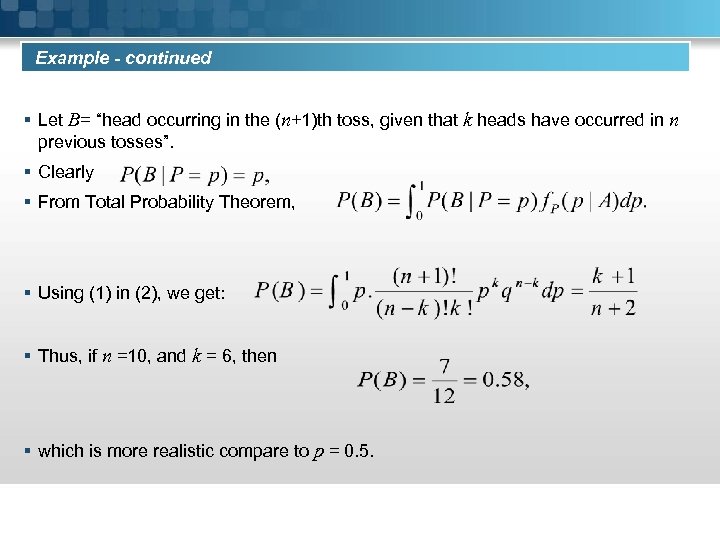

Example - continued
§ The a-posteriori p.d.f. $f_{P \mid A}(p \mid A)$ represents the updated information given the event A.
§ Using Bayes’ theorem: $f_{P \mid A}(p \mid A) = \frac{P(A \mid P = p)\, f_P(p)}{P(A)} = \frac{(n+1)!}{(n-k)!\, k!}\, p^k q^{n-k}$, $0 < p < 1$. (1)
§ This is a beta distribution.
§ We can use this a-posteriori p.d.f. to make further predictions.
§ For example, in the light of the above experiment, what can we say about the probability of a head occurring in the next, (n+1)th, toss?

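Example - continued
§ Let B = “head occurring in the (n+1)th toss, given that k heads have occurred in n previous tosses”.
§ Clearly $P(B \mid P = p) = p$.
§ From the Total Probability Theorem, $P(B) = \int_0^1 P(B \mid P = p)\, f_{P \mid A}(p \mid A)\, dp$. (2)
§ Using (1) in (2), we get: $P(B) = \int_0^1 p \cdot \frac{(n+1)!}{(n-k)!\, k!}\, p^k q^{n-k}\, dp = \frac{k+1}{n+2}$.
§ Thus, if n = 10 and k = 6, then $P(B) = \frac{7}{12} \approx 0.58$,
§ which is more realistic compared to p = 0.5.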
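
The prediction for the next toss can be reproduced numerically. The sketch below is an illustration that assumes the uniform prior of the example, under which the posterior is the Beta density proportional to $p^k (1-p)^{n-k}$. It integrates p against that posterior with a midpoint rule and compares the result with the closed form $(k+1)/(n+2)$. The function names are hypothetical.

```python
import math
from fractions import Fraction


def posterior_pdf(p: float, n: int, k: int) -> float:
    """Posterior density of the head probability after k heads in n tosses
    (uniform prior assumed)."""
    coeff = math.factorial(n + 1) / (math.factorial(n - k) * math.factorial(k))
    return coeff * p**k * (1.0 - p) ** (n - k)


def prob_next_head_numeric(n: int, k: int, steps: int = 20_000) -> float:
    """Midpoint-rule estimate of the integral of p * posterior_pdf(p) over (0, 1)."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * h
        total += p * posterior_pdf(p, n, k)
    return total * h


def prob_next_head_closed_form(n: int, k: int) -> Fraction:
    """Closed-form value (k + 1) / (n + 2) of the same integral."""
    return Fraction(k + 1, n + 2)


closed = prob_next_head_closed_form(10, 6)
numeric = prob_next_head_numeric(10, 6)
print(f"closed form : {closed} = {float(closed):.4f}")
print(f"numeric     : {numeric:.4f}")
```

Keeping the closed form as a `Fraction` makes it easy to see that the prediction is exactly 7/12 for n = 10, k = 6, rather than the raw frequency 6/10.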
