31db915b472be7575ec49dcf488cf074.ppt

- Number of slides: 17

BIG DATA - DWT - Localized Automation Framework for Leveraging Home Pitch Advantage

Muthu Venkatesh Sivakadatcham, Principal Consultant, Infosys Limited

Abstract

- An automated regression test suite is a blessing for testers (productivity), developers (quick retesting turnaround), business stakeholders (time to market) and project sponsors (lower cost).
- With disparate technologies such as the Apache Hadoop framework, commercial ETL tools such as Informatica or DataStage, and isolated traditional data marts on Oracle, SQL Server, Sybase, etc., it is a real challenge to identify one tool that can automate transactions end to end.
- What if this requirement is addressed technically, cost is brought down further by eliminating costly commercial tools and interfaces for automating test cases across different technologies, and efficiency is improved at the same time by breaking the automation code down into the smallest possible pieces?
- It looks like a fairy tale, but it can be achieved by investing some time in understanding the technology backdrop of the project and by building the automation in bits and pieces, each in its own backyard.
- All the individual pieces can later be collated and triggered through a common driver script.
- This paper demonstrates one such framework for a typical DWT / Big Data architecture.
- Testers, test leads and test managers will benefit from this idea.

Table of Contents

- DW/ETL/Big Data – Complex Architecture
- Challenges in fitting in Commercial Automation Tools
- How can Challenges be Addressed?
- Sample ETL/Big Data Architecture
- Validation Matrix for Testers
- Home-Grown Automation Framework for Identified Architecture
- Salient Features of Home-Grown Automation Framework
- Qualitative Benefits
- Quantitative Benefits
- Long Term Focus

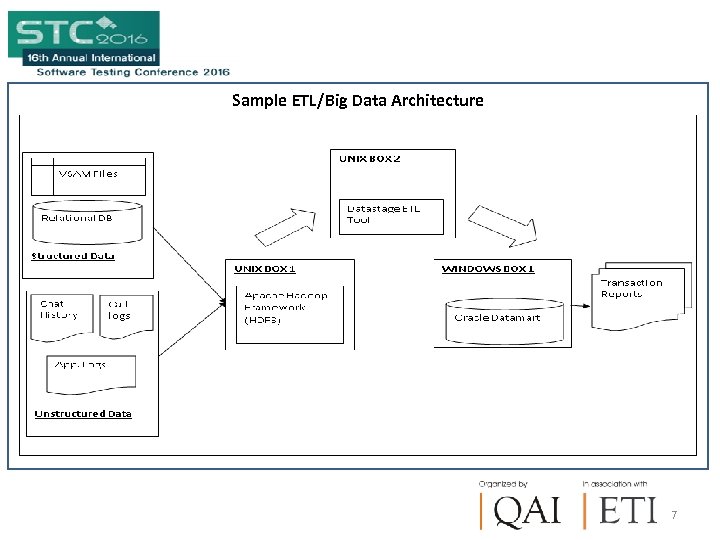

DW/ETL/Big Data – Complex Architecture

- Input data and feeds arrive from multiple upstream systems
- Data can be both structured and unstructured
- The Big Data cluster is hosted and running on a dedicated UNIX or Linux box
- The ETL tool is hosted and running on a dedicated UNIX box
- The downstream relational data store (Oracle) runs on a dedicated Windows box
- The BI reporting tool pulls data from the relational data store

Challenges in fitting in Commercial Automation Tools

- Substantial effort is involved in identifying the best-fit tool from various vendors through POCs
- Additional plug-ins and patches might be needed to integrate the tool with heterogeneous systems
- License costs strain the budget of the project/program
- Resources have to be trained in the commercial automation tool
- Any enhancements to the automation features have to be routed through the vendor and involve additional costs
- ROI might be a concern for short life cycle projects

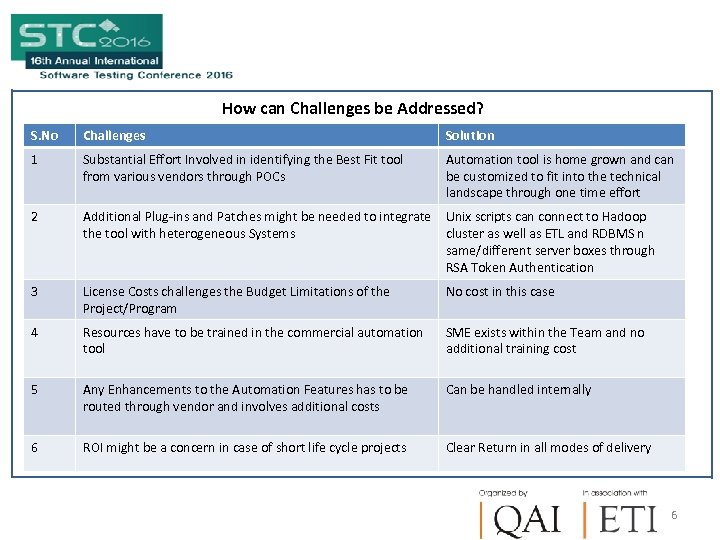

How can Challenges be Addressed?

| S. No | Challenge | Solution |
|---|---|---|
| 1 | Substantial effort involved in identifying the best-fit tool from various vendors through POCs | The automation tool is home-grown and can be customized to fit the technical landscape through a one-time effort |
| 2 | Additional plug-ins and patches might be needed to integrate the tool with heterogeneous systems | Unix scripts can connect to the Hadoop cluster as well as the ETL and RDBMS systems, on the same or different server boxes, through RSA token authentication |
| 3 | License costs strain the budget of the project/program | No cost in this case |
| 4 | Resources have to be trained in the commercial automation tool | SMEs exist within the team, so there is no additional training cost |
| 5 | Any enhancements to the automation features have to be routed through the vendor and involve additional costs | Can be handled internally |
| 6 | ROI might be a concern for short life cycle projects | Clear return in all modes of delivery |
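The password-less connection mentioned in row 2 could look roughly like the sketch below. It assumes that "RSA token authentication" refers to SSH public-key login with an RSA key pair, which the slides do not spell out. The host names, the user name and the `call_log` table are hypothetical. The sketch only builds the `ssh` command line, so it does not need a live server.

```python
import shlex

def build_ssh_command(user, host, remote_command, identity_file=None):
    """Return the argv list for running one command on a remote box over SSH."""
    # BatchMode=yes makes ssh fail instead of prompting for a password,
    # which is what an unattended driver script needs.
    argv = ["ssh", "-o", "BatchMode=yes"]
    if identity_file:
        # Optional path to the private key (assumption: key-based login is set up).
        argv += ["-i", identity_file]
    argv += [f"{user}@{host}", remote_command]
    return argv

# Hypothetical server boxes for the Hadoop cluster and the ETL tool.
TARGETS = {
    "hadoop": ("qa_user", "hadoop-edge.example.com"),
    "etl": ("qa_user", "etl-box.example.com"),
}

def command_for(system, remote_command):
    """Build the ssh command for one of the named target systems."""
    user, host = TARGETS[system]
    return build_ssh_command(user, host, remote_command)

if __name__ == "__main__":
    cmd = command_for("hadoop", "hive -e 'SELECT COUNT(*) FROM call_log'")
    print(shlex.join(cmd))  # shows the command a driver script would run
```

To actually run the command, the list can be passed to `subprocess.run(cmd, capture_output=True, text=True)`. Once the public key is installed on each box, the same script keeps working without stored passwords.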

Sample ETL/Big Data Architecture

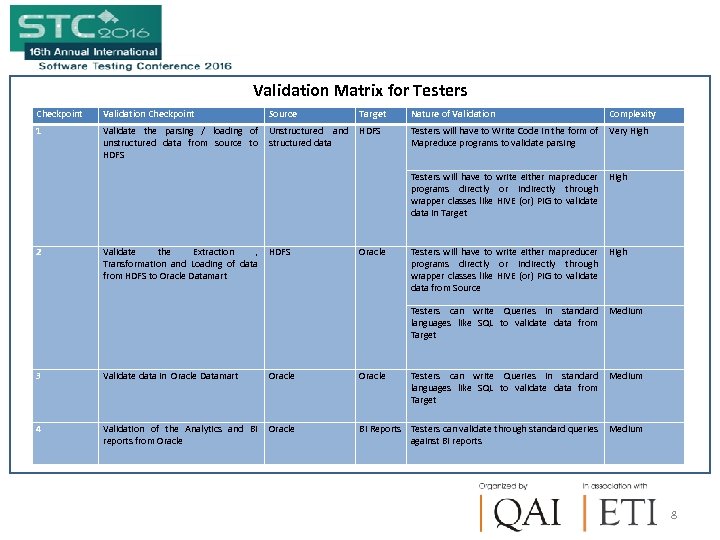

Validation Matrix for Testers

| Checkpoint | Validation Checkpoint | Source | Target | Nature of Validation | Complexity |
|---|---|---|---|---|---|
| 1 | Validate the parsing / loading of unstructured data from source to HDFS | Unstructured and structured data | HDFS | Source: testers will have to write code in the form of MapReduce programs to validate parsing | Very High |
| | | | | Target: testers will have to write MapReduce programs, either directly or indirectly through wrappers such as Hive or Pig, to validate data in the target | High |
| 2 | Validate the extraction, transformation and loading of data from HDFS to the Oracle data mart | HDFS | Oracle | Source: testers will have to write MapReduce programs, either directly or indirectly through wrappers such as Hive or Pig, to validate data from the source | High |
| | | | | Target: testers can write queries in standard languages like SQL to validate data from the target | Medium |
| 3 | Validate data in the Oracle data mart | Oracle | | Testers can write queries in standard languages like SQL to validate data from the target | Medium |
| 4 | Validate the analytics and BI reports from Oracle | Oracle | BI Reports | Testers can validate through standard queries against BI reports | Medium |
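For checkpoints 2 and 3, the comparison itself can stay technology-neutral once the rows have been pulled out of each system. The following is a minimal sketch, assuming the source rows (for example from a Hive query) and the target rows (for example from an Oracle query) have already been exported into Python lists. The function name and the sample rows are hypothetical.

```python
from collections import Counter

def compare_extracts(source_rows, target_rows):
    """Compare two extracts as multisets of rows and report the differences."""
    src = Counter(map(tuple, source_rows))
    tgt = Counter(map(tuple, target_rows))
    missing = sorted((src - tgt).elements())      # in source, not in target
    unexpected = sorted((tgt - src).elements())   # in target, not in source
    return {
        "source_count": sum(src.values()),
        "target_count": sum(tgt.values()),
        "missing_in_target": missing,
        "unexpected_in_target": unexpected,
        "passed": not missing and not unexpected,
    }

if __name__ == "__main__":
    # Hypothetical rows: (customer id, date, number of calls).
    source = [("C001", "2015-01-01", 3),
              ("C002", "2015-01-01", 5),
              ("C003", "2015-01-02", 1)]
    target = [("C001", "2015-01-01", 3),
              ("C002", "2015-01-01", 4)]
    report = compare_extracts(source, target)
    for key, value in report.items():
        print(key, "=", value)
```

Using a `Counter` rather than a `set` keeps duplicate rows visible, so a row loaded twice into the target is reported as unexpected instead of being silently ignored.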

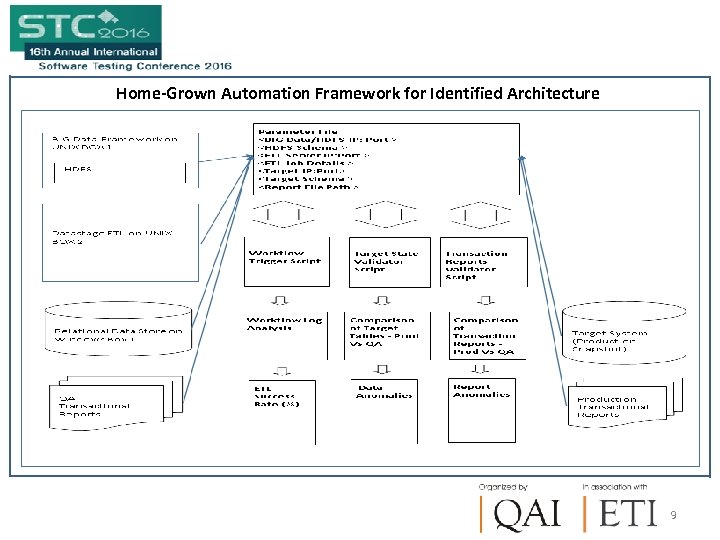

Home-Grown Automation Framework for Identified Architecture


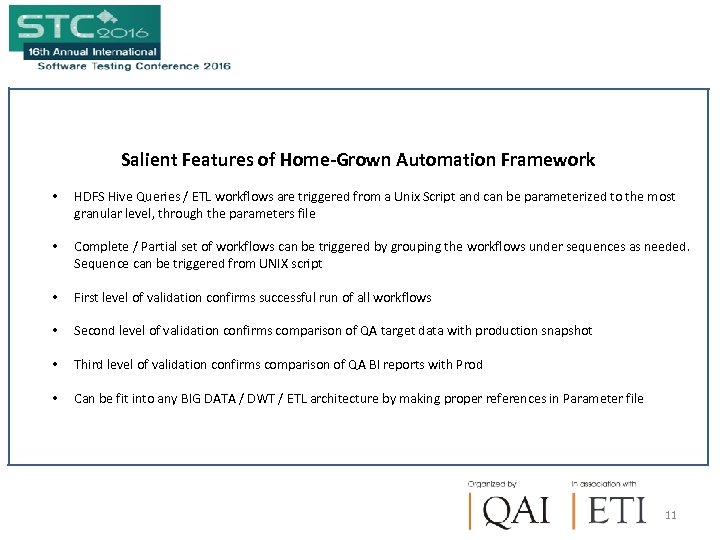

Salient Features of Home-Grown Automation Framework

- HDFS Hive queries and ETL workflows are triggered from a Unix script and can be parameterized to the most granular level through the parameter file
- A complete or partial set of workflows can be triggered by grouping the workflows under sequences as needed. A sequence can be triggered from the Unix script
- The first level of validation confirms the successful run of all workflows
- The second level of validation compares QA target data with a production snapshot
- The third level of validation compares QA BI reports with production
- The framework can be fitted into any Big Data / DWT / ETL architecture by making the proper references in the parameter file
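The parameter-driven trigger and the first level of validation could be sketched as follows. The slides describe a Unix shell driver, so this Python version is only an illustration of the idea. The `KEY = VALUE` parameter format, the `sequence.` and `command.` prefixes and the workflow names are all assumptions made for this example.

```python
import subprocess

def parse_parameters(text):
    """Parse KEY = VALUE lines, ignoring blank lines and '#' comments."""
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        params[key.strip()] = value.strip()
    return params

def workflows_in(params, sequence):
    """Return the ordered workflow names grouped under a sequence."""
    names = params[f"sequence.{sequence}"].split(",")
    return [name.strip() for name in names if name.strip()]

def run_sequence(params, sequence, runner=subprocess.run):
    """Trigger every workflow of the sequence; return {workflow: exit code}."""
    results = {}
    for workflow in workflows_in(params, sequence):
        completed = runner(params[f"command.{workflow}"], shell=True)
        results[workflow] = completed.returncode
    return results

def first_level_validation(results):
    """First level: list the workflows that did not exit with status 0."""
    return [wf for wf, code in results.items() if code != 0]

# Hypothetical parameter file. In a real project the commands would call
# the Hive CLI or the ETL tool; 'exit 0' stands in for a successful run.
PARAMETERS = """
# nightly regression sequence
sequence.nightly = wf_parse_logs, wf_load_mart
command.wf_parse_logs = exit 0
command.wf_load_mart = exit 0
"""

if __name__ == "__main__":
    params = parse_parameters(PARAMETERS)
    results = run_sequence(params, "nightly")
    failed = first_level_validation(results)
    print("failed workflows:", failed)
```

Keeping every command in the parameter file means a partial run only needs a second `sequence.` entry that lists a subset of the workflows. The driver code itself stays unchanged.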

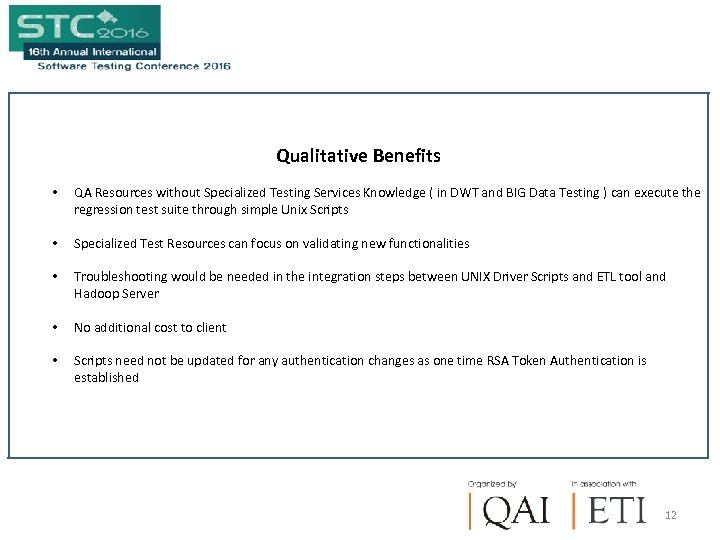

Qualitative Benefits

- QA resources without specialized testing services knowledge (in DWT and Big Data testing) can execute the regression test suite through simple Unix scripts
- Specialized test resources can focus on validating new functionality
- Troubleshooting would be needed in the integration steps between the Unix driver scripts, the ETL tool and the Hadoop server
- No additional cost to the client
- Scripts need not be updated for authentication changes, because RSA token authentication is established once

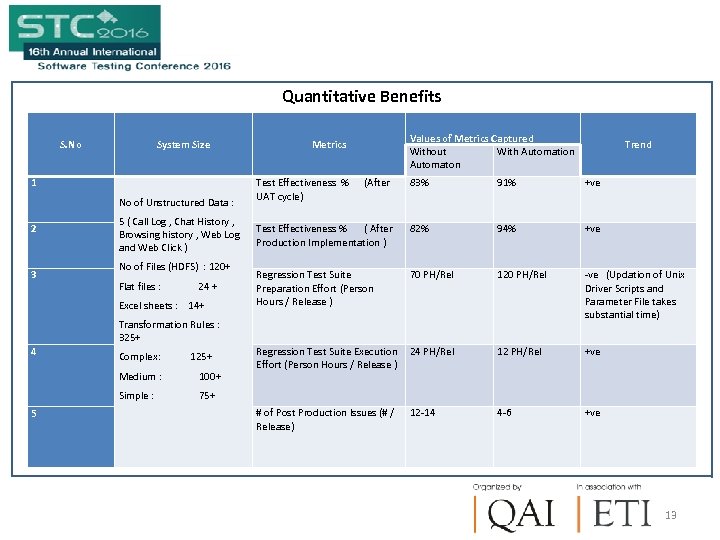

Quantitative Benefits

System size:

- Unstructured data sources: 5 (call log, chat history, browsing history, web log and web click)
- Files (HDFS): 120+
- Flat files: 24+
- Excel sheets: 14+
- Transformation rules: 325+ (complex: 125+, medium: 100+, simple: 75+)

Values of metrics captured:

| Metric | Without Automation | With Automation | Trend |
|---|---|---|---|
| Test Effectiveness % (after UAT cycle) | 83% | 91% | +ve |
| Test Effectiveness % (after production implementation) | 82% | 94% | +ve |
| Regression Test Suite Preparation Effort (person hours / release) | 70 PH/Rel | 120 PH/Rel | -ve (updating the Unix driver scripts and parameter file takes substantial time) |
| Regression Test Suite Execution Effort (person hours / release) | 24 PH/Rel | 12 PH/Rel | +ve |
| # of Post Production Issues (# / release) | 12-14 | 4-6 | +ve |
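The trend column can be turned into numbers with a little arithmetic on the values reported above. The sketch below only recomputes figures from the table. The helper names are hypothetical, and the post-production issue ranges are left out because they are not single values.

```python
def percent_change(before, after):
    """Relative change from 'before' to 'after', in percent."""
    return (after - before) / before * 100

def point_change(before_pct, after_pct):
    """Absolute change between two percentages, in percentage points."""
    return after_pct - before_pct

# (without automation, with automation), copied from the metrics table.
EFFECTIVENESS = {"after UAT": (83, 91), "after production": (82, 94)}
EFFORT_PH = {"preparation": (70, 120), "execution": (24, 12)}

if __name__ == "__main__":
    for name, (before, after) in EFFECTIVENESS.items():
        print(f"effectiveness {name}: {point_change(before, after):+d} points")
    for name, (before, after) in EFFORT_PH.items():
        print(f"{name} effort: {percent_change(before, after):+.1f}%")
```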

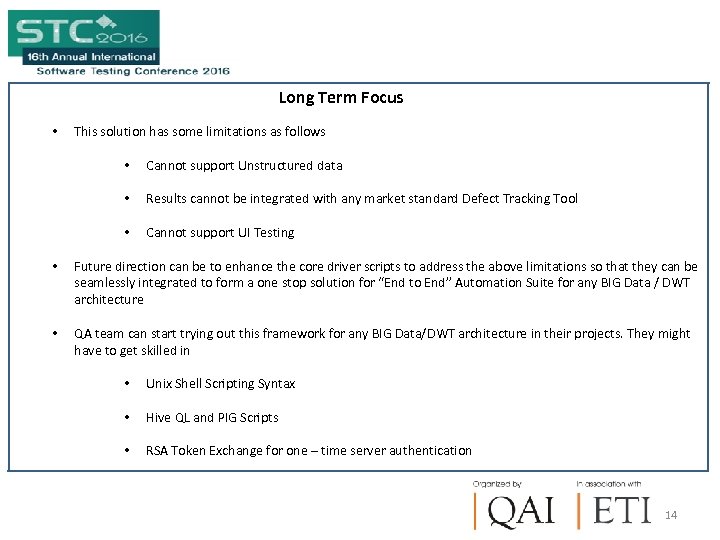

Long Term Focus

- This solution has some limitations:
  - Cannot support unstructured data
  - Results cannot be integrated with any market-standard defect tracking tool
  - Cannot support UI testing
- A future direction is to enhance the core driver scripts to address the above limitations, so that they can be seamlessly integrated into a one-stop “End to End” automation suite for any Big Data / DWT architecture
- QA teams can start trying out this framework for any Big Data / DWT architecture in their projects. They might have to get skilled in:
  - Unix shell scripting syntax
  - HiveQL and Pig scripts
  - RSA token exchange for one-time server authentication


